Abstract

Currently, researchers commonly use convolutional neural network (CNN) models for landslide remote sensing image recognition. However, with the increase in landslide monitoring data, the available multimodal landslide data contain rich feature information, and existing landslide recognition models have difficulty utilizing such data. A knowledge graph is a semantic network knowledge base capable of storing and describing various entities and their relationships. A landslide knowledge graph is used to manage multimodal landslide data, and by integrating this graph into a landslide image recognition model, the given multimodal landslide data can be fully utilized for landslide identification. In this paper, we combine knowledge and models, introduce the use of landslide knowledge graphs in landslide identification, and propose a landslide identification method for remote sensing images that fuses knowledge graphs and ResNet (FKGRNet). We take the Loess Plateau of China as the study area and test the effect of the fusion model by comparing the baseline model, the fusion model and other deep learning models. The experimental results show that, first, with ResNet34 as the baseline model, the FKGRNet model achieves 95.08% accuracy in landslide recognition, which is better than that of the baseline model and other deep learning models. Second, the FKGRNet model with different network depths has better landslide recognition accuracy than its corresponding baseline model. Third, the FKGRNet model based on feature splicing outperforms the fused feature classifier in terms of both accuracy and F1-score on the landslide recognition task. Therefore, the FKGRNet model can make fuller use of landslide knowledge to accurately recognize landslides in remote sensing images.

1. Introduction

Landslides are geological phenomena that endanger human life and cause great losses of property, resources and the environment. Landslides are frequent in China: 23,952 landslides occurred nationwide during the five-year period from 2016 to 2020, accounting for 70% of the total number of geological disasters in the country. China has the most widely developed loess in the world, mainly distributed across 34 prefecture-level cities in seven provinces and regions, including Shaanxi, Gansu and Shanxi, and this loess region is one of the high-risk areas for geological hazards in China [1]. One-third of geological hazards in China occur on the Loess Plateau, causing casualties, road damage, and reduction of arable land, and the majority of landslides are distributed in the western mountainous areas with complex topographic and geological conditions [2]. For example, 116 landslides have occurred in the Heifangtai area in Gansu Province, causing a total of 37 deaths, more than 100 injuries, the abandonment of more than 2,000,000 m² of arable land, the destruction of 413,000 m² of farmland, and a total economic loss of 190 million yuan. Because landslides can cause great losses to human life, property and the ecological environment, it is necessary to monitor and warn of loess landslides.

Landslides have complex characteristics, such as hidden, sudden and uncertain spatial and temporal evolution, sudden occurrence triggered by external factors, unclear disaster-causing conditions, a poorly understood internal structure, and unknown deformation and rupture processes and mechanisms [3]. At present, there are three main methods of landslide monitoring, namely, the geological monitoring method [4], remote sensing monitoring method [5] and integrated space-air-ground monitoring method [6], which are used to monitor the internal properties of landslides, external disaster-causing factors and other indirect information. The geological monitoring method is mainly based on the monitoring of the geological environment, including direct and indirect information, and the geological monitoring data are mainly obtained through external measurements by surveyors in a simple but labor-intensive and time-consuming process. Remote sensing monitoring methods are widely used in landslide monitoring because of their wide range, high speed and rich image information. Displacement changes are the most significant manifestation of landslide stability deterioration, and destabilization before damage and surface displacements are monitored quantitatively by optical remote sensing [7], interferometric synthetic aperture radar (InSAR) [8], and airborne LiDAR monitoring systems [9]. By using a high-precision remote sensing monitoring method, we can accurately monitor the historical and present slope deformation by comparing and analyzing the multiperiod image data of the same area, thus realizing the monitoring of landslide potential from a “space-air” perspective. 
The integrated space-air-ground monitoring method uses geological monitoring methods to obtain geological disaster data and then integrates modern monitoring technology, such as InSAR and LiDAR, and geographic information technology to conduct a “census” of landslide hazards, monitor the dynamic evolution of landslides, and generate high-precision data for landslide prediction [6].

Landslide monitoring generates a huge amount of multimodal data, including text data and image data, which are of limited use for current landslide hazard analysis methods. In terms of landslide text data, analysis methods for landslide hazards include landslide inventory-based analysis, deterministic methods, heuristic-based methods, and statistical methods. The landslide inventory-based analysis method is a primitive method for predicting regional landslide hazard susceptibility. DeGraff et al. used historical landslide hazard maps to construct isopleth maps of landslide deposits and then qualitatively assessed landslide susceptibility classes based on geological conditions in the study area [10]. Deterministic methods usually use a simple slope limit equilibrium model to assess slope stability for the purpose of regional landslide susceptibility prediction [11]. Deterministic methods can better explore the relationships between landslide hazards and causal factors, but data collection is difficult, and the causal factors are spatially variable. Heuristic-based methods rank and weight factors affecting slope instability based on subjective experience to determine the probability of regional landslide occurrence, and include hierarchical analysis, the fuzzy logic method and linear weight combination [12]. In recent years, statistical methods, such as the weight-of-evidence method and logistic regression, have been widely used in landslide susceptibility analysis.

In terms of landslide image data, landslide monitoring as an image processing problem is very amenable to machine learning techniques. Support vector machines [13], random forests [14], artificial neural networks [15], and deep learning [16] have been widely used in landslide classification. Deep learning, a branch of machine learning, is used to construct deep artificial neural networks similar to human neural network systems that analyze and interpret the input data, extract the features of the data and combine them into abstract high-level features, and plays an important role in fields such as computer vision and natural language processing [17]. Convolutional neural networks (CNNs) use convolutional operations to extract features from input images, effectively learning feature expressions from many samples, and have a stronger model generalization capability. LeNet was the first proposed CNN model and laid the foundation for the development of CNN [18]. The AlexNet model increases the depth of the CNN model, employs a ReLU as the activation function, and uses a dropout technique [19]. VGGNet inherited the framework of AlexNet and LeNet and increases the depth of the network by stacking convolutional layers with 3 × 3 convolutional kernels to improve the performance of the network [20]. The Inception-v1 module of GoogLeNet uses sparse links to reduce the number of model parameters and ensure the efficient use of computational resources, and the network depth reaches 22 layers while improving the performance of the network [21]. ResNet consists of several residual blocks that are connected across layers, weakening the strong links between each layer, and is used to solve degradation problems in deep networks [22]. DenseNet uses a simple connectivity model consisting of dense blocks that connect all layers directly, reducing the number of required parameters and the computational costs of the model [23]. 
EfficientNetV2 introduces the Fused-MBConv module and an incremental learning strategy that uses adaptive regularization to accelerate training by gradually increasing the image size during training [24]. The Vision Transformer splits the whole input image into small image blocks, using the linear embedding sequence of these small image blocks as the input of the transformer, and then using supervised learning for image classification training [25]. The Swin Transformer is a hierarchical transformer that limits the computation of self-attention to nonoverlapping local windows by shifting windows, while considering cross-window connections to improve efficiency and compatibility with a wide range of vision tasks [26].

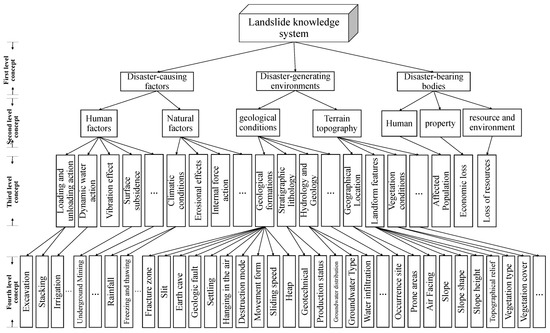

The massive landslide monitoring data accumulated over time are large in volume, broad in range of sources, and complex in structure, but only a small portion of these data can be transformed into useful knowledge. From the perspective of landslide causation mechanisms, landslides involve three constituent factors, i.e., disaster-causing factors, disaster-generating environments and disaster-bearing bodies, and landslides are the result of the joint action of these three factors. To improve disaster mitigation and public service capabilities, it is important to carry out landslide research, build a knowledge system for the study of landslides, deepen the understanding of disaster-causing factors, disaster-generating environments and disaster-bearing bodies and of the relationships between them, and carry out spatiotemporal prediction of landslide hazards. The outcomes of this research will help scientists grasp the causes of landslide hazards, improve early monitoring, forecasting and early warning of landslides, protect people’s lives, and reduce property losses caused by landslides. Transforming massive unstructured data into structured knowledge, iteratively enhancing data and knowledge, and providing intelligent landslide analysis services have become key bottlenecks in disaster management [27].

A knowledge graph is a semantic network knowledge base composed of various entities and relationships in the objective world, which can describe various objects and their relationships intuitively, naturally and efficiently and can be used to discover hidden knowledge and patterns. Building upon this basis, a unique geological knowledge graph has been formed in the field of geology. For a typical geographic phenomenon such as a landslide, which is rich in mechanism knowledge and spatiotemporal process information, a landslide knowledge graph is constructed to store and manage multisource heterogeneous data. It is based on the landslide domain knowledge system and aims to reveal the specific manifestations and deep intrinsic factors of landslide occurrence. The TransE [28] model, which is a knowledge representation learning model, makes full use of landslide data in the case of small sample sizes and high-dimensional heterogeneity of prediction index entities and their relationships. It predicts missing triples by leveraging semantic networks constructed from knowledge graphs and addresses the issue of incompleteness in complex prediction tasks based on knowledge graphs with high accuracy and scalability. Thus, it is a potential method for landslide spatial identification.

Currently, the analysis and applications of landslide monitoring mainly use unimodal data, such as text data or image data, while multimodal data are rarely fused for analysis. The current data sources for multimodal learning include images, text, audio, video, and other sources from the fields of video classification [29], sentiment analysis [30], cross-modal search [31], and image synthesis [32]. The advantages of multimodal learning are that it makes up for the limitations of unimodal information, is less affected by noise in individual modalities, has redundancy and complementarity among modalities, and can obtain information with richer features to improve the performance of the whole model.

A landslide knowledge graph is utilized to manage multimodal data generated from landslide monitoring and is incorporated into the landslide image recognition task. The information derived from different modalities complements each other, thereby enhancing the accuracy of landslide remote sensing image recognition. Therefore, we construct a landslide knowledge graph based on the landslide knowledge domain. Subsequently, we incorporate the landslide knowledge graph into the task of landslide remote sensing image recognition, proposing a recognition model that integrates the landslide knowledge graph with remote sensing images. Additionally, we present two distinct feature fusion methods.

2. Materials

2.1. Study Area

The study area is located in the Loess Plateau distribution area, which includes the provinces of Gansu, Shaanxi and Shanxi in China, as shown in Figure 1. Loess landforms in the study area are widely distributed, geological hazards are frequent, and loess landslides have become the most important type of geological hazard in the Loess Plateau [33]. The triggering factors of landslides in areas with loess include natural and human factors. Natural factors include rainfall, freezing and thawing, river erosion, and earthquakes; human factors include slope excavation, slope top-loading, irrigation, and reservoir storage [34]. Rainfall is one of the important factors that causes landslides; the rainfall in the Loess Plateau area is not high but is concentrated, and loess landslides mostly occur in areas with more rainfall [35]. Loess landforms are also closely related to loess landslides. Loess landforms can be divided into loess beams, loess plateaus, loess mounts and river valley terraces. Loess beam valley landforms account for 50.3% of the loess plateau area and cause 38.3% of loess landslides; loess mount valley landforms account for 21.8% of the loess plateau area and cause 21.2% of loess landslides [33].

Figure 1. Study area.

2.2. Experimental Data

The landslide data used in this paper were derived from the Loess Plateau landslide database constructed by Sheng Hu’s team [36]. The team carried out landslide unmanned aerial vehicle (UAV) survey work along six typical routes of the Loess Plateau from March 2017 to November 2018, extracted information such as geometric feature parameters and terrain feature parameters of 307 loess landslides using ArcGIS and Global Mapper, and catalogued the landslides to obtain a database of Loess Plateau landslides based on high-resolution images and terrain data. There are 307 landslide sample points in the study area, among which the largest landslide area is 3,129,630 m² and the smallest is 541 m²; 235 loess landslides are distributed in loess hilly areas and 72 in loess plateau areas. To classify and learn the landslide remote sensing images, 307 non-landslide sample points were selected from the study area to construct the non-landslide sample set. The images of the landslide and non-landslide samples were obtained separately through Google Earth, with a time range of March 2017–November 2018 and an image size of 500 × 500 pixels, from which the landslide image dataset for classification was constructed.

Tens or even hundreds of landslide factors are involved in loess landslides, and the attribute values of landslide factors are mostly high-dimensional heterogeneous data. The amount of available data is usually small, which increases the difficulty of landslide spatial recognition. From the landslide knowledge graph, the factors affecting landslide occurrence can be divided into two types. One type is composed of the internal factors of landslide occurrence, such as geological features, topography and geomorphology. The other is composed of the external factors that play a decisive role in landslide development, such as climate change, human activities, groundwater, earthquakes, etc. The occurrence of landslides is the result of the combined effect of many internal and external factors, but landslides do not necessarily occur only when external conditions are met. As long as one or several external factors act on the landslide body and can trigger its internal factors, landslides may occur. By selecting landslide knowledge from different states in the dynamic evolution of landslides, including the internal environment and external disaster-causing factors, determining landslide knowledge from a multidimensional perspective and making different combinations, the degree of understanding of landslide movement and the accuracy of identification can be greatly improved.

In this paper, based on the landslide knowledge graph, we use expert knowledge and related literature analysis [33,37,38,39] to analyze the influence of information in the landslide knowledge graph on the accuracy of landslide remote sensing image recognition by considering the topography and geomorphology, basic geology and human activities in the study area. We take the knowledge of geomorphological features, curvature features, climatic conditions, geological conditions, feature distance and thematic index features in the landslide knowledge graph as examples. As shown in Table 1, the digital elevation model (DEM) was obtained from 30 m resolution ASTER satellite data; slope, aspect, plane curvature and profile curvature data were extracted from the DEM using ArcGIS software; the landform type was obtained by visual inspection of images obtained from Google Earth; the normalized difference vegetation index (NDVI) data were extracted from the 1 km resolution monthly NDVI dataset of China; the precipitation data were extracted from the 1 km resolution annual precipitation data of China for 2018; stratigraphic lithology data were extracted from the 1:200,000 digital geological map of China; and the water system network and road network were extracted from the 1:250,000 national basic geographic database, after which the water system distance factor and road distance factor were obtained using the Euclidean distance tool.

Table 1. Landslide knowledge and corresponding factors.
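The terrain factors described above are derived from the DEM. As a rough illustration of what such a derivation involves, the following sketch computes slope and aspect for one interior DEM cell in plain Python. It assumes a simple central-difference gradient, so its values will generally differ from those of the ArcGIS tools used in this paper; the function name `slope_aspect` and the toy grid are hypothetical.

```python
import math

def slope_aspect(dem, cell_size, row, col):
    """Return (slope_deg, aspect_deg) at an interior DEM cell.

    dem is a list of rows of elevations in metres, with row 0 at the
    northern edge. Central differences are an assumption made for brevity.
    """
    # Elevation change per metre toward the east and toward the north.
    dz_dx = (dem[row][col + 1] - dem[row][col - 1]) / (2.0 * cell_size)
    dz_dy = (dem[row - 1][col] - dem[row + 1][col]) / (2.0 * cell_size)
    slope = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    if dz_dx == 0.0 and dz_dy == 0.0:
        return slope, None  # flat cell: aspect is undefined
    # Aspect is the downslope direction, measured clockwise from north.
    aspect = math.degrees(math.atan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect

# Toy 3 x 3 grid (30 m cells) whose elevation rises toward the east.
toy_dem = [[0, 30, 60], [0, 30, 60], [0, 30, 60]]
print(slope_aspect(toy_dem, 30.0, 1, 1))
```

For the toy grid, the surface rises 1 m per metre toward the east, so the cell has a slope of about 45° and faces west (aspect of about 270°).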

2.3. Landslide Knowledge Graph

Landslide monitoring data is difficult to obtain. Furthermore, landslides exhibit complex characteristics such as suddenness and uncertain spatiotemporal evolution. From the perspective of landslide genesis, there are three components of landslides: disaster-causing factors, disaster-generating environments and disaster-bearing bodies. This paper constructs a landslide knowledge system based on the characteristics of loess landslides, with these core elements and their interrelationships, which can express the mechanism, evolutionary state and disaster-causing mode of landslide occurrence, as shown in Figure 2. Mechanism knowledge includes the key elements of landslide occurrence, state knowledge is used to describe the unique spatiotemporal evolution of landslide movement, and the disaster-causing mode is the comprehensive application of mechanism knowledge and state knowledge.

Figure 2. Landslide knowledge system.

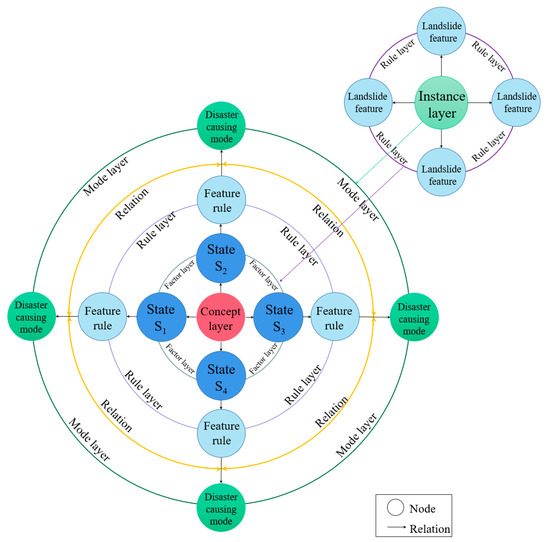

In this paper, we propose a landslide knowledge representation model that accounts for spatiotemporal and mechanistic features, as shown in Figure 3. The model introduces knowledge representation concepts from knowledge engineering, such as semantic networks, to model the conceptual entities and relationships of landslides and to express their basic attribute features, process knowledge and mechanistic knowledge. It thereby builds a bridge for mapping landslide data to landslide knowledge graphs, on which the landslide knowledge graphs are constructed.

Figure 3. Landslide knowledge representation model.

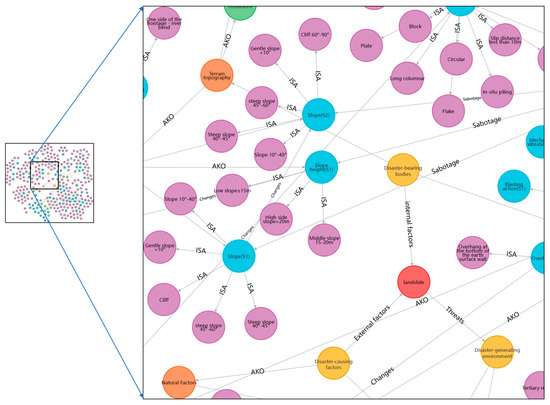

Based on the knowledge system of the landslide domain, landslide knowledge graphs are important for describing the mechanistic knowledge and spatiotemporal processes of landslides and for revealing the specific and deep intrinsic factors of landslide occurrence according to the knowledge represented and organized by knowledge graphs. Landslide knowledge graphs need to have the ability to express regular mechanistic knowledge and spatiotemporal process knowledge, as well as the ability to integrate, process and analyze landslide multisource heterogeneous data. Knowledge graph management technology is highly applicable to the landslide field. We apply the techniques of knowledge graphs, NLP and deep learning to landslide spatial recognition and intelligent queries of landslide events. On the one hand, we are able to extract landslide domain knowledge from multisource data such as structured relational remote sensing databases, semi-structured web news, and unstructured monographs and construct a large-scale landslide knowledge graph. On the other hand, we can quickly and accurately carry out landslide knowledge management or make decisions and provide technical support for query, analysis and decision making of landslide events. The landslide knowledge graph schema layer is shown in Figure 4.

Figure 4. Example of the schema layer of the landslide knowledge graph (Figure S1).

3. Methods

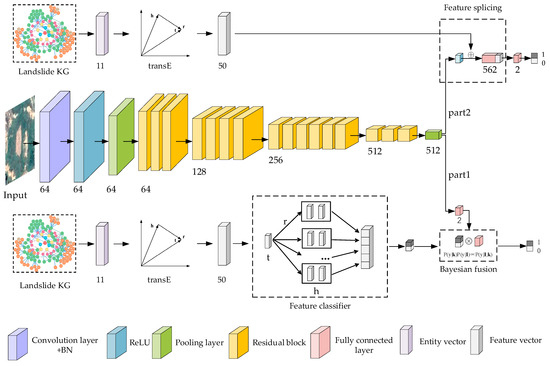

In this study, we propose a remote sensing image landslide recognition method based on a fused knowledge graph and ResNet network model (FKGRNet), which uses landslide knowledge graphs as features for remote sensing landslide image recognition. In this section, we briefly introduce knowledge representation learning, the TransE model, and the ResNet model, introduce ResNet and the knowledge fusion mechanism and propose two feature fusion methods.

3.1. Knowledge Representation Learning

A landslide knowledge graph provides a knowledge summary of the landslide generation mechanism and landslide evolution. Inference technology aims to discover potential and unknown knowledge based on existing knowledge in knowledge graphs according to a certain inference mechanism so that machines have the same inference and decision-making ability as humans. Using machine learning or deep learning methods, triples of the form (head entity, relationship, tail entity) unique to the knowledge graph are used to determine the missing triples so that the knowledge graph can be supplemented and improved to achieve assisted analysis and decision support. With the rapid development of deep learning and embedding techniques, the translation-based model TransE [28] has become a mainstream representation learning approach for knowledge graph inference. Entities and relations of triples are mapped to a dense low-dimensional vector space, and a score function is designed to measure the validity of the triples according to the translation assumption, which in turn is used to predict the missing triples.

TransE

The training samples of the TransE model are taken from the set of (head entity, relation, tail entity) triples derived from the landslide knowledge graph instead of a small portion of the original landslide records. During training, the head entities, relations, and tail entities are replaced by their respective corresponding feature vectors to overcome the influence of high-dimensional heterogeneity of the predictors. Meanwhile, a small amount of landslide data can generate enough triples for training, and each entity in a triple can be defined as a high-dimensional vector of landslide data. Therefore, the TransE model is suitable for dealing with small sample sets and the high-dimensional data structures contained in landslide knowledge graphs.
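To make concrete how a small number of landslide records can yield many training triples, the following sketch expands one record into (head, relation, tail) triples, one per attribute. The entity identifier, the `has_` relation names and the attribute values are hypothetical and are not taken from the landslide knowledge graph built in this paper.

```python
def record_to_triples(entity_id, attributes):
    """Turn one landslide record into (head, relation, tail) triples.

    Each attribute becomes one triple; the relation naming scheme
    ("has_<attribute>") is an assumption made for illustration.
    """
    return [(entity_id, f"has_{name}", str(value))
            for name, value in attributes.items()]

# Hypothetical record with three attributes.
record = {
    "landform": "loess_beam",
    "slope_class": "25-35deg",
    "lithology": "loess",
}
triples = record_to_triples("landslide_001", record)
for triple in triples:
    print(triple)
```

A record with k attributes yields k triples, so the number of training samples grows with the number of attributes stored per landslide rather than only with the number of landslides.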

The knowledge inference process of the TransE model assumes that the head entity vector $h$ plus the relationship vector $r$ is approximately equal to the tail entity vector $t$ in the vector space, i.e.,

$$h + r \approx t \qquad (1)$$

The features of a landslide event entity need to be represented by a vector, and each dimension of the vector represents a feature of the landslide. $L = (l_1, l_2, \ldots, l_m)$ denotes a vector of landslide event entities, and $N = (n_1, n_2, \ldots, n_m)$ represents a vector of non-landslide event entities. Here, $l_i$ denotes the data of a dimension of a landslide event entity and $n_i$ denotes the data of a dimension of a non-landslide event entity. The set of relationships between entities is $\{r_s, r_d, r_x\}$, where $r_s$ denotes a similarity relationship, $r_d$ denotes a dissimilarity relationship, and $r_x$ denotes the relationship to be measured. Similarity relationships are for entities of the same type, and dissimilarity relationships are for entities of different types; for example, a relationship between landslide event entities constitutes a similarity relationship, and a relationship between a landslide event entity and a non-landslide event entity constitutes a dissimilarity relationship. Under ideal conditions, both sides of Equation (1) are equal, and Equation (2) is obtained:

$$\lVert h + r - t \rVert = 0 \qquad (2)$$

In an actual situation, the distance $\lVert h + r - t \rVert$ is not zero, so the relationship with the smallest distance is chosen as the prediction result of the model for the relationship between entities. During training, the TransE model constructs a set of incorrect triples (negative samples) from the set of correct triples (positive samples) by randomly replacing the entities or relationships in the correct triples, and the loss value is calculated from both sets. The model is trained through parameter optimization over multiple iterations, and its feedback mechanism rewards the features generated for correct triples and penalizes those of incorrect triples. The model then adjusts the gap between the scores of the correct and incorrect triples accordingly. Finally, the loss function is minimized so that the patterns in the data can be recognized effectively.
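The scoring and training idea described above can be sketched in a few lines of plain Python. The sketch assumes an L2 distance as the score and a margin ranking loss with a margin of 1.0; the choice of norm, the margin value, the function names and the two-dimensional toy vectors are illustrative assumptions rather than settings reported in this paper.

```python
import math

def transe_score(h, r, t):
    """L2 distance ||h + r - t||; a smaller value means a more plausible triple."""
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))

def margin_loss(pos, neg, margin=1.0):
    """Margin ranking loss for one (correct, corrupted) pair of triples."""
    return max(0.0, margin + transe_score(*pos) - transe_score(*neg))

def predict_relation(h, t, relations):
    """Return the name of the relation vector with the smallest score."""
    return min(relations, key=lambda name: transe_score(h, relations[name], t))

# Toy two-dimensional embeddings (hypothetical values).
head, tail = [1.0, 0.0], [1.0, 1.0]
relations = {"similar": [0.0, 1.0], "dissimilar": [1.0, -1.0]}

print(predict_relation(head, tail, relations))
print(margin_loss((head, relations["similar"], tail),
                  (head, relations["dissimilar"], tail)))
```

In the toy example, the "similar" vector translates the head exactly onto the tail, so it has the smallest distance and is selected. The loss for this pair is zero because the corrupted triple already scores worse than the correct one by more than the margin.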

3.2. ResNet Model and Knowledge Fusion Mechanism

In deep learning, linear or nonlinear mapping of unimodal information is usually performed to produce higher-order semantic feature representations of individual modal information. In the process of prediction, the information of a single modality usually does not contain all the valid information needed to produce accurate predictions, while the multimodal fusion process fuses the information of two or more modalities, which enables information complementation, broadens the coverage of the information contained in the input data, improves the accuracy of the prediction results, and increases the robustness of the prediction model [40]. Therefore, fusing the features of different modalities is an important means to improve the model identification performance. Similarly, for the abundant multimodal information in the landslide domain, multimodal fusion can be used to improve the recognition performance by combining information from different source domains.

Multimodal fusion is classified into prefusion, post-fusion and hybrid fusion according to the sequential relationship between multimodal fusion and the modeling of each modality. Prefusion refers to the feature-level fusion performed by integrating or combining features from all modalities before modal modeling [41]. Post-fusion refers to modeling each modality separately and then combining the outputs or decisions of the models to produce the final decision results, which completes the decision-level fusion [42]. Hybrid fusion refers to fusion at both the feature level and the decision level by combining the prefusion and post-fusion approaches [43]. In the prefusion implementation process, the features of each input modality are first extracted, the extracted features are then combined into a feature set, and the integrated features are fed into a model as input data to output the prediction results. Prefusion has a low computational complexity, but the ensemble features resulting from the transformation and scaling process of each modal feature usually have high dimensionality, and dimensionality reduction techniques are usually used to solve the problem of redundant vectors in space [44,45]. In the post-fusion implementation process, the features of each modality are first extracted, the extracted features of each modality are input into the corresponding models, each model outputs a prediction result, and then the prediction results of each model are integrated to form the final prediction result. Compared with prefusion, post-fusion can handle the asynchronous nature of the data in a simpler way, the system can be extended as the number of modalities increases, the prediction model of each modality can better model that modality, and a prediction can be made when the model input is missing some modalities.
We consider the knowledge graph modal information as knowledge features to be fused with the image features, so post-fusion is used as the main fusion method of the model.
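As a generic illustration of the decision-level (post-fusion) idea described above, the following sketch combines the class probabilities predicted by separate per-modality models with a weighted average and skips any modality that produced no prediction. The function name, the modality keys and the probability values are hypothetical, and the averaging rule is one common choice rather than the specific fusion used in FKGRNet.

```python
def late_fusion(prob_by_modality, weights=None):
    """Combine per-modality class probabilities by a weighted average.

    Modalities whose prediction is None (missing input) are skipped,
    which mirrors the robustness to missing modalities noted above.
    """
    available = {m: p for m, p in prob_by_modality.items() if p is not None}
    if not available:
        raise ValueError("no modality produced a prediction")
    weights = weights or {m: 1.0 for m in available}
    total = sum(weights[m] for m in available)
    n_classes = len(next(iter(available.values())))
    return [sum(weights[m] * available[m][c] for m in available) / total
            for c in range(n_classes)]

# Hypothetical [landslide, non-landslide] probabilities from two models.
print(late_fusion({"image": [0.8, 0.2], "knowledge_graph": [0.6, 0.4]}))
print(late_fusion({"image": [0.8, 0.2], "knowledge_graph": None}))
```

With equal weights, the first call averages the two predictions, while the second falls back to the image model alone because the knowledge graph prediction is missing.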

3.2.1. ResNet

CNNs employ convolutional layers to automatically extract many spatial visual features of images and have become the mainstream method of image classification. Thanks to the good performance of convolutional neural networks in image classification, we can use convolutional neural networks from computer science to classify landslide remote sensing images.

A CNN usually consists of convolutional layers, pooling layers and fully connected layers. Generally, the layers of the neural network are continuously deepened to extract more complex features. However, deep convolutional networks are subject to degradation, which means that the accuracy decreases after a certain number of network layers is reached. To address this, ResNet introduces the idea of residual learning and is built from stacked residual blocks; the residual structure is shown in Figure 5. Let x be the input of a residual block, H(x) the mapping that the block is expected to learn, and F(x) the residual mapping. The residual block contains the weight layers, and the input is connected directly to the output through a cross-layer (shortcut) connection, so the block outputs F(x) + x and the weight layers only need to learn the residual function F(x) = H(x) − x. When the identity mapping H(x) = x is optimal, the block only has to drive F(x) toward zero, which is easier than fitting an identity mapping with a stack of nonlinear layers. The identity shortcut does not introduce additional parameters and does not increase the complexity of the original network. In addition, the cross-layer connection allows the features of different layers to be passed to each other, which alleviates the vanishing gradient problem to a certain extent.

Figure 5. Schematic diagram of the residual structure.
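The residual structure can be illustrated with a very small sketch. For brevity, it assumes fully connected weight layers on a plain vector instead of the convolution and batch normalization layers of an actual ResNet block; the function names and toy weights are hypothetical.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in v]

def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def residual_block(x, w1, w2):
    """Compute y = ReLU(F(x) + x) with F(x) = W2 * ReLU(W1 * x)."""
    fx = matvec(w2, relu(matvec(w1, x)))           # residual branch F(x)
    return relu([f + xi for f, xi in zip(fx, x)])  # add the shortcut, then activate

x = [1.0, 2.0]
zero = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block(x, zero, zero))  # F(x) = 0, so the block passes x through
```

When the weights of the residual branch are zero, F(x) = 0 and the block reduces to the identity for non-negative inputs, which shows why an added block can leave the features unchanged if it has nothing useful to learn.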

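We use precision, recall, accuracy, and F1-score to evaluate the performance of the model on the landslide identification task. Precision is the proportion of the samples identified by the model as loess landslides that are actually loess landslides, recall is the proportion of the samples that are actually loess landslides that are correctly identified by the model as loess landslides, accuracy is the ratio of the number of samples correctly classified by the model to the number of all samples, and F1-score is the harmonic mean of precision and recall. With TP, FP, TN and FN denoting the numbers of true positives, false positives, true negatives and false negatives, respectively, the metrics are calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$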
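These four metrics can be computed directly from the confusion matrix counts, as in the following sketch. The function name and the example counts are hypothetical and are not results from this paper; landslides are treated as the positive class.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute precision, recall, accuracy and F1-score from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1}

# Hypothetical counts for a 20-sample test set.
print(classification_metrics(tp=8, fp=2, tn=9, fn=1))
```

The zero-denominator guards return 0.0 when the model predicts no positives or the test set contains none, which is a convention chosen here for convenience.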

3.2.2. Knowledge Fusion Mechanism

In the following, we describe the process of constructing a classification recognition model by fusing a knowledge graph and landslide remote sensing images. Because the landslide knowledge graph and the landslide remote sensing image data come from different source domains, a different baseline model is used for each, and the two are fused using a post-fusion approach. For the landslide knowledge graph, the data types include text, values and relationships between entities, and the TransE model of knowledge graph representation learning is used. The attributes of the landslide entities in the landslide knowledge graph are grouped into triples, and the triples are trained using TransE to obtain the feature vectors of the landslide entities. For the landslide remote sensing images, the data type is RGB three-channel image data, and the landslide image data are trained using ResNet to obtain the landslide image features. In the following, we discuss the fusion of the landslide image features and the landslide knowledge graph entity feature vectors.

For landslide image data, the landslide images are first preprocessed. Data preprocessing is an important step when using deep neural networks for image classification tasks. Data enhancement techniques, including random horizontal or vertical flipping and normalization, can improve the generalization performance of the model, reduce the risk of overfitting, increase the robustness of the network, and accelerate the convergence of the model. The landslide image data are then fed into the ResNet model for feature extraction through a series of convolutional layers, usually using small filters (e.g., 1 × 1, 3 × 3, or 5 × 5) to extract local features of the image, and the landslide image is converted into a feature map by the convolution operations. After the convolutional layers, each batch of input landslide image data is normalized using a batch normalization operation, and then a nonlinear activation function is applied to enhance the expressiveness of the network. The core of ResNet consists of the residual blocks, each of which contains multiple convolutional and batch normalization layers, as well as skip connections that add the input feature maps directly to the output feature maps, thus constituting residual learning. This residual structure helps to alleviate the vanishing gradient problem and allows deeper networks to be trained. After the last residual block, a global average pooling layer is applied to convert the feature maps of the landslide images into vector form as the final feature representation. Finally, the fully connected layer connects the output of the global average pooling layer to the classifier to generate the final landslide classification.

For the landslide knowledge graph data, the training samples of the TransE model are taken from the set of triples of landslide entity vectors derived from the landslide knowledge graph according to Section 3.1. We use TransE to train the triples of landslide entity vectors according to Equation (1) and obtain the feature vectors of the landslide knowledge graph entities. We designed two feature fusion methods to verify the role of landslide knowledge graphs in landslide image classification: a feature classifier and feature splicing, as shown in Figure 6. Part 1 indicates the fusion method based on the feature classifier, and part 2 indicates the fusion method based on feature splicing.

Figure 6.

Structure of the fused feature classifier and feature splicing model; part 1 indicates the feature classifier-based fusion method, and part 2 indicates the feature splicing-based fusion method.

Feature Classifier

The feature classifier-based fusion mechanism is applied after the feature vectors of the landslide knowledge graph entities have been obtained. Part 1 in Figure 6 shows the network structure of the feature classifier-based FKGRNet fusion model, and the feature classifier is designed according to Equation (1). The vector to be measured T plays the role of the tail entity vector t. Suppose we are randomly given n groups of landslide vectors Si and non-landslide vectors Pi, which play the role of the head vector h. Under the similarity relation rsimilarity, we compare the score distance between the vector to be measured T and the landslide vector Si with the score distance between T and the non-landslide vector Pi. The score distance functions of the landslide vector Si and the non-landslide vector Pi are defined as

$$
dis_1 = \lVert S_i + r_{similarity} - T \rVert, \qquad dis_2 = \lVert P_i + r_{similarity} - T \rVert
$$

where dis1 represents the score distance between the landslide vector Si plus the similarity relation rsimilarity and the vector to be measured T, dis2 represents the score distance between the non-landslide vector Pi plus the similarity relation rsimilarity and the vector to be measured T, and the norm is the distance measure of Equation (1).

After calculating the two values dis1 and dis2, the model takes the vector with the smaller distance as the prediction result. For example, if the value of dis1 is smaller than the value of dis2, the score distance between the vector to be measured T and the landslide vector Si is smaller than the score distance between T and the non-landslide vector Pi under the similarity relation rsimilarity. This means that T is more similar to the landslide vector Si in this vector group, and the event represented by T is discriminated as a landslide in this vector group. After comparing the n groups of landslide vectors Si and non-landslide vectors Pi, a feature vector of dimension n is obtained. The elements of this feature vector are all 0 or 1, with 0 indicating a non-landslide result and 1 indicating a landslide result. Then, the classifier counts the distribution of 0 elements and 1 elements in this feature vector to obtain the two-dimensional prior classification probability vector of the landslide knowledge graph entity, whose components represent the landslide probability and the non-landslide probability, respectively.
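The voting procedure described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the L2 norm is assumed for the score distance, ties are counted as non-landslide votes, and the function name `knowledge_prior` and the toy 2-dimensional vectors are hypothetical.

```python
import math


def score_distance(head, relation, tail):
    """TransE-style distance between (head + relation) and tail (L2 norm assumed)."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


def knowledge_prior(target, vector_groups, r_similarity):
    """Compare the target with n (landslide, non-landslide) groups and vote.

    Returns the 0/1 vote vector and the two-dimensional prior
    (landslide probability, non-landslide probability).
    """
    votes = []
    for landslide_vec, non_landslide_vec in vector_groups:
        dis1 = score_distance(landslide_vec, r_similarity, target)
        dis2 = score_distance(non_landslide_vec, r_similarity, target)
        votes.append(1 if dis1 < dis2 else 0)  # 1 = landslide, 0 = non-landslide
    p_landslide = sum(votes) / len(votes)
    return votes, (p_landslide, 1.0 - p_landslide)


# Toy example with n = 4 groups (hypothetical values).
r_sim = [0.0, 0.0]
target = [1.0, 1.0]
groups = [
    ([1.0, 1.0], [5.0, 5.0]),
    ([0.9, 1.1], [3.0, 3.0]),
    ([4.0, 4.0], [1.2, 1.0]),
    ([1.0, 0.8], [2.0, 2.0]),
]
votes, prior = knowledge_prior(target, groups, r_sim)
```

In this toy case three of the four groups vote for the landslide class, so the prior assigns a landslide probability of 0.75.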

We denote the data source by $D = \{(I_i, k_i, y_i)\}$, where $I_i$ is a particular image, $k_i$ denotes the landslide knowledge vector corresponding to that image, and $y_i$ denotes its corresponding category label.

Therefore, the trained feature vectors of the entities are fed to the classifier, which outputs the prior classification probability vector of the landslide knowledge graph for the vector to be measured. The ResNet model passes the image data to be measured through the convolutional and fully connected layers and outputs the prior classification probability vector of the landslide image data. The classification probability vectors of the multimodal data to be measured are input to the integrator and combined using a multivariate Bayesian formulation [46] to obtain the classification results. The symbol ⊗ indicates the fusion between the knowledge graph prior classification probability vector and the image prior classification probability vector using the Bayesian formula. The landslide probability based on the knowledge graph and the landslide image data is proportional to the product of the prior probability of the landslide knowledge graph and the prior probability of the landslide image data. The formula is as follows:

$$
P(y_i \mid I_i, k_i) \propto P(y_i \mid I_i)\, P(y_i \mid k_i)
$$

where $P(y_i \mid I_i, k_i)$ denotes the probability that a certain landslide event is classified as belonging to a certain category with the support of image and knowledge graph data, $P(y_i \mid I_i)$ denotes the image prior probability, and $P(y_i \mid k_i)$ denotes the knowledge graph prior probability. The symbol $\propto$ denotes a proportionality relationship between $P(y_i \mid I_i, k_i)$ and $P(y_i \mid I_i)\, P(y_i \mid k_i)$.
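The proportionality above becomes a probability once the products are normalized over the two classes. The sketch below shows this step; it is a minimal illustration, the function name `bayes_fuse` is hypothetical, and the example probabilities are invented for demonstration.

```python
def bayes_fuse(p_image, p_knowledge):
    """Fuse two class-probability vectors by a normalized element-wise product."""
    joint = [a * b for a, b in zip(p_image, p_knowledge)]
    total = sum(joint)
    if total == 0.0:
        raise ValueError("the two priors assign zero probability to every class")
    return [value / total for value in joint]


# Class order: (landslide, non-landslide).
# An undecided image prior lets the knowledge prior determine the result.
fused_uncertain = bayes_fuse([0.5, 0.5], [0.75, 0.25])

# A confident image prior outweighs a mildly opposing knowledge prior.
fused_confident = bayes_fuse([0.9, 0.1], [0.4, 0.6])
```

When the image prior is close to 0.5, the fused result follows the knowledge graph prior, which is the situation in which this fusion mechanism is expected to help most.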

Feature Splicing

The feature splicing-based fusion mechanism is a multimodal landslide data fusion approach that splices (concatenates) landslide feature vectors from different modalities to obtain a comprehensive feature representation. We use this mechanism to fuse the feature vectors of landslide knowledge graph entities and images and thereby construct the feature splicing-based FKGRNet model, as shown in part 2 of Figure 6. The splicing symbol in Figure 6 denotes the fusion between the knowledge graph entity feature vectors and the image feature vectors using the feature splicing approach.

First, the preprocessed landslide images are input to the ResNet model, and the landslide image features are extracted through the convolutional layers and converted into a landslide feature map. Then, the feature vectors of the landslide images are output through batch normalization, a nonlinear activation layer and a global average pooling layer. A ReLU layer is added after the ResNet fully connected layer for nonlinear activation, and then the feature vectors of the landslide knowledge graph entities are concatenated with the image feature vectors to obtain a 512-dimensional feature splicing layer. The feature splicing layer joins the feature vectors of landslide knowledge graph entities and images along the feature dimension to form a higher-dimensional integrated feature vector. This approach allows the model to utilize information from both landslide knowledge graph entities and images for a more comprehensive landslide classification. The splicing operation can be implemented by simply concatenating the two vectors. Subsequently, the feature vectors fused in the feature splicing layer are used for binary classification of the landslide data to determine whether a landslide has occurred. In this way, the landslide classification results based on the feature splicing fusion approach can be analyzed by interpreting the output of the classifier. The advantage of the feature splicing-based fusion mechanism is that it is simple to implement and fuses information from different modalities directly, thus making full use of the multimodal data.
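The splicing step can be sketched as a concatenation followed by a linear classification layer. This is a minimal illustration with toy dimensions, not the 512-dimensional layer of the paper: the names `splice` and `linear_softmax`, the weights and the feature values are all hypothetical. In PyTorch, the concatenation itself would typically be written with `torch.cat`.

```python
import math


def splice(image_features, entity_features):
    """Concatenate image features and knowledge graph entity features."""
    return list(image_features) + list(entity_features)


def linear_softmax(features, weights, bias):
    """Fully connected layer followed by softmax over the two classes."""
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Toy feature vectors (hypothetical values after the ReLU activation
# on the image side and TransE training on the knowledge side).
image_features = [0.2, 0.0, 1.3]
entity_features = [0.5, -0.4]
fused = splice(image_features, entity_features)

# One weight row per class: (landslide, non-landslide).
weights = [[0.1] * 5, [-0.1] * 5]
bias = [0.0, 0.0]
probs = linear_softmax(fused, weights, bias)
```

Because the classifier sees both parts of the fused vector, its weights can be trained jointly on image and knowledge features.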

4. Results

In this section, we first present the recognition results of the ResNet model for landslide remote sensing images and show the recognition results of the feature classifier for landslide knowledge graph data. Then, we discuss the recognition results of the FKGRNet model based on the feature classifier and the recognition results of the FKGRNet model based on feature splicing. Next, we outline the performance of the different models on the remote sensing image dataset and compare the results with the recognition results of the FKGRNet model. Finally, we conduct ablation experiments to investigate the role of knowledge in the landslide knowledge graph on the fusion model.

4.1. Identification Based on the ResNet Model

As described in Section 2.2, a total of 307 landslide samples were found in the study area, and 307 sample points were randomly selected in the non-landslide area as non-landslide samples. The landslide image dataset was divided at a ratio of 8:2; the training set contained 492 images, and the validation set contained 122 images. The experiments were run on a Windows-based computer with an NVIDIA GeForce RTX 3080 Ti 16G GPU and an Intel Core i9-11900K processor. The ResNet model, implemented in PyTorch, was loaded with weights pretrained on the ImageNet dataset and trained for 50 epochs with a batch size of 32 using the Adam optimizer. The initial learning rate was set to 0.001 and was halved every 5 epochs. The input images were resized to 224 × 224 pixels, data enhancement methods such as random horizontal and vertical flipping were applied, and the image pixel values were normalized to (0,1) to speed up model convergence.
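The learning rate schedule described above can be written out explicitly. The sketch assumes zero-based epoch numbering and a step-wise halving, which the text does not spell out; the function name `learning_rate` is hypothetical. If a PyTorch scheduler is used, `torch.optim.lr_scheduler.StepLR` with `step_size=5` and `gamma=0.5` would produce the same values.

```python
def learning_rate(epoch, initial_lr=0.001, step=5, factor=0.5):
    """Learning rate at a zero-based epoch, halved every `step` epochs."""
    return initial_lr * factor ** (epoch // step)


# Learning rate for each of the 50 training epochs.
schedule = [learning_rate(epoch) for epoch in range(50)]
```

Under these assumptions the rate stays at 0.001 for epochs 0 to 4, drops to 0.0005 at epoch 5, and has been halved nine times by the final epoch.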

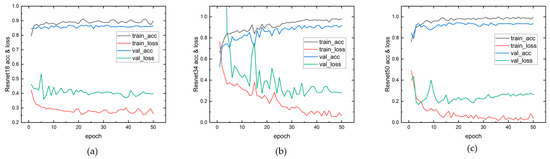

We trained the ResNet model using different network depths. Figure 7 shows the training accuracy, training loss, validation accuracy and validation loss of the ResNet model for different network depths as well as the convergence of the model training. As seen in Table 2, as the network depth of the ResNet model increases, the landslide recognition accuracy increases, and the ability of ResNet to extract features increases, which illustrates the effectiveness of the ResNet residual module for landslide image feature extraction.

Figure 7.

Experimental results of ResNet with different network depths; (a) Recognition results of ResNet18. (b) Recognition results of ResNet34. (c) Recognition results of ResNet50.

Table 2.

Comparison of recognition results of ResNet models with different network depths.

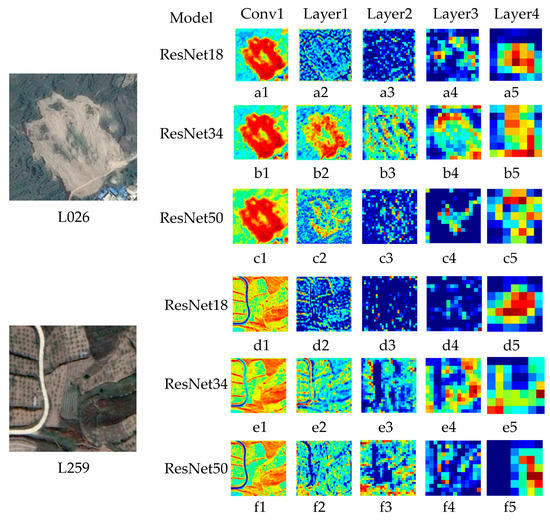

Figure 8 illustrates the feature maps produced for landslide images L026 and L259 by the ResNet model at various depths. The brightness of the color in each feature map represents the level of significant features captured by the respective convolutional layer at each location. Notably, the landslide features in image L026 are prominently visible, and they are effectively extracted across all three network depths. In terms of the network structure of ResNet34, it can be seen from b1-b5 in Figure 8 that the ResNet convolutional module also becomes increasingly sensitive to the extraction of image features, with the model extracting significant features in the landslide region. In terms of the network depth of ResNet, it can be seen from a4, b4 and c4 in Figure 8 that, as the network depth increases, ResNet becomes more focused on the landslide region of the image. However, image L259 is mistakenly classified as a non-landslide image after undergoing feature extraction by the ResNet network. A further analysis of the images reveals that the landslide features in image L259 are predominantly concentrated in the middle and lower parts, but they are not adequately extracted during the convolutional layer processing step. This inadequacy might explain the incorrect identification of image L259 as a non-landslide image.

Figure 8.

Feature maps observed for image L026 and image L259 at different ResNet depths. Here, a1 to a5 represent the feature maps produced for image L026 by the ResNet18 model for conv1, layer1, layer2, layer3, and layer4, respectively. The meanings of the other image numbers follow a similar pattern to that of a1 to a5.

4.2. Feature Classifier-Based Recognition

To train the entity vector of the landslide knowledge graph, training samples and validation samples need to be selected to construct the dataset required for the training of the TransE model, and both the training and validation sets must consist of landslide samples and non-landslide samples. The training set and validation set were divided according to an 8:2 ratio using the landslide samples and non-landslide samples, and the landslide knowledge was kept in one-to-one correspondence with the landslide images. According to Section 2.2, the landslide event entities and their attribute features in the study area were obtained from the landslide knowledge graph and converted into a vector representation, and each landslide event was formally expressed as

e = (Landform features, Climatic conditions, Geological conditions, Feature Distance, Curvature characteristics, Thematic index characteristics) = (Slope, Elevation, Aspect, Landform type, Precipitation, Stratigraphic lithology, Water system distance, Road distance, Plane curvature, Profile curvature, NDVI)

In the formula, slope, elevation, precipitation, NDVI, water system distance, road distance, plane curvature, and profile curvature are numerical attribute features, and aspect, landform type, and stratigraphic lithology are text-based attribute features.

Each landslide factor in the landslide knowledge graph dataset is processed. The slope, elevation, precipitation, NDVI, water system distance and road distance are numerical attribute features, and their values are kept unchanged. The plane curvature and profile curvature are each divided into three equal intervals, which are numbered sequentially. The aspect is divided into 12 intervals according to the main slide direction, the landform type is divided into 4 categories according to the geomorphology, and the stratigraphic lithology is divided into 74 categories according to the lithology. After the above processing, the text-based descriptions of the landslide vectors and non-landslide vectors are converted into numerical descriptions.
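The discretization of curvature and aspect can be sketched as below. The details are assumptions made for illustration only: the text does not give the interval boundaries, so equal-width bins over a supplied value range, 30° aspect sectors starting at 0°, and numbering from 1 are all hypothetical choices, as are the function names.

```python
def equal_interval_bin(value, lower, upper, n_bins=3):
    """Assign a value to one of n_bins equal-width intervals, numbered from 1."""
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    width = (upper - lower) / n_bins
    index = int((value - lower) / width)
    # Clamp so that the range limits fall into the first and last intervals.
    return min(max(index, 0), n_bins - 1) + 1


def aspect_bin(aspect_degrees, n_bins=12):
    """Assign an aspect angle to one of n_bins equal sectors, numbered from 1."""
    sector_width = 360.0 / n_bins
    return int((aspect_degrees % 360.0) // sector_width) + 1


# Curvature values binned over an assumed range of [-1, 1].
curvature_classes = [equal_interval_bin(c, -1.0, 1.0) for c in (-0.9, 0.0, 1.0)]

# Aspect angles in degrees mapped to 12 sectors.
aspect_classes = [aspect_bin(a) for a in (0.0, 95.0, 359.9)]
```

Categorical factors such as landform type and stratigraphic lithology would be mapped to integer codes by a lookup table in the same spirit.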

The landslide vector Si, the non-landslide vector Pi and the vector to be measured T are grouped into triples of similar relations, dissimilar relations and relations to be measured according to the rules in Table 3. For example, two landslide vectors linked by the similarity relation rsimilarity constitute one triple, while the vector to be measured T paired with a landslide vector Si constitutes a triple whose relation is to be measured.

Table 3.

Landslide knowledge graph triad construction rules.

The numbers of triples constructed from the landslide vector Si, the non-landslide vector Pi and the vector to be measured T are shown in Table 4. The training set includes 1968 similar and dissimilar relationship triples and 122 relationship triples to be measured, and the validation set includes 488 similar and dissimilar relationship triples.

Table 4.

Number of triplet entries constructed according to the rules.

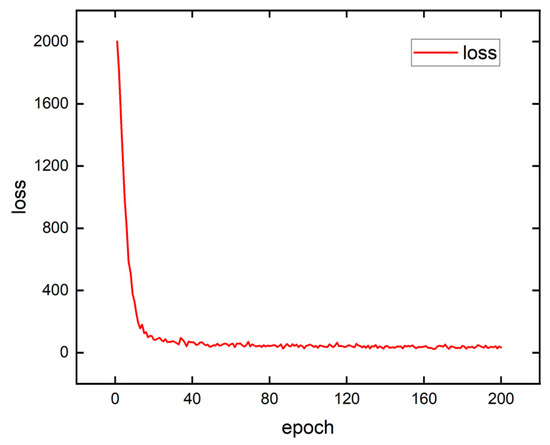

After the hyperparameters are adjusted and optimized, the learning rate is set to 0.005, and the number of iteration epochs is set to 200. After modeling with TransE, the distributed vectors of entities and relationships are output, and each entity vector is converted to a 50-dimensional vector. The model loss is shown in Figure 9, which shows that the model converges. Using the additivity of vectors, the relationship between a pair of entities is judged, and link prediction of the landslide knowledge graph is performed.

Figure 9.

TransE model training loss.

From Section 3.1, when the vector to be measured T is input to the feature classifier for landslide identification, a discriminant vector [[S1, P1], [S2, P2], …, [Si, Pi], …, [Sn, Pn]] is given randomly. Here, [Si, Pi] is a randomly given group consisting of a landslide vector and a non-landslide vector. According to Formula (2), the vector to be measured T is compared with each element vector group [Si, Pi] in the discriminant vector. If the distance of the similar relationship between the vector to be measured T and the landslide vector Si is smaller than the distance of the similar relationship between T and the non-landslide vector Pi, the vector to be measured is considered closer to the landslide vector in this element vector group, and the corresponding element of the recognition result vector is set to 1; otherwise, it is set to 0. After all the element vector groups in the discriminant vector have been discriminated, a 246-dimensional recognition result vector is output. By calculating the frequencies of 0 and 1 in the recognition result vector, the recognition result vector is mapped to a two-dimensional classification probability vector. The model recognition results are shown in Table 5. From the table, the recognition accuracy of the feature classifier for the landslide entity vectors in the landslide knowledge graph is 86.07%, and the F1-score reaches 85.85%.

Table 5.

Recognition results of the FKGRNet model with the fused feature classifier.

4.3. Recognition Results of the FKGRNet Model Based on the Feature Classifier

The classification result vectors of the feature classifier and the ResNet model are fed into the integrator for fusion, and the landslide recognition results of the fused feature classifier model are obtained. Figure 10 shows the accuracy of the feature classifier-based FKGRNet model compared with the baseline model. From Figure 10 and Table 5, taking the ResNet34 baseline model as an example, the accuracy of identifying loess landslides is 86.07% for the feature classifier and 91.80% for ResNet34. The accuracy of the fused feature classifier model is 7.37 and 1.64 percentage points higher than that of the feature classifier and ResNet34, respectively, indicating that combining landslide knowledge graph data with landslide images improves the accuracy of landslide identification. In terms of performance, the F1-score of the fused feature classifier model is 7.58 and 1.63 percentage points higher than that of the feature classifier and ResNet34, respectively, indicating that the fused feature classifier model performs better than either separate model. Meanwhile, the FKGRNet fusion model outperforms its corresponding baseline network in terms of accuracy and F1-score at every network depth, which indicates the importance of the landslide knowledge graph data processed by TransE and the feature classifier in landslide image recognition. As the network depth increases, the recognition effect of the baseline model improves. The recognition accuracy of FKGRNet reaches its highest value when the network depth is 50, but the improvement of the fusion model over the baseline model becomes smaller.

Figure 10.

Accuracy of FKGRNet based on the feature classifier with different network depths compared with the baseline model; (a) Comparison results of the FKGRNet18 model based on the feature classifier and ResNet18. (b) Comparison results of the FKGRNet34 model based on the feature classifier and ResNet34. (c) Comparison results of the FKGRNet50 model based on the feature classifier and ResNet50.

4.4. Recognition Results of the FKGRNet Model Based on Feature Splicing

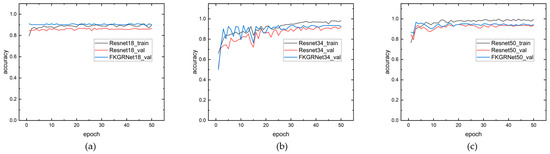

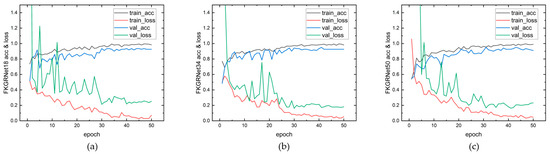

The landslide knowledge graph feature vectors obtained from the TransE model and the image feature vectors obtained from the ResNet model are spliced, and binary landslide classification is performed by the fully connected layer to obtain the landslide recognition results. Figure 11 shows the training accuracy, training loss, validation accuracy and validation loss of the feature splicing-based FKGRNet model for different network depths. From Figure 11 and Table 6, the accuracy of the feature splicing-based FKGRNet34 model for landslide recognition reaches 95.08%, which is 3.28 percentage points higher than that of the baseline model (ResNet34), indicating that fusing the knowledge graph feature vectors and the landslide image feature vectors by splicing has a positive effect on the accuracy of landslide recognition. In terms of performance, the F1-score of the fused feature splicing model is 3.30 percentage points higher than that of the baseline model, indicating that the fused feature splicing model outperforms the baseline model. Furthermore, as seen in Table 5 and Table 6, the feature splicing-based FKGRNet model is superior to the baseline model at every network depth, and its accuracy and F1-score are better than those of the feature classifier-based FKGRNet model of the same depth.

Figure 11.

Experimental results of FKGRNet model based on feature splicing; (a) Recognition results of the FKGRNet18 model based on feature splicing. (b) Recognition results of the FKGRNet34 model based on feature splicing. (c) Recognition results of the FKGRNet50 model based on feature splicing.

Table 6.

Recognition results of the FKGRNet model with fused feature splicing.

4.5. Comparison Results with Other Models

We evaluate several mainstream deep learning architectures for comparison: VGGNet16, GoogLeNet, EfficientNetV2, DenseNet201, the Swin Transformer and the Vision Transformer. From Table 7, with ResNet34 as the baseline model, the FKGRNet model has an overall advantage over the other architectures under both fusion methods, and it obtains the highest accuracy, precision, recall, and F1-score on the validation set. Therefore, combining a landslide knowledge graph with landslide image data to construct a multimodal landslide recognition model helps to improve landslide recognition accuracy.

Table 7.

Comparison of the fusion model with other models.

4.6. Ablation Experiments

To investigate the role of the knowledge in the landslide knowledge graph in the fusion model, we conducted ablation experiments on the proposed network and its factors, using ResNet34 as the baseline model. We also investigate the roles of different factors in the feature classifier, the feature classifier-based FKGRNet, and the feature splicing-based FKGRNet models. In Table 8, the baseline rows give the accuracy of the different baseline models, and the first column lists the factor removed in each ablation. For each baseline model, there are columns for the accuracy and the amount of change, which describe the accuracy after factor ablation and its change. First, the classification accuracies of the feature classifier, the feature classifier-based FKGRNet, and the feature splicing-based FKGRNet models all increase after ablating the profile curvature, indicating that the profile curvature factor has a negative effect on landslide classification and that its upper-level conceptual knowledge, the curvature feature, is less useful in this study area. Second, the accuracies of the feature classifier and the feature classifier-based FKGRNet model decrease after ablating the other factors, indicating that these factors and their upper-level conceptual knowledge play a positive role in the classification. Third, for the same ablation factor, the effect on accuracy is greater in the feature classifier and the feature classifier-based FKGRNet model, while the feature splicing-based FKGRNet model is less sensitive to the ablated factor, indicating that the feature splicing-based FKGRNet model is more stable under fluctuations of the data in the landslide knowledge graph.

Table 8.

Variation in accuracy of factor ablation in the feature classifier and its FKGRNet.

To investigate the role of landslide knowledge in the fusion model, we perform further experiments. Since geological conditions, climatic conditions, and thematic index characteristics each contain only a single landslide factor in this experiment, the results of their ablation experiments are identical to those of the corresponding factors. We therefore conduct ablation experiments on the landform features, curvature characteristics and feature distance in the input landslide knowledge. First, the recognition accuracy of the feature classifier model based on landslide knowledge changes the most (it decreases by 16.38%) after ablating the landform feature knowledge, and the accuracies of the feature classifier-based FKGRNet model and the feature splicing-based FKGRNet model also decrease by 2.46% and 1.64%, respectively. This indicates that landform feature knowledge plays a more important role in the fusion model than the other landslide knowledge. Second, after ablating the feature distance knowledge, the recognition accuracies of the three models do not change more than they do after ablating the individual factors alone, which indicates that the roles of the landslide knowledge factors do not follow a simple linear superposition relationship. Third, after ablating the curvature characteristic knowledge, the recognition accuracies of all three models increase, indicating that this knowledge has a negative effect on the landslide recognition results of the fusion model; this is consistent with the performance trend of the profile curvature factor.

From the above ablation experiments, it can be seen that the knowledge contained in a landslide knowledge graph can play different roles in the landslide remote sensing image recognition task, that the FKGRNet model can effectively handle multimodal landslide information, and that adding this knowledge has a positive effect on the recognition accuracy achieved for landslide remote sensing images.

5. Discussion

In this study, we innovatively introduce a landslide knowledge graph representing external knowledge into landslide remote sensing image recognition and propose an FKGRNet-based remote sensing image landslide recognition method. We use ResNet as the baseline model and compare the FKGRNet model based on feature classification and the FKGRNet model based on feature splicing. Then, we compare the performance of FKGRNet models with different network depths on landslide recognition tasks and compare the recognition results with those of other deep learning models.

In terms of model fusion methods, we propose two ways of fusing a landslide knowledge graph with a model, namely, the feature classifier and feature splicing. Since deep learning models have an upper limit on their performance in image recognition tasks, introducing external knowledge allows the knowledge and the remote sensing images to complement each other during recognition. The TransE model converts the knowledge in the landslide knowledge graph into entity vectors, the ResNet model extracts the features of landslide remote sensing images, and the two are fused by different combination methods. From Table 5 and Table 6, the fusion model outperforms the ResNet baseline model on the landslide recognition task, and the feature splicing-based FKGRNet model outperforms the feature classifier-based FKGRNet model in both accuracy and F1-score.

In terms of the network depth of the models, we compared the performance of the ResNet baseline model, the feature classifier-based FKGRNet model, and the feature splicing-based FKGRNet model on the landslide recognition task using network depths of 18, 34, and 50 layers. As seen in Table 2, Table 5 and Table 6, ResNet50, the feature classifier-based FKGRNet model and the feature splicing-based FKGRNet model all obtained their highest accuracy and F1-score at a depth of 50 layers. Additionally, as the network depth increased, the accuracy of the baseline model, the feature classifier-based FKGRNet model and the feature splicing-based FKGRNet model gradually increased, and we believe that the stacking of ResNet residual modules has a positive effect on landslide image feature extraction.

In terms of the role of knowledge in the fusion model, we first extracted the image features using ResNet and then compared its results with those of the fusion model for landslide recognition. As shown in Figure 8, we used the ResNet34 model to extract features from landslide image L259, but the model did not recognize it as a landslide image. As seen from e5 in Figure 8, after the image had passed through multiple convolutional layers in ResNet, the model did not accurately focus on the landslide region of this image, which may have contributed to the failed recognition result. Two approaches were used to fuse the knowledge: a feature classifier-based fusion approach and a feature splicing-based fusion approach. In the feature classifier-based fusion approach, the feature classifier was first applied to the entity data in the landslide knowledge graph to obtain a prior probability vector of landslide knowledge, which was then fused using Bayesian Equation (8). When it was difficult for ResNet to recognize a landslide image, i.e., when the probability of ResNet recognizing the current image as a landslide image was approximately 0.5, the feature classifier-based fusion approach was of great help. This is because in this case, the Bayesian Equation (7) allowed the prior probability vector of landslide knowledge to dominate, thus improving the recognition effect. In the feature splicing-based fusion approach, the feature vectors of the landslide knowledge were spliced with the image features at the fully connected layer. This allowed both the image information and the knowledge information to be considered when the model output its landslide recognition results.

In the ablation experiments, to investigate the role of the knowledge in the landslide knowledge graph in the fusion model, we ablated the landslide concept knowledge and its factors using ResNet34 as the baseline model for fusion. As shown in Table 8, the direction of the effect of each ablated factor was basically the same in the feature classifier, the feature classifier-based FKGRNet model and the feature splicing-based FKGRNet model, but its magnitude varied across the fusion models. For example, after ablating the slope factor, the accuracy of the feature classifier was reduced by 6.57%, that of the feature classifier-based FKGRNet model by 1.64%, and that of the feature splicing-based FKGRNet model by 0.82%. This indicates that the feature splicing-based FKGRNet model is less sensitive to changes in ablation factors and is more stable under fluctuations of the data in the landslide knowledge graph. The accuracy of the feature classifier-based FKGRNet model remained unchanged under the ablation of factors such as Landform and NDVI; we believe this accuracy may be the upper limit of the recognition accuracy of the model.

The research in this paper has the following limitations: (1) the amount of landslide sample data is small, and the landslide identification task still needs publicly available datasets with a large number of landslide images and landslide point information for further study; (2) the amount of monitoring data in the landslide knowledge graph is too small, since only some publicly available loess landslide data are currently used; if more monitoring data for loess landslide sample points become available, the landslide knowledge graph can be enriched and its rich semantic and data information can be further utilized; and (3) due to certain limitations, we did not use high-resolution remote sensing satellite images such as the Gaofen series and only used Google Earth images for analysis.

6. Conclusions

Since landslides produce a large amount of multimodal data, and current landslide identification methods have difficulty handling such data, in this paper we use a landslide knowledge graph to perform landslide identification and propose a landslide identification method based on FKGRNet for remote sensing images. There are four main contributions of this paper. First, we fuse knowledge and a model and propose a landslide image identification method based on the FKGRNet model, which can handle multimodal landslide data and add knowledge as features to the landslide image identification model. Second, we propose two knowledge and model fusion mechanisms, feature classifier and feature splicing, and conduct experiments on the two mechanisms to verify the effectiveness of the knowledge and the impact of different fusion methods on the accuracy of the model in recognizing landslides. Third, we conduct experiments on FKGRNet models with different network depths and compare them with other deep learning models to verify the superiority of FKGRNet. Finally, we investigate the role of the knowledge in the landslide knowledge graph in the landslide recognition task through ablation experiments. The experimental results show that the FKGRNet models based on different network depths and different fusion methods outperform their corresponding baseline networks in terms of the accuracy and F1-score metrics, indicating that the landslide knowledge graph data play a positive role in landslide remote sensing image recognition, and that adding the landslide knowledge graph as knowledge to the landslide remote sensing image recognition task can effectively improve the accuracy of the landslide recognition model.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/2072-4292/15/13/3407/s1, Figure S1: Full image of the landslide knowledge graph.

Author Contributions

Conceptualization, C.Z. and B.X.; methodology, B.X.; software, B.X. and W.J.; validation, B.X., W.J. and W.S.; formal analysis, B.X.; investigation, Y.Y. and B.X.; resources, W.L. and Y.S.; data curation, Y.S.; writing—original draft preparation, B.X.; writing—review and editing, B.X., C.Z., and J.H.; visualization, B.X.; supervision, C.Z. and J.H.; project administration, C.Z. and B.X.; funding acquisition, C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 42171453; the National Key R&D Program of China, grant number 2022YFB3904201; and the Open Fund of the Key Laboratory of JiangHuai Arable Land Resources Protection and Eco-restoration, Ministry of Natural Resources, grant number 2022-ARPE-KF04.

Data Availability Statement

The Loess Plateau Landslide Database is available at https://doi.org/10.3974/geodb.2020.04.08.V1 (accessed on 16 October 2022). The DEM dataset is available at http://www.gscloud.cn (accessed on 17 October 2022). The precipitation dataset, geomorphology dataset, and NDVI dataset are available at http://www.geodata.cn (accessed on 20 October 2022). The National Basic Geographic Database is available at https://www.webmap.cn (accessed on 18 October 2022). The digital geological map of China is available at http://dcc.ngac.org.cn (accessed on 18 October 2022).

Acknowledgments

We acknowledge the data support from the “National Earth System Science Data Center, National Science & Technology Infrastructure of China (http://www.geodata.cn)”.

Conflicts of Interest

The authors declare no conflict of interest.
