PVL-Cartographer: Panoramic Vision-Aided LiDAR Cartographer-Based SLAM for Maverick Mobile Mapping System

Abstract

1. Introduction

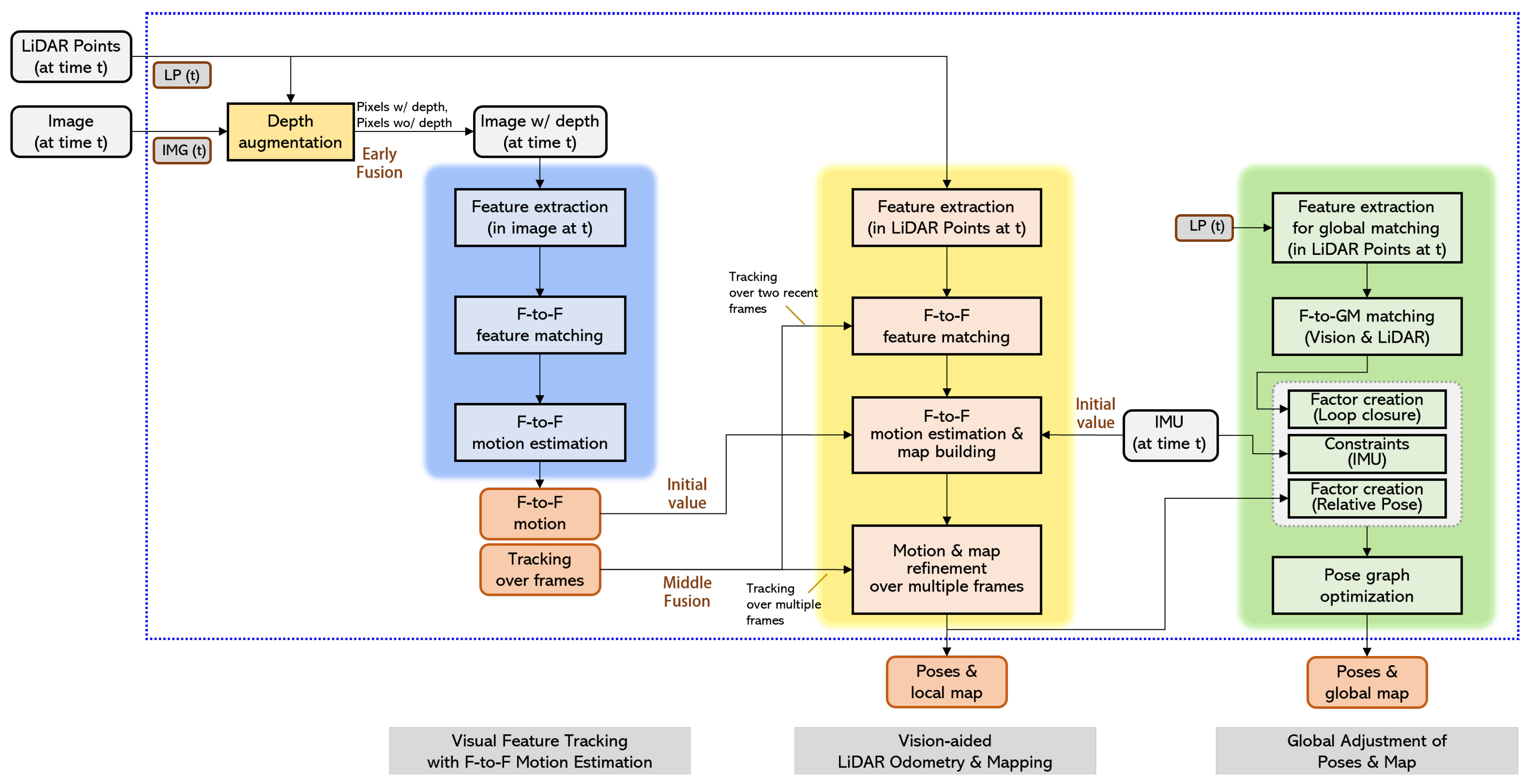

- The proposed SLAM system integrates multiple sensors (panoramic cameras, LiDAR sensors, and IMUs) to attain high-precision, robust performance.

- The novel early fusion of LiDAR range maps and visual features allows our SLAM system to produce results with absolute scale without external data sources such as GPS or ground control points.

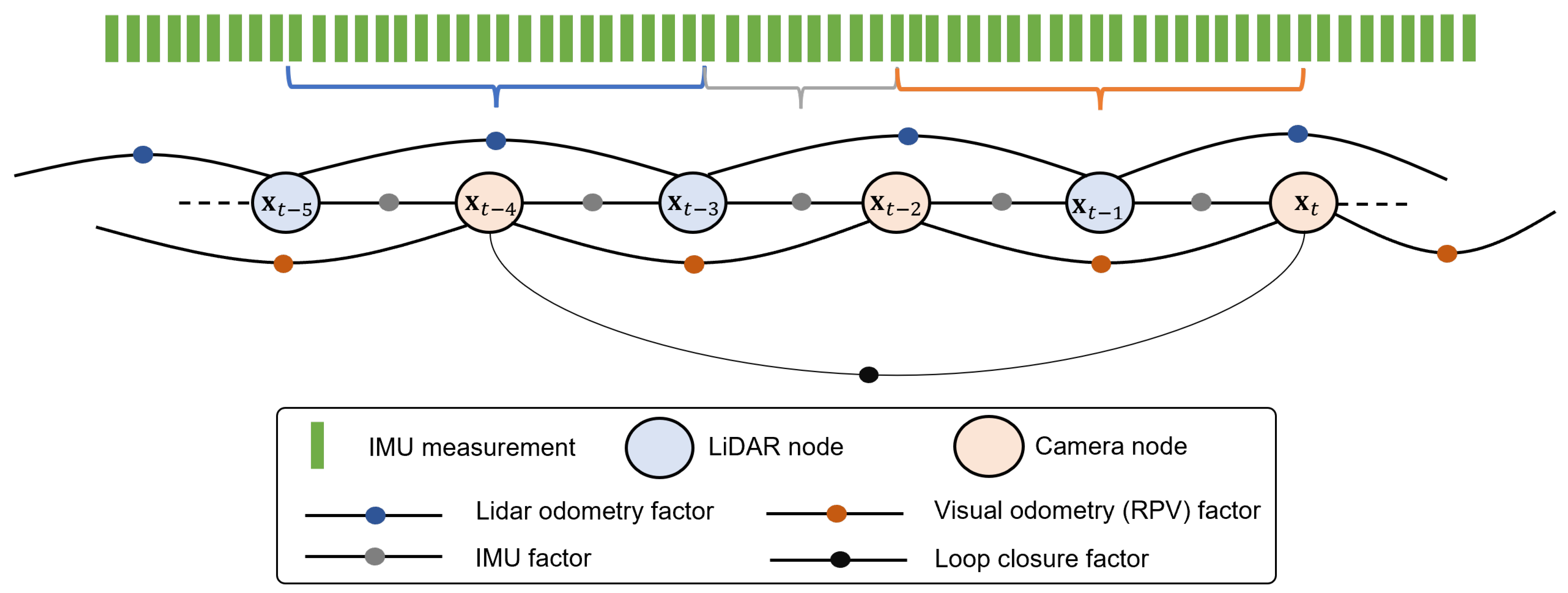

- The middle fusion technique is another key novelty of our research. A pose graph formulation allows data from the different sensors to be combined smoothly, enabling our SLAM system to deliver precise and reliable localization and mapping results.

- We carried out comprehensive tests in demanding outdoor environments to showcase the efficacy and resilience of our proposed system, even in situations with limited features. In summary, our research contributes to advancing more precise and robust SLAM systems for various real-world applications.
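To make the early-fusion idea concrete, here is a minimal sketch, not the authors' implementation, of attaching a LiDAR range to a visual feature in an equirectangular panorama so that the feature carries metric depth. The axis convention (z forward, y down), the function names, and the pixel search radius are assumptions made for illustration, and the LiDAR points are assumed to be already expressed in the camera frame.

```python
import math

def project_equirect(point, width, height):
    """Map a 3D point (camera frame, metres) to equirectangular (u, v) and its range."""
    x, y, z = point
    rng = math.sqrt(x * x + y * y + z * z)  # assumes the point is not at the origin
    lon = math.atan2(x, z)      # azimuth; assumed to be 0 along +z
    lat = math.asin(y / rng)    # elevation; +y is assumed to point down
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (lat / math.pi + 0.5) * height
    return u, v, rng

def build_range_map(points, width, height):
    """Sparse range map: pixel -> closest LiDAR range that projects onto it."""
    range_map = {}
    for p in points:
        u, v, rng = project_equirect(p, width, height)
        key = (int(u) % width, min(int(v), height - 1))
        if key not in range_map or rng < range_map[key]:
            range_map[key] = rng
    return range_map

def lookup_range(range_map, feature_uv, radius=2):
    """Range of the nearest LiDAR pixel within `radius` pixels of a feature, else None.

    For brevity this ignores wrap-around at the left/right image seam.
    """
    fu, fv = feature_uv
    best = None
    for du in range(-radius, radius + 1):
        for dv in range(-radius, radius + 1):
            key = (fu + du, fv + dv)
            if key in range_map:
                d2 = du * du + dv * dv
                if best is None or d2 < best[0]:
                    best = (d2, range_map[key])
    return None if best is None else best[1]

# Two hypothetical LiDAR points and one hypothetical feature pixel.
range_map = build_range_map([(0.0, 0.0, 5.0), (1.0, 0.0, 1.0)], 4096, 2048)
depth = lookup_range(range_map, (2049, 1024))
```

A feature that finds a nearby range can be initialized at metric depth, which is what gives the trajectory an absolute scale. A feature with no nearby range (`None`) would be handled as in purely visual SLAM.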

2. Related Work

2.1. Visual SLAM

2.2. Panoramic Visual SLAM

2.3. LiDAR SLAM

2.4. Sensor-Fusion-Based SLAM

3. Methodology

3.1. Mobile Mapping System

- Trimble MX series: such as Trimble MX7 and Trimble MX9.

- Leica Pegasus: including models such as Leica Pegasus Two, Leica Pegasus: Backpack, and the latest addition, Leica Pegasus TRK.

- RIEGL VMX series: includes models such as the RIEGL VMX-1HA and VMX-RAIL.

- MobileMapper series by Spectra Precision: including models such as the MobileMapper 300 and MobileMapper 50.

- Velodyne Alpha Prime.

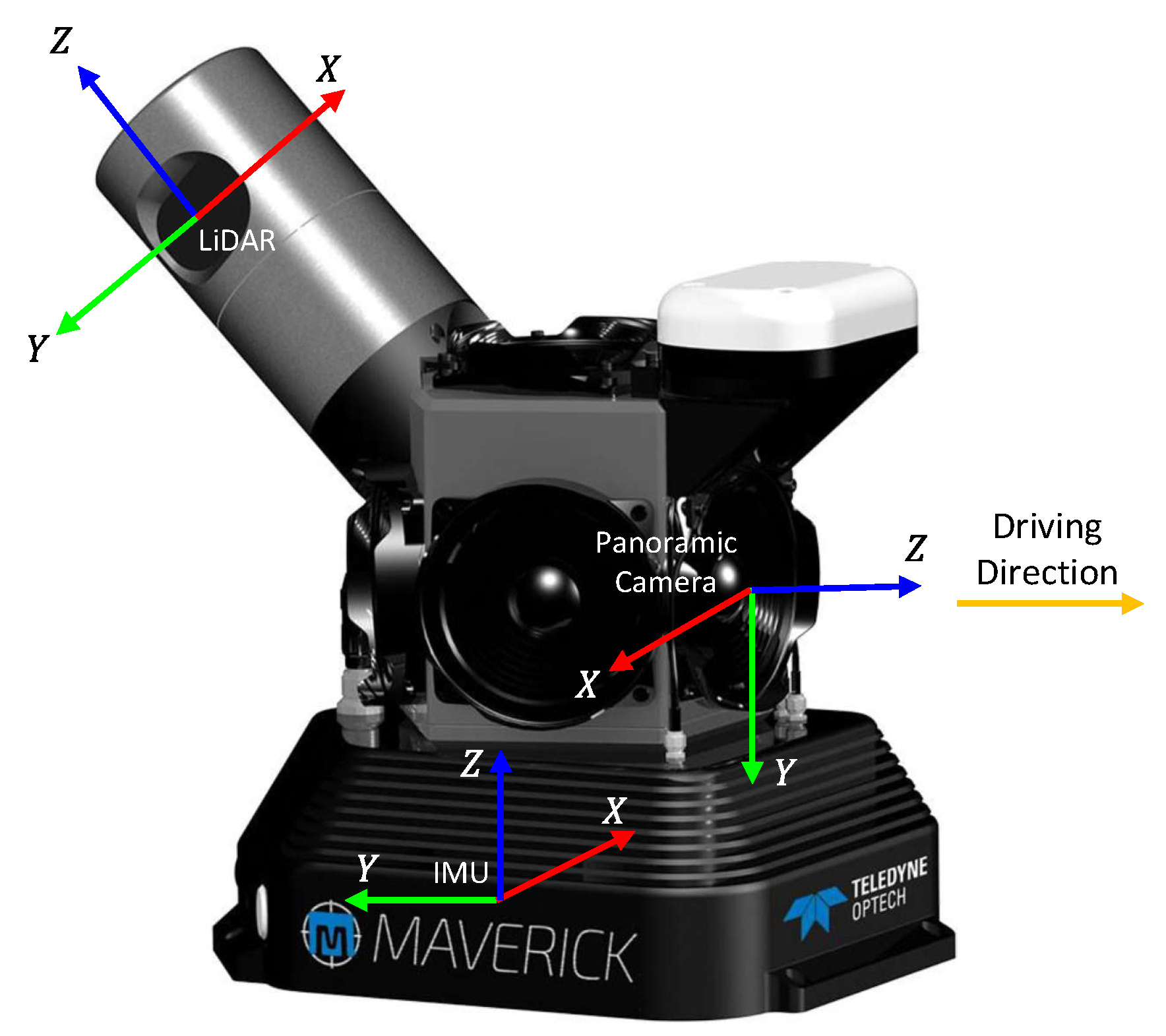

3.2. Maverick MMS and Notation

- IMU calibration: The IMU was calibrated first. The Maverick MMS incorporates the NovAtel SPAN on OEM6, which combines GNSS technology with advanced inertial sensors for positioning and navigation. In the GNSS-IMU system, calibration begins with initial values from “SETIMUTOANTOFFSET” (or NVM). The “LEVERARMCALIBRATE” command is then used to control the IMU-to-antenna lever arm calibration. The calibration runs for 600 s or until the standard deviation falls below 0.05 m, at which point the estimated lever arm is considered to have converged to an acceptable level.

- LiDAR calibration: To accurately determine the position of the LiDAR relative to the body frame, a selection of control points on the wall and ground was made. Using the mission data collected by Maverick, a self-calibration method was employed, which involved time corrections, LiDAR channel corrections, boresight corrections, and position corrections. The self-calibration process was repeated until the boresight values were corrected to be below 0.008 degrees.

- Panoramic camera calibration: The six cameras were calibrated using Zhang’s method [39], which involves capturing checkerboard patterns from different orientations with all cameras. The calibration procedure consists of a closed-form solution followed by a non-linear refinement based on the maximum likelihood criterion. Afterward, LynxView was used to boresight the camera with respect to the body frame. This step involves selecting LiDAR Surveyed Lines and Camera Lines and formulating the constraint as a non-linear optimization problem to determine the optimum rigid transformation.
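The stopping criteria quoted in the calibration steps above can be written as simple checks. The sketch below only models the decision rules (600 s or a standard deviation below 0.05 m for the lever arm; boresight corrections below 0.008 degrees for the LiDAR). It does not call any NovAtel or LynxView interface, and the function names and sample values are hypothetical.

```python
def lever_arm_stop(samples, max_duration_s=600.0, std_threshold_m=0.05):
    """Return (elapsed_s, reason) at which the lever-arm calibration would end.

    `samples` is an iterable of (elapsed_s, std_m) pairs in time order.
    Returns None if neither criterion is met by the end of the samples.
    """
    for elapsed_s, std_m in samples:
        if std_m < std_threshold_m:
            return elapsed_s, "converged"
        if elapsed_s >= max_duration_s:
            return elapsed_s, "timeout"
    return None

def boresight_converged(corrections_deg, tolerance_deg=0.008):
    """True once every boresight correction of an iteration is below the tolerance."""
    return all(abs(c) < tolerance_deg for c in corrections_deg)

# Hypothetical progress logs, for illustration only.
fast_run = lever_arm_stop([(100.0, 0.20), (200.0, 0.10), (300.0, 0.04)])
slow_run = lever_arm_stop([(300.0, 0.20), (600.0, 0.10)])
```

With these made-up logs, the first run stops at 300 s because the standard deviation drops below the threshold, while the second stops at the 600 s limit.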

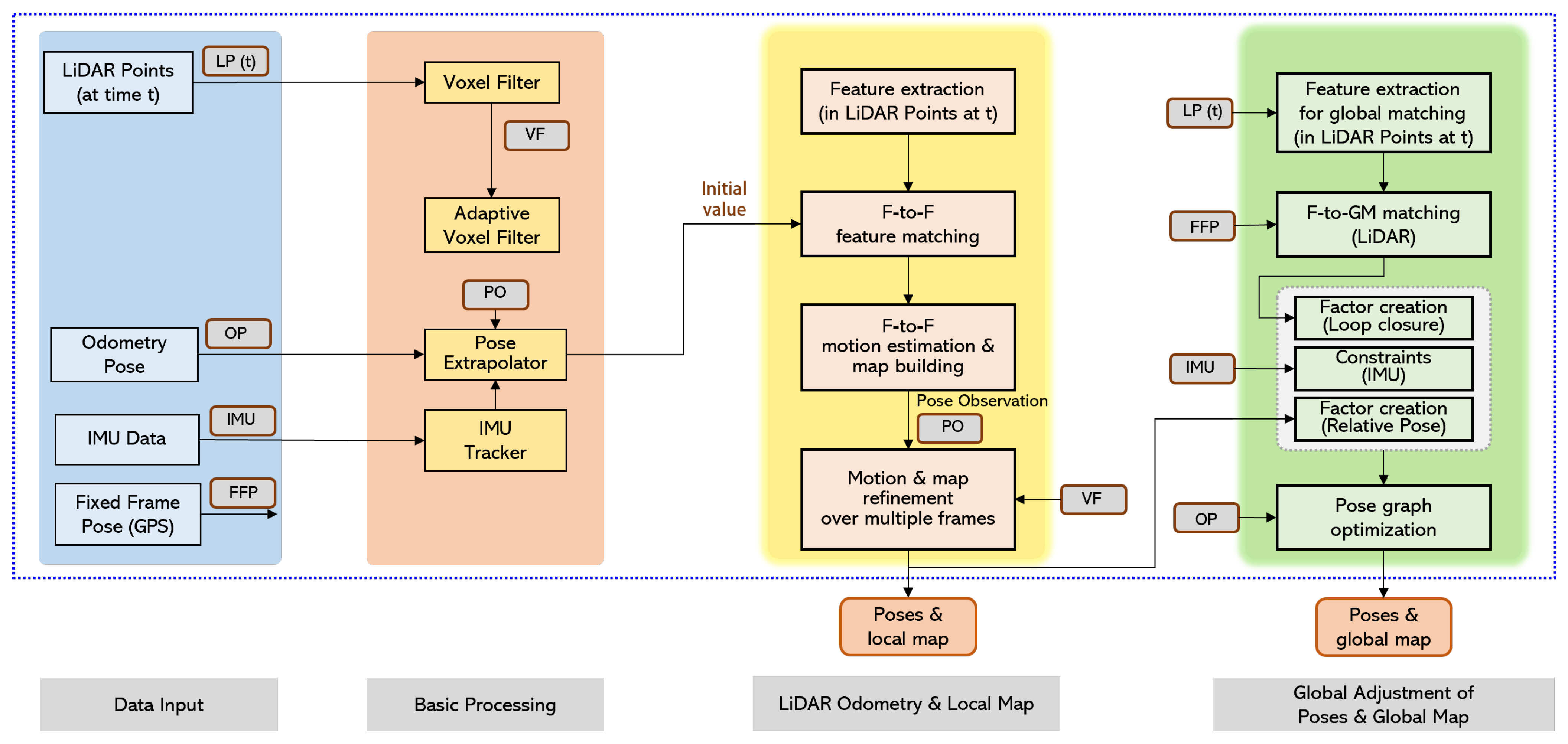

3.3. Google Cartographer

3.3.1. Local Map Construction

3.3.2. Ceres Scan Matching

3.4. RPV-SLAM with Early Fusion

3.4.1. Feature and Range Module

3.4.2. Tracking Module

3.5. PVL-Cartographer SLAM with Pose-Graph-Based Middle Fusion

3.6. Global Map Optimization and Loop Closure for PVL-Cartographer

4. Experiments

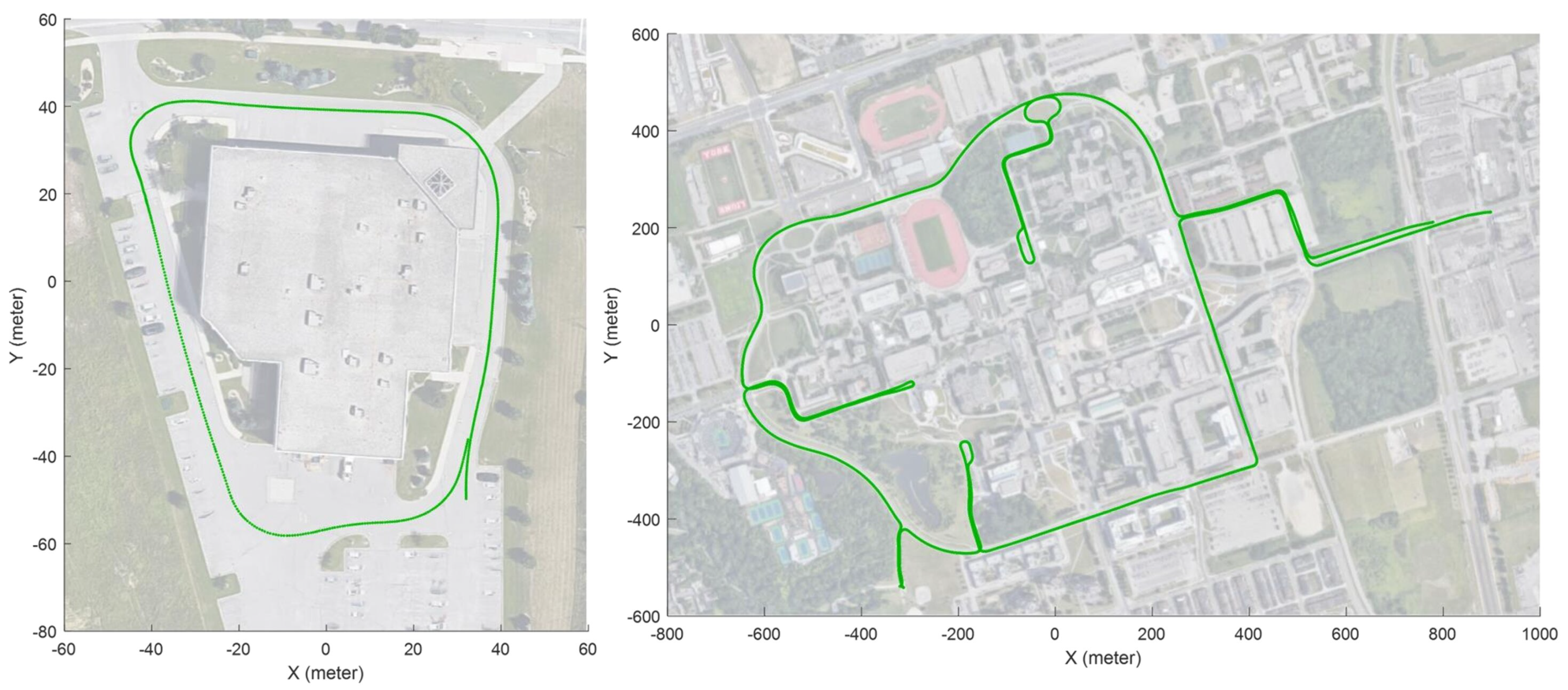

4.1. Dataset

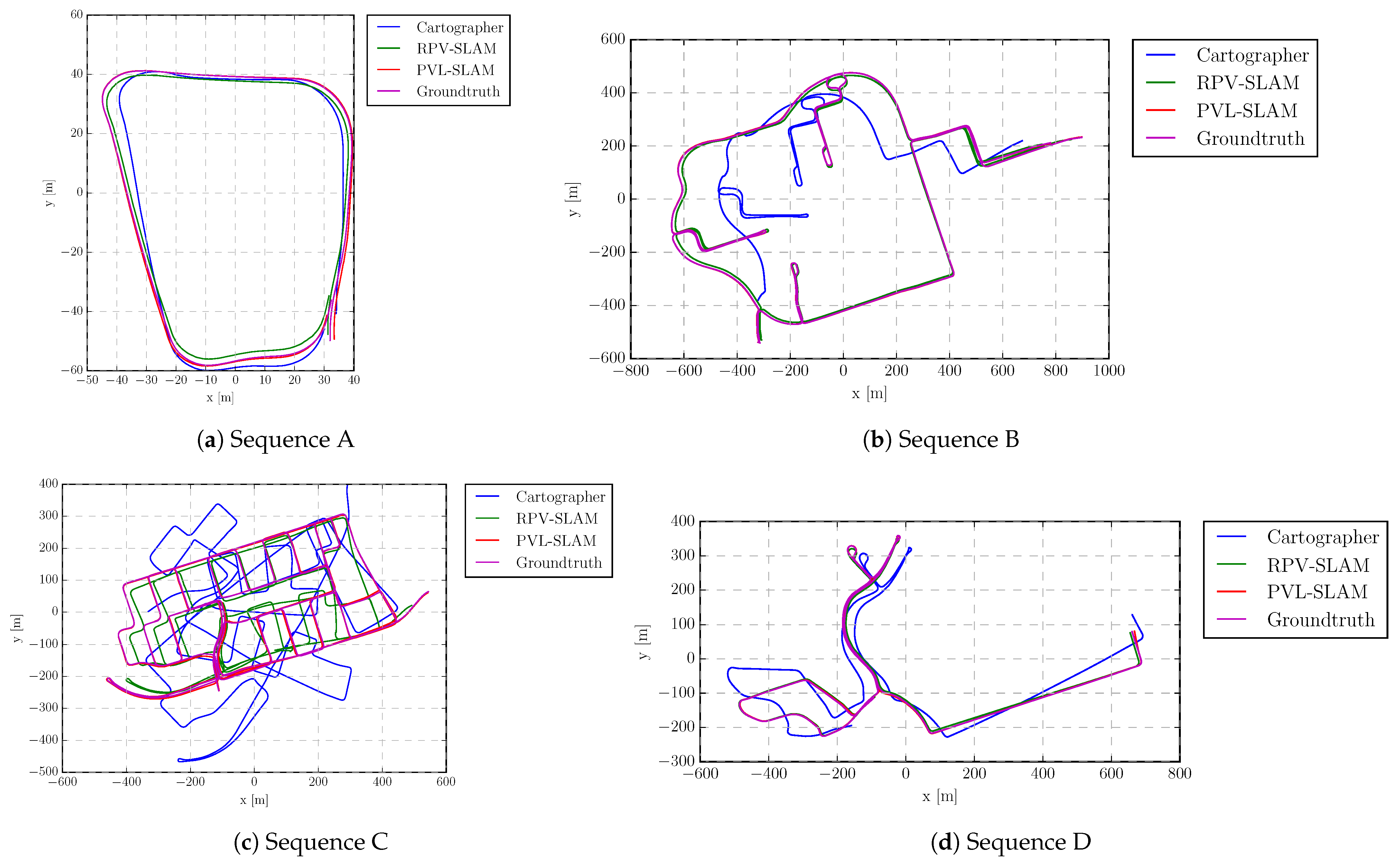

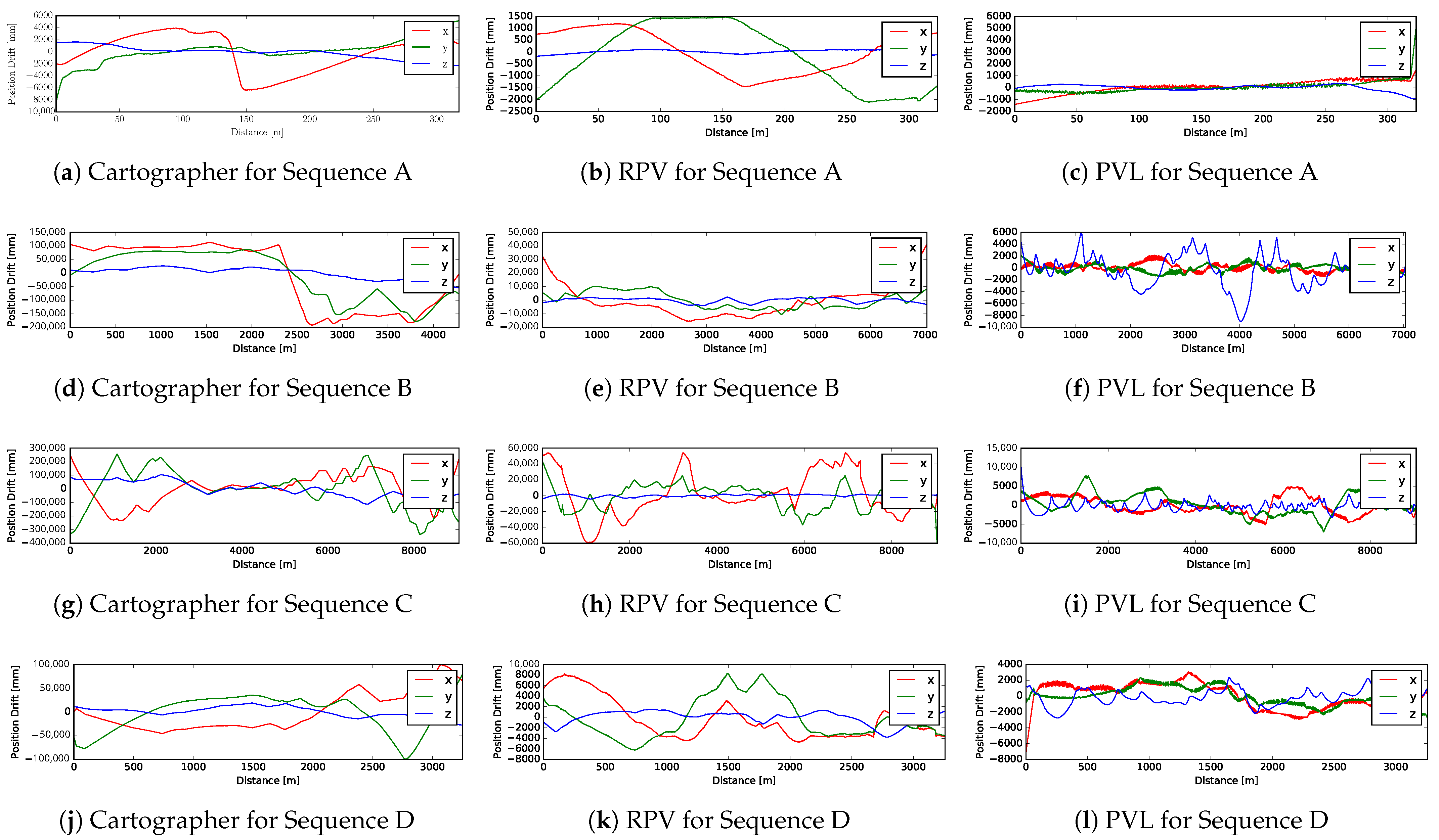

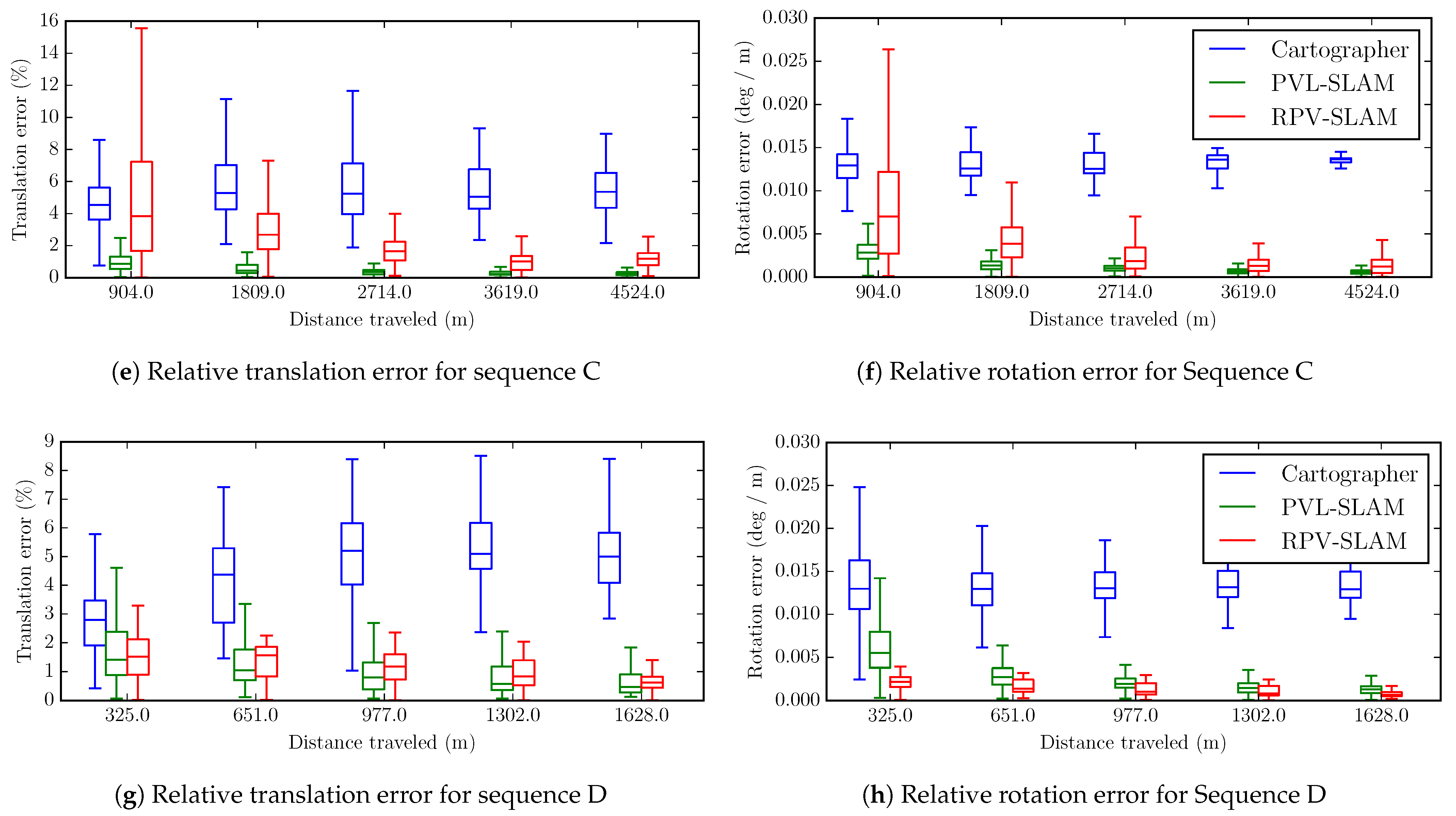

4.2. Results

4.3. Discussion

5. Conclusions

- First, by using advanced depth estimation or completion methods, more comprehensive range maps can be created, allowing for the overlay of ranges on additional visual features;

- Second, integrating range measurements into both local and global bundle adjustments could enhance the system’s accuracy;

- Third, efforts are underway to upgrade the existing PVL-Cartographer SLAM to a visual-LiDAR-IMU-GPS SLAM system featuring a more tightly integrated pose graph or factor graph;

- Fourth, the system could be expanded by developing a SLAM pipeline that incorporates both visual and LiDAR features;

- Fifth, applying deep neural network techniques for feature classification and pose correction may improve the system’s overall performance;

- Finally, the panoramic-vision-LiDAR fusion method could be explored in other application areas, such as object detection in RGB imagery acquired by unmanned aerial vehicles (UAVs) [42]. Combining wide-FOV panoramic images with LiDAR data should improve the performance of transfer-learning-based methods for small-object detection.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Huai, J.; Zhang, Y.; Yilmaz, A. Real-time large scale 3D reconstruction by fusing Kinect and IMU data. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume II-3/W5, 2015 ISPRS Geospatial Week 2015, La Grande Motte, France, 28 September–3 October 2015; Volume 2.

- Alsadik, B. Ideal angular orientation of selected 64-channel multi beam lidars for mobile mapping systems. Remote Sens. 2020, 12, 510.

- Lin, M.; Cao, Q.; Zhang, H. PVO: Panoramic visual odometry. In Proceedings of the 2018 3rd International Conference on Advanced Robotics and Mechatronics (ICARM), Singapore, 18–20 July 2018; pp. 491–496.

- Tardif, J.P.; Pavlidis, Y.; Daniilidis, K. Monocular visual odometry in urban environments using an omnidirectional camera. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 2531–2538.

- Shi, Y.; Ji, S.; Shi, Z.; Duan, Y.; Shibasaki, R. GPS-supported visual SLAM with a rigorous sensor model for a panoramic camera in outdoor environments. Sensors 2012, 13, 119–136.

- Ji, S.; Qin, Z.; Shan, J.; Lu, M. Panoramic SLAM from a multiple fisheye camera rig. ISPRS J. Photogramm. Remote Sens. 2020, 159, 169–183.

- Sumikura, S.; Shibuya, M.; Sakurada, K. OpenVSLAM: A versatile visual SLAM framework. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2292–2295.

- Zhang, J.; Kaess, M.; Singh, S. Real-time depth enhanced monocular odometry. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 4973–4980.

- Graeter, J.; Wilczynski, A.; Lauer, M. LIMO: Lidar-monocular visual odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 7872–7879.

- Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A tutorial on graph-based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2, 31–43.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Proceedings, Part II 13, Zurich, Switzerland, 6–12 September 2014; pp. 834–849.

- Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- Kerl, C.; Sturm, J.; Cremers, D. Robust odometry estimation for RGB-D cameras. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3748–3754.

- Endres, F.; Hess, J.; Sturm, J.; Cremers, D.; Burgard, W. 3-D mapping with an RGB-D camera. IEEE Trans. Robot. 2013, 30, 177–187.

- Henry, P.; Krainin, M.; Herbst, E.; Ren, X.; Fox, D. RGB-D mapping: Using Kinect-style depth cameras for dense 3D modeling of indoor environments. Int. J. Robot. Res. 2012, 31, 647–663.

- Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A. KinectFusion: Real-time dense surface mapping and tracking. In Proceedings of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.

- Nießner, M.; Dai, A.; Fisher, M. Combining Inertial Navigation and ICP for Real-time 3D Surface Reconstruction. In Proceedings of the Eurographics (Short Papers), Strasbourg, France, 7–11 April 2014; pp. 13–16.

- Kang, J.; Zhang, Y.; Liu, Z.; Sit, A.; Sohn, G. RPV-SLAM: Range-augmented panoramic visual SLAM for mobile mapping system with panoramic camera and tilted LiDAR. In Proceedings of the 2021 20th International Conference on Advanced Robotics (ICAR), Ljubljana, Slovenia, 6–10 December 2021; pp. 1066–1072.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems, Rome, Italy, 13–15 July 2014; Volume 2, pp. 1–9.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Zhang, J.; Singh, S. Visual-lidar odometry and mapping: Low-drift, robust, and fast. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2174–2181.

- Lin, J.; Zhang, F. Loam livox: A fast, robust, high-precision LiDAR odometry and mapping package for LiDARs of small FoV. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 3126–3131.

- Li, L.; Kong, X.; Zhao, X.; Li, W.; Wen, F.; Zhang, H.; Liu, Y. SA-LOAM: Semantic-aided LiDAR SLAM with loop closure. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 7627–7634.

- Mendes, E.; Koch, P.; Lacroix, S. ICP-based pose-graph SLAM. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 195–200.

- Behley, J.; Stachniss, C. Efficient Surfel-Based SLAM using 3D Laser Range Data in Urban Environments. In Proceedings of the Robotics: Science and Systems, Pittsburgh, PA, USA, 26–30 June 2018; Volume 2018, p. 59.

- Chen, X.; Milioto, A.; Palazzolo, E.; Giguere, P.; Behley, J.; Stachniss, C. SuMa++: Efficient LiDAR-based semantic SLAM. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 4530–4537.

- Shan, T.; Englot, B. LeGO-LOAM: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142.

- Shan, T.; Englot, B.; Ratti, C.; Rus, D. LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5692–5698.

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Dwijotomo, A.; Abdul Rahman, M.A.; Mohammed Ariff, M.H.; Zamzuri, H.; Wan Azree, W.M.H. Cartographer SLAM method for optimization with an adaptive multi-distance scan scheduler. Appl. Sci. 2020, 10, 347.

- Nüchter, A.; Bleier, M.; Schauer, J.; Janotta, P. Continuous-time SLAM—improving Google’s Cartographer 3D mapping. In Latest Developments in Reality-Based 3D Surveying and Modelling; Remondino, F., Georgopoulos, A., González-Aguilera, D., Agrafiotis, P., Eds.; MDPI: Basel, Switzerland, 2018; pp. 53–73.

- Elhashash, M.; Albanwan, H.; Qin, R. A Review of Mobile Mapping Systems: From Sensors to Applications. Sensors 2022, 22, 4262.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Zhang, Z.; Scaramuzza, D. A Tutorial on Quantitative Trajectory Evaluation for Visual(-Inertial) Odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting Tassels in RGB UAV Imagery With Improved YOLOv5 Based on Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

| Rotation Angles [Degrees] | Position [Meters] |
|---|---|
| [179.579305611, −44.646008315, 0.600971839] | [0.111393, 0.010340, −0.181328] |
| [0, 0, −179.9] | [−0.031239, 0, −0.1115382] |
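As an illustration of how a rotation-angle and position pair such as those in the table above defines a rigid transformation, the sketch below builds the rotation matrix and applies it to a point. The table does not state the rotation convention or the direction of the transform, so the roll-pitch-yaw order with R = Rz(yaw) Ry(pitch) Rx(roll) and the sensor-to-body direction are assumptions, as are the function names.

```python
import math

def rotation_zyx(roll_deg, pitch_deg, yaw_deg):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll); the convention is assumed."""
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    cy, sy = math.cos(y), math.sin(y)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def transform_point(rotation, translation, point):
    """Apply p' = R p + t to a 3D point given as a list of three floats."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]

# Values from the second table row: angles in degrees, position in metres.
R = rotation_zyx(0.0, 0.0, -179.9)
t = [-0.031239, 0.0, -0.1115382]

# A hypothetical point 1 m along the sensor x-axis, mapped to the (assumed) body frame.
p_body = transform_point(R, t, [1.0, 0.0, 0.0])
```

Under these assumptions the second row is close to a half-turn about the vertical axis, so the sensor x-axis ends up pointing almost exactly opposite to the body x-axis.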

| | Sequence A | Sequence B | Sequence C | Sequence D |
|---|---|---|---|---|
| Sensors | Maverick MMS: Ladybug-5 + Velodyne HDL-32 + IMU | | | |
| Region | Parking lot | Campus area | Residential area | Residential area |
| Camera frames | 717 | 8382 | 10,778 | 4500 |
| Image size | 4096 × 2048 | 8000 × 4000 | 8000 × 4000 | 8000 × 4000 |
| LiDAR frames | 1432 | 17,395 | 22,992 | 9615 |
| Distance travelled | 324 m | 7035 m | 7965 m | 3634 m |
| Running time | 94 s | 19 min | 22 min | 10 min |
| Ground truth | GNSS/IMU | GNSS/IMU | GNSS/IMU | GNSS/IMU |
| Loop | One small loop | One large loop + a few small loops | Many medium-size loops | A few loops |
| Dynamic objects | Parking, barrier and person | Car, bus and person | Car, bus and person | Car, bus and person |
| Compared methods | ORB-SLAM2 (camera-only) | | | |
| | VINS-Mono-SLAM (camera + IMU) | | | |
| | LOAM (LiDAR) | | | |
| | Google-Cartographer-SLAM (LiDAR + IMU) | | | |
| | RPV-SLAM (Panoramic camera + LiDAR) | | | |
| | Our PVL-Cartographer SLAM (Panoramic camera + LiDAR + IMU) | | | |

| | ORB SLAM2 | VINS-Mono | LOAM | Cartographer | RPV-SLAM | PVL-SLAM |
|---|---|---|---|---|---|---|
| Sequence A | 5.894 | 3.9974 | Fail | 4.023 | 1.618 | 0.766 |
| Sequence B | 100.870 | 86.897 | Fail | 152.230 | 12.910 | 2.599 |
| Sequence C | 155.908 | 160.765 | Fail | 183.619 | 30.661 | 3.739 |
| Sequence D | 10.665 | 12.875 | Fail | 58.576 | 5.673 | 2.204 |
| Overall | 68.3343 | 66.1336 | Fail | 99.612 | 12.7155 | 2.327 |
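The "Overall" row of the table above appears to be the unweighted mean of the four per-sequence values. This is an observation from the numbers rather than a statement in the source, and the short check below reproduces it for every method that did not fail.

```python
from statistics import mean

# Per-sequence errors (Sequences A to D) copied from the table above.
per_sequence = {
    "ORB SLAM2": [5.894, 100.870, 155.908, 10.665],
    "VINS-Mono": [3.9974, 86.897, 160.765, 12.875],
    "Cartographer": [4.023, 152.230, 183.619, 58.576],
    "RPV-SLAM": [1.618, 12.910, 30.661, 5.673],
    "PVL-SLAM": [0.766, 2.599, 3.739, 2.204],
}

# "Overall" row as reported in the table.
reported_overall = {
    "ORB SLAM2": 68.3343,
    "VINS-Mono": 66.1336,
    "Cartographer": 99.612,
    "RPV-SLAM": 12.7155,
    "PVL-SLAM": 2.327,
}

# Unweighted mean over the four sequences, to compare against the reported row.
overall = {name: mean(values) for name, values in per_sequence.items()}
max_gap = max(abs(overall[name] - reported_overall[name]) for name in overall)
```

Because the mean is unweighted, the long Sequences B and C dominate the overall figure for the methods that drift, even though each sequence counts equally.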

| | ORB SLAM2 | VINS-Mono | LOAM | Cartographer | RPV-SLAM | PVL-SLAM |
|---|---|---|---|---|---|---|
| Sequence A | 7.769 | 4.685 | Fail | 6.789 | 3.934 | 3.027 |
| | 0.0677 | 0.0410 | Fail | 0.0507 | 0.0040 | 0.0236 |
| Sequence B | 13.770 | 10.779 | Fail | 15.047 | 3.096 | 1.273 |
| | 0.0099 | 0.0109 | Fail | 0.0090 | 0.0009 | 0.0019 |
| Sequence C | 4.879 | 3.987 | Fail | 5.764 | 3.752 | 0.853 |
| | 0.0289 | 0.0301 | Fail | 0.0133 | 0.0057 | 0.0018 |
| Sequence D | 2.878 | 3.085 | Fail | 4.650 | 1.347 | 2.555 |
| | 0.0148 | 0.0178 | Fail | 0.0137 | 0.0017 | 0.0035 |
| Overall | 7.324 | 5.634 | Fail | 9.843 | 2.393 | 1.069 |
| | 0.030 | 0.025 | Fail | 0.059 | 0.002 | 0.003 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Kang, J.; Sohn, G. PVL-Cartographer: Panoramic Vision-Aided LiDAR Cartographer-Based SLAM for Maverick Mobile Mapping System. Remote Sens. 2023, 15, 3383. https://doi.org/10.3390/rs15133383
