Flying Laboratory of Imaging Systems: Fusion of Airborne Hyperspectral and Laser Scanning for Ecosystem Research

Abstract

1. Introduction

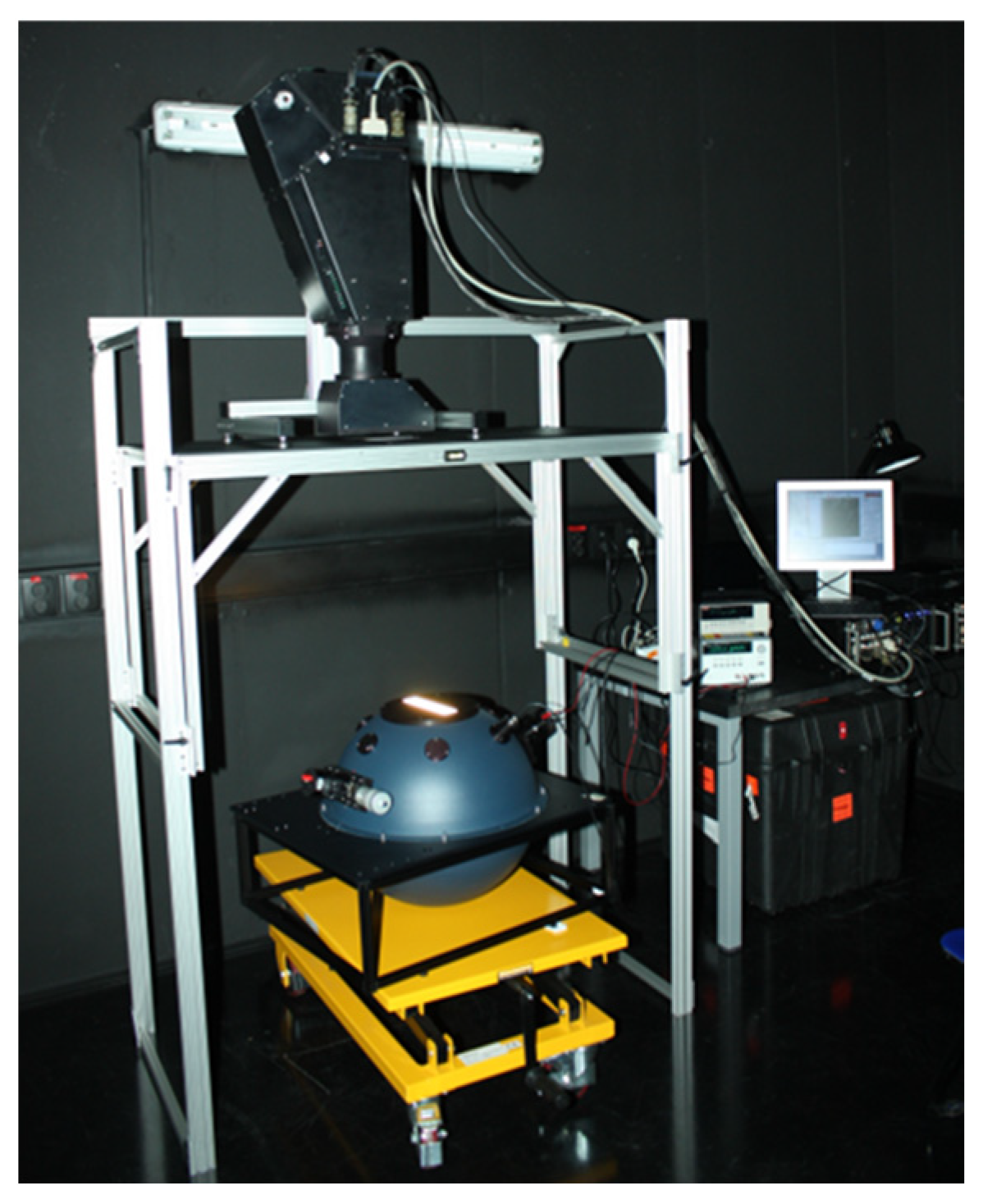

2. System Design and Instruments

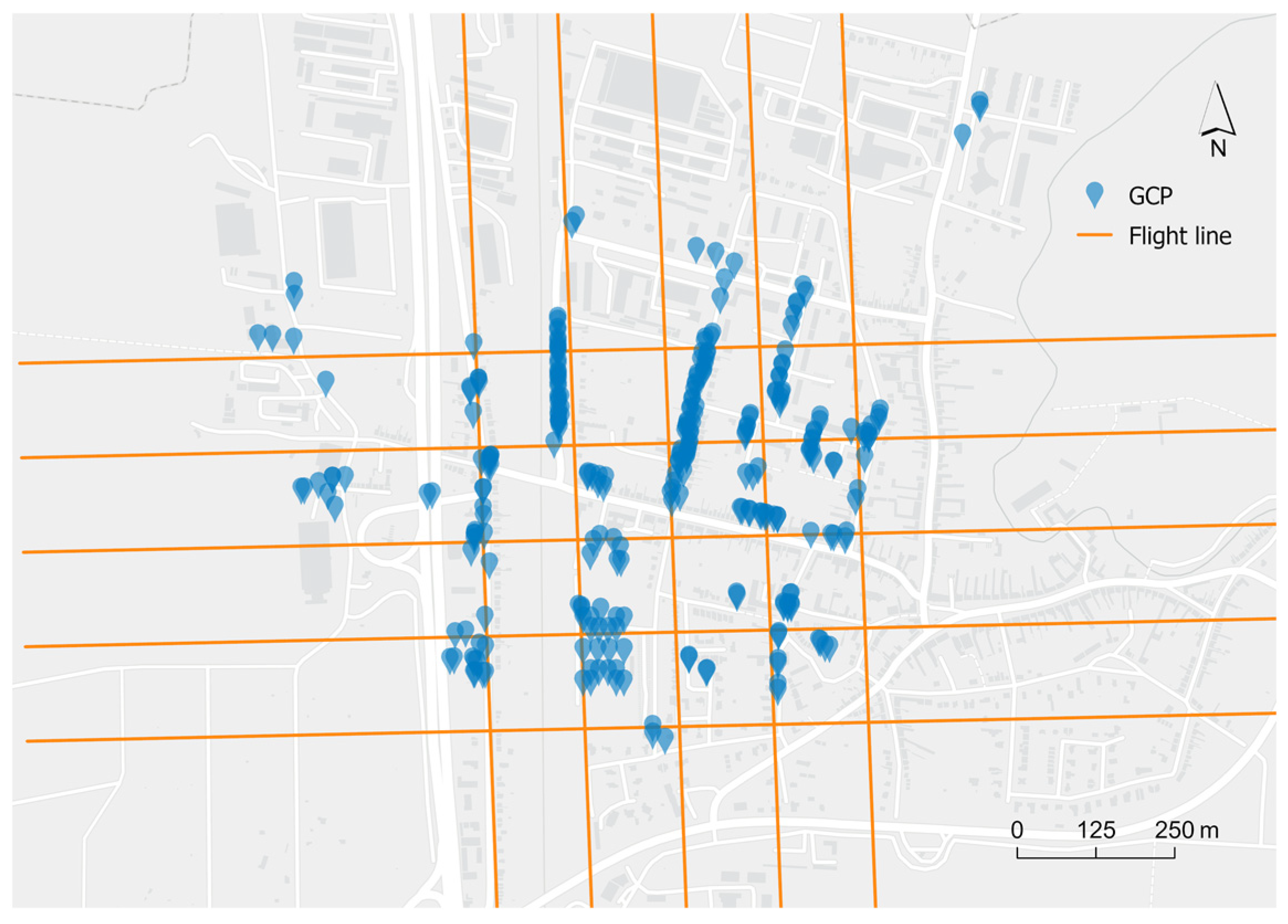

3. Flight Planning and Data Acquisition

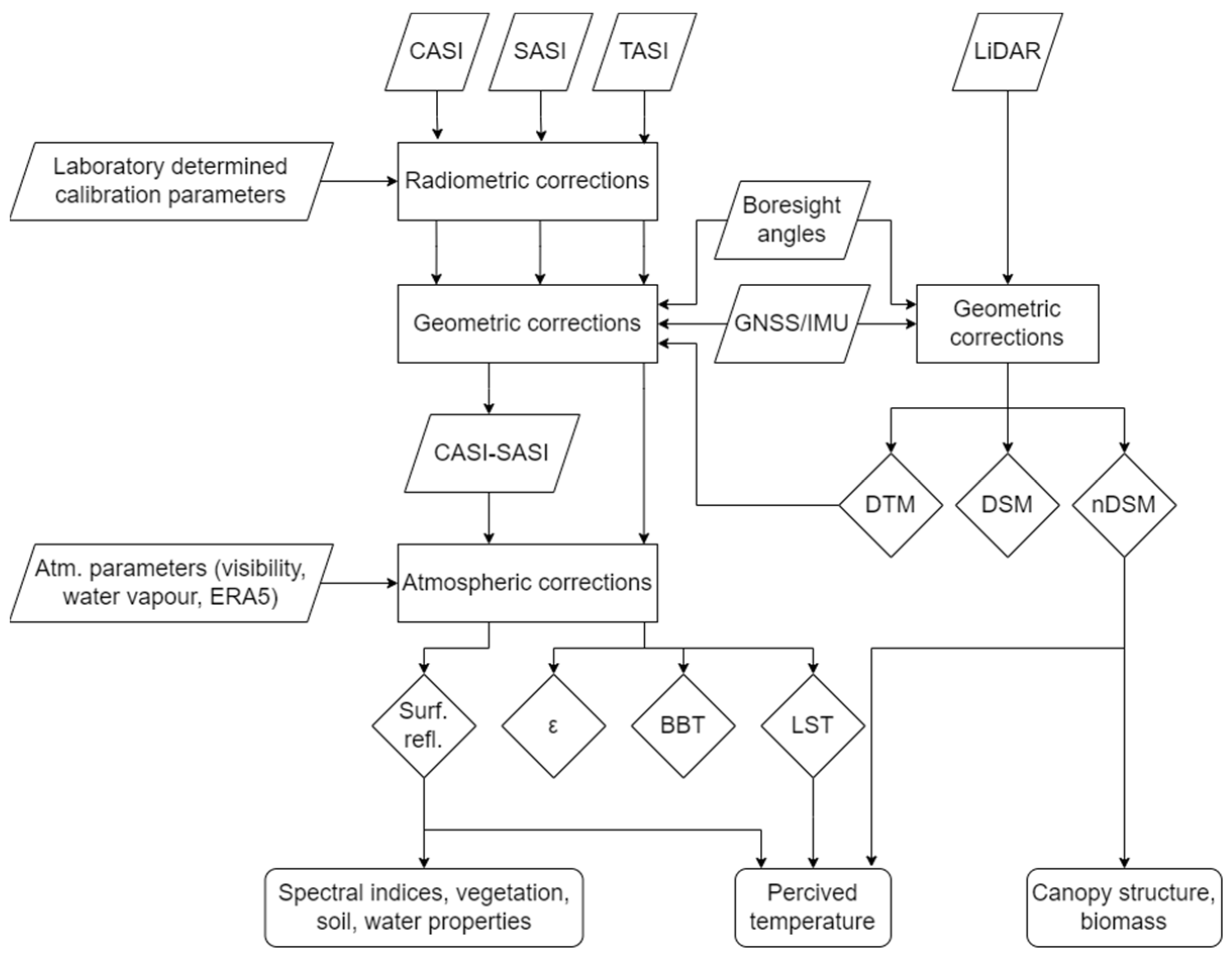

4. Data Pre-Processing and Products

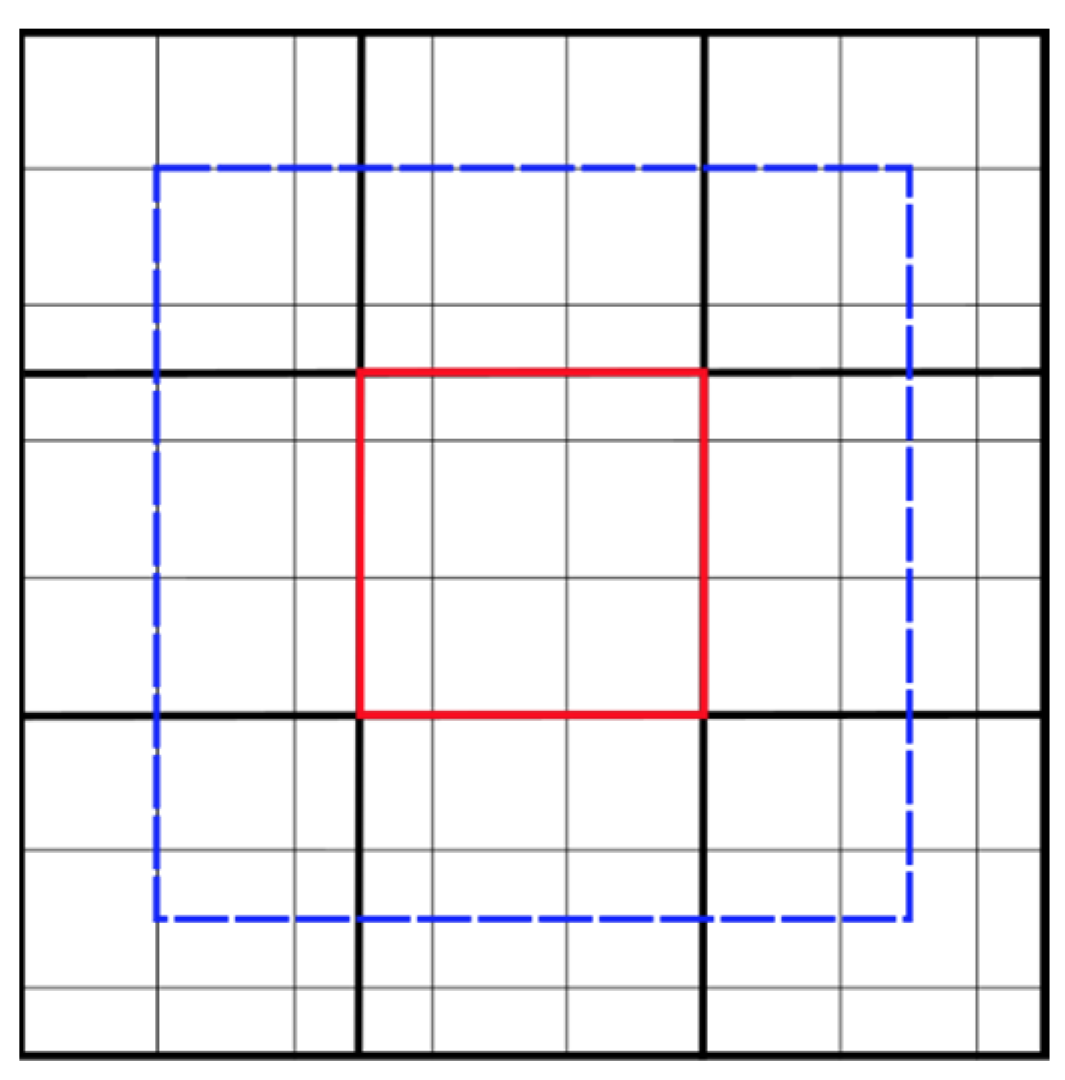

4.1. Boresight Alignment

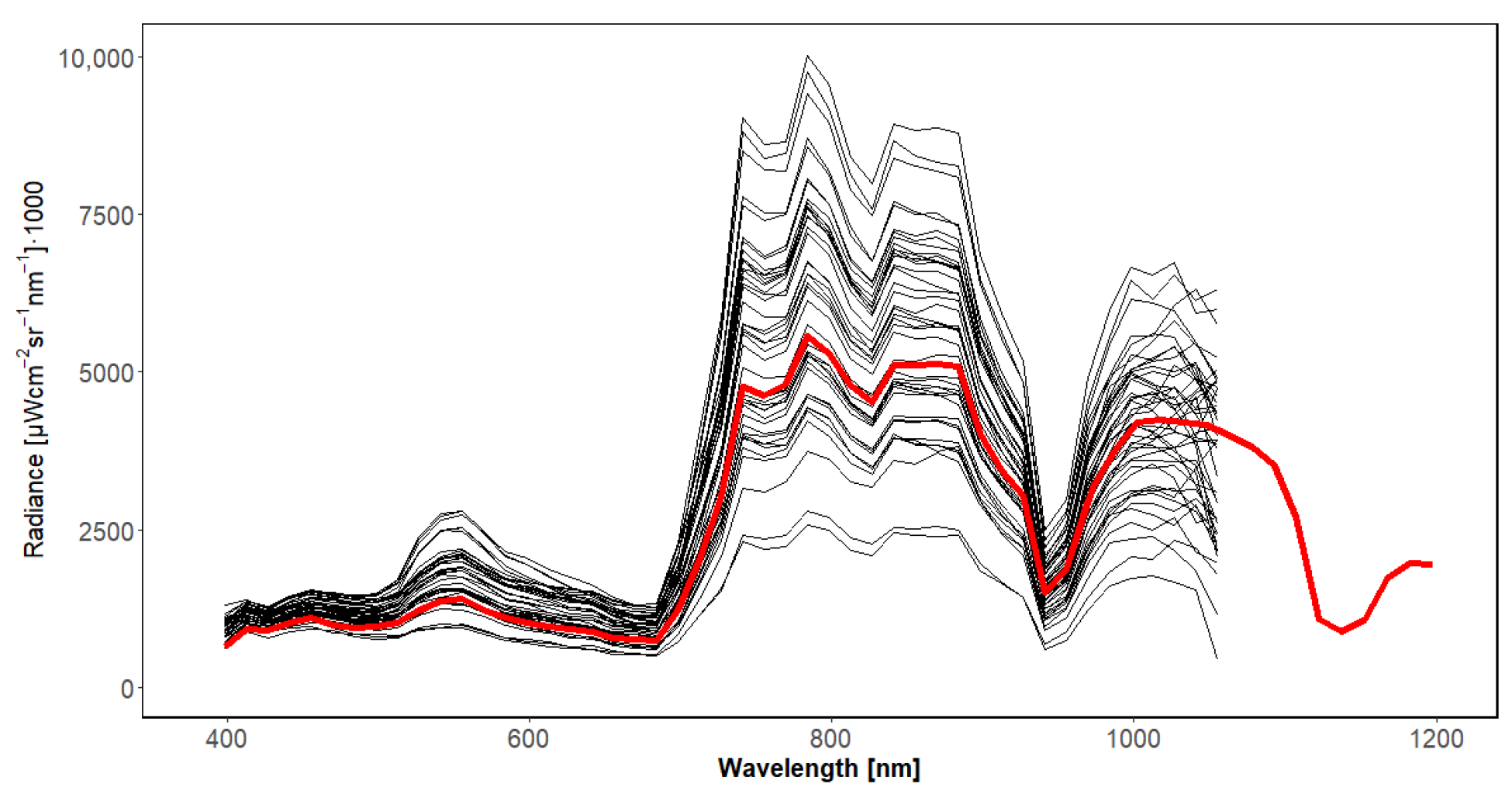

4.2. CASI and SASI Data

4.2.1. Radiometric Corrections

4.2.2. Georeferencing

4.2.3. Fusion of CASI and SASI Data

4.2.4. Atmospheric Correction

4.3. TASI Data

4.3.1. Thermal Radiometric Corrections

4.3.2. Thermal Atmospheric Corrections

- Land surface temperature (kinetic temperature) [K] (Figure 7b);

- Land surface emissivity [-];

- Land leaving radiance [W m−2 sr−1 m−1];

- Broadband brightness temperature for emissivity = 1 [K].
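The relationship between a radiance product and a brightness temperature can be illustrated by inverting Planck's law for an ideal blackbody (emissivity = 1). The sketch below is a minimal, monochromatic illustration and not the processing chain used for TASI data: the function names are hypothetical, the radiance is assumed to be spectral radiance in W m−2 sr−1 m−1, and the reflected downwelling radiance is ignored.

```python
import math

# Physical constants (SI, exact values from the 2019 SI redefinition)
H = 6.62607015e-34    # Planck constant [J s]
C = 2.99792458e8      # speed of light in vacuum [m s-1]
K_B = 1.380649e-23    # Boltzmann constant [J K-1]


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance [W m-2 sr-1 m-1] at one wavelength."""
    a = 2.0 * H * C**2 / wavelength_m**5
    b = H * C / (wavelength_m * K_B * temperature_k)
    return a / math.expm1(b)


def brightness_temperature(wavelength_m: float, radiance: float) -> float:
    """Temperature [K] of a blackbody (emissivity = 1) emitting `radiance`."""
    a = 2.0 * H * C**2 / wavelength_m**5
    return H * C / (wavelength_m * K_B * math.log1p(a / radiance))


if __name__ == "__main__":
    wavelength = 10e-6      # 10 um, inside the LWIR range (assumed example value)
    kinetic_t = 300.0       # assumed surface kinetic temperature [K]
    emissivity = 0.95       # assumed grey-body emissivity

    blackbody = planck_radiance(wavelength, kinetic_t)
    # Emitted radiance of a grey body; reflected sky radiance is neglected here.
    emitted = emissivity * blackbody

    print(f"blackbody radiance:     {blackbody:.3e} W m-2 sr-1 m-1")
    print(f"brightness temperature: {brightness_temperature(wavelength, emitted):.2f} K")
```

Because the assumed emissivity is below 1, the brightness temperature computed this way falls below the kinetic temperature, which is why temperature and emissivity have to be separated before a land surface temperature can be reported.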

4.4. Laser Scanning Data

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Pettorelli, N.; Laurance, W.F.; O’Brien, T.G.; Wegmann, M.; Nagendra, H.; Turner, W. Satellite Remote Sensing for Applied Ecologists: Opportunities and Challenges. J. Appl. Ecol. 2014, 51, 839–848. [Google Scholar] [CrossRef]

- Ustin, S.L.; Middleton, E.M. Current and Near-Term Advances in Earth Observation for Ecological Applications. Ecol. Process. 2021, 10, 1. [Google Scholar] [CrossRef] [PubMed]

- Xiao, J.; Chevallier, F.; Gomez, C.; Guanter, L.; Hicke, J.A.; Huete, A.R.; Ichii, K.; Ni, W.; Pang, Y.; Rahman, A.F.; et al. Remote Sensing of the Terrestrial Carbon Cycle: A Review of Advances over 50 Years. Remote Sens. Environ. 2019, 233, 111383. [Google Scholar] [CrossRef]

- Farley, S.S.; Dawson, A.; Goring, S.J.; Williams, J.W. Situating Ecology as a Big-Data Science: Current Advances, Challenges, and Solutions. BioScience 2018, 68, 563–576. [Google Scholar] [CrossRef]

- Fisher, R.A.; Koven, C.D.; Anderegg, W.R.L.; Christoffersen, B.O.; Dietze, M.C.; Farrior, C.E.; Holm, J.A.; Hurtt, G.C.; Knox, R.G.; Lawrence, P.J.; et al. Vegetation Demographics in Earth System Models: A Review of Progress and Priorities. Glob. Chang. Biol. 2018, 24, 35–54. [Google Scholar] [CrossRef]

- Raffa, K.F.; Aukema, B.H.; Bentz, B.J.; Carroll, A.L.; Hicke, J.A.; Turner, M.G.; Romme, W.H. Cross-Scale Drivers of Natural Disturbances Prone to Anthropogenic Amplification: The Dynamics of Bark Beetle Eruptions. BioScience 2008, 58, 501–517. [Google Scholar] [CrossRef]

- Berger, K.; Machwitz, M.; Kycko, M.; Kefauver, S.C.; Van Wittenberghe, S.; Gerhards, M.; Verrelst, J.; Atzberger, C.; Van Der Tol, C.; Damm, A.; et al. Multi-Sensor Spectral Synergies for Crop Stress Detection and Monitoring in the Optical Domain: A Review. Remote Sens. Environ. 2022, 280, 113198. [Google Scholar] [CrossRef]

- Eitel, J.U.H.; Höfle, B.; Vierling, L.A.; Abellán, A.; Asner, G.P.; Deems, J.S.; Glennie, C.L.; Joerg, P.C.; LeWinter, A.L.; Magney, T.S.; et al. Beyond 3-D: The New Spectrum of Lidar Applications for Earth and Ecological Sciences. Remote Sens. Environ. 2016, 186, 372–392. [Google Scholar] [CrossRef]

- Kamoske, A.G.; Dahlin, K.M.; Read, Q.D.; Record, S.; Stark, S.C.; Serbin, S.P.; Zarnetske, P.L.; Dornelas, M. Towards Mapping Biodiversity from above: Can Fusing Lidar and Hyperspectral Remote Sensing Predict Taxonomic, Functional, and Phylogenetic Tree Diversity in Temperate Forests? Glob. Ecol. Biogeogr. 2022, 31, 1440–1460. [Google Scholar] [CrossRef]

- Lausch, A.; Borg, E.; Bumberger, J.; Dietrich, P.; Heurich, M.; Huth, A.; Jung, A.; Klenke, R.; Knapp, S.; Mollenhauer, H.; et al. Understanding Forest Health with Remote Sensing, Part III: Requirements for a Scalable Multi-Source Forest Health Monitoring Network Based on Data Science Approaches. Remote Sens. 2018, 10, 1120. [Google Scholar] [CrossRef]

- Senf, C. Seeing the System from Above: The Use and Potential of Remote Sensing for Studying Ecosystem Dynamics. Ecosystems 2022, 25, 1719–1737. [Google Scholar] [CrossRef]

- Hansen, M.C.; Potapov, P.V.; Moore, R.; Hancher, M.; Turubanova, S.A.; Tyukavina, A.; Thau, D.; Stehman, S.V.; Goetz, S.J.; Loveland, T.R.; et al. High-Resolution Global Maps of 21st-Century Forest Cover Change. Science 2013, 342, 850–853. [Google Scholar] [CrossRef] [PubMed]

- White, M.A.; de BEURS, K.M.; Didan, K.; Inouye, D.W.; Richardson, A.D.; Jensen, O.P.; O’Keefe, J.; Zhang, G.; Nemani, R.R.; van Leeuwen, W.J.D.; et al. Intercomparison, Interpretation, and Assessment of Spring Phenology in North America Estimated from Remote Sensing for 1982–2006. Glob. Chang. Biol. 2009, 15, 2335–2359. [Google Scholar] [CrossRef]

- Jung, M.; Reichstein, M.; Ciais, P.; Seneviratne, S.I.; Sheffield, J.; Goulden, M.L.; Bonan, G.; Cescatti, A.; Chen, J.; de Jeu, R.; et al. Recent Decline in the Global Land Evapotranspiration Trend Due to Limited Moisture Supply. Nature 2010, 467, 951–954. [Google Scholar] [CrossRef]

- Verrelst, J.; Rivera-Caicedo, J.P.; Reyes-Muñoz, P.; Morata, M.; Amin, E.; Tagliabue, G.; Panigada, C.; Hank, T.; Berger, K. Mapping Landscape Canopy Nitrogen Content from Space Using PRISMA Data. ISPRS J. Photogramm. Remote Sens. 2021, 178, 382–395. [Google Scholar] [CrossRef]

- Bachmann, M.; Alonso, K.; Carmona, E.; Gerasch, B.; Habermeyer, M.; Holzwarth, S.; Krawczyk, H.; Langheinrich, M.; Marshall, D.; Pato, M.; et al. Analysis-Ready Data from Hyperspectral Sensors—The Design of the EnMAP CARD4L-SR Data Product. Remote Sens. 2021, 13, 4536. [Google Scholar] [CrossRef]

- Curnick, D.J.; Davies, A.J.; Duncan, C.; Freeman, R.; Jacoby, D.M.P.; Shelley, H.T.E.; Rossi, C.; Wearn, O.R.; Williamson, M.J.; Pettorelli, N. SmallSats: A New Technological Frontier in Ecology and Conservation? Remote Sens. Ecol. Conserv. 2022, 8, 139–150. [Google Scholar] [CrossRef]

- Colomina, I.; Molina, P. Unmanned Aerial Systems for Photogrammetry and Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef]

- Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicle Forest Remote Sensing—A Systematic Review. Part I: A General Framework. Forests 2021, 12, 327. [Google Scholar] [CrossRef]

- Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicles Forest Remote Sensing—A Systematic Review. Part II: Research Applications. Forests 2021, 12, 397. [Google Scholar] [CrossRef]

- Pavelka, K.; Raeva, P.; Pavelka, K. Evaluating the Performance of Airborne and Ground Sensors for Applications in Precision Agriculture: Enhancing the Postprocessing State-of-the-Art Algorithm. Sensors 2022, 22, 7693. [Google Scholar] [CrossRef] [PubMed]

- Pavelka, K.; Šedina, J.; Pavelka, K. Knud Rasmussen Glacier Status Analysis Based on Historical Data and Moving Detection Using RPAS. Appl. Sci. 2021, 11, 754. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry Applications of UAVs in Europe: A Review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Chadwick, K.; Asner, G. Organismic-Scale Remote Sensing of Canopy Foliar Traits in Lowland Tropical Forests. Remote Sens. 2016, 8, 87. [Google Scholar] [CrossRef]

- Cogliati, S.; Sarti, F.; Chiarantini, L.; Cosi, M.; Lorusso, R.; Lopinto, E.; Miglietta, F.; Genesio, L.; Guanter, L.; Damm, A.; et al. The PRISMA Imaging Spectroscopy Mission: Overview and First Performance Analysis. Remote Sens. Environ. 2021, 262, 112499. [Google Scholar] [CrossRef]

- Cooper, S.; Okujeni, A.; Pflugmacher, D.; Van Der Linden, S.; Hostert, P. Combining Simulated Hyperspectral EnMAP and Landsat Time Series for Forest Aboveground Biomass Mapping. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102307. [Google Scholar] [CrossRef]

- Chlus, A.; Townsend, P.A. Characterizing Seasonal Variation in Foliar Biochemistry with Airborne Imaging Spectroscopy. Remote Sens. Environ. 2022, 275, 113023. [Google Scholar] [CrossRef]

- Novotný, J.; Navrátilová, B.; Janoutová, R.; Oulehle, F.; Homolová, L. Influence of Site-Specific Conditions on Estimation of Forest above Ground Biomass from Airborne Laser Scanning. Forests 2020, 11, 268. [Google Scholar] [CrossRef]

- Chadwick, K.D.; Brodrick, P.G.; Grant, K.; Goulden, T.; Henderson, A.; Falco, N.; Wainwright, H.; Williams, K.H.; Bill, M.; Breckheimer, I.; et al. Integrating Airborne Remote Sensing and Field Campaigns for Ecology and Earth System Science. Methods Ecol. Evol. 2020, 11, 1492–1508. [Google Scholar] [CrossRef]

- Forzieri, G.; Tanteri, L.; Moser, G.; Catani, F. Mapping Natural and Urban Environments Using Airborne Multi-Sensor ADS40–MIVIS–LiDAR Synergies. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 313–323. [Google Scholar] [CrossRef]

- Urban, J.; Pikl, M.; Zemek, F.; Novotný, J. Using Google Street View Photographs to Assess Long-Term Outdoor Thermal Perception and Thermal Comfort in the Urban Environment during Heatwaves. Front. Environ. Sci. 2022, 10, 878341. [Google Scholar] [CrossRef]

- Asner, G.P. Carnegie Airborne Observatory: In-Flight Fusion of Hyperspectral Imaging and Waveform Light Detection and Ranging for Three-Dimensional Studies of Ecosystems. J. Appl. Remote Sens. 2007, 1, 013536. [Google Scholar] [CrossRef]

- Asner, G.P.; Knapp, D.E.; Boardman, J.; Green, R.O.; Kennedy-Bowdoin, T.; Eastwood, M.; Martin, R.E.; Anderson, C.; Field, C.B. Carnegie Airborne Observatory-2: Increasing Science Data Dimensionality via High-Fidelity Multi-Sensor Fusion. Remote Sens. Environ. 2012, 124, 454–465. [Google Scholar] [CrossRef]

- Kampe, T.U. NEON: The First Continental-Scale Ecological Observatory with Airborne Remote Sensing of Vegetation Canopy Biochemistry and Structure. J. Appl. Remote Sens. 2010, 4, 043510. [Google Scholar] [CrossRef]

- Cook, B.; Corp, L.; Nelson, R.; Middleton, E.; Morton, D.; McCorkel, J.; Masek, J.; Ranson, K.; Ly, V.; Montesano, P. NASA Goddard’s LiDAR, Hyperspectral and Thermal (G-LiHT) Airborne Imager. Remote Sens. 2013, 5, 4045–4066. [Google Scholar] [CrossRef]

- ARES|Airborne Research of the Earth System. Available online: https://ares-observatory.ch/ (accessed on 7 June 2023).

- NEODAAS-Airborne. Available online: https://nerc-arf-dan.pml.ac.uk/ (accessed on 7 June 2023).

- FLIS—Department of Airborne Activities. Available online: https://olc.czechglobe.cz/en/flis-2/ (accessed on 8 June 2023).

- Hanuš, J.; Fabiánek, T.; Fajmon, L. Potential of Airborne Imaging Spectroscopy at CzechGlobe. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 15–17. [Google Scholar] [CrossRef]

- Riegl. Airborne Laser Scanner LMS-Q780 General Description and Data Interface (Manual); Riegl: Horn, Austria, 2014. [Google Scholar]

- CzechGlobe|Virtual Tour. Available online: http://czechglobe.pano3d.eu (accessed on 8 June 2023).

- Rascher, U.; Alonso, L.; Burkart, A.; Cilia, C.; Cogliati, S.; Colombo, R.; Damm, A.; Drusch, M.; Guanter, L.; Hanus, J.; et al. Sun-Induced Fluorescence—A New Probe of Photosynthesis: First Maps from the Imaging Spectrometer HyPlant. Glob. Chang. Biol. 2015, 21, 4673–4684. [Google Scholar] [CrossRef]

- Siegmann, B.; Alonso, L.; Celesti, M.; Cogliati, S.; Colombo, R.; Damm, A.; Douglas, S.; Guanter, L.; Hanuš, J.; Kataja, K.; et al. The High-Performance Airborne Imaging Spectrometer HyPlant—From Raw Images to Top-of-Canopy Reflectance and Fluorescence Products: Introduction of an Automatized Processing Chain. Remote Sens. 2019, 11, 2760. [Google Scholar] [CrossRef]

- FLEX—Earth Online. Available online: https://earth.esa.int/eogateway/missions/flex (accessed on 23 March 2023).

- Launching the Revolutionary PTR-TOF 6000 X2 Trace VOC Analyzer|IONICON. Available online: https://www.ionicon.com/blog/2017/launching-the-revolutionary-ptr-tof-6000-x2-trace-voc-analyzer (accessed on 17 May 2023).

- Dashora, A.; Lohani, B.; Deb, K. Two-Step Procedure of Optimisation for Flight Planning Problem for Airborne LiDAR Data Acquisition. Int. J. Math. Model. Numer. Optim. 2013, 4, 323. [Google Scholar] [CrossRef]

- Richter, R.; Schläpfer, D. Atmospheric/Topographic Correction for Airborne Imagery (ATCOR-4 User Guide) 2021; ReSe Applications LLC: Langeggweg, Switzerland, 2021. [Google Scholar]

- Itres. Standard Processing and Data QA Manual; Itres: Calgary, AB, Canada, 2013. [Google Scholar]

- Richter, R.; Schläpfer, D. Geo-Atmospheric Processing of Airborne Imaging Spectrometry Data. Part 2: Atmospheric/Topographic Correction. Int. J. Remote Sens. 2002, 23, 2631–2649. [Google Scholar] [CrossRef]

- Schläpfer, D.; Richter, R. Geo-Atmospheric Processing of Airborne Imaging Spectrometry Data. Part 1: Parametric Orthorectification. Int. J. Remote Sens. 2002, 23, 2609–2630. [Google Scholar] [CrossRef]

- Inamdar, D.; Kalacska, M.; Darko, P.O.; Arroyo-Mora, J.P.; Leblanc, G. Spatial Response Resampling (SR2): Accounting for the Spatial Point Spread Function in Hyperspectral Image Resampling. MethodsX 2023, 10, 101998. [Google Scholar] [CrossRef] [PubMed]

- Yang, H.; Zhang, L.; Ong, C.; Rodger, A.; Liu, J.; Sun, X.; Zhang, H.; Jian, X.; Tong, Q. Improved Aerosol Optical Thickness, Columnar Water Vapor, and Surface Reflectance Retrieval from Combined CASI and SASI Airborne Hyperspectral Sensors. Remote Sens. 2017, 9, 217. [Google Scholar] [CrossRef]

- Berk, A.; Anderson, G.P.; Bernstein, L.S.; Acharya, P.K.; Dothe, H.; Matthew, M.W.; Adler-Golden, S.M.; Chetwynd, J.H., Jr.; Richtsmeier, S.C.; Pukall, B.; et al. MODTRAN4 radiative transfer modeling for atmospheric correction. In Proceedings of the 1999 SPIE’s International Symposium on Optical Science Engineering, and Instrumentation, Denver, CO, USA, 18–23 July 1999; p. 348. [Google Scholar] [CrossRef]

- Guanter, L.; Gómez-Chova, L.; Moreno, J. Coupled Retrieval of Aerosol Optical Thickness, Columnar Water Vapor and Surface Reflectance Maps from ENVISAT/MERIS Data over Land. Remote Sens. Environ. 2008, 112, 2898–2913. [Google Scholar] [CrossRef]

- Green, R.O.; Conel, J.E.; Margolis, J.; Chovit, C.; Faust, J. In-Flight Calibration and Validation of the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS). 1996. Available online: https://hdl.handle.net/2014/25023 (accessed on 20 May 2023).

- Secker, J.; Staenz, K.; Gauthier, R.P.; Budkewitsch, P. Vicarious Calibration of Airborne Hyperspectral Sensors in Operational Environments. Remote Sens. Environ. 2001, 76, 81–92. [Google Scholar] [CrossRef]

- He, W.; Yao, Q.; Li, C.; Yokoya, N.; Zhao, Q. Non-Local Meets Global: An Integrated Paradigm for Hyperspectral Denoising. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6861–6870. [Google Scholar]

- Copernicus|Climate Data Store. Available online: https://cds.climate.copernicus.eu/#!/home (accessed on 8 June 2023).

- Guillory, A. ERA5. Available online: https://www.ecmwf.int/en/forecasts/datasets/reanalysis-datasets/era5 (accessed on 23 March 2023).

- Gillespie, A.; Rokugawa, S.; Matsunaga, T.; Cothern, J.S.; Hook, S.; Kahle, A.B. A Temperature and Emissivity Separation Algorithm for Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) Images. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1113–1126. [Google Scholar] [CrossRef]

- Cheng, J.; Liang, S.; Wang, J.; Li, X. A Stepwise Refining Algorithm of Temperature and Emissivity Separation for Hyperspectral Thermal Infrared Data. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1588–1597. [Google Scholar] [CrossRef]

- Kealy, P.S.; Hook, S.J. Separating Temperature and Emissivity in Thermal Infrared Multispectral Scanner Data: Implications for Recovering Land Surface Temperatures. IEEE Trans. Geosci. Remote Sens. 1993, 31, 1155–1164. [Google Scholar] [CrossRef]

- Sobrino, J.A.; Jimenez-Munoz, J.C.; Soria, G.; Romaguera, M.; Guanter, L.; Moreno, J.; Plaza, A.; Martinez, P. Land Surface Emissivity Retrieval From Different VNIR and TIR Sensors. IEEE Trans. Geosci. Remote Sens. 2008, 46, 316–327. [Google Scholar] [CrossRef]

- Vermote, E.F.; Tanre, D.; Deuze, J.L.; Herman, M.; Morcette, J.-J. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: An Overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686. [Google Scholar] [CrossRef]

- Pérez-Planells, L.; Valor, E.; Coll, C.; Niclòs, R. Comparison and Evaluation of the TES and ANEM Algorithms for Land Surface Temperature and Emissivity Separation over the Area of Valencia, Spain. Remote Sens. 2017, 9, 1251. [Google Scholar] [CrossRef]

- Payan, V.; Royer, A. Analysis of Temperature Emissivity Separation (TES) Algorithm Applicability and Sensitivity. Int. J. Remote Sens. 2004, 25, 15–37. [Google Scholar] [CrossRef]

- Matsunaga, T. A Temperature-Emissivity Separation Method Using an Empirical Relationship between the Mean, the Maximum, and the Minimum of the Thermal Infrared Emissivity Spectrum. J. Remote Sens. Soc. Jpn. 1994, 14, 230–241. [Google Scholar]

- Pivovarnik, M. New Approaches in Airborne Thermal Image Processing for Landscape Assessment. Ph.D. Thesis, Brno University of Technology, Brno, Czech Republic, 2017. [Google Scholar]

- Sabol, D.E., Jr.; Gillespie, A.R.; Abbott, E.; Yamada, G. Field Validation of the ASTER Temperature–Emissivity Separation Algorithm. Remote Sens. Environ. 2009, 113, 2328–2344. [Google Scholar] [CrossRef]

- Michel, A.; Granero-Belinchon, C.; Cassante, C.; Boitard, P.; Briottet, X.; Adeline, K.R.M.; Poutier, L.; Sobrino, J.A. A New Material-Oriented TES for Land Surface Temperature and SUHI Retrieval in Urban Areas: Case Study over Madrid in the Framework of the Future TRISHNA Mission. Remote Sens. 2021, 13, 5139. [Google Scholar] [CrossRef]

- Mapserver CzechGlobe. Available online: https://mapserver.czechglobe.cz/en/map (accessed on 8 June 2023).

- EUFAR—The EUropean Facility for Airborne Research. Available online: http://eufar.net/ (accessed on 8 June 2023).

- Open Access to CzeCOS Research Infrastructure Hosted byGlobal Change Research Institute CAS. Available online: https://www.czechglobe.cz/en/open-access-en/czecos-en/ (accessed on 8 June 2023).

- Gastellu-Etchegorry, J.-P.; Lauret, N.; Yin, T.; Landier, L.; Kallel, A.; Malenovsky, Z.; Bitar, A.A.; Aval, J.; Benhmida, S.; Qi, J.; et al. DART: Recent Advances in Remote Sensing Data Modeling With Atmosphere, Polarization, and Chlorophyll Fluorescence. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2640–2649. [Google Scholar] [CrossRef]

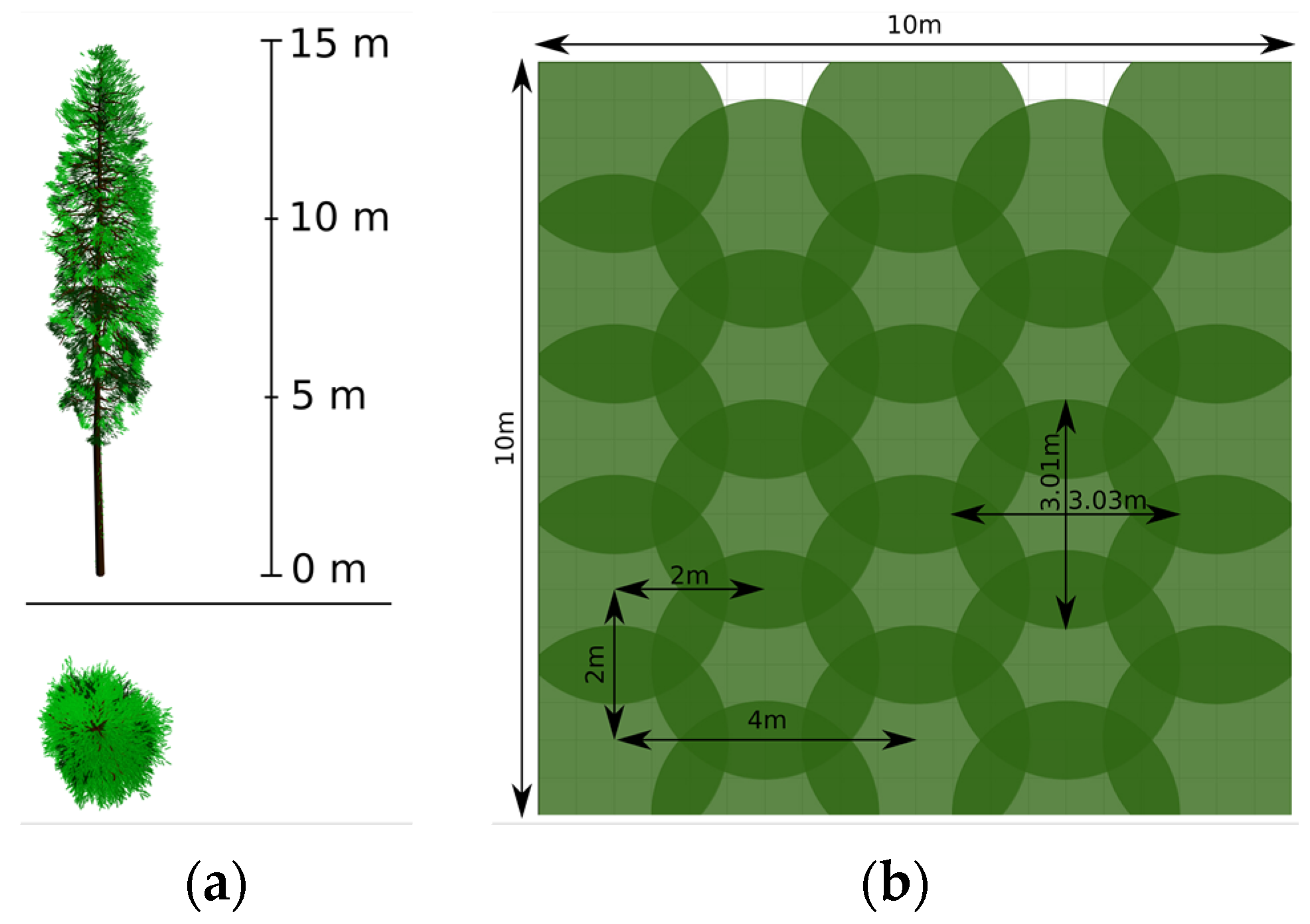

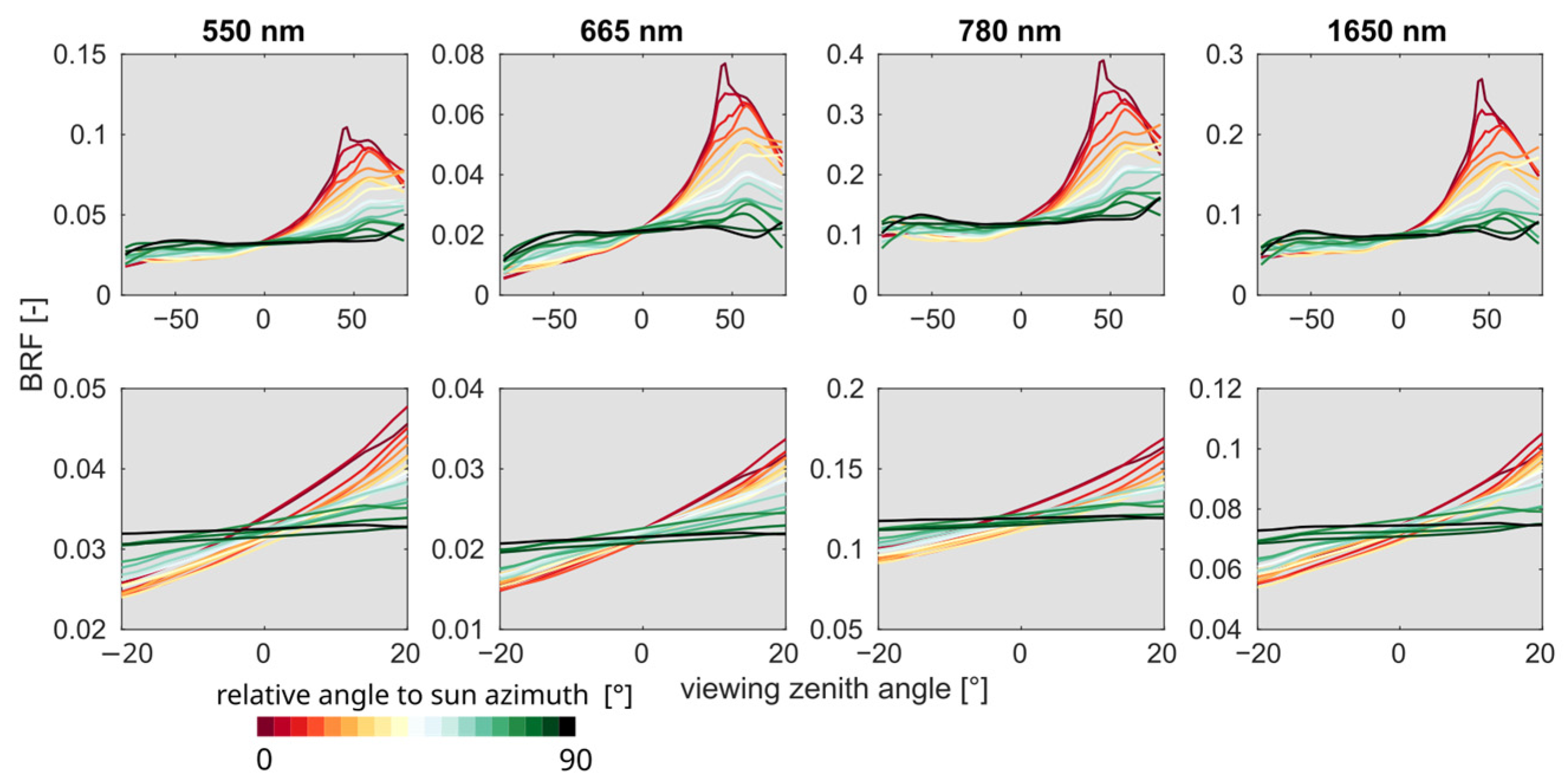

- Malenovský, Z.; Regaieg, O.; Yin, T.; Lauret, N.; Guilleux, J.; Chavanon, E.; Duran, N.; Janoutová, R.; Delavois, A.; Meynier, J.; et al. Discrete Anisotropic Radiative Transfer Modelling of Solar-Induced Chlorophyll Fluorescence: Structural Impacts in Geometrically Explicit Vegetation Canopies. Remote Sens. Environ. 2021, 263, 112564. [Google Scholar] [CrossRef]

- Janoutová, R.; Homolová, L.; Novotný, J.; Navrátilová, B.; Pikl, M.; Malenovský, Z. Detailed Reconstruction of Trees from Terrestrial Laser Scans for Remote Sensing and Radiative Transfer Modelling Applications. Silico Plants 2021, 3, diab026. [Google Scholar] [CrossRef]

- Homolová, L.; Janoutová, R.; Lukeš, P.; Hanuš, J.; Novotný, J.; Brovkina, O.; Loayza Fernandez, R.R. In Situ Data Supporting Remote Sensing Estimation of Spruce Forest Parameters at the Ecosystem Station Bílý Kříž. Beskydy 2018, 10, 75–86. [Google Scholar] [CrossRef]

| | CASI-1500 | SASI-600 | TASI-600 |
|---|---|---|---|
| Spectral domain | VNIR | SWIR | LWIR |
| Spectral range [nm] | 380–1050 | 950–2450 | 8000–11,500 |
| Max. spectral resolution [nm] | 2.7 | 15 | 110 |
| Max. number of spectral bands | 288 | 100 | 32 |
| Across-track spatial pixels | 1500 | 600 | 600 |
| Field of view [°] | 40 | 40 | 40 |
| Instantaneous field of view [mrad] | 0.49 | 1.2 | 1.2 |
| Typical spatial resolution ¹ [m] | 0.5–2.0 | 1.25–5.0 | 1.25–5.0 |
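The instantaneous field of view and the typical spatial resolution in the table are linked through the flight altitude above ground. A minimal sketch of that geometry follows, assuming a nadir view, flat terrain and the small-angle approximation; the function names are hypothetical and the numbers are taken from the table only for illustration.

```python
import math


def ground_sample_distance(altitude_m: float, ifov_mrad: float) -> float:
    """Nadir pixel size [m] for a given altitude above ground and IFOV."""
    return altitude_m * ifov_mrad * 1e-3


def altitude_for_gsd(gsd_m: float, ifov_mrad: float) -> float:
    """Altitude above ground [m] needed to reach a target nadir pixel size."""
    return gsd_m / (ifov_mrad * 1e-3)


def swath_width(altitude_m: float, fov_deg: float) -> float:
    """Across-track ground coverage [m] for a given total field of view."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    # Altitude at which the CASI-1500 (IFOV 0.49 mrad) yields 0.5 m pixels.
    altitude = altitude_for_gsd(0.5, 0.49)
    print(f"altitude above ground: {altitude:.0f} m")

    # Pixel size of SASI-600 / TASI-600 (IFOV 1.2 mrad) at the same altitude.
    print(f"SASI/TASI pixel size:  {ground_sample_distance(altitude, 1.2):.2f} m")

    # Swath covered by the 40 degree field of view shared by all three sensors.
    print(f"swath width:           {swath_width(altitude, 40.0):.0f} m")
```

Under these assumptions, an altitude of about 1020 m above ground gives 0.5 m CASI pixels and SASI/TASI pixels of roughly 1.2 m, close to the lower ends of the typical resolution ranges listed above.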

| | LMS Q780 |
|---|---|
| Laser pulse repetition rate [kHz] | Up to 400 |
| Maximum measuring range [m] | Up to 5800 ¹ |
| Wavelength [nm] | 1064 |
| Laser beam divergence [mrad] | ≤0.25 |
| Field of view [°] | 60 |
| Typical point density [pts/m²] | 0.5–4 ² |
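The scanner parameters above translate into a laser footprint size and an approximate point density once a flight configuration is fixed. The sketch below is a rough first-order estimate, not the flight-planning method of the paper: the altitude and ground speed are hypothetical values, every emitted pulse is assumed to produce exactly one evenly distributed ground point, and strip overlap, multiple returns and the scan mechanism's duty cycle are ignored.

```python
import math


def footprint_diameter(range_m: float, divergence_mrad: float) -> float:
    """Laser footprint diameter [m] at a given range (small-angle approximation)."""
    return range_m * divergence_mrad * 1e-3


def point_density(pulse_rate_hz: float, ground_speed_ms: float,
                  altitude_m: float, fov_deg: float) -> float:
    """Mean single-strip point density [pts/m2], one point per emitted pulse."""
    swath = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    area_per_second = ground_speed_ms * swath   # ground area swept each second [m2]
    return pulse_rate_hz / area_per_second


if __name__ == "__main__":
    altitude = 1000.0   # assumed altitude above ground [m]
    speed = 60.0        # assumed aircraft ground speed [m s-1]

    print(f"footprint at nadir: {footprint_diameter(altitude, 0.25):.2f} m")
    print(f"point density:      {point_density(400e3, speed, altitude, 60.0):.1f} pts/m2")
```

With the maximum pulse repetition rate this idealised estimate is an upper bound for the assumed configuration; lower pulse rates or higher altitudes reduce the density accordingly.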

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hanuš, J.; Slezák, L.; Fabiánek, T.; Fajmon, L.; Hanousek, T.; Janoutová, R.; Kopkáně, D.; Novotný, J.; Pavelka, K.; Pikl, M.; Zemek, F.; Homolová, L. Flying Laboratory of Imaging Systems: Fusion of Airborne Hyperspectral and Laser Scanning for Ecosystem Research. Remote Sens. 2023, 15, 3130. https://doi.org/10.3390/rs15123130