1. Introduction

Currently, easy-to-use unmanned aerial vehicles (UAVs) and low-cost image acquisition systems have made remote sensing imaging (RSI) more popular and convenient [1]. It has become possible to obtain a wide range of high-quality RSI without a considerable amount of time or elaborate planning. For over a decade, RSI has been adopted in several tasks, such as precision agriculture, cartography, urban studies, and landscape archaeology [2]. One effective application is the classification and detection of vehicles in RSI, which is increasingly used in smart transportation for traffic flow estimation, parking space allocation, vehicle identification, and so on. Hence, using RSI for vehicle- and transportation-related applications is a likely future trend [3]. Over the past years, vehicle identification in RSI has been extensively used in several fields and has therefore attracted considerable interest. Despite the substantial effort dedicated to these tasks, current methods still need significant improvement to overcome the following problems [4]. First, variability in orientation and scale makes it highly challenging to locate vehicle objects precisely. Second, complex backgrounds increase interclass similarity and intraclass variability. Finally, some RSI is captured at a lower resolution and therefore lacks the detailed appearance needed to distinguish vehicles from similar objects. Compared with ordinary images of vehicles [5], RSI captured from a perpendicular (top-down) perspective loses the 'face' of the vehicle, and the vehicle commonly appears as a rectilinear structure. Therefore, non-vehicle rectilinear objects, such as electrical units, trash bins, and air conditioning units on the tops of buildings, can complicate the task and cause many false alarms [6]. Thus, researchers are employing contemporary deep learning (DL)-based object detection methods to push the state of the art in these respects.

With the latest improvements in machine learning (ML) approaches, higher object detection rates can now be achieved in cluttered scenes [7]. ML is a subfield of artificial intelligence (AI) that focuses on the design of algorithms and statistical models that allow computers to learn and make predictions without being explicitly programmed. Deep neural networks (DNNs) have made the detection and classification of vehicles in aerial images more practical. Vehicle detection techniques for aerial images fall into two classes: traditional ML approaches and DL methods [8]. DL for computer vision (CV) becomes more mainstream every year, particularly owing to Convolutional Neural Networks (CNNs), which can learn expressive and powerful image descriptors for a wide range of tasks, such as detection, classification, and segmentation [9]. The ubiquity of CNNs in CV is now beginning to affect remote sensing, as they can address tasks such as object detection and land use classification in RSI. Furthermore, a new architecture derived from Fully Convolutional Networks (FCNs) [5] has appeared; it outputs dense pixel-wise annotations and can therefore achieve fine-grained classification. Federated learning (FL) is an ML approach that trains a model across multiple independent sessions, each using its own local dataset. This contrasts with classical centralized ML, where local datasets are merged into one training session, and with approaches that assume local data samples are identically distributed. FL allows multiple actors to build a common, robust ML model without sharing their data, thereby addressing critical issues such as data privacy, data security, data access rights, and access to heterogeneous data.
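The description of FL above stays at a high level. As an illustration only, the sketch below implements federated averaging (FedAvg), one widely used FL aggregation scheme. This section does not say which aggregation rule ADPW-FLHHO uses, so FedAvg, the toy 1-D linear model, and the helper names `local_update`, `fed_avg`, and `federated_training` are assumptions. Each client trains on data it never shares, and the server only averages the returned weights, weighted by local sample count.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Client step (hypothetical): a few epochs of SGD on a 1-D linear model y = w*x + b.
    Only the updated weights are returned; the raw (x, y) pairs stay on the client."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on one local sample
            w -= lr * err * x      # gradient of 0.5*err^2 w.r.t. w
            b -= lr * err          # gradient of 0.5*err^2 w.r.t. b
    return w, b


def fed_avg(client_weights, client_sizes):
    """Server step: average the client models, weighting each by its local sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return w, b


def federated_training(clients, rounds=100):
    """Repeat: broadcast the global model, train locally on each client, aggregate."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = fed_avg(updates, [len(data) for data in clients])
    return global_weights


# Three clients holding private samples of y = 2x + 1 over different x ranges (non-IID split).
clients = [
    [(i / 10, 2 * (i / 10) + 1) for i in range(10)],
    [(0.5 + i / 10, 2 * (0.5 + i / 10) + 1) for i in range(10)],
    [(1.0 + i / 20, 2 * (1.0 + i / 20) + 1) for i in range(5)],
]
w, b = federated_training(clients)
print(f"global model: w={w:.3f}, b={b:.3f}")
```

Only `(w, b)` pairs cross the client boundary, so the raw samples stay local, which is the privacy property emphasized above. The three clients cover different input ranges, a simple stand-in for heterogeneous data.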

Javadi et al. [10] examined the capability of 3D mapping features to improve the efficiency of DNNs for vehicle detection. Charouh et al. [11] presented a framework for reducing the complexity of CNN-oriented AVS approaches, in which a BS-based module serves as a pre-processing stage that reduces the number of convolution operations performed by the CNN component. A CNN-based detector with a suitable number of convolutions was then applied to every image candidate to handle the overlapping issue and improve detection efficiency. In [12], emergency vehicle detection (EVD) for police cars, fire brigades, and ambulances is performed based on their horn sounds. A dataset was gathered from the Google AudioSet ontology, and features were extracted with Mel-frequency cepstral coefficients (MFCC). Three DNN approaches, namely CNN, dense layers, and RNN, were examined with distinct configurations and parameters. Afterwards, an ensemble of the best-performing models was designed and tested experimentally on several configurations with hyperparameter tuning. The authors in [13] examined a model for vehicle detection in multi-modal aerial imagery using an improved YOLOv3 and a DNN that performs mid-level fusion. The presented mid-level fusion structure is the first of its kind to be used for detecting vehicles in multi-modal aerial imagery with a hierarchical object detection network. Joshi et al. [14] presented the EDL-MMLCC method (ensemble of DL-based multimodal land cover classification) using remote sensing images (RSI). In addition, the training procedure of the DL approaches is improved by the hosted cuckoo optimization (HCO) technique. Lastly, the SSA with regularized ELM (RELM) technique was used for land cover classification.

Li et al. [15] presented an anchor-free target detection approach to address these challenges. First, a multi-attention feature pyramid network (MA-FPN) was designed to reduce the influence of noise and background information on vehicle target recognition by fusing attention information into the feature pyramid network (FPN) infrastructure. Second, a more precise foveal area (MPFA) was presented to provide better ground truth for the anchor-free process, so that a more precise region for selecting positive instances can be determined. In [16], the authors established a new vehicle detection and classification method for smart traffic monitoring that uses a CNN to segment UAV images. The segmented images are then examined to identify vehicles, with an innovative customized pyramid pooling incorporated. Lastly, the vehicles are tracked using Kalman filter (KF) and kernelized filter-based approaches to cope with heavy traffic flows with less human intervention.
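Reference [16] mentions KF-based tracking without specifying the filter. The sketch below shows what such a tracker can look like, assuming a constant-velocity motion model for a vehicle centroid in image coordinates. The noise levels `q` and `r`, the initial covariance, and the simulated detections are hypothetical choices, not values from [16].

```python
import numpy as np


def constant_velocity_model(dt=1.0, q=1e-4, r=1.0):
    """State [x, y, vx, vy]; the detector measures only the centroid [x, y]."""
    F = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)  # state transition
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)  # measurement matrix
    Q = q * np.eye(4)                          # process noise (simplified, isotropic)
    R = r * np.eye(2)                          # detector noise (pixels^2)
    return F, H, Q, R


def kalman_step(x, P, z, F, H, Q, R):
    """One predict/update cycle for a new detection z."""
    x = F @ x                                  # predict state
    P = F @ P @ F.T + Q                        # predict covariance
    innovation = z - H @ x
    S = H @ P @ H.T + R                        # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)             # Kalman gain
    x = x + K @ innovation                     # correct with the detection
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P


def track(detections, dt=1.0):
    """Filter a sequence of noisy centroids; returns one state estimate per frame."""
    F, H, Q, R = constant_velocity_model(dt)
    x = np.array([detections[0][0], detections[0][1], 0.0, 0.0])  # start at rest
    P = 10.0 * np.eye(4)                                          # broad initial uncertainty
    states = [x]
    for z in detections[1:]:
        x, P = kalman_step(x, P, np.asarray(z, dtype=float), F, H, Q, R)
        states.append(x)
    return np.array(states)


# Simulated detections: a vehicle moving (2, 1) pixels per frame, plus unit Gaussian noise.
rng = np.random.default_rng(0)
truth = np.array([[2.0 * t, 1.0 * t] for t in range(30)])
detections = truth + rng.normal(scale=1.0, size=truth.shape)
est = track(detections)
print("final velocity estimate:", est[-1, 2:].round(2))
```

Besides smoothing noisy detections, the velocity components let a tracker predict where a vehicle should appear in the next frame, which helps associate detections across frames in dense traffic.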

Several models exist in the literature to perform the classification process. Although many ML and DL models for anomaly classification are available, there is still a need to improve classification performance. As models continue to deepen, the number of parameters in DL models grows rapidly, which can result in overfitting. At the same time, different hyperparameters significantly affect the efficiency of a CNN model. In particular, hyperparameters such as the epoch count, batch size, and learning rate must be chosen carefully to attain an effective outcome. Since trial-and-error hyperparameter tuning is tedious and error-prone, metaheuristic algorithms can be applied instead. Therefore, in this work, we employ the Harris Hawks Optimizer (HHO) algorithm to select the parameters of the HybridNet model.
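To make the HHO-based tuning step concrete, the sketch below implements the core HHO update rules (exploration, soft and hard besiege, and besiege with Lévy-flight rapid dives) and applies them to a toy problem. Training HybridNet is out of scope here, so `surrogate_val_loss` is a hypothetical stand-in for validation loss with an arbitrarily placed optimum, and the search ranges for learning rate, batch size, and epochs are illustrative assumptions rather than the paper's settings. The search runs in the unit cube, positions are decoded to hyperparameters afterwards, and the best-so-far solution is tracked greedily, a common simplification.

```python
import math
import numpy as np


def levy_flight(dim, rng, beta=1.5):
    """Levy-distributed step via Mantegna's algorithm, as used in HHO's rapid dives."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, dim)
    v = rng.normal(0.0, 1.0, dim)
    return 0.01 * u / np.abs(v) ** (1 / beta)


def hho_minimize(f, lb, ub, n_hawks=20, max_iter=200, seed=0):
    """Minimal Harris Hawks Optimization loop (minimization) over the box [lb, ub]."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    X = rng.uniform(lb, ub, size=(n_hawks, dim))       # hawk positions
    fit = np.array([f(x) for x in X])
    best, best_fit = X[fit.argmin()].copy(), fit.min()  # the "rabbit" (best so far)
    for t in range(max_iter):
        X_mean = X.mean(axis=0)
        for i in range(n_hawks):
            E = 2 * rng.uniform(-1, 1) * (1 - t / max_iter)  # prey's escaping energy
            J = 2 * (1 - rng.random())                        # prey's jump strength
            if abs(E) >= 1:  # exploration: perch relative to a random hawk or the group mean
                if rng.random() >= 0.5:
                    X_rand = X[rng.integers(n_hawks)]
                    new = X_rand - rng.random() * np.abs(X_rand - 2 * rng.random() * X[i])
                else:
                    new = (best - X_mean) - rng.random() * (lb + rng.random() * (ub - lb))
            elif rng.random() >= 0.5:  # exploitation without dives
                if abs(E) >= 0.5:      # soft besiege
                    new = (best - X[i]) - E * np.abs(J * best - X[i])
                else:                  # hard besiege
                    new = best - E * np.abs(best - X[i])
            else:  # soft / hard besiege with progressive rapid dives (Levy flights)
                ref = X[i] if abs(E) >= 0.5 else X_mean
                Y = np.clip(best - E * np.abs(J * best - ref), lb, ub)
                Z = np.clip(Y + rng.random(dim) * levy_flight(dim, rng), lb, ub)
                if f(Y) < fit[i]:
                    new = Y
                elif f(Z) < fit[i]:
                    new = Z
                else:
                    new = X[i]
            X[i] = np.clip(new, lb, ub)
            fit[i] = f(X[i])
            if fit[i] < best_fit:
                best, best_fit = X[i].copy(), fit[i]
    return best, best_fit


# Hypothetical search space (not from the paper): log10(learning rate), batch size, epochs.
LB = np.array([-5.0, 16.0, 10.0])
UB = np.array([-1.0, 256.0, 100.0])
TARGET = (np.array([-3.0, 64.0, 50.0]) - LB) / (UB - LB)  # illustrative optimum, unit-cube coords


def surrogate_val_loss(u):
    """Stand-in for 'train HybridNet with these hyperparameters and return validation loss'."""
    return float(np.sum((u - TARGET) ** 2))


def decode(u):
    """Map a unit-cube position to concrete hyperparameter values."""
    log_lr, batch, epochs = LB + u * (UB - LB)
    return 10 ** log_lr, int(round(batch)), int(round(epochs))


best_u, best_loss = hho_minimize(surrogate_val_loss, np.zeros(3), np.ones(3), seed=1)
lr, batch, epochs = decode(best_u)
print(f"lr={lr:.1e}, batch={batch}, epochs={epochs}, surrogate loss={best_loss:.4f}")
```

In a real tuning run, each call to the objective would train and validate the network, so the number of hawks and iterations directly sets the tuning cost.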

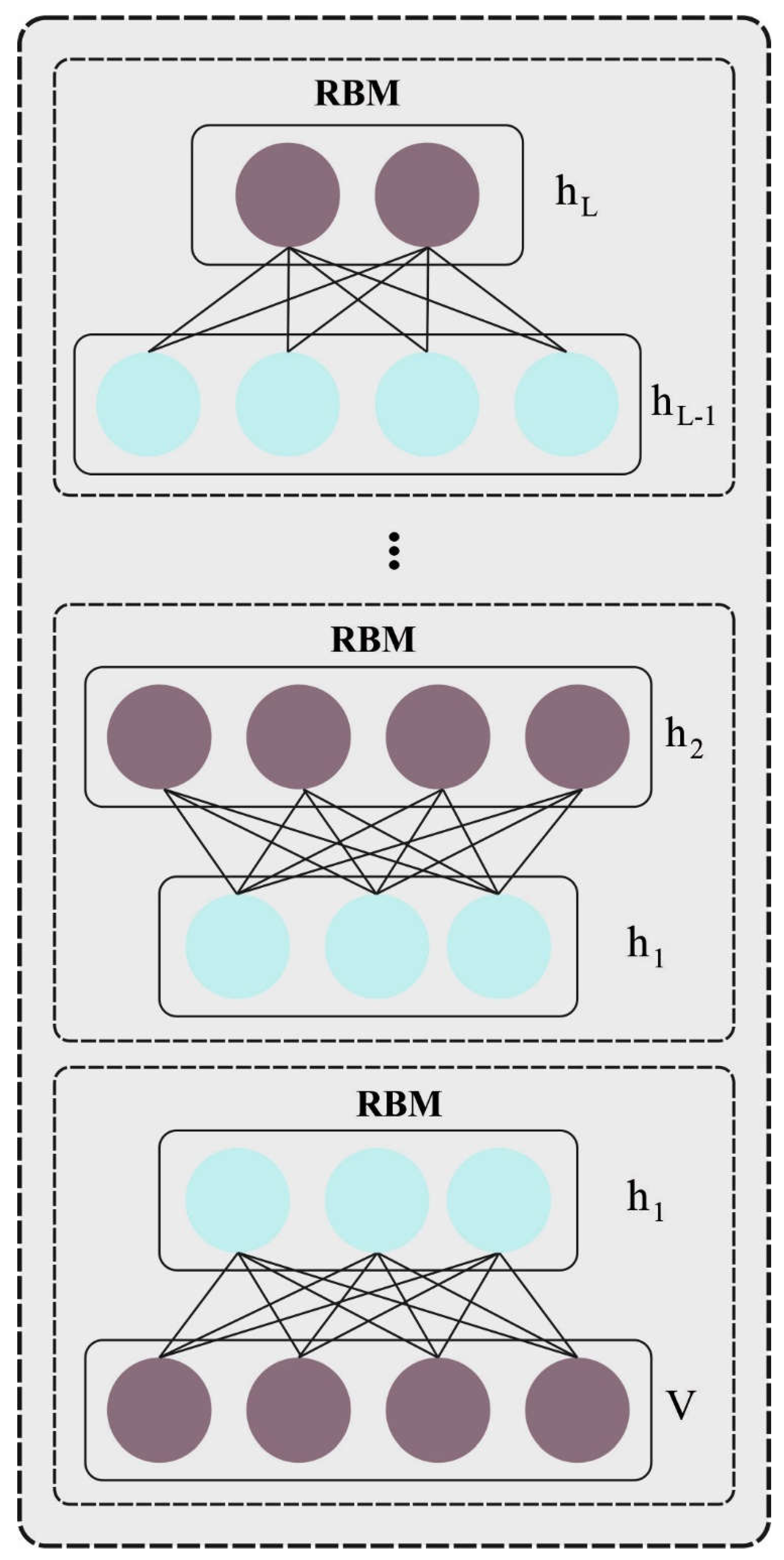

This study presents an Anomaly Detection in Pedestrian Walkways for Intelligent Transportation Systems using Federated Learning and Harris Hawks Optimizer (ADPW-FLHHO) algorithm on remote sensing images. The ADPW-FLHHO technique uses the HybridNet model to generate feature vectors. In addition, the HHO algorithm is applied for optimal hyperparameter tuning. For anomaly detection, the ADPW-FLHHO technique uses a multi deep belief network (MDBN) model. The ADPW-FLHHO technique is evaluated experimentally on the UCSD anomaly detection dataset.
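This section does not detail the internals of the MDBN. As background, a deep belief network is usually built by stacking restricted Boltzmann machines (RBMs) that are pre-trained greedily, layer by layer, with contrastive divergence. The sketch below shows that building block (a Bernoulli RBM trained with CD-1) and one simple way to score anomalies by reconstruction error. The class and function names, the toy 8-bit patterns, and the scoring rule are assumptions for illustration and need not match how the MDBN reaches its decisions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Bernoulli-Bernoulli RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = 0.01 * self.rng.standard_normal((n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden units driven by the data, then sampled.
        ph0 = self.hidden_probs(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        # Negative phase: one Gibbs step down and up (visible probabilities, not samples).
        pv1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(pv1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.b_v += lr * (v0 - pv1).mean(axis=0)
        self.b_h += lr * (ph0 - ph1).mean(axis=0)


def train_dbn(data, layer_sizes, epochs=1000, lr=0.1):
    """Greedy layer-wise pre-training: each RBM models the hidden activity of the layer below."""
    rbms, x = [], data
    for i, n_hidden in enumerate(layer_sizes):
        rbm = RBM(x.shape[1], n_hidden, seed=i)
        for _ in range(epochs):
            rbm.cd1_step(x, lr)
        rbms.append(rbm)
        x = rbm.hidden_probs(x)  # features fed to the next RBM
    return rbms, x


def recon_error(rbm, v):
    """Mean squared mean-field reconstruction error; higher suggests unfamiliar input."""
    return float(np.mean((v - rbm.visible_probs(rbm.hidden_probs(v))) ** 2))


# Toy "normal" data: two 8-bit patterns; the anomaly mixes them.
normal = np.array([[1, 1, 1, 1, 0, 0, 0, 0],
                   [0, 0, 0, 0, 1, 1, 1, 1]] * 20, dtype=float)
anomaly = np.array([[1, 0, 1, 0, 1, 0, 1, 0]], dtype=float)

rbms, features = train_dbn(normal, layer_sizes=[4, 2])
print("normal error :", round(recon_error(rbms[0], normal), 3))
print("anomaly error:", round(recon_error(rbms[0], anomaly), 3))
```

In a full DBN classifier, the stacked layers would normally be fine-tuned with labels after pre-training; the reconstruction-error score here is only the simplest unsupervised variant.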

The rest of the paper is organized as follows. Section 2 provides the related works, and Section 3 presents the proposed model. Then, Section 4 gives the result analysis, and Section 5 concludes the paper.

4. Discussion

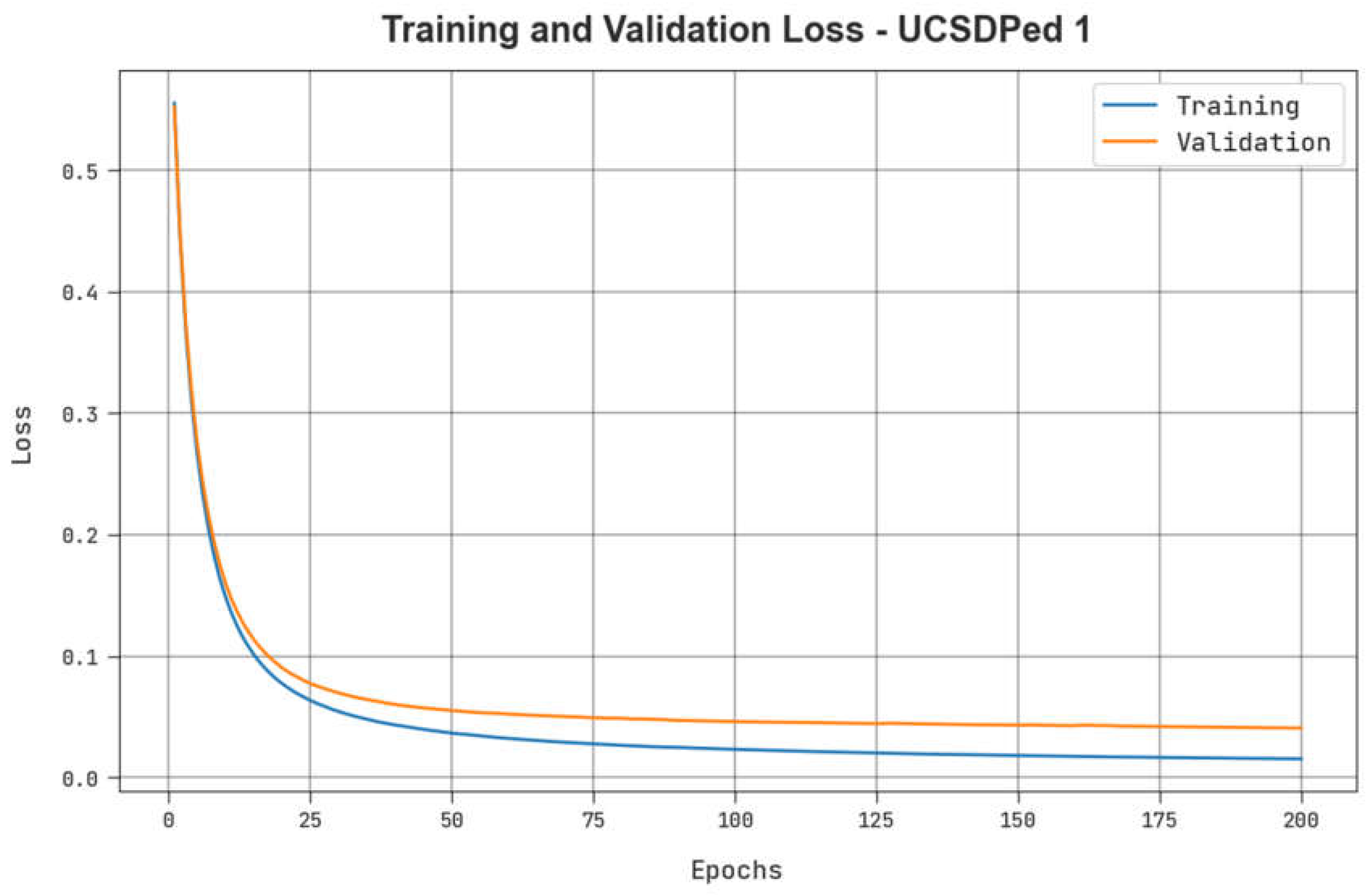

Table 2 illustrates the TPR outcomes of the ADPW-FLHHO technique on the UCSDPed1 dataset. The results indicate that TPR increases as FPR increases. The MPPCA, SF, and MDT models yield the lowest TPR values, while the AMDN model achieves noticeably higher TPR values. The EADN model achieves near-optimal performance with reasonable TPR values. Nevertheless, the ADPW-FLHHO technique reaches the best performance, with the highest TPR values on the UCSDPed1 dataset.
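Tables 2, 4, and 6 relate TPR to FPR, that is, they trace ROC curves. A minimal sketch of how such points are obtained by sweeping a threshold over per-frame anomaly scores, with TPR = TP / (TP + FN) and FPR = FP / (FP + TN), plus the trapezoidal area under the curve (AUC), a common one-number summary. The function names, scores, and labels are toy values for illustration, not data from the paper.

```python
def roc_curve(scores, labels):
    """Return (fpr, tpr) points obtained by sweeping a threshold over anomaly scores.
    labels: 1 = anomalous frame, 0 = normal frame; a higher score means more anomalous."""
    pos = sum(labels)
    neg = len(labels) - pos
    # Sort by score, descending; each distinct score acts as a candidate threshold.
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for k, (s, y) in enumerate(pairs):
        if y == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last item sharing this score (ties share one threshold).
        if k == len(pairs) - 1 or pairs[k + 1][0] != s:
            tpr.append(tp / pos)  # TPR = TP / (TP + FN)
            fpr.append(fp / neg)  # FPR = FP / (FP + TN)
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2 for i in range(1, len(fpr)))


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
fpr, tpr = roc_curve(scores, labels)
print(f"AUC = {auc(fpr, tpr):.2f}")  # AUC = 0.75
```

Lowering the threshold can only add detections, so TPR and FPR never decrease along the curve, which is why TPR rises together with FPR in each of these tables.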

Table 3 highlights a comparative inspection of the ADPW-FLHHO method against recent algorithms on the UCSDPed1 dataset [21]. The results show the weaker outcomes of the TSN-Optical Flow, Spatiotemporal, and TSN-RGB models, with lower scores of 92.86%, 91.57%, and 90.49%, respectively. In contrast, the binary SVM and MIL-C3D models report noticeably higher scores of 96.73% and 94.99%, respectively. Although the EADN model attains a considerable score of 98.36%, the ADPW-FLHHO technique demonstrates its superiority with a maximum score of 99.36%.

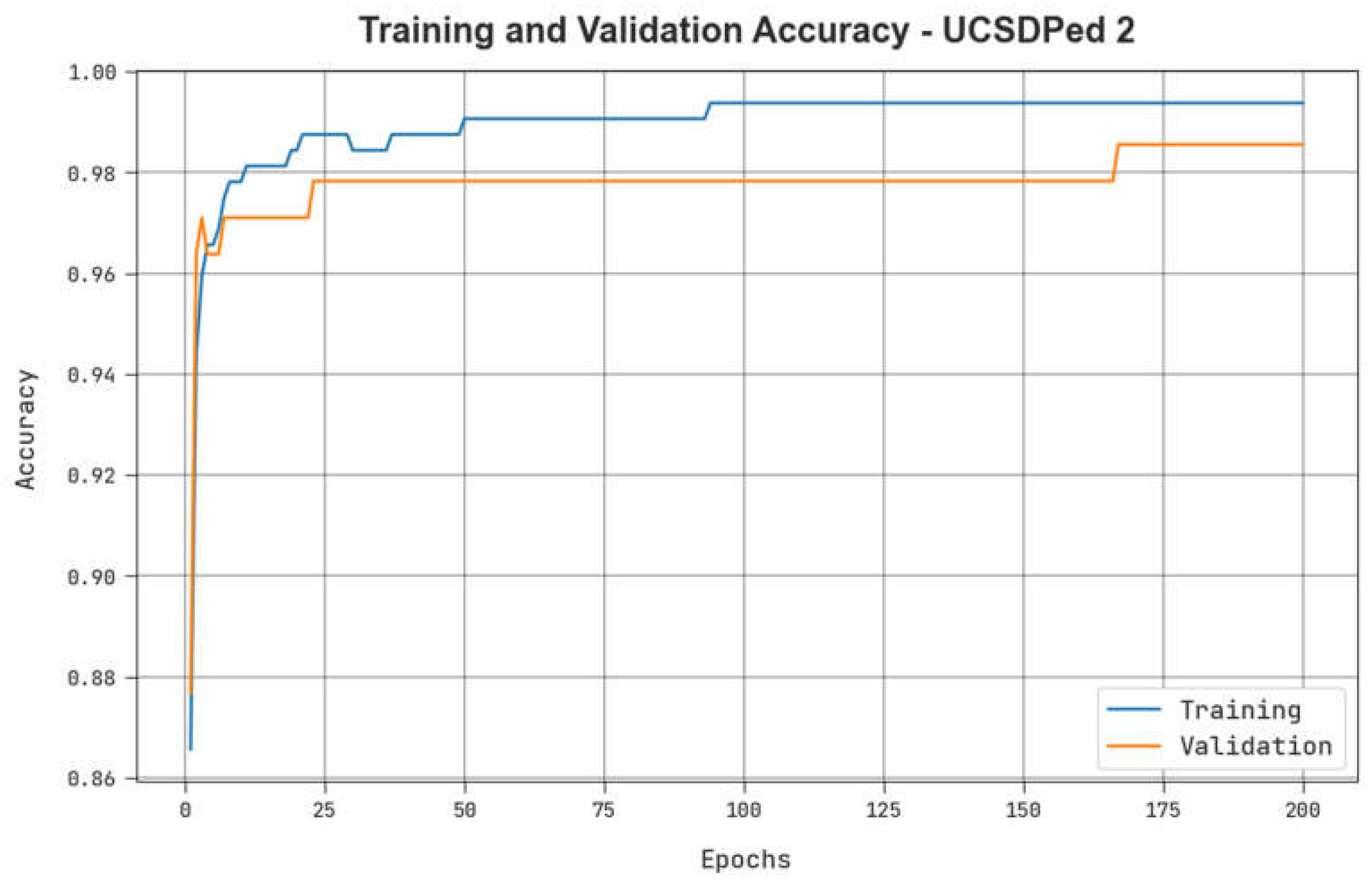

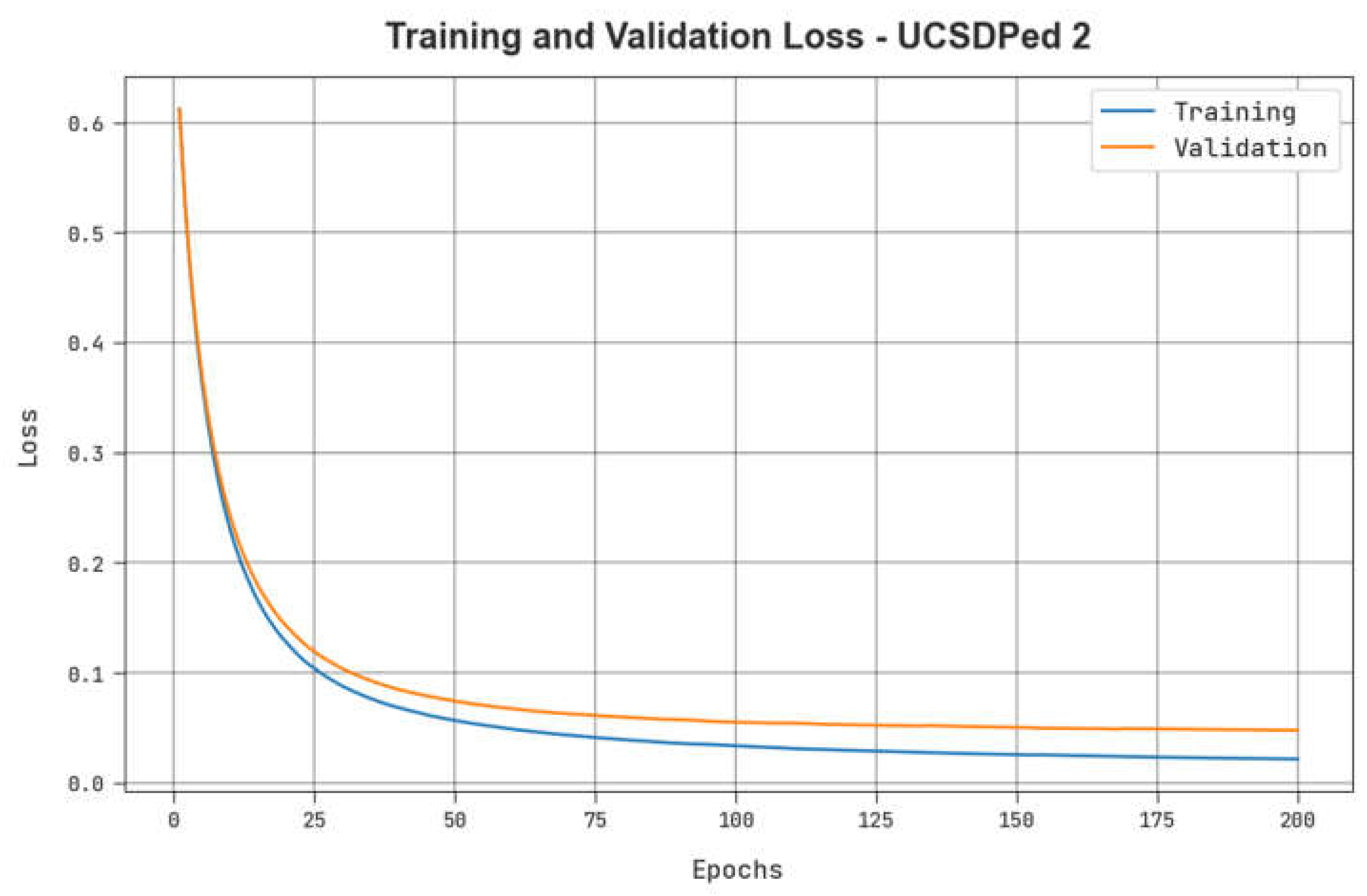

Table 4 reveals the TPR results of the ADPW-FLHHO technique on the UCSDPed2 dataset. The results indicate that TPR increases as FPR increases. The MPPCA, SF, and MDT models yield the lowest TPR values, while the AMDN model achieves noticeably higher TPR values. In contrast, the EADN model achieves near-optimal performance with reasonable TPR values. Nevertheless, the ADPW-FLHHO technique reaches the best performance, with the highest TPR values on the UCSDPed2 dataset.

Table 5 shows a comparative inspection of the ADPW-FLHHO method against recent approaches on the UCSDPed2 dataset. The results show the weaker outcomes of the TSN-RGB, Spatiotemporal, and TSN-Optical Flow models, with lower scores of 90.44%, 92.48%, and 94.36%, respectively. In contrast, the MIL-C3D and binary SVM models report noticeably higher scores of 95.5% and 97.16%, respectively. Although the EADN technique attains a considerable score of 98.30%, the ADPW-FLHHO technique demonstrates its superiority with a higher score of 99.19%.

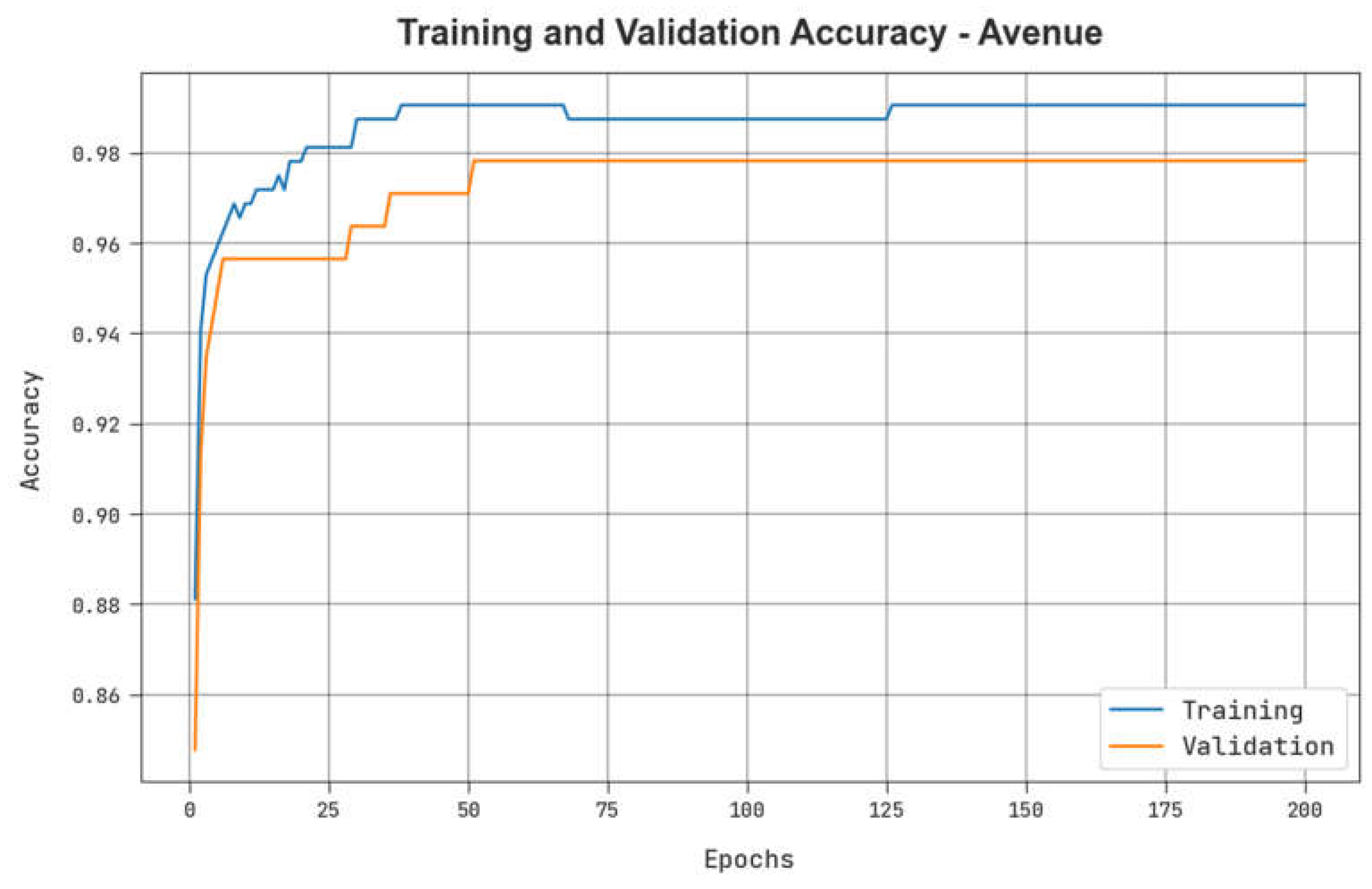

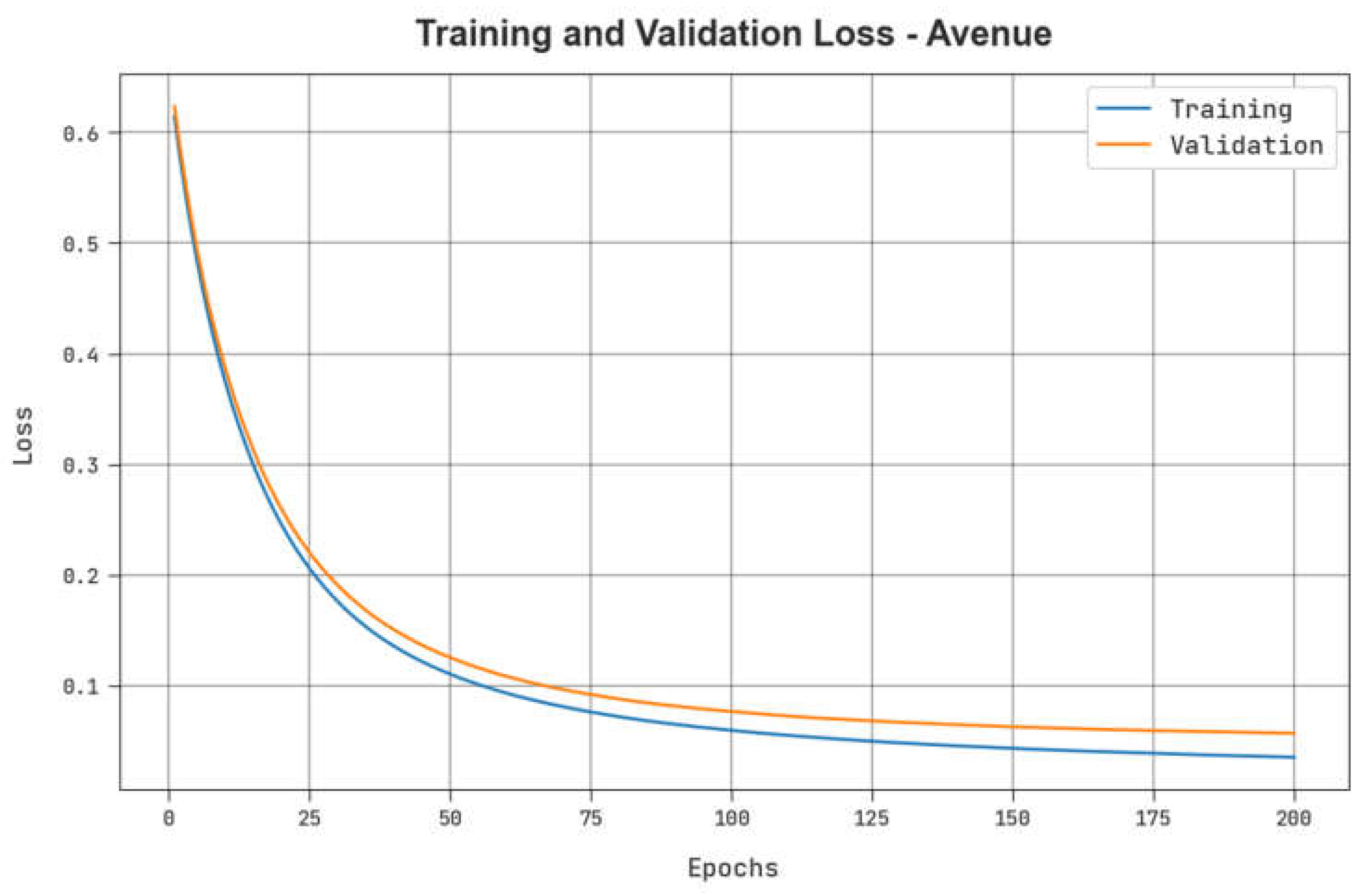

Table 6 describes the TPR results of the ADPW-FLHHO technique on the Avenue dataset. The results indicate that TPR increases as FPR increases. The MPPCA, SF, and MDT models yield the lowest TPR values, while the AMDN model achieves noticeably higher TPR values. In contrast, the EADN model achieves near-optimal performance with reasonable TPR values. Nevertheless, the ADPW-FLHHO technique reaches the best performance, with the highest TPR values on the Avenue dataset.

Table 7 highlights a comparative inspection of the ADPW-FLHHO technique against recent methods on the Avenue dataset. The results show the weaker outcomes of the TSN-RGB, Spatiotemporal, and TSN-Optical Flow models, with lower scores of 89.47%, 91.41%, and 93.31%, respectively. In contrast, the MIL-C3D and binary SVM approaches report noticeably higher scores of 95.02% and 96.21%, respectively. Although the EADN model attains a considerable score of 97.78%, the ADPW-FLHHO technique demonstrates its superiority with a maximum score of 98.90%.

From the detailed results and discussion, the results suggest that the ADPW-FLHHO technique achieves better results than the other models. The proposed model can alert authorities to abnormal objects in pedestrian walkways, enabling a quick response during an emergency and potentially preventing accidents or other safety incidents. It can also enhance accessibility for people with disabilities or mobility issues, making pedestrian walkways more inclusive. Overall, it has the potential to improve safety, security, efficiency, accessibility, and urban planning, making pedestrian walkways more pleasant and inclusive spaces for everyone.