Research on SUnet Winter Wheat Identification Method Based on GF-2

Abstract

1. Introduction

- (1) Construct a high-resolution public winter wheat dataset based on imagery from China's GF-2 satellite. The dataset contains six bands (red, green, blue, near-infrared, NDVI and NDVIincrease) and provides abundant image samples with label annotations.

- (2) Propose the SUNet network model, which adds batch normalization layers and the Shuffle Attention mechanism to U-Net. The results of the comparison tests and ablation experiments show that SUNet improves both generalization ability and classification accuracy.
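The six-band input described in contribution (1) can be assembled as in the minimal NumPy sketch below. NDVI uses the standard definition (NIR - Red) / (NIR + Red). The text does not define NDVIincrease, so the sketch assumes, purely for illustration, that it is the NDVI of a May image minus the NDVI of a March image (the two dates that appear in the label table). The function names `ndvi` and `stack_six_bands`, and the choice to take the RGB and NIR bands from the May image, are likewise hypothetical.

```python
import numpy as np


def ndvi(nir, red, eps=1e-6):
    """Normalized difference vegetation index, (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    return (nir - red) / (nir + red + eps)  # eps guards against division by zero


def stack_six_bands(may, march):
    """Return a (6, H, W) stack: R, G, B, NIR, NDVI, NDVIincrease.

    `may` and `march` map "red", "green", "blue", "nir" to co-registered
    2-D reflectance arrays. ASSUMPTIONS (not from the paper): the RGB/NIR
    bands come from the May image, and NDVIincrease = NDVI(May) - NDVI(March).
    """
    ndvi_may = ndvi(may["nir"], may["red"])
    ndvi_march = ndvi(march["nir"], march["red"])
    bands = [may["red"], may["green"], may["blue"], may["nir"],
             ndvi_may, ndvi_may - ndvi_march]
    return np.stack([np.asarray(b, dtype=np.float64) for b in bands], axis=0)
```

Stacking the bands on the first axis gives the channel-first layout that convolutional segmentation networks typically consume.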

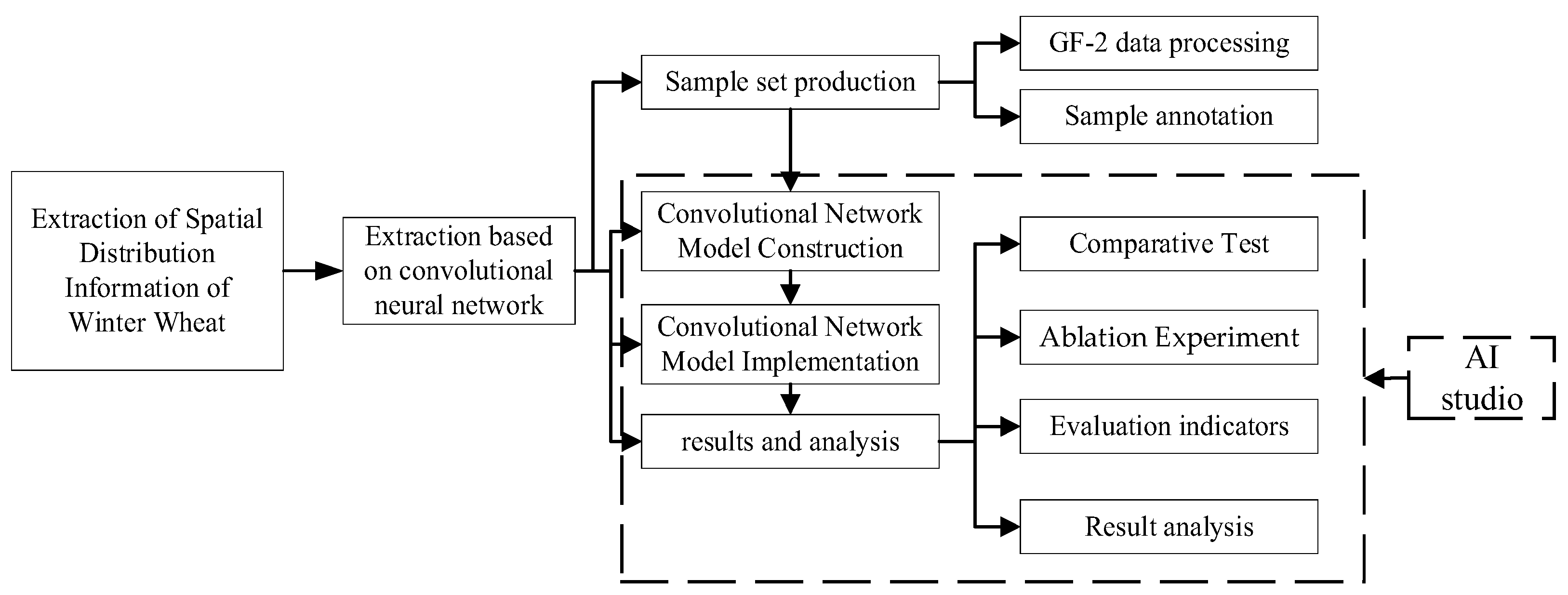

2. Methodology

2.1. Research Area and Data Source

2.1.1. Research Area

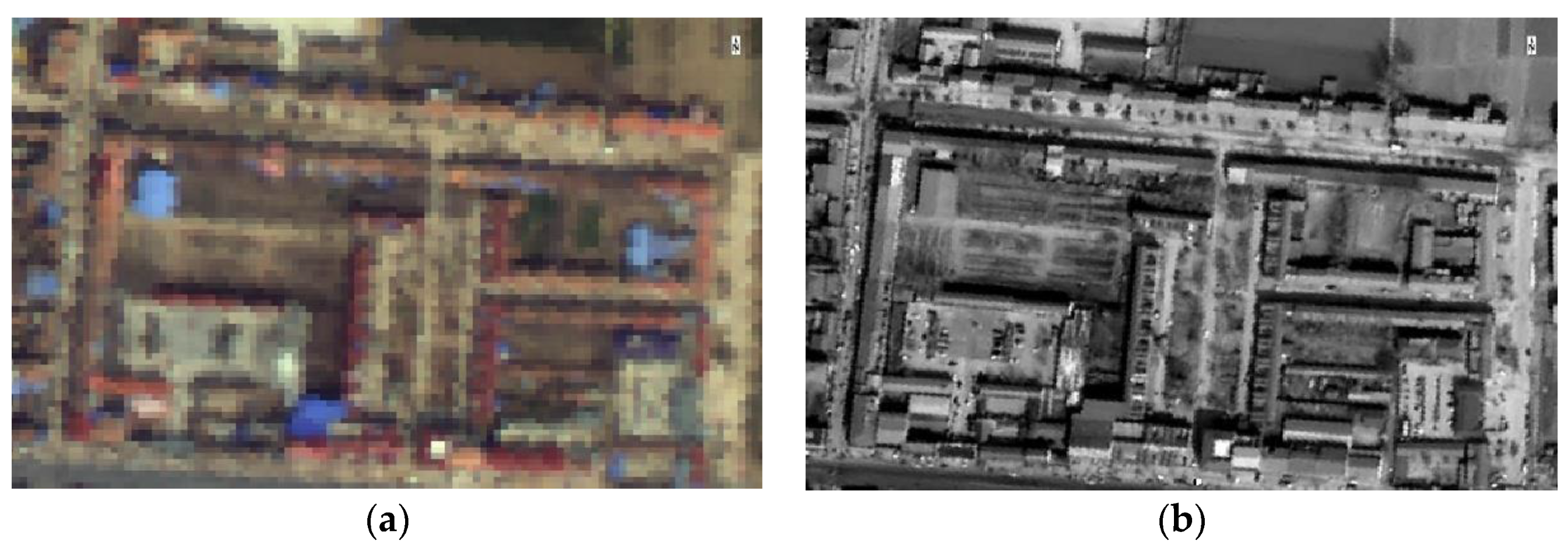

2.1.2. Data Source

2.2. Technical Process

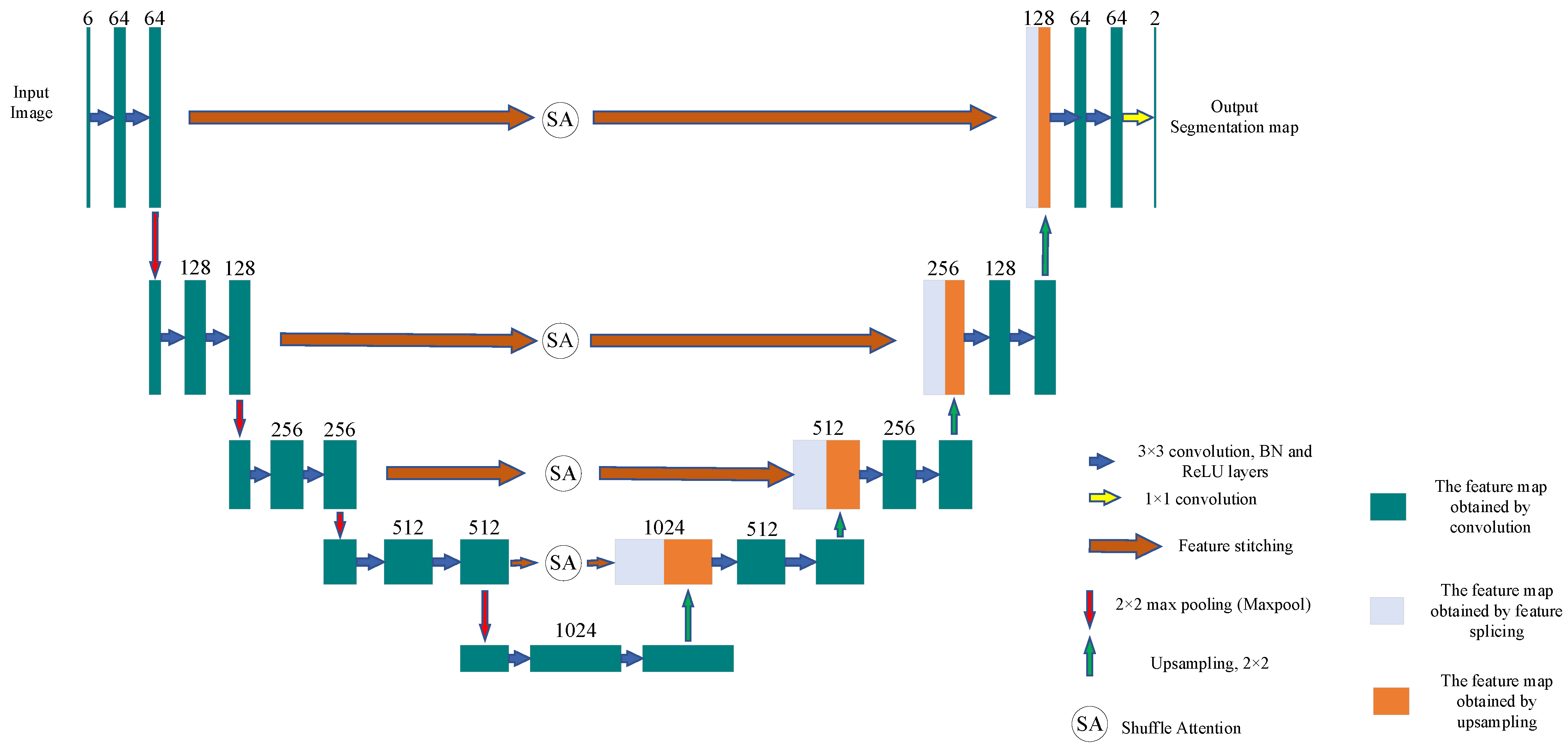

2.3. SUNet Network Construction

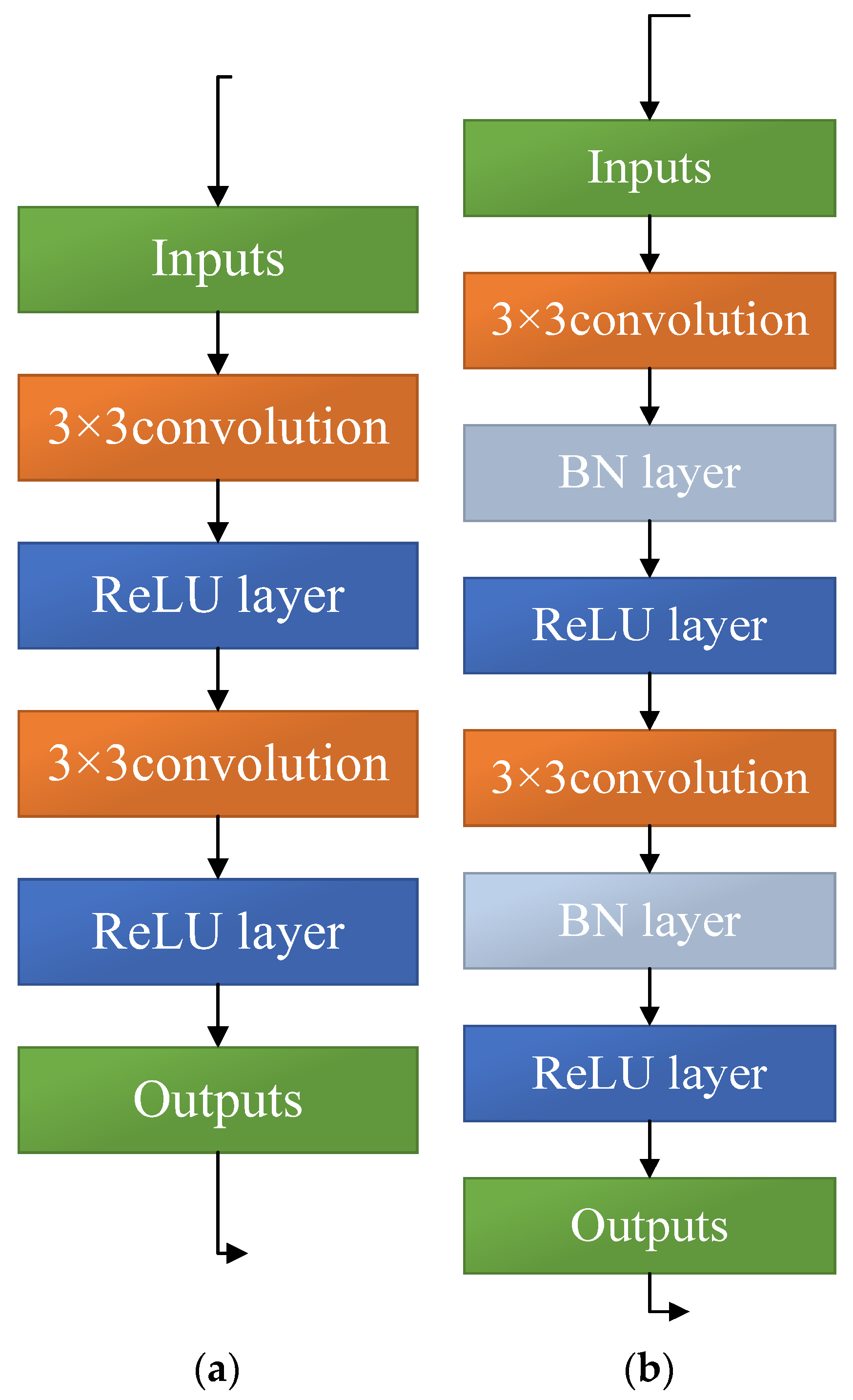

2.3.1. Batch Normalization
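As a reference for how a batch normalization layer behaves, the following minimal NumPy sketch implements the training-mode forward pass described by Ioffe and Szegedy: each channel is normalized with the mean and variance computed over the batch and spatial axes, then rescaled by a learnable gamma and shifted by a learnable beta. It is a generic illustration rather than the SUNet code; the function name is hypothetical, and the running statistics used at inference time are omitted.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """BatchNorm forward pass (training mode) for an (N, C, H, W) array.

    gamma and beta are per-channel arrays of length C.
    """
    # Per-channel statistics over the batch and both spatial axes.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)  # biased variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Learnable per-channel scale and shift restore representational power.
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)
```

With gamma = 1 and beta = 0 every channel of the output has approximately zero mean and unit variance within the mini-batch, which is what stabilizes the distribution of activations fed to the next layer.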

2.3.2. Shuffle Attention (SA)
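The Shuffle Attention unit from SA-Net (Zhang and Yang) can be sketched in NumPy as follows, assuming an (N, C, H, W) layout with C divisible by 2 × groups. Channels are split into groups; within each group, one half is gated by a channel-attention branch (global average pooling, per-channel scale and shift, sigmoid) and the other half by a spatial-attention branch (per-channel normalization over H and W, scale and shift, sigmoid). The halves are then concatenated and mixed with a channel shuffle. The group count and parameter values used in SUNet are not given in this text, and the function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_shuffle(x, groups):
    """Interleave the channels of an (N, C, H, W) array across `groups`."""
    n, c, h, w = x.shape
    x = x.reshape(n, groups, c // groups, h, w)
    return x.transpose(0, 2, 1, 3, 4).reshape(n, c, h, w)


def shuffle_attention(x, groups, cw, cb, sw, sb, eps=1e-5):
    """Shuffle Attention forward pass (sketch after SA-Net).

    cw, cb: per-channel scale/shift of the channel branch, length C // (2 * groups).
    sw, sb: per-channel scale/shift of the spatial branch, same length.
    """
    n, c, h, w = x.shape
    assert c % (2 * groups) == 0, "C must be divisible by 2 * groups"
    half = c // (2 * groups)

    def per_channel(v):
        return np.asarray(v, dtype=float).reshape(1, half, 1, 1)

    # Split channels into groups, then each group into two halves.
    x = x.reshape(n * groups, c // groups, h, w)
    x_ch, x_sp = x[:, :half], x[:, half:]

    # Channel branch: global average pooling -> scale/shift -> sigmoid gate.
    pooled = x_ch.mean(axis=(2, 3), keepdims=True)
    x_ch = x_ch * sigmoid(per_channel(cw) * pooled + per_channel(cb))

    # Spatial branch: normalize each channel over H and W (as GroupNorm does
    # when every group holds one channel) -> scale/shift -> sigmoid gate.
    mu = x_sp.mean(axis=(2, 3), keepdims=True)
    var = x_sp.var(axis=(2, 3), keepdims=True)
    x_norm = (x_sp - mu) / np.sqrt(var + eps)
    x_sp = x_sp * sigmoid(per_channel(sw) * x_norm + per_channel(sb))

    # Re-assemble the groups and mix information across the two halves.
    out = np.concatenate([x_ch, x_sp], axis=1).reshape(n, c, h, w)
    return channel_shuffle(out, 2)
```

The channel shuffle at the end is what lets the channel-attention and spatial-attention halves exchange information in later layers; without it, each half would stay confined to its own sub-features.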

2.4. Experimental Setup

2.4.1. Lab Environment

2.4.2. Data Pre-Processing

2.4.3. Loss Function
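For reference, the focal loss of Lin et al. for a binary winter wheat / non-winter wheat pixel takes the standard form below, where p is the predicted probability of the winter wheat class, y ∈ {0, 1} is the label, α_t is the class-balancing weight and γ is the focusing parameter. The values of α and γ used for SUNet are not stated in this text.

$$
\mathrm{FL}(p_t) = -\alpha_t\,(1 - p_t)^{\gamma}\,\log(p_t),
\qquad
p_t = \begin{cases} p, & y = 1,\\ 1 - p, & y = 0, \end{cases}
\qquad
\alpha_t = \begin{cases} \alpha, & y = 1,\\ 1 - \alpha, & y = 0. \end{cases}
$$

Setting γ = 0 and α_t = 1 recovers ordinary cross-entropy. As a worked example with γ = 2, a pixel already classified with p_t = 0.9 has its cross-entropy term scaled by (1 - 0.9)² = 0.01, whereas a hard pixel with p_t = 0.5 is scaled by 0.25, so training concentrates on the hard pixels.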

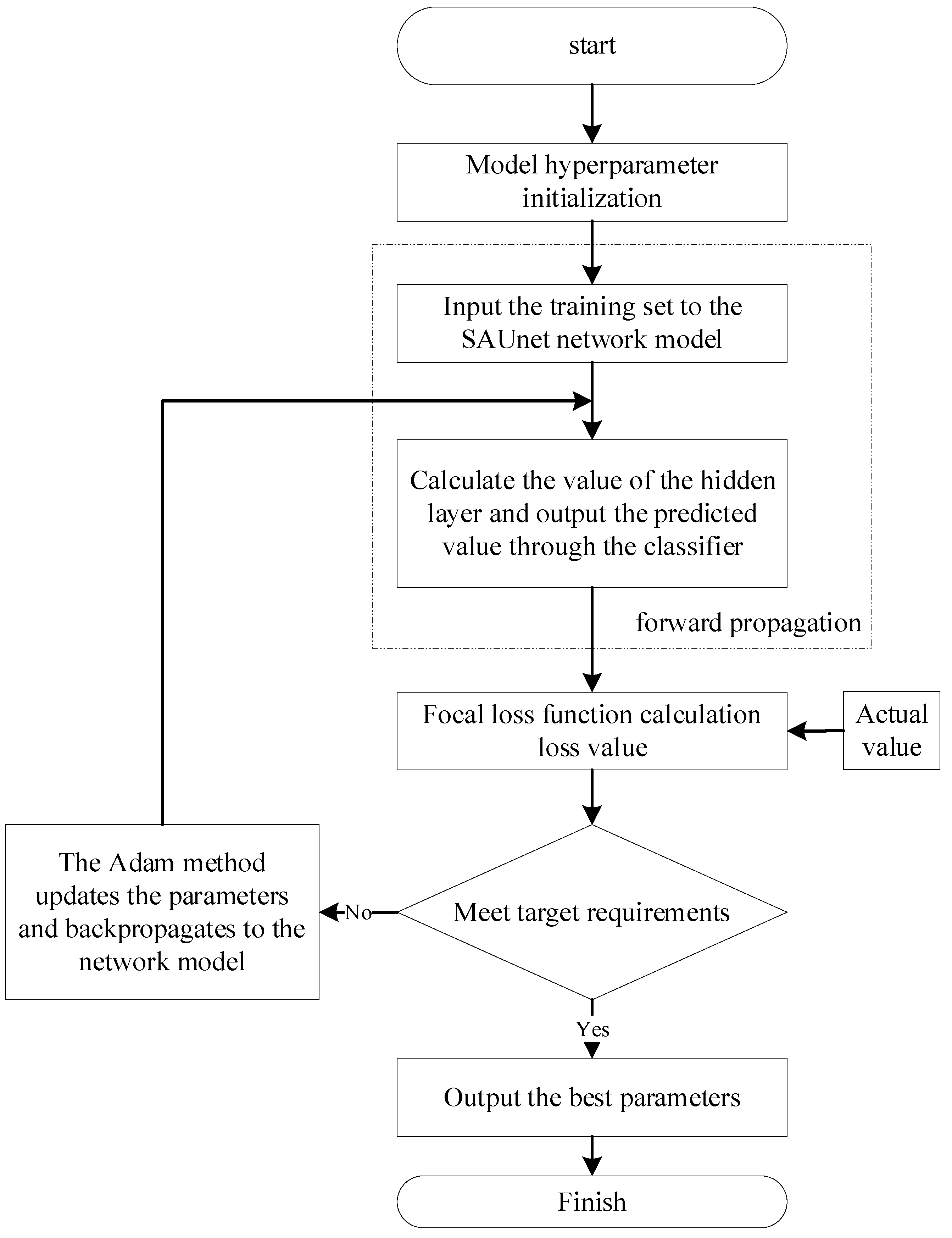

2.4.4. Training Process

- (1) The hyperparameters for training the network model are initialized.

- (2) The training data are fed into the network model for the forward pass. Features are extracted by the convolution, pooling and deconvolution layers in the encoder and decoder, and the classification layer then classifies every sample pixel to obtain a set of predicted values xp.

- (3) The focal loss function is used to calculate the error between the predicted values xp and the ground-truth labels. If the error meets the target requirement, training ends; otherwise, training continues.

- (4) The loss is back-propagated through the network to compute the parameter gradients, and the Adam optimizer uses these gradients to update the parameters of the SUNet model, thereby reducing the loss value.
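The four training steps above can be illustrated with a deliberately small NumPy sketch. To keep it self-contained, a per-pixel linear classifier over band features stands in for SUNet (an assumption; the real model is a convolutional encoder-decoder), the focal loss uses illustrative values α = 0.25 and γ = 2, the gradient of the loss with respect to the logits is derived analytically, and Adam updates the parameters. The learning rate of 0.01 suits this toy problem and differs from the 0.0001 used for SUNet. All function names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def focal_loss_and_grad(z, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss over pixels and its gradient w.r.t. the logits z."""
    p = sigmoid(z)
    pt = np.clip(np.where(y == 1, p, 1.0 - p), eps, 1.0 - eps)
    at = np.where(y == 1, alpha, 1.0 - alpha)
    loss = -at * (1.0 - pt) ** gamma * np.log(pt)
    # d(pt)/dz = +pt(1 - pt) for y = 1 and -pt(1 - pt) for y = 0.
    sign = np.where(y == 1, 1.0, -1.0)
    dz = sign * at * (gamma * pt * (1.0 - pt) ** gamma * np.log(pt)
                      - (1.0 - pt) ** (gamma + 1))
    return loss.mean(), dz / z.size


def train(x, y, lr=1e-2, max_steps=500, target=1e-3, seed=0):
    """Toy training loop: x is (pixels, bands), y holds 0/1 labels."""
    # (1) Initialize hyperparameters, parameters and Adam state.
    beta1, beta2, adam_eps = 0.9, 0.999, 1e-8
    rng = np.random.default_rng(seed)
    params = np.append(rng.normal(scale=0.01, size=x.shape[1]), 0.0)  # weights + bias
    m = np.zeros_like(params)
    v = np.zeros_like(params)
    loss = np.inf
    for t in range(1, max_steps + 1):
        # (2) Forward pass: one logit per pixel.
        z = x @ params[:-1] + params[-1]
        # (3) Focal loss against the labels; stop once the target is met.
        loss, dz = focal_loss_and_grad(z, y)
        if loss < target:
            break
        # (4) Backpropagate to parameter gradients, then take an Adam step.
        grad = np.append(x.T @ dz, dz.sum())
        m = beta1 * m + (1 - beta1) * grad
        v = beta2 * v + (1 - beta2) * grad ** 2
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        params = params - lr * m_hat / (np.sqrt(v_hat) + adam_eps)
    return params, loss
```

In a real framework, step (4) is handled by automatic differentiation and the framework's Adam optimizer; the hand-written gradient here only makes the chain from loss to parameter update explicit.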

2.4.5. Evaluation Metrics
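The metrics reported in the result tables (OA, precision, recall, F1, mIoU and kappa) can all be derived from a binary confusion matrix. The sketch below assumes that winter wheat (label 1) is the positive class for precision, recall and F1, and that mIoU averages the IoU of the two classes; these conventions are assumptions, and the function name is hypothetical.

```python
def segmentation_metrics(tp, fp, fn, tn):
    """Binary segmentation metrics from confusion-matrix counts.

    Winter wheat (label 1) is treated as the positive class.
    """
    n = tp + fp + fn + tn
    oa = (tp + tn) / n                          # overall accuracy
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou_wheat = tp / (tp + fp + fn)
    iou_other = tn / (tn + fn + fp)
    miou = (iou_wheat + iou_other) / 2          # mean IoU over the two classes
    # Expected chance agreement from predicted and actual class totals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return {"OA": oa, "precision": precision, "recall": recall,
            "F1": f1, "mIoU": miou, "kappa": kappa}
```

For example, with TP = 40, FP = 10, FN = 5 and TN = 45, the sketch gives OA = 0.85, precision = 0.80, F1 ≈ 0.842, mIoU ≈ 0.739 and kappa = 0.70.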

2.4.6. Experimental Description

3. Experimental Section and Results

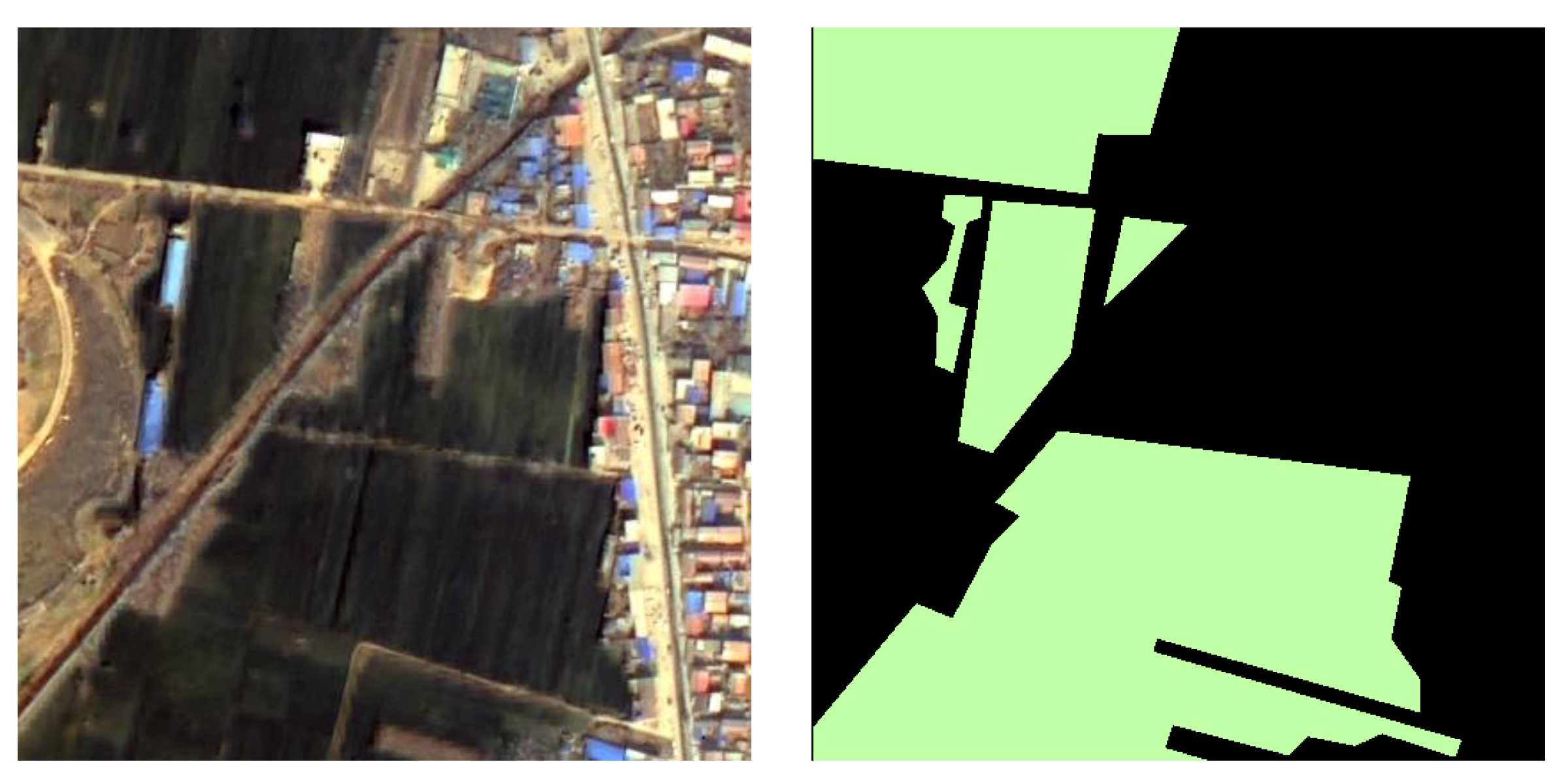

3.1. Datasets

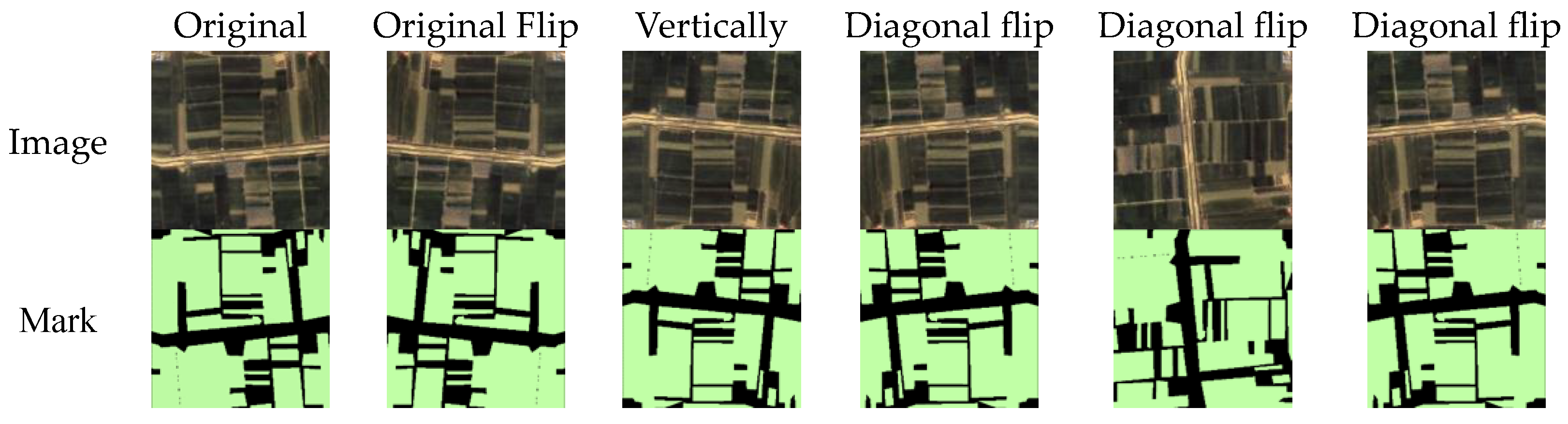

Data Augmentation

3.2. Results

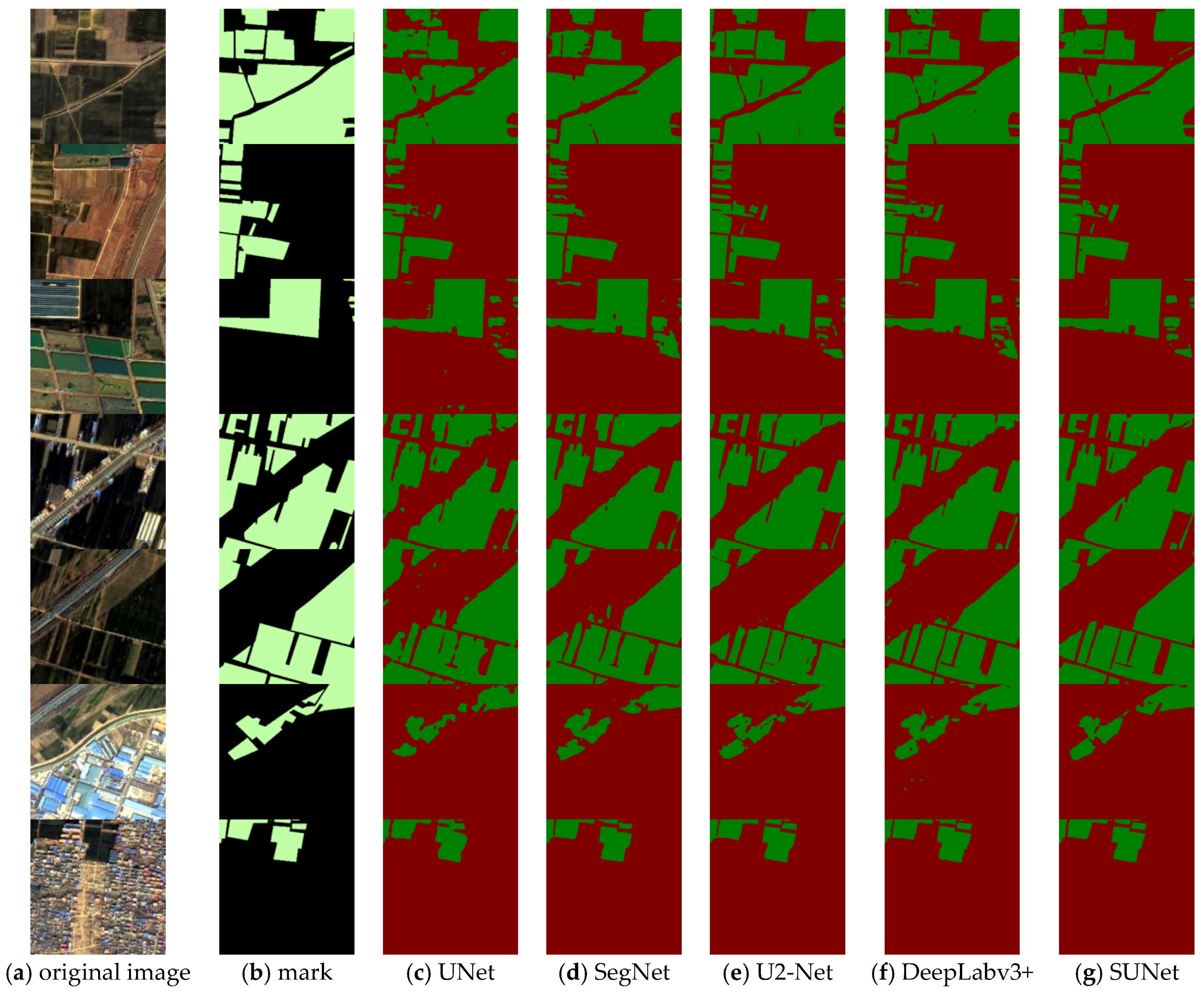

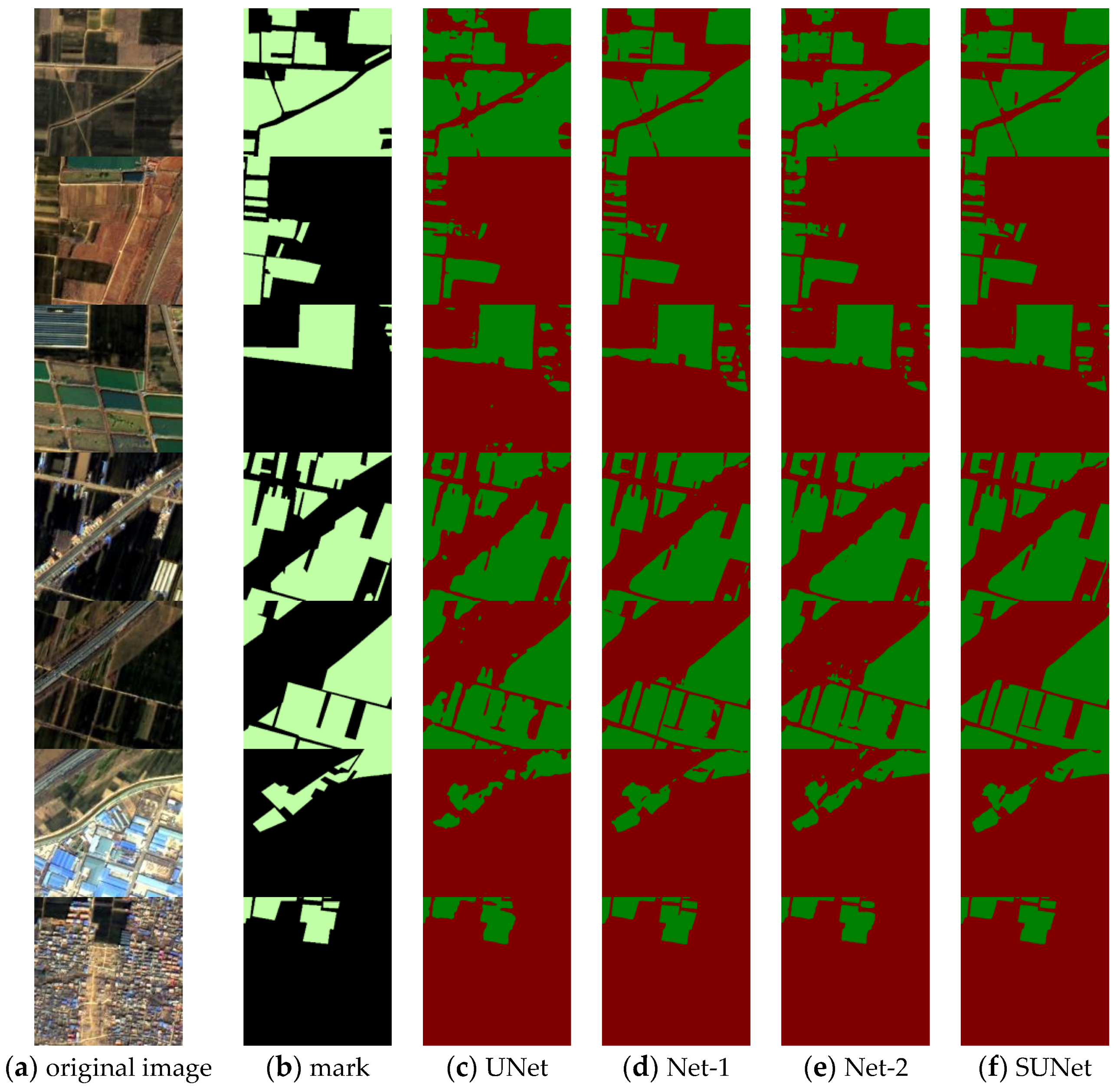

3.2.1. Comparative Test Results

3.2.2. Ablation Experiment Results

4. Discussion

4.1. Comparative Test Discussion

4.2. Ablation Experiment Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Sensor Type | Spatial Resolution | Band Number | Spectral Range | Swath Width | Remark |
|---|---|---|---|---|---|
| Panchromatic | 1 m | 1 | 0.45~0.90 µm | 45 km | |
| Multispectral | 4 m | 2 | 0.45~0.52 µm | 45 km | Blue |
| Multispectral | 4 m | 3 | 0.52~0.59 µm | 45 km | Green |
| Multispectral | 4 m | 4 | 0.63~0.69 µm | 45 km | Red |
| Multispectral | 4 m | 5 | 0.77~0.89 µm | 45 km | Near infrared |

| Environment | Configuration |
|---|---|
| Deep learning framework | PaddlePaddle-gpu 2.2.0 |
| Programming language | Python 3.7 |
| CPU | Intel® Xeon® Gold 6271C (2.6 GHz) |
| GPU | Tesla V100 (32 GB) |
| RAM | 32 GB |
| Hard disk | 100 GB |

| Label Category | Label Value | March Features | May Features |
|---|---|---|---|
| Non-winter wheat | 0 | | |
| Winter wheat | 1 | | |

| Hyperparameter | Value |
|---|---|
| Epochs | 200 |
| Batch size | 4 |
| Initial learning rate | 0.0001 |
| Learning rate decay method | Cosine annealing decay |
| Optimizer | Adam |
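With the settings in the hyperparameter table, cosine annealing decay lowers the learning rate from its initial value of 0.0001 along half a cosine period. The sketch below uses the standard closed form η_t = η_min + ½(η_0 - η_min)(1 + cos(πt / T)), assuming the rate is updated once per epoch over T = 200 epochs and decays to a minimum of η_min = 0; neither the update granularity nor the minimum is stated in the table, and the function name is hypothetical.

```python
import math


def cosine_annealing_lr(epoch, base_lr=1e-4, total_epochs=200, eta_min=0.0):
    """Closed-form cosine annealing: base_lr at epoch 0, eta_min at total_epochs."""
    progress = min(epoch, total_epochs) / total_epochs
    return eta_min + 0.5 * (base_lr - eta_min) * (1.0 + math.cos(math.pi * progress))


# Learning rate for every epoch boundary from 0 to 200.
schedule = [cosine_annealing_lr(e) for e in range(201)]
```

Under these assumptions the rate is 0.0001 at epoch 0, 0.00005 at epoch 100 and 0 at epoch 200. The schedule decays slowly at first, fastest mid-training, and slowly again near the end, which allows fine adjustments in the final epochs.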

| Index | UNet | SegNet | U2-Net | DeepLabv3+ | SUNet |
|---|---|---|---|---|---|
| mIoU | 0.9261 | 0.9348 | 0.9437 | 0.9422 | 0.9514 |
| OA | 0.9663 | 0.9703 | 0.9746 | 0.9739 | 0.9781 |
| Precision | 0.9460 | 0.9490 | 0.9596 | 0.9560 | 0.9619 |
| Recall | 0.9497 | 0.9597 | 0.9619 | 0.9633 | 0.9707 |
| F1 | 0.9478 | 0.9543 | 0.9608 | 0.9596 | 0.9663 |
| Kappa | 0.9229 | 0.9324 | 0.9419 | 0.9403 | 0.9501 |
| Model size | 51.1 M | 113 M | 168 M | 175 M | 51.2 M |

| Index | UNet | Net-1 | Net-2 | SUNet |
|---|---|---|---|---|
| mIoU | 0.9261 | 0.9454 | 0.9361 | 0.9514 |
| OA | 0.9663 | 0.9753 | 0.9710 | 0.9781 |
| Precision | 0.9460 | 0.9604 | 0.9516 | 0.9619 |
| Recall | 0.9497 | 0.9634 | 0.9588 | 0.9707 |
| F1 | 0.9478 | 0.9619 | 0.9552 | 0.9663 |
| Kappa | 0.9229 | 0.9436 | 0.9337 | 0.9501 |
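As an arithmetic consistency check on the comparison and ablation tables above, F1 should equal the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short script below recomputes F1 from the reported precision and recall (UNet appears in both tables with identical values, so it is listed once). The recomputed values agree with the reported F1 to within 0.0001, consistent with the inputs themselves being rounded to four decimals.

```python
# (precision, recall, reported F1) taken from the comparison and ablation tables
reported = {
    "UNet": (0.9460, 0.9497, 0.9478),
    "SegNet": (0.9490, 0.9597, 0.9543),
    "U2-Net": (0.9596, 0.9619, 0.9608),
    "DeepLabv3+": (0.9560, 0.9633, 0.9596),
    "Net-1": (0.9604, 0.9634, 0.9619),
    "Net-2": (0.9516, 0.9588, 0.9552),
    "SUNet": (0.9619, 0.9707, 0.9663),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for name, (p, r, f1_reported) in reported.items():
    print(f"{name:<11} recomputed F1 = {f1_score(p, r):.4f}, reported F1 = {f1_reported:.4f}")
```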


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, K.; Zhang, Z.; Liu, L.; Miao, R.; Yang, Y.; Ren, T.; Yue, M. Research on SUnet Winter Wheat Identification Method Based on GF-2. Remote Sens. 2023, 15, 3094. https://doi.org/10.3390/rs15123094
