Abstract

Land Use and Land Cover (LULC) classification using remote sensing data is a challenging problem that has evolved with the update and launch of new satellites in orbit. As new satellites are launched with higher spatial and spectral resolution and shorter revisit times, LULC classification has evolved to take advantage of these improvements. However, these advancements also bring new challenges, such as the need for more sophisticated algorithms to process the increased volume and complexity of data. In recent years, deep learning techniques, such as convolutional neural networks (CNNs), have shown promising results in this area. Training deep learning models with complex architectures require cutting-edge hardware, which can be expensive and not accessible to everyone. In this study, a simple CNN based on the LeNet architecture is proposed to perform LULC classification over Sentinel-2 images. Simple CNNs such as LeNet require less computational resources compared to more-complex architectures. A total of 11 LULC classes were used for training and validating the model, which were then used for classifying the sub-basins. The analysis showed that the proposed CNN achieved an Overall Accuracy of 96.51% with a kappa coefficient of 0.962 in the validation data, outperforming traditional machine learning methods such as Random Forest, Support Vector Machine and Artificial Neural Networks, as well as state-of-the-art complex deep learning methods such as ResNet, DenseNet and EfficientNet. Moreover, despite being trained in over seven million images, it took five h to train, demonstrating that our simple CNN architecture is only effective but is also efficient.

1. Introduction

Accurate and up-to-date information about Land Use and Land Cover (LULC) classification is essential for a wide range of applications, from understanding natural and anthropogenic phenomena on the Earth’s surface and climate modeling [1] to territorial and urban planning [2]. LULC classes are important for understanding the impacts of human activities on the environment and for making informed decisions about how to manage and protect natural resources. Land Use (LU) refers to the human activities that take place on the Earth’s surface, such as agriculture, urbanization, and forestry [3]. However, Land Cover (LC) describes the physical characteristics of the Earth’s surface, such as vegetation, water bodies and bare soil. To obtain a LULC classification product using Remote Sensing (RS), it is necessary to assign a class label to every pixel or object of an image [4]. RS techniques involve the use of satellite or airborne sensors to capture information about the electromagnetic radiation emitted or reflected from the Earth’s surface [5]. This information can be processed and analyzed to extract various types of geospatial data, including LULC products. LULC products are continuously developing as RS and methods grow. However, there still exists low consistency among LULC products due to heterogeneity conditions, both in LULC and in the orography of the region [2]. This leads to a high intraclass and a low interclass variance that makes the classification process a complex task in particular regions, such as mountainous ones.

There are various methods to generate LULC maps, each with its advantages and disadvantages. Manual techniques can be simple and straightforward approaches, but they become impractical when dealing with large study areas and high number of classes [6]. In such cases, using artificial intelligence algorithms is a more efficient alternative. Among these algorithms, traditional Machine Learning (ML) and Deep Learning (DL) are techniques that have gained popularity due to their accuracy, robustness, and ability to analyze large datasets with complex features. By utilizing these methods, is possible to obtain a high-quality LULC map with relatively less time and effort [7]. LULC classifications using ML algorithms have been a popular research topic in recent years, with many articles exploring this area [8,9,10]. The most commonly used algorithms are: Artificial Neural Networks (ANN), Decision Tree (DT), K-Nearest Neighbors (KNN), Random Forest (RF), Support Vector Machine (SVM), XGBoost and LightGBM [11,12,13]. These traditional ML algorithms usually work very well when the study area is small and the classes to be mapped are relatively few. In [10], the authors presented a hybrid approach for the LULC classification of multitemporal, multiregion, and multisensor Landsat data using Classification And Regression Trees (CART), DT and a SVM-based clustering in cascade in order to obtain a total of eleven classes (including clouds and shadows). Swetanisha et al. [11] presented a study on the use of ML models to classify LULC in seven classes. The study provides useful insights into the application of multiple ML models, including RF, SVM and KNN for LULC classification, and presents a comprehensive evaluation of different models. Saralioglu et al. [14] compared the performance of various ML methods for the LULC classification of forest and agriculture dominated landscapes using Landsat-8 and Landsat-9 satellite images. All the above works proposed methods that rely on traditional ML supervised or unsupervised techniques, which may not be sufficient to capture complex relationships within the data. Additionally, the spatial resolution of Landsat imagery is not enough if you want to perform a detailed LULC classification. Therefore, a better option in terms of spatial resolution is to use Sentinel-2 (S2) images. In the research conducted by Park et al. [13], they applied ML algorithms such as RF, XGBoost, and a LightGBM for LULC classification, and presented the model tuning process for each algorithm using S2, Landsat and a normalized Digital Elevation Model (DEM). Alshari et al. [12] proposed to increase the accuracy of ML models for mapping seven different LULC classes by combining a neural-based method with an object-based method, producing a model addressed by an ANN (limited parameters) and a RF (hyperparameter) called ANN_RF. They used S2 and Landsat 8 and showed that the proposed model had better results in the OA using S2 data. Razafinimaro et al. [15] used supervised classification with six traditional ML models. Analysis factors were used to further investigate their importance for S2, Landsat 8 and other RS information. The results showed that the KNN achieved the highest accuracy during the analysis of medium and low spatial resolution images with the number of spectral bands lower than or equal to four. The RF completely dominated the other analysis cases. The three previous articles worked with S2 images, which allowed them to obtain more detailed LULC products. Nevertheless, those studies only focused on a limited number of LULC categories, and it is unclear how well the algorithms would perform when classifying more complex or diverse LULC types. Additionally, the studies did not provide a detailed analysis of the impact of image pre-processing techniques on classification accuracy. In general, these six works [10,11,12,13,14,15] and other research in the literature [16] do not address the issue of imbalanced data [17], which is a common problem in LULC classification tasks. Furthermore, when working with areas with large spatial extent and many classes, the above algorithms did not work properly. In this cases, DL techniques, such as convolutional neural networks (CNNs), have shown promising results in this field [4]. CNNs are a type of artificial neural network that are particularly well-suited for image processing tasks, as they are able to automatically learn relevant features from the input data [18]. Sánchez et al. [19] compared different traditional ML and CNN algorithms to identify nineteen LU classes using sequences of S2 images around a relatively small area. In this research they showed that the best results were achieved by a deep neural network containing two-dimensional convolutional layers (Conv2D) and principal component analysis (PCA). Kroupi et al. [20] developed a pipeline for analyzing S2 images using a Deep Convolutional Neural Network (DCNN) for an automatic unsupervised ten-class LULC classification. Taberner et al. [21] evaluated the performance and explained the operation of a DL algorithm, based on a bi-directional recurrent network of long-short-term memory (2-BiLSTM). In this research, sixteen agricultural LULC classes were classified using S2 data. The article details the methodology and experimental results, showing that the proposed approach outperformed traditional ML approaches for this task. The last three discussed works do not provide detailed information about the computational time required to train and test the model. This is very important when deciding which model to use/replicate for similar tasks. Another common limitation of the above works, as with others in the literature [7,22,23], is the high computational resources needed, especially when dealing with large and complex datasets; this is due to complex CNN architectures [7,22,23].

To deal with the different issues highlighted above, in this research we propose a simple but robust CNN architecture capable of mapping eleven LULC classes in Andean and mountainous areas with a high accuracy percentage. We use a simple architecture that overcomes several challenges associated with more complex CNN architectures, such as high computational costs [23] and longer wait times for results. By using this approach, we aim to provide an efficient and accessible solution for LULC classification using DL techniques [20]. In addition, we describe in detail the hyperparameters (HP) optimization process. This is something that is omitted or described very briefly in LULC classification studies, leading to a lack of information about the HP optimization process in the field of RS [13].

This study aims at automatically accurately and efficiently mapping eleven specific LULC classes that are prevalent in the study area using a simple yet robust CNN and S2 images. The selection of these classes were based on their importance in characterizing the LULC patterns and environmental conditions in Andean sub-basins. The accurate identification and mapping of these LULC classes are essential for understanding the changes in the Earth’s surface over time and for assessing the environmental impacts of human activities.

The remainder of this paper is structured as follows. The study area, the RS dataset, and the proposed approach for LULC classification are described in Section 2. Section 3 illustrates the experimental results obtained on the study area. In Section 4, we discuss the results of our study, as well as provide some comparative analyses with other ML and DL algorithms. Finally, conclusions and future works are given in Section 5.

2. Materials and Methods

2.1. Study Area and RS Data

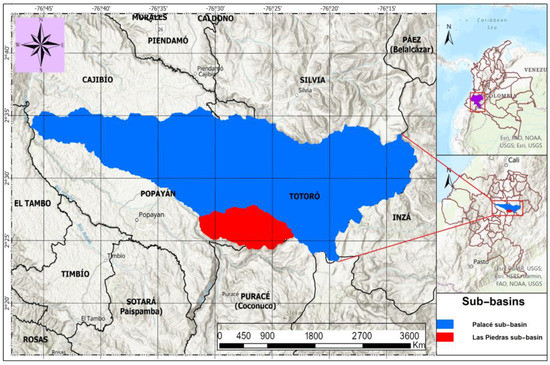

The study areas selected for this research are the Las Piedras and Palacé River sub-basins, both located in the southwest part of Colombia. The study areas are presented in Figure 1. The Las Piedras River sub-basin [24] (in red) covers an area of approximately 66 km2 and is part of the larger Cauca River basin [25]. This sub-basin is characterized by a rugged topography, with steep slopes and narrow valleys. The main river, Las Piedras River, originates in the high Andean mountains and flows towards the southwest before merging with the Cauca River. The sub-basin is home to a rich array of flora and fauna, including several endemic species, and provides important ecosystem services such as water supply for agricultural and domestic use [24]. The Palacé River sub-basin [26] (in blue) covers an area of approximately 645 km2. It is located in the central and northeastern part of the Cauca department and includes the municipalities of Totoró, Cajibío, Silvia and Popayán, and is characterized by a mix of tropical and Andean ecosystems. The sub-basin is home to a rich diversity of flora and fauna, including several endangered and endemic species. The Palacé sub-basin is formed by six tributaries: Cofre river, Blanco river, Molino river, Chamizal creek, Guangubio river, and Minchicao river. The upper part of the sub-basin where Palacé river originates exhibits typical high-altitude páramo conditions, which are part of the Guanacas-Puracé-Coconucos páramo complex located in the Andean region spanning the Huila and Cauca departments. Agriculture is the main source of income in the upper part of the Palacé sub-basin, with potato cultivation and extensive livestock breeding serving as the major economic activities in the region [27]. These two sub-basins are important ecological and socio-economical resources that provide critical ecosystem services and support a rich diversity of flora and fauna in Colombia.

Figure 1.

Location of the study area. Red: Las Piedras sub-basin, Blue: Palacé sub-basin.

The proposed approach is developed for its application over images acquired by the S2 series satellite [28] over these two Andean regions. The goal of this research is to have a multiclass LULC classification over the Las Piedras and Palacé River sub-basins according to the CORINE Land Cover (Coordination of Information on the Environment) methodology [29], also known as CLC, adapted for Colombia. The study area is located within the intertropical convergence zone, which is an area near the equator where trade winds from the northern and southern hemispheres converge. This convergence often results in the formation of cumulus clouds, which can lead to high levels of cloud cover in the region [30]. Therefore, obtaining clear images of the area of interest is challenging due to the frequent presence of clouds. To improve the chances of acquiring images with lower levels of cloud cover over the study area, data from both Sentinel-2A and Sentinel-2B satellites were used [28]. The S2 satellite series is well-suited for this type of research because it provides high-spatial-resolution images (up to 10 m per pixel) with a wide coverage area, allowing for more detailed observations in comparison with Landsat satellite series. Despite using these resources, obtaining images with minimal cloud coverage was challenging. The most recent images of the study area without significant cloud coverage were obtained in 2020 and were used in this study. To generate images that cover the entire area of both sub-basins without any cloud or shadow interference, it was essential to merge the two tiles listed in Table 1 with the help of suitable pre-processing techniques, as described later. Due to the size of the two sub-basins, a single S2 tile is insufficient to cover the entire extent, thus requiring more images to map the areas of interest. To obtain the images required for the study, the platform provided by the European Space Agency (ESA) for the dissemination of data from Sentinel missions was used [31]. Specifically, the Level-2A product was downloaded, which includes reflectance data that have already been corrected for atmospheric effects using the Sen2Cor method [32,33]. This tool is used to remove the effects of atmospheric haze, clouds, and aerosols that can interfere with the accuracy of RS data. Additionally, a DEM with a resolution of 12.5 m was acquired from the Japan Aerospace Exploration Agency (JAXA) through the ALOS PALSAR satellite mission [34] to provide topographic information about the study area. The DEM was acquired between 2010 and 2011 and resampled to 10 m for compatibility with the S2 data.

Table 1.

S2 tiles merged to build the dataset for training the proposed model.

2.2. Proposed Approach for LULC Classification in Andean Sub-Basins in Colombia

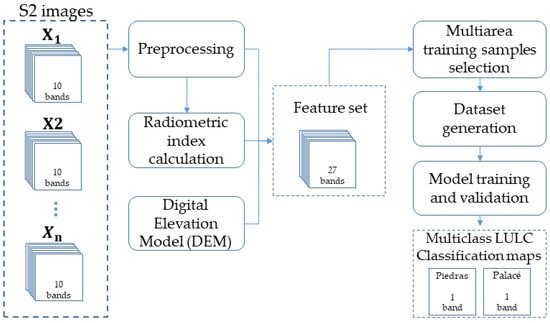

In this study, we propose an approach that combines RS and DL to perform LULC classification automatically. The proposed approach (see Figure 2) consists of several stages that are carefully designed to achieve accurate and reliable results at a low computational time. The first stage involves gathering S2 satellite images. The next step is data pre-processing, where the collected images undergo various corrections and enhancements to improve their quality and ensure consistency across the entire satellite data information. Later, some radiometric indices are calculated to assist in distinguishing between different LULC types. In the subsequent stage, a feature set is created by combining the pre-processed bands of the original S2 images, the calculated indices, and DEM. This feature set provides a more comprehensive representation of the LULC types and helps in identifying the boundaries between different classes. Next, training samples are selected to construct the dataset that will be used to train and validate the DL model. Finally, a map is generated for each of the sub-basins analyzed in this research through the multiclass LULC classification. The complete methodology is designed to exploit the spectral and spatial information available in the S2 RS data and to leverage the capabilities of DL algorithms for an accurate and efficient LULC classification, while guaranteeing a lower computational burden. In the following sections, we will provide a detailed description of each stage of the proposed approach.

Figure 2.

General block scheme of the proposed approach for LULC classification of Andean sub-basins in Colombia.

2.2.1. Data Pre-Processing and Harmonization

Data pre-processing and harmonization are crucial steps in the LULC classification process. After clipping the S2 images, an outlier removal is conducted to eliminate pixels with values that are significantly different from the rest of the pixels in the image. These outliers can occur due to a variety of factors, such as sensor errors, atmospheric disturbances, and other components. By removing these values, the accuracy of subsequent analyses could be improved [35]. Next, a filtering of clouds and shadows is performed since they can significantly impact the reflectance values of the land surface. To address this issue, the ESA has developed an algorithm that filters clouds and shadows automatically using the SCL map obtained with the Sen2Cor algorithm [32,33]. Whenever a S2 image has any amount of clouds/shadows, the SCL map is used to detect and remove them. The filtered portion is then replaced by a portion of another image, as close as possible in date to the target image, but without clouds/shadows in that specific area. As a result, the images used for LULC classification in both sub-basins are free of clouds/shadows, allowing us to obtain a classification of every single pixel in the image. Even though this is not the most accurate method, it produces reliable enough results for the analysis carried out in this research. After completing the previous steps, a resampling of S2 bands that were not originally at 10 m spatial resolution is performed. Specifically, the six bands originally at 20 m were downscaled to 10 m using the multiresolution analysis fusion method with the high-pass modulation presented in [36]. This is a pansharpening technique used in order to gain the best at the highest spatial and spectral resolutions for all the bands. After this, the harmonization process is executed by applying histogram matching. Note that the images in Table 1 have not been acquired on the same date, thus requiring radiometric homogenization to guarantee that the proposed approach works similarly in both study areas. Employing this method guarantees that the pixel values in the images are uniformly distributed, a critical component in the examination of RS data. Then, to create a complete image of each sub-basin, the different tiles are joined. Images are rescaled in the range [0, 1], instead of keeping them in the [0, 10,000] range, for DL models to work in the required configurations.

2.2.2. Radiometric Indices

To differentiate between the eleven selected LULC classes, that is to say water bodies, páramo, urban areas, planted forest, pastures, natural grassland, agricultural areas, dense forest, mixed forest, mineral extraction areas, and shrubs, supplementary features were required. In addition to the original ten bands extracted from the S2 images, the B1, B9, and B10 bands were excluded from consideration, as they are specifically intended for atmospheric correction purposes. To generate additional features, radiometric indices were calculated. These indices are combinations of spectral bands that can highlight specific features and patterns in the data. They help to identify areas with vegetation, water, urban areas, and bare soil. When used in combination with DL models, radiometric indices can enhance the accuracy and efficiency of the models by providing additional information that may not be readily apparent in the raw satellite imagery [25]. The selection of a specific radiometric index is related to its capability to highlight certain LULC classes, allowing its separation from the other LULCs. In this research, the authors propose the use of sixteen indices (see Table 2). Each index is sensitive to specific physical and biochemical properties of vegetation and soils, providing unique and complementary information about the eleven LULC types used in this research. For instance, the Adjusted Transformed Soil Adjusted VI (ATSAVI) and Soil Adjusted Vegetation Index (SAVI) are useful in distinguishing between natural grassland and agricultural areas [37,38], while the Green Leaf Index (GLI) and Enhanced Vegetation Index (EVI) can detect differences in forest density [39]. The Chlorophyll Index Red Edge (CIRedEdge) and Coloration Index (CI) are particularly useful in identifying different vegetation types such as páramo, shrubs, and forest [40,41]. The Normalized Difference Water Index (NDWI), the Simple Ratio—Ferrous Minerals (FM), and the Simple Ratio—Iron Oxide (IO) are effective in detecting water bodies, urban areas and mineral extraction areas [42,43]. Additionally, the DEM for each sub-basin is also used to provide additional information. The DEM information can help identify areas of different elevations and slopes, which can be useful in identifying mountainous regions, valleys, and other terrain features [44]. In this study, the inclusion of the sixteen radiometric indices was carefully chosen by selecting them based on their ability to enhance specific features of interest in the classification process. While including more indices could potentially increase the level of detail in the classification, it was found that this came at a significant computational cost without any significant improvement in performance. Conversely, using fewer indices decreased computational cost, but the resulting classification was not as accurate. Therefore, sixteen selected indices were found to be the optimal number for achieving the desired level of accuracy while also maintaining computational efficiency.

Table 2.

List of radiometric indices extracted from each sub-basin.

2.2.3. Model Setup and Classification Process

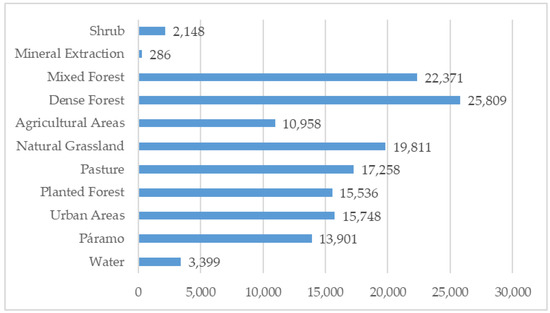

In order to perform a multiclass classification task under complex conditions such as those studied in this research, classical ML algorithms are commonly used due to their low computational cost compared, for example, to DL algorithms [3,4,19,20,23]. However, in these types of scenarios, it has been demonstrated that classical algorithms such as RF or SVM do not work properly for achieving an accurate LULC classification [10]. This is because in LULC classifications with many classes and large spatial extents, large amounts of data are necessary, and classical algorithms are insufficient to handle such large volumes of data. An option to overcome this issue is to work with DL models such as CNNs. According to the literature [4,20,21], CNNs have the ability to work with large volumes of data and can adapt quite well to these conditions, thus achieving fairly precise LULC mapping products, even when working with multiclass classifications and large spatial extents. However, according to the state-of-the-art research [7,22,23], increasingly complex CNN architectures are being developed to achieve these types of results. This leads to the need for more computational resources to train such models, resulting in an increase in the economic cost of acquiring the necessary hardware to manage these models, as very complex architectures cannot be trained on any computer system, requiring state-of-the-art GPUs to handle these complex architectures [7,54]. Therefore, in this research, we propose a robust yet simple architecture that solves these issues. The proposed model is based on the LeNet architecture [55], which is a very simple CNN with very few convolutional layers, causing the computational cost associated with training this CNN to be very low. Through several experimental tests, a balance was reached between the complexity of the architecture and the robustness of the model, achieving results that even outperformed larger and more-complex architectures in similar tasks [3,7,22,23,54]. Before training the model, the dataset must be prepared. For this purpose, point samples are selected by photointerpretation of the twenty-seven-band feature set composed of the 10 pre-processed bands of the original S2 image, the sixteen radiometric indices calculated as per Table 2, and the DEM. The selected point samples are stored in a plain text file, which is then used, along with the feature set, to automatically clip small 3 × 3 sample images at 10 m spatial resolution for each of the eleven LULC classes of interest in this work. These labeled images are saved in eleven different folders corresponding to the LULC classes and used to train, validate, and test the DL model. The initial training samples present an imbalance, as shown in Figure 3, due to the naturally unequal distribution of coverages in the Andean sub-basins, which is due to the orographic and climatic conditions of the region. Therefore, an artificial balancing of the original samples is necessary to avoid bias in the algorithm’s learning process. Hence, the initial samples were augmented to a count of 26,000 for all eleven LULC categories. The size of the final dataset is determined by several factors, such as the number of bands present in the feature set and the number of classes used for classification. The more bands and classes included, the larger the dataset will be. Therefore, it is crucial to carefully consider these factors when building a dataset, as a larger dataset may result in more accurate classification results but it may also require more computing power and storage space [2]. It is important to note that the size of the dataset is not the only factor that determines its quality. Other factors, such as the quality of the original images and the accuracy of the labeling process, also play a significant role in ensuring that the dataset is effective for DL purposes [4]. Considering the aforementioned factors, the dataset used for this research was built with a total of:

Dataset = 26,000 × 27 × 11 = 7,722,000 images

Figure 3.

Original distribution of the 11 LULC samples selected.

Each of the images that make up the dataset has a size of 3 × 3 pixels. After the dataset is prepared, it is split into two parts for DL purposes: 80% is dedicated to training, while the remaining 20% is used for validation [56,57]. This partitioning is essential in preventing overfitting and assessing the performance of the model accurately.

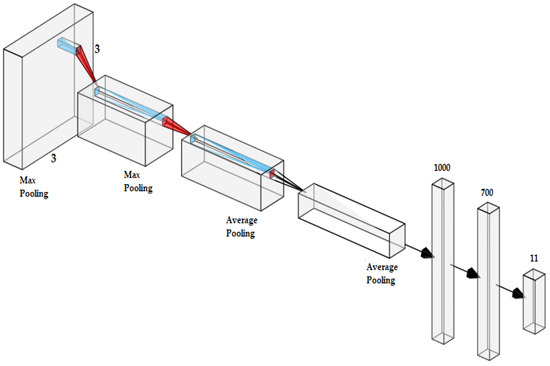

The construction of the proposed CNN is performed using Tensorflow API, Keras. This high-level API allows researchers to quickly prototype and build CNNs with minimal code. It provides a simple and intuitive syntax for defining models, as well as a wide range of built-in layers, loss functions, and optimizers, making it suitable for a variety of tasks, including image classification. In addition to its ease of use, Keras also provides a range of tools for monitoring and visualizing model performance [58]. This includes built-in metrics for evaluating model accuracy and loss, as well as support for callbacks that allow researchers to monitor training progress and adjust model parameters in real-time. The schematic diagram shown in Figure 4, depicts the architecture built for this research. This schematic was created using the free tool described in [59]. The architecture comprises a total of seven layers, which include four convolutional layers and three fully connected layers. The convolutional kernel size was set up to 3 × 3, matching the input file size. This convolutional kernel size is able to capture distinctive features of the eleven LULC classes. Although matching the kernel size to the input file size can potentially lead to convolution issues, we mitigated this by applying padding techniques [60]. The CNN layers are designed to extract and process the features from the input images, allowing for the accurate classification of the data. To optimize the performance of the model, several hyperparameters were carefully selected. The batch size was set to 16, which determines the number of samples processed at each iteration. The number of epochs, which indicates the number of times the model is trained on the entire dataset, was set to 150. Additionally, the learning rate was set to 0.0005, which determines the step size at each iteration while minimizing the loss function. It is important to note that the selection of hyperparameters can significantly impact the performance of the model, and as such, they should be carefully tuned to achieve optimal results. Furthermore, the architecture used in this research can serve as a reference for future research in the field of DL and satellite image classification. Further information regarding the configuration of the model can be found in Table 3. The table presents a comprehensive and detailed overview of all the relevant parameters, settings, and specifications that were utilized in the model’s development. This information is critical for understanding the inner workings of the model and is essential for replicating the results and conducting further analyses.

Figure 4.

Proposed CNN model based on the original LeNet architecture published in [55].

Table 3.

CNN parameters for the feature extraction network used in this study.

3. Results

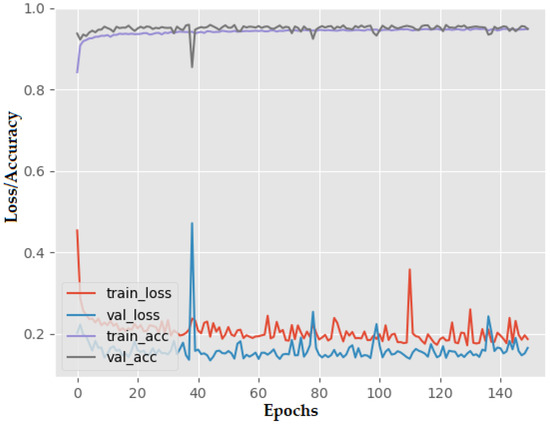

To evaluate the performance of the proposed DL model for the eleven LULC classifications of Andean sub-basins in Colombia, 4 metrics were employed: Overall Accuracy (OA), precision, recall, and kappa coefficient. To assess the performance of the architecture, we trained our model 30 times using a large dataset. The model results obtained an OA of 96.06 in the validation data for all the test, and by the central limit theorem [61] the model training process was validated. In the best case, our model achieved an Overall Accuracy (OA) of 95.49% on the training data and an OA of 96.51% on the validation data, as shown in Figure 5. Notably, our model was particularly successful in accurately classifying the LULC categories for each sub-basin, as can be seen in Table 4 and Table 5, especially for LULC classes with more artificial samples, such as mineral extraction and agricultural areas. The reason for this might be that the training samples gathered for these categories usually exhibit a higher degree of spectral purity when contrasted with other LULC classes such as urban areas or water bodies, which may have narrow characteristics such as roads and rivers that can lead to spectral confusion. Furthermore, it was expected that the model would perform well on LULC categories such as “dense forest” and “mixed forest” because they had the most original samples available. However, it turned out that these two categories had the poorest recall scores, which indicates that the model encountered challenges in distinguishing between the subtle differences in spectral signatures that exist within these classes. The complexity and heterogeneity of forest ecosystems could be a contributing factor as it results in variations in spectral signatures across different forest types and canopy structures. Moreover, shadows and occlusions caused by overlapping canopy layers and understory vegetation can add to the challenge of accurately classifying forested areas. Despite the availability of a relatively large number of original samples, these challenges may have contributed to the lower recall scores observed for the “dense forest” and “mixed forest” LULC classes. Regardless of facing these challenges, the DL model performed remarkably well, as evidenced by a kappa coefficient of 0.962, an overall precision of 96.58% and an overall recall of 96.52% (Table 4 and Table 5). The kappa coefficient value is indicative of a high degree of concordance between the observed and expected results in the classification task [10]. The overall precision value indicates that the model makes few false-positive predictions. This means that the model correctly classifies samples that belong to a specific class, and there are few samples that are classified as belonging to a class, but in fact, they do not. The overall recall value suggests that the model performs well at identifying all positive samples in the dataset, meaning it can identify most of the samples that belong to a specific class.

Figure 5.

Training loss and accuracy on the dataset.

Table 4.

Confusion matrix for the proposed approach applied over the 11 LULC.

Table 5.

Overall Accuracy and kappa coefficient of the proposed approach.

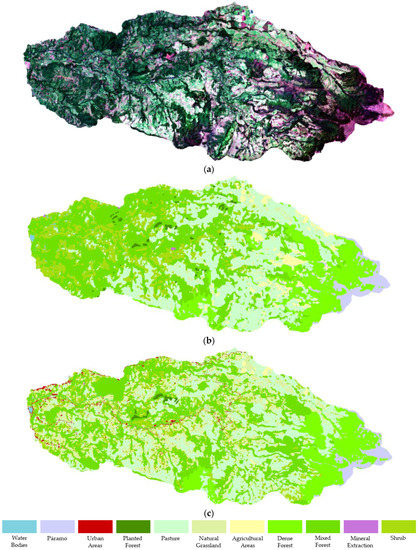

To establish the perfect balance between model complexity and dataset characteristics, such as the number of bands, the number and type of radiometric indices, the total number of images, and their sizes (i.e., 3 × 3, 5 × 5, 7 × 7), various combinations were tested using the Las Piedras sub-basin. This sub-basin is smaller than the other one, making it easier to model. Once it was verified that the results worked with a small dataset from this region, the dataset was expanded using combined data from both sub-basins. The kernel size used to construct the dataset was one of the most influential variables, especially in the qualitative result (or descriptive result) of the LULC classification. It determined whether the images would be small, such as 3 × 3, or large, such as 11 × 11. When using a relatively large kernel size, such as 11 × 11 or larger, the quantitative accuracy was slightly higher than when using a small kernel size. However, the time required to process this information increased significantly, and the qualitative results were not as good, resulting in an inadequate classification, as shown in Figure 6b. In general, the classification failed to reflect the thinner details that can be observed by photointerpretation in the S2 images in that sub-basin (Figure 6a). LULCs such as narrow rivers and narrow roads are not identified by the algorithm under this configuration, as these land covers are underrepresented when a dataset with a large kernel size is constructed. Other classes such as shrubs, pastures and natural grassland are also inaccurately represented by the algorithm, where their spatial arrangement does not correspond to what was mapped by the algorithm under this setup. To improve the classification result, other tests were conducted by increasing the kernel size to form the dataset images. However, there was no improvement, so the kernel size was reduced to 3 × 3, resulting in a significant qualitative improvement, as shown in Figure 6c. Under this configuration, the model performance was outstanding, even mapping small rivers and small roads accurately. This is a significant advancement in this type of work since in other similar LULC classification research, this has not been possible [10,62].

Figure 6.

The Las Piedras sub-basin can be represented in different ways: natural color (a), 11 × 11 size images classification (b), and 3 × 3 size images classification (c).

Once the optimal dataset parameters were established, which in this case were a kernel size of 3 × 3 for the images and 16 radiometric indices to form small twenty-seven-band sample images, the necessary parameters were established to produce a model that was robust enough without having a complex architecture that increased the computational cost associated with training such models. The number of layers in the model was an essential parameter because it determined its ability to learn and generalize patterns in the data. Since a small image size was used, it was not necessary to use many convolutional layers, so four of these layers were sufficient for the network to learn deeply and abstract representations of the dataset. Adding more layers only increased the complexity of the architecture and the computational cost, but not its performance. On the contrary, if fewer than four convolutional layers were used, the model would be too shallow and insufficient to learn from the data. The number of filters per convolutional layer was also a parameter manipulated to obtain an optimal CNN as it determined the number of features the network could detect in the input data. Each filter in the CNN convolutional layer was used to detect certain patterns and features in the data. In this research, having 100 filters in the first layer, 150 in the second, 300 in the third, and 530 in the last layer was essential to achieve a balance between the network representational capacity and generalization ability.

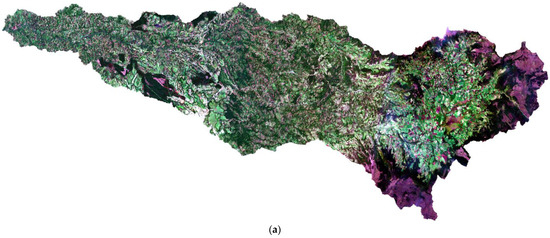

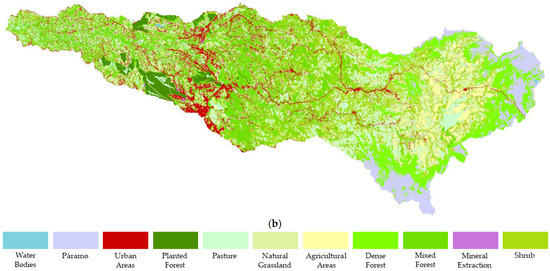

Figure 7 presents the true color image (Figure 7a) and the results (Figure 7b) for the Palacé sub-basin, which were obtained using the best model implemented on a dataset that was constructed from both sub-basins, as well as a dataset of images with 3 × 3 size. This configuration was chosen based on the results of tests that were conducted, which indicated that the best outcomes were achieved with this approach. The results of the qualitative analysis in this sub-basin, which is approximately 10 times larger in spatial extent than the Las Piedras sub-basin, show that, in fact, the previous parameters chosen for the model were the correct ones.

Figure 7.

Two different depictions of the Palacé sub-basin are presented: (a) natural color representation; (b) classification result obtained with a 3 × 3 kernel size.

4. Discussion

4.1. CPU vs. GPU Performance Evaluation

To evaluate the performance of the proposed approach for LULC classification using a simple but robust DL model, the assessment was performed over the whole dataset. DL models are known for their ability to perform complex tasks, such as image classification, however, training these models requires a significant amount of data, and to achieve successful results, it often requires the use of complex CNN architectures, such as those in [3,7,22,23,54]. For example, if a semantic segmentation approach is used, a popular option is the U-Net architecture [63], which is a widely used approach that has an encoder–decoder structure with skip connections. These skip connections facilitate the localization of features, thereby improving the accuracy of the model. Another option could be the DeepLab architecture [64], which utilizes atrous convolution to capture multiscale contextual information and dilate the receptive field of the network. Additionally, the recent success of transformer-based models in natural language processing and computer vision has led to the development of several transformer-based architectures for semantic segmentation, such as the Hybrid Transformer and the CNN for Pixel-Level Multispectral Image Land Cover Classification (HyFormer) [54]. Any of these models show promising results and are expected to play a significant role in the near future of the RS semantic segmentation field. However, these options may imply that powerful GPUs are needed to make the training time feasible. In this research project, we conducted a comparison between training a constructed model using a cutting-edge GPU (NVIDIA RTX 3090) and a standard CPU (Intel(R) Core(TM) i9-9900K CPU @ 3.60 GHz). The results, displayed in Table 6, show that for the dataset built for this research, and with the simple CNN LeNet-based architecture implemented, it is not necessary to have the latest computational resources to train the architecture. While using a powerful GPU can significantly reduce the training time, the difference in results was not significant enough to justify the cost of a top-of-the-line GPU for this project.

Table 6.

GPU vs. CPU training processing time required for the proposed approach.

4.2. Comparison with Other Machine Learning Methods

Table 7 is presented in order to make a comparison between our proposed approach and some traditional ML models for the LULC classification over Andean regions [10]. As demonstrated by the results in Table 7, our model outperforms traditional ML algorithms, since this type of model usually works very well when the study area is small and the classes to be mapped are relatively few (less than ten), but not in other situations. The results indicate that traditional ML techniques may not be sufficiently robust for generating an accurate LULC map in Andean regions. Therefore, in such scenarios, employing DL techniques is preferred.

Table 7.

Comparison results between the approach and traditional ML models in the same study area (in bold are the best results).

4.3. Comparison with other Deep Learning Methods

The quantitative experimental results obtained with our proposed approach have also been compared with those obtained from other state-of-the-art complex DL architectures [65,66,67,68,69,70,71], and the comparisons are presented in Table 8. These experiments have been conducted using the same dataset and computational resources to ensure a fair comparison. Our proposed approach is evaluated against a range of well-established DL architectures, such as: AlexNet [65], ZFNet [66], InceptionV1 [67], VGG16 [68], VGG19 [68], ResNet18 [69], ResNet50 [69], DenseNet121 [70] and EfficientNetB7 [71]. AlexNet, for instance, is a widely popular DL network that employs an eight-layer CNN to analyze visual imagery. ZFNet, or Zeiler and Fergus Net, is another CNN that has a similar architecture to AlexNet but with some modifications made by modifying hyperparameters and filter sizes to enhance its performance. InceptionV1, also known as GoogleNet, was developed by Google researchers introducing the concept of “inception modules”, which enables the network to simultaneously compute multiple filter sizes and pooling operations at different layers. VGG16 and VGG19, developed by the Visual Geometry Group at the University of Oxford, are deep convolutional neural networks with small filter sizes that consist of 16 and 19 layers, respectively. ResNet18 and ResNet50 are part of the ResNet family of convolutional neural networks; they introduced the concept of “residual learning”, which helps the network to tackle the vanishing gradient problem, which is a common issue in deep neural networks. DenseNet121 introduced the concept of “dense connectivity”, which enables each layer to receive feature maps from all previous layers in a feed-forward fashion. Finally, EfficienNetB7 is part of the EfficientNet family of neural networks. It uses a compound scaling method to optimize both accuracy and efficiency by balancing network depth, width, and resolution. As shown in Table 8, the results obtained from our proposed approach demonstrate superior performance in terms of both validation metrics and computational time, compared to the other architectures mentioned above, achieving high accuracy with the lowest computational resources. This demonstrates the efficiency and effectiveness of the proposed method for LULC classification over Andean regions.

Table 8.

Comparison results between the proposed approach and other DL approaches using the same test dataset (in bold are the best results.

To enhance the comprehensiveness of this research, we have extended the comparison to other studies that implemented more-complex architectures, but on different study areas, datasets, and experimental conditions. In Table 9, a comparison is presented between the results obtained from our research and those obtained from other DL approaches that perform similar tasks but rely on complex architectures. Despite the use of a simpler architecture, the proposed approach still has better results than other approaches under varying experimental conditions. The training time and other evaluation metrics are not included in this report (Table 9), since not all of the reviewed articles provide a comprehensive analysis of these parameters.

Table 9.

Comparison results between the proposed approach and others DL approaches with different datasets, computational resources and experimental conditions (in bold is the best result).

Despite the use of a simpler architecture, all the experiments and results presented in this study demonstrated that a simple CNN can achieve high accuracy in LULC classification maps when a dataset with the necessary characteristics is built. This is an important finding, as it suggests that it is not always necessary to build complex CNN architectures to achieve good results in LULC classification. One of the key advantages of using a simple CNN architecture is that it does not require expensive computational resources to train. This makes it an accessible and efficient solution for researchers and practitioners who do not have access to cutting-edge hardware. It is also worth noting the large number of classes (eleven) that were mapped in this study, as it represents a significant improvement over other research that typically maps fewer classes. This demonstrates the potential of DL techniques to accurately classify a wide range of LULC categories. Furthermore, the study demonstrated that the algorithm works quite well in large spatial extents, which is not usually the case for other works in the literature. Typically, other studies only analyze small spatial extents, and when they study large areas, their models do not usually adapt well to the high intraclass and low interclass variance [4,8,10,16,20,23]. However, in this study, the approach taken proved to be highly adaptable to all the study areas. The success of this approach can be said to depend largely on the pre-processing performed, which was conducted to eliminate, e.g., clouds, and shadows. These two classes can be particularly problematic in LULC classification, as they can be misclassified with other classes such as water bodies and urban areas due to their similarity in their spectral response, leading to a lower overall performance of the model.

5. Conclusions

A simple yet robust DL-based LULC classification method that works for Sentinel-2 data over Andean sub-basins has been presented. The results highlight that while DL models do require large amounts of data to be trained in an appropriate way, there is no need for too deep or complex CNN architectures. This implies that it is possible to achieve successful results without the need for the latest and most expensive computational resources. This finding has implications for both researchers and practitioners who may be limited by budget constraints, as it suggests that successful DL projects are achievable with moderate computational resources. As a future research direction, it is crucial to consider the limited availability of cloud-free images over Andean regions, which emphasizes the need to identify alternative data sources or analysis methods to supplement the existing imagery. This may involve leveraging historical data or incorporating other RS techniques, such synthetic aperture radar, to enhance the accuracy and comprehensiveness of the study. Overall, the use of simple CNN architectures for LULC classification represents an accessible and efficient solution for researchers and practitioners. The success of this approach depends largely on the quality of the data used, the pre-processing performed, and the careful selection and tuning of the CNN hyperparameters. As a future research direction, we would like to explore alternative methods and algorithms to improve the accuracy of cloud- and cloud-shadow detection due to the fact that the SCL map used in our study is not perfect and may miss some clouds and their shadows.

Author Contributions

Conceptualization, D.A.A.-C. and Y.T.S.-C.; methodology, D.A.A.-C., Y.T.S.-C., J.F.M.-O. and E.L.P.-F.; software, D.A.A.-C. and J.F.M.-O.; validation, D.A.A.-C., J.F.M.-O. and A.F.-C.; formal analysis, D.A.A.-C., and Y.T.S.-C.; investigation, D.A.A.-C., Y.T.S.-C. and J.F.M.-O.; data curation, D.A.A.-C., Y.T.S.-C. and J.F.M.-O.; writing—original draft preparation, D.A.A.-C. and Y.T.S.-C.; writing—review and editing, D.A.A.-C., Y.T.S.-C., J.F.M.-O., E.L.P.-F. and A.F.-C.; supervision, Y.T.S.-C. and A.F.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Universidad del Cauca (501100005682) under grant numbers ID 5645, ID 5650, ID 5797, and ID 5142 supported by the Water Security and Sustainable Development Hub funded by the UK Research and Innovation’s Global Challenges Research Fund (GCRF) [grant number: ES/S008179/1].

Data Availability Statement

Data available on request to the corresponding author.

Acknowledgments

This research received support from various projects including the “Young Researchers and Innovators in the Cauca Department”, “Development of Food and Water Security Strategies for the Economic Reactivation of Rural Communities”, “Bioeconomic Strengthening for Social and Productive Reactivation” and the “Water Security and Sustainable Development Hub.” These projects were funded by the science ministry in Colombia and the UK Research and Innovation’s Global Challenges Research Fund (GCRF). The authors also express their gratitude to Viviana Camacho de Angulo for her valuable advice and contributions to the research and to the University of Cauca for the support during the development of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Oswald, S.M.; Hollosi, B.; Žuvela-Aloise, M.; See, L.; Guggenberger, S.; Hafner, W.; Prokop, G.; Storch, A.; Schieder, W. Using urban climate modelling and improved land use classifications to support climate change adaptation in urban environments: A case study for the city of Klagenfurt, Austria. Urban Clim. 2020, 31, 100582. [Google Scholar] [CrossRef]

- Benhammou, Y.; Alcaraz-Segura, D.; Guirado, E.; Khaldi, R.; Achchab, B.; Herrera, F.; Tabik, S. Sentinel2GlobalLULC: A Sentinel-2 RGB image tile dataset for global land use/cover mapping with deep learning. Sci. Data 2022, 9, 20. [Google Scholar] [CrossRef] [PubMed]

- Yang, C.; Rottensteiner, F.; Heipke, C. Classification of land cover and land use based on convolutional neural networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 251–258. [Google Scholar] [CrossRef]

- Carranza-García, M.; García-Gutiérrez, J.; Riquelme, J.C. A Framework for Evaluating Land Use and Land Cover Classification Using Convolutional Neural Networks. Remote Sens. 2019, 11, 274. [Google Scholar] [CrossRef]

- Chuvieco, E.; Li, J.; Yang, X. Advances in Earth Observation of Global Change, 1st ed.; Springer: Dordrecht, The Netherlands, 2010; ISBN 978-90-481-9084-3. [Google Scholar]

- Smyth, T.A.; Wilson, R.; Rooney, P.; Yates, K.L. Extent, accuracy and repeatability of bare sand and vegetation cover in dunes mapped from aerial imagery is highly variable. Aeolian Res. 2022, 56, 100799. [Google Scholar] [CrossRef]

- Lilay, M.Y.; Taye, G.D. Semantic segmentation model for land cover classification from satellite images in Gambella National Park, Ethiopia. SN Appl. Sci. 2023, 5, 15. [Google Scholar] [CrossRef]

- Yuh, Y.G.; Tracz, W.; Matthews, H.D.; Turner, S.E. Application of machine learning approaches for land cover monitoring in northern Cameroon. Ecol. Inform. 2023, 74, 101955. [Google Scholar] [CrossRef]

- Keshtkar, H.; Voigt, W.; Alizadeh, E. Land-cover classification and analysis of change using machine-learning classifiers and multi-temporal remote sensing imagery. Arab. J. Geosci. 2017, 10, 154. [Google Scholar] [CrossRef]

- Pencue-Fierro, E.L.; Solano-Correa, Y.T.; Corrales-Munoz, J.C.; Figueroa-Casas, A. A Semi-Supervised Hybrid Approach for Multitemporal Multi-Region Multisensor Landsat Data Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5424–5435. [Google Scholar] [CrossRef]

- Swetanisha, S.; Panda, A.R.; Behera, D.K. Land use/land cover classification using machine learning models. Int. J. Electr. Comput. Eng. (IJECE) 2022, 12, 2040–2046. [Google Scholar] [CrossRef]

- Alshari, E.A.; Abdulkareem, M.B.; Gawali, B.W. Classification of land use/land cover using artificial intelligence (ANN-RF). Front. Artif. Intell. 2023, 5, 964279. [Google Scholar] [CrossRef] [PubMed]

- Park, J.; Lee, Y.; Lee, J. Assessment of Machine Learning Algorithms for Land Cover Classification Using Remotely Sensed Data. Sens. Mater. 2021, 33, 3885. [Google Scholar] [CrossRef]

- Saralioglu, E.; Vatandaslar, C. Land use/land cover classification with Landsat-8 and Landsat-9 satellite images: A comparative analysis between forest- and agriculture-dominated landscapes using different machine learning methods. Acta Geod. Geophys. 2022, 57, 695–716. [Google Scholar] [CrossRef]

- Razafinimaro, A.; Hajalalaina, A.R.; Rakotonirainy, H.; Zafimarina, R. Land cover classification based optical satellite images using machine learning algorithms. Int. J. Adv. Intell. Inform. 2022, 8, 362–380. [Google Scholar] [CrossRef]

- Putri, K.A. Analysis of Land Cover Classification Results Using ANN, SVM, and RF Methods with R Programming Language (Case Research: Surabaya, Indonesia). IOP Conf. Ser. Earth Environ. Sci. 2023, 1127, 14. [Google Scholar] [CrossRef]

- Khosravi, I.; Jouybari-Moghaddam, Y. Hyperspectral Imbalanced Datasets Classification Using Filter-Based Forest Methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4766–4772. [Google Scholar] [CrossRef]

- Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112. [Google Scholar] [CrossRef]

- Sánchez, A.-M.S.; González-Piqueras, J.; de la Ossa, L.; Calera, A. Convolutional Neural Networks for Agricultural Land Use Classification from Sentinel-2 Image Time Series. Remote Sens. 2022, 14, 5373. [Google Scholar] [CrossRef]

- Kroupi, E.; Kesa, M.; Navarro-Sánchez, V.D.; Saeed, S.; Pelloquin, C.; Alhaddad, B.; Moreno, L.; Soria-Frisch, A.; Ruffini, G. Deep convolutional neural networks for land-cover classification with Sentinel-2 images. JARS 2019, 13, 024525. [Google Scholar] [CrossRef]

- Campos-Taberner, M.; García-Haro, F.; Martínez, B.; Gilabert, M. Deep learning para la clasificación de usos de suelo agrícola con Sentinel-2. Rev. Teledetec. 2020, 56, 35–48. [Google Scholar] [CrossRef]

- Zhang, W.; Tang, P.; Corpetti, T.; Zhao, L. WTS: A Weakly towards Strongly Supervised Learning Framework for Remote Sensing Land Cover Classification Using Segmentation Models. Remote Sens. 2021, 13, 394. [Google Scholar] [CrossRef]

- Pedrayes, O.D.; Lema, D.G.; García, D.F.; Usamentiaga, R.; Alonso, Á. Evaluation of Semantic Segmentation Methods for Land Use with Spectral Imaging Using Sentinel-2 and PNOA Imagery. Remote Sens. 2021, 13, 2292. [Google Scholar] [CrossRef]

- Ruiz, O.D.M.; Idrobo, M.J.P.; Otero, S.J.D.; Figueroa, C.A. Effects of productive activities on the water quality for human consumption in an andean basin, a case study. Rev. Int. Contam. Ambient. 2017, 33, 361–375. [Google Scholar] [CrossRef]

- Lopez, I.D.; Figueroa, A.; Corrales, J.C. Multi-Dimensional Data Preparation: A Process to Support Vulnerability Analysis and Climate Change Adaptation. IEEE Access 2020, 8, 87228–87242. [Google Scholar] [CrossRef]

- Perdomo Chavarro, D. Determinación de la Variación de Microcontaminates (Agroquímicos) en la Subcuenca del Río Palacé Mediante un Modelo Matemático; Trabajo de grado-pregrado, Uniautónoma del Cauca, Facultad de Ciencias Ambientales y Desarrollo Sostenible, Programa de Ingeniería Ambiental y Sanitaria: Popayán, Colombia, 2021. [Google Scholar]

- Chantre Velasco, M. Análisis Comparativo de Cambios de Área en Coberturas en la Parte alta de la Subcuenca río Palacé, a Través de Imágenes Landsat Entre 1989 y 2016; Universidad de Manizales: Manizales, Colombia, 2017. [Google Scholar]

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 Data for Land Cover/Use Mapping: A Review. Remote Sens. 2020, 12, 2291. [Google Scholar] [CrossRef]

- Scepanovic, S.; Antropov, O.; Laurila, P.; Rauste, Y.; Ignatenko, V.; Praks, J. Wide-Area Land Cover Mapping with Sentinel-1 Imagery Using Deep Learning Semantic Segmentation Models. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 10357–10374. [Google Scholar] [CrossRef]

- Essien, P.; Figueiredo, C.A.O.B.; Takahashi, H.; Klutse, N.A.B.; Wrasse, C.M.; Afonso, J.M.D.S.; Quispe, D.P.; Lomotey, S.O.; Ayorinde, T.T.; Sobral, J.H.A.; et al. Intertropical Convergence Zone as the Possible Source Mechanism for Southward Propagating Medium-Scale Traveling Ionospheric Disturbances over South American Low-Latitude and Equatorial Region. Atmosphere 2022, 13, 15. [Google Scholar] [CrossRef]

- European Space Agency (ESA) Copernicus Open Access Hub. Available online: https://scihub.copernicus.eu/dhus/#/home (accessed on 29 March 2023).

- Louis, J.; Pflug, B.; Main-Knorn, M.; Debaecker, V.; Mueller-Wilm, U.; Iannone, R.Q.; Giuseppe Cadau, E.; Boccia, V.; Gascon, F. Sentinel-2 Global Surface Reflectance Level-2a Product Generated with Sen2Cor. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 8522–8525. [Google Scholar]

- Louis, J.; Devignot, O.; Pessiot, L. Level-2A Algorithm Theoretical Basis Document; Remote Sensing Systems: Santa Rosa, CA, USA, 2021; p. 78. [Google Scholar]

- Alaska Satellite Facility ASF Data Search. Available online: https://search.asf.alaska.edu/#/.html (accessed on 29 March 2023).

- Yang, H.; Li, X.; Zhao, L.; Chen, S. An accurate and robust registration framework based on outlier removal and feature point adjustment for remote sensing images. Int. J. Remote Sens. 2021, 42, 8979–9002. [Google Scholar] [CrossRef]

- Wang, Q.; Shi, W.; Li, Z.; Atkinson, P.M. Fusion of Sentinel-2 images. Remote Sens. Environ. 2016, 187, 241–252. [Google Scholar] [CrossRef]

- Ren, H.; Feng, G. Are soil-adjusted vegetation indices better than soil-unadjusted vegetation indices for above-ground green biomass estimation in arid and semi-arid grasslands? Grass Forage Sci. 2014, 70, 611–619. [Google Scholar] [CrossRef]

- Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173. [Google Scholar] [CrossRef]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Hunt, E.R., Jr.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote Sensing Leaf Chlorophyll Content Using a Visible Band Index. Agron. J. 2011, 103, 1090–1099. [Google Scholar] [CrossRef]

- Nouaim, W.; Chakiri, S.; Rambourg, D.; Karaoui, I.; Ettaqy, A.; Chao, J.; Allouza, M.; Razoki, B.; Yazidi, M.; El Hmidi, F. Mapping the water erosion risk in the Lakhdar river basin (central High Atlas, Morocco). Geol. Ecol. Landscapes 2018, 3, 22–28. [Google Scholar] [CrossRef]

- Gao, B.-C. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266. [Google Scholar] [CrossRef]

- Dogan, H.M. Applications of remote sensing and Geographic Information Systems to assess ferrous minerals and iron oxide of Tokat province in Turkey. Int. J. Remote Sens. 2008, 29, 221–233. [Google Scholar] [CrossRef]

- Liu, X.; Peng, Y.; Lu, Z.; Li, W.; Yu, J.; Ge, D.; Xiang, W. Feature-Fusion Segmentation Network for Landslide Detection Using High-Resolution Remote Sensing Images and Digital Elevation Model Data. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4500314. [Google Scholar] [CrossRef]

- Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270. [Google Scholar] [CrossRef]

- Hancock, D.W.; Dougherty, C.T. Relationships between Blue- and Red-based Vegetation Indices and Leaf Area and Yield of Alfalfa. Crops Sci. 2007, 47, 2547–2556. [Google Scholar] [CrossRef]

- Gitelson, A.; Merzlyak, M.; Zur, Y.; Stark, R.; Gritz, U. Non-Destructive and Remote Sensing Techniques for Estimation of Vegetation Status; Papers in Natural Resources. In Proceedings of the Third European Conference on Precision Agriculture, Montpellier, France, 18–20 June 2001. [Google Scholar]

- Tucker, C.; Elgin, J.; McMurtrey, J.; Fan, C. Monitoring corn and soybean crop development with hand-held radiometer spectral data. Remote Sens. Environ. 1979, 8, 237–248. [Google Scholar] [CrossRef]

- Gobron, N.; Pinty, B.; Verstraete, M.M.; Widlowski, J.-L. Advanced vegetation indices optimized for up-coming sensors: Design, performance, and applications. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2489–2505. [Google Scholar] [CrossRef]

- Crippen, R. Calculating the vegetation index faster. Remote Sens. Environ. 1990, 34, 71–73. [Google Scholar] [CrossRef]

- Carlson, T.N.; Ripley, D.A. On the relation between NDVI, fractional vegetation cover, and leaf area index. Remote Sens. Environ. 1997, 62, 241–252. [Google Scholar] [CrossRef]

- Carter, G.A. Ratios of leaf reflectances in narrow wavebands as indicators of plant stress. Int. J. Remote Sens. 1994, 15, 697–703. [Google Scholar] [CrossRef]

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Yan, C.; Fan, X.; Fan, J.; Yu, L.; Wang, N.; Chen, L.; Li, X. HyFormer: Hybrid Transformer and CNN for Pixel-Level Multispectral Image Land Cover Classification. Int. J. Environ. Res. Public Health 2023, 20, 3059. [Google Scholar] [CrossRef] [PubMed]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Muckley, L.; Garforth, J. Multi-Input ConvLSTM for Flood Extent Prediction. In Proceedings of the Pattern Recognition. ICPR International Workshops and Challenges; Del Bimbo, A., Cucchiara, R., Sclaroff, S., Farinella, G.M., Mei, T., Bertini, M., Escalante, H.J., Vezzani, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 75–85. [Google Scholar]

- Thangadeepiga, E.; Alagu Raja, R.A. Remote Sensing-Based Crop Identification Using Deep Learning. In Intelligent Data Engineering and Analytics; Satapathy, S.C., Zhang, Y.-D., Bhateja, V., Majhi, R., Eds.; Springer: Singapore, 2021; pp. 109–122. [Google Scholar]

- The Sequential Model|TensorFlow Core. Available online: https://www.tensorflow.org/guide/keras/sequential_model (accessed on 15 March 2023).

- Lenail, A. NN-SVG: Publication-Ready Neural Network Architecture Schematics. J. Open Source Softw. 2019, 4, 747. [Google Scholar] [CrossRef]

- Alrasheedi, F.; Zhong, X.; Huang, P.-C. Padding Module: Learning the Padding in Deep Neural Networks. IEEE Access 2023, 11, 7348–7357. [Google Scholar] [CrossRef]

- Muñoz-Ordóñez, J.; Cobos, C.; Mendoza, M.; Herrera-Viedma, E.; Herrera, F.; Tabik, S. Framework for the Training of Deep Neural Networks in TensorFlow Using Metaheuristics. In Intelligent Data Engineering and Automated Learning—IDEAL 2018; Yin, H., Camacho, D., Novais, P., Tallón-Ballesteros, A.J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 801–811. [Google Scholar]

- Zanaga, D.; Van De Kerchove, R.; Daems, D.; De Keersmaecker, W.; Brockmann, C.; Kirches, G.; Wevers, J.; Cartus, O.; Santoro, M.; Fritz, S.; et al. ESA WorldCover 10 m 2021 V200; European Space Agency: Paris, France, 2022. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241. [Google Scholar]

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 818–833. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar]

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 10691–10700. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).