1. Introduction

For any autonomous robot system, such as unmanned aerial vehicles and autonomous vehicles, the accurate and robust localization of a mobile carrier is one of the fundamental technologies [

1]. Traditionally, the integrated navigation and positioning technology based on the global navigation satellite system (GNSS) and inertial navigation system (INS) is usually regarded as a reliable method to achieve high-accuracy positioning [

2]. However, in complex urban environments, there are a large number of GNSS multipath or rejection areas due to the blockage of GNSS signals by urban objects such as tall buildings, tunnels and street trees. As a result, the integrated positioning method based on GNSS/INS is not effective in achieving a continuous and robust positioning of targets in large urban environments. In summary, there is an urgent need to upgrade and expand the traditional positioning techniques by introducing heterogeneous and complementary measurement information from other sensors.

In recent years, the multi-sensor fusion positioning technology based on simultaneous localization and mapping (SLAM) has received extensive attention from related enterprises and researchers [

3]. It can not only make use of the excellent characteristics of cameras, LiDAR and other sensors, including the independence from environmental occlusion and signal refraction in complex areas, but can also effectively make up for the signal lock-out defect of GNSS signals in the parking lot or tunnel area. Moreover, incremental map reconstruction can be achieved by sensing the external environment. Depending on the primary sensor, SLAM-based multi-sensor fusion positioning solutions can be divided into vision-based SLAM and LiDAR-based SLAM [

4]. Due to the superiority of the sensors, the solution of LiDAR-based SLAM allows for a higher frequency and more accurate acquisition of spatial fingerprint information, thus achieving a more accurate positioning than vision-based SLAM [

5,

6,

7]. Secondly, analyzed at the algorithm level, LiDAR odometry is more lightweight in processing environmental features than visual odometry and more suitable for vehicle-mounted platforms with limited computational resources [

8,

9]. Therefore, the LiDAR-inertial odometry (LIO)-based SLAM scheme is widely used to obtain 3D geographic information of a complex environment, as well as carrier positioning and map reconstruction.

Throughout the development of the LiDAR-based SLAM, it can be seen that the registration of the point cloud of LiDAR is a key step in the pose estimation of a mobile carrier. It strictly affects the pose estimation and the map reconstruction results. The commonly used point cloud registration methods include normal distribution transform (NDT) [

10], iterative closest point (ICP) [

11], generalized iterative closest point (GICP) [

12] and other improved algorithms [

13,

14,

15,

16]. The core of NDT algorithm is used to take the probability density function of the source point cloud and the target point cloud as the objective function; then, it uses a nonlinear optimization method to minimize the probability density between them to obtain the optimal solution. Andreasson et al. [

17] avoids an explicit nearest neighbor search by establishing segmented continuous and differentiable probability distributions, and the registration speed is effectively improved. Although the real-time performance is better, the covariance matrix needs to be constructed at multiple points, which has a low robustness in the sparse area of the point cloud. Caballero et al. [

18] proposed an improved NDT algorithm that was used to model the alignment problem as a distance field. The optimization equation is constructed by using the distance between the feature points of the current frame and the prior map, which improves the speed by an order of magnitude. However, the robustness of the localization algorithm is not guaranteed for unknown sections where the priori map is missing or unreliable [

19].

As another method of point cloud registration, the ICP algorithm has a higher positioning accuracy than NDT, but it needs to search for the nearest neighbor again and obtain the transformation matrix in each iteration process, so the calculation efficiency needs to be improved. Koide et al. [

20] proposed a generalized iterative nearest point algorithm that used a Gaussian probability model to fit the distribution of the point cloud to reduce the computational complexity. However, its accuracy is still limited by the maximum number of iterations. In addition, the algorithm is heavily influenced by the observation noise and the accuracy of the initial positional transformation matrix, and there is a risk of the algorithm falling into local minima. In order to break out of the logical limitation of being limited to local optimal solutions, Yang et al. [

21] proposed Go-ICP, a branch-and-bound scheme to impose domain restrictions on the objective function of rigid alignment. This processing reduced the abnormal influence of the local minimum, and made the registration result of the point cloud approach to the global optimal solution. In 2021, Pan et al. [

22] proposed MULLS-ICP, which uses an improved ICP algorithm based on double-threshold filtering and multi-scale linear least squares to realize the registration between the current frame and local sub-map, but the high computational cost of multiple filtering is difficult to adapt to the vehicle platform with limited computational resources. To sum up, on the basis of reducing the calculation cost, a high-precision real-time point cloud registration algorithm suitable for a vehicle platform still needs to be investigated.

In addition, as a local sensor integrator, the LIO has a cumulative offset between its local map and the global map when it performs a positional estimation of the current frame, which largely limits the positioning accuracy of the LIO position building scheme in large outdoor environments. Fortunately, the global observation information from GNSS can provide a credible global constraint correction for LIO [

23]. Conversely, LIO systems can also compensate for the limitations of GNSS in terms of continuous precise positioning due to multipath effects and non-line-of-sight (NLOS) problems. Therefore, LIO-GNSS fusion positioning technology provides a feasible technical scheme for realizing globally weak drift and locally accurate positioning and mapping targets.

The mainstream LIO-GNSS fusion algorithms can be divided into two categories, filter-based methods and optimization-based methods, based on the method of sensor measurement data fusion. Li et al. [

24] used the filter-based method as the integration strategy. They use the extended Kalman filter to realize LIO-GNSS tight coupling, but did not set up an anomaly detection mechanism, so it was prone to the dispersion of the positional estimates in GNSS multipath regions or point cloud degradation regions. To resolve this issue, Li et al. [

25] uses an edge fault-tolerant mechanism to improve the robustness of the algorithm in case of single-sensor failure. However, it weakens the linearization error at the cost of increasing the amount of computation, which is contrary to the lightweight principle of large outdoor scenes. As another fusion method, the optimization-based method uses multiple iterations to approach the optimal solution, which can effectively handle such non-linear heterogeneous data fusion problems. Soloviev et al. [

26] proposed an optimization-based LIO-GNSS scheme, but only the horizontal components of GNSS measurements were used to optimize the LIO pose estimation results, with low utilization of the measurement information. Shan et al. [

27] puts forward an optimization framework that introduces 3D GNSS measurement factors to assist LIO, but the measurement information of a single key frame is redundant, and the reliability of GNSS factors added when driving to the GNSS multipath area is poor. Sun et al. [

28] proposed a GNSS corner factor to constrain the local pose, but it does not consider the shortage of corners on straight road sections, so its application in a large-scale complex outdoor environment is limited.

From the above analysis, it can be seen that the research points of the LIO-GNSS fusion scheme are as follows:

Realizing real-time and high-precision point cloud alignment based on compressed computational costs.

On the basis of making full use of GNSS measurement information, global cumulative error correction of LIO is carried out by GNSS.

To address the above issues, in this contribution, we propose a LiDAR-inertial-GNSS fusion positioning system based on voxelized accurate registration. Firstly, a voxelized point cloud downsampling method based on curvature segmentation is proposed. Rough classification is carried out by a curvature threshold, and the voxelized point cloud downsampling is performed using HashMap instead of the random sample consensus algorithm. Therefore, the spatial distribution attributes of the source point cloud are retained to a greater extent. Secondly, a point cloud registration model based on the nearest neighbors of the point and neighborhood point sets is constructed. Thirdly, an optimization-based method is used to build a higher-order Markov model based on sliding windows, and a GNSS factor and loop factor are introduced into the factor graph to constrain LIO globally. Finally, on this basis, a GNSS residual construction method based on the GNSS reliability weight is proposed to make full use of GNSS measurement information. Therefore, the goal of positioning and mapping with a light weight, high precision and high applicability in a complex urban environment can be achieved.

2. System Overview

The proposed algorithm framework is shown in

Figure 1. The main functions of each module are as follows.

The front-end of the system is mainly used to preprocess IMU observations and LiDAR original point cloud sequences, and to optimize the generation of local maps by inter-frame matching. The LiDAR raw point cloud sequence is clustered and segmented by a breadth-first-search combined with the Euclidean angle threshold, and then edge and plane feature point clouds are extracted. These two types of feature clouds are downsampled for point cloud alignment, and the local inter-frame matching is optimized using the IMU pre-integration as the initial pose estimate. Finally, the LIO local pose estimates are used to pre-process the GNSS global observations, including the temporal interpolation alignment of GNSS and LIO local observations and coordinate system alignment, so as to achieve the space–time synchronization among sensors.

The back-end mainly uses the residuals of pose estimates of each sensor to optimize the map. The residual factors from local sensors include the IMU pre-integration and LiDAR observation residual, whereas the global residual factors include the GNSS observation residual and loop residual. It should be noted that the global residual factors are added only when their existence is detected, and, when there is no global residual factor, the system only performs local position, such as when the carrier is travelling in a flat and straight tunnel environment. When global corrections are available, the obtained global positioning results are used to update the local pose estimates in the sliding window to obtain the best pose estimates with local accurate registration and global drift-free.

3. Point Cloud Voxelization Downsampling and Alignment

The accuracy of the registration of the environmental point cloud extracted by LiDAR strictly affects the result of the subsequent local pose estimation. Therefore, the processing steps of the front-end point cloud of the system need to be described in detail. This paper mainly involves the improved point cloud downsampling method and registration method.

3.1. Voxelized Downsampling Based on Curvature Segmentation

This paper presents a voxelized downsampling method based on curvature segmentation. Given a set of raw point cloud sequences collected by LiDAR, all points in the raw point cloud sequences are traversed and coarse clustering is performed using a breadth-first algorithm. Furthermore, the geometric angle threshold based on Euclidean distance is used to finely segment the point cloud clusters with similar depth. Let the scanning center of LiDAR be

and the two adjacent edge points

and

in the point cloud cluster with depths

and

, respectively (

). Let the number of point clouds in the point cloud cluster where point

is located be

. Then, the roughness of point cloud

is:

Set the roughness threshold as , then traverse . We classify the points of as the set of edge feature points, classify the points of as the set of plane feature points and perform downsampling operations on them, respectively.

This method is mainly used in the feature extraction step of LiDAR odometry [

4]; we extend it to the downsampling step. This means that, for any application where downsampling of point clouds is required, such as artefact inspection, the method can better restore the spatial distribution properties of point clouds by downsampling in clusters.

Next, this paper proposes a point cloud downsampling strategy based on HashMap, instead of the random sample consensus (RANSAC), so that the downsampling result of the point cloud is closer to the approximate center of gravity of voxels. Let the coordinate of a feature point in a set of point cloud sequences in the voxel space be . If the voxel grid size is , the dimension of the voxel grid in the direction is , and the index of in the direction within the voxel grid is . The same applies to the and directions.

After obtaining the 3D index of feature points in the voxel space, if the random sorting strategy of [

11] is adopted, the sorting complexity will be

, which has a negative impact on the down-sampling time. Therefore, this paper uses the hash function to sort the index of feature points quickly and map them to

containers

. The hash function is:

To avoid hash conflicts, set the conflict detection conditions as follows:

Once the hash conflict is detected, the index value in the current container is output and the container is emptied, and the new index value is put into the container.

To sum up, the main improvement of this section lies in extending the curvature segmentation step originally used for feature extraction to the downsampling step, and using hash mapping instead of the random sampling method for point cloud sampling. For the LiDAR odometer, using the clustering line and surface features again after the feature extraction step can improve the accuracy of downsampling single-frame or discontinuous point clouds at a weak time cost, thus providing more accurate point cloud distribution results for the pose estimation step between consecutive frames. In addition, using HashMap to downsample can further improve the sampling efficiency, and the time consumption of quadratic curvature segmentation is almost negligible. For other applications that need to downsample point clouds, the point cloud clustering method based on curvature segmentation can restore the spatial distribution of point clouds more accurately, and the benefits of this method are extensive and obvious.

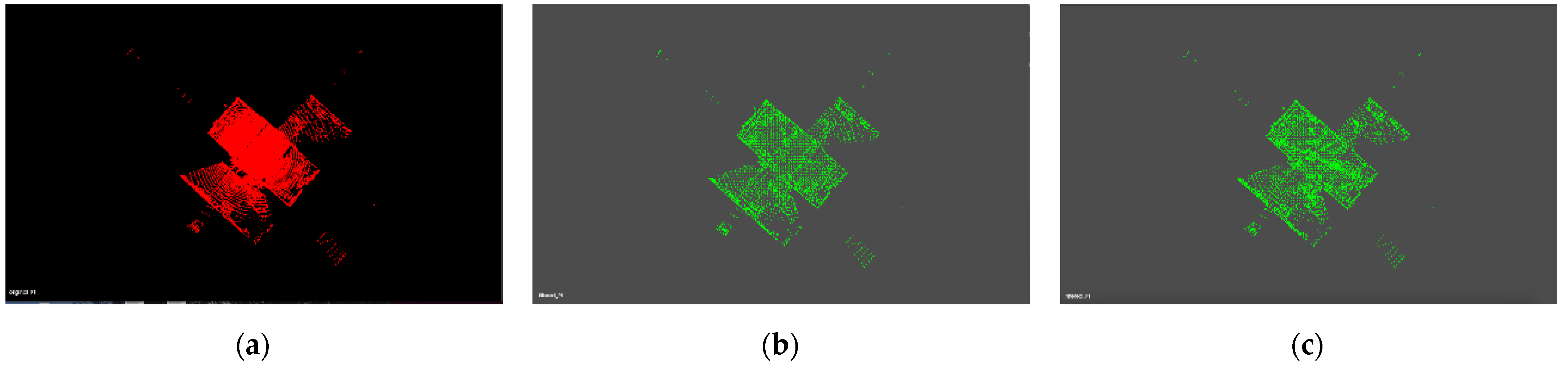

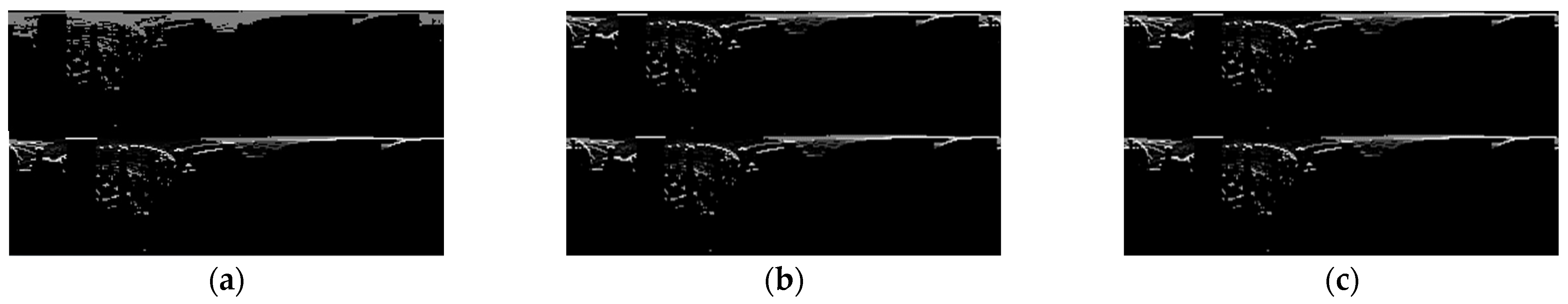

The results and time consumption of the improved point cloud downsampling process are shown in

Figure 2 and

Table 1. Cloud number

, the line feature extraction threshold is 1, the surface feature extraction threshold is 0.1 and

r = 0.3. It can be seen from

Figure 2c that the present method has a clearer reduction in the spatial distribution of diagonal lines within a rectangular point cloud. Therefore, it can be proved that our method can retain the texture feature information of the source point cloud to a greater extent, and the accuracy and real-time performance of the downsampling results can be improved.

3.2. Voxelized Point Cloud Registration

The purpose of point cloud registration is to update the rigid frame transformation of a moving carrier by comparing two consecutive frames of point clouds or similar point clouds detected by a loopback to solve for the carrier’s pose. Traditional LIO usually uses ICP to realize the precise registration of point clouds. The ICP can be briefly described as follows: given a set of source point cloud

and target point cloud

, the nearest neighbor search of KDTree is used to obtain the inter-frame pose transformation relationship

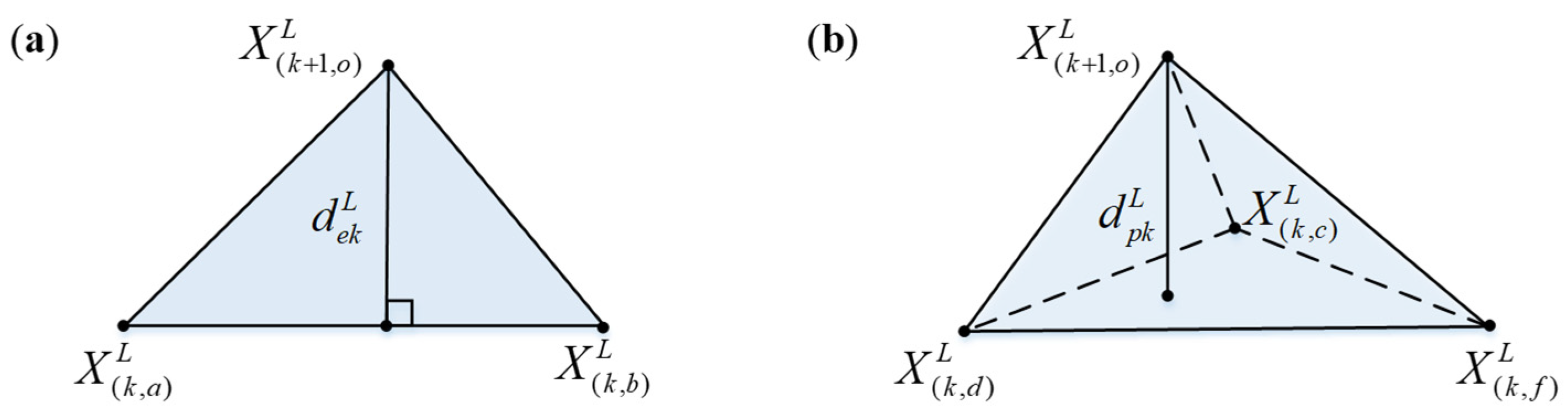

, and the optimal solution is achieved through multiple iterations. However, an unreasonable initial position selection will make ICP fall into the misunderstanding of the local optimal solution, and the calculation resource consumption of the single-point nearest neighbor search is large. In view of the defects of the ICP algorithm, this paper utilizes a method based on the distribution of feature points in voxels, as shown in

Figure 3.

As shown in

Figure 3. the problem of constructing the nearest neighbor model of a point pair by using a tree diagram is transformed into constructing the nearest neighbor model of a point and a neighborhood point set. Firstly, the two sets of point cloud sequences are approximated as Gaussian distributions, i.e.,

and

, where

.

and

are covariance matrices of two sets of point cloud sequences, respectively. Let the distance between a pair of corresponding points between the target point cloud and the source point cloud be:

Let the neighborhood point set of

be

, where

is the neighborhood judgment threshold. Thus, the distance between the extended point and the neighborhood point set is:

As a result of

and

, the rigid body transformation error

is calculated as:

In this way, the smoothing of all neighboring point clouds in the neighborhood of

is achieved. Let

and

; because

is a high-dimensional Gaussian distribution, its probability density function expansion form is:

The negative logarithmic form of Equation (7) is:

Solving the inter-frame pose transformation matrix

T by maximum likelihood method:

Furthermore, after introducing the number

of point clouds in the neighborhood

, Equation (9) can be written as:

In addition to the smoothing of all neighboring point clouds in the neighborhood of

, an iteration termination threshold

was established to avoid falling into a blind region of local optima after multiple iterations as follows:

where

and

are the root mean square error of the previous

iterations and the previous

iterations, respectively. The iteration is completed when the absolute value of the change in the root mean square error

, or the maximum number of iterations, is reached.

6. Discussion

SLAM-based multi-sensor fusion positioning technology expands the application field of traditional GNSS-based mapping techniques and makes continuous and reliable positioning in complex urban environments with good/intermittent/rejection GNSS a reality. The superior sensing capability of LIO for natural sources has been demonstrated in the literature [

5,

6] and others. However, the accuracy limitation of point cloud registration and the local pose drift of LIO limit the application of LIO in large outdoor environments to some extent. This paper focuses on the above two technical bottlenecks faced by the existing LiDAR SLAM and proposes simplified but effective improvement solutions.

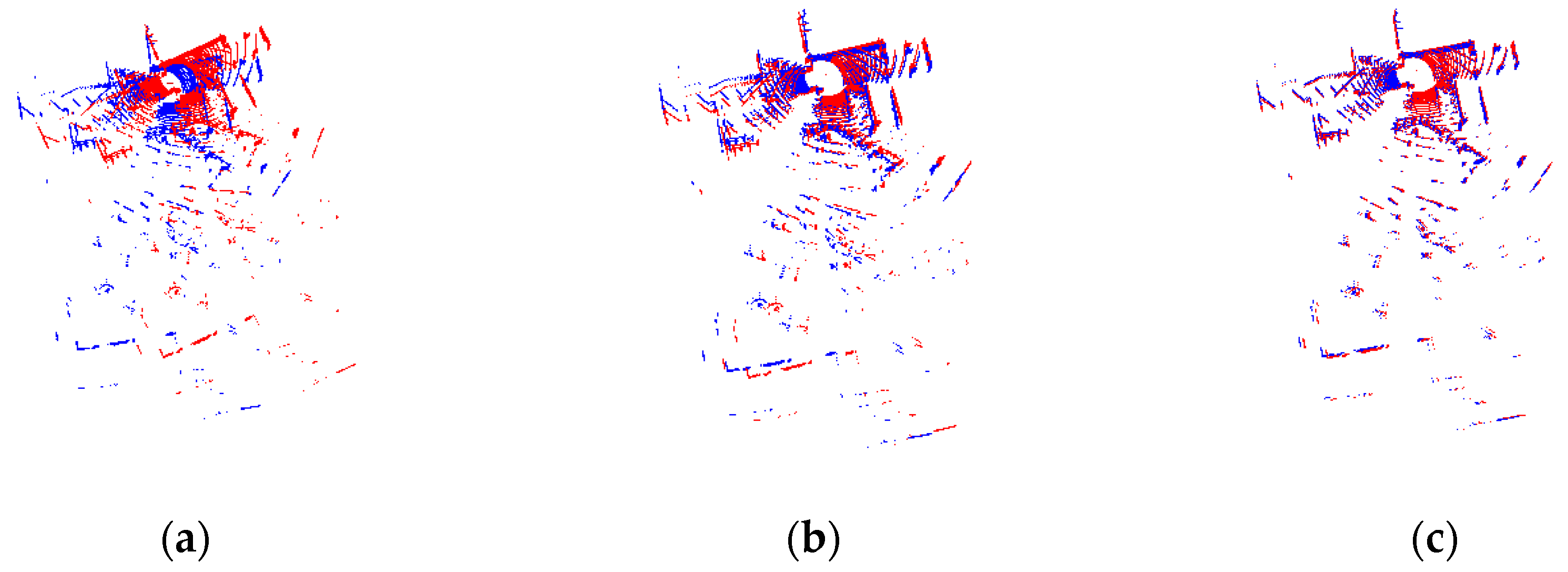

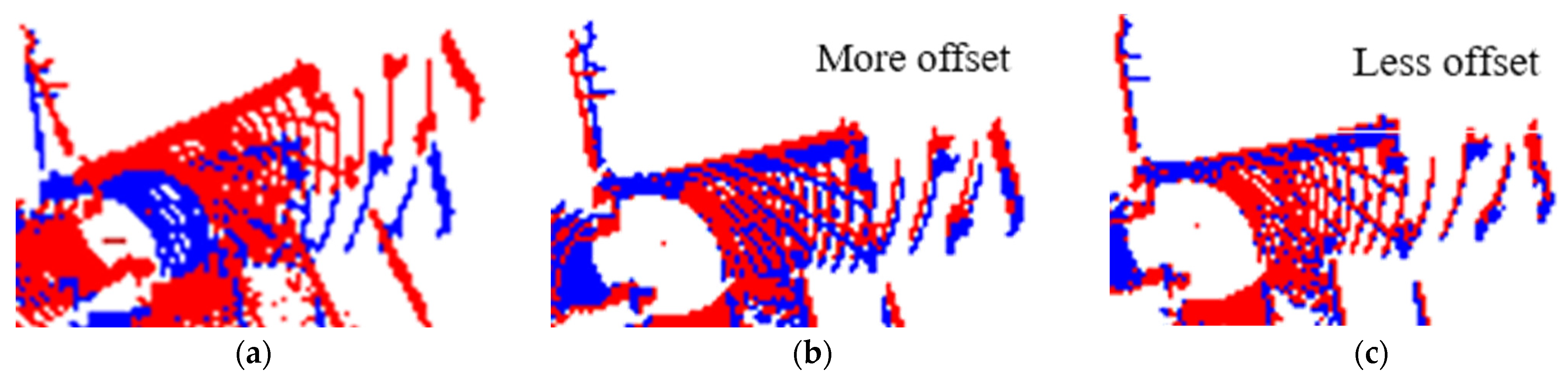

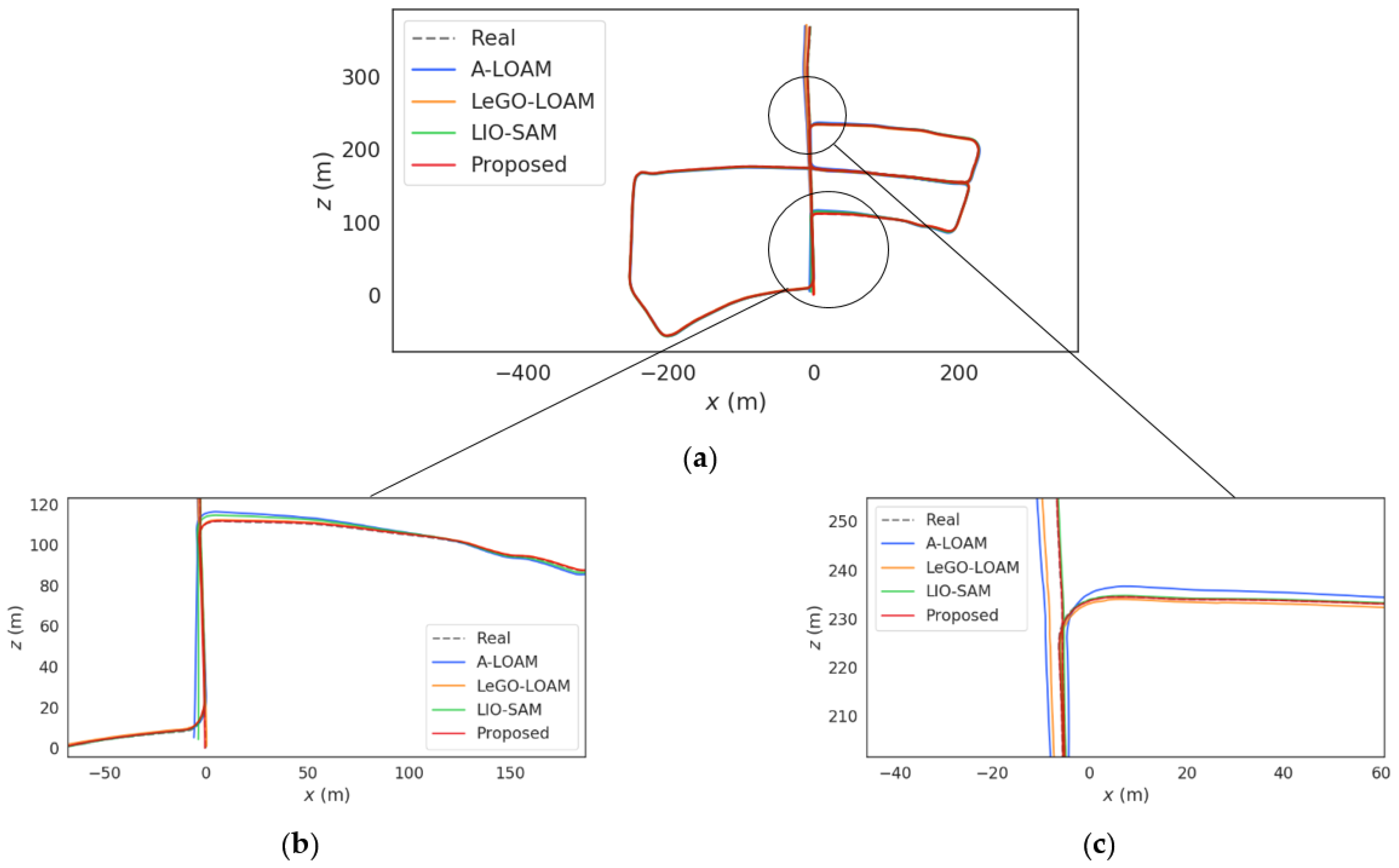

First of all, the primary technical bottleneck is how to improve the accuracy of point cloud registration. Koideet al. [

20] demonstrated that smoothing the point cloud cluster can improve the fault tolerance of point cloud registration and improve the accuracy of the point cloud from 1.20m to 0.89m. However, from our practical tests, it has been shown that, in long and large turning or translating sections in real environments, if the number of iterations is not limited, it may still fall into the local optimum problem. Therefore, on the basis of constructing a registration equation based on smooth voxelization filtering, we further use the judgment condition of iteration termination for the secondary constraint, so as to reduce the over-fitting problem of local point clouds in a typical environment. According to the registration experiment in

Section 5.1 and the positional estimation accuracy experiment in

Section 5.2, it can be seen that our registration method has a higher accuracy than the conventional methods. According to

Figure 11, it can be seen that accurate point cloud alignment leads to an accurate initial value estimation of the positional attitude, which has a considerable positive effect on the positional estimation of the vehicle platform with a complex moving state.

Secondly, there is the challenge of how to effectively use GNSS measurements and loopback detection mechanisms to converge the local positioning results of LIO to a globally optimal solution. Firstly, the main step of loop detection dependency is point cloud registration. The previous paragraph has specifically analyzed the superiority of our method. Thanks to the positive point cloud registration results, we can reason that our global constraint using loopback detection is superior, as demonstrated in

Section 5.2.2. Next, some related research has been carried out on the research of GNSS and LIO fusion positioning. Optimization-based methods have been applied in [

31,

32] and so on. However, taking [

27] for example, in the traditional graph optimization model, the covariance effect of measurements is only used to determine whether to add factors or not, which is crude for the screening of the measurements, especially for GNSS, a measurement signal that is greatly influenced by the environmental catadioptric surface, where its observation information has not been fully applied. Therefore, in this paper, on the basis of the rough screening of GNSS observations, the covariance of the quantitative measurements is added as a weight to the graph optimization model. It achieves a more adequate and accurate global constraint on the LIO local poses using GNSS observations. From the ablation experiment results in

Section 4.3.2, it is evident that the method of the weighted GNSS residual added in this paper achieves a satisfactory positioning accuracy. According to the above results, we can draw a reasonable inference: even when entering an indoor parking lot or tunnel, the accumulated error of LIO in this algorithm will start to accumulate from a lower initial value of drift. In contrast, for the LIO algorithms without GNSS constraints, they already have a large deviation between the local map and the global map before entering the denial environment. Therefore, the cumulative error range of this algorithm is acceptable. Once the GNSS signal is restored, the local error will be corrected within the time alignment interval of 0.1s as set in

Section 4.3.2. This provides an accurate and continuous positional estimation for the in-vehicle platform during travel in complex urban environments.

7. Conclusions

In this paper, a LiDAR-IMU-GNSS fusion positioning algorithm with accurate local alignment and weak global drift is proposed for the high-precision continuous positioning of mobile carriers in complex urban environments.

Firstly, a voxelized point cloud downsampling method based on curvature segmentation is proposed. Rough classification is carried out by a curvature threshold, and the voxelized point cloud downsampling is performed using HashMap instead of RANSAC, so that the spatial feature distribution attributes of the source point cloud, including texture feature information, such as surfaces and curves, are retained to a greater extent.

Secondly, a point cloud registration model based on the nearest neighbors of the point and neighborhood point sets is constructed. Furthermore, an iterative termination threshold is set to reduce the probability of the local optimal solution. This greatly improves the real-time performance of the point cloud registration and can also play a large role in aligning the point cloud between the front and back frames of a fast-moving carrier with large displacement.

Finally, we propose a LIO-GNSS fusion positioning model based on graph optimization that uses GNSS observations weighted by confidence to globally correct local drift. In addition, the loop detection mechanism using the above-mentioned point cloud registration algorithm is also added into the fusion system, resulting in further global constraints of the driving areas with prior maps. Experimental results show that our algorithm can realize a more continuous and accurate pose estimation and map reconstruction in complex urban environments than similar state-of-the-art algorithms.

In the future work, there are still several issues in our work that deserve further exploration. Firstly, we plan to build a deeper constraint relationship between LIO and GNSS and make use of the rich planar features perceived by LiDAR in the urban environments to compensate for GNSS occlusion or the presence of multipath areas in the direction of the constraint. It will reduce the probability of the unreliability of SPP positioning results in the urban environments. Secondly, considering the environments with multi-sensor failures, such as tunnels with a high environmental texture similarity, where both GNSS rejection and point cloud degradation failures exist. We consider a more accurate degradation direction detection using the degeneracy factor (DF) algorithm proposed in [

5], and make a non-linear optimization correction for the positional attitude in that direction. Finally, the perception ability of 3D environmental features by using only LiDAR, IMU and GNSS sensors is still relatively limited. We plan to use the observation residuals from other sensors to add multi-dimensional feature constraints to the fusion positioning algorithm, such as cameras, wheel odometers and so on, so as to make full use of environmental features and realize accurate and real-time navigation and positioning targets with high environmental universality.