Automatic Semantic Segmentation of Benthic Habitats Using Images from Towed Underwater Camera in a Complex Shallow Water Environment

Abstract

1. Introduction

2. Materials and Methods

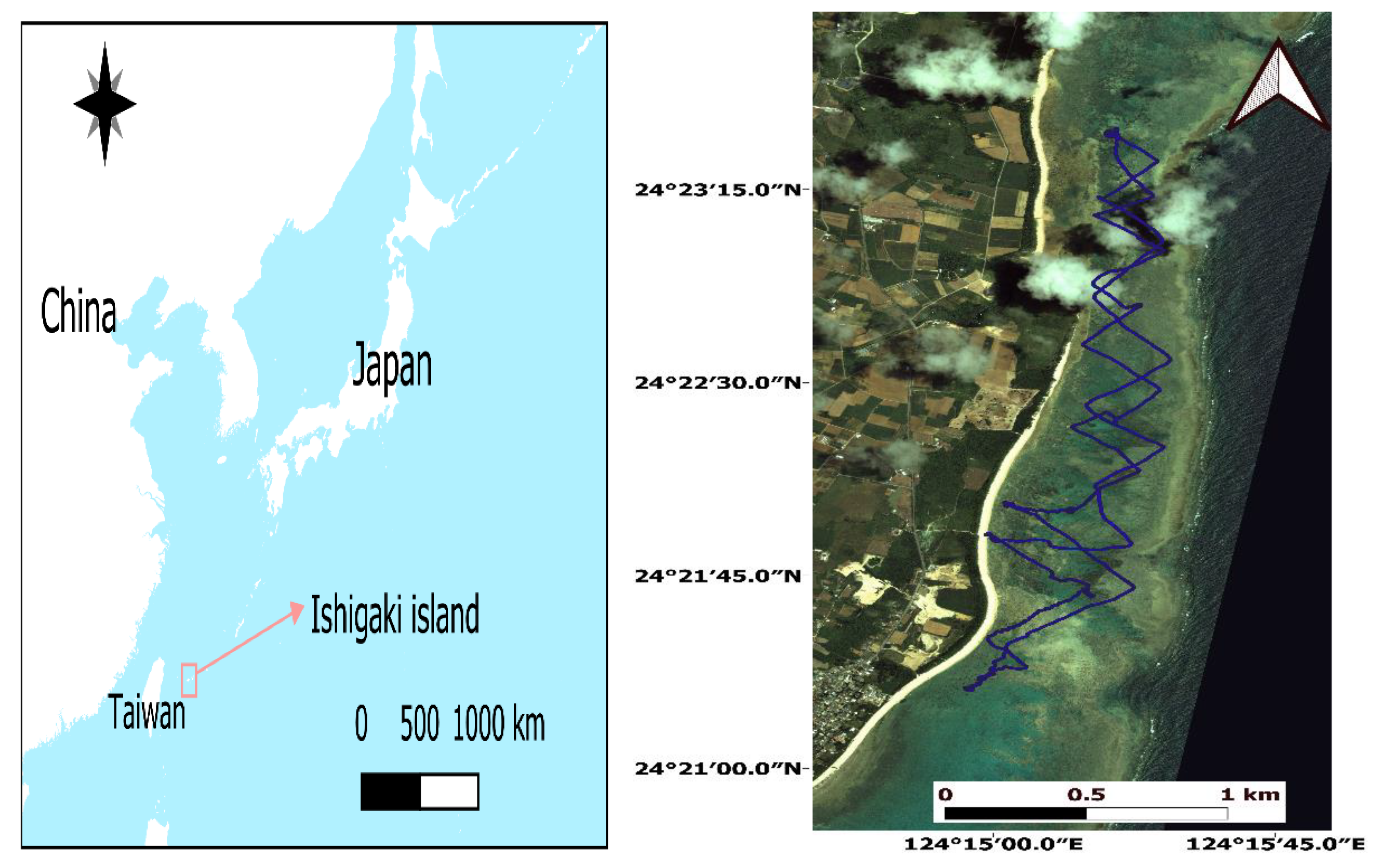

2.1. Study Area

2.2. Benthic Cover Field Data Collection

2.3. Methodology

- All frames extracted from the video with a video-to-JPG converter program were divided into five benthic cover datasets according to the dominant habitat: brown algae, other algae, corals (Acropora and Porites), blue corals (H. coerulea), and seagrass. Sediments (mud, sand, pebbles, and boulders) were present in all images.

- An expert selected 125 converted images individually and divided them equally among these five categories (25 images per category).

- The selected images covered the challenging conditions typical of underwater imagery, including poor illumination, blurring, shadows, and variations in brightness.

- These images were carefully digitized by hand. Each image contained two or three categories, and the digitized categories were converted to raster form using ArcGIS software.

- Two other experts reviewed the manually categorized images, comparing them with the original images to confirm their correctness before the proposed methods were evaluated.

- A color invariant (shadow ratio) detection equation [40], based on the ratio between the blue and green bands, was used to sort the images automatically into two groups.

- Images whose shadow-ratio values included both positive and negative values, indicating high illumination, low brightness variation, and no shadow effects, were segmented directly in the RGB color space.

- Images with only negative values, indicating low illumination, high brightness variation, and shadow effects, were instead converted to the CIE LAB and YCbCr color spaces before segmentation.

- In the YCbCr color space, the Cr band represents the difference between the red component and a reference (luma) value; in the CIE LAB color space, the Ac band represents the balance between red and green tones. These converted bands were then used for segmentation.

- The outputs of the proposed unsupervised algorithms were then evaluated against the manually categorized images.
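
The routing step described above can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation. It makes four assumptions. First, the color invariant of [40] takes the commonly used form ψ = (4/π)·arctan((B − G)/(B + G)). Second, "only negative values" means that every pixel has ψ < 0. Third, Cr is computed with the full-range (JPEG/JFIF) BT.601 coefficients. Fourth, the Ac band is CIE a* computed from 8-bit sRGB with a D65 white point. All function names are hypothetical.

```python
import numpy as np


def shadow_ratio(rgb):
    """ASSUMED invariant form: psi = (4/pi) * arctan((B - G) / (B + G)), in [-1, 1]."""
    rgb = np.asarray(rgb, dtype=np.float64)
    g, b = rgb[..., 1], rgb[..., 2]
    return (4.0 / np.pi) * np.arctan((b - g) / (b + g + 1e-9))  # eps guards 0/0


def needs_color_conversion(rgb):
    """Hypothetical routing rule: convert only if every psi value is negative."""
    return bool(np.all(shadow_ratio(rgb) < 0))


def cr_band(rgb):
    """Cr of full-range (JPEG/JFIF) BT.601 YCbCr on a 0..255 scale."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b


def a_band(rgb):
    """CIE a* (red-green axis) from 8-bit sRGB, D65 white point (assumed)."""
    c = np.asarray(rgb, dtype=np.float64) / 255.0
    # undo the sRGB gamma curve to get linear RGB
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    r, g, b = lin[..., 0], lin[..., 1], lin[..., 2]
    x = (0.4124564 * r + 0.3575761 * g + 0.1804375 * b) / 0.95047  # X / Xn
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b              # Y / Yn, Yn = 1

    def f(t):
        delta = 6.0 / 29.0
        return np.where(t > delta**3, np.cbrt(t), t / (3 * delta**2) + 4.0 / 29.0)

    return 500.0 * (f(x) - f(y))


def band_for_segmentation(rgb, use_cr=True):
    """Keep RGB for well-lit images; return a single Cr or a* band for shadowed ones."""
    if not needs_color_conversion(rgb):
        return np.asarray(rgb, dtype=np.float64)
    return cr_band(rgb) if use_cr else a_band(rgb)
```

Returning a single chroma band for shadowed images follows the text's observation that Cr and Ac carry the red and green tone information. Both bands depend less on overall brightness than the raw RGB values do.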

2.4. Proposed Unsupervised Algorithms for Automatic Benthic Habitat Segmentation

2.4.1. K-Means Algorithm
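
K-means assigns each pixel to the nearest of k cluster centers in color space, then moves each center to the mean of its assigned pixels, and repeats until the assignments stop changing. The following is a plain Lloyd-iteration sketch in NumPy. It assumes that k equals the number of categories in the image (two or three here) and uses a simple deterministic initialization. It is not the authors' code, and their initialization may differ. The function name is hypothetical.

```python
import numpy as np


def kmeans_segment(image, k, n_iter=100):
    """Label each pixel of an (H, W) or (H, W, C) image with one of k K-means clusters."""
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape[:2]
    x = img.reshape(h * w, -1)  # one row per pixel, one column per band
    uniq = np.unique(x, axis=0)
    if len(uniq) < k:
        raise ValueError("fewer distinct pixel values than clusters")
    # deterministic init: k distinct values spread over the sorted unique pixel values
    centers = uniq[np.linspace(0, len(uniq) - 1, k).astype(int)]
    for _ in range(n_iter):
        # assignment step: nearest center by squared Euclidean distance
        dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # update step: move each center to the mean of its pixels (keep it if empty)
        new_centers = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels.reshape(h, w)
```

For full-resolution frames, scikit-learn's `KMeans` or OpenCV's `cv2.kmeans` are more practical than this dense distance matrix.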

2.4.2. Otsu Algorithm
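
Otsu's method chooses the threshold t that maximizes the between-class variance σ_B²(t) = [μ_T·ω(t) − μ(t)]² / (ω(t)·[1 − ω(t)]). Here ω(t) and μ(t) are the cumulative probability and cumulative first moment of the histogram up to t, and μ_T is the global mean. The following minimal NumPy sketch applies the method to one band, such as the Cr or Ac band, and assumes a 256-bin histogram. The function name is hypothetical.

```python
import numpy as np


def otsu_threshold(band, nbins=256):
    """Threshold maximizing the between-class variance of a single band."""
    values = np.asarray(band, dtype=np.float64).ravel()
    hist, edges = np.histogram(values, bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()          # bin probabilities
    w0 = np.cumsum(p)              # omega(t): weight of the lower class
    mu = np.cumsum(p * centers)    # mu(t): cumulative first moment
    mu_t = mu[-1]                  # global mean
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_t * w0 - mu) ** 2 / (w0 * (1.0 - w0))
    sigma_b2 = np.nan_to_num(sigma_b2, nan=0.0, posinf=0.0, neginf=0.0)
    idx = int(np.argmax(sigma_b2))
    return float(edges[idx + 1])   # upper edge of the last lower-class bin
```

Pixels with `band >= t` form the upper class. This produces a two-class split only. Images with three categories need a multi-level variant, such as scikit-image's `threshold_multiotsu`. scikit-image's `threshold_otsu` is the library counterpart of this function.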

2.4.3. Fast and Robust Fuzzy C-Means Algorithm
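
FRFCM, as described by Lei et al. (2018), speeds up fuzzy c-means in two ways. It clusters the gray-level histogram of a morphologically reconstructed image instead of individual pixels, and it replaces the usual spatial term with median filtering of the membership matrix. The memberships are u_lj = 1 / Σ_k (d_lj / d_lk)^(2/(m−1)), and the centers are v_j = Σ_l γ_l·u_lj^m·ξ_l / Σ_l γ_l·u_lj^m, where γ_l is the pixel count of gray level ξ_l.

The sketch below keeps only two of these ingredients: histogram-weighted FCM with fuzzifier m = 2, and a 3 × 3 median filter on the memberships. It omits morphological reconstruction, so it illustrates the idea rather than reproducing the published algorithm. The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _memberships(levels, centers, m):
    """u[l, j] = 1 / sum_k (d_lj / d_lk) ** (2 / (m - 1))."""
    d = np.abs(levels[:, None] - centers[None, :]) + 1e-12  # avoid division by zero
    inv = d ** (-2.0 / (m - 1.0))
    return inv / inv.sum(axis=1, keepdims=True)


def fcm_histogram(band, c=2, m=2.0, n_iter=100, tol=1e-6):
    """Fuzzy c-means on the distinct values of one band, weighted by pixel counts."""
    flat = np.asarray(band, dtype=np.float64).ravel()
    levels, inverse, counts = np.unique(flat, return_inverse=True, return_counts=True)
    centers = np.linspace(levels.min(), levels.max(), c)  # deterministic init (assumed)
    for _ in range(n_iter):
        um = counts[:, None] * _memberships(levels, centers, m) ** m
        new_centers = (um * levels[:, None]).sum(axis=0) / um.sum(axis=0)
        shift = np.max(np.abs(new_centers - centers))
        centers = new_centers
        if shift < tol:
            break
    u = _memberships(levels, centers, m)
    return centers, u[inverse].reshape(np.shape(band) + (c,))


def median3x3(a):
    """3 x 3 median filter with edge padding."""
    windows = sliding_window_view(np.pad(a, 1, mode="edge"), (3, 3))
    return np.median(windows, axis=(-2, -1))


def frfcm_like_segment(band, c=2):
    """Histogram FCM plus membership median filtering (no morphological reconstruction)."""
    _, u = fcm_histogram(band, c=c)
    u = np.stack([median3x3(u[..., j]) for j in range(c)], axis=-1)
    u /= u.sum(axis=-1, keepdims=True)  # renormalize memberships after filtering
    return u.argmax(axis=-1)
```

Clustering the histogram makes each FCM iteration scale with the number of distinct gray levels rather than the number of pixels. The membership filter then removes isolated mislabeled pixels, such as specks caused by noise or caustics.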

2.4.4. Superpixel-Based Fast Fuzzy C-Means Algorithm
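
SFFCM, as described by Lei et al. (2019), first partitions the image into superpixels. In the published method this is done with a multiscale morphological gradient reconstruction followed by a watershed transform. It then clusters the superpixels rather than individual pixels. The sketch below assumes that the superpixel label map is already available from some superpixel method and shows only the clustering stage. Each superpixel is represented by its mean color and weighted by its size. The initialization heuristic and the function names are assumptions.

```python
import numpy as np


def weighted_fcm(x, w, c, m=2.0, n_iter=100, tol=1e-6):
    """Fuzzy c-means on feature rows x (N, D) with per-row weights w (N,)."""
    # heuristic init (assumed): rows spread over the ordering by feature sum;
    # assumes at least c rows
    order = np.argsort(x.sum(axis=1))
    centers = x[order[np.linspace(0, len(x) - 1, c).astype(int)]]
    for _ in range(n_iter):
        d = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2) + 1e-12
        inv = d ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=1, keepdims=True)             # memberships (N, c)
        um = w[:, None] * u ** m                             # size-weighted memberships
        new_centers = (um.T @ x) / um.sum(axis=0)[:, None]   # (c, D)
        shift = np.abs(new_centers - centers).max()
        centers = new_centers
        if shift < tol:
            break
    return u


def sffcm_like_segment(image, superpixels, c=2):
    """Cluster superpixel mean colors, weighted by size, and map labels back to pixels."""
    img = np.asarray(image, dtype=np.float64)
    feats = img.reshape(-1, img.shape[-1]) if img.ndim == 3 else img.reshape(-1, 1)
    sp = np.asarray(superpixels).ravel()
    ids, inverse, sizes = np.unique(sp, return_inverse=True, return_counts=True)
    sums = np.zeros((len(ids), feats.shape[1]))
    np.add.at(sums, inverse, feats)        # per-superpixel feature sums
    means = sums / sizes[:, None]          # mean color of each superpixel
    u = weighted_fcm(means, sizes.astype(np.float64), c)
    return u.argmax(axis=1)[inverse].reshape(img.shape[:2])
```

Because FCM runs on one row per superpixel instead of one per pixel, the clustering cost depends on the number of superpixels. Label boundaries follow the superpixel contours.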

3. Results

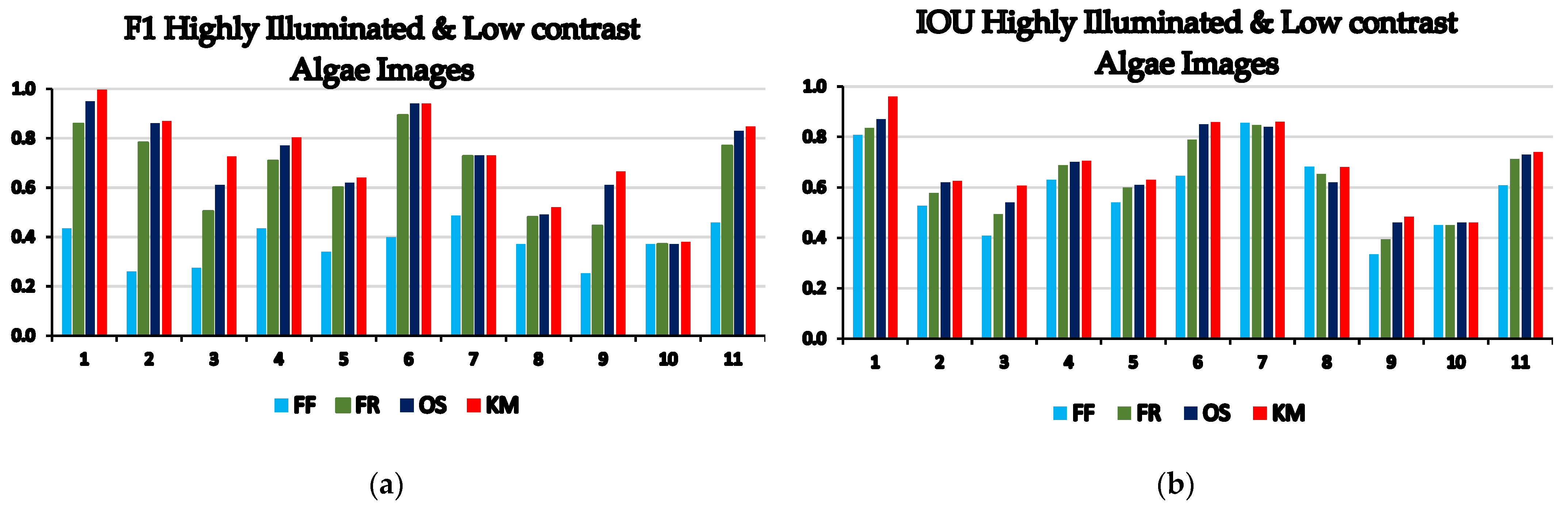

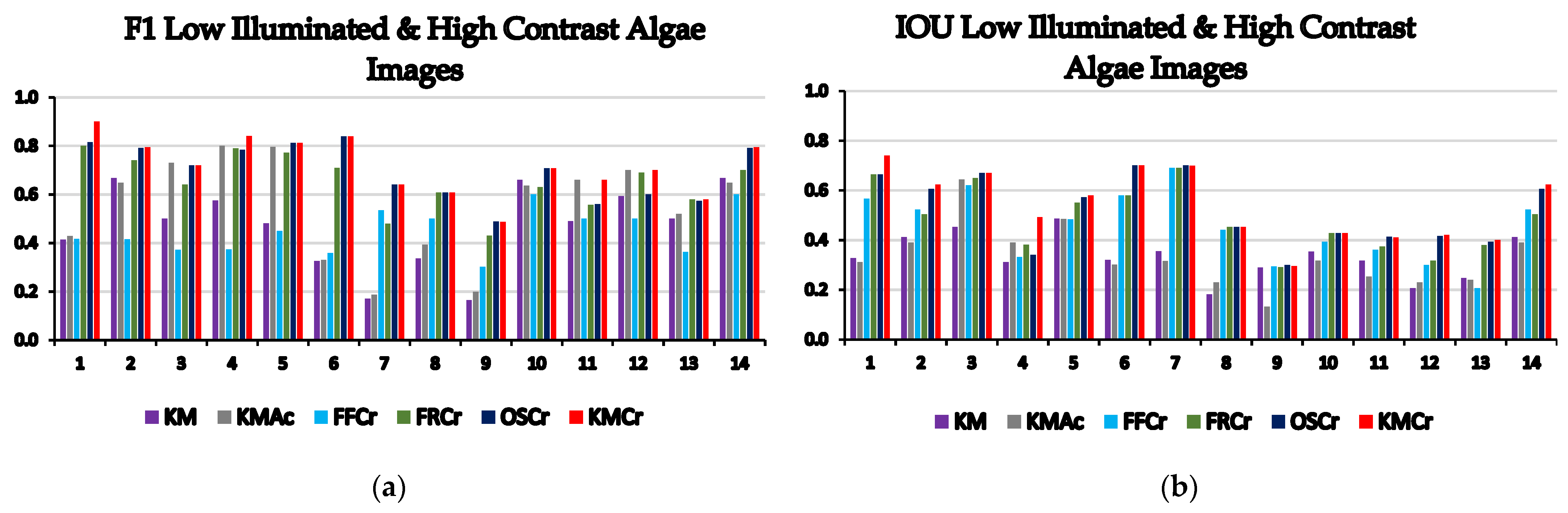

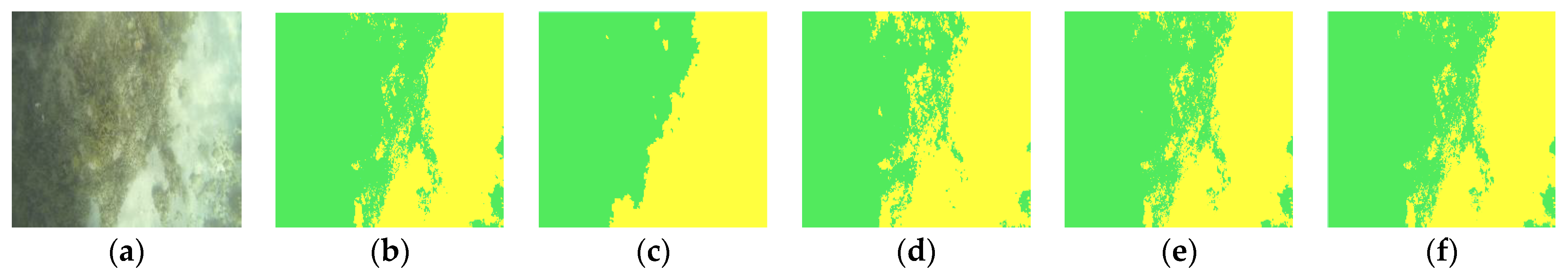

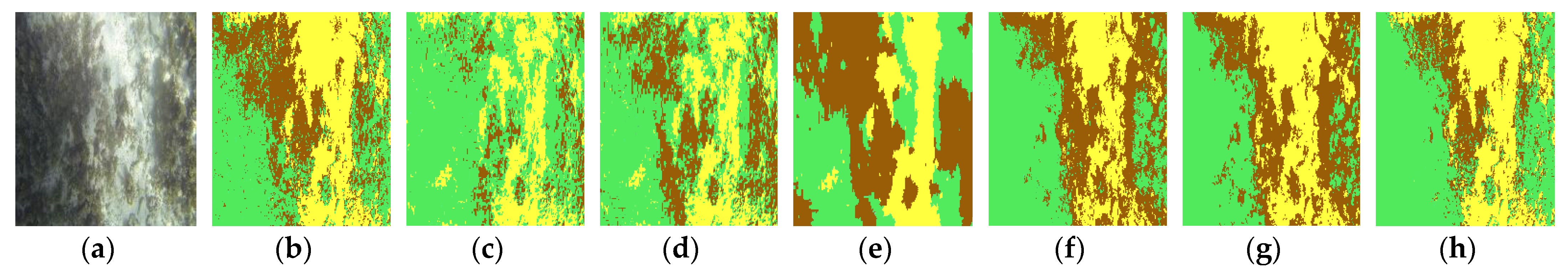

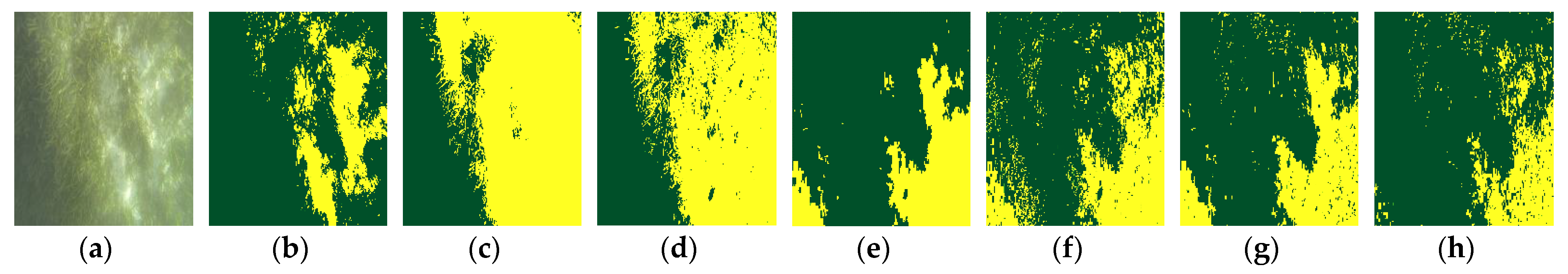

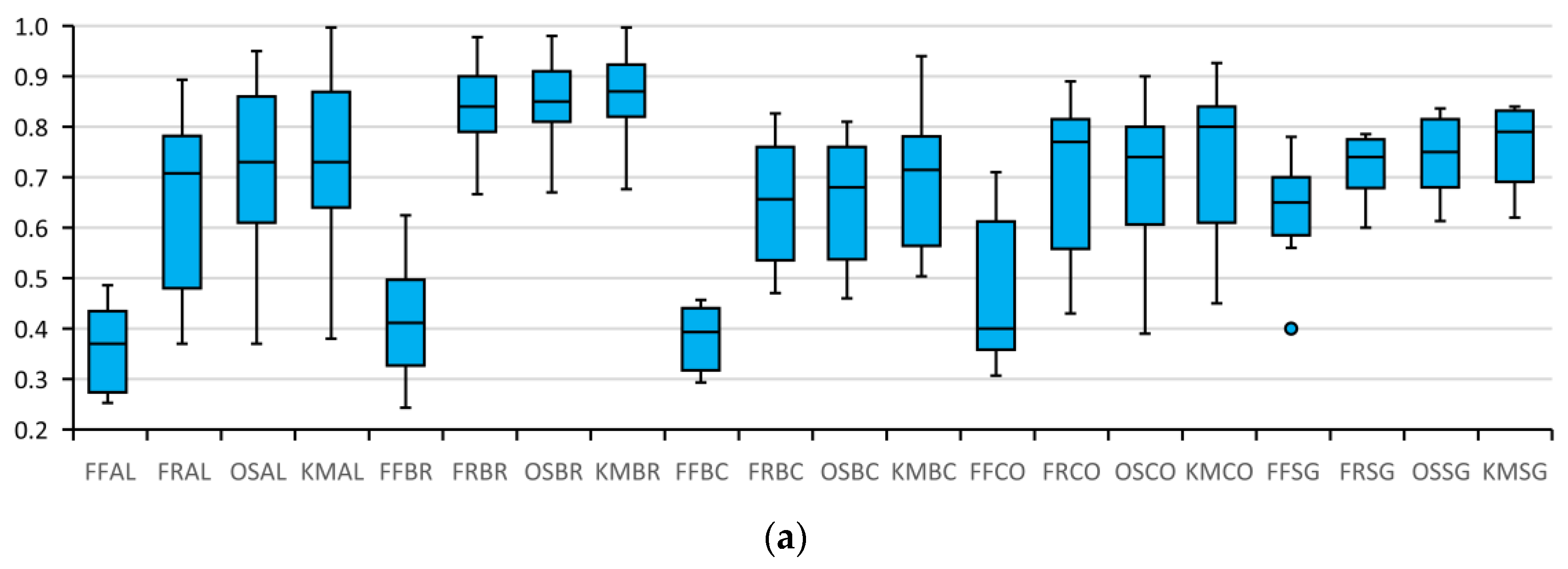

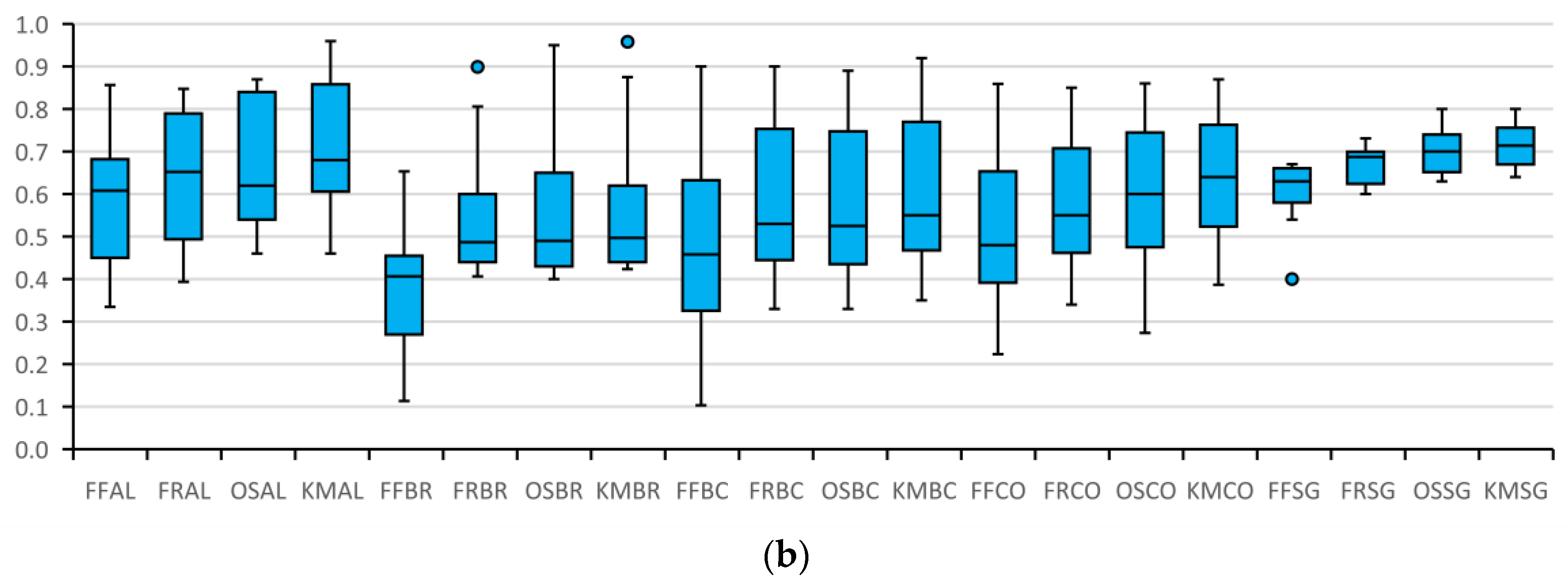

3.1. Results of Automatic Segmentation of Algal Images

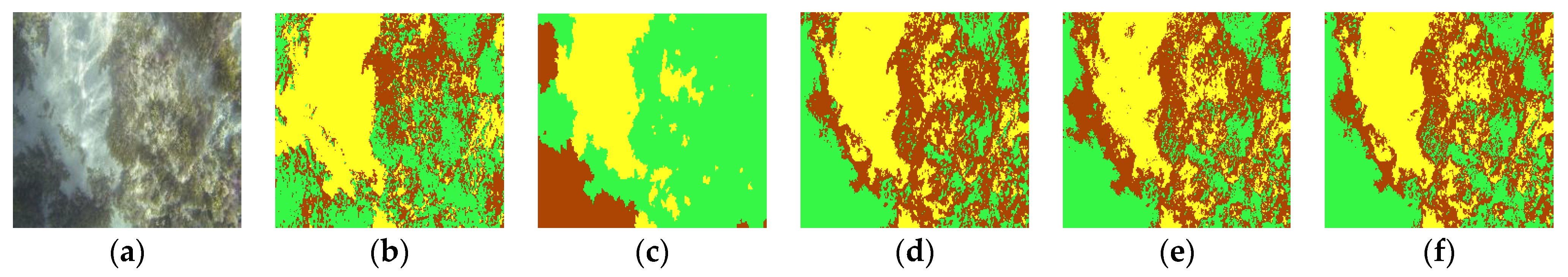

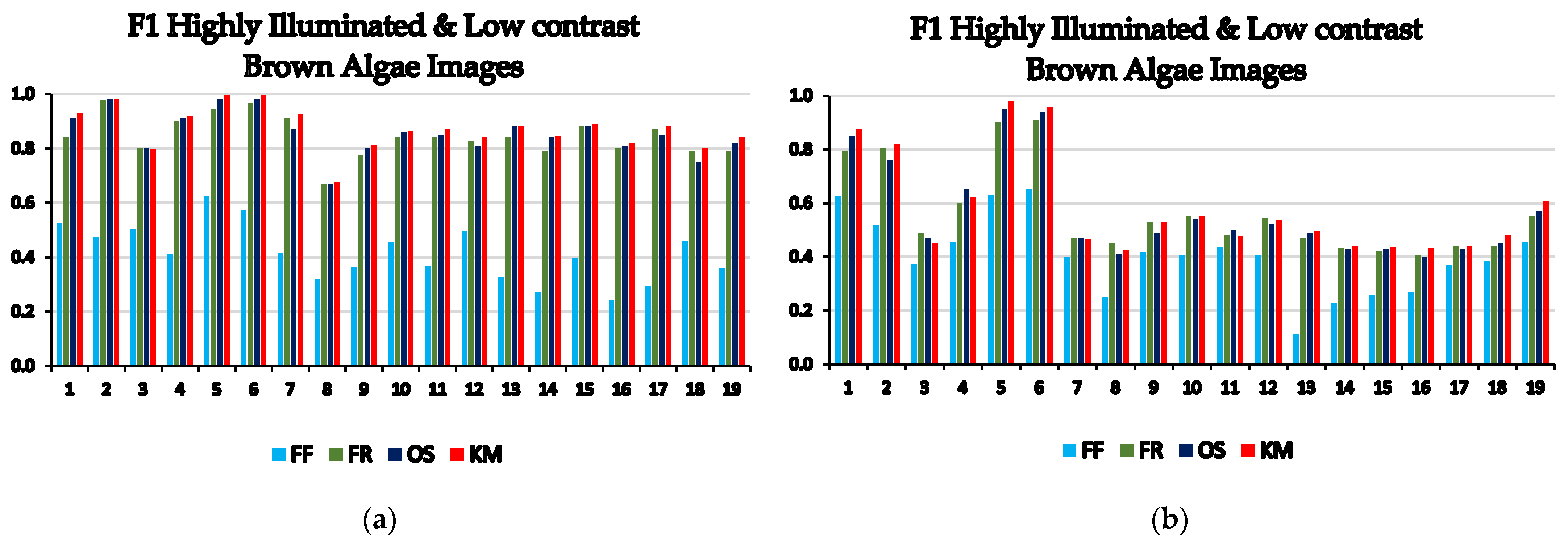

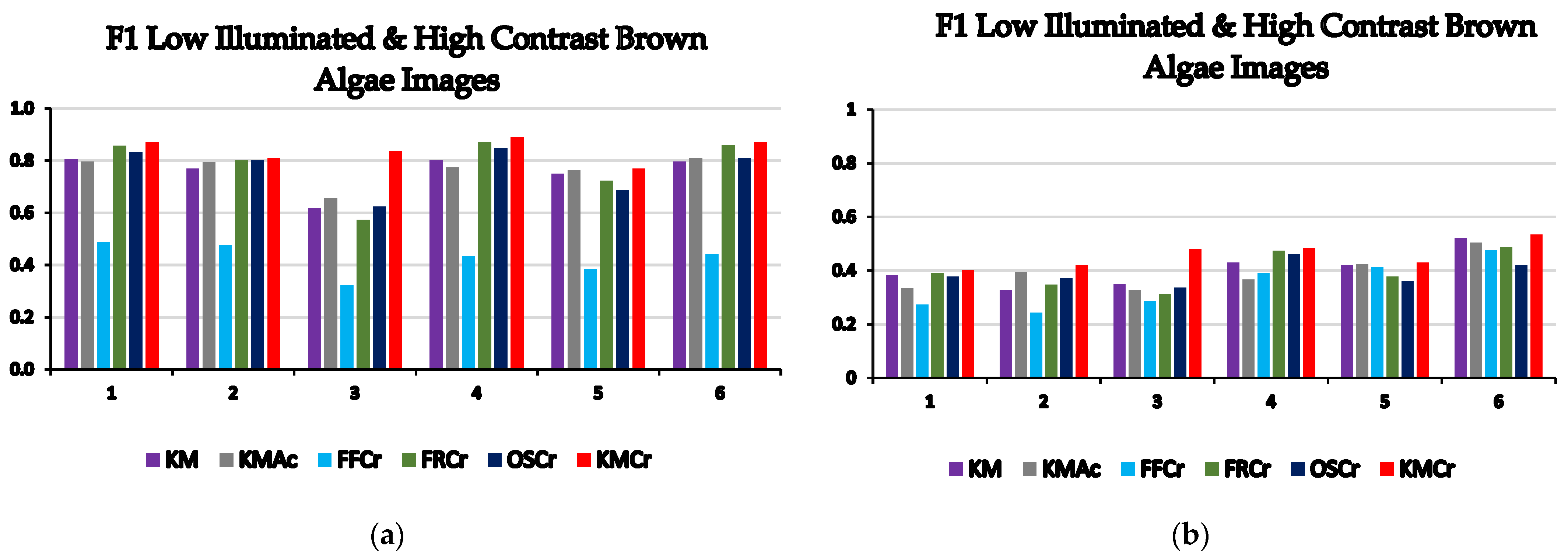

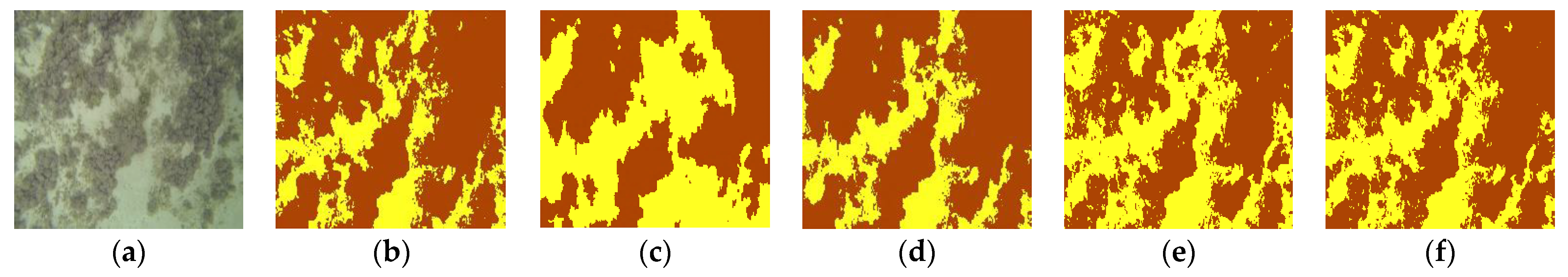

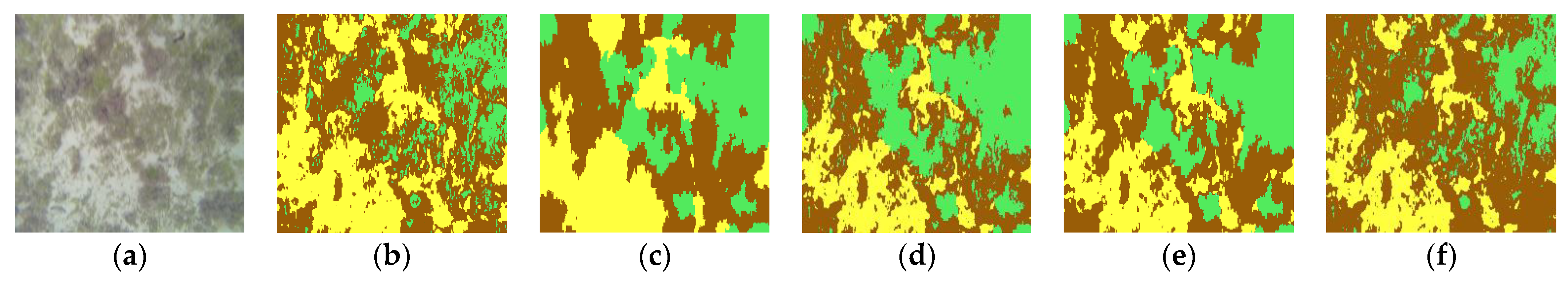

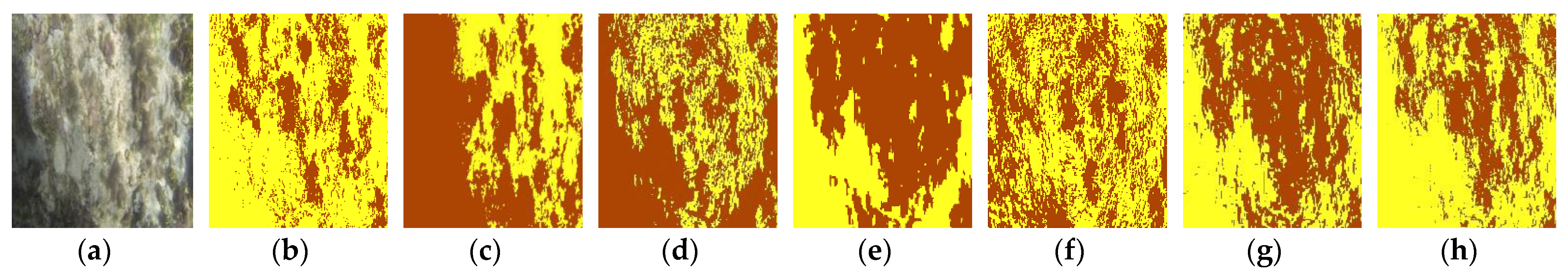

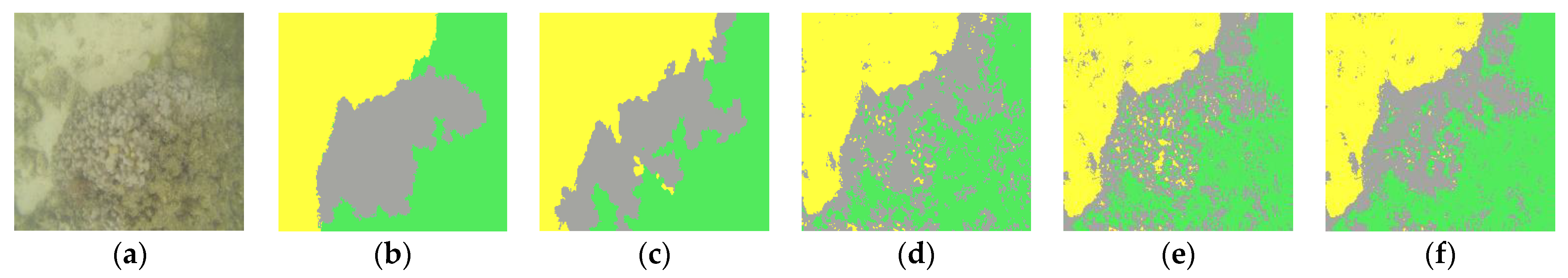

3.2. Results of Automatic Segmentation of Brown Algae Images

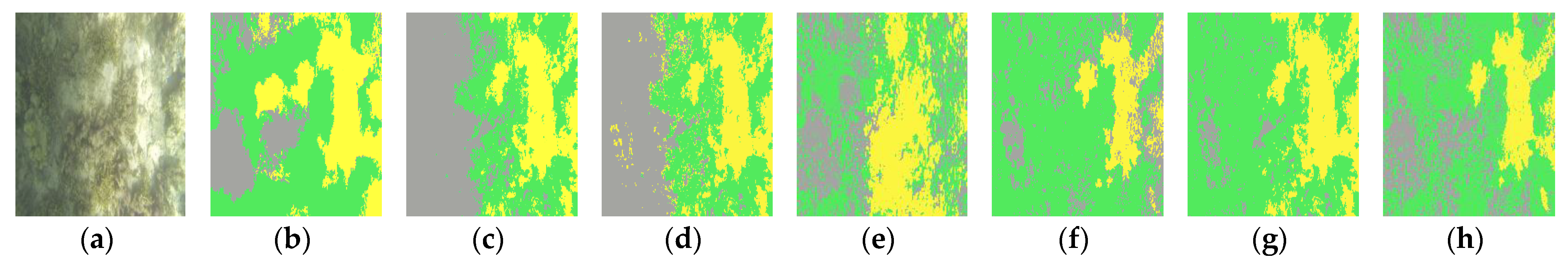

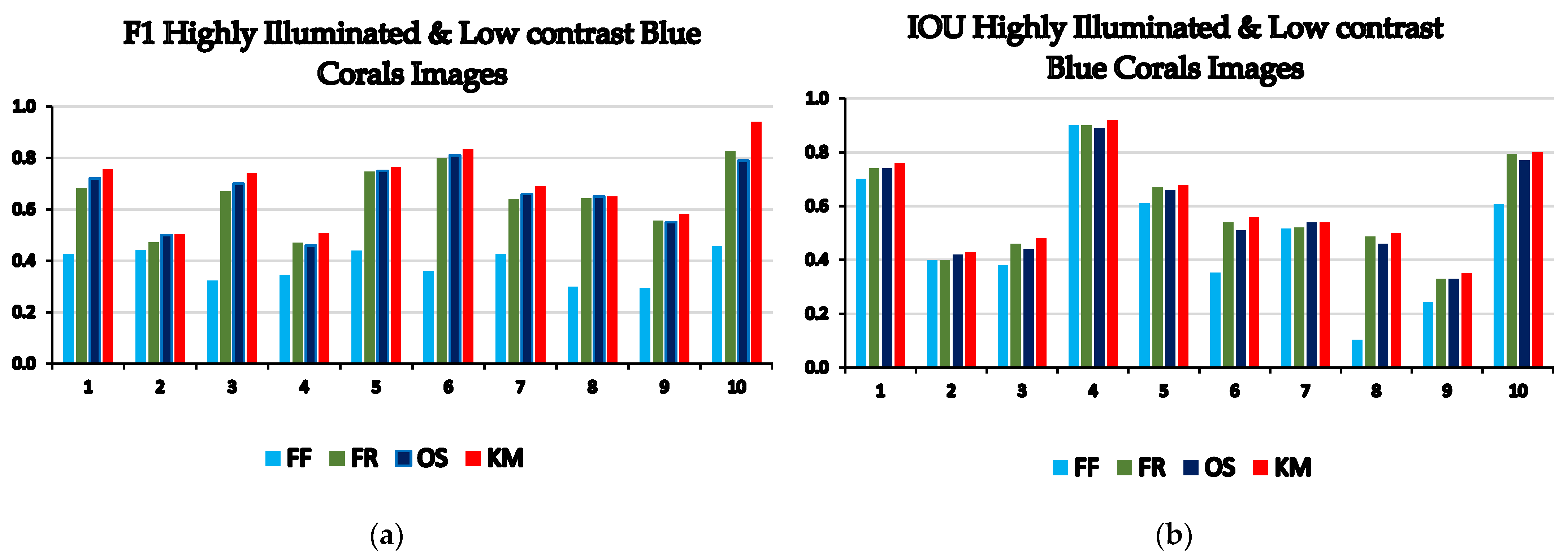

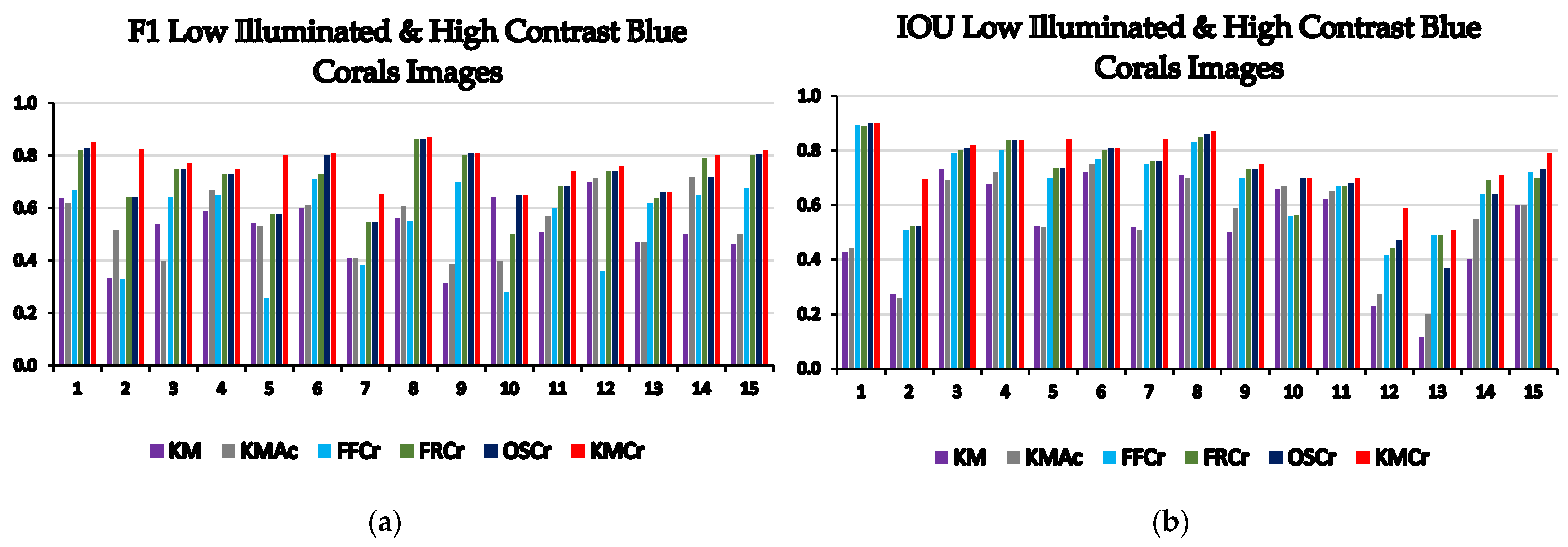

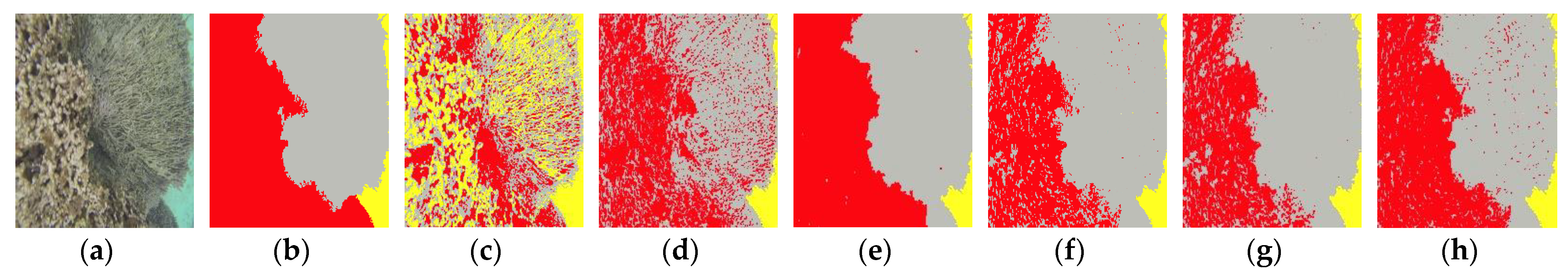

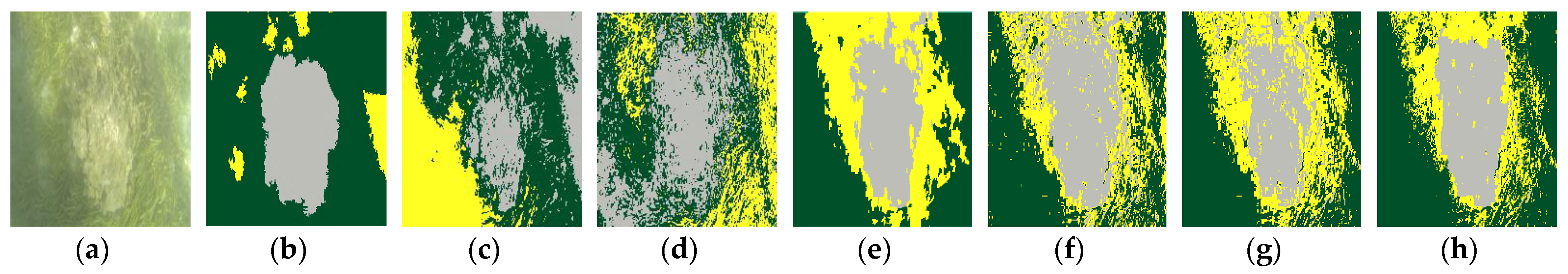

3.3. Results of Automatic Segmentation of Blue Coral Images

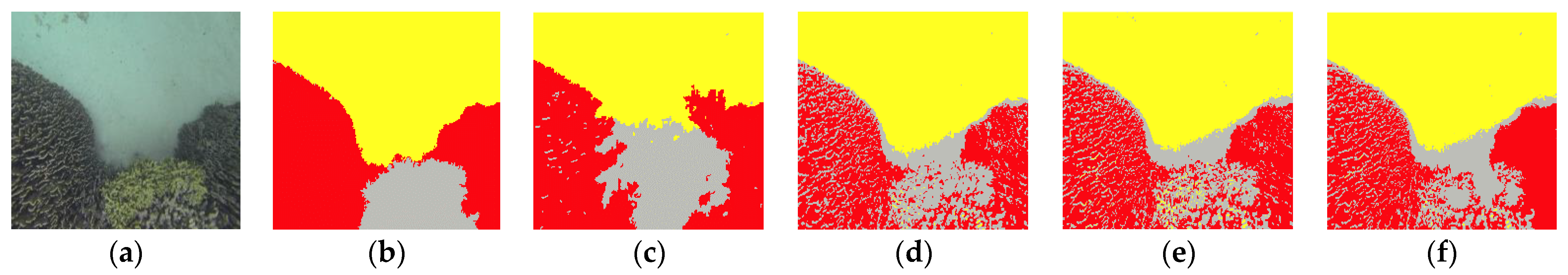

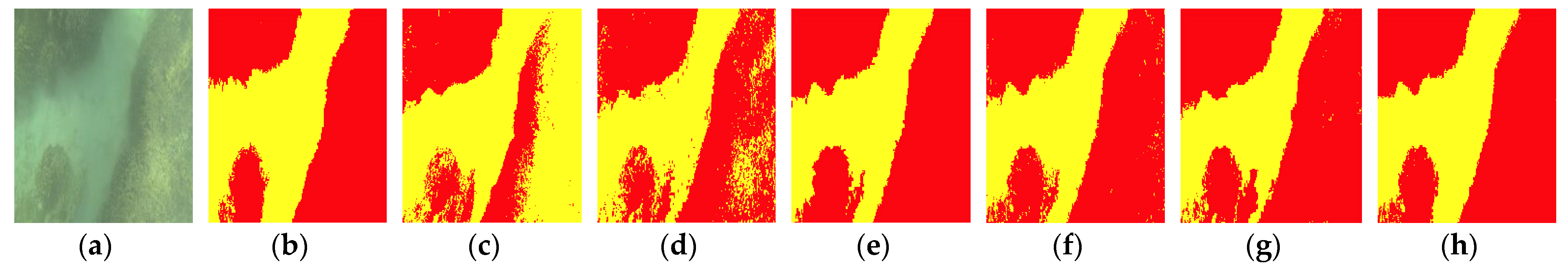

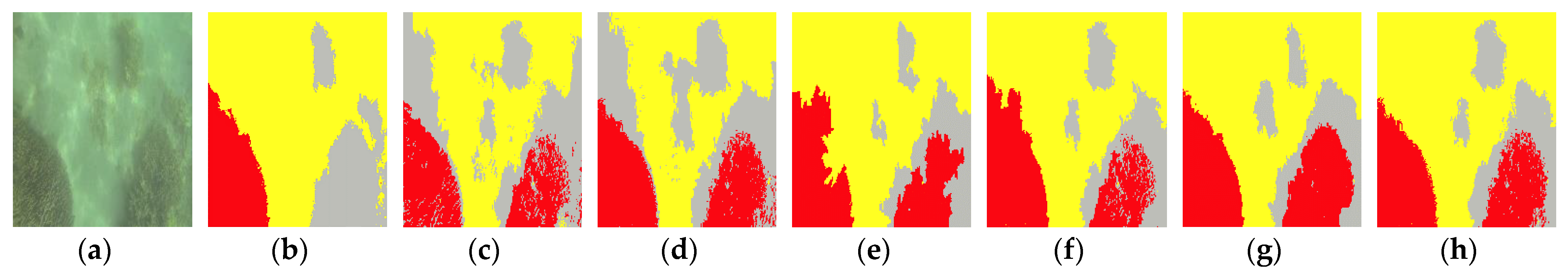

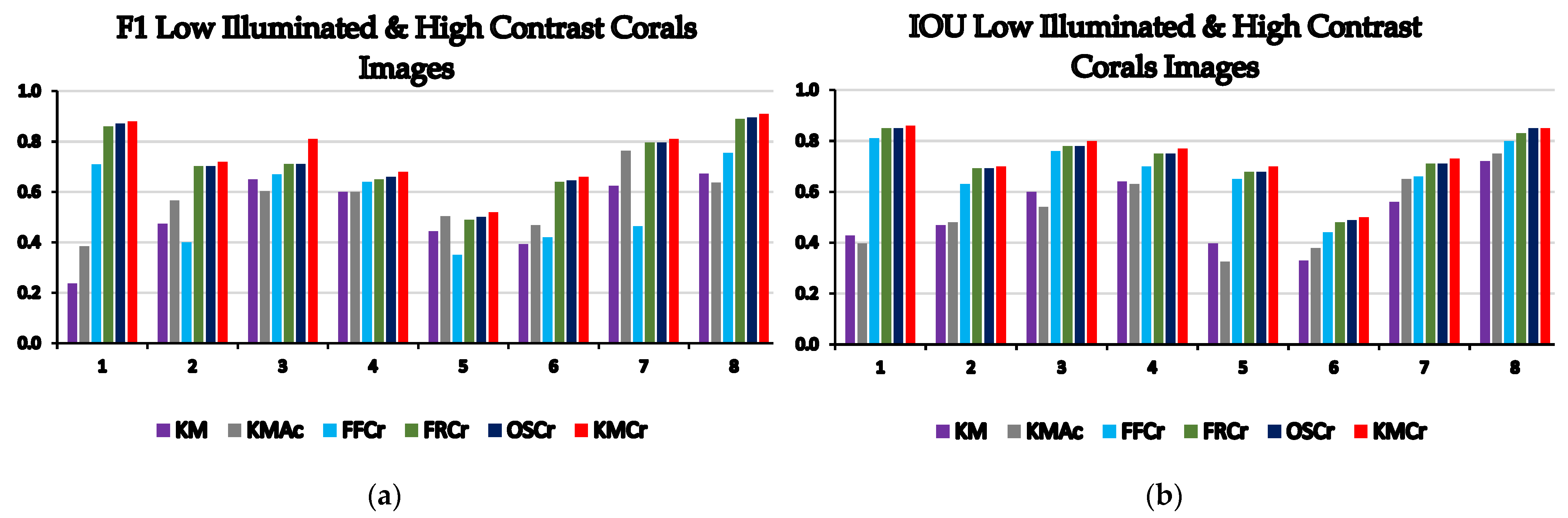

3.4. Results of Automatic Segmentation of Coral Images

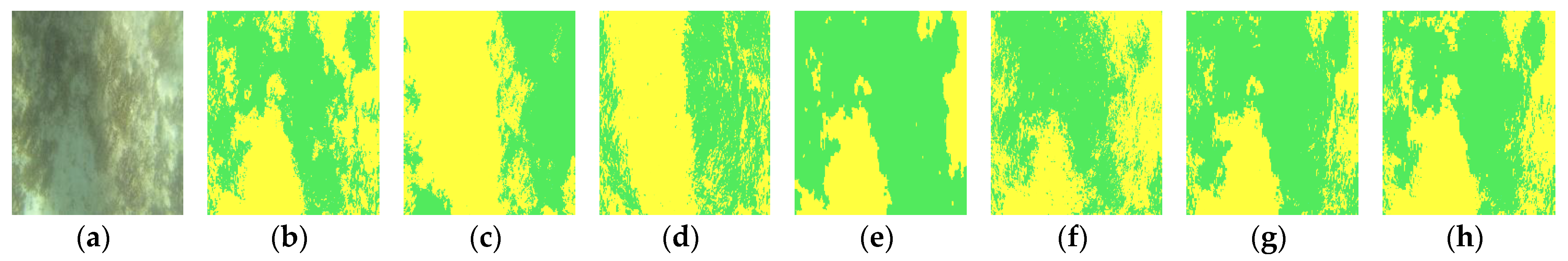

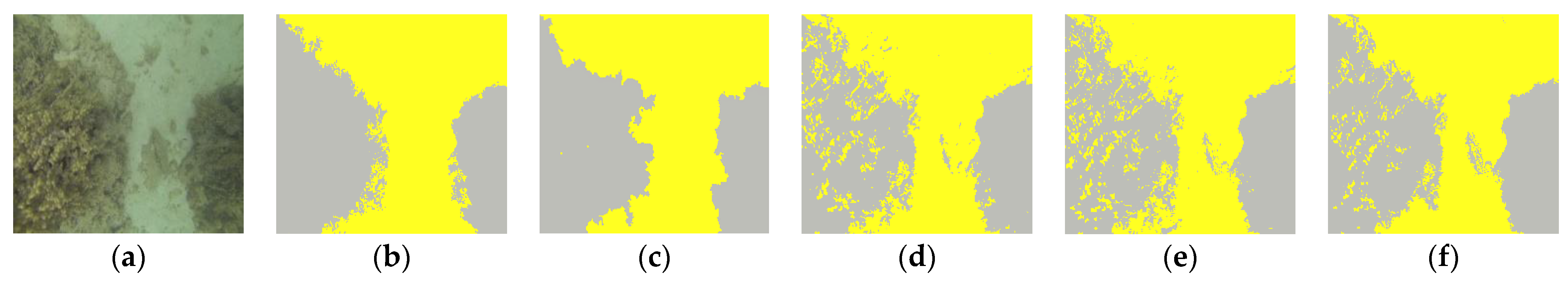

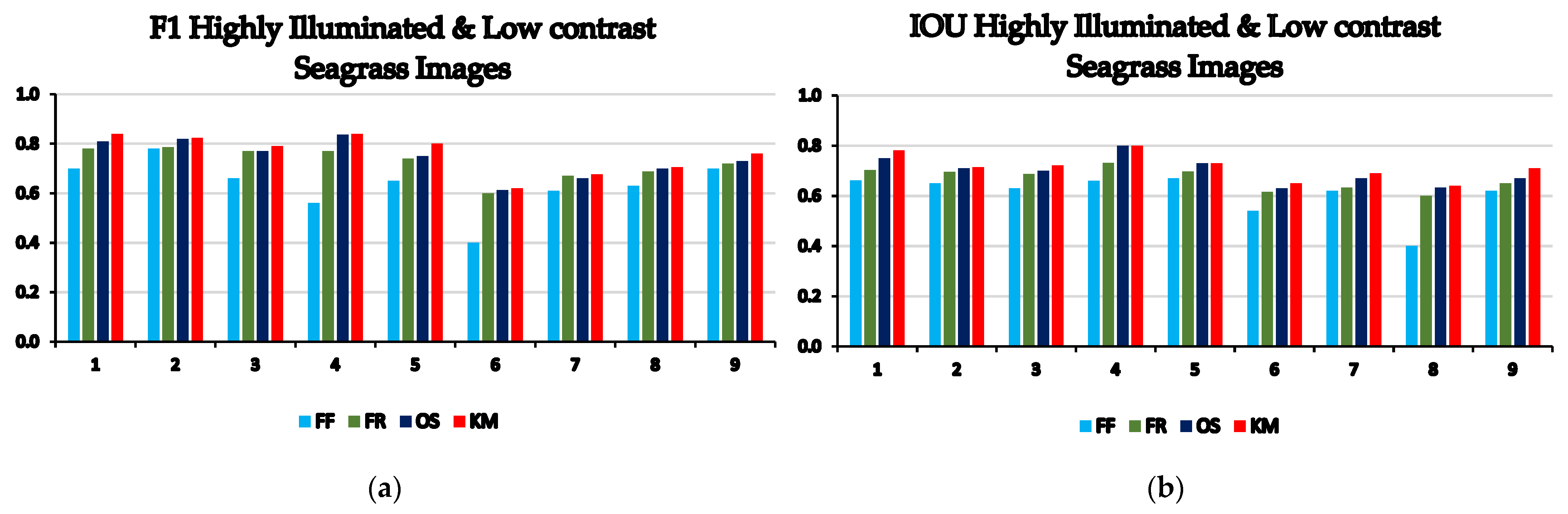

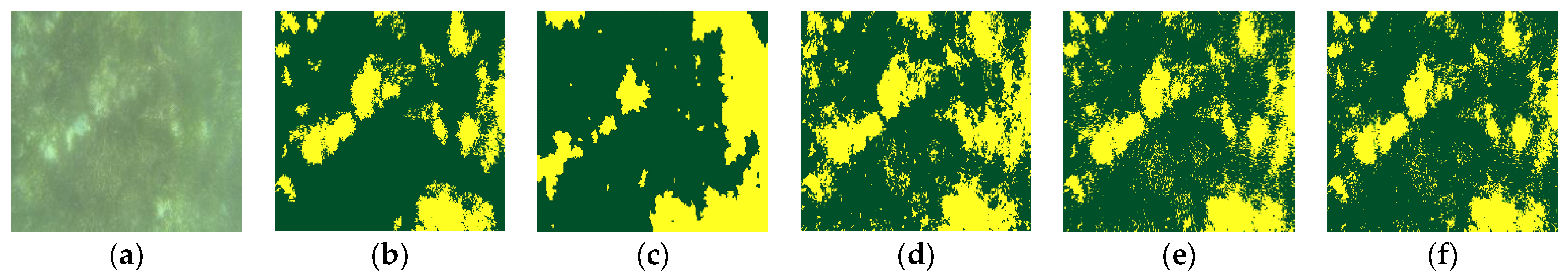

3.5. Results of Automatic Segmentation of Seagrass Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Li, A.S.; Chirayath, V.; Segal-Rozenhaimer, M.; Torres-Perez, J.L.; Van Den Bergh, J. NASA NeMO-Net’s Convolutional Neural Network: Mapping Marine Habitats with Spectrally Heterogeneous Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 5115–5133. [Google Scholar] [CrossRef]

- Mizuno, K.; Terayama, K.; Hagino, S.; Tabeta, S.; Sakamoto, S.; Ogawa, T.; Sugimoto, K.; Fukami, H. An efficient coral survey method based on a large-scale 3-D structure model obtained by Speedy Sea Scanner and U-Net segmentation. Sci. Rep. 2020, 10, 12416. [Google Scholar] [CrossRef] [PubMed]

- Balado, J.; Olabarria, C.; Martínez-Sánchez, J.; Rodríguez-Pérez, J.R.; Pedro, A. Semantic segmentation of major macroalgae in coastal environments using high-resolution ground imagery and deep learning. Int. J. Remote Sens. 2021, 42, 1785–1800. [Google Scholar] [CrossRef]

- Gapper, J.J.; El-Askary, H.; Linstead, E.; Piechota, T. Coral reef change detection in remote Pacific Islands using support vector machine classifiers. Remote Sens. 2019, 11, 1525. [Google Scholar] [CrossRef] [Green Version]

- Pavoni, G.; Corsini, M.; Pedersen, N.; Petrovic, V.; Cignoni, P. Challenges in the deep learning-based semantic segmentation of benthic communities from Ortho-images. Appl. Geomat. 2020, 12, 131–146. [Google Scholar] [CrossRef]

- Chirayath, V.; Instrella, R. Fluid lensing and machine learning for centimeter-resolution airborne assessment of coral reefs in American Samoa. Remote Sens. Environ. 2019, 235, 111475. [Google Scholar] [CrossRef]

- Floor, J.; Kris, K.; Jan, T. Science, uncertainty and changing storylines in nature restoration: The case of seagrass restoration in the Dutch Wadden Sea. Ocean Coast. Manag. 2018, 157, 227–236. [Google Scholar] [CrossRef]

- Piazza, P.; Cummings, V.; Guzzi, A.; Hawes, I.; Lohrer, A.; Marini, S.; Marriott, P.; Menna, F.; Nocerino, E.; Peirano, A.; et al. Underwater photogrammetry in Antarctica: Long-term observations in benthic ecosystems and legacy data rescue. Polar Biol. 2019, 42, 1061–1079. [Google Scholar] [CrossRef] [Green Version]

- Mizuno, K.; Sakagami, M.; Deki, M.; Kawakubo, A.; Terayama, K.; Tabeta, S.; Sakamoto, S.; Matsumoto, Y.; Sugimoto, Y.; Ogawa, T.; et al. Development of an Efficient Coral-Coverage Estimation Method Using a Towed Optical Camera Array System [Speedy Sea Scanner (SSS)] and Deep-Learning-Based Segmentation: A Sea Trial at the Kujuku-Shima Islands. IEEE J. Ocean. Eng. 2019, 45, 1386–1395. [Google Scholar] [CrossRef]

- Price, D.M.; Robert, K.; Callaway, A.; Lo Iacono, C.; Hall, R.A.; Huvenne, V.A.I. Using 3D photogrammetry from ROV video to quantify cold-water coral reef structural complexity and investigate its influence on biodiversity and community assemblage. Coral Reefs 2019, 38, 1007–1021. [Google Scholar] [CrossRef] [Green Version]

- Hamylton, S.M. Mapping coral reef environments: A review of historical methods, recent advances and future opportunities. Prog. Phys. Geogr. 2017, 41, 803–833. [Google Scholar] [CrossRef]

- Mahmood, A.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F.; Hovey, R.; Kendrick, G.; Fisher, R.B. Deep Learning for Coral Classification. In Handbook of Neural Computation; Academic Press: Cambridge, MA, USA, 2017; pp. 383–401. ISBN 9780128113196. [Google Scholar]

- Gómez-Ríos, A.; Tabik, S.; Luengo, J.; Shihavuddin, A.S.M.; Herrera, F. Coral species identification with texture or structure images using a two-level classifier based on Convolutional Neural Networks. Knowl. Based Syst. 2019, 184, 104891. [Google Scholar] [CrossRef] [Green Version]

- Agrafiotis, P.; Skarlatos, D.; Forbes, T.; Poullis, C.; Skamantzari, M.; Georgopoulos, A. Underwater photogrammetry in very shallow waters: Main challenges and caustics effect removal. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives, Riva del Garda, Italy, 4–7 June 2018; Volume XLII–2, pp. 15–22. [Google Scholar]

- Mahmood, A.; Ospina, A.G.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F.; Hovey, R.; Fisher, R.B.; Kendrick, G.A. Automatic hierarchical classification of kelps using deep residual features. Sensors 2020, 20, 447. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mahmood, A.; Bennamoun, M.; An, S.; Sohel, F.A.; Boussaid, F.; Hovey, R.; Kendrick, G.A.; Fisher, R.B. Deep Image Representations for Coral Image Classification. IEEE J. Ocean. Eng. 2018, 44, 121–131. [Google Scholar] [CrossRef] [Green Version]

- Beijbom, O.; Treibitz, T.; Kline, D.I.; Eyal, G.; Khen, A.; Neal, B.; Loya, Y.; Mitchell, B.G.; Kriegman, D. Improving Automated Annotation of Benthic Survey Images Using Wide-band Fluorescence. Sci. Rep. 2016, 6, 23166. [Google Scholar] [CrossRef] [PubMed]

- Yuval, M.; Eyal, G.; Tchernov, D.; Loya, Y.; Murillo, A.C.; Treibitz, T. Repeatable Semantic Reef-Mapping through Photogrammetry. Remote Sens. 2021, 13, 659. [Google Scholar] [CrossRef]

- Lumini, A.; Nanni, L.; Maguolo, G. Deep learning for plankton and coral classification. Appl. Comput. Inform. 2019, 15, 2. [Google Scholar] [CrossRef]

- Beijbom, O.; Edmunds, P.J.; Kline, D.I.; Mitchell, B.G.; Kriegman, D. Automated annotation of coral reef survey images. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1170–1177. [Google Scholar]

- Rashid, A.R.; Chennu, A. A trillion coral reef colors: Deeply annotated underwater hyperspectral images for automated classification and habitat mapping. Data 2020, 5, 19. [Google Scholar] [CrossRef] [Green Version]

- Liu, H.; Büscher, J.V.; Köser, K.; Greinert, J.; Song, H.; Chen, Y.; Schoening, T. Automated activity estimation of the cold-water coral lophelia pertusa by multispectral imaging and computational pixel classification. J. Atmos. Ocean. Technol. 2021, 38, 141–154. [Google Scholar] [CrossRef]

- Williams, I.D.; Couch, C.; Beijbom, O.; Oliver, T.; Vargas-Angel, B.; Schumacher, B.; Brainard, R. Leveraging automated image analysis tools to transform our capacity to assess status and trends on coral reefs. Front. Mar. Sci. 2019, 6, 222. [Google Scholar] [CrossRef] [Green Version]

- Beijbom, O.; Edmunds, P.J.; Roelfsema, C.; Smith, J.; Kline, D.I.; Neal, B.P.; Dunlap, M.J.; Moriarty, V.; Fan, T.Y.; Tan, C.J.; et al. Towards automated annotation of benthic survey images: Variability of human experts and operational modes of automation. PLoS ONE 2015, 10, e0130312. [Google Scholar] [CrossRef] [PubMed]

- Zurowietz, M.; Langenkämper, D.; Hosking, B.; Ruhl, H.A.; Nattkemper, T.W. MAIA—A machine learning assisted image annotation method for environmental monitoring and exploration. PLoS ONE 2018, 13, e0207498. [Google Scholar] [CrossRef] [PubMed]

- Mahmood, A.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F.; Hovey, R.; Kendrick, G.; Fisher, R.B. Automatic Annotation of Coral Reefs using Deep Learning. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–5. [Google Scholar]

- Modasshir, M.; Rekleitis, I. Enhancing Coral Reef Monitoring Utilizing a Deep Semi-Supervised Learning Approach. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 23–27 May 2020; pp. 1874–1880. [Google Scholar]

- King, A.; Bhandarkar, S.M.; Hopkinson, B.M. A comparison of deep learning methods for semantic segmentation of coral reef survey images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1475–1483. [Google Scholar]

- Yu, X.; Ouyang, B.; Principe, J.C.; Farrington, S.; Reed, J.; Li, Y. Weakly supervised learning of point-level annotation for coral image segmentation. In Proceedings of the OCEANS 2019 MTS/IEEE Seattle, Seattle, WA, USA, 27–31 October 2019. [Google Scholar]

- Wang, S.; Chen, W.; Xie, S.M.; Azzari, G.; Lobell, D.B. Weakly supervised deep learning for segmentation of remote sensing imagery. Remote Sens. 2020, 12, 207. [Google Scholar] [CrossRef] [Green Version]

- Xu, L. Deep Learning for Image Classification and Segmentation with Scarce Labelled Data. Doctoral Thesis, University of Western Australia, Crawley, Australia, 2021. [Google Scholar]

- Yu, X.; Ouyang, B.; Principe, J.C. Coral image segmentation with point-supervision via latent dirichlet allocation with spatial coherence. J. Mar. Sci. Eng. 2021, 9, 157. [Google Scholar] [CrossRef]

- Alonso, I.; Yuval, M.; Eyal, G.; Treibitz, T.; Murillo, A.C. CoralSeg: Learning coral segmentation from sparse annotations. J. Field Robot. 2019, 36, 1456–1477. [Google Scholar] [CrossRef]

- Prado, E.; Rodríguez-Basalo, A.; Cobo, A.; Ríos, P.; Sánchez, F. 3D fine-scale terrain variables from underwater photogrammetry: A new approach to benthic microhabitat modeling in a circalittoral Rocky shelf. Remote Sens. 2020, 12, 2466. [Google Scholar] [CrossRef]

- Song, H.; Mehdi, S.R.; Zhang, Y.; Shentu, Y.; Wan, Q.; Wang, W.; Raza, K.; Huang, H. Development of coral investigation system based on semantic segmentation of single-channel images. Sensors 2021, 21, 1848. [Google Scholar] [CrossRef]

- Akkaynak, D.; Treibitz, T. Sea-THRU: A method for removing water from underwater images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; Volume 2019-June, pp. 1682–1691. [Google Scholar]

- Pavoni, G.; Corsini, M.; Callieri, M.; Fiameni, G.; Edwards, C.; Cignoni, P. On improving the training of models for the semantic segmentation of benthic communities from orthographic imagery. Remote Sens. 2020, 12, 3106. [Google Scholar] [CrossRef]

- Hongo, C.; Kiguchi, M. Assessment to 2100 of the effects of reef formation on increased wave heights due to intensified tropical cyclones and sea level rise at Ishigaki Island, Okinawa, Japan. Coast. Eng. J. 2021, 63, 216–226. [Google Scholar] [CrossRef]

- GoPro Hero3 + (Black Edition) Specs. Available online: https://www.cnet.com/products/gopro-hero3-plus-black-edition/specs/ (accessed on 20 August 2021).

- Sirmaçek, B.; Ünsalan, C. Damaged building detection in aerial images using shadow information. In Proceedings of the 4th International Conference on Recent Advances Space Technologies, Istanbul, Turkey, 11–13 June 2009; pp. 249–252. [Google Scholar]

- Chen, W.; Hu, X.; Chen, W.; Hong, Y.; Yang, M. Airborne LiDAR remote sensing for individual tree forest inventory using trunk detection-aided mean shift clustering techniques. Remote Sens. 2018, 10, 1078. [Google Scholar] [CrossRef] [Green Version]

- Basar, S.; Ali, M.; Ochoa-Ruiz, G.; Zareei, M.; Waheed, A.; Adnan, A. Unsupervised color image segmentation: A case of RGB histogram based K-means clustering initialization. PLoS ONE 2020, 15, e0240015. [Google Scholar] [CrossRef] [PubMed]

- Chang, Z.; Du, Z.; Zhang, F.; Huang, F.; Chen, J.; Li, W.; Guo, Z. Landslide susceptibility prediction based on remote sensing images and GIS: Comparisons of supervised and unsupervised machine learning models. Remote Sens. 2020, 12, 502. [Google Scholar] [CrossRef] [Green Version]

- Khairudin, N.A.A.; Rohaizad, N.S.; Nasir, A.S.A.; Chin, L.C.; Jaafar, H.; Mohamed, Z. Image segmentation using k-means clustering and otsu’s thresholding with classification method for human intestinal parasites. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 16–17 September 2020; Volume 864. [Google Scholar]

- Alam, M.S.; Rahman, M.M.; Hossain, M.A.; Islam, M.K.; Ahmed, K.M.; Ahmed, K.T.; Singh, B.C.; Miah, M.S. Automatic human brain tumor detection in mri image using template-based k means and improved fuzzy c means clustering algorithm. Big Data Cogn. Comput. 2019, 3, 27. [Google Scholar] [CrossRef] [Green Version]

- Luo, L.; Bachagha, N.; Yao, Y.; Liu, C.; Shi, P.; Zhu, L.; Shao, J.; Wang, X. Identifying linear traces of the Han Dynasty Great Wall in Dunhuang Using Gaofen-1 satellite remote sensing imagery and the hough transform. Remote Sens. 2019, 11, 2711. [Google Scholar] [CrossRef] [Green Version]

- Xu, S.; Liao, Y.; Yan, X.; Zhang, G. Change detection in SAR images based on iterative Otsu. Eur. J. Remote Sens. 2020, 53, 331–339. [Google Scholar] [CrossRef]

- Yu, Y.; Bao, Y.; Wang, J.; Chu, H.; Zhao, N.; He, Y.; Liu, Y. Crop row segmentation and detection in paddy fields based on treble-classification otsu and double-dimensional clustering method. Remote Sens. 2021, 13, 901. [Google Scholar] [CrossRef]

- Srinivas, C.; Prasad, M.; Sirisha, M. Remote Sensing Image Segmentation using OTSU Algorithm. Int. J. Comput. Appl. 2019, 178, 46–50. [Google Scholar]

- Wiharto, W.; Suryani, E. The comparison of clustering algorithms K-means and fuzzy C-means for segmentation retinal blood vessels. Acta Inform. Med. 2020, 28, 42–47. [Google Scholar] [CrossRef]

- Yan, W.; Shi, S.; Pan, L.; Zhang, G.; Wang, L. Unsupervised change detection in SAR images based on frequency difference and a modified fuzzy c-means clustering. Int. J. Remote Sens. 2018, 39, 3055–3075. [Google Scholar] [CrossRef]

- Lei, T.; Jia, X.; Zhang, Y.; He, L.; Meng, H.; Nandi, A.K. Significantly Fast and Robust Fuzzy C-Means Clustering Algorithm Based on Morphological Reconstruction and Membership Filtering. IEEE Trans. Fuzzy Syst. 2018, 26, 3027–3041. [Google Scholar] [CrossRef] [Green Version]

- Ghaffari, R.; Golpardaz, M.; Helfroush, M.S.; Danyali, H. A fast, weighted CRF algorithm based on a two-step superpixel generation for SAR image segmentation. Int. J. Remote Sens. 2020, 41, 3535–3557. [Google Scholar] [CrossRef]

- Lei, T.; Jia, X.; Zhang, Y.; Liu, S.; Meng, H.; Nandi, A.K. Superpixel-Based Fast Fuzzy C-Means Clustering for Color Image Segmentation. IEEE Trans. Fuzzy Syst. 2019, 27, 1753–1766. [Google Scholar] [CrossRef] [Green Version]

- Shang, R.; Peng, P.; Shang, F.; Jiao, L.; Shen, Y.; Stolkin, R. Semantic segmentation for sar image based on texture complexity analysis and key superpixels. Remote Sens. 2020, 12, 2141. [Google Scholar] [CrossRef]

- Liu, D.; Yu, J. Otsu method and K-means. In Proceedings of the 9th International Conference on Hybrid Intelligent Systems, Shenyang, China, 12–14 August 2009; Volume 1, pp. 344–349. [Google Scholar]

- Dallali, A.; El Khediri, S.; Slimen, A.; Kachouri, A. Breast tumors segmentation using Otsu method and K-means. In Proceedings of the 2018 4th International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Sousse, Tunisia, 21–24 March 2018. [Google Scholar] [CrossRef]

- Dubey, A.K.; Gupta, U.; Jain, S. Comparative study of K-means and fuzzy C-means algorithms on the breast cancer data. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 18–29. [Google Scholar] [CrossRef]

- Kumar, A.; Tiwari, A. A Comparative Study of Otsu Thresholding and K-means Algorithm of Image Segmentation. Int. J. Eng. Tech. Res. 2019, 9, 2454–4698. [Google Scholar] [CrossRef]

- Hassan, A.A.H.; Shah, W.M.; Othman, M.F.I.; Hassan, H.A.H. Evaluate the performance of K-Means and the fuzzy C-Means algorithms to formation balanced clusters in wireless sensor networks. Int. J. Electr. Comput. Eng. 2020, 10, 1515–1523. [Google Scholar] [CrossRef]

- Akkaynak, D.; Treibitz, T.; Shlesinger, T.; Tamir, R.; Loya, Y.; Iluz, D. What is the space of attenuation coefficients in underwater computer vision? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 568–577. [Google Scholar]

- Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619. [Google Scholar] [CrossRef] [Green Version]

- Bourmaud, G.; Mégret, R.; Giremus, A.; Berthoumieu, Y. Global motion estimation from relative measurements using iterated extended Kalman filter on matrix LIE groups. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014. [Google Scholar] [CrossRef]

- Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181. [Google Scholar] [CrossRef]

- Wang, S.; Kubota, T.; Siskind, J.M.; Wang, J. Salient closed boundary extraction with ratio contour. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 546–561. [Google Scholar] [CrossRef] [Green Version]

- Lorenzo-Valdés, M.; Sanchez-Ortiz, G.I.; Elkington, A.G.; Mohiaddin, R.H.; Rueckert, D. Segmentation of 4D cardiac MR images using a probabilistic atlas and the EM algorithm. Med. Image Anal. 2004, 8, 255–265. [Google Scholar] [CrossRef]

- Wang, Z. A New Approach for Segmentation and Quantification of Cells or Nanoparticles. IEEE Trans. Ind. Inform. 2016, 12, 962–971. [Google Scholar] [CrossRef]

- Hooshmand Moghaddam, V.; Hamidzadeh, J. New Hermite orthogonal polynomial kernel and combined kernels in Support Vector Machine classifier. Pattern Recognit. 2016, 60, 921–935. [Google Scholar] [CrossRef]

- Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30. [Google Scholar]

- Fan, P.; Lang, G.; Yan, B.; Lei, X.; Guo, P.; Liu, Z.; Yang, F. A method of segmenting apples based on gray-centered rgb color space. Remote Sens. 2021, 13, 1211. [Google Scholar] [CrossRef]

- Su, Y.; Gao, Y.; Zhang, Y.; Alvarez, J.M.; Yang, J.; Kong, H. An Illumination-Invariant Nonparametric Model for Urban Road Detection. IEEE Trans. Intell. Veh. 2019, 4, 14–23. [Google Scholar] [CrossRef]

| Benthic Habitat | F1-FF | F1-FR | F1-OS | F1-KM | IOU-FF | IOU-FR | IOU-OS | IOU-KM |
|---|---|---|---|---|---|---|---|---|
| AL | 0.37 | 0.65 | 0.71 | 0.74 | 0.59 | 0.64 | 0.66 | 0.69 |
| BR | 0.41 | 0.85 | 0.86 | 0.87 | 0.40 | 0.56 | 0.57 | 0.58 |
| BC | 0.38 | 0.65 | 0.66 | 0.70 | 0.48 | 0.58 | 0.58 | 0.60 |
| CO | 0.46 | 0.70 | 0.70 | 0.73 | 0.52 | 0.58 | 0.58 | 0.63 |
| SG | 0.63 | 0.72 | 0.74 | 0.76 | 0.61 | 0.67 | 0.70 | 0.72 |
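
The tables report F1 and IoU (IOU). For one category, let TP, FP, and FN be pixel counts in the predicted mask compared with the manually digitized reference. Then F1 = 2TP / (2TP + FP + FN) and IoU = TP / (TP + FP + FN). The following minimal sketch implements these per-class definitions only. It does not attempt to reproduce how per-image scores were averaged into the table values. The function name is hypothetical.

```python
import numpy as np


def f1_iou(pred, ref, cls):
    """F1 and IoU of one class, comparing a predicted label map with a reference map."""
    p = np.asarray(pred) == cls
    r = np.asarray(ref) == cls
    tp = np.sum(p & r)    # class pixels found
    fp = np.sum(p & ~r)   # pixels wrongly assigned to the class
    fn = np.sum(~p & r)   # class pixels missed
    denom = tp + fp + fn
    if denom == 0:        # class absent from both maps: count as perfect agreement
        return 1.0, 1.0
    return float(2 * tp / (2 * tp + fp + fn)), float(tp / denom)
```

For a single mask, the two scores are linked by IoU = F1 / (2 − F1). They therefore rank methods identically on any one image, and IoU never exceeds F1 there.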

| Benthic Habitat | F1 KM | F1 KMAc | F1 FFCr | F1 FRCr | F1 OSCr | F1 KMCr | IOU KM | IOU KMAc | IOU FFCr | IOU FRCr | IOU OSCr | IOU KMCr |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AL | 0.47 | 0.55 | 0.45 | 0.65 | 0.70 | 0.72 | 0.33 | 0.33 | 0.45 | 0.48 | 0.52 | 0.54 |
| BR | 0.76 | 0.77 | 0.42 | 0.78 | 0.77 | 0.84 | 0.41 | 0.39 | 0.35 | 0.40 | 0.39 | 0.46 |
| BC | 0.52 | 0.54 | 0.54 | 0.71 | 0.72 | 0.77 | 0.51 | 0.54 | 0.68 | 0.70 | 0.70 | 0.76 |
| CO | 0.51 | 0.57 | 0.55 | 0.72 | 0.72 | 0.75 | 0.52 | 0.52 | 0.68 | 0.72 | 0.72 | 0.74 |
| SG | 0.54 | 0.53 | 0.63 | 0.68 | 0.69 | 0.73 | 0.26 | 0.26 | 0.39 | 0.57 | 0.57 | 0.62 |

| Comparison Test | p-Value t-Test F1 | p-Value Wilcoxon Test F1 | p-Value t-Test IOU | p-Value Wilcoxon Test IOU | Comparison Results |
|---|---|---|---|---|---|
| KM vs. OS | 0.03 (h = 1) | 0.051 (h = 0) | 0.127 (h = 0) | 0.154 (h = 0) | Not significantly different |
| KM vs. FR | 0.006 (h = 1) | 0.004 (h = 1) | 0.037 (h = 1) | 0.042 (h = 1) | Significantly different |
| KM vs. FF | <0.001 (h = 1) | <0.001 (h = 1) | <0.001 (h = 1) | <0.001 (h = 1) | Significantly different |
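
The comparison above uses a paired t-test and a Wilcoxon signed-rank test. In the table, h = 1 marks rejection of the null hypothesis at the 5% level, which matches every entry where p < 0.05. The following standard-library sketch computes a paired t statistic and an exact two-sided Wilcoxon p-value. As toy input it uses only the five per-habitat F1 values of KM and FF from the first table. The samples behind the reported p-values are not given here, so this example does not reproduce them. With n = 5 and all differences of one sign, the exact Wilcoxon p-value is 2/32 = 0.0625, the smallest attainable at that sample size. The function names are hypothetical.

```python
import itertools
import math
import statistics


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched scores a and b."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(n)), n - 1


def wilcoxon_exact(a, b):
    """Two-sided exact Wilcoxon signed-rank p-value.

    Assumes no tied absolute differences; zero differences are dropped.
    """
    d = [x - y for x, y in zip(a, b) if x != y]
    n = len(d)
    rank = {v: i + 1 for i, v in enumerate(sorted(abs(x) for x in d))}
    w_plus = sum(rank[abs(x)] for x in d if x > 0)
    w = min(w_plus, n * (n + 1) // 2 - w_plus)
    # Under H0 all 2**n sign patterns are equally likely; count those at least as extreme.
    extreme = sum(
        1
        for signs in itertools.product((False, True), repeat=n)
        if sum(r for r, positive in zip(range(1, n + 1), signs) if positive) <= w
    )
    return min(1.0, 2 * extreme / 2**n)


# Toy input: per-habitat F1 of KM and FF from the first table (AL, BR, BC, CO, SG).
f1_km = [0.74, 0.87, 0.70, 0.73, 0.76]
f1_ff = [0.37, 0.41, 0.38, 0.46, 0.63]
t, df = paired_t(f1_km, f1_ff)
p_w = wilcoxon_exact(f1_km, f1_ff)
print(f"t = {t:.2f} (df = {df}), exact Wilcoxon p = {p_w}")
```

The t statistic can be compared with the two-sided 5% critical value for 4 degrees of freedom (about 2.776). `scipy.stats.ttest_rel` and `scipy.stats.wilcoxon` provide library versions of both tests, including p-values.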

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mohamed, H.; Nadaoka, K.; Nakamura, T. Automatic Semantic Segmentation of Benthic Habitats Using Images from Towed Underwater Camera in a Complex Shallow Water Environment. Remote Sens. 2022, 14, 1818. https://doi.org/10.3390/rs14081818
