3.1. Problem Formulation

Consider a general matrix representation of a point cloud with $N$ points and $K$ attributes,

$$P = [f_1, f_2, \ldots, f_K] = [p_1, p_2, \ldots, p_N]^T \in \mathbb{R}^{N \times K},$$

where $f_i \in \mathbb{R}^{N}$ denotes the $i$th attribute and $p_j \in \mathbb{R}^{K}$ denotes the $j$th point. The actual value of $K$ varies according to the output feature size of each layer. The attributes contain 3D coordinates and context features. The context features can be the original input features or the extracted features. For instance, the input feature of a Velodyne LiDAR is the one-dimensional laser reflection intensity, whereas that of an RGB-D camera is the three-dimensional RGB color. The extracted features come from the neural network layers. To distinguish 3D coordinates from the other attributes, we store them in the first three columns of $P$ and call that submatrix $P_c \in \mathbb{R}^{N \times 3}$, while storing the rest in the last $K-3$ columns of $P$ and call that submatrix $P_f \in \mathbb{R}^{N \times (K-3)}$.

The target of the LS module in Figure 2 is to create a sampling matrix,

$$S = [s_1, s_2, \ldots, s_{N'}] \in \mathbb{R}^{N \times N'},$$

where column $s_i$ represents the $i$th sampled point and row $j$ of $S$ represents the $j$th point before sampling. $N$ is the original point count and $N'$ is the sampled point count. This matrix is used to select $N'$ ($N' < N$) points from the original points. Let the sampled point cloud be $P' \in \mathbb{R}^{N' \times K}$ and the original point cloud be $P \in \mathbb{R}^{N \times K}$. To achieve this, each column $s_i$ should be a one-hot vector, defined as

$$S_{j,i} \in \{0, 1\}.$$

There should be only one original point selected in each column $s_i$, defined as

$$\sum_{j=1}^{N} S_{j,i} = 1.$$

With the sampling matrix $S$ and the original point cloud $P$, we can acquire the new sampled point cloud $P'$ through matrix multiplication:

$$P' = S^T P. \tag{5}$$
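To make the selection by matrix multiplication concrete, the following is a minimal NumPy sketch. It assumes the sampling matrix has one column per sampled point and one row per original point, which matches the later statement that each column has the size of the original point set. The toy point cloud and the hand-picked indices are hypothetical.

```python
import numpy as np

# Hypothetical toy cloud: N = 5 points, K = 4 attributes (x, y, z, intensity).
P = np.arange(20, dtype=float).reshape(5, 4)
N = P.shape[0]

# Indices of the original points to keep (N' = 3); picked by hand for illustration.
selected = np.array([4, 0, 2])

# Build S with shape (N, N'): column i is one-hot at row selected[i].
S = np.zeros((N, len(selected)))
S[selected, np.arange(len(selected))] = 1.0

# Each column selects exactly one original point.
assert np.all(S.sum(axis=0) == 1.0)

# Sampling by matrix multiplication: P' = S^T P, shape (N', K).
P_sampled = S.T @ P
```

Because every column is one-hot, the product simply copies the chosen rows, so the sampled points are always a subset of the original points.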

The invariance properties of the sampling approach are pivotal. Since the intrinsic distribution of 3D points remains the same when we permute, shift, or rotate a point cloud, the outputs of the sampling strategy are also expected to remain unchanged. These invariance properties will be analyzed on the coordinate matrix alone because the features of each point (the remaining attribute columns) are not influenced by these transformations.

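Definition 1. A sampling strategy is permutation-invariant when, given an input coordinate matrix, the sampling result is unchanged for every permutation matrix of size $N$ applied to the input.

Definition 2. A sampling strategy is shift-invariant when, given an input coordinate matrix, the sampling result is unchanged for every shift matrix of size 3 applied to the input.

Definition 3. A sampling strategy is rotation-invariant when, given an input coordinate matrix, the sampling result is unchanged for every rotation matrix of size 3 applied to the input.

The softmax function is also permutation-invariant, which is already proved in [10].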

Lemma 1. Given an input matrix, for every permutation matrix of size $N$, permuting the input permutes the softmax output in the same way.

3.2. Network Architecture

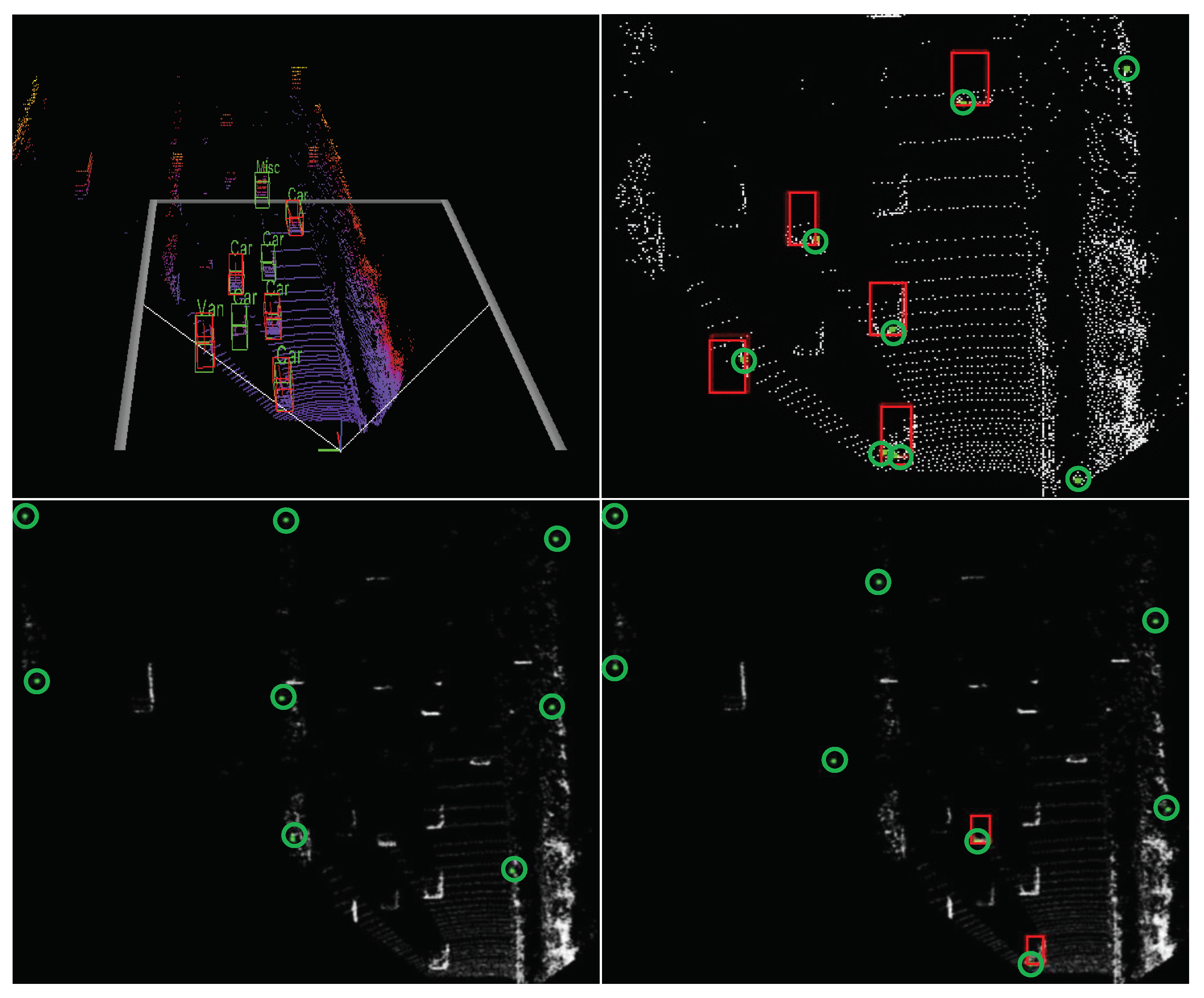

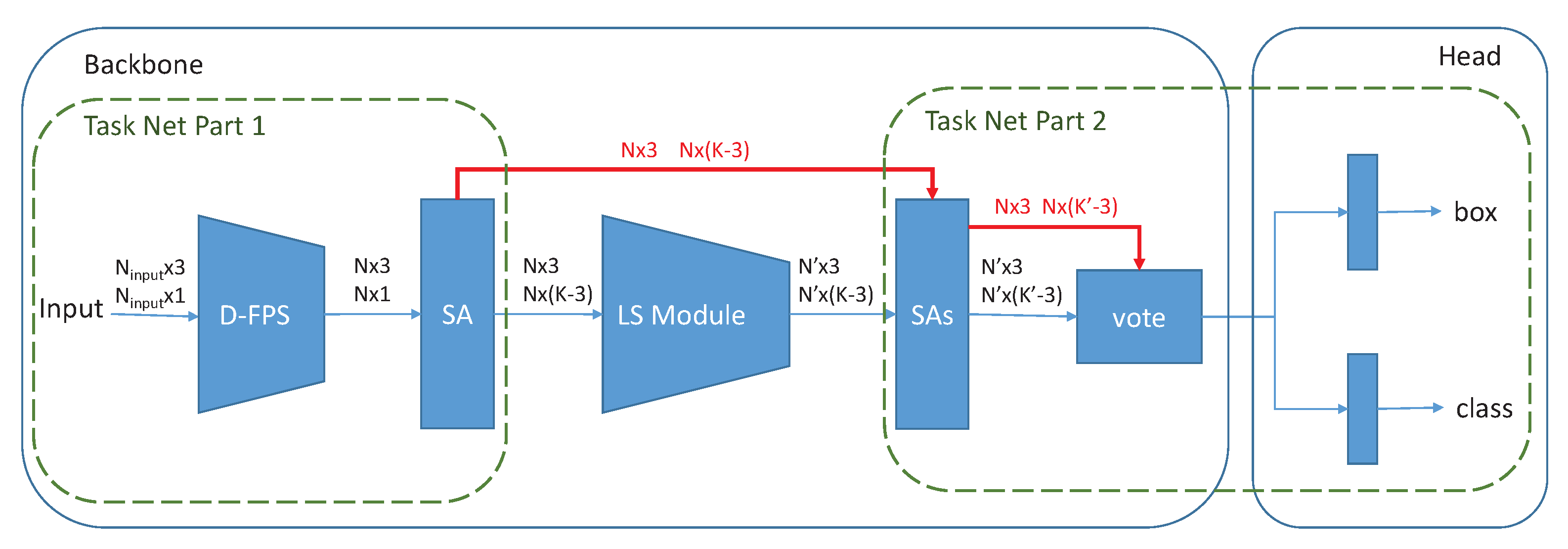

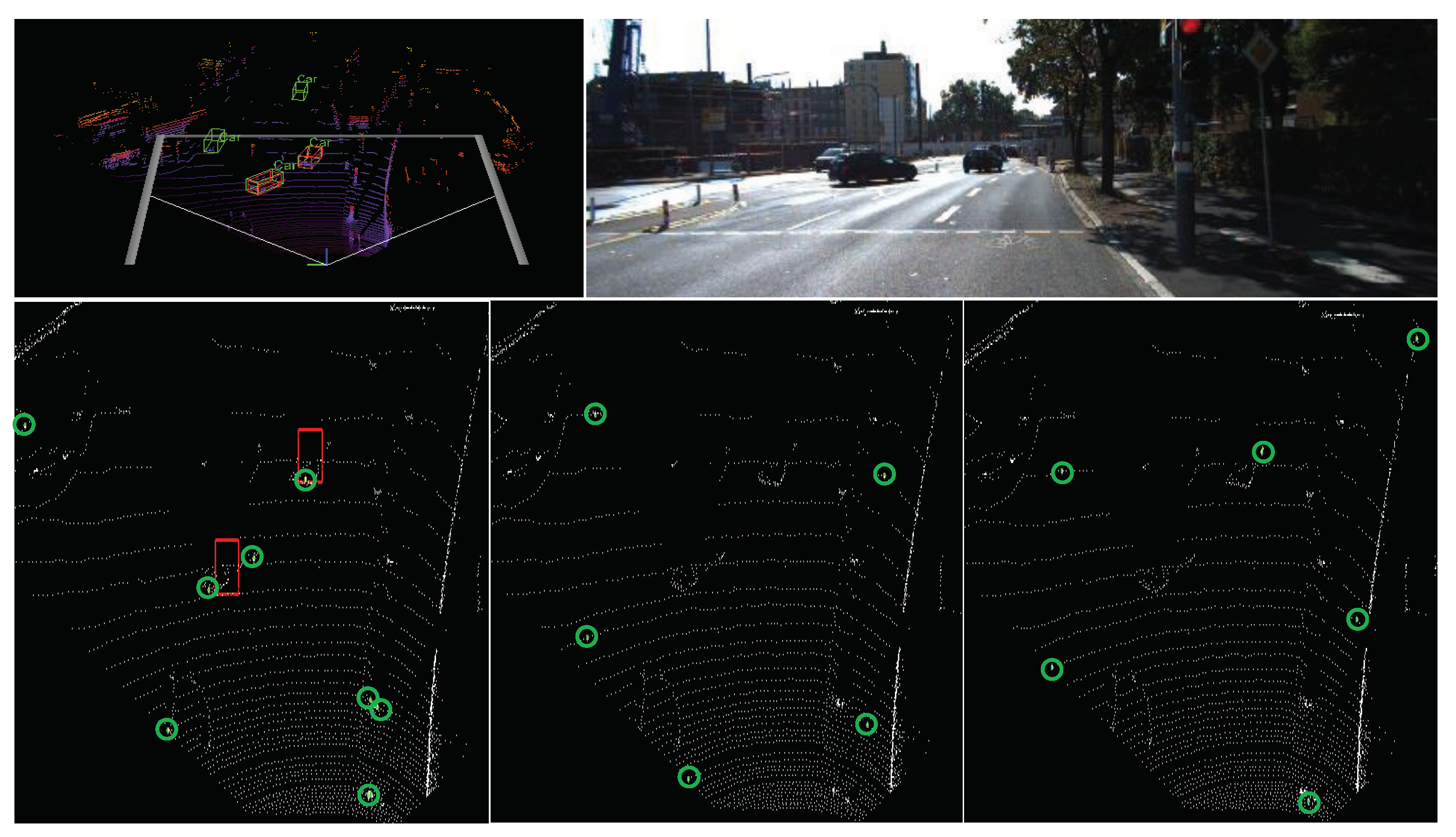

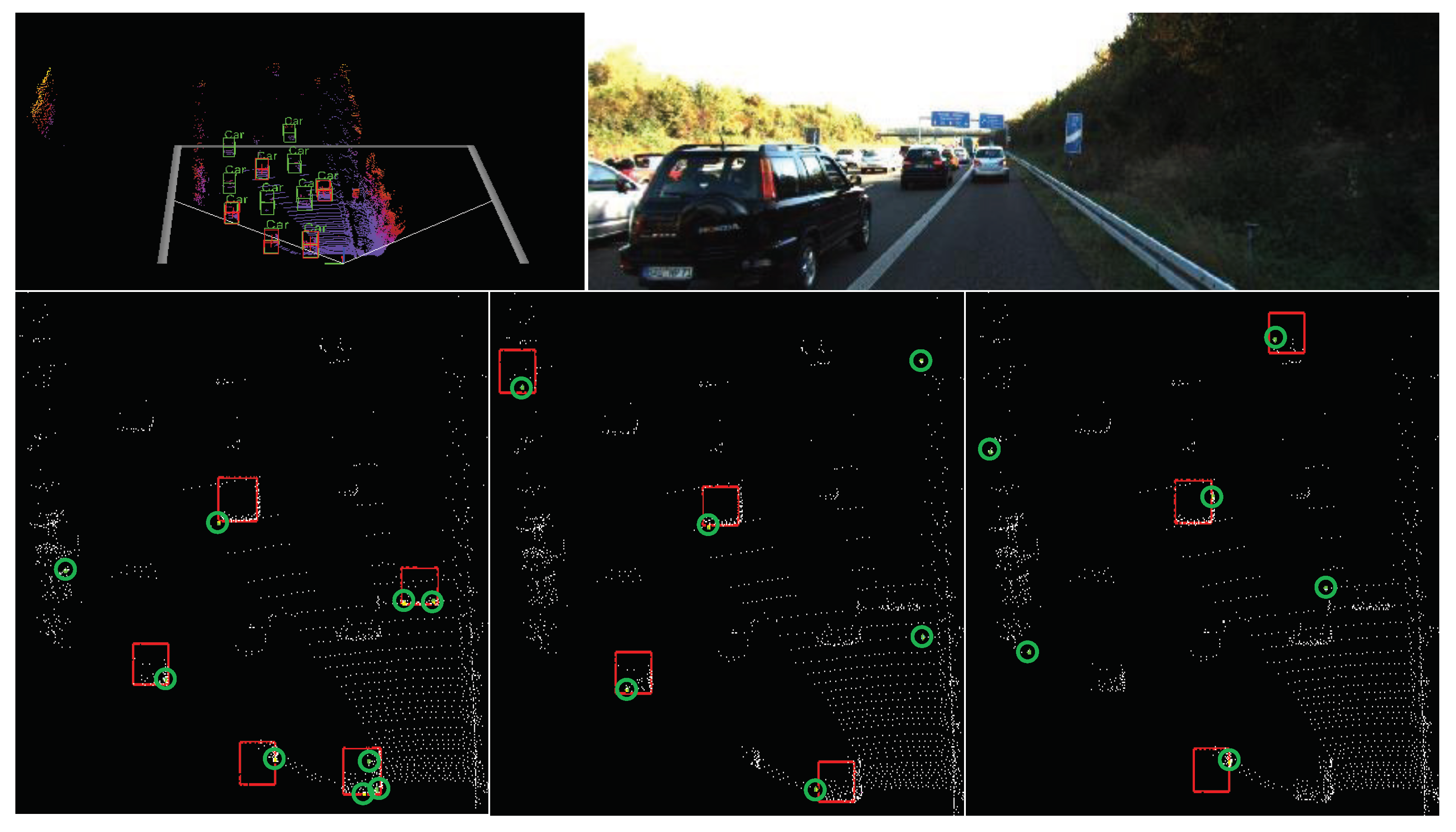

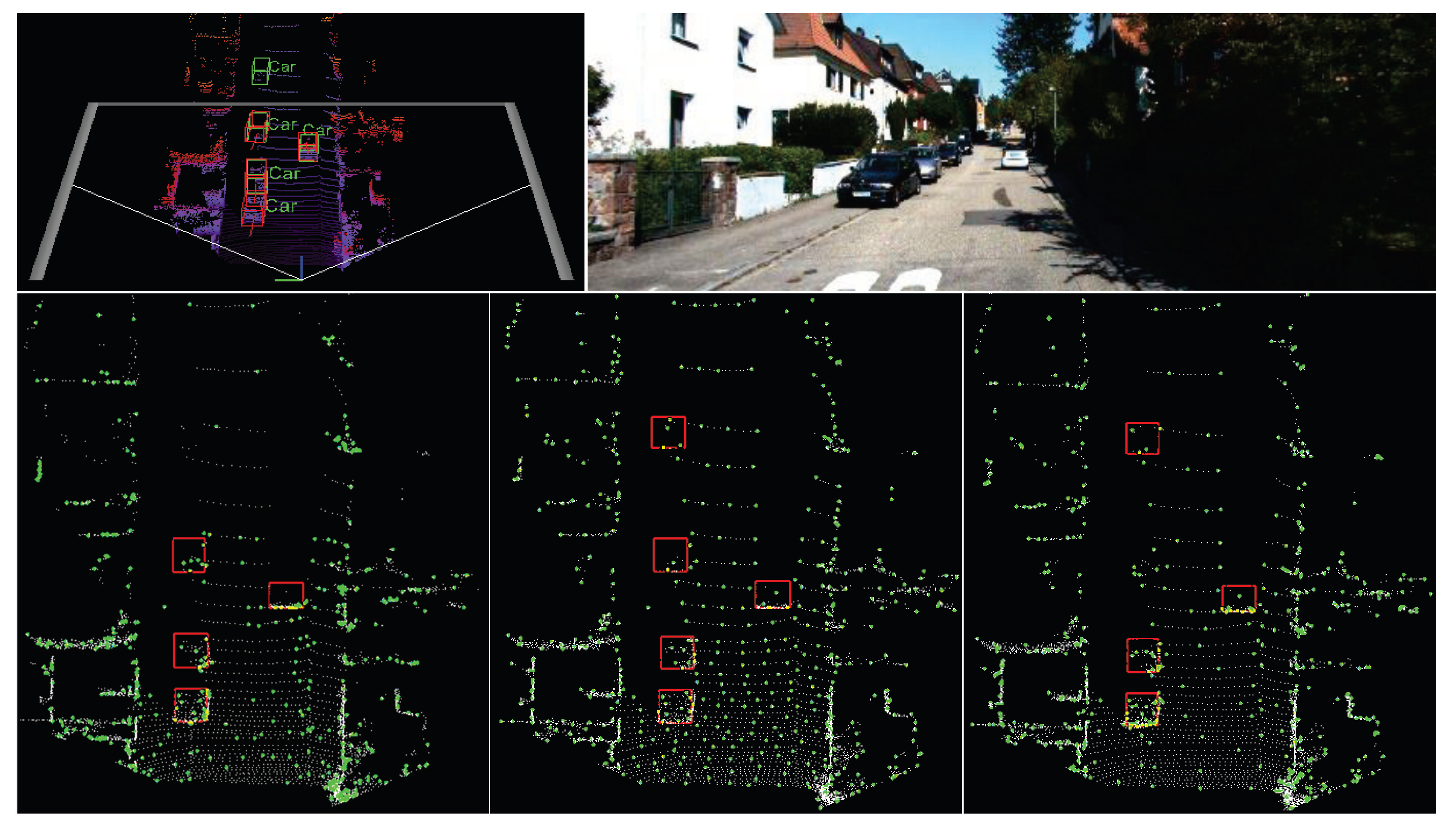

The entire network structure of LSNet is displayed in Figure 2. It is a point-based, single-stage 3D object detection network with a feature extraction backbone and a detection head. The backbone, similar to many other point-based methods [7,29,31,34], uses the multi-scale set abstraction (SA) proposed by PointNet++ [29] to gather neighborhood information and extract features, making it a PointNet-based model as well. Multiple SA modules are stacked to abstract high-level features and enlarge the receptive field. Inspired by VoteNet [31] and 3DSSD [7], a vote layer is added to improve network performance. For downsampling, FPS (i.e., D-FPS in 3DSSD [7]) first downsamples the raw points coarsely, and the LS module then samples the remaining points more finely. In addition, there are two 3D detection heads in the proposed model, one for box regression and the other for classification.

For the LiDAR point cloud, the inputs of the model consist of 3D coordinates and 1D laser reflection intensity, i.e., $K = 4$, with coordinates $P_c \in \mathbb{R}^{N \times 3}$ and intensity $P_f \in \mathbb{R}^{N \times 1}$. A predicted object in the KITTI 3D object detection dataset can be represented by a 3D bounding box $(c_x, c_y, c_z, h, w, l, \theta)$, including its center $(c_x, c_y, c_z)$, its size $(h, w, l)$, and its orientation $\theta$, which indicates the heading angle around the up-axis.

First, FPS based on 3D Euclidean distance is used to sample a subset of the raw points. Then, the vanilla multi-scale SA module is applied to extract low-level features, which, together with their coordinates, serve as the inputs of the LS module. Working from these intermediate features, the LS module generates the coordinates and features of the sampled point cloud. After several SA modules and a vote layer, the final features are fed into the detection head to predict the box and class of each object. Finally, non-maximum suppression (NMS), a post-processing procedure widely used in object detection that suppresses redundant bounding boxes in order of detection confidence, is applied to remove the redundant boxes.
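The FPS step that opens this pipeline can be sketched as follows. This is a minimal NumPy illustration of greedy farthest point sampling on Euclidean distance, not the 3DSSD implementation; the function name, the fixed starting index, and the random test cloud are assumptions made for the example.

```python
import numpy as np

def farthest_point_sampling(xyz, m, start=0):
    """Return indices of m points chosen greedily to be far from each other."""
    chosen = np.empty(m, dtype=int)
    chosen[0] = start
    # Distance from every point to its nearest already-chosen point.
    min_dist = np.linalg.norm(xyz - xyz[start], axis=1)
    for k in range(1, m):
        # Pick the point that is farthest from the current selection.
        chosen[k] = int(np.argmax(min_dist))
        d = np.linalg.norm(xyz - xyz[chosen[k]], axis=1)
        min_dist = np.minimum(min_dist, d)
    return chosen

rng = np.random.default_rng(0)
xyz = rng.normal(size=(100, 3))          # hypothetical raw coordinates
idx = farthest_point_sampling(xyz, 16)   # indices of the coarse subset
```

The selection depends only on coordinates and contains no learnable parameters, which is why the text treats it as a coarse first stage ahead of the learned LS module.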

According to PointNet++, the SA module has many 1D-convolution-like layers, which are composed of shared-MLP layers. For each point, the SA module groups the surrounding points within a specific radius and uses shared-MLP to extract the features. The box regression head and the classification head are both fully connected (FC) layers.

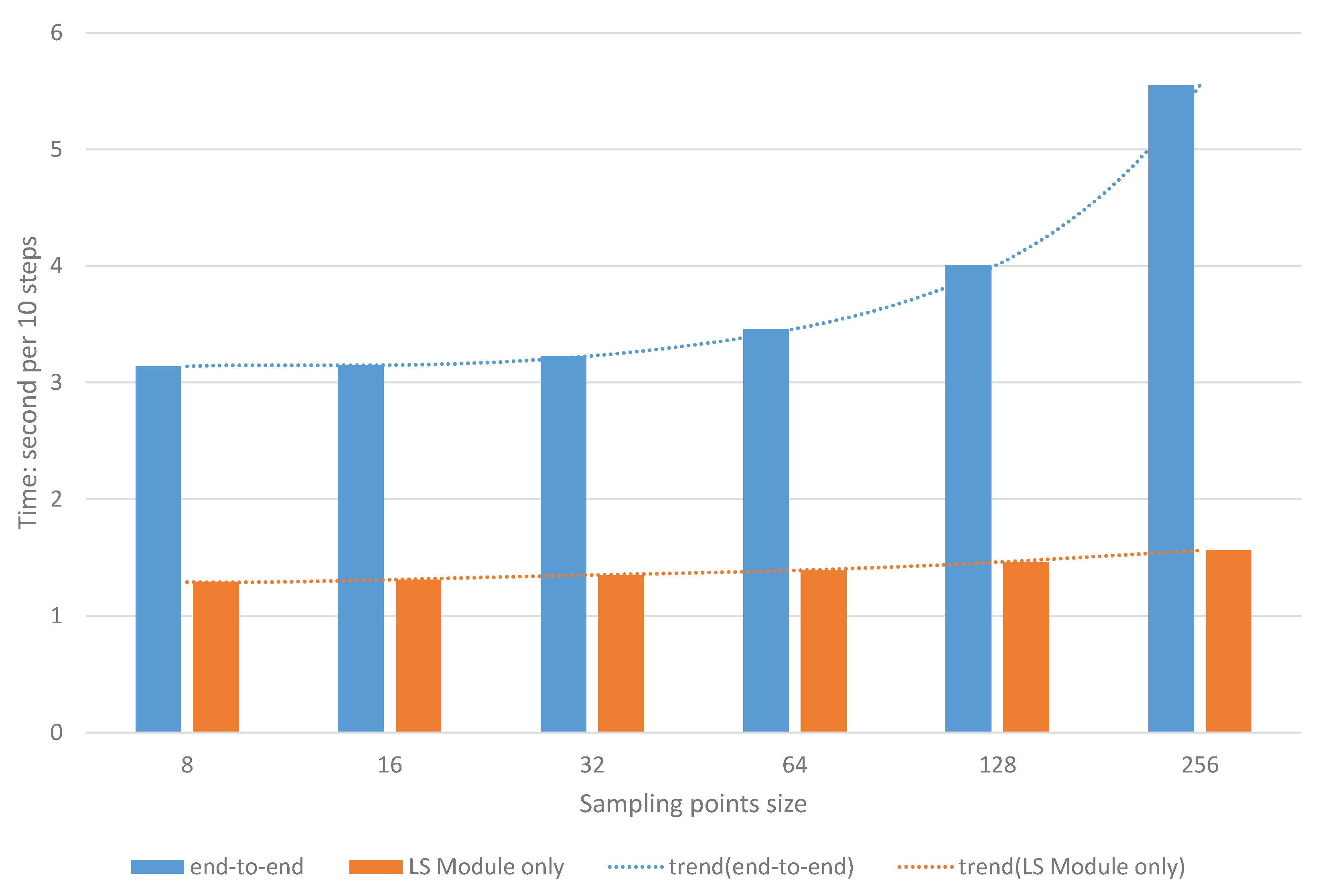

3.3. LS Module

The traditional sampling approaches are non-differentiable and task-agnostic, so they cannot be trained with a loss function. Since the sampling process is discrete, we need to convert it to a continuous problem to integrate the sampling operation smoothly into a neural network. Ref. [8] proposed S-Net and Ref. [9] proposed its variant SampleNet to ameliorate this shortcoming. These sampling strategies have several defects. First, they generate new point coordinates, which are not a subset of the original points. Second, they can only be placed at the beginning of the overall network, and the entire model cannot be trained end-to-end. Third, the sampling network extracts features from the coordinate inputs while the task network also extracts features from the raw inputs; this duplicated effort results in redundant extraction of low-level features. Finally, the sampling network is relatively complex and time-consuming, a problem that becomes more severe as the number of points grows. A sampling process that itself requires burdensome computation defeats its purpose. For these reasons, the discussed methods are not suitable for autonomous driving tasks.

To overcome such problems, the LS module was developed. As illustrated in Figure 3, the network architecture of the LS module has only a few layers, which keeps the complexity low. Rather than extracting features from scratch to create a sampling matrix, the LS module reuses the features already extracted by part 1 of the task network and outputs the sampling matrix based on them, which improves computational efficiency and avoids repeated extraction of the underlying features.

The input of the LS module is $P \in \mathbb{R}^{N \times K}$, which is the subset of the points sampled by FPS with the features extracted by the former SA module. First, a shared-MLP convolution layer is applied to obtain the local feature $F_l$ of each point,

$$F_l = f(P \mid W_1).$$

Function $f(\cdot \mid W)$ represents the shared-MLP convolution layer with its weights $W$. Then, a symmetric feature-wise max pooling operation is used to obtain a global feature vector $F_g$,

$$F_g = \mathrm{MaxPool}(F_l).$$

We then concatenate the global features with the local features of each point, pass them to the shared-MLP convolution layers, and use the sigmoid function to generate a matrix $\hat{S}$, defined as

$$\hat{S} = \mathrm{sigmoid}\big(f(\mathrm{concat}(F_l, F_g) \mid W_2)\big).$$

$\hat{S}$ has the same shape as the sampling matrix $S$. It is the output of the LS module and an intermediate value from which $S$ is obtained.
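The forward pass just described (shared MLP, feature-wise max pooling, concatenation, shared MLP, sigmoid) can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the layer widths, the single-layer MLPs, the ReLU activation, and the random weights standing in for learned parameters are all hypothetical and not taken from the paper.

```python
import numpy as np

def shared_mlp(x, w, b):
    """Apply the same linear layer + ReLU to every point (row) of x."""
    return np.maximum(x @ w + b, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)
N, K, C, N_out = 8, 4, 16, 3            # hypothetical sizes; N_out plays the role of N'
P = rng.normal(size=(N, K))             # input points with their features

# Small random weights stand in for learned parameters.
w1, b1 = 0.1 * rng.normal(size=(K, C)), np.zeros(C)
w2, b2 = 0.1 * rng.normal(size=(2 * C, N_out)), np.zeros(N_out)

F_local = shared_mlp(P, w1, b1)                     # (N, C) per-point local features
F_global = F_local.max(axis=0)                      # (C,) feature-wise max pooling
F_cat = np.concatenate(
    [F_local, np.tile(F_global, (N, 1))], axis=1)   # (N, 2C) local + global per point
S_hat = sigmoid(F_cat @ w2 + b2)                    # (N, N') scores in (0, 1)
```

Each column of `S_hat` holds one score per original point, so it already has the shape required of a sampling matrix even though its columns are not yet one-hot.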

To sample data based on the LS module output $\hat{S}$, the sampling matrix is further adjusted to $S$ (used in the inference stage) or $\tilde{S}$ (used in the training stage). $S$ can be computed as

$$S = \mathrm{one\_hot}\big(\mathrm{argmax}(\hat{S})\big), \tag{14}$$

where the $\mathrm{argmax}$ function and the $\mathrm{one\_hot}$ function are applied to each column of $\hat{S}$, i.e., $\hat{s}_i$ with the shape of the original point count $N$. Since $S$ has $N'$ columns, corresponding to $N'$ sampled points, and each column of $S$ is a one-hot vector, Equation (5) can be used to obtain the final sampled points $P'$.
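As a small worked example of the inference-time selection, the sketch below applies a column-wise argmax followed by one-hot encoding and then samples by matrix multiplication as in Equation (5). The score matrix and the points are hypothetical values chosen so the result can be checked by hand, and the helper name is an assumption.

```python
import numpy as np

def hard_sampling_matrix(S_hat):
    """Column-wise one_hot(argmax(.)): returns a matrix with one-hot columns."""
    N, N_out = S_hat.shape
    winners = S_hat.argmax(axis=0)        # selected original point for each column
    S = np.zeros((N, N_out))
    S[winners, np.arange(N_out)] = 1.0
    return S

# Hypothetical LS output with N = 3 original points and N' = 2 sampled points.
S_hat = np.array([[0.1, 0.7],
                  [0.9, 0.2],
                  [0.3, 0.6]])
P = np.array([[0.0, 0.0, 0.0],
              [1.0, 1.0, 1.0],
              [2.0, 2.0, 2.0]])

S = hard_sampling_matrix(S_hat)
P_sampled = S.T @ P                       # picks row 1, then row 0, of P
```

Nothing prevents two columns from choosing the same row, which is the duplicate-sampling issue that the sampling loss in Section 3.5 addresses.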

However, the $\mathrm{argmax}$ operation and the $\mathrm{one\_hot}$ operation are not differentiable, indicating that Equation (14) cannot be used in the training stage to enable backpropagation. Inspired by the Gumbel-softmax trick [10,37,38], softmax is applied to each column of the LS module output $\hat{S}$ with parameter $\tau$ to approximate the $\mathrm{argmax}$ operation. The generated sampling matrix is called $\tilde{S}$,

$$\tilde{s}_i = \mathrm{softmax}(\hat{s}_i / \tau), \tag{15}$$

where parameter $\tau$ is the annealing temperature. As $\tau \to 0$, each column in $\tilde{S}$ degenerates into a one-hot distribution, as in $S$. When the distribution of each column in $\tilde{S}$ does not degenerate to a one-hot distribution, the features of the sampled points $P'_f$ are not the same as before: they are weighted mixtures of the original features. $P'_f$ is computed by the matrix multiplication with $\tilde{S}$,

$$P'_f = \tilde{S}^T P_f.$$
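The effect of the annealing temperature can be illustrated with a short NumPy sketch. It applies a column-wise softmax with temperature to a hypothetical score matrix and mixes hypothetical per-point features with the result. The max-subtraction is a standard numerical-stability step and is not something the text specifies.

```python
import numpy as np

def column_softmax(S_hat, tau):
    """Softmax over each column of S_hat with temperature tau."""
    z = S_hat / tau
    z = z - z.max(axis=0, keepdims=True)   # subtract column max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=0, keepdims=True)

S_hat = np.array([[0.1, 0.7],
                  [0.9, 0.2],
                  [0.3, 0.6]])             # hypothetical LS output
F = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0]])                 # hypothetical per-point features

for tau in (1.0, 0.1, 0.01):
    S_soft = column_softmax(S_hat, tau)
    F_sampled = S_soft.T @ F               # soft mixture of the original features
    print(tau, S_soft.max(axis=0).round(3))
```

At a high temperature every sampled feature is a blend of all original features; as the temperature falls, the largest weight in each column approaches 1 and the blend approaches a copy of a single original point's features.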

Nevertheless, it is desirable to keep the coordinates of the sampled points the same as they were previously. So, the $\mathrm{argmax}$ operation and the $\mathrm{one\_hot}$ operation are applied to the softmax-relaxed matrix $\tilde{S}$ to generate the sampling matrix $S$. Then, the coordinates of the sampled points $P'_c$ are computed as

$$P'_c = S^T P_c.$$

Additionally, before Equation (15), a random relaxation trick is employed to further boost the performance of the model. It is governed by a decay rate $r$ and an upper boundary on the random number. This upper boundary decays exponentially with the training step and eventually approaches 0, at which point there is no relaxation.

In effect, the sampling matrix introduces an attention mechanism to the model. Each column of the softmax-relaxed matrix $\tilde{S}$ indicates the attention of a newly generated sampling point on the old points, so the new features $P'_f$ contain the point-wise attention on the old points. Since each column of the hard sampling matrix $S$ is a one-hot distribution, the coordinates of the sampled points calculated with $S$ place all of their attention on a single old point.

In all the above functions, the shared-MLP function and the sigmoid function are point-wise operations, while the random relaxation is an element-wise operation. In addition, the max pooling function operates along the feature dimension and selects the maximum value of each feature over all points. This means these functions do not change the permutation equivariance of the LS module. Separately, Lemma 1 shows the permutation invariance of softmax. Thus, our proposed sampling method is permutation-invariant (Definition 1).
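The permutation argument can be checked numerically on a toy version of the pipeline. The sketch below is an assumption-laden stand-in for the LS module (single-layer shared MLPs, random weights, no random relaxation): it verifies that permuting the input rows permutes the score rows identically, so the set of selected points does not change.

```python
import numpy as np

rng = np.random.default_rng(1)
N, K, C, N_out = 10, 4, 8, 3              # hypothetical sizes
w1 = 0.1 * rng.normal(size=(K, C))        # random stand-ins for learned weights
w2 = 0.1 * rng.normal(size=(2 * C, N_out))

def ls_scores(P):
    f_local = np.maximum(P @ w1, 0.0)                    # point-wise shared MLP
    f_global = f_local.max(axis=0, keepdims=True)        # symmetric max pooling
    f_cat = np.hstack([f_local, np.repeat(f_global, len(P), axis=0)])
    return 1.0 / (1.0 + np.exp(-(f_cat @ w2)))           # point-wise sigmoid

def sample(P):
    winners = ls_scores(P).argmax(axis=0)                # one original point per column
    return P[winners]

P = rng.normal(size=(N, K))
perm = rng.permutation(N)

# Permuting the input rows permutes the score rows in the same way ...
assert np.allclose(ls_scores(P[perm]), ls_scores(P)[perm])
# ... so the sampled points themselves are unchanged.
assert np.allclose(sample(P[perm]), sample(P))
```

The check relies on the scores having no exact ties within a column; with ties, argmax would break them by row order and the selection could depend on the permutation.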

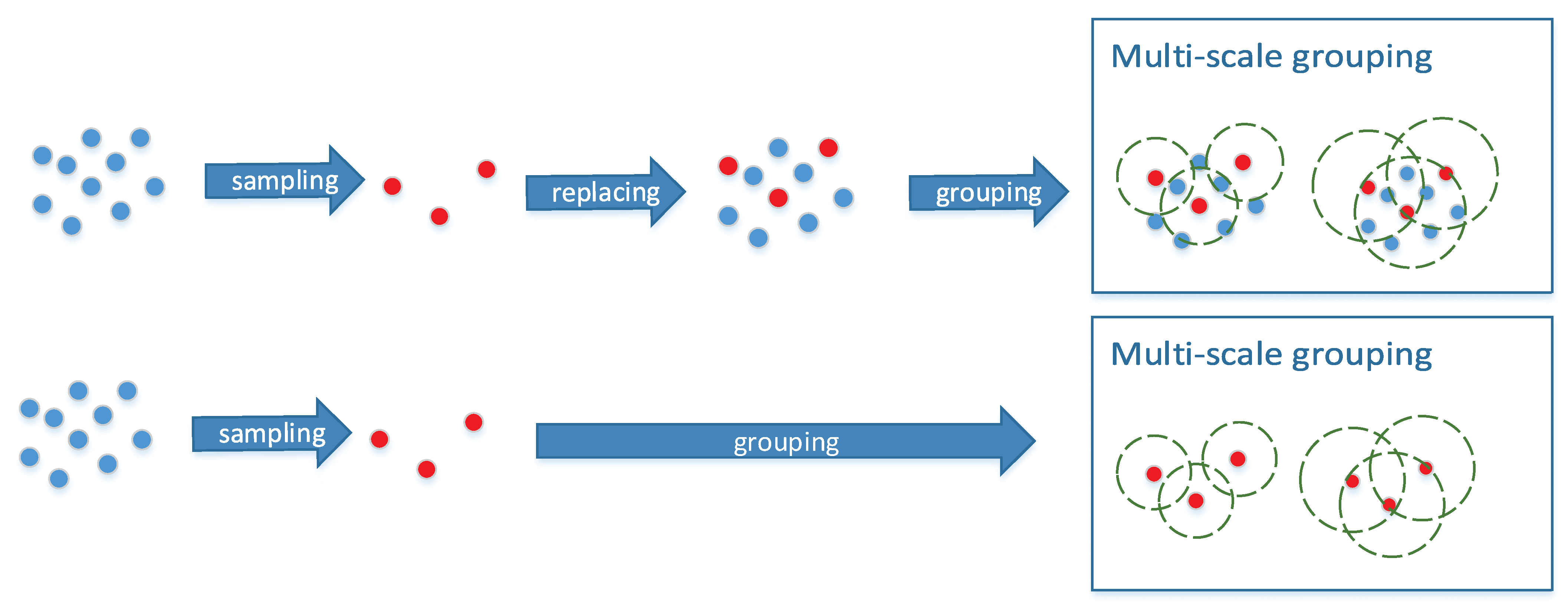

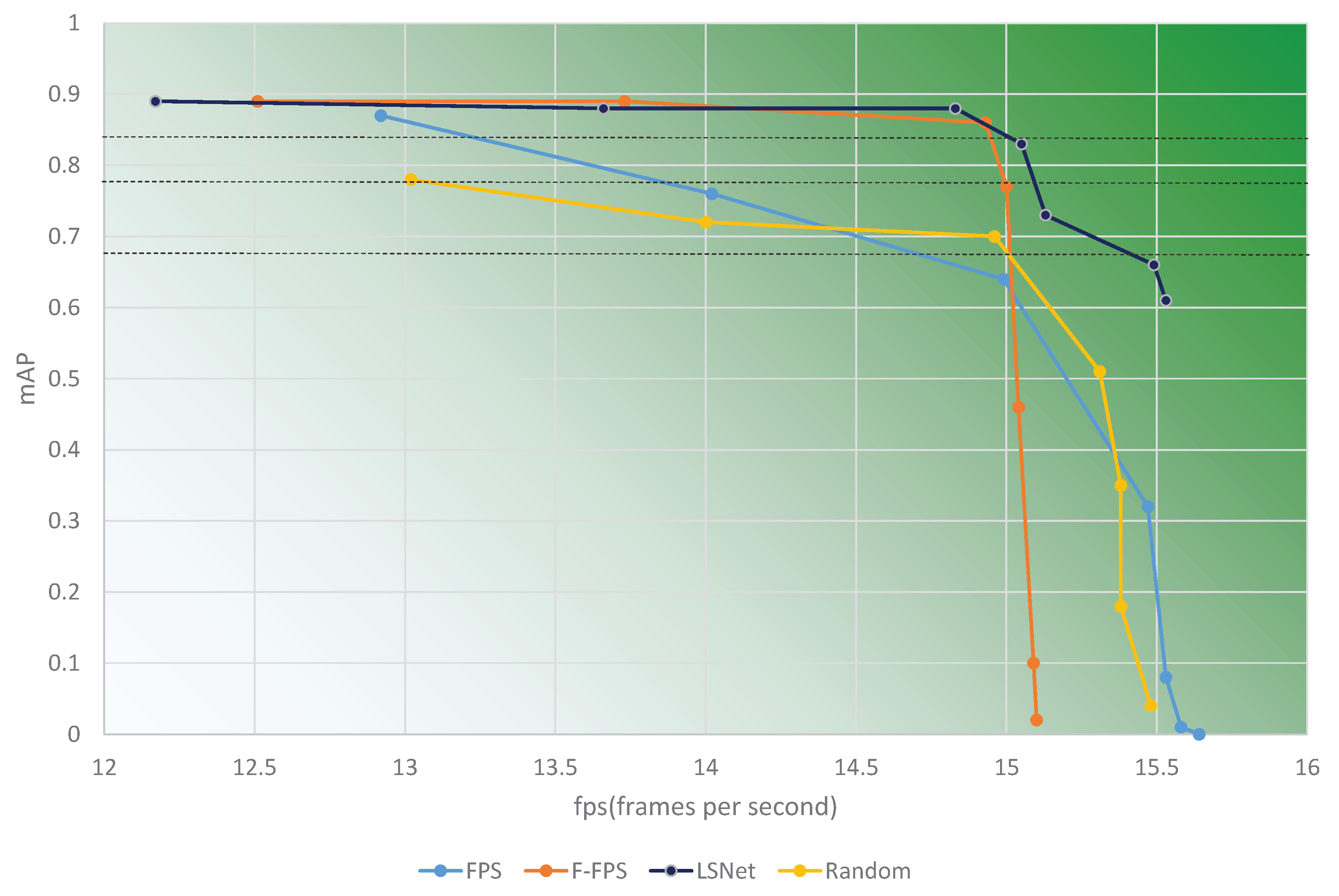

3.4. SA Module

The set abstraction procedure proposed by Qi et al. in PointNet++, which is widely used in many point-based models, can be roughly divided into a sampling layer, a grouping layer, and a PointNet layer. To obtain better coverage of the entire point set, PointNet++ uses FPS in the sampling layer to select grouping center points from the N input points. Based on the coordinates of these center points, the model gathers Q points within a specified radius to form each group. In the PointNet layer, a mini-PointNet (composed of multiple shared-MLP layers) encodes the local region patterns of each group into feature vectors. In this paper, the grouping layer and the PointNet layer are retained in our SA module. The LS module is used instead of FPS to generate the subset of points serving as grouping center points, while the grouping layer is adjusted to fit our learned sampling model.

As shown in Figure 4, multi-scale grouping is used to group the points around each center point at different scales. Features at different scales are learned by different shared-MLP layers and then concatenated to form a multi-scale feature. If the points sampled by the LS module are viewed as ball centers and the ball grouping process is performed on the original dataset of N points, as in PointNet++, the entire network cannot be trained through backpropagation, since the outputs of the LS module are not passed to the following network explicitly. Two methods have been developed to address this issue. The first method is to ignore the old dataset before sampling and instead use the newly sampled dataset as both the grouping center points and the grouping pool. The second method is to use the newly sampled dataset as the grouping center points and replace the points of the old dataset with the new points in their corresponding positions. With the second method, it is possible to concatenate the features of the newly sampled points to each group and pass the outputs (new points) of the LS module to the network.

Within each group, the local relative location of each point with respect to the center point is used to replace its absolute location. Consequently, the extracted features are not affected by shifting or rotating the point cloud. It follows that the inputs to the LS module remain the same under the shift and rotation operations, which indicates that the proposed sampling method is shift-invariant (Definition 2) and rotation-invariant (Definition 3).
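The shift part of this argument can be illustrated with a small NumPy sketch of ball grouping in relative coordinates. It checks only translation, and the helper name, radius, and random cloud are assumptions made for the example rather than details from the paper.

```python
import numpy as np

def group_relative(xyz, center, radius):
    """Return the neighbours of `center` within `radius`, expressed relative to it."""
    dist = np.linalg.norm(xyz - center, axis=1)
    return xyz[dist <= radius] - center

rng = np.random.default_rng(2)
xyz = rng.uniform(-1.0, 1.0, size=(50, 3))   # hypothetical point coordinates
center = xyz[0]                              # one grouping center
shift = np.array([10.0, -5.0, 2.0])          # arbitrary translation of the whole cloud

local = group_relative(xyz, center, radius=0.8)
local_shifted = group_relative(xyz + shift, center + shift, radius=0.8)
```

Since both the neighbours and the center move by the same offset, the relative coordinates passed to the mini-PointNet agree up to floating-point rounding, which is what `np.allclose(local, local_shifted)` would confirm.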

3.5. Loss

Sampling loss. Unlike the D-FPS and F-FPS methods, the points in the subset generated by the LS module are not guaranteed to be unique, and a high duplicate rate wastes computation and fails to make full use of a limited sampling size. This problem becomes more severe as the sampling size decreases.

As illustrated in Equation (20), a sampling loss is introduced to reduce the duplicate rate and to sample unique points as far as possible. We accumulate each row of the softmax-relaxed sampling matrix $\tilde{S}$, i.e., $u_j = \sum_{i=1}^{N'} \tilde{S}_{j,i}$. $u_j$ represents the sampling value of the $j$th point in the original dataset $P$. The ideal case is that each point in $P$ is sampled 0 or 1 times. Since each column in $\tilde{S}$ sums to 1 and tends to be a one-hot distribution, the accumulation of $\tilde{S}$ should be near 0 or 1 if the point is not sampled more than once. Equation (20) is designed to control this issue. The closer the accumulation of $\tilde{S}$ is to 0 or 1, the smaller the loss.
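The row accumulation can be made concrete with a hypothetical example. The sketch below does not implement Equation (20); it only computes the per-point accumulation and a duplicate rate, defined here, as an assumption, as the fraction of sampled slots that repeat an already selected point.

```python
import numpy as np

# Hypothetical soft sampling matrix: N = 4 original points, N' = 3 sampled points.
# Every column sums to 1 and is close to one-hot.
S_soft = np.array([[0.90, 0.85, 0.05],
                   [0.05, 0.05, 0.05],
                   [0.03, 0.05, 0.85],
                   [0.02, 0.05, 0.05]])

row_sum = S_soft.sum(axis=1)             # how strongly each original point is sampled
winners = S_soft.argmax(axis=0)          # hard selection per column
duplicate_rate = 1.0 - len(np.unique(winners)) / len(winners)
```

Here the first original point accumulates a value well above 1 because two columns both concentrate on it, and the hard selection consequently contains one repeated point out of three. A loss that penalizes accumulations far from 0 or 1 would push one of those two columns toward a different point.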

Each row of the softmax-relaxed sampling matrix $\tilde{S}$ indicates the attention of an old point on the newly generated sampling points. If there are many high values in one row, this old point is highly relevant to more than one new point, and the features of those new points will be dominated by this old point when each column in $\tilde{S}$ tends to be a one-hot distribution. That is, these new points tend to be similar to the same old point, which leads to repeated sampling. However, we expect a variety of new sampling points. In short, we utilize Equation (20) to restrain the attention of each old point.

Task loss. In the 3D object detection task, the task loss consists of the 3D bounding box regression loss, the classification loss, and the vote loss. Each of these loss terms has its own balance weight.

Cross-entropy loss is used to calculate the classification loss, while the vote loss related to the vote layer is calculated as in VoteNet [31]. Additionally, the regression loss in the model is similar to the regression loss in 3DSSD [7]. The regression loss includes a distance regression loss, a size regression loss, an angle regression loss, and a corner loss. The smooth-$L_1$ loss is utilized for the distance and size regression losses, in which the targets are the offsets from the candidate points to their corresponding instance centers and the sizes of the corresponding instances, respectively. The angle regression loss contains an orientation classification loss and a residual prediction loss. The corner loss is the distance between the predicted eight corners and the assigned ground truth.
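For reference, the smooth-L1 loss used for the distance and size terms has the following standard elementwise form. The sketch assumes a transition threshold `beta` of 1.0, which the text does not specify, and the sample values are hypothetical.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    """Elementwise smooth-L1: quadratic for small errors, linear for large ones."""
    diff = np.abs(pred - target)
    return np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)

pred = np.array([0.2, 1.5, -3.0])     # hypothetical predicted offsets
target = np.zeros(3)                  # hypothetical regression targets
loss = smooth_l1(pred, target)
```

The quadratic region keeps gradients small near the target, while the linear region limits the influence of large residuals such as badly localized candidate points.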

Total loss. The overall loss is composed of the sampling loss and the task loss, with two weights adopted to balance these two losses.