Mapping Canopy Cover in African Dry Forests from the Combined Use of Sentinel-1 and Sentinel-2 Data: Application to Tanzania for the Year 2018

Abstract

1. Introduction

2. Study Area

3. Materials and Methods

3.1. Sentinel-1 and Sentinel-2 Layers

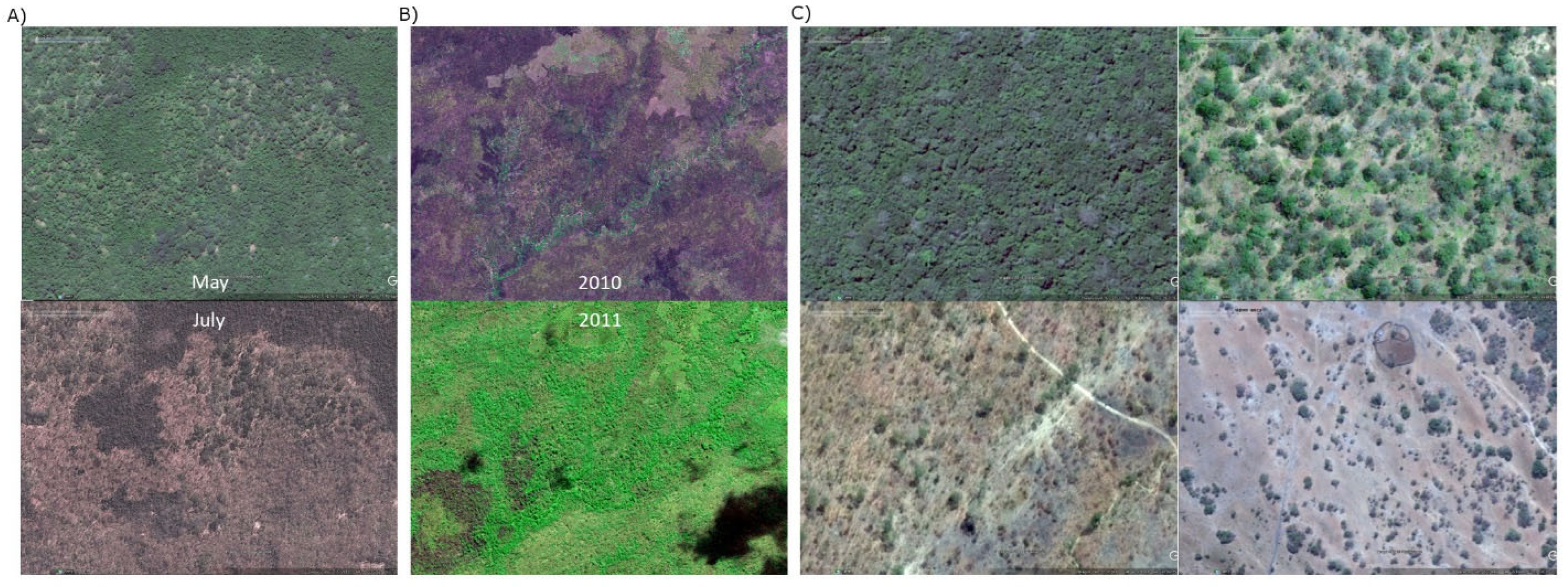

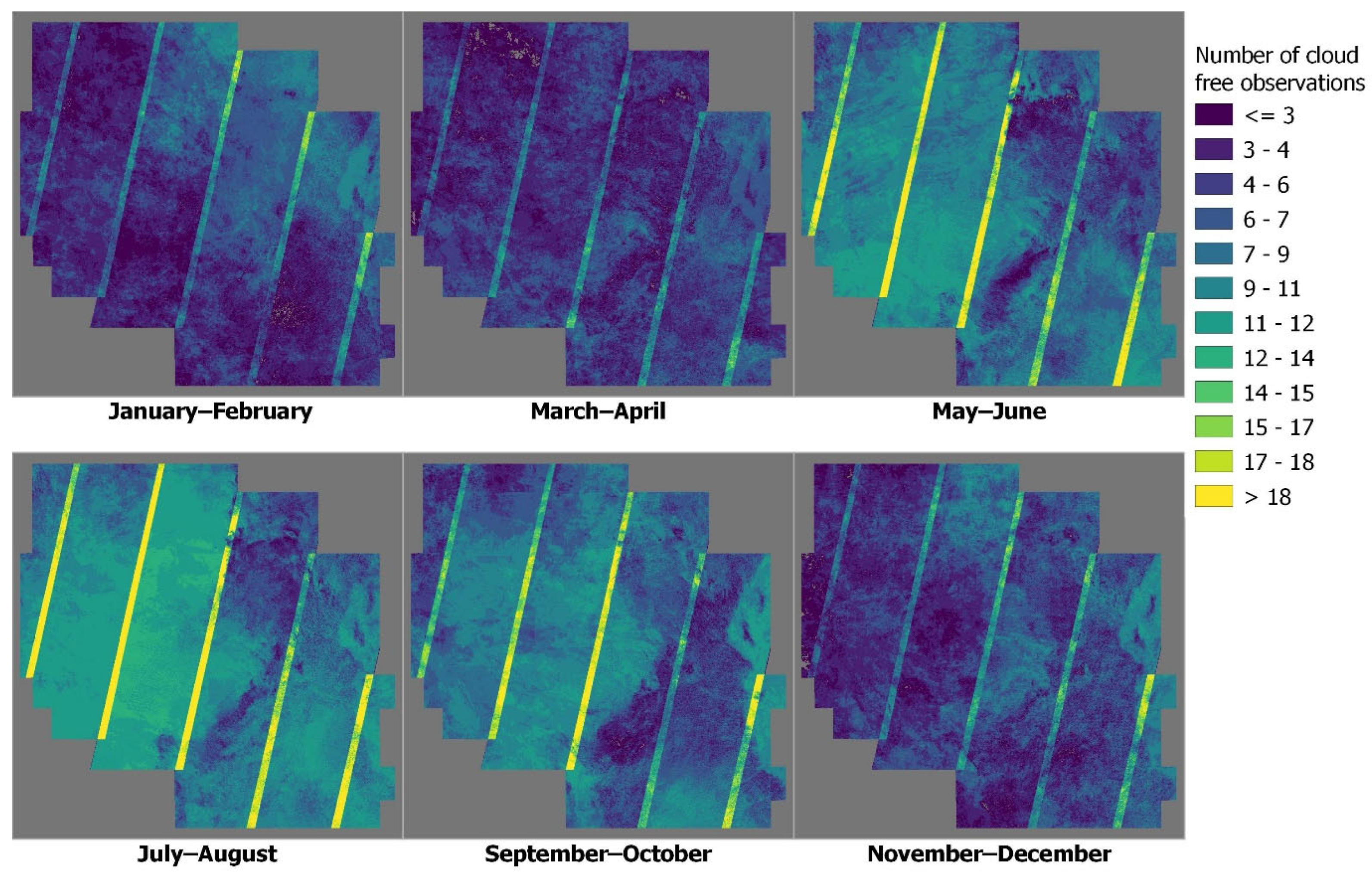

3.1.1. Optical Data: Sentinel-2

NDVI Bi-Monthly Time Series

Shadow Index

Composite for Visual Interpretation

3.1.2. SAR Data: Sentinel-1

3.1.3. Gap Filling

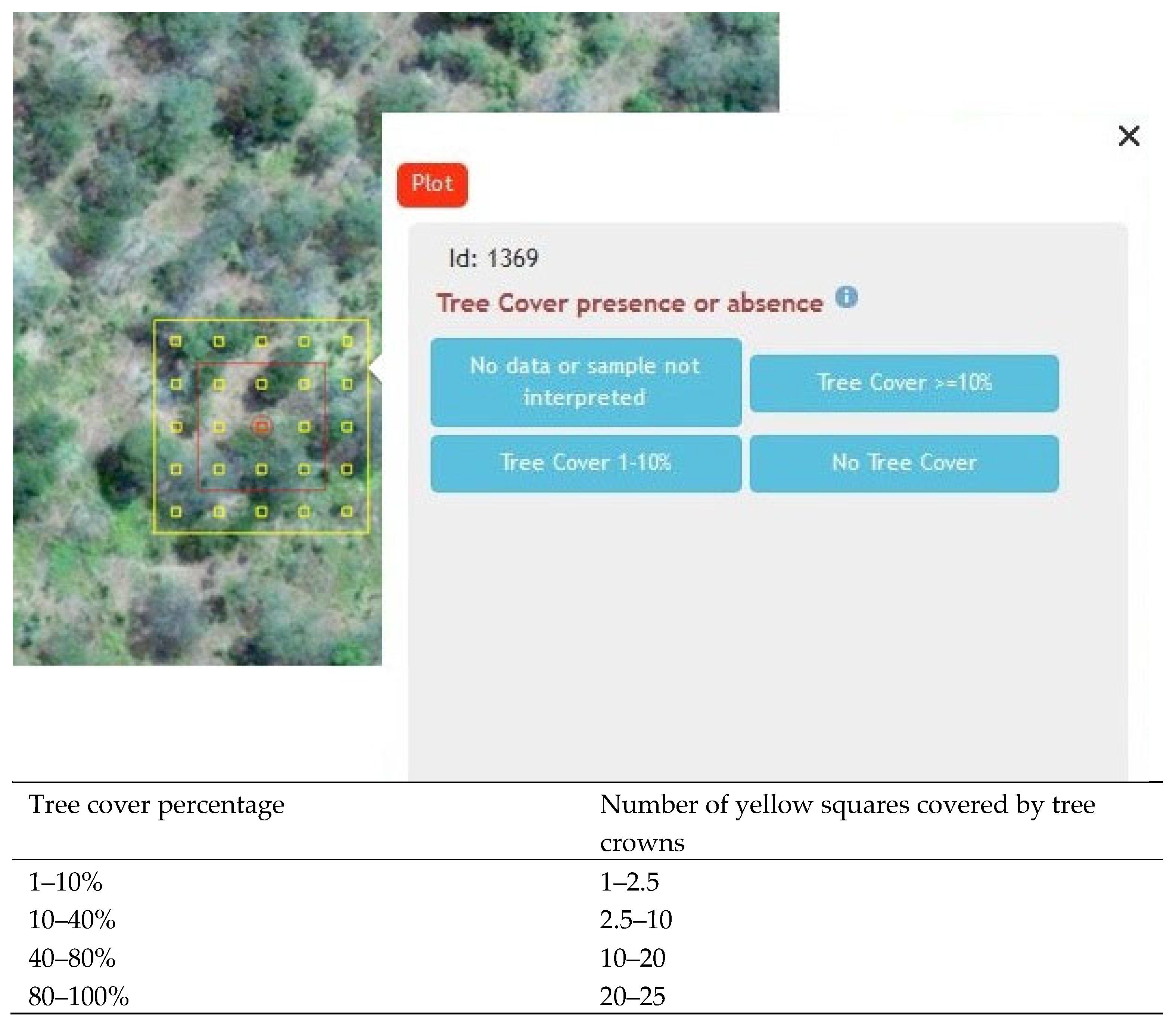

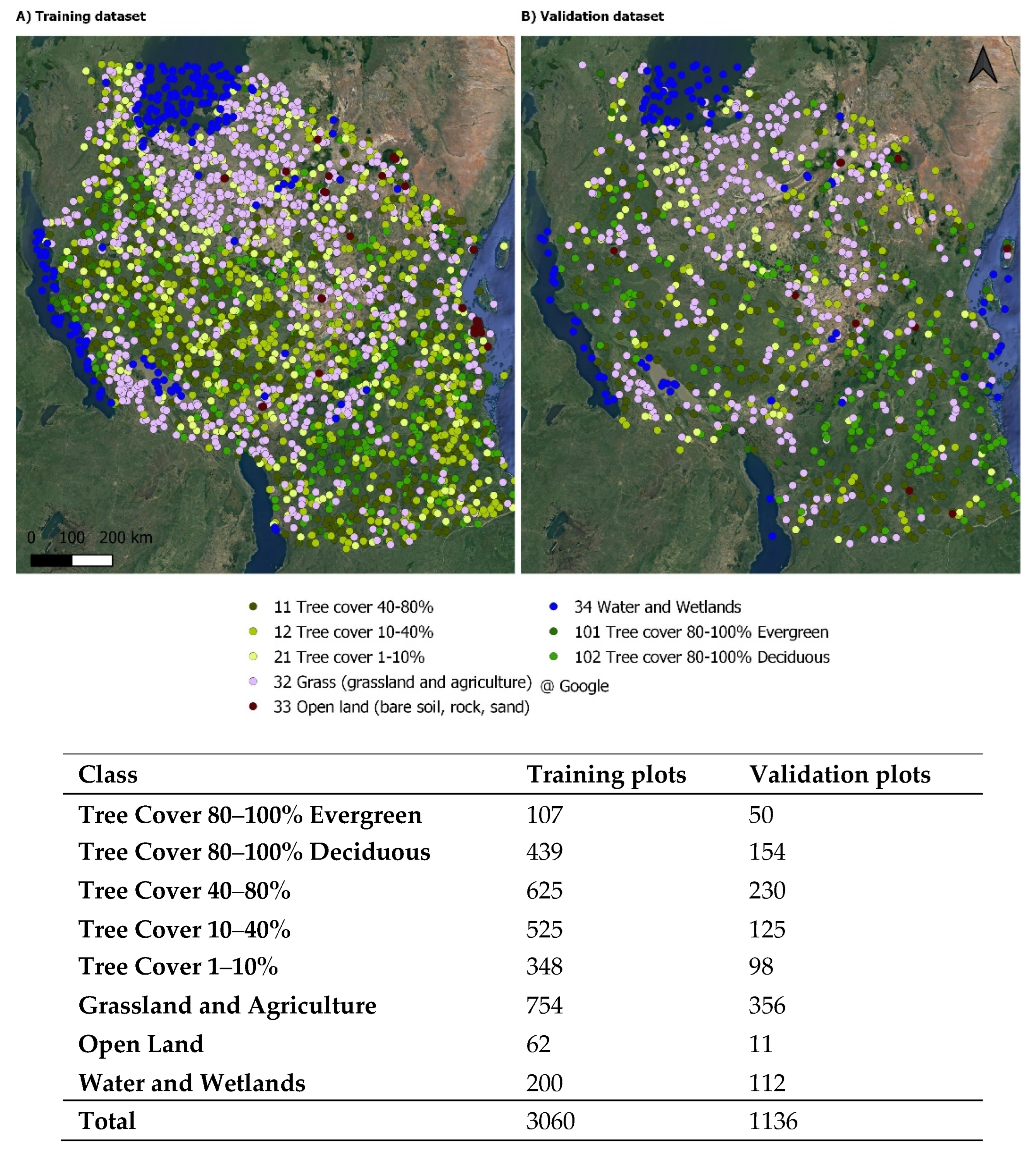

3.2. Reference Dataset Design

3.2.1. Classification Legend

3.2.2. Sampling and Response Design

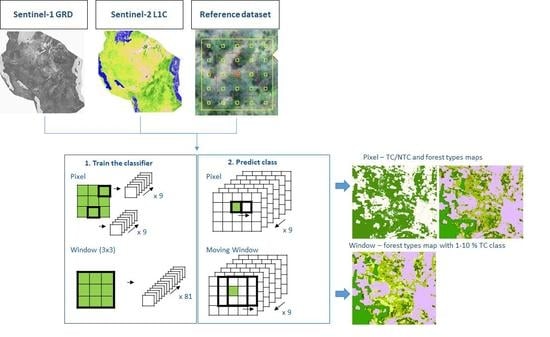

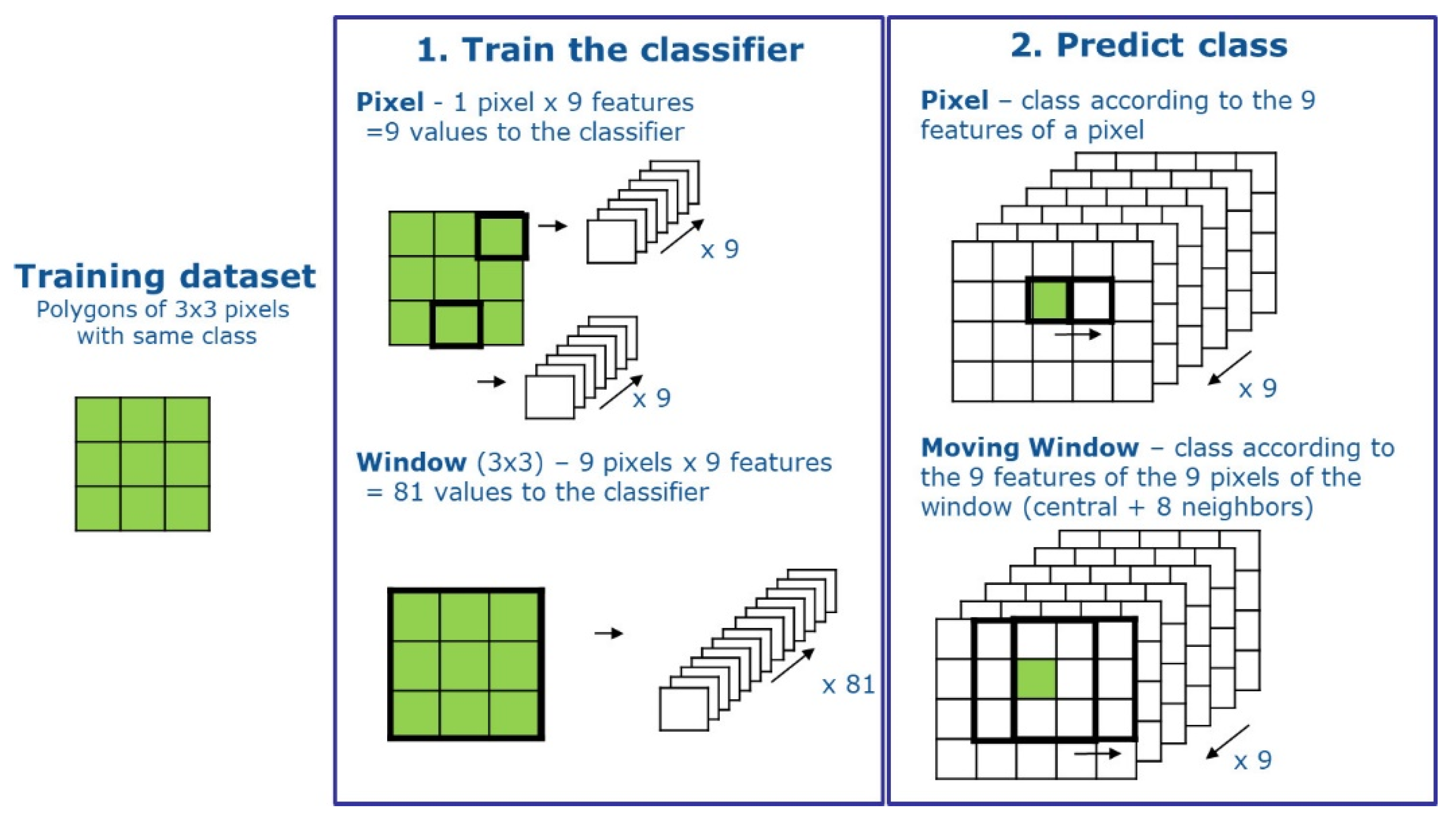

3.3. Classification Workflows

3.4. Accuracy Assessment

4. Results

4.1. S1 and S2 Composites

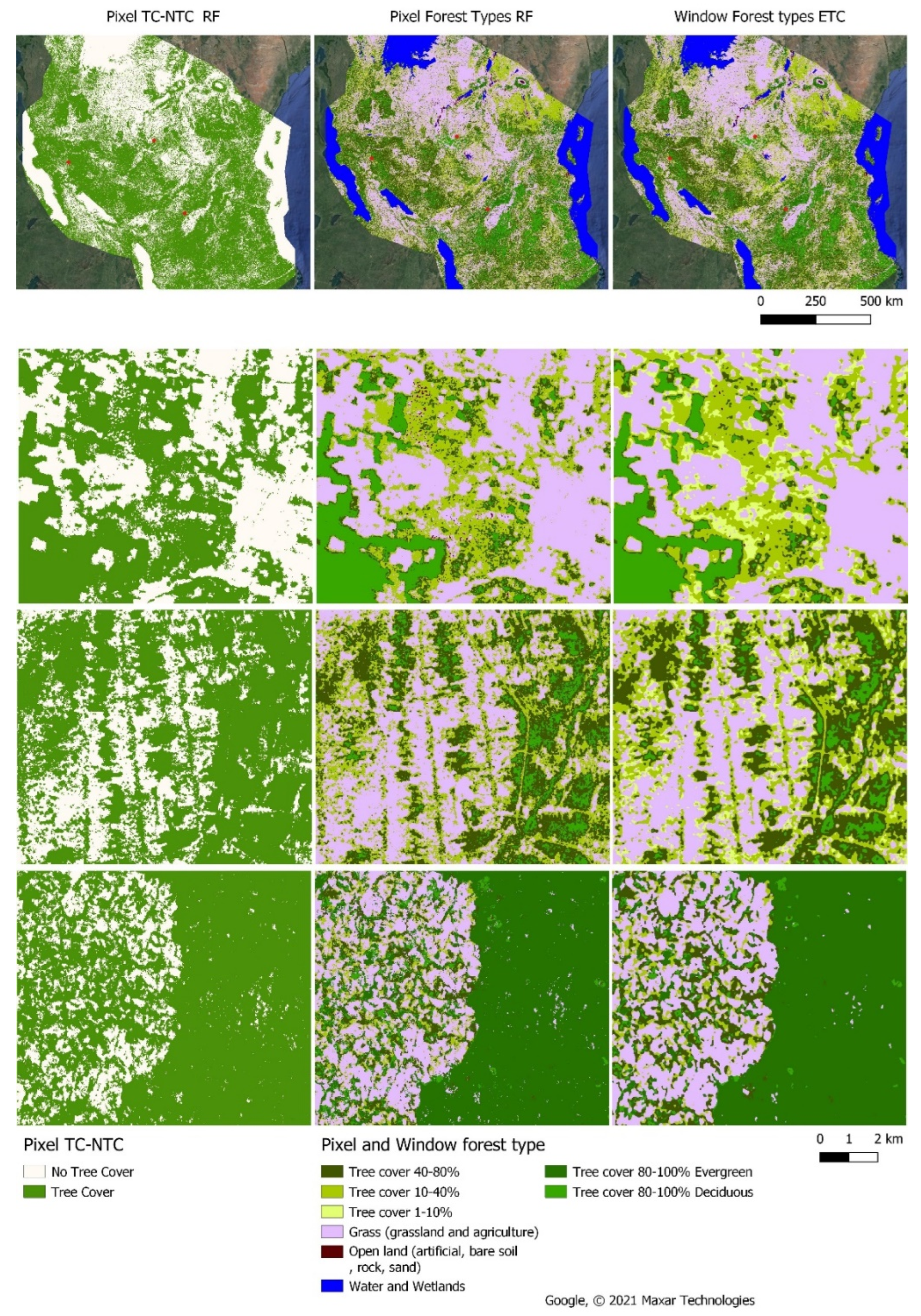

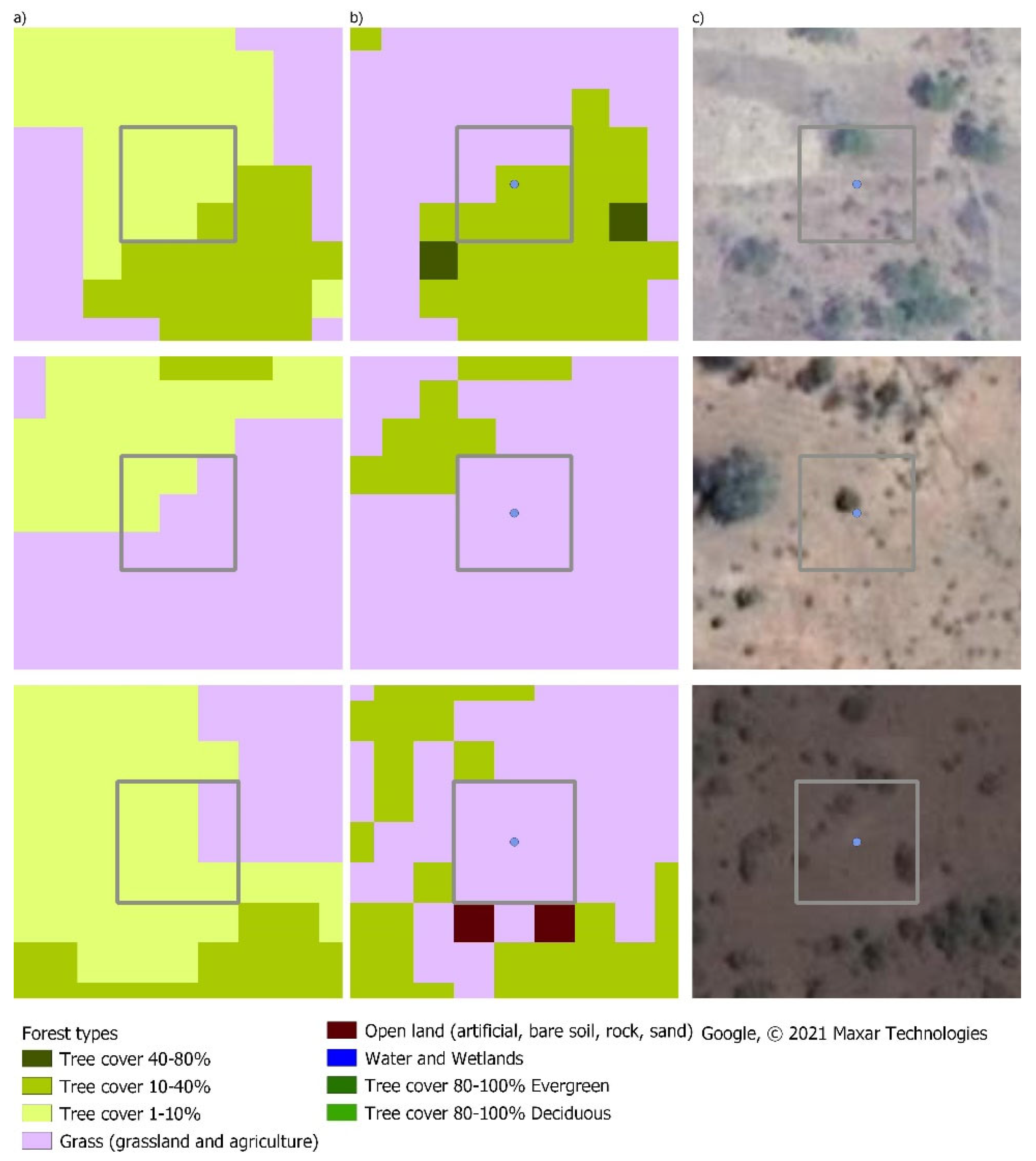

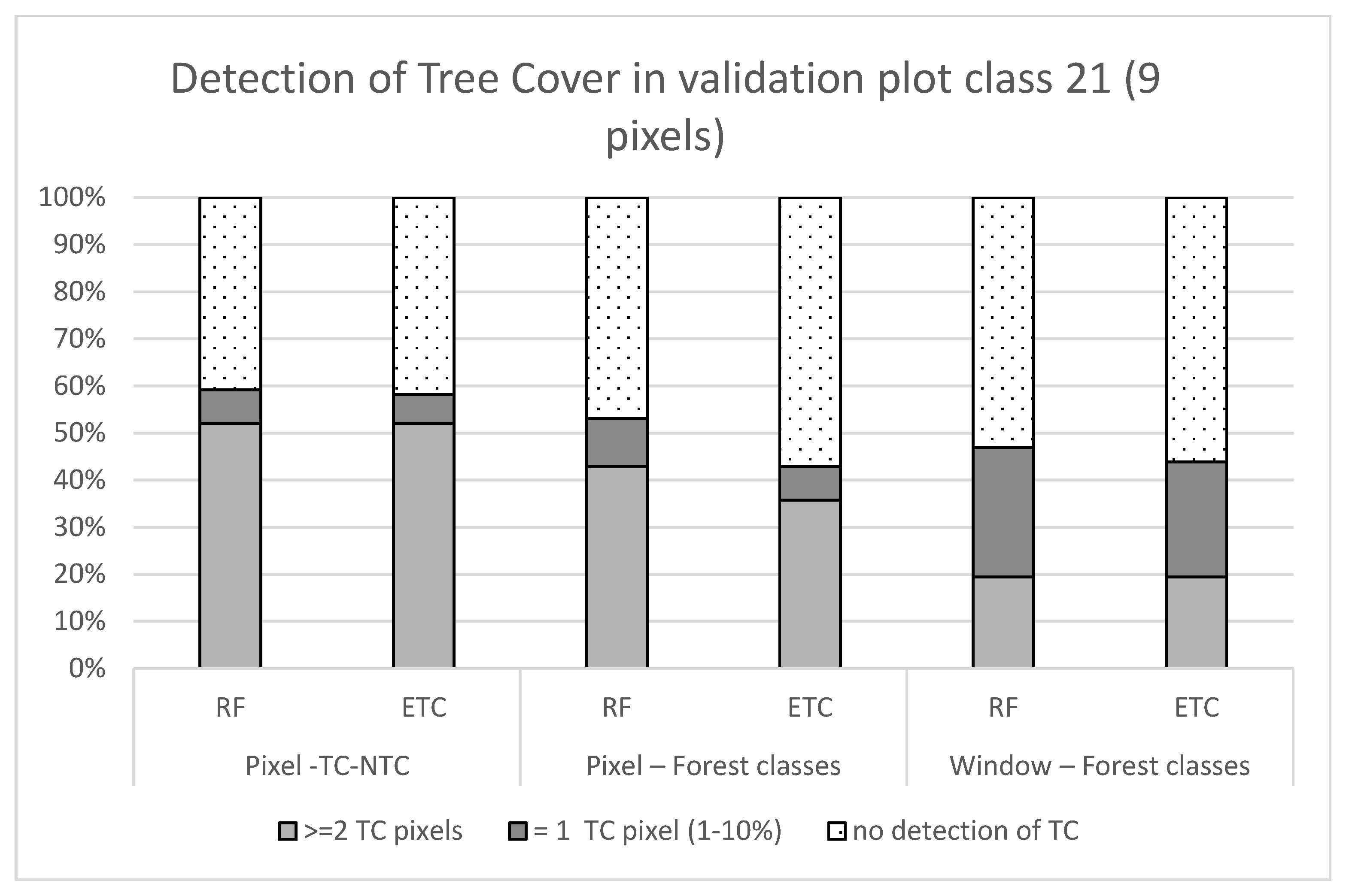

4.2. The Tree Cover–No Tree Cover and Forest Types Maps

4.3. Features’ Importance

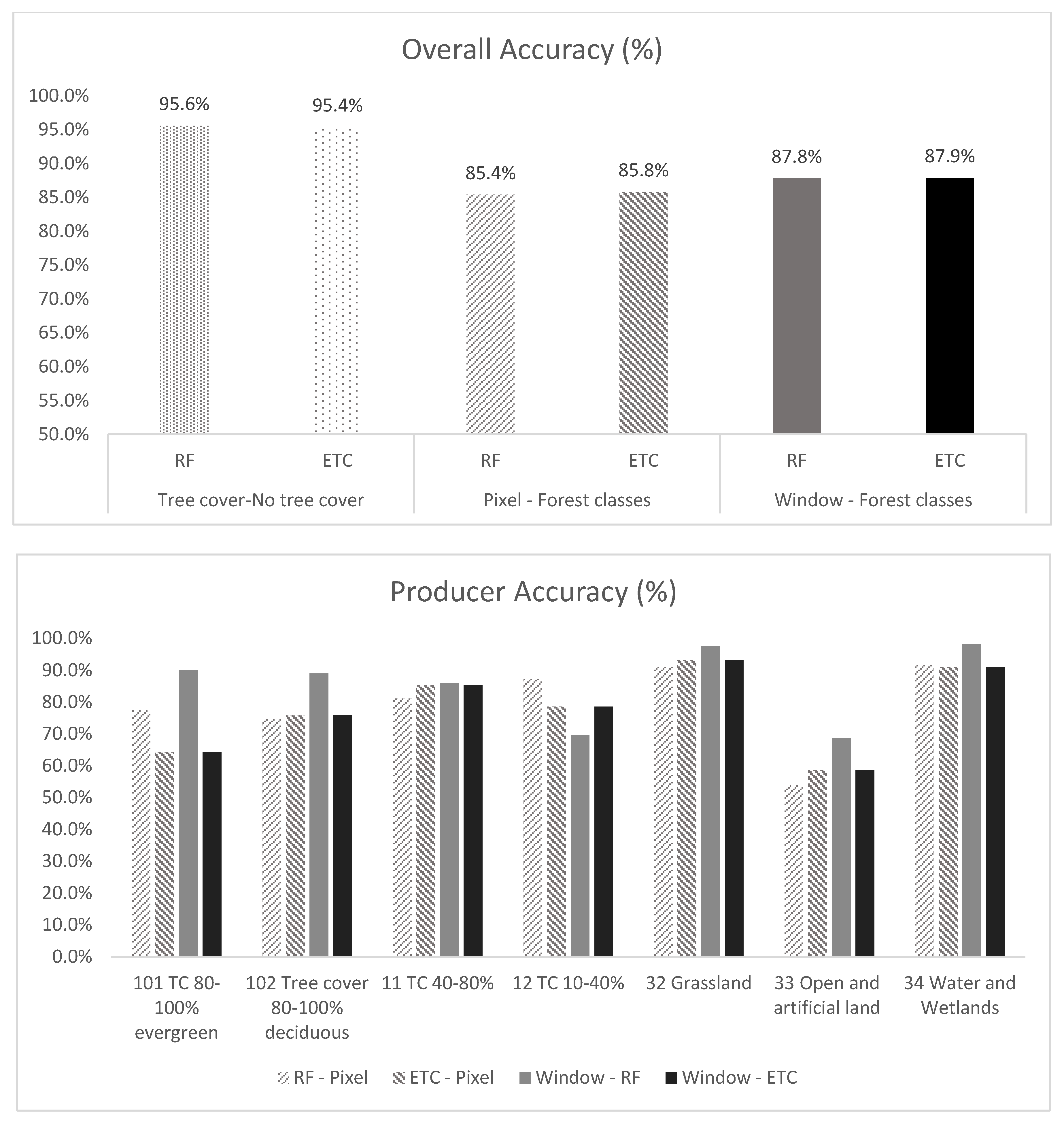

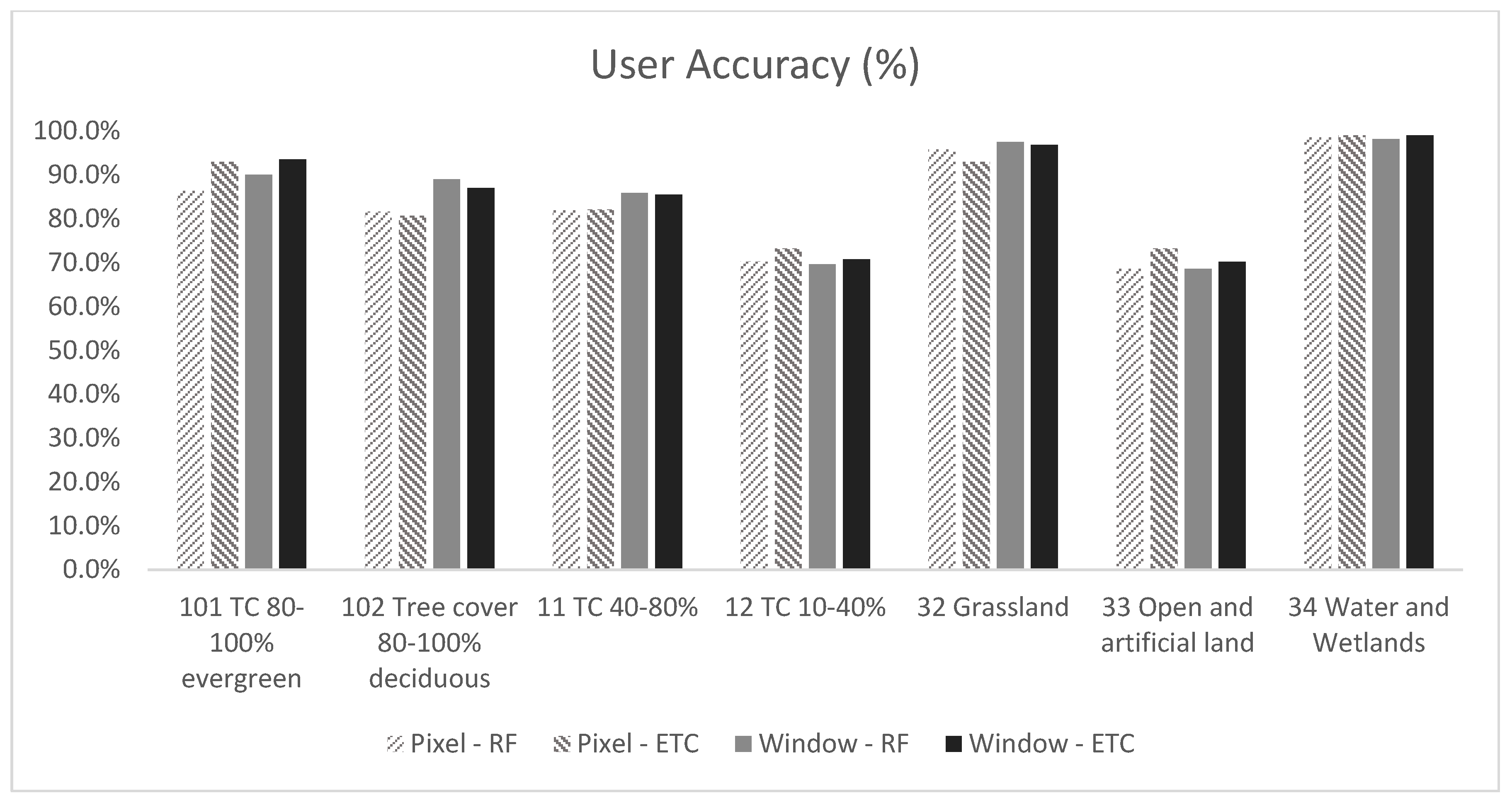

4.4. Accuracy Assessment

4.5. Forest Cover Types’ Area Estimation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Satellite Constellation | Index | Temporal Interval | Compositing Technique |
|---|---|---|---|
| Sentinel-2 | NDVI | Bi-monthly (6 layers) | Maximum |
| Sentinel-2 | Shadow Index | Annual | Maximum of monthly 90th percentile |
| Sentinel-1 | VV | Annual | Minimum of monthly 10th percentile |
| Sentinel-1 | VH | Annual | Minimum of monthly 10th percentile |
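
The compositing rules in the table above can be illustrated with a minimal NumPy sketch. It is not the authors' processing chain: the function names, the `(time, height, width)` array layout, the use of NaN for masked pixels, and the pairing of calendar months into two-month windows (Jan-Feb, Mar-Apr, ...) are all assumptions made for illustration.

```python
import numpy as np


def bimonthly_max(ndvi, months):
    """Six two-month maximum-value layers from an NDVI stack.

    ndvi   : array of shape (T, H, W), NaN where a pixel is masked (assumed layout)
    months : length-T sequence of calendar months (1..12), one per acquisition
    Returns an array of shape (6, H, W).
    """
    ndvi = np.asarray(ndvi, dtype=float)
    months = np.asarray(months)
    layers = []
    for start in range(1, 13, 2):  # assumed windows: (1,2), (3,4), ..., (11,12)
        sel = (months == start) | (months == start + 1)
        if sel.any():
            layers.append(np.nanmax(ndvi[sel], axis=0))
        else:
            # No acquisition in this window: leave a gap (NaN) to be filled later
            layers.append(np.full(ndvi.shape[1:], np.nan))
    return np.stack(layers)


def annual_from_monthly_percentile(stack, months, q, reducer):
    """Annual layer: per-month q-th percentile, then reduce across months.

    reducer is np.nanmax (e.g. Shadow Index, q=90) or np.nanmin (e.g. VV/VH, q=10).
    """
    stack = np.asarray(stack, dtype=float)
    months = np.asarray(months)
    monthly = [
        np.nanpercentile(stack[months == m], q, axis=0)
        for m in np.unique(months)
    ]
    return reducer(np.stack(monthly), axis=0)
```

With these hypothetical helpers, the Shadow Index layer would correspond to `annual_from_monthly_percentile(si, months, 90, np.nanmax)` and each Sentinel-1 polarisation to `annual_from_monthly_percentile(vv, months, 10, np.nanmin)`.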

| Level 0 code | Level 0 | Level 1 code | Level 1 | Level 2 code | Level 2 |
|---|---|---|---|---|---|
| 1 | Tree Cover ≥ 10% | 10 | 80–100% tree canopy closure | 101 | Evergreen or semi-evergreen |
| | | | | 102 | Deciduous |
| | | 11 | 40–80% tree canopy closure | | |
| | | 12 | 10–40% tree canopy closure | | |
| 2 | Tree Cover 1–10% | 21 | Grass and “single trees” | | |
| 3 | No Tree Cover | 32 | Grass and/or agriculture | | |
| | | 33 | Open land (artificial, bare soil, rock, sand) | | |
| | | 34 | Water/wetlands | | |

| Class | Code | Forest Type, Pixel: Area (km²) | Forest Type, Pixel: Area Estimated (km²) | Forest Type, Pixel: 95% CI | Forest Type, Window: Area (km²) | Forest Type, Window: Area Estimated (km²) | Forest Type, Window: 95% CI | Tree Cover–No Tree Cover, Pixel: Area (km²) | Tree Cover–No Tree Cover, Pixel: Area Estimated (km²) | Tree Cover–No Tree Cover, Pixel: 95% CI |
|---|---|---|---|---|---|---|---|---|---|---|
| Tree cover 80–100% evergreen | 101 | 24,699 | 27,599 | 1661 | 21,291 | 26,049 | 1502 | | | |
| Tree cover 80–100% deciduous | 102 | 98,517 | 107,678 | 3889 | 99,560 | 100,335 | 2957 | | | |
| Tree cover 40–80% | 11 | 248,271 | 250,108 | 5959 | 248,529 | 243,559 | 5098 | | | |
| Tree cover 10–40% | 12 | 190,722 | 153,638 | 5472 | 178,737 | 266,181 | 4950 | | | |
| Tree cover 1–10% | 21 | - | - | - | 24,098 | 86,357 | 5175 | | | |
| Tree cover | | 562,209 | 539,023 | | 572,215 | 722,482 | | 587,980 | 558,727 | 4052 |
| Grassland | 32 | 325,985 | 342,982 | 4408 | 316,811 | 285,696 | 5248 | | | |
| Open and artificial land | 33 | 5782 | 7364 | 1373 | 4705 | 6239 | 1203 | | | |
| Water and wetlands | 34 | 59,516 | 64,122 | 1584 | 59,728 | 63,299 | 1267 | | | |
| No tree cover | | 391,283 | 414,469 | | 381,244 | 355,234 | | 365,479 | 394,732 | 4052 |
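
As a quick arithmetic check on the area table above, the mapped per-class areas of the forest type maps can be summed and compared with the "Tree cover" and "No tree cover" aggregate rows. The sketch below only re-adds the figures printed in the table; the dictionary layout and variable names are illustrative assumptions, not part of the study.

```python
# Mapped areas (km²) per Level 1/Level 2 class code, copied from the table.
pixel_area = {101: 24_699, 102: 98_517, 11: 248_271, 12: 190_722,
              32: 325_985, 33: 5_782, 34: 59_516}
window_area = {101: 21_291, 102: 99_560, 11: 248_529, 12: 178_737, 21: 24_098,
               32: 316_811, 33: 4_705, 34: 59_728}

# Codes grouped as in the table's aggregate rows (21 is only mapped in the window map).
TREE_CODES = (101, 102, 11, 12, 21)
NO_TREE_CODES = (32, 33, 34)


def group_sum(areas, codes):
    """Sum the areas of the given class codes, skipping codes absent from the map."""
    return sum(areas[c] for c in codes if c in areas)


pixel_tree = group_sum(pixel_area, TREE_CODES)        # aggregate row: 562,209
pixel_no_tree = group_sum(pixel_area, NO_TREE_CODES)  # aggregate row: 391,283
window_tree = group_sum(window_area, TREE_CODES)        # aggregate row: 572,215
window_no_tree = group_sum(window_area, NO_TREE_CODES)  # aggregate row: 381,244
```

The four sums reproduce the aggregate rows of the table exactly, which supports reading those rows as area and estimated area without a confidence interval for the two forest type maps.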

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Verhegghen, A.; Kuzelova, K.; Syrris, V.; Eva, H.; Achard, F. Mapping Canopy Cover in African Dry Forests from the Combined Use of Sentinel-1 and Sentinel-2 Data: Application to Tanzania for the Year 2018. Remote Sens. 2022, 14, 1522. https://doi.org/10.3390/rs14061522
