A Two-Stage Pansharpening Method for the Fusion of Remote-Sensing Images

Abstract

1. Introduction

- (1) We developed a two-stage pansharpening (PS) method based on component substitution (CS) and variational models. The method is called the global sparse gradient-based improved adaptive intensity-hue-saturation (GIAIHS) method, and it reduces the instability of the global information in the fused image.

- (2) We used the global sparse gradient (GSG) information of the image to construct the weight function. GSG represents the gradient information of an image more accurately and robustly, and we used a variational formulation to obtain the optimal solution for the GSG of the image.

- (3) Most existing methods obtain the fusion result in a single, direct fusion stage and do not account for information lost during fusion. Building on this observation, we designed a two-stage PS fusion algorithm that further refines the directly fused image and substantially improves its spatial-spectral information. In addition, the method can accommodate data from different satellites and maintains a balance between spatial enhancement and spectral fidelity.
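The contributions above build on adaptive IHS fusion. In that approach, the intensity component is a weighted combination of the MS bands, and PAN detail is injected through an edge-sensitive weight. The Python/NumPy sketch below shows a baseline in the style of the adaptive IHS (AIHS) method of Rahmani et al. It is not GIAIHS itself: neither the GSG-based weight nor the second refinement stage is reproduced.

The sketch makes several assumptions:
- The MS image is already upsampled to the PAN grid, and values lie roughly in [0, 1].
- No histogram matching is applied.
- The band coefficients come from unconstrained least squares, whereas AIHS additionally constrains them to be nonnegative.
- The weight has the edge-detecting form W = exp(-λ / (|∇P|⁴ + ε)). The values λ = 1e-9 and ε = 1e-10 are illustrative.

The function name `adaptive_ihs_fusion` is hypothetical.

```python
import numpy as np


def adaptive_ihs_fusion(ms: np.ndarray, pan: np.ndarray,
                        lam: float = 1e-9, eps: float = 1e-10) -> np.ndarray:
    """AIHS-style fusion sketch (hypothetical helper, not the GIAIHS method).

    ms  : (H, W, N) multispectral image, already upsampled to the PAN grid.
    pan : (H, W) panchromatic image on the same grid.
    """
    h, w, n = ms.shape
    bands = ms.reshape(-1, n)

    # Intensity I = sum_i alpha_i * MS_i, with alpha fitted to PAN by
    # unconstrained least squares (AIHS also enforces alpha_i >= 0).
    alpha, *_ = np.linalg.lstsq(bands, pan.ravel(), rcond=None)
    intensity = (bands @ alpha).reshape(h, w)

    # Edge-detecting weight from the PAN gradient magnitude: close to 1 on
    # strong edges, close to exp(-lam / eps) in flat regions.
    gy, gx = np.gradient(pan)
    grad_mag = np.hypot(gx, gy)
    weight = np.exp(-lam / (grad_mag ** 4 + eps))

    # Inject the same weighted PAN detail (P - I) into every band.
    detail = weight * (pan - intensity)
    return ms + detail[..., np.newaxis]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms_up = rng.random((64, 64, 4))
    pan = ms_up.mean(axis=2) + 0.05 * rng.standard_normal((64, 64))
    fused = adaptive_ihs_fusion(ms_up, pan)
    print(fused.shape)
```

The weight is close to zero in flat regions, so detail is injected mainly along PAN edges. According to contribution (2), GIAIHS builds this weight from GSG information rather than from a plain finite-difference gradient.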

2. Related Works

Algorithm 1: GIAIHS algorithm, the proposed algorithm for two-stage restoration.

Input: MS image M; PAN image P. Output: fused image.

3. Proposed Method

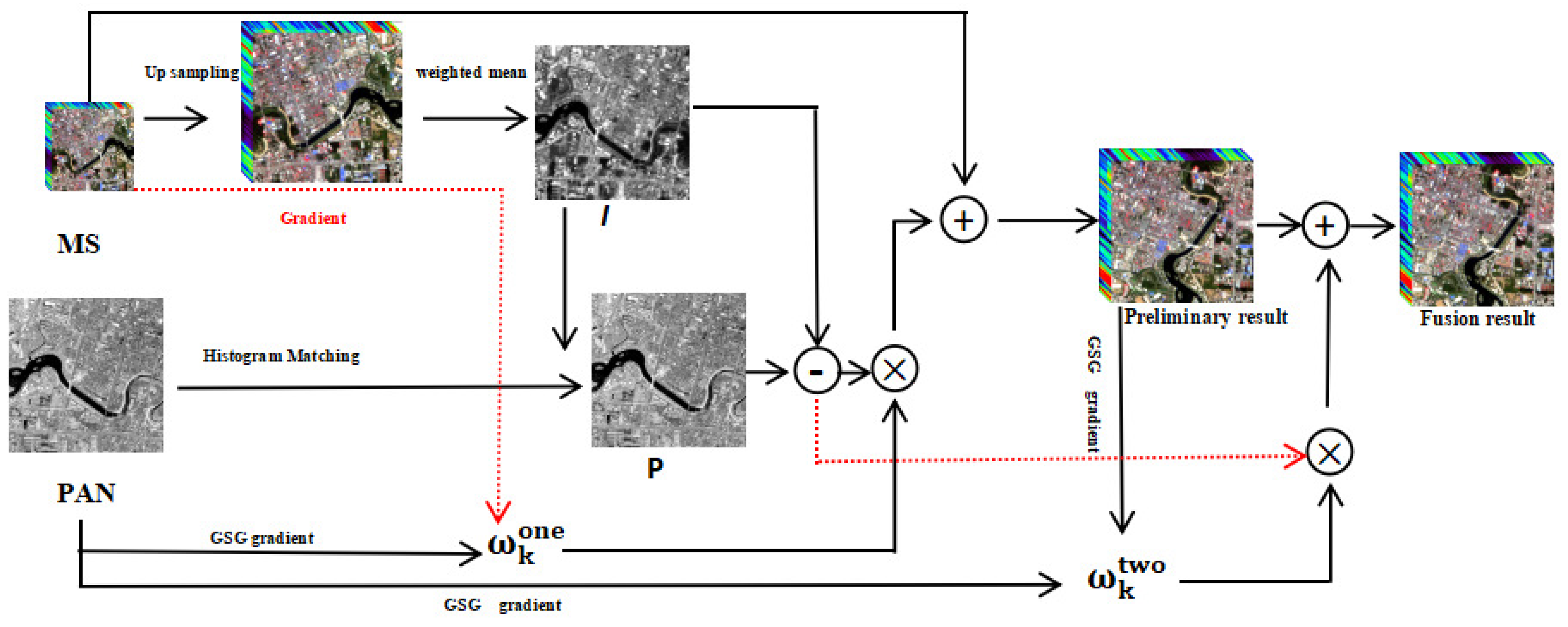

3.1. GIAIHS Fusion Model

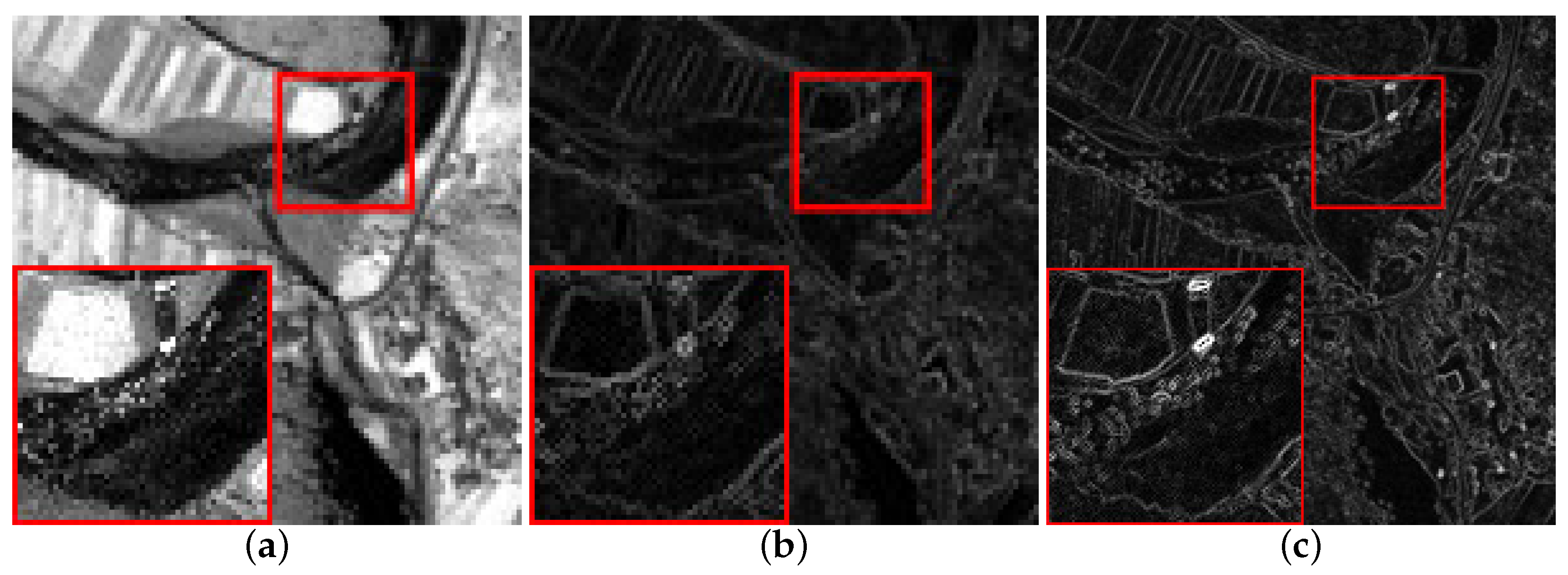

3.2. Weight Function

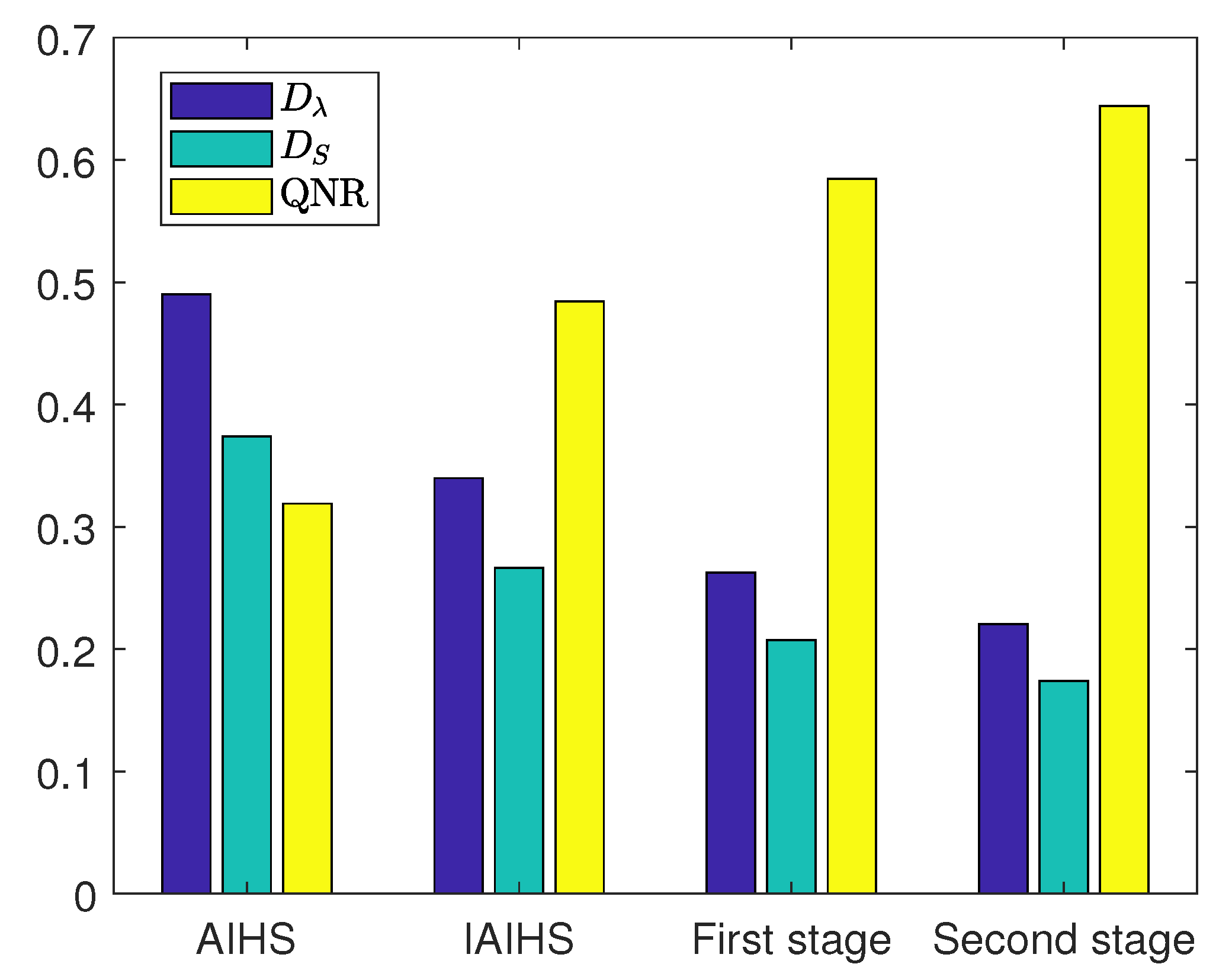

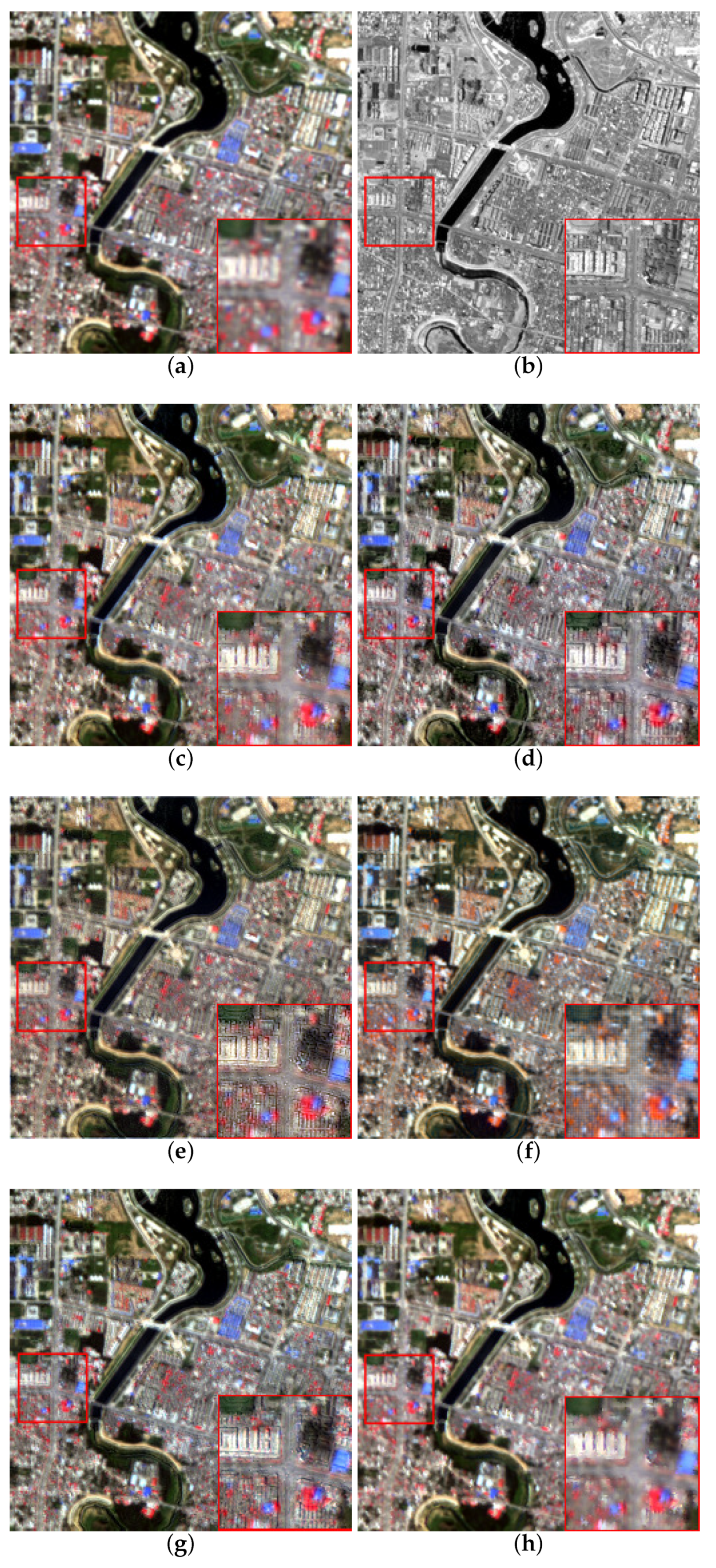

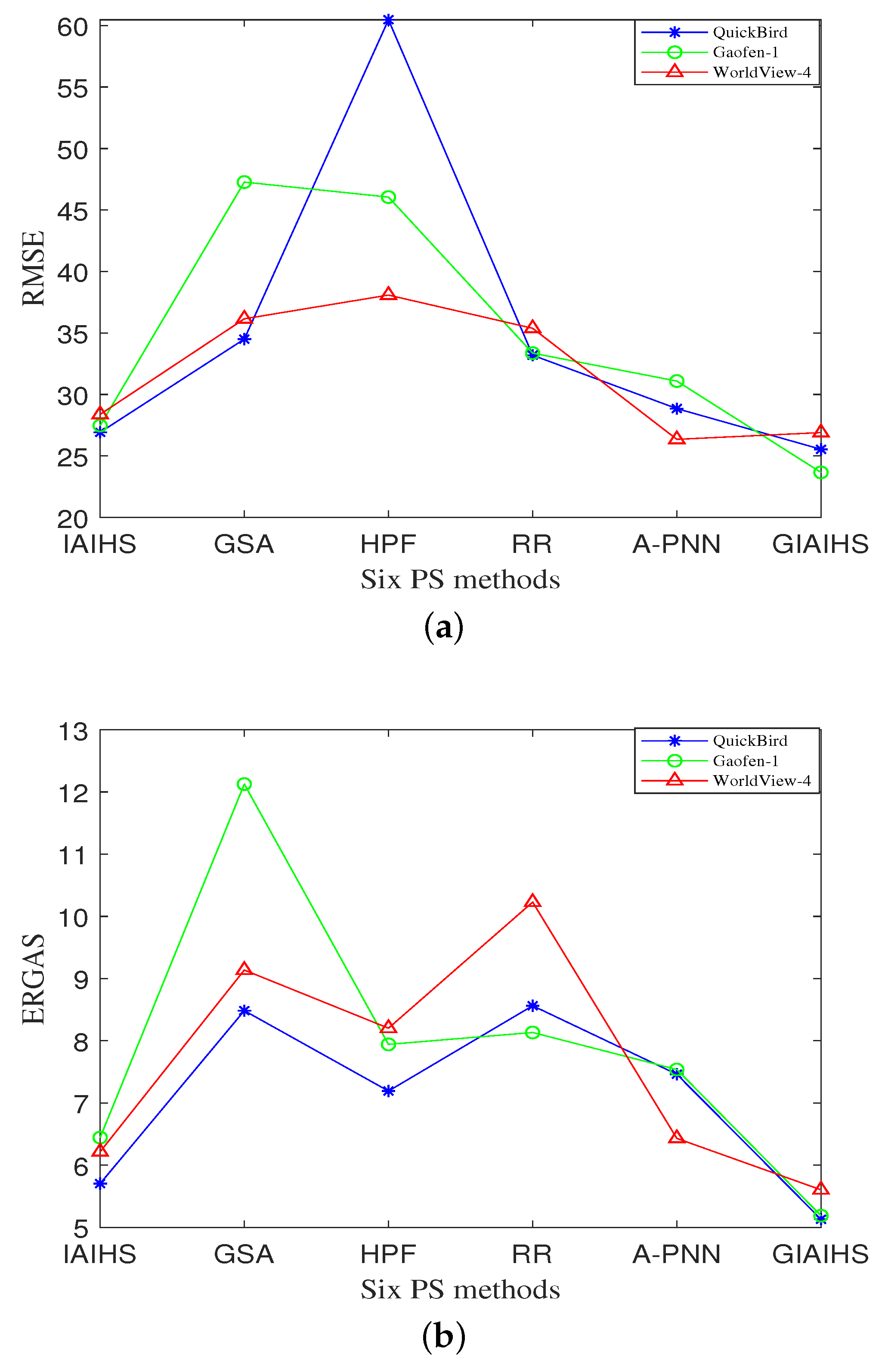

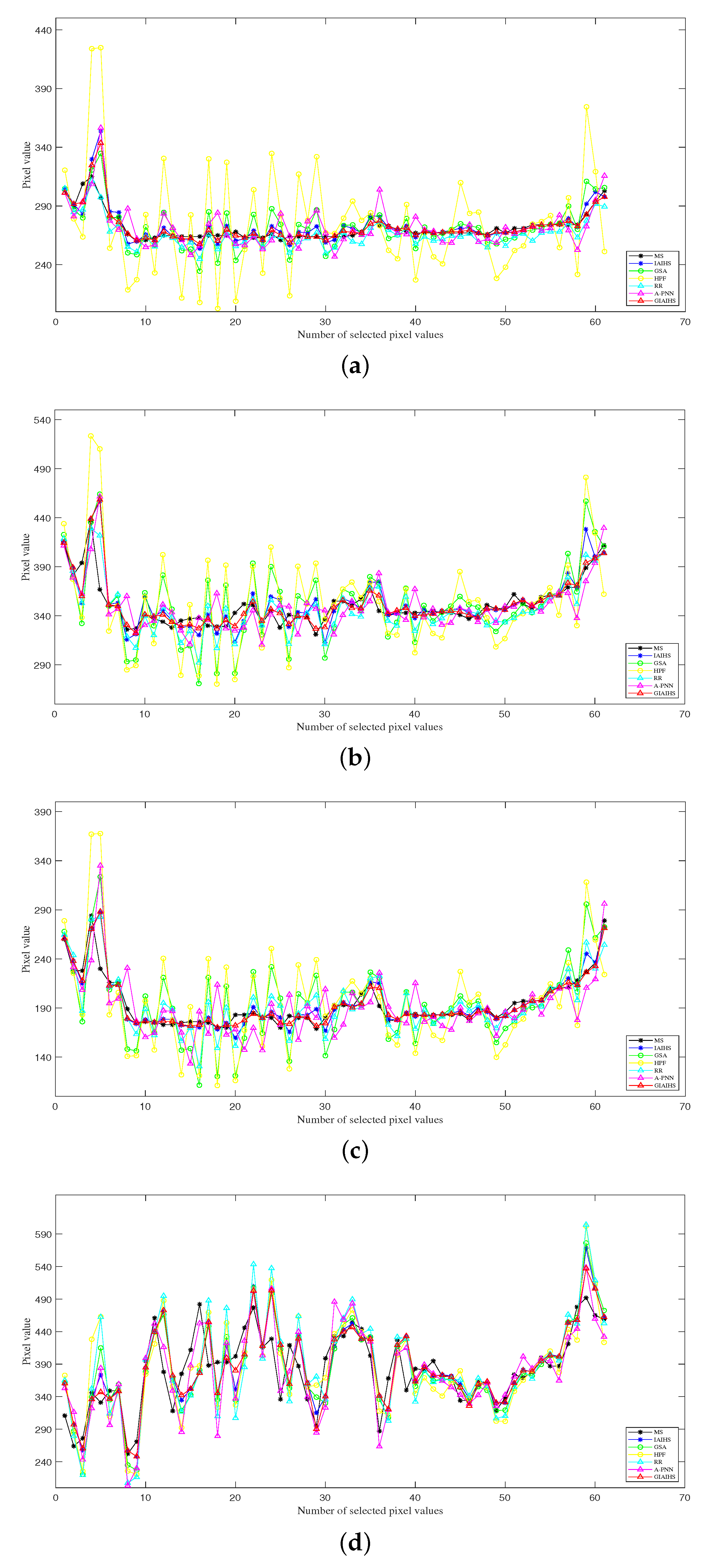

3.3. Results

4. Experiments and Analysis

4.1. Experimental Setup

4.2. Datasets

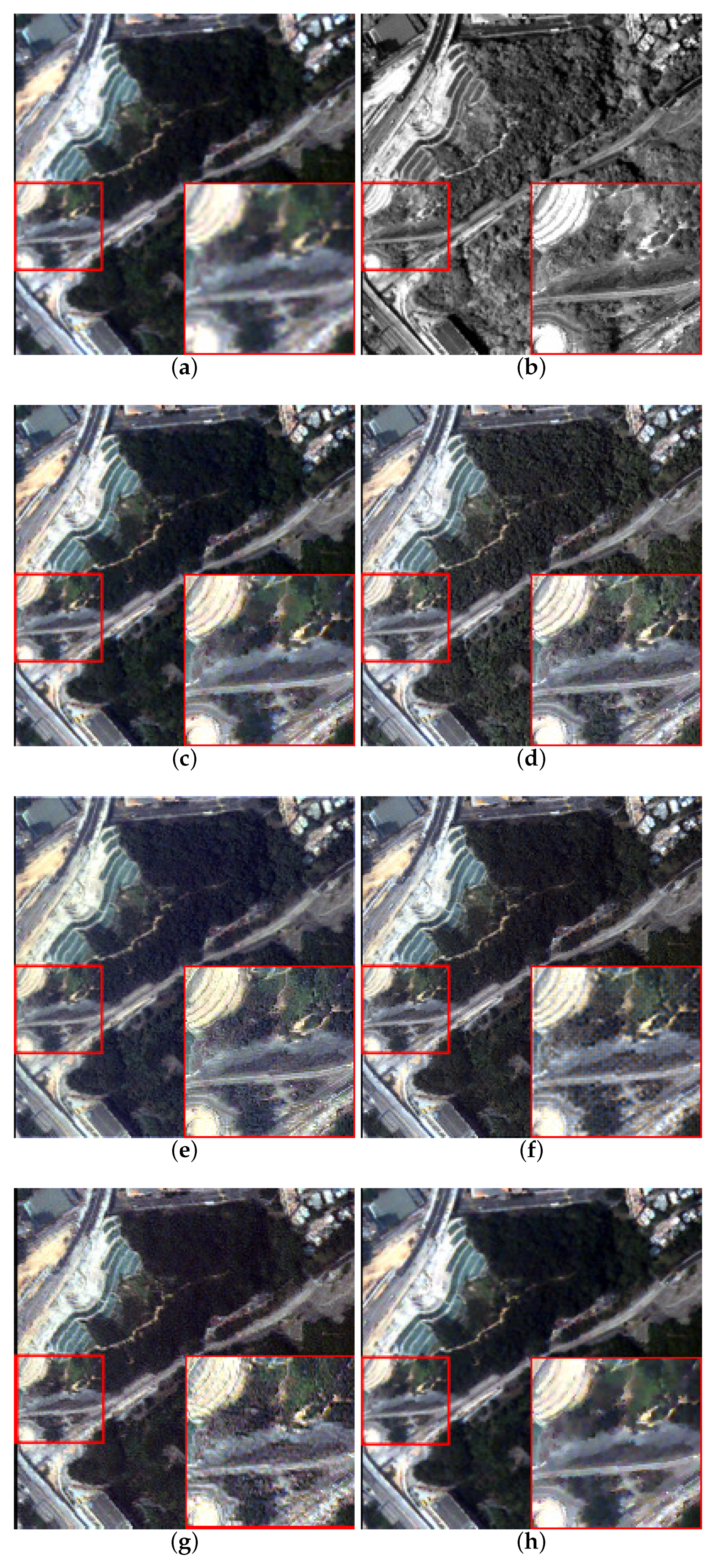

4.3. Experiments and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | RMSE | RASE | ERGAS | Q4 | $D_\lambda$ | $D_s$ | QNR |
|---|---|---|---|---|---|---|---|
| IAIHS | 26.92 | 8.20 | 1.95 | 0.81 | 0.17 | 0.10 | 0.75 |
| GSA | 34.50 | 10.50 | 2.72 | 0.68 | 0.31 | 0.28 | 0.49 |
| HPF | 60.47 | 18.40 | 4.88 | 0.42 | 0.17 | 0.19 | 0.67 |
| RR | 33.19 | 10.10 | 2.28 | 0.78 | 0.16 | 0.11 | 0.75 |
| A-PNN | 28.85 | 8.78 | 2.28 | 0.74 | 0.21 | 0.04 | 0.76 |
| GIAIHS | 25.54 | 7.77 | 1.84 | 0.88 | 0.11 | 0.05 | 0.85 |
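The QNR (quality with no reference) index combines a spectral distortion index Dλ and a spatial distortion index Ds as QNR = (1 - Dλ)^α (1 - Ds)^β, usually with α = β = 1. The short Python check below recomputes QNR from the rows of the table above. It assumes α = β = 1 and reads the two value columns immediately preceding QNR as Dλ and Ds. The names `TABLE_ROWS`, `qnr` and `max_qnr_gap` are hypothetical. In our reading, every row agrees with the reported QNR to within 0.01, which is consistent with inputs rounded to two decimals.

```python
# Rows of the table above: (method, D_lambda, D_s, reported QNR).
TABLE_ROWS = [
    ("IAIHS", 0.17, 0.10, 0.75),
    ("GSA", 0.31, 0.28, 0.49),
    ("HPF", 0.17, 0.19, 0.67),
    ("RR", 0.16, 0.11, 0.75),
    ("A-PNN", 0.21, 0.04, 0.76),
    ("GIAIHS", 0.11, 0.05, 0.85),
]


def qnr(d_lambda: float, d_s: float, alpha: float = 1.0, beta: float = 1.0) -> float:
    """QNR = (1 - D_lambda)^alpha * (1 - D_s)^beta."""
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta


def max_qnr_gap(rows) -> float:
    """Largest |recomputed QNR - reported QNR| over the given rows."""
    return max(abs(qnr(dl, ds) - reported) for _, dl, ds, reported in rows)


if __name__ == "__main__":
    for name, dl, ds, reported in TABLE_ROWS:
        print(f"{name:7s} recomputed {qnr(dl, ds):.3f}  reported {reported:.2f}")
    print(f"max gap: {max_qnr_gap(TABLE_ROWS):.4f}")
```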

| Method | RMSE | RASE | ERGAS | Q4 | $D_\lambda$ | $D_s$ | QNR |
|---|---|---|---|---|---|---|---|
| IAIHS | 27.47 | 7.36 | 1.86 | 0.84 | 0.14 | 0.29 | 0.61 |
| GSA | 47.27 | 12.67 | 3.24 | 0.68 | 0.33 | 0.50 | 0.33 |
| HPF | 46.05 | 12.34 | 3.10 | 0.71 | 0.09 | 0.32 | 0.62 |
| RR | 33.36 | 8.94 | 2.28 | 0.81 | 0.13 | 0.23 | 0.67 |
| A-PNN | 31.09 | 8.33 | 2.15 | 0.83 | 0.07 | 0.27 | 0.68 |
| GIAIHS | 23.66 | 6.34 | 1.61 | 0.89 | 0.07 | 0.15 | 0.79 |

| Method | RMSE | RASE | ERGAS | Q4 | $D_\lambda$ | $D_s$ | QNR |
|---|---|---|---|---|---|---|---|
| IAIHS | 28.38 | 7.61 | 1.40 | 0.75 | 0.16 | 0.10 | 0.76 |
| GSA | 36.15 | 9.69 | 1.91 | 0.78 | 0.14 | 0.16 | 0.72 |
| HPF | 38.08 | 10.21 | 3.26 | 0.48 | 0.19 | 0.18 | 0.66 |
| RR | 35.38 | 9.49 | 1.80 | 0.68 | 0.16 | 0.14 | 0.72 |
| A-PNN | 26.35 | 7.07 | 1.65 | 0.77 | 0.09 | 0.10 | 0.82 |
| GIAIHS | 26.90 | 7.21 | 1.27 | 0.83 | 0.11 | 0.04 | 0.86 |
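The reference-based indices in these tables compare a fused image F with a reference MS image R of N bands. The usual definitions from the pansharpening literature, assumed here, are:
- RMSE is the root-mean-square error over all pixels and bands.
- RASE = (100/μ) · sqrt((1/N) Σ RMSE_i²), where μ is the mean of R.
- ERGAS = 100 (h/l) · sqrt((1/N) Σ (RMSE_i/μ_i)²), where h/l is the ratio of PAN to MS pixel size and μ_i is the mean of band i.

The NumPy sketch below implements these definitions. The function names and the default `ratio=0.25` (a 1:4 resolution ratio) are hypothetical choices.

```python
import numpy as np


def band_rmse(fused: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Per-band RMSE for images shaped (H, W, N)."""
    return np.sqrt(np.mean((fused - ref) ** 2, axis=(0, 1)))


def rmse(fused: np.ndarray, ref: np.ndarray) -> float:
    """RMSE over all pixels and bands."""
    return float(np.sqrt(np.mean((fused - ref) ** 2)))


def rase(fused: np.ndarray, ref: np.ndarray) -> float:
    """Relative average spectral error (percent), normalised by the mean of ref."""
    mu = ref.mean()
    return float(100.0 / mu * np.sqrt(np.mean(band_rmse(fused, ref) ** 2)))


def ergas(fused: np.ndarray, ref: np.ndarray, ratio: float = 0.25) -> float:
    """ERGAS; `ratio` is the PAN-to-MS pixel-size ratio h/l."""
    mu_bands = ref.mean(axis=(0, 1))
    rel = band_rmse(fused, ref) / mu_bands
    return float(100.0 * ratio * np.sqrt(np.mean(rel ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    ref = 50.0 + 100.0 * rng.random((32, 32, 4))
    fused = ref + rng.standard_normal(ref.shape)
    print(rmse(fused, ref), rase(fused, ref), ergas(fused, ref))
```

When all bands have the same size, RASE reduces to 100 · RMSE / μ. This explains why, within each table above, RMSE and RASE keep an almost constant ratio across methods: each ratio is μ/100 for that table's reference image.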

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Liu, G.; Zhang, R.; Liu, J. A Two-Stage Pansharpening Method for the Fusion of Remote-Sensing Images. Remote Sens. 2022, 14, 1121. https://doi.org/10.3390/rs14051121
