A Flexible Multi-Temporal and Multi-Modal Framework for Sentinel-1 and Sentinel-2 Analysis Ready Data

Abstract

1. Introduction

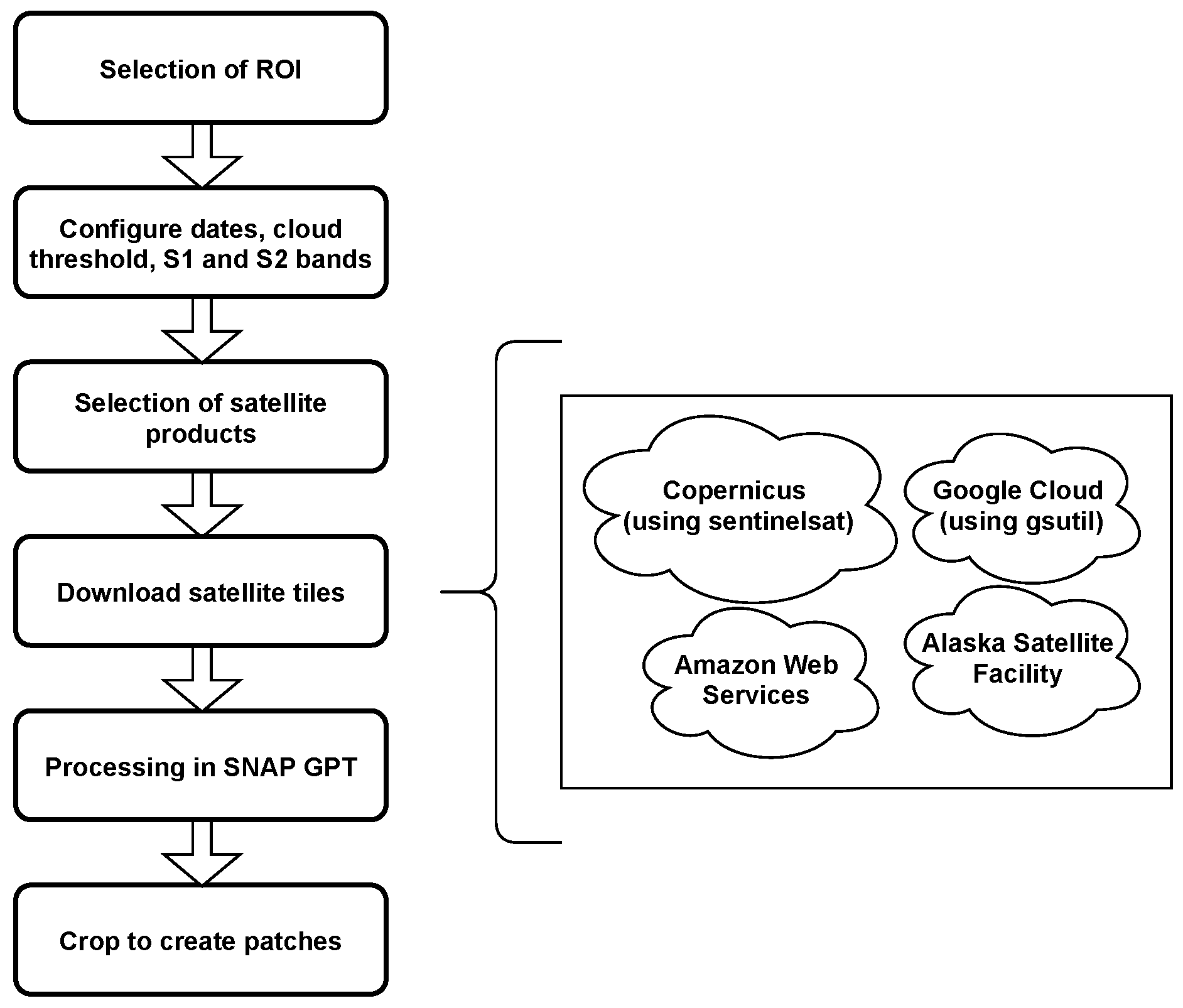

2. ARD Framework

2.1. User-Defined Configuration

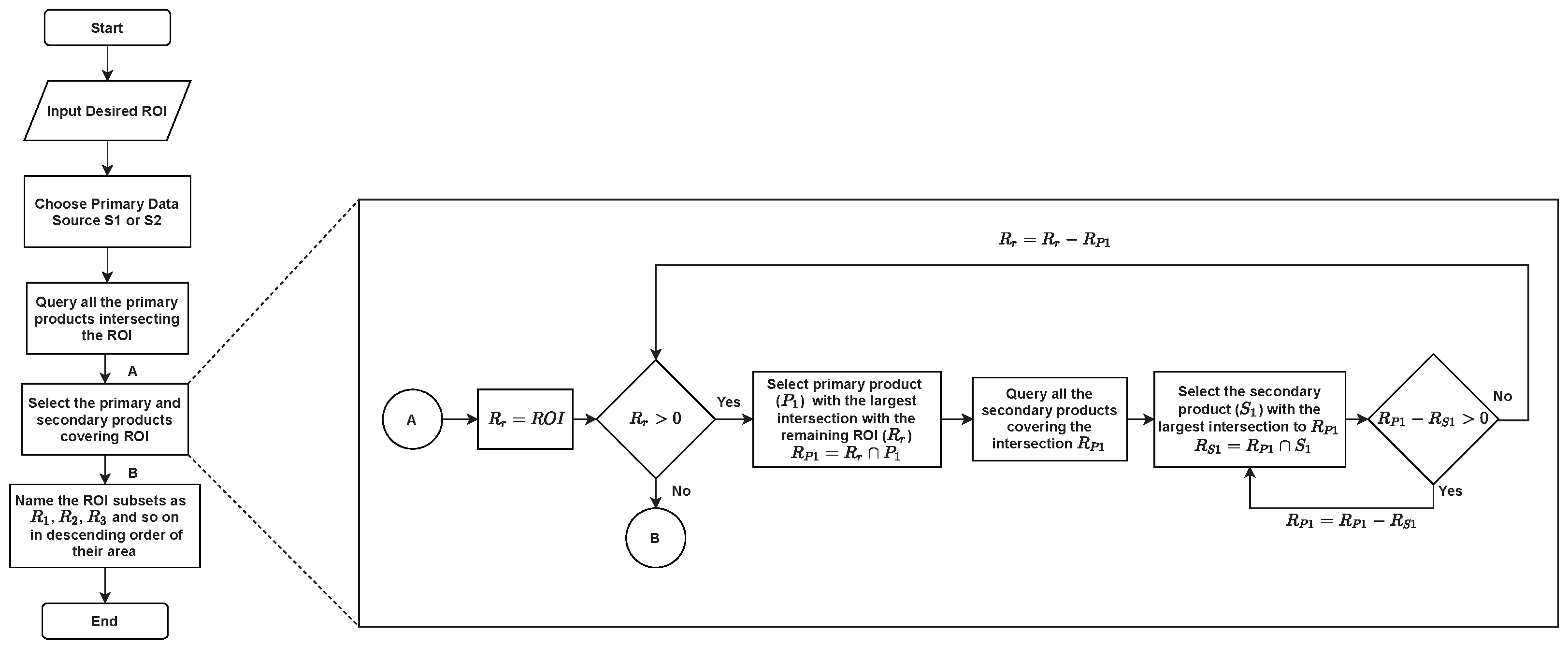
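The configuration parameters listed in Appendix A.2 can be gathered into a single structure and checked before any query or download starts. The sketch below is a hypothetical illustration only. The dictionary keys mirror the parameter names in Appendix A.2 (with spaces replaced by underscores). The band names, the example ROI and the validation rules are assumptions, not the framework's actual configuration format. In particular, treating `overlap` as a fraction between 0 and 1 is an assumption.

```python
import json
from datetime import datetime
from typing import Any, Dict, List

# Hypothetical example configuration; keys follow Appendix A.2, values are illustrative.
EXAMPLE_CONFIG: Dict[str, Any] = {
    "name": "example_roi",
    "dates": ("20210401", "20210430"),
    "geojson": json.dumps({"type": "Polygon",
                           "coordinates": [[[0.0, 0.0], [1.0, 0.0], [1.0, 1.0],
                                            [0.0, 1.0], [0.0, 0.0]]]}),
    "cloudcover": (0, 30),
    "cloud_mask_filtering": True,
    "size": (256, 256),
    "overlap": (0.0, 0.0),
    "bands_S1": ["VV", "VH"],
    "bands_S2": ["B02", "B03", "B04", "B08", "SCL"],
}


def validate_config(cfg: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means the config looks usable."""
    errors: List[str] = []
    try:
        # Exactly two YYYYMMDD dates are expected; anything else raises ValueError.
        start, end = (datetime.strptime(d, "%Y%m%d") for d in cfg["dates"])
        if start > end:
            errors.append("dates: start date is after end date")
    except ValueError:
        errors.append("dates: expected two dates in YYYYMMDD format")
    lo, hi = cfg["cloudcover"]
    if not 0 <= lo <= hi <= 100:
        errors.append("cloudcover: need 0 <= lower <= upper <= 100")
    if any(s <= 0 for s in cfg["size"]):
        errors.append("size: patch rows and columns must be positive")
    if any(not 0.0 <= o <= 1.0 for o in cfg["overlap"]):
        errors.append("overlap: values must lie in [0, 1]")
    try:
        roi = json.loads(cfg["geojson"])
        if roi.get("type") not in {"Polygon", "MultiPolygon", "Feature", "FeatureCollection"}:
            errors.append("geojson: unsupported geometry type")
    except (json.JSONDecodeError, AttributeError):
        errors.append("geojson: not a valid GeoJSON object")
    return errors
```

Collecting every problem into a list, rather than stopping at the first failure, lets a user fix a configuration in one pass.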

2.2. Sentinel Scene Discovery

- Products: “Products are a compilation of elementary granules of fixed size, along a single orbit. A granule is the minimum indivisible partition of a product (containing all possible spectral bands).”

- Tiles: “For Level-1C and Level-2A Sentinel-2 products, the granules, also called tiles, are approximately 100 × 100 km² ortho-images in UTM/WGS84 projection.”

- Patches: rectangular or square cut-outs of defined pixel sizes from the complete image.

- Scene: a collection of images covering the entire spatial extent of the target ROI.
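The scene definition above, like the “Available area” option in Appendix A.2, comes down to asking how much of the ROI a set of tiles covers. The following is a minimal sketch. It assumes tile footprints and the ROI are simplified to axis-aligned bounding boxes in a common projected coordinate system. Real footprints are polygons, and a geometry library would be the natural choice for them. The function names are hypothetical.

```python
from typing import List, Tuple

# A box is (min_x, min_y, max_x, max_y) in a shared projected CRS (assumption).
Box = Tuple[float, float, float, float]


def covered_fraction(roi: Box, tiles: List[Box]) -> float:
    """Fraction of the ROI covered by the union of tile boxes.

    Coordinate compression: every tile edge splits the ROI into elementary
    cells, and each cell lies either entirely inside some tile or outside all.
    """
    rx0, ry0, rx1, ry1 = roi
    clipped = []
    for x0, y0, x1, y1 in tiles:
        # Clip each tile to the ROI and drop tiles that do not intersect it.
        cx0, cy0, cx1, cy1 = max(x0, rx0), max(y0, ry0), min(x1, rx1), min(y1, ry1)
        if cx0 < cx1 and cy0 < cy1:
            clipped.append((cx0, cy0, cx1, cy1))
    xs = sorted({rx0, rx1, *(c[0] for c in clipped), *(c[2] for c in clipped)})
    ys = sorted({ry0, ry1, *(c[1] for c in clipped), *(c[3] for c in clipped)})
    covered = 0.0
    for xa, xb in zip(xs, xs[1:]):
        for ya, yb in zip(ys, ys[1:]):
            mx, my = (xa + xb) / 2, (ya + yb) / 2  # cell centre
            if any(c[0] <= mx <= c[2] and c[1] <= my <= c[3] for c in clipped):
                covered += (xb - xa) * (yb - ya)  # overlapping tiles count once
    return covered / ((rx1 - rx0) * (ry1 - ry0))


def forms_scene(roi: Box, tiles: List[Box]) -> bool:
    """True if the tiles jointly cover the whole ROI."""
    return covered_fraction(roi, tiles) >= 1.0 - 1e-9
```

Overlapping tiles, as between neighbouring Sentinel-2 granules, are counted only once because each elementary cell is tested rather than each tile.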

2.3. Data Download and Access

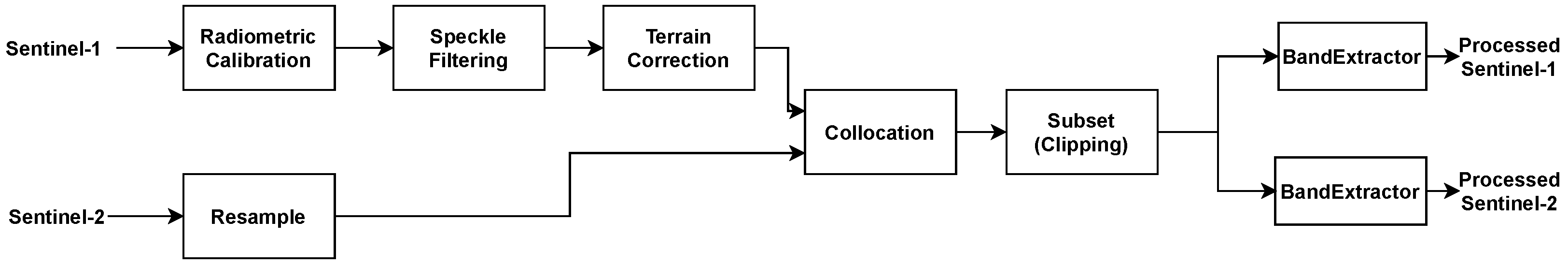

2.4. Processing Pipelines

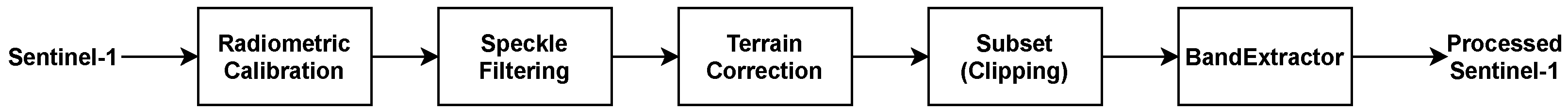

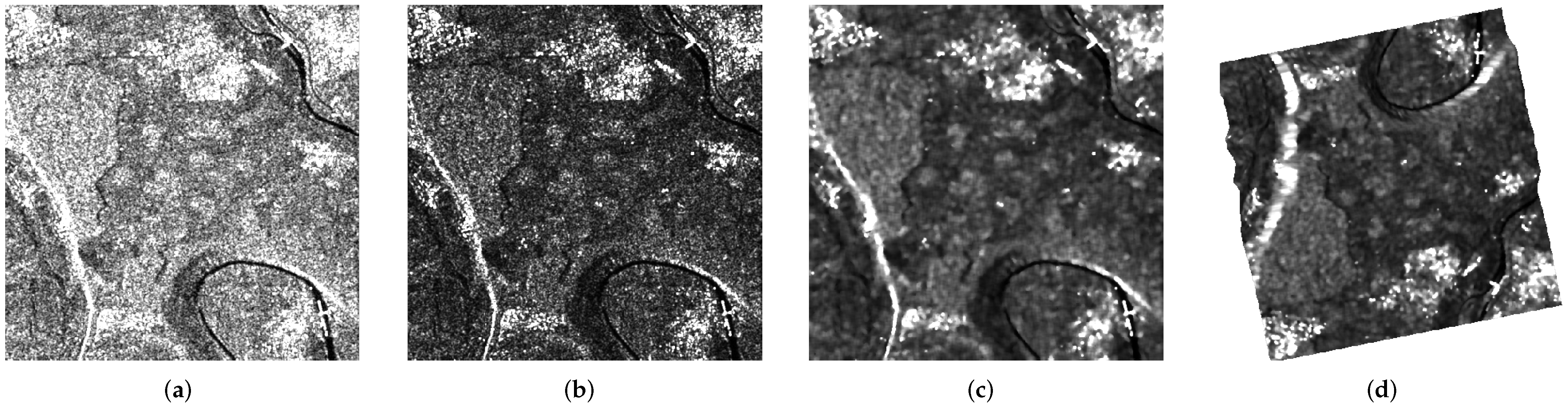

2.4.1. Sentinel-1 Product Processing

2.4.2. Sentinel-2 Product Processing

2.4.3. Collocation of Sentinel-1 and Sentinel-2 Products

2.5. Patch Creation and Output

2.6. Docker and Parallel Processing

3. Results

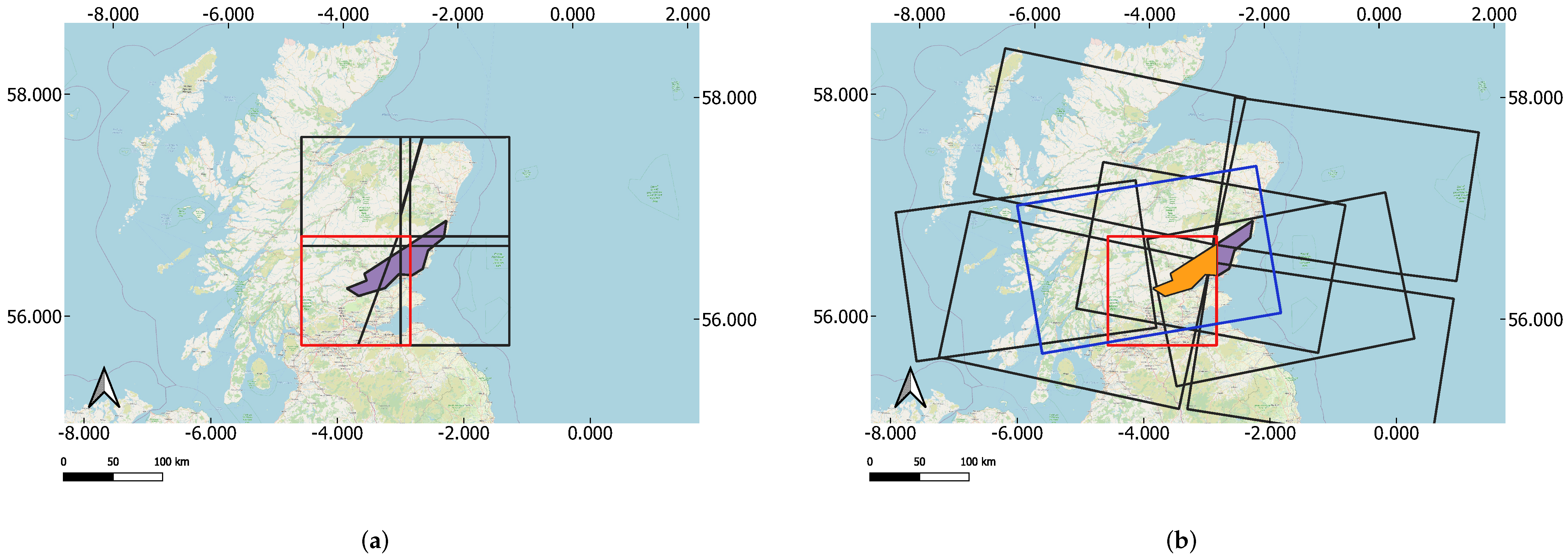

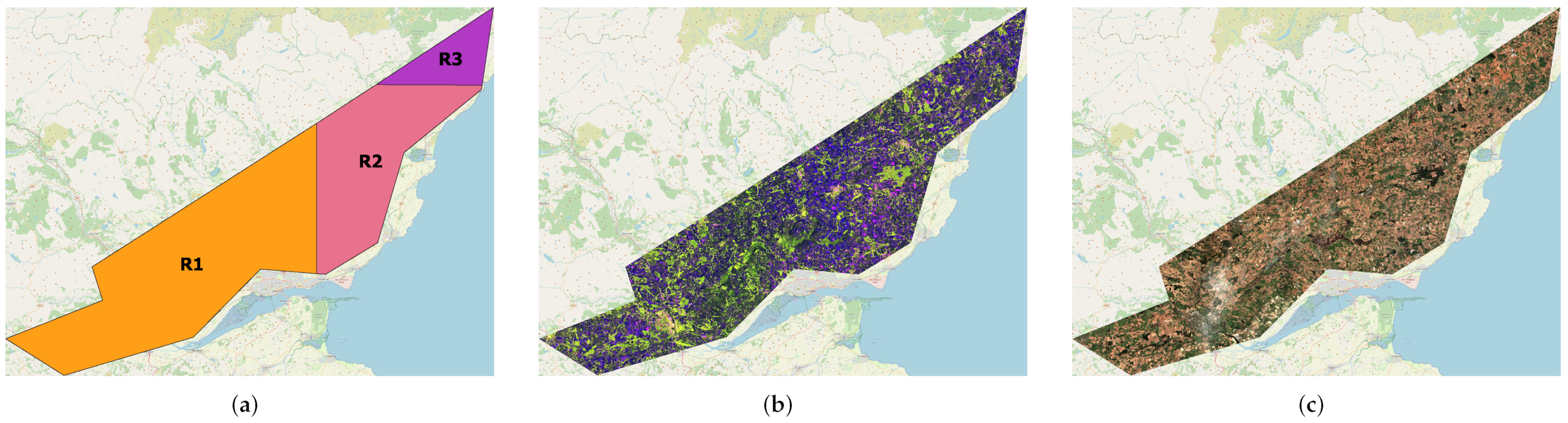

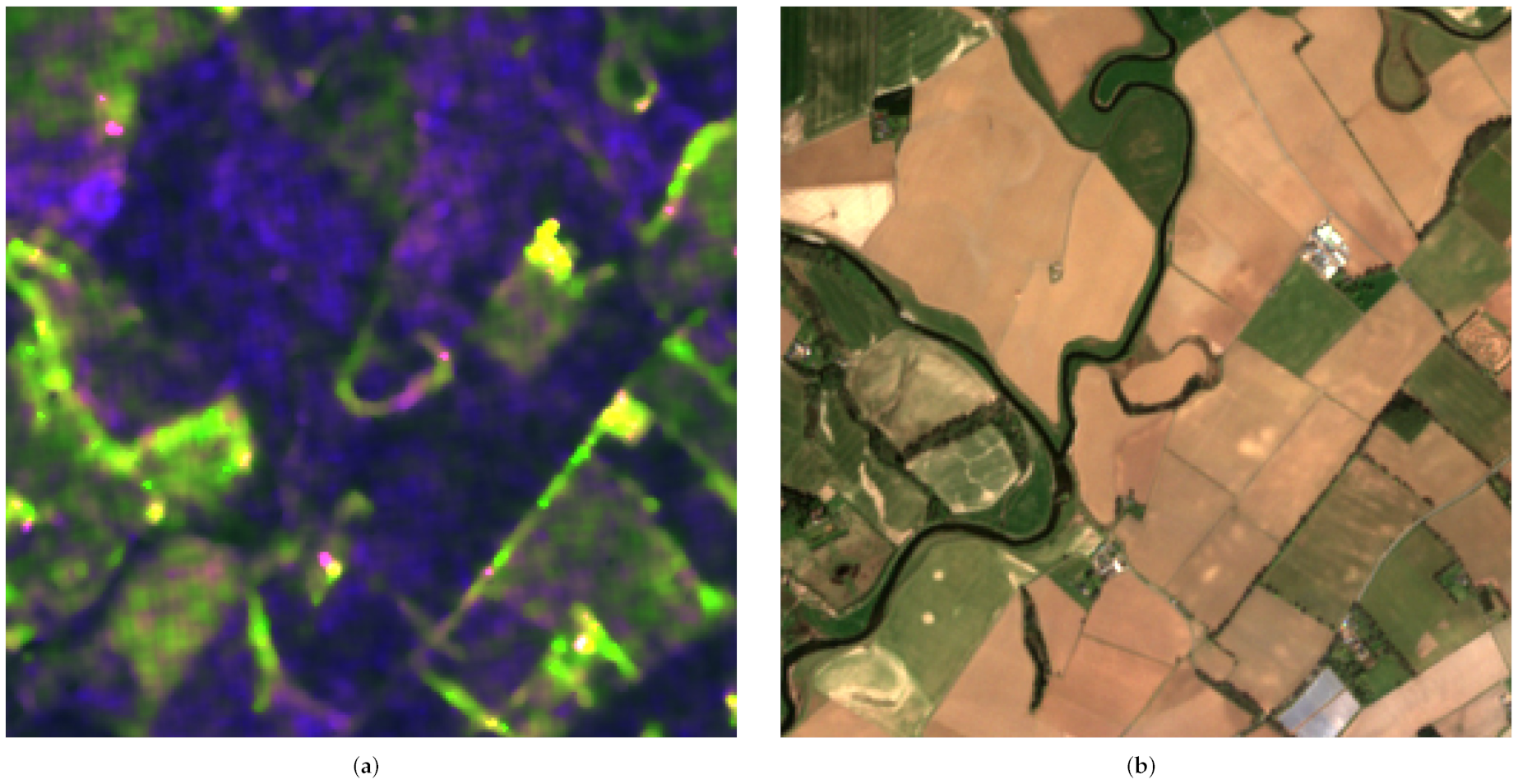

3.1. Case Study: ARD Generation

3.2. Challenges and Optimisations

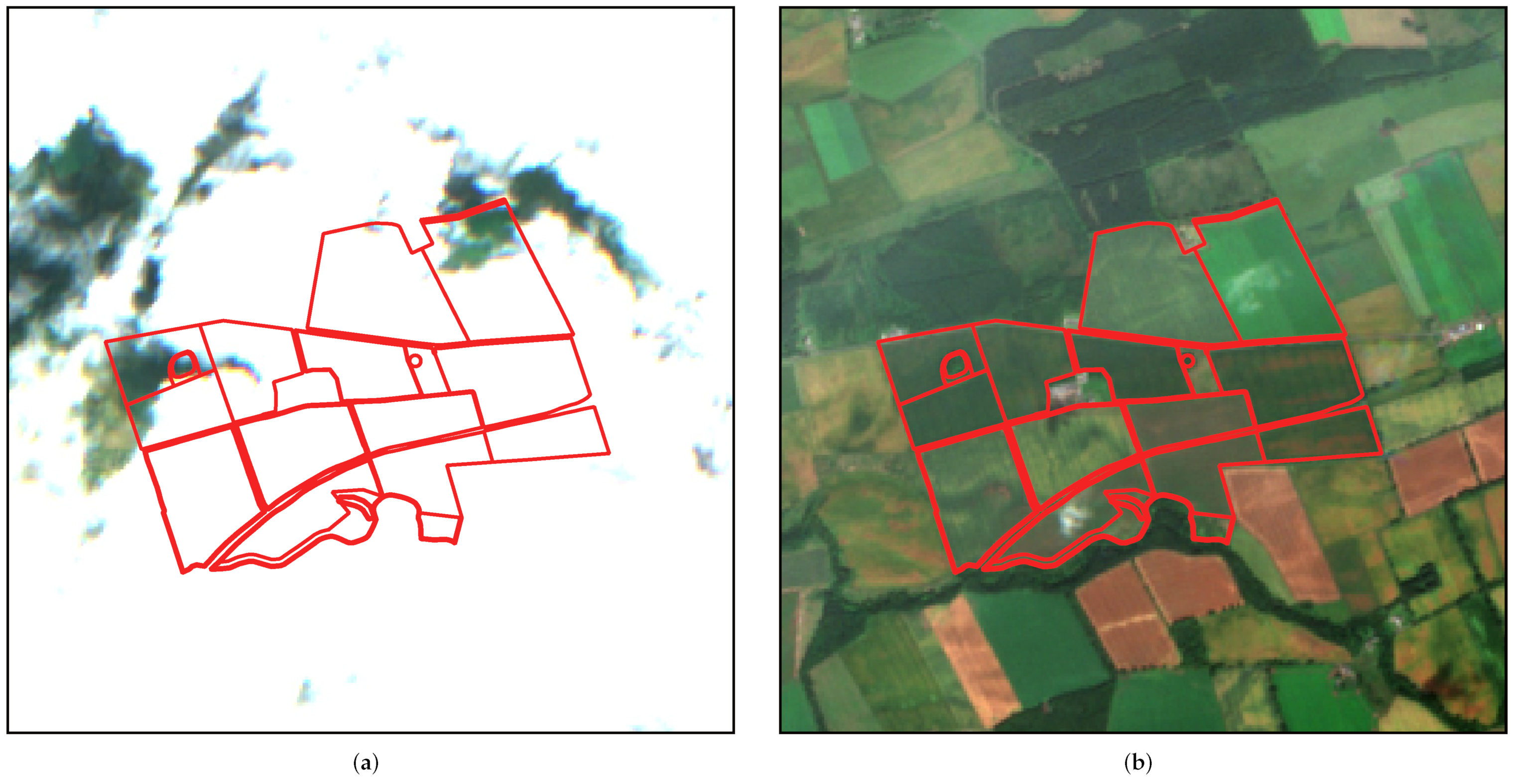

3.2.1. Sentinel-2 Product Selection Based on Cloud Criteria

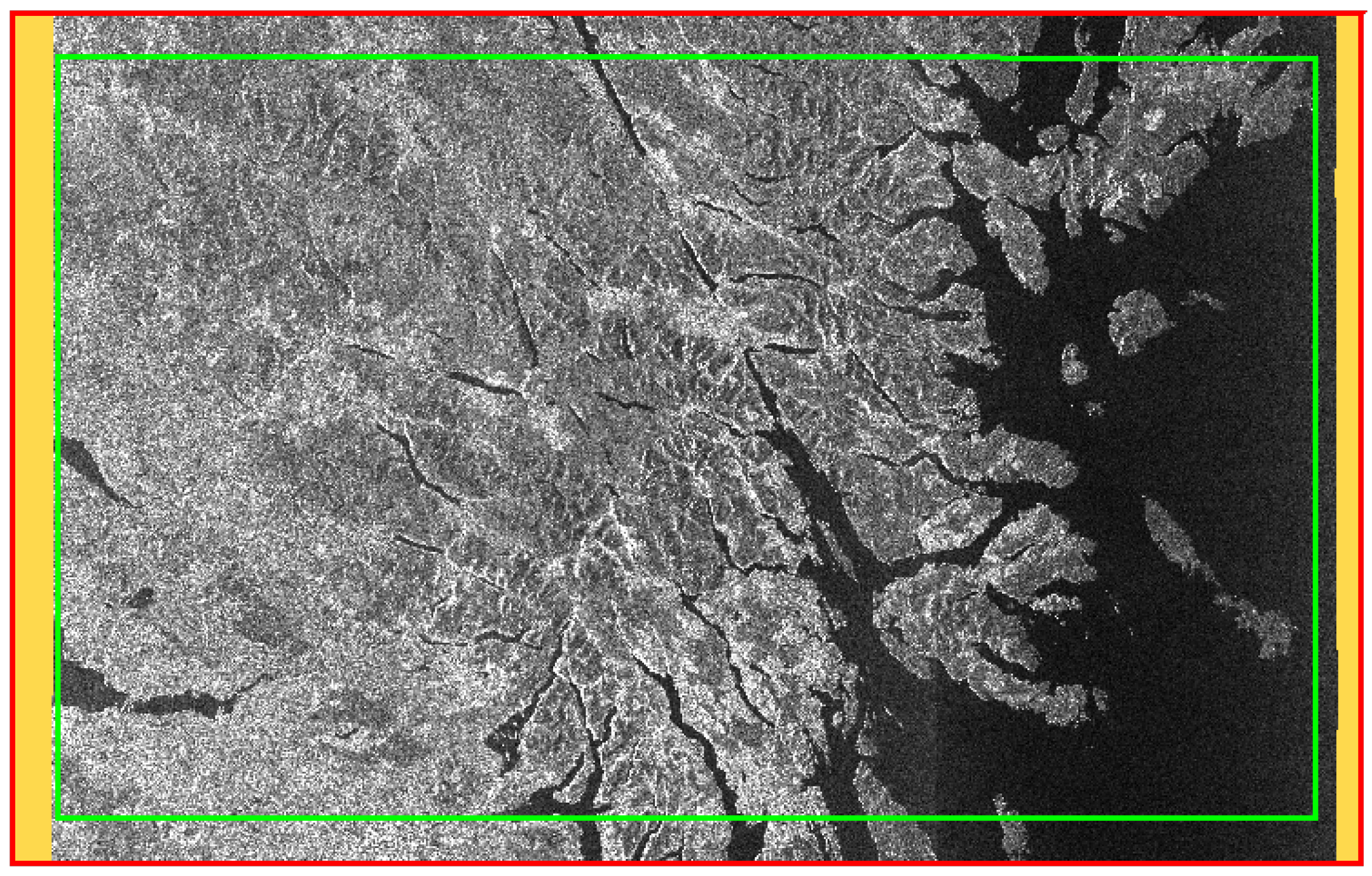
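Section 3.2.1 concerns selecting Sentinel-2 products by cloud criteria. The `cloud mask filtering` option in Appendix A.2 builds a maximally cloud-free image from the per-pixel scene classification mask. The sketch below shows one way such a per-pixel composite could work. It is not the framework's implementation, and it rests on several assumptions:

- The Level-2A Scene Classification (SCL) codes 0 (no data), 1 (saturated or defective), 3 (cloud shadows), 8 and 9 (cloud, medium and high probability) and 10 (thin cirrus) mark unusable pixels.
- Products are ordered by preference.
- Each SCL mask has already been resampled to the band's pixel grid.

```python
from typing import List, Optional, Sequence

# SCL codes treated as unusable in this sketch (the choice of classes is an assumption).
UNUSABLE_SCL = {0, 1, 3, 8, 9, 10}

Grid = List[List[float]]
Mask = List[List[int]]


def cloud_free_composite(bands: Sequence[Grid],
                         scls: Sequence[Mask]) -> List[List[Optional[float]]]:
    """Per pixel, take the value from the first product whose SCL class is usable.

    `bands` and `scls` are aligned lists ordered by priority (e.g. least cloudy
    tile first). Pixels unusable in every product are left as None.
    """
    rows, cols = len(bands[0]), len(bands[0][0])
    out: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]
    for band, scl in zip(bands, scls):
        for r in range(rows):
            for c in range(cols):
                if out[r][c] is None and scl[r][c] not in UNUSABLE_SCL:
                    out[r][c] = band[r][c]
    return out
```

Filling from the highest-priority product first keeps as many pixels as possible from a single acquisition. Later products only patch the gaps.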

3.2.2. “No-Data” Values in Sentinel-1 Products

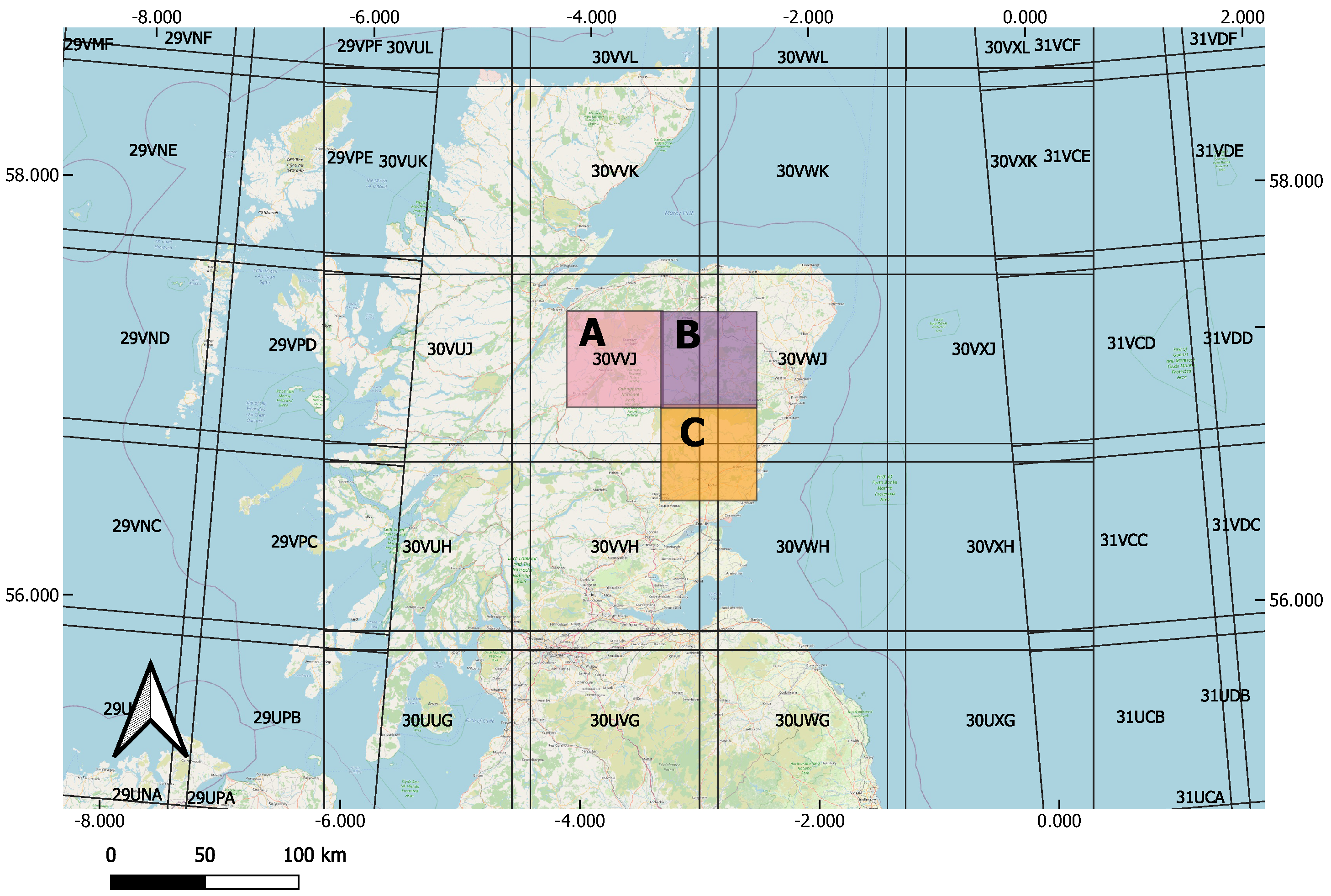
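Sentinel-1 products can contain “no-data” pixels, and these matter when patches are cut from the processed images. The following is a minimal sketch for quantifying and filtering them. It assumes no-data pixels are encoded as 0 or NaN, and that a patch is discarded when its no-data fraction exceeds a user-chosen threshold. Both choices are assumptions rather than the rule used in this work.

```python
import math
from typing import Sequence


def nodata_fraction(patch: Sequence[Sequence[float]], nodata: float = 0.0) -> float:
    """Share of pixels equal to `nodata` or NaN (assumed no-data encodings)."""
    total = sum(len(row) for row in patch)
    if total == 0:
        return 1.0  # an empty patch carries no data at all
    missing = sum(1 for row in patch for v in row if v == nodata or math.isnan(v))
    return missing / total


def keep_patch(patch: Sequence[Sequence[float]], max_nodata: float = 0.0,
               nodata: float = 0.0) -> bool:
    """Hypothetical filter: keep a patch only if its no-data share is at most `max_nodata`."""
    return nodata_fraction(patch, nodata) <= max_nodata
```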

3.3. Effect of Tile Overlap

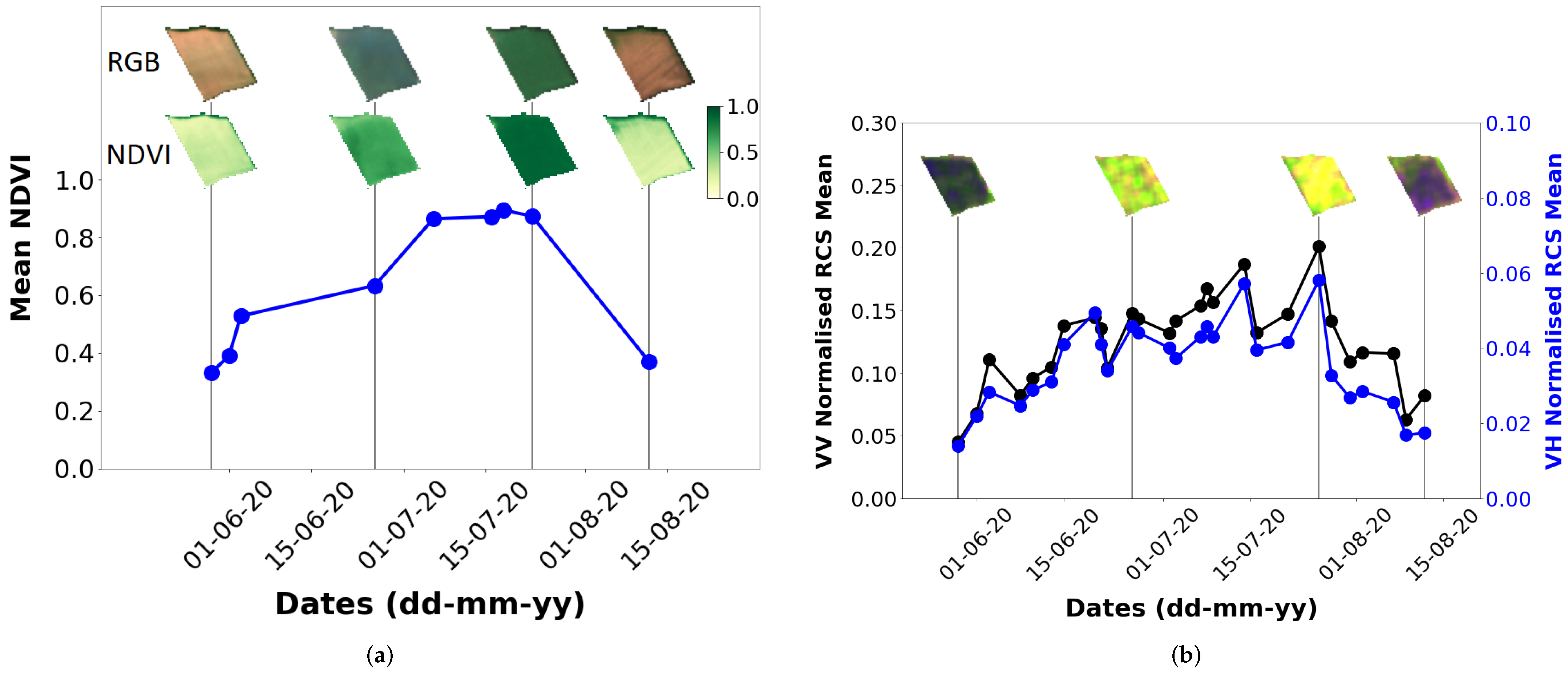

3.4. Application: Multi-Modal and Multi-Temporal ARD for Crop Monitoring

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

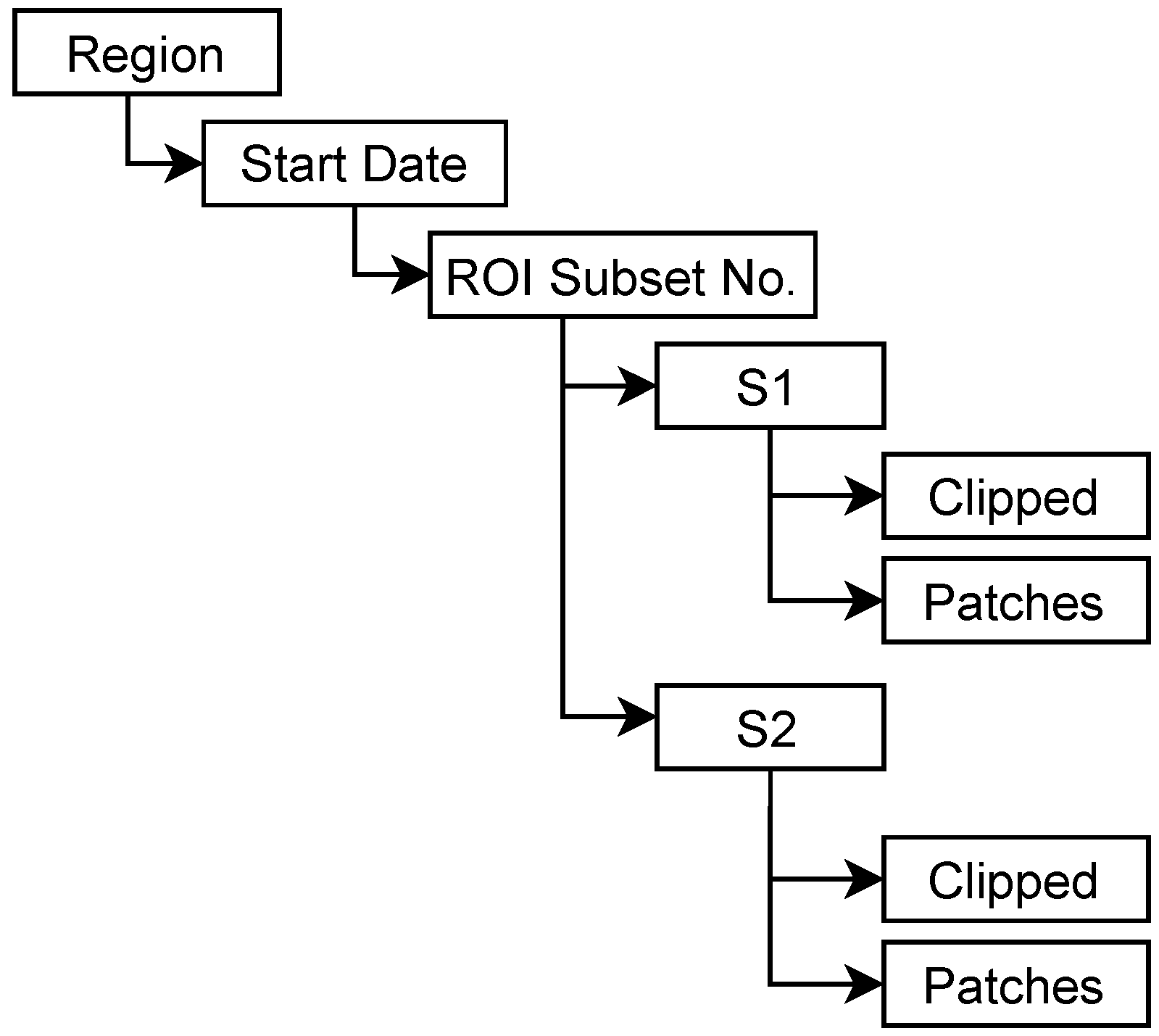

Appendix A.1. Naming Convention

Appendix A.2. Configuration Parameters

| Parameter | Description |
|---|---|
| Name | Sets the name of the output folder, following the naming convention described in Appendix A. |
| dates | Pair of dates (format YYYYMMDD) specifying the start and end of the period of interest. |
| geojson | GeoJSON string representing the ROI. |
| cloudcover | Pair of integers (range 0–100) specifying the lower and upper thresholds for tile-level cloud cover (%) when querying Sentinel-2 products. |
| cloud mask filtering | When enabled, builds a maximally cloud-free Sentinel-2 image using the per-pixel cloud mask derived from the scene classification mask. |
| size | Pair of integers specifying the row and column size, in pixels, of the patches to generate. |
| overlap | Pair of values specifying the horizontal and vertical overlap between patches, where 0 indicates no overlap and 1 indicates maximum overlap. |
| bands_S1 | Polarization bands required from Sentinel-1 GRD products. |
| bands_S2 | Multi-spectral and mask bands required from Sentinel-2 Level-2A products. |
| callback_snap | Configurable function used to run custom processing on each set of (potentially) multi-modal, multi-temporal products. |
| callback_find_products | Configurable function used to identify sets of multi-modal, multi-temporal products. |
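The `size` and `overlap` parameters determine where patches are cut from a processed image. The following is a minimal sketch of one possible patch grid, with hypothetical function names. It treats overlap as a fraction of the patch size between 0 and 1, and derives the stride as size × (1 − overlap), floored at one pixel so that 1 gives the densest grid. It also drops border pixels that do not fill a whole patch. All three choices are assumptions.

```python
from typing import List, Tuple


def patch_starts(length: int, size: int, overlap: float) -> List[int]:
    """Start offsets of patches along one image axis (hypothetical stride rule)."""
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must lie in [0, 1]")
    if size > length:
        return []
    # overlap=0 -> adjacent patches; overlap=1 -> one-pixel stride (densest grid).
    stride = max(1, round(size * (1.0 - overlap)))
    # Pixels beyond the last complete patch are not covered.
    return list(range(0, length - size + 1, stride))


def patch_windows(shape: Tuple[int, int], size: Tuple[int, int],
                  overlap: Tuple[float, float]) -> List[Tuple[int, int, int, int]]:
    """(row, col, height, width) windows; overlap is (horizontal, vertical)."""
    rows = patch_starts(shape[0], size[0], overlap[1])  # vertical overlap acts on rows
    cols = patch_starts(shape[1], size[1], overlap[0])  # horizontal overlap acts on columns
    return [(r, c, size[0], size[1]) for r in rows for c in cols]
```

For example, a 256 × 256 image with 128 × 128 patches and no overlap yields four windows. With overlap 0.5 along both axes, the stride halves to 64 pixels and the count rises to nine.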

| Parameter | Description |
|---|---|
| Rebuild | Deletes any previously processed products and rebuilds them. |
| Skip week | Skips any week that does not yield products covering the complete ROI. |
| Primary product | Selects Sentinel-1 or Sentinel-2 as the primary product (default: Sentinel-2). Secondary products are selected around the primary product within the “Secondary Time Delta” window. |
| Skip secondary | Skips the listing and processing of secondary products; used when only one of Sentinel-1 or Sentinel-2 is relevant. |
| External Bucket | Checks external sources (AWS, Google, Sentinel Hub, ASF) for Long-Term Archived (LTA) products. |
| Available area | Lists the part of an ROI that matches the required specifications, even if the whole ROI is not available. |
| Secondary Time Delta | Maximum time difference, in days, between primary and secondary products. |
| Primary product frequency | Interval, in days, between successive primary products. |
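The `Primary product`, `Secondary Time Delta` and `Primary product frequency` options together describe how primary and secondary acquisitions are paired. The following is a minimal sketch of one plausible pairing rule. It assumes primary acquisitions are first thinned so that consecutive selections are at least `frequency_days` apart. Each selected primary is then matched to the closest secondary acquisition within ±`delta_days`. The function names and the tie-breaking rule are hypothetical.

```python
from datetime import date
from typing import Dict, List, Optional


def thin_primary(dates: List[date], frequency_days: int) -> List[date]:
    """Keep primary acquisitions at least `frequency_days` apart, earliest first."""
    kept: List[date] = []
    for d in sorted(dates):
        if not kept or (d - kept[-1]).days >= frequency_days:
            kept.append(d)
    return kept


def pair_secondary(primary: List[date], secondary: List[date],
                   delta_days: int) -> Dict[date, Optional[date]]:
    """Map each primary date to the closest secondary date within +/- delta_days, else None."""
    pairs: Dict[date, Optional[date]] = {}
    for p in primary:
        window = [s for s in secondary if abs((s - p).days) <= delta_days]
        # Closest in time wins; ties go to the earlier acquisition.
        pairs[p] = min(window, key=lambda s: (abs((s - p).days), s)) if window else None
    return pairs
```

A primary mapped to `None` has no usable secondary product in its window. How such gaps are handled, for instance by skipping that period, is left to the caller in this sketch.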


| ROI Subset | ROI Subset Area (%) | Sentinel-1 Product | Sentinel-2 Product |
|---|---|---|---|
| R1 | 60.91 | S1A_IW_GRDH_1SDV_20210421T175920_20210421T175945_037552_046DBA_1823 | S2B_MSIL2A_20210422T113309_N0300_R080_T30VVH_20210422T130934 |
| R2 | 30.79 | S1B_IW_GRDH_1SDV_20210422T175020_20210422T175045_026583_032CA4_6AA2 | S2B_MSIL2A_20210422T113309_N0300_R080_T30VWH_20210422T130934 |
| R3 | 8.29 | S1B_IW_GRDH_1SDV_20210422T175020_20210422T175045_026583_032CA4_6AA2 | S2B_MSIL2A_20210422T113309_N0300_R080_T30VWJ_20210422T130934 |
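The product identifiers in the table above encode the information needed to reason about tiles and acquisition times. The sketch below splits them into fields following ESA's published naming conventions for Sentinel-1 and Sentinel-2 products, as we understand them. It uses the Sentinel-2 sensing time and the Sentinel-1 start time as proxies for acquisition time, which is a simplification. The function names are hypothetical and do not come from the framework.

```python
from datetime import datetime
from typing import Any, Dict


def _strip_safe(name: str) -> str:
    # Product names are often distributed with a ".SAFE" suffix.
    return name[:-5] if name.endswith(".SAFE") else name


def parse_s1_name(name: str) -> Dict[str, Any]:
    """Fields of a Sentinel-1 product name, e.g. S1A_IW_GRDH_1SDV_..."""
    (mission, mode, type_res, level_class_pol, start, stop,
     orbit, datatake, uid) = _strip_safe(name).split("_")
    return {
        "mission": mission,                   # S1A / S1B
        "mode": mode,                         # acquisition mode, e.g. IW
        "product_type": type_res[:3],         # e.g. GRD
        "resolution": type_res[3:],           # e.g. H (high resolution)
        "polarisation": level_class_pol[2:],  # e.g. DV (dual, VV+VH)
        "start": datetime.strptime(start, "%Y%m%dT%H%M%S"),
        "stop": datetime.strptime(stop, "%Y%m%dT%H%M%S"),
        "absolute_orbit": int(orbit),
        "datatake_id": datatake,
        "unique_id": uid,
    }


def parse_s2_name(name: str) -> Dict[str, Any]:
    """Fields of a Sentinel-2 product name, e.g. S2B_MSIL2A_..."""
    (mission, msi_level, sensing, baseline, rel_orbit,
     tile, discriminator) = _strip_safe(name).split("_")
    return {
        "mission": mission,                   # S2A / S2B
        "level": msi_level[3:],               # "MSIL2A" -> "L2A"
        "sensing": datetime.strptime(sensing, "%Y%m%dT%H%M%S"),
        "baseline": baseline[1:],             # "N0300" -> "0300"
        "relative_orbit": int(rel_orbit[1:]),  # "R080" -> 80
        "tile": tile[1:],                     # "T30VVH" -> "30VVH"
        "discriminator": discriminator,
    }


def acquisition_gap_seconds(s1_name: str, s2_name: str) -> float:
    """Absolute time between the S1 start time and the S2 sensing time."""
    gap = parse_s2_name(s2_name)["sensing"] - parse_s1_name(s1_name)["start"]
    return abs(gap.total_seconds())
```

Applied to the table, the Sentinel-1 and Sentinel-2 products for R1 are 63,229 s (about 17.6 h) apart. For R2 and R3 the gap is 22,631 s (about 6.3 h).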

| Scenario | Sentinel-2 Tile Coverage | Selection Time (s) | Processing Time (s) | Total Time (s) |
|---|---|---|---|---|
| A | 1 | 1.2 | 267.8 | 269.0 |
| B | 2 | 2.2 | 343.4 | 345.7 |
| C | 4 | 3.4 | 442.7 | 446.2 |
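The timing table can be read in relative terms. The short calculation below uses only the values copied from the table. For each scenario it computes the total time relative to scenario A and the total time per Sentinel-2 tile. It also computes the residual between the reported total and the sum of selection and processing times.

```python
from typing import Dict, Tuple

# (Sentinel-2 tiles, selection time s, processing time s, total time s), copied from the table above.
TIMINGS: Dict[str, Tuple[int, float, float, float]] = {
    "A": (1, 1.2, 267.8, 269.0),
    "B": (2, 2.2, 343.4, 345.7),
    "C": (4, 3.4, 442.7, 446.2),
}


def summarise(timings: Dict[str, Tuple[int, float, float, float]]
              ) -> Dict[str, Tuple[float, float, float]]:
    """Per scenario: total relative to A, total per tile, total minus (selection + processing)."""
    base_total = timings["A"][3]
    return {
        name: (total / base_total, total / tiles, total - (sel + proc))
        for name, (tiles, sel, proc, total) in timings.items()
    }


for name, (ratio, per_tile, residual) in summarise(TIMINGS).items():
    print(f"{name}: {ratio:.2f} x A, {per_tile:.1f} s per tile, residual {residual:+.1f} s")
```

Going from one to four tiles multiplies the total time by roughly 1.66, while the time per tile falls from 269.0 s to about 111.6 s. The 0.1 s residuals in scenarios B and C are presumably due to rounding of the individual columns.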

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Upadhyay, P., Czerkawski, M., Davison, C., Cardona, J., Macdonald, M., Andonovic, I., Michie, C., Atkinson, R., Papadopoulou, N., Nikas, K., & Tachtatzis, C. (2022). A Flexible Multi-Temporal and Multi-Modal Framework for Sentinel-1 and Sentinel-2 Analysis Ready Data. Remote Sensing, 14(5), 1120. https://doi.org/10.3390/rs14051120