Combining Spectral and Textural Information from UAV RGB Images for Leaf Area Index Monitoring in Kiwifruit Orchard

Abstract

1. Introduction

2. Materials and Methods

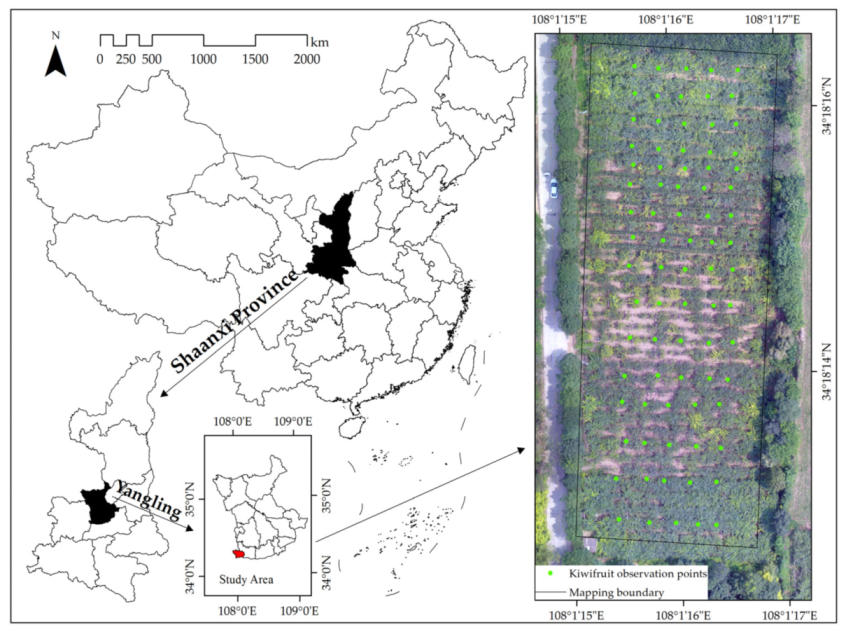

2.1. Study Area Overview

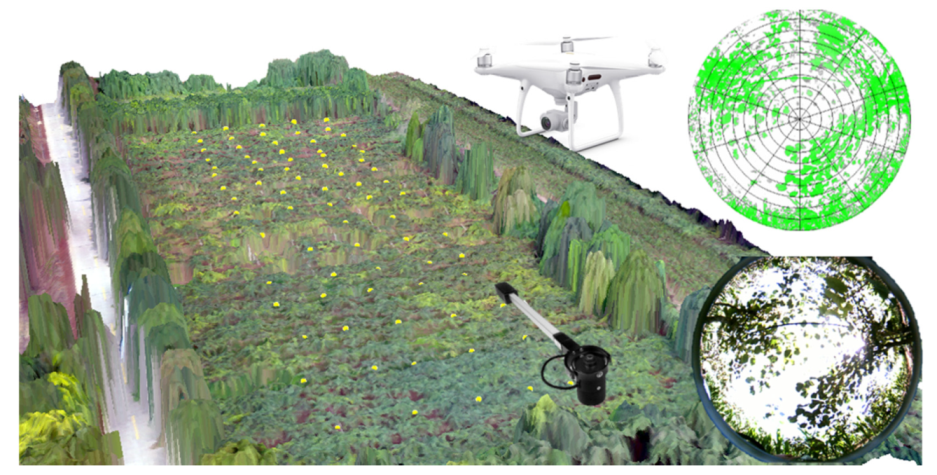

2.2. Data Acquisition

2.2.1. LAI Measurement of Kiwi Orchard

2.2.2. UAV RGB Image Acquisition and Preprocessing

2.3. Methods

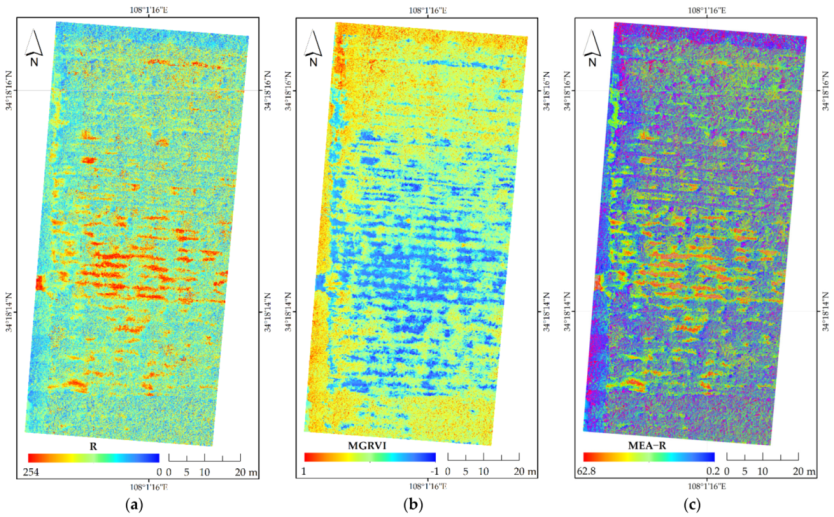

2.3.1. Extraction of Spectral and Texture Features

2.3.2. Model Calibration and Evaluation

3. Results

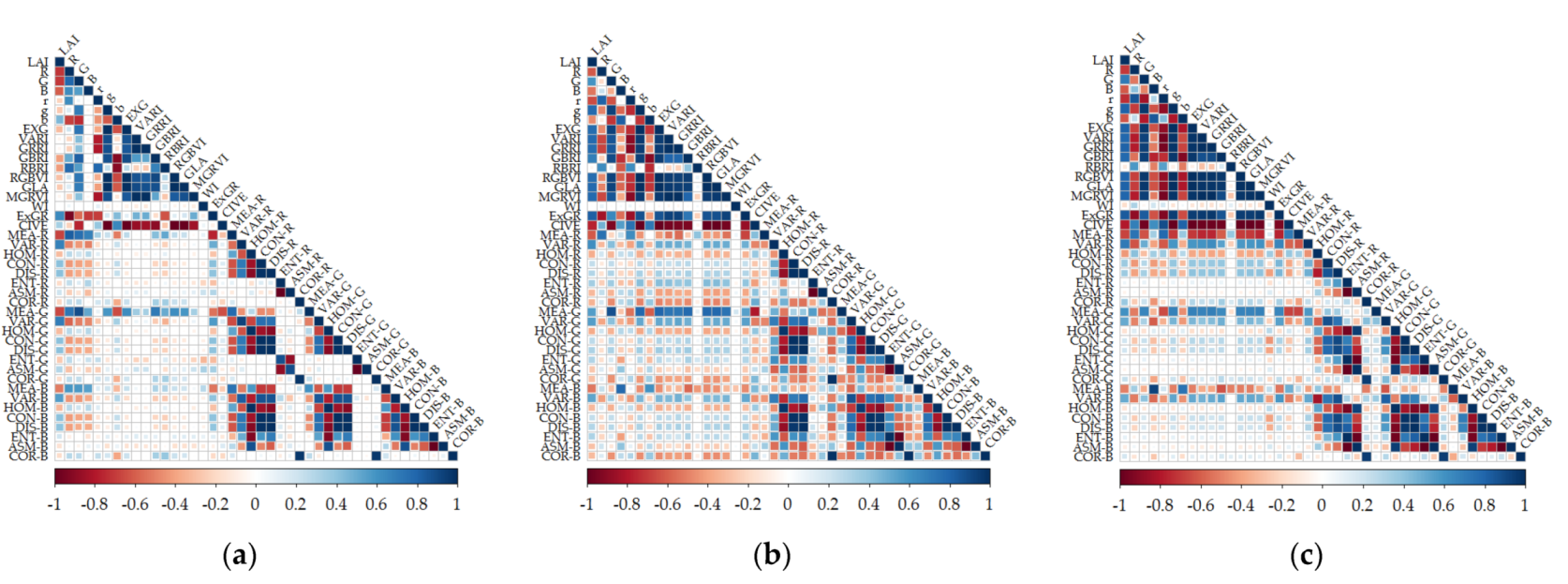

3.1. Correlation between LAI and UAV RGB Image Parameters

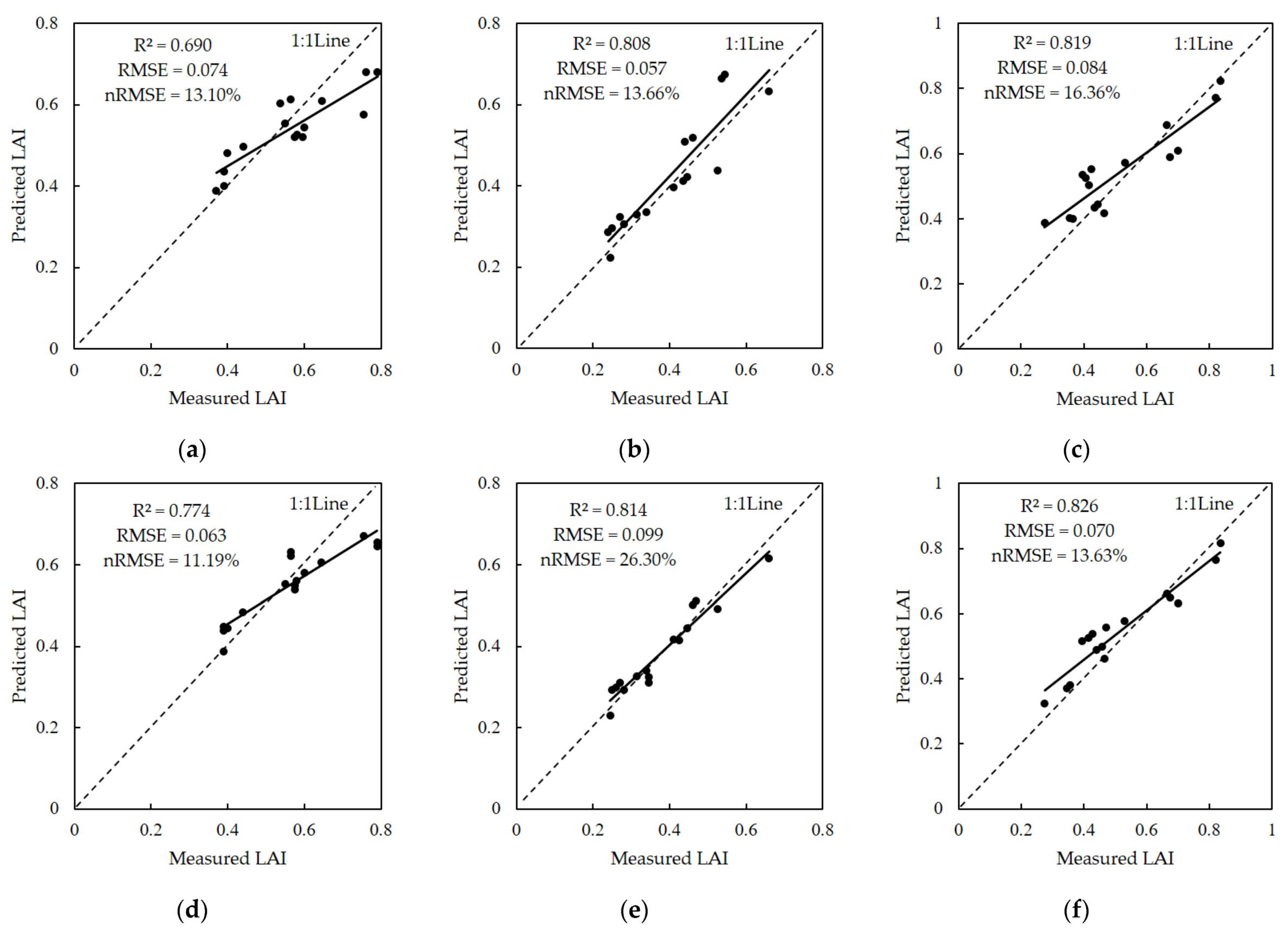

3.2. LAI Modeling and Accuracy Verification

3.2.1. Unitary Linear Model Construction and Precision Analysis

3.2.2. LAI Estimation Models Established by Spectral Index Only

3.2.3. LAI Estimation Models Combined with Texture Features

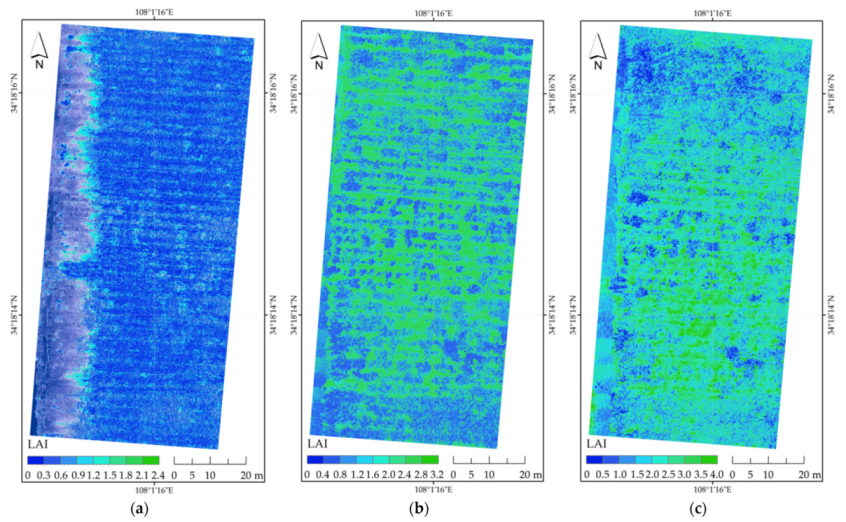

3.3. Model Selection and Inversion Mapping

4. Discussion

4.1. Feasibility of LAI Estimation by UAV RGB Images

4.2. Advantages of Estimation after Combining with Texture Features

4.3. Model Optimization Selection of LAI

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Tian, Y.; Huang, H.; Zhou, G.; Zhang, Q.; Tao, J.; Zhang, Y.; Lin, J. Aboveground mangrove biomass estimation in Beibu Gulf using machine learning and UAV remote sensing. Sci. Total Environ. 2021, 781, 146816.

- Kong, B.; Yu, H.; Du, R.; Wang, Q. Quantitative Estimation of Biomass of Alpine Grasslands Using Hyperspectral Remote Sensing. Rangel. Ecol. Manag. 2019, 72, 336–346.

- Ali, A.; Imran, M. Evaluating the potential of red edge position (REP) of hyperspectral remote sensing data for real time estimation of LAI & chlorophyll content of kinnow mandarin (Citrus reticulata) fruit orchards. Sci. Hortic. 2020, 267, 109326.

- Zhang, Y.; Hui, J.; Qin, Q.; Sun, Y.; Zhang, T.; Sun, H.; Li, M. Transfer-learning-based approach for leaf chlorophyll content estimation of winter wheat from hyperspectral data. Remote Sens. Environ. 2021, 267, 112724.

- Gano, B.; Dembele, J.S.B.; Ndour, A.; Luquet, D.; Beurier, G.; Diouf, D.; Audebert, A. Using UAV Borne, Multi-Spectral Imaging for the Field Phenotyping of Shoot Biomass, Leaf Area Index and Height of West African Sorghum Varieties under Two Contrasted Water Conditions. Agronomy 2021, 11, 850.

- Zhang, J.; Li, M.; Sun, Z.; Liu, H.; Sun, H.; Yang, W. Chlorophyll Content Detection of Field Maize Using RGB-NIR Camera. IFAC-Paper 2018, 51, 700–705.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Wan, L.; Cen, H.; Zhu, J.; Zhang, J.; Zhu, Y.; Sun, D.; Du, X.; Zhai, L.; Weng, H.; Li, Y.; et al. Grain yield prediction of rice using multi-temporal UAV-based RGB and multispectral images and model transfer—Case study of small farmlands in the South of China. Agric. For. Meteorol. 2020, 291, 108096.

- Li, B.; Xu, X.; Zhang, L.; Han, J.; Bian, C.; Li, G.; Liu, J.; Jin, L. Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2020, 162, 161–172.

- Zheng, H.; Cheng, T.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Combining Unmanned Aerial Vehicle (UAV)-Based Multispectral Imagery and Ground-Based Hyperspectral Data for Plant Nitrogen Concentration Estimation in Rice. Front. Plant Sci. 2018, 9, 936.

- Qiu, Z.; Ma, F.; Li, Z.; Xu, X.; Ge, H.; Du, C. Estimation of nitrogen nutrition index in rice from UAV RGB images coupled with machine learning algorithms. Comput. Electron. Agric. 2021, 189, 106421.

- Zhou, Y.; Lao, C.; Yang, Y.; Zhang, Z.; Chen, H.; Chen, Y.; Chen, J.; Ning, J.; Yang, N. Diagnosis of winter-wheat water stress based on UAV-borne multispectral image texture and vegetation indices. Agric. Water Manag. 2021, 256, 107076.

- Lama, G.F.C.; Crimaldi, M.; Pasquino, V.; Padulano, R.; Chirico, G.B. Bulk Drag Predictions of Riparian Arundo donax Stands through UAV-Acquired Multispectral Images. Water 2021, 13, 1333.

- Taddia, Y.; Russo, P.; Lovo, S.; Pellegrinelli, A. Multispectral UAV monitoring of submerged seaweed in shallow water. Appl. Geomat. 2020, 12, 19–34.

- Fernández-Lozano, J.; Sanz-Ablanedo, E. Unraveling the Morphological Constraints on Roman Gold Mining Hydraulic Infrastructure in NW Spain. A UAV-Derived Photogrammetric and Multispectral Approach. Remote Sens. 2021, 13, 291.

- Benos, L.; Tagarakis, A.C.; Dolias, G.; Berruto, R.; Kateris, D.; Bochtis, D. Machine Learning in Agriculture: A Comprehensive Updated Review. Sensors 2021, 21, 3758.

- Sadeghifar, T.; Lama, G.F.C.; Sihag, P.; Bayram, A.; Kisi, O. Wave height predictions in complex sea flows through soft-computing models: Case study of Persian Gulf. Ocean Eng. 2022, 245, 110467.

- Hashim, W.; Eng, L.S.; Alkawsi, G.; Ismail, R.; Alkahtani, A.A.; Dzulkifly, S.; Baashar, Y.; Hussain, A. A Hybrid Vegetation Detection Framework: Integrating Vegetation Indices and Convolutional Neural Network. Symmetry 2021, 13, 2190.

- Watson, D.J. Comparative Physiological Studies on the Growth of Field Crops: I. Variation in Net Assimilation Rate and Leaf Area between Species and Varieties, and within and between Years. Ann. Bot. 1947, 11, 41–76.

- Negrón Juárez, R.I.; da Rocha, H.R.; e Figueira, A.M.S.; Goulden, M.L.; Miller, S.D. An improved estimate of leaf area index based on the histogram analysis of hemispherical photographs. Agric. For. Meteorol. 2009, 149, 920–928.

- Vose, J.M.; Barton, D.; Clinton, N.H.; Sullivan, P.V.B. Vertical leaf area distribution, light transmittance, and application of the Beer–Lambert Law in four mature hardwood stands in the southern Appalachians. Can. J. For. Res. 1995, 25, 1036–1043.

- Wilhelm, W.W.; Ruwe, K.; Schlemmer, M.R. Comparison of three leaf area index meters in a corn canopy. Crop Sci. 2000, 40, 1179–1183.

- Glatthorn, J.; Pichler, V.; Hauck, M.; Leuschner, C. Effects of forest management on stand leaf area: Comparing beech production and primeval forests in Slovakia. For. Ecol. Manag. 2017, 389, 76–85.

- Jiang, S.; Zhao, L.; Liang, C.; Hu, X.; Yaosheng, W.; Gong, D.; Zheng, S.; Huang, Y.; He, Q.; Cui, N. Leaf- and ecosystem-scale water use efficiency and their controlling factors of a kiwifruit orchard in the humid region of Southwest China. Agric. Water Manag. 2022, 260, 107329.

- Srinet, R.; Nandy, S.; Patel, N.R. Estimating leaf area index and light extinction coefficient using Random Forest regression algorithm in a tropical moist deciduous forest, India. Ecol. Inform. 2019, 52, 94–102.

- Ren, B.; Li, L.; Dong, S.; Liu, P.; Zhao, B.; Zhang, J. Photosynthetic Characteristics of Summer Maize Hybrids with Different Plant Heights. Agron. J. 2017, 109, 1454.

- Hassanijalilian, O.; Igathinathane, C.; Doetkott, C.; Bajwa, S.; Nowatzki, J.; Haji Esmaeili, S.A. Chlorophyll estimation in soybean leaves infield with smartphone digital imaging and machine learning. Comput. Electron. Agric. 2020, 174, 105433.

- Lu, J.; Cheng, D.; Geng, C.; Zhang, Z.; Xiang, Y.; Hu, T. Combining plant height, canopy coverage and vegetation index from UAV-based RGB images to estimate leaf nitrogen concentration of summer maize. Biosyst. Eng. 2021, 202, 42–54.

- Raj, R.; Walker, J.P.; Pingale, R.; Nandan, R.; Naik, B.; Jagarlapudi, A. Leaf area index estimation using top-of-canopy airborne RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102282.

- Shao, G.; Han, W.; Zhang, H.; Liu, S.; Wang, Y.; Zhang, L.; Cui, X. Mapping maize crop coefficient Kc using random forest algorithm based on leaf area index and UAV-based multispectral vegetation indices. Agric. Water Manag. 2021, 252, 106906.

- Guo, Z.-c.; Wang, T.; Liu, S.-l.; Kang, W.-p.; Chen, X.; Feng, K.; Zhang, X.-q.; Zhi, Y. Biomass and vegetation coverage survey in the Mu Us sandy land-based on unmanned aerial vehicle RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 94, 102239.

- Li, Y.; Liu, H.; Ma, J.; Zhang, L. Estimation of leaf area index for winter wheat at early stages based on convolutional neural networks. Comput. Electron. Agric. 2021, 190, 106480.

- Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Flores, P.; Zhang, Z.; Igathinathane, C.; Jithin, M.; Naik, D.; Stenger, J.; Ransom, J.; Kiran, R. Distinguishing seedling volunteer corn from soybean through greenhouse color, color-infrared, and fused images using machine and deep learning. Ind. Crop. Prod. 2021, 161, 113223.

- Waheed, A.; Goyal, M.; Gupta, D.; Khanna, A.; Hassanien, A.E.; Pandey, H.M. An optimized dense convolutional neural network model for disease recognition and classification in corn leaf. Comput. Electron. Agric. 2020, 175, 105456.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVMVI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

- Guo, Y.; Fu, Y.H.; Chen, S.; Robin Bryant, C.; Li, X.; Senthilnath, J.; Sun, H.; Wang, S.; Wu, Z.; de Beurs, K. Integrating spectral and textural information for identifying the tasseling date of summer maize using UAV based RGB images. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102435.

- Sumesh, K.C.; Ninsawat, S.; Som-ard, J. Integration of RGB-based vegetation index, crop surface model and object-based image analysis approach for sugarcane yield estimation using unmanned aerial vehicle. Comput. Electron. Agric. 2021, 180, 105903.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Laliberte, A.S.; Rango, A. Texture and Scale in Object-Based Analysis of Subdecimeter Resolution Unmanned Aerial Vehicle (UAV) Imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770.

- Murray, H.; Lucieer, A.; Williams, R. Texture-based classification of sub-Antarctic vegetation communities on Heard Island. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 138–149.

- Kelsey, K.C.; Neff, J.C. Estimates of Aboveground Biomass from Texture Analysis of Landsat Imagery. Remote Sens. 2014, 6, 6407–6422.

- Sarker, L.R.; Nichol, J.E. Improved forest biomass estimates using ALOS AVNIR-2 texture indices. Remote Sens. Environ. 2011, 115, 968–977.

- Chen, J.M.; Cihlar, J. Retrieving leaf area index of boreal conifer forests using Landsat TM images. Remote Sens. Environ. 1996, 55, 153–162.

- Torres-Sánchez, J.; Pena, J.M.; De Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

- Soudani, K.; François, C.; Maire, G.L.; Dantec, V.L.; Dufrêne, E. Comparative analysis of IKONOS, SPOT, and ETM+ data for leaf area index estimation in temperate coniferous and deciduous forest stands. Remote Sens. Environ. 2006, 102, 161–175.

- Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.

- Sellaro, R.; Crepy, M.; Trupkin, S.A.; Karayekov, E.; Buchovsky, A.S.; Rossi, C.; Casal, J.J. Cryptochrome as a Sensor of the Blue/Green Ratio of Natural Radiation in Arabidopsis. Plant Physiol. 2010, 154, 401–409.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Zhang, J.; Tian, H.; Wang, D.; Li, H.; Mouazen, A.M. A novel spectral index for estimation of relative chlorophyll content of sugar beet. Comput. Electron. Agric. 2021, 184, 106088.

- Wu, J.; Wang, D.; Bauer, M.E. Assessing broadband vegetation indices and QuickBird data in estimating leaf area index of corn and potato canopies. Field Crop. Res. 2007, 102, 33–42.

- Meyer, G.E.; Neto, J.C. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

- Li, H.; Chen, Z.-x.; Jiang, Z.-w.; Wu, W.-b.; Ren, J.-q.; Liu, B.; Tuya, H. Comparative analysis of GF-1, HJ-1, and Landsat-8 data for estimating the leaf area index of winter wheat. J. Integr. Agric. 2017, 16, 266–285.

- Singh, A.; Ganapathysubramanian, B.; Singh, A.K.; Sarkar, S. Machine Learning for High-Throughput Stress Phenotyping in Plants. Trends Plant Sci. 2016, 21, 110–124.

- Cen, H.; Wan, L.; Zhu, J.; Li, Y.; Li, X.; Zhu, Y.; Weng, H.; Wu, W.; Yin, W.; Xu, C.; et al. Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 2019, 15, 32.

- Geipel, J.; Link, J.; Claupein, W. Combined Spectral and Spatial Modeling of Corn Yield Based on Aerial Images and Crop Surface Models Acquired with an Unmanned Aircraft System. Remote Sens. 2014, 6, 10335–10355.

- Yamaguchi, T.; Tanaka, Y.; Imachi, Y.; Yamashita, M.; Katsura, K. Feasibility of Combining Deep Learning and RGB Images Obtained by Unmanned Aerial Vehicle for Leaf Area Index Estimation in Rice. Remote Sens. 2021, 13, 84.

- Sun, B.; Wang, C.; Yang, C.; Xu, B.; Zhou, G.; Li, X.; Xie, J.; Xu, S.; Liu, B.; Xie, T.; et al. Retrieval of rapeseed leaf area index using the PROSAIL model with canopy coverage derived from UAV images as a correction parameter. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102373.

- Adnan, M.; Abaid-ur-Rehman, M.; Latif, M.A.; Ahmad, N.; Akhter, N. Mapping wheat crop phenology and the yield using machine learning (ML). Int. J. Adv. Comput. Sci. Appl. 2018, 9, 301–306.

- Liu, Y.; Heuvelink, G.B.M.; Bai, Z.; He, P.; Xu, X.; Ding, W.; Huang, S. Analysis of spatio-temporal variation of crop yield in China using stepwise multiple linear regression. Field Crop. Res. 2021, 264, 108098.

- Ta, N.; Chang, Q.; Zhang, Y. Estimation of Apple Tree Leaf Chlorophyll Content Based on Machine Learning Methods. Remote Sens. 2021, 13, 3902.

| Growth Stages | Date | Number of Images |
|---|---|---|
| Initial flowering stage (IF) | 8 May | 145 |
| Young fruit stage (YF) | 5 June | 144 |
| Fruit enlargement stage (FE) | 8 July | 146 |

| Parameters | Name | Formulas | Sources |
|---|---|---|---|
| R | DN value of Red Channel | | Conventional empirical parameters |
| G | DN value of Green Channel | | Conventional empirical parameters |
| B | DN value of Blue Channel | | Conventional empirical parameters |
| r | Normalized Redness Intensity | | Conventional empirical parameters |
| g | Normalized Greenness Intensity | | Conventional empirical parameters |
| b | Normalized Blueness Intensity | | Conventional empirical parameters |
| EXG | Excess Green Index | | [45] |
| VARI | Visible Atmospherically Resistant Index | | [46] |
| GRRI | Green Red Ratio Index | | [47] |
| GBRI | Green Blue Ratio Index | | [48] |
| RBRI | Red Blue Ratio Index | | [48] |
| RGBVI | Red Green Blue Vegetation Index | | [49] |
| GLA | Green Leaf Algorithm | | [50] |
| MGRVI | Modified Green Red Vegetation Index | | [49] |
| WI | Woebbecke Index | | [51] |
| ExGR | Excess Green Red Index | | [52] |
| CIVE | Color Index of Vegetation | | [53] |
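
The formula column of the table above did not survive extraction, so the following is only a minimal sketch of how such indices are commonly computed from the mean DN values of a plot. The expressions are the forms widely used in the literature and are an assumption here; the variants actually used in this study (for example raw DN versus normalized r, g, b) may differ. The function name `rgb_indices` is hypothetical.

```python
def rgb_indices(R, G, B):
    """Common RGB colour indices from mean DN values (R, G, B) of one plot.

    Assumption: these are widely used literature definitions, not
    necessarily the exact formulas of the original table.
    No guard against zero denominators (e.g. R == G for WI).
    """
    total = R + G + B
    r, g, b = R / total, G / total, B / total  # normalized intensities
    exg = 2 * g - r - b                        # Excess Green
    exr = 1.4 * r - g                          # Excess Red (helper for ExGR)
    return {
        "r": r, "g": g, "b": b,
        "EXG": exg,
        "ExGR": exg - exr,
        "VARI": (G - R) / (G + R - B),
        "GRRI": G / R,
        "GBRI": G / B,
        "RBRI": R / B,
        "RGBVI": (G**2 - B * R) / (G**2 + B * R),
        "GLA": (2 * G - R - B) / (2 * G + R + B),
        "MGRVI": (G**2 - R**2) / (G**2 + R**2),
        "WI": (G - B) / (R - G),
        "CIVE": 0.441 * R - 0.811 * G + 0.385 * B + 18.78745,
    }


# Hypothetical mean DN values for a single sampling plot
indices = rgb_indices(100.0, 150.0, 50.0)
```

Ratio-type indices are undefined when a denominator is zero, so a production version would need to mask such pixels or plots before modeling.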

| Parameters | Name | Formulas | Sources |
|---|---|---|---|
| MEA | Mean | | [39] |
| VAR | Variance | | [39] |
| HOM | Homogeneity | | [39] |
| CON | Contrast | | [39] |
| DIS | Dissimilarity | | [39] |
| ENT | Entropy | | [39] |
| ASM | Angular Second Moment | | [39] |
| COR | Correlation | | [39] |
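
The eight texture features listed above are grey-level co-occurrence matrix (GLCM) statistics in the sense of [39]. As a rough illustration, the sketch below builds a symmetric, normalized GLCM for one pixel offset and derives the eight statistics with their usual textbook definitions. The offset, number of grey levels and window size used in this study are not given here, so the values in the example are assumptions, and the function names are hypothetical.

```python
import math


def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM of a 2-D list of integer grey levels."""
    P = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                i, j = img[y][x], img[y2][x2]
                P[i][j] += 1
                P[j][i] += 1  # count both directions -> symmetric matrix
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P]


def texture_features(P):
    """MEA, VAR, HOM, CON, DIS, ENT, ASM and COR from a normalized GLCM."""
    n = len(P)
    cells = [(i, j, P[i][j]) for i in range(n) for j in range(n)]
    mea = sum(i * p for i, j, p in cells)
    var = sum((i - mea) ** 2 * p for i, j, p in cells)
    feats = {
        "MEA": mea,
        "VAR": var,
        "HOM": sum(p / (1 + (i - j) ** 2) for i, j, p in cells),
        "CON": sum((i - j) ** 2 * p for i, j, p in cells),
        "DIS": sum(abs(i - j) * p for i, j, p in cells),
        "ENT": -sum(p * math.log(p) for i, j, p in cells if p > 0),
        "ASM": sum(p * p for i, j, p in cells),
    }
    # For a symmetric GLCM the row and column means/variances coincide.
    cov = sum((i - mea) * (j - mea) * p for i, j, p in cells)
    feats["COR"] = cov / var if var > 0 else float("nan")
    return feats


# Small 4-level test image (hypothetical data), horizontal neighbour offset
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
features = texture_features(glcm(image, levels=4))
```

In practice each statistic would be computed per colour channel (giving names such as VAR_R or HOM_B) and usually over a moving window, which this sketch omits.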

| Set Name | Variables | Methods for Combination |
|---|---|---|
| α | R, G, ExGR, B, b, RBRI, GBRI, CIVE, EXG, RGBVI | Spectral indices highly correlated with LAI in IF |
| β | VAR_G, VAR_R, MEA_R, MEA_G, VAR_B, MEA_B, CON_R, CON_G, CON_B, DIS_R, DIS_G, DIS_B, HOM_B, HOM_R, HOM_G, ASM_G | Texture features highly correlated with LAI in IF |
| γ | R, G, B, r, g, b, EXG, VARI, GRRI, GBRI, RGBVI, GLA, MGRVI, ExGR, CIVE | Spectral indices highly correlated with LAI in YF and FE |
| δ | MEA_R, VAR_R, HOM_R, DIS_R, ENT_R, ASM_R, COR_R, MEA_G, VAR_G, HOM_G, DIS_G, ENT_G, COR_G, MEA_B, VAR_B, HOM_B, DIS_B, ENT_B, ASM_B, COR_B | Texture features highly correlated with LAI in YF and FE |

| Growth Stages | Independent Variable | Modeling Equation | R² | RMSE | nRMSE (%) |
|---|---|---|---|---|---|
| IF | R | y = 0.0005x² − 0.1028x + 5.2873 | 0.466 | 0.081 | 15.86 |
| YF | ExGR | y = −0.00001784x² + 0.00006079x + 1.004 | 0.719 | 0.061 | 14.22 |
| FE | ExGR | y = 0.00006931x² + 0.03275x + 4.215 | 0.736 | 0.108 | 17.84 |
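
As an illustration of how a model from the table above would be applied and scored, the sketch below evaluates the IF-stage quadratic in R and defines the three accuracy metrics. The metric definitions are assumptions: R² is taken as the coefficient of determination and nRMSE as RMSE divided by the mean observed value; the study may have normalized differently. The function names and sample values are hypothetical.

```python
import math


def predict_lai_if(red_dn):
    """IF-stage model from the table: y = 0.0005x^2 - 0.1028x + 5.2873."""
    return 0.0005 * red_dn ** 2 - 0.1028 * red_dn + 5.2873


def r2_score(obs, pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


def rmse(obs, pred):
    """Root mean square error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def nrmse_percent(obs, pred):
    """RMSE normalized by the mean observation, in percent (assumed form)."""
    return 100.0 * rmse(obs, pred) / (sum(obs) / len(obs))


# Hypothetical observed LAI values and red-channel DN values
observed = [0.30, 0.20, 0.10]
red_dn = [80.0, 84.0, 89.0]
predicted = [predict_lai_if(x) for x in red_dn]
scores = (r2_score(observed, predicted),
          rmse(observed, predicted),
          nrmse_percent(observed, predicted))
```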

| Growth Stages | Modeling Method | Spectral Parameters | AIC | R² | RMSE | nRMSE (%) |
|---|---|---|---|---|---|---|
| IF | SWR | G, b, GBRI | −323.23 | 0.541 | 0.075 | 14.70 |
| IF | RFR | α | - | 0.965 | 0.021 | 4.05 |
| YF | SWR | R, G, r, g, VARI, GRRI, GBRI, RGBVI, GLA | −365.10 | 0.819 | 0.049 | 11.55 |
| YF | RFR | γ | - | 0.973 | 0.019 | 4.42 |
| FE | SWR | R, G, g, GRRI, GBRI, MGRVI | −278.64 | 0.765 | 0.102 | 16.81 |
| FE | RFR | γ | - | 0.972 | 0.035 | 5.80 |
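
The SWR rows above report an AIC value for the selected variable subset. As a hedged illustration of how AIC can drive that selection, the sketch below scores candidate least-squares models with the common form AIC = n·ln(RSS/n) + 2k and keeps the lowest value. Whether the study used exactly this form is an assumption, and the residual sums of squares, sample size and function names are hypothetical.

```python
import math


def aic_least_squares(rss, n_samples, n_params):
    """AIC of a least-squares fit up to an additive constant:
    n * ln(RSS / n) + 2k, where k counts the fitted coefficients."""
    return n_samples * math.log(rss / n_samples) + 2 * n_params


def best_by_aic(candidates, n_samples):
    """candidates maps a model name to (rss, n_params).
    Returns the name with the lowest AIC and all scores."""
    scores = {name: aic_least_squares(rss, n_samples, k)
              for name, (rss, k) in candidates.items()}
    return min(scores, key=scores.get), scores


# Hypothetical residual sums of squares for nested candidate models
candidates = {
    "G": (1.00, 2),            # intercept + 1 predictor
    "G + b": (0.80, 3),
    "G + b + GBRI": (0.79, 4),
}
best, scores = best_by_aic(candidates, n_samples=100)
```

The third candidate lowers the residual error only slightly, so the penalty of 2 per extra coefficient outweighs the gain and the two-variable model is kept; a stepwise procedure repeats this comparison while adding or dropping one variable at a time.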

| Growth Stages | Modeling Method | Spectral Parameters | AIC | R² | RMSE | nRMSE (%) |
|---|---|---|---|---|---|---|
| IF | SWR | R, G, B, b, GBRI, RBRI, RGBVI, VAR_R, HOM_R, CON_R, DIS_R, MEA_G, VAR_G, ASM_G, VAR_B, HOM_B, CON_B, DIS_B | −368.88 | 0.859 | 0.042 | 8.14 |
| IF | RFR | α + β | - | 0.968 | 0.020 | 3.88 |
| YF | SWR | R, G, B, g, VARI, GRRI, GBRI, RGBVI, GLA, MGRVI, MEA_R, VAR_R, DIS_R, ENT_R, ASM_R, COR_R, VAR_G, HOM_G, DIS_G, ENT_G, COR_G, MEA_B, VAR_B, HOM_B, DIS_B, ENT_B, ASM_B | −465.04 | 0.978 | 0.017 | 3.99 |
| YF | RFR | γ + δ | - | 0.978 | 0.017 | 4.08 |
| FE | SWR | R, B, r, g, VARI, GRRI, GBRI, RGBVI, GLA, MGRVI, VAR_R, ENT_R, COR_R, MEA_G, HOM_G, ENT_G, COR_G, MEA_B, HOM_B, ENT_B, ASM_B | −343.92 | 0.947 | 0.048 | 7.99 |
| FE | RFR | γ + δ | - | 0.977 | 0.032 | 5.30 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Ta, N.; Guo, S.; Chen, Q.; Zhao, L.; Li, F.; Chang, Q. Combining Spectral and Textural Information from UAV RGB Images for Leaf Area Index Monitoring in Kiwifruit Orchard. Remote Sens. 2022, 14, 1063. https://doi.org/10.3390/rs14051063
