Impact of High-Cadence Earth Observation in Maize Crop Phenology Classification

Abstract

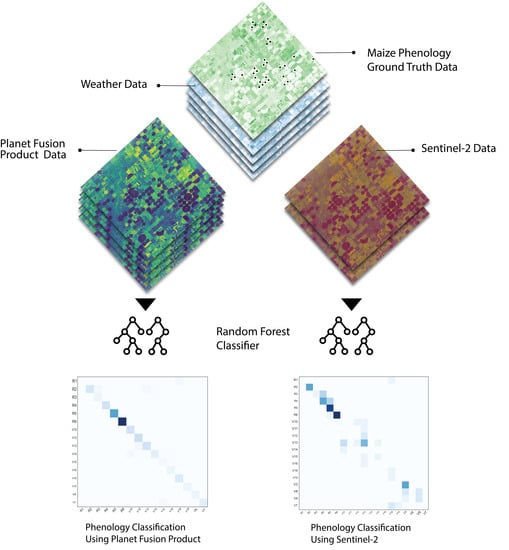

1. Introduction

2. Materials and Methods

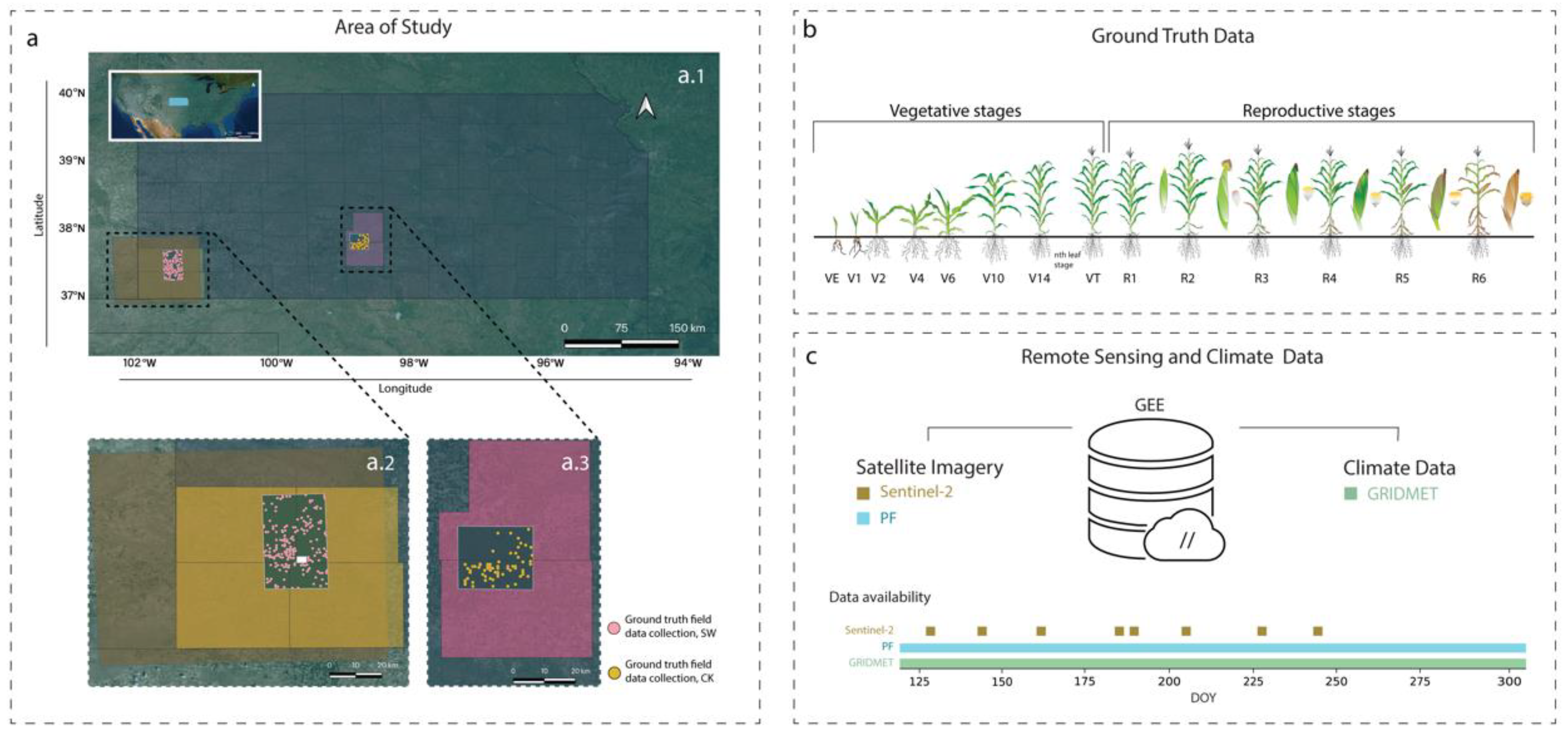

2.1. Study Area

2.2. Data Characteristics

2.2.1. Reference Ground Field Data

2.2.2. Remote Sensing Data and Weather Variables

Planet Fusion Product

Sentinel-2

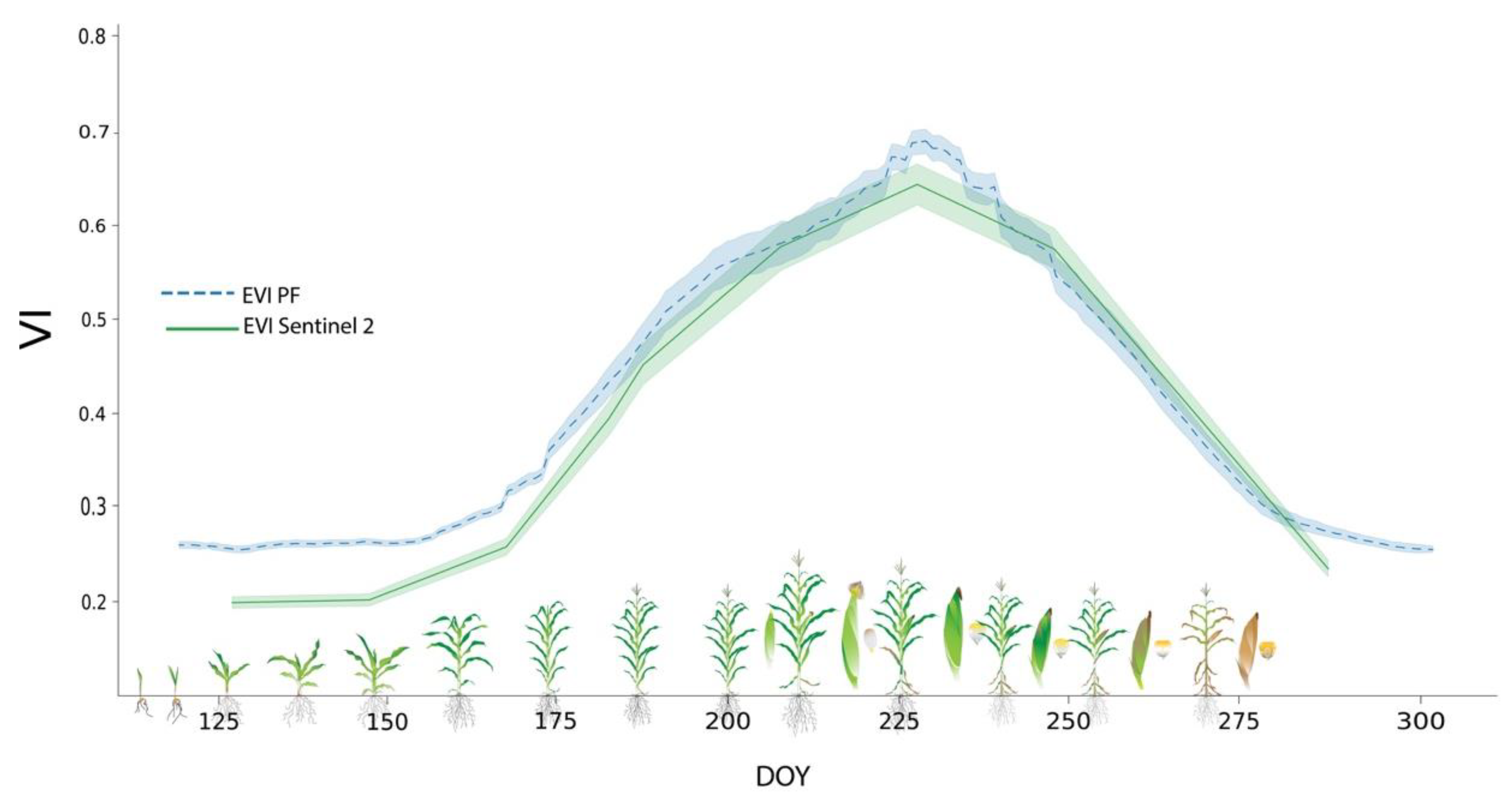

Spectral Bands and Vegetation Indices

Weather Variables

2.3. Data Preparation

2.4. Random Forest

2.4.1. Performance Metrics

2.4.2. Feature Importance

3. Results

3.1. Best Combination of Variables

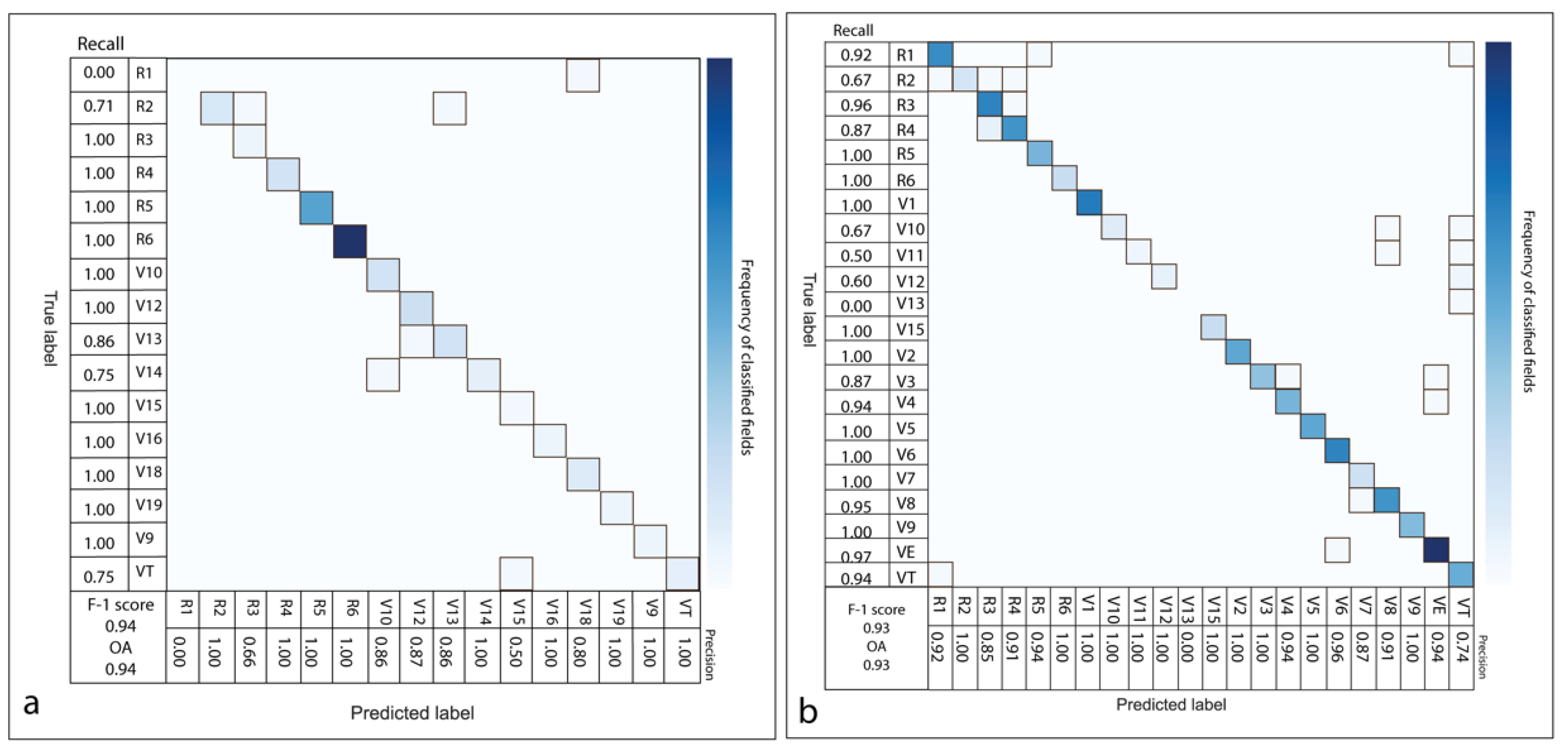

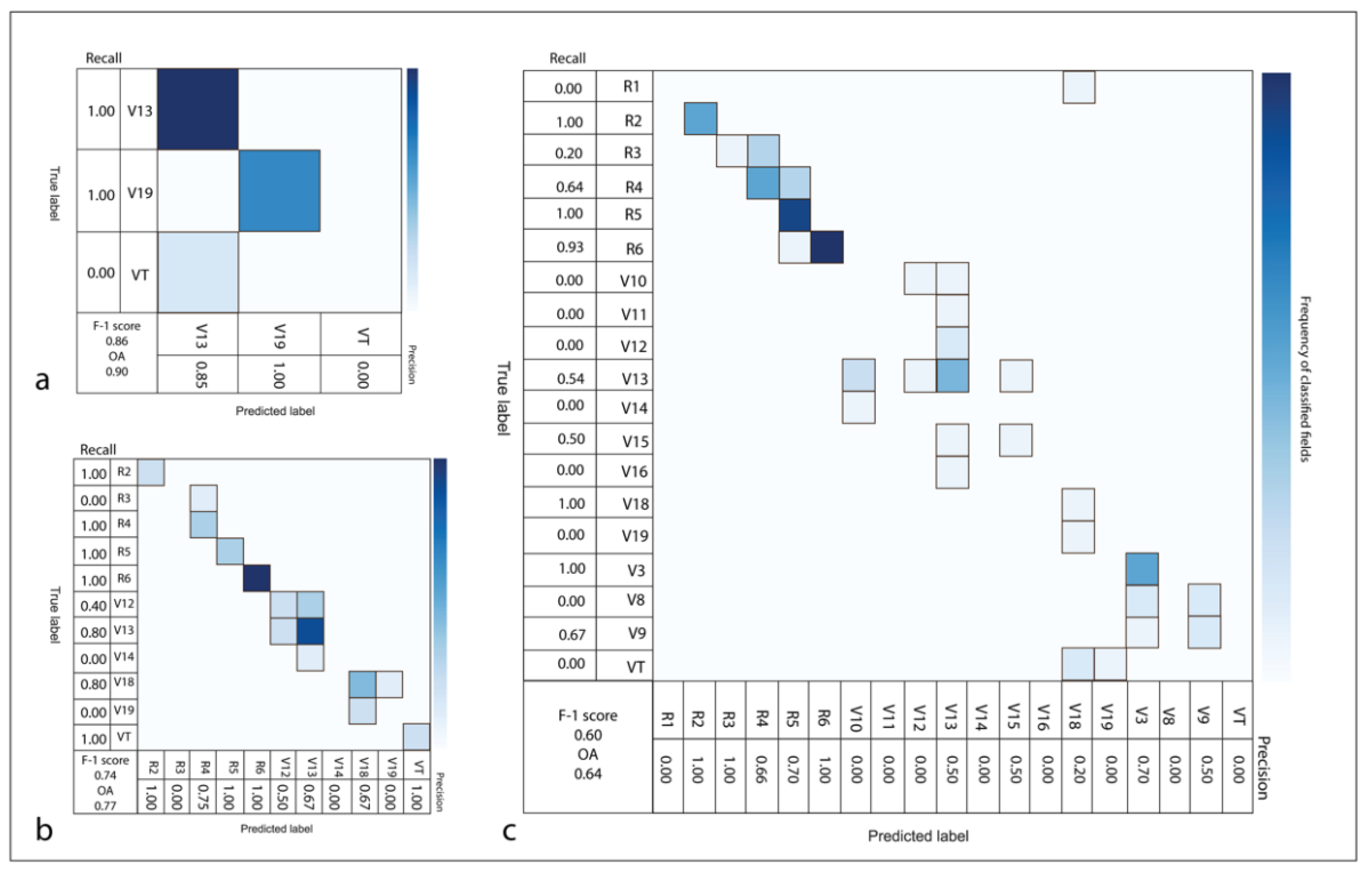

3.2. Model Performance Using PF Data in Both Regions

3.3. Model Performance Using Sentinel-2 Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nieto, L.; Houborg, R.; Zajdband, A.; Jumpasut, A.; Prasad, P.V.V.; Olson, B.J.S.C.; Ciampitti, I.A. Impact of High-Cadence Earth Observation in Maize Crop Phenology Classification. Remote Sens. 2022, 14, 469. https://doi.org/10.3390/rs14030469
