A Survey of Computer Vision Techniques for Forest Characterization and Carbon Monitoring Tasks

Abstract

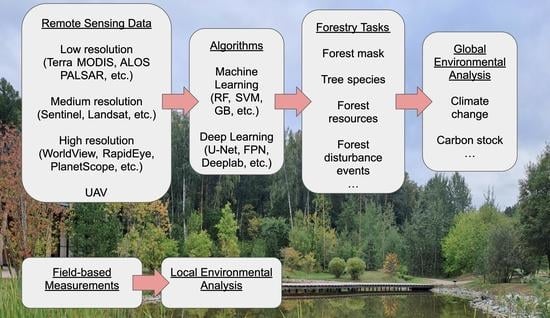

1. Introduction

2. Review Methodology

3. Remote Sensing Data and Spectral Indices for Forest Analysis

3.1. Sources of Remote Sensing Data

3.2. Popular Spectral Indices Applied for Forest Monitoring Research

4. Computer Vision Algorithms

4.1. Classical Machine Learning Algorithms

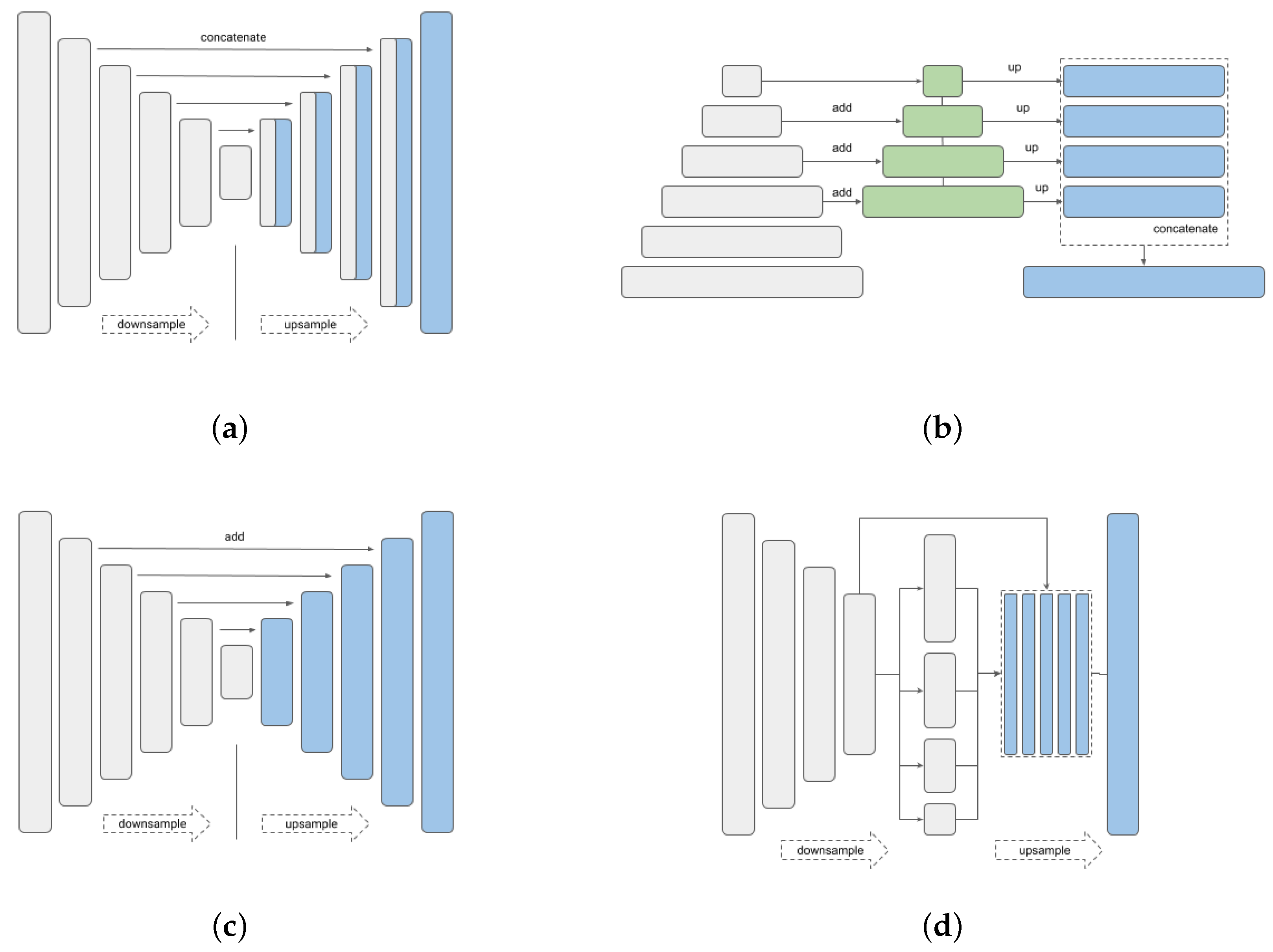

4.2. Deep Learning Algorithms

5. Evaluation Metrics

5.1. Classification

- Per-pixel ground-truth mask of the target classes;

- Per-pixel predicted mask of the target classes.
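As a minimal sketch of how the two masks listed above can be compared, the following Python snippet builds a confusion matrix and derives overall accuracy (OA), per-class F1-score, and per-class intersection over union (IoU). The choice of F1 and IoU, the function names, and the toy 3 × 3 masks (0 = background, 1 = forest) are illustrative assumptions and are not taken from the survey itself.

```python
def confusion_matrix(truth, pred, num_classes):
    """Count pixels for every (true class, predicted class) pair."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            matrix[t][p] += 1
    return matrix


def overall_accuracy(matrix):
    """Share of pixels whose predicted class equals the true class."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


def per_class_scores(matrix):
    """Return (F1, IoU) lists with one value per class."""
    n = len(matrix)
    f1_scores, iou_scores = [], []
    for c in range(n):
        tp = matrix[c][c]
        fp = sum(matrix[r][c] for r in range(n)) - tp  # predicted c, truly another class
        fn = sum(matrix[c]) - tp                       # truly c, predicted another class
        f1_denom = 2 * tp + fp + fn
        iou_denom = tp + fp + fn
        f1_scores.append(2 * tp / f1_denom if f1_denom else 0.0)
        iou_scores.append(tp / iou_denom if iou_denom else 0.0)
    return f1_scores, iou_scores


# Hypothetical toy masks: 0 = background, 1 = forest.
truth_mask = [[0, 0, 1],
              [0, 1, 1],
              [1, 1, 1]]
pred_mask = [[0, 1, 1],
             [0, 1, 1],
             [0, 1, 1]]

cm = confusion_matrix(truth_mask, pred_mask, num_classes=2)
oa = overall_accuracy(cm)
f1, iou = per_class_scores(cm)
print(f"OA={oa:.3f}, F1={f1}, IoU={iou}")
```

Deriving every metric from a single confusion matrix keeps the scores mutually consistent; for real imagery, an array library would typically replace the nested loops.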

5.2. Regression

6. Forest Mask Estimation on Remote Sensing Data

6.1. Use of Data of Different Spatial Resolution

6.1.1. Low Spatial Resolution

6.1.2. Medium Spatial Resolution

6.1.3. High Spatial Resolution

6.1.4. Use of Data from Unmanned Aerial Vehicle

6.2. Computer Vision Algorithms for Forest Mask Estimation. Specifics and Limitations of the Approach

7. Forest-Forming Species Classification on Remote Sensing Data

7.1. Use of Data of Different Spatial Resolution

7.1.1. Low Spatial Resolution

7.1.2. Medium Spatial Resolution

7.1.3. High Spatial Resolution

7.1.4. Use of Data from Unmanned Aerial Vehicle

7.2. Computer Vision Algorithms for Classifying Forest-Forming Species Types. Specifics and Limitations of the Approach

8. Forest Resources Estimation on Remote Sensing Data

8.1. Use of Data of Different Spatial Resolution

8.1.1. Low Spatial Resolution

8.1.2. Medium Spatial Resolution

8.1.3. High Spatial Resolution

8.1.4. Use of Data from Unmanned Aerial Vehicle

8.2. Computer Vision Algorithms for the Task of Forest Resources Estimation. Specifics and Limitations of the Approach

9. Discussion

9.1. Forest Carbon Disturbing Events

9.2. Data and Labeling Limitations

9.3. Vision Transformers as State-of-the-Art CV Algorithms Relevant to the Forest Taxation Problem

10. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARVI | Atmospherically Resistant Vegetation Index |
| BAI | Burned Area Index |
| CNN | Convolutional neural network |
| CV | Computer vision |
| DL | Deep learning |
| EVI | Enhanced Vegetation Index |
| FPN | Feature pyramid network |
| GHG | Greenhouse gas |
| kNN | k-nearest neighbors |
| LD | Linear dichroism |
| LSWI | Land Surface Water Index |
| ML | Machine learning |
| NBR | Normalized Burn Ratio |
| NBRT | Normalized Burn Ratio Thermal |
| NDMI | Normalized Difference Moisture Index |
| NDVI | Normalized Difference Vegetation Index |
| NDWI | Normalized Difference Water Index |
| NIR | Near-infrared |
| OA | Overall accuracy |
| RF | Random forest |
| RS | Remote sensing |
| SAVI | Soil Adjusted Vegetation Index |
| SVM | Support vector machine |
| SWIR | Short-wave infrared |
| UAV | Unmanned aerial vehicle |
| VCI | Vegetation Condition Index |


- Chakravortty, S.; Ghosh, D.; Sinha, D. A dynamic model to recognize changes in mangrove species in sunderban delta using hyperspectral image analysis. In Progress in Intelligent Computing Techniques: Theory, Practice, and Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 59–67. [Google Scholar]

- Pandey, P.C.; Anand, A.; Srivastava, P.K. Spatial distribution of mangrove forest species and biomass assessment using field inventory and earth observation hyperspectral data. Biodivers. Conserv. 2019, 28, 2143–2162. [Google Scholar] [CrossRef]

- Agenzia Spaziale Italiana. Available online: https://www.asi.it/en/earth-science/prisma/ (accessed on 27 October 2022).

- Vangi, E.; D’Amico, G.; Francini, S.; Giannetti, F.; Lasserre, B.; Marchetti, M.; Chirici, G. The new hyperspectral satellite PRISMA: Imagery for forest types discrimination. Sensors 2021, 21, 1182. [Google Scholar] [CrossRef]

- Shaik, R.U.; Fusilli, L.; Giovanni, L. New approach of sample generation and classification for wildfire fuel mapping on hyperspectral (prisma) image. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium, IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 5417–5420. [Google Scholar]

- He, Y.; Yang, J.; Caspersen, J.; Jones, T. An operational workflow of deciduous-dominated forest species classification: Crown delineation, gap elimination, and object-based classification. Remote Sens. 2019, 11, 2078. [Google Scholar] [CrossRef]

- Ferreira, M.P.; Wagner, F.H.; Aragão, L.E.; Shimabukuro, Y.E.; de Souza Filho, C.R. Tree species classification in tropical forests using visible to shortwave infrared WorldView-3 images and texture analysis. ISPRS J. Photogramm. Remote Sens. 2019, 149, 119–131. [Google Scholar] [CrossRef]

- Jiang, Y.; Zhang, L.; Yan, M.; Qi, J.; Fu, T.; Fan, S.; Chen, B. High-resolution mangrove forests classification with machine learning using worldview and uav hyperspectral data. Remote Sens. 2021, 13, 1529. [Google Scholar] [CrossRef]

- Shinzato, E.T.; Shimabukuro, Y.E.; Coops, N.C.; Tompalski, P.; Gasparoto, E.A. Integrating area-based and individual tree detection approaches for estimating tree volume in plantation inventory using aerial image and airborne laser scanning data. iForest-Biogeosciences For. 2016, 10, 296. [Google Scholar] [CrossRef]

- Sothe, C.; Dalponte, M.; Almeida, C.M.d.; Schimalski, M.B.; Lima, C.L.; Liesenberg, V.; Miyoshi, G.T.; Tommaselli, A.M.G. Tree species classification in a highly diverse subtropical forest integrating UAV-based photogrammetric point cloud and hyperspectral data. Remote Sens. 2019, 11, 1338. [Google Scholar] [CrossRef]

- Cao, K.; Zhang, X. An improved res-unet model for tree species classification using airborne high-resolution images. Remote Sens. 2020, 12, 1128. [Google Scholar] [CrossRef]

- Zhang, B.; Zhao, L.; Zhang, X. Three-dimensional convolutional neural network model for tree species classification using airborne hyperspectral images. Remote Sens. Environ. 2020, 247, 111938. [Google Scholar] [CrossRef]

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215. [Google Scholar] [CrossRef]

- Liu, Y.; Gong, W.; Hu, X.; Gong, J. Forest type identification with random forest using Sentinel-1A, Sentinel-2A, multi-temporal Landsat-8 and DEM data. Remote Sens. 2018, 10, 946. [Google Scholar] [CrossRef]

- Cao, J.; Leng, W.; Liu, K.; Liu, L.; He, Z.; Zhu, Y. Object-based mangrove species classification using unmanned aerial vehicle hyperspectral images and digital surface models. Remote Sens. 2018, 10, 89. [Google Scholar] [CrossRef]

- Yang, G.; Zhao, Y.; Li, B.; Ma, Y.; Li, R.; Jing, J.; Dian, Y. Tree species classification by employing multiple features acquired from integrated sensors. J. Sensors 2019, 2019. [Google Scholar] [CrossRef]

- Persson, M.; Lindberg, E.; Reese, H. Tree species classification with multi-temporal Sentinel-2 data. Remote Sens. 2018, 10, 1794. [Google Scholar] [CrossRef]

- Onishi, M.; Ise, T. Explainable identification and mapping of trees using UAV RGB image and deep learning. Sci. Rep. 2021, 11, 1–15. [Google Scholar] [CrossRef] [PubMed]

- Illarionova, S.; Trekin, A.; Ignatiev, V.; Oseledets, I. Neural-based hierarchical approach for detailed dominant forest species classification by multispectral satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1810–1820. [Google Scholar] [CrossRef]

- Illarionova, S.; Trekin, A.; Ignatiev, V.; Oseledets, I. Tree Species Mapping on Sentinel-2 Satellite Imagery with Weakly Supervised Classification and Object-Wise Sampling. Forests 2021, 12, 1413. [Google Scholar] [CrossRef]

- Qi, T.; Zhu, H.; Zhang, J.; Yang, Z.; Chai, L.; Xie, J. Patch-U-Net: Tree species classification method based on U-Net with class-balanced jigsaw resampling. Int. J. Remote Sens. 2022, 43, 532–548. [Google Scholar] [CrossRef]

- Illarionova, S.; Shadrin, D.; Ignatiev, V.; Shayakhmetov, S.; Trekin, A.; Oseledets, I. Estimation of the Canopy Height Model From Multispectral Satellite Imagery With Convolutional Neural Networks. IEEE Access 2022, 10, 34116–34132. [Google Scholar] [CrossRef]

- Wilkes, P.; Disney, M.; Vicari, M.B.; Calders, K.; Burt, A. Estimating urban above ground biomass with multi-scale LiDAR. Carbon Balance Manag. 2018, 13, 1–20. [Google Scholar] [CrossRef]

- Department of Economic and Social Development Statistical Division. Handbook and National Accounting: Integrated Environmental and Economic Accounting; United Nations: New York, NY, USA, 1992. [Google Scholar]

- Fu, Y.; He, H.S.; Hawbaker, T.J.; Henne, P.D.; Zhu, Z.; Larsen, D.R. Evaluating k-Nearest Neighbor (k NN) Imputation Models for Species-Level Aboveground Forest Biomass Mapping in Northeast China. Remote Sens. 2019, 11, 2005. [Google Scholar] [CrossRef]

- Zhang, Y.; Liang, S.; Yang, L. A review of regional and global gridded forest biomass datasets. Remote Sens. 2019, 11, 2744. [Google Scholar] [CrossRef]

- Gao, X.; Dong, S.; Li, S.; Xu, Y.; Liu, S.; Zhao, H.; Yeomans, J.; Li, Y.; Shen, H.; Wu, S.; et al. Using the random forest model and validated MODIS with the field spectrometer measurement promote the accuracy of estimating aboveground biomass and coverage of alpine grasslands on the Qinghai-Tibetan Plateau. Ecol. Indic. 2020, 112, 106114. [Google Scholar] [CrossRef]

- Mura, M.; Bottalico, F.; Giannetti, F.; Bertani, R.; Giannini, R.; Mancini, M.; Orlandini, S.; Travaglini, D.; Chirici, G. Exploiting the capabilities of the Sentinel-2 multi spectral instrument for predicting growing stock volume in forest ecosystems. Int. J. Appl. Earth Obs. Geoinf. 2018, 66, 126–134. [Google Scholar] [CrossRef]

- Rees, W.G.; Tomaney, J.; Tutubalina, O.; Zharko, V.; Bartalev, S. Estimation of Boreal Forest Growing Stock Volume in Russia from Sentinel-2 MSI and Land Cover Classification. Remote Sens. 2021, 13, 4483. [Google Scholar] [CrossRef]

- Nink, S.; Hill, J.; Buddenbaum, H.; Stoffels, J.; Sachtleber, T.; Langshausen, J. Assessing the suitability of future multi-and hyperspectral satellite systems for mapping the spatial distribution of Norway spruce timber volume. Remote Sens. 2015, 7, 12009–12040. [Google Scholar] [CrossRef]

- Malhi, R.K.M.; Anand, A.; Srivastava, P.K.; Chaudhary, S.K.; Pandey, M.K.; Behera, M.D.; Kumar, A.; Singh, P.; Kiran, G.S. Synergistic evaluation of Sentinel 1 and 2 for biomass estimation in a tropical forest of India. Adv. Space Res. 2022, 69, 1752–1767. [Google Scholar] [CrossRef]

- Hu, Y.; Xu, X.; Wu, F.; Sun, Z.; Xia, H.; Meng, Q.; Huang, W.; Zhou, H.; Gao, J.; Li, W.; et al. Estimating forest stock volume in Hunan Province, China, by integrating in situ plot data, Sentinel-2 images, and linear and machine learning regression models. Remote Sens. 2020, 12, 186. [Google Scholar] [CrossRef]

- Dubayah, R.; Blair, J.B.; Goetz, S.; Fatoyinbo, L.; Hansen, M.; Healey, S.; Hofton, M.; Hurtt, G.; Kellner, J.; Luthcke, S.; et al. The Global Ecosystem Dynamics Investigation: High-resolution laser ranging of the Earth’s forests and topography. Sci. Remote Sens. 2020, 1, 100002. [Google Scholar] [CrossRef]

- Straub, C.; Tian, J.; Seitz, R.; Reinartz, P. Assessment of Cartosat-1 and WorldView-2 stereo imagery in combination with a LiDAR-DTM for timber volume estimation in a highly structured forest in Germany. Forestry 2013, 86, 463–473. [Google Scholar] [CrossRef]

- Vastaranta, M.; Yu, X.; Luoma, V.; Karjalainen, M.; Saarinen, N.; Wulder, M.A.; White, J.C.; Persson, H.J.; Hollaus, M.; Yrttimaa, T.; et al. Aboveground forest biomass derived using multiple dates of WorldView-2 stereo-imagery: Quantifying the improvement in estimation accuracy. Int. J. Remote Sens. 2018, 39, 8766–8783. [Google Scholar] [CrossRef]

- Günlü, A.; Ercanlı, İ.; Şenyurt, M.; Keleş, S. Estimation of some stand parameters from textural features from WorldView-2 satellite image using the artificial neural network and multiple regression methods: A case study from Turkey. Geocarto Int. 2021, 36, 918–935. [Google Scholar] [CrossRef]

- Dube, T.; Gara, T.W.; Mutanga, O.; Sibanda, M.; Shoko, C.; Murwira, A.; Masocha, M.; Ndaimani, H.; Hatendi, C.M. Estimating forest standing biomass in savanna woodlands as an indicator of forest productivity using the new generation WorldView-2 sensor. Geocarto Int. 2018, 33, 178–188. [Google Scholar] [CrossRef]

- Muhd-Ekhzarizal, M.; Mohd-Hasmadi, I.; Hamdan, O.; Mohamad-Roslan, M.; Noor-Shaila, S. Estimation of aboveground biomass in mangrove forests using vegetation indices from SPOT-5 image. J. Trop. For. Sci. 2018, 30, 224–233. [Google Scholar]

- Gülci, S.; Akay, A.E.; Gülci, N.; Taş, İ. An assessment of conventional and drone-based measurements for tree attributes in timber volume estimation: A case study on stone pine plantation. Ecol. Inform. 2021, 63, 101303. [Google Scholar] [CrossRef]

- Puliti, S.; Saarela, S.; Gobakken, T.; Ståhl, G.; Næsset, E. Combining UAV and Sentinel-2 auxiliary data for forest growing stock volume estimation through hierarchical model-based inference. Remote Sens. Environ. 2018, 204, 485–497. [Google Scholar] [CrossRef]

- Puliti, S.; Breidenbach, J.; Astrup, R. Estimation of forest growing stock volume with UAV laser scanning data: Can it be done without field data? Remote Sens. 2020, 12, 1245. [Google Scholar] [CrossRef]

- Tuominen, S.; Balazs, A.; Honkavaara, E.; Pölönen, I.; Saari, H.; Hakala, T.; Viljanen, N. Hyperspectral UAV-imagery and photogrammetric canopy height model in estimating forest stand variables. Silva Fenn. 2017, 51, 7721. [Google Scholar] [CrossRef]

- Hernando, A.; Puerto, L.; Mola-Yudego, B.; Manzanera, J.A.; Garcia-Abril, A.; Maltamo, M.; Valbuena, R. Estimation of forest biomass components using airborne LiDAR and multispectral sensors. iForest-Biogeosciences For. 2019, 12, 207. [Google Scholar] [CrossRef]

- Hyyppä, E.; Hyyppä, J.; Hakala, T.; Kukko, A.; Wulder, M.A.; White, J.C.; Pyörälä, J.; Yu, X.; Wang, Y.; Virtanen, J.P.; et al. Under-canopy UAV laser scanning for accurate forest field measurements. ISPRS J. Photogramm. Remote Sens. 2020, 164, 41–60. [Google Scholar] [CrossRef]

- Iizuka, K.; Hayakawa, Y.S.; Ogura, T.; Nakata, Y.; Kosugi, Y.; Yonehara, T. Integration of multi-sensor data to estimate plot-level stem volume using machine learning algorithms–case study of evergreen conifer planted forests in Japan. Remote Sens. 2020, 12, 1649. [Google Scholar] [CrossRef]

- Yrttimaa, T.; Saarinen, N.; Kankare, V.; Viljanen, N.; Hynynen, J.; Huuskonen, S.; Holopainen, M.; Hyyppä, J.; Honkavaara, E.; Vastaranta, M. Multisensorial close-range sensing generates benefits for characterization of managed Scots pine (Pinus sylvestris L.) stands. ISPRS Int. J. Geo-Inf. 2020, 9, 309. [Google Scholar] [CrossRef]

- Popescu, S.C.; Wynne, R.H.; Nelson, R.F. Measuring individual tree crown diameter with lidar and assessing its influence on estimating forest volume and biomass. Can. J. Remote Sens. 2003, 29, 564–577. [Google Scholar] [CrossRef]

- Hawryło, P.; Wężyk, P. Predicting growing stock volume of scots pine stands using Sentinel-2 satellite imagery and airborne image-derived point clouds. Forests 2018, 9, 274. [Google Scholar] [CrossRef]

- Mohammadi, J.; Shataee, S.; Babanezhad, M. Estimation of forest stand volume, tree density and biodiversity using Landsat ETM+ Data, comparison of linear and regression tree analyses. Procedia Environ. Sci. 2011, 7, 299–304. [Google Scholar] [CrossRef]

- Li, Z.; Zan, Q.; Yang, Q.; Zhu, D.; Chen, Y.; Yu, S. Remote estimation of mangrove aboveground carbon stock at the species level using a low-cost unmanned aerial vehicle system. Remote Sens. 2019, 11, 1018. [Google Scholar] [CrossRef]

- Jayathunga, S.; Owari, T.; Tsuyuki, S. Digital aerial photogrammetry for uneven-aged forest management: Assessing the potential to reconstruct canopy structure and estimate living biomass. Remote Sens. 2019, 11, 338. [Google Scholar] [CrossRef]

- Crusiol, L.G.T.; Nanni, M.R.; Furlanetto, R.H.; Cezar, E.; Silva, G.F.C. Reflectance calibration of UAV-based visible and near-infrared digital images acquired under variant altitude and illumination conditions. Remote Sens. Appl. Soc. Environ. 2020, 18, 100312. [Google Scholar]

- Navarro, J.A.; Algeet, N.; Fernández-Landa, A.; Esteban, J.; Rodríguez-Noriega, P.; Guillén-Climent, M.L. Integration of UAV, Sentinel-1, and Sentinel-2 data for mangrove plantation aboveground biomass monitoring in Senegal. Remote Sens. 2019, 11, 77. [Google Scholar] [CrossRef]

- Gleason, C.J.; Im, J. Forest biomass estimation from airborne LiDAR data using machine learning approaches. Remote Sens. Environ. 2012, 125, 80–91. [Google Scholar] [CrossRef]

- Shao, Z.; Zhang, L. Estimating forest aboveground biomass by combining optical and SAR data: A case study in Genhe, Inner Mongolia, China. Sensors 2016, 16, 834. [Google Scholar] [CrossRef]

- Dong, L.; Du, H.; Han, N.; Li, X.; Zhu, D.; Mao, F.; Zhang, M.; Zheng, J.; Liu, H.; Huang, Z.; et al. Application of convolutional neural network on lei bamboo above-ground-biomass (AGB) estimation using Worldview-2. Remote Sens. 2020, 12, 958. [Google Scholar] [CrossRef]

- Zhang, F.; Tian, X.; Zhang, H.; Jiang, M. Estimation of Aboveground Carbon Density of Forests Using Deep Learning and Multisource Remote Sensing. Remote Sens. 2022, 14, 3022. [Google Scholar] [CrossRef]

- Balazs, A.; Liski, E.; Tuominen, S.; Kangas, A. Comparison of neural networks and k-nearest neighbors methods in forest stand variable estimation using airborne laser data. ISPRS Open J. Photogramm. Remote Sens. 2022, 4, 100012. [Google Scholar] [CrossRef]

- Astola, H.; Seitsonen, L.; Halme, E.; Molinier, M.; Lönnqvist, A. Deep neural networks with transfer learning for forest variable estimation using sentinel-2 imagery in boreal forest. Remote Sens. 2021, 13, 2392. [Google Scholar] [CrossRef]

- vonHedemann, N.; Wurtzebach, Z.; Timberlake, T.J.; Sinkular, E.; Schultz, C.A. Forest policy and management approaches for carbon dioxide removal. Interface Focus 2020, 10, 20200001. [Google Scholar] [CrossRef] [PubMed]

- Kaarakka, L.; Cornett, M.; Domke, G.; Ontl, T.; Dee, L.E. Improved forest management as a natural climate solution: A review. Ecol. Solut. Evid. 2021, 2, e12090. [Google Scholar] [CrossRef]

- Fahey, T.J.; Woodbury, P.B.; Battles, J.J.; Goodale, C.L.; Hamburg, S.P.; Ollinger, S.V.; Woodall, C.W. Forest carbon storage: Ecology, management, and policy. Front. Ecol. Environ. 2010, 8, 245–252. [Google Scholar] [CrossRef]

- Cooper, H.V.; Vane, C.H.; Evers, S.; Aplin, P.; Girkin, N.T.; Sjögersten, S. From peat swamp forest to oil palm plantations: The stability of tropical peatland carbon. Geoderma 2019, 342, 109–117. [Google Scholar] [CrossRef]

- Seibold, S.; Rammer, W.; Hothorn, T.; Seidl, R.; Ulyshen, M.D.; Lorz, J.; Cadotte, M.W.; Lindenmayer, D.B.; Adhikari, Y.P.; Aragón, R.; et al. The contribution of insects to global forest deadwood decomposition. Nature 2021, 597, 77–81. [Google Scholar] [CrossRef]

- Kirdyanov, A.V.; Saurer, M.; Siegwolf, R.; Knorre, A.A.; Prokushkin, A.S.; Churakova, O.V.; Fonti, M.V.; Büntgen, U. Long-term ecological consequences of forest fires in the continuous permafrost zone of Siberia. Environ. Res. Lett. 2020, 15, 034061. [Google Scholar] [CrossRef]

- Ballanti, L.; Byrd, K.B.; Woo, I.; Ellings, C. Remote sensing for wetland mapping and historical change detection at the Nisqually River Delta. Sustainability 2017, 9, 1919. [Google Scholar] [CrossRef]

- DeLancey, E.R.; Simms, J.F.; Mahdianpari, M.; Brisco, B.; Mahoney, C.; Kariyeva, J. Comparing deep learning and shallow learning for large-scale wetland classification in Alberta, Canada. Remote Sens. 2019, 12, 2. [Google Scholar] [CrossRef]

- Dronova, I.; Taddeo, S.; Hemes, K.S.; Knox, S.H.; Valach, A.; Oikawa, P.Y.; Kasak, K.; Baldocchi, D.D. Remotely sensed phenological heterogeneity of restored wetlands: Linking vegetation structure and function. Agric. For. Meteorol. 2021, 296, 108215. [Google Scholar] [CrossRef]

- Bansal, S.; Katyal, D.; Saluja, R.; Chakraborty, M.; Garg, J.K. Remotely sensed MODIS wetland components for assessing the variability of methane emissions in Indian tropical/subtropical wetlands. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 156–170. [Google Scholar] [CrossRef]

- Gerlein-Safdi, C.; Bloom, A.A.; Plant, G.; Kort, E.A.; Ruf, C.S. Improving representation of tropical wetland methane emissions with CYGNSS inundation maps. Glob. Biogeochem. Cycles 2021, 35, e2020GB006890. [Google Scholar] [CrossRef]

- Rezaee, M.; Mahdianpari, M.; Zhang, Y.; Salehi, B. Deep convolutional neural network for complex wetland classification using optical remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3030–3039. [Google Scholar] [CrossRef]

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Brisco, B.; Homayouni, S.; Gill, E.; DeLancey, E.R.; Bourgeau-Chavez, L. Big data for a big country: The first generation of Canadian wetland inventory map at a spatial resolution of 10-m using Sentinel-1 and Sentinel-2 data on the Google Earth Engine cloud computing platform. Can. J. Remote Sens. 2020, 46, 15–33. [Google Scholar] [CrossRef]

- Jain, P.; Coogan, S.C.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505. [Google Scholar] [CrossRef]

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309. [Google Scholar] [CrossRef]

- Seydi, S.T.; Hasanlou, M.; Chanussot, J. Burnt-Net: Wildfire burned area mapping with single post-fire Sentinel-2 data and deep learning morphological neural network. Ecol. Indic. 2022, 140, 108999. [Google Scholar] [CrossRef]

- Brown, A.R.; Petropoulos, G.P.; Ferentinos, K.P. Appraisal of the Sentinel-1 & 2 use in a large-scale wildfire assessment: A case study from Portugal’s fires of 2017. Appl. Geogr. 2018, 100, 78–89. [Google Scholar]

- Bujoczek, L.; Bujoczek, M.; Zięba, S. How much, why and where? Deadwood in forest ecosystems: The case of Poland. Ecol. Indic. 2021, 121, 107027. [Google Scholar] [CrossRef]

- Karelin, D.; Zamolodchikov, D.; Isaev, A. Unconsidered sporadic sources of carbon dioxide emission from soils in taiga forests. Dokl. Biol. Sci. 2017, 475, 165–168. [Google Scholar] [CrossRef]

- Cours, J.; Larrieu, L.; Lopez-Vaamonde, C.; Müller, J.; Parmain, G.; Thorn, S.; Bouget, C. Contrasting responses of habitat conditions and insect biodiversity to pest-or climate-induced dieback in coniferous mountain forests. For. Ecol. Manag. 2021, 482, 118811. [Google Scholar] [CrossRef]

- Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of fir trees (Abies sibirica) damaged by the bark beetle in unmanned aerial vehicle images with deep learning. Remote Sens. 2019, 11, 643. [Google Scholar] [CrossRef]

- Zielewska-Büttner, K.; Adler, P.; Kolbe, S.; Beck, R.; Ganter, L.M.; Koch, B.; Braunisch, V. Detection of standing deadwood from aerial imagery products: Two methods for addressing the bare ground misclassification issue. Forests 2020, 11, 801. [Google Scholar] [CrossRef]

- Esse, C.; Condal, A.; de Los Ríos-Escalante, P.; Correa-Araneda, F.; Moreno-García, R.; Jara-Falcón, R. Evaluation of classification techniques in Very-High-Resolution (VHR) imagery: A case study of the identification of deadwood in the Chilean Central-Patagonian Forests. Ecol. Inform. 2022, 101685. [Google Scholar] [CrossRef]

- Briechle, S.; Krzystek, P.; Vosselman, G. Silvi-Net–A dual-CNN approach for combined classification of tree species and standing dead trees from remote sensing data. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102292. [Google Scholar] [CrossRef]

- Roelofs, R.; Shankar, V.; Recht, B.; Fridovich-Keil, S.; Hardt, M.; Miller, J.; Schmidt, L. A meta-analysis of overfitting in machine learning. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, NIPS’19, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Pasquarella, V.J.; Holden, C.E.; Woodcock, C.E. Improved mapping of forest type using spectral-temporal Landsat features. Remote Sens. Environ. 2018, 210, 193–207. [Google Scholar] [CrossRef]

- Notti, D.; Giordan, D.; Caló, F.; Pepe, A.; Zucca, F.; Galve, J.P. Potential and limitations of open satellite data for flood mapping. Remote Sens. 2018, 10, 1673. [Google Scholar] [CrossRef]

- Misra, G.; Cawkwell, F.; Wingler, A. Status of phenological research using Sentinel-2 data: A review. Remote Sens. 2020, 12, 2760. [Google Scholar] [CrossRef]

- Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and flexible image augmentations. Information 2020, 11, 125. [Google Scholar] [CrossRef]

- Khalifa, N.E.; Loey, M.; Mirjalili, S. A comprehensive survey of recent trends in deep learning for digital images augmentation. Artif. Intell. Rev. 2021, 55, 2351–2377. [Google Scholar] [CrossRef] [PubMed]

- Sun, X.; Wang, B.; Wang, Z.; Li, H.; Li, H.; Fu, K. Research progress on few-shot learning for remote sensing image interpretation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2387–2402. [Google Scholar] [CrossRef]

- Illarionova, S.; Nesteruk, S.; Shadrin, D.; Ignatiev, V.; Pukalchik, M.; Oseledets, I. Object-Based Augmentation for Building Semantic Segmentation: Ventura and Santa Rosa Case Study. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 1659–1668. [Google Scholar]

- Illarionova, S.; Nesteruk, S.; Shadrin, D.; Ignatiev, V.; Pukalchik, M.; Oseledets, I. MixChannel: Advanced augmentation for multispectral satellite images. Remote Sens. 2021, 13, 2181. [Google Scholar] [CrossRef]

- Ahn, J.; Cho, S.; Kwak, S. Weakly supervised learning of instance segmentation with inter-pixel relations. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2209–2218. [Google Scholar]

- Schmitt, M.; Prexl, J.; Ebel, P.; Liebel, L.; Zhu, X.X. Weakly supervised semantic segmentation of satellite images for land cover mapping—Challenges and opportunities. arXiv 2020, arXiv:2002.08254. [Google Scholar] [CrossRef]

- Tang, C.; Uriarte, M.; Jin, H.; C Morton, D.; Zheng, T. Large-scale, image-based tree species mapping in a tropical forest using artificial perceptual learning. Methods Ecol. Evol. 2021, 12, 608–618. [Google Scholar] [CrossRef]

- Guzinski, R.; Nieto, H.; Sandholt, I.; Karamitilios, G. Modelling high-resolution actual evapotranspiration through Sentinel-2 and Sentinel-3 data fusion. Remote Sens. 2020, 12, 1433. [Google Scholar] [CrossRef]

- Bazi, Y.; Bashmal, L.; Rahhal, M.M.A.; Dayil, R.A.; Ajlan, N.A. Vision transformers for remote sensing image classification. Remote Sens. 2021, 13, 516. [Google Scholar] [CrossRef]

- Zhang, J.; Zhao, H.; Li, J. TRS: Transformers for Remote Sensing Scene Classification. Remote Sens. 2021, 13, 4143. [Google Scholar] [CrossRef]

- Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote. Sens. 2021, 60, 5607514. [Google Scholar] [CrossRef]

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41. [Google Scholar] [CrossRef]

| Mission | Sensor | Spatial Resolution | Temporal Resolution | Data Distribution |
|---|---|---|---|---|
| Terra MODIS | Multispectral (36 bands) | 250 m, 500 m, 1 km | 1–2 days | Open and free basis |
| ALOS PALSAR/ALOS-2 PALSAR-2 | Synthetic aperture radar, L-band | From detailed (1–3 m) to low (60–100 m), depending on the acquisition mode and processing level | 14 days | On request/commercial use; ALOS PALSAR-1 data are free |
| Landsat-8/9 | Multispectral (8 bands), panchromatic band, thermal infrared (2 bands) | Multispectral: 30 m; panchromatic: 15 m; thermal infrared: 100 m | 16 days (the combined Landsat 8 and 9 revisit time is 8 days) | Open and free basis |
| Sentinel-1 | Synthetic aperture radar, C-band | From detailed (1.5 × 3.6 m) to medium (20–40 m), depending on the acquisition mode and processing level | Mission closed (during operating time: 3 days at the Equator, <1 day in the Arctic, 1–3 days in Europe and Canada) | Historical data on an open and free basis |
| Sentinel-2 | Multispectral (13 bands) | 10, 20, or 60 m, depending on the band | 10 days (single satellite), 5 days (combined constellation) | Open and free basis |
| WorldView-1 | Panchromatic band | Panchromatic: 0.5 m | 1.7 days | Commercial use |
| WorldView-2, -3 | Multispectral (8 bands), panchromatic band | Multispectral: 1.84 m; panchromatic: 0.46 m | Up to 1.1 days | Commercial use |
| WorldView-4 | Multispectral (4 bands), panchromatic band | Multispectral: 1.24 m; panchromatic: 0.31 m | Mission closed (during operating time: <1 day) | Commercial use (archive) |
| GeoEye-1 | Multispectral (4 bands), panchromatic band | Multispectral: 1.64 m; panchromatic: 0.41 m | 1.7 days | Commercial use |
| PlanetScope | Multispectral (4 bands; 4 additional bands from 2019) | 3.7–4.1 m, resampled to 3 m | 1 day | On request/commercial use |
| SPOT-6, -7 | Multispectral (4 bands), panchromatic band | Multispectral: 6 m; panchromatic: 1.5 m | 1 to 5 days | On request/commercial use |
| Pleiades | Multispectral (4 bands), panchromatic band | Multispectral: 2 m; panchromatic: 0.5 m | 1 day | Commercial use |
| RapidEye | Multispectral (5 bands) | 6.5 m, resampled to 5 m | 1 day | Commercial use |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Illarionova, S.; Shadrin, D.; Tregubova, P.; Ignatiev, V.; Efimov, A.; Oseledets, I.; Burnaev, E. A Survey of Computer Vision Techniques for Forest Characterization and Carbon Monitoring Tasks. Remote Sens. 2022, 14, 5861. https://doi.org/10.3390/rs14225861
