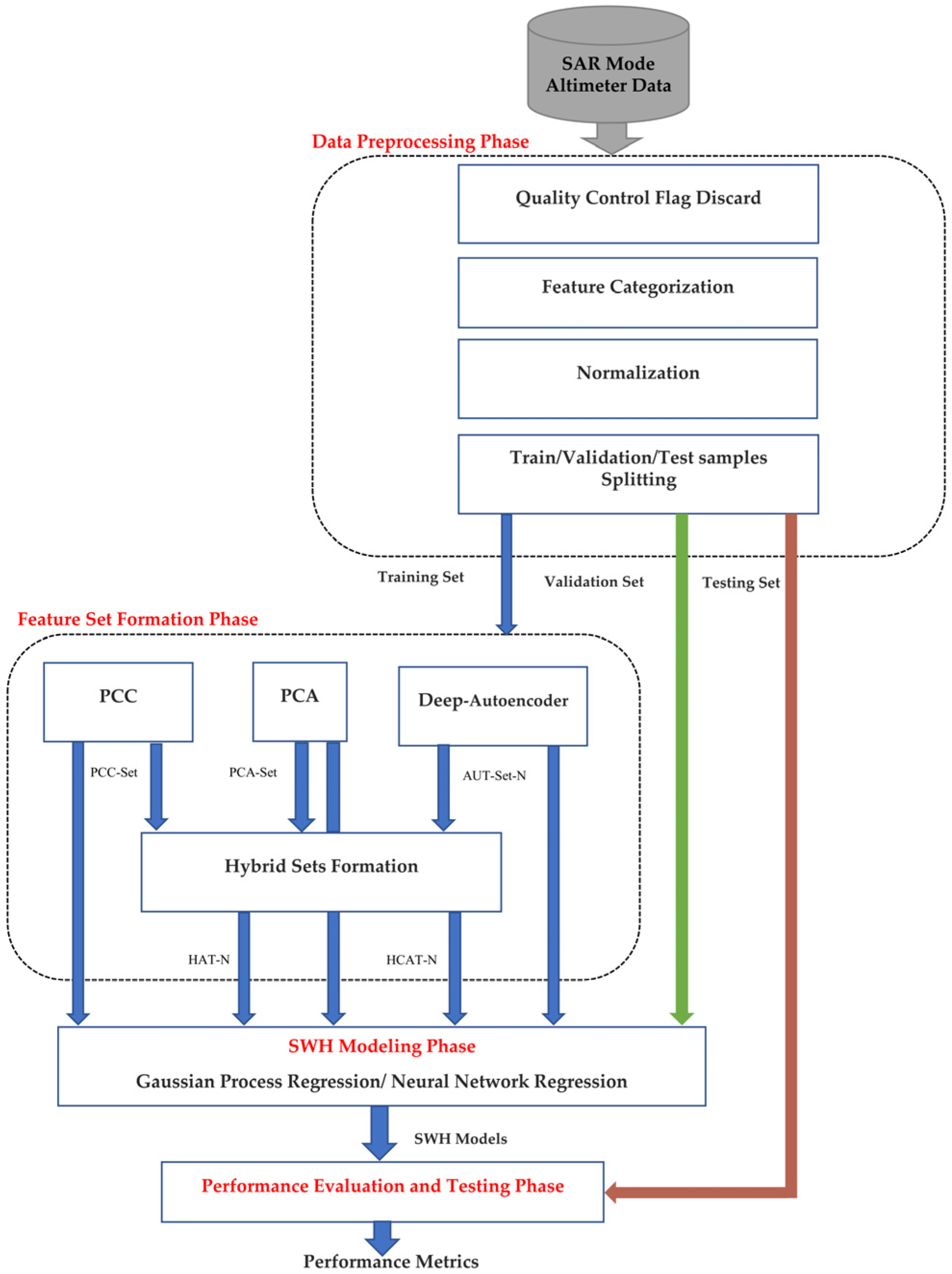

4.1. Feature Sets Formation

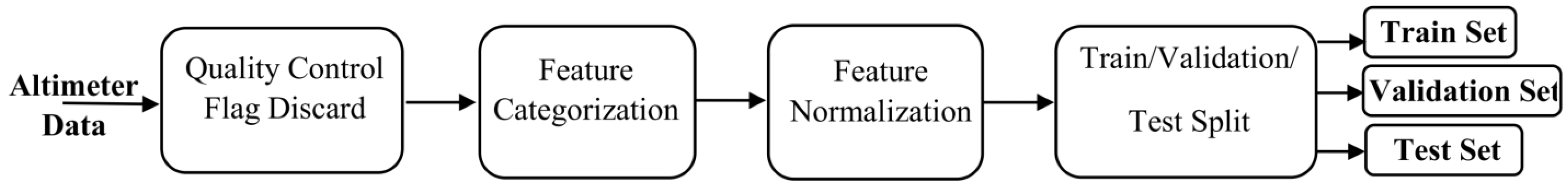

In this work, the calibrated SWH measured using the altimeter’s KU frequency band, SWH_KU_CAL, is considered the response variable. The KU-based features listed in Table 4 are used to form the basic and hybrid feature sets in this experiment. The ALL-Set is composed of 16 features, which represent all KU-based features except the target variable and its noncalibrated version. To create the PCC-Set, Pearson correlation coefficients between the input features and the target variable were calculated. Table 5 lists the absolute values of the PCC for each input feature. As expected, SWH is highly correlated with itself and its noncalibrated version. However, the recorded values for the other predictors are less than 0.6. For both positions P0 and P1, the calibrated and noncalibrated wind speed based on the wind function predictors, WSPD_CAL and WSPD, record the highest correlation with the target, followed by VWND and then the KU-altimeter backscatter coefficient, SIG0_KU. The correlation between the target and the rest of the predictors is low (less than 0.1); therefore, the absolute correlation coefficients between the input features and SWH_KU_CAL were thresholded at a value of 0.1. Thus, the PCC-Set is formed from the features that satisfy the criterion |PCC| ≥ 0.1. The features included in the PCC-Set for P0 and P1 and their correlation values are highlighted in gray in Table 5. SIG0_KU, VWND, WSPD_CAL, SWH_KU_std_dev, SIG0_KU_std_dev, and WSPD are included in the PCC-Set of both positions P0 and P1. For P1, however, the TIME variable achieved a PCC of 0.1 and was therefore also included in the PCC-Set of this position.

As the TIME feature records different PCC values for P0 and P1, we further investigated the correlation between the TIME feature and the target variable for seven geographical positions. Table 6 presents the |PCC| values of the TIME feature for the tested positions, the number of observations, and the time period over which the records were collected for each position. Table 6 shows that the TIME feature generally records a low correlation with the SWH. For P1, P3, and P4, the correlation coefficient is roughly 0.1. Therefore, for the PCC threshold used in this work, the TIME feature is included in the PCC-Set of these positions. However, the PCC values for P0, P2, P5, and P6 are 10 times lower than those of the other positions, and thus the TIME feature is discarded from the corresponding PCC-Sets.
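The thresholding step described above can be illustrated with a minimal sketch. It assumes the selection rule is |PCC| ≥ 0.1, and it uses toy values that are hypothetical and not taken from the IMOS dataset; the helper names `pearson` and `select_pcc_set` are introduced here for illustration only.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # undefined for constant columns (sx or sy == 0)

def select_pcc_set(features, target, threshold=0.1):
    """Keep the features whose |PCC| with the target is >= threshold."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return {name: s for name, s in scores.items() if s >= threshold}

# Toy data (hypothetical values, used only to exercise the function).
target = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
features = {
    "WSPD_CAL": [1.1, 1.9, 3.2, 3.8, 5.1, 6.0],     # nearly linear in the target
    "LATITUDE": [1.0, -1.0, 0.0, 0.0, -1.0, 1.0],   # zero correlation by construction
}
pcc_set = select_pcc_set(features, target)
print(pcc_set)
```

A feature whose |PCC| sits close to the threshold, as TIME does here, can fall on either side of it from one position to the next, which is why the PCC-Set differs between P0 and P1.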

To generate the PCA features, the PCA algorithm was fed with the ALL-Set, and the CPV was set to 95%. The PCA-Set thus contains the principal components that explain 95% of the variance. For both positions P0 and P1, the PCA-Set contains only the first principal component, which captures 95% of the variance in the data.
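As a rough illustration of selecting components by cumulative variance, the sketch below keeps the fewest principal components whose cumulative explained-variance ratio reaches 95%. It assumes plain mean-centering with no feature scaling (the preprocessing actually used is not stated in this section), and the function name `pca_by_cpv` and the synthetic data are hypothetical.

```python
import numpy as np

def pca_by_cpv(X, cpv=0.95):
    """Project X onto the fewest principal components whose cumulative
    explained-variance ratio reaches `cpv`."""
    Xc = X - X.mean(axis=0)                       # center each feature
    # SVD of the centered data: rows of Vt are the principal directions.
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)           # variance ratio per component
    cumulative = np.cumsum(explained)
    # Smallest k such that cumulative[k - 1] >= cpv (capped at all components).
    k = min(int(np.searchsorted(cumulative, cpv)) + 1, len(S))
    return Xc @ Vt[:k].T, cumulative[:k]

# Synthetic data with one dominant direction (assumption for illustration).
rng = np.random.default_rng(0)
t = rng.normal(size=(200, 1))
X = np.hstack([10 * t, 5 * t, 0.1 * rng.normal(size=(200, 2))])
scores, cpv_reached = pca_by_cpv(X)
print(scores.shape, cpv_reached)
```

When one direction dominates the variance, as in this toy matrix, a single component already exceeds the 95% target, which mirrors the one-component PCA-Set reported for P0 and P1.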

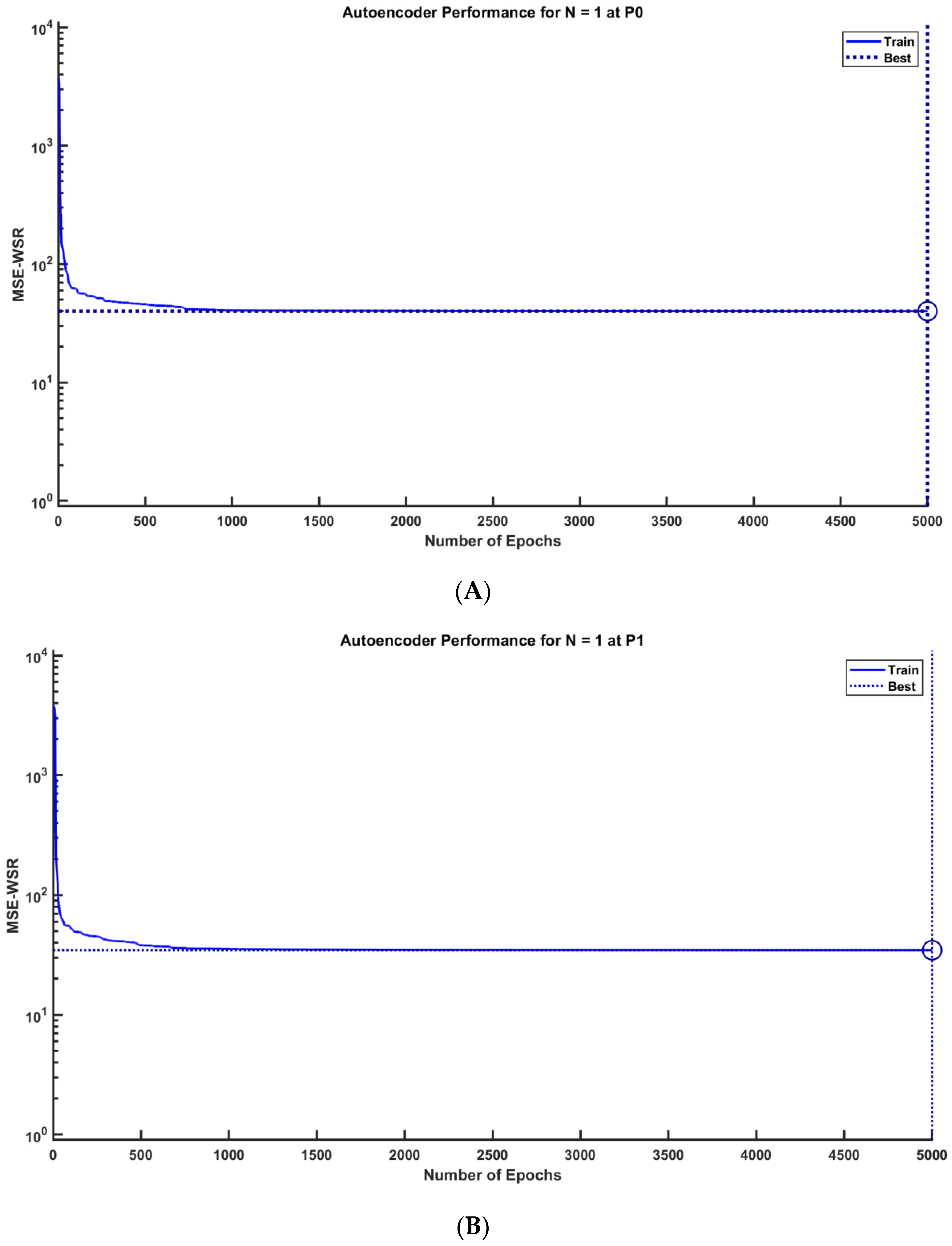

The autoencoder-derived feature sets were generated by feeding the ALL-Set to a sparse autoencoder. By setting the number of latent features output by the encoder to a number smaller than the number of features in the ALL-Set, the autoencoder network was used as both a latent-feature generator and a dimensionality reduction tool. The number of latent features output from the autoencoder, N, was set to 1, 2, and 3. Therefore, three autoencoder sets were generated: AUT-Set-1, AUT-Set-2, and AUT-Set-3. The autoencoder was trained in an unsupervised manner over 5000 epochs with the settings described in the Methods section. The performance of the autoencoder is measured using the mean squared error with weight and sparsity regularizers (MSE-WSR). Table 7 shows the starting and stopping values of the gradient and the MSE-WSR for positions P0 and P1 when N equals 1, 2, and 3. Table 7 shows that the MSE-WSR decreases as the number of output features increases. Increasing the number of output features helps retain more detail from the original data, which reduces the output cost. However, increasing the number of latent features does not guarantee better prediction performance of the regression model. Therefore, the maximum number of output features from the encoder was set to 3. This setting helped reduce the computational load and time, and it was shown experimentally to be sufficient to enhance the regression model performance. The values of the gradient and MSE are highest at the beginning of training and lowest at stopping, which is the expected outcome of learning. The behavior of the autoencoder performance against the training epochs is depicted in Figure 5, which shows sample plots of the autoencoder performance in Experiment 1 for N = 1 at P0 and P1.
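To make the MSE-WSR cost more concrete, the following sketch evaluates one common form of it: reconstruction MSE plus an L2 weight penalty and a Kullback-Leibler sparsity penalty on the mean latent activations. This form, the sigmoid encoder, the linear decoder, and the values of `lam`, `beta`, and `rho` are all assumptions for illustration; the settings actually used are those given in the Methods section.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mse_wsr(X, W_enc, b_enc, W_dec, b_dec, lam=0.001, beta=1.0, rho=0.05):
    """MSE plus an L2 weight penalty and a KL-divergence sparsity penalty.

    lam, beta, and rho are hypothetical hyperparameter values."""
    H = sigmoid(X @ W_enc + b_enc)                 # latent features, shape (n, N)
    X_hat = H @ W_dec + b_dec                      # linear reconstruction
    mse = np.mean(np.sum((X - X_hat) ** 2, axis=1))
    weight_pen = 0.5 * (np.sum(W_enc ** 2) + np.sum(W_dec ** 2))
    rho_hat = np.clip(H.mean(axis=0), 1e-8, 1 - 1e-8)   # mean activation per unit
    sparsity_pen = np.sum(rho * np.log(rho / rho_hat)
                          + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))
    return mse + lam * weight_pen + beta * sparsity_pen, H

# Random toy inputs: 16 columns to mirror the size of the ALL-Set, N = 2.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 16))
N = 2
W_enc, b_enc = 0.1 * rng.normal(size=(16, N)), np.zeros(N)
W_dec, b_dec = 0.1 * rng.normal(size=(N, 16)), np.zeros(16)
cost, H = mse_wsr(X, W_enc, b_enc, W_dec, b_dec)
print(cost, H.shape)
```

The matrix `H` returned here plays the role of the latent feature set (AUT-Set-N) once the weights have been trained; this sketch only evaluates the cost for fixed weights and does not perform the training itself.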

Hybrid feature sets were formed by merging features from the basic feature sets. Table 8 lists the features in the basic and hybrid feature sets, together with the number of features in each, used for SWH_KU_CAL modeling for positions P0 and P1.
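Merging basic sets into a hybrid set amounts to column-wise concatenation of feature blocks that share the same observations. The sketch below assumes, based on the discussion in this section, that a HAT set combines the PCA features with an autoencoder set and that an HCAT set additionally includes the PCC features; the exact composition is the one given in Table 8, and the placeholder arrays and the helper `merge_feature_sets` are hypothetical.

```python
import numpy as np

def merge_feature_sets(*blocks):
    """Concatenate feature blocks column-wise; all blocks must share the row count."""
    if len({b.shape[0] for b in blocks}) != 1:
        raise ValueError("all feature blocks must have the same number of rows")
    return np.hstack(blocks)

n = 5                              # number of observations (toy value)
pca_set = np.zeros((n, 1))         # one principal component
aut_set_2 = np.ones((n, 2))        # N = 2 latent features
pcc_set = np.full((n, 6), 2.0)     # six thresholded features (placeholder values)

# Assumed compositions, for illustration only.
hat_2 = merge_feature_sets(pca_set, aut_set_2)
hcat_2 = merge_feature_sets(pcc_set, pca_set, aut_set_2)
print(hat_2.shape, hcat_2.shape)
```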

The performance of the GPR and NNR models trained individually on the basic and hybrid sets for modeling SWH_KU_CAL is reported in Table 9 and Table 10. Table 9 shows the RMSE and R2 values for the regressors trained on position P0 data, while Table 10 presents the regression performance for position P1. For position P0, the results show that the GPR models recorded higher prediction performance than the NNR models for all feature sets. The basic feature sets generally yielded lower regression performance than the hybrid sets. The GPR models trained on the HAT sets recorded higher performance than those trained on the other hybrid sets. The best GPR model recorded the highest R2 value of 0.92 and an RMSE value of 0.11724. This model has a Rational Quadratic kernel and was trained on the HAT-2 set. The second-best GPR model recorded an R2 value of 0.91 and was trained on the hybrid set HAT-1. Among the NNR models, the one trained on AUT-Set-2 recorded the highest performance, followed by the HAT-2-based model. The best models are highlighted in dark gray, and the second-best regressors are highlighted in light gray, in Table 9 and Table 10.
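For reference, the two metrics reported in Table 9 and Table 10 can be computed as in the short sketch below. The definitions are the standard ones for RMSE and the coefficient of determination; the sample values are hypothetical and unrelated to the paper's data.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between true and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical toy values.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(rmse(y_true, y_pred), r2_score(y_true, y_pred))
```

A lower RMSE and an R2 closer to 1 both indicate a better fit, so the two metrics are expected to rank models consistently when evaluated on the same test set.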

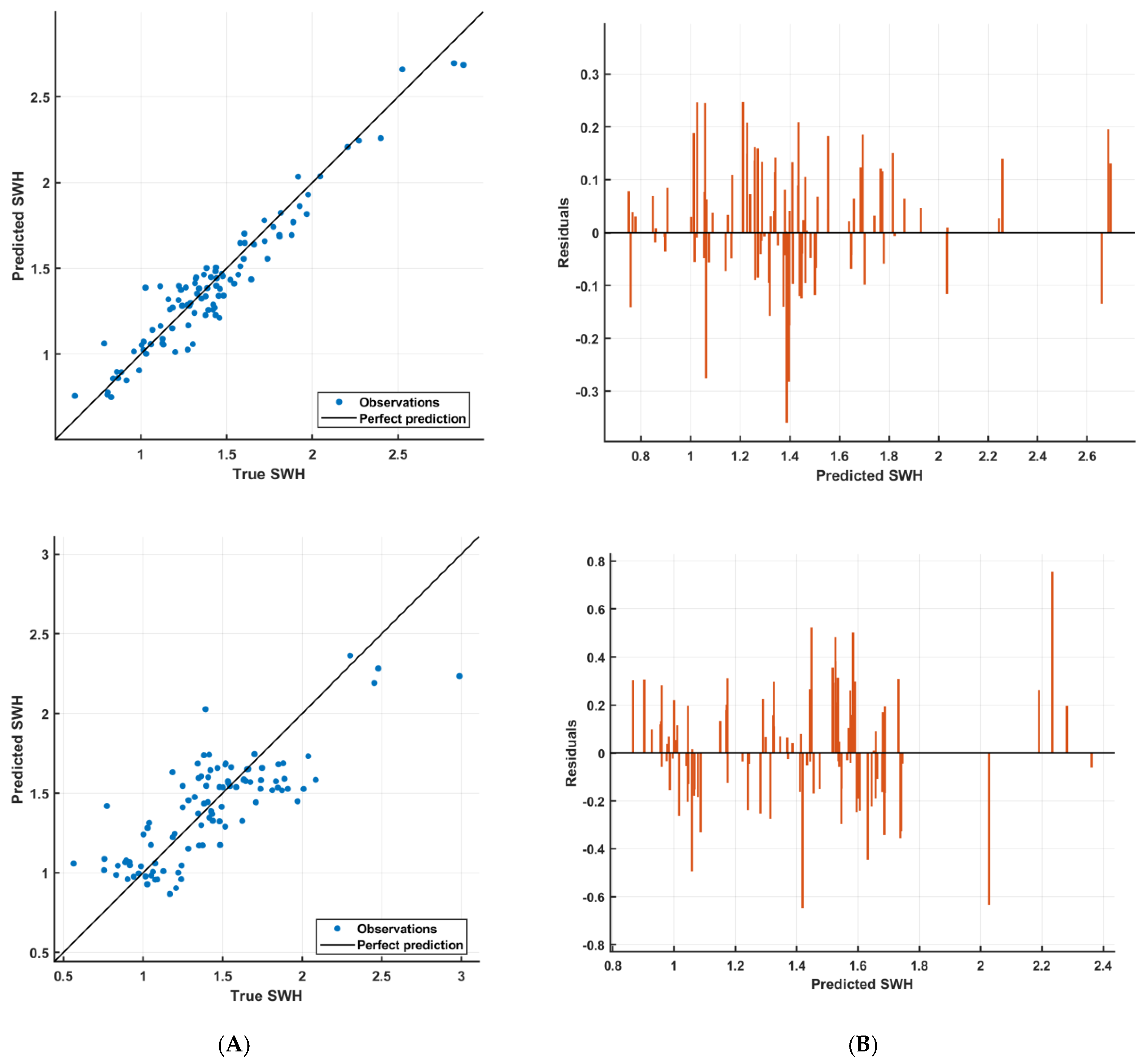

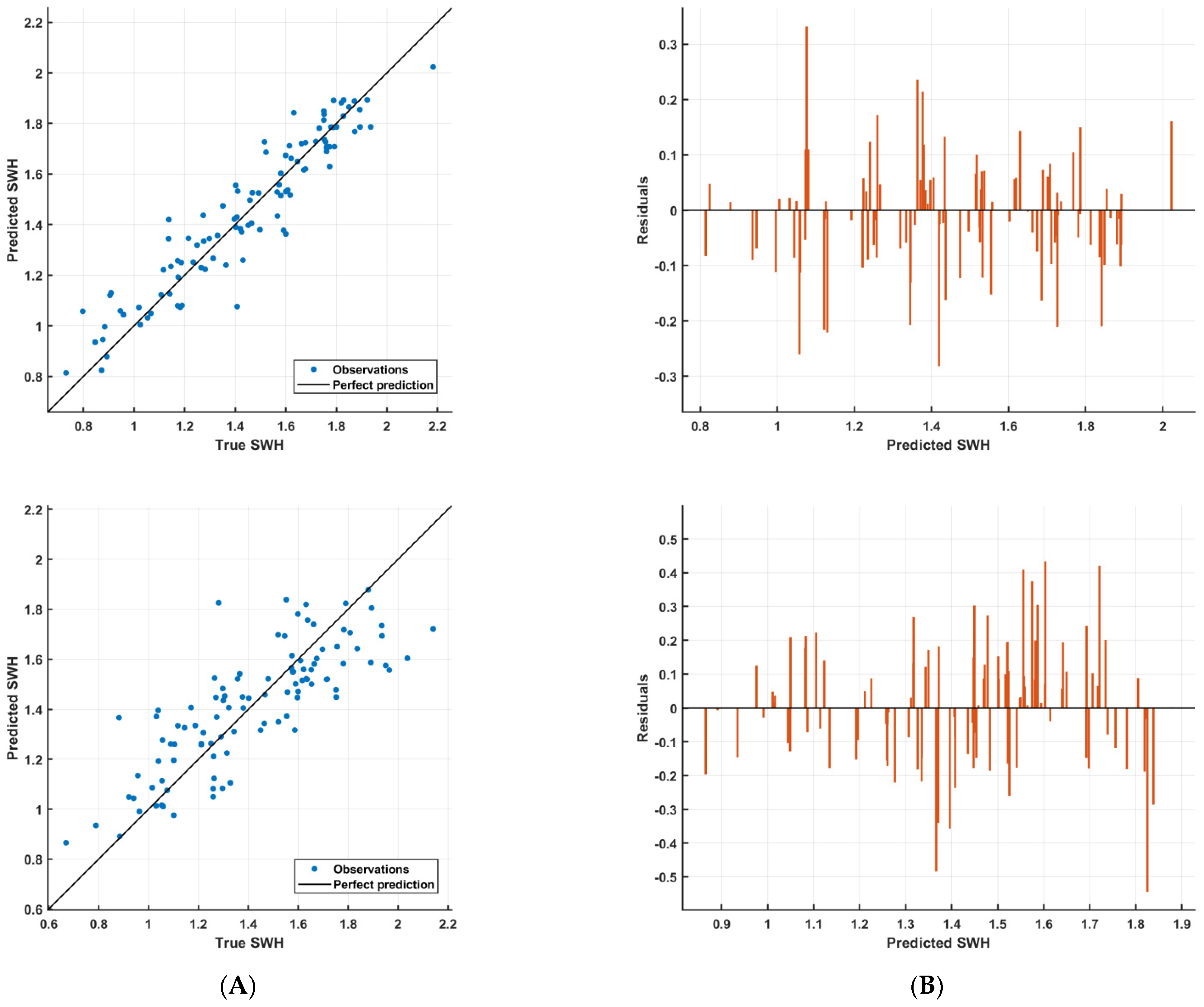

Figure 6 illustrates the goodness of fit of the SWH predictions generated for the test set by the best GPR and NNR models trained on P0 data. The plots in Figure 6 show the predicted versus true values of the response, SWH_KU_CAL, and the residuals for the best GPR and NNR models highlighted in dark gray in Table 9. The GPR model predictions are clearly closer to the diagonal line, which represents perfect prediction, than the NNR predictions. This observation is consistent with the high R2 value of the GPR model and is confirmed by the residual plot. The residuals of the GPR predictions lie within [−0.3, 0.3], while those of the NNR predictions lie within [−0.8, 0.7].

For position P1, Table 10 shows that the GPR model trained on the HAT-1 set achieved the highest R2 and outperformed the NNR models. The second-best performance was recorded by the AUT-Set-2-based GPR model with an exponential kernel. The best NNR model recorded a coefficient of determination of 0.65 and was trained on AUT-Set-3. The second-best NNR performer was the HAT-3-based model. As for P0, the GPR models achieved higher performance than the NNR models. The regressors trained on the PCC-Set, and on the hybrid HCAT sets derived from it, performed poorly. This can be attributed to the low correlation between the predictors in the PCC-Set and the target, which hindered any improvement in model performance, even after fusing the PCC, PCA, and AUT features together. The HAT sets also provided better regression performance than the PCA-Set and the AUT sets, which indicates that adding the autoencoder features to the PCA features improves prediction performance.

Figure 7 presents the goodness of fit of the SWH predictions generated by the best GPR and NNR models trained on P1 data. The plots in Figure 7 illustrate the predicted versus true values of the response, SWH_KU_CAL, and the residuals for the best GPR and NNR models on the test set. The predictions are scattered roughly symmetrically around the diagonal line for both GPR and NNR, and the predictions of the GPR model are closer to the diagonal line than the NNR predictions. This observation is reflected in the residual plots, which show the difference between the true and predicted target. The error in the predictions with respect to the true SWH values lies within [−0.3, 0.4] for the GPR model and [−0.6, 0.5] for the NNR model. The performance plots in Figure 7 reveal the superiority of the GPR model over the NNR.

To summarize the findings, the prediction performance of the best and second-best GPR and NNR regressors for positions P0 and P1 is presented in Table 11. The best average RMSEs obtained over the two positions are 0.11069 and 0.21268 for the GPR and NNR models, respectively. The GPR models provide better prediction performance than the NNR models in terms of the RMSE and R2 metrics for both positions, an observation supported by the residual plots of the regression models. The HAT feature sets boosted the GPR model performance over that obtained with the basic PCA or AUT feature sets individually. In contrast, for the NNR models, the pure autoencoder features yielded better performance than the other basic sets and the hybrid sets. Moreover, the HCAT sets yielded lower prediction performance than the AUT sets and HAT sets for both the GPR and NNR. This can be attributed to the low correlation of the original predictors in the PCC-Set with the response variable: adding such features to the PCA and autoencoder-derived features prevented any significant improvement in model performance. Overall, the autoencoder-derived features improved the prediction performance of the GPR and NNR models over the basic feature sets.

To discuss the results from the sea area (site) perspective, the PCC analysis showed that the DIST2COAST, BOT_DEPTH, LONGITUDE, and LATITUDE-related features are not significantly correlated with SWH for either position P0 or P1 (these features recorded very low PCC values). This suggests that these site-related features do not contribute significantly to SWH measurements. In contrast, the measured features generally showed higher PCC values than the site-related features and thus could have a greater effect on SWH measurements. The measured features, especially the wind speed, are intermittent and stochastic in nature. Moreover, the data of the two positions were collected over different times, and the two positions are approximately 69 miles apart in the east-west direction, which means that the two sites had different sea states at the time of data acquisition. Such variations would explain the difference in the best feature sets of the two positions (HAT-2 for P0 versus HAT-1 for P1 for the GPR, and AUT-Set-2 versus AUT-Set-3 for the NNR). Nonetheless, the best feature sets for both sites were based on the autoencoder-derived features, which demonstrates the effectiveness of this technique in extracting significant features from the original data. The autoencoder-derived features even improved the prediction performance when combined with the PCA features (in the HAT feature sets).

4.2. Hypothesis Testing for Feature Significance

To reinforce the findings of the current study, the significance of the features included in the feature sets that yielded the highest prediction performance of the GPR and NNR models is examined using hypothesis testing. The ANOVA F-test was used to assess the significance of the features in the HAT-2 and AUT-Set-2 feature sets for P0 data, as well as the features in HAT-1 and AUT-Set-3 for P1. In this test, the input features are used to model the response variable with a linear regression model, and the significance of the estimated model coefficients is determined through statistical metrics, namely the F-value and p-value. The null hypothesis of the test, H0, assumes that there is no relationship between the response variable, SWH, and the input features, i.e., all predictor coefficients are zero. The alternative hypothesis, H1, states that at least one of the predictor coefficients is nonzero. The outcomes of the ANOVA test of the significance of the four feature sets in predicting the SWH are reported in Table 12. The significance level for the p-value is set to 0.05. The values obtained for both the F-value and the p-value indicate that there is a significant association between the response variable, SWH, and the input predictors for all feature sets. Therefore, the null hypothesis can be rejected, and the significance of the examined autoencoder-derived features and hybrid features is confirmed.
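The overall F-test described above can be sketched as follows. This is a minimal illustration assuming an ordinary least squares fit with an intercept; the function name `overall_f_statistic` and the synthetic data are hypothetical and do not reproduce the values in Table 12.

```python
import numpy as np

def overall_f_statistic(X, y):
    """F-statistic for H0: all slope coefficients of the linear model y ~ X are zero."""
    n, p = X.shape
    A = np.column_stack([np.ones(n), X])           # add an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)   # ordinary least squares fit
    resid = y - A @ coef
    ss_res = float(resid @ resid)                  # residual sum of squares
    ss_tot = float(np.sum((y - y.mean()) ** 2))    # total sum of squares
    ss_reg = ss_tot - ss_res                       # explained sum of squares
    f_value = (ss_reg / p) / (ss_res / (n - p - 1))
    return f_value, (p, n - p - 1)                 # statistic and degrees of freedom

# Synthetic data in which two of three predictors truly drive the response.
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 3))
y = 2.0 * X[:, 0] - 1.0 * X[:, 2] + 0.5 * rng.normal(size=100)
f_value, dof = overall_f_statistic(X, y)
print(f_value, dof)
```

The p-value is the upper-tail probability of the F distribution with these degrees of freedom, which can be obtained, for example, with `scipy.stats.f.sf(f_value, *dof)`; H0 is rejected when that value falls below the chosen significance level of 0.05.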

The prediction performance of the SWH regression models trained on the feature sets generated using the proposed deep-learning-based approach is further evaluated against the state of the art. Numerous studies have addressed the problem of SWH prediction from satellite data from different perspectives and using various types of satellite data. For a meaningful benchmark, only studies that tackled SWH prediction using the IMOS Surface Waves Sub-Facility dataset are considered for comparison. This dataset was published in 2019 and has received little coverage in the literature; only a single recent study was found to use it for the prediction of SWH. The study by Quach et al. [35] investigated the use of deep learning to predict significant wave height from a dataset created from collocations between Sentinel-1 SAR and altimeter satellite observations from the IMOS dataset. Quach et al. integrated features from the IMOS altimeter data with a number of CWAVE features derived from the SAR image modulation spectra and developed a deep-learning-based regression model for SWH prediction. That study reports an improved RMSE of 0.26 for the deep learning model. In our study, we employed the autoencoder deep learning network to generate significant features from the altimeter observations for the prediction of SWH using GPR and NNR. The proposed deep-learning-based feature generation method yielded average RMSE values of 0.11069 and 0.21268 for the GPR and NNR models, respectively. Therefore, the deep-learning-based SWH modeling approach proposed in the present study provides improved prediction performance over the state of the art.