Capacity Estimation of Solar Farms Using Deep Learning on High-Resolution Satellite Imagery

Abstract

1. Introduction

1.1. Motivation

1.2. Previous Work

1.3. Problem Statement

- How can deep learning best be used to detect solar farms in satellite imagery and extract the polygon areas they occupy?

- Apart from verifying the existence and geographic location of a solar farm, can we estimate the number of individual panels?

- What is the best way to use this information to predict how much solar energy is generated annually?

1.4. Contributions

- We present a deep learning model for solar farm detection that achieves highly competitive performance metrics, including a mean accuracy of 96.87% and a Jaccard Index (intersection over union of classified pixels) of 95.5%.

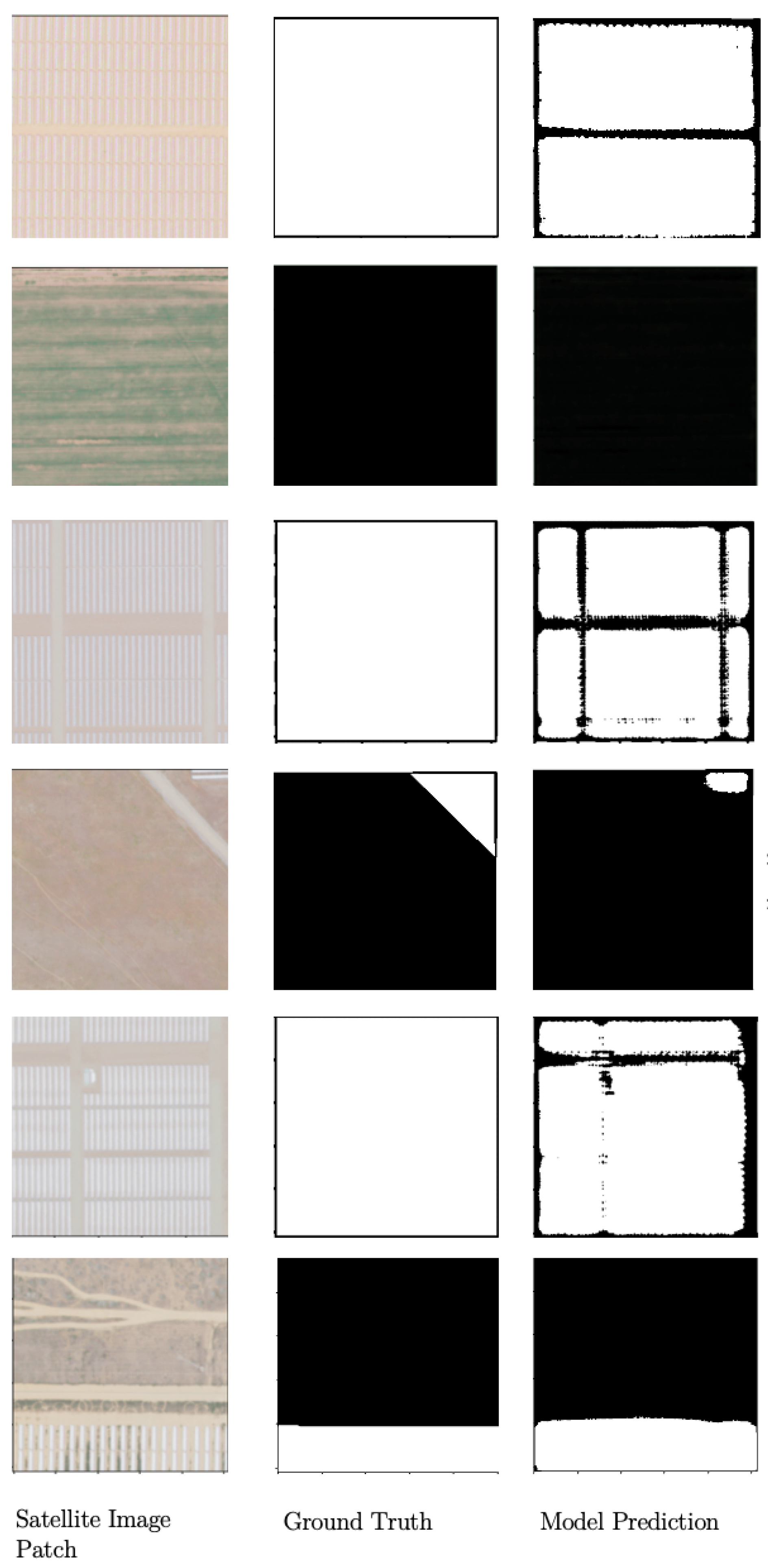

- Our model detects the spaces between panels and the pathways between panel rows, producing a segmentation output that, subjectively, is better than human labeling. This has produced some of the most accurate detections compared with the existing literature.

- We share the original, pixel-wise labeled dataset of solar farms comprising 23,000 images (256 × 256 pixels each) on which the model was trained.

- Finally, we propose an original capacity evaluation model that extracts panel count, panel area, energy generation estimates, etc., for the detected solar energy facilities; these estimates were validated against publicly available data with an average error of 4.5%.

2. Materials and Methods

2.1. Dataset

2.2. Dataset Augmentation

2.3. Deep Learning Model Architecture

2.4. Model Evaluation

2.5. Capacity Evaluation Model

3. Results

3.1. Performance Metrics

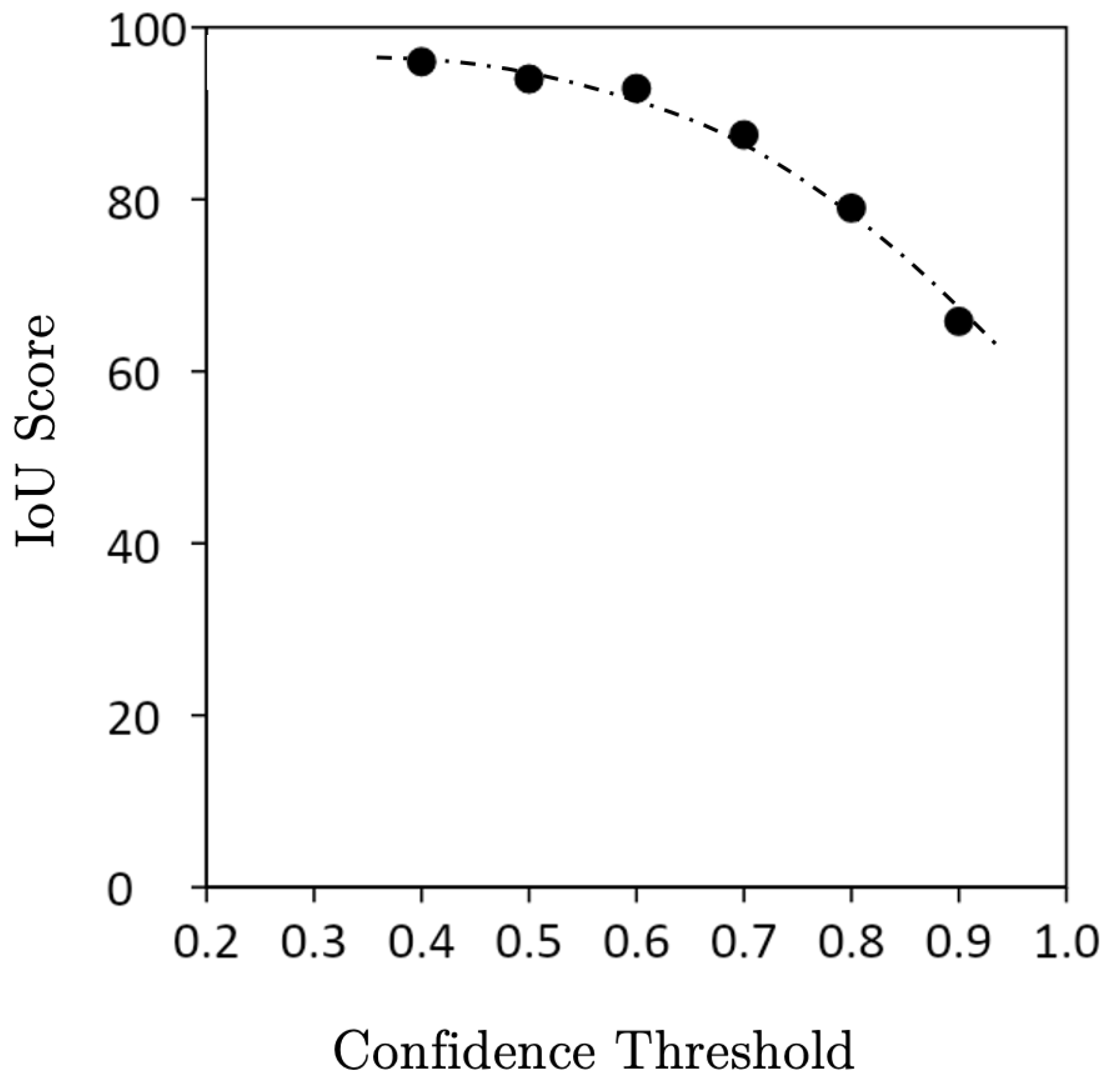

3.2. Effect of Confidence Threshold

3.3. Effect of Image Augmentation

3.4. Capacity Evaluation

4. Conclusions

- A semantic segmentation model that achieved strong performance metrics, including a mean accuracy of 96.87% and a Jaccard Index of 95.5% (compared to SolarNet’s 94.2%), and that is capable of highly precise and detailed detections. This has resulted in arguably some of the most precise and accurate solar farm detection imagery in the literature.

- An original, pixel-wise labeled dataset of solar farms, sourced, annotated, and built for this problem, comprising 23,000 images of 256 × 256 pixels on which the model was trained.

- A capacity evaluation model that extracts panel count, panel area, energy generation estimates, etc., for the detected solar energy facilities, whose estimates were validated against publicly available data to within 10% error, with an average error of 4.5%.

Future Work

- Exploring newer neural net architectures and conducting a more detailed optimization study.

- Exploring other data sources, including hyperspectral imagery.

- Testing the performance of the CNN on data from other countries, incorporating additional training data if necessary. Automatic deployment over large geographical areas, such as entire states and countries, also remains to be done.

- Improving the accuracy and robustness of the capacity model. We arrived at reasonably close estimates of solar farm areas, numbers of panels, and even annual energy generation, but their accuracy is inconsistent across farms. We enumerated several possible reasons for this inconsistency, related to temporal changes, reporting, and data collection. With cleaner and more reliable reference data, the parameters/constants in the model, such as the packing factor, can be updated with a least squares fit.

- Identifying trends and consequently underserved areas with high solar energy potential. The CNN can be deployed on the imagery of various regions to assess the deployment of commercial PV over time, and garner insights regarding the impacts of historical political, social, and economic factors on the deployment of solar renewable energy technology at scale.

- Identifying solar panel defects such as cracked solar cells, broken glass, and dust/sand build-up. Such defects are unlikely to be detectable in imagery with a resolution of 0.4–0.7 m, so this will have to be done with drone imagery.
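Fitting a single multiplicative constant such as the packing factor by least squares has a closed form: minimizing Σ(k·aᵢ − bᵢ)² over k gives k = Σaᵢbᵢ / Σaᵢ². The following is a minimal sketch, assuming that detected footprint areas aᵢ and reliable reference panel areas bᵢ are available for several farms; the function name `fit_packing_factor` and the example numbers are hypothetical and are not taken from this study.

```python
def fit_packing_factor(detected_areas, reference_panel_areas):
    """Least-squares k in panel_area ~= k * detected_area (line through the origin).

    Both arguments are plain Python sequences of areas in the same unit (e.g. km^2).
    """
    if len(detected_areas) != len(reference_panel_areas) or len(detected_areas) == 0:
        raise ValueError("need two equal-length, non-empty sequences")
    # Closed-form solution of min_k sum((k*a - b)^2): k = sum(a*b) / sum(a^2)
    num = sum(a * b for a, b in zip(detected_areas, reference_panel_areas))
    den = sum(a * a for a in detected_areas)
    return num / den


# Hypothetical detected areas and reference panel areas (km^2) for three farms.
detected = [12.0, 8.0, 14.0]
reference = [5.0, 3.1, 5.6]
print(f"fitted packing factor: {fit_packing_factor(detected, reference):.3f}")
```

Fitting through the origin keeps the model's proportional form (panel area scales with detected area); an intercept term could be added if the residuals suggest a fixed offset.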

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CF | Capacity factor |
| CNN | Convolutional neural network |
| FCN | Fully connected network |
| FN | False Negative |
| FP | False Positive |
| GIS | Geographic Information System |
| GSD | Ground Sampling Distance |
| IoU | Intersection over Union |
| mAcc | Mean accuracy |
| mIoU | Mean IoU |
| NAIP | National Agriculture Imagery Program |
| NREL | National Renewable Energy Laboratory |
| PV | Photovoltaics |
| PySAM | NREL Python System Advisor Model |
| QGIS | Quantum GIS |
| ReLU | Rectified Linear Unit |
| TN | True Negative |
| TP | True Positive |
| UNet | “U” Network |
| USDA | United States Department of Agriculture |
| USGS | United States Geological Survey |

Appendix A

References

- Chu, S.; Majumdar, A. Opportunities and challenges for a sustainable energy future. Nature 2012, 488, 294–303.

- BP Statistical Review of World Energy 2018: Two Steps Forward, One Step Back. Available online: https://www.bp.com/en/global/corporate/news-and-insights/press-releases/bp-statistical-review-of-world-energy-2018.html (accessed on 10 October 2022).

- Kruitwagen, L.; Story, K.T.; Friedrich, J.; Byers, L.; Skillman, S.; Hepburn, C. A global inventory of photovoltaic solar energy generating units. Nature 2021, 598, 604–610.

- International Solar Alliance. Available online: https://newsroom.unfccc.int/news/international-solar-alliance (accessed on 10 October 2022).

- Yu, J.; Wang, Z.; Majumdar, A.; Rajagopal, R. DeepSolar: A Machine Learning Framework to Efficiently Construct a Solar Deployment Database in the United States. Joule 2018, 2, 2605–2617.

- Hou, X.; Wang, B.; Hu, W.; Yin, L.; Wu, H. SolarNet: A Deep Learning Framework to Map Solar Power Plants In China From Satellite Imagery. arXiv 2019, arXiv:1912.03685.

- Malof, J.M.; Bradbury, K.; Collins, L.M.; Newell, R.G. Automatic detection of solar photovoltaic arrays in high resolution aerial imagery. Appl. Energy 2016, 183, 229–240.

- National Agriculture Imagery Program (NAIP). Available online: https://naip-usdaonline.hub.arcgis.com/ (accessed on 10 October 2022).

- Science for a Changing World. Available online: https://www.usgs.gov/ (accessed on 10 October 2022).

- Biscione, V.; Bowers, J.S. Convolutional Neural Networks Are Not Invariant to Translation, but They Can Learn to Be. arXiv 2021, arXiv:2110.05861.

- Agnew, S.; Dargusch, P. Effect of residential solar and storage on centralized electricity supply systems. Nat. Clim. Chang. 2015, 5, 315–318.

- Ekim, B.; Sertel, E. A Multi-Task Deep Learning Framework for Building Footprint Segmentation. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021.

- NREL-PySAM 3.0.0 Documentation. Available online: https://nrel-pysam.readthedocs.io/en/latest/version_changes/3.0.0.html (accessed on 10 October 2022).

| Solar Farm | Location | Capacity (mW) | Train/Test | Images | Labels |
|---|---|---|---|---|---|
| Mount Signal | Imperial County, CA, 32°40′24″ N, 115°38′23″ W | 1165 | Train | 4000 | 4000 |
| Techren Solar | Boulder, NV, 35°47′ N, 114°59′ W | 700 | Train | 2500 | 2500 |
| Topaz Solar | San Luis Obispo, CA, 35°23′ N, 120°4′ W | 550 | Train | 6000 | 6000 |
| Copper Mountain Solar | El Dorado, NV, 35°47′ N, 114°59′ W | 298 | Train | 2500 | 2500 |
| Desert Sunlight | Desert Center, CA, 33°49′33″ N, 115°24′08″ W | 1287 | Test | 4500 | 4500 |
| Agua Caliente | Yuma County, AZ, 32°57.2′ N, 113°29.4′ W | 740 | Test | 4500 | 4000 |
| Solar Star | Rosamond, CA, 34°49′50″ N, 118°23′53″ W | 831 | Test | 4000 | - |
| Springbok | Kern County, CA, 35.25° N, 117.96° W | 717 | Test | 4500 | - |
| Great Valley Solar | Fresno County, CA, 36°34′52″ N, 120°22′46″ W | 200 | Test | 4000 | - |
| Mesquite | Maricopa County, AZ, 33°20′ N, 112°55′ W | 400 | Test | 2000 | - |

| Metric | Description | Result |
|---|---|---|
| pAcc (Pixel Accuracy) | Correctly classified pixels / total pixels | 99.19% |
| mAcc (Mean Accuracy) | Per-class accuracy averaged over classes, at the optimal confidence threshold | 96.87% |
| mIoU (Mean IoU/Jaccard Index) | Overlap between mask and prediction, averaged over classes | 95.5% |
| fIoU (Frequency-Weighted IoU) | IoU computed for each class and weighted by class frequency | 97% |
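The metrics in this table follow the standard semantic-segmentation definitions, built from the TP, FP, FN, and TN pixel counts. The following is a minimal sketch of how they can be computed from a predicted probability map and a ground-truth mask, assuming a binary solar-farm/background labeling; the function name `segmentation_metrics` and the default threshold of 0.5 are illustrative assumptions, not the authors' code or their optimal threshold.

```python
import numpy as np


def segmentation_metrics(prob, truth, threshold=0.5):
    """Pixel-level metrics for a binary (solar farm vs. background) segmentation.

    prob:      per-pixel solar-farm probabilities in [0, 1]
    truth:     ground-truth labels (1 = solar farm, 0 = background)
    threshold: confidence threshold used to binarize the prediction (hypothetical default)
    Assumes both classes occur in the ground truth, otherwise some ratios are undefined.
    """
    pred = np.asarray(prob) >= threshold
    gt = np.asarray(truth).astype(bool)

    tp = np.sum(pred & gt)    # solar pixels correctly detected
    tn = np.sum(~pred & ~gt)  # background pixels correctly rejected
    fp = np.sum(pred & ~gt)   # background predicted as solar
    fn = np.sum(~pred & gt)   # solar pixels missed
    total = tp + tn + fp + fn

    # Per-class values, ordered [background, solar farm]
    acc = [tn / (tn + fp), tp / (tp + fn)]          # per-class accuracy (recall)
    iou = [tn / (tn + fp + fn), tp / (tp + fp + fn)]  # per-class IoU (Jaccard)
    freq = [(tn + fp) / total, (tp + fn) / total]    # share of ground-truth pixels per class

    return {
        "pAcc": float((tp + tn) / total),
        "mAcc": float(np.mean(acc)),
        "mIoU": float(np.mean(iou)),
        "fIoU": float(np.dot(freq, iou)),
    }


print(segmentation_metrics([0.9, 0.8, 0.3, 0.1], [1, 1, 1, 0]))
```

An optimal threshold could be chosen by evaluating the function over a grid of `threshold` values and keeping the best score; the exact selection procedure behind the reported mAcc is not specified here.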

| Solar Farm | Pixels Counted (millions) | Area Detected (km²) | Area Reported (km²) | Panel Area (km²) |
|---|---|---|---|---|
| Mount Signal | 34.27 | 12.34 | 15.9 | 4.93 |
| Agua Caliente | 21.65 | 7.79 | 9.7 | 3.12 |
| Desert Sunlight | 38.53 | 13.87 | 16 | 5.55 |
| Solar Star | 25.33 | 9.12 | 13 | 3.65 |
| Springbok | 18.33 | 5.52 | 5.7 | 2.21 |
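The Area Detected and Panel Area columns are consistent with a simple conversion chain: if Pixels Counted is in millions of pixels and each pixel covers 0.6 m × 0.6 m, then 34.27 × 0.36 ≈ 12.34 km², and Panel Area ≈ 0.4 × Area Detected (e.g., 4.93 / 12.34 ≈ 0.40). The minimal sketch below encodes that chain; the 0.6 m ground sampling distance and the 0.4 packing factor are inferred from the table ratios and are assumptions, not values stated in this section. It reproduces the Mount Signal, Agua Caliente, Desert Sunlight, and Solar Star rows to two decimals.

```python
GSD_M = 0.6           # assumed ground sampling distance (metres per pixel side)
PACKING_FACTOR = 0.4  # assumed share of the detected footprint covered by modules


def detected_area_km2(pixels_millions, gsd_m=GSD_M):
    """Ground area (km^2) of the pixels classified as solar farm."""
    # 1e6 pixels x gsd_m^2 (m^2 per pixel) / 1e6 (m^2 per km^2): the factors cancel
    return pixels_millions * gsd_m ** 2


def panel_area_km2(area_km2, packing_factor=PACKING_FACTOR):
    """Area physically covered by modules inside the detected footprint."""
    return area_km2 * packing_factor


rows = [("Mount Signal", 34.27), ("Agua Caliente", 21.65),
        ("Desert Sunlight", 38.53), ("Solar Star", 25.33)]
for farm, pixels in rows:
    area = detected_area_km2(pixels)
    print(f"{farm}: detected {area:.2f} km^2, panels {panel_area_km2(area):.2f} km^2")
```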

| Solar Farm | Panel Type | Panel Area (km²) | # Panels Counted (millions) | # Panels Reported (millions) | Error (%) |
|---|---|---|---|---|---|
| Mount Signal | FS 3&4 | 4.93 | 6.85 | 6.8 | <1% |
| Agua Caliente | FS S4 | 3.12 | 4.33 | 4.8 | 9.7% |
| Desert Sunlight | FS S4 | 5.55 | 7.71 | 8.0 | 3.6% |
| Solar Star | SunPower | 3.65 | 1.55 | 1.7 | 8.8% |
| Springbok | FS S4 | 2.21 | 3.07 | 3.0 | 2.3% |
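Dividing Panel Area by the footprint of a single module gives the panel count. For the FS rows, the ratio Panel Area / # Panels Counted is about 0.72 m² (e.g., 4.93 km² / 6.85 million), which matches a 1.2 m × 0.6 m module. The minimal sketch below assumes that footprint (inferred from the table, not stated in this section) and computes the Error (%) column as the absolute deviation relative to the reported count; the function names are hypothetical.

```python
MODULE_AREA_M2 = 1.2 * 0.6  # assumed module footprint (1.2 m x 0.6 m), inferred from the table


def panel_count_millions(panel_area_km2, module_area_m2=MODULE_AREA_M2):
    """Estimated number of modules (in millions) covering a given panel area."""
    # km^2 -> m^2 is x1e6 and "millions of panels" is /1e6, so the factors cancel
    return panel_area_km2 / module_area_m2


def percent_error(estimated, reported):
    """Absolute error relative to the reported value, in percent."""
    return abs(estimated - reported) / reported * 100.0


rows = [("Mount Signal", 4.93, 6.8), ("Desert Sunlight", 5.55, 8.0), ("Springbok", 2.21, 3.0)]
for farm, area, reported in rows:
    counted = panel_count_millions(area)
    print(f"{farm}: {counted:.2f} M panels, error {percent_error(counted, reported):.1f}%")
```

The Solar Star row uses a different module type, so a different footprint would apply there.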

| Solar Farm | # Panels Counted (millions) | # Panels Reported (millions) | Annual Energy Calculated (GWh) | Annual Energy Reported (GWh) | Capacity Evaluation Error (%) | Max. Error (%) |
|---|---|---|---|---|---|---|
| Mount Signal | 6.85 | 6.8 | 1165.1 | 1197 | 2.7% | 2.7% |
| Agua Caliente | 4.33 | 4.8 | 736.0 | 740 | <1% | 9.7% |
| Desert Sunlight | 7.71 | 8.0 | 1309.9 | 1287 | 1.8% | 3.6% |
| Solar Star | 1.55 | 1.7 | 861.2 | 831 | 3.7% | 8.8% |
| Springbok | 3.07 | 3.0 | 623.2 | 717 | 13.1% | 13.1% |
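The error columns in this table can be reproduced in the same way: each error is the absolute deviation from the reported value, and the last column takes the larger of the panel-count error and the generation error. The sketch below also includes a simplified generation estimate, annual energy = panels × module rating × capacity factor × 8760 h. This formula is a stand-in assumption (a tool such as NREL's PySAM would model generation in far more detail), and the module rating and capacity factor in the usage example are hypothetical, not the values behind the table.

```python
HOURS_PER_YEAR = 8760


def annual_energy_gwh(panels_millions, module_watts, capacity_factor):
    """Simplified annual generation from panel count, module rating, and capacity factor."""
    nameplate_mw = panels_millions * module_watts  # (1e6 panels x W) / 1e6 = MW
    return nameplate_mw * capacity_factor * HOURS_PER_YEAR / 1000.0  # MWh -> GWh


def percent_error(estimated, reported):
    """Absolute error relative to the reported value, in percent."""
    return abs(estimated - reported) / reported * 100.0


# Hypothetical inputs: 1 million 100 W modules at a 20% capacity factor.
print(f"{annual_energy_gwh(1.0, 100.0, 0.20):.1f} GWh")

# Values copied from the Springbok row of the table.
panel_err = percent_error(3.07, 3.0)
energy_err = percent_error(623.2, 717)
print(f"panel {panel_err:.1f}%, energy {energy_err:.1f}%, max {max(panel_err, energy_err):.1f}%")
```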

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ravishankar, R.; AlMahmoud, E.; Habib, A.; de Weck, O.L. Capacity Estimation of Solar Farms Using Deep Learning on High-Resolution Satellite Imagery. Remote Sens. 2023, 15, 210. https://doi.org/10.3390/rs15010210
