Indoor–Outdoor Point Cloud Alignment Using Semantic–Geometric Descriptor

Abstract

1. Introduction

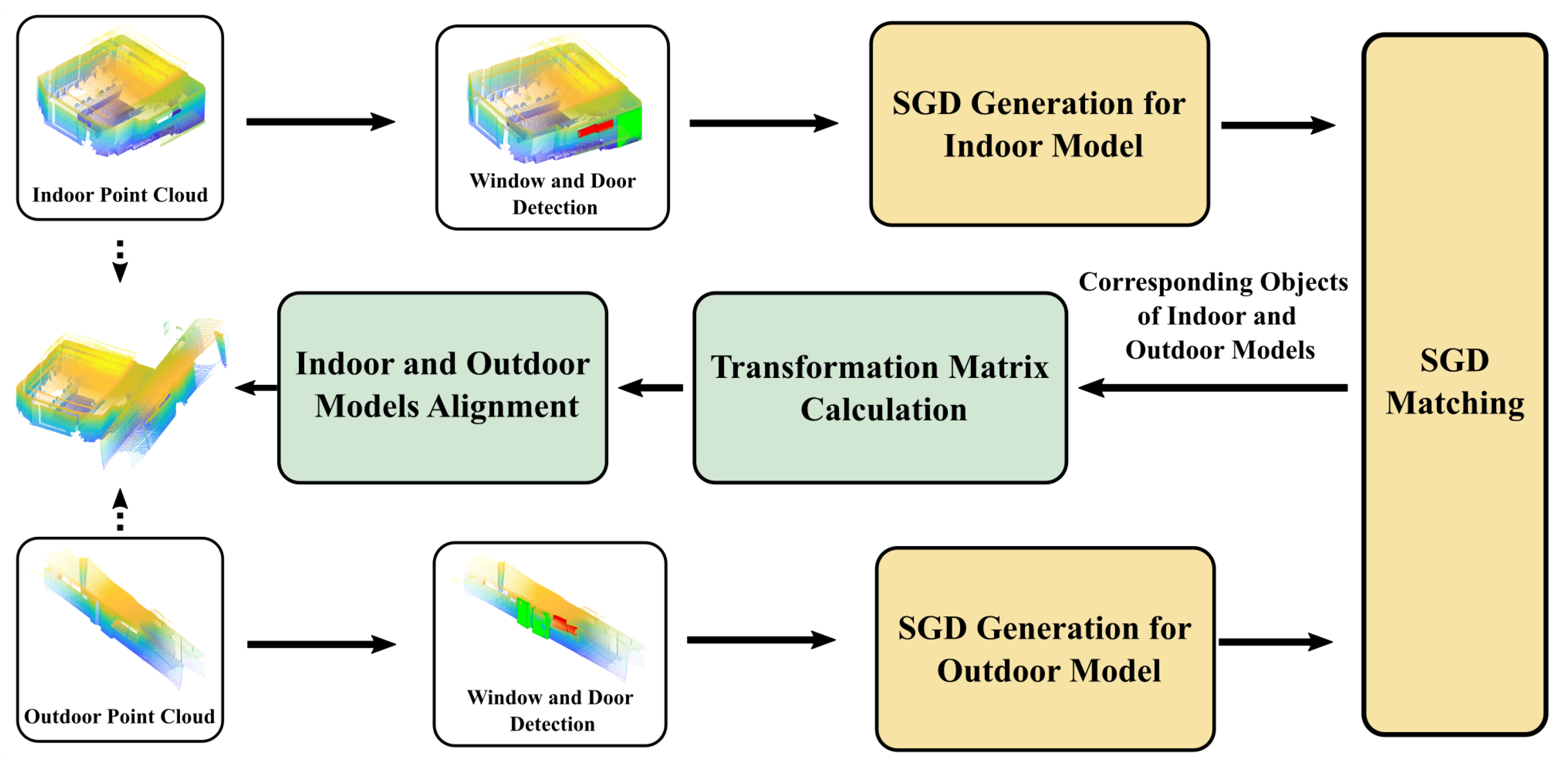

- A novel framework to use semantic objects in indoor–outdoor point cloud alignment tasks is proposed. It is the first work to include the objects’ distribution pattern in model matching, which inherently prevents the ambiguity caused by objects’ shape similarity.

- A unique feature descriptor called the SGD is proposed to include both the semantic information and relative spatial relationship of 3D objects in a scene. The Hungarian algorithm is improved to detect the same object distribution patterns automatically and output optimal matches.

- The algorithms are tested on both an experimental dataset and a public dataset. The results show that the SGD-based indoor–outdoor alignment method can provide robust matching results and achieve matching accuracy at the centimeter level.

2. Related Works

3. SGD Construction

3.1. Window and Door Detection in Point Clouds

3.2. SGD Design

4. Semantic Object Matching with SGDs

4.1. SGDU Distance Definition

4.2. SGD Matching

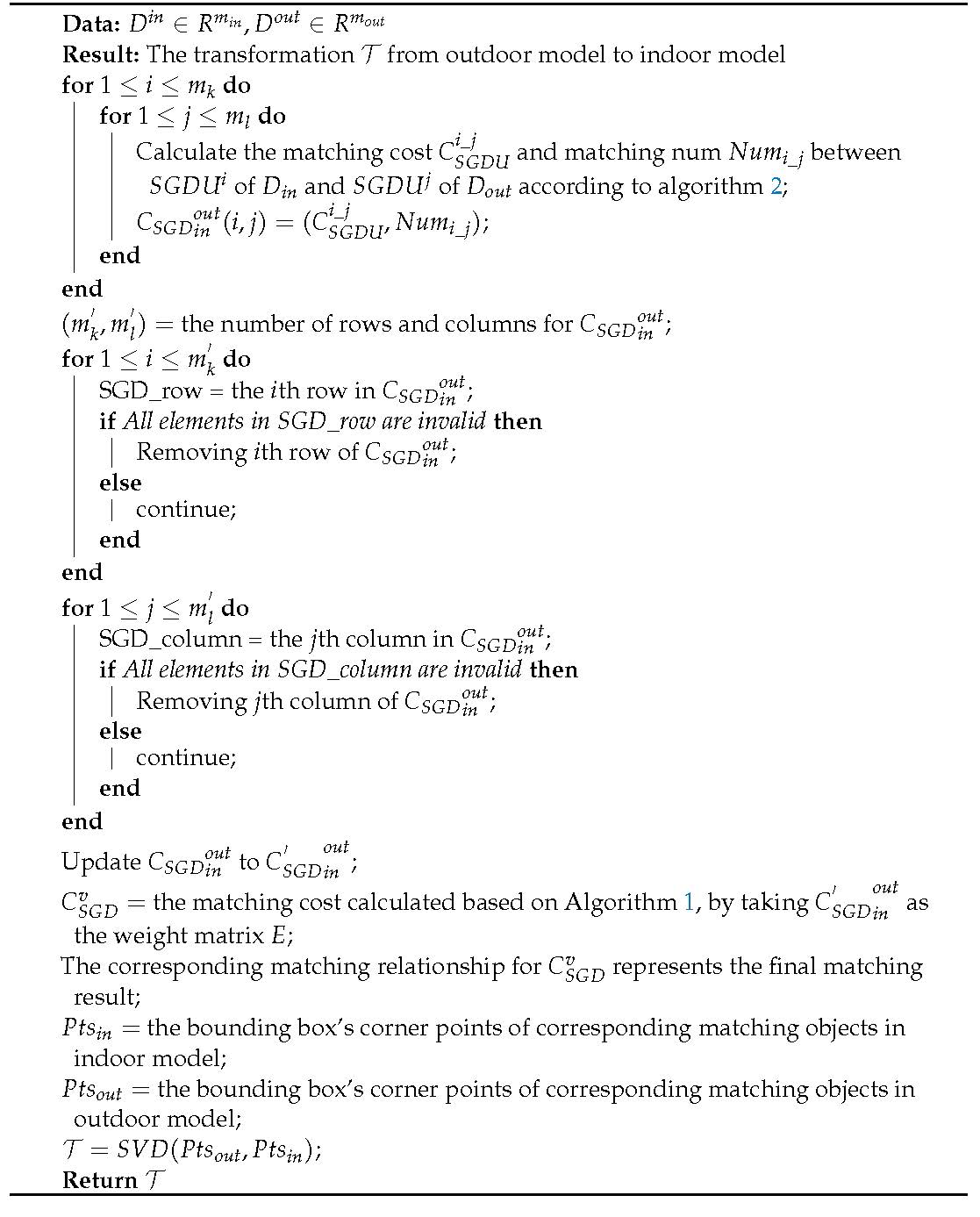

| Algorithm 1: The improved Hungarian algorithm |

|

| Algorithm 2: Calculating the match cost between two SGDUs |

|

| Algorithm 3: SGD matching and transformation calculation between indoor and outdoor models |

|

5. Experimental Results and Discussion

5.1. Experimental Dataset Description

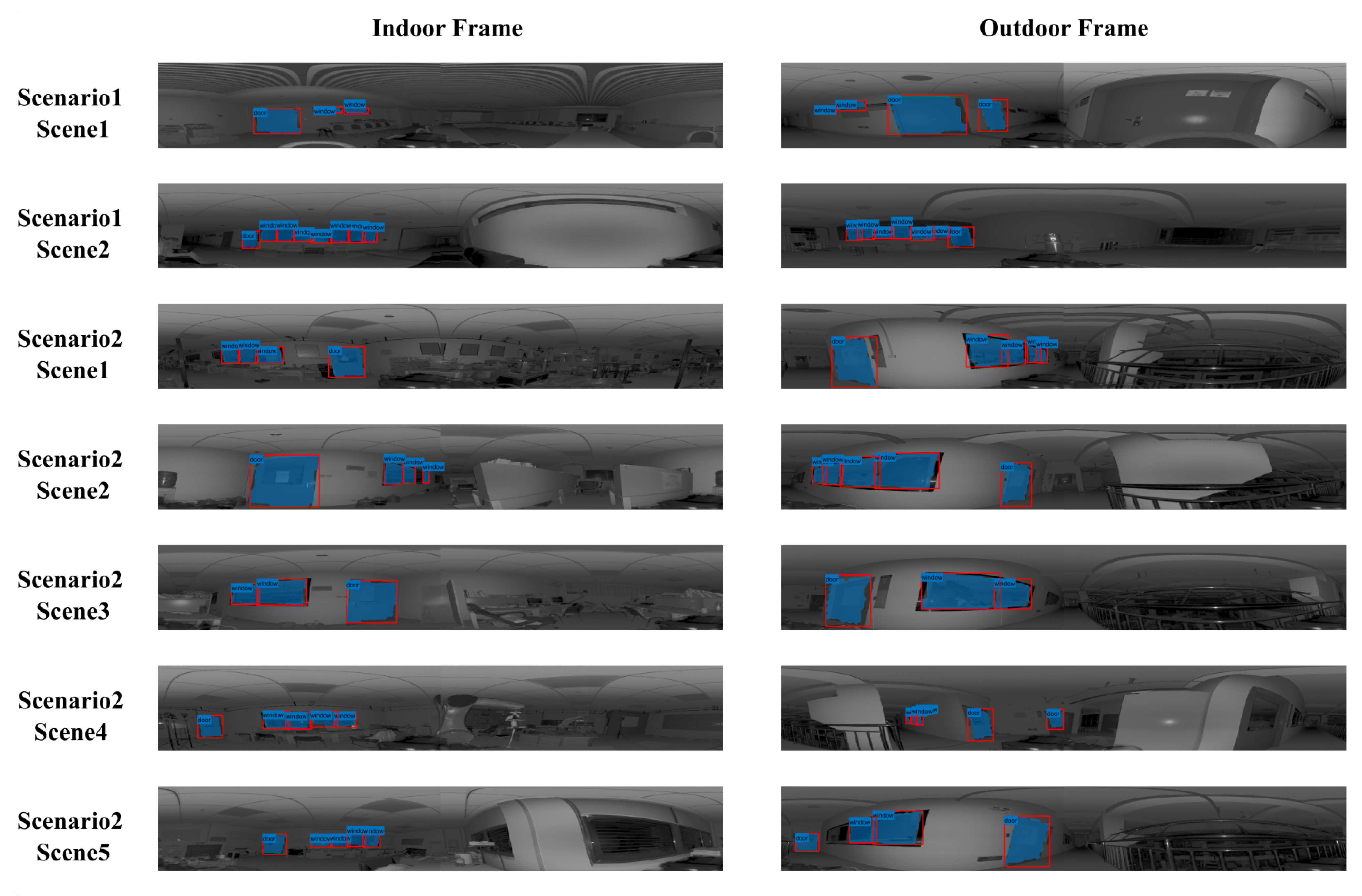

5.2. Window and Door Detection Results

5.3. Indoor and Outdoor Model Alignment Results

5.4. Evaluation on Public Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Soheilian, B.; Tournaire, O.; Paparoditis, N.; Vallet, B.; Papelard, J.P. Generation of an integrated 3D city model with visual landmarks for autonomous navigation in dense urban areas. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; IEEE: Gold Coast City, Australia, 2013; pp. 304–309. [Google Scholar] [CrossRef]

- Dudhee, V.; Vukovic, V. Building information model visualisation in augmented reality. Smart Sustain. Built Environ. 2021. ahead-of-print. [Google Scholar] [CrossRef]

- Tariq, M.A.; Farooq, U.; Aamir, E.; Shafaqat, R. Exploring Adoption of Integrated Building Information Modelling and Virtual Reality. In Proceedings of the 2019 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Swat, Pakistan, 24–25 July 2019; IEEE: Swat, Pakistan, 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Wang, C.; Wen, C.; Dai, Y.; Yu, S.; Liu, M. Urban 3D modeling with mobile laser scanning: A review. Virtual Real. Intell. Hardw. 2020, 2, 175–212. [Google Scholar] [CrossRef]

- Cao, Y.; Li, Z. Research on Dynamic Simulation Technology of Urban 3D Art Landscape Based on VR-Platform. Math. Probl. Eng. 2022, 2022, 3252040. [Google Scholar] [CrossRef]

- López, F.J.; Lerones, P.M.; Llamas, J.; Gómez-García-Bermejo, J.; Zalama, E. A review of heritage building information modeling (H-BIM). Multimodal Technol. Interact. 2018, 2, 21. [Google Scholar] [CrossRef]

- Koch, T.; Korner, M.; Fraundorfer, F. Automatic alignment of indoor and outdoor building models using 3D line segments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 10–18. [Google Scholar]

- Djahel, R.; Vallet, B.; Monasse, P. Detecting Openings For Indoor/Outdoor Registration. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 177–184. [Google Scholar] [CrossRef]

- Assi, R.; Landes, T.; Murtiyoso, A.; Grussenmeyer, P. Assessment of a Keypoints Detector for the Registration of Indoor and Outdoor Heritage Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 133–138. [Google Scholar] [CrossRef]

- Pan, Y.; Yang, B.; Liang, F.; Dong, Z. Iterative global similarity points: A robust coarse-to-fine integration solution for pairwise 3d point cloud registration. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 180–189. [Google Scholar]

- Li, Z.; Zhang, X.; Tan, J.; Liu, H. Pairwise Coarse Registration of Indoor Point Clouds Using 2D Line Features. ISPRS Int. J. Geo-Inf. 2021, 10, 26. [Google Scholar] [CrossRef]

- Djahel, R.; Vallet, B.; Monasse, P. Towards Efficient Indoor/outdoor Registration Using Planar Polygons. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, 2, 51–58. [Google Scholar] [CrossRef]

- Previtali, M.; Barazzetti, L.; Brumana, R.; Scaioni, M. Laser scan registration using planar features. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Riva del Garda, Italy, 23–25 June 2014; Volume 45. [Google Scholar]

- Favre, K.; Pressigout, M.; Marchand, E.; Morin, L. A plane-based approach for indoor point clouds registration. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7072–7079. [Google Scholar]

- Malihi, S.; Valadan Zoej, M.J.; Hahn, M.; Mokhtarzade, M. Window detection from UAS-derived photogrammetric point cloud employing density-based filtering and perceptual organization. Remote Sens. 2018, 10, 1320. [Google Scholar] [CrossRef]

- Cohen, A.; Schönberger, J.L.; Speciale, P.; Sattler, T.; Frahm, J.M.; Pollefeys, M. Indoor-outdoor 3d reconstruction alignment. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 285–300. [Google Scholar]

- Geng, H.; Gao, Z.; Fang, G.; Xie, Y. 3D Object Recognition and Localization with a Dense LiDAR Scanner. Actuators 2022, 11, 13. [Google Scholar] [CrossRef]

- Cai, Y.; Fan, L. An efficient approach to automatic construction of 3D watertight geometry of buildings using point clouds. Remote Sens. 2021, 13, 1947. [Google Scholar] [CrossRef]

- Imanullah, M.; Yuniarno, E.M.; Sumpeno, S. Sift and icp in multi-view based point clouds registration for indoor and outdoor scene reconstruction. In Proceedings of the 2019 International Seminar on Intelligent Technology and Its Applications (ISITIA), Surabaya, Indonesia, 28–29 August 2019; pp. 288–293. [Google Scholar]

- Xiong, B.; Jiang, W.; Li, D.; Qi, M. Voxel Grid-Based Fast Registration of Terrestrial Point Cloud. Remote Sens. 2021, 13, 1905. [Google Scholar] [CrossRef]

- Popișter, F.; Popescu, D.; Păcurar, A.; Păcurar, R. Mathematical Approach in Complex Surfaces Toolpaths. Mathematics 2021, 9, 1360. [Google Scholar] [CrossRef]

- Wen, C.; Sun, X.; Hou, S.; Tan, J.; Dai, Y.; Wang, C.; Li, J. Line structure-based indoor and outdoor integration using backpacked and TLS point cloud data. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1790–1794. [Google Scholar] [CrossRef]

- Chen, S.; Nan, L.; Xia, R.; Zhao, J.; Wonka, P. PLADE: A plane-based descriptor for point cloud registration with small overlap. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2530–2540. [Google Scholar] [CrossRef]

- Li, J.; Huang, S.; Cui, H.; Ma, Y.; Chen, X. Automatic point cloud registration for large outdoor scenes using a priori semantic information. Remote Sens. 2021, 13, 3474. [Google Scholar] [CrossRef]

- Parkison, S.A.; Gan, L.; Jadidi, M.G.; Eustice, R.M. Semantic Iterative Closest Point through Expectation-Maximization. In Proceedings of the BMVC, Newcastle, UK, 3–6 September 2018; p. 280. [Google Scholar]

- Wang, L.; Sohn, G. An integrated framework for reconstructing full 3d building models. In Advances in 3D Geo-Information Sciences; Springer: Berlin/Heidelberg, Germany, 2011; pp. 261–274. [Google Scholar]

- Wang, L.; Sohn, G. Automatic co-registration of terrestrial laser scanning data and 2D floor plan. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 158–164. [Google Scholar]

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12697–12705. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Red Hook, NY, USA, 4–9 December 2017; pp. 5105–5114. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE international Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Dey, E.K.; Awrangjeb, M.; Stantic, B. Outlier detection and robust plane fitting for building roof extraction from LiDAR data. Int. J. Remote Sens. 2020, 41, 6325–6354. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bay, H.; Tuytelaars, T.; Gool, L.V. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417. [Google Scholar]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- Cao, B.; Wang, J.; Fan, J.; Yin, J.; Dong, T. Querying similar process models based on the Hungarian algorithm. IEEE Trans. Serv. Comput. 2016, 10, 121–135. [Google Scholar] [CrossRef]

- Xie, Y.; Tang, Y.; Zhou, R.; Guo, Y.; Shi, H. Map merging with terrain-adaptive density using mobile 3D laser scanner. Robot. Auton. Syst. 2020, 134, 103649. [Google Scholar] [CrossRef]

| Abbreviation | Definition | Abbreviation | Definition |

|---|---|---|---|

| The included angle between origin vector and the x–y–z axes in coordinates | The relationship of object with respect to other objects in the point cloud | ||

| The absolute angle errors between angle elements | HA | Hungarian algorithm | |

| The Euler angles that represent the rotation between coordinates and coordinates | ICP | Iterative closest point | |

| The absolute rotation errors between two local coordinates | IoU | Intersection over union | |

| The adjacency matrix between indoor and outdoor models | N | The number of recognized objects | |

| The distribution matrix between and | The ith recognized object | ||

| The matching element in | The semantic category of object | ||

| D | The matrix definition of SGD | SGD | Semantic–geometric descriptor |

| The Euler distance between the origins of local coordinates of and | SGDU | Semantic–geometric descriptor unit | |

| The absolute distance error between two distance elements | SVD | Singular value decomposition |

| Scenario 1 Scene 1 | Scenario 1 Scene 2 | Scenario 2 Scene 1 | Scenario 2 Scene 2 | Scenario 2 Scene 3 | Scenario 2 Scene 4 | Scenario 2 Scene 5 | Mean ± std | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Indoor | Outdoor | Indoor | Outdoor | Indoor | Outdoor | Indoor | Outdoor | Indoor | Outdoor | Indoor | Outdoor | Indoor | Outdoor | ||

| MAE of windows (m) | 0.0930 | 0.1454 | 0.0877 | 0.0711 | 0.0527 | 0.0921 | 0.0593 | 0.0501 | 0.0689 | 0.0790 | 0.0759 | 0.0940 | 0.0985 | 0.0666 | 0.0810 ± 0.0242 |

| MAE of doors (m) | 0.1285 | 0.1251 | 0.1329 | 0.1375 | 0.1322 | 0.1187 | 0.1056 | 0.0878 | 0.0987 | 0.1322 | 0.0826 | 0.1213 | 0.1168 | 0.0855 | 0.1147 ± 0.0192 |

| Relative error of windows (%) | 6.1200 | 9.6100 | 6.1300 | 4.9800 | 3.9100 | 6.6200 | 4.3100 | 3.7700 | 4.4500 | 5.3100 | 5.6400 | 6.3800 | 7.1800 | 4.9100 | 5.6657 ± 1.5406 |

| Relative error of doors (%) | 8.4700 | 8.0900 | 8.4200 | 9.6400 | 9.6500 | 8.6900 | 7.8200 | 6.4200 | 6.3800 | 8.9300 | 6.1900 | 8.0500 | 8.7300 | 6.5100 | 7.9993 ± 1.1879 |

| 2D IoU of windows | 0.7681 | 0.8650 | 0.8970 | 0.8039 | 0.8908 | 0.8421 | 0.9283 | 0.8886 | 0.9621 | 0.9525 | 0.9052 | 0.8515 | 0.9063 | 0.9108 | 0.8837 ± 0.0538 |

| 2D IoU of doors | 0.8989 | 0.8765 | 0.9190 | 0.9216 | 0.9494 | 0.9810 | 0.9170 | 0.8894 | 0.8920 | 0.7618 | 0.8975 | 0.8618 | 0.9449 | 0.9373 | 0.9034 ± 0.0516 |

| 3D IoU of windows | 0.6316 | 0.5415 | 0.4017 | 0.4535 | 0.4948 | 0.5841 | 0.3758 | 0.6174 | 0.4002 | 0.4629 | 0.5072 | 0.6462 | 0.5680 | 0.5651 | 0.5179 ± 0.0896 |

| 3D IoU of doors | 0.5731 | 0.7792 | 0.7023 | 0.5829 | 0.6796 | 0.9245 | 0.5827 | 0.7861 | 0.3527 | 0.7851 | 0.7320 | 0.6737 | 0.3766 | 0.9417 | 0.6766 ± 0.1739 |

| Scenario 1 Scene 1 | Scenario 1 Scene 2 | Scenario 2 Scene 1 | Scenario 2 Scene 2 | Scenario 2 Scene 3 | Scenario 2 Scene 4 | Scenario 2 Scene 5 | Mean ± std | |

|---|---|---|---|---|---|---|---|---|

| MAE of object centers (m) | 0.0638 | 0.0630 | 0.1119 | 0.0614 | 0.0284 | 0.0688 | 0.0081 | 0.0579 ± 0.0328 |

| MAE of object corners (m) | 0.1322 | 0.0821 | 0.1474 | 0.1145 | 0.1109 | 0.1221 | 0.1151 | 0.1177 ± 0.0202 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, Y.; Fang, G.; Miao, Z.; Xie, Y. Indoor–Outdoor Point Cloud Alignment Using Semantic–Geometric Descriptor. Remote Sens. 2022, 14, 5119. https://doi.org/10.3390/rs14205119

Yang Y, Fang G, Miao Z, Xie Y. Indoor–Outdoor Point Cloud Alignment Using Semantic–Geometric Descriptor. Remote Sensing. 2022; 14(20):5119. https://doi.org/10.3390/rs14205119

Chicago/Turabian StyleYang, Yusheng, Guorun Fang, Zhonghua Miao, and Yangmin Xie. 2022. "Indoor–Outdoor Point Cloud Alignment Using Semantic–Geometric Descriptor" Remote Sensing 14, no. 20: 5119. https://doi.org/10.3390/rs14205119

APA StyleYang, Y., Fang, G., Miao, Z., & Xie, Y. (2022). Indoor–Outdoor Point Cloud Alignment Using Semantic–Geometric Descriptor. Remote Sensing, 14(20), 5119. https://doi.org/10.3390/rs14205119