Abstract

Obtaining the geographic coordinates of single fruit trees enables the variable rate application of agricultural production materials according to the growth differences of trees, which is of great significance to the precision management of citrus orchards. The traditional method of detecting and positioning fruit trees manually is time-consuming, labor-intensive, and inefficient. In order to obtain high-precision geographic coordinates of trees in a citrus orchard, this study proposes a method for citrus tree identification and coordinate extraction based on UAV remote sensing imagery and coordinate transformation. A high-precision orthophoto map of a citrus orchard was generated from UAV remote sensing images. The YOLOv5 model was subsequently trained on the remote sensing dataset to efficiently identify the fruit trees and extract tree pixel coordinates from the orchard orthophoto map. According to the geographic information contained in the orthophoto map, the pixel coordinates were converted to UTM coordinates, and the WGS84 coordinates of citrus trees were obtained using the Gauss–Krüger inverse calculation. To simplify the coordinate conversion process and improve its efficiency, a coordinate conversion app was also developed to automatically perform the batch conversion of pixel coordinates to UTM coordinates and WGS84 coordinates. Results show that the Precision, Recall, and F1 Score for Scene 1 (after weeding) reach 0.89, 0.97, and 0.92, respectively; the Precision, Recall, and F1 Score for Scene 2 (before weeding) reach 0.91, 0.90, and 0.91, respectively. The accuracy of the orthophoto map generated using UAV remote sensing images is 0.15 m. The conversion of pixel coordinates to UTM coordinates by the coordinate conversion app is reliable, and the accuracy of converting UTM coordinates to WGS84 coordinates is 0.01 m. The proposed method is capable of automatically obtaining the WGS84 coordinates of citrus trees with high precision.

Keywords:

UAV; orthophoto map; remote sensing; Hough transform; YOLOv5; Gauss–Krüger; UTM; WGS84; coordinate transformation; deep learning

1. Introduction

China is the largest citrus-producing country in the world. As of 2021, the orchard yield of citrus had reached 55.96 million tons [1], ranking first in China’s fruit production and playing an important role in the growth of China’s agricultural economy [2]. Citrus orchard management in China has been developing from manual management toward an intelligent and large-scale production pattern [3]. Accurately identifying citrus trees and extracting the coordinate information of fruit trees helps monitor growth differences among citrus trees and ensure precision orchard management. It is the basis for agricultural robots to implement precision operations such as route planning [4,5,6], weeding and pesticide application [7,8,9], and opening furrows and fertilization [10]. The traditional method of fruit tree identification and localization relies on manual measurement and statistics [11], which is time-consuming, labor-intensive, and inefficient. Computer vision-based recognition mainly includes sensor recognition [12], traditional image recognition algorithms [13], and image recognition algorithms based on deep learning [14]. Such automated recognition is of great significance to the intelligent, informatized, and large-scale management of citrus orchards.

Unmanned aerial vehicle (UAV) remote sensing is a low-cost and high-efficiency image acquisition method [15] that allows researchers to collect and analyze high-resolution images on demand. At present, remote sensing images based on UAVs are widely used in the detection of crop growth [16], soil moisture [17], pests and diseases [18], and other agricultural information. In recent years, the application in fruit tree identification and analysis has received more and more attention [19]. For example, Malek et al. [20] used the scale-invariant feature transform (SIFT) to extract key points from UAV remote sensing images of palm farms. They applied extreme learning machine (ELM) classifiers to analyze the extracted key points and used an active contour method based on level sets (LSs) and local binary patterns (LBPs) to integrate and analyze the key points to obtain palm trees from UAV remote sensing images. Surový et al. [21] proposed a low-cost and high-efficiency detection method for the position and height of a single tree in a forest. They used a low-altitude UAV integrated with RGB sensors to collect remote sensing image data and then create 3D point clouds and an orthophoto map. On this basis, the position and height of a single tree were estimated by identifying the local maxima of the point cloud. However, this method has certain limitations, and its measurement accuracy varies with the regularity of canopy shape and tree volume. Goldbergs et al. [22] adopted a similar approach to obtain structure from motion (SfM) 3D point clouds through UAV systems for the detection of individual trees in Australian tropical savannas. They tested the canopy height models (CHMs) of the SfM image using the max and watershed segmentation tree detection algorithm. The above research mainly uses traditional image processing algorithms to obtain individual plant information through remote sensing images. 
These approaches are limited in detection accuracy by factors such as plant occlusion and irregular canopy shapes.

Many researchers have combined deep learning with aerial remote sensing images to detect ground targets, achieving excellent results. Luo et al. [23] proposed an improved YOLOv4 algorithm for the problems of small targets in aerial images and the difficult detection of complex backgrounds. By changing the different activation functions in YOLOv4 shallow and deep networks, the improved algorithm is more suitable for target identification in UAV remote sensing images. Wang et al. [24] applied a two-stage idea to identify Papaver somniferum. In the first stage, the YOLOv5s algorithm was used to detect and identify all the suspicious Papaver somniferum images from the original data. In the second stage, the DenseNet121 network was used to classify the detection results from the first stage and only retain the images containing Papaver somniferum. Qi et al. [11] combined the point cloud deep learning algorithm with volume calculation algorithms to achieve the automatic acquisition of citrus tree canopy volume. However, there are few studies on extracting geographic coordinate information of fruit trees. The automatic acquisition of geographical coordinates of citrus trees can give each location information and realize the digital modeling of orchards, which is of great significance to precision orchard management by “one tree one code”. Therefore, it is necessary to carry out research on the extraction of the geographic coordinates of citrus trees.

Coordinate extraction and coordinate system transformation is a basic method of spatial measurement data processing [25], which is widely used in geographic surveying and mapping [26], engineering surveying [27], geographic information systems [28], and other fields. The coordinate transformation method uses the coordinate information of existing common points to match the coordinate systems and then completes the transformation of coordinate points between different coordinate systems. In order to realize the conversion between the World Geodetic System 1984 (WGS84) and China Geodetic Coordinate System 2000 (CGCS2000) coordinate systems, Peng et al. [29] first converted the WGS84 coordinates to International Terrestrial Reference Frame (ITRF) coordinates through the seven-parameter method and then converted them to CGCS2000 coordinates by frame conversion. Liu et al. [30] discussed two methods for converting WGS84 coordinates to local coordinates. One is to convert WGS84 coordinates to local national geodetic coordinates, then to Gaussian projection, and finally to local coordinates. The other method is to project based on the reference ellipsoid of WGS84 and convert the result to the local national coordinate system through the plane coordinate system. They pointed out that the latter method has a high level of computational efficiency and operability, allowing applications in navigation, monitoring, and other fields. The above studies mainly address coordinate transformation between different coordinate systems, and few studies address the automatic acquisition of target coordinates, such as the extraction of the coordinates of citrus and other fruit trees in agricultural applications. Pei et al. [31] realized the coordinate positioning of tea leaves based on image information: they obtained the binarized image of tea leaves through image post-processing, extracted the minimum circumscribed rectangle according to the outer contour of the tea leaves, and marked the center point of the rectangle, so as to obtain the picking point of the tea leaves. However, this method is only suitable for obtaining local coordinate points of the target and cannot be used for extracting the geographic coordinates of fruit trees.

By combining image recognition with coordinate extraction, this study proposes a method of citrus tree coordinate recognition and extraction based on UAV remote sensing images for obtaining the coordinate information of citrus trees efficiently and accurately. The main work is as follows:

1. UAV remote sensing images were collected to generate the orthophoto map of the citrus orchard.

2. The accuracies of the traditional target detection algorithm based on the Hough transform and the deep learning algorithm based on YOLOv5 were compared, and the higher-precision algorithm was selected as the method to extract the pixel coordinates of the citrus trees.

3. The extracted pixel coordinates were transformed into the Universal Transverse Mercator (UTM) coordinate system according to the geographic information in the orthophoto map, and the transformation accuracy was verified.

4. The geodetic coordinate transformation of the space rectangular coordinate system was carried out according to the inverse calculation formula of Gauss–Krüger, and the transformation accuracy was verified as well.

5. A coordinate conversion app was developed to achieve the batch conversion of pixel coordinates to UTM coordinates and geodetic coordinates, which improves coordinate conversion efficiency and reduces the complexity of the work.

2. Materials and Methods

2.1. Overview of the Experimental Site

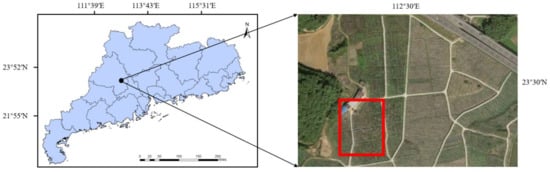

The experimental site is located in a citrus orchard in Sihui City, Guangdong Province, China (23°36′N, 112°68′E). It lies in a subtropical monsoon climate with an average annual air temperature of 21.2 °C and an average annual rainfall of about 1650 mm [32]. The site receives sufficient sunlight and abundant rainfall and is suitable for citrus growth. The overview of the experimental site is shown in Figure 1.

Figure 1.

The study area (right) circled by the red box is located in Guangdong Province (left), China (23°36′N, 112°68′E).

The citrus orchard has near-plain terrain with an average slope of less than 5°, and the orchard is divided by an irregular grid of about 50 × 50 m. The height of the citrus trees is between 1.5 m and 2 m with an average crown diameter of 2 m. Both the tree spacing and row spacing are around 3 m. Water tanks and irrigation pipes are installed between rows, so ground equipment cannot pass between the rows of citrus trees.

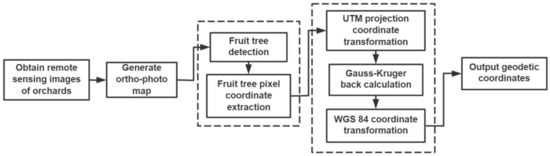

2.2. The Overall Scheme of Fruit Tree Identification and Coordinate Extraction

A method for the automatic identification and coordinate extraction of citrus trees based on an orthophoto map was proposed in this study. The remote sensing images of the citrus orchard were collected with UAVs to generate the orthophoto map of the orchard. The pixel coordinates of the citrus trees were then extracted through the image recognition algorithm and a series of coordinate transformations. Finally, the geographical coordinates of the citrus trees were obtained. The overall flow chart is shown in Figure 2.

Figure 2.

Overall flow chart: image acquisition, orthophoto map production, citrus tree identification, coordinate conversion.

2.3. Remote Sensing Data Collection and Orthophoto Mapping

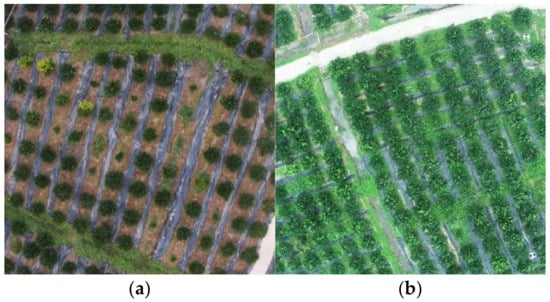

A DJI Phantom 4 RTK quadrotor UAV was selected to acquire field remote sensing images, and its operational parameters are shown in Table 1. UAV remote sensing images of the orchard for Scene 1: after weeding (Figure 3a), and Scene 2: before weeding (Figure 3b) were both collected to facilitate an accurate comparison between the image recognition algorithms. High-altitude acquisitions capture large-scale orchard scenes, whereas low-altitude acquisitions yield high-resolution target images. To ensure the effectiveness of image processing, the flight altitude of the UAV was set to 50 m. Meanwhile, the heading overlap rate and the side overlap rate were both set to 80% in order to ensure the quality of the later orthophoto map. On the days of data collection, it was sunny with a southwesterly crosswind, a temperature of 35.2 °C, and a relative humidity of 61.7%.

Table 1.

UAV operational parameters [33].

Figure 3.

Orchard remote sensing image datasets: (a) Scene 1: after weeding, (b) Scene 2: before weeding.

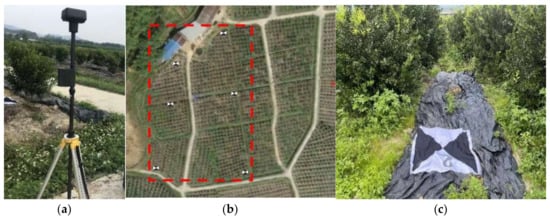

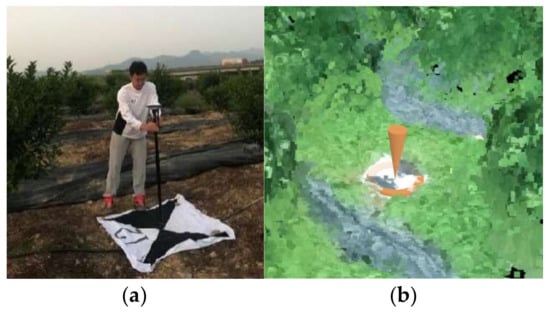

A digital orthophoto map is a map containing geographic information obtained by digital processing, which has the characteristics of high precision, rich information, and strong intuition [34]. This study intends to extract the coordinates of citrus trees through an orthophoto map. A D-RTK 2 high-precision GNSS mobile station (Figure 4a) was employed together with the DJI Phantom 4 RTK UAV to obtain high-precision positioning remote sensing images in order to ensure a high level of geographic accuracy required by the orthophoto map of the citrus orchard. Considering the impact of illumination, haze, and photo distortion on the positioning accuracy, six image control points were arranged in the experimental field to further improve the geographic accuracy of the orthophoto map. The layout of the control points and the arrangement are shown in Figure 4.

Figure 4.

Mobile base station and control point layout: (a) D-RTK 2 high-precision GNSS mobile station, (b) the red box is the test field, and "x" is the six control points selected in the test field, (c) control point.

UAV remote sensing data were imported into Pix4D Mapper for initial processing. The preprocessed model was checked and the control points were marked in it, after which point clouds, 3D mesh textures, and orthophoto maps were generated automatically. The resulting orthophoto map is shown in Figure 5.

Figure 5.

Orchard orthophoto map drawn based on UAV remote sensing image.

2.4. Citrus Tree Identification

After the orthophoto map is generated, the citrus trees in it must be identified in order to obtain the pixel coordinates of each tree. To find an efficient and accurate way to identify citrus trees, both a traditional image processing algorithm based on the Hough transform and a deep learning image processing algorithm based on YOLOv5 were applied for comparative analysis, and the recognition method with higher precision was selected for citrus tree identification and coordinate extraction.

2.4.1. Citrus Tree Detection Method Based on Hough Transform

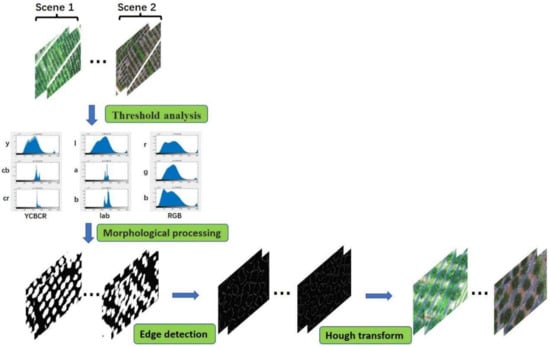

The canopy of a citrus tree is usually approximately circular; therefore, extracting circular features from remote sensing images through digital processing enables citrus tree identification. Circle detection is one of the important research topics in digital image processing and has wide application in automatic detection and pattern recognition [35,36]. Commonly used circle detection methods include the shape analysis method [37], the differential method [38], the Hough transform, and so on [39]. Among them, the Hough transform is the most widely used circle detection method at present, with high reliability and good tolerance of noise, deformation, and partial edge discontinuity [40]. The detection of citrus trees based on the Hough transform mainly involves threshold analysis, binary morphological processing, edge detection, and the Hough transform. The flowchart is shown in Figure 6.

Figure 6.

Flow chart of citrus tree detection based on Hough transform.

Image segmentation using the color features of citrus trees is currently a widely used segmentation approach [41]. Due to the complex background of orchard images, the selection of the color space for image segmentation is very important in extracting the citrus tree objects. Available color spaces include RGB (red, green, blue), Lab, YCbCr, HSI (hue, saturation, intensity), HSV (hue, saturation, value), etc. [42,43]. A comparative analysis of three color spaces commonly used in image processing (RGB, Lab, YCbCr) shows that the YCbCr channel has a more pronounced peak and is suitable for threshold segmentation of citrus trees.
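The color-space thresholding step can be illustrated with a minimal sketch. It assumes the full-range ITU-R BT.601 conversion from 8-bit RGB to YCbCr and an upper threshold on the Cr channel; the choice of channel, the threshold value `cr_max`, and the sample pixel values are all hypothetical and are not taken from this study.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 conversion for 8-bit RGB values (an assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def segment_by_cr(image, cr_max):
    """Return a binary mask that is 1 where the Cr channel is at or below cr_max.

    `image` is a list of rows of (r, g, b) tuples. Thresholding on Cr with an
    upper bound is an assumption made for this sketch only.
    """
    return [[1 if rgb_to_ycbcr(*px)[2] <= cr_max else 0 for px in row]
            for row in image]


# Hypothetical 2 x 2 image: two greenish "canopy" pixels, two brownish "soil" pixels.
image = [[(40, 120, 30), (150, 110, 80)],
         [(140, 100, 70), (50, 130, 40)]]
mask = segment_by_cr(image, cr_max=120.0)
```

In practice the threshold would be chosen from the histogram peak of the selected channel rather than fixed by hand.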

The impacts of temperature, light, wires, etc., result in noise and holes in the segmented binary images. Without effective processing, large errors will occur in the subsequent edge detection and the Hough transform. Therefore, three basic morphological operations (erosion, dilation, and hole filling) are employed to deal with the noise and holes in the binary images. Erosion removes unwanted parts by continuously shrinking the boundary inward, and the specific erosion result depends on the objects and the structuring element in the binary image. If an object in the image is smaller than the structuring element, erosion removes it entirely, as with small noise in the area of citrus trees. If an object is larger than the structuring element, erosion shrinks its boundary, as with large noise, holes, etc. The effect of dilation is the opposite of that of erosion: the boundary of the object is enlarged by continuous expansion, so noise in the binary image can be eliminated more effectively by applying erosion followed by dilation. However, erosion and dilation only remove small noise in the target area of citrus trees. For larger holes, it is necessary to apply hole filling.
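The three morphological operations described above can be sketched as follows. This is a minimal pure-Python illustration that assumes a 3 × 3 square structuring element and 4-connected flood filling from the image border; the structuring element, the order of operations, and the toy mask are assumptions rather than the settings used in this study.

```python
from collections import deque


def _window(mask, i, j):
    """3 x 3 neighbourhood of (i, j); pixels outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    return [mask[i + di][j + dj] if 0 <= i + di < h and 0 <= j + dj < w else 0
            for di in (-1, 0, 1) for dj in (-1, 0, 1)]


def erode(mask):
    """Keep a pixel only if its whole 3 x 3 neighbourhood is foreground."""
    return [[int(all(_window(mask, i, j))) for j in range(len(mask[0]))]
            for i in range(len(mask))]


def dilate(mask):
    """Set a pixel if any pixel in its 3 x 3 neighbourhood is foreground."""
    return [[int(any(_window(mask, i, j))) for j in range(len(mask[0]))]
            for i in range(len(mask))]


def fill_holes(mask):
    """Fill background regions that are not connected to the image border."""
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    for i in range(h):
        for j in range(w):
            on_border = i in (0, h - 1) or j in (0, w - 1)
            if on_border and not mask[i][j]:
                outside[i][j] = True
                queue.append((i, j))
    while queue:
        i, j = queue.popleft()
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ni, nj = i + di, j + dj
            if 0 <= ni < h and 0 <= nj < w and not mask[ni][nj] and not outside[ni][nj]:
                outside[ni][nj] = True
                queue.append((ni, nj))
    return [[0 if outside[i][j] else 1 for j in range(w)] for i in range(h)]


# Toy mask: a 7 x 7 "canopy" with a one-pixel hole and an isolated noise pixel.
mask = [[1 if 2 <= i <= 8 and 2 <= j <= 8 else 0 for j in range(11)]
        for i in range(11)]
mask[5][5] = 0    # hole inside the canopy
mask[0][10] = 1   # isolated noise pixel
cleaned = fill_holes(dilate(erode(mask)))
```

Erosion followed by dilation removes the isolated pixel while restoring the canopy outline, and the final hole-filling pass closes the interior gap.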

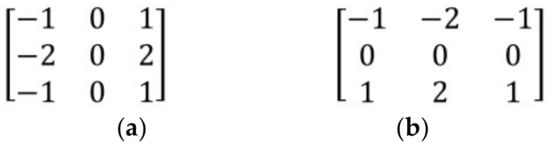

The above morphological processing achieves the segmentation of the target citrus tree and the background, but it does not extract the target citrus tree yet. The edge of the citrus tree image was extracted and the circular edge was detected through the Hough transform, so as to identify the citrus tree. The Sobel operator was used for edge detection of the citrus tree targets since the Sobel operator has a certain ability to suppress noise [44]. The Sobel operator convolves the x and y directions of each pixel in the image through the horizontal and vertical templates to obtain the approximate gradient and then the edge image. The horizontal and vertical templates of Sobel are shown in Figure 7.

Figure 7.

The horizontal and vertical templates of Sobel: (a) vertical templates of Sobel, (b) horizontal templates of Sobel.
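As an illustration of the gradient computation, the sketch below applies the conventional 3 × 3 Sobel templates to a grayscale image stored as nested lists. The kernel values are the standard ones and are assumed to match Figure 7; the templates are applied without flipping, which only changes the sign of each gradient component and leaves the magnitude unchanged. Border pixels are simply left at zero, and the toy image is hypothetical.

```python
import math

# Conventional Sobel templates (assumed to correspond to Figure 7).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]   # responds to intensity changes along x
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]    # responds to intensity changes along y


def sobel_magnitude(gray):
    """Return the approximate gradient magnitude of a grayscale image."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            gx = sum(SOBEL_X[a][b] * gray[i + a - 1][j + b - 1]
                     for a in range(3) for b in range(3))
            gy = sum(SOBEL_Y[a][b] * gray[i + a - 1][j + b - 1]
                     for a in range(3) for b in range(3))
            out[i][j] = math.hypot(gx, gy)
    return out


# Toy 4 x 4 image with a vertical step edge between columns 1 and 2.
step = [[0, 0, 10, 10] for _ in range(4)]
edges = sobel_magnitude(step)
```

An edge image is then obtained by thresholding the magnitude; the threshold value is a tuning choice not specified here.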

The Hough transform fits straight lines and curves to image edges by mapping image coordinates into a parameter space and describing the points in that space [45]. Hough circle detection is mainly governed by the following five parameters: radius step size (step_r), angle step size (step_angle), minimum circle radius (minr), maximum circle radius (maxr), and threshold parameter (thresh). In this study, the threshold parameter was multiplied by the parameter space (Hough_space) to give the threshold for Hough circle detection, and the optimal detection parameters were determined through repeated debugging on the test set. The Hough transform is applicable to all curves that can be represented by f (x, a) = 0. The parametric equation is as follows:

$$\rho = x\cos\theta + y\sin\theta \tag{1}$$

where ρ represents the perpendicular distance from the line to the origin, and θ represents the angle from the x-axis to the normal of the line, with a value range of ±90°.
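The role of the five detection parameters can be illustrated with a minimal voting sketch for circles. It assumes that step_r and step_angle are the sampling steps for radius and angle, and that the detection threshold is thresh multiplied by the maximum vote count in the accumulator; both are interpretations of the description above, not a reproduction of the program used in this study. The edge points are a hypothetical synthetic circle.

```python
import math
from collections import Counter


def hough_circles(edge_points, minr, maxr, step_r=1, step_angle=0.01, thresh=0.9):
    """Return (a, b, r) cells whose votes reach thresh * max(votes)."""
    votes = Counter()
    n_angles = int(round(2 * math.pi / step_angle))
    for x, y in edge_points:
        for r in range(minr, maxr + 1, step_r):
            # Candidate centres lie on a circle of radius r around the edge point.
            cells = set()
            for k in range(n_angles):
                theta = k * step_angle
                a = int(round(x - r * math.cos(theta)))
                b = int(round(y - r * math.sin(theta)))
                cells.add((a, b))
            for a, b in cells:      # each edge point votes once per cell
                votes[(a, b, r)] += 1
    if not votes:
        return []
    cutoff = thresh * max(votes.values())
    return sorted(cell for cell, v in votes.items() if v >= cutoff)


# Synthetic edge points lying exactly on a circle of radius 5 centred at (10, 10).
offsets = [(5, 0), (-5, 0), (0, 5), (0, -5),
           (3, 4), (3, -4), (-3, 4), (-3, -4),
           (4, 3), (4, -3), (-4, 3), (-4, -3)]
points = [(10 + dx, 10 + dy) for dx, dy in offsets]
circles = hough_circles(points, minr=3, maxr=7)
```

For this synthetic input the cell (10, 10, 5) receives a vote from every edge point and is therefore among the returned detections.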

2.4.2. Detection Method Based on YOLOv5 Target Detection Algorithm

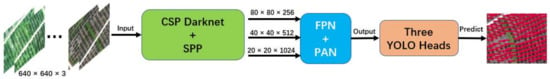

The object detection algorithm based on a deep convolutional neural network (DCNN) was applied to process the aerial images of citrus trees. DCNN has shown great advantages in the field of target detection in recent years by extracting targets in complex environments according to their high-dimensional features [46]. The detection of DCNN includes two-stage region-based detection and one-stage regression-based detection. Compared with two-stage detection, one-stage detection has the advantage of fast speed and is suitable for real-time detection, and is widely used in image recognition, video target detection, and other fields [47]. As the most typical one-stage target detection algorithm, the YOLO (You Only Look Once) series is superior to other algorithms in accuracy and speed [48]. The YOLOv5 in the YOLO series has a total of four network models, and users can choose the network model according to their individual needs, which provides a high degree of flexibility. Among them, the YOLOv5s model has the smallest size compared to the other three models, and the inference speed per image can reach 0.007 s [49]. Therefore, the YOLOv5s model was selected for the target detection of citrus trees.

The YOLOv5s target detection algorithm consists of four parts, namely the input terminal, the backbone network, the path aggregation network, and the detection terminal [50]. On the input terminal, a series of preprocessing and data enhancement operations are performed on the image to increase the number of training samples and to improve the generalization ability of the model, such as mosaic data enhancement, adaptive anchor box calculation, and adaptive image scaling [51]. In the backbone network, the YOLOv5 algorithm uses CSPDarknet as the backbone feature extraction network, and finally extracts three effective feature layers and inputs them to the next path aggregation network. In the path aggregation network, the YOLOv5s algorithm uses the combined structure of the feature pyramid network (FPN) and the path aggregation network (PAN) to enhance the model’s learning of location information, thereby comprehensively improving the robustness of the model. On the detection terminal, the YOLOv5s outputs three YOLO heads to predict the results. The citrus tree target detection process based on YOLOv5s is shown in Figure 8. Label data needs to be added to the dataset samples before model training. Data labeling tools mainly include Mechanical Turk, LabelImg, LabelMe, and RectLabel, among which LabelImg is an open-source labeling tool that is widely used by researchers [52]. Therefore, this paper used LabelImg software to manually add label data to the aerially photographed citrus trees of Scene 1 and Scene 2 and generate text files with the suffix xml, which are used for supervised learning of deep learning algorithms.

Figure 8.

Flowchart of citrus tree target detection based on YOLOv5s.
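LabelImg stores annotations as Pascal VOC style XML, whereas YOLOv5 training reads one text line per object in the form `class x_center y_center width height`, normalized by the image size. The sketch below shows one way such a conversion could be done; whether and how this conversion was performed in this study is not stated, and the class name `citrus` and the sample annotation are hypothetical.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(xml_text, class_names):
    """Convert one Pascal VOC annotation string to YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)
    lines = []
    for obj in root.iter("object"):
        cls = class_names.index(obj.find("name").text)
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(tag).text)
                                  for tag in ("xmin", "ymin", "xmax", "ymax"))
        xc = (xmin + xmax) / 2 / img_w      # normalized box centre
        yc = (ymin + ymax) / 2 / img_h
        bw = (xmax - xmin) / img_w          # normalized box size
        bh = (ymax - ymin) / img_h
        lines.append(f"{cls} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines


# Hypothetical annotation with a single labelled tree.
sample_xml = """
<annotation>
  <size><width>1000</width><height>500</height><depth>3</depth></size>
  <object>
    <name>citrus</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>300</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>
"""
labels = voc_to_yolo(sample_xml, class_names=["citrus"])
```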

2.4.3. Training Parameter Setting and Evaluation

The experimental environment for the two algorithms was unified as an Intel Core i9-10900KF CPU, an NVIDIA GeForce RTX 3090 GPU, PyTorch 1.2.0, CUDA 10.0, torchvision 0.4.0, and MATLAB 2019b. The dataset of each of the two scenes in this experiment contains 767 aerial images. The dataset of each scene is divided in the ratio training:validation:test = 8.1:0.9:1. Some initial parameter settings are shown in Table 2.
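A split in the stated 8.1:0.9:1 ratio can be sketched as below. The rounding rule, the random seed, and the file names are assumptions, so the resulting subset sizes are only one possible realization of the ratio and are not the counts reported for this study.

```python
import random


def split_dataset(items, ratios=(8.1, 0.9, 1.0), seed=0):
    """Shuffle items and split them into training, validation, and test subsets."""
    items = list(items)
    random.Random(seed).shuffle(items)       # fixed seed for a repeatable split
    total = sum(ratios)
    n_train = round(len(items) * ratios[0] / total)
    n_val = round(len(items) * ratios[1] / total)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]           # remainder goes to the test subset
    return train, val, test


# Hypothetical file names for the 767 images of one scene.
image_names = [f"scene1_{i:04d}.jpg" for i in range(767)]
train, val, test = split_dataset(image_names)
```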

Table 2.

Some initial training parameters of Hough transform and YOLOv5s.

In order to quantitatively analyze the performance of target detection algorithms, researchers have formulated many evaluation indicators, such as Precision, Recall, F1 Score, frames per second (FPS), etc. [53]. These indicators reflect the performance of target detection algorithms in different aspects. Precision is the proportion of predicted positive samples that are actually positive. Recall is the proportion of actual positive samples that the classifier identifies. F1 Score is the harmonic mean of Precision and Recall and thus a compromise between them. Four indicators, Precision, Recall, F1 Score, and FPS, were applied to analyze the performance of the two algorithms. The definitions of Precision, Recall, and F1 Score are shown in Equations (2)–(4), respectively.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1 Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

where TP represents the number of images that are actually citrus tree targets and are correctly detected by the model; FP represents the number of images that do not belong to the citrus tree target but are incorrectly detected as such by the model; FN represents the number of images that are actually citrus trees but are missed by the model.
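The three indicators can be computed directly from the TP, FP, and FN counts, as in the following minimal sketch. The counts in the example are hypothetical and do not correspond to any result in this study.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 false detections, 5 missed trees.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=5)
```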

2.5. Citrus Tree Coordinate Extraction

Once the target detection algorithm has been selected, it is used to detect the citrus trees in the orthophoto map and extract their pixel coordinates. To obtain the geographical coordinates of the citrus trees, the pixel coordinates must first be converted into the UTM projection coordinate system used by the orthophoto map. The UTM projection coordinates are then converted into geographic coordinates through the Gauss–Krüger inverse calculation to complete the coordinate information extraction of the citrus trees.

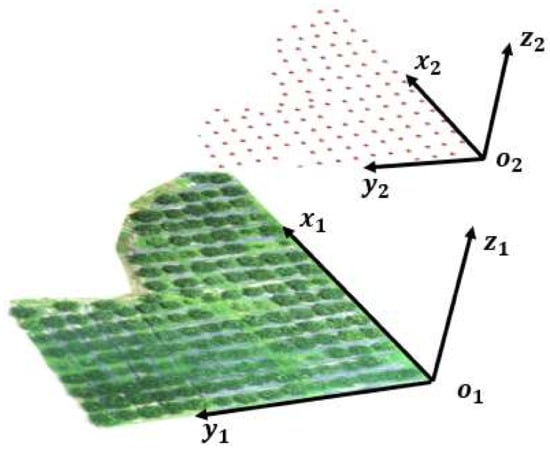

2.5.1. Conversion of Citrus Tree Pixel Coordinates to UTM Projection Coordinates

The output coordinate system selected for the orthophoto map is the WGS84-UTM (Universal Transverse Mercator) space projection coordinate system [54], so it is necessary to convert the pixel coordinates to UTM projection coordinates. The same point has different coordinate values in different coordinate systems. Performing a coordinate conversion between different coordinate systems usually requires three or more common coordinate points to complete the transformation between two spatial coordinate systems through rotation, translation, and scaling [55]. The pixel coordinates in this study are extracted based on the UTM coordinate system in the orthophoto map, as shown in Figure 9. In the figure, is the coordinate system of the orthophoto map and is the pixel coordinate system. There is no offset in the z-axis direction. Therefore, the conversion from pixel coordinates to UTM projection coordinates only needs to be scaled in equal proportions, and the conversion scaling factor equations are shown in Equations (5) and (6).

where is the UTM coordinate value of the right border of the orthophoto map, is the UTM coordinate value of the left border of the orthophoto map, is the pixel length of the orthophoto map, is the pixel width of the orthophoto map, and and are the scale factors of the abscissa and ordinate of the pixel, respectively.

Figure 9.

Pixel coordinate system and UTM coordinate system: is the UTM coordinate system; is the pixel coordinate system.

After the scale factor is obtained, the UTM coordinate system conversion can be completed according to Equations (7) and (8):

where is the UTM coordinate value of the lower boundary of the orthophoto map, and x and y are the abscissa and ordinate of the fruit tree pixel, respectively.
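The scaling described in this subsection can be sketched as follows. The sketch assumes a north-up orthophoto whose pixel origin is the top-left corner with the pixel ordinate increasing downward, so the northing is measured up from the lower boundary; the function name, argument names, and boundary values are hypothetical and are not the notation of Equations (5)–(8).

```python
def pixel_to_utm(px, py, left, right, bottom, top, width_px, height_px):
    """Convert a pixel position to UTM easting and northing by linear scaling.

    left/right/bottom/top are the UTM coordinates of the orthophoto borders;
    width_px and height_px are the image dimensions in pixels.
    """
    kx = (right - left) / width_px       # metres per pixel along the abscissa
    ky = (top - bottom) / height_px      # metres per pixel along the ordinate
    easting = left + kx * px
    northing = bottom + ky * (height_px - py)   # pixel rows count from the top
    return easting, northing


# Hypothetical orthophoto: 100 m x 50 m footprint, 2000 x 1000 pixels.
bounds = dict(left=700000.0, right=700100.0, bottom=2600000.0, top=2600050.0,
              width_px=2000, height_px=1000)
center_e, center_n = pixel_to_utm(1000, 500, **bounds)
corner_e, corner_n = pixel_to_utm(0, 0, **bounds)
```

If the detector returns bounding boxes, the box center would be a natural choice for the tree pixel coordinate, although that choice is also an assumption here.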

2.5.2. Conversion from UTM Projection Coordinates of Fruit Trees to WGS84 Coordinates

The WGS84 coordinate system is the coordinate system currently used by the Global Positioning System [56]. It is also a general coordinate system used for positioning and navigation [57]. The extraction of the geographic information of the citrus tree is completed by converting the UTM projection coordinates of the citrus tree into WGS84 geodetic coordinates. The Gauss–Krüger inverse algorithm was used to convert UTM projection coordinates to WGS84 geodetic coordinates. The Gauss–Krüger projection is a transverse conformal elliptic cylindrical projection devised by Johann Carl Friedrich Gauss and later supplemented by Krüger, hence the name. The conversion of UTM (X, Y) projected coordinates to WGS84 (L, B) geodetic coordinates is usually called the Gaussian inverse calculation. The calculation equations are shown in Equations (9) and (10):

where is the latitude of the bottom point, that is, when y = 0, the latitude of the projection point of the ordinate on the ellipsoid is the latitude of the bottom point; is the radius of curvature of the meridian circle, ; is the radius of curvature of the unitary circle, ; , are the first and second curvature radii; is the radius of curvature of the pole; ; is the semimajor axis of the ellipsoid, in m; is the central meridian, in radians (rad). The derivation equation of is shown in (11):

where is the zone number of the local projection zone.

In order to facilitate program compilation, is brought into the above equations and arranged as Equations (12) and (13):
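As an illustration of the UTM-to-WGS84 step described in this subsection, the sketch below uses the widely published series expansion for the inverse transverse Mercator projection (the form given in Snyder's USGS manual). It is not necessarily term-for-term identical to the equations of this study. The WGS84 constants and the UTM scale factor of 0.9996 are standard values; the zone number 49 and the sample coordinates are hypothetical inputs.

```python
import math

A = 6378137.0              # WGS84 semi-major axis, m
F = 1 / 298.257223563      # WGS84 flattening
K0 = 0.9996                # UTM scale factor on the central meridian


def utm_to_wgs84(easting, northing, zone, northern=True):
    """Return (latitude, longitude) in degrees for a UTM position."""
    e2 = F * (2 - F)                      # first eccentricity squared
    ep2 = e2 / (1 - e2)                   # second eccentricity squared
    x = easting - 500000.0                # remove the false easting
    y = northing if northern else northing - 10000000.0

    # Footpoint latitude from the meridian arc length.
    mu = (y / K0) / (A * (1 - e2 / 4 - 3 * e2**2 / 64 - 5 * e2**3 / 256))
    e1 = (1 - math.sqrt(1 - e2)) / (1 + math.sqrt(1 - e2))
    phi1 = (mu
            + (3 * e1 / 2 - 27 * e1**3 / 32) * math.sin(2 * mu)
            + (21 * e1**2 / 16 - 55 * e1**4 / 32) * math.sin(4 * mu)
            + (151 * e1**3 / 96) * math.sin(6 * mu)
            + (1097 * e1**4 / 512) * math.sin(8 * mu))

    sin1, cos1, tan1 = math.sin(phi1), math.cos(phi1), math.tan(phi1)
    c1 = ep2 * cos1**2
    t1 = tan1**2
    n1 = A / math.sqrt(1 - e2 * sin1**2)               # prime vertical radius
    r1 = A * (1 - e2) / (1 - e2 * sin1**2) ** 1.5      # meridian radius
    d = x / (n1 * K0)

    lat = phi1 - (n1 * tan1 / r1) * (
        d**2 / 2
        - (5 + 3 * t1 + 10 * c1 - 4 * c1**2 - 9 * ep2) * d**4 / 24
        + (61 + 90 * t1 + 298 * c1 + 45 * t1**2 - 252 * ep2 - 3 * c1**2)
        * d**6 / 720)
    lon0 = math.radians(6 * zone - 183)                # central meridian of the zone
    lon = lon0 + (
        d
        - (1 + 2 * t1 + c1) * d**3 / 6
        + (5 - 2 * c1 + 28 * t1 - 3 * c1**2 + 8 * ep2 + 24 * t1**2)
        * d**5 / 120) / cos1
    return math.degrees(lat), math.degrees(lon)


# Hypothetical tree position in UTM zone 49 (northern hemisphere).
lat, lon = utm_to_wgs84(easting=600000.0, northing=2600000.0, zone=49)
```

Batch conversion then amounts to applying the function to every extracted tree coordinate.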

3. Results

3.1. Comparison and Analysis of Citrus Trees Identification

The target detection of citrus trees based on the Hough transform relied on manual parameter adjustment and manual calculation of the evaluation indicators. The parameters were repeatedly adjusted on the training set and verified on the validation set, and the optimal parameter values in the two scenes (after and before weeding) were then determined. Because of the large amount of data, it was impractical to calculate the indicators manually over the complete test sets. Therefore, random sampling was used to select 10 images for testing from the two test sets. TP, FP, and FN values were counted manually, and the Precision, Recall, and F1 Score were then obtained. The statistical results based on the Hough transform are shown in Table 3. It can be seen that for the citrus tree target detection in Scene 1: after weeding, the average Precision of the Hough transform is 0.88, and the average Recall and F1 Score are both 0.87. The differences among the three indicators are small, showing relatively stable detection performance. In Scene 2: before weeding, the average Precision of the Hough transform is 0.48, which is 0.4 lower than that of Scene 1. The Recall and F1 Score are 0.35 and 0.38, respectively, which are also lower than those of Scene 1. This shows that the Hough transform is more suitable for citrus tree detection in Scene 1, which has a clearer background, where it shows relatively stable detection performance.

Table 3.

Statistics of citrus tree detection results based on Hough transform.

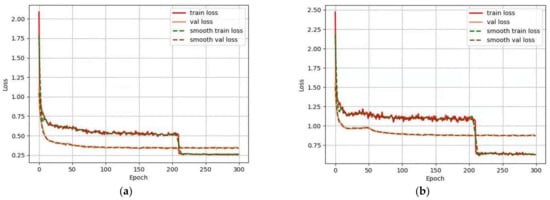

Figure 10 shows the loss curves of YOLOv5s training. It can be seen that the loss on the datasets of both scenes tends to converge after 200 epochs of training, indicating that the YOLOv5s algorithm can be trained to convergence even in the more complex environment. However, the loss on the Scene 1 dataset (Figure 10a) is lower than that on the Scene 2 dataset (Figure 10b) throughout the training process. The loss on the Scene 1 dataset finally converges to 0.25, which differs from that of Scene 2 by about 0.4, indicating that the training effect of Scene 1 (after weeding) is better than that of Scene 2 (before weeding). The complexity of the image background therefore still affects the training of the YOLOv5s model.

Figure 10.

Calculation result of YOLOv5s loss. (a) Calculation result of YOLOv5s loss in Scene 1: after weeding; (b) calculation result of YOLOv5s loss in Scene 2: before weeding.

The comparison results of the two algorithms in each scene are shown in Table 4. It can be seen that in Scene 1, the Precision difference between the two algorithms is 0.01, indicating that the two algorithms can correctly identify the citrus tree target with similar performance. The Recall difference between the two algorithms is 0.1, indicating that in terms of recognition rate, YOLOv5s performs better. The F1 Score difference between the two algorithms is 0.05, suggesting that the two algorithms have a similar recognition performance in general. However, in the target recognition of citrus trees in Scene 2 (before weeding), the detection results of the Hough transform show serious errors, whereas the YOLOv5s model still maintains high accuracy. For example, the F1 Score of YOLOv5s is 0.45 higher than that of the Hough transform. In addition, in terms of detection speed, the FPS of YOLOv5s achieved about 60 for both scenes, which is superior to that of the Hough transform algorithm. Based on the comparison and analysis, the YOLOv5s model was selected as the target recognition algorithm for citrus trees for its better identification accuracy and higher speed.

Table 4.

Comparison results of Hough transform and YOLOv5s algorithm.
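As a quick cross-check of the scores discussed above, the F1 Score can be recomputed from Precision and Recall. The sketch below assumes the standard definition of F1 as the harmonic mean of the two; the function name `f1_score` is illustrative. Because the inputs are the two-decimal values reported for YOLOv5s in this paper, the recomputed F1 can differ from the reported one in the second decimal place.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# YOLOv5s values as reported in this paper (already rounded to two decimals).
reported = {
    "Scene 1 (after weeding)": (0.89, 0.97, 0.92),
    "Scene 2 (before weeding)": (0.91, 0.90, 0.91),
}

for scene, (p, r, f1_reported) in reported.items():
    f1 = f1_score(p, r)
    print(f"{scene}: F1 from rounded P/R = {f1:.3f}, reported = {f1_reported}")
```

The recomputed values agree with the reported ones to within about 0.01, which is what rounding of the inputs would be expected to allow.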

3.2. Orthophoto Map Accuracy Verification

A high-precision orthophoto map is the prerequisite for extracting the geographic coordinates of citrus trees. Six sampling points were randomly arranged in the orchard. A high-precision XAG Agricultural Beidou differential positioning terminal (XAG Technology Co., Ltd., Guangzhou, China) was used to measure the WGS84 coordinates of the sampling points. The results were then compared with the WGS84 coordinates of the same sampling points in the point cloud model to verify their correctness. The horizontal positioning accuracy of the XAG positioning terminal is ±10 mm [58], which meets the accuracy verification requirements. Figure 11a shows the extraction of WGS84 coordinates of sampling points on site, and Figure 11b shows the extraction of the coordinates of sampling points in the point cloud model.

Figure 11.

Field sampling and point cloud sampling: (a) on-site extraction of WGS84 coordinates of sampling points; (b) point cloud model extraction coordinates.

The coordinates of the sampling points in the point cloud model and of the field sampling points were compared and analyzed. The comparison results are shown in Table 5. The measured values in the table are the coordinates extracted from the orthophoto map, and the actual values are the coordinates measured with the XAG agricultural Beidou differential positioning terminal. The Euclidean distance between the actual and the measured value was calculated for each point; the average distance, which is taken as the accuracy of the orthophoto map, is 0.15 m. This is reliable and meets the requirements for the subsequent extraction of citrus tree coordinates from the orthophoto map.

Table 5.

UTM coordinate comparison of sampling points.

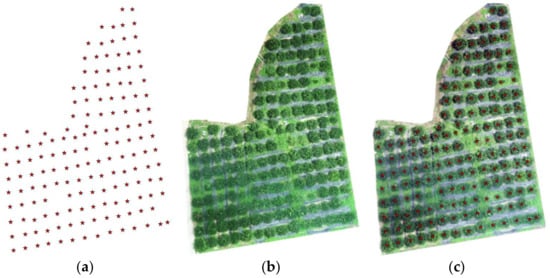

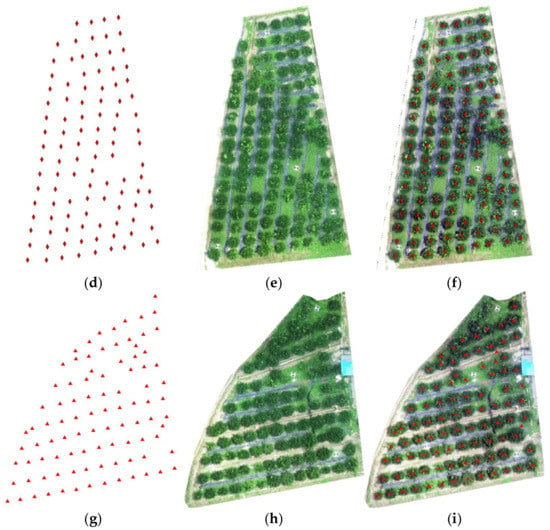
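The accuracy figure above is an average of point-wise planar distances. A minimal sketch of that calculation is given below, assuming that each sampling point is available as an (easting, northing) pair in metres. The function name and the coordinates are hypothetical and are not the values of Table 5.

```python
import math


def mean_euclidean_error(measured, actual):
    """Mean planar distance (m) between paired (easting, northing) points."""
    if len(measured) != len(actual) or not measured:
        raise ValueError("need two non-empty lists of equal length")
    distances = [
        math.hypot(xm - xa, ym - ya)
        for (xm, ym), (xa, ya) in zip(measured, actual)
    ]
    return sum(distances) / len(distances)


# Hypothetical UTM coordinates for illustration only (NOT the Table 5 data).
actual = [(672000.00, 2611000.00), (672050.00, 2611030.00)]
measured = [(672000.03, 2611000.04), (672050.06, 2611030.08)]

print(f"mean error: {mean_euclidean_error(measured, actual):.3f} m")
```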

3.3. UTM Coordinate Verification

For the spatial coordinate system transformation, a reverse comparison in ArcMap was used to verify the accuracy of the coordinates. In this method, the converted UTM coordinates of the citrus trees are overlaid on the orthophoto map to check whether each point lies at the center of a fruit tree. ArcMap is an application developed by the Environmental Systems Research Institute (Esri) with functions such as map making, spatial analysis, and spatial database building. It is widely used in the field of surveying and mapping [59]. The orthophoto map was imported into ArcMap, and ArcToolbox was used to add the XY data to a new layer. The added (X, Y) coordinate points were then displayed on top of the image map. Because the citrus tree canopy is usually roughly circular with a diameter of 3 m, the exact coordinates of the tree center are not sharply defined. Therefore, the projected coordinates were verified by visual inspection.

We selected three experimental plots for UTM coordinate verification, as shown in Figure 12, where a–c are experimental plot 1, d–f are experimental plot 2, and g–i are experimental plot 3. The UTM projection coordinate points automatically converted from the pixel coordinates of the citrus trees in the three plots were imported into ArcMap, as shown in Figure 12a,d,g. Similarly, the orthophoto map containing geographic coordinate information was imported into ArcMap, as shown in Figure 12b,e,h. The geographic coordinates of the orthophoto map were used as the benchmark, and the accuracy of the coordinate transformation was verified by comparing the mapping relationship between the two sets of coordinates, as shown in Figure 12c,f,i. Visual inspection shows that the converted UTM coordinates are essentially located at the center of each citrus tree; the accuracy is reliable and meets the requirements of subsequent experiments.

Figure 12.

UTM coordinate verification. (a–c) are experimental plot 1; (d–f) are experimental plot 2; (g–i) are experimental plot 3; (a,d,g) are converted UTM projection coordinate points; (b,e,h) are the orthophoto map of the extracted fruit tree pixels; (c,f,i) are the correspondence between the coordinate point and the orthophoto map in the UTM coordinate system.

3.4. Coordinate Conversion App Design and WGS84 Coordinate Verification

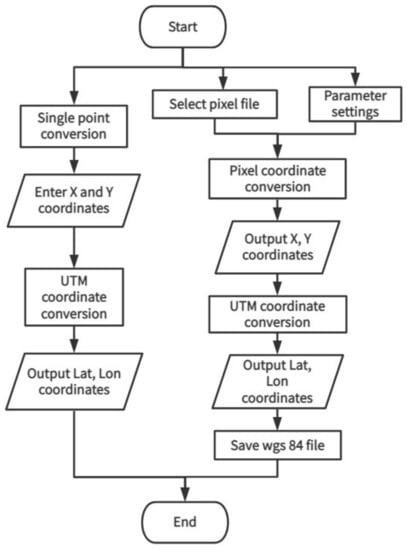

Converting fruit tree pixel coordinates to geodetic coordinates by hand is extremely cumbersome. To simplify the process, a citrus tree coordinate conversion app was developed in MATLAB, which implements the batch conversion of pixel coordinates to UTM coordinates and the single-point conversion of UTM coordinates to WGS84 coordinates. The flow chart of the program is shown in Figure 13. The application has a single-point conversion function and a batch conversion function. The single-point conversion function converts a single UTM projection coordinate to a WGS84 coordinate or a XIAN80 coordinate. The batch conversion function converts the pixel coordinates of citrus trees extracted from the orthophoto map into UTM projection coordinates and WGS84 coordinates, and then saves both sets of coordinates in Excel format for subsequent data processing and analysis.

Figure 13.

Flowchart of the coordinate conversion application.
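The pixel-to-UTM step of the batch conversion can be sketched as follows. This is not the MATLAB app described above but a minimal Python illustration. It assumes that the orthophoto map is georeferenced by a six-parameter affine transform in the GDAL geotransform order, and that a detected tree is represented by the center of its bounding box. The geotransform values and the bounding box are hypothetical.

```python
def box_center(x_min, y_min, x_max, y_max):
    """Center of a detection box in pixel coordinates (col, row)."""
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def pixel_to_utm(col, row, geotransform):
    """Apply an affine geotransform to a pixel position.

    geotransform = (x_origin, pixel_width, row_rotation,
                    y_origin, col_rotation, pixel_height)
    pixel_height is negative for a north-up image.
    """
    x0, dx_col, dx_row, y0, dy_col, dy_row = geotransform
    easting = x0 + col * dx_col + row * dx_row
    northing = y0 + col * dy_col + row * dy_row
    return easting, northing


# Hypothetical north-up orthophoto: 2 cm pixels, upper-left corner at
# (672000 m E, 2611500 m N). These numbers are for illustration only.
GEOTRANSFORM = (672000.0, 0.02, 0.0, 2611500.0, 0.0, -0.02)

# Hypothetical bounding box of one detected tree, in pixels.
col, row = box_center(100, 200, 300, 400)
easting, northing = pixel_to_utm(col, row, GEOTRANSFORM)
print(f"pixel ({col}, {row}) -> UTM ({easting:.2f} E, {northing:.2f} N)")
```

For a north-up image the two rotation terms are zero, so the conversion reduces to scaling the pixel indices by the ground resolution and adding the coordinates of the upper-left corner.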

To verify the conversion accuracy, the coordinate conversion application developed in this study and the commercial software ArcMap were both used to convert the same set of UTM projection coordinates to WGS84 coordinates. Because of the large amount of data, only six sets of data were randomly selected from each experimental plot for comparison. The results are shown in Table 6.

Table 6.

UTM coordinate result comparison.
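The UTM-to-WGS84 step compared in Table 6 is an inverse transverse Mercator calculation. The sketch below is not the authors' MATLAB code. It uses the widely published series form of the inverse projection (as given, for example, in Snyder's map projection manual) with the WGS84 ellipsoid constants. The zone number and the sample coordinates are assumptions chosen for illustration.

```python
import math

A = 6378137.0            # WGS84 semi-major axis (m)
F = 1 / 298.257223563    # WGS84 flattening
K0 = 0.9996              # UTM scale factor on the central meridian
E2 = F * (2 - F)         # first eccentricity squared
EP2 = E2 / (1 - E2)      # second eccentricity squared


def utm_to_wgs84(easting, northing, zone, northern=True):
    """Return (latitude, longitude) in decimal degrees for a UTM point."""
    x = easting - 500000.0                       # remove false easting
    y = northing if northern else northing - 10000000.0

    # Footpoint latitude from the meridian arc length.
    mu = (y / K0) / (A * (1 - E2 / 4 - 3 * E2**2 / 64 - 5 * E2**3 / 256))
    e1 = (1 - math.sqrt(1 - E2)) / (1 + math.sqrt(1 - E2))
    phi1 = (mu
            + (3 * e1 / 2 - 27 * e1**3 / 32) * math.sin(2 * mu)
            + (21 * e1**2 / 16 - 55 * e1**4 / 32) * math.sin(4 * mu)
            + (151 * e1**3 / 96) * math.sin(6 * mu)
            + (1097 * e1**4 / 512) * math.sin(8 * mu))

    sin1, cos1, tan1 = math.sin(phi1), math.cos(phi1), math.tan(phi1)
    c1 = EP2 * cos1**2
    t1 = tan1**2
    n1 = A / math.sqrt(1 - E2 * sin1**2)              # prime vertical radius
    r1 = A * (1 - E2) / (1 - E2 * sin1**2) ** 1.5     # meridian radius
    d = x / (n1 * K0)

    lat = phi1 - (n1 * tan1 / r1) * (
        d**2 / 2
        - (5 + 3 * t1 + 10 * c1 - 4 * c1**2 - 9 * EP2) * d**4 / 24
        + (61 + 90 * t1 + 298 * c1 + 45 * t1**2 - 252 * EP2 - 3 * c1**2)
        * d**6 / 720)
    dlon = (d
            - (1 + 2 * t1 + c1) * d**3 / 6
            + (5 - 2 * c1 + 28 * t1 - 3 * c1**2 + 8 * EP2 + 24 * t1**2)
            * d**5 / 120) / cos1

    lon0 = math.radians(6 * zone - 183)          # central meridian of the zone
    return math.degrees(lat), math.degrees(lon0 + dlon)


# Hypothetical point in UTM zone 49N (central meridian 111°E).
lat, lon = utm_to_wgs84(672000.0, 2611000.0, 49)
print(f"lat = {lat:.6f}, lon = {lon:.6f}")
```

Printing six decimal places matches the precision at which the app and ArcMap results are compared in Table 6.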

Usually, the citrus canopy is approximately circular with a diameter of 3 m [11] and therefore has a large footprint, so some error in the extracted coordinates is tolerable; the coordinate error is required to be within 0.1 m. The latitude and longitude values of the sampling points in Table 6 are expressed in decimal degrees. According to the distribution characteristics of the Earth's latitude and longitude, 1° of latitude corresponds to about 111 km. The latitude and longitude of the experimental site are 23°36′N and 112°68′E, respectively. At this site, 1° of longitude corresponds to 104,043.976 m [60], that is, about 0.1 m per 0.000001°. Decimal latitude and longitude values that are accurate to 0.1 m therefore need to be kept to six decimal places, and only the six decimal places of the values in Table 6 need to be compared to assess the conversion accuracy. The table shows that, for the same set of UTM data, the results of the developed application and ArcMap differ by 0.1 × 10⁻⁶° in longitude and latitude, which corresponds to about 0.01 m. The conversion accuracy of the proposed method therefore reaches centimeter level, which meets the requirements of the geodetic coordinate conversion of citrus trees.
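The arithmetic behind this accuracy argument can be written out as a short sketch. It uses only the per-degree distances quoted in the text (about 111 km per degree of latitude and 104,043.976 m per degree of longitude at the site); the function name is illustrative.

```python
METERS_PER_DEG_LAT = 111_000.0      # about 111 km per 1° of latitude
METERS_PER_DEG_LON = 104_043.976    # per 1° of longitude at the site [60]


def degrees_to_meters(delta_deg, meters_per_degree):
    """Ground distance corresponding to a small angular difference."""
    return abs(delta_deg) * meters_per_degree


# Sixth decimal place of a coordinate in decimal degrees.
step = 1e-6
print(f"1e-6 deg of longitude ~ {degrees_to_meters(step, METERS_PER_DEG_LON):.3f} m")

# Difference between the developed app and ArcMap reported for Table 6.
diff = 0.1e-6
print(f"0.1e-6 deg of latitude  ~ {degrees_to_meters(diff, METERS_PER_DEG_LAT):.4f} m")
print(f"0.1e-6 deg of longitude ~ {degrees_to_meters(diff, METERS_PER_DEG_LON):.4f} m")
```

Both differences come out at roughly one centimetre, which is well inside the 0.1 m tolerance derived from the canopy size.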

4. Discussion

Precision management of citrus orchards using plant protection UAVs in China requires the geographic coordinates of individual citrus trees. The method proposed in this study automatically obtains the WGS84 coordinates of citrus trees from UAV remote sensing imagery with high precision by using YOLOv5s and coordinate transformation. Accurate geographic coordinates of single citrus trees make precision orchard management by “one tree one code” feasible. The variable rate application of agricultural production materials according to the growth differences of trees can be addressed in future research.

This study applied UAVs to collect remote sensing images of orchards and generate a high-precision orthophoto map. On this basis, traditional image recognition and deep learning algorithms were used to identify citrus trees in the orthophoto map. Pei et al. [31] used a traditional binary image processing method to extract the coordinates of tea sprouts. However, that method requires manual debugging, so its degree of automation is low, and its recognition rate for tea sprouts in complex scenes is poor. To improve recognition performance, the traditional target detection method based on the Hough transform was compared with the deep learning-based YOLOv5s algorithm. The comparison showed that the YOLOv5s model is robust in citrus tree target detection across different scenarios, which ensures its recognition accuracy. For the extraction of citrus tree coordinates, automatic high-precision conversion was achieved through spatial coordinate system transformation and Gauss–Krüger inverse calculation. Compared with manual extraction of citrus tree coordinates, the automatic extraction method proposed in this study saves time and labor. In addition, extracting citrus trees from UAV remote sensing images is a lower-cost solution than terrestrial mechanical sensors.

The accuracy of the orchard orthophoto map depends on the layout of image control points, image quality, overlap rate, flying height, and other factors. A DJI Phantom 4 RTK UAV was selected for remote sensing image acquisition; it captures high-quality images with a resolution of 5472 × 3648 pixels and was used together with a D-RTK 2 high-precision GNSS mobile station to ensure the accuracy of the orchard orthophoto map. However, during aerial photography, the impact of factors such as light, wind speed, and distance on the positioning accuracy of the UAV cannot be ignored. Therefore, six control points were arranged in the field to further improve the accuracy of the orthophoto map. In terms of overlap rate, we adopted higher side and heading overlap rates, which meant that data collection could not be completed in one flight. The UAV remote sensing images were taken at the time of day when the light was better. In terms of flight height, the higher the flight, the lower the image resolution, which reduces the effectiveness of citrus tree target detection. If the flight is too low, each image contains fewer feature points of the target, which degrades the quality of the resulting orthophoto map. The flight height was finally set to 50 m.
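The trade-off between flight height and image resolution can be made concrete with the usual ground sampling distance (GSD) relation for a nadir-looking camera. In the sketch below, the image width of 5472 pixels and the flight height of 50 m come from the text, whereas the sensor width (13.2 mm) and focal length (8.8 mm) are assumed nominal values for a 1-inch sensor camera and are not taken from this paper.

```python
def ground_sampling_distance(height_m, sensor_width_mm, focal_length_mm,
                             image_width_px):
    """Ground size of one pixel (m) for a nadir image taken at height_m."""
    return (height_m * sensor_width_mm) / (focal_length_mm * image_width_px)


IMAGE_WIDTH_PX = 5472      # from the image resolution given in the text
SENSOR_WIDTH_MM = 13.2     # ASSUMED nominal width of a 1-inch sensor
FOCAL_LENGTH_MM = 8.8      # ASSUMED nominal focal length

for height in (25, 50, 100):
    gsd = ground_sampling_distance(height, SENSOR_WIDTH_MM,
                                   FOCAL_LENGTH_MM, IMAGE_WIDTH_PX)
    print(f"height {height:>3} m -> GSD ~ {gsd * 100:.2f} cm/pixel")
```

Under these assumptions, doubling the flight height doubles the ground size of each pixel, which is the resolution loss referred to above.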

Visible light remote sensing images were used in this study for citrus tree target detection. The YOLOv5s model is robust for citrus tree target detection in visible light remote sensing images, whereas the recognition accuracy of traditional algorithms is poor. Traditional image processing methods extract citrus trees accurately when the trees are regularly distributed and there is no interference from weeds, but their recognition accuracy in complex environments is poor. The main reason is that the thresholds corresponding to weeds and citrus trees are similar, which makes effective threshold segmentation difficult and reduces the recognition accuracy. A similar limitation is reported in the literature [21], where the location of trees is determined by identifying the local maxima of the point cloud model; this method often leads to large errors due to the size of the tree and the shape of the canopy. By contrast, the YOLOv5s algorithm is robust for citrus tree identification in environments with different degrees of complexity, so using the YOLOv5s model to identify fruit trees is a key step in extracting fruit tree coordinates. In addition to visible light remote sensing images, related research has used thermal infrared remote sensing images [61], multispectral imagery [62], a digital elevation model (DEM) [63], and other data to extract target features, achieving good target detection results. These data sources are also suitable for extracting citrus tree coordinate information as in this study, and they are expected to improve the poor recognition accuracy of the Hough transform for citrus trees against complex backgrounds. In future research, we also need to improve the robustness of the proposed method, study different varieties of fruit trees, and realize coordinate extraction for all varieties of fruit trees.

Identifying crop varieties and obtaining growth information through UAV remote sensing images is an important means for farmers to manage orchards digitally. However, as a new generation of farmers rapidly takes up digital solutions, the growing archive of UAV imagery data is a concern. Kayad et al. [64] estimated the data storage space required over the last two decades for a 22 ha field located in Northern Italy. The results showed that the total accumulated data size for the study field reached 18.6 GB in 2020, mainly due to the use of UAV images. The method in this paper can effectively reduce the archived data of UAV images. For citrus tree target detection, we use the YOLOv5s model to train on remote sensing images; while recognition accuracy is maintained, the 7.69 GB remote sensing dataset is condensed into a 54.7 MB training weight file. For the extraction of citrus tree geographic coordinates, the method converts the 40 MB orthophoto map into a 12.6 KB coordinate file. Reasonable archiving of remote sensing images can effectively reduce data storage space and help farmers manage their orchards more accurately. UAV remote sensing images provide farmers with rich data but also increase storage costs. In future research, we also need to optimize the utilization and benefits of UAV remote sensing images to provide more efficient orchard management plans.

5. Conclusions

This study proposed a method for citrus tree identification and coordinate extraction based on UAV remote sensing imagery and coordinate transformation. In the first stage, UAV remote sensing images were used to generate an orchard orthophoto map. Control points and an RTK mobile base station were used to ensure the geographic accuracy of the orthophoto map. In the second stage, after the detection accuracy of the YOLOv5s algorithm and the Hough transform had been compared, the YOLOv5s algorithm, which identifies citrus trees better, was selected to extract the coordinate information of citrus trees. Finally, spatial coordinate system transformation and Gauss–Krüger inverse calculation were used to obtain the WGS84 coordinates of the citrus trees. The experimental results show that the average Euclidean distance accuracy of the orthophoto map reaches 0.15 m. In object detection, the YOLOv5s model has better detection performance in both scenes (Scene 1 after weeding: Precision = 0.89, Recall = 0.97, F1 Score = 0.92; Scene 2 before weeding: Precision = 0.91, Recall = 0.90, F1 Score = 0.91). The coordinate conversion method proposed in this study converts UTM coordinates to WGS84 coordinates with an accuracy of 1 cm. The high-precision WGS84 coordinates of citrus trees can be extracted automatically with the proposed identification and coordinate extraction method. This method is suitable for the extraction and transformation of target coordinates in all orthophoto maps and features high precision, high efficiency, and a high recognition rate.

Author Contributions

Conceptualization, Y.Z. and H.T.; methodology, Y.Z., H.T. and X.F.; validation, H.T. and Y.L.; formal analysis, H.T., X.F., H.H. and C.M.; investigation, Y.Z., H.H., H.T., C.M., D.Z. and H.L.; resources, H.T., D.Z. and H.L.; data curation, H.T. and C.M.; writing – original draft preparation, H.T., C.M. and Y.Z.; writing – review and editing, Y.Z. and C.M.; visualization, C.M.; supervision, X.L.; project administration, Y.Z.; funding acquisition, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Guangdong Science and Technology Plan Project, grant number 2018A050506073, and the 111 Project, grant number D18019.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Annual Report on Fruit Production in China. Available online: https://data.stats.gov.cn/index.htm (accessed on 20 July 2022).

2. Shan, Y. Present situation, development trend and countermeasures of citrus industry in China. J. Chin. Inst. Food Sci. Technol. 2008, 1, 1–8.

3. Shu, M.Y.; Li, S.L.; Wei, J.X.; Che, Y.P.; Li, B.G.; Ma, T.Y. Extraction of citrus crown parameters using UAV platform. Trans. Chin. Soc. Agric. Eng. 2021, 37, 68–76.

4. Wang, H.; Noguchi, N. Navigation of a robot tractor using the centimeter level augmentation information via Quasi-Zenith Satellite System. Eng. Agric. Environ. Food 2019, 12, 414–419.

5. Zhou, J.; He, Y.Q. Research progress on navigation path planning of agricultural machinery. Trans. Chin. Soc. Agric. Mach. 2021, 52, 1–14.

6. Guo, C.Y.; Zhang, S.; Zhao, L.; Chen, J. Research on autonomous navigation system of orchard agricultural vehicle based on RTK-BDS. J. Agric. Mech. Res. 2020, 8, 254–259.

7. Chen, K.; Tian, G.Z.; Gu, B.X.; Liu, Y.F.; Wei, J.S. Design and Simulation of front frame of orchard automatic obstacle avoidance Lawn Mower. Jiangsu Agric. Sci. 2020, 48, 226–232.

8. Qiu, W.; Sun, H.; Sun, Y.H.; Liao, Y.Y.; Zhou, L.F.; Wen, Z.J. Design and test of circulating air-assisted sprayer for dwarfed orchard. Trans. Chin. Soc. Agric. Eng. 2021, 37, 18–25.

9. Qiu, W.; Li, X.L.; Li, C.C.; Ding, W.M.; Lv, X.L.; Liu, Y.D. Design and test of a novel crawler-type multi-channel air-assisted orchard sprayer. Int. J. Agric. Biol. Eng. 2020, 13, 60–67.

10. Song, C.C.; Zhou, Z.Y.; Wang, G.B.; Wang, X.W.; Zang, Y. Optimization of the groove wheel structural parameters of UAV-based fertilizer apparatus. Trans. Chin. Soc. Agric. Eng. 2021, 37, 1–10.

11. Qi, Y.; Dong, X.; Chen, P.; Lee, K.-H.; Lan, Y.; Lu, X.; Jia, R.; Deng, J.; Zhang, Y. Canopy Volume Extraction of Citrus reticulate Blanco cv. Shatangju Trees Using UAV Image-Based Point Cloud Deep Learning. Remote Sens. 2021, 13, 3437.

12. Zhang, Y.; Tian, H.; Huang, X.; Ma, C.; Wang, L.; Liu, H.; Lan, Y. Research Progress and Prospects of Agricultural Aero-Bionic Technology in China. Appl. Sci. 2021, 11, 10435.

13. Li, V.; Selam, A.; Beniamin, A.; Kamal, A. A novel method for detecting morphologically similar crops and weeds based on the combination of contour masks and filtered Local Binary Pattern operators. Comput. Electron. Agric. 2020, 9, giaa017.

14. Hamuda, E.; Glavin, M.; Jones, E. A survey of image processing techniques for plant extraction and segmentation in the field. Comput. Electron. Agric. 2016, 125, 184–199.

15. Hogan, S.D.; Kelly, M.; Stark, B.; Chen, Y. Unmanned aerial systems for agriculture and natural resources. Calif. Agric. 2017, 71, 5–14.

16. Zhang, N.; Yang, G.J.; Zhao, C.J.; Zhang, J.C.; Yang, X.D.; Pan, Y.C.; Huang, W.J.; Xu, B.; Li, M.; Zhu, X.C.; et al. Progress and prospects of hyperspectral remote sensing technology for crop diseases and pests. Natl. Remote Sens. Bull. 2021, 25, 403–422.

17. Shi, Z.; Liang, Z.Z.; Yang, Y.Y.; Guo, Y. Status and Prospect of Agricultural remote Sensing. Trans. Chin. Soc. Agric. Mach. 2015, 46, 247–260.

18. Zhang, J.C.; Yuan, L.; Wang, J.H.; Luo, J.H.; Du, S.Z.; Huang, W.J. Research progress of crop diseases and pests monitoring based on remote sensing. Trans. Chin. Soc. Agric. Eng. 2012, 28, 1–11.

19. Liu, S.Y.; Wang, X.Y.; Chen, X.A.; Hou, X.N.; Jiang, T.; Xie, P.F.; Zhang, X.M. Fruit tree row recognition with dynamic layer fusion and texture-gray gradient energy model. Trans. Chin. Soc. Agric. Eng. 2022, 38, 152–160.

20. Malek, S.; Bazi, Y.; Alajlan, N.; AlHichri, H.; Melgani, F. Efficient Framework for Palm Tree Detection in UAV Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4692–4703.

21. Surový, P.; Almeida Ribeiro, N.; Panagiotidis, D. Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int. J. Remote Sens. 2018, 39, 4786–4800.

22. Goldbergs, G.; Maier, S.W.; Levick, S.R.; Edwards, A. Efficiency of Individual Tree Detection Approaches Based on Light-Weight and Low-Cost UAS Imagery in Australian Savannas. Remote Sens. 2018, 10, 161.

23. Luo, X.; Wu, Y.; Zhao, L. YOLOD: A Target Detection Method for UAV Aerial Imagery. Remote Sens. 2022, 14, 3240.

24. Wang, Q.; Wang, C.; Wu, H.; Zhao, C.; Teng, G.; Yu, Y.; Zhu, H. A Two-Stage Low-Altitude Remote Sensing Papaver Somniferum Image Detection System Based on YOLOv5s+DenseNet121. Remote Sens. 2022, 14, 1834.

25. Guo, Y.G.; Li, Z.C.; He, H.; Wang, Z.Y. A simplex search algorithm for the optimal weight of common point of 3D coordinate transformation. Acta Geod. Cartogr. Sin. 2020, 49, 1004–1013.

26. Li, G.Y.; Li, Z.C. The Principles and Applications of Industrial Measuring Systems; Surveying and Mapping Press: Beijing, China, 2011.

27. Zeng, A.M.; Zhang, L.P.; Wu, F.M.; Qin, X.P. Adaptive collocation method to coordinate transformation from XAS80 to CGCS2000. Geomat. Inf. Sci. Wuhan Univ. 2012, 37, 1434–1437.

28. Li, B.F.; Shen, Y.Z.; Li, W.X. The seamless model for three-dimensional datum transformation. Sci. China Earth Sci. 2012, 42, 1047–1054.

29. Peng, X.Q.; Gao, J.X.; Wang, J. Research of the coordinate conversion between WGS84 and CGCS2000. J. Geod. Geodyn. 2015, 35, 119–221.

30. Liu, F.; Zhou, L.L.; Yi, J.F. Study on the practical method to transform GPS geodetic coordinates to coordinates of local system. J. East China Norm. Univ. Nat. Sci. 2005, 2005, 73–77.

31. Pei, W.; Wang, X.L. The two-dimension coordinates extraction of tea shoots picking based on image information. Acta Agric. Zhejiangensis 2016, 28, 522–527.

32. Deng, S.S.; Xia, L.H.; Wang, X.X.; Pan, Z.K. Fuzzy comprehensive evaluation for the tourism climate in Zhaoqing city. Guangdong Agric. Sci. 2010, 37, 242–246.

33. PHANTOM 4 RTK Specs. Available online: https://www.dji.com/id/phantom-4-rtk/info#specs (accessed on 20 July 2022).

34. Fang, J.Q. Discussion on several technical problems about producing DOM. Geomat. Spat. Inf. Technol. 2007, 3, 91–93.

35. Li, D.; Liu, J.; Yu, D. Indent round detection of Brinell test images based on Snake model and shape similarity. Chin. J. Sci. Instrum. 2011, 32, 2734–2739.

36. Li, Z.; Liu, M.; Sun, Y. Research on calculation method for the projection of circular target center in photogrammetry. Chin. J. Sci. Instrum. 2011, 32, 2235–2241.

37. Lin, J.; Shi, Q. Circle Recognition Through a Point Hough Transformation. Comput. Eng. 2003, 29, 17–18.

38. Daugman, J.G. High Confidence Visual Recognition of Persons by a Test of Statistical Independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

39. Davies, E.R. A Modified Hough Scheme for General Circle Location. Pattern Recognit. Lett. 1987, 7, 37–43.

40. Zhu, G.; Zhang, R. Circle detection using Hough transform. Comput. Eng. Des. 2008, 29, 1462–1464.

41. Xiong, J.; Zhou, X.; Chen, L.; Cai, H.; Peng, H. Visual position of picking manipulator for disturbed litchi. Trans. Chin. Soc. Agric. Eng. 2012, 28, 36–41.

42. Xiang, R.; Ying, B.; Jiang, H. Development of Real-time Recognition and Localization Methods for Fruits and Vegetables in Field. Trans. Chin. Soc. Agric. Mach. 2013, 44, 208–223.

43. Cui, Y.; Su, S.; Wang, X.; Tian, Y.; Li, P.; Zhang, N. Recognition and Feature Extraction of Kiwifruit in Natural Environment Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2013, 44, 247–252.

44. Li, D.; Wu, Q.; Yang, H.S. Design and implementation of edge detection system based on improved Sobel operator. Inf. Technol. Netw. Secur. 2022, 41, 13–17.

45. Chen, K.; Zhou, X.; Xiong, J.; Peng, H.; Guo, A.; Chen, L. Improved fruit fuzzy clustering image segmentation algorithm based on visual saliency. Trans. Chin. Soc. Agric. Eng. 2013, 29, 157–165.

46. Zhao, D.A.; Wu, R.D.; Liu, X.Y.; Zhao, Y.Y. Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background. Trans. Chin. Soc. Agric. Eng. 2019, 35, 164–173.

47. Liu, F.; Liu, Y.K.; Lin, S.; Guo, W.Z.; Xu, F.; Zhang, B. Fast Recognition Method for Tomatoes under Complex Environments Based on Improved YOLO. Trans. Chin. Soc. Agric. Mach. 2020, 51, 229–237.

48. Tan, S.L.; Bei, X.B.; Lu, G.L.; Tan, X.H. Real-time detection for mask-wearing of personnel based on YOLOv5 network mode. Laser J. 2021, 42, 147–150.

49. Cheng, Z.; Jia, J.M.; Jiang, Z.; Wang, X. Research on Stuttering Type Detection Based on YOLOv5. J. Yunnan Minzu Univ. Nat. Sci. Ed. 2022, 1, 1–11.

50. Cheng, Q.; Li, J.; Du, J. Ship detection method of optical remote sensing image based on YOLOv5. Syst. Eng. Electron. 2022, 1, 1–9.

51. Hou, Y.; Shi, G.; Zhao, Y.; Wang, F.; Jiang, X.; Zhuang, R.; Mei, Y.; Ma, X. R-YOLO: A YOLO-Based Method for Arbitrary-Oriented Target Detection in High-Resolution Remote Sensing Images. Sensors 2022, 22, 5716.

52. Cai, L.; Wang, S.; Liu, J.; Zhu, Y. Survey of data annotation. J. Softw. 2020, 31, 302–320.

53. Wang, C.; Wang, Q.; Wu, H.; Zhao, C.; Teng, G.; Li, J. Low-Altitude Remote Sensing Opium Poppy Image Detection Based on Modified YOLOv3. Remote Sens. 2021, 13, 2130.

54. Sun, L.D. Gauss-Kruger Projection and Universal Transverse Mercator Projection of the Similarities and Differences. Port Eng. Technol. 2008, 5, 31–51.

55. Guo, Y.; Tang, B.; Zhang, Q.; Zhang, W.; Hei, L. Research on Coordinate Transformation Method of High Accuracy Based on Space Rectangular Coordinates System. J. Geod. Geodyn. 2012, 32, 125–128.

56. Ning, J.S.; Yao, Y.B.; Zhang, X.H. Review of the development of Global satellite Navigation System. J. Navig. Position. 2013, 1, 3–8.

57. Cui, H.; Lin, Z.; Sun, J. Research on UAV Remote Sensing System. Bull. Surv. Mapp. 2005, 5, 11–14.

58. XAG. Product Manual. Available online: https://www.xa.com/service/downloads (accessed on 20 July 2022).

59. Wu, W.; Li, X.S.; Zhang, B. Probe of developing technology based on ArcGIS Engine. Sci. Technol. Eng. 2006, 2, 176–178.

60. Li, Z.X.; Li, J.X. Quickly Calculate the Distance between Two Points and Measurement Error Based on Latitude and Longitude. Geomat. Spat. Inf. Technol. 2013, 36, 235–237.

61. Li, L.; Jiang, L.; Zhang, J.; Wang, S.; Chen, F. A Complete YOLO-Based Ship Detection Method for Thermal Infrared Remote Sensing Images under Complex Backgrounds. Remote Sens. 2022, 14, 1534.

62. Han, S.; Zhao, Y.; Cheng, J.; Zhao, F.; Yang, H.; Feng, H.; Li, Z.; Ma, X.; Zhao, C.; Yang, G. Monitoring Key Wheat Growth Variables by Integrating Phenology and UAV Multispectral Imagery Data into Random Forest Model. Remote Sens. 2022, 14, 3723.

63. Lin, J.; Wang, M.; Ma, M.; Lin, Y. Aboveground Tree Biomass Estimation of Sparse Subalpine Coniferous Forest with UAV Oblique Photography. Remote Sens. 2018, 10, 1849.

64. Kayad, A.; Sozzi, M.; Paraforos, D.; Rodrigues, F.; Cohene, Y.; Fountas, S.; Francisco, M.; Pezzuolo, A.; Grigolato, S.; Marinello, F. How many gigabytes per hectare are available in the digital agriculture era? A digitization footprint estimation. Comput. Electron. Agric. 2022, 198, 107080.

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).