Abstract

Superpixel segmentation is widely used in polarimetric synthetic aperture radar (PolSAR) image classification. However, the classification method using simple majority voting cannot easily handle evidence conflicts in a single superpixel. At present, there is no method to evaluate the quality of superpixel classification. To solve the above problems, this paper proposes a hybrid classification model based on superpixel entropy discrimination (SED), and constructs a two-level cascade classifier. Firstly, a light gradient boosting machine (LGBM) was used to process large-dimensional input features, and simple linear iterative clustering (SLIC) was integrated to obtain the primary classification results based on superpixels. Secondly, information entropy was introduced to evaluate the quality of superpixel classification, and a complex-valued convolutional neural network (CV-CNN) was used to reclassify the high-entropy superpixels to obtain the secondary classification results. Experiments with two measured PolSAR datasets show that the overall accuracy of both classification methods exceeded 97%. This method suppressed the evidence conflict in a single superpixel and the inaccuracy of superpixel segmentation. The test time of our proposed method was shorter than that of CV-CNN, and using only 55% of CV-CNN test data could achieve the same accuracy as using CV-CNN for the whole image.

1. Introduction

Polarimetric synthetic aperture radar (PolSAR) can obtain images of different polarimetric channels. Compared with traditional single-channel synthetic aperture radar (SAR), PolSAR has rich polarimetric and texture features [1,2], and can obtain more comprehensive target-scattering characteristics. PolSAR images have a wide range of application scenarios, such as terrain classification, building extraction and building damage assessment [3,4,5], among which terrain classification is an important research objective of PolSAR image interpretation.

In the field of terrain classification of PolSAR images, using superpixels for surface feature segmentation is an important step. Superpixel segmentation is an image segmentation technology [6] that is widely used in computer vision tasks such as target detection [7], visual tracking [8] and image quality assessment [9]. Using the spatial and color features of the image, the whole image is condensed into a set of subregions that effectively maintain the local consistency of the image. Compared with traditional pixel images, superpixel images contain less redundant information, which can reduce the complexity of subsequent image processing tasks. PolSAR image interpretation usually pays more attention to natural feature categories that cover large areas, such as forests and oceans. In addition, feature categories with traces of human activity, such as farmland and building areas, usually show a regular aggregation form. Therefore, similar ground objects often appear as locally coherent aggregations, and an isolated pixel usually results from noise or classification error. Each superpixel on the PolSAR image can thus be considered to contain a single ground object, and fast and efficient methods such as simple linear iterative clustering (SLIC) and its improved variants can be used to suppress the interference of speckle noise [10,11,12].

In the early days of PolSAR image interpretation, the superpixel method was often used together with the model-based classification method. Wishart distribution [13] uses polarization covariance matrix to derive the Wishart distance model, and uses this distance in combination with superpixel classification. In addition, a variety of classification algorithms based on statistical models are used, such as the classification algorithm based on a Markov random-field model [14] and its variant [15], and the hybrid model of Wishart and Markov random field [16]. However, superpixel-based algorithms depend on the performance of classifiers. These model-based classifiers rely heavily on accurate statistical models, while PolSAR parameter estimation tasks are sensitive to data obtained from different environments or platforms, which makes it difficult to widely apply the algorithms to various PolSAR datasets [17].

Data-driven machine learning classification algorithms also combine well with superpixels; for example, random forest (RF) [18], XGBoost (XGB) [19] and support vector machine (SVM) [20] have been used to classify PolSAR image datasets. The input of machine learning algorithms often consists of features obtained from various polarization decomposition methods, such as Cloude–Pottier decomposition [21,22], Freeman decomposition [23] and Yamaguchi decomposition [24]. However, high-dimensional input data lead to the “curse of dimensionality”, which has led some researchers to try to select the optimal feature combination [25,26]. When many kinds of ground objects must be classified, feature selection is still difficult. These manually selected features limit the performance of the classifier and cannot solve the evidence conflict problem when the local superpixel segmentation is of low quality.

In recent years, deep learning has made outstanding achievements in PolSAR image processing tasks [27]. Benefiting from its end-to-end characteristics, the process of manual feature extraction can be transformed into automatic depth feature extraction. The convolutional neural network (CNN) is a typical representation of deep learning [28]. The improved complex-valued CNN (CV-CNN) can make full use of the amplitude and phase information of images [29,30]; adding a context mechanism and attention module to the network can further automatically extract useful features [31,32], and deep networks such as capsule network [33] are also applied to PolSAR image classification. The fusion of multi-temporal SAR data and optical data has achieved higher classification accuracy by using a 2D-CNN-based classifier [34]. These methods have achieved high classification accuracy. However, as the network becomes wider and deeper, the requirement for computing resources of the model increases significantly. In addition, its end-to-end characteristics are difficult to combine with the superpixel method, which makes it difficult to avoid the influence of speckle noise. To solve this problem, the probability distribution of the output of a multilayer automatic encoder is used as a measure, and the k-nearest neighbor (KNN) is introduced to improve the classification accuracy of superpixels [35]. Lately, SLIC has been added to the end-to-end process to automatically seek the optimal superpixel segmentation by using a superpixel sampling network (SSN) [36], which improves the effect of superpixel edge fitting. However, the impact of a large number of training iterations on classification efficiency must be considered.

To sum up, in complex local images, the existing superpixel methods cannot fully fit the edge of terrains, and rely heavily on the accuracy of the classifier. In addition, the simple majority voting mechanism cannot reflect the evidence conflict in a single superpixel, which easily leads to the loss of accuracy in terrain edge classification. At present, there are many improved methods for superpixel image segmentation [11,37,38], but there is a lack of an evaluation method for the quality of each classified superpixel, which is incomplete in the PolSAR image classification based on superpixels.

In order to solve the above problems, this study proposes a method to evaluate the quality of superpixel classification, called superpixel entropy discrimination (SED), and constructs a hybrid classification model. Firstly, a light gradient boosting machine (LGBM), which has an extremely fast training speed and a strong feature selection ability [39], was used to process high-dimensional input features, and SLIC was used to obtain superpixels, so that the primary classification results were obtained quickly by pixel-by-pixel voting within each superpixel. Secondly, information entropy was used to evaluate the quality of superpixel classification. The features of ground objects in high-entropy superpixels are complex, and it is difficult to express accurate classification information with manually extracted features. CV-CNN, which extracts features automatically, was therefore used to reclassify the high-entropy superpixels, and the secondary classification results were obtained. The main contributions of our work include:

(1) A superpixel entropy discrimination method was proposed, and the definition of superpixel entropy based on information entropy was proposed to describe the evidence conflict in a single superpixel, which was used to evaluate the quality of superpixel classification.

(2) A two-level cascade classifier based on LGBM+SLIC and CV-CNN was proposed. The superpixels with high entropy were reclassified by CV-CNN to reduce the accuracy loss caused by evidence conflict in a single superpixel.

(3) The training and testing time consumption of LGBM+SLIC were short. The integrated model could achieve the same accuracy as using CV-CNN for the whole image, which greatly shortened the testing time while maintaining high-accuracy performance.

The rest of this paper is organized as follows: Section 2 introduces the main framework and submodules of our proposed method and gives the derivation process of SED. Section 3 shows the results and analysis of our proposed method for two typical PolSAR datasets. Section 4 discusses the effect of SED under different conditions. Finally, Section 5 presents the conclusion.

2. Proposed Method

In past research, the combination of superpixel segmentation and a classification algorithm has played an important role in the ground object classification of PolSAR images, and has achieved good classification accuracy. Our proposed method also continues this main technical route and improves on it. We proposed SED based on information entropy to evaluate the quality of single-superpixel classification, and constructed a two-level cascade classifier.

2.1. Main Framework

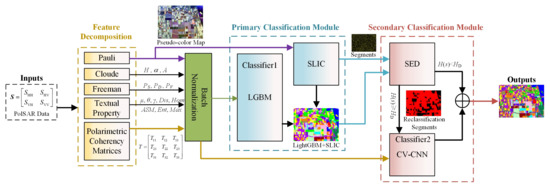

In this paper, we proposed a hybrid model based on superpixel entropy discrimination (SED), which was applied to PolSAR terrain classification. PolSAR image data were used as the input, and the polarimetric features and texture features of the image were obtained in the feature decomposition module. The processed data entered a two-level cascade classification model. In the primary classification module, LGBM+SLIC was used to classify the previous data. This combination can avoid the heavy feature selecting work and obtain the classification results fast. In the secondary classification module, the SED method was used to evaluate the quality of superpixel classification, and CV-CNN was used to reclassify the high-entropy superpixel data to obtain the complete image classification results after fusion. The flow of the whole method is shown in Figure 1. In the following, the feature decomposition and two classification modules are introduced in detail according to the flow, and the derivation process of SED is given in Section 2.4.1.

Figure 1.

Schematic diagram of the proposed method.

2.2. Feature Decomposition

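First, the polarimetric scattering matrix S is often used to define the features of PolSAR images. The complex matrix S is expressed as:

$$S = \begin{bmatrix} S_{HH} & S_{HV} \\ S_{VH} & S_{VV} \end{bmatrix} \tag{1}$$

In the monostatic backscattering system, S satisfies the reciprocity $S_{HV} = S_{VH}$, and then the three-dimensional Pauli vector is defined:

$$\mathbf{k} = \frac{1}{\sqrt{2}} \left[ S_{HH} + S_{VV},\; S_{HH} - S_{VV},\; 2S_{HV} \right]^{T} \tag{2}$$

The 3 × 3 complex polarimetric coherence matrix T can be obtained:

$$T = \left\langle \mathbf{k}\,\mathbf{k}^{H} \right\rangle = \begin{bmatrix} T_{11} & T_{12} & T_{13} \\ T_{21} & T_{22} & T_{23} \\ T_{31} & T_{32} & T_{33} \end{bmatrix} \tag{3}$$

where $\langle \cdot \rangle$ is the average of the set and the superscript $H$ denotes the conjugate transpose. The off-diagonal elements in (3) are complex, and T is represented as $T_{11}$, $T_{22}$, $T_{33}$, Re($T_{12}$), Im($T_{12}$), Re($T_{13}$), Im($T_{13}$), Re($T_{23}$) and Im($T_{23}$).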
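The construction of the nine real features from the scattering matrix can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `coherency_features` is hypothetical, and approximating the ensemble average by a `win` × `win` boxcar window with edge replication is an assumption.

```python
import numpy as np

def coherency_features(s_hh, s_hv, s_vv, win=3):
    """Return an (H, W, 9) array of real features derived from T.

    s_hh, s_hv, s_vv: complex (H, W) channels, assuming S_HV = S_VH.
    win: side of the boxcar window that approximates the ensemble
         average (an assumption of this sketch).
    """
    # Pauli vector k = [S_HH + S_VV, S_HH - S_VV, 2 S_HV]^T / sqrt(2)
    k = np.stack([s_hh + s_vv, s_hh - s_vv, 2.0 * s_hv], axis=-1) / np.sqrt(2.0)
    # Per-pixel outer product k k^H, shape (H, W, 3, 3)
    t = k[..., :, None] * np.conj(k[..., None, :])
    # Boxcar average over a win x win neighborhood
    pad = win // 2
    tp = np.pad(t, ((pad, pad), (pad, pad), (0, 0), (0, 0)), mode="edge")
    h, w = s_hh.shape
    avg = np.zeros_like(t)
    for dy in range(win):
        for dx in range(win):
            avg += tp[dy:dy + h, dx:dx + w]
    avg /= win * win
    # T11, T22, T33, then real and imaginary parts of T12, T13, T23
    return np.stack([
        avg[..., 0, 0].real, avg[..., 1, 1].real, avg[..., 2, 2].real,
        avg[..., 0, 1].real, avg[..., 0, 1].imag,
        avg[..., 0, 2].real, avg[..., 0, 2].imag,
        avg[..., 1, 2].real, avg[..., 1, 2].imag,
    ], axis=-1)
```

With `win=1` no averaging is applied, and the sum of the three diagonal features equals the total power $|S_{HH}|^2 + 2|S_{HV}|^2 + |S_{VV}|^2$, which is a convenient sanity check.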

Polarization decomposition theories include coherent decomposition and target polarization decomposition, which is based on the eigenvector or scattering model. They characterize different features of PolSAR images [31]. Although complex features can hardly be represented by using one decomposition, the combination of multiple decomposition methods can represent it from different perspectives.

Then, considering the relationship between adjacent pixels in the PolSAR image, the pixels belonging to a certain class are extremely dependent on their neighborhood space. The texture feature can reflect the spatial distribution characteristics of the image, which is one of the most important features of the PolSAR image. This paper used the gray-level co-occurrence matrix (GLCM) [40] to extract eight texture features of the image.
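As an illustration of how GLCM texture descriptors are computed, the sketch below builds a symmetric, normalized co-occurrence matrix for one pixel offset and derives four common descriptors. The quantization to 8 gray levels, the single offset and the choice of descriptors are assumptions made for this example; the eight features actually used in this paper are those listed in Table 1.

```python
import numpy as np

def glcm(gray, levels=8, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for offset (dy, dx) >= 0."""
    g = np.asarray(gray, dtype=float)
    span = g.max() - g.min()
    if span == 0:
        q = np.zeros(g.shape, dtype=int)
    else:
        # Quantize to integer gray levels 0 .. levels-1
        q = np.minimum((levels * (g - g.min()) / span).astype(int), levels - 1)
    h, w = q.shape
    a = q[0:h - dy, 0:w - dx].ravel()   # reference pixels
    b = q[dy:h, dx:w].ravel()           # neighbors at the given offset
    m = np.zeros((levels, levels))
    np.add.at(m, (a, b), 1.0)           # count co-occurring level pairs
    m = m + m.T                         # make the matrix symmetric
    return m / m.sum()

def texture_features(p):
    """A few standard GLCM descriptors (illustrative subset)."""
    i, j = np.indices(p.shape)
    nz = p > 0
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "entropy": float(-np.sum(p[nz] * np.log2(p[nz]))),
    }
```

In practice the matrix would be computed in a sliding window around each pixel, so that every pixel receives its own texture vector.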

Reasonably selected features have positive effects on classification results. We deliberately selected different types of features in the feature selection process, and we also tried different combinations, such as Yamaguchi decomposition and Krogager decomposition [41]. Finally, the combination of Cloude–Pottier decomposition, Freeman–Durden decomposition, Pauli decomposition [42] and texture feature was found to have a better effect.

Finally, the polarimetric coherence matrix T, nine decomposition parameters and eight texture features were used to constitute the 26-dimensional features for the classification of PolSAR images, as shown in Table 1.

Table 1.

Features of PolSAR image.

2.3. Primary Classification Module

2.3.1. Light Gradient Boosting Machine (LGBM)

The LGBM is part of the boosting algorithm in the field of ensemble learning [43], and it was developed on the basis of the gradient boosting decision tree (GBDT) [44]. The LGBM has extremely fast training and testing speed, and has a strong feature selection ability due to its unique gradient-based one-side sampling (GOSS) and exclusive feature bundling (EFB) algorithms [39], which can avoid the problem of “dimension explosion” caused by the input of large-dimensional features of PolSAR images [45].

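The LGBM uses the gradient boosting algorithm to iteratively generate a set of decision trees as the final prediction model. For the pre-specified loss function $\ell(y, F(x))$ and the input training dataset $\{(x_i, y_i)\}_{i=1}^{N}$, the LGBM generates the prediction model as follows:

Firstly, the initialized prediction model is:

$$F_0(x) = \arg\min_{c} \sum_{i=1}^{N} \ell(y_i, c) \tag{4}$$

where $y_i$ represents the label of the training set, $c$ represents the initialized output value, and N represents the size of the training set.

When the number of iterations is $m = 1, 2, \ldots, M$, the negative gradient calculated is:

$$r_{im} = -\left[ \frac{\partial \ell(y_i, F(x_i))}{\partial F(x_i)} \right]_{F(x) = F_{m-1}(x)} \tag{5}$$

where M represents the number of base classifiers.

Secondly, fitting base classifiers:

$$a_m = \arg\min_{a, \beta} \sum_{i=1}^{N} \left[ r_{im} - \beta\, h(x_i; a) \right]^2 \tag{6}$$

$$\beta_m = \arg\min_{\beta} \sum_{i=1}^{N} \ell\left( y_i, F_{m-1}(x_i) + \beta\, h(x_i; a_m) \right) \tag{7}$$

where h(•) represents the expression of the base classifiers, i.e., the decision tree, and $a_m$, $\beta_m$ are the parameters and weight of the m-th base classifier, respectively.

Then, the current prediction model is updated:

$$F_m(x) = F_{m-1}(x) + \beta_m\, h(x; a_m) \tag{8}$$

Finally, the final prediction model is obtained:

$$F(x) = F_M(x) = F_0(x) + \sum_{m=1}^{M} \beta_m\, h(x; a_m) \tag{9}$$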

The LGBM has the ability to reduce the bias of the model output. Because classification based on superpixels has strong local image consistency, it can suppress the adverse impact caused by the variance of the model output. Therefore, the LGBM and SLIC complement each other.
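The boosting iteration described in this subsection can be illustrated with a small, self-contained sketch. It is not LightGBM itself: GOSS and EFB are omitted, the base learner is a depth-one regression stump, the loss is the squared error (for which the negative gradient is simply the residual), and a fixed learning rate `lr` stands in for the per-tree weight. All function names are hypothetical, and the sketch assumes at least one feature takes more than one distinct value.

```python
import numpy as np

def fit_stump(x, r):
    """Least-squares stump fitted to residuals r: (feature, threshold, left, right)."""
    best = None
    for f in range(x.shape[1]):
        # Every unique value except the largest is a valid split point
        for thr in np.unique(x[:, f])[:-1]:
            left = x[:, f] <= thr
            lv, rv = r[left].mean(), r[~left].mean()
            err = np.sum((r - np.where(left, lv, rv)) ** 2)
            if best is None or err < best[0]:
                best = (err, f, thr, lv, rv)
    return best[1:]

def stump_predict(stump, x):
    f, thr, lv, rv = stump
    return np.where(x[:, f] <= thr, lv, rv)

def gradient_boost(x, y, n_trees=50, lr=0.15):
    f0 = y.mean()                        # constant model minimizing squared loss
    pred = np.full(len(y), f0)
    stumps = []
    for _ in range(n_trees):
        r = y - pred                     # negative gradient of 0.5 * (y - F)^2
        s = fit_stump(x, r)              # base learner fitted to the residuals
        pred = pred + lr * stump_predict(s, x)   # additive model update
        stumps.append(s)
    return f0, lr, stumps

def boost_predict(model, x):
    f0, lr, stumps = model
    return f0 + lr * sum(stump_predict(s, x) for s in stumps)
```

Each iteration shrinks the remaining residual, so the training error decreases as trees are added; the learning rate trades convergence speed against the risk of overfitting.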

2.3.2. Simple Linear Iterative Clustering (SLIC)

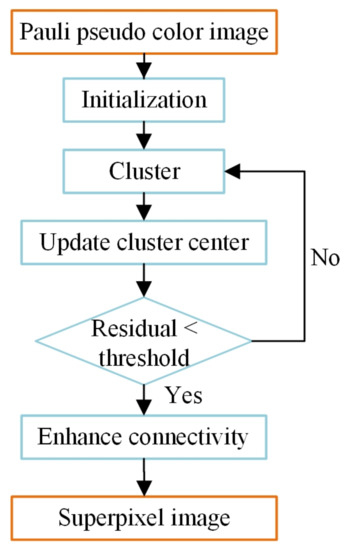

The SLIC [46] algorithm is a fast and effective superpixel generation algorithm. The SLIC algorithm flow is shown in Figure 2.

Figure 2.

Flowchart of SLIC.

The initialization of the algorithm includes:

(1) Converting the input Pauli pseudo-color image to the CIELAB color space, in which each pixel is represented by $[l, a, b]^{T}$, a coordinate in a uniform color space: $l$ is the lightness of the color, $a$ is the position between red and green, and $b$ is the position between yellow and blue.

(2) Placing cluster centers according to the equal step length L: $C_i = [l_i, a_i, b_i, x_i, y_i]^{T}$, where $C_i$ is the position of the cluster centers, $[x_i, y_i]^{T}$ represents the position of pixel $i$ on the image, and $x_i$, $y_i$ are the coordinates of pixel $i$. The calculation method for step length L during initialization is $L = \sqrt{S/k}$, where $k$ is the number of cluster centers, and S is the image size.

(3) Moving each cluster center to the position with the lowest gradient in its eight-neighborhood.

SLIC synthesizes color distance and spatial distance to obtain a new distance metric, D, for clustering. The color distance $d_c$ and spatial distance $d_s$ between two pixels, $i$ and $j$, are calculated as follows:

$$d_c = \sqrt{(l_j - l_i)^2 + (a_j - a_i)^2 + (b_j - b_i)^2} \tag{10}$$

$$d_s = \sqrt{(x_j - x_i)^2 + (y_j - y_i)^2} \tag{11}$$

The distance metric D is obtained:

$$D = \sqrt{\left( \frac{d_c}{M} \right)^2 + \left( \frac{d_s}{L} \right)^2} \tag{12}$$

where M is the maximum color distance in the cluster, and L is the step size when the cluster center is placed, which is used to replace the maximum spatial distance in the cluster.

In actual calculation, (12) is rewritten in the equivalent form:

$$D = \sqrt{d_c^2 + \left( \frac{d_s}{L} \right)^2 M^2} \tag{13}$$
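The distance computation used in the clustering step can be sketched as below, using the standard SLIC form $D = \sqrt{d_c^2 + (d_s/L)^2 M^2}$ on five-dimensional vectors $[l, a, b, x, y]$. The function names are hypothetical, and the default `m=10.0` for the color-distance constant is an assumed value, not one reported in this paper.

```python
import numpy as np

def initial_step(height, width, k):
    """Grid step L = sqrt(S / k) for an image of S = height * width pixels."""
    return np.sqrt(height * width / k)

def slic_distance(pixel, center, step, m=10.0):
    """Combined SLIC distance between [l, a, b, x, y] vectors.

    step: grid step L; m: maximum color distance M (assumed constant).
    """
    pixel = np.asarray(pixel, dtype=float)
    center = np.asarray(center, dtype=float)
    d_c = np.linalg.norm(pixel[..., :3] - center[..., :3], axis=-1)  # color
    d_s = np.linalg.norm(pixel[..., 3:] - center[..., 3:], axis=-1)  # spatial
    return np.sqrt(d_c ** 2 + (d_s / step) ** 2 * m ** 2)
```

A larger `m` gives more weight to spatial proximity and yields more compact, regular superpixels, while a smaller `m` lets superpixels follow color boundaries more closely.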

The time complexity of SLIC is linear with the image size S, so SLIC has extremely high computational efficiency. After superpixels are generated, simple majority voting is used to complete the classification of a single superpixel, and then the whole image is traversed.
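The majority-voting step can be sketched as follows, assuming the pixel-wise predictions of the primary classifier and the superpixel map are integer arrays of the same shape. The function name `majority_vote` is hypothetical; ties are broken in favor of the lowest class index, which is a choice of this sketch.

```python
import numpy as np

def majority_vote(pixel_labels, segments, n_classes):
    """Assign every pixel the most frequent class inside its superpixel.

    Returns the voted label map and the per-superpixel class counts,
    with rows ordered by sorted superpixel id.
    """
    pixel_labels = np.asarray(pixel_labels)
    segments = np.asarray(segments)
    # Map arbitrary superpixel ids to 0 .. n_superpixels-1
    _, seg_idx = np.unique(segments, return_inverse=True)
    seg_idx = seg_idx.reshape(-1)
    counts = np.zeros((seg_idx.max() + 1, n_classes), dtype=int)
    np.add.at(counts, (seg_idx, pixel_labels.reshape(-1)), 1)
    winners = counts.argmax(axis=1)      # ties resolve to the lowest class index
    return winners[seg_idx].reshape(segments.shape), counts
```

The returned `counts` array keeps the full vote distribution of every superpixel, which is the information that a plain majority vote discards.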

2.4. Secondary Classification Module

2.4.1. Superpixel Entropy Discrimination (SED)

In local images with complex feature types, the existing superpixel methods cannot fully fit the feature edges, and are exceedingly reliant on the accuracy of the classifier. The simple majority voting mechanism also cannot reflect the evidence conflict within a single superpixel, which is highly likely to lead to a loss in the accuracy of feature edge classification. At present, there is no method to evaluate the quality of each classified superpixel.

In a local region, information entropy can be used to accurately measure the difference between two pixels [2,47,48]. In the same way, information entropy can also measure the difference between superpixels. We call this superpixel entropy discrimination (SED). This paper selected the classification proportion of an LGBM in a single superpixel to construct superpixel entropy to describe the evidence conflict degree in the superpixel.

First, we calculate the classification proportion in the superpixel. When the classification category is determined, it is equivalent to the classification probability of the superpixel:

$$p_i = \frac{N_i}{N}, \quad i = 1, 2, \ldots, n \tag{14}$$

where $i$ is the classification category, n is the number of classification categories, $N$ is the number of pixels in a single superpixel, and $N_i$ is the total number of pixels of category $i$ in that superpixel.

Then, the information entropy of each superpixel is constructed:

$$H(s) = -\sum_{i=1}^{n} p_i \log_2 p_i, \quad s \in \Omega \tag{15}$$

where $\Omega$ is the collection of superpixels of the whole image.

If the classification results for all pixels in a superpixel tend to be consistent, the superpixel entropy will be small, which means that the classification of the superpixel is high-quality. If there are evidence conflicts between the classification results, the superpixel entropy will be large, which means that the classification of the superpixel is low-quality.
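The superpixel entropy can be computed directly from the per-superpixel class counts of the primary classifier. The sketch below assumes base-2 logarithms, which is consistent with the threshold values reported in Section 3, and treats $0 \cdot \log_2 0$ as 0; the function name is hypothetical.

```python
import numpy as np

def superpixel_entropy(counts):
    """Entropy in bits of the class proportions of each superpixel.

    counts: (n_superpixels, n_classes) array of pixel counts per class.
    """
    counts = np.asarray(counts, dtype=float)
    p = counts / counts.sum(axis=1, keepdims=True)   # class proportions p_i
    logp = np.zeros_like(p)
    np.log2(p, out=logp, where=p > 0)                # skip empty classes
    return -(p * logp).sum(axis=1)
```

A superpixel whose pixels all share one class has zero entropy, an even split between two classes gives 1 bit, and an even split over all $n$ classes gives the upper limit $\log_2 n$.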

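According to the properties of information entropy, there is an upper limit on the entropy of each superpixel, which is related to the number of classification categories n: $H \le \log_2 n$. Assuming that the probability of the largest category in a single superpixel is $p$, the entropy of a single superpixel can be expressed as:

$$H = -p \log_2 p - \sum_{j=1}^{n-1} p_j \log_2 p_j \tag{16}$$

The following optimization problem can be constructed:

$$\max_{p_1, \ldots, p_{n-1}} \; -p \log_2 p - \sum_{j=1}^{n-1} p_j \log_2 p_j \quad \text{s.t.} \quad \sum_{j=1}^{n-1} p_j = 1 - p \tag{17}$$

where $p_j$ represents the probability of the other classifications.

Let us construct the Lagrange function:

$$\mathcal{L}(p_1, \ldots, p_{n-1}, \lambda) = -p \log_2 p - \sum_{j=1}^{n-1} p_j \log_2 p_j + \lambda \left( \sum_{j=1}^{n-1} p_j - (1 - p) \right) \tag{18}$$

where $\lambda$ represents the Lagrange multiplier.

Solving Equation (18) by setting $\partial \mathcal{L} / \partial p_j = 0$ shows that all $p_j$ are equal at the maximum:

$$p_j = \frac{1 - p}{n - 1}, \quad j = 1, \ldots, n - 1 \tag{19}$$

$$H \le -p \log_2 p - (1 - p) \log_2 \frac{1 - p}{n - 1} \tag{20}$$

Let us assume $H_t$ is the discrimination threshold of superpixel entropy. Let $H_t$ equal the maximum value of (20):

$$H_t = -p \log_2 p - (1 - p) \log_2 \frac{1 - p}{n - 1} \tag{21}$$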

This transformation is mainly used to facilitate the evaluation of the quality of superpixel classification. Superpixel entropy still has all the properties of information entropy.

(1) If $H \le H_t$, it means that one classification dominates in a single superpixel. The superpixel has high classification quality. The uncertainty in this superpixel is mainly caused by speckle noise or small-scale classification errors of the primary classifier. It is feasible to use the maximum classification instead of local region classification.

(2) If $H > H_t$, it means that multiple classifications may account for similar proportions in a single superpixel. This kind of superpixel has low classification quality. The uncertainty in this superpixel is mainly caused by the error of superpixel edge segmentation or the large-scale classification error of the primary classifier. It is not feasible to use the maximum classification to replace the local area. We used CV-CNN to reclassify it.
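The discrimination rule can be sketched numerically. Assuming the threshold takes the form $H_t = -p \log_2 p - (1-p) \log_2 \frac{1-p}{n-1}$, where $p$ is the specified proportion of the largest category and $n$ is the number of categories, the values reported in Section 3 for $p$ = 0.75 are recovered. The function names are hypothetical.

```python
import numpy as np

def sed_threshold(p, n):
    """Entropy threshold H_t for largest-category proportion p (0 < p < 1)
    and n >= 2 classes, assuming the rest is spread evenly over n - 1 classes."""
    return -p * np.log2(p) - (1.0 - p) * np.log2((1.0 - p) / (n - 1))

def high_entropy_mask(entropy, p, n):
    """True for superpixels that should go to the secondary classifier."""
    return np.asarray(entropy) > sed_threshold(p, n)

print(round(sed_threshold(0.75, 15), 4))  # Flevoland, 15 classes: 1.7631
print(round(sed_threshold(0.75, 5), 4))   # San Francisco, 5 classes: 1.3113
```

Because the threshold decreases as $p$ increases (for $p \ge 1/n$), a stricter requirement on the dominant category sends more superpixels to the secondary classifier.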

2.4.2. Complex-Valued Convolutional Neural Network (CV-CNN)

Compared with manually extracting image features, deep learning methods, such as CNN, can deeply mine the joint features between adjacent pixels through the convolution-pooling process. Applications to PolSAR images have shown that the efficiency of this automatic feature extraction classification method is much higher than that of the manual feature extraction classification method, especially in local images with complex terrain, ground object boundaries and noise points [28,49,50]. At present, quite a number of PolSAR image studies use CNN-related classification methods. In order to reduce the complexity of the whole network, we used CV-CNN to reclassify the pixels within the high-entropy superpixels, using the combination of two convolution-pooling layers and two fully connected layers. The specific network hyperparameter settings follow [36].

3. Experiments and Results

3.1. Experimental Setup

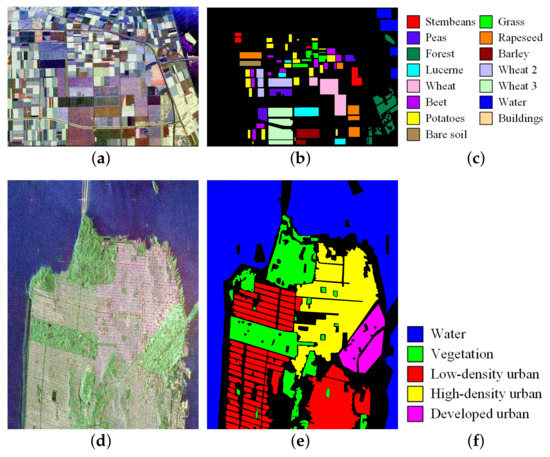

The two measured datasets used in the experiment in this paper were the image of Flevoland in the Netherlands taken by the AIRSAR on an airborne platform at the L band and the image of San Francisco in the United States taken by Radarsat-2 on a spaceborne platform at the C band. These two datasets are often used for PolSAR image classification. Pauli pseudo-color images and ground truth maps of the two datasets are presented in Figure 3. The size of the Flevoland image was 750 × 1024, and there were 15 different types of ground objects in total. The ground object truth of the image was drawn with reference to [36]; the size of the San Francisco image was 1800 × 1380, and there were five different types of ground objects. The ground truth value of the image was drawn with reference to [51]. The black part in the ground truth is the unlabeled area.

Figure 3.

AIRSAR Flevoland and RS-2 San Francisco datasets. (a) Pauli pseudo-color image of Flevoland. (b) Ground truth of Flevoland. (c) Labels of Flevoland. (d) Pauli pseudo-color image of San Francisco. (e) Ground truth of San Francisco. (f) Labels of San Francisco.

Our proposed method was compared with seven PolSAR image classification methods, including SVM, RF and XGB in machine learning and RV-CNN in deep learning. In order to compare the functions of each part of the model proposed in this paper, we also listed the classification results of using only LGBM, only LGBM+SLIC and only CV-CNN. The input of SVM was the nine real and imaginary parts generated from the polarimetric coherence matrix T. XGB and RF were also combined with SLIC, and their input was the same as that used for the proposed method. The input of RV-CNN was a tensor with a size of 12 × 12 × 9 generated from the polarimetric coherence matrix T.

In the experiments, 9% of the labeled pixels were selected as the training set and 1% as the validation set. We used the whole image as the test set, and used the overall accuracy (OA) and kappa coefficient to evaluate the classification performance. The experiments were implemented in Python and run on an Intel i7-11700 CPU.
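For reference, the two evaluation metrics can be computed from a confusion matrix as in the sketch below. The function name is hypothetical, the labels are assumed to be integers in `0 .. n_classes-1`, and unlabeled pixels are assumed to have been removed beforehand.

```python
import numpy as np

def oa_and_kappa(y_true, y_pred, n_classes):
    """Overall accuracy and Cohen's kappa from integer label arrays."""
    y_true = np.asarray(y_true).ravel()
    y_pred = np.asarray(y_pred).ravel()
    cm = np.zeros((n_classes, n_classes), dtype=float)
    np.add.at(cm, (y_true, y_pred), 1.0)     # rows: truth, columns: prediction
    total = cm.sum()
    oa = np.trace(cm) / total                # observed agreement
    # Agreement expected by chance from the row and column marginals
    pe = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / total ** 2
    kappa = (oa - pe) / (1.0 - pe)
    return oa, kappa
```

The kappa coefficient discounts the agreement that would be expected by chance, so it is more informative than OA when the class sizes are strongly unbalanced.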

3.2. Classification Results of Flevoland Dataset

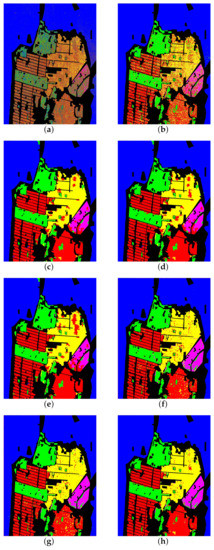

In the Flevoland dataset, the number of superpixels was set to 592, the number of base classifiers of the LGBM was set to 600, the maximum depth of base classifiers was set to 9, and the learning rate was set to 0.15. All the above hyperparameters were selected through 10-fold cross-validation. CV-CNN used the 12 × 12 local image around a single pixel to predict the category of that pixel, so each input was a 12 × 12 × 6 tensor. Zero padding was applied to the border of the whole image. To prevent the network from overfitting, we set the learning rate of CV-CNN to 0.5 and the number of iterations to 50. According to (20), the threshold $H_t$ for SED is negatively correlated with the largest-category probability $p$. $p$ was set to 0.75, and its corresponding $H_t$ = 1.7631, which will be discussed in detail in the next section. The classification results of the Flevoland dataset are shown in Table 2, and the classification output images are shown in Figure 4.
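The patch-based input of the secondary classifier can be sketched as follows. The helper names are hypothetical, and because a 12 × 12 window has no exact center, placing the target pixel at patch index (6, 6) is an assumption of this sketch.

```python
import numpy as np

def pad_image(image, size=12):
    """Zero-pad an (H, W, C) image so every pixel has a size x size window."""
    before = size // 2
    after = size - before - 1
    return np.pad(image, ((before, after), (before, after), (0, 0)),
                  mode="constant")

def patch_at(padded, row, col, size=12):
    """Patch for original pixel (row, col); that pixel sits at
    index (size // 2, size // 2) of the returned patch."""
    return padded[row:row + size, col:col + size, :]
```

Padding once and slicing per pixel avoids copying the image for every patch; only the pixels inside high-entropy superpixels need to be extracted and passed to the network.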

Table 2.

Accuracy results of Flevoland Dataset.

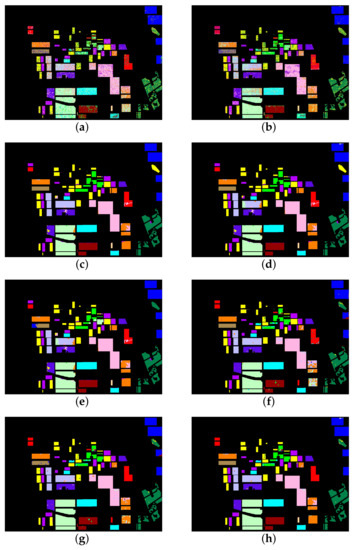

Figure 4.

Classification results of Flevoland dataset overlaid with ground truth. (a) SVM. (b) LGBM. (c) LGBM+SLIC. (d) XGB+SLIC. (e) RF+SLIC. (f) RV-CNN. (g) CV-CNN. (h) Proposed method.

Due to the limitations of manually extracted features, SVM and LGBM, which are not combined with superpixel segmentation, were seriously affected by speckle noise, resulting in their low classification accuracy, as shown in Figure 4a,b. LGBM+SLIC, XGB+SLIC and RF+SLIC recognized wheat, grass and water well because superpixel segmentation works well on these categories. The OA of these three methods was close, as seen in Figure 4c–e. Because RV-CNN only used the amplitude of the polarization coherence matrix as input, its overall performance was not as good as that of the classification methods based on superpixels, as seen in Figure 4f. Because the superpixel-based methods were limited by the performance of the classifier and the inaccurate edge segmentation of the features, CV-CNN performed better than the above methods, as shown in Figure 4g. However, it was clear that RV-CNN and CV-CNN could not identify buildings. This was due to the combination of few building samples and few iterations. This phenomenon can be avoided by increasing the iterations from 50 to 100, with which the OA of CV-CNN increased to 98.01% and the OA of our proposed method increased to 98.48%; nevertheless, the training time and the possibility of overfitting increased accordingly.

As seen in Table 2, our proposed method achieved the best classification results for a total of seven types of ground objects. This method performed best for peas, bare soil and barley, which were accurately classified 1.02%, 0.72% and 1.96% more often by our method than the second most accurate one. In other classifications, such as grape and rapeseed, the accuracy of our proposed method was always higher than that of CV-CNN. Improving the performance of CV-CNN would also improve the accuracy of our proposed method. This is related to the secondary classification module using the feature of CV-CNN reclassification. The proposed method also achieved the best OA and kappa coefficients. The OA was 1.2% higher than that of the CV-CNN with the highest score among the other seven classification methods, and the kappa coefficient reached 0.9709. This is because this method improved the accuracy of edge classification of the superpixels. Therefore, in the Flevoland image classification experiment, our proposed method achieved the optimal classification results.

3.3. Classification Results of San Francisco Dataset

In the San Francisco dataset, the number of superpixels was set to 2148, the number of base classifiers was set to 1000, and the rest of the superparameter designs were the same as those described in Section 3.2. The classification results for the San Francisco dataset are shown in Table 3, and the classification image is shown in Figure 5.

Table 3.

Accuracy results of San Francisco Dataset.

Figure 5.

Classification results of San Francisco dataset overlaid with ground truth. (a) SVM. (b) LGBM. (c) LGBM+SLIC. (d) XGB+SLIC. (e) RF+SLIC. (f) RV-CNN. (g) CV-CNN. (h) Proposed method.

According to Table 3, our proposed method achieved the best classification results for water, vegetation, low-density urban and slashed urban, and nearly had the highest accuracy for high-density urban. It can be seen from the comparison of the subgraphs in Figure 5 that this method further avoided the influence of speckle noise.

The OA of this method reached 97.52%, which is 1.1% higher than that of CV-CNN, and the kappa coefficient reached 0.9643, which is also higher than that achieved by the other seven classification methods. In summary, in the San Francisco image classification experiment, our proposed method achieved the best results. In fact, the performance of the model was affected by several parameters, such as the number of superpixels k and the superpixel entropy threshold $H_t$. The parameter settings used for this dataset were not necessarily those that give the best possible performance. We will discuss this in detail in the next section.

4. Discussion

The performance of our proposed method was affected by the number of superpixels k and the superpixel entropy threshold $H_t$. In this section, we first carefully observe the impact of SED on the edge segmentation of ground objects, and then discuss the performance changes in the model with different hyperparameters.

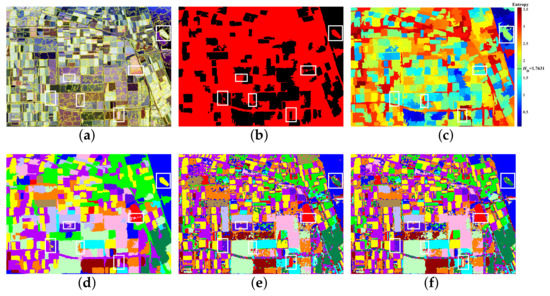

4.1. Classification Effect of SED

Focusing on the Flevoland dataset of Section 3.2, Figure 6 shows the performance of the superpixel entropy. In Figure 6d–f, consider the forest area in the green box. LGBM+SLIC classified this region incorrectly, whereas CV-CNN classified it correctly. Through SED, our proposed method used the results of CV-CNN and obtained the correct classification. In contrast, for the building area in the bottom-right box, CV-CNN did not classify the region correctly, but SED judged that the superpixel quality of the area was high enough and kept the correct classification of LGBM+SLIC as the final result. In the box on the left side of the image, the terrain edge classification accuracy for peas and wheat was also significantly improved, indicating that SED is helpful for the classification of feature edges.

Figure 6.

Performance of the superpixel entropy when k = 592, $p$ = 0.75, $H_t$ = 1.7631. (a) Superpixel on Pauli pseudo-color image. (b) Superpixel distinguished by SED. Red color represents superpixels reclassified using CV-CNN. (c) The heat map of superpixel entropy, which also reflects the degree of evidence conflict in the superpixel. (d) The full-image result of LGBM+SLIC. (e) The full-image result of CV-CNN. (f) The full-image result of our proposed method.

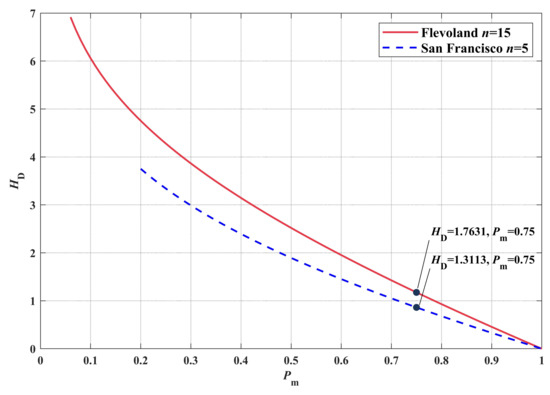

4.2. Configuration of SED

In the experiments, we manually specified $p$ = 0.75, which means that the largest category is required to account for at least 75% of the pixels in a single superpixel. We further explore the relationship between $p$ and $H_t$ in Figure 7.

Figure 7.

$H_t$-$p$ curve.

The superpixel entropy thresholds were $H_t$ = 1.7631 for Flevoland and $H_t$ = 1.3113 for San Francisco. If the entropy $H$ of a superpixel was higher than this value, the superpixel would be reclassified by CV-CNN. However, this is not necessarily the value that gives the optimal accuracy, so we investigated the influence of $p$ and k on OA.

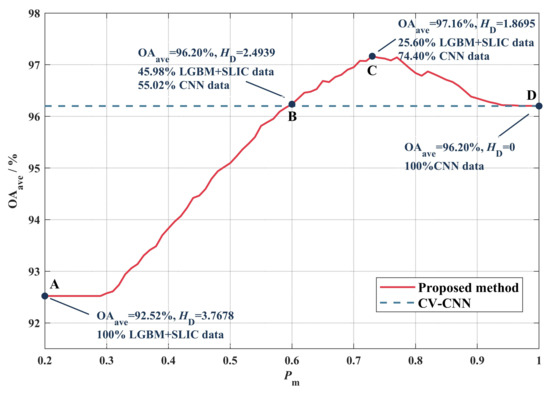

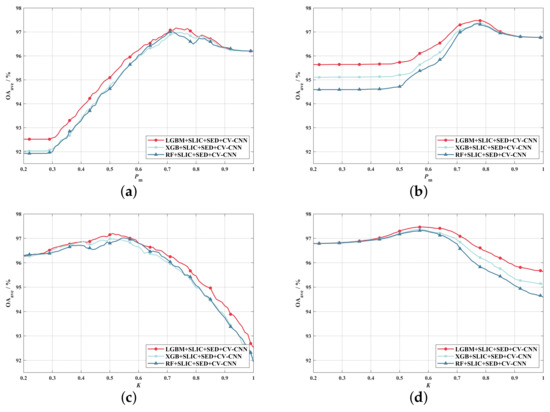

Keeping the models of LGBM+SLIC and CV-CNN unchanged, we considered the changes in OA under the conditions of different numbers of superpixels, k, and superpixel entropy thresholds, $H_t$. We set the initial placement step of each superpixel to 34–57, and the corresponding number of superpixels to 244–750, which is a moderate range of computing resource consumption. The threshold $H_t$ for SED was negatively correlated with $p$. We varied $p$ from 0 to 1 in steps of 0.01 to change $H_t$. After 2400 experiments, Figure 8 was obtained.

Figure 8.

OA-$p$ curve. The curve shows the average OA over the different numbers of superpixels when the number of superpixels, k, ranged from 244 to 750. The percentage of data represents the proportion of LGBM+SLIC and CV-CNN data used by our proposed method in the test set.

When $p$ = 0 or 1, point A and point D on the curve represent the OA when using LGBM+SLIC or CV-CNN, respectively, for all data in the proposed model. Point C indicates that the maximum OA was obtained when $p$ = 0.73. At this point, OA = 97.16%, which is 0.94% higher than that of CV-CNN. Point B indicates that when $p$ = 0.60, the OA of our proposed method was the same as that of CV-CNN, but only 55.02% of the data were tested using CV-CNN. Because LGBM+SLIC is fast, our proposed method could greatly shorten the test time needed to process the whole image, which was 33% shorter than that of CV-CNN at the same accuracy of 96.20%, and the increase in the training time cost was almost negligible. The comparison of testing time cost is shown in Table 4.

Table 4.

Comparison of time cost.

In addition, building on Figure 8, we replaced the LGBM in our primary classification model with XGB and RF. The average OA curves are shown in Figure 9a. We performed the same operation on the San Francisco dataset, as shown in Figure 9b. For the San Francisco dataset, the initial placement step of each superpixel was 32–61, and the corresponding number of superpixels was 690–2425. In terms of the overall trend, the largest improvement in OA for the two images was concentrated at largest-category probabilities of 0.7–0.8. According to the experimental results, our manually chosen value of 0.75 is therefore reasonable.

Figure 9.

Average OA curves when LGBM, XGB and RF were used as the primary classifier. (a) OA versus largest-category probability for Flevoland. (b) OA versus largest-category probability for San Francisco. (c) OA-K curve for Flevoland. (d) OA-K curve for San Francisco.

We also tested a different form of the superpixel entropy threshold, based on the maximum superpixel entropy over the whole image and a threshold coefficient K:

Using (22) instead of (21), we classified the two datasets following the above procedure and plotted the OA-K curves in Figure 9c,d.

As seen in Figure 9, there was a difference in accuracy between the curves, which is related to the performance of the classifier itself. Most notably, whether the largest-category probability or K was used to set the entropy threshold, the maximum OA of our proposed method was always higher than the OA of the SLIC and CV-CNN methods for these two datasets. This shows that the experimental results in Section 3 are not an isolated phenomenon and that the SED and hybrid model proposed in this paper are effective. In the current model, however, the number of superpixels and the entropy threshold still need to be specified manually. The consistent appearance of a maximum suggests that SED could be incorporated into an end-to-end model in which these parameters are learned automatically through the loss function, which is the direction of our further research.

This paper has some limitations. One limitation is that SED cannot help when the proportion of the largest category in a superpixel is greater than the threshold but the class corresponding to that category is wrong. Because such a superpixel is labeled by pixel-by-pixel voting alone, the classification errors of the primary classifier still reduce the OA of the superpixel classification. Preventing this situation places higher demands on the performance of the primary classifier.

The other limitation is that our proposed method performed well on low-resolution images, but its effect on high-resolution images remains to be verified. In future work, we plan to explore the application of SED to high-resolution PolSAR images. Because the information in high-resolution images is huge and complex, it is difficult to classify them directly at the pixel level, so patch-based classification algorithms are preferred [27]. SED combines superpixel classification with a patch-based algorithm, which makes it possible to transfer it to high-resolution images. Moreover, most of the current methods used for high-resolution PolSAR images have a complex network structure, a large number of parameters and a long prediction time [52]. Our proposed method simplified the model and reduced time consumption while remaining effective, so it also has great application potential for high-resolution images.

5. Conclusions

In this paper, a superpixel entropy discrimination method was proposed, in which information entropy is introduced to assess the classification quality of superpixels. This paper also proposed a hybrid model based on SED. Firstly, an LGBM was used to filter large-dimensional features, and SLIC was combined with it to quickly obtain superpixel classification results. After the classification quality of the superpixels was determined by SED, CV-CNN was used to reclassify the low-quality superpixels. The results show that our proposed method can improve the classification accuracy and that SED can effectively evaluate the quality of superpixel classification. In the future, we have two main research directions. One is to add the superpixel entropy to an end-to-end process, and the other is to explore the application of our proposed method to high-resolution PolSAR images. Simplifying the model and reducing time consumption while maintaining performance is a major challenge, and our proposed method is promising for both directions.

Author Contributions

Conceptualization, J.S., L.G. and Y.W.; methodology, L.G. and Y.W.; software, L.G. and Y.W.; validation, L.G.; formal analysis, L.G.; investigation, Y.W.; resources, J.S.; data curation, L.G. and Y.W.; writing—original draft preparation, L.G.; writing—review and editing, J.S.; visualization, L.G.; supervision, J.S.; project administration, J.S.; funding acquisition, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to thank NASA/Jet Propulsion Laboratory and CSA&MDA for providing the PolSAR data used in this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pierce, L.E.; Ulaby, F.T.; Sarabandi, K.; Dobson, M.C. Knowledge-based classification of polarimetric SAR images. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1081–1086.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Zhai, W.; Shen, H.; Huang, C.; Pei, W. Fusion of polarimetric and texture information for urban building extraction from fully polarimetric SAR imagery. Remote Sens. Lett. 2016, 7, 31–40.

- Quan, S.; Xiong, B.; Xiang, D.; Zhao, L.; Zhang, S.; Kuang, G. Eigenvalue-based urban area extraction using polarimetric SAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 458–471.

- Xiao, D.; Wang, Z.; Wu, Y.; Gao, X.; Sun, X. Terrain segmentation in polarimetric SAR images using dual-attention fusion network. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Ren, X.; Malik, J. Learning a classification model for segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; Volume 2, p. 10.

- Yan, J.; Yu, Y.; Zhu, X.; Lei, Z.; Li, S.Z. Object detection by labeling superpixels. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5107–5116.

- Yeo, D.; Son, J.; Han, B.; Hee Han, J. Superpixel-based tracking-by-segmentation using Markov chains. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1812–1821.

- Sun, W.; Liao, Q.; Xue, J.H.; Zhou, F. SPSIM: A superpixel-based similarity index for full-reference image quality assessment. IEEE Trans. Image Process. 2018, 27, 4232–4244.

- Gu, F.; Zhang, H.; Wang, C. A classification method for PolSAR images using SLIC superpixel segmentation and deep convolution neural network. In Proceedings of the IGARSS 2018 - 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 6671–6674.

- Gao, H.; Wang, C.; Xiang, D.; Ye, J.; Wang, G. TSPol-ASLIC: Adaptive superpixel generation with local iterative clustering for time-series quad-and dual-polarization SAR data. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–15.

- Hou, B.; Yang, C.; Ren, B.; Jiao, L. Decomposition-feature-iterative-clustering-based superpixel segmentation for PolSAR image classification. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1239–1243.

- Lee, J.S.; Grunes, M.R.; Kwok, R. Classification of multi-look polarimetric SAR imagery based on complex Wishart distribution. Int. J. Remote Sens. 1994, 15, 2299–2311.

- Bi, H.; Xu, L.; Cao, X.; Xue, Y.; Xu, Z. Polarimetric SAR image semantic segmentation with 3D discrete wavelet transform and Markov random field. IEEE Trans. Image Process. 2020, 29, 6601–6614.

- Song, W.; Li, M.; Zhang, P.; Wu, Y.; Tan, X.; An, L. Mixture WGΓ-MRF Model for PolSAR Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 905–920.

- Liu, C.; Liao, W.; Li, H.C.; Wang, R.; Philips, W. Semi-supervised classification of polarimetric SAR images using Markov random field and two-level Wishart mixture model. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 990–993.

- Ahishali, M.; Kiranyaz, S.; Ince, T.; Gabbouj, M. Classification of polarimetric SAR images using compact convolutional neural networks. GIScience Remote Sens. 2021, 58, 28–47.

- Fang, Y.; Zhang, H.; Mao, Q.; Li, Z. Land cover classification with GF-3 polarimetric synthetic aperture radar data by random forest classifier and fast super-pixel segmentation. Sensors 2018, 18, 2014.

- Dong, H.; Xu, X.; Wang, L.; Pu, F. Gaofen-3 PolSAR image classification via XGBoost and polarimetric spatial information. Sensors 2018, 18, 611.

- He, Z.; Shen, Y.; Zhang, M.; Wang, Q.; Wang, Y.; Yu, R. Spectral-spatial hyperspectral image classification via SVM and superpixel segmentation. In Proceedings of the 2014 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Montevideo, Uruguay, 12–15 May 2014; pp. 422–427.

- Cloude, S.R.; Pottier, E. A review of target decomposition theorems in radar polarimetry. IEEE Trans. Geosci. Remote Sens. 1996, 34, 498–518.

- Aghababaee, H.; Sahebi, M.R. Incoherent target scattering decomposition of polarimetric SAR data based on vector model roll-invariant parameters. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4392–4401.

- Freeman, A.; Durden, S.L. Three-component scattering model to describe polarimetric SAR data. In Radar Polarimetry; SPIE: Bellingham, WA, USA, 1993; Volume 1748, pp. 213–224.

- Yamaguchi, Y.; Moriyama, T.; Ishido, M.; Yamada, H. Four-component scattering model for polarimetric SAR image decomposition. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1699–1706.

- Wu, Q.; Wen, Z.; Wang, Y.; Luo, Y.; Li, H.; Chen, Q. A Statistical-Spatial Feature Learning Network for PolSAR Image Classification. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

- Yang, C.; Hou, B.; Ren, B.; Hu, Y.; Jiao, L. CNN-based polarimetric decomposition feature selection for PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8796–8812.

- Parikh, H.; Patel, S.; Patel, V. Classification of SAR and PolSAR images using deep learning: A review. Int. J. Image Data Fusion 2020, 11, 1–32.

- Zhou, Y.; Wang, H.; Xu, F.; Jin, Y.Q. Polarimetric SAR image classification using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1935–1939.

- Zhang, Z.; Wang, H.; Xu, F.; Jin, Y.Q. Complex-valued convolutional neural network and its application in polarimetric SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7177–7188.

- Xie, W.; Ma, G.; Zhao, F.; Liu, H.; Zhang, L. PolSAR image classification via a novel semi-supervised recurrent complex-valued convolution neural network. Neurocomputing 2020, 388, 255–268.

- Dong, H.; Zhang, L.; Lu, D.; Zou, B. Attention-based polarimetric feature selection convolutional network for PolSAR image classification. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Zhang, P.; Tan, X.; Li, B.; Jiang, Y.; Song, W.; Li, M.; Wu, Y. PolSAR image classification using hybrid conditional random fields model based on complex-valued 3-D CNN. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 1713–1730.

- Cheng, J.; Zhang, F.; Xiang, D.; Yin, Q.; Zhou, Y.; Wang, W. PolSAR image land cover classification based on hierarchical capsule network. Remote Sens. 2021, 13, 3132.

- Shakya, A.; Biswas, M.; Pal, M. Fusion and classification of multi-temporal SAR and optical imagery using convolutional neural network. Int. J. Image Data Fusion 2022, 13, 113–135.

- Hou, B.; Kou, H.; Jiao, L. Classification of polarimetric SAR images using multilayer autoencoders and superpixels. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3072–3081.

- Wang, L.; Hong, H.; Zhang, Y.; Wu, J.; Ma, L.; Zhu, Y. PolSAR-SSN: An End-to-End Superpixel Sampling Network for PolSAR Image Classification. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Tirandaz, Z.; Akbarizadeh, G.; Kaabi, H. PolSAR image segmentation based on feature extraction and data compression using weighted neighborhood filter bank and hidden Markov random field-expectation maximization. Measurement 2020, 153, 107432.

- Zhang, L.; Han, C.; Cheng, Y. Improved SLIC superpixel generation algorithm and its application in polarimetric SAR images classification. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 4578–4581.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 2017, 30, 1–9.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 6, 610–621.

- Krogager, E. New decomposition of the radar target scattering matrix. Electron. Lett. 1990, 18, 1525–1527.

- Demirci, S.; Kirik, O.; Ozdemir, C. Interpretation and analysis of target scattering from fully-polarized ISAR images using Pauli decomposition scheme for target recognition. IEEE Access 2020, 8, 155926–155938.

- Abou Omar, K.B. XGBoost and LGBM for Porto Seguro’s Kaggle challenge: A comparison. Prepr. Semester Proj. 2018.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Ustuner, M.; Balik Sanli, F. Polarimetric target decompositions and light gradient boosting machine for crop classification: A comparative evaluation. ISPRS Int. J. Geo-Inf. 2019, 8, 97.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Weissgerber, F.; Colin-Koeniguer, E.; Trouvé, N.; Nicolas, J.M. A temporal estimation of entropy and its comparison with spatial estimations on PolSAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3809–3820.

- Lin, H.; Wang, H.; Wang, J.; Yin, J.; Yang, J. A novel ship detection method via generalized polarization relative entropy for PolSAR images. IEEE Geosci. Remote Sens. Lett. 2020, 19, 1–5.

- Liu, X.; Jiao, L.; Tang, X.; Sun, Q.; Zhang, D. Polarimetric convolutional network for PolSAR image classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3040–3054.

- Yu, L.; Zeng, Z.; Liu, A.; Xie, X.; Wang, H.; Xu, F.; Hong, W. A Lightweight Complex-Valued DeepLabv3+ for Semantic Segmentation of PolSAR Image. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 930–943.

- Zuo, Y.; Guo, J.; Zhang, Y.; Lei, B.; Hu, Y.; Wang, M. A Deep Vector Quantization Clustering Method for Polarimetric SAR Images. Remote Sens. 2021, 13, 2127.

- Bai, Y.; Zhao, Y.; Shao, Y.; Zhang, X.; Yuan, X. Deep learning in different remote sensing image categories and applications: Status and prospects. Int. J. Remote Sens. 2022, 43, 1800–1847.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).