Towards Improved Unmanned Aerial Vehicle Edge Intelligence: A Road Infrastructure Monitoring Case Study

Abstract

1. Introduction

2. Related Work

2.1. UAV-Based Road Corridor Monitoring

2.1.1. Degradation Detection

2.1.2. Situational Awareness

2.1.3. Scene Understanding

2.2. Real-Time UAV Monitoring Systems

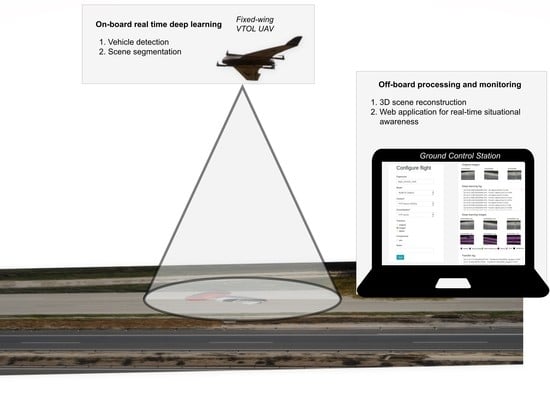

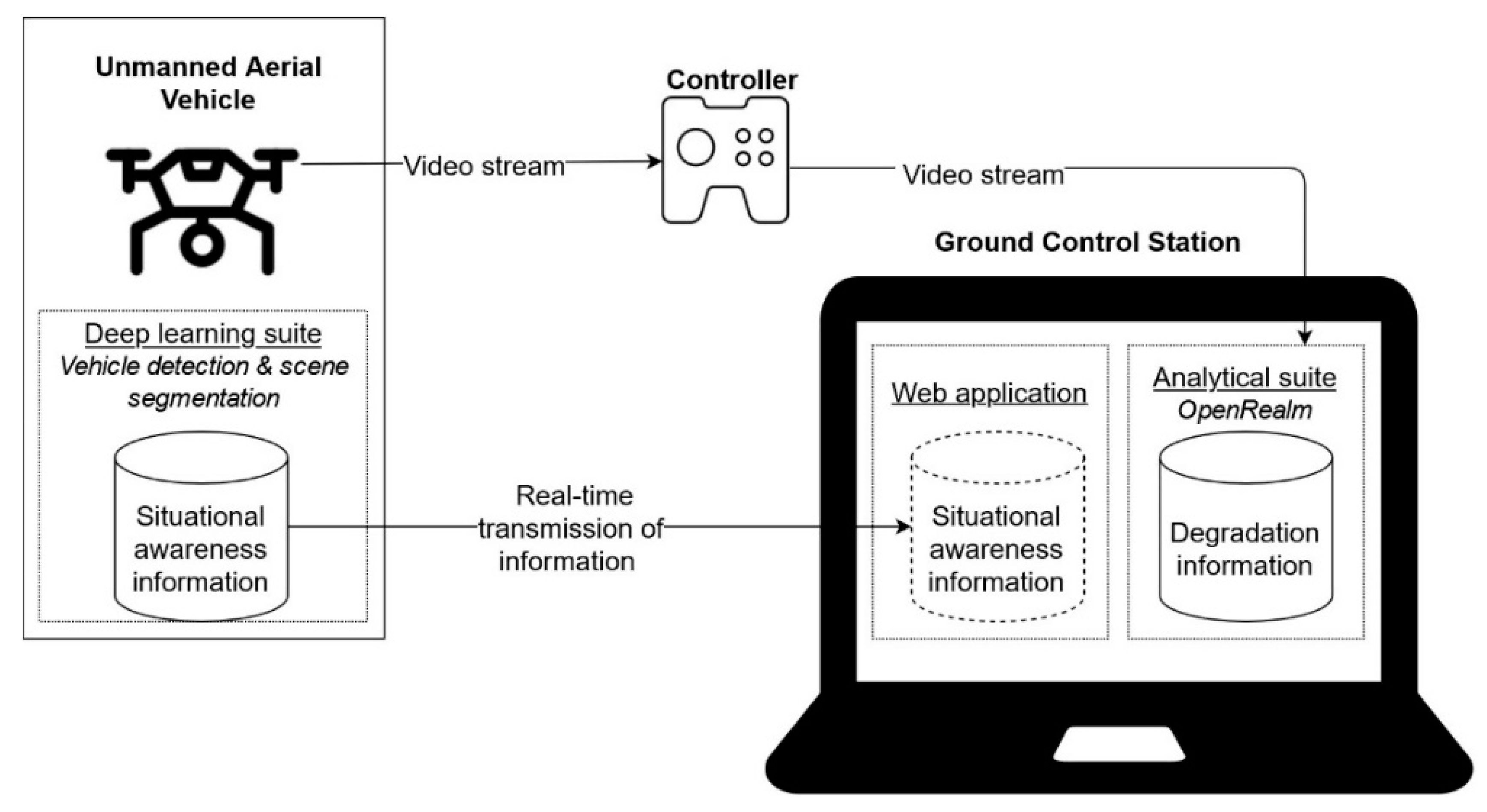

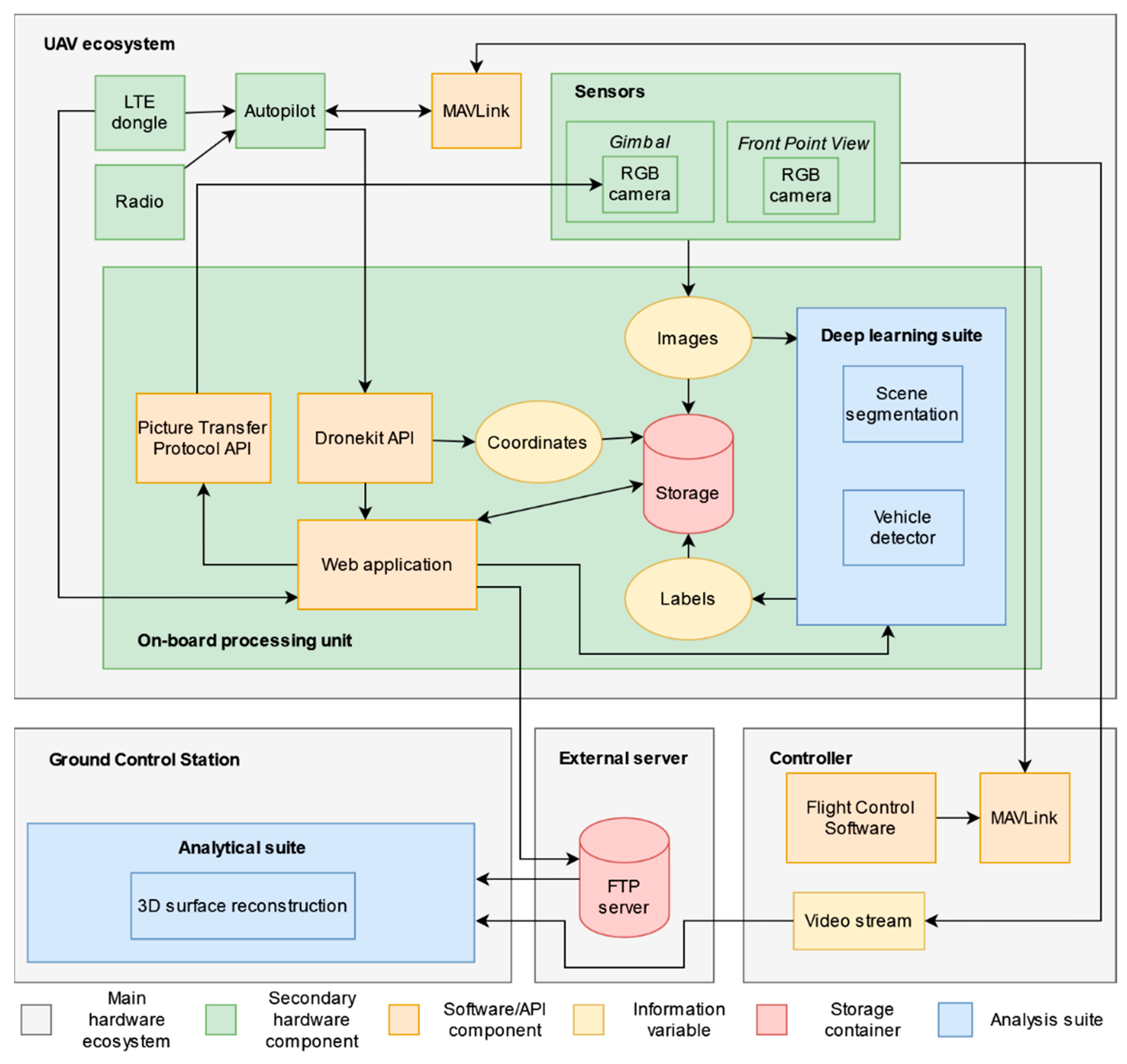

3. Real-Time UAV Monitoring System

3.1. System Design Considerations

3.2. Fixed-Wing VTOL UAV: The DeltaQuad Pro

3.3. Hardware Ecosystem

3.4. Analytical Suite

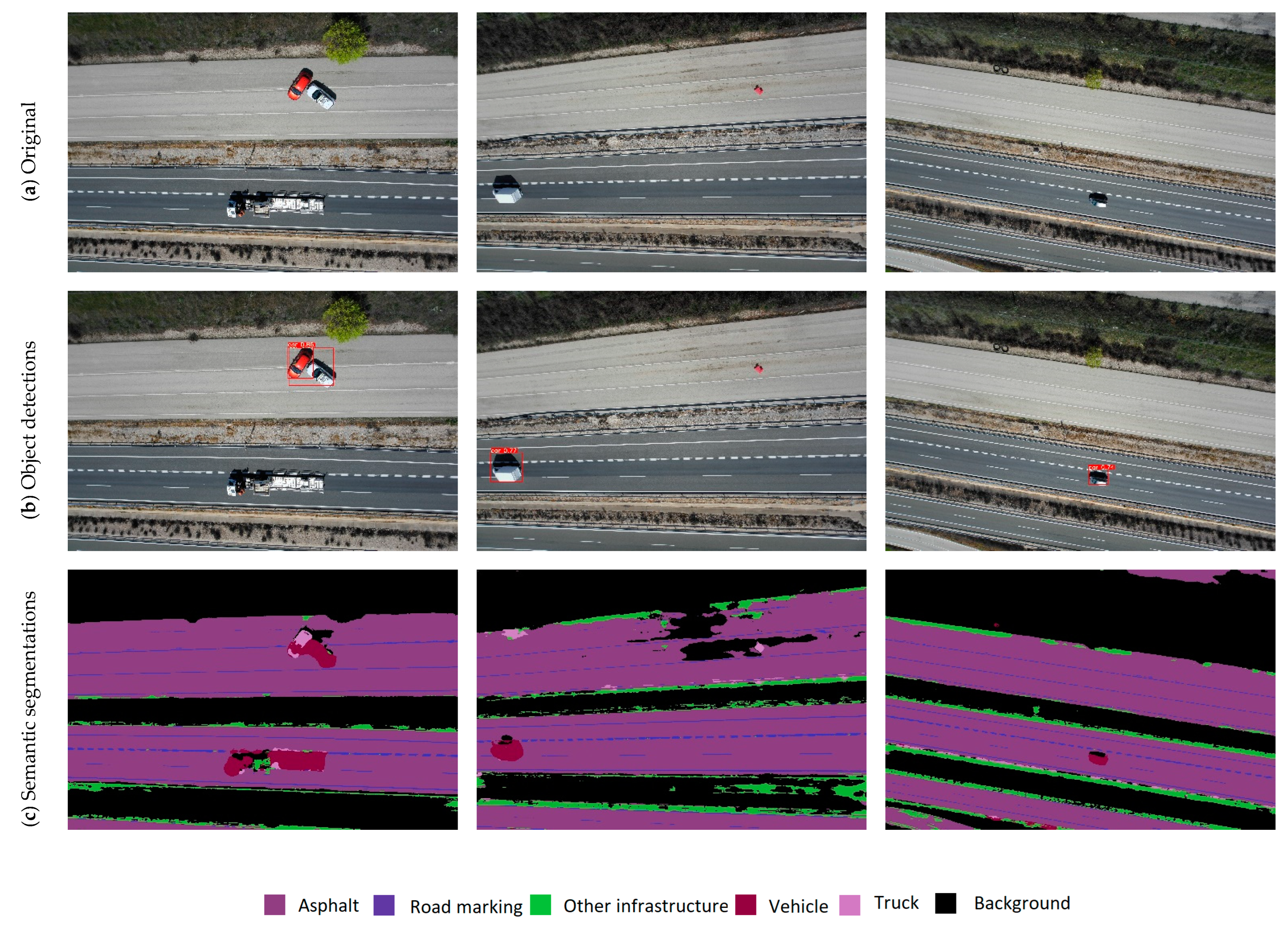

3.5. Deep Learning Suite

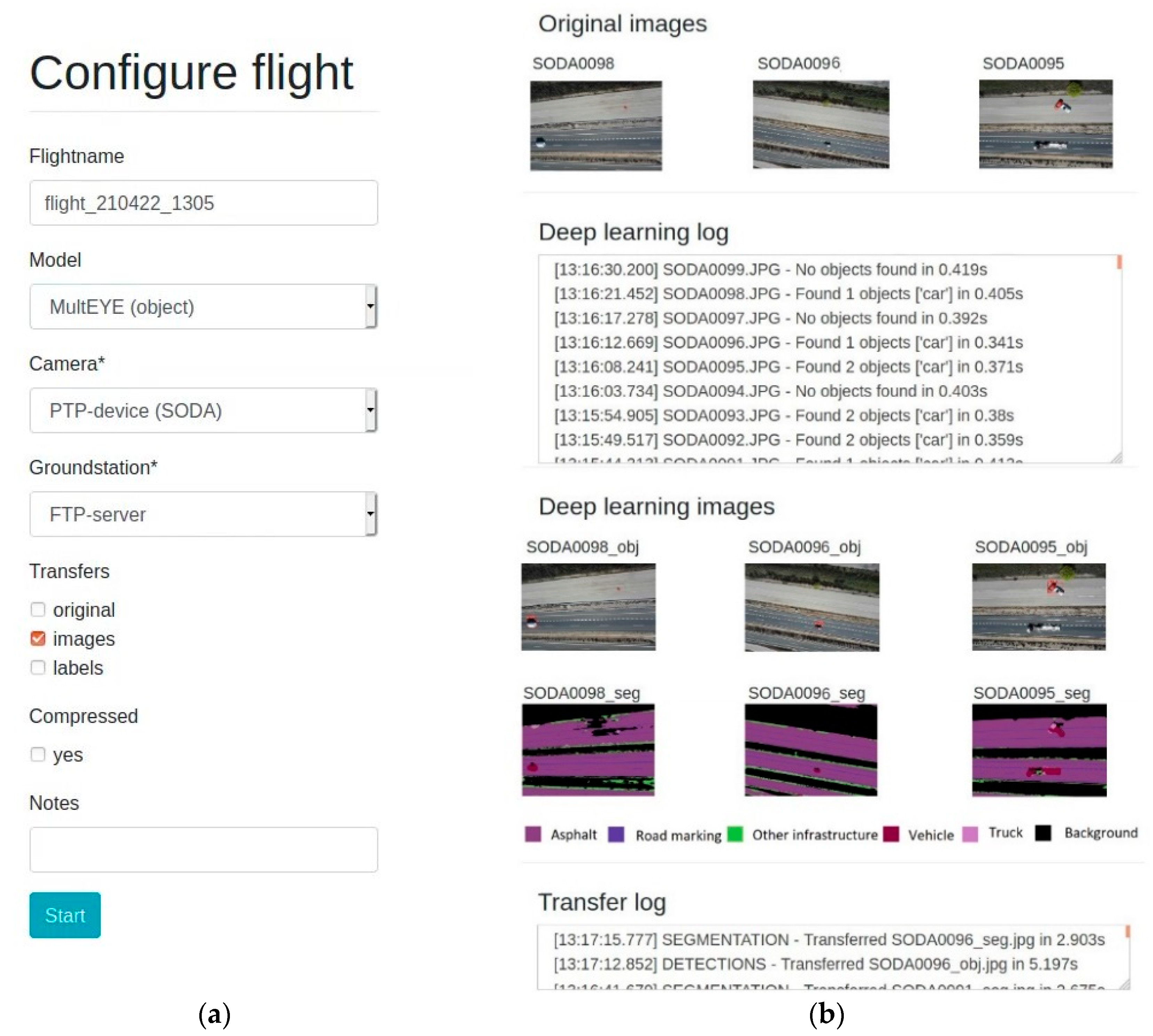

3.6. Web Application

- (1) The flight organization (“Flightname”);
- (2) The deep learning suite, e.g., which models to execute (“Model”);
- (3) The on-board camera and its associated capturing protocol (“Camera”);
- (4) The name of the GCS (“Groundstation”);
- (5) Which information variables should be transmitted mid-flight (“Transfer”);
- (6) Whether these variables should be compressed (“Compressed”); or
- (7) Ancillary information (“Notes”).
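The seven settings listed above can be pictured as a single configuration record. The following is a minimal sketch in Python, not the authors' implementation: the class `FlightConfig`, the helper `to_payload`, the field types, and all example values are hypothetical, and only the seven labels (“Flightname”, “Model”, “Camera”, “Groundstation”, “Transfer”, “Compressed”, “Notes”) come from the list.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class FlightConfig:
    """Hypothetical container for the seven web-application settings."""
    flightname: str          # (1) flight organization
    model: List[str]         # (2) deep learning models to execute
    camera: str              # (3) camera and capturing protocol
    groundstation: str       # (4) name of the GCS
    transfer: List[str]      # (5) variables transmitted mid-flight
    compressed: bool = True  # (6) whether those variables are compressed
    notes: str = ""          # (7) ancillary information

    def validate(self) -> None:
        # Assumed rules: a flight needs a name and at least one model.
        if not self.flightname:
            raise ValueError("Flightname must not be empty")
        if not self.model:
            raise ValueError("At least one model must be selected")


def to_payload(cfg: FlightConfig) -> str:
    """Serialize the settings to JSON, keyed by the labels used in the list."""
    cfg.validate()
    labelled = {key.capitalize(): value for key, value in asdict(cfg).items()}
    return json.dumps(labelled, sort_keys=True)


# Illustrative values only; none of them are taken from the paper.
example = FlightConfig(
    flightname="demo-flight",
    model=["vehicle-detection"],
    camera="nadir-1s-interval",
    groundstation="gcs-01",
    transfer=["labels"],
)
payload = to_payload(example)
print(payload)
```

Keeping all settings in one validated record means a flight cannot be submitted with a missing name or an empty model selection; whether the actual web application enforces such rules is not stated in the source.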

4. Experiment and Benchmarks

4.1. Demo: Road Infrastructure Scenario

4.2. Benchmarks

4.2.1. Latency

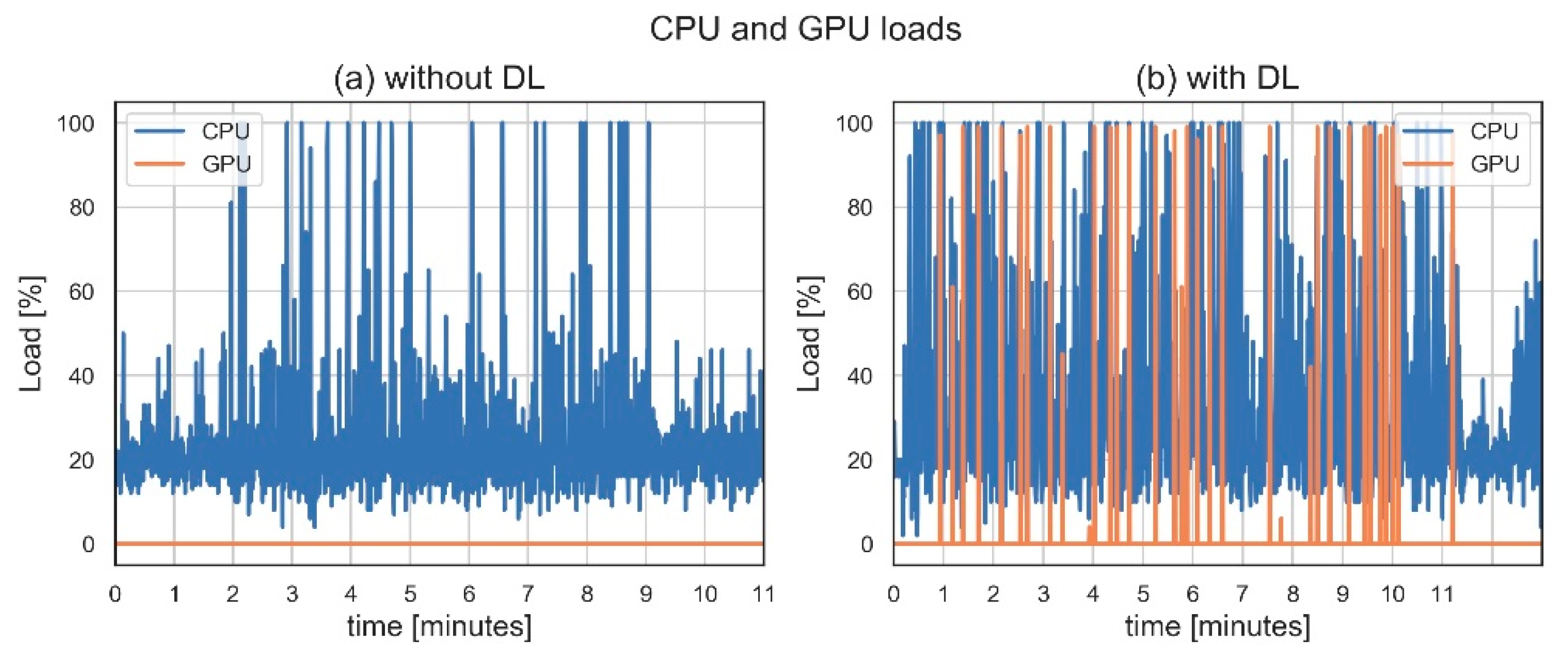

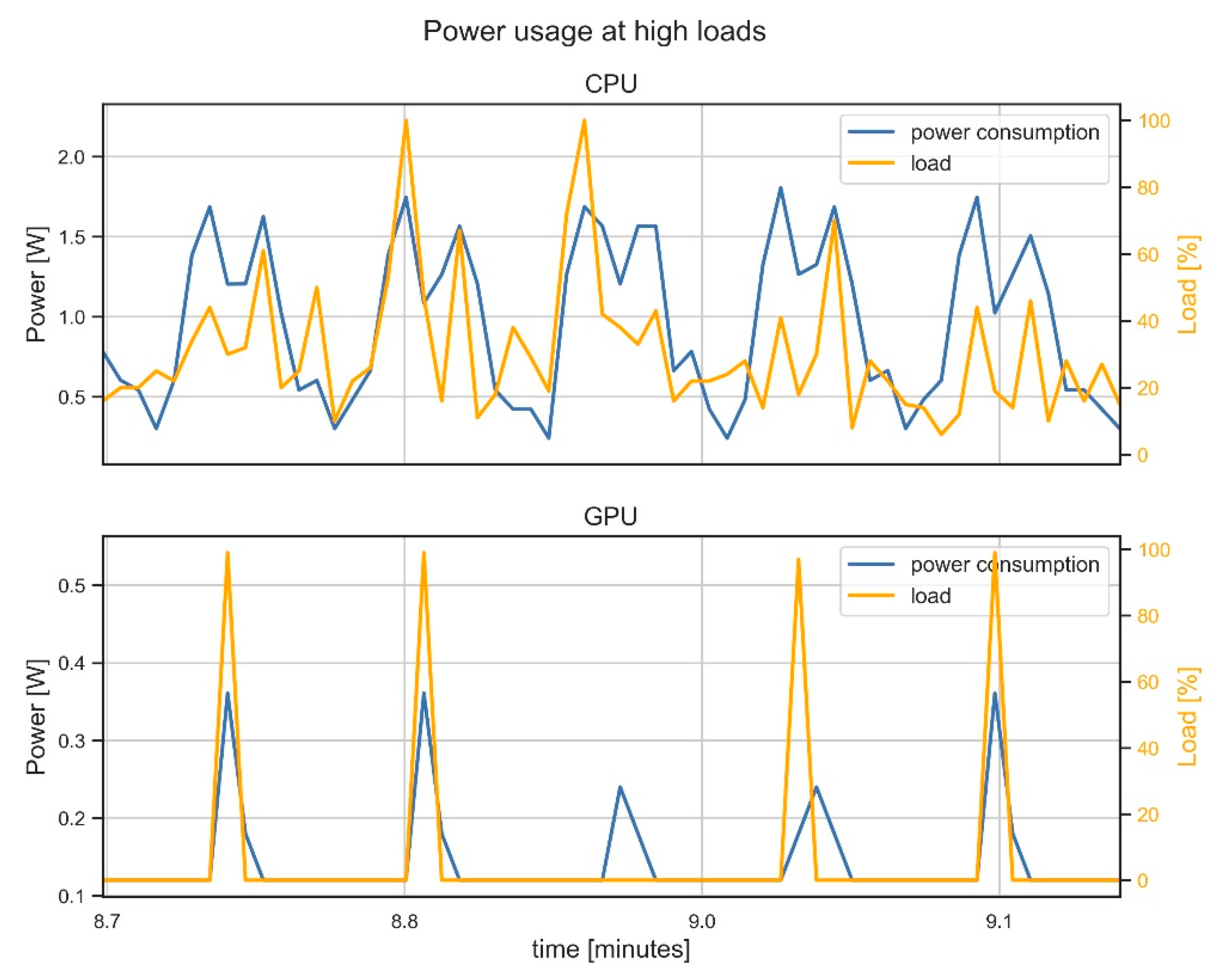

4.2.2. Computational Strain

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | Value |
|---|---|
| UAV type | Hybrid (fixed-wing VTOL) |
| Wingspan | 235 cm |
| Height | n.a. |
| Camera | S.O.D.A. (20 megapixel) |
| Processing unit | NVIDIA Jetson TX2 |
| Long-Term Evolution (LTE) | 4G LTE dongle |
| Min–max speed | 12–28 m/s (fixed-wing) |
| Maximum flight time | 2 h (fixed-wing) |
| Maximum Take-Off Weight (MTOW) | 6.2 kg |
| Maximum wind speed | 33 km/h |
| Weather | Drizzle |
| Autopilot | PX4 Professional autopilot |
| Communication protocol | MAVLink |
| Mission planner | QGroundControl |
| Safety protocol | PX4 safety operations |

| Item | Size [Mb] | Avg. Download Time [s] | Avg. Inference Time [s] | Avg. Transfer Time over WiFi [s] | Avg. Transfer Time over 4G [s] |
|---|---|---|---|---|---|
| Original | 4.35 | 1.09 | 0.343 | 1.496 | 6.043 |
| Compressed | 0.0029 | - | - | - | 1.557 |
| Labels | 0.0010 | - | - | - | 1.343 |
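To make the figures in the latency table easier to compare, the short Python sketch below derives two ratios from them: how much smaller each item is than the original image, and how much faster it transfers over 4G. The numbers are copied from the table; the dictionary layout and the function name `ratios` are illustrative assumptions.

```python
# Sizes are in the table's unit; times are in seconds. Values copied from the table.
ITEMS = {
    "Original":   {"size": 4.35,   "transfer_4g_s": 6.043},
    "Compressed": {"size": 0.0029, "transfer_4g_s": 1.557},
    "Labels":     {"size": 0.0010, "transfer_4g_s": 1.343},
}


def ratios(baseline="Original"):
    """Return size-reduction and 4G speed-up factors relative to the baseline."""
    base = ITEMS[baseline]
    result = {}
    for name, item in ITEMS.items():
        result[name] = {
            "size_reduction": base["size"] / item["size"],
            "speedup_4g": base["transfer_4g_s"] / item["transfer_4g_s"],
        }
    return result


for name, r in ratios().items():
    print(f"{name:<10} size reduction x{r['size_reduction']:.0f}, "
          f"4G speed-up x{r['speedup_4g']:.2f}")
```

With the tabulated values, the compressed item is about 1500 times smaller than the original yet transfers only about 3.9 times faster over 4G. This would be consistent with a fixed per-transfer overhead on the 4G link, although the table alone does not establish the cause.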

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tilon, S.; Nex, F.; Vosselman, G.; Sevilla de la Llave, I.; Kerle, N. Towards Improved Unmanned Aerial Vehicle Edge Intelligence: A Road Infrastructure Monitoring Case Study. Remote Sens. 2022, 14, 4008. https://doi.org/10.3390/rs14164008
