A Novel Ground-Based Cloud Image Segmentation Method Based on a Multibranch Asymmetric Convolution Module and Attention Mechanism

Abstract

1. Introduction

- A multibranch asymmetric convolution module (MACM) is proposed to help the network capture contextual information over a larger area and to adaptively adjust the feature channel weights;

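- MA-SegCloud outperforms the compared state-of-the-art cloud image segmentation methods in accuracy and MIoU, reaching 0.969 and 0.940, respectively, on daytime, nighttime, and combined images.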

2. Methods

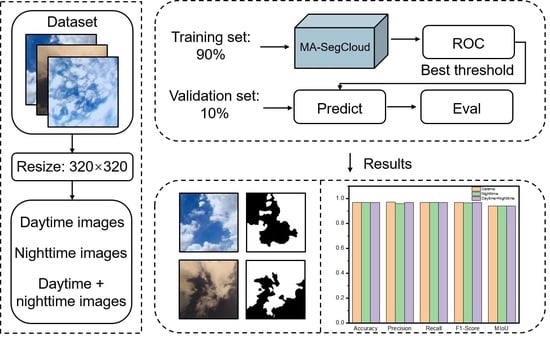

2.1. Overall Architecture of MA-SegCloud

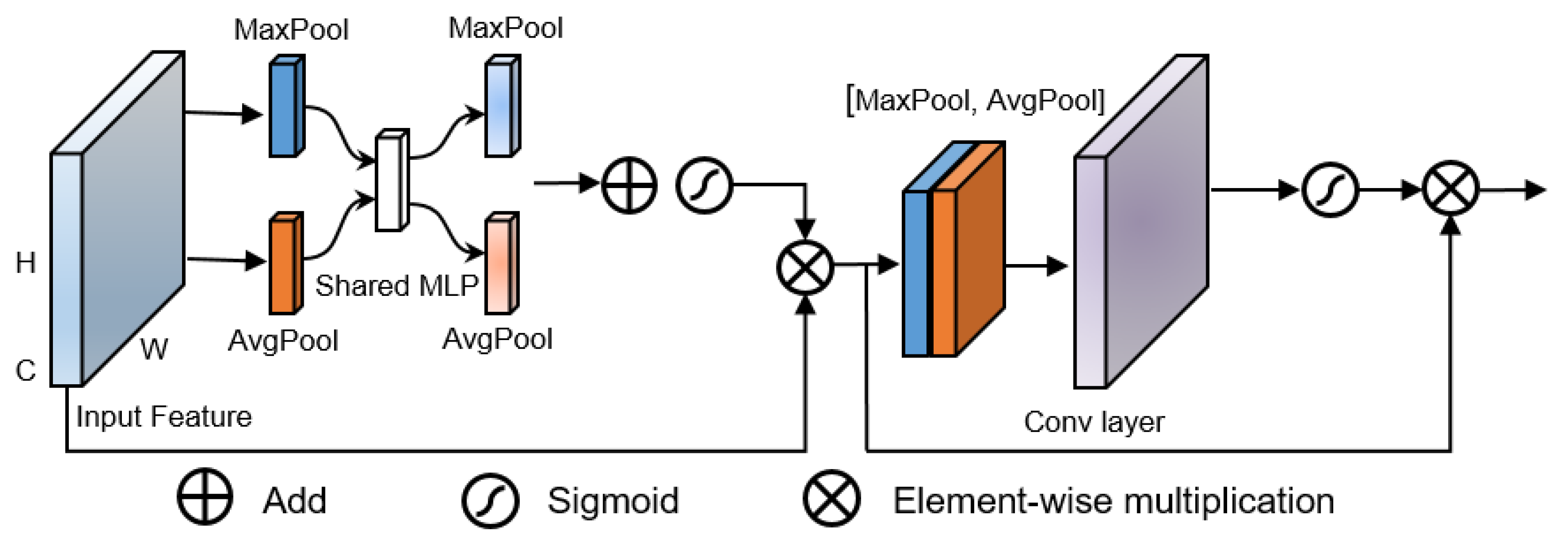

2.2. Convolutional Block Attention Module (CBAM)

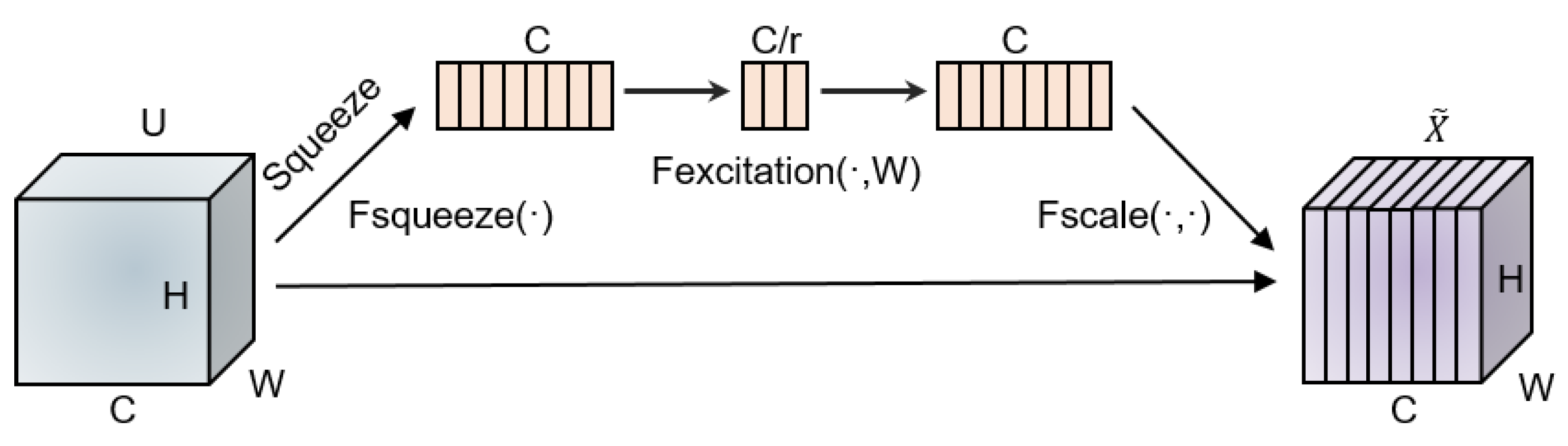
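The body of this section is not reproduced here, so the following is only a minimal pure-Python sketch of CBAM as described by Woo et al. (ECCV 2018). A channel attention map Mc = σ(MLP(AvgPool(F)) + MLP(MaxPool(F))) is applied first; a spatial attention map Ms = σ(f([AvgPool_c(F); MaxPool_c(F)])), computed by a k × k convolution over the channel-wise average and max maps, is applied second. Feature maps are nested lists indexed `[channel][row][col]`. The weights `w1`, `w0`, and `kernel` are hypothetical toy values, not trained MA-SegCloud parameters, and the original CBAM uses k = 7 rather than the k = 3 chosen here for brevity.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v, w1, w0):
    """Shared two-layer MLP: W0 . ReLU(W1 . v); w1 is (C/r) x C, w0 is C x (C/r)."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]
    return [sum(a * b for a, b in zip(row, hidden)) for row in w0]


def channel_attention(feat, w1, w0):
    """Mc = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))): one weight per channel."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    mx = [max(map(max, ch)) for ch in feat]
    a, m = shared_mlp(avg, w1, w0), shared_mlp(mx, w1, w0)
    return [sigmoid(x + y) for x, y in zip(a, m)]


def spatial_attention(feat, kernel):
    """Ms = sigmoid(conv_kxk([AvgPool_c(F); MaxPool_c(F)])), zero padding, no bias.

    kernel has shape [2][k][k]: one k x k filter for the average map, one for the max map.
    """
    c, h, w = len(feat), len(feat[0]), len(feat[0][0])
    avg_map = [[sum(feat[ch][i][j] for ch in range(c)) / c for j in range(w)] for i in range(h)]
    max_map = [[max(feat[ch][i][j] for ch in range(c)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    pad = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            s = 0.0
            for m, pooled in enumerate((avg_map, max_map)):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[m][di][dj] * pooled[y][x]
            row.append(sigmoid(s))
        out.append(row)
    return out


def cbam(feat, w1, w0, kernel):
    """Sequential CBAM: F' = Mc * F (per channel), then F'' = Ms * F' (per pixel)."""
    mc = channel_attention(feat, w1, w0)
    refined = [[[v * mc[ch] for v in row] for row in feat[ch]] for ch in range(len(feat))]
    ms = spatial_attention(refined, kernel)
    return [[[refined[ch][i][j] * ms[i][j] for j in range(len(ms[0]))]
             for i in range(len(ms))] for ch in range(len(refined))]


# Toy input: C = 2 channels of 2 x 2, reduction ratio r = 2, hypothetical weights.
feat = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]]]
w1 = [[0.5, -0.5]]
w0 = [[1.0], [1.0]]
kernel = [[[0.1] * 3 for _ in range(3)] for _ in range(2)]
out = cbam(feat, w1, w0, kernel)  # same [C][H][W] shape as feat
```

Applying channel attention before spatial attention follows the sequential order that Woo et al. report as working best; the sketch does not show where CBAM is inserted in MA-SegCloud.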

2.3. Squeeze-and-Excitation Module (SEM)

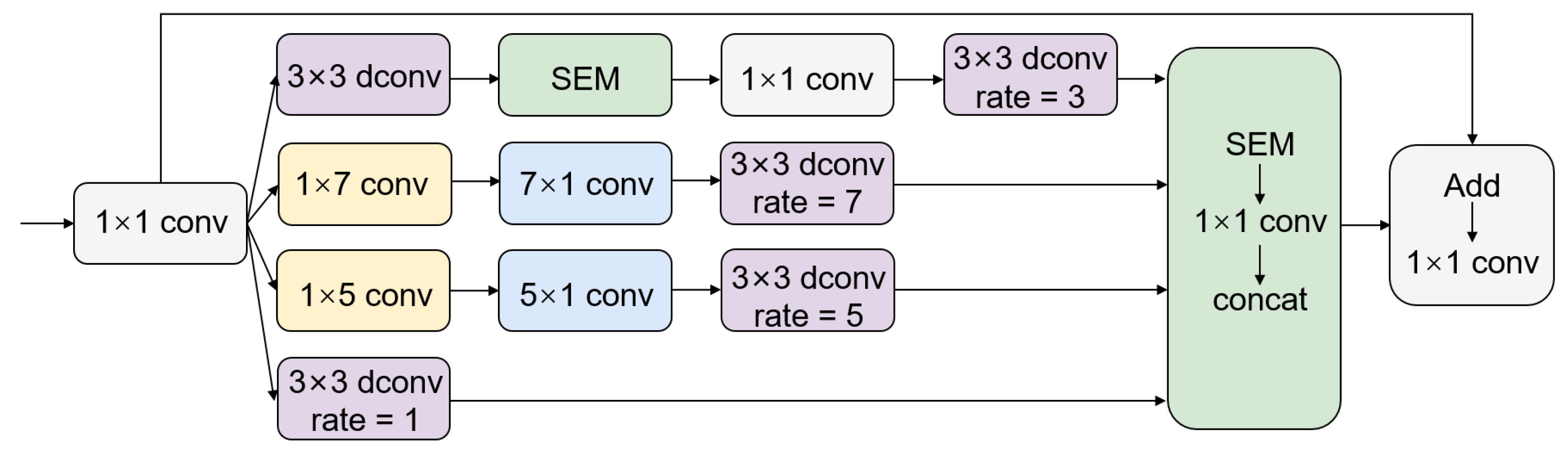
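As a minimal sketch of the squeeze-and-excitation operation of Hu et al. (CVPR 2018): each channel is squeezed to its global average z_c, the excitation s = σ(W2 · ReLU(W1 · z)) yields one weight per channel, and every channel is rescaled by its weight. This is one standard way to adaptively reweight feature channels; the weights `w1` (C/r × C) and `w2` (C × C/r) below are hypothetical, and the exact placement of SEM inside MA-SegCloud is not stated in this text.

```python
import math


def se_block(feat, w1, w2):
    """Squeeze-and-excitation on a [C][H][W] nested-list feature map.

    Returns the rescaled feature map and the per-channel weights s.
    """
    # Squeeze: global average pooling gives one descriptor z_c per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
    # Excitation: bottleneck FC + ReLU, then FC + sigmoid.
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]
    s = [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, hidden)))) for row in w2]
    # Scale: multiply every value of channel c by s_c.
    scaled = [[[v * s[c] for v in row] for row in feat[c]] for c in range(len(feat))]
    return scaled, s


# Usage with C = 2 and reduction ratio r = 2 (hypothetical weights).
feat = [[[2.0, 2.0], [2.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]]]
scaled, s = se_block(feat, w1=[[1.0, 0.0]], w2=[[1.0], [-1.0]])
# s is [sigmoid(2), sigmoid(-2)], roughly [0.881, 0.119]
```

Because the weights come from a sigmoid, each channel is scaled by a factor in (0, 1), so SE can only attenuate channels relative to one another, never amplify them.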

2.4. Multibranch Asymmetric Convolution Module (MACM)
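The body of this section is not reproduced here, so the exact branch layout of the MACM cannot be recovered from this text. The sketch below illustrates only the general asymmetric-convolution idea the module is named after, which is also used in Inception-v3 (Szegedy et al., 2016): a 1 × k convolution followed by a k × 1 convolution covers a k × k window with 2k weights instead of k², and it reproduces a full k × k convolution exactly when that kernel is the outer product of the two 1-D kernels. The `multibranch` function, which sums branches of different sizes to mix small and large receptive fields, uses a hypothetical fusion rule, not the paper's.

```python
def conv2d_same(x, kernel):
    """Zero-padded 'same' 2-D cross-correlation of a single-channel map x (odd kernel sizes)."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0
            for di in range(kh):
                for dj in range(kw):
                    y, xx = i + di - ph, j + dj - pw
                    if 0 <= y < h and 0 <= xx < w:
                        s += kernel[di][dj] * x[y][xx]
            out[i][j] = s
    return out


def asymmetric_branch(x, row_k, col_k):
    """1 x k convolution followed by k x 1: a k x k window with only 2k weights."""
    t = conv2d_same(x, [row_k])                   # 1 x k kernel (horizontal)
    return conv2d_same(t, [[c] for c in col_k])   # k x 1 kernel (vertical)


def outer(col_k, row_k):
    """The equivalent full k x k kernel: K[i][j] = col_k[i] * row_k[j]."""
    return [[c * r for r in row_k] for c in col_k]


def multibranch(x, branches):
    """HYPOTHETICAL fusion: sum the outputs of asymmetric branches of different sizes."""
    outs = [asymmetric_branch(x, row_k, col_k) for row_k, col_k in branches]
    return [[sum(o[i][j] for o in outs) for j in range(len(x[0]))] for i in range(len(x))]


x = [[1, 2, 0, 3, 1], [0, 1, 4, 1, 2], [2, 0, 1, 0, 3], [1, 3, 2, 2, 0]]
row3, col3 = [1, 2, 1], [1, 0, -1]
# The factorized pair matches the full 3 x 3 convolution with the outer-product kernel.
same = asymmetric_branch(x, row3, col3) == conv2d_same(x, outer(col3, row3))
# Combine a 3-wide and a 5-wide branch; the 5-wide branch sees a 5 x 5 neighborhood.
fused = multibranch(x, [(row3, col3), ([1] * 5, [1] * 5)])
```

For k = 5 the factorized branch uses 10 weights instead of 25, which is why asymmetric branches are a cheap way to enlarge the receptive field.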

3. Results

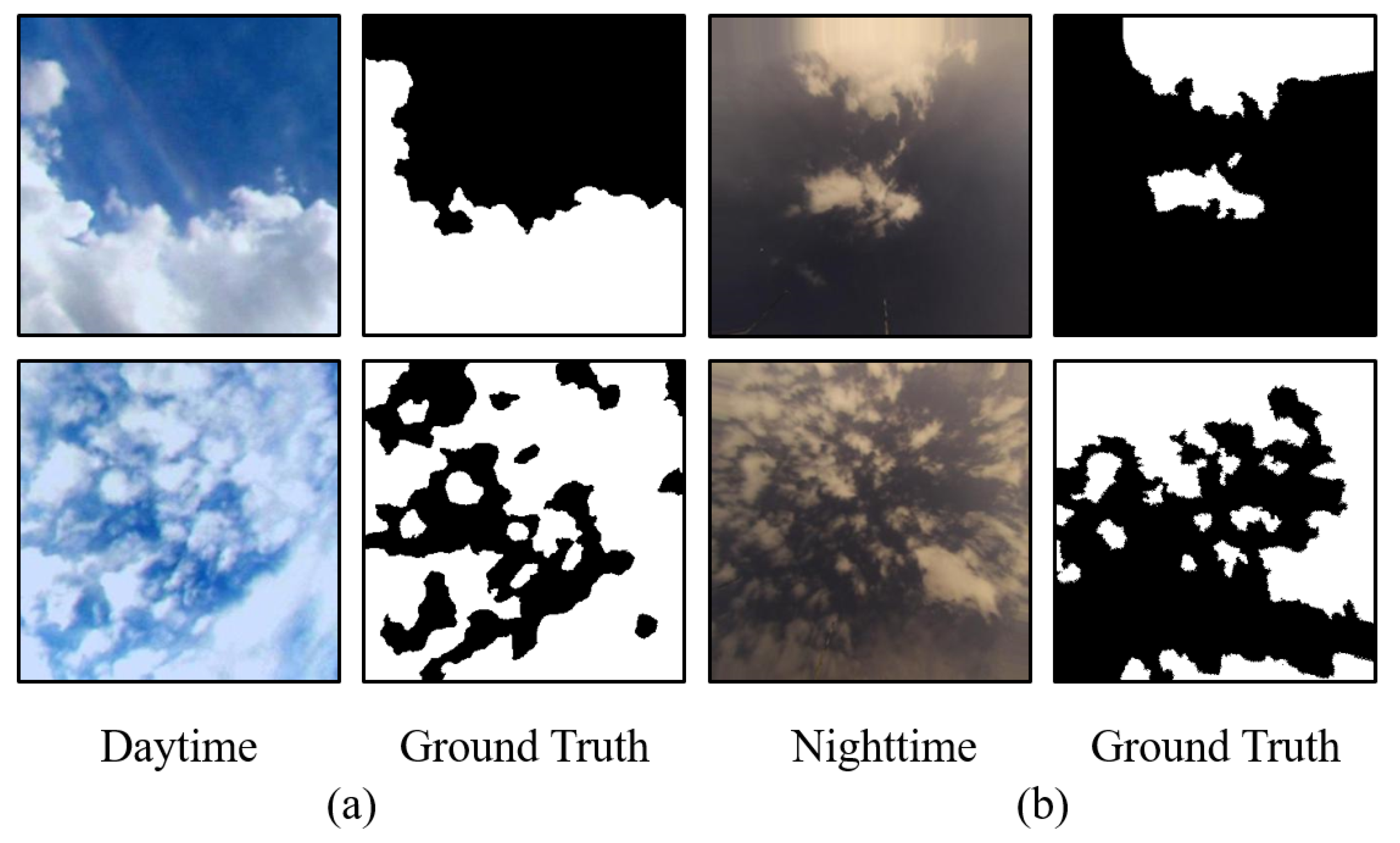

3.1. Dataset

3.2. Implementation

3.3. Evaluation Metrics

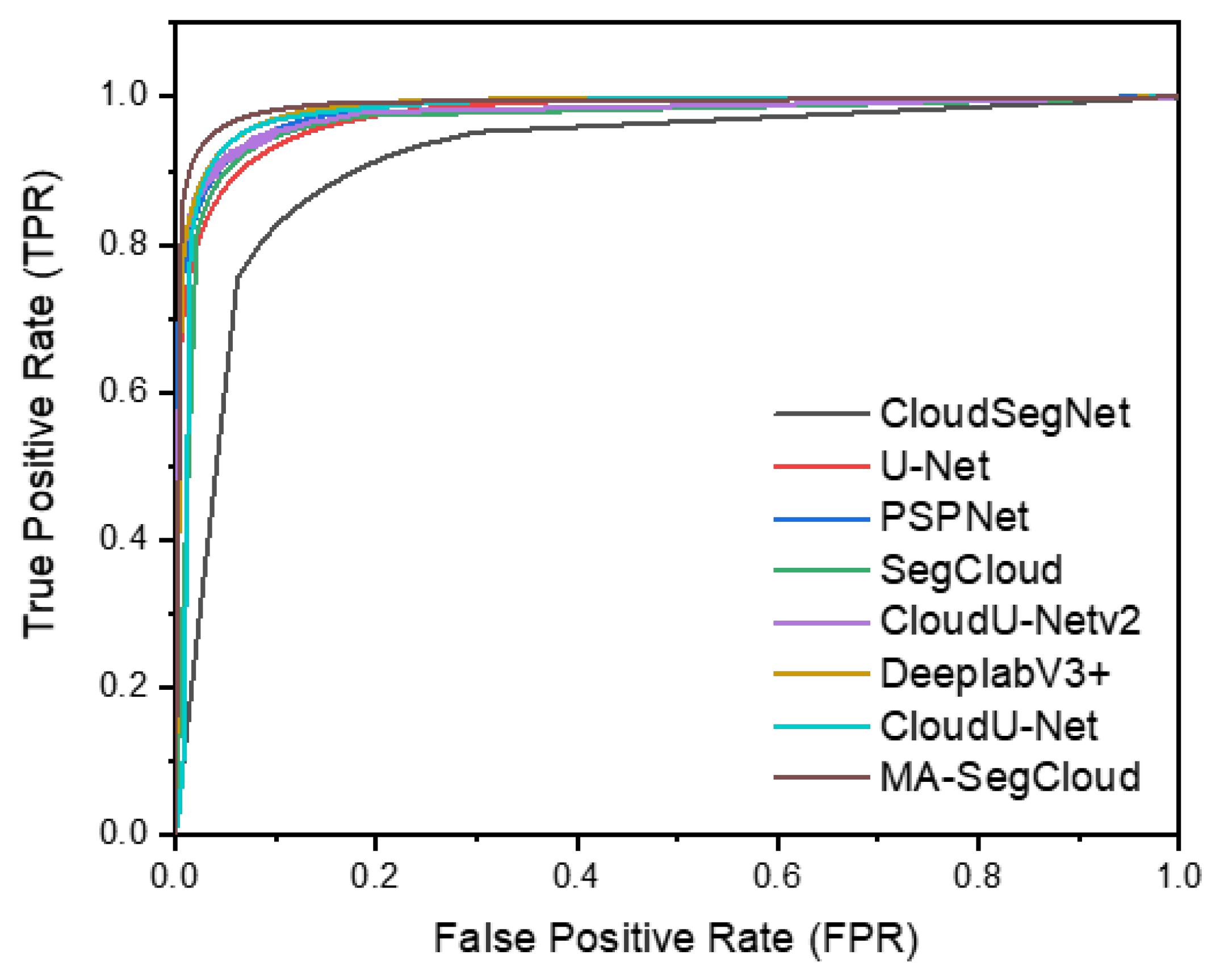

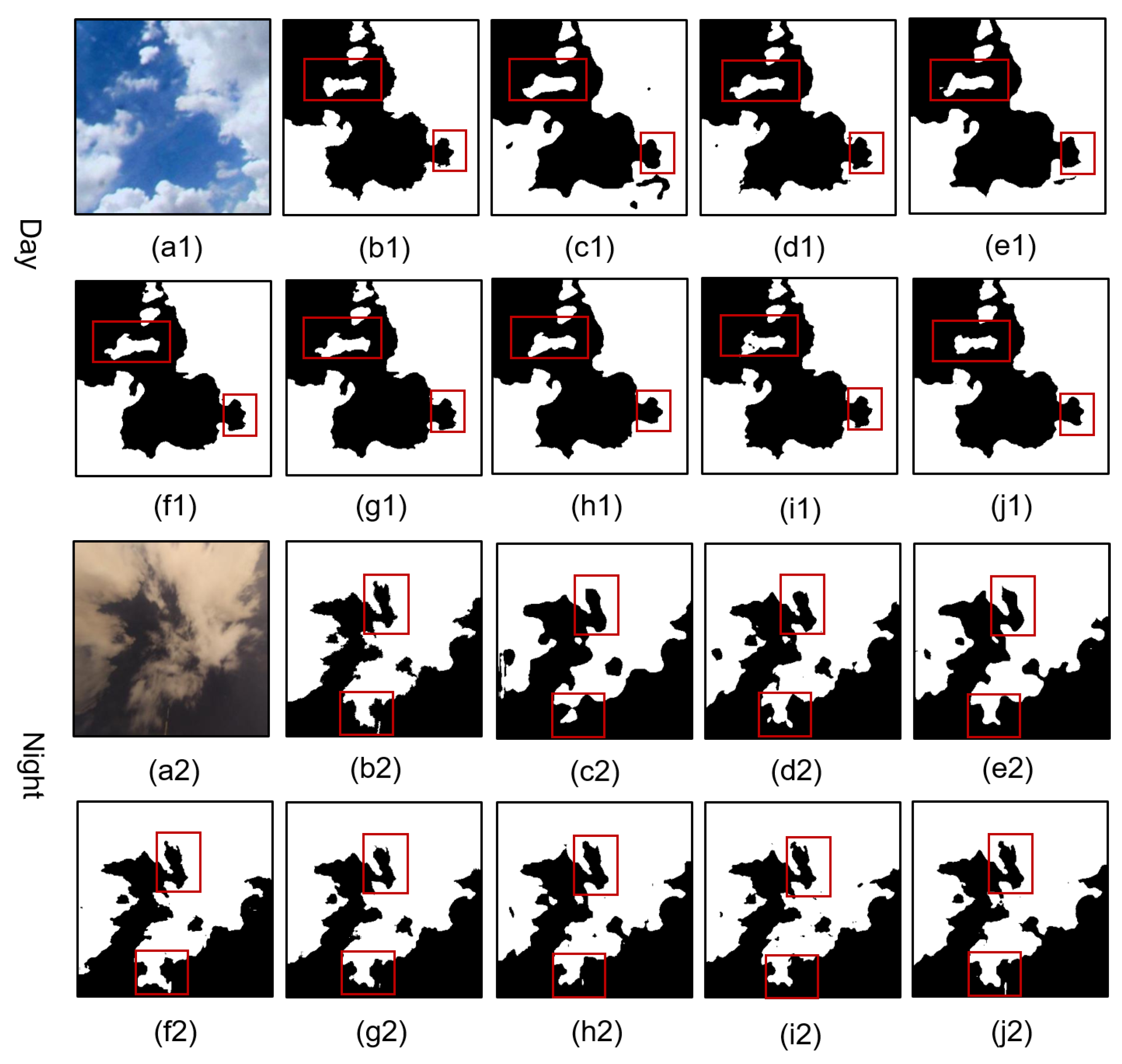

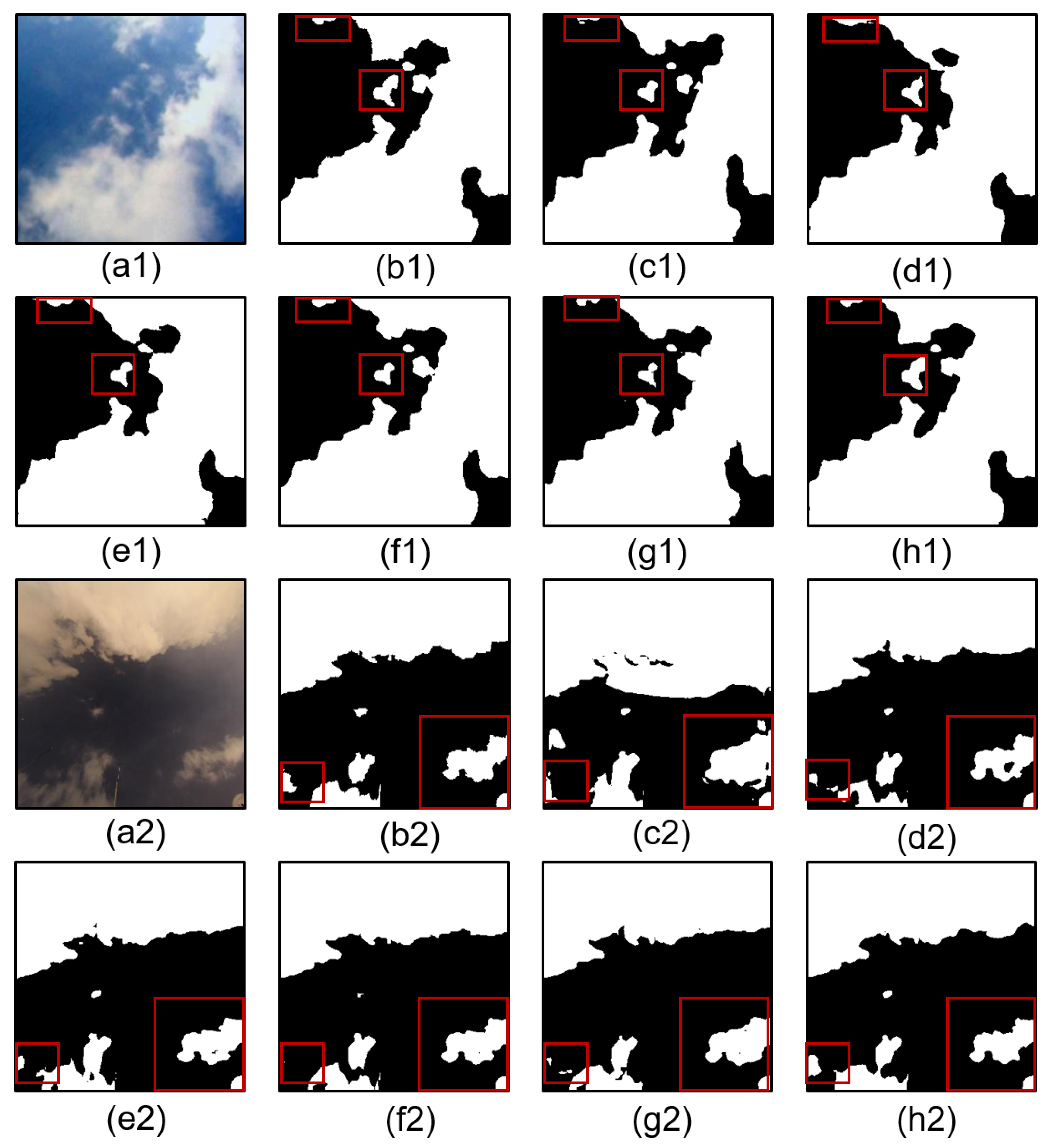
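The metric formulas are not reproduced in this text, so the following sketch assumes the standard binary-segmentation definitions with cloud pixels as the positive class: Accuracy = (TP + TN)/N, Precision = TP/(TP + FP), Recall = TP/(TP + FN), F1 = 2PR/(P + R), Error Rate = (FP + FN)/N = 1 − Accuracy, and MIoU as the mean of the cloud IoU TP/(TP + FP + FN) and the sky IoU TN/(TN + FP + FN). The reported results are consistent with Error Rate = 1 − Accuracy for every method, but the paper's exact MIoU averaging is an assumption here.

```python
def binary_metrics(pred, truth):
    """Pixel-level metrics for binary masks given as flat 0/1 sequences (1 = cloud).

    Standard definitions (assumed); an empty denominator contributes 0.0.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    n = tp + tn + fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou_cloud = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    iou_sky = tn / (tn + fp + fn) if tn + fp + fn else 0.0
    return {
        "accuracy": (tp + tn) / n,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "error_rate": (fp + fn) / n,
        "miou": (iou_cloud + iou_sky) / 2,
    }


m = binary_metrics(pred=[1, 1, 0, 0, 1, 0], truth=[1, 0, 0, 1, 1, 0])
# TP = 2, TN = 2, FP = 1, FN = 1: accuracy 4/6, error rate 2/6, MIoU 0.5
```

As a consistency check, the F1 formula applied to the nighttime MA-SegCloud row (Precision 0.960, Recall 0.970) gives 2 × 0.960 × 0.970 / 1.930 ≈ 0.965, matching the reported F1-Score.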

3.4. Comparison of the Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Batch Size | Learning Rate | Optimizer | Loss Function |
|---|---|---|---|---|
| CloudSegNet | 8 | 1 | Adadelta | binary cross-entropy |
| U-Net | 8 | 0.001 | Adam | binary cross-entropy |
| PSPNet | 8 | 0.001 | Adam | binary cross-entropy |
| SegCloud | 8 | 0.006 | Mini-batch gradient descent | binary cross-entropy |
| CloudU-Netv2 | 8 | 0.001 | RAdam | binary cross-entropy |
| DeepLabV3+ | 8 | 0.001 | Adam | binary cross-entropy |
| CloudU-Net | 8 | 1 | Adadelta | binary cross-entropy |
| MA-SegCloud | 8 | 0.001 | Adam | binary cross-entropy |
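All compared models are trained with binary cross-entropy. For reference, the pixel-averaged form is L = −(1/N) Σ [y log p + (1 − y) log(1 − p)], where y ∈ {0, 1} is the pixel label and p the predicted cloud probability. The sketch below is a plain-Python version of that formula; the clamp value `eps` is an assumption added to avoid log(0), and in practice a framework's built-in loss would be used together with the optimizers listed in the table.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean BCE over pixels; probs are predicted cloud probabilities, labels are 0/1."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # assumed clamp so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# A maximally uncertain prediction (p = 0.5 everywhere) costs ln 2 per pixel.
loss = binary_cross_entropy([0.5, 0.5, 0.5, 0.5], [1, 0, 1, 0])
```

Confident, correct predictions drive the loss toward 0, while confident wrong ones are penalized heavily because log p diverges as p approaches 0.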

| Type | Method | Accuracy | Precision | Recall | F1-Score | Error Rate | MIoU |
|---|---|---|---|---|---|---|---|
| Day | CloudSegNet | 0.893 | 0.888 | 0.909 | 0.898 | 0.107 | 0.806 |
| Day | U-Net | 0.943 | 0.945 | 0.945 | 0.945 | 0.057 | 0.891 |
| Day | PSPNet | 0.945 | 0.953 | 0.942 | 0.948 | 0.055 | 0.896 |
| Day | SegCloud | 0.941 | 0.953 | 0.934 | 0.943 | 0.059 | 0.889 |
| Day | CloudU-Netv2 | 0.940 | 0.967 | 0.917 | 0.941 | 0.060 | 0.887 |
| Day | DeepLabV3+ | 0.953 | 0.962 | 0.948 | 0.955 | 0.047 | 0.911 |
| Day | CloudU-Net | 0.954 | 0.956 | 0.957 | 0.956 | 0.046 | 0.912 |
| Day | MA-SegCloud | 0.969 | 0.971 | 0.970 | 0.970 | 0.031 | 0.940 |
| Night | CloudSegNet | 0.880 | 0.870 | 0.922 | 0.895 | 0.120 | 0.813 |
| Night | U-Net | 0.953 | 0.949 | 0.943 | 0.946 | 0.047 | 0.909 |
| Night | PSPNet | 0.938 | 0.927 | 0.931 | 0.929 | 0.062 | 0.882 |
| Night | SegCloud | 0.955 | 0.936 | 0.960 | 0.948 | 0.045 | 0.912 |
| Night | CloudU-Netv2 | 0.954 | 0.931 | 0.965 | 0.948 | 0.046 | 0.911 |
| Night | DeepLabV3+ | 0.947 | 0.931 | 0.948 | 0.939 | 0.053 | 0.898 |
| Night | CloudU-Net | 0.954 | 0.925 | 0.972 | 0.949 | 0.046 | 0.912 |
| Night | MA-SegCloud | 0.969 | 0.960 | 0.970 | 0.965 | 0.031 | 0.940 |
| Day + Night | CloudSegNet | 0.896 | 0.899 | 0.899 | 0.899 | 0.104 | 0.811 |
| Day + Night | U-Net | 0.944 | 0.945 | 0.945 | 0.945 | 0.056 | 0.893 |
| Day + Night | PSPNet | 0.945 | 0.951 | 0.941 | 0.946 | 0.055 | 0.895 |
| Day + Night | SegCloud | 0.942 | 0.952 | 0.936 | 0.944 | 0.058 | 0.891 |
| Day + Night | CloudU-Netv2 | 0.941 | 0.964 | 0.920 | 0.941 | 0.059 | 0.889 |
| Day + Night | DeepLabV3+ | 0.953 | 0.960 | 0.948 | 0.954 | 0.047 | 0.910 |
| Day + Night | CloudU-Net | 0.954 | 0.954 | 0.958 | 0.956 | 0.046 | 0.913 |
| Day + Night | MA-SegCloud | 0.969 | 0.970 | 0.970 | 0.970 | 0.031 | 0.940 |

| Method | Accuracy | Precision | Recall | F1-Score | Error Rate | MIoU |
|---|---|---|---|---|---|---|
| without MACM and CBAM | 0.942 | 0.941 | 0.947 | 0.944 | 0.058 | 0.890 |
| without MACM | 0.949 | 0.941 | 0.961 | 0.951 | 0.051 | 0.902 |
| without SEM | 0.956 | 0.957 | 0.957 | 0.957 | 0.044 | 0.915 |
| without CBAM | 0.953 | 0.958 | 0.951 | 0.955 | 0.047 | 0.911 |
| without SEM and CBAM | 0.948 | 0.950 | 0.949 | 0.950 | 0.052 | 0.901 |
| MA-SegCloud | 0.969 | 0.970 | 0.970 | 0.970 | 0.031 | 0.940 |

| Method | Parameters (MB) | FLOPs (G) | Training Time (h) | Testing Time (s) |
|---|---|---|---|---|
| CloudSegNet | 0.02 | 0.2 | 0.78 | 34 |
| U-Net | 95.0 | 175.9 | 1.07 | 47 |
| PSPNet | 26.4 | 10.0 | 2.65 | 53 |
| SegCloud | 74.8 | 158.9 | 7.2 | 75 |
| CloudU-Netv2 | 55.5 | 58.8 | 9.75 | 89 |
| DeepLabV3+ | 10.5 | 4.31 | 2.2 | 53 |
| CloudU-Net | 135.4 | 139.6 | 6.1 | 82 |
| MA-SegCloud | 55.8 | 47.5 | 6.9 | 68 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Wei, W.; Qiu, B.; Luo, A.; Zhang, M.; Li, X. A Novel Ground-Based Cloud Image Segmentation Method Based on a Multibranch Asymmetric Convolution Module and Attention Mechanism. Remote Sens. 2022, 14, 3970. https://doi.org/10.3390/rs14163970
