Crop Classification Based on GDSSM-CNN Using Multi-Temporal RADARSAT-2 SAR with Limited Labeled Data

Abstract

1. Introduction

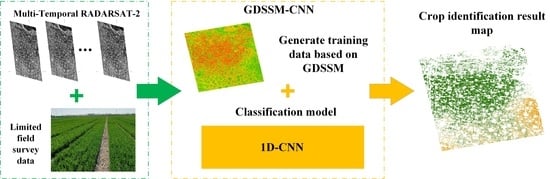

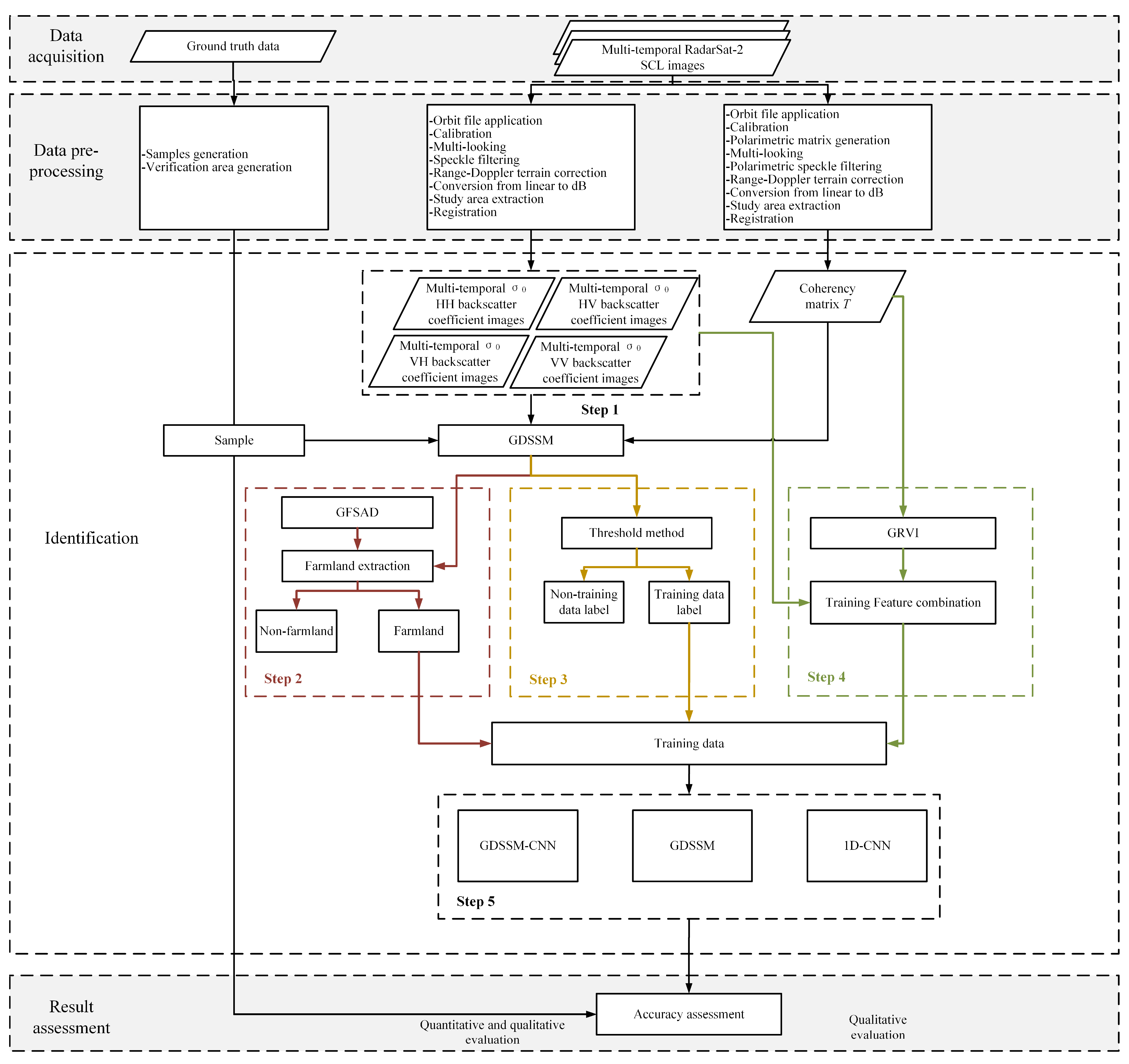

- For multi-temporal, fully polarimetric SAR images, a crop classification method combining the geodesic distance spectral similarity measure with a one-dimensional convolutional neural network (GDSSM-CNN) is proposed. The method retains high classification accuracy even when labeled samples are limited.

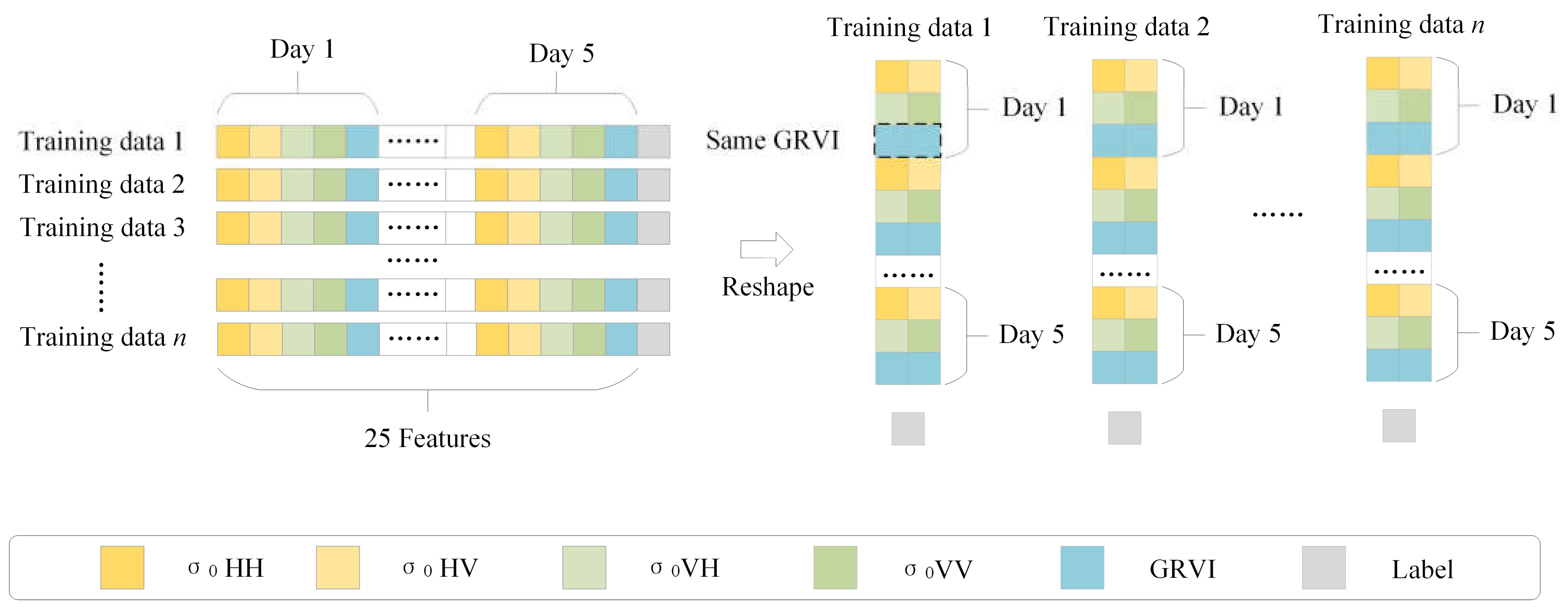

- The backscatter coefficient and the generalized volume scattering model-based radar vegetation index (GRVI) are extracted from the multi-temporal, fully polarimetric images and combined.

- For multi-temporal, fully polarimetric SAR images, a farmland region extraction method combining GFSAD data and GDSSM is proposed. This method separates farmland from non-farmland areas in fine detail.
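
To make the similarity idea behind the contributions above more concrete, the following is a minimal sketch of a geodesic distance between two real matrices, in the form commonly used in the PolSAR literature for Kennaugh matrices. It illustrates only the geodesic-distance ingredient and is not the full GDSSM defined in this paper. The function names `geodesic_distance` and `similarity`, and the choice of `1 - distance` as the similarity, are assumptions made for illustration.

```python
import numpy as np


def geodesic_distance(k1: np.ndarray, k2: np.ndarray) -> float:
    """Angle-based distance between two real matrices.

    Returns (2 / pi) * arccos of the normalized trace inner product.
    The value lies in [0, 1] whenever the inner product is non-negative.
    """
    inner = np.trace(k1.T @ k2)
    norm = np.sqrt(np.trace(k1.T @ k1) * np.trace(k2.T @ k2))
    # Clip to guard against round-off pushing the ratio outside [-1, 1].
    cos_angle = np.clip(inner / norm, -1.0, 1.0)
    return float((2.0 / np.pi) * np.arccos(cos_angle))


def similarity(k1: np.ndarray, k2: np.ndarray) -> float:
    """Hypothetical similarity score: one minus the geodesic distance."""
    return 1.0 - geodesic_distance(k1, k2)


# Illustrative 4 x 4 matrices (made-up values, not taken from the data).
reference = np.diag([1.0, 0.5, 0.25, 0.25])
observed = np.diag([1.0, 0.4, 0.30, 0.30])
print(similarity(reference, observed))
```

Because the inner product is normalized, the score is unchanged when either matrix is multiplied by a positive constant, so it compares the shape of the scattering response rather than its total power.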

2. Materials and Methods

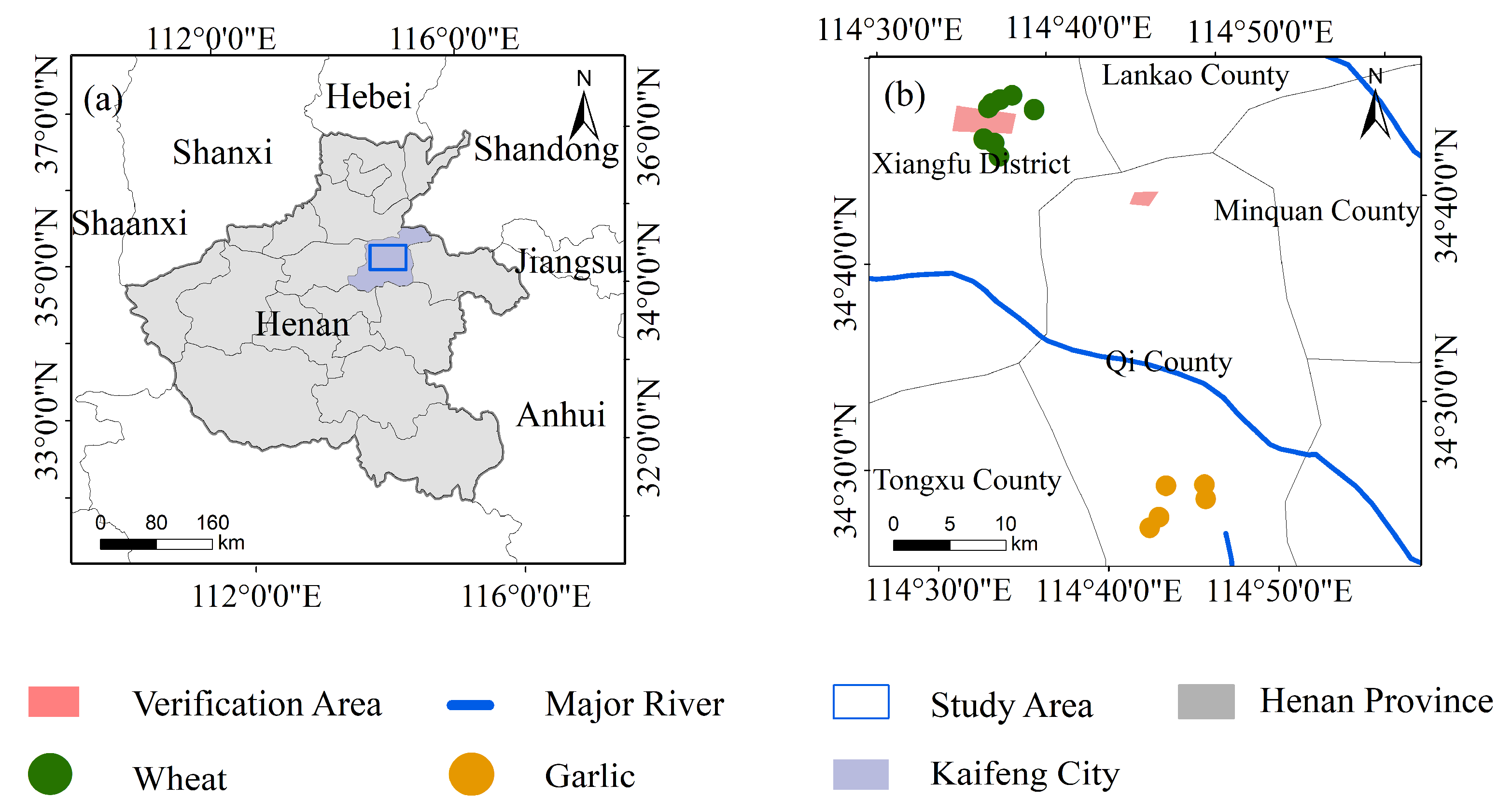

2.1. Study Area

2.2. Datasets and Preprocessing

2.3. Methodology

2.3.1. Geodesic Distance Spectral Similarity Measure

2.3.2. Generalized Volume Scattering Model-Based Radar Vegetation Index

2.3.3. Farmland Extraction

2.3.4. Training Data Extraction

2.3.5. One-Dimensional Convolutional Neural Network

3. Results

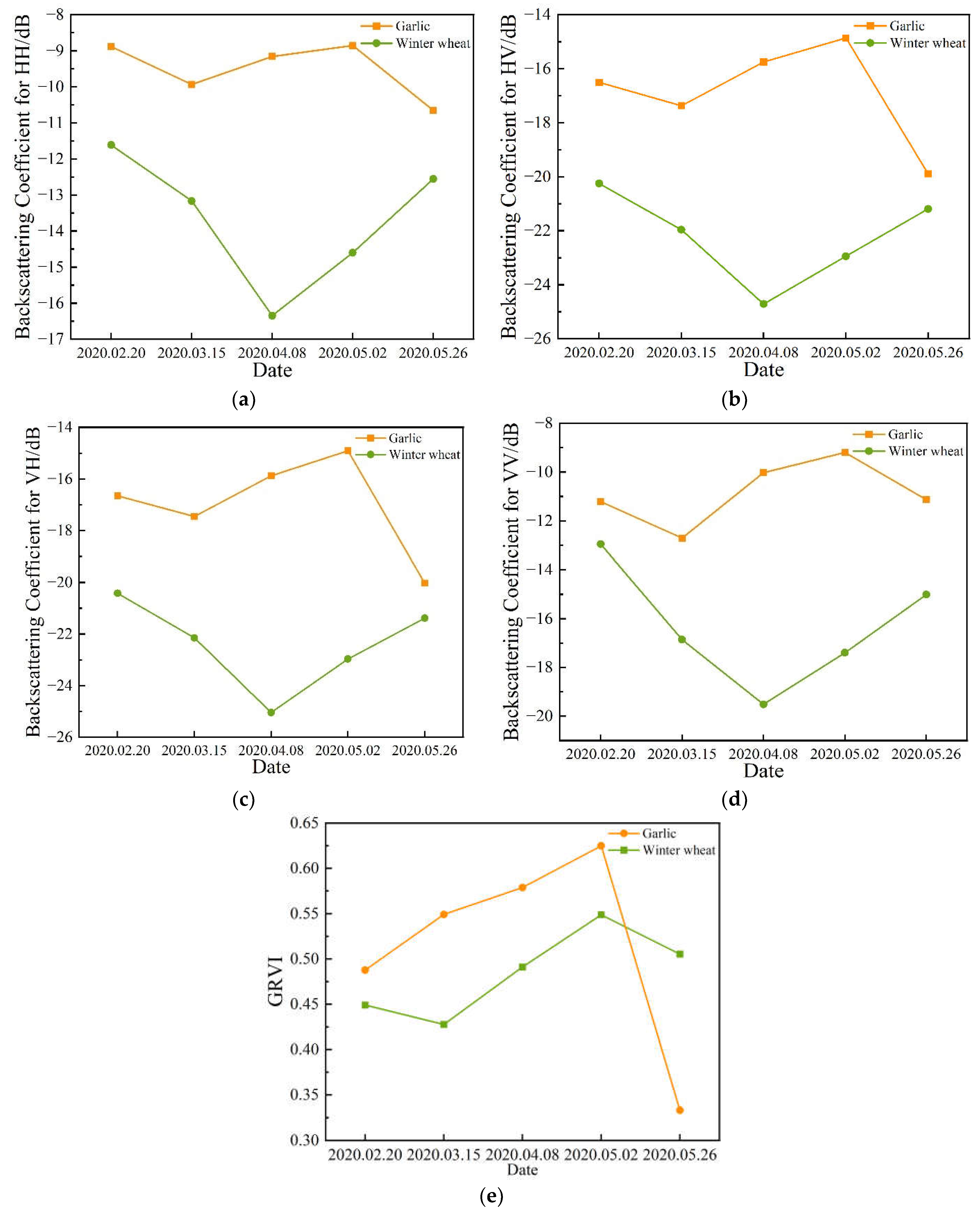

3.1. Temporal Profiles of the Backscatter Coefficient and GRVI

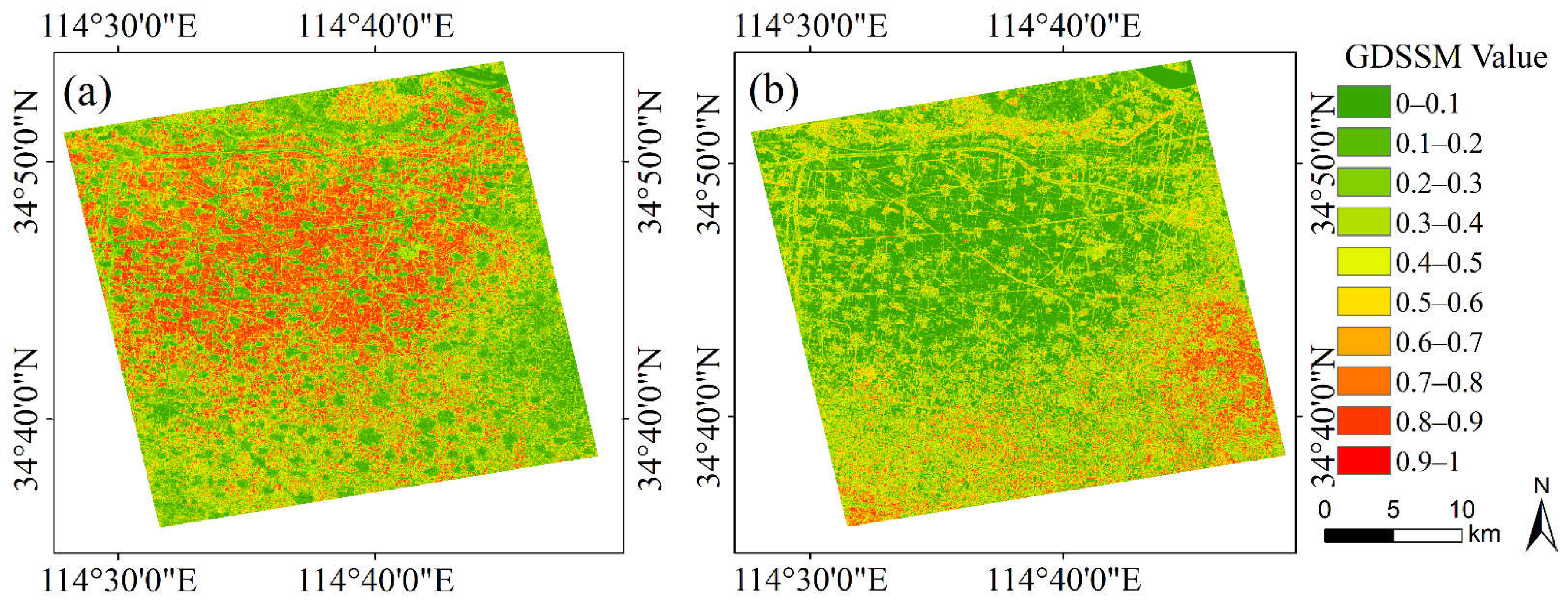

3.2. GDSSM for Different Crops

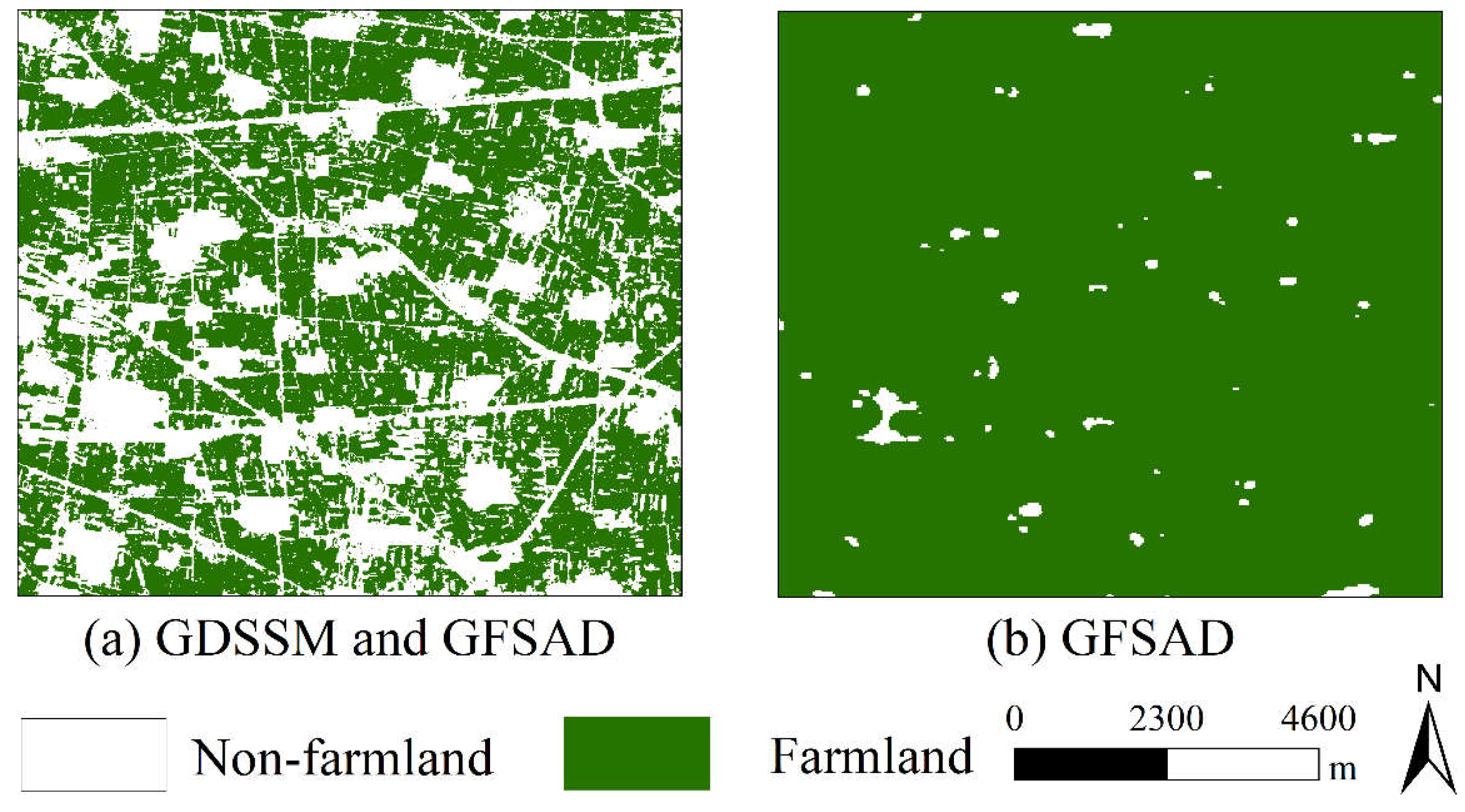

3.3. Farmland Mask

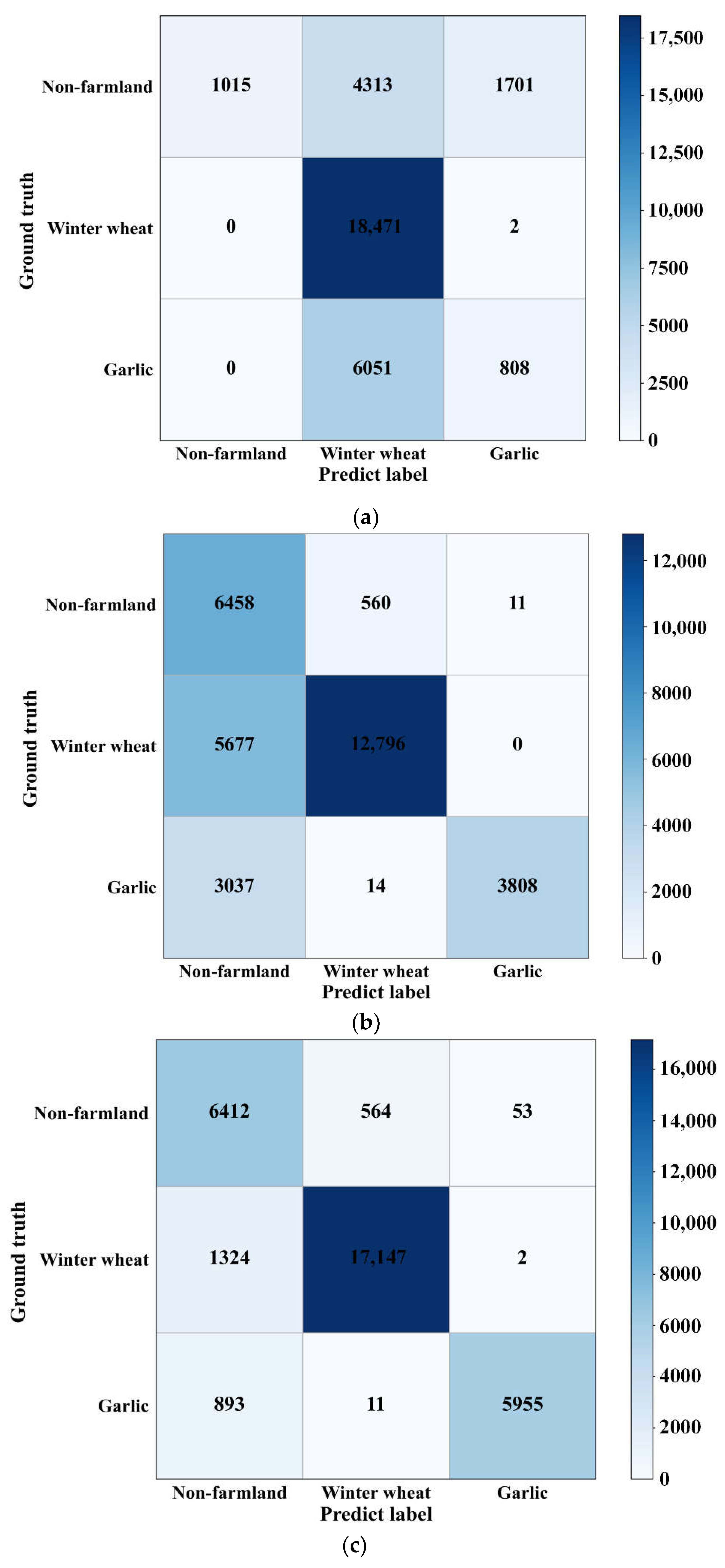

3.4. Crop Classification

4. Discussion

4.1. Combination of Backscatter Coefficient and GRVI

4.2. Extraction of Training Data

- The number of training data increases rapidly as the threshold decreases, and the model training time increases rapidly with the amount of training data (from 14 s at a threshold of 0.95 to 38 min 11 s at 0.80).

- The accuracy of the classifier increases with the amount of training data. Once the threshold reaches 0.9, further increases in training data have little impact on accuracy (91.18% at 0.90 versus 91.20% at 0.80), which suggests that the 1D-CNN model is already fully trained at that point.

- As the number of training data increases, the number of noisy data (training data with wrong labels) also increases, yet the accuracy of the classifier continues to rise. This suggests that when the threshold is greater than or equal to 0.8, noisy data are far fewer than correctly labeled training data, and the added low-noise data do not degrade the performance of the classifier. When the threshold is below 0.8, an unknown pixel no longer has a high similarity with the reference target. Therefore, with a threshold in the range of 0.8–0.9, the classifier can achieve very high accuracy.
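
The threshold behavior discussed above can be sketched as a simple pseudo-labeling step. This is a minimal illustration under stated assumptions, not the implementation used in this study: each pixel is assumed to have one similarity score per crop class, and it is kept as training data, labeled with its most similar class, only when that score reaches the threshold. The function name `extract_training_data` and the example scores are hypothetical.

```python
import numpy as np


def extract_training_data(similarity: np.ndarray, threshold: float):
    """Select pixels whose best class similarity reaches the threshold.

    similarity: array of shape (n_pixels, n_classes), one score per class.
    Returns (indices of selected pixels, pseudo-label of each selected pixel).
    """
    best_class = similarity.argmax(axis=1)
    best_score = similarity.max(axis=1)
    keep = best_score >= threshold
    return np.flatnonzero(keep), best_class[keep]


# Made-up scores for four pixels and two classes (0 and 1).
scores = np.array([
    [0.97, 0.40],
    [0.85, 0.60],
    [0.30, 0.92],
    [0.50, 0.55],
])

for threshold in (0.95, 0.90, 0.80):
    indices, labels = extract_training_data(scores, threshold)
    print(threshold, indices.tolist(), labels.tolist())
```

Lowering the threshold can only add pixels to the selection, which matches the growth in training data reported as the threshold decreases. It also admits pixels that resemble the reference less closely, which is where wrongly labeled samples are expected to enter.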

4.3. Comparison of Three Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | RADARSAT-2 | Parameter | RADARSAT-2 |
|---|---|---|---|
| Product type | SLC | Pass direction | Ascending |
| Imaging mode | Fine quad polarization | Center frequency | 5.4 GHz |
| Polarization | HH, HV, VH, VV | Look direction | Right |
| Resolution | 8 m × 8 m | Incidence angle | 29°–31° |
| Band | C | Dates | 20 February 2020, 15 March 2020, 8 April 2020, 2 May 2020, 26 May 2020 |

| Class | 1D-CNN Producer’s Accuracy (%) | 1D-CNN User’s Accuracy (%) | GDSSM Producer’s Accuracy (%) | GDSSM User’s Accuracy (%) | GDSSM-CNN (Threshold of 0.8) Producer’s Accuracy (%) | GDSSM-CNN (Threshold of 0.8) User’s Accuracy (%) |
|---|---|---|---|---|---|---|
| Non-farmland | 14.44 | 1 | 91.88 | 42.57 | 91.22 | 74.31 |
| Winter wheat | 99.99 | 64.06 | 69.27 | 96.36 | 92.82 | 96.76 |
| Garlic | 11.78 | 32.18 | 55.52 | 99.70 | 86.82 | 99.08 |
| Accuracy (%) | 67.29 | | 71.26 | | 91.20 | |
| Kappa | 0.3012 | | 0.5491 | | 0.8509 | |
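
For readers less familiar with the metrics reported above, the following minimal sketch shows how producer's accuracy, user's accuracy, overall accuracy, and the kappa coefficient are conventionally derived from a confusion matrix. The function name `accuracy_metrics` and the example matrix are illustrative assumptions, and the values are not taken from this study. Rows are assumed to hold reference classes and columns predicted classes.

```python
import numpy as np


def accuracy_metrics(cm):
    """Compute standard accuracy metrics from a confusion matrix.

    cm[i, j] = number of samples of reference class i predicted as class j.
    Returns (producer's accuracy per class, user's accuracy per class,
    overall accuracy, kappa coefficient).
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    correct = np.diag(cm)
    row_sums = cm.sum(axis=1)  # samples per reference class
    col_sums = cm.sum(axis=0)  # samples per predicted class
    producers = correct / row_sums
    users = correct / col_sums
    overall = correct.sum() / total
    # Agreement expected by chance, from the row and column marginals.
    expected = (row_sums * col_sums).sum() / total**2
    kappa = (overall - expected) / (1.0 - expected)
    return producers, users, overall, kappa


# Made-up two-class example.
producers, users, overall, kappa = accuracy_metrics([[40, 10], [5, 45]])
print(producers, users, overall, kappa)
```

Kappa discounts the agreement expected by chance, which is why a classifier that assigns most pixels to one dominant class can show a moderate overall accuracy but a low kappa. The sketch assumes every class appears at least once in both the reference and the prediction; otherwise the per-class divisions are undefined.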

| Threshold | Winter Wheat Training Data | Garlic Training Data | Accuracy (%) | Kappa | Training Time |
|---|---|---|---|---|---|
| Use samples only | 10 | 5 | 67.29 | 0.3012 | 10 s |
| 0.95 | 994 | 259 | 89.13 | 0.8136 | 14 s |
| 0.90 | 51,179 | 8,183 | 91.18 | 0.8506 | 2 min 17 s |
| 0.85 | 381,002 | 52,001 | 91.19 | 0.8507 | 14 min 1 s |
| 0.80 | 1,040,634 | 149,326 | 91.20 | 0.8509 | 38 min 11 s |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, H.; Lu, J.; Tian, G.; Yang, H.; Zhao, J.; Li, N. Crop Classification Based on GDSSM-CNN Using Multi-Temporal RADARSAT-2 SAR with Limited Labeled Data. Remote Sens. 2022, 14, 3889. https://doi.org/10.3390/rs14163889
