1. Introduction

In recent years, global climate change has continued to intensify and natural disasters have occurred frequently, severely affecting human society. Buildings are the main places where people live and work, and they concentrate both population and property. Rapidly and effectively extracting damage information for affected buildings is therefore important for postdisaster humanitarian assistance and for preventing secondary disasters [1,2,3]. High-resolution (HR) remote sensing images are now widely used in remote sensing, and many tasks in the field are almost always performed on HR images [3,4]. However, HR images also challenge the related algorithms: they exhibit significant intraclass differences, and interference from illumination and atmospheric conditions cannot be ignored [3]. In HR remote sensing images, damaged buildings usually sit against complex backgrounds, and accurately extracting them from such backgrounds is an important and challenging task [2,5].

Building damage discovery (BDD) falls under the category of change detection [6,7,8]. Change detection uses multitemporal remote sensing images or other remote sensing data to detect the extent and class of changes to objects in the same geographical area [9]. When traditional methods such as the spectral feature threshold difference method [10], the ratio method [11], change vector analysis [12], and regression analysis [13] are applied to HR remote sensing images, pseudochange areas and “salt and pepper” noise frequently appear. This is unacceptable for BDD, which requires results with clear edges and complete patches.
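To make the pixel-wise nature of these classical detectors concrete, the NumPy sketch below computes three change maps (image differencing, log-ratio, and change vector analysis magnitude) from a pair of co-registered multiband arrays and thresholds each one. It is a minimal illustration, not the implementations of [10,11,12]; the function names and the mean-plus-k-standard-deviations threshold are our assumptions. Because each pixel is decided independently, with no spatial context, noise in either image can flip isolated pixels, which is the source of the “salt and pepper” effect noted above.

```python
import numpy as np

def threshold_mean_std(score, k=2.0):
    """Flag pixels whose change score exceeds mean + k * std (assumed rule)."""
    return score > score.mean() + k * score.std()

def difference_map(pre, post):
    """Per-pixel absolute band difference, averaged over bands."""
    return np.abs(post - pre).mean(axis=0)

def ratio_map(pre, post, eps=1e-6):
    """Per-pixel log-ratio magnitude, averaged over bands (eps avoids division by zero)."""
    return np.abs(np.log((post + eps) / (pre + eps))).mean(axis=0)

def cva_magnitude(pre, post):
    """Change vector analysis: Euclidean length of the per-pixel spectral change vector."""
    return np.sqrt(((post - pre) ** 2).sum(axis=0))

# Toy (bands, H, W) images: identical except for one changed 2 x 2 block.
rng = np.random.default_rng(0)
pre = rng.uniform(0.2, 0.4, size=(3, 8, 8))
post = pre.copy()
post[:, 2:4, 2:4] += 0.5

for name, fn in [("difference", difference_map), ("ratio", ratio_map), ("cva", cva_magnitude)]:
    mask = threshold_mean_std(fn(pre, post))
    print(name, int(mask.sum()), "changed pixels")
```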

Deep learning techniques [14,15] have been applied successfully across remote sensing, including image classification, semantic segmentation, object detection, and change detection [16], owing to their strengths in feature extraction and information modeling [2,17,18]. In BDD, various deep neural network models have performed well [19,20,21] because they can extract semantically rich high-level features from images and synthesize the feature information [3,16]. However, almost all deep learning-based BDD methods rely on a large number of training samples and on deeper, more complex network models. Time-consuming model training is also unavoidable, and tuning the network's hyperparameters is tedious. Time cost must therefore be considered when applying deep learning in a new domain. For a time-sensitive problem such as BDD, a large time investment can rule out some highly accurate network models [2,22,23].

According to our survey, convolutional neural network-based BDD approaches are the most common. For example, Vetrivel et al. [24] combined the AlexNet model with point cloud data through multiple-kernel learning to detect and evaluate damaged buildings. Zhou et al. [25] used a DCNN to extract features and a support vector machine as the classifier to detect building damage in an earthquake area. Hezaveh et al. [26] used four strategies based on the UNet network to alleviate the imbalance of training samples and detected damage on building roofs. Ge et al. [27] used an RS-GAN model to extract more accurate building outlines. Other studies have addressed both building localization and damage classification. Some used UNet for pixel-level localization of damaged buildings and ResNet-50 [28] as a classifier of damage levels. Some designed a network called Siamese-UNet [29] to perform damage localization and classification in an integrated manner. Others designed the ChangeOS [1] framework, which performs building damage assessment in an integrated way to overcome the semantic gap problem. Note, however, that all of the above studies depend on effectively trained models: a large number of training samples and long training times are necessary for the proposed methods to work.

In deep learning, it is common to use pretrained models as the starting point for new models in computer vision and natural language processing, where developing neural networks from scratch usually consumes enormous time and computational resources. Transfer learning [30,31,32] allows powerful skills acquired on one problem to be transferred to related problems. This certainly applies to discovering damaged buildings in disaster-stricken areas, because timely assessment after a disaster can effectively guide postdisaster relief and humanitarian assistance [1]. In some cases, timeliness even takes precedence over accuracy [33,34,35]. Disasters are also low-probability events: although many types of disaster occur around the world, any given type occurs only occasionally. Very little disaster imagery has been recorded and made available, and even fewer samples are suitable for training models. Training a model on a small number of samples is therefore valuable if good results can be achieved [36]. Consequently, a transfer learning network model that extracts useful feature information from a few training samples has high research value.

Embedding modules, represented by attention mechanisms, are continually being proposed to obtain better gains in different tasks. Networks with various structures and functions have been designed and applied across remote sensing image processing, dominated by end-to-end semantic segmentation networks such as FCN [37], UNet [38], SegNet [39], FC-EF [40], DeepLabv3+ [41], ChangeNet [42], SCDNET [7], DSA-Net [43], and ADA-Net [6], which perform well in full-element change detection or building change detection. Attention modules and their variants undoubtedly improve the feature extraction capability, robustness, or detection accuracy of these networks. However, attention modules that effectively extract image features for BDD still need in-depth study. Feature extraction from small sample sets, reuse of low-level features, and effective fusion of low-level and high-level features remain valuable research directions for BDD.

To improve the efficiency of building damage discovery and obtain an accurate damage coverage map from a small number of samples, this paper proposes a new transfer-learning deep attention network (TDA-Net). TDA-Net uses a pretrained residual network as its backbone, which lightens the model training burden, improves the efficiency of model use, and enables rapid disaster response. To accurately extract damage features from HR remote sensing images, precisely locate damaged areas, and guide the model to focus on learning damage information, we introduce a deep attention module designed for modeling data when few training samples are available. The module fully learns the key features implied in a small number of samples and mines deep representations of building damage areas in the image, capturing effective information for fast model fitting. Experiments on a global building disaster dataset and three disaster-area datasets verify the effectiveness of the proposed method.

2. Materials and Methods

2.1. Dataset Description

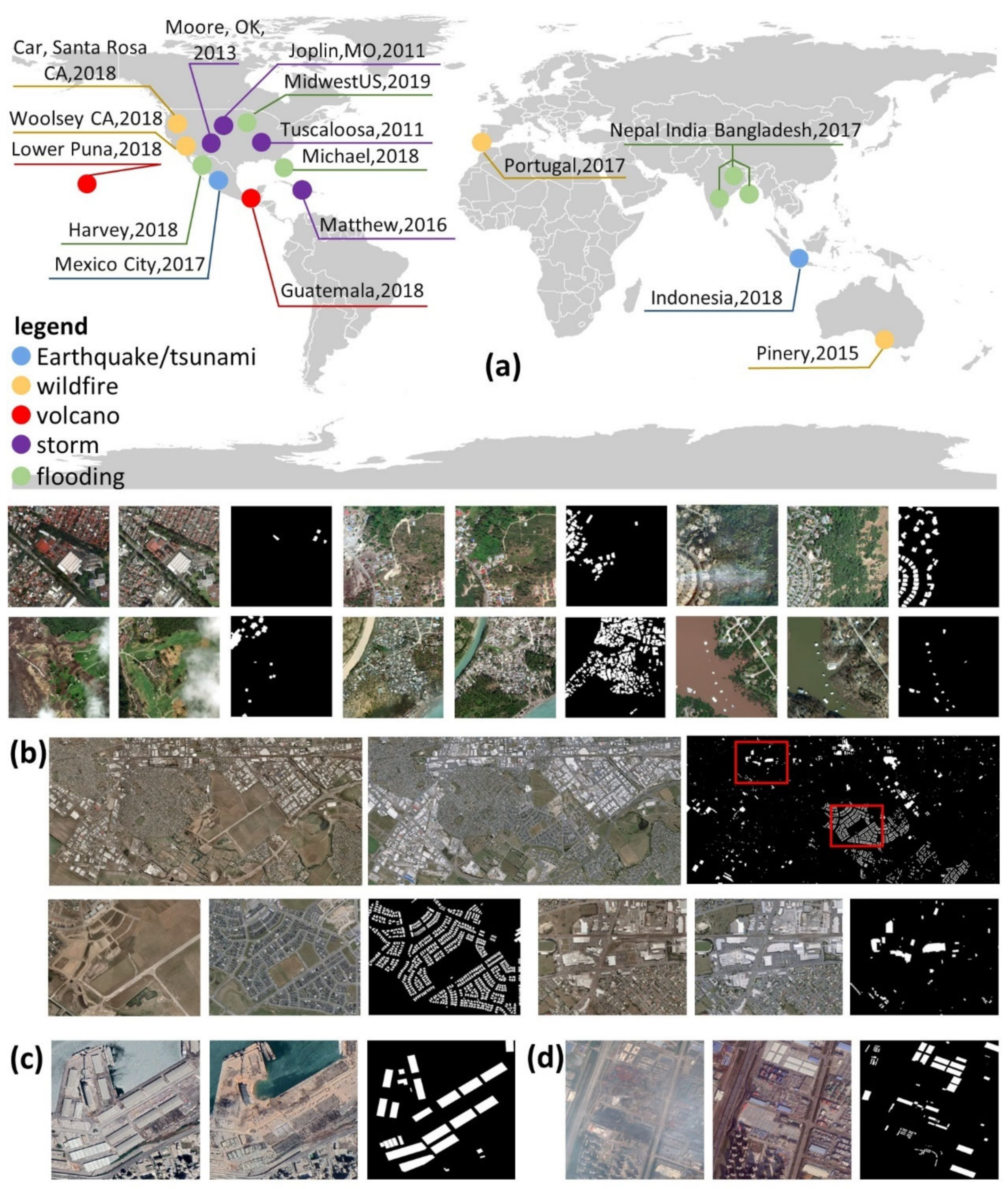

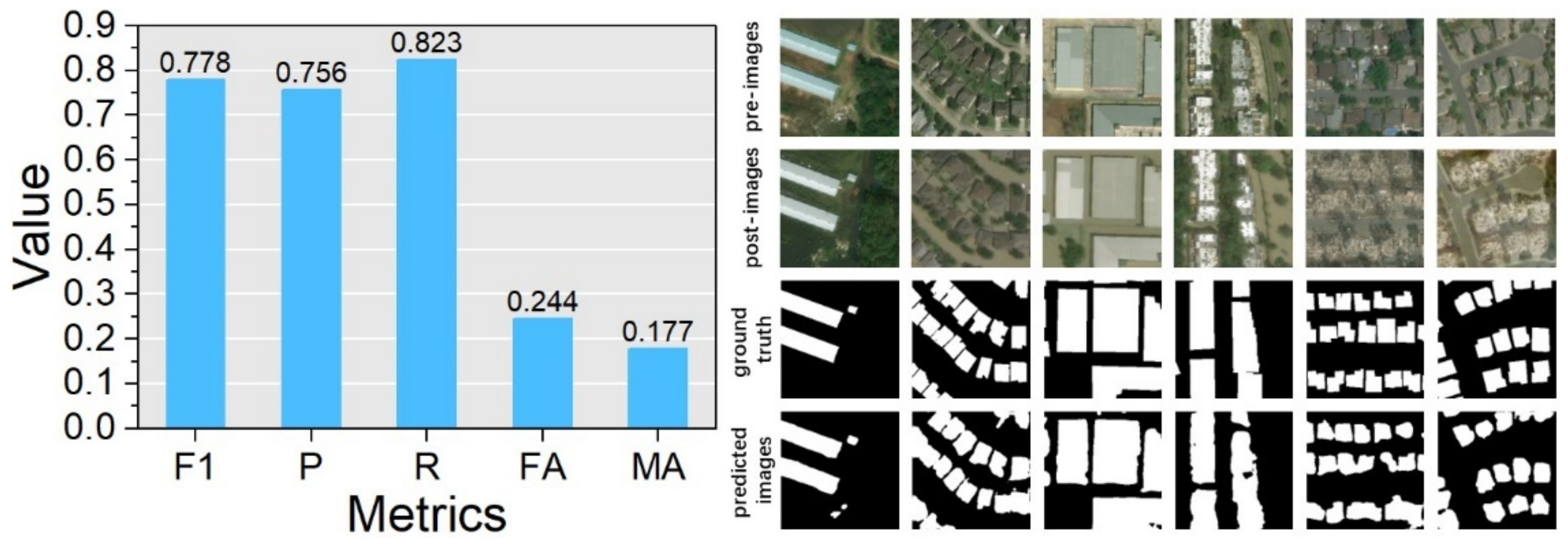

Four datasets were used in this paper, as shown in Figure 1. The first is the global xView2 building damage assessment dataset (xBD) [23], which covers multiple types of disasters that occurred in North America, Asia, and Australia between 2011 and 2019. The disaster locations are shown in Figure 1a. The disaster types include earthquakes, volcanic eruptions, storms, tsunamis, wildfires, and floods. The dataset consists of 22,068 HR remote sensing images from the WorldView-2 and WorldView-3 satellite platforms, containing 850,736 building instances. With its rich disaster types and real scenarios spanning large spatial and temporal ranges, xBD is a high-quality dataset for pretraining models.

The second dataset is the WHU Building Dataset, which consists of aerial images with a spatial resolution of 0.075 m, as shown in Figure 1b. The imagery covers Christchurch, New Zealand, which was struck by a magnitude 6.3 earthquake in February 2011 that destroyed a large number of buildings. The urban area was subsequently rebuilt, and aerial imagery acquired in April 2012 contains 12,796 buildings within 20.5 km². This large building change detection dataset can be used to validate the robustness of the model in discovering changed buildings over a large area. The other two datasets come from Google Earth, and both involve explosions. Beirut is the capital and largest city of Lebanon; an explosion at its port on 4 August 2020 destroyed a large number of buildings. We selected the area most severely affected by the explosion as the test area, as shown in Figure 1c. On 12 August 2015, an explosion occurred in the Binhai New Area of Tianjin, China, damaging about 304 buildings. We selected remote sensing images acquired before and after the explosion for building damage assessment, as shown in Figure 1d.

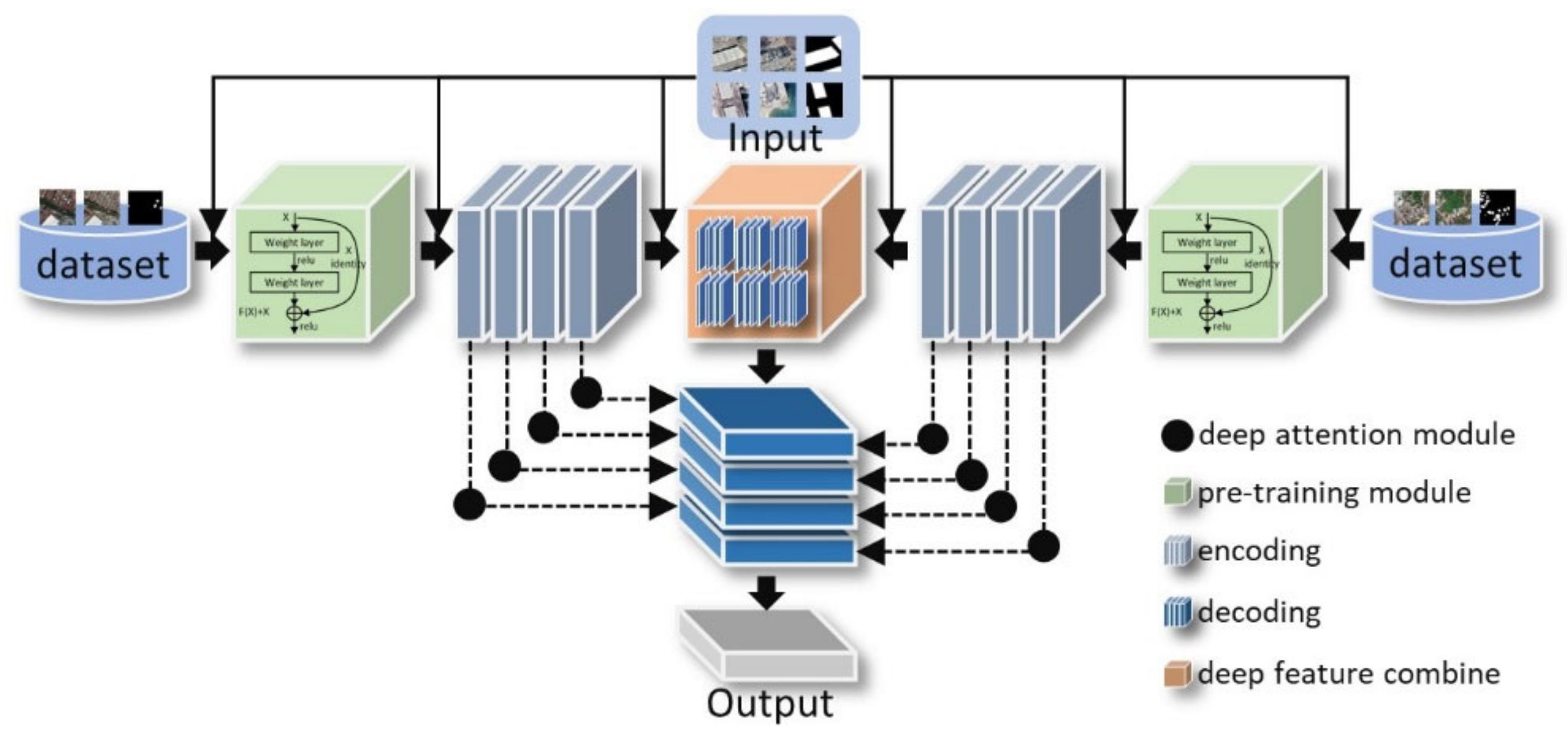

2.2. Basic Architecture of TDA-Net

The TDA-Net architecture consists of four parts: pretraining, encoding, decoding, and deep attention, as shown in Figure 2. The pretraining part uses the ResNet101 model [28], whose shortcut connections let each layer learn a residual, which makes training easier to optimize and allows the network to be deeper. Because ResNet101 is deep, it can extract high-level features; we use it as the front-end feature extractor, and its image features serve the downstream stages. The second stage consists of encoding modules. The encoding modules on both branches take the output of ResNet101, extract semantic information again, and finally form deep combined features. The third stage is decoding, in which the deep combined features are mapped to pixel-level classes. The fourth stage runs in parallel with the third: while the deep combined features are decoded and mapped, the deep attention module guides the network to combine low-level and high-level features so that useful feature information is combined and exploited effectively. Note that TDA-Net has two inputs, one receiving the pre-disaster image and the other the post-disaster image. The weights of the two branches are independent, and each branch uses ResNet101 to model the features of its own image phase. Modeling the two images separately with the pretrained ResNet101 captures the distribution of each phase's image data on its own terms. Combining the features that ResNet101 extracts from the two phases at different levels yields a clearer feature representation, which approaches that first concatenate the two images and then feed them into the model may not achieve. This divide-and-conquer strategy is widely used in the literature [43,44,45,46].
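The two-branch design can be illustrated with a toy NumPy sketch: two encoders with independent (unshared) weights each produce a pyramid of feature maps from their own image phase, and the features of the two phases are concatenated level by level. The toy encoder (a random 1 × 1 projection, ReLU, and 2 × 2 average pooling per level) merely stands in for ResNet101, and channel concatenation is an assumed fusion operator; the class and function names are hypothetical.

```python
import numpy as np

def avg_pool2(x):
    """2 x 2 average pooling on a (C, H, W) array with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

class ToyEncoder:
    """Stand-in for one ResNet101 branch: each level = 1x1 projection + ReLU + pooling."""

    def __init__(self, in_ch, widths, seed):
        rng = np.random.default_rng(seed)  # a separate seed per branch -> independent weights
        self.weights = []
        for out_ch in widths:
            self.weights.append(rng.standard_normal((out_ch, in_ch)) * 0.1)
            in_ch = out_ch

    def __call__(self, x):
        features = []
        for w in self.weights:
            x = np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)  # 1x1 conv + ReLU
            x = avg_pool2(x)
            features.append(x)
        return features  # one feature map per level

def fuse_bitemporal(pre_img, post_img, enc_pre, enc_post):
    """Encode each phase with its own branch, then concatenate features level by level."""
    return [np.concatenate([f_pre, f_post], axis=0)
            for f_pre, f_post in zip(enc_pre(pre_img), enc_post(post_img))]

enc_pre = ToyEncoder(3, widths=[8, 16, 32], seed=1)
enc_post = ToyEncoder(3, widths=[8, 16, 32], seed=2)
pre = np.random.default_rng(0).random((3, 32, 32))
post = np.random.default_rng(1).random((3, 32, 32))
fused = fuse_bitemporal(pre, post, enc_pre, enc_post)
print([f.shape for f in fused])  # [(16, 16, 16), (32, 8, 8), (64, 4, 4)]
```

Keeping the branches separate lets each one adapt to the radiometry of its own acquisition date, whereas early concatenation would force a single set of filters to handle both phases at once.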

To reuse low-level feature data and make the raw images useful for the high-level classification task, we inject the raw image data at three different nodes of the network to supplement the high-level abstract features. At each node, a different resampling strategy keeps the raw image size consistent with the feature map size of that layer. The details of the model are shown in Table 1.
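The NumPy sketch below shows one way to reinject the raw image at a node: the image is resampled to the spatial size of that node's feature map and concatenated along the channel axis. The strategies actually used are those listed in Table 1; here, block averaging for downsampling and nearest-neighbor repetition for upsampling are assumptions, as are integer scale factors and the example feature map shapes.

```python
import numpy as np

def resample_to(img, out_h, out_w):
    """Resample a (C, H, W) image to (C, out_h, out_w) by integer factors.

    Downsampling averages non-overlapping blocks; upsampling repeats pixels
    (nearest neighbor). Both axes are assumed to scale in the same direction.
    """
    c, h, w = img.shape
    if out_h <= h and out_w <= w:
        fh, fw = h // out_h, w // out_w
        assert fh * out_h == h and fw * out_w == w, "integer factors assumed"
        return img.reshape(c, out_h, fh, out_w, fw).mean(axis=(2, 4))
    fh, fw = out_h // h, out_w // w
    assert fh * h == out_h and fw * w == out_w, "integer factors assumed"
    return img.repeat(fh, axis=1).repeat(fw, axis=2)

def inject_raw(feature_map, raw_img):
    """Concatenate the resampled raw image onto a (C, H, W) feature map."""
    _, h, w = feature_map.shape
    return np.concatenate([feature_map, resample_to(raw_img, h, w)], axis=0)

raw = np.random.default_rng(0).random((3, 64, 64))
# Hypothetical feature map shapes at three nodes of the network.
for feat_shape in [(256, 16, 16), (128, 32, 32), (64, 128, 128)]:
    out = inject_raw(np.zeros(feat_shape), raw)
    print(feat_shape, "->", out.shape)
```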

To keep the input distribution of each layer stable and speed up training, we added a normalization layer to each encoding and decoding module. ReLU is used as the nonlinear activation function in each layer, and the Sigmoid function is applied at the end of the network to transform the features into the network's output.
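As a sketch of the per-layer computation described above, the NumPy code below applies batch normalization (per-channel statistics over the batch and spatial axes), then ReLU, and shows a Sigmoid output mapping. Treating the normalization layer as batch normalization in training mode, the epsilon value, the identity scale and shift, and the toy one-channel logit map are all assumptions for illustration.

```python
import numpy as np

def batch_norm(x, gamma=None, beta=None, eps=1e-5):
    """Batch normalization of an (N, C, H, W) batch using the batch's own statistics."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)  # per-channel mean
    var = x.var(axis=(0, 2, 3), keepdims=True)    # per-channel variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    n_ch = x.shape[1]
    gamma = np.ones((1, n_ch, 1, 1)) if gamma is None else gamma  # learnable scale
    beta = np.zeros((1, n_ch, 1, 1)) if beta is None else beta    # learnable shift
    return gamma * x_hat + beta

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)
# Two channels with very different offsets and scales, shape (N, C, H, W).
x = rng.normal(loc=[5.0, -3.0], scale=[10.0, 0.1], size=(4, 8, 8, 2)).transpose(0, 3, 1, 2)
y = batch_norm(x)         # each channel now has ~zero mean and ~unit variance
hidden = relu(y)          # hidden layers: normalization followed by ReLU
prob = sigmoid(y[:, :1])  # toy output layer: a one-channel logit map squashed into (0, 1)
```

Without the normalization step, the first channel would dominate any subsequent layer simply because its values are about 100 times larger than those of the second.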

In binary classification, a severe imbalance between positive and negative samples is almost inevitable [21,47]. Disasters are rare, and even when one strikes an area, it does not necessarily damage buildings. Some minor damage is difficult to detect and is not apparent in optical images. To train effectively when damaged-building samples are few and the buildings are located in complex environments, we use the focal loss function [48]. Focal loss down-weights the many easy negative samples during training and focuses learning on hard samples. It modifies the cross-entropy loss with two modulation factors: one balances positive and negative samples, and the other shifts learning from easy samples toward hard ones. Its formula is as follows:

$$FL(p_t) = -\alpha_t \, (1 - p_t)^{\gamma} \log(p_t)$$

where $\alpha_t$ and $\gamma$ are the sample modulation factors, and $p_t$ is the predicted probability of the ground-truth class ($p_t = p$ for a damaged sample and $p_t = 1 - p$ otherwise, where $p$ is the predicted probability of damage).
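A minimal pure-Python sketch of the binary focal loss of [48] follows, written per pixel with `p` the predicted probability of the damaged class and `y` the 0/1 label. The defaults α = 0.25 and γ = 2 are the values recommended in [48]; the values used for TDA-Net are not stated here, so treat them as assumptions. Setting γ = 0 and α = 0.5 reduces the loss to half of the binary cross-entropy, which makes the role of the modulation factor easy to check.

```python
import math

def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss FL = -alpha_t * (1 - p_t)**gamma * log(p_t) for one pixel.

    p: predicted probability of the positive (damaged) class; y: label in {0, 1}.
    """
    p = min(max(p, eps), 1.0 - eps)  # clip so that log() stays finite
    p_t = p if y == 1 else 1.0 - p   # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

def mean_focal_loss(probs, labels, **kwargs):
    """Average the per-pixel focal loss over a flattened prediction map."""
    return sum(focal_loss(p, y, **kwargs) for p, y in zip(probs, labels)) / len(probs)

# An easy negative (p = 0.1, y = 0) contributes far less than a hard positive (p = 0.1, y = 1).
easy_negative = focal_loss(0.1, 0)
hard_positive = focal_loss(0.1, 1)
print(f"{easy_negative:.5f} {hard_positive:.5f}")
```

The factor (1 − p_t)^γ is what suppresses the easy negative: with p_t = 0.9 it scales the cross-entropy term by 0.01, while the hard positive keeps 81% of its cross-entropy.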

2.3. Pretraining Residuals Module

Adequate pretraining benefits downstream tasks because the pretrained model has already learned to model similar data. ResNet was proposed in 2015 and won first place in the ImageNet classification task that year. Since then, many methods have been built on ResNet50 or ResNet101, and ResNet has been used for detection, segmentation, and recognition. The residual units in the network (Figure 3) learn new features on top of the input features through shortcut connections, allowing the network to achieve better performance.
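For reference, the residual unit of [28] computes its output as the sum of a learned residual mapping and an identity shortcut; the form below is the standard one from the ResNet paper, not a TDA-Net-specific equation:

$$\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}$$

where $\mathbf{x}$ and $\mathbf{y}$ are the input and output of the unit and $\mathcal{F}$ is the residual mapping learned by the stacked layers with weights $W_i$. When the input and output dimensions differ, the shortcut applies a linear projection $W_s \mathbf{x}$ instead of the identity. Because the shortcut passes $\mathbf{x}$ through unchanged, each unit only has to learn the correction $\mathcal{F}$, which eases the optimization of very deep networks such as ResNet101.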

The ResNet101 we use is a publicly available model pretrained on the ImageNet dataset, whose final layers we rebuilt. The deep residual features at the end of the network are fed into a two-dimensional convolution block that reduces their dimensionality; this block consists of a two-dimensional convolution, batch normalization, and a ReLU activation. Finally, the reduced result is mapped nonlinearly by the Sigmoid function. The pretraining module is trained on the xView2 building damage assessment dataset with supervision on its outputs, adapting the model so that it can represent building damage information.

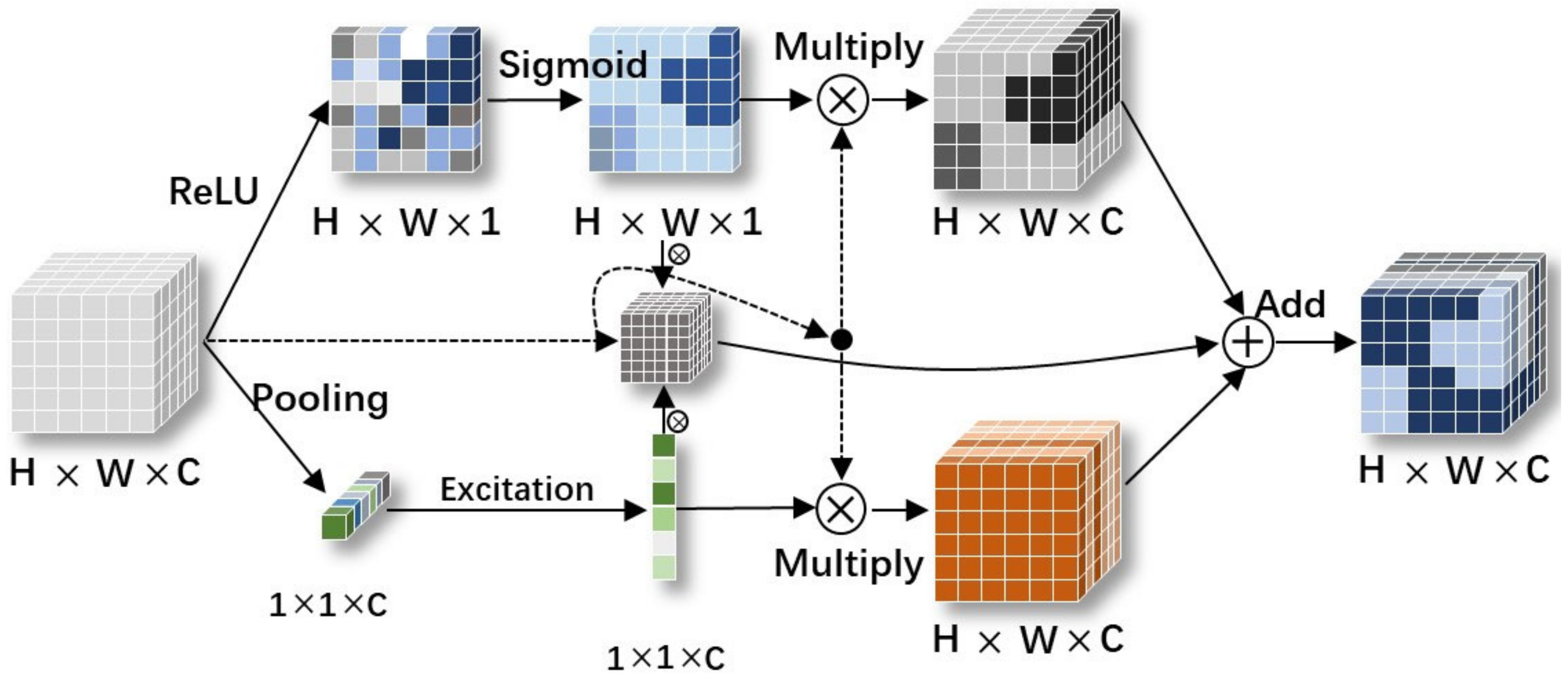

2.4. Deep Attention Module

Convolutional neural networks (CNNs) offer strong representational power, fast inference, and weight sharing, and they perform well in image segmentation [5,49]. The core computation of a CNN is the convolution operator, which learns new feature maps from input feature maps through convolution kernels. In essence, convolution fuses features over a local region, both spatially (the H and W dimensions) and across channels (the C dimension). Much of the work on convolution aims to enlarge the receptive field, that is, to fuse more features spatially or to extract multiscale spatial information. For channel-dimension fusion, a convolution by default connects all channels of the input feature map. Spatial feature fusion progressively abstracts spatial features as the network deepens. To make the model attend effectively at both the channel and spatial levels, we combine channel attention and spatial attention into a deep attention module (Figure 4). The channel attention mechanism uses global descriptors to capture the relationships between channels and assign a weight to each channel [50]. The spatial attention mechanism restricts activations to the regions being segmented and lowers background activations, strengthening important features and suppressing secondary ones. The deep attention module thus guides the model to focus on the regions and channels that need to be learned and to model image information effectively.

The deep attention module combines spatial and channel attention and also produces a variant obtained by multiplying the two. This variant has stronger guiding ability and integrates the advantages of spatial and channel attention. Unlike conventional approaches, the deep attention module does not simply concatenate the attention-weighted feature maps of the two branches; instead, the spatial and channel attention weights are multiplied with the input feature matrix simultaneously, yielding more integrated feature signals. Finally, the output of the deep attention module is obtained by adding these three results.

The calculation process of the transfer deep attention module is as follows:

where

denotes the transfer deep attention module,

denotes the spatial attention mechanism, and

denotes the channel attention mechanism;

is the sigmoid activation function,

is the ReLU activation function, and

z denotes global average pooling.
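The NumPy sketch below is one plausible reading of the description above, not the authors' exact formulation. Channel attention follows the squeeze-and-excitation pattern (global average pooling z, a ReLU bottleneck, and a sigmoid); spatial attention applies a sigmoid to a per-pixel mix of the channel-wise mean and max maps, which is our assumption. The output adds the channel-weighted, spatial-weighted, and jointly weighted copies of the input. The reduction ratio, weight shapes, and function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f, w1, w2):
    """SE-style channel weights for a (C, H, W) map: GAP -> FC -> ReLU -> FC -> sigmoid."""
    z = f.mean(axis=(1, 2))                      # global average pooling, shape (C,)
    hidden = np.maximum(w1 @ z, 0.0)             # ReLU bottleneck, shape (C // r,)
    return sigmoid(w2 @ hidden)[:, None, None]   # shape (C, 1, 1)

def spatial_attention(f, w_sp):
    """Spatial weights from channel-wise mean and max maps (illustrative 1x1 mixing)."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])          # shape (2, H, W)
    return sigmoid(np.einsum("k,khw->hw", w_sp, pooled))[None]  # shape (1, H, W)

def deep_attention(f, w1, w2, w_sp):
    """Sum of channel-weighted, spatial-weighted, and jointly weighted features."""
    m_c = channel_attention(f, w1, w2)
    m_s = spatial_attention(f, w_sp)
    return f * m_c + f * m_s + f * m_c * m_s

rng = np.random.default_rng(0)
c, r = 16, 4                                 # channels and reduction ratio (assumed)
f = rng.standard_normal((c, 8, 8))
w1 = rng.standard_normal((c // r, c)) * 0.1
w2 = rng.standard_normal((c, c // r)) * 0.1
w_sp = np.array([0.5, 0.5])
out = deep_attention(f, w1, w2, w_sp)
print(out.shape)  # (16, 8, 8)
```

Because every weight lies in (0, 1), each output value keeps the sign of the corresponding input value and is at most three times its magnitude, so the module rescales features rather than replacing them.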

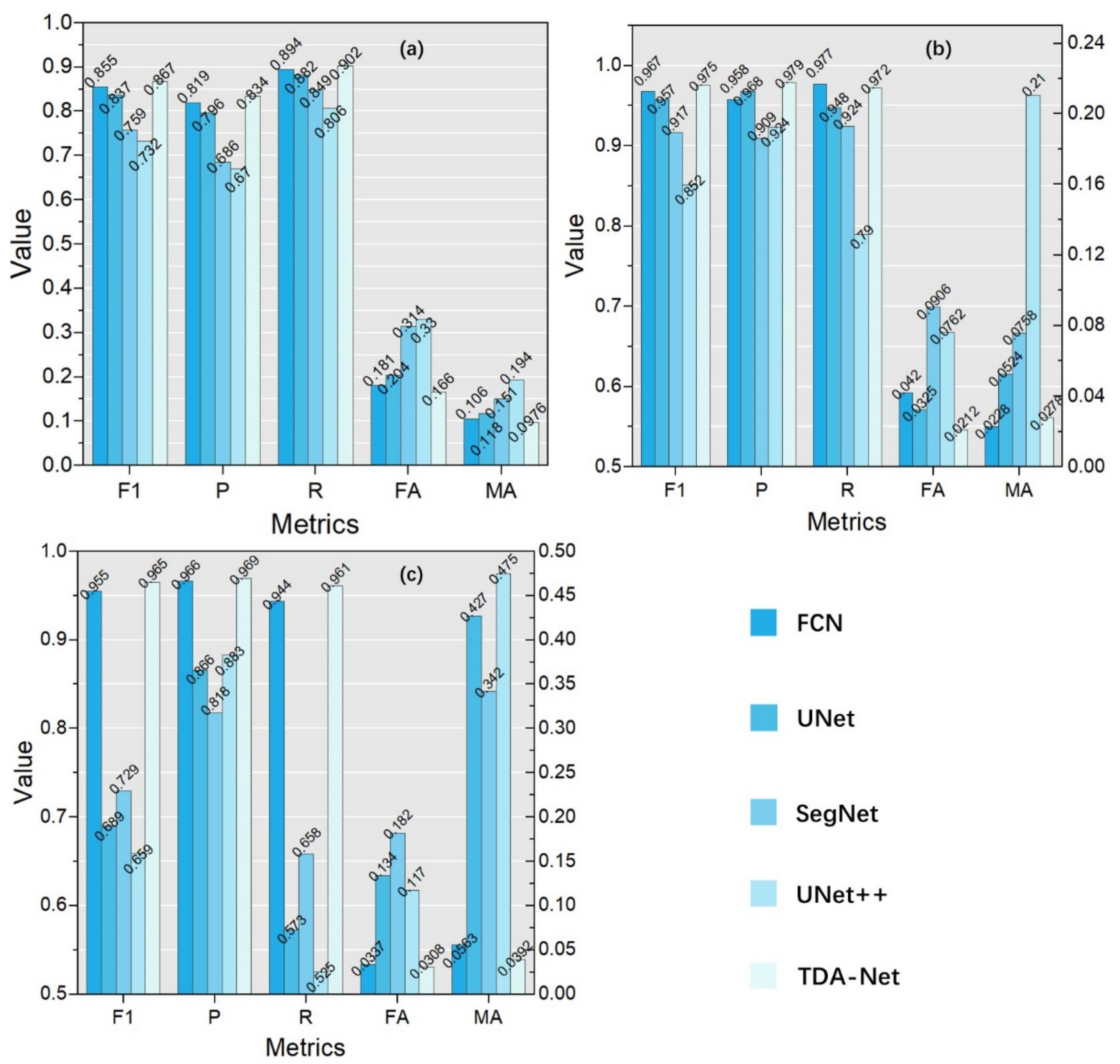

2.5. Comparison Methods and Evaluation Metrics

We evaluate our method from both quantitative and qualitative perspectives. The F1-score (F1), precision (P), recall (R), false alarm (FA), and missed alarm (MA) are used as quantitative evaluation metrics. We use FCN [37], UNet [38], SegNet [39], and UNet++ [51] as comparison models. The FCN is an optimized version that uses a pretrained VGG16 as the feature extraction layer. UNet and SegNet are the versions from the original papers: UNet is the classical encoding–decoding structure with four downsampling and four upsampling layers, and SegNet uses the first 13 layers of VGG16 as its encoder. For UNet++, we use the pruned L2 version.

Precision, recall, and F1 are calculated as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$

where TP is the number of positive samples correctly classified as positive, FP is the number of negative samples incorrectly classified as positive, TN is the number of negative samples correctly classified as negative, and FN is the number of positive samples incorrectly classified as negative.
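A minimal pure-Python sketch of these metrics follows, computing the confusion counts from flattened binary label and prediction maps (1 = damaged). Precision, recall, and F1 use their standard definitions. FA and MA are not spelled out in the text, so the code assumes the definitions common in change detection work, FA = FP / (FP + TN) and MA = FN / (TP + FN); treat these two as assumptions.

```python
def confusion_counts(labels, preds):
    """Count TP, FP, TN, FN for flattened binary maps (1 = damaged, 0 = background)."""
    tp = fp = tn = fn = 0
    for y, p in zip(labels, preds):
        if p == 1 and y == 1:
            tp += 1
        elif p == 1 and y == 0:
            fp += 1
        elif p == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0

def evaluate(labels, preds):
    tp, fp, tn, fn = confusion_counts(labels, preds)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    false_alarm = safe_div(fp, fp + tn)   # assumed definition of FA
    missed_alarm = safe_div(fn, tp + fn)  # assumed definition of MA (equals 1 - recall)
    return {"P": precision, "R": recall, "F1": f1, "FA": false_alarm, "MA": missed_alarm}

labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(evaluate(labels, preds))
```

In this toy example TP = 3, FP = 1, TN = 5, and FN = 1, so P = R = F1 = 0.75, FA = 1/6, and MA = 0.25.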

FCN was an early attempt at pixel-level image classification, addressing image segmentation at the semantic level. The FCN used in our experiments is not the original version but a modified one that uses VGG16 as a pretrained feature extraction backbone. We chose this version to further explore how much accuracy a pretraining module adds compared with an ordinary model.

UNet is a classic semantic segmentation network that has been used successfully in remote sensing image processing. It is a fully convolutional network with a U-shaped structure, with the encoding path on the left and the decoding path on the right. The UNet used in this paper is the classical version with four encoding and four decoding layers, but its output has been modified for binary semantic segmentation.

SegNet is also a fully convolutional network, but its encoder and decoder differ from those of FCN. The SegNet encoder uses the first 13 layers of VGG16, and each encoder layer has a corresponding decoder layer. The final decoder output is fed to a softmax classifier, which generates class probabilities for each pixel independently.

UNet++ improves on UNet. It addresses the unknown optimal network depth by efficiently ensembling U-Nets of different depths, and it adds a flexible feature fusion scheme and a pruning scheme for model acceleration. Although the dense skip connections of UNet++ capture features at different levels and bridge the semantic gap between encoder and decoder feature maps, they also introduce a large number of parameters, which makes the network slow. This time cost reduces the usability of UNet++, because disaster analysis and evaluation require a small time investment. The pruned L2 version of UNet++ achieves nearly the same detection accuracy as the L3 and L4 versions at a lower time cost. We believe the full UNet++ might give somewhat better results in our experiments, but the accuracy gain would be modest while the time cost would be high. We therefore used the pruned UNet++ model.

The comparison models are classical semantic segmentation networks that are widely used in remote sensing change detection, and their performance has been demonstrated by many researchers.

4. Discussion

Our experimental results suggest that the pretraining module contributes more to TDA-Net than the deep attention module. We believe this relates both to the feature extraction ability of ResNet101 and to the xView2 dataset. ResNet101 extracts features well, helping TDA-Net obtain useful feature information, and xView2 is a large disaster dataset covering many disaster types, so it can train the model effectively and give it strong data modeling capability. However, the larger gain from the pretraining module may also reflect the network design: the “nopre” variant is TDA-Net with all ResNet101 components removed, whereas the “noatt” variant removes only the deep attention module.

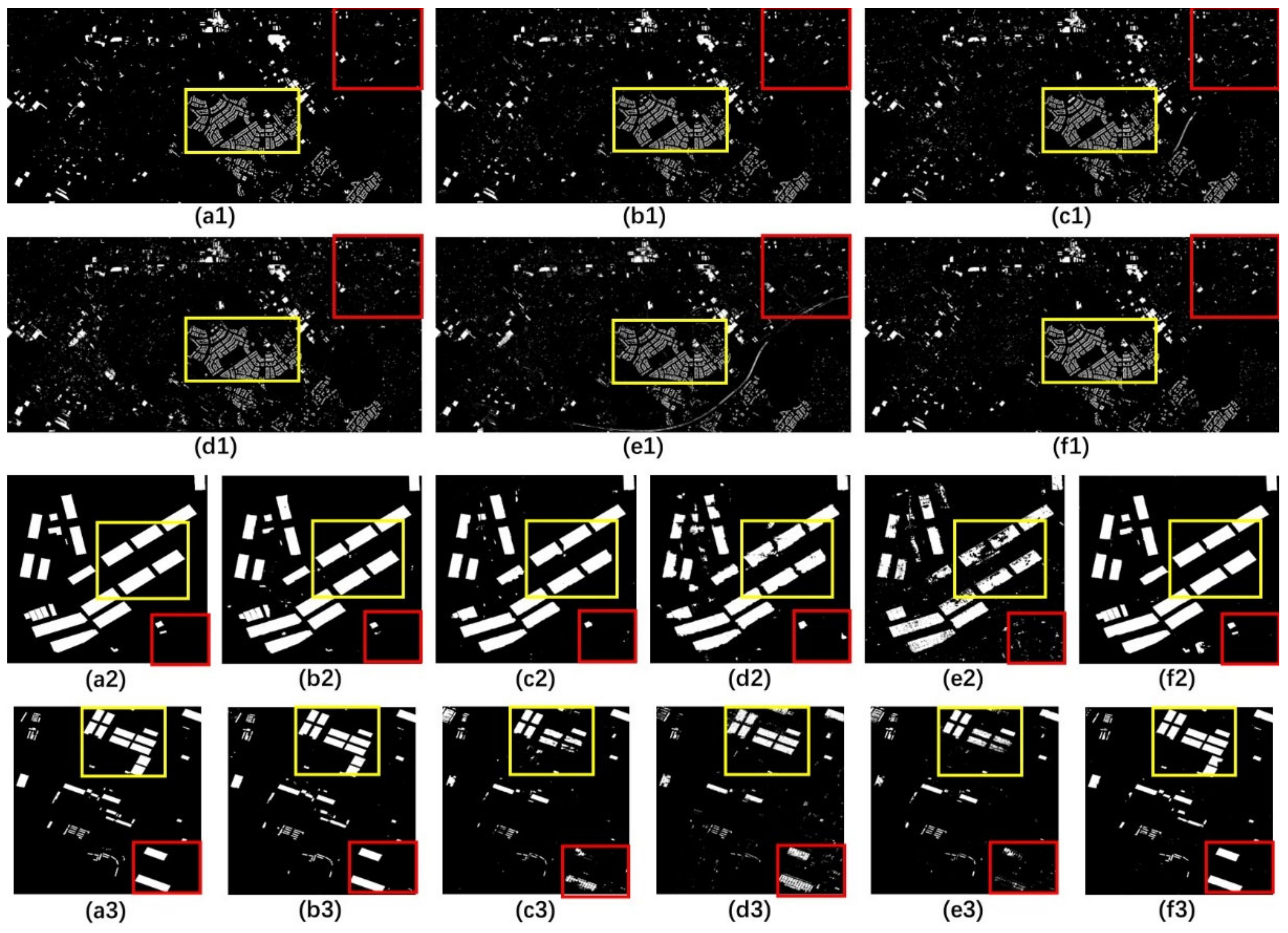

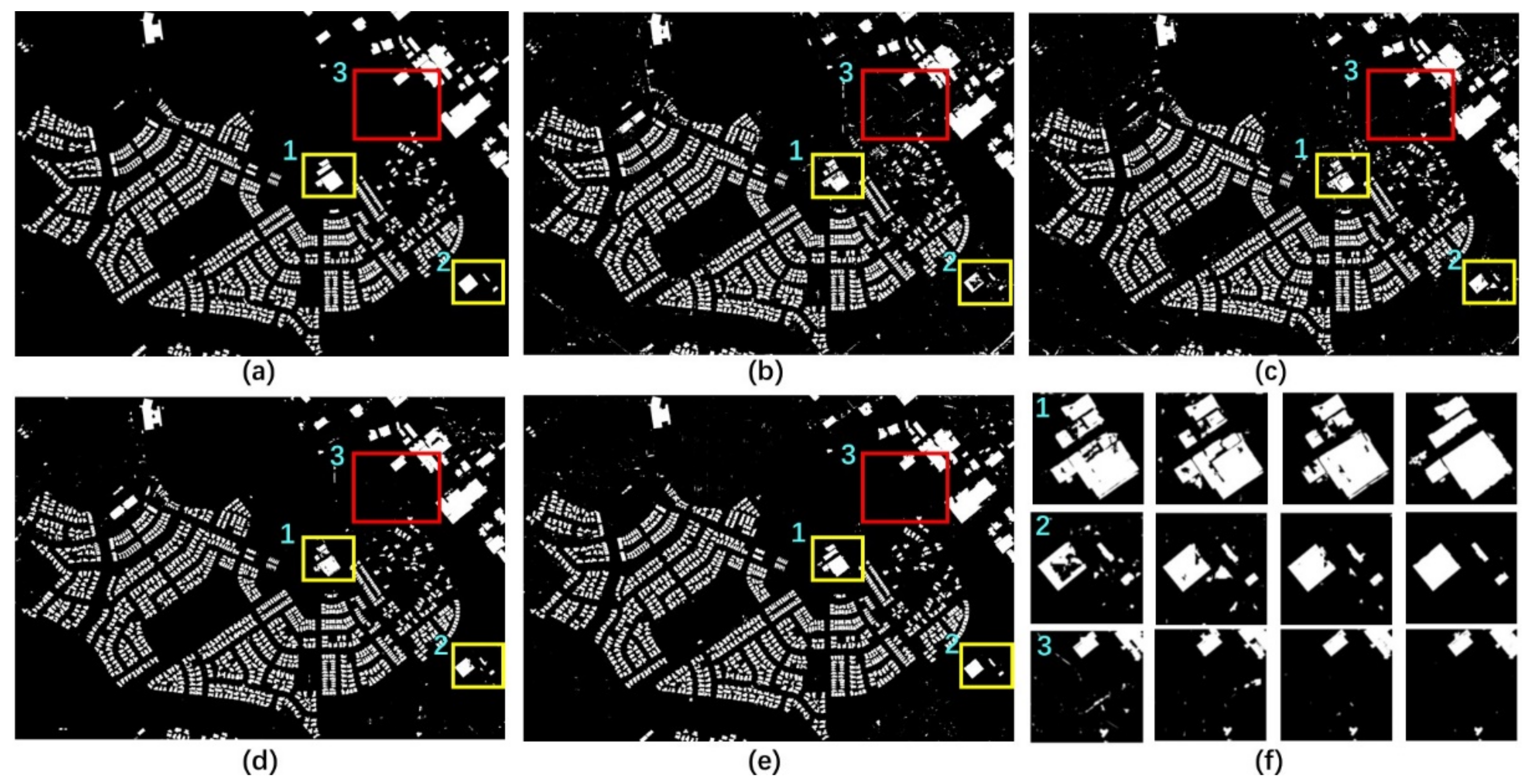

The roles that the pretrained model and the deep attention module play in TDA-Net are worth exploring. We designed an ablation experiment to verify their effects on the model and analyze their roles in TDA-Net. The ablation compares three variants: TDA-Net_nopre, with ResNet101 removed; TDA-Net_noatt, with the deep attention module removed; and TDA-Net_noprenoatt, with both removed. Specifically, TDA-Net_nopre is TDA-Net without ResNet101 as the front-end feature extractor. TDA-Net_noatt removes the deep attention module and replaces it with skip connections. TDA-Net_noprenoatt removes both ResNet101 and the attention module, retaining only the encoding–decoding structure and the low-dimensional feature reuse mechanism. Adam is again used as the optimizer, with a learning rate of 1 × 10⁻⁴. We took a core region of 7000 × 10,000 pixels from the WHU Building Dataset as the test area. The training data are the same as in Section 3.2, but training is shortened to 50 epochs. We used the four retrained models to extract building information from the test area (see Figure 10) and computed their evaluation metrics (see Table 4).

Adding the pretrained model and the deep attention module noticeably improves the performance of TDA-Net, and the results show an incremental trend: both components contribute positively. TDA-Net_noprenoatt performs worst, with many missed detections (yellow boxes) and false detections (red boxes), incompletely detected buildings, and poorly defined building edges. TDA-Net_nopre detects buildings more completely because the deep attention module improves its feature perception, but many false pixels remain. TDA-Net_noatt also performs satisfactorily and outperforms TDA-Net_nopre. TDA-Net performs best, with clear edges, complete building instances, and fewer false pixels. Notably, as Table 4 clearly shows, the pretraining module appears to improve performance more, whereas the deep attention module adds only a small further improvement. This may be because the pretrained model plays a more central role in the network, making the model more sensitive to image features and better able to fit key feature information. By contrast, the deep attention module, used in the middle stage of the network, only increases the model's feature awareness and does not help the front end of the network. As a result, it brings little improvement to the overall effectiveness of the model when only a small number of samples are used for training.

5. Conclusions

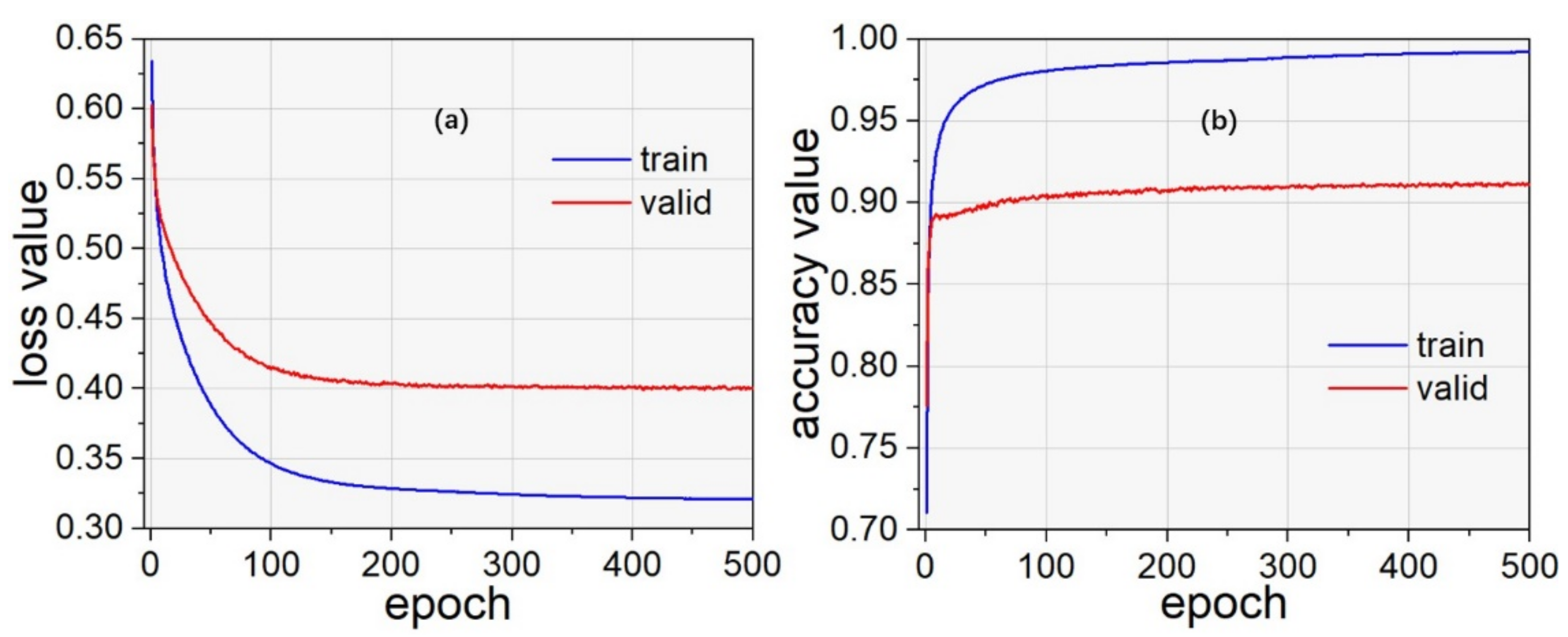

In this paper, we propose TDA-Net, a transfer learning-based deep attention network that quickly and accurately extracts information about damaged buildings in disaster areas. The main contribution of TDA-Net is maintaining high detection accuracy while remaining efficient. The network incorporates transfer learning: its pretrained dual-branch backbone effectively improves training efficiency, which is critical for rapid disaster response. The deep attention module embedded in the network captures essential and useful information in the image. With a short training cycle on a small number of samples, the model converges quickly and achieves high detection accuracy. We conducted experiments on three datasets, and the qualitative and quantitative results show that our method is feasible and accurate. In addition, our analysis of the model's time consumption shows that TDA-Net has high practical value, because it converges quickly and needs only a few training cycles to reach the expected validation accuracy.

In future work, optimizing the structure of TDA-Net is worth investigating, because TDA-Net takes the longest time in the detection phase. Optimizing its intermediate computations may reduce time consumption. In addition, we fine-tuned the model on a small fraction of the data in our experiments; future studies may explore ways to skip fine-tuning and achieve a more efficient detection process.