Remote sensing technology plays an important role in agricultural monitoring, geological survey, military survey, and target detection [1,2,3,4]. As an application of remote sensing technology, land cover classification is an active and challenging research topic. Accurate land cover classification is important for agricultural production and grain yield assessment, urban planning and construction, and ecological change monitoring [5,6,7]. Researchers have proposed multiple classifiers for land cover classification from remote sensing images [8], and the classification methods based on optical satellite images can be broadly grouped into spectral-based and spectral–spatial classification methods [9]. Spectral-based methods use the spectral values obtained from remote sensing images as features and classify pixels with statistical clustering or machine learning algorithms, including support vector machines (SVMs) [10], maximum likelihood [11], and random forest [12]. Spectral–spatial methods combine each pixel's own spectral values with auxiliary information constructed from its image neighborhood into a feature vector to achieve pixel-by-pixel classification of remote sensing images [13].
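The difference between the two feature types can be illustrated with a minimal, dependency-free sketch. It assumes that the auxiliary spatial information is simply the per-band mean over a small window around the pixel; the cited methods may construct other neighborhood features, and the function name and toy values are hypothetical.

```python
def spectral_spatial_features(image, row, col, radius=1):
    """Return [spectral values..., neighborhood means...] for one pixel.

    `image` is a list of bands, each band a list of rows (bands x height x width).
    The neighborhood mean is an assumed stand-in for the auxiliary spatial
    information; it is not taken from any of the cited methods.
    """
    height, width = len(image[0]), len(image[0][0])
    # Clip the window at the image border instead of padding.
    r0, r1 = max(0, row - radius), min(height, row + radius + 1)
    c0, c1 = max(0, col - radius), min(width, col + radius + 1)

    # Spectral part: the pixel's own value in every band.
    spectral = [band[row][col] for band in image]

    # Spatial part: mean of each band over the clipped window.
    spatial = []
    for band in image:
        window = [band[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        spatial.append(sum(window) / len(window))
    return spectral + spatial


# Toy 2-band, 3x3 image (values are made up for illustration).
image = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[10, 10, 10], [10, 20, 10], [10, 10, 10]],
]
center = spectral_spatial_features(image, 1, 1)
corner = spectral_spatial_features(image, 0, 0)
```

A spectral-based classifier would receive only the first half of each vector, whereas a spectral–spatial classifier receives the whole vector.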

Although the above classifiers can accurately classify land cover, they still need to be improved for precision agriculture classification. Thus, with the development of deep learning, classification methods based on deep learning have been widely used for land cover classification, such as convolutional neural networks (CNNs) [

14,

15,

16,

17,

18,

19], improved Transformer [

20,

21], etc. U-Net [

22], as an earlier CNN, was initially applied for segmenting medical images because U-Net only needs a small batch of data to produce accurate segmentation results. U-Net has also been applied for land cover classification tasks by many researchers, who have proposed many improved U-Net network structures to improve the semantic segmentation performance [

23,

24]. The U-Net model consists of an encoder and a decoder. The encoder extracts high-level features through a step-by-step downsampling operation. The decoder gradually upsamples the high-level features, and combines the skip connection to restore the feature map to the original size. U-Net loses large amounts of detailed information during downsampling; therefore, adding ASPP to U-Net helps to retain this detailed information while increasing the perceptual field [

19]. Zhang et al. [

14] added an ASPP module to the underlying U-Net, enabling the feature maps to be extracted with multiscale contextual information and reducing the confusion between different types of adjacent pixels. To overcome the problem of poor image contour recovery in the decoder process of U-Net, a conditional random field (CRF) was added to U-Net for post-processing [

17]. CRF processing can reduce mixing between similar ground object types. The Res-UNet proposed by Cao et al. [

15] is an organic combination between ResNet [

25] and U-Net [

22], where the residual unit of ResNet replaces the convolutional layer of U-Net so that shallow features more easily propagate to deep layers, improving the distinction between features with small differences in spectral signatures.Yan et al. used U-Net to extract features at different levels, input them into SVM classification, and performed a majority voting game on the classification results of features at different levels, fully considering the effects of shallow, mid-level, and deep-level features [

16]. Biserka Petrovska et al. [

26] used a combination of CNN and SVM with linear and radial basis function (RBF) kernels (RBF SVM), which extracts features by the fine-tuned CNN to put into the RBF SVM for classification. Biserka Petrovska et al. [

27] used a variety of mainstream CNNs to extract features from remote sensing images, fused the features extracted by different CNNs, and put them into SVM for classification. The U-Net + SVM approach requires the features extracted from U-Net to be input to SVM training, and SVM training is a time-consuming process. U-Net++, proposed by Chen et al. [

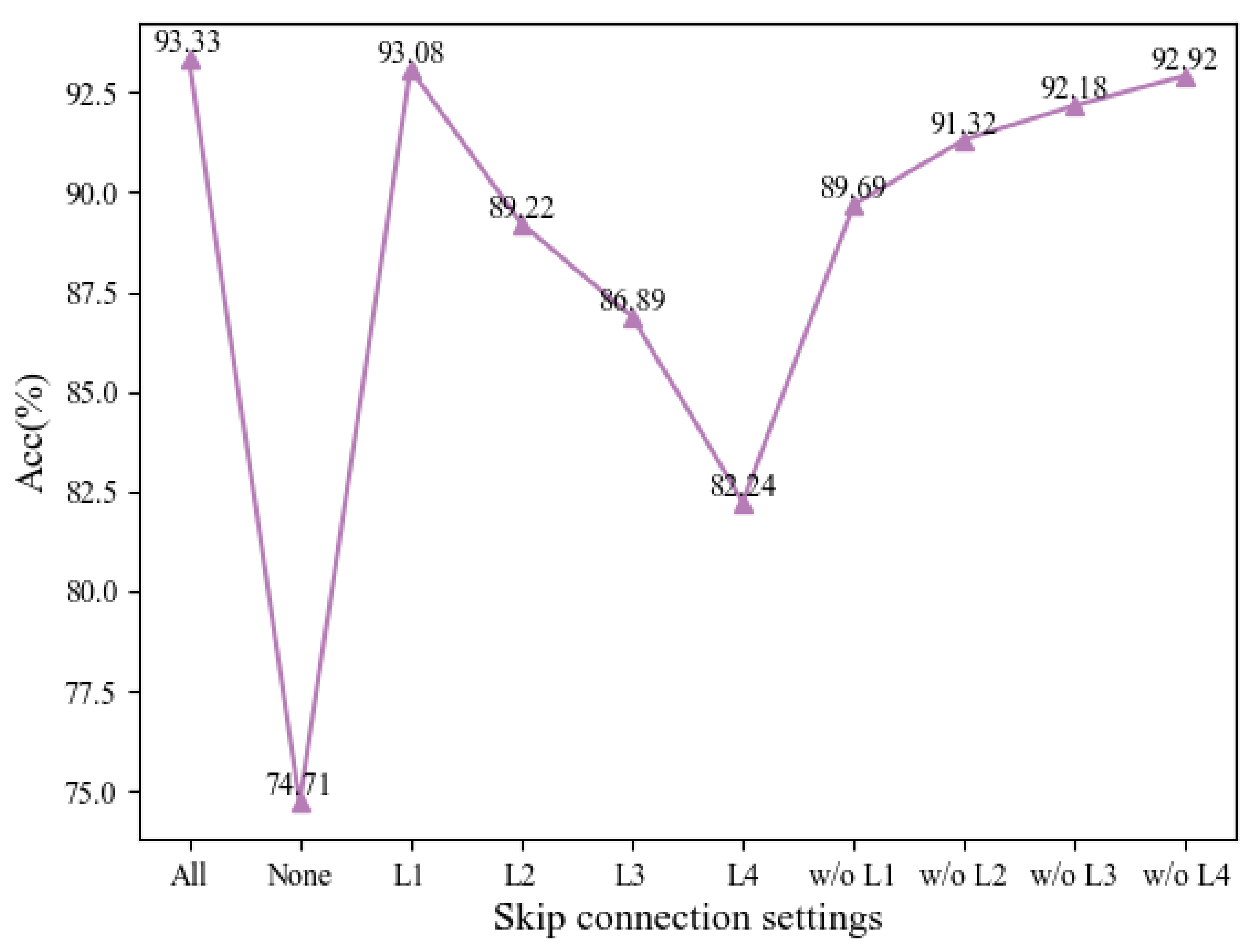

28] uses dense skip connections and subnetwork nesting to improve U-Net, but not all skip connections are beneficial for segmentation tasks, so some skip connections negatively affect the results [

29]. Remote sensing images contain rich spectral and spatial information, so selecting feature information favors classification is essential [

30]. The attention mechanism excels in natural language processing (NLP) and computer vision tasks. The attention mechanism can highlight features with strong representation ability and suppress irrelevant features [

31,

32,

33]. Therefore, the attention mechanism has been introduced into remote sensing image classification by many researchers. The attention mechanisms commonly used in remote sensing images include the channel attention, spatial attention, and self-attention mechanisms [

34]. Channel attention focuses on what is input, whereas spatial attention focuses on the location of the information, and spatial attention is complementary to channel attention. Channel attention uses global average pooling and global max pooling to obtain the relationship between feature channels to generate channel attention maps, and spatial attention uses average pooling and max pooling along the channel axis to obtain spatial feature attention maps [

32]. Zhu et al. [

35] enhanced the bands with strong characterization ability and suppressed irrelevant bands by introducing a spectral attention module that assigns different weights to the bands, improving the spatial attention mechanism that allows the network to assign neighboring pixel weights, and finally combining it with the residual unit to achieve high accuracy on the Indian Pines, University of Pavia, Kennedy (

http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes accessed on 1 January 2021). Different from directly embedding the attention module in the residual unit, Attention-UNet [

18] reduces the redundant features transmitted by the skip connections by adding attention gates to the U-Net skip connections, and highlights the salient features in specific local regions. High-resolution remote sensing images have certain features. Self-attention was originally used for NLP tasks. Due to the excellent performance of Transformer [

34], Alexey et al. [

36] proposed Vision Transformer (ViT) using the encoder part of Transformer. Although ViT performs well on large datasets such as imageNet, its memory consumption is large, so ViT has high hardware requirements. To improve efficiency, Chen et al. [

37] proposed TransUNet, which uses the image blocks from the feature map of CNN for the input sequence of ViT, and then combines it with U-Net. The decoder upsamples the output features of ViT and then combines it with the CNN feature map of the same size to recover the spatial information, which effectively improves the semantic information of the image. Because the Swin Transformer [

38], having powerful global modeling capability, has shown superior performance on several large visual datasets, some researchers have combined the Swin Transformer with U-Net to achieve semantic segmentation [

20,

21,

39]. The Swin Transformer used by ST-UNet [

20] was paralleled with CNN to improve the accuracy of small-scale target segmentation by combining the global features of the Swin Transformer with the local features of CNN through an aggregation module.
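The pooling steps behind the channel and spatial attention maps discussed above can be sketched in a few lines of plain Python. This is only an illustration of which axis each mechanism pools over: the learned layers that the cited attention modules place after the pooling are replaced here by a plain sum followed by a sigmoid, which is an assumption made to keep the example self-contained. All names and values are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(features):
    """One weight per channel from global average and global max pooling."""
    weights = []
    for channel in features:
        values = [v for row in channel for v in row]
        avg_pool = sum(values) / len(values)
        max_pool = max(values)
        # Assumption: a plain sum stands in for the learned layers.
        weights.append(sigmoid(avg_pool + max_pool))
    return weights


def spatial_attention(features):
    """One weight per location from average and max pooling along the channel axis."""
    height, width = len(features[0]), len(features[0][0])
    attention = []
    for r in range(height):
        row = []
        for c in range(width):
            across = [channel[r][c] for channel in features]
            avg_pool = sum(across) / len(across)
            max_pool = max(across)
            # Assumption: a plain sum stands in for the learned layers.
            row.append(sigmoid(avg_pool + max_pool))
        attention.append(row)
    return attention


# Toy feature map with 2 channels of size 2x2 (made-up values).
features = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[2.0, -2.0], [0.0, 4.0]],
]
channel_weights = channel_attention(features)   # length = number of channels
spatial_weights = spatial_attention(features)   # shape = height x width
```

The channel weights form a vector with one entry per channel, while the spatial weights form a map with one entry per pixel location, which is why the two mechanisms are complementary.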

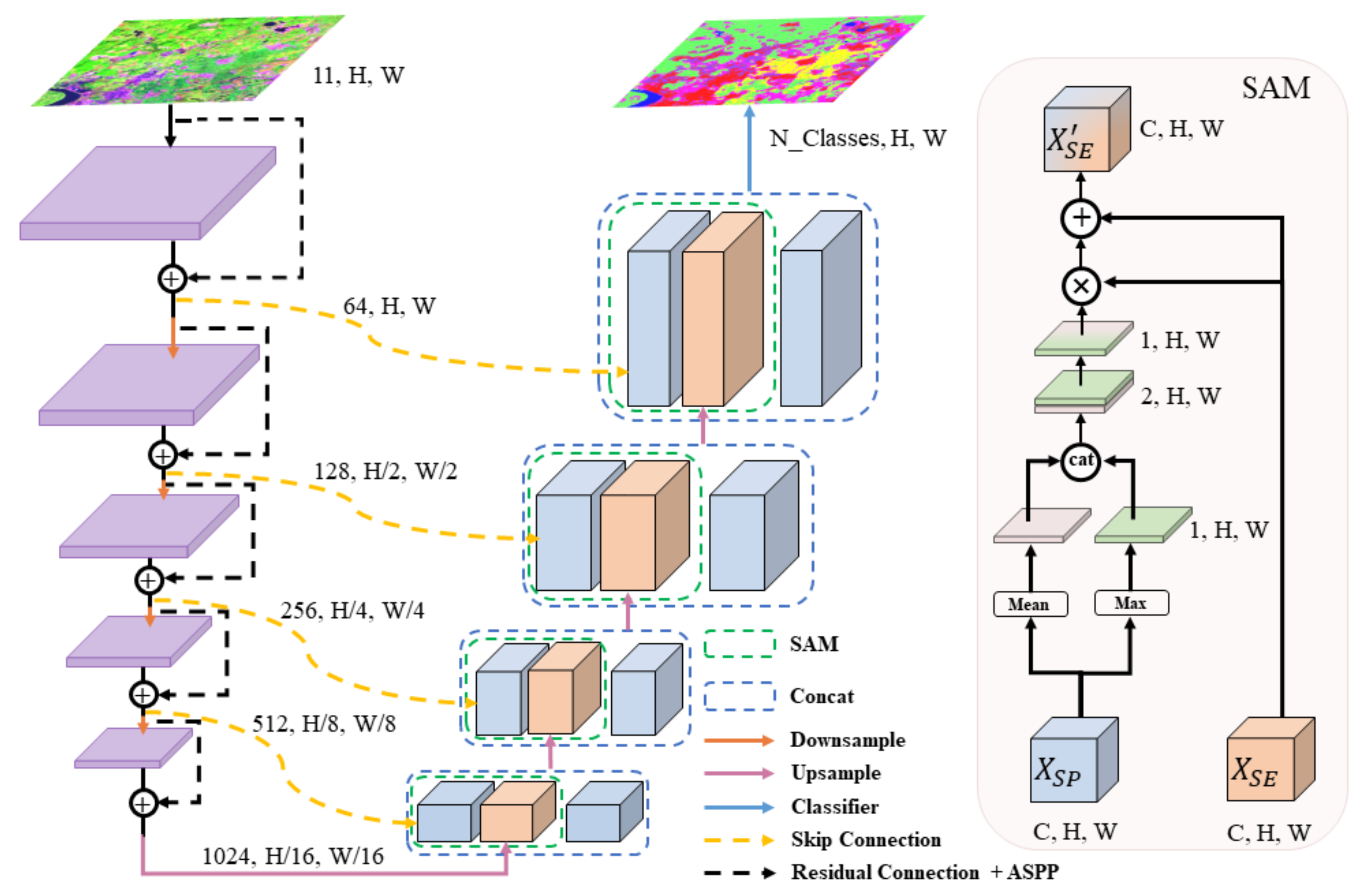

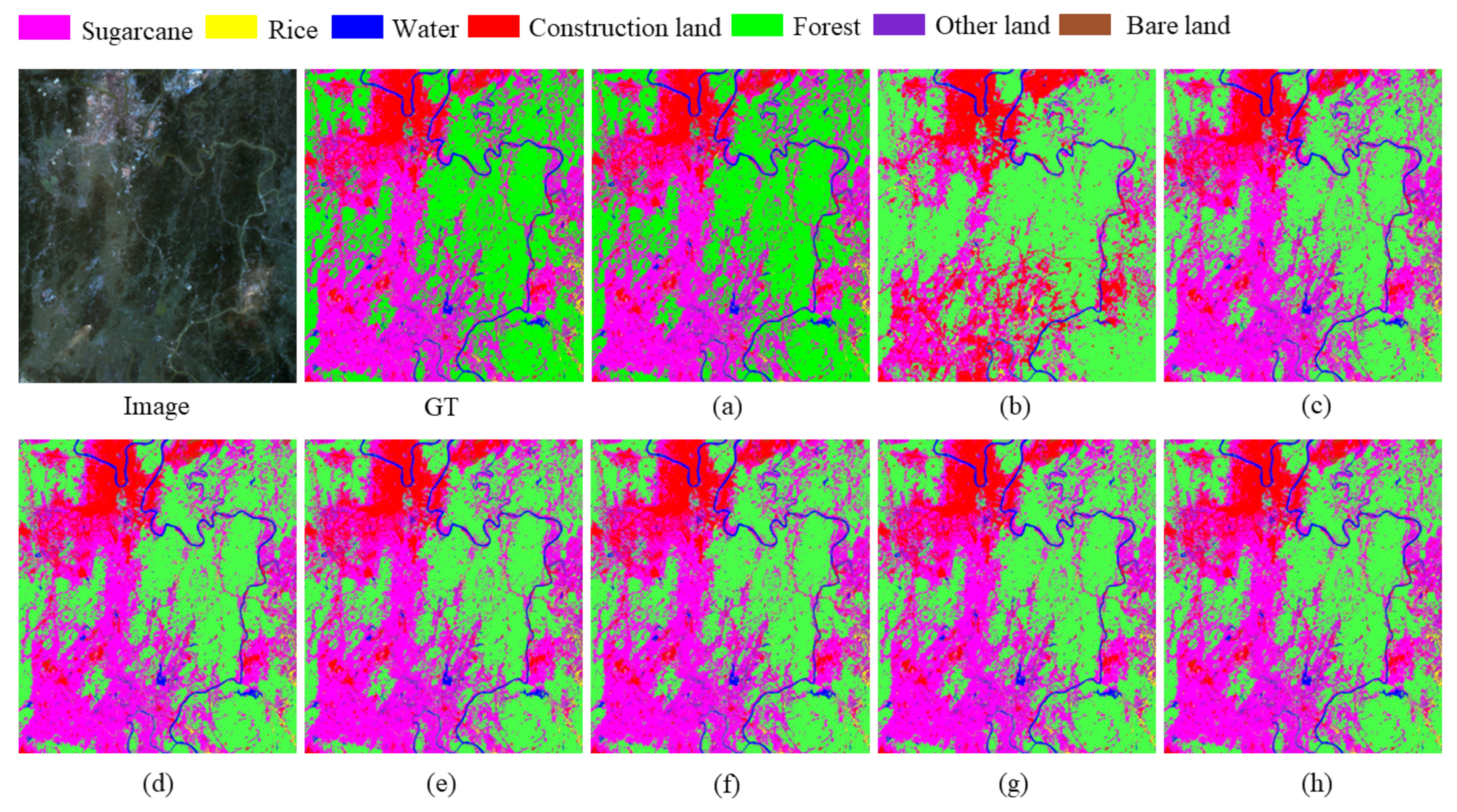

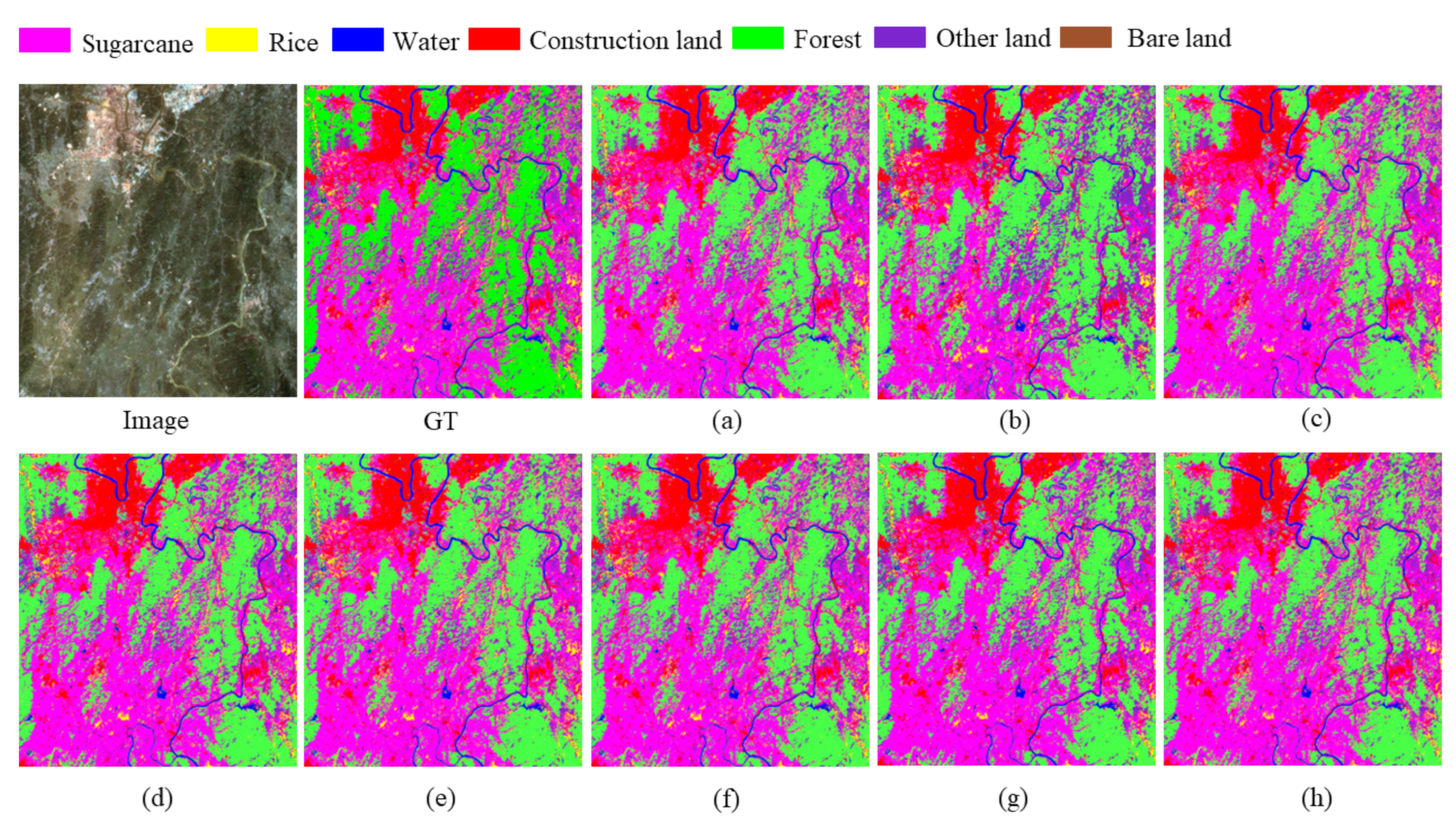

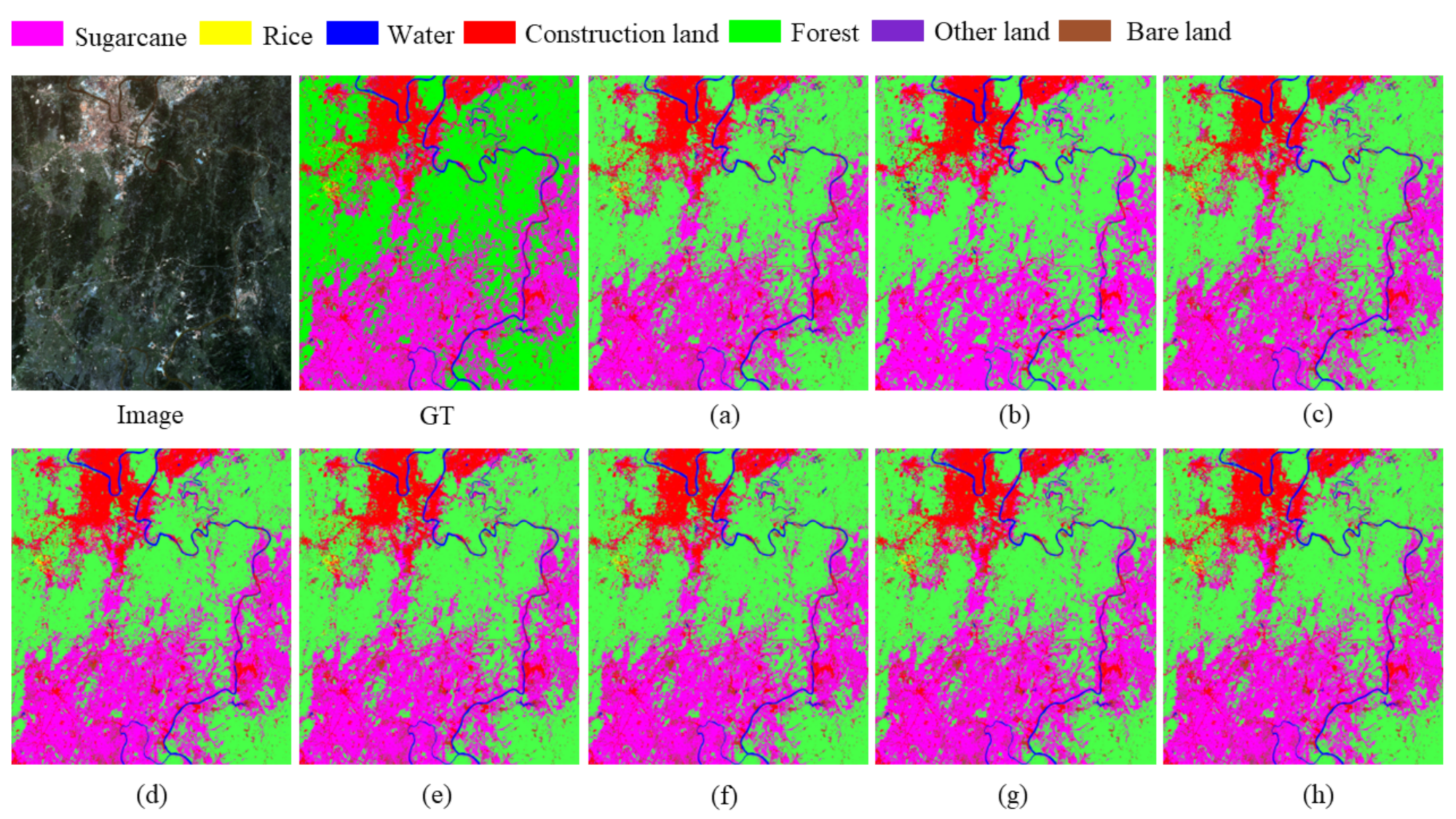

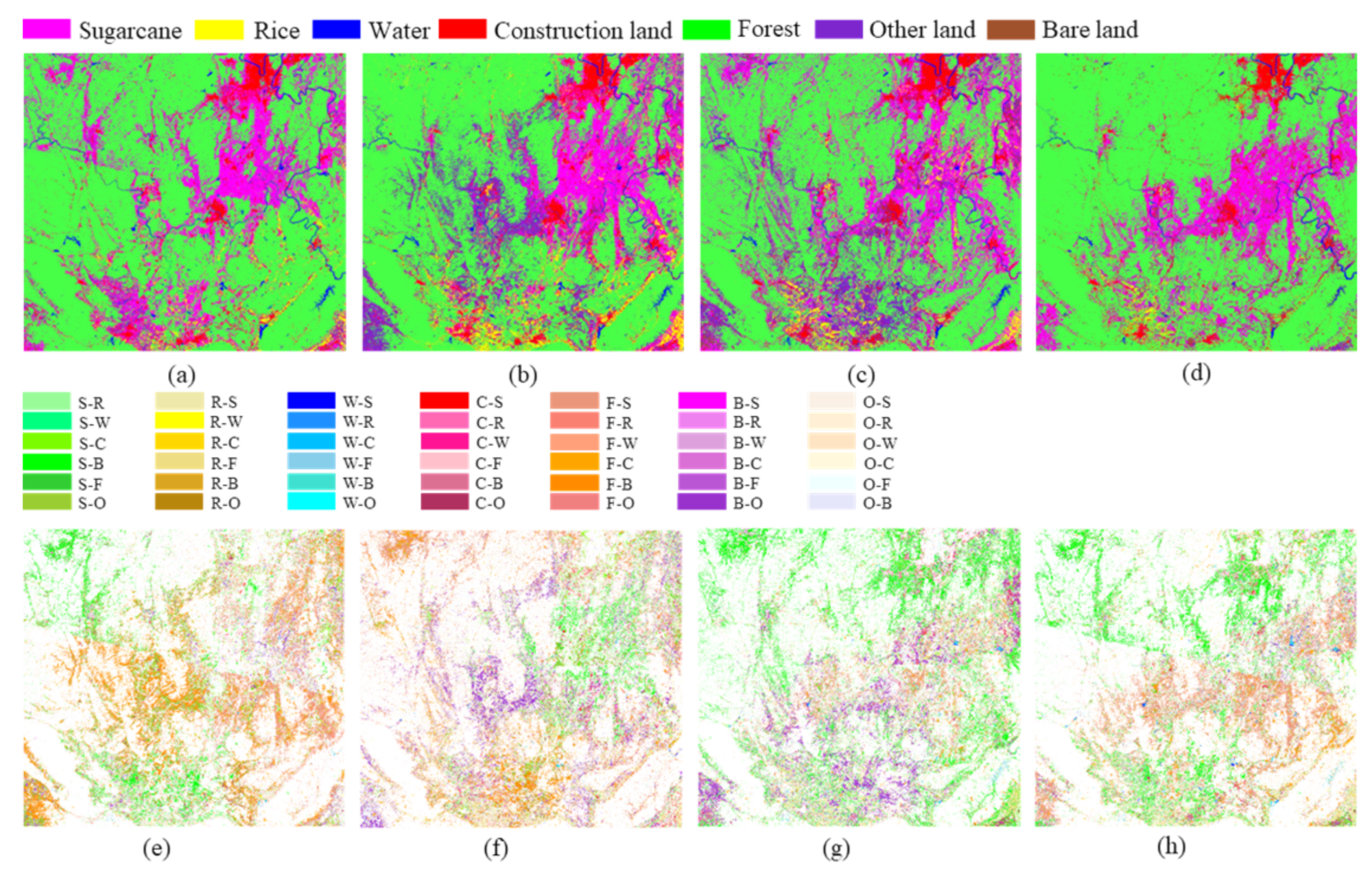

The algorithms described above, which adopt different optimization strategies for different classification tasks, provided reference ideas for the study described in this paper. In experiments aimed at further improving the classification accuracy of remote sensing images, deepening the network was found to reduce spatial resolution and disperse spatial information. Therefore, this paper proposes an improved U-Net remote sensing classification algorithm that integrates attention and multiscale features. First, the algorithm uses dilated convolutions of different scales to expand the receptive field so that the network effectively integrates multiscale features and enhances shallow features. Second, a fusion residual module deeply fuses the shallow and deep features so that the characteristics of both are used effectively. Third, to integrate more spatial information into the upsampled feature maps, a spatial attention module (SAM) fuses the feature maps obtained from the skip connections with the upsampled feature maps, strengthening the combination of spatial and semantic information.
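How dilated convolutions of different rates enlarge the receptive field can be shown with a one-dimensional toy example. The sketch below is not the proposed network: it assumes a fixed kernel with hypothetical weights, works on a 1-D signal instead of a 2-D feature map, and fuses the branches by summation with a residual connection, which is an assumption about the fusion made purely for illustration.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1-D cross-correlation with zero padding and a dilation rate.

    Assumes an odd kernel length so the output stays centred on the input.
    """
    half = (len(kernel) // 2) * dilation
    padded = [0.0] * half + list(signal) + [0.0] * half
    output = []
    for i in range(len(signal)):
        # Kernel taps are spaced `dilation` samples apart.
        output.append(sum(w * padded[i + k * dilation] for k, w in enumerate(kernel)))
    return output


def receptive_field(kernel_size, dilation):
    """Span of input samples covered by one dilated kernel."""
    return dilation * (kernel_size - 1) + 1


def multiscale_residual(signal, kernel, rates):
    """Sum the branches of several dilation rates and add the input back."""
    branches = [dilated_conv1d(signal, kernel, rate) for rate in rates]
    return [x + sum(branch[i] for branch in branches) for i, x in enumerate(signal)]


signal = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]  # unit impulse at index 3
kernel = [1.0, 1.0, 1.0]                       # hypothetical fixed weights
fields = [receptive_field(len(kernel), rate) for rate in (1, 2, 3)]
fused = multiscale_residual(signal, kernel, rates=(1, 2, 3))
```

With the same three-tap kernel, the rates 1, 2, and 3 cover 3, 5, and 7 input samples, so the impulse in the middle influences every output position after fusion without any downsampling.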

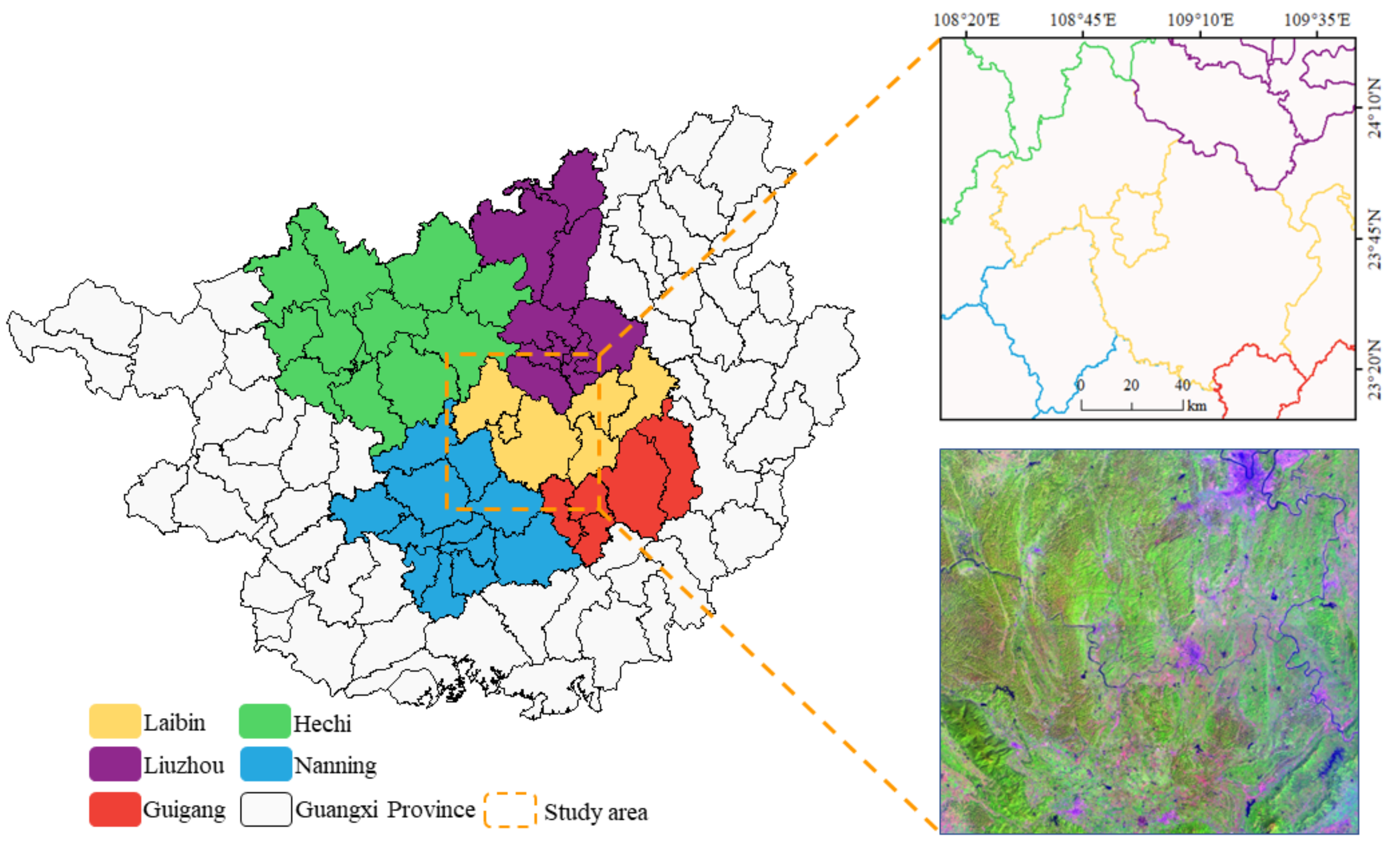

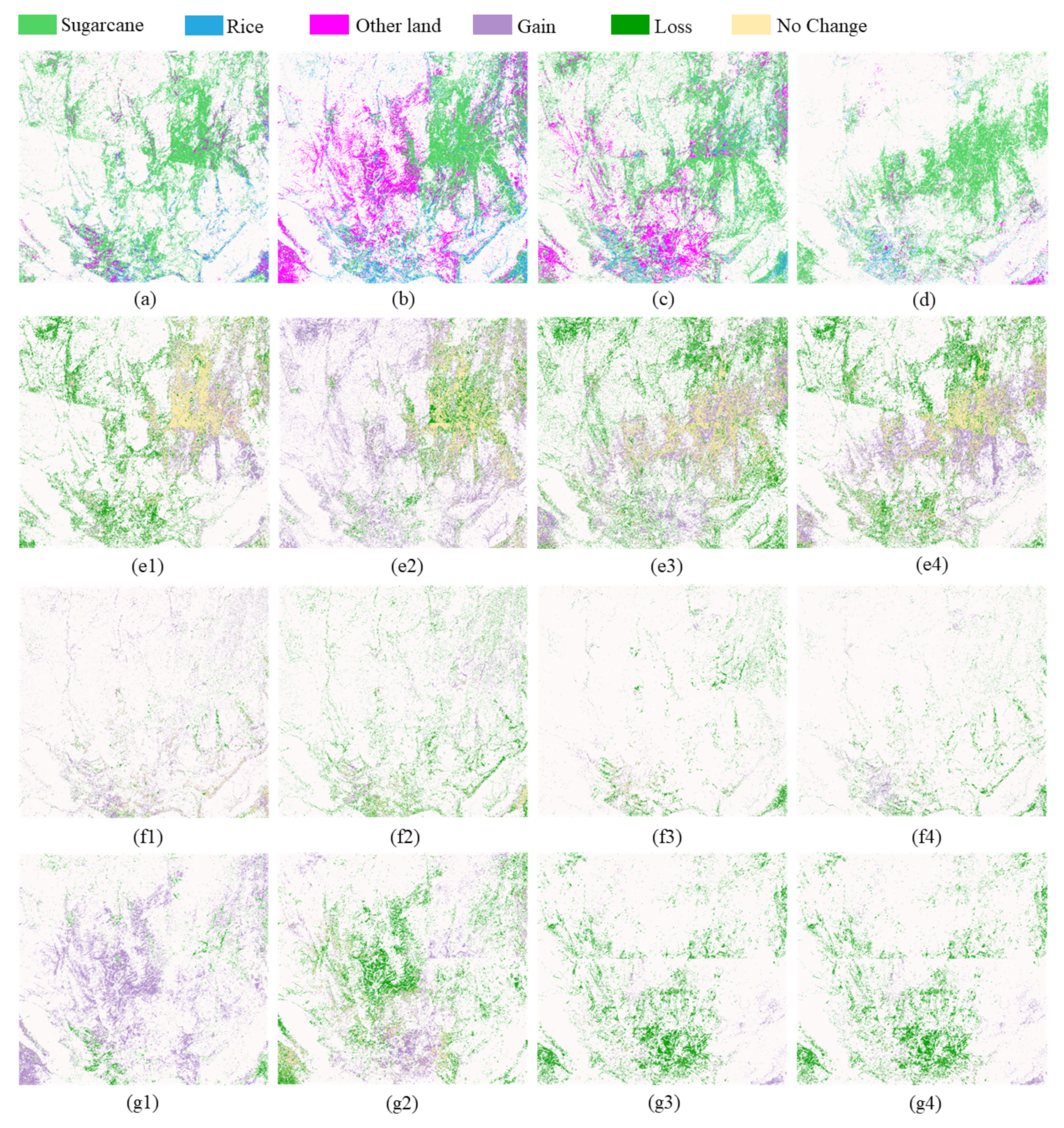

In this paper, U-Net is combined with ASPP and SAM and optimized for land cover classification from Landsat 8 remote sensing images. The main contributions include (1) exploring the effect of different hierarchical features of U-Net on the land cover classification of 30 m resolution Landsat 8 images; (2) introducing the ASPP module and connecting it to the original U-Net through residual connections, which not only increases the fusion of multiscale features but also enhances the expression of shallow information; (3) introducing SAM to obtain a spatial weight matrix from the feature maps with richer spatial information, so that the spatial weight matrix acts on the corresponding semantic feature maps to produce feature maps that combine spatial and semantic information; and (4) conducting a dynamic change analysis of land use in the study area, focusing on the dynamic change in the crop planting area.
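For contribution (4), one simple way to turn two classified maps into area changes is to count pixels per class and multiply by the pixel footprint. The sketch below assumes that approach; the procedure actually used in the study is not described in this section. At 30 m resolution each pixel covers 900 m², or 0.09 ha. The label maps and class names are hypothetical.

```python
from collections import Counter

PIXEL_SIZE_M = 30                            # Landsat 8 resolution stated above
PIXEL_AREA_HA = PIXEL_SIZE_M ** 2 / 10_000   # 900 m^2 = 0.09 ha per pixel


def class_areas(label_map):
    """Area in hectares covered by each class in a 2-D label map."""
    counts = Counter(label for row in label_map for label in row)
    return {label: n * PIXEL_AREA_HA for label, n in counts.items()}


def area_change(earlier, later):
    """Per-class area difference (later minus earlier), in hectares."""
    before, after = class_areas(earlier), class_areas(later)
    return {label: after.get(label, 0.0) - before.get(label, 0.0)
            for label in sorted(set(before) | set(after))}


# Hypothetical 3x3 label maps for two acquisition dates.
map_t1 = [["crop", "crop", "water"],
          ["crop", "urban", "water"],
          ["crop", "urban", "urban"]]
map_t2 = [["crop", "urban", "water"],
          ["crop", "urban", "water"],
          ["urban", "urban", "urban"]]
change = area_change(map_t1, map_t2)
```

In this toy case two crop pixels become urban, so the crop area decreases by 0.18 ha and the urban area increases by the same amount, while the water area is unchanged.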