Abstract

Visual SLAM (VSLAM) has been developing rapidly due to its advantages of low-cost sensors, the easy fusion of other sensors, and richer environmental information. Traditional visionbased SLAM research has made many achievements, but it may fail to achieve wished results in challenging environments. Deep learning has promoted the development of computer vision, and the combination of deep learning and SLAM has attracted more and more attention. Semantic information, as high-level environmental information, can enable robots to better understand the surrounding environment. This paper introduces the development of VSLAM technology from two aspects: traditional VSLAM and semantic VSLAM combined with deep learning. For traditional VSLAM, we summarize the advantages and disadvantages of indirect and direct methods in detail and give some classical VSLAM open-source algorithms. In addition, we focus on the development of semantic VSLAM based on deep learning. Starting with typical neural networks CNN and RNN, we summarize the improvement of neural networks for the VSLAM system in detail. Later, we focus on the help of target detection and semantic segmentation for VSLAM semantic information introduction. We believe that the development of the future intelligent era cannot be without the help of semantic technology. Introducing deep learning into the VSLAM system to provide semantic information can help robots better perceive the surrounding environment and provide people with higher-level help.

1. Introduction

People need the mobile robot to perform some tasks by themselves, which needs the robot to be able to adapt to an unfamiliar environment. Therefore, SLAM [1] (Simultaneous Localization and Mapping), which enables localization and mapping in unfamiliar environments, has become a necessary capacity for autonomous mobile robots. Since it was first proposed in 1986, SLAM has attracted extensive attention from many researchers and developed rapidly in robotics, virtual reality, and other fields. SLAM refers to self-positioning based on location and map, and building incremental maps based on self-positioning. It is mainly used to solve the problem of robot localization and map construction when moving in an unknown environment [2]. SLAM, as a basic technology, has been applied to mobile robot localization and navigation in the early stage. With the development of computer technology (hardware) and artificial intelligence (software), robot research has received more and more attention and investment. Numerous researchers are committed to making robots more intelligent. SLAM is considered to be the key to promoting the real autonomy of mobile robots [3].

Some scholars divide SLAM into Laser SLAM and Visual SLAM (VSLAM) according to the different sensors adopted [4]. Compared with VSLAM, because of an early start, laser SLAM studies abroad are relatively mature and have been considered the preferred solution for mobile robots for a long time in the past. Similar to human eyes, VSLAM mainly uses images as the information source of environmental perception, which is more consistent with human understanding and has more information than laser SLAM. In recent years, camera-based VSLAM research has attracted extensive attention from researchers. Due to the advantages of cheap, easy installation, abundant environmental information, and easy fusion with other sensors, many vision-based SLAM algorithms have emerged [5]. VSLAM has the advantage of richer environmental information and is considered to be able to give mobile robots stronger perceptual ability and be applied in some specific scenarios. Therefore, this paper focuses on VSLAM and combs out the algorithms derived from it. SLAM based on all kinds of laser radar is not within the scope of discussion in this paper. Interested readers can refer to [6,7,8] and other sources in the literature.

As one of the solutions for autonomous robot navigation, traditional VSLAM is essentially a simple environmental understanding based on image geometric features [9]. Because traditional VSLAM only uses the geometric feature of the environment, such as points and lines, to face this low-level geometry information, it can reach a high level in real-time. Facing changes in lighting, texture, and dynamic objects are widespread, which shows the obvious shortage, in terms of position precision and robustness is flawed [10]. Although the map constructed by traditional visual SLAM includes important information in the environment and meets the positioning needs of the robot to a certain extent. It is inadequate in supporting the autonomous navigation and obstacle avoidance tasks of the robot. Furthermore, it cannot meet the interaction needs of the intelligent robot with the environment and humans [11].

People’s demand for intelligent mobile robots is increasing day by day, which put forward a high need for autonomous ability and the human–computer interaction ability of robots [12]. The traditional VSLAM algorithm can meet the basic positioning and navigation requirements of the robot, but cannot complete higher-level tasks such as “help me close the bedroom door”, “go to the kitchen and get me an apple”, etc. To achieve such goals, robots need to recognize information about objects in the scene, find out their locations and build semantic maps. With the help of semantic information, the data association is upgraded from the traditional pixel level to the object level. Furthermore, the perceptual geometric environment information is assigned with semantic labels to obtain a high-level semantic map. It can help the robot to understand the autonomous environment and human–computer interaction [13]. We believe that the rapid development of deep learning provides a bridge for the introduction of semantic information into VSLAM. Especially in semantic map construction, combining it with VLAM can enable robots to gain high-level perception and understanding of the scene. It significantly improves the interaction ability between robots and the environment [14].

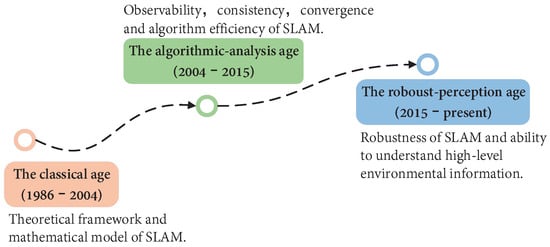

In 2016, Cadena et al. [15] first proposed to divide the development of SLAM into three stages. In their description, we are in a stage of robust perception, as shown in Figure 1. They describe the emphasis and contribution of SLAM in different times from three aspects: Classical, Algorithmic, and Robust. Ref. [16] summarizes the development of vision-based SLAM algorithms from 2010 to 2016 and provides a toolkit to help beginners. Yousif et al. [17] discussed the elementary framework of VSLAM and summarized several mathematical problems to help readers make the best choice. Bavle et al. [18] summarized the robot SLAM technology and pointed out the development trend of robot scene understanding. Starting from the fusion of vision and visual inertia, Servieres et al. [19] reviewed and compared important methods and summarized excellent algorithms emerging in SLAM. Azzam et al. [20] conducted a comprehensive study on feature-based methods. They classified the reviewed methods according to the visual features observed in the environment. Furthermore, they also proposed possible problems and solutions for the development of SLAM in the future. Ref. [21] introduces in detail the SLAM method based on monocular, binocular, RGB-D, and visual-inertial fusion, and gives the existing problems and future direction. Ref. [22] describes the opportunities and challenges of VSLAM from geometry to deep learning and forecasts the development prospects of VSLAM in the future semantic era.

Figure 1.

Overview of SLAM development era. The development of SLAM has gone through three main stages: theoretical framework, algorithm analysis, and advanced robust perception. The time points are not strictly limited, but rather represent the development of SLAM at a certain stage and the hot issues that people are interested in.

As you can see, there are some surveys and summaries of vision-based SLAM technologies. However, most of them only focus on one aspect of VSLAM, without a more comprehensive summary of the development of VSLAM. Furthermore, the above review focuses more on traditional visual SLAM algorithms, while semantic SLAM combined with deep learning is not introduced in detail. So, a comprehensive review of vision-based SLAM algorithms is necessary to help researchers and students launch their efforts at visual SLAM technologies to obtain an overview of this large field.

To give readers a deeper and more comprehensive understanding of the field of SLAM, we reviewed the history of general SLAM algorithms from inception to the present. In addition, we summarize the key solutions driving the technological evolution of SLAM solutions. The work of SLAM is described from the formation of point problems to the most commonly used state methods. Rather than focusing on just one aspect, we present the key main approaches to show the connections between the research that has brought the SLAM approach to its current state. In addition, we review the evolution of SLAM from traditional to semantic, a perspective that covers major, interesting, and leading design approaches throughout history. On this basis, we make a comprehensive summary of DEEP learning SLAM algorithms. Semantic VSLAM is also explained in detail to help readers better understand the characteristics of semantic VSLAM. We think our work can help readers better understand robot environment perception. Our work on semantic VSLAM can provide readers with a better idea and provide a useful reference for future SLAM research and even robot autonomous sensing. Therefore, this paper comprehensively supplements and updates the development of vision-based SLAM technology. Furthermore, this paper divides the development of vision-based SLAM into two stages: traditional VSLAM and semantic VSLAM integrating deep learning. So readers can better understand the research hot spots of VSLAM and grasp the development direction of VSLAM. We believe the traditional phase SLAM problem mainly solves the framework problem of the algorithm. In the semantic era, SLAM focuses on advanced situational awareness and system robustness in combination with deep learning.

Our review makes the following contributions to the state of the art:

- We have reviewed the development of vision-based SLAM more comprehensively, we review the recent research progress in the field of simultaneous localization and map construction based on environmental semantic information.

- Starting with a convolutional neural network (CNN) and a recurrent neural network (RNN), we describe the application of deep learning in VSLAM in detail. To our knowledge, this is the first review to introduce VSLAM from a neural network perspective.

- We describe the combination of semantic information and VSLAM in detail and point out the development direction of VSLAM in the semantic era. We mainly introduce and summarize the outstanding research achievements in the combination of semantic information and traditional visual SLAM in system localization and map construction, and make an in-depth comparison between traditional visual SLAM and semantic SLAM. Finally, the future research direction of semantic SLAM is proposed.

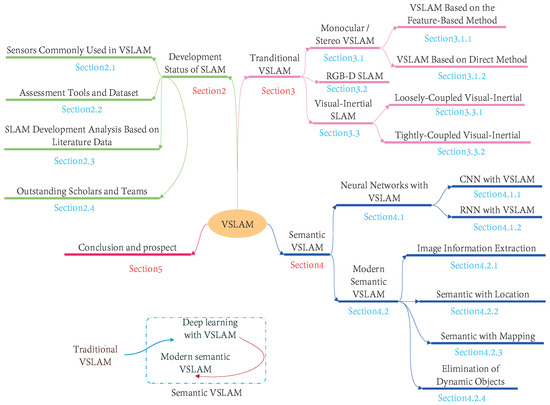

Specifically, in Section 1, this paper introduces the characteristics of traditional VSLAM in detail, including the direct method and the indirect method based on the front-end vision odometer, and makes a comparison between the depth camera-based VSLAM and the classical VSLAM integrated with IMU. In Section 2, this paper is divided into two parts. We firstly introduce the combination of deep learning and VSLAM from two neural networks, CNN and RNN. We believe that introducing deep learning into semantic VSLAM is the precondition for the development of semantic VSLAM. Furthermore, this stage can also be regarded as the beginning of semantic VSLAM. Then, this paper describes the process of deep learning leading semantic VSLAM to the advanced stage from the aspects of target detection and semantic segmentation. So this paper summarizes the development direction of semantic VSLAM from three aspects of localization, mapping, and elimination of dynamic objects. In Section 3, this paper introduces some mainstream SLAM data sets, and some outstanding laboratories in this area. In the end, we summarize the current research and point out the direction of VSLAM research in the future. The section table of contents for this article is shown in Figure 2.

Figure 2.

Structure diagram for the rest of this paper. This paper focuses on the second chapter of semantic VSLAM. We consider the introduction of neural networks as the beginning of semantic VSLAM. We start with a deep neural network, describe its combination with VSLAM, and then explain modern semantic VSLAM in detail from the aspects of object detection and semantic segmentation based on deep learning, and make a summary and prospect.

2. Development Status of SLAM

2.1. Sensors Commonly Used in VSLAM

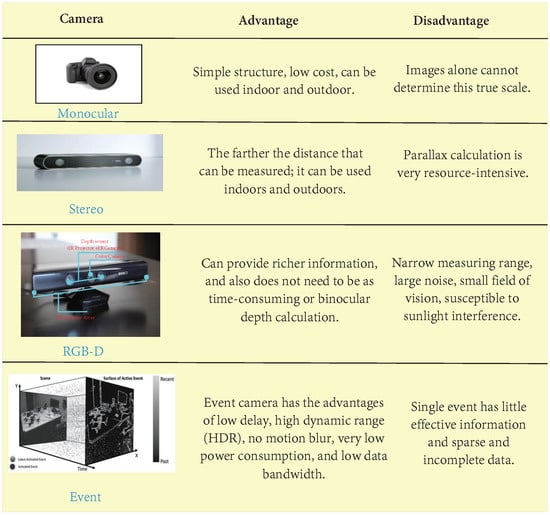

The sensors used in the VSLAM typically include the monocular camera, stereo camera, and RGB-D camera. The monocular camera and the stereo camera have similar principles and can be used in a wide range of indoor and outdoor environments. As a special form of camera, the RGB-D camera can directly obtain image depth mainly by actively emitting infrared structured light or calculating time-of-flight (TOF). It is convenient to use, but sensitive to light, and can only be used indoors in most cases [23]. Events camera as appeared in recent years, a new camera sensor, a picture of a different from the traditional camera. Events camera is “events”, can be as simple as “pixel brightness change”. The change of events camera output is pixel brightness, SLAM algorithm based on the event camera is still only in the preliminary study stage [24]. In addition, as a classical SLAM system based on vision, visual-inertial fusion has achieved excellent results in many aspects. In Figure 3, we compare the main features of different cameras.

Figure 3.

Comparison between different cameras. An event camera is not a specific type of camera, but a camera that can obtain “event information”. “Traditional cameras” work at a constant frequency and have natural drawbacks, such as lag, blurring, and overexposure when shooting high-speed objects. However, the event camera, a neuro-based method of processing information similar to the human eye, has none of these problems.

2.2. Assessment Tools and Dataset

SLAM problems have been around for decades. In the past few decades, many excellent algorithms have emerged, each of which has contributed to the rapid development of SLAM technology to varying degrees, despite its different focus. Each algorithm has to be compared fairly. Generally speaking, we can evaluate a SLAM algorithm from multiple perspectives such as time consumption, complexity, and accuracy. However, the most important one is that we pay the most attention to its accuracy. ATE (Absolute Trajectory Error) and RPE (Relative Pose Error) are the two most important indicators used to evaluate the accuracy of SLAM. The relative pose error is used to calculate the difference of pose changes in the same two-time stamps, which is suitable for estimating system drift. The absolute trajectory error directly calculates the difference between the real value of the camera pose and the estimated value of the SLAM system. The basic principles of ATE and RPE are as follows.

Assumptions: The given pose estimate is . The subscript represents time t (or frame), where it is assumed that the time of each frame of the estimated pose and the real pose are aligned, and the total number of frames is the same.

ATE: The absolute trajectory error is the direct difference between the estimated pose and the real pose, which can directly reflect the accuracy of the algorithm and the global trajectory consistency. It should be noted that the estimated pose and ground truth are usually not in the same coordinate system, so we need to pair them first: For stereo SLAM and RGB-D SLAM, the scale is uniform, so we need to calculate a transformation matrix from the estimated pose to the real pose by the least square method S ∈ SE (3). For monocular cameras with scale uncertainties, we need to calculate a similar transformation matrix S∈ Sim (3) from the estimated pose to the real pose. So the ATE of frame i is defined as follows:

Similar to RPE, RMSE is recommended for ATE statistics.

RPE: Relative pose error mainly describes the accuracy (compared with real pose) of two frames separated by a fixed time difference , which is equivalent to the error of the odometer directly measured. So the RPE of the frame I is defined as follows:

Given the total number n and the interval , we can obtain () RPE.Then we can use the root mean square error RMSE to calculate this error and obtain a population value:

represents the translation part of the relative pose error. We can evaluate the performance of the algorithm from the size of the RMSE value. However, in practice, we find that there are many choices for the selection of . To comprehensively measure the performance of the algorithm, we can calculate the average RMSE traversing all :

EVO [25] is an evaluation tool for the Python version of the SLAM system that can be used with a variety of data sets. In addition to ATE and RPE, data can be obtained, it can also draw a comparison diagram of the test algorithm and real trajectory. Is a very convenient assessment kit. SLAMBench2 [26] is a publicly available software framework that evaluates current and future SLAM systems through an extensible list of data sets. It includes open and closed source code while using a comparable and specified list of performance metrics. It supports a variety of existing SLAM algorithms and datasets, such as ElasticFusion [27], ORB-SLAM2 [28], and OKVIS [29], and integrating new SLAM algorithms and datasets are straightforward.

In addition, we also need to use datasets to test specific visualization of the algorithm. Common data sets used to test various aspects of SLAM performance are illustrated in Table 1. TUM data sets mainly include multi-view data sets, 3D object recognition and segmentation, scene recognition, 3D model matching, VSALM, and other data in various directions. According to the direction applied, it can be divided into TUM RGB-D [30], TUM MonoVO [31], and TUM VI [32]. Among them, the TUM RGB-D data set mainly contains indoor images with real ground tracks. Furthermore, it provides two measures to evaluate local accuracy and global consistency of orbit, namely relative attitude error and absolute trajectory error. TUM MonoVO is used to assess monocular systems, which contain both indoor and outdoor images. Due to the variety of scenarios, ground authenticity is not available, but rather large sequences with the same starting position are performed, allowing evaluation of cyclic drift. TUM VI is employed to the evaluation of the visual-inertial odometer. The KITTI [33] dataset is a famed outdoor environment data set jointly founded by the Karlsruhe Institute of Technology and Toyota American Institute of Technology. It is the largest computer vision algorithm evaluation data set in the world under autonomous driving scenarios, including monocular vision, binocular vision, Velodyne Lidar, POS trajectory, etc. It is the most widely used outdoor data set. The EuRoc [34] dataset A visual inertia data set developed by ETH Zurich. Cityscapes [35] is a dataset related to autonomous driving, focusing on pixel-level scene segmentation and instance annotation. In addition, many datasets are used in various scenarios, such as ICL-NUIM [36], NYU RGB-D [37], MS COCO [38], etc.

Table 1.

Common open-source datasets for SLAM.

Table 1.

Common open-source datasets for SLAM.

| Dataset | Sensor | Environment | Ground-Truth | Availability | Development |

|---|---|---|---|---|---|

| Cityscapes | Stereo | Outdoor | GPS | [35] | Daimler AG R&D, Max Planck Institute for Informatics, TU Darmstadt Visaul Inference Group |

| KITTI | Stereo/3D laser scanner | Outdoor | GPS/INS | [33] | Karlsruhe Institute of Technology and Toyota American Institute of Technology |

| TUM RGB-D | RGB-D | Indoor | Motion capture | [30] | Technical University of Munich |

| TUM MonoVO | Monocular | Indoor/Outdoor | Loop drift | [31] | |

| TUM VI | Stereo/IMU | Indoor/Outdoor | Motion capture | [32] | |

| EuRoc | Stereo/IMU | Indoor | Station/Motion capture | [34] | Eidgenössische Technische Hochschule Zürich |

| ICL-NUIM | RGB-D | Indoor | 3D surface model SLAM estimation | [36] | Imperial College Lodon |

2.3. SLAM Development Analysis Based on Literature Data

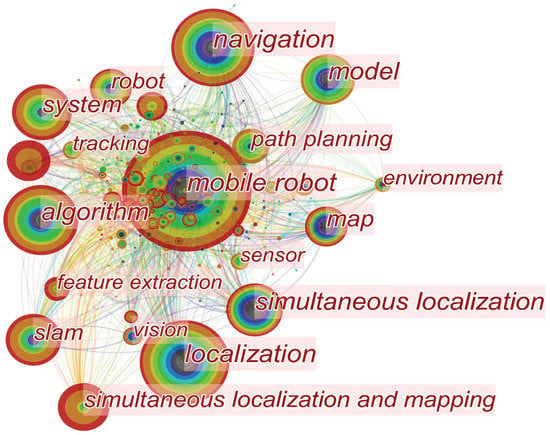

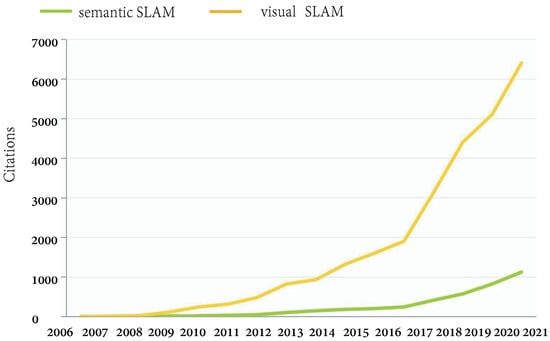

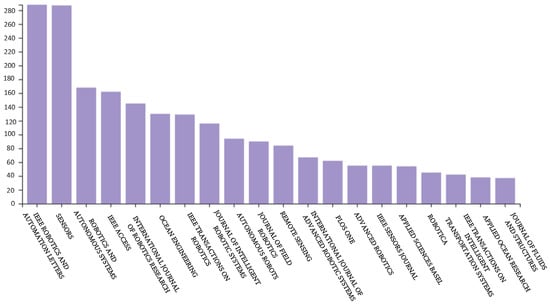

Since the advent of SLAM, it has been widely used in the field of robotics. As shown in Figure 4, this paper selected about 1000 hot articles related to mobile robots in the last two decades and made this keyword heat map. The larger the circle is, the higher the frequency of the keyword appears. The circle layer shows the time from the past to the present from the inside out, and the redder the color, the more attractive it is. Connecting lines indicate that there is a connection between different keywords (data from the Web of Science Core Collection). As shown in Figure 5, the number of citations of visual SLAM and semantic SLAM-related papers is increasing rapidly. Especially around 2017, visual SLAM and semantic SLAM saw their citations skyrocket. Many advances have been made in traditional VSLAM research. To enable robots to perceive the surrounding environment from a higher level, the research of semantic VSLAM has received extensive attention. Semantic SLAM has attracted more and more attention in recent years. Furthermore, as shown in Figure 6, this paper has selected about 5000 articles from the Web of Science Core Collection. Judging from the titles of journals about SLAM published, SLAM is a topic of interest in robotics.

Figure 4.

Hot words in mobile robot field.

Figure 5.

Citations for Web of Science articles on visual SLAM and semantic SLAM in recent years (Data are as of December 2021).

Figure 6.

Publication titles about SLAM on Web of Science.

As can be seen from the above data, SLAM research has always been a hot topic. With the rapid development of deep learning, the field of computer vision has made unprecedented progress. Therefore, VSLAM also ushered in a period of rapid development. Combining semantic information with VSLAM is going to be a hot topic for a long time. The development of semantic VSLAM can make robots truly autonomous.

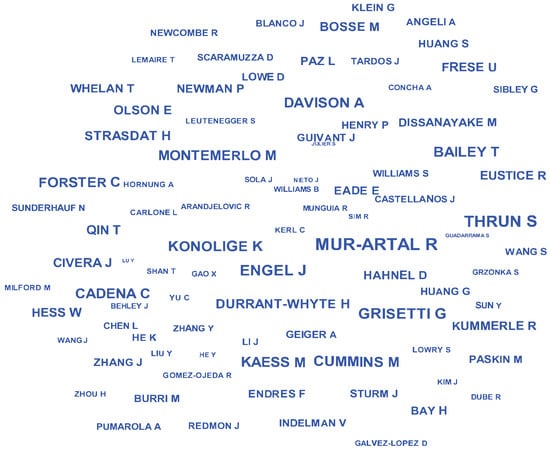

2.4. Outstanding Scholars and Teams

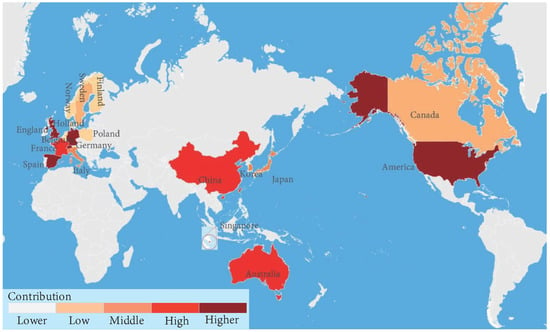

In addition, many scholars and teams have made indelible contributions to the research of SLAM. As shown in Figure 7, we analyzed approximately 4000 articles from 2000 to 2022 (data from the Web of Science website). A larger font indicates that the author has received the most attention, and vice versa. The countries to which they belong are presented in Figure 8. The computer vision group at the Technical University of Munich in Germany is a leader in this field. The team published a variety of classic visual SLAM solutions such as DSO [39] and LSD-SLAM [40], which improved the performance of all aspects of visual SLAM. The robotics and Perception group at the University of Zurich, Switzerland, also contributed to the rapid development of SLAM technology by developing SVO and VO/VIO trajectory assessment tools. In addition, the Computer Vision and Ensemble Laboratory of ETH Zurich has also made a lot of efforts in this field. Furthermore, they have made a lot of breakthrough progress in the field of visual semantic localization in large-scale outdoor mapping. The LABORATORY of ROBOTICS, Sensing, and Real-time Group SLAM at the University of Zaragoza in Spain is one of the biggest contributors to the development of SLAM. The ORB-SLAM series launched by the laboratory is a landmark scheme in visual SLAM, which has a far-reaching influence on the research of SLAM. In addition, the efforts of many scholars and teams have promoted the rapid development of visual semantic SLAM and laid a foundation for solving various problems in the future. Table 2 shows the works of some excellent teams and their team websites for your reference, you can check the website of the team by the number quoted after its name.

Figure 7.

A distinguished scholar in the field of visual SLAM.

Figure 8.

Contribution of different countries in the SLAM field(Colors from light to dark indicate contributions from low to high).

Some scholars have made outstanding contributions to semantic VSLAM research. Niko Sünderhauf [41] and their team, for example, have made many advances in robot scene understanding and semantic VSLAM. The team is dedicated to making a robot understand what it sees is one of the most fascinating goals. To this end, they develop novel methods for Semantic Mapping and Semantic SLAM by combining object detection with simultaneous localization and mapping (SLAM) techniques. The team [42] of researchers is part of the Australian Centre for Robotic Vision and is based at the Queensland University of Technology in Brisbane, Australia. They work on novel approaches to SLAM (Simultaneous Localization and Mapping) that create semantically meaningful maps by combining geometric and semantic information. We believe such semantically enriched maps will help robots understand our complex world and will ultimately increase the range and sophistication of interactions that robots can have in domestic and industrial deployment scenarios.

Table 2.

Some great teams and their contributions.

Table 2.

Some great teams and their contributions.

| Team | Works |

|---|---|

| The Dyson Robotics Lab at Imperial College [43] | Code-SLAM [44], ElasticFusion [27], Fusion++ [45], SemanticFusion [46] |

| Computer Vision Group TUM Department of Informatics Technical University of Munich [47] | D3VO [48], DM-VIO [49], LSD-SLAM [40], LDSO [50], DSO [39] |

| Autonomous Intelligent Systems University of Freiburg [51] | Gmapping [52], RGB-D SLAMv2 [53] |

| HKUST Aerial Robotics Group [54] | VINS-Mono [55], VINS-Fusion [56], Event-based stereo visual odometry [57] |

| UW Robotics and State Estimation Lab [58] | DART [59], DA-RNN [60], RGB-D Mapping [61] |

| Robotics, Perception and Real Time Group UNIVERSIDAD DE ZARAGOZA [62] | ORB-SLAM2 [28], Real-time monocular objects slam [63] |

3. Traditional VSLAM

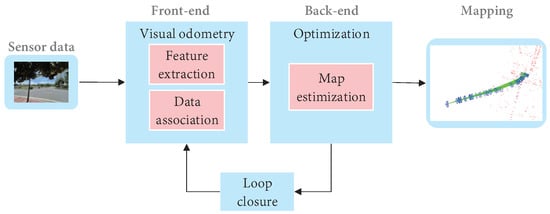

Cadena et al. [15] proposed a classical VSLAM framework, which mainly consists of two parts: front-end and back-end, as shown in Figure 9. The front end provides real-time camera pose estimation, while the back end provides map updates and optimizations. Specifically, mature visual SLAM systems include sensor data collection, front-end visual odometer, back-end optimization, loop closure detection, and map construction modules [64].

Figure 9.

The typical visual SLAM system framework.

3.1. Monocular/Stereo VSLAM

In this section, we will elaborate on the VSLAM algorithm based on monocular or stereo cameras. For the VSLAM system, the visual odometer, as the front-end of SLAM, is an indispensable part [65]. Ref. [20] points out that VSLAM can be divided into the direct method and indirect method according to the different image information collected by the front-end visual odometer. The indirect method needs to select a certain number of representative points from the collected images, called key points, and detect and match them in the following images to gain the camera pose. It not only saves the key information of the image but also reduces the amount of calculation, so it is widely used. The direct method uses all the information of the image without preprocessing and directly operates on pixel intensity, which has higher robustness in an environment with sparse texture [66]. Both the indirect method and direct method have been widely concerned and developed to different degrees.

3.1.1. VSLAM Based on the Feature-Based Method

The core of indirect VSLAM is to detect, extract and match geometric features( points, lines, or planes), estimate camera pose, and build an environment map while retaining important information, it can effectively reduce calculation, so it has been widely used [67]. The VSLAM method based on point feature has long been taken into account as the mainstream method of indirect VSLAM due to its simplicity and practicality [68].

Feature extraction mostly adopted corner extraction methods in the early, such as Harris [69], FAST [70], GFTT [71], etc. However, in many scenarios, simple corners cannot provide reliable features, which prompts researchers to seek more stable local image features. Nowadays, typical VSLAM methods based on point features firstly use feature detection algorithms, such as SIFT [72], SURF [73], and ORB [74], to extract key points in the image for matching. Then gain pose after minimizing reprojection error. Feature points and corresponding descriptors in the image are employed for data association. Furthermore, data association in initialization is completed through the matching of feature descriptors [75]. In Table 3, we list common traditional feature extraction algorithms and compare their main performance to help readers have a more comprehensive understanding.

Table 3.

Comparison table of commonly used feature extraction algorithms.

Davidson et al. [77] proposed MonoSLAM in 2007. This algorithm is considered to be the first real-time monocular VSLAM algorithm, which can achieve real-time drift free-motion structure recovery. The front end tracks the sparse feature points shi-Tomasi corner point for feature point matching, and the back end uses Extended Kalman Filter (EKF) [78] for optimization, which can build the sparse environment map online in real-time. This algorithm has a milestone significance in SLAM research, but the EKF method leads to a square growth between storage and state quantity, so it is not suitable for large-scale scenarios. In the same year, the advent of PTAM [79] improved MonoSLAM’s inability to work steadily for long periods in a wide range of environments. PTAM, as the first SLAM algorithm using nonlinear optimization at the back end, solves the problem of fast data growth in the filter-based method. Furthermore, it separated tracking and mapping into two different threads for the first time. The front end uses FAST corner detection to extract and estimate camera motion using image features, and the back end is responsible for nonlinear optimization and mapping. It not only ensures the real-time performance of SLAM in the calculation of camera pose but also ensures the accuracy of the whole SLAM system. However, because there is no loopback detection module, it will accumulate errors during long-running.

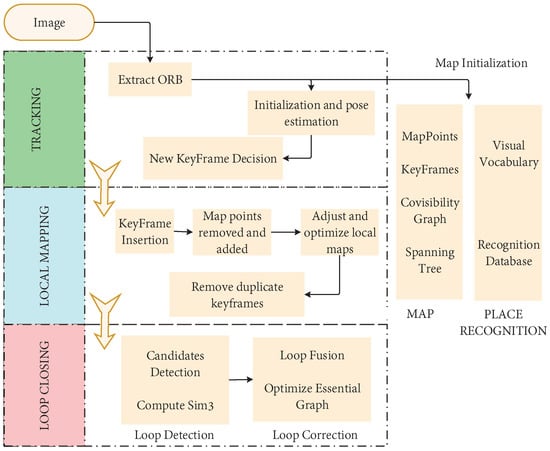

In 2015, MUR-Artal et al. proposed the ORB-SLAM [80]. This algorithm is regarded as the excellent successor of PTAM, and based on PTAM, added a loop closure detection module, which effectively reduces the cumulative error. As a real-time monocular visual SLAM system that uses ORB feature matching, the whole process is carried out around ORB features. As shown in Figure 10, the three threads of tracking, local mapping, and loop closure detection are used innovatively. In addition, the loop closure detection thread uses the word bag model DBoW [81] for loop closure. The loop closure method based on the BoW model can detect the loop closure quickly by detecting the image similarity. Furthermore, achieve good results in the processing speed and the accuracy of map construction. In later years, the team launched ORB-SLAM2 [28] and ORB-SlAM3 [82]. The ORB-SLAM family is one of the most widely used visual SLAM solutions due to its real-time CPU performance and robustness. However, the ORB-SLAM series relies heavily on environmental features, so it may be difficult to obtain enough feature points in an environment without texture features.

Figure 10.

Flow chart of ORB-SLAM.

The point feature-based SLAM system relies too much on the quality and quantity of point features. It is difficult to detect enough feature points in weak texture scenes, such as corridors, windows, white walls, etc. Thus, affecting the robustness and accuracy of the system and even leading to tracking failure. In addition, due to the rapid movement of the camera, illumination changes, and other reasons, the matching quantity and quality of point features will decline seriously. To improve the feature-based SLAM algorithms, the application of line features in SLAM systems has attracted more and more attention [83]. The commonly used line feature extraction algorithm is LSD [76].

In recent years, with the improvement of computer computing capacity, VSLAM-based online features have also been developed rapidly. Smith et al. [84] proposed a monocular VSLAM algorithm-based online feature extraction in 2006. Lines are represented by two endpoints, and line features are used in the SLAM system to detect and track the two endpoints of lines in small scenes. The system can use line features alone or in combination with point-line features, which is of groundbreaking significance in VSLAM research. In 2014, Perdices et al. proposed LineSLAM, a line-based monocular SLAM algorithm [85]. For line extraction, this scheme adopts the line extraction scheme in [86]. It detects the lines every time the keyframes are acquired. Then uses the Unscented Kalman Filter (UKF) to predict the current camera state and vector probability distribution of the ground line. Then, matches the line prediction result with the detected lines. Because the scheme has no loop closure and the line segment is of infinite length instead of finite length, it is difficult to be used in practice.

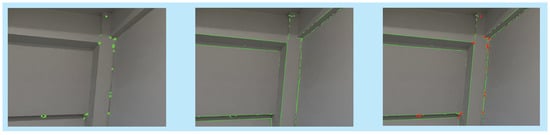

As shown in Figure 11, compared with point feature or line feature alone, the combination of point feature and line feature increases the number of feature observations and data association. Furthermore, line feature is less sensitive to light changes than the point feature, which improves the positioning accuracy and robustness of the original system [76]. In 2016, Klein et al. [87] adopted the method of point-line fusion to improve the tracking failure of the SLAM system due to image blur caused by fast camera movement. In 2017, Pumarola et al. [88] published monocular PL-SLAM, and Gomez-Ojeda et al. [89] published stereo PL-SLAM in the same year. Based on ORB-SLAM, the two algorithms use the LSD detection algorithm to detect line features and then combine the point-line features in each link of SLAM. It can work even when most of the point features disappear. Furthermore, it improves the accuracy, robustness, and stability of the SLAM system, but the real-time performance is not good.

Figure 11.

Comparison of point and line feature extraction in a weak texture environment. From left to right are ORB point feature extraction, LSD line feature extraction, and point-line combination feature extraction.

In addition, in some environments, there are some obvious surface features, which have aroused great interest of some researchers. Ref. [90] proposed a map construction method combining planes and lines. By introducing surface features into the real-time VSLAM system, the errors are reduced and the system robustness is improved by combining low-level features. In 2017, Li et al. [91] proposed a VSLAM algorithm based on point, line, and plane fusion for an artificial environment. Point features are used for the initial estimation of the robot’s current pose. Lines and planes are used to describe the environment. However, most planes only exist in the artificial environment, and few suitable planes can be found in the natural environment. These limit its application range.

Compared with the methods that rely only on point features, SLAM systems that rely only on lines or planes can only work stably in artificial environments in most cases. The VSLAM method combining point, line, and surface features improve the localization accuracy and robustness in weak texture scenes, illumination changes, and fast camera movement. However, the introduction of line and surface features increases the time consumption of feature extraction and matching, which reduces the efficiency of the SLAM system. Therefore, the VSLAM algorithm based on the point feature still occupies the mainstream position [92]. Table 4 shows a comparison of geometric features.

Table 4.

Comparison table of geometric features.

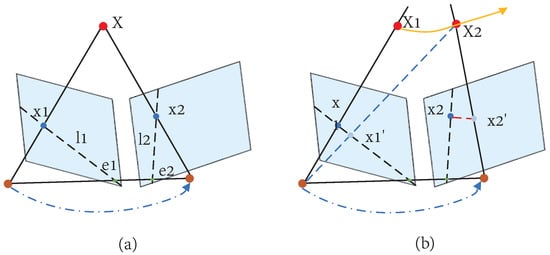

3.1.2. VSLAM Based on Direct Method

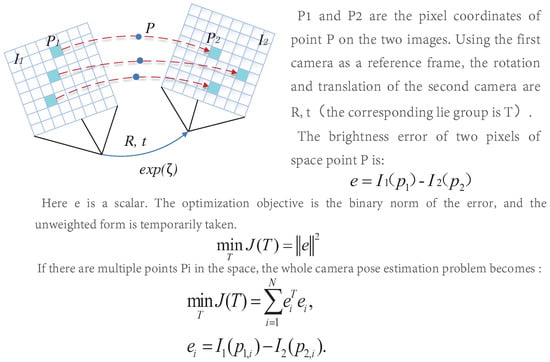

Different from feature-based methods, the direct method operates directly on pixel intensity and can retain all information about the image. Furthermore, the direct method cancels the process of feature extraction and matching, so the computational efficiency is better than the indirect method. Furthermore, it has good adaptability to the environment with complex textures. It can still keep a good effect in the environment with missing features [93]. The direct method is similar to the optical flow, and they both have a strong assumption: gray-level invariance, the principle of which is shown in Figure 12.

Figure 12.

Schematic diagram of the direct method.

In 2011, Newcombe et al. [94] proposed the DTAM algorithm, which was considered the first practical direct method sof VSLAM. DTAM allows tracking by comparing the input images with those created by reconstructed maps. The algorithm performs a precise and detailed reconstruction of the environment. However, it affects the computational cost of storing and processing the data, so it can only run in real-time on GPU. LSD-SLAM [40] neglects texture-free areas to improve operational efficiency and can run in real-time on CUP. LSD-SLAM, another major approach indirect method, combines featureless extraction with semi-dense reconstruction, and its core is a visual odometer using semi-dense reconstruction. The algorithm consists of three steps: tracking, depth estimation, and map optimization. Firstly, the photometric error is minimized to estimate the sensor pose. Secondly, select a keyframe for in-depth estimation. Finally, in the map optimization step, the new keyframe is merged into the map and optimized by using the posture optimization algorithm. In 2014, Forster et al. [95] proposed the semi-direct visual SLAM algorithm SVO. Since the algorithm does not need to extract features for each frame, it can run at high frame rates, which enables it to run in low-cost embedded systems [80]. SVO combines the advantages of the feature point method and direct method. The algorithm is divided into two main threads: motion estimation and mapping. Motion estimation is carried out by feature point matching, but mapping is carried out by the direct method. SVO has good results, but as a purely visual method, it only performs short-term data association, which limits its accuracy [82]. In 2018, Engel et al. [39] proposed DSO. DSO can effectively use any image pixel, which makes it robust even in featureless regions and can gain more accurate results than SVO. DSO can calculate accurate camera attitude in poor feature point detector performance, improving the robustness of low-texture areas or blurred images. In addition, the DSO uses both geometric and photometric camera calibration results for high accuracy estimation. However, DSO only considers local geometric consistency, so it inevitably produces cumulative errors. Furthermore, it is not a complete SLAM because it does not include loop closure, map reuse, etc.

Up to now, VSLAM has made many achievements in direct and indirect methods. Table 5 compares the advantages and disadvantages of the direct method and the indirect method to help readers better understand.

Table 5.

Comparison between direct method and indirect method.

3.2. RGB-D SLAM

An RGB-D camera is a visual sensor launched in recent years. It can simultaneously collect environmental color images and depth images, and directly gain depth maps mainly by actively emitting infrared structured light or calculating time-of-flight (TOF) [96]. The RGB-D camera, as a special camera, can gain three-dimensional information in space more conveniently. So it has been widely concerned and developed in three-dimensional reconstruction [97].

KinectFusion [98] is the first real-time 3D reconstruction system based on an RGB-D camera. It uses a point cloud created by the depth to estimate the camera pose through ICP (Iterative Closest Point). Then splices multi-frame point cloud collection based on the camera pose, and expresses reconstruction result by the TSDF (Truncated signed distance Function) model. The 3D model can be constructed in real-time with GPU acceleration. However, the system has not been optimized by loop closure. Furthermore, there will be obvious errors in long-term operation, and the RGB information of the RGB-D camera has not been fully utilized. In contrast, ElasticFusion [27] makes full use of the color and depth information of the RGB-D camera. It estimates the camera pose by the color consistency of RGB and estimates the camera pose by ICP. Then improves the estimation accuracy of the camera pose by constantly optimizing and reconstructing the map. Finally, the surfel model was used for map representation, but it could only be reconstructed in a small indoor scene. Kinitinuous [99] adds loop closure based on KinectFusion and makes non-rigid body transformation for 3d rigid body reconstruction by using a deformation graph for the first time. So it makes the results of two-loop closure reconstruction overlap, achieving good results in an indoor environment. Compared with the above algorithms, RGB-D SLAMv2 [53] is a very excellent and comprehensive system. It includes image feature detection, optimization, loop closure, and other modules, which are suitable for beginners to carry out secondary development.

Although the RGB-D camera is more convenient to use, the RGB-D camera is extremely sensitive to light. Furthermore, there are many problems with narrow, noisy, and small horizons, so most of the situation is only used in the room. In addition, the existing algorithms must be implemented using GPU. So the mainstream traditional VSLAM system still does not use the RGB-D camera as the main sensor. However, in three-dimensional reconstruction in the interior, the RGB-D camera is widely used. In addition, because of the ability to build a dense environment map, the semantic VSLAM direction, RGB-D camera is widely used. Table 6 shows the classic SLAM algorithm based on RGB-D cameras.

Table 6.

Some SLAM algorithms for sensors with an RGB-D camera.

3.3. Visual-Inertial SLAM

The pure visual SLAM algorithm has achieved many achievements. However, it is still difficult to solve the effects of image blur caused by fast camera movement and poor illumination by using only the camera as a single sensor [110]. IMU is considered to be one of the most complementary sensors to the camera. It can obtain accurate estimation at high frequency in a short time, and reduce the impact of dynamic objects on the camera. In addition, the camera data can effectively correct the cumulative drift of IMU [111]. At the same time, due to the miniaturization and cost reduction of cameras and IMU, visual-inertial fusion has also achieved rapid development. Furthermore, it become the preferred method of sensor fusion, which is favored by many researchers [112]. Nowadays, visual-inertial fusion can be divided into loosely coupled and tightly coupled according to whether image feature information is added to the state vector [113]. Loosely coupled means the IMU and the camera estimate their motion, respectively, and then fuse their pose estimation. Tightly coupled refers to the combination of the state of IMU and the state of the camera to jointly construct the equation of motion and observation, and then perform state estimation [114].

3.3.1. Loosely Coupled Visual-Inertial

The loosely coupled core is to fuse the positions and poses calculated by the vision sensor and IMU, respectively. The fusion has no impact on the results obtained by the two. Generally, the fusion is performed through EKF. Stephen Weiss [115] provided groundbreaking insights in their doctoral thesis. Ref. [116] proposed an efficient loose coupling method, and good experimental results were obtained by using an RGB-D camera and IMU. The loose-coupling implementation is relatively simple, but the fusion result is prone to error and there has been little research in this area.

3.3.2. Tightly Coupled Visual-Inertial

The core of the tightly coupled is to combine the states of the vision sensor and IMU through an optimized filter. It needs the image features to be added to the feature vector to jointly construct the motion equation and observation equation. Then perform state estimation to obtain the pose information. Tightly coupled needs full use of visual and inertial measurement information, which is complicated in method implementation but can achieve higher pose estimation accuracy. Therefore, it is also the mainstream method, and many breakthroughs have been made in this area.

In 2007, Mourikis et al. [117] proposed MSCKF. The core of MSCKF is to fuse IMU and visual information under the EKF in a tightly coupled way. Compared with the VO algorithm alone, MSCKF can adapt to more intense motion and texture loss, with higher robustness. Speed and accuracy can also reach a high level. MSCKF has been widely used in robot, UAV, and AR/VR fields. However, because the backend uses the Kalman filter method, global information cannot be used for optimization, and no loopback detection will cause error accumulation. Ref. [29] proposed OKVIS based on a fusion of binocular vision and IMU. However, it only outputs six degrees of freedom pose without loopback detection and map, so it is not a complete SLAM in a strict sense. Although it has good accuracy, its pose will be loose when it runs for a long time. Although these two algorithms have achieved good results, they have not been widely promoted. The lack of loop closure modules inevitably leads to cumulative errors when running for long periods of time.

The emergence of VINS-Mono [55] broke this situation. In 2018, a team from The Hong Kong University of Science and Technology (HKUST) introduced a monocular inertially tightly coupled VINS-Mono algorithm. It has since released its expanded version, Vins-Fusion, which supports multi-sensor integration, including Monocular + IMU, Stereo + IMU, and even stereo only, and also provides a version with GPS. VINS-mono is a classic fusion of vision and IMU. Its positioning accuracy is comparable to OKVIS, and it has a more complete and robust initialization and loop closure detection process than OKVIS. At the same time, VINS-Mono has set a standard for the research and application of visual SLAM, which is more monocular +IMU. In the navigation of robots, especially the autonomous navigation of UAVs, monocular cameras are not limited by RGB-D cameras (susceptible to illumination and limited depth information) and stereo cameras (occupying a large space). It can adapt to indoor, outdoor and different illumination environments with good adaptability.

As a supplement to cameras, inertial sensors can effectively solve the problem that a single camera cannot cope with. Visual inertial fusion is bound to become a long-term hot direction of SLAM research. However, the introduction of multiple sensors will lead to an increase in data, which has a high requirement on computing capacity [118]. Therefore, we believe that the next hot issue of visual-inertial fusion will be reflected in the efficient processing of sensor fusion data. How to make better use of data from different sensors will be a long-term attractive hot issue. Due to the rich information acquisition, convenient use and low price of visual sensors, the environment map constructed is closer to the real environment recognized by human beings. After decades of development, vision-based SLAM technology has achieved many excellent achievements. Table 7 summarizes some of the best visual-based SLAM algorithms, comparing their performance in key areas, and providing open-source addresses to help readers make better choices.

Table 7.

Best visual-based SLAM algorithms.

In this chapter, we summarize the traditional vision-based SLAM algorithms, and summarize some excellent algorithms for your reference, hoping to give readers a more comprehensive understanding. Next, we will cover VSLAM with semantic information fusion, aiming to explore the field of SLAM more deeply.

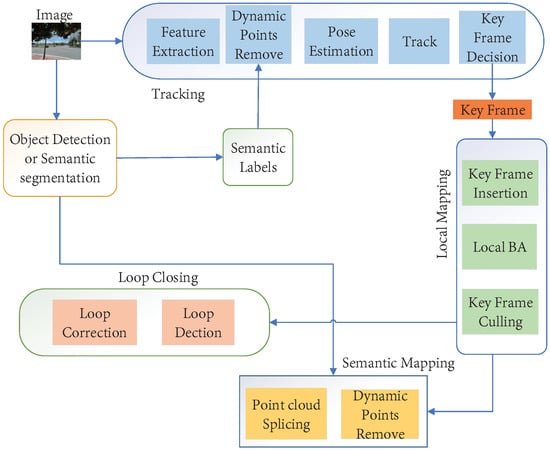

4. Semantic VSLAM

Semantic SLAM refers to a SLAM system that can not only obtain geometric information of the unknown environment and robot movement information but also detect and identify targets in the scene. It can obtain semantic information such as their functional attributes and relationship with surrounding objects, and even understand the contents of the whole environment [134]. Traditional VSLAM represents the environment in the form of point clouds and so on, which to us are a bunch of meaningless points. To perceive the world from both geometric and content levels and provide better services to humans, robots need to further abstract the features of these points and understand them [135]. With deep learning development, researchers have gradually realized its possible help to SLAM problems [136]. Semantic information can help SLAM to understand the map at a higher level. Furthermore, it lessens the dependence of the SLAM system on feature points and improves the robustness of the system [137].

Modern semantic VSLAM systems cannot do without the help of deep learning, and feature attributes and association relations obtained through learning can be used in different tasks [138]. As an important branch of machine learning, deep learning has achieved remarkable results in image recognition [139], semantic understanding [140], image matching [141], 3D reconstruction [142], and other tasks. The application of deep learning in computer vision can greatly ease the problems encountered by traditional methods [143]. Traditional VSLAM systems have achieved commendable results in many aspects, but there are still many challenging problems to be solved [144]. Ref. [145] has summarized deep learning-based VSLAM in detail and pointed out the problems existing in traditional VSLAM. These works [146,147,148,149] suggest that deep learning should be used to replace some modules of traditional SLAM, such as loop closure and pose estimation, to improve the traditional method.

Machine learning is a subset of artificial intelligence that uses statistical techniques to provide the ability to ”learn“ data from a computer without complex programming. Unlike task-specific algorithms, deep learning is a subset of machine learning based on learning data. It is inspired by the function and structure of what are known as artificial neural networks. Deep learning gains great flexibility and power by learning to display the world as simpler concepts and hierarchies, and to calculate more abstract representations based on less abstract concepts. The most important difference between traditional machine learning and deep learning is the performance of data scaling. Deep learning algorithms do not work well when the data is very small, because they need big data to perfectly identify and understand it. The performance of machine learning algorithms depends on the accuracy of features identified and extracted. Deep learning algorithms, on the other hand, identify these high-level features from the data, thus reducing the effort to develop an entirely new feature extractor for each problem. Deep learning is a subset of machine learning, which has proven to be a more powerful and promising branch of the industry compared to traditional machine learning algorithms. It realizes many functions that traditional machine learning cannot achieve with its layered characteristics. SLAM systems need to collect a large amount of information in the environment, so there is a huge amount of data to calculate, and the deep learning model is just suitable for solving this problem.

This paper believes that semantic VSLAM is an evolving process. In the early stage, some researchers tried to improve the performance of VSLAM by extracting semantic information in the environment using neural networks such as CNN. In the modern stage, target detection, semantic segmentation, and other deep learning methods are powerful tools to promote the development of semantic VSLAM. Therefore, in this chapter, we will first describe the application of typical neural networks in VSLAM. We believe that this is the premise of the development of modern semantic VSLAM. The application of neural networks in VSLAM provides a model for modern semantic VSLAM. This paper believes that a neural network is a bridge to introduce semantic information into the modern semantic VSLAM system and obtain rapid development.

4.1. Neural Networks with VSLAM

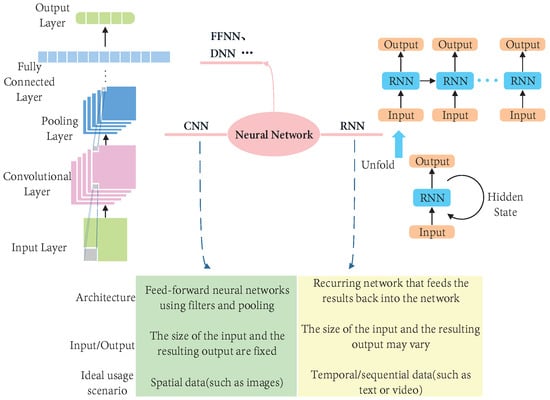

Figure 13 shows the typical framework of CNN and RNN. CNN can capture spatial features from the image, which help us accurately identify the object and its relationship with other objects in the image [150]. The characteristic of RNN is that it can process an image or numerical data. Because of the memory capacity of the network itself, it can learn data types with contextual correlation [151]. In addition, other types of neural networks such as DNN (Deep Neural Networks) also have some tentative work, but it is in the initial stage. This paper notes that CNN has the advantages of extracting features of things with a certain model, and then classifying, identifying, predicting, or deciding based on the features. It can be helpful to different modules of VSLAM. In addition, this paper believes that RNN has great advantages in helping to establish consistency between nearby frames. Furthermore, the high-level features have better differentiation, which can help robots to better complete data association.

Figure 13.

Structure block diagram of CNN and RNN. CNN is suitable for extracting unmarked features from hierarchical or spatial data. RNN is suitable for temporal data and other types of sequential data.

4.1.1. CNN with VSLAM

Traditional inter-frame estimation methods adopt feature-based methods or direct methods to identify camera pose through multi-view geometry [152]. Features-based methods need complex feature extraction and matching. Direct methods rely on pixel intensity values, which makes it difficult for traditional methods to obtain wished results in environments such as intense illumination or sparse texture [153]. In contrast, methods based on deep learning are more intuitive and concise. That is because they do not need to extract environmental features, feature matching, and complex geometric operations [154]. As the feature detection layer of CNN learns through training data, it avoids feature extraction in display and learns implicitly from training data during use. Refs. [155,156] and other works have made a detailed summary.

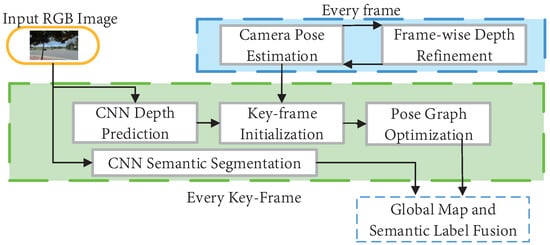

CNN’s advantages in image processing have been fully verified. For example, visual depth estimation improves the problem that monocular cameras cannot obtain reliable depth information [157]. In 2017, Tateno et al. [158] proposed a real-time SLAM system “CNN-SLAM “based on CNN in the framework of LSD-SLAM. As shown in Figure 14, the algorithm obtained a reliable depth map by training the depth estimation network model. CNN is used for depth prediction, which is input into subsequent modules such as traditional pose estimation to improve positioning and mapping accuracy. In addition, CNN semantic segmentation module is added to the framework, which provides help for advanced information perception of the VSLAM system. Similar work using the network to estimate depth information includes Code-SLAM [42] and DVSO [159] Based on a stereo camera. In the same year, Godard et al. [160] proposed an unsupervised image depth estimation scheme. Unsupervised learning is improved by using stereo data set, and then a single frame is used for pose estimation, which has a great improvement compared with other schemes.

Figure 14.

The structure of CNN-SLAM.

CNN not only solves the problem that traditional methods cannot obtain reliable depth data by using a monocular camera but also improves the defects of traditional methods in camera pose estimation. In 2020, Yang et al. [48] proposed D3VO. In this method, deep learning is used from three aspects, including depth estimation, pose estimation, and uncertainty estimation. The prediction depth, pose and uncertainty are closely combined into a direct visual odometer to simultaneously improve the performance of front-end tracking and back-end nonlinear optimization. However, self-supervised methods are difficult to adapt to all environments. In addition, Qin et al. [161] proposed a semantic feature-based localization method in 2020, which effectively solves the problem that traditional visual SLAM methods are prone to tracking loss. Its principle is to use CNN to detect semantic features in the narrow and crowded environment of an underground parking lot, lack of GPS signal, dim light, and sparse texture. Then use U-Net [162] to perform semantic segmentation to separate parking lines, speed bumps, and other indicators on the ground, and then use odometer information. The semantic features are mapped to the global coordinate system to build the parking lot map. Then the semantic features are matched with the previously constructed map to locate the vehicle. Finally, EKF is used to integrate visual positioning results and odometer information to ensure the system can obtain continuous and stable positioning results in the underground parking environment. Zhu et al. [163] learned rotation and translation by using CNN to focus on different quadrants of optical flow input. However, the end-to-end method to replace the visual odometer is simple and crude but without theoretical support and generalization ability.

Loop closure detection can eliminate cumulative trajectory errors and map errors, and determines the accuracy of the whole system, which is essentially a scene identification problem [164]. Traditional methods are matched by artificially designed sparse features or pixel-level dense features. Deep learning can learn high-level features in images through neural networks. Furthermore, its recognition rate can reach a higher level by using the powerful recognition ability of deep learning to extract higher-level robust features of images. In this way, the system can have stronger adaptability to image changes such as perspective and illumination and improve the loop closure image recognition ability [165]. Therefore, scene identification based on deep learning can improve the accuracy of loop closure detection, and CNN has also obtained many reliable effects for loop closure detection. Memon et al. [166] proposed a dictionary-based deep learning method, which is different from the traditional Bow dictionary and uses higher-level and more abstract deep learning features. This method does not need to create vocabulary, has higher memory efficiency, and has a faster running speed than similar methods. However, this paper is only based on the likeness score detection cycle, so it is not widely representative. Li et al. [167] proposed a learning feature-based visual SLAM system named DXSLAM, which solved the limitations of the above methods. Local and global features are extracted from each frame using CNN, and these features are then fed into modern SLAM pipelines for posture tracking, local mapping, and repositioning. Compared with traditional BOW-based methods, it achieves higher efficiency and lower computational cost. In addition, Qin et al. [168] used CNN to extract environmental semantic information and modeled the visual scene as a semantic subgraph. It can effectively improve the efficiency of loopback detection by using semantic information. Refs. [169,170] and others describe in detail the achievements of deep learning in many aspects. However, with the introduction of more complex and better models, how to ensure the real-time performance of model calculation? How to better set in the loop closure detection model in resource-constrained platforms, and the lightweight of the model is also a major problem [171].

CNN has achieved good results in replacing some modules of the traditional VSLAM algorithm, such as depth estimation and loop closure detection. Its stability is still not as good as the traditional VSLAM algorithm [172]. In contrast, the semantic information extraction of the CNN system has brought better effects. The process of traditional VSLAM is optimized by using CNN to extract the semantic information of the environment with higher-level features, making the traditional VSLAM achieve better results. Using a neural network to extract semantic information and combining it with VSLAM will be an area of great interest. With the help of semantic information, the data association is upgraded from the traditional pixel level to the object level. The perceptual geometric environment information is assigned with semantic labels to obtain a high-level semantic map. It can help the robot to understand the autonomous environment and human–computer interaction. Table 8 shows some main application links of the CNN network in VSLAM. Some are involved in many aspects, only the main contributions are listed here.

Table 8.

CNN used for VSLAM.

4.1.2. RNN with VSLAM

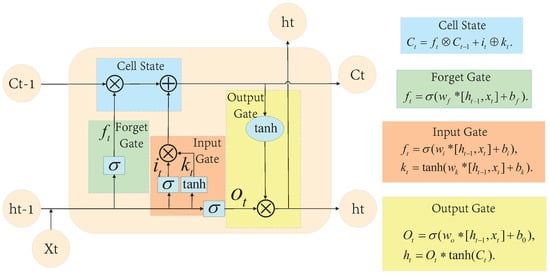

The research of RNN (recurrent neural network) began in the 1980s and 1990s and developed into one of the classical deep learning algorithms in the early 21st century. Long short-term Memory Networks (LSTM) are one of the most common recurrent neural networks [178]. LSTM is a variant of RNN, which remembers a controllable amount of previous training data or forgets it more properly [179]. As shown in Figure 15, the structure of LSTM and the equations of state of its different modules are given. LSTM with special implicit units can preserve input for a long time. LSTM inherits most characteristics of the RNN model and solves the Vanishing Gradient problem caused by the gradual reduction of the Gradient back transmission process. As another variant of RNN, GRU (Gated Recurrent Unit) is easier to train and can improve training efficiency [180]. RNN has some advantages in learning nonlinear features of sequences because of its memorization and parameter sharing. RNN constructed by introducing a convolutional neural network CNN can deal with computer vision problems involving sequence input [181].

Figure 15.

The basic framework of LSTAM.

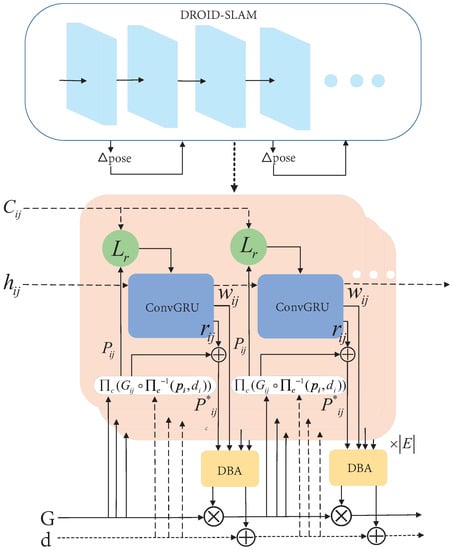

In pose estimation, the end-to-end deep learning method is introduced to solve pose parameters between frames of visual images without feature matching and complex geometric operations. It can quickly obtain the relative pose parameters between frames by directly inputting nearby frames [182]. Xue et al. [183] use deep learning to learn the process of feature selection and realize pose estimation based on RNN. In pose estimation, rotation and displacement are trained separately, which has better adaptability compared with traditional methods. In 2021, Teed et al. [184] introduced DROID-SLAM, whose core is a learnable update operator. As shown in Figure 16, the update operator is a 3 × 3 convolutional GRU with a hidden state of H. The iterative application of the update operator creates a series of attitudes and depths that converge to a fixed point that reflects a real reconstruction. The algorithm is an end-to-end neural network architecture for visual SLAM, which has great advantages over previous work in challenging environments.

Figure 16.

The core framework of DROID-SLAM.

Most existing methods adopt to combine CNN with RNN to improve the overall performance of VSLAM. CNN and RNN can be combined using a separate layer, with the output of CNN as the input of RNN. On the one hand, it can automatically learn the effective feature representation of the VO problem through CNN. On the other hand, it can implicitly model the timing model (motion model) and data association model (image sequence) through RNN [185]. In 2017, Yu et al. [60] combined RNN with KinectFusion to carry out semantic annotation on RGB-D collected images to reconstruct a 3D semantic map. They introduced a new loop closure unit into RNN to solve the problem of GPU computing resource consumption. This method makes full use of the advantages of RNN to realize the annotation of semantic information. High-level features have better discrimination and help the robot to better complete the data association. Due to the use of RGB-D cameras, they can only be operated in indoor environments. DeepSeqSLAM [186] solved this problem well. In this scheme, a trainable CNN+RNN architecture is used to jointly learn visual and location representations from a single monocular image sequence. An RNN is used to integrate temporal information on short image sequences. At the same time, using the dynamic information processing functions of these networks, end-to-end position and sequence position learning are realized for the first time. Furthermore, the ability to learn meaningful temporal relationships from single image sequences of large driving datasets. In running time, accuracy, and calculation needs, sequence-based methods are significantly superior to traditional methods and can operate stably in outdoor environments.

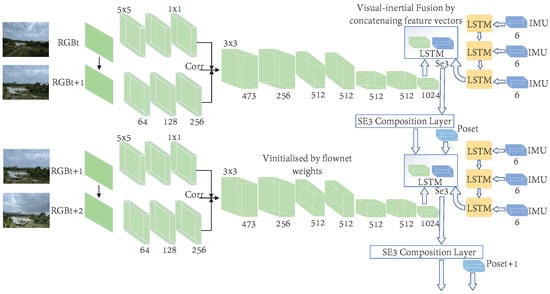

CNN can be combined with many links of VLSAM, such as feature extraction and matching, depth estimation, and pose estimation, and has achieved good results in these aspects. RNN, by contrast, has a smaller scope of application, but it has a great advantage in helping to establish consistency between nearby frames. RNN is a common method for data-driven timing modeling in deep learning. Inertial data such as high frame rate angular velocity and acceleration output by IMU have strict dependence on timing, which is especially suitable for RNN models. Based on this, Clark et al. [175] proposed to use a conventional small LSTM network to process the original data of IMU and obtain the motion characteristics under IMU data. Finally, they combined visual motion features with IMU motion features, and sent it into a core LSTM network for feature fusion and pose estimation. Its principle of it is shown in Figure 17.

Figure 17.

Clark et al. proposed a framework for visual inertia fusion using LSTM.

Compared with pose estimation, we believe that RNN is more attractive for its contribution to visual-inertial data fusion. This method can effectively fuse visual-inertial data and is more convenient than traditional methods. Similar work, such as [187,188], proves the effectiveness of the fusion strategy, which provides better performance compared with direct fusion. This paper gives the contribution of RNN to partial VSLAM in Table 9.

Table 9.

RNN used for VSLAM.

This paper introduces the combination of deep learning and traditional VSLAM from the classical neural networks CNN and RNN in this section. Table 10 shows some excellent algorithms combining neural networks with VSLAM.

Table 10.

An excellent algorithm combining neural networks with VSLAM.

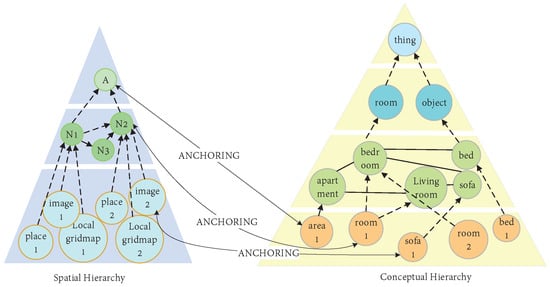

4.2. Modern Semantic VSLAM

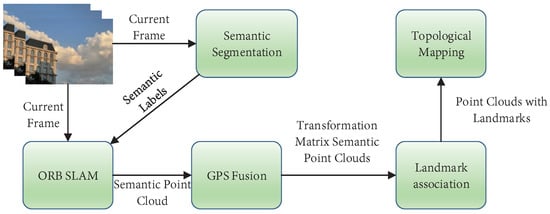

Deep learning has made many achievements in pose estimation, depth estimation, and loop closure detection. However, in VSLAM, deep learning is currently unable to shake the dominance of traditional methods. However, applying deep learning to semantic VSLAM research can obtain more valuable discoveries, which can quickly promote to development of semantic VSLAM. Refs. [60,158,168] used CNN or RNN to extract semantic information in the environment to improve the performance of different modules in traditional VSLAM. The semantic information was used for pose estimation and loopback detection. It significantly improved the performance of traditional methods and proved the effectiveness of semantic information for the VSLAM system. This paper believes that this provides technical support for the development of modern semantic VSLAM and is the beginning of modern semantic VSLAM. Using deep learning methods such as target detection and semantic segmentation to create a semantic map, which is an important representative period of semantic SLAM development. Refs. [135,200] points out that semantic SLAM can be divided into two types according to different target detection methods. One is to detect targets using traditional methods. Real-time monocular object SLAM is the most common one, using a large number of binary words and a database of object models to provide real-time detection. However, it’s very limited because there are many types of 3D object entities for semantic classes such as ”cars.“ Another approach to SLAM is object recognition using deep learning methods, such as those proposed in [46].

Semantics and SLAM may seem to be separate modules, but they are not. In many applications, the two go hand in hand. On the one hand, semantic information can help SLAM to improve the accuracy of mapping and localization, especially for complex dynamic scenes [201]. The mapping and localization of traditional SLAM are mostly based on pixel-level geometric matching. With semantic information, we can upgrade the data association from the traditional pixel level to the object level, improving the accuracy of complex scenes [202]. On the other hand, by using SLAM technology to calculate the position constraints between objects, the consistency constraints can be applied to the recognition results of the same object at different angles and at different times, thus improving the accuracy of semantic understanding. The integration of semantic and SLAM not only contributes greatly to the improvement of the accuracy of both but also promotes the application of SLAM in robotics, such as robot path planning and navigation, carrying objects according to human instructions, doing housework, and accompanying human movement, etc.

For example, We want a robot to walk from the bedroom to the kitchen to get an apple. How does that work? Relying on traditional SLAM, the robot calculates its location (automatically) and Apple’s location (manually) and then does path planning and navigation. If the apple is in the refrigerator, you also need to manually set the relationship between the refrigerator and the apple. However, now with our semantic SLAM technology, it’s much more natural for a human to send a robot, “Please go to the kitchen and get me an apple”, and the robot will do the rest automatically. If there is a contaminated ground in front of the robot during an operation, traditional path planning algorithms need to manually mark the contaminated area so the robot can bypass it [203].

Semantic information can help robots better understand their surroundings. Integrating semantic information into VSLAM is a growing field that has received more and more attention in recent years. This paper will elaborate on our understanding of semantic VSLAM from two aspects of localization, mapping, and dynamic object removal in this section. We believe the biggest contribution of deep learning for VSLAM is the introduction of semantic information. It can improve the performance of different modules of traditional methods to varying degrees. Especially in the construction of the semantic map, which promotes the innovation of the whole intelligent robot field.

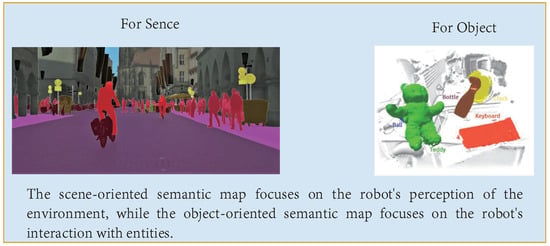

4.2.1. Image Information Extraction

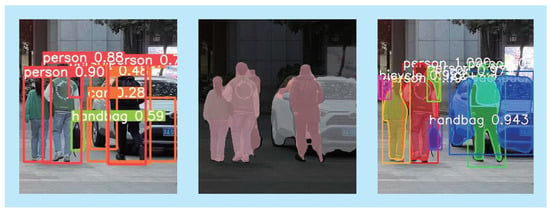

The core difference between modern semantic VSLAM and traditional VSLAM lies in the integration of the object detection module. It can obtain the attributes and semantic information of objects in the environment [204]. The first step of semantic VSLAM is to extract semantic information from the images gained by the camera. Furthermore, semantic information based on image information can be achieved through classifying image information [205]. Traditional target detection relies on interpretable machine learning classifiers, such as decision trees and SVM, to classify and realize target features. However the detection process is slow, the accuracy is low and the generalization ability is weak [206]. Image classification based on deep learning can be divided into Object detection, Semantic segmentation, and Instance segmentation, as shown in Figure 18.

Figure 18.

From left to right are the test renderers of YOLOv5, Deeplabv3, and Mask R-CNN.

How to better extract semantic information from images is a hot research issue in computer vision, whose essence is to extract object character information from scenes [207]. We believe that although neural networks such as CNN also contribute to semantic information extraction, modern semantic VSLAM relies more on semantic extraction modules such as target detection. Object detection and image semantic segmentation are both methods of extracting semantic information from images. Semantic segmentation of images is to understand images at the pixel level to obtain deep-level information in the image, including space, category, and edge. Semantic segmentation technology based on a deep neural network breaks through the bottleneck of traditional semantic segmentation [208]. Compared with semantic segmentation, target detection only obtains the object information and spatial information of the image. Furthermore, it identifies the category of each object by drawing the candidate box of the object, so target detection is faster than semantic segmentation [209]. Compared with object detection, semantic segmentation technology has higher accuracy, but its speed is much lower [210].

Target detection is divided into one-stage and two-stage structures [211]. Early target detection algorithms use two-stage architecture. After creating a series of candidate boxes as samples, sample classification is carried out through a convolutional neural network. Common algorithms include R-CNN [212], Fast R-CNN [213], Faster R-CNN [214], and so on. Later, YOLO [215] creatively proposed the one-stage structure. It directly carried out the Two steps of the two-stage in One step, completed the classification and positioning of objects in one step, and directly output the candidate box and its category obtained by regression. One-stage reduces the steps of the target detection algorithm and directly converts the problem of target frame positioning into regression problem theory without the need to create candidate boxes, which are superior in speed. Common algorithms include YOLO and SSD [216].

In 2014, the appearance of R-CNN subverted the traditional object detection scheme, improved the detection accuracy, and promoted the rapid development of object detection technology. Its core is to extract candidate regions, then obtain feature vectors through Alexnet, and finally use SVM classification and frame correction. However, the speed of feature extraction is limited due to the serial feature extraction method used by R-CNN. Ross proposed Fast R-CNN in 2015 to solve this problem well. Region of Interest Pooling (ROI Pooling) operation is used in Fast R-CNN to improve the efficiency of feature extraction, and Region generation network (RPN) is used for coordinate correction. Many candidate frames (anchor) are set in RPN. Then the dependency relation of the anchor to the background is judged, to work out the coverage area of the anchor and determine whether the target is covered. In addition, YOLO improves the accuracy of prediction, speeds up the processing speed and increases the types of identified objects, and proposes a joint training method for target classification and detection. YOLO is one of the most widely used target detection algorithms, offering real-time detection and a series of improved versions since then.

Different from object detection, semantic segmentation not only predicts the position and category of objects in the image but also accurately describes the boundary between different kinds of objects. However, in semantic segmentation technology, an ordinary convolutional neural network cannot obtain enough information. To solve this problem, Long et al. proposed a fully convolutional neural network FCN [217]. Compared with CNN, FCN does not have a fully connected layer. The new FCN obtains the spatial position of the feature map and fuses the output of different depth layers with the hierarchical structure. This method combines local information with global information and improves the accuracy of semantic segmentation. In Segnet network proposed by Badriarayansn et al. [218], the encoder-decoder structure was proposed, which combined two independent networks to improve the accuracy of segmentation. However, the combination of two independent networks severely reduced the detection speed. Zhao et al. proposed PSPNet [219] and a pyramid module, which fuses the features of each level, such as a pyramid, and finally fuses the output to further improve the segmentation effect.

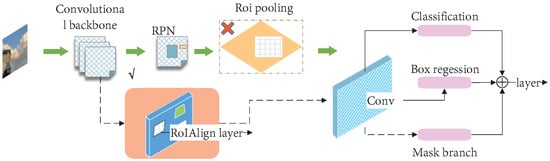

In recent years, the continuous improvement of computer performance promotes the rapid development of instance segmentation in vision. Instance segmentation not only has the classification on the pixel level (semantic segmentation) but also has the location information of different objects (target detection), even the same object can be detected. In 2017, He et al. proposed the Mask R-CNN [220]. This algorithm is the pioneering work of instance segmentation. As shown in Figure 19, its main idea is to add a branch for semantic segmentation based on Faster R-CNN.

Figure 19.

The framework of MASK-RCNN.