Abstract

3D reconstructed models are becoming increasingly widespread, especially in the Cultural Heritage field. These geometric models are typically obtained by processing a 3D point cloud. A significant limitation of these methods is the realignment of point clouds coming from different acquisitions, particularly those comprising millions of points. Although several methodologies have been proposed to address this need, none of them seems to solve definitively the problems related to the realignment of large point clouds. This paper presents a new procedure for the fine registration of large point clouds. The method performs the alignment by using planar approximations of roof features, taking the roof’s extension into account. It appears particularly suitable for the alignment of large point clouds acquired in urban and archaeological environments. The proposed methodology is compared, in terms of accuracy and time, with a standard photogrammetric reconstruction based on Ground Control Points (GCPs) and with other reconstructions aligned by the Iterative Closest Point (ICP) method and by markers. The results show the excellent performance of the methodology, which could represent an alternative for aligning extensive photogrammetric reconstructions without the use of GCPs.

1. Introduction and State of the Art

Image-based reconstruction and laser scanning are the most widely employed techniques for the 3D reconstruction of extensive areas. The first one, in particular, has grown remarkably in the last few years, thanks to the possibility of combining it with Unmanned Aerial Vehicles (UAVs), or drones. These can be equipped with a professional camera and can survey large and open spaces in a short time, with low effort and, especially, minimal cost. Laser scanning, on the other hand, requires more effort, so it is preferred in situations where a UAV cannot operate, such as the 3D reconstruction of a large enclosed environment.

One of the current significant limitations in using these technologies is managing the extensive data set resulting from the acquisitions. Nowadays, large-scale 3D scanners can reach a few centimeters and even millimeters of resolution. Millions of points can characterize the resulting 3D point cloud when capturing large area environments. Such a large point cloud is quite hard to process with the current technologies [1], in particular for three different aspects, which are:

- Post-processing operations and tessellation for generating the 3D mesh;

- Storage of information;

- Alignment with other point clouds.

This last point, in particular, has become crucial in the last few years because, since the characteristics of 3D scanners are complementary, the research community has advocated the integration of point clouds coming from different acquisition modalities [2]. Since in most cases the point clouds are defined in different coordinate systems, this integration requires a registration procedure that aligns multiple geometric models to a unique reference frame. One of the most used methodologies for point cloud alignment is based on markers. At least three markers are appropriately positioned in the area to be scanned, and the point clouds are aligned by imposing the overlap of the recognized markers. The main limit of this methodology is the precision of the result, which also depends on the resolution of the digital images used to acquire the markers. Furthermore, the markers have to be positioned in an area that is detected in both acquisitions, which is not possible when the acquisitions are performed a long time apart. In such cases, natural markers are preferred, which, on the other hand, are more difficult to detect accurately.

Most of the other existing procedures for point cloud alignment employ a coarse-to-fine strategy [3]. The coarse registration aims to establish a rough alignment between the point clouds. This step is fundamental for the fine registration methodologies, which are very sensitive to the initial alignment in terms of both computational cost and final result. The coarse registration can adopt several strategies: Aiger et al. [4], for example, introduced the Four-Points Congruent Sets method, which extracts sets of four co-planar points whose diagonal intersection ratios are invariant. This property is used to verify the matching between two point clouds and to evaluate the rigid transformation (rotation and translation) of the alignment. The weakness of this method is the high computational cost required to verify the matches between point sets.

Other methods treat the coarse alignment with a probabilistic approach, searching for one-to-many correspondences between density functions. Jian et al. [5] modeled the point clouds through Gaussian mixture models. Golyanik et al. and Zang et al. [6,7] proposed an improved and refined probabilistic registration framework. However, the limit of these methodologies is their inability to work with large point clouds, because the registration results strictly depend on how the point clouds are sampled.

Finally, a last class of methodologies is based on deep learning techniques. Their significant advantage consists in the capability to recognize automatically, with good performance, features in the point cloud, reducing the subjectivity introduced by the operators when they perform these operations manually. The identified features are then used to establish the transformation matrix necessary for the alignment of point clouds. Some of the most interesting and recent works in this area have been presented by Qi et al. [8], Deng et al. [9,10], Yang et al. [11], and Wang et al. [12]. Their methods allow the direct alignment of unordered point sets. However, their reliability is limited only to small-scale indoor environments.

The previously presented methodologies do not allow for obtaining, so far, a satisfying alignment in metrological terms. Therefore, they are typically applied only as a first step of a more complex registration procedure that foresees, in the second part, the use of Iterative Closest Point (ICP) or Normal Distribution Transform (NDT), with their respective variants.

ICP is a widespread method for the fine registration of point clouds: it is quite simple to implement, and its results are satisfactory, especially when a good initial alignment between the point clouds is provided. ICP [13] performs the registration by alternately solving for the nearest point-to-point correspondences and the optimal rigid transformation until convergence [14]. ICP searches for one-to-one correspondences between the points; this generates difficulties when the point clouds are characterized by heterogeneous densities, a typical drawback of 3D scanner technologies. ICP is also very sensitive to outliers, noise, and occlusions, and it performs poorly when the point clouds to be aligned have been acquired in different conditions (presence of cars, fauna, different light or weather conditions). Furthermore, this method is computationally onerous, since hundreds of thousands of points must be processed in a mathematical optimization process. Consequently, several variants of the classical ICP method have been proposed over the years. Sharp et al. [15], for example, suggested the use of invariant features combined with the idea of the geometric distance to minimize the effects of noise. Bae et al. [16] introduced a revised ICP, which uses curvature and normal vectors to identify the correspondences. Bouaziz et al. [17] presented a technique that treats the ICP as a sparse optimization problem whose solution minimizes the effects of outliers. In addition to the quality of the point cloud alignment, the computational efficiency of the method is another critical aspect. Uhlenbrock et al. [18] proposed a 2D-array-based k-d tree to speed up the iterative process. Pavlov et al. [19] introduced the Anderson acceleration technique in ICP, helping to reduce the number of iterations required for the method to converge. Nevertheless, the sensitivity to noise and outliers and the low efficiency of the method remain open issues for ICP.

NDT [20,21] is another method employed for the fine alignment. Its working principle is similar to that of the probabilistic approach previously mentioned for the coarse alignment. The point cloud is represented through Gaussian distributions; each distribution is characterized by a specific Probability Density Function (PDF). Given two point clouds to align, the NDT determines, by solving a nonlinear optimization problem, the transformation that minimizes the dissimilarities between the two PDFs. As opposed to the ICP, the NDT can also operate with low-density point clouds. On the other hand, this method is conceptually more difficult to implement; the definition of the PDFs requires a voxelization of the point cloud space, and the results of the nonlinear optimization strictly depend on the initial alignment.

Point cloud registration based on geometric features is a promising strategy for reducing the computational complexity of the ICP method while still achieving high-quality results. In this approach, the alignment is based on the overlap of properly selected geometric features recognized in the common area of each point cloud. It is, in general, much more efficient than the ICP, since the alignment process uses a limited amount of data, namely those associated with a few geometric entities, generally planes. Furthermore, it yields high-quality results that are not far from those obtained using ICP. Stamos et al. [22] presented a method where the alignment of point clouds is based on the intersection lines of the geometric planes associated with the vertical facets of buildings. A similar methodology was proposed by Yang et al. [23]. Some methods are interesting because they improve the efficiency of the feature alignment process. Dold et al. [24] introduced a technique that identifies the 3D planar patches of the mesh and combines them by using a constrained search, so that the number of matching combinations is reduced. Xu et al. [25] proposed an automatic strategy for aligning planar patches based on the voxelization of the point cloud. In each voxel, an implicit plane representation is adopted to fit the surface of points. An eigenvalue decomposition evaluates how well the approximating plane fits the surface.

Wu and Fan in [26] present an approach for registering airborne LiDAR point clouds based on matching corresponding linear plane features. First, the point clouds of the building roofs are extracted from the two LiDAR datasets using the method proposed in [27]. By an iterative process, the points used to approximate the roofs are those for which the residual error compared to the approximating plane is less than a threshold value. Then, the normal vectors are calculated for every simple roof facet of the corresponding buildings. The vectors of the roof facets are used to establish an observation equation system to estimate the transformation between the two datasets; the correspondence between roofs is manually detected. The least-squares method is used to solve the observation system with redundant feature-pairs. The registration is performed in two steps: first, the rotation matrix calculation and then the 3D translation vector computation. The results show that the method does not consider the size of the roofs in the optimization but only their number. This leads to incorrect registration in the case of a non-uniform distribution of roofs.

Rabbani et al. [28] illustrated a methodology that uses other kinds of features, such as cylinders, spheres, and planes that are extracted directly from the point cloud.

Registration based on geometric features is attractive because these features, especially those that are large with respect to the point cloud’s dimensions, have low sensitivity to noise and outliers. Moreover, when enough geometric primitives are recognized in the point cloud, the point cloud itself can be set aside for the subsequent alignment operations, because the geometric features carry all the geometric attributes necessary for the alignment.

All the described methodologies using geometric features for the alignment have been tested in urban and interior environments since, in these cases, all the acquired objects are defined by planar features. In this paper, the geometric feature-based approach is used to align point clouds acquired in a vast natural environment with few diffused artificial elements such as groups of houses or historical environments. In particular, the possibility of associating planar features with roofs, which represent isolated elements in the environment and are characterized by very irregular surfaces, will be investigated. In addition, a new representation of the ideal flat feature associated with the roofs is proposed considering the roofs’ size in the observation equation system. This allows for an optimization that better considers the spatial distribution of roofs and not simply their spatial position and orientation.

In the first part of the paper, an ad hoc experiment carried out to show the validity and repeatability of approximating roofs by planes is presented. The second part discusses an application of the proposed methodology to the test case of Alba Fucens, Italy, where the roofs of some ancient buildings have been used to merge two separate surveys. The alignment results have been compared with the “ground truth” provided by some Ground Control Points (GCPs) uniformly distributed in the aligned point clouds. The results of the presented methodology have also been compared with those obtained from an ICP and a marker-based alignment.

2. The Proposed Methodology

The problem solved by the presented methodology consists of the alignment of two surveys of territory having a shared zone, where geometric features can approximate some anthropic artifacts. The methodology here proposed uses a typical element recurrent in the anthropized environments: the roofs of buildings. The roof pitches are elements to which ideal geometric features could be associated (planes) and then used as a reference for the alignment procedure of two distinct surveys. The proposed computer-aided approach starts with two sets of unordered point clouds to align, and , representing two different areas, A and B; the problem to be solved requires that:

In each point cloud, the points of the C area are segmented so that the C area is described by the survey of the region A and the survey of the region B. The presence of roof elements (non-ideal features) or other geometric entities to be associated with ideal features is required in C. In what follows, only roof pitches will be considered. The set of roof features in C must be identified as a set of non-ideal features with which ideal geometric elements can be associated (ideal-features). The ideal features are used to determine the two surveys’ alignment uniquely.

The methodology introduced in this paper allows for obtaining the fine alignment of multiple point clouds, two clouds at a time, by three key phases:

- non-ideal feature identification;

- ideal-features association;

- registration of the sets of ideal features and transformation matrix evaluation for the alignment of the point clouds.

2.1. Non Ideal-Feature Identification

The proposed methodology uses some of the roofs in the acquired territory as non-ideal features, with which ideal features (planes) are associated to drive the alignment process. Roofs are identifiable in every urban environment and, thanks to their extension, are high-weight elements for driving an alignment process. The set of non-ideal features (roofs) needs to be selected, in number and orientation, so that all the degrees of freedom necessary for the alignment of the point clouds are constrained. Some methods described in the literature can be used to automate the identification process. Fan et al. in [27] present an approach for roof facet segmentation based on ridge detection and hierarchical decomposition along ridges. The process starts with detecting the 3D points of roof ridges as local height maxima. For each detected roof ridge, the 3D points located on the same plane as the seed points are assigned to the corresponding roof facets. The process terminates when no more roof ridges can be detected. Dahaghin et al. in [29] proposed a method to extract building roofs from the visible point cloud. The method includes ground filtering, vegetation removal, wall removal based on geometric properties, and, finally, segmentation of the remaining points. The roof segmentation is performed by a modified version of the connected component labeling algorithm proposed by Lumialn et al. in [30], which divides the selected point cloud into smaller parts separated according to a minimum distance condition, each part forming a connected component. Awrangjeb et al. in [31] propose an automatic 3D roof extraction method that integrates LIDAR (Light Detection And Ranging) data and multispectral orthoimagery. Using the ground height from a DEM (Digital Elevation Model), the raw LIDAR points are separated into ground and non-ground points. 
The latter are segmented using the image line guided segmentation technique using the ground mask and color and texture information from the orthoimagery to extract the roof planes. These automatic methods presented in the literature show excellent results in roof segmentation. Since this paper aims to analyze mainly the association and registration phases, each roof pitch (non-ideal feature) is manually selected and segmented by an operator from the point clouds to which it belongs, so that h-th non-ideal features are identified, one for each roof pitch. The resulting patches, for for , are the real features associated with the h roofs identifiable in the point set C.

2.2. Ideal-Features Association

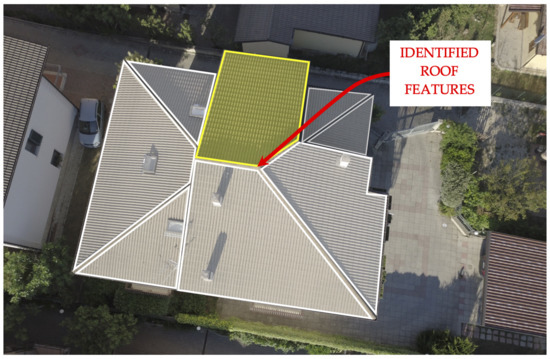

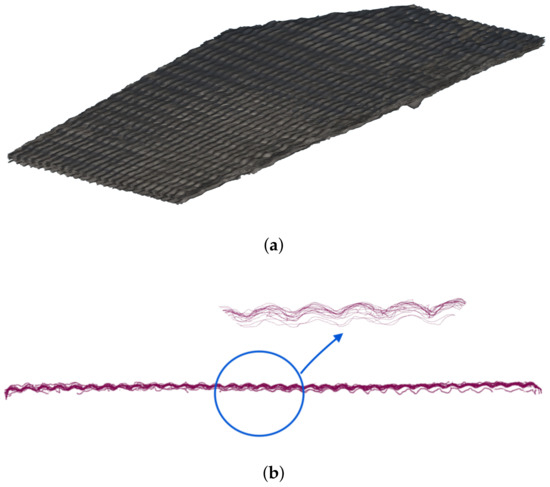

An ideal planar feature is associated with each identified patch . The repeatability of the ideal feature association to the roof patch is an important aspect that must be evaluated. Roofs are not planar objects, but they are composed of tiles that can be different in dimensions, colors, shapes, and integrity (cf. Figure 1). The continuity of roofs can be broken by chimney pots, antennas, and windows, making the planar approximation even more complex. Each time the same roof is surveyed, a different point cloud is obtained, but the ideal features associated with it have to represent the same ideal geometric entity with a high level of repeatability.

Figure 1.

An example of roof tiles characterized by different shape, dimensions, colors and integrity.

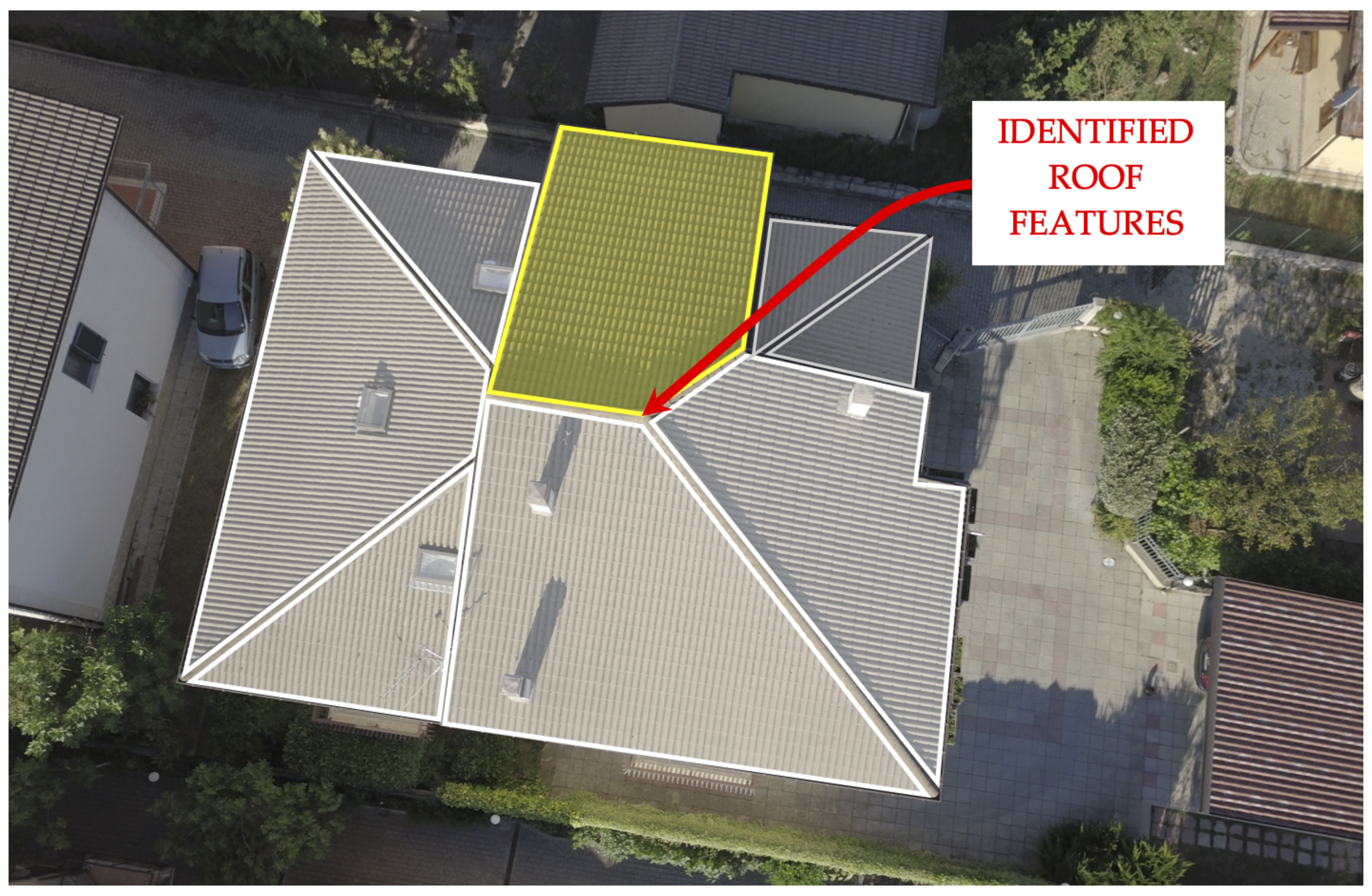

Specific experimentation has been carried out to validate this hypothesis. The roofs of a private building located in Genzano (42°21′09.7″N, 13°19′29.8″E), L’Aquila, Italy, have been captured through 20 UAV surveys. The surveys have been performed with a DJI Mavic Pro, whose technical specifications are indicated in Table 1. The missions were accomplished between 2 July and 5 July 2021, with good weather but different light conditions. Figure 2 shows a top view of the building subjected to the experimentation, while the flight plan parameters are reported in Table 2.

Table 1.

Technical specification of the instrumentation used for the UAV photogrammetry surveys in Genzano.

Figure 2.

Top view of the building chosen for experimenting the approximation of roofs by planes.

Table 2.

Adopted configuration for the 20 surveys.

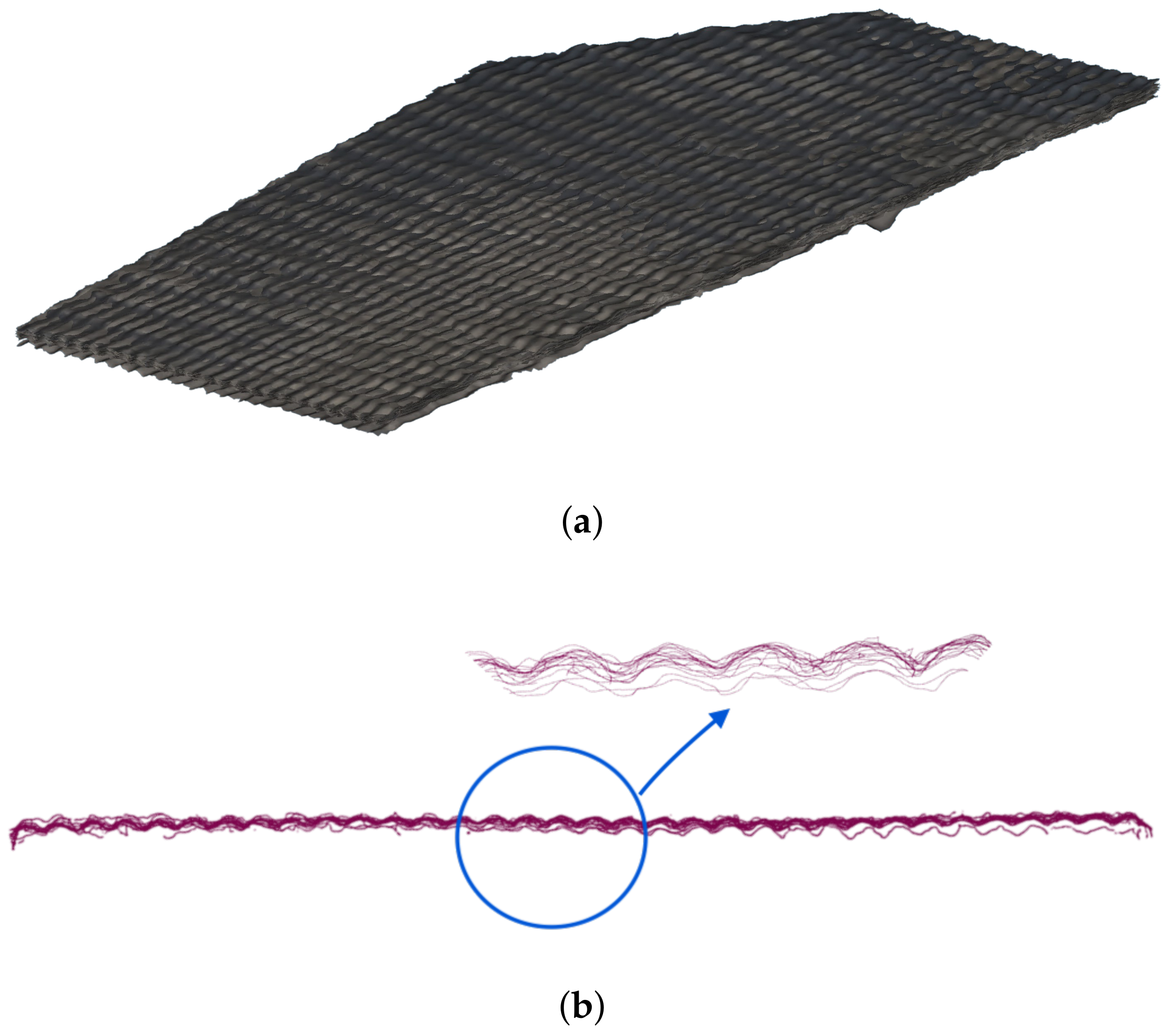

Approximately 40 photos were captured during each survey. These have been imported into Agisoft Metashape Pro (v. 1.7.1) as separate chunks, where they have been processed to obtain a 3D mesh using the Ultra High preset. The resulting meshes have been aligned with each other by defining four Control Points, recognizable thanks to artificial markers that an operator placed on the rooftop. The alignment has been performed in Agisoft Metashape using the integrated Chunk Alignment tool. Then, the meshes have been exported to CloudCompare and manually segmented to isolate the roof parts. Figure 3a shows the results of the alignment and segmentation process for one of the roof features identified in the acquired building (highlighted in yellow in Figure 2). In particular, a roof feature is identified by those contiguous surfaces having the same normal, within a specific tolerance value. Figure 3a shows a non-negligible dispersion in the overlap between the aligned meshes.

Figure 3.

Results of alignment and segmentation of one of the roof features of the acquired building. (a) View of the aligned mesh for one of the recognized roof features; (b) 2D contours obtained from the cross section of the roof feature recognized in (a).

Neglecting the outliers, the roof contour is described by a periodic function whose period remains constant while its amplitude changes. These dispersions have several causes. A systematic source of error is introduced by the changes in light conditions, which lead to a different evaluation of roof contours by the photogrammetric reconstruction algorithm. This problem, described by Aber et al. [32], can be identified in Figure 3b. A source of random error is introduced by the sensor of the UAV camera. Finally, the discretization due to the tessellation process introduces further differences among the point clouds. The present experimentation shows that the approximating planes have limited variability, despite the differences between the models obtained from the different acquisitions.

For each of the recognized roof features of the acquired building, the approximating planes according to , , and norms have been evaluated. The implicit equation of a plane being

$$a x + b y + c z + d = 0,$$

the values of a, b, c and d have been determined for the previously mentioned metrics. Only the results for the roof feature shown in Figure 3 are reported in Table 3, since those associated with the other recognized roof features are very similar in terms of statistical behaviour.
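As an illustration of how the coefficients a, b, c and d can be obtained from a segmented roof patch, the following minimal sketch fits a plane in the least-squares sense through a singular value decomposition. It is not the implementation used in this work: the function name `fit_plane_least_squares` and the synthetic patch are hypothetical, and only the least-squares criterion is shown, which is an assumption made for the example.

```python
import numpy as np

def fit_plane_least_squares(points):
    """Fit a*x + b*y + c*z + d = 0 to an (N, 3) array; (a, b, c) is a unit normal."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector of the smallest singular value is the normal
    # that minimizes the sum of squared orthogonal distances to the plane.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:
        normal = -normal  # orient the normal upwards so repeated fits are comparable
    d = -float(normal @ centroid)
    return float(normal[0]), float(normal[1]), float(normal[2]), d

# Synthetic, hypothetical roof patch: points on z = 0.5*x + 2 with 1 cm noise.
rng = np.random.default_rng(0)
xy = rng.uniform(0.0, 5.0, size=(200, 2))
z = 0.5 * xy[:, 0] + 2.0 + rng.normal(0.0, 0.01, size=200)
a, b, c, d = fit_plane_least_squares(np.column_stack([xy, z]))
```

Normalizing (a, b, c) to unit length makes d directly interpretable as a signed distance from the origin, which is convenient when planes fitted to different surveys of the same roof are compared.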

Table 3.

Values of coefficients a, b, c and d for the 20 meshes of the roof feature identified by Figure 3.

The obtained values have been statistically analysed to determine the repeatability and reliability of approximating roofs by planes. The results of the analysis are reported in Table 4 and lead to the following considerations: each one of the adopted norms for the plane approximation shows good results, although evidence better behavior with respect to the other ones. The standard deviations of the coefficients a, b and c are the same for , and . These coefficients are the direction cosines of the plane normal, and the obtained standard deviations translate into minimal orientation errors. Since the orientation error among the obtained planes is minimal, the d parameter can be interpreted as the distance among them: the obtained standard deviation, using , is 1.7 cm. Since this value is comparable to the Ground Sample Distance of the UAV surveys, the approximation of roofs by planes seems robust.
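The kind of repeatability check described above can be sketched as follows: given the plane coefficients fitted to repeated surveys of the same roof, the angular deviation of each normal from the mean normal and the standard deviation of d are computed. The function names and the three sets of coefficients are hypothetical and chosen only for illustration; they are not the values of Table 3.

```python
import math
import statistics

def angle_between_normals_deg(n1, n2):
    """Angle in degrees between two (not necessarily unit) normal vectors."""
    dot = sum(p * q for p, q in zip(n1, n2))
    norm = math.sqrt(sum(p * p for p in n1)) * math.sqrt(sum(q * q for q in n2))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding
    return math.degrees(math.acos(cos_angle))

def repeatability(planes):
    """planes: list of (a, b, c, d). Returns (max angular deviation in degrees
    from the mean normal, sample standard deviation of d)."""
    n = len(planes)
    mean_normal = [sum(p[i] for p in planes) / n for i in range(3)]
    max_angle = max(angle_between_normals_deg(p[:3], mean_normal) for p in planes)
    std_d = statistics.stdev([p[3] for p in planes])
    return max_angle, std_d

# Hypothetical coefficients from three surveys of the same roof pitch (d in metres).
example_planes = [(0.0, 0.6, 0.8, -2.00), (0.0, 0.6, 0.8, -2.02), (0.0, 0.6, 0.8, -1.98)]
max_angle, std_d = repeatability(example_planes)
```

When the angular deviation is negligible, as in this toy case, the spread of d can be read directly as the spread of the distance between nearly parallel planes.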

In light of the achieved results, the fitting method has been used for the experimental part of the present work, described in Section 3.

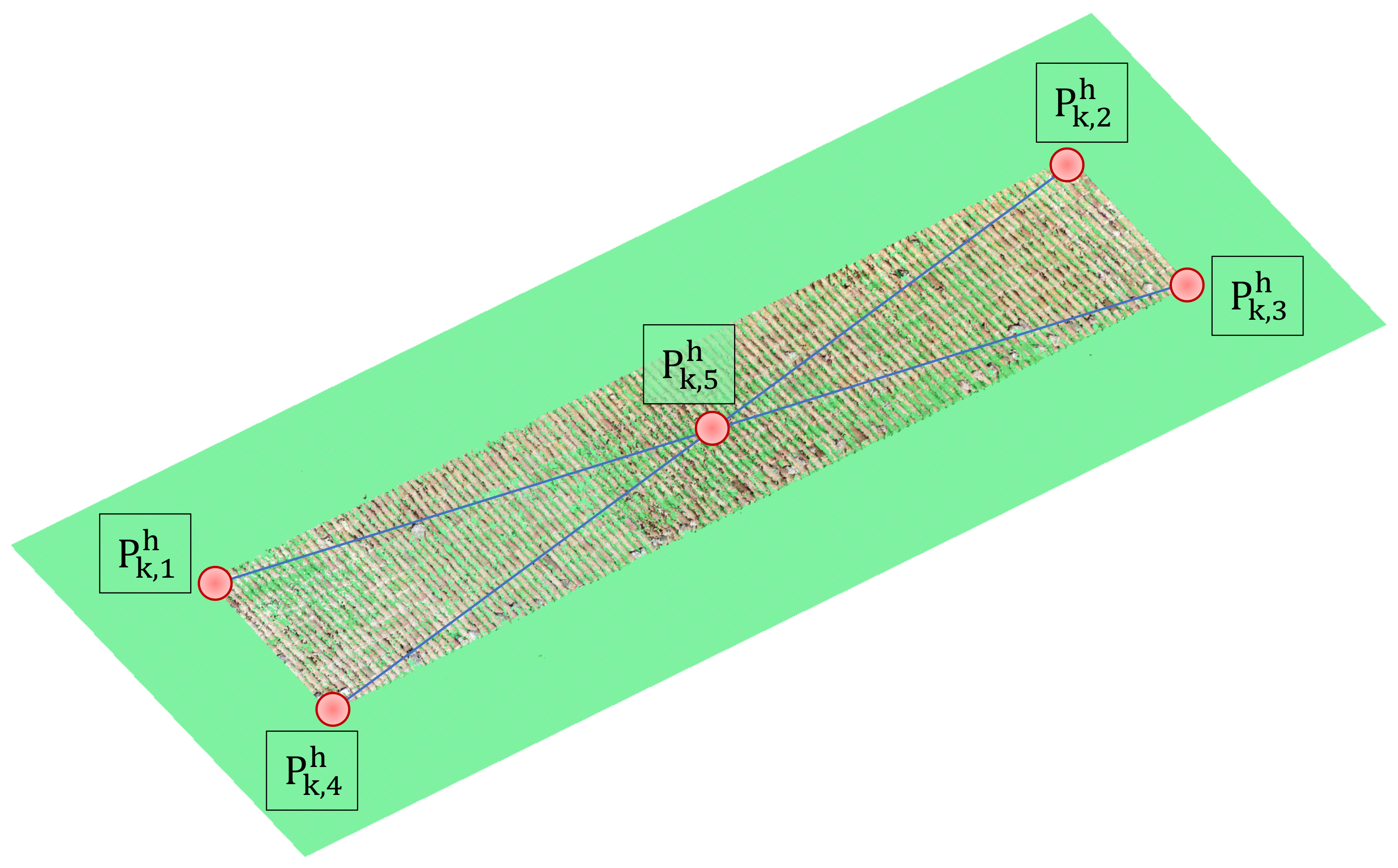

2.3. Ideal-Features Registration

The realignment problem is solved by minimizing the distances from the set of ideal features associated with with the set of corresponding ideal features in . Since the roofs are determined in the overlapping part of the two point clouds, correspondences are automatically derived with an initial registration of the roof points based on the PCA method. The superscript h ( to ) identifies the corresponding ideal feature in each of the two point clouds. is assumed as a fixed point cloud, and as a moving one. Registration performs the alignment of corresponding ideal features recognized in and so that the sum of the distances of two corresponding ideal features is minimized:

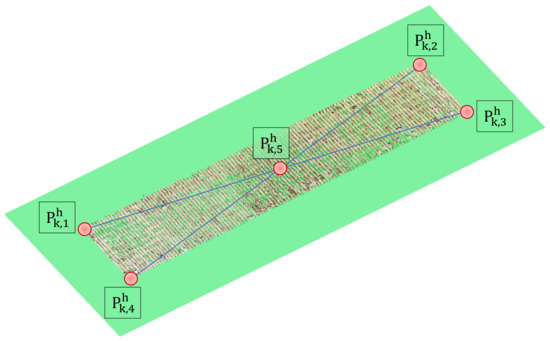

For this purpose, the ideal features () in the fixed point cloud are represented by their implicit equations, whereas those in the moving point cloud () are represented by five reference points automatically computed from the parametric equation of the plane: the four extreme points of the ideal feature and its barycenter ( to 5 and ) (Figure 4).

Figure 4.

Example of the non-ideal feature with superimposed ideal-features and the reference points.
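One possible way of deriving five reference points from a planar patch is sketched below. It assumes, purely for illustration, that the four extreme points are the corners of the bounding rectangle of the patch measured along its two in-plane principal directions; this interpretation and the function name `reference_points` are assumptions of the example, not a description of the authors' code.

```python
import numpy as np

def reference_points(points):
    """Return a (5, 3) array: four corners of the in-plane bounding rectangle
    of the patch, followed by its barycenter."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    centered = pts - centroid
    # Rows of vt: the two in-plane principal directions, then the plane normal.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    u_axis, v_axis = vt[0], vt[1]
    u = centered @ u_axis
    v = centered @ v_axis
    corners = [centroid + su * u_axis + sv * v_axis
               for su, sv in ((u.min(), v.min()), (u.max(), v.min()),
                              (u.max(), v.max()), (u.min(), v.max()))]
    return np.vstack(corners + [centroid])

# Hypothetical flat patch: a 4 m x 2 m rectangle sampled on a regular grid.
gx, gy = np.meshgrid(np.linspace(0.0, 4.0, 9), np.linspace(0.0, 2.0, 5))
patch = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
ref = reference_points(patch)
```

Because the corners scale with the extent of the patch, larger roofs contribute reference points that are farther apart, which is one way in which the size of a roof can enter the registration.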

In order to evaluate the distance , for each ideal feature belonging to the moving point cloud , the registration is achieved by an iterative method that minimizes the following objective function:

where:

- is the number of the ideal features in each point cloud to be aligned;

- is the distance between the reference point at the step and the corresponding ideal feature ;

- is expressed by homogeneous coordinates;

- is the rotation matrix at the step;

- is the scale matrix at the step;

- is the translation matrix at the step;
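The quantities listed above can be combined in a small numerical sketch. It is given under explicit assumptions: the transformation is composed as translation times rotation times uniform scale, the rotation is parameterized by three Euler angles, and the cost is the plain sum of point-to-plane distances of the transformed reference points. The exact objective function of the method is not reproduced here, and the names `transform_matrix` and `objective` are hypothetical.

```python
import numpy as np

def transform_matrix(rx, ry, rz, scale, tx, ty, tz):
    """4x4 homogeneous matrix T @ R @ S (composition order assumed for illustration)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    m = np.eye(4)
    m[:3, :3] = (rot_z @ rot_y @ rot_x) * scale
    m[:3, 3] = (tx, ty, tz)
    return m

def objective(params, fixed_planes, moving_ref_points):
    """Sum of distances between transformed reference points and fixed planes.

    fixed_planes: (n, 4) rows (a, b, c, d) with unit normals.
    moving_ref_points: (n, 5, 3) reference points of the corresponding features.
    """
    m = transform_matrix(*params)
    planes = np.asarray(fixed_planes, dtype=float)
    pts = np.asarray(moving_ref_points, dtype=float)
    ones = np.ones(pts.shape[:2] + (1,))
    homog = np.concatenate([pts, ones], axis=-1)   # homogeneous coordinates
    moved = homog @ m.T
    # For a unit normal, |a*x + b*y + c*z + d| is the point-to-plane distance.
    dist = np.abs(np.einsum("nk,njk->nj", planes, moved))
    return float(dist.sum())

# Hypothetical single feature: the plane z = 0 and five points lying on it.
planes = [[0.0, 0.0, 1.0, 0.0]]
points = [[[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0], [0.5, 0.5, 0]]]
cost_identity = objective((0, 0, 0, 1, 0, 0, 0), planes, points)
cost_shifted = objective((0, 0, 0, 1, 0, 0, 0.1), planes, points)
```

In this toy case the identity transformation gives a zero cost, while a 0.1 m shift along the normal gives five distances of 0.1 m each.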

As the minimization strategy, the proposed methodology uses a meta-heuristic algorithm: Particle Swarm Optimization (PSO) [33]. PSO has been chosen because, with respect to exact methods, it has a lower computational burden and does not require strict hypotheses for its use. Although the PSO does not guarantee convergence to a global minimum, in the application analysed here the initial association between the features of the fixed point cloud and the corresponding ones of the moving point cloud led to appropriate solutions in all the considered scenarios. The result of the registration steps is the non-rigid roto-translation matrix:
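For readers unfamiliar with PSO, a minimal global-best variant is sketched below on a toy cost function. The swarm size, number of iterations, and inertia and acceleration coefficients are illustrative assumptions and do not correspond to the settings adopted in this work; `pso_minimize` is a hypothetical name.

```python
import random

def pso_minimize(cost, bounds, n_particles=30, n_iter=100,
                 inertia=0.7, c_cognitive=1.5, c_social=1.5, seed=0):
    """Minimal global-best PSO. Returns (best position, best cost, cost history)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]          # personal best positions
    best_cost = [cost(p) for p in pos]      # personal best costs
    g = min(range(n_particles), key=best_cost.__getitem__)
    g_pos, g_cost = best_pos[g][:], best_cost[g]
    history = [g_cost]
    for _ in range(n_iter):
        for i in range(n_particles):
            for k in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][k] = (inertia * vel[i][k]
                             + c_cognitive * r1 * (best_pos[i][k] - pos[i][k])
                             + c_social * r2 * (g_pos[k] - pos[i][k]))
                lo, hi = bounds[k]
                pos[i][k] = min(hi, max(lo, pos[i][k] + vel[i][k]))  # stay in bounds
            c = cost(pos[i])
            if c < best_cost[i]:
                best_pos[i], best_cost[i] = pos[i][:], c
                if c < g_cost:
                    g_pos, g_cost = pos[i][:], c
        history.append(g_cost)
    return g_pos, g_cost, history

# Toy cost with its minimum at (1, -2); it stands in for a registration objective.
def toy_cost(p):
    return (p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2

toy_bounds = [(-5.0, 5.0), (-5.0, 5.0)]
best_position, best_value, cost_history = pso_minimize(toy_cost, toy_bounds)
```

The global best can only improve or stay unchanged from one iteration to the next, but, as noted above, nothing guarantees that it reaches the global minimum; a good initial association narrows the region the swarm has to explore.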

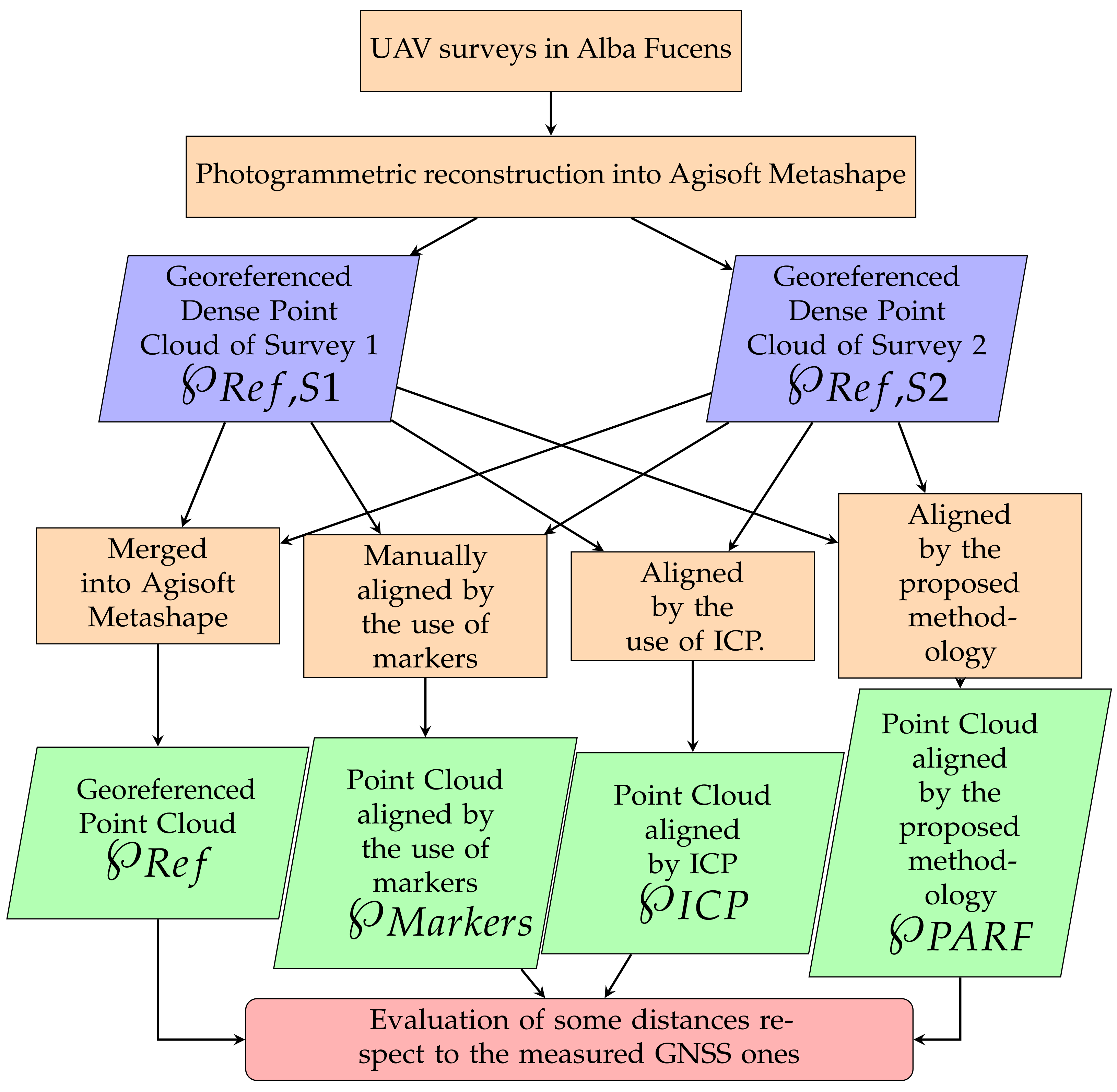

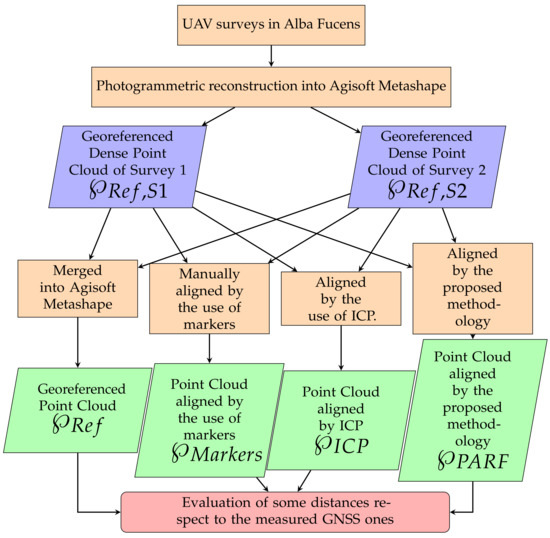

3. Experiments and Analysis

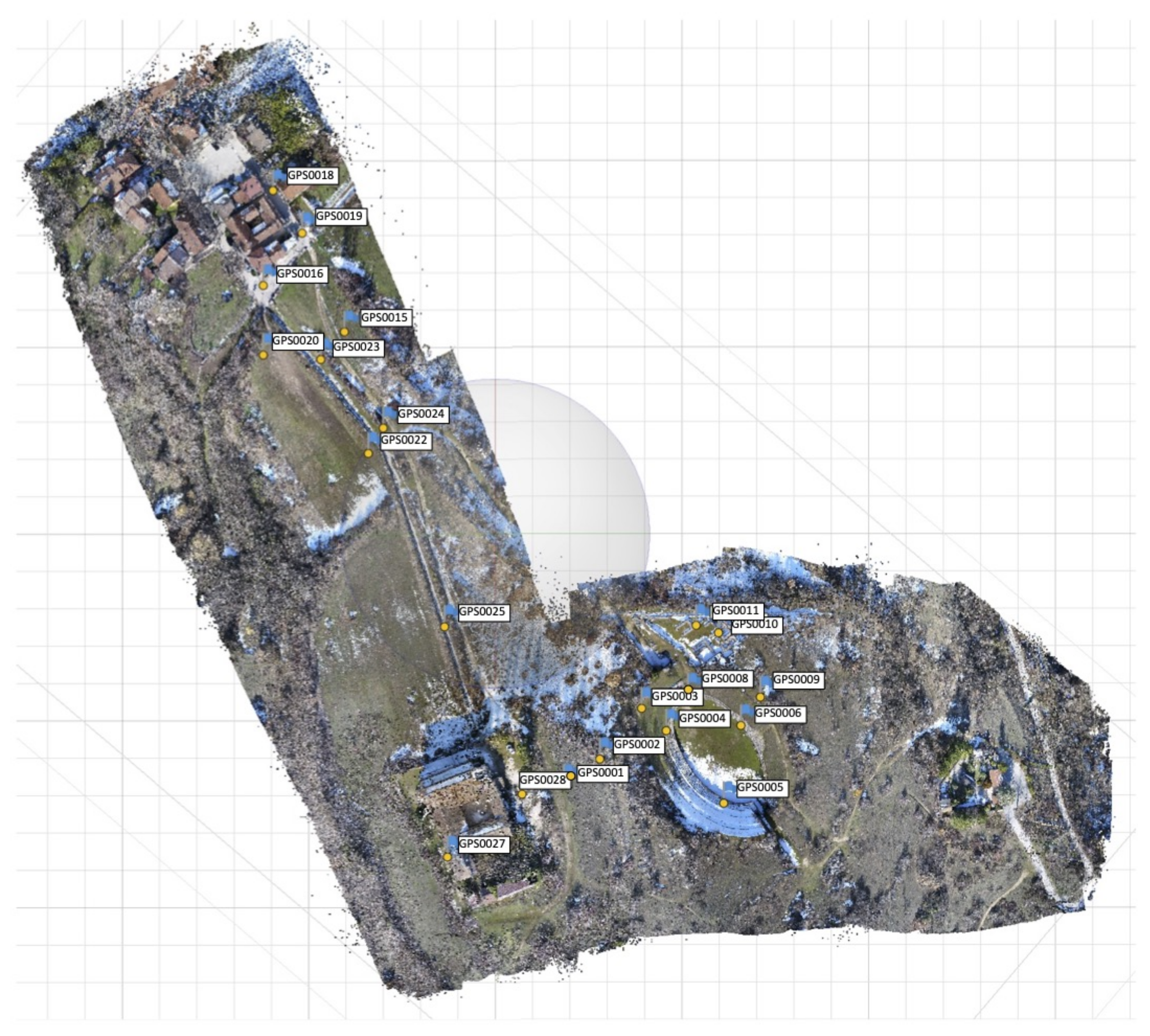

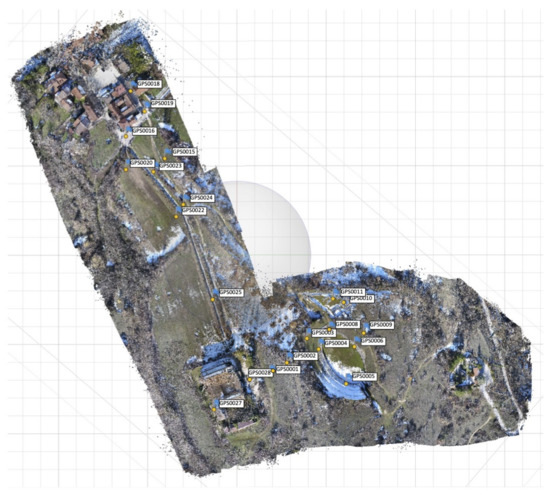

The methodology presented here has been tested in Alba Fucens, L’Aquila, Italy. Alba Fucens was an ancient Roman city founded in 303 B.C., and its ruins represent the largest archaeological site in the Apennines. The archaeological excavations and elements of interest in this city cover an area of approximately 20 hectares. Therefore, the digital 3D reconstruction of this archaeological site appears suitable for testing the proposed technique. Figure 5 shows the main phases of the experiment. The area shown in Figure 6 has been acquired and reconstructed in 3D through two different UAV surveys. These were performed on 20 December 2021, using a DJI Matrice 200 V2 equipped with a DJI Zenmuse X5S, whose technical specifications are listed in Table 5.

Figure 5.

Workflow of the adopted experimentation to validate the proposed methodology.

Figure 6.

Overview of Alba Fucens and area covered by the two surveys and the flight plans of S1 and S2.

Table 5.

Technical specification of the instrumentation used for the UAV photogrammetry surveys in Alba Fucens.

The flight plans have been set to guarantee a good overlap between images and to establish the final GSD (Ground Sample Distance) as a function of the sensor focal length and the flight height. A side lap of 60% and an overlap of 70% have been established for these surveys. The flights have been set at an altitude of 30 m above the starting area (red circle in Figure 6), with a GSD of 0.66 cm/pixel. As the area is quite steep, the roof cluster, which is the starting point, is located in the highest part of the two surveys. Over the lowest area, the flight height reached about 55 m, with a GSD of 1.21 cm/pixel. A total of 417 and 475 images have been taken during the first and second flights, respectively. The flight plans for the two surveys are reported in Figure 6. In addition, during the survey, 25 points were acquired by a GNSS (Global Navigation Satellite System) receiver in NRTK (Network Real-Time Kinematic) mode (: 2–3 cm); these points are used for geo-referencing the point clouds and assessing their quality.
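The two GSD values quoted above are consistent with the linear dependence of the GSD on the height above ground for a fixed camera (same focal length and pixel size). The short check below uses only the figures reported in the text; the function name `gsd_at_height` is hypothetical.

```python
def gsd_at_height(gsd_ref_cm, height_ref_m, height_m):
    """GSD at a new height above ground, scaled linearly from a reference
    GSD and height for the same camera and focal length."""
    return gsd_ref_cm * height_m / height_ref_m

# 0.66 cm/pixel at 30 m scales to about 1.21 cm/pixel at 55 m above ground.
gsd_lowest_area = gsd_at_height(0.66, 30.0, 55.0)
```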

The acquired images have been processed into Agisoft Metashape Pro, obtaining two different point clouds:

- the first point cloud referred to the area of Survey 1;

- the second point cloud referred to the area of Survey 2;

The elaborations have been performed using the same settings for all the point clouds and, in particular, the most advanced presets: “highest” for the Image Alignment and “ultra-high” for the Dense Point Cloud generation. Table 6 presents the estimated errors on GCPs and CPs for every survey. An average error of 5.35 cm has been measured on the GCPs and 5.01 cm on the CPs for Survey 1, while the corresponding errors for Survey 2 are 4.99 cm on the GCPs and 4.57 cm on the CPs. The obtained values demonstrate the good quality of the final reconstructions, whose errors are comparable with those reported in similar works in the literature. Table 7 lists the data associated with the photogrammetric reconstructions. Figure 7 shows the results of Dense Point Cloud generation and the position of GCPs and CPs.

Table 6.

GCPs and CPs RMSE of Surveys 1 and 2.

Table 7.

Data related to the photogrammetric reconstructions.

Figure 7.

Dense Point Cloud generation into Agisoft Metashape and position of GCPs.

These two point clouds have been registered by four different methods, which are described in the following:

- the registration of and has been performed in Agisoft Metashape. The two surveys, which have been processed in two different chunks, have been aligned with the “Align chunk” tool of the program, using the marker-based method and the high preset. Then, the point clouds have been merged into a single one by the “Merge chunk” method. The resulting point cloud, characterized by over one and a half billion points, has been exported in .ply format according to the “WGS 84/UTM zone 33N” coordinate system. The GCP RMSE and CP RMSE are, respectively, 6.11 cm and 5.58 cm;

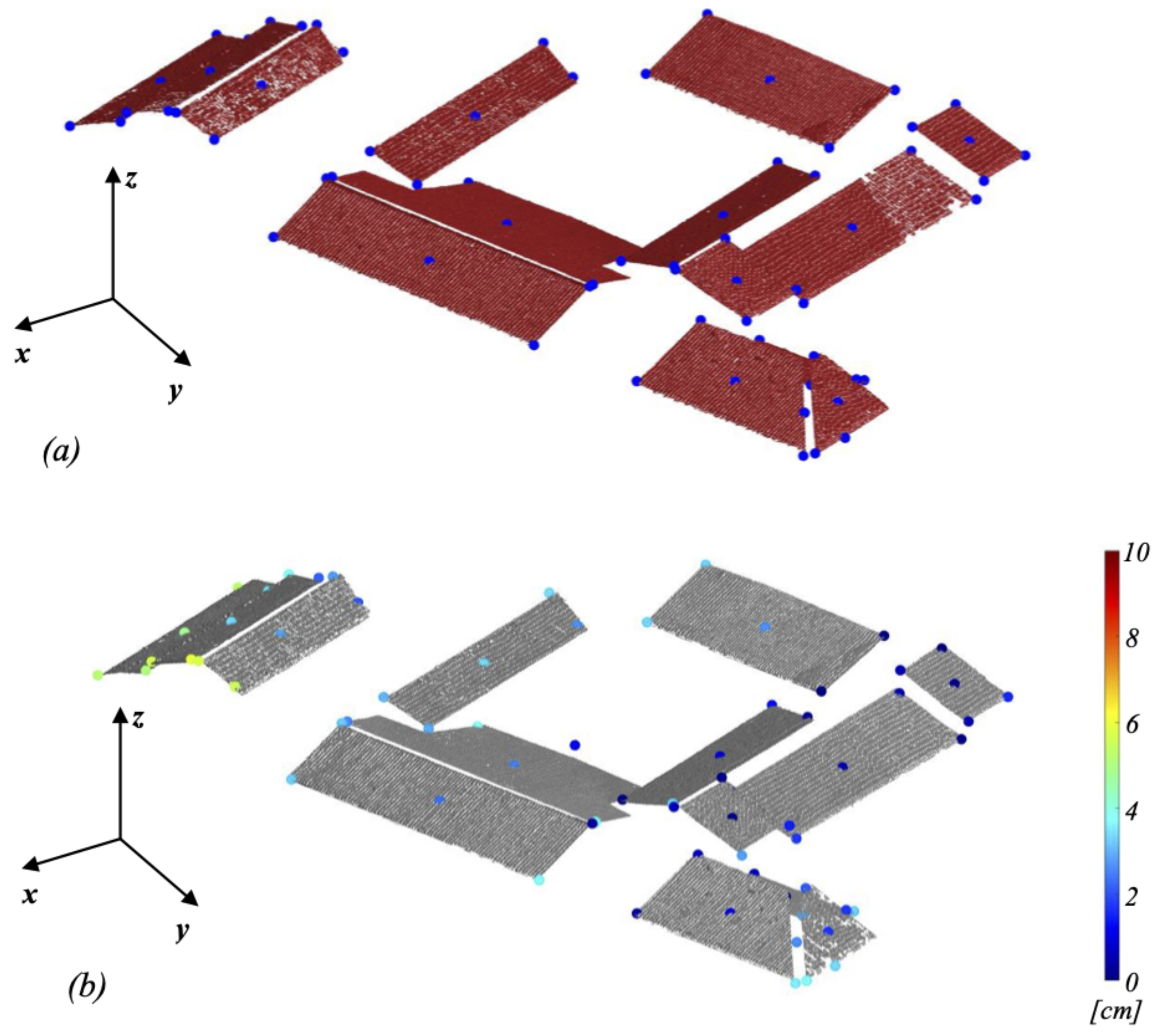

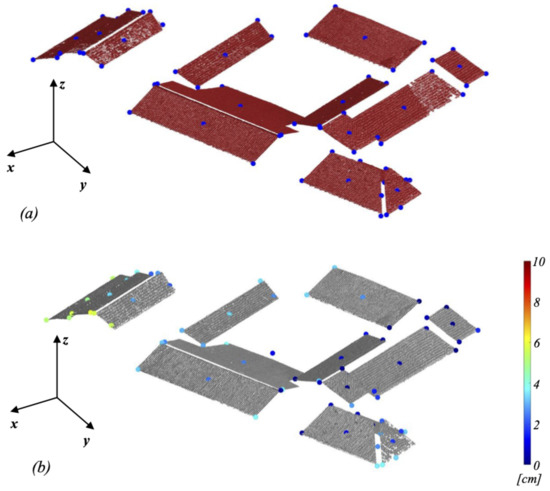

- the PARF registration, described in Section 2, has been coded in Matlab. In Figure 8a, the features used to perform the alignment of the two point clouds are shown; these are represented by the roof patches of San Pietro church (cf. Figure 6 and Figure 9). At the end of the optimization process, the objective function assumed a value of 2.635. The evaluated transformation matrix has been applied to the point cloud for the alignment with the reference point cloud , obtaining the . In Figure 8b, the point clouds of the features of Survey 1 have been superimposed on the features of Survey 2. The same figure depicts the color map of the distances between the point cloud of Survey 2 and the reference feature of Survey 1.

Figure 8. Results of registration method: (a) reference points superimposed on “moving” point cloud; (b) final position of reference points superimposed on “fixed” point cloud.

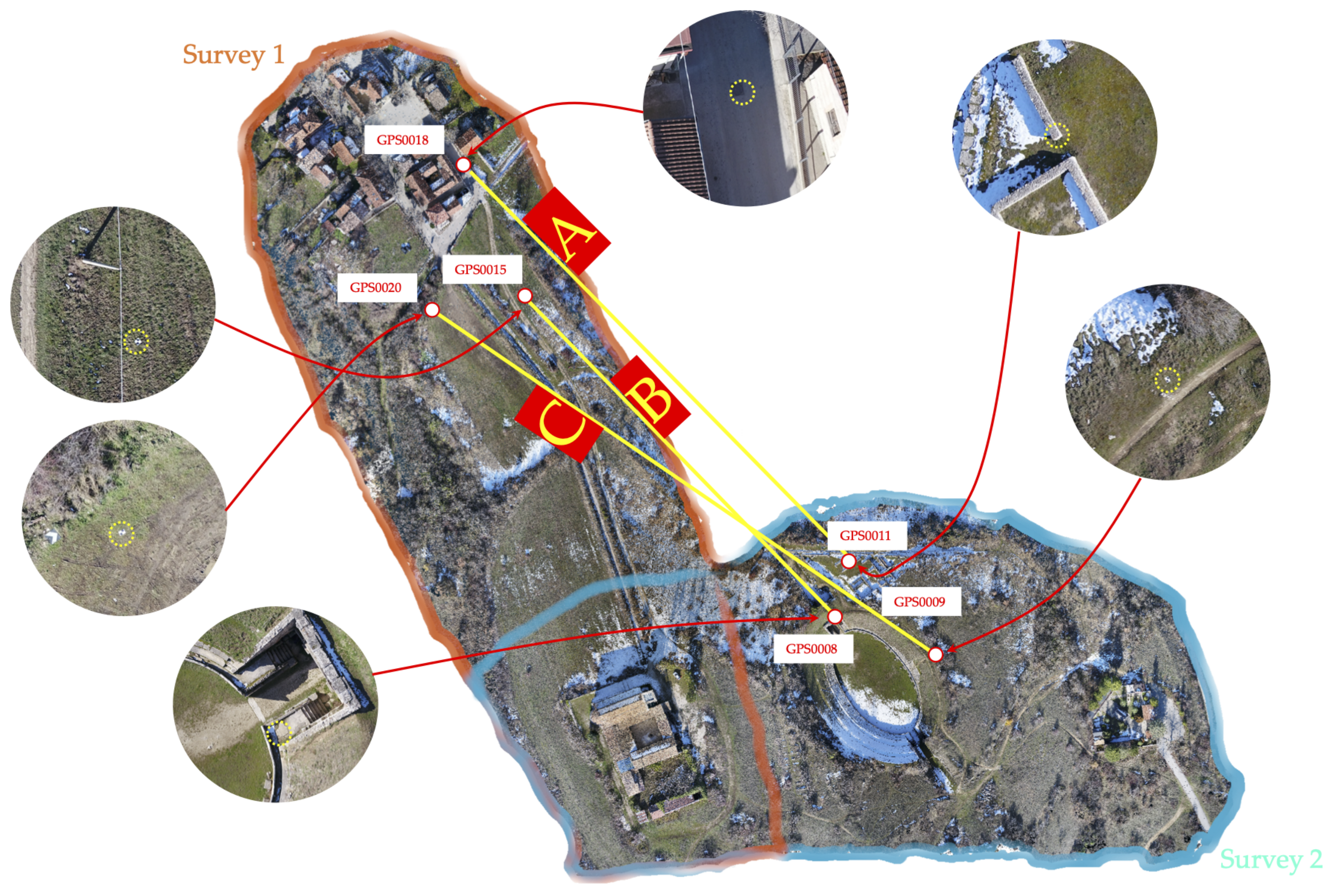

Figure 9. Position of markers adopted for the marker-based alignment.

- , the registration between and , has been performed by the use of natural markers; this is a standard practice in Reverse Engineering applications. Two point clouds can be aligned by recognizing at least three common markers and applying a rigid transformation that brings them into overlap. An exact overlap would require a perfect correspondence between the considered point sets, a hypothesis that is nearly impossible to satisfy in real-world situations, especially for photogrammetry. The overlap between the point sets is therefore generally handled as an optimization problem in which the distance between the identified corresponding points is minimized; the more correspondences are identified, the better the quality of the realignment should be. Here, the “point pair realignment” procedure, implemented by CloudCompare, has been used for the marker-based alignment. The markers have been chosen manually by extracting a set of five markers identifiable in the common area of the two point clouds () (Figure 9). The markers have been selected on the same roofs used as a reference to apply the PARF alignment method, so that the results obtained by the two methodologies are comparable. The alignment has been performed with a Root Mean Square Error of the distance between the markers of 22 cm;

- ; another alignment has been executed by applying an ICP methodology. In this case, the points in the intersection area of Survey 1 and Survey 2 () have been used to apply the ICP. Due to the large number of points in and , a decimation has been performed, resulting in a mean distance between points of 0.03 m. This value is comparable to the estimated location error of the point cloud. The ICP algorithm implemented in CloudCompare has been applied, assuming an RSE of 1 × and excluding the outliers from the optimization. The registration resulting from the ICP yields an RMSE of the distances between the points in the two clouds of 2 cm.
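The marker-based step described in the list above amounts to a least-squares rigid fit between paired points. The following is a minimal sketch of that idea in Python with NumPy, using the SVD-based (Kabsch) solution; it is an illustration under stated assumptions and not CloudCompare's implementation. The function names `rigid_transform_from_pairs` and `marker_rmse` are hypothetical, and any coordinates passed to them would have to come from the picked markers.

```python
import numpy as np


def rigid_transform_from_pairs(moving, fixed):
    """Least-squares rotation R and translation t such that R @ m + t ~ f.

    `moving` and `fixed` are (N, 3) arrays of paired marker coordinates
    (hypothetical inputs); at least three pairs are required.
    """
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    if moving.shape != fixed.shape or moving.ndim != 2 or moving.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of paired points")
    if moving.shape[0] < 3:
        raise ValueError("at least three marker pairs are required")

    # Centre both marker sets on their centroids.
    c_m = moving.mean(axis=0)
    c_f = fixed.mean(axis=0)

    # Cross-covariance of the centred pairs and its SVD.
    H = (moving - c_m).T @ (fixed - c_f)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_f - R @ c_m
    return R, t


def marker_rmse(moving, fixed, R, t):
    """Root mean square distance between transformed and fixed markers."""
    moving = np.asarray(moving, dtype=float)
    fixed = np.asarray(fixed, dtype=float)
    residuals = moving @ R.T + t - fixed
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

With noisy, manually picked markers the residual returned by `marker_rmse` does not vanish; it plays the role of the marker RMSE reported for the marker-based alignment.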

Six GCPs have been selected to compare the accuracy of the registration of , , , and : three belonging to Survey 1 (GPS0015, GPS0018 and GPS0020) and three to Survey 2 (GPS0008, GPS0009 and GPS0011). The Euclidean distance between the pairs of selected markers has been calculated (cf. Figure 10) and compared with the reference distance resulting from the GNSS measurements. Table 8 reports the measured distances.

Figure 10. Measured distances (in yellow) between GCPs for the evaluation of the realignment process.

Table 8. Measured distances (cf. Figure 10) in , and point clouds. The obtained GNSS distance is calculated starting from the GNSS position in NRTK mode with : 0.02–0.03 m.

As expected, the marker method performs worst: the three observed distances show an average error of 0.33 m with respect to the GNSS measurements. The inaccuracies introduced during the picking process explain such a significant error, which is even more pronounced when natural markers are used. Furthermore, because the data set used for the alignment is small, local distortions significantly affect the marker-based technique. On the other hand, the ICP method performs best, with an average error of 0.09 m with respect to the reference distances. The good ICP results can be explained by the size of the sampled data set, which in this case extends to the entire area shared by the two surveys. The residual inaccuracy, however, indicates that local distortions have a significant influence that can only be addressed by using GCPs.

The results of the presented methodology (PARF) are not far from those obtained with the ICP alignment: the three measurements in Table 8 show an average error of 0.12 m. In this case, the sampled data set is significantly smaller than the one used by the ICP. Moreover, an additional source of error should be considered, namely the approximation of the roof patches by planes, which has been quantitatively assessed in Section 2.2.
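The error figures discussed above come from comparing each inter-GCP distance measured in an aligned cloud with its GNSS reference. A minimal Python sketch of that comparison follows. It assumes that the relative error is the absolute error divided by the reference distance; the function names are hypothetical, and any coordinates or reference distances supplied to them are illustrative inputs, not values from Table 8.

```python
from math import dist


def distance_errors(measured_pairs, reference_distances):
    """Return (absolute error, relative error in %) for each GCP pair.

    `measured_pairs` holds ((x, y, z), (x, y, z)) coordinates picked in the
    aligned cloud; `reference_distances` holds the matching GNSS distances.
    """
    if len(measured_pairs) != len(reference_distances):
        raise ValueError("one reference distance is needed per GCP pair")
    results = []
    for (p, q), ref in zip(measured_pairs, reference_distances):
        measured = dist(p, q)          # Euclidean distance in the aligned cloud
        abs_err = abs(measured - ref)  # deviation from the GNSS distance
        results.append((abs_err, 100.0 * abs_err / ref))
    return results


def summarize(results):
    """Average absolute error and maximum relative error (%) over all pairs."""
    abs_errs = [a for a, _ in results]
    rel_errs = [r for _, r in results]
    return sum(abs_errs) / len(abs_errs), max(rel_errs)
```

Calling `summarize(distance_errors(pairs, gnss_distances))` once per aligned cloud would give one (average error, maximum relative error) pair per registration method, which is the form in which the methods are compared here.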

The decimation has introduced an additional source of uncertainty during the post-processing (point-to-point spacing set to 0.03 m). This operation has been necessary to process the point clouds in CloudCompare on the workstation used for the experimentation, equipped with an Intel Xeon E5 and 64 GB of RAM. Considering all the sources of error introduced by the photogrammetric reconstruction and the post-processing operations, the results of the realignment by the proposed methodology look very interesting: the PARF-based method yields a maximum relative error of 0.11%, whereas the marker-based registration yields 0.40%. The proposed methodology did not require any artificial marker; the realignment process depends only on the presence of roof features in the common areas of the surveys. Table 9 shows the realignment times required for the adopted and compared point clouds.

Table 9. Approximated realignment time for the four different methodologies.

4. Conclusions

This paper presents a new methodology for the accurate realignment of large point clouds. The proposed technique is based on a planar approximation of geometric features associated with roofs (PARF); roofs can be recognized in most urban and archaeological environments. A new representation of the ideal flat feature associated with the roofs is proposed, which takes the roofs’ size into account in the observation equation system. The experiments presented in the paper demonstrated the low sensitivity of these features to noise compared with the other analyzed methodologies. For these reasons, with the proposed methodology, roofs can represent a strong reference element for determining the relative realignment of point clouds. In order to evaluate its accuracy, the presented methodology has been compared with two widely used methods, namely the marker-based and the ICP realignment. The results show that the PARF-based method is better than the marker-based one and comparable with the ICP, while being significantly less expensive in computational terms than the latter. The proposed representation of the ideal flat features associated with the roofs allows for an optimization that considers the spatial distribution of the roofs and not simply their spatial position and orientation. A significant advantage of the methodology presented here is that it does not require any element to be introduced in the surveyed area. Therefore, unlike a marker-based realignment, a PARF-based one can be performed even years after the survey. Moreover, this methodology has a low sensitivity to environmental changes. This is not the case for the ICP, where seasonal changes may lead to significant variations in the area (leaves falling from the trees, weeds, etc.), preventing the algorithm from converging. In addition, the ICP requires an excellent initial coarse alignment to converge, unlike the PARF.
The proposed method requires fewer computational resources than the ICP, converges faster, and does not require the decimation of the point cloud, which is itself a time-consuming operation. The presented methodology only requires isolating a tiny part of the point cloud, which makes it more efficient than other feature-based methodologies. Given the promising results obtained by the proposed association and registration methods, further efforts will be spent on automating the only manual step: roof segmentation and recognition.

Author Contributions

Conceptualization, L.D.A., P.D.S. and E.G.; methodology, L.D.A., P.D.S. and E.G.; software, L.D.A. and E.G.; validation, M.A., E.G. and S.Z.; investigation, E.G.; resources, M.A., D.D. and S.Z.; data curation, E.G.; writing—original draft preparation, L.D.A. and E.G.; writing—review and editing, M.A., P.D.S., E.G. and S.Z.; visualization, M.A., E.G. and S.Z.; supervision, P.D.S. and D.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).