Multiscale Object-Based Classification and Feature Extraction along Arctic Coasts

Abstract

1. Introduction

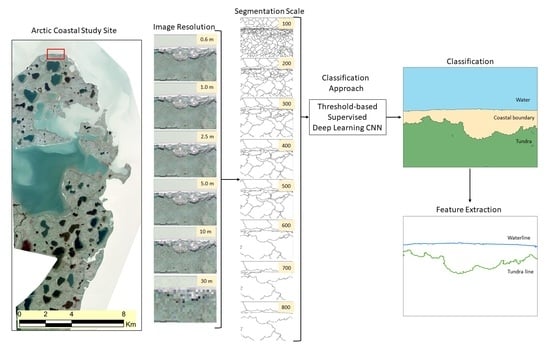

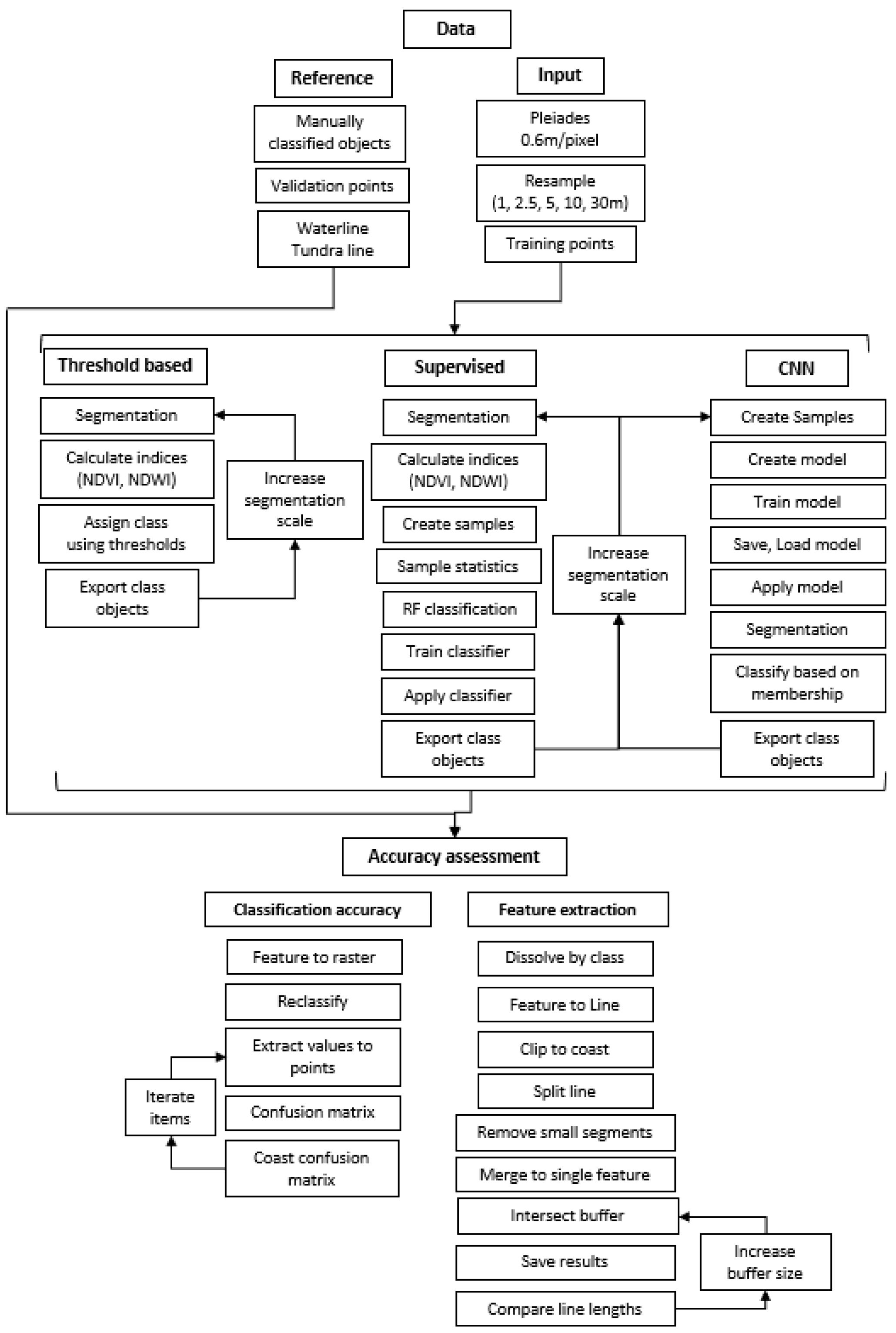

2. Materials and Methods

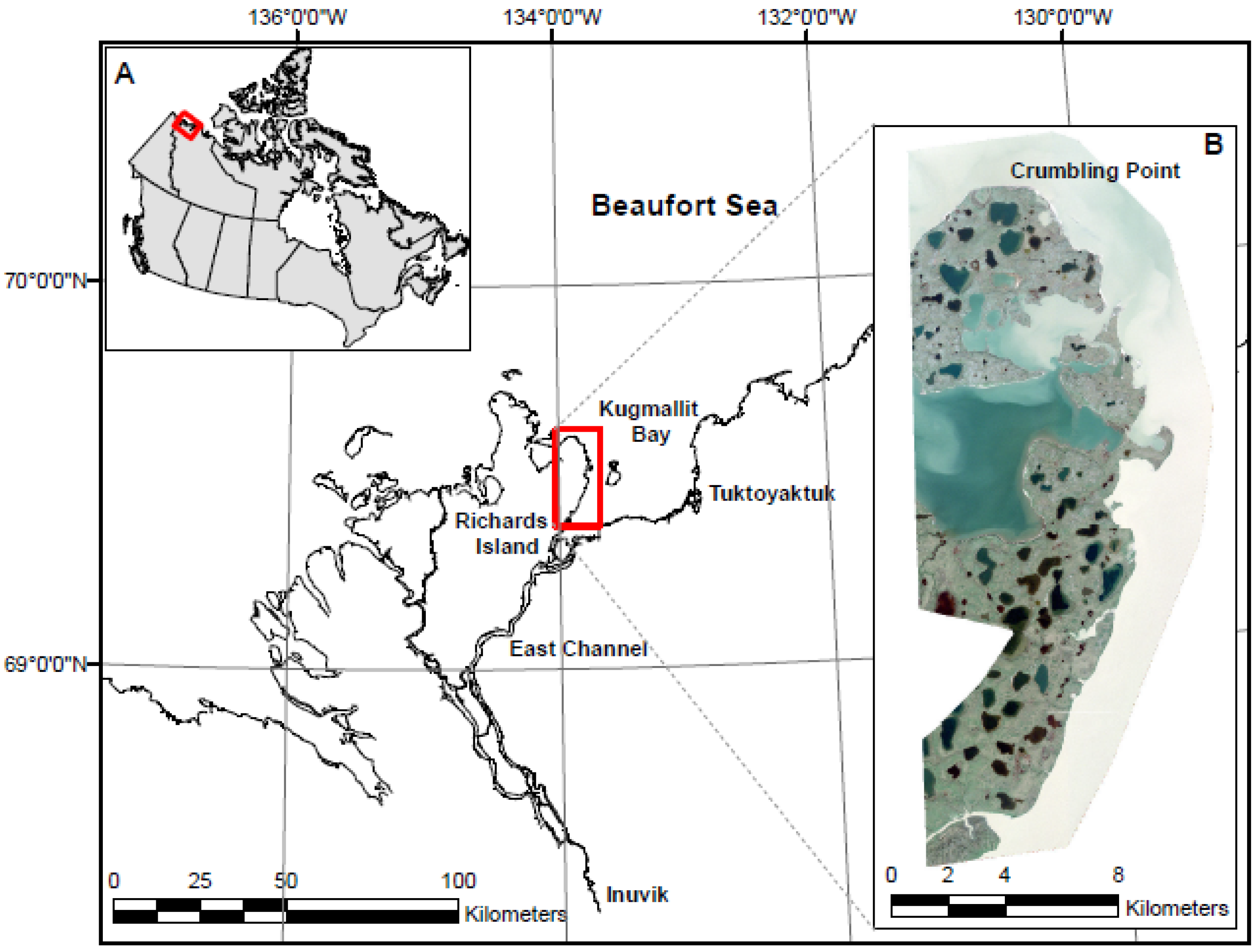

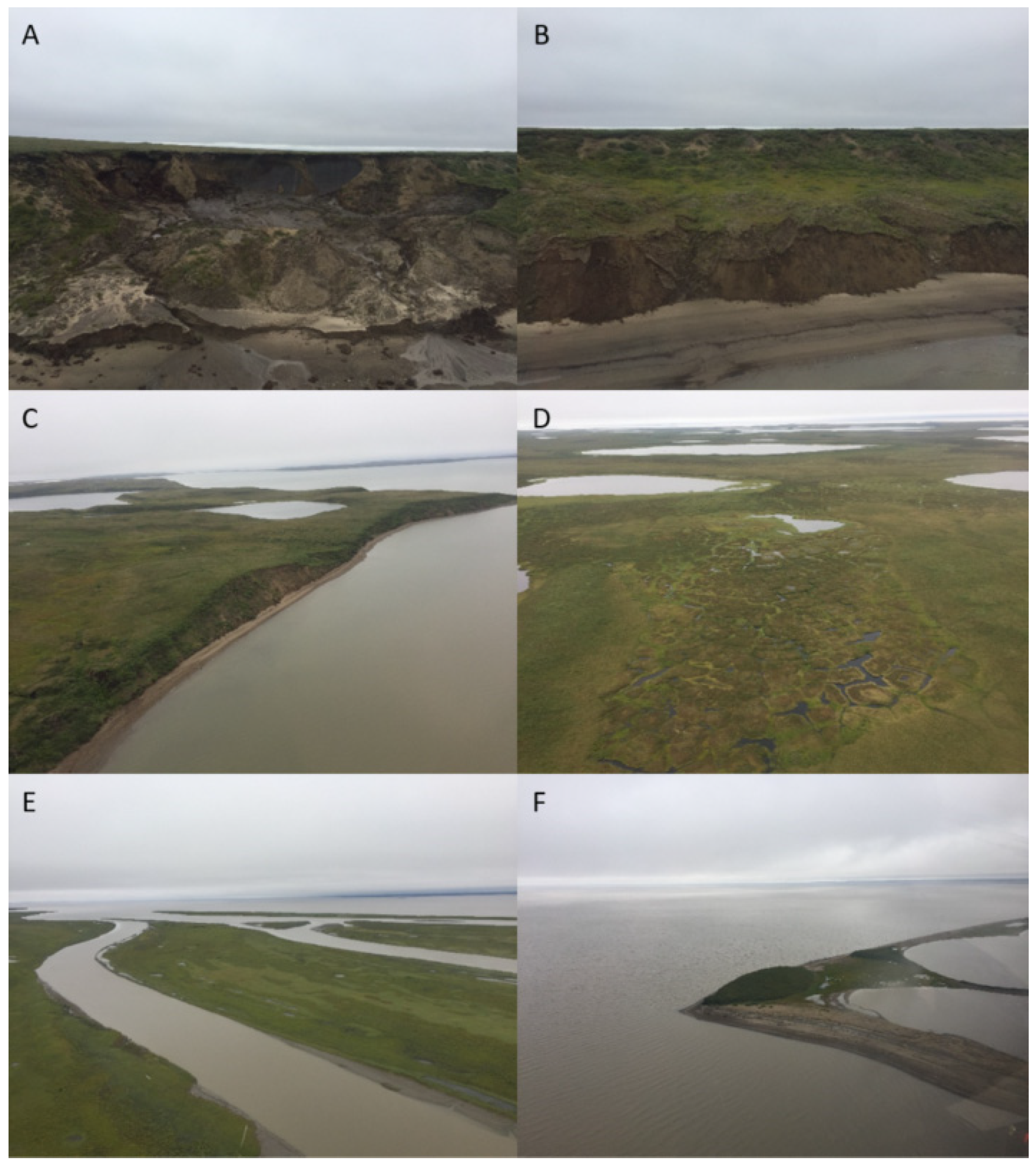

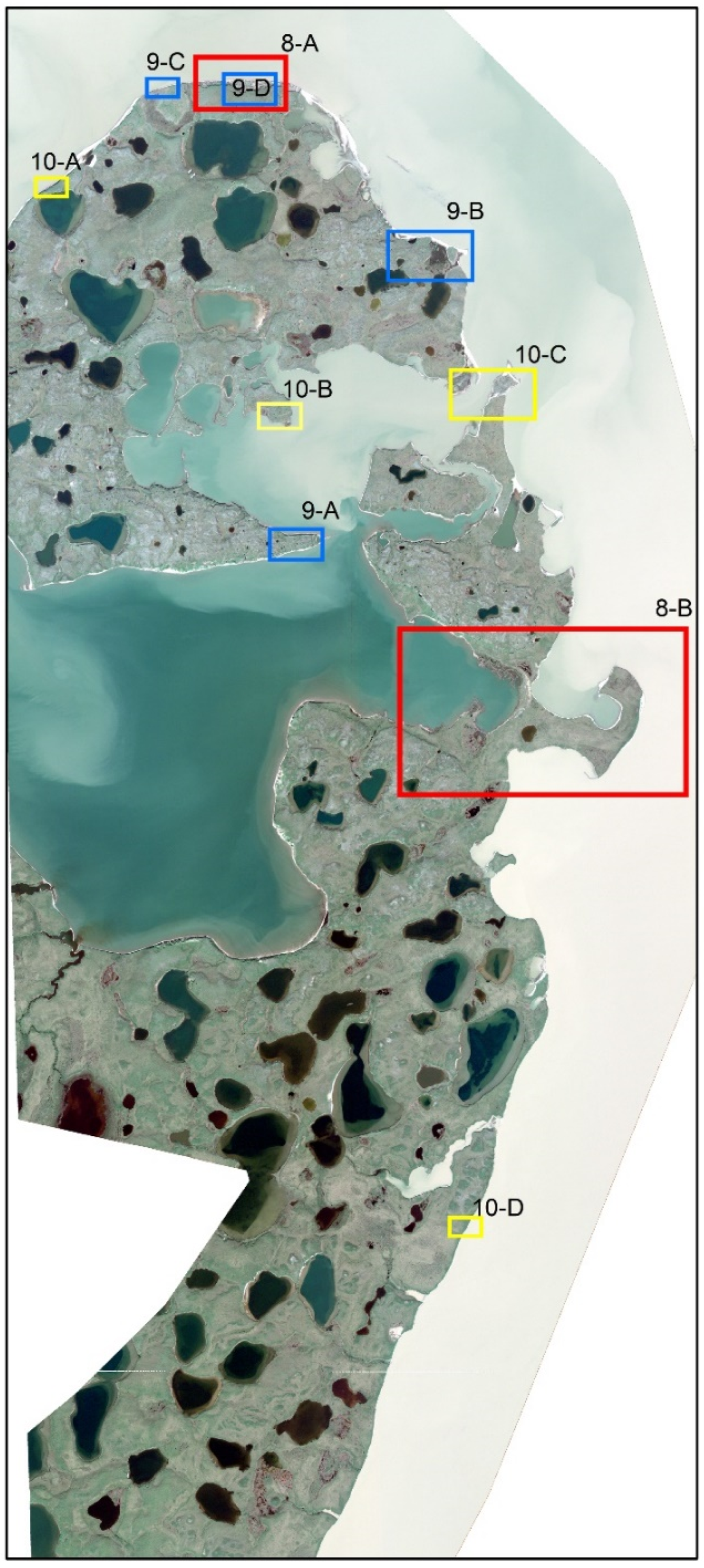

2.1. Study Area

2.2. Source Data

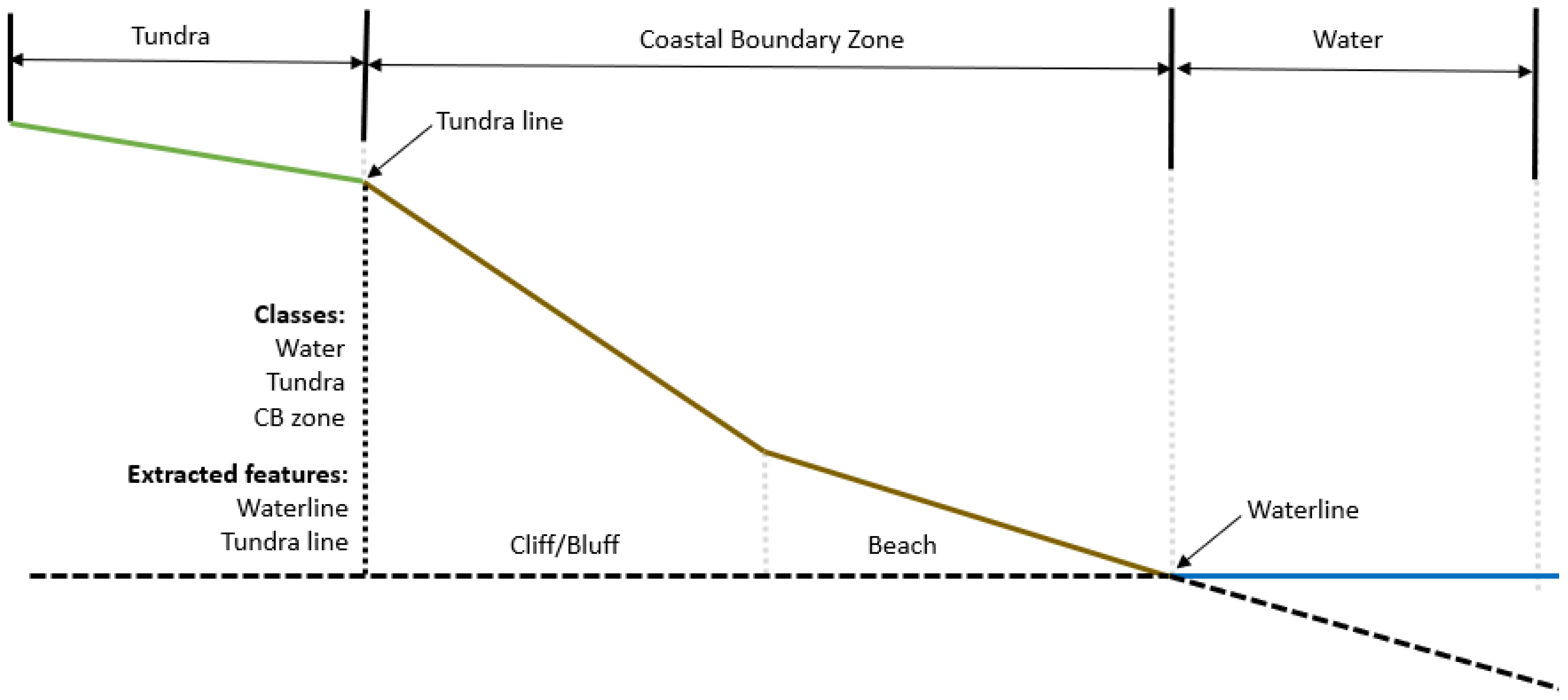

2.3. Classification Approaches

2.3.1. Threshold-Based Classification

2.3.2. Supervised Classification

2.3.3. Deep Learning Classification

2.4. Accuracy Assessment

2.4.1. Reference Datasets

2.4.2. Confusion Matrices
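The accuracy tables report an Average accuracy and a Kappa range for every resolution and extent. Both are conventionally derived from a confusion matrix.

The following is a minimal Python sketch, not the authors' implementation. It assumes rows hold reference classes and columns hold predicted classes, and computes overall accuracy $p_o$ and Cohen's kappa $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_e$ is the chance agreement taken from the row and column totals. The function name `accuracy_and_kappa` and the example counts are hypothetical.

```python
from typing import Sequence


def accuracy_and_kappa(cm: Sequence[Sequence[int]]) -> tuple[float, float]:
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    Assumed layout: cm[i][j] counts samples whose reference class is i and
    whose predicted class is j. Both metrics are unchanged by transposition.
    """
    k = len(cm)
    if k == 0 or any(len(row) != k for row in cm):
        raise ValueError("confusion matrix must be square and non-empty")
    n = sum(sum(row) for row in cm)
    if n == 0:
        raise ValueError("confusion matrix contains no samples")

    # p_o: observed agreement, i.e. overall accuracy (diagonal / total).
    p_o = sum(cm[i][i] for i in range(k)) / n

    # p_e: agreement expected by chance, from the row and column marginals.
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    # Cohen's kappa: agreement beyond chance, scaled by the maximum possible.
    return p_o, (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical two-class counts, NOT data from this study.
    example = [[45, 5],
               [10, 40]]
    oa, kappa = accuracy_and_kappa(example)
    print(f"overall accuracy = {oa:.2f}, kappa = {kappa:.2f}")  # overall accuracy = 0.85, kappa = 0.70
```

Kappa discounts the agreement expected by chance. This is why a row can show high overall accuracy yet a noticeably lower kappa.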

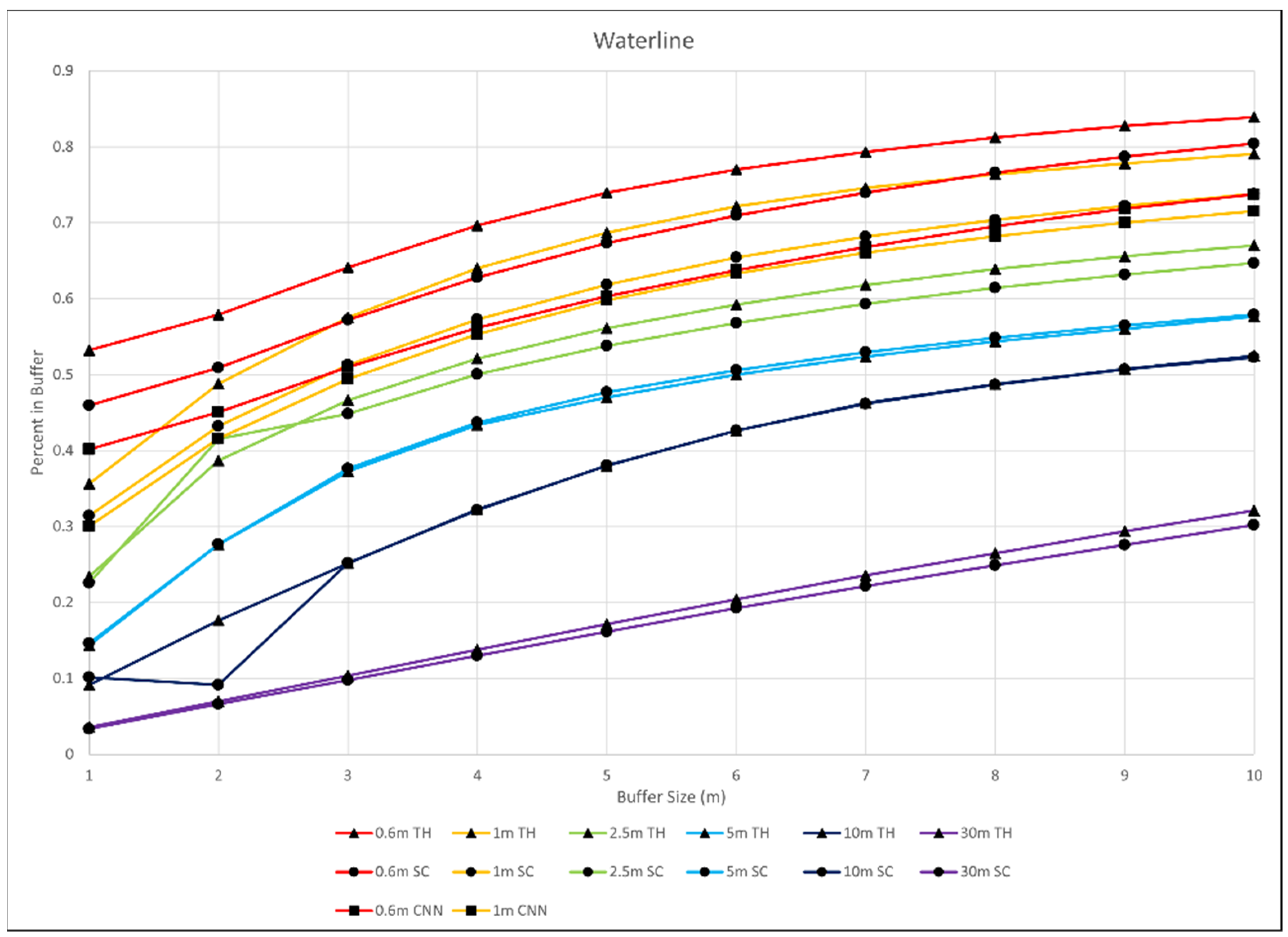

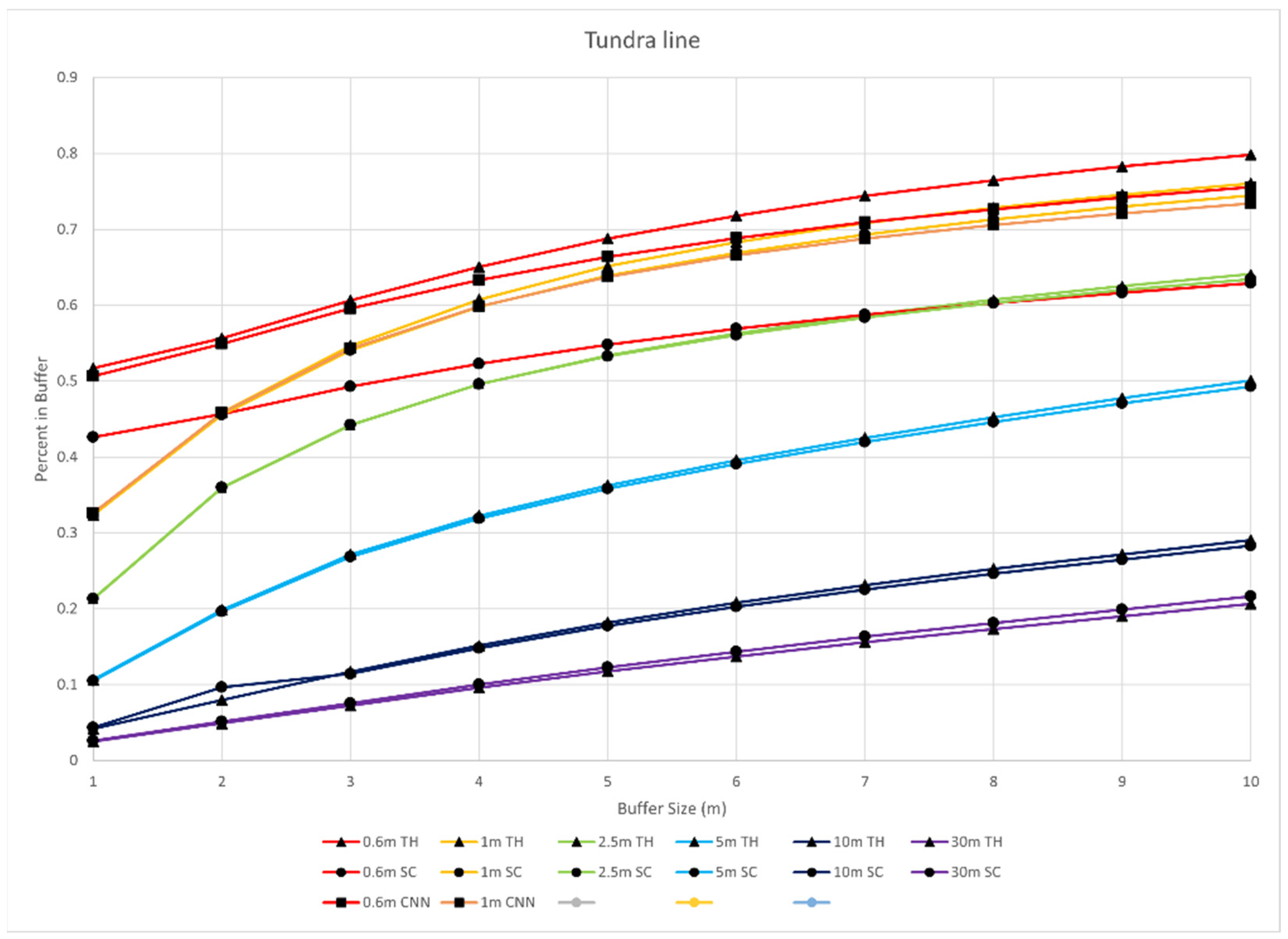

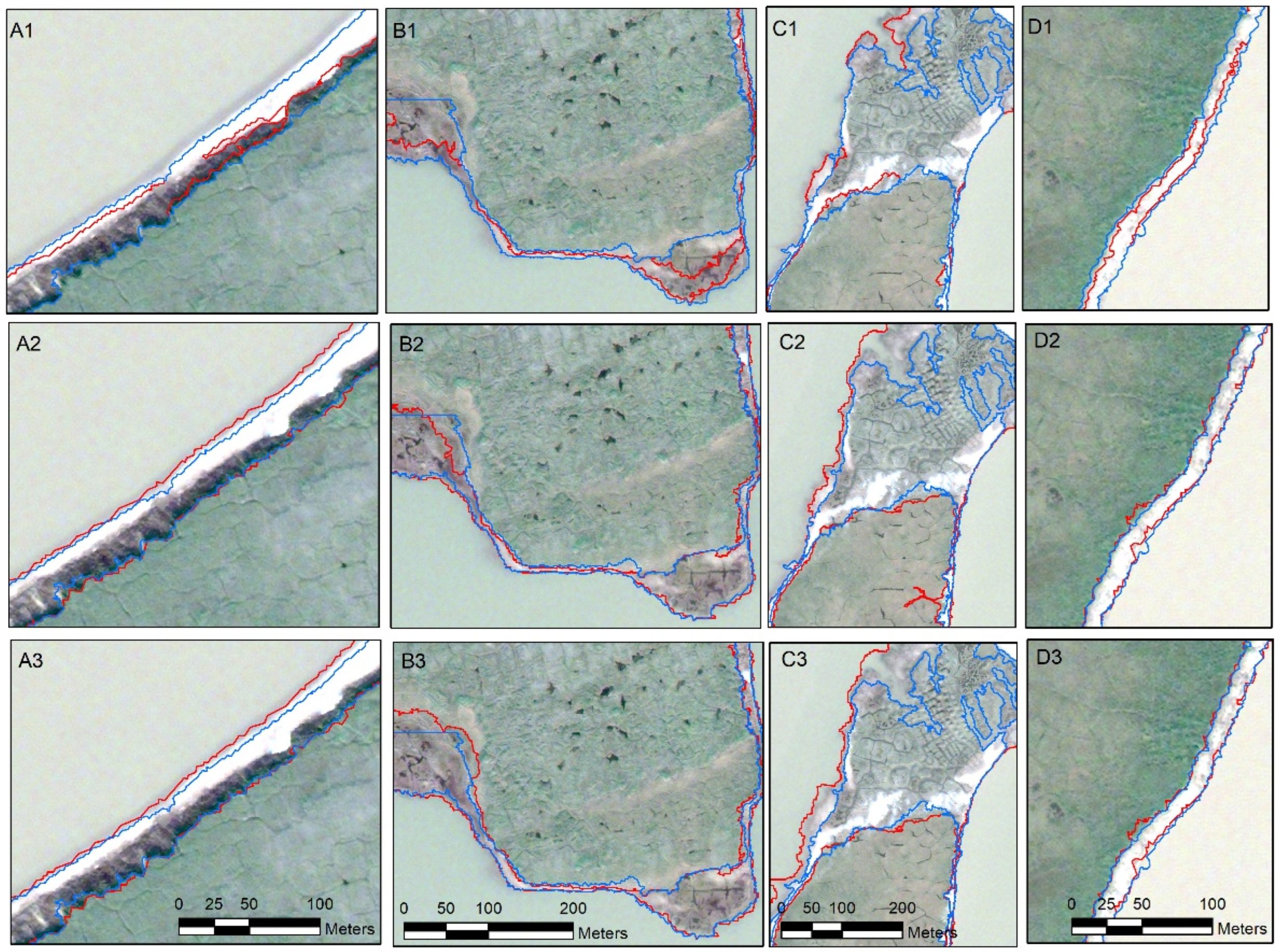

2.4.3. Feature Extraction

3. Results

3.1. Classification Accuracy

3.1.1. Threshold-Based Classification

3.1.2. Supervised Classification

3.1.3. CNN

3.2. Feature Extraction

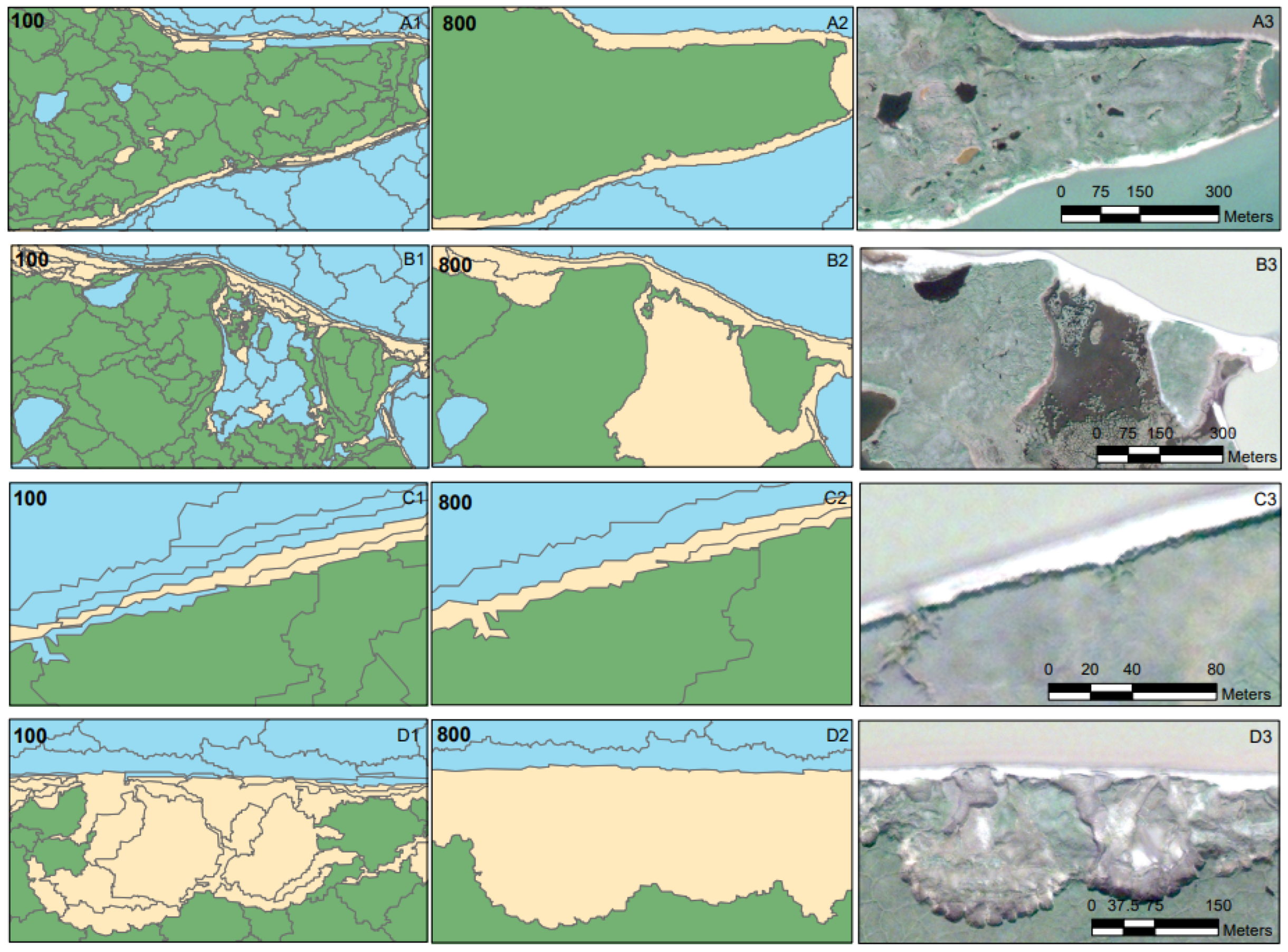

3.2.1. Threshold-Based Classification

3.2.2. Supervised Classification

3.2.3. CNN

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Resolution (m) | Extent | Scale 100 | Scale 200 | Scale 300 | Scale 400 | Scale 500 | Scale 600 | Scale 700 | Scale 800 | Average | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.6 | Entire scene | 83 | 83 | 83 | 84 | 85 | 84 | 84 | 84 | 84 | 0.75–0.78 |
| 0.6 | Coastal subset | 84 | 87 | 87 | 89 | 88 | 89 | 88 | 90 | 88 | 0.76–0.85 |
| 1 | Entire scene | 83 | 86 | 87 | 85 | 84 | 83 | 85 | 85 | 85 | 0.75–0.81 |
| 1 | Coastal subset | 86 | 87 | 89 | 89 | 87 | 87 | 88 | 88 | 88 | 0.79–0.84 |
| 2.5 | Entire scene | 85 | 85 | 86 | 86 | 87 | 86 | 82 | 82 | 85 | 0.73–0.81 |
| 2.5 | Coastal subset | 87 | 87 | 88 | 88 | 87 | 86 | 85 | 85 | 87 | 0.78–0.82 |
| 5 | Entire scene | 85 | 84 | 81 | 78 | 75 | 75 | 75 | 74 | 78 | 0.61–0.78 |
| 5 | Coastal subset | 87 | 88 | 88 | 77 | 76 | 77 | 75 | 75 | 80 | 0.63–0.82 |
| 10 | Entire scene | 81 | 76 | 69 | 67 | 65 | 30 | 21 | 21 | 54 | 0.25–0.71 |
| 10 | Coastal subset | 80 | 75 | 68 | 62 | 60 | 60 | 64 | 64 | 67 | 0.41–0.71 |
| 30 | Entire scene | 69 | 61 | 62 | 63 | 62 | 62 | 62 | 50 | 61 | 0.24–0.54 |
| 30 | Coastal subset | 66 | 63 | 61 | 61 | 56 | 56 | 56 | 41 | 58 | 0.12–0.49 |
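The Average column of the first accuracy table appears to be the arithmetic mean of the eight per-scale values, rounded to a whole number. The values themselves are presumably overall accuracy in percent.

The short Python sketch below assumes that reading. It recomputes the mean for four rows copied from the table and reports the best-scoring segmentation scale parameter and the max–min spread for each row. The helper `summarize` and the choice of rows are illustrative, not from the source.

```python
"""Recompute the Average column for a few rows and find the best scale."""

# Segmentation scale parameters used as column headers in the table.
SCALES = (100, 200, 300, 400, 500, 600, 700, 800)

# Per-scale values copied from the table, keyed by (resolution, extent);
# only four of the twelve rows are included here.
ROWS = {
    ("0.6 m", "Entire scene"): (83, 83, 83, 84, 85, 84, 84, 84),
    ("0.6 m", "Coastal subset"): (84, 87, 87, 89, 88, 89, 88, 90),
    ("10 m", "Entire scene"): (81, 76, 69, 67, 65, 30, 21, 21),
    ("10 m", "Coastal subset"): (80, 75, 68, 62, 60, 60, 64, 64),
}

# The Average values printed in the table for the same rows.
PRINTED_AVERAGE = {
    ("0.6 m", "Entire scene"): 84,
    ("0.6 m", "Coastal subset"): 88,
    ("10 m", "Entire scene"): 54,
    ("10 m", "Coastal subset"): 67,
}


def summarize(accuracies: tuple[int, ...]) -> tuple[float, int, int, int]:
    """Return (mean, best scale, best value, max-min spread) for one row."""
    mean = sum(accuracies) / len(accuracies)
    # max() keeps the first index on ties, i.e. the smallest scale parameter.
    best = max(range(len(accuracies)), key=lambda i: accuracies[i])
    spread = max(accuracies) - min(accuracies)
    return mean, SCALES[best], accuracies[best], spread


if __name__ == "__main__":
    for (res, extent), values in ROWS.items():
        mean, scale, value, spread = summarize(values)
        print(f"{res:>6} {extent:<15} mean={mean:6.3f} "
              f"(printed {PRINTED_AVERAGE[(res, extent)]}) "
              f"best scale={scale} ({value}) spread={spread}")
```

Under this reading, the 0.6 m entire-scene row varies by only 2 points across scale parameters. The 10 m entire-scene row spans 60 points (81 down to 21), so coarser imagery appears far more sensitive to the choice of segmentation scale.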

| Resolution (m) | Extent | Scale 100 | Scale 200 | Scale 300 | Scale 400 | Scale 500 | Scale 600 | Scale 700 | Scale 800 | Average | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.6 | Entire scene | 62 | 77 | 81 | 90 | 76 | 88 | 88 | 86 | 81 | 0.43–0.83 |
| 0.6 | Coastal subset | 69 | 85 | 81 | 90 | 76 | 90 | 89 | 88 | 84 | 0.54–0.85 |
| 1 | Entire scene | 91 | 90 | 88 | 88 | 85 | 94 | 86 | 85 | 88 | 0.77–0.86 |
| 1 | Coastal subset | 90 | 90 | 90 | 90 | 86 | 87 | 87 | 87 | 88 | 0.8–0.86 |
| 2.5 | Entire scene | 90 | 88 | 88 | 87 | 88 | 89 | 85 | 82 | 87 | 0.73–0.84 |
| 2.5 | Coastal subset | 91 | 87 | 87 | 86 | 86 | 86 | 84 | 83 | 86 | 0.77–0.87 |
| 5 | Entire scene | 85 | 84 | 84 | 80 | 79 | 76 | 75 | 77 | 80 | 0.62–0.78 |
| 5 | Coastal subset | 86 | 87 | 85 | 79 | 78 | 77 | 75 | 75 | 80 | 0.62–0.81 |
| 10 | Entire scene | 80 | 78 | 74 | 70 | 68 | 62 | 63 | 63 | 70 | 0.43–0.7 |
| 10 | Coastal subset | 81 | 77 | 71 | 65 | 63 | 60 | 64 | 64 | 68 | 0.4–0.72 |
| 30 | Entire scene | 71 | 61 | 62 | 54 | 62 | 62 | 62 | 50 | 61 | 0.24–0.56 |
| 30 | Coastal subset | 66 | 63 | 61 | 53 | 56 | 56 | 56 | 41 | 57 | 0.12–0.5 |

| Resolution (m) | Extent | Scale 100 | Scale 200 | Scale 300 | Scale 400 | Scale 500 | Scale 600 | Scale 700 | Scale 800 | Average | Kappa |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.6 | Entire scene | 95 | 94 | 95 | 94 | 92 | 92 | 93 | 93 | 93 | 0.88–0.93 |
| 0.6 | Coastal subset | 91 | 91 | 90 | 91 | 91 | 91 | 90 | 89 | 91 | 0.84–0.87 |
| 1 | Entire scene | 95 | 93 | 93 | 91 | 89 | 88 | 88 | 88 | 91 | 0.82–0.92 |
| 1 | Coastal subset | 88 | 88 | 89 | 89 | 88 | 88 | 88 | 88 | 88 | 0.82–0.83 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Clark, A.; Moorman, B.; Whalen, D.; Vieira, G. Multiscale Object-Based Classification and Feature Extraction along Arctic Coasts. Remote Sens. 2022, 14, 2982. https://doi.org/10.3390/rs14132982
