1. Introduction

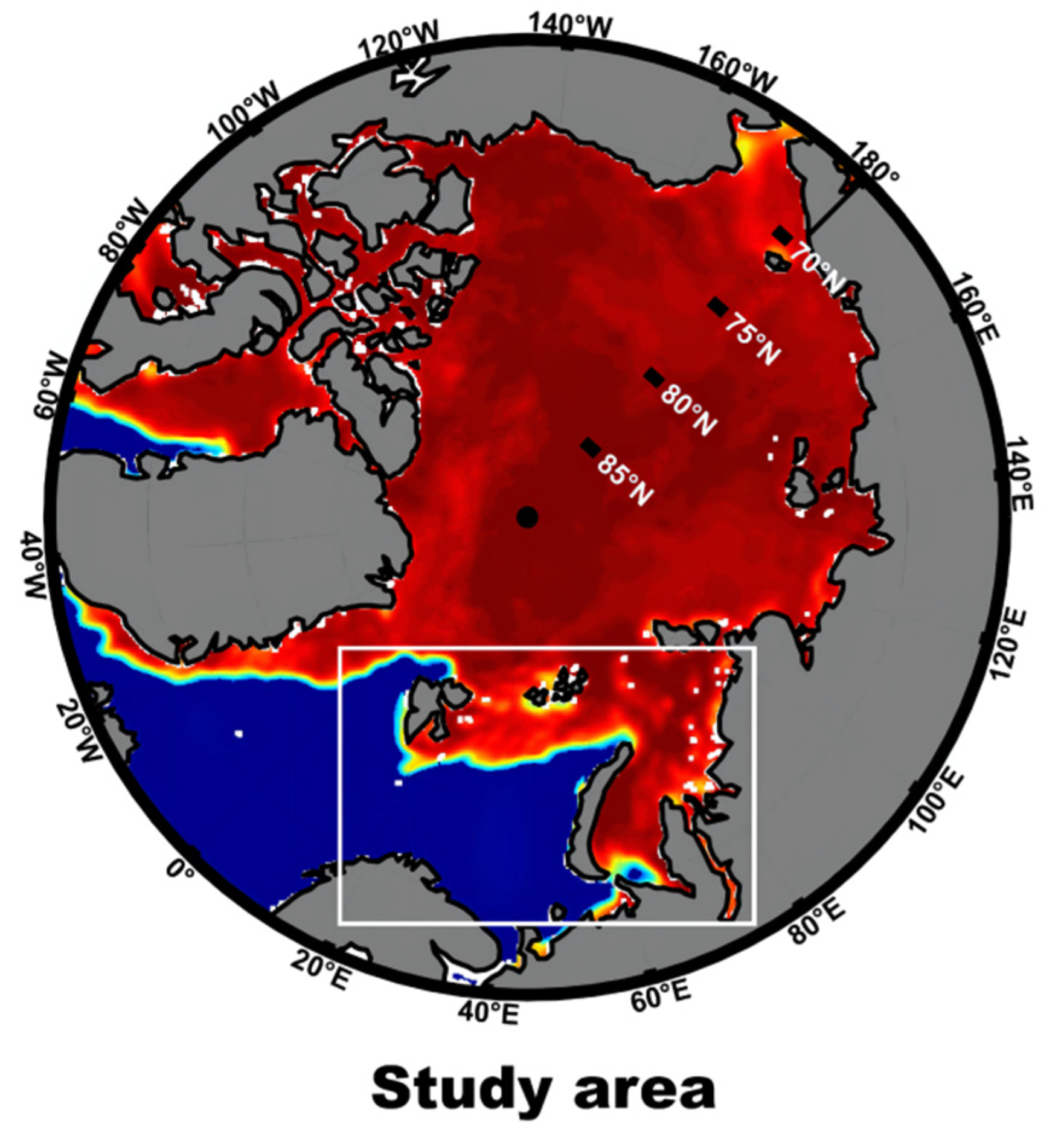

In recent decades, global warming has become a common concern for many countries and for the entire scientific community. Rising atmospheric temperatures have accelerated the melting of Arctic sea ice. At the same time, Arctic sea ice plays an important role in the energy and water balance of the global climate system, which is reflected in the positive feedback by which Arctic sea ice melting exacerbates global warming [1,2]. The Arctic region includes the entire Arctic Ocean and parts of Greenland (Danish territory), Canada, Alaska, Russia, Norway, Sweden, Finland, and Iceland. The Arctic region is surrounded by transportation routes and industrial bases of many countries, and high pollution and human-induced damage have introduced uncontrollable factors into the evolution of Arctic sea ice [3]. Currently, the severe melting of Arctic sea ice directly affects human production and life, but it also creates unprecedented opportunities to develop Arctic resources [4]. In Arctic areas with a low sea ice concentration, the navigation season of the waterways is effectively extended, which benefits scientific exploration and commercial development. To date, the dynamic evolution of Arctic sea ice is still not completely understood (especially on time scales of around 30 days), which exposes polar researchers and ships to great risk from extreme Arctic weather and sea ice (or ice floes). Therefore, further research and development of sea ice forecasting (especially mid- and long-term forecasting) is required in the Arctic region, which can not only provide navigation security for the development of Arctic resources, but also provide valuable assistance for Arctic climate change research [5,6].

Sea ice concentration (SIC) is the proportion of a given ocean area that is covered by sea ice; it reflects the spatial density of sea ice and is one of the important parameters used to characterize it [7]. Recently, many countries have developed operational Arctic sea ice forecasting systems based on numerical models. For example, the US Navy’s short-term sea ice forecast system (Arctic Cap Now-cast/Forecast System, ACNFS) and Canada’s Global Ice Ocean Prediction System (GIOPS) combine sea ice models with operational oceanographic and meteorological models, and assimilate information such as temperature, salinity, and ocean currents into the background field [8,9]. ACNFS can achieve a forecast validity of seven days [10,11]. GIOPS can provide daily sea ice condition analysis products and 10-day sea ice numerical forecast products. Additionally, Towards an Operational Prediction System for the North Atlantic European Coastal Zones (TOPAZ), of the Nansen Environmental and Remote Sensing Center of Norway, is a real-time ocean/sea ice prediction system covering the North Atlantic and Arctic Oceans that can provide nearly 10 days’ worth of sea ice and ocean forecast results [12]. The Arctic Sea Ice Numerical Forecast System established by the National Marine Environmental Forecasting Center of China can forecast sea ice for the entire Arctic region for the next five days [13]. From the above, it can be observed that these numerical models have a prediction duration of less than two weeks for SIC in the Arctic. Meanwhile, they involve a large number of idealized assumptions and require complex computational platforms, so they cannot meet the demand for longer forecast durations and faster forecasts in operational applications.

Compared with numerical model prediction, artificial intelligence methods are lightweight and simple. With the rapid development of artificial intelligence algorithms, more and more advanced model architectures have been built to adapt to different data structures. Many machine learning models, such as support vector machines (SVMs) [14], wavelet neural networks (WNNs) [15,16], and the long short-term memory network (LSTM) [17], have been successfully applied to the field of oceanic intelligence prediction. A spatio-temporal deep learning scheme based on convolutional neural networks (CNNs) has performed well in studying the sea surface temperature (SST) associated with tropical instability waves, realizing artificial intelligence prediction driven by satellite remote sensing big data [18]. In addition to single prediction models, hybrid models also have good prediction performance. For example, an LSTM-AdaBoost ensemble learning model for predicting sea surface temperature [19] and a hybrid prediction model based on the combination of multivariate statistical analysis and a deep learning model provided new ideas for the mid- and long-term forecasting of ocean variables [20,21,22]. The development of oceanic intelligence forecasting has driven the rapid development of deep learning applications in the field of sea ice forecasting [23,24]. The average error of the single-month SIC prediction based on the LSTM model was less than 0.09, but its error was greater in the melting season (July–September) with an RMSE of 0.1109; nevertheless, the above results were significantly better than the traditional statistical forecasts [25]. Wang, Scott, and Clausi [26] used CNNs to forecast the SIC, and the results show that their model outperforms the multilayer perceptron (MLP) model with an RMSE of 0.22. Choi et al. [27] used a gated recurrent unit (GRU) to provide 15-day SIC predictions, which outperformed the LSTM model. Kim et al. [28] built a monthly SIC forecast model based on a CNN and showed that the CNN model outperformed the persistence model with an RMSE of 0.0576. Liu et al. [29] used a convolutional LSTM (ConvLSTM) to predict the daily Arctic SIC, and the RMSE of the ConvLSTM remained around 0.15 when the forecast horizon was 10 days, while the RMSE of the conventional CNN reached around 0.18.

However, the above studies show that current intelligent SIC forecasts mostly use a single scale and have a short forecast window. None of the aforementioned prediction models have achieved satisfactory results in SIC prediction experiments beyond 30 days. Take the ConvLSTM model as an example: it has both an image feature extraction capability (as a CNN-based model) and a time-series feature extraction capability (as an RNN-based model). The ConvLSTM model greatly simplifies data preprocessing and addresses specific requirements of spatio-temporal deep learning, but it still has limitations in long-term spatio-temporal prediction. As the prediction horizon increases, the ConvLSTM model takes longer to train and, at the same time, its prediction results may become worse. This is because the I/O (input/output) data structure of the ConvLSTM model is three-dimensional spatio-temporal data, which increases the uncertainty of long-term prediction. It is worth mentioning that, in operational forecasting, complex deep learning models (e.g., ConvLSTM) are extremely time-consuming without the support of a high-performance computing platform. Even the training process of the model (including data processing, hyper-parameter tuning, and model correction) takes much longer than some traditional numerical model predictions. In short, simple models are severely limited in mid- and long-term prediction research, but a complex model structure does not necessarily produce excellent prediction results either. Therefore, a more accurate and simpler SIC prediction model is needed, especially for SIC prediction with lead times of more than 30 days.

Considering the above, we develop a hybrid deep learning prediction model for the Arctic SIC based on the empirical orthogonal function (EOF) analysis, LSTM, and DNN, which is called the EOF–LSTM–DNN (ELD) prediction model in this study. By using EOF analysis, the spatio-temporal prediction problem of the SIC field can be transformed into a time-series prediction problem; then, we use the classical encoder–decoder architecture to process these time series. In this model, the LSTM neural network is used to encode the time series, and a fully connected DNN is used to decode the time series. Based on the above model, we can achieve an SIC prediction with a forecast window of 100 days in a rolling forecast method.

The rest of the paper is organized as follows: Section 2 describes the dataset used in this study. Section 3 introduces the theoretical frameworks of EOF, LSTM, and DNN. In Section 4, the sea ice concentration forecast experiments using the EOF–LSTM–DNN (ELD) model in the Arctic are presented in detail. Then, some discussions and future research suggestions are given in Section 5. Finally, in Section 6, we draw conclusions.

3. Methodology

The mid- and long-term evolution of SIC is a spatio-temporal variation process. If we treat the evolution of SIC purely as a time-series problem, its spatial evolution characteristics are often lost because the spatial correlation is not considered. Similarly, if we only focus on the changes in spatial structure without considering the effects of temporal changes, predictions over a longer time scale become impossible. Therefore, both the spatial and temporal evolution of SIC must be considered to achieve better predictions.

As a classic tool for spatio-temporal data mining and dimensional compression in the field of geosciences, EOF analysis can effectively extract the spatial features and temporal variations of research objects. As an enhanced version of the traditional recurrent neural network (RNN) model, LSTM can extract features from time series. In addition, a DNN with a multilayer structure can decode the features extracted by LSTM to obtain the final result of the SIC prediction. The combination of these three techniques provides a reasonable method for the analysis and prediction of spatio-temporal fields. Therefore, we propose an EOF–LSTM–DNN model for predicting the SIC, which is referred to as the ELD model in this study.

3.1. The Architecture of the ELD Model

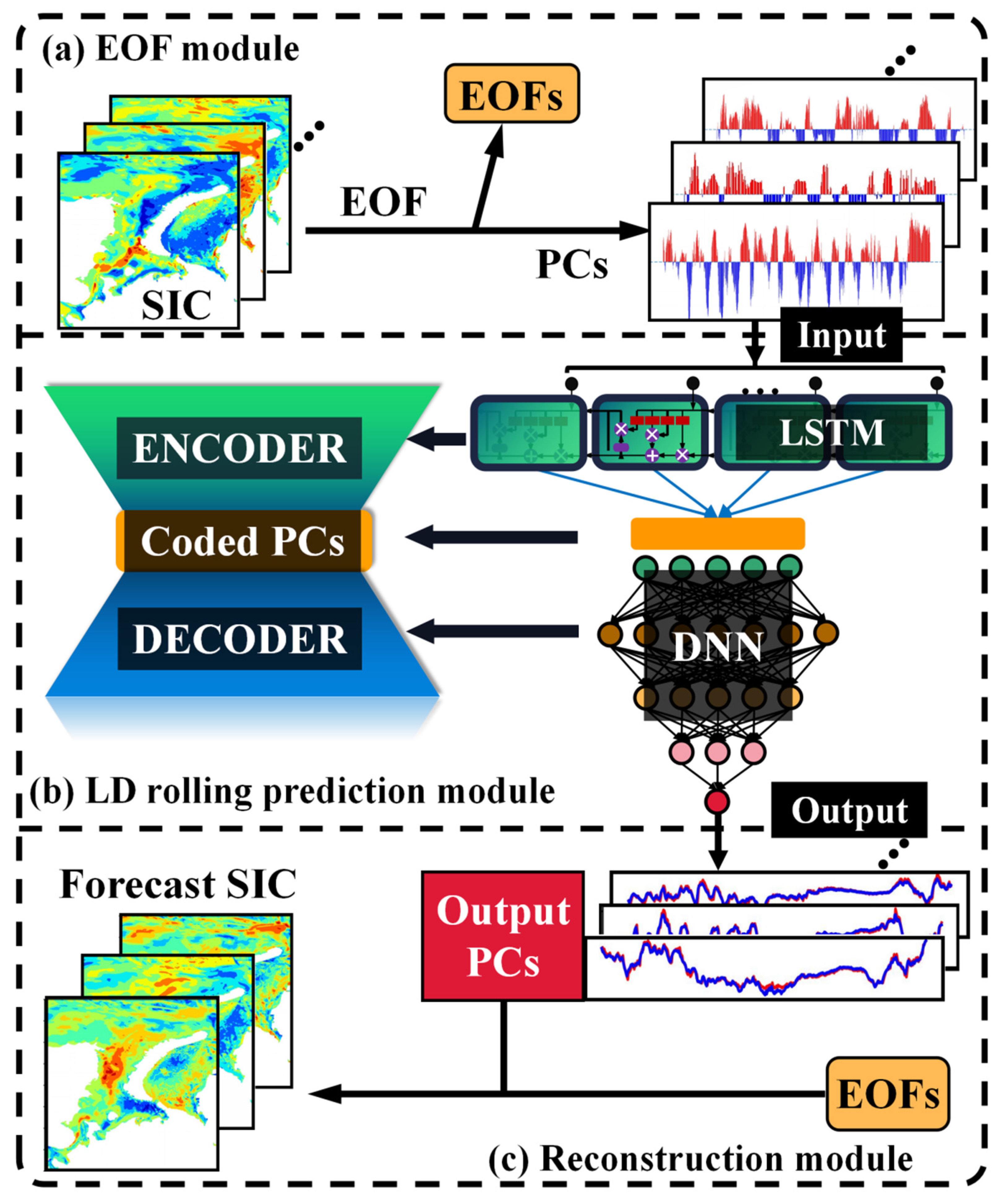

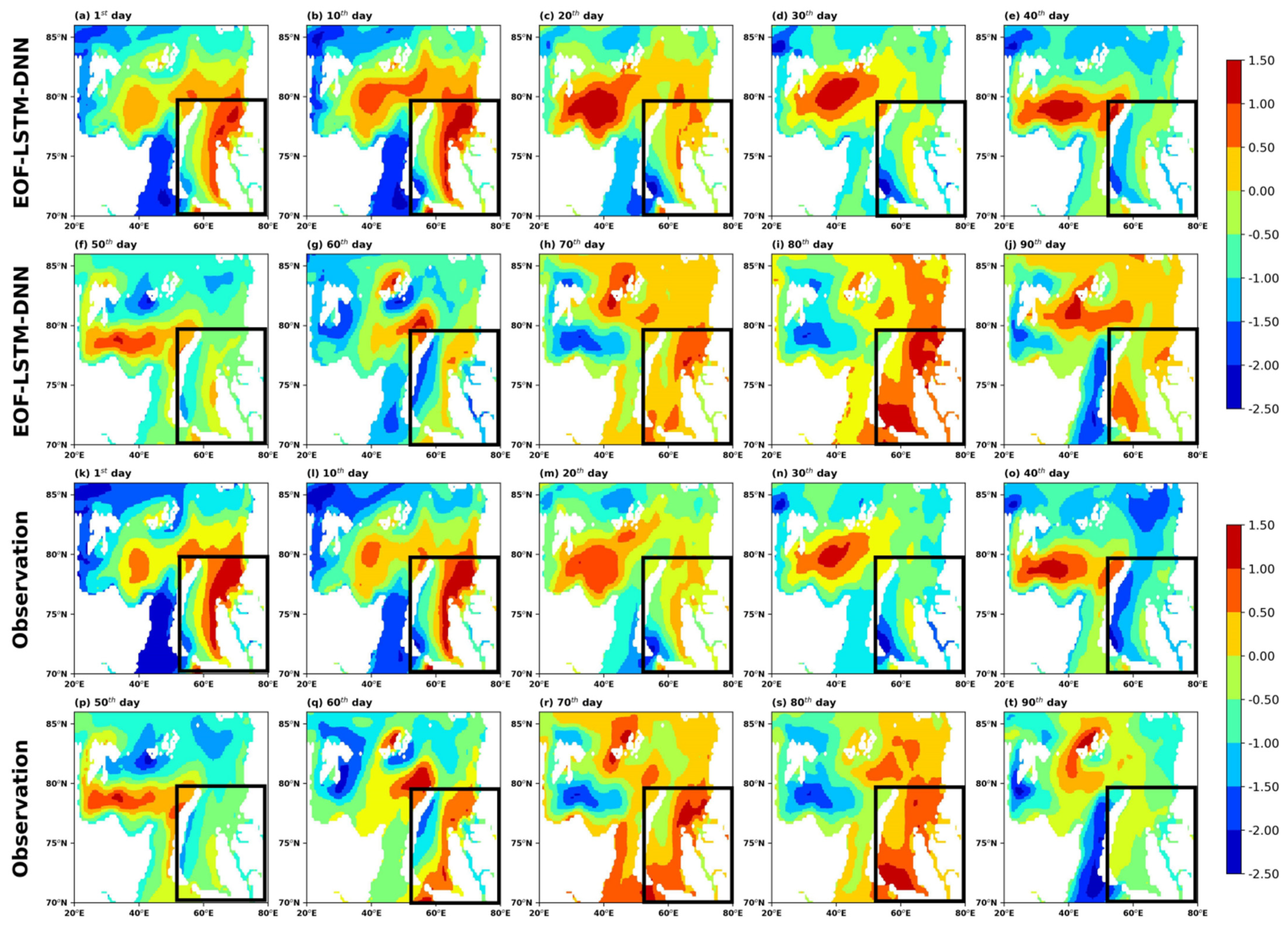

The ELD model is composed of three parts, namely, the EOF module, the LSTM–DNN (LD) rolling prediction module, and the reconstruction module, as shown in Figure 2. The functions of each module are described as follows:

First, in the EOF module, the original SIC satellite remote sensing dataset was divided into training and validation datasets. The training dataset was decomposed into orthogonal spatial modes (EOFs) and corresponding principal components (PCs) by EOF analysis; then, the PCs of the validation dataset were obtained by projecting the validation dataset onto the EOFs obtained above. The details and related algorithm of the EOF decomposition are described in Section 3.2.

Next, in the LD rolling prediction module, an LSTM neural network was used to encode the input PCs, and then a multilayer deep neural network (DNN) was used to decode the encoded PCs to derive the single-step prediction for the next time step. After the single-step prediction was completed, the model integrated the single-step prediction result with the original input to obtain a new input, and used the new input for the next prediction. These steps were repeated several times to produce the final mid- and long-term prediction results for the PCs. Detailed information about the LSTM–DNN network is given in Section 3.3.
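The rolling procedure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `rolling_forecast`, the fixed-length sliding window (dropping the oldest step when the new prediction is appended), the 30-day window length, and the stand-in one-step predictor are all assumptions made for the example.

```python
import numpy as np

def rolling_forecast(history, predict_one_step, n_steps):
    """Iterate a one-step predictor to obtain an n_steps-long forecast.

    history:          (window, k) array holding the most recent PC vectors.
    predict_one_step: callable mapping a (window, k) array to a (k,) array.
    Assumption: the new input is built by dropping the oldest step and
    appending the latest prediction (fixed-length sliding window).
    """
    window = np.array(history, dtype=float)
    preds = []
    for _ in range(n_steps):
        nxt = np.asarray(predict_one_step(window), dtype=float)
        preds.append(nxt)
        # Merge the prediction into the input used for the next step.
        window = np.vstack([window[1:], nxt])
    return np.stack(preds)

# Stand-in one-step model (NOT the trained LSTM-DNN): repeat the latest PCs.
def persistence_step(window):
    return window[-1]

history = np.zeros((30, 15))  # hypothetical: 30 past days of 15 PCs
pc_forecast = rolling_forecast(history, persistence_step, n_steps=100)
print(pc_forecast.shape)  # (100, 15)
```

Because every step consumes earlier predictions, any one-step error is fed back into later inputs, which is why forecast error tends to grow with lead time in a rolling scheme.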

Finally, in the reconstruction module, the SIC prediction field is reconstructed by combining the PCs output by the LD rolling prediction module with the EOFs obtained in the EOF module.
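As a rough sketch of the reconstruction step, the predicted field is the product of the retained spatial modes and the predicted PCs. The function name and array shapes below are illustrative. Adding the climatology back and clipping the result to the physical range [0, 1] are assumptions of this sketch, not steps stated in the text.

```python
import numpy as np

def reconstruct_sic(eofs, pcs_pred, clim):
    """Rebuild SIC fields from spatial modes and predicted PCs.

    eofs:     (m, k) retained spatial modes (columns).
    pcs_pred: (k, t) predicted principal components for t forecast days.
    clim:     (m, 1) climatology removed before the EOF analysis.
    Returns an (m, t) array of SIC values.
    """
    anomaly = eofs @ pcs_pred          # back-project PCs to grid space
    field = anomaly + clim             # assumption: add climatology back
    return np.clip(field, 0.0, 1.0)    # assumption: SIC is a fraction in [0, 1]
```

Each column of the returned array is one forecast day and can be reshaped to the original grid layout.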

3.2. Empirical Orthogonal Function (EOF) Analysis

EOF decomposition was proposed by Pearson and introduced into the analysis of meteorological problems by Lorenz, and it is commonly used to analyze variables [30,31]. It is often used to analyze the characteristics of the temporal and spatial distributions of a field and to separate the temporal and spatial characteristics of the variable field. Thus, the main information of the variable field can be represented by a few typical eigenvectors.

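Before conducting EOF analysis, we need to remove the climatology to obtain the anomaly matrix $X$:

$$X = \begin{bmatrix} x_{11} & x_{12} & \cdots & x_{1n} \\ x_{21} & x_{22} & \cdots & x_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ x_{m1} & x_{m2} & \cdots & x_{mn} \end{bmatrix}$$

In the above matrix, $m$ is the number of space observation points, $n$ is the length of the time series, and $x_{ij}$ represents the observation value of the $i$-th spatial point on the $j$-th day. Then, the covariance matrix $C$ of matrix $X$ can be calculated as:

$$C = \frac{1}{n} X X^{T}$$

The eigenvalues $(\lambda_1, \ldots, \lambda_m)$ and eigenvectors $V$ of $C$ can be expressed as follows:

$$C V = V \Lambda, \qquad \Lambda = \mathrm{diag}(\lambda_1, \ldots, \lambda_m)$$

where $\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_m \geq 0$ are arranged in descending order. Each non-zero eigenvalue corresponds to a column of eigenvectors, also referred to as the spatial pattern. For example, the eigenvector corresponding to $\lambda_1$ is called the first spatial pattern (i.e., the first column of $V$) and so on. The spatial patterns are projected onto the matrix $X$ to obtain the PCs corresponding to the eigenvectors:

$$PC = V^{T} X$$

Each row of $PC$ contains the PCs of the corresponding column of eigenvectors. The PC of the first spatial pattern corresponds to the first row of $PC$, and so on.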

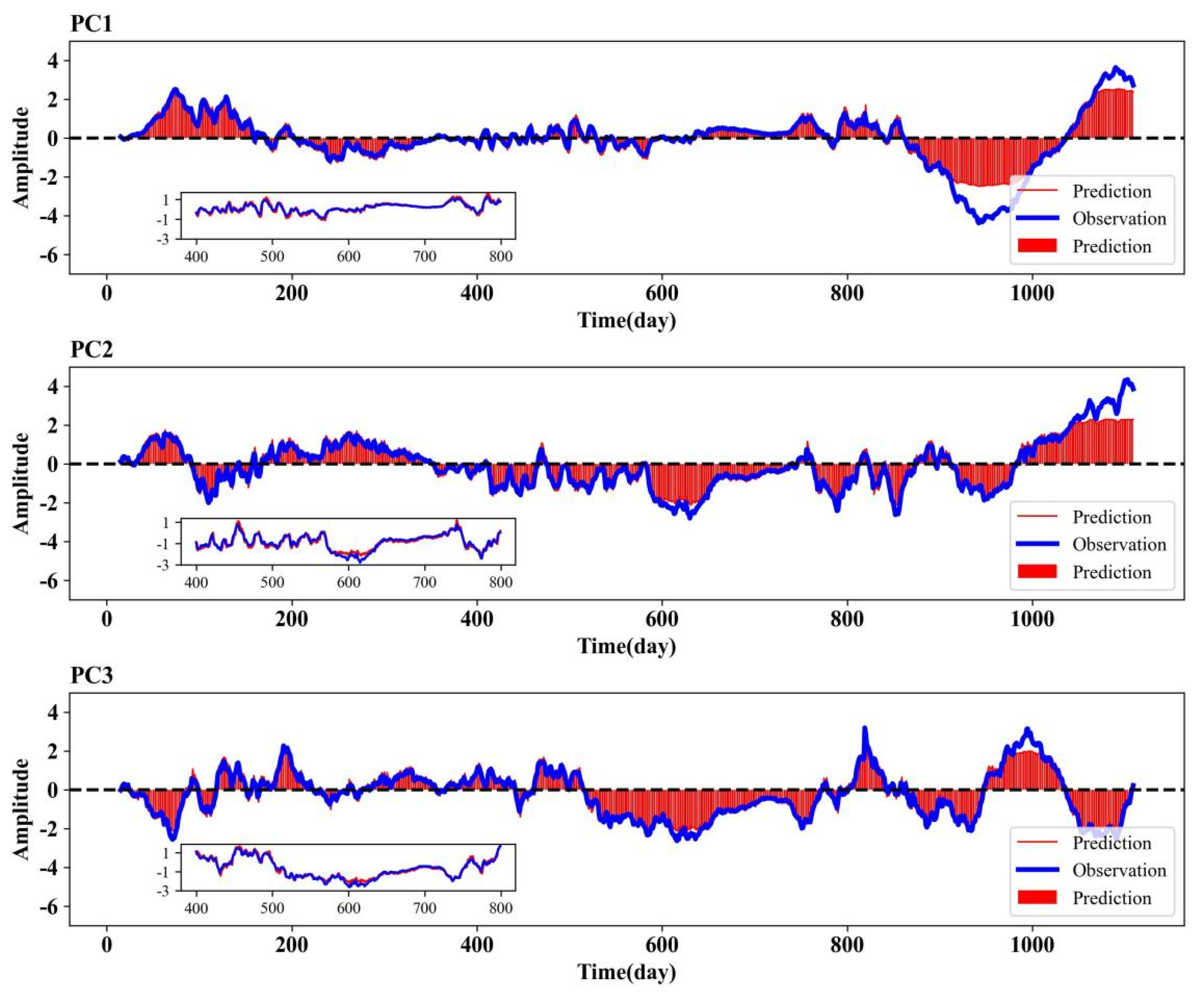

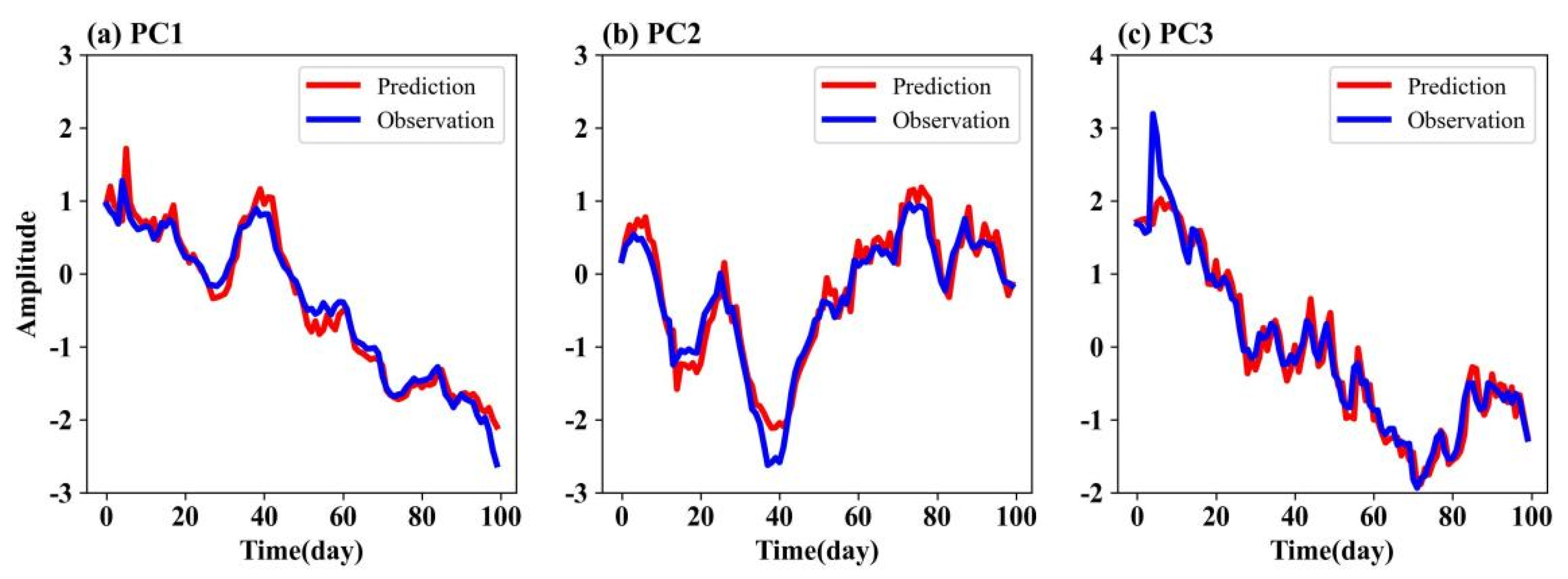

Through EOF analysis, the training dataset of the Arctic SIC is decomposed into EOFs and corresponding PCs. Here, we retain the first 15 EOFs, which together explain 92% of the total variance. Then, the validation dataset is projected onto these 15 EOFs to obtain the PCs of the validation dataset. What remains to be considered is how to better analyze and predict these time series (PCs).
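The EOF procedure of this section can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the climatology is simplified to the time mean at each grid point, and the covariance is normalized by the number of time steps. The number of retained modes is chosen as the smallest count whose cumulative explained variance reaches a threshold (0.92 here, matching the 92% reported above).

```python
import numpy as np

def eof_decompose(X_train, var_threshold=0.92):
    """EOF analysis of a (space points m) x (time steps n) data matrix.

    Returns the climatology, the retained EOFs (m, k), their PCs (k, n),
    and the fraction of variance explained by the retained modes.
    """
    # Assumption: climatology simplified to the time mean at each point.
    clim = X_train.mean(axis=1, keepdims=True)
    A = X_train - clim                       # anomaly matrix
    n = A.shape[1]
    C = A @ A.T / n                          # (m, m) covariance matrix
    eigvals, eigvecs = np.linalg.eigh(C)     # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]        # re-sort to descending order
    eigvals = np.clip(eigvals[order], 0.0, None)
    eigvecs = eigvecs[:, order]
    frac = np.cumsum(eigvals) / eigvals.sum()
    # Smallest number of modes whose cumulative variance reaches the threshold.
    k = min(int(np.searchsorted(frac, var_threshold)) + 1, len(eigvals))
    eofs = eigvecs[:, :k]
    pcs = eofs.T @ A                         # project anomalies onto the EOFs
    return clim, eofs, pcs, float(frac[k - 1])

def project(X_new, clim, eofs):
    """PCs of new data (e.g., a validation set) on previously computed EOFs."""
    return eofs.T @ (X_new - clim)
```

For a real SIC grid, where the number of spatial points is large, forming the full covariance matrix is expensive; a singular value decomposition of the anomaly matrix is a common alternative that yields the same modes.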

3.3. Long Short-Term Memory Network (LSTM) and Deep Neural Network (DNN)

The LSTM network, a variant of the RNN, was originally proposed by Hochreiter and Schmidhuber [32] as a solution to the vanishing and exploding gradient problem of RNNs. The core of the RNN lies in its feature extraction and memory (sharing of parameters within neurons). The neurons of the traditional RNN contain self-feedback connections, and the output is jointly determined by the input and the previous output, making it capable of remembering information. However, as the time interval increases, information is lost as it flows through the neurons because it is repeatedly multiplied by factors smaller than one, which causes the gradient to vanish. The influence of the current output on subsequent outputs weakens until it disappears, so useful information cannot be remembered continuously. LSTM can continuously cycle the information to ensure that it is stored, and can remember the long-term information of the time series for future predictions. As the data flow through the network, information can be stored, deleted, and added according to whether it is needed, which effectively copes with the problem of vanishing gradients [33]. Therefore, LSTM can predict longer sequences and sequences with longer intervals. LSTM has advantages in time-series modeling, with strong learning and generalization abilities, and has a good predictive effect on nonstationary data. When it learns the nonlinear features of a sequence, the high-dimensional mapping and evolution of the features depend not only on the current independent information, but also on the long-term influence of the history together with the current state. It is important to note that retaining long-term historical information is the default behavior of the LSTM network structure rather than the result of human intervention or deliberate learning, which provides a good objective condition for its integration with EOF analysis.

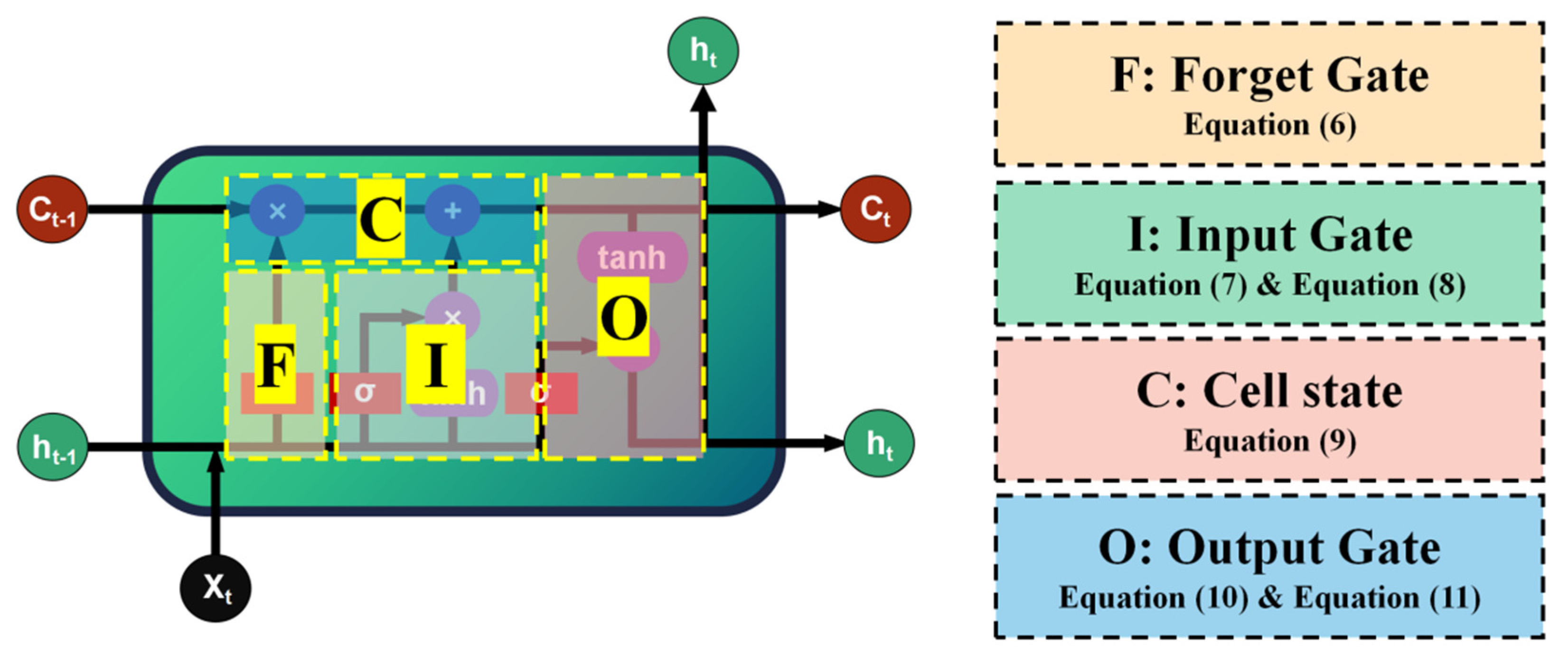

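The LSTM is able to combine information learned over long and short terms because its internal feature-processing mechanism controls the unit states and mines mapping relationships from the information contained in the data itself. This capability is provided by structures called ‘gates’, as shown in Figure 3. These gate units, which process and mine the input information, realize the long-term learning capability of LSTM. The structure and the process of LSTM are as follows:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right)$$

$$\tilde{C}_t = \tanh\left(W_c \cdot [h_{t-1}, x_t] + b_c\right)$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right)$$

$$h_t = o_t \odot \tanh\left(C_t\right)$$

where $\sigma$ is the sigmoid function; $W_f$, $W_i$, $W_c$, and $W_o$ are the weights applied to the concatenation of the new input $x_t$ and the output $h_{t-1}$ from the previous cell; and $b_f$, $b_i$, $b_c$, and $b_o$ are the corresponding biases. Here, $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates, $C_t$ is the cell state, and $\odot$ denotes element-wise multiplication.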
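To make the gate mechanism concrete, the following is a minimal NumPy sketch of a single time step of a standard LSTM cell in the commonly used formulation with forget, input, and output gates. It is illustrative only: the function and variable names are hypothetical, and the weights act on the concatenation of the previous output and the new input, as described above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One step of a standard LSTM cell.

    x_t:    (d,) new input;  h_prev, c_prev: (H,) previous output and cell state.
    W:      dict with keys 'f', 'i', 'c', 'o'; each value has shape (H, H + d).
    b:      dict with the same keys; each value has shape (H,).
    """
    z = np.concatenate([h_prev, x_t])           # [h_{t-1}, x_t]
    f_t = sigmoid(W["f"] @ z + b["f"])          # forget gate: what to delete
    i_t = sigmoid(W["i"] @ z + b["i"])          # input gate: what to add
    c_tilde = np.tanh(W["c"] @ z + b["c"])      # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde          # updated cell state (memory)
    o_t = sigmoid(W["o"] @ z + b["o"])          # output gate: what to expose
    h_t = o_t * np.tanh(c_t)                    # new output
    return h_t, c_t
```

Because the cell state is updated additively (scaled by the forget gate) rather than through repeated matrix multiplication alone, information and gradients can persist over many time steps.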

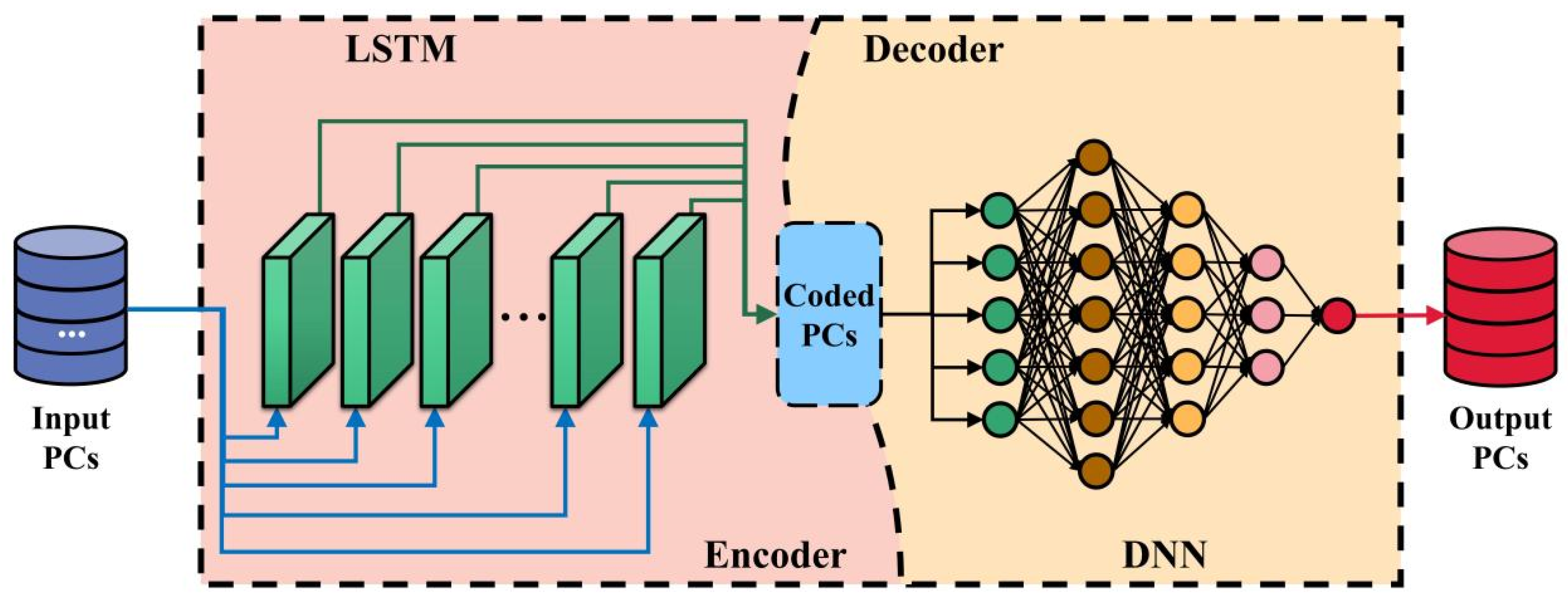

DNN is a multilayer feedforward network trained by error back-propagation. It takes the square of the network error as the cost function and uses the gradient descent method to minimize the cost function. The DNN model used in this study, which is presented in Figure 4, consists of five layers: an input layer, three hidden layers, and an output layer. Since there is no exact rule for determining the number of neurons in the hidden layers, it is generally determined by repeated trial and error. After trying different numbers of neurons during the operation of the model, the total number of neurons was finally set to 41: the input layer had 15 neurons, the three hidden layers had 10, 10, and 5 neurons, and the output layer had 1 neuron.
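The layer sizes given above (15, 10, 10, 5, and 1 neurons, 41 in total) can be illustrated with a small NumPy forward pass and the squared-error cost. This is a sketch only: the text does not specify the activation functions or the weight initialization, so the ReLU hidden activations, the linear output, and the random initialization below are assumptions, and all names are hypothetical.

```python
import numpy as np

LAYER_SIZES = [15, 10, 10, 5, 1]  # input, three hidden layers, output (41 neurons)

def init_params(sizes, seed=0):
    """Random weights and zero biases for each pair of consecutive layers."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_out, n_in)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def forward(params, x):
    """Feedforward pass; ReLU on hidden layers (assumed), linear output layer."""
    a = np.asarray(x, dtype=float)
    for idx, (W, b) in enumerate(params):
        z = W @ a + b
        a = z if idx == len(params) - 1 else np.maximum(z, 0.0)
    return a

def squared_error(pred, target):
    """Squared network error, the cost minimized by gradient descent."""
    return float(np.sum((np.asarray(pred) - np.asarray(target)) ** 2))

params = init_params(LAYER_SIZES)
y_hat = forward(params, np.ones(15))
print(y_hat.shape)  # (1,)
```

In practice, the gradients of the cost with respect to each weight matrix would be obtained by back-propagation, typically through a deep learning framework rather than by hand.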

5. Discussions

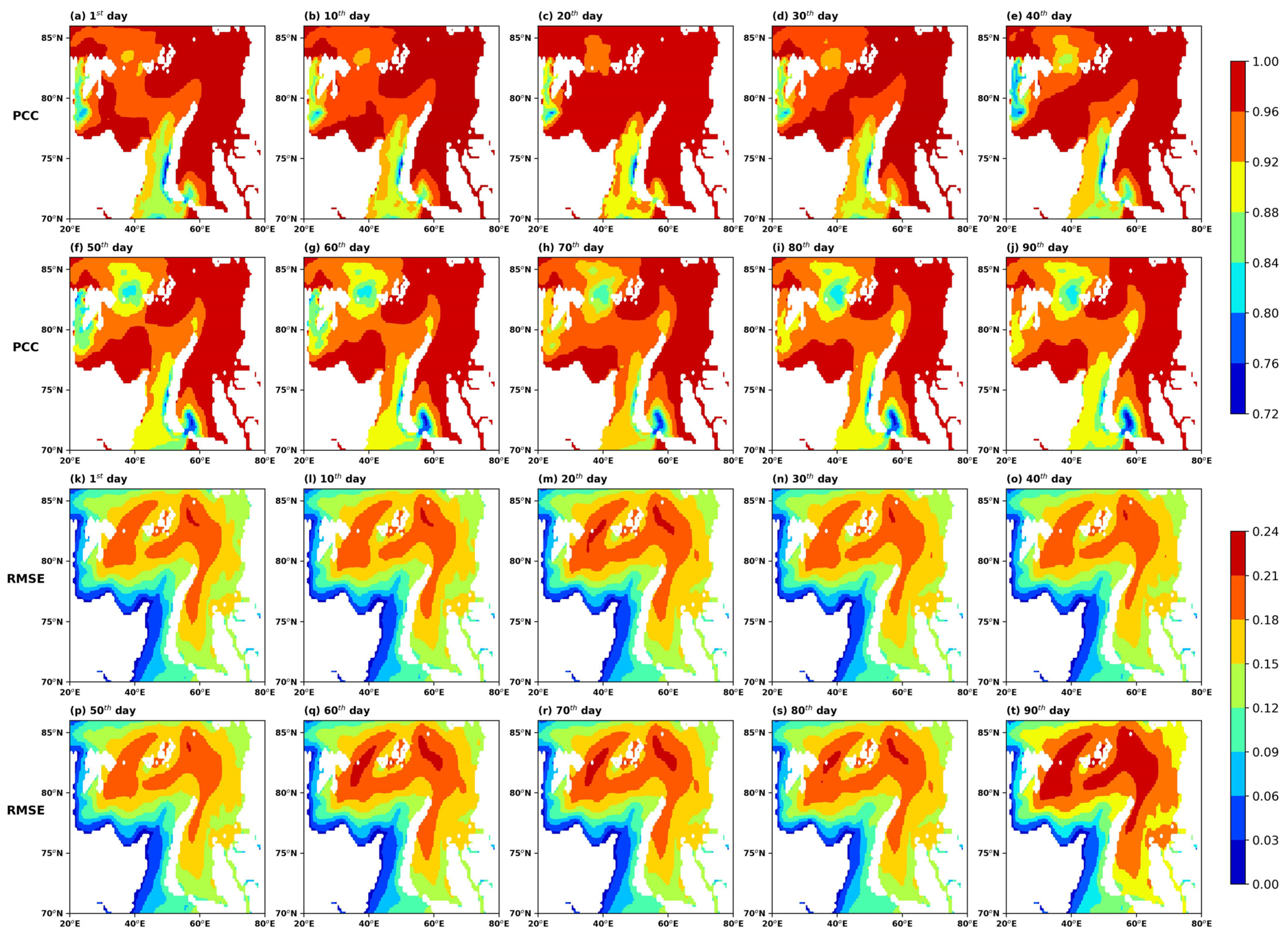

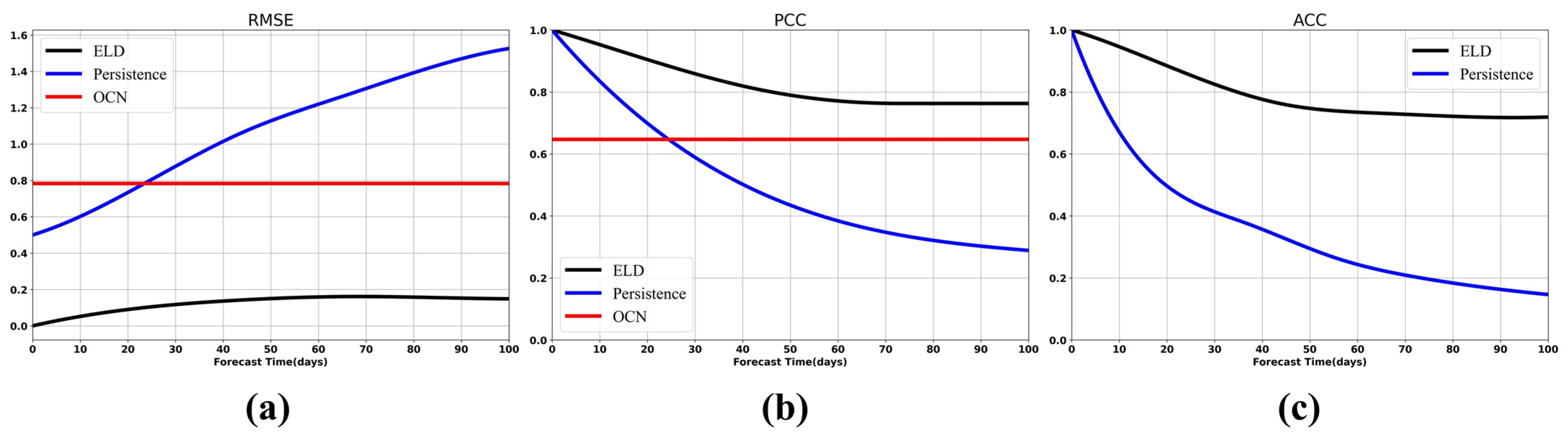

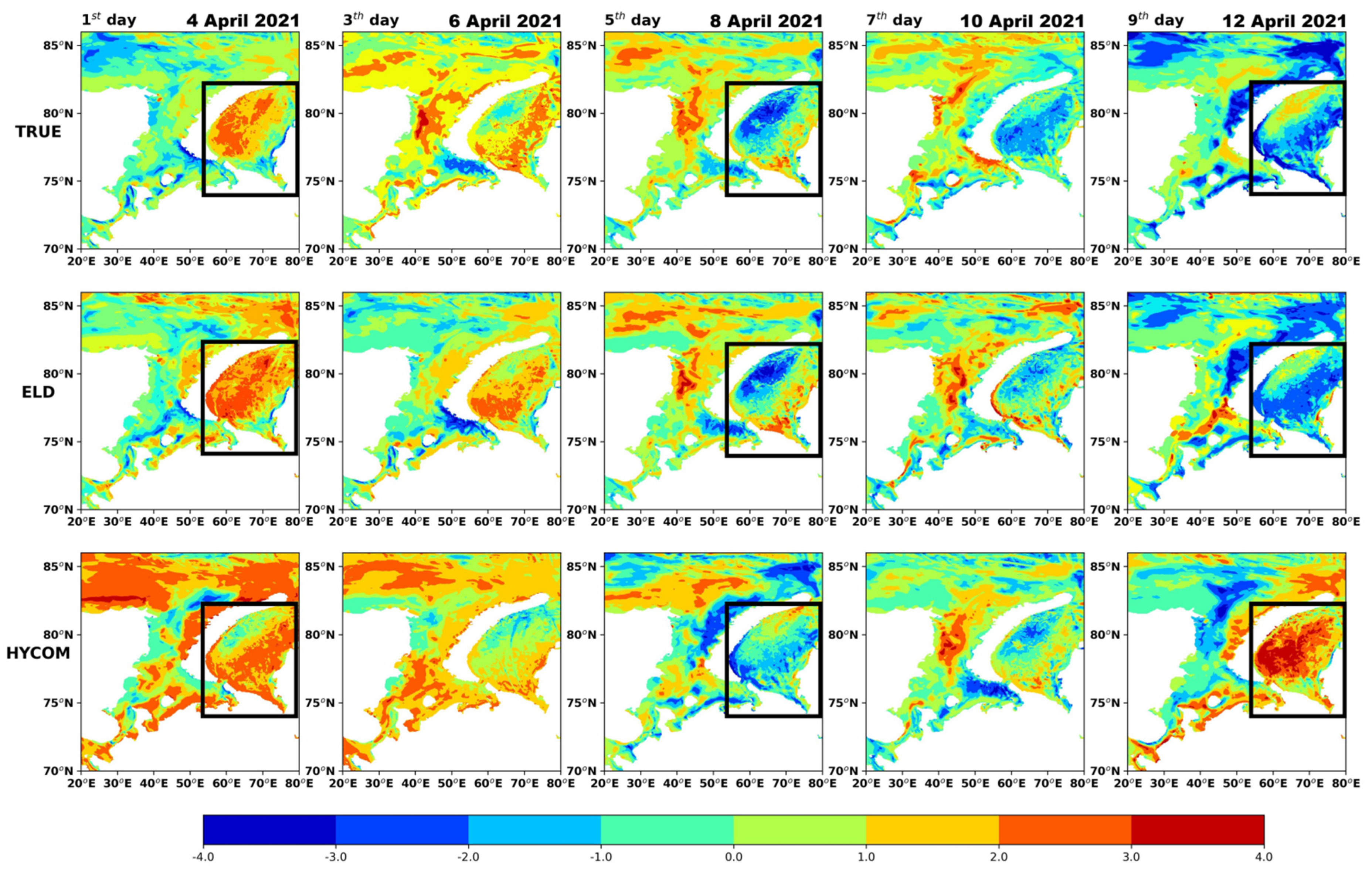

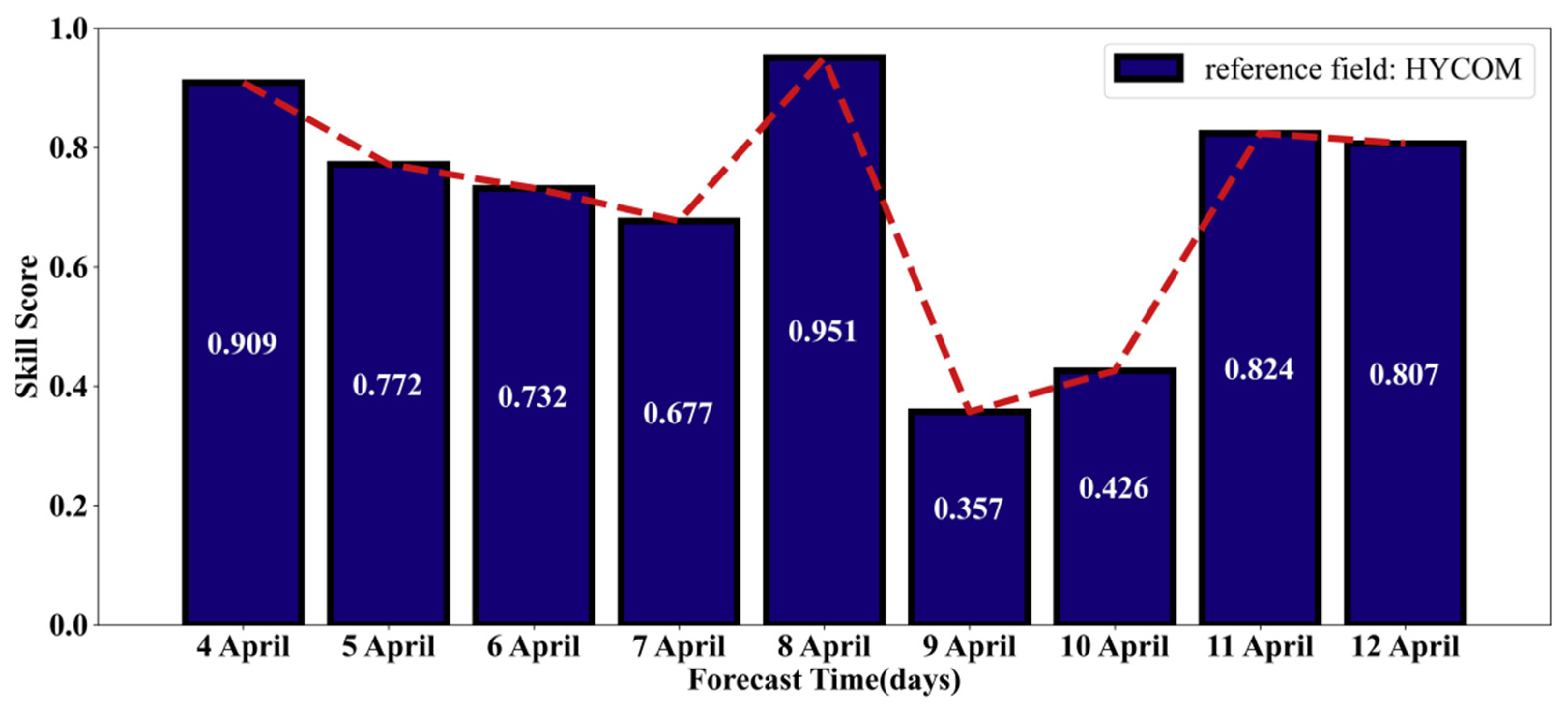

The ELD model makes full use of the large amount of satellite remote sensing SIC data by combining it with a deep learning model. The EOF method transforms the forecast problem for a spatio-temporal field into a time-series forecast problem, which greatly reduces the forecast difficulty and shortens the forecast time. Extensive experimental results confirm that the ELD model can obtain more accurate 100-day SIC predictions at a relatively low time cost. The statistical results of the 100-day forecast of the ELD model show that the forecast RMSE increases with the forecast time. On the one hand, this is because the prediction error of the ELD model accumulates over time steps: errors from earlier time steps propagate to later ones, making the long-term prediction results inferior to the short-term results. However, the ELD model can effectively reduce this cumulative effect, which is an important reason for its excellent performance in the 100-day forecast. On the other hand, medium- and long-term forecasting based on deep learning models may require sufficient training data and input data. At the same time, the quality of the data used for model learning is also a key factor in improving forecast timeliness. The pre-training of the ELD model is the most time-consuming stage in the whole experiment and in actual operational SIC forecasting. The EOF decomposition slows down as the spatio-temporal resolution of the original dataset increases. Although the EOF decomposition is very time-consuming, its results can be stored and reused directly in subsequent prediction stages. It is worth mentioning that, when the dynamic mechanism is unclear or the complex mathematical equations are difficult to solve, the ELD model architecture has wide applicability and flexibility for multi-domain spatio-temporal data mining and forecasting in the ocean and atmosphere.

The ELD model shows strong advantages in the current experimental stage, but some aspects could still be improved. First, the ELD model has only been used for forecasting studies in a part of the Arctic. When the ELD model is used to study and forecast the entire Arctic region in the future, the computational load of the EOF module will increase and the SIC prediction accuracy of the ELD model may decrease. To produce mid- and long-term forecasts of high-resolution SIC for the entire Arctic region, the ELD model may need a high-performance computing platform, such as a GPU cluster. More importantly, the ELD model adopts the supervised learning method commonly used in deep learning. The label data used in the training process greatly limit the ability of the ELD model to deal with sudden changes. In other words, the parameters obtained by training the ELD model need to be retrained after they have been used a certain number of times, and the retraining should pay as much attention as possible to data with abnormal changes in the SIC. Such retraining is necessary to obtain more accurate prediction results. The evolution of SIC involves many dynamic and thermodynamic factors. In this experiment, we only conducted prediction research from the perspective of the single variable SIC, which ignored the influence of some necessary external factors on the evolution of SIC. This also provides new ideas for follow-up research: multiple sea ice variables could be integrated for forecasting, thereby improving forecast accuracy and forecast timeliness.

6. Conclusions

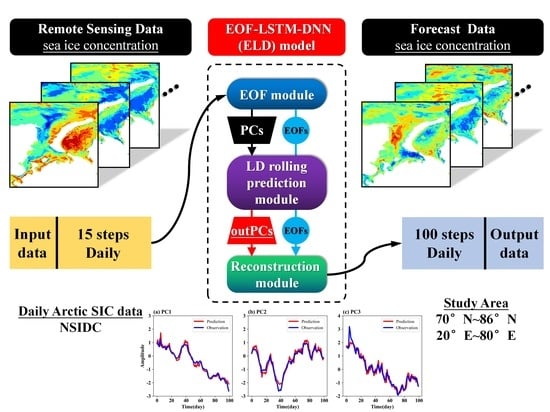

In this study, we proposed an Arctic sea ice concentration (SIC) forecast model (EOF–LSTM–DNN) based on statistical analysis and deep learning, and extended the effective forecast duration of SIC to 100 days. The model was driven by satellite remote sensing SIC data. The ELD model consisted of the empirical orthogonal function (EOF) method, long short-term memory network (LSTM), and deep neural network (DNN). Among them, EOF converts the spatio-temporal data of Arctic sea ice concentration into time series, LSTM encodes the historical time series, and DNN decodes the encoded information to obtain SIC forecast results. We adopted the persistence prediction and the optimal climatic normal (OCN) method as baseline models and compared their RMSE, PCC, and ACC with those of the ELD model. The RMSE, PCC, and ACC of the ELD model at the 100th day of forecasts were 0.2, 0.77, and 0.74, respectively. By contrast, the RMSE values of the persistence prediction and OCN results were 1.5 and 0.8, the ACC of the persistence prediction was 0.1, and the PCC values of the persistence prediction and OCN results were 0.28 and 0.64. This shows that the ELD model is significantly better than the two baseline models in mid- and long-term forecasting.

In comparison with the other machine learning models, the RMSE and MAE of the ELD model are significantly lower than those of logistic regression (LgR), back-propagation neural network (BPNN), recurrent neural network (RNN), and LSTM. At the same time, we compared the ELD model with the SIC results of the HYCOM numerical prediction. From the forecast skill score (SS), it can be observed that the SS of the ELD model relative to HYCOM is always greater than 0 (the average SS in 9 days is 0.7172), and the ELD model has obvious advantages in capturing the spatial evolution of SIC. The above experimental results all show that the ELD model has great potential in the mid- and long-term predictions of SIC.