Convolutional Neural Networks for Automated Built Infrastructure Detection in the Arctic Using Sub-Meter Spatial Resolution Satellite Imagery

Abstract

1. Introduction

2. Materials and Methods

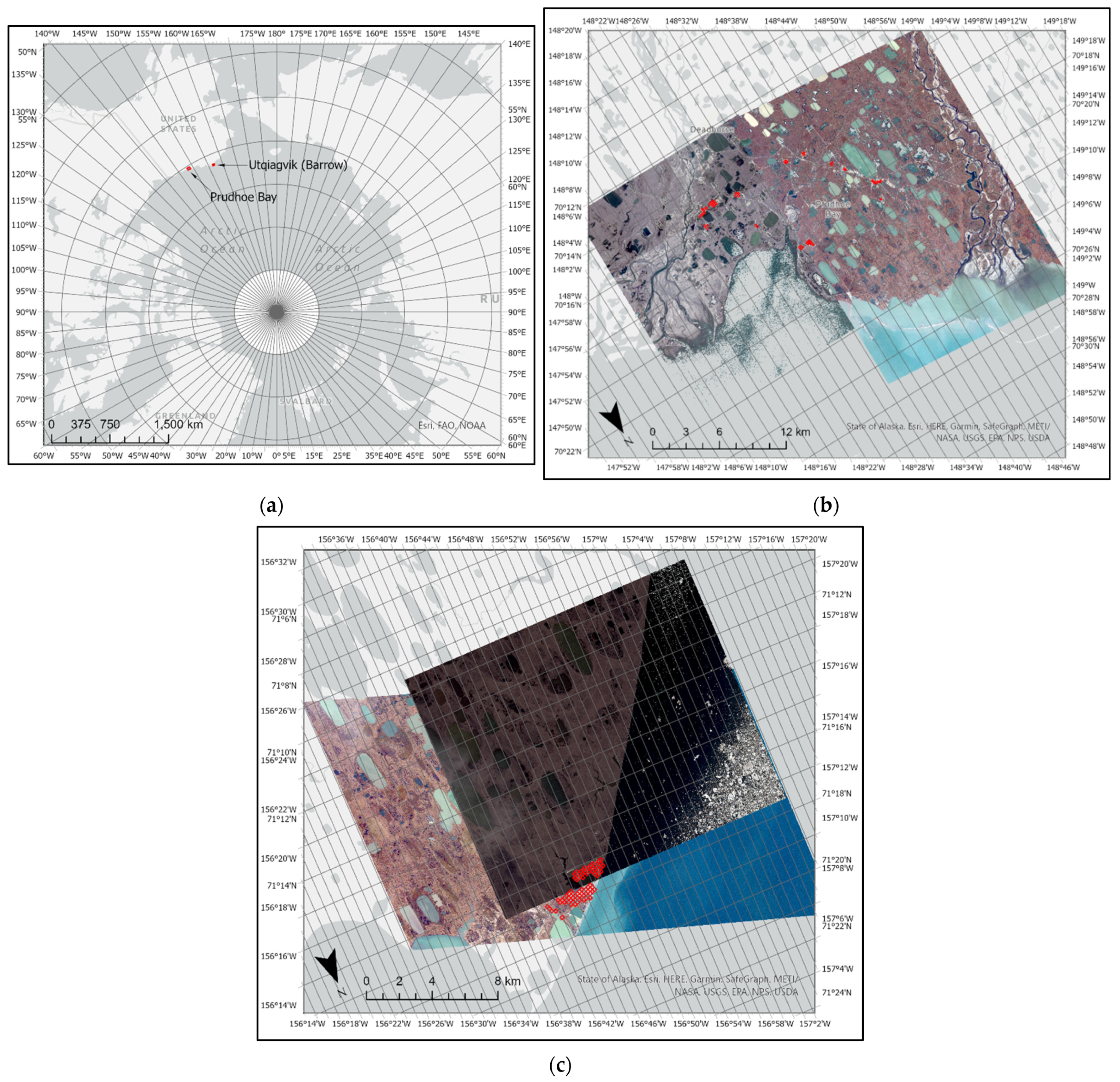

2.1. Study Area and Data

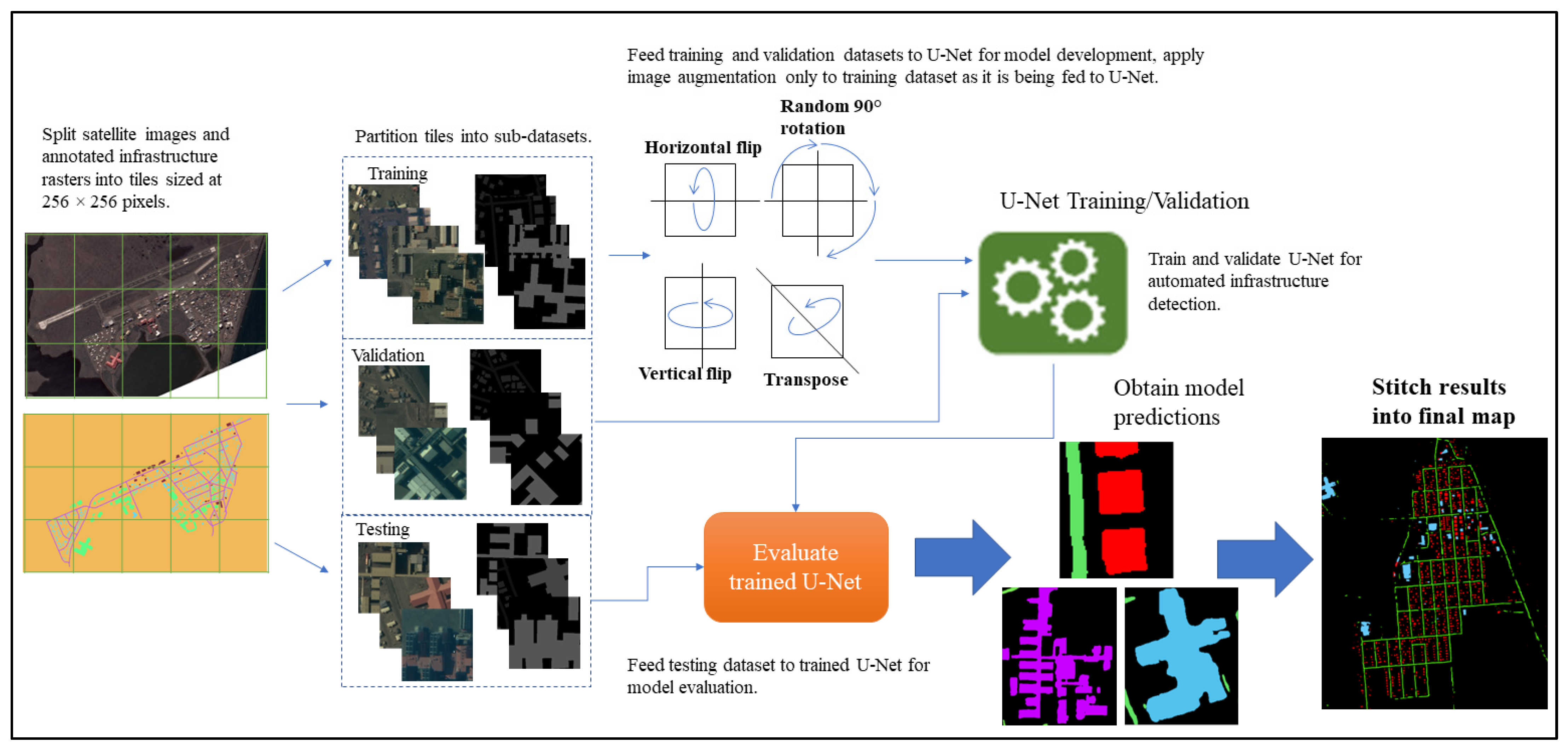

2.2. Generalized Workflow

2.3. Annotated Data Collection

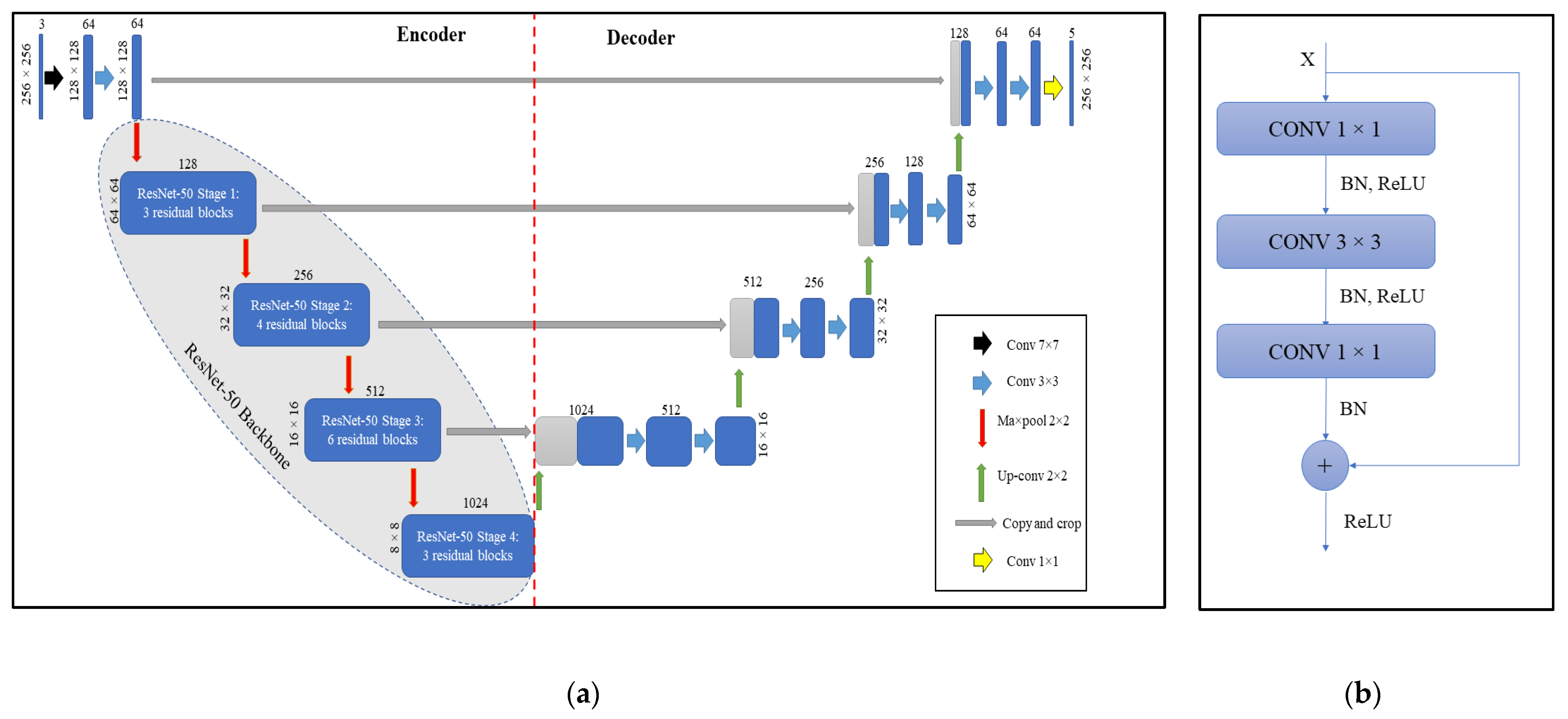

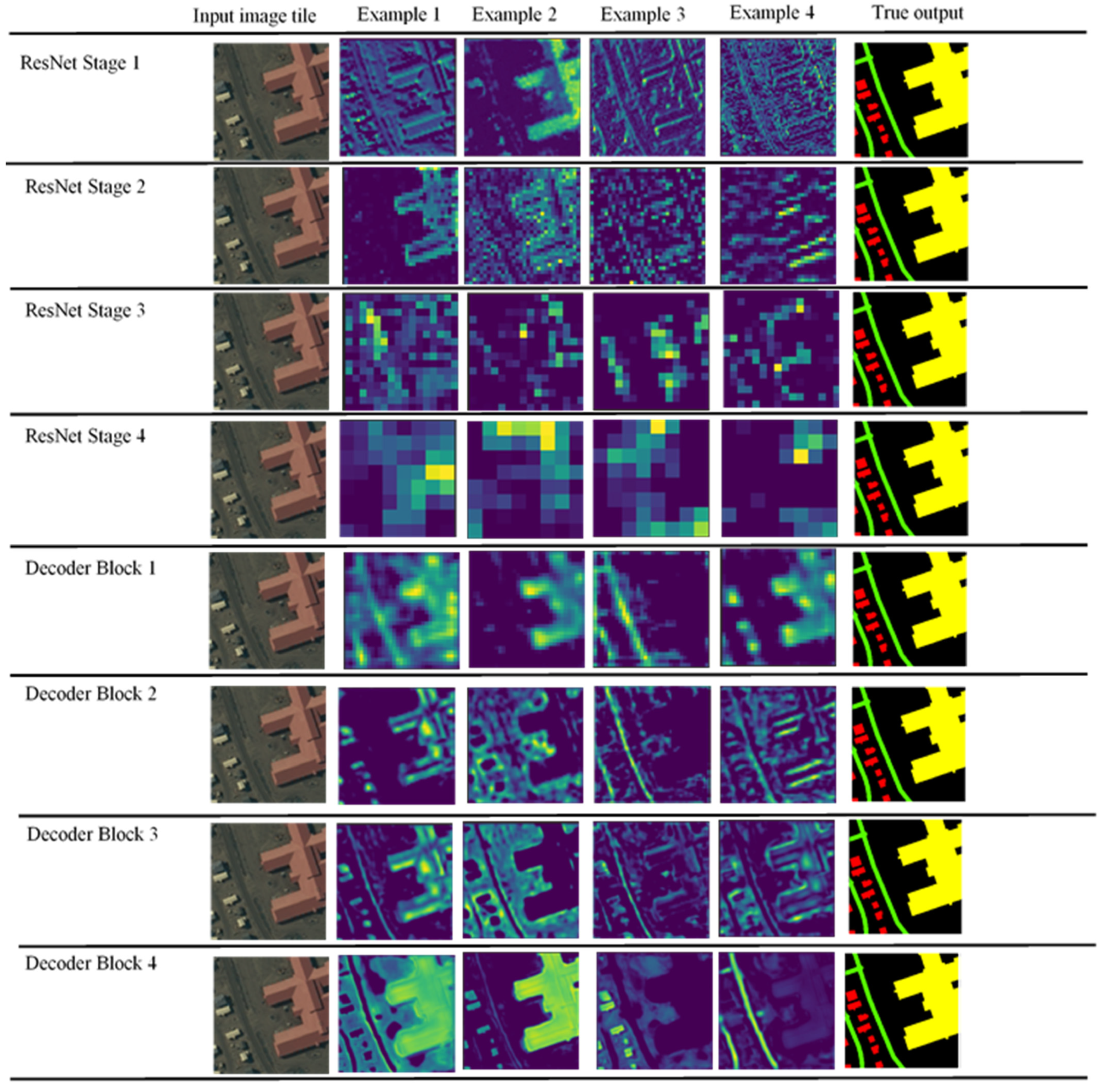

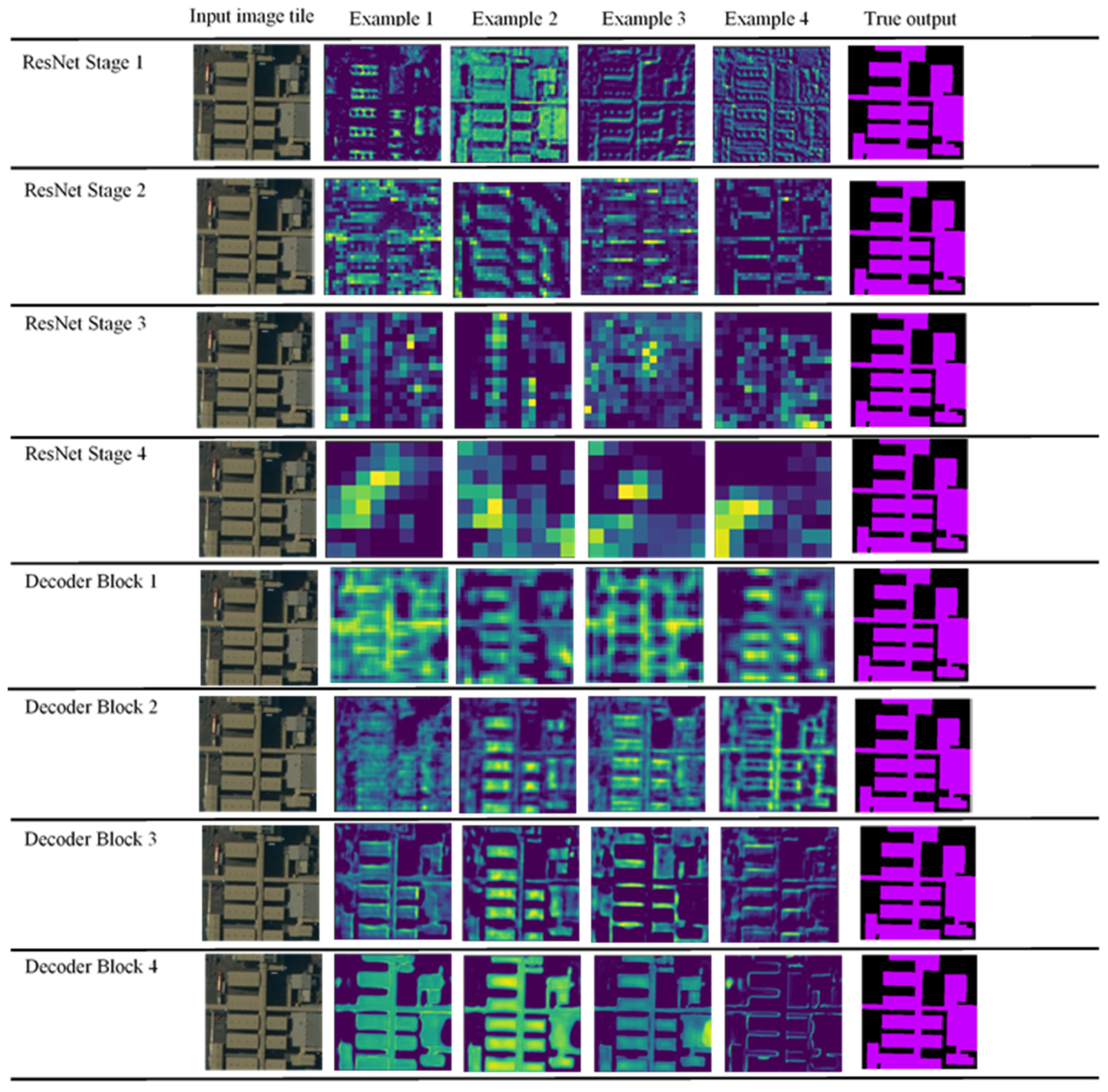

2.4. Deep Learning Algorithm

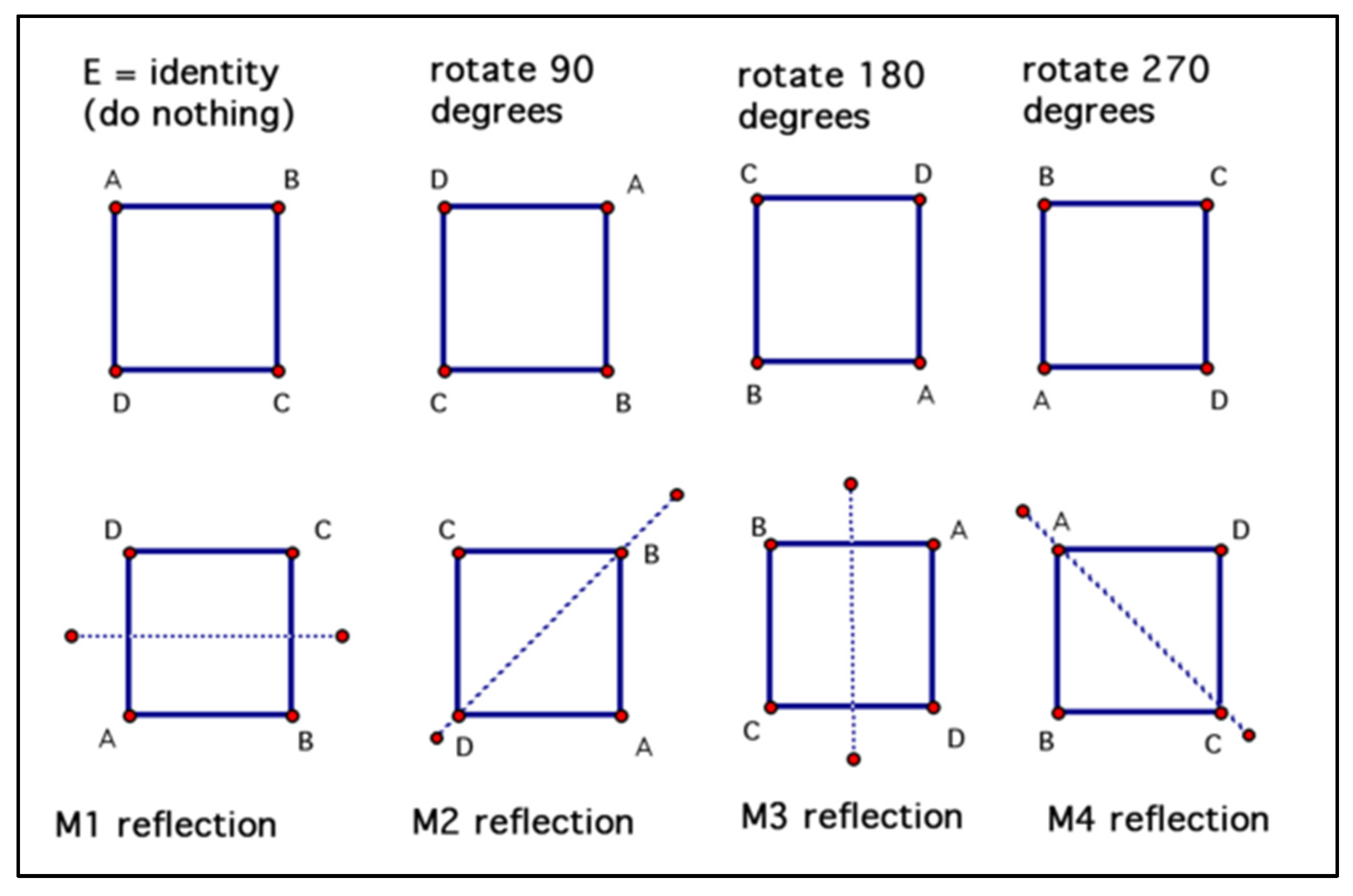

2.5. Model Training

2.6. Accuracy Assessment

3. Results

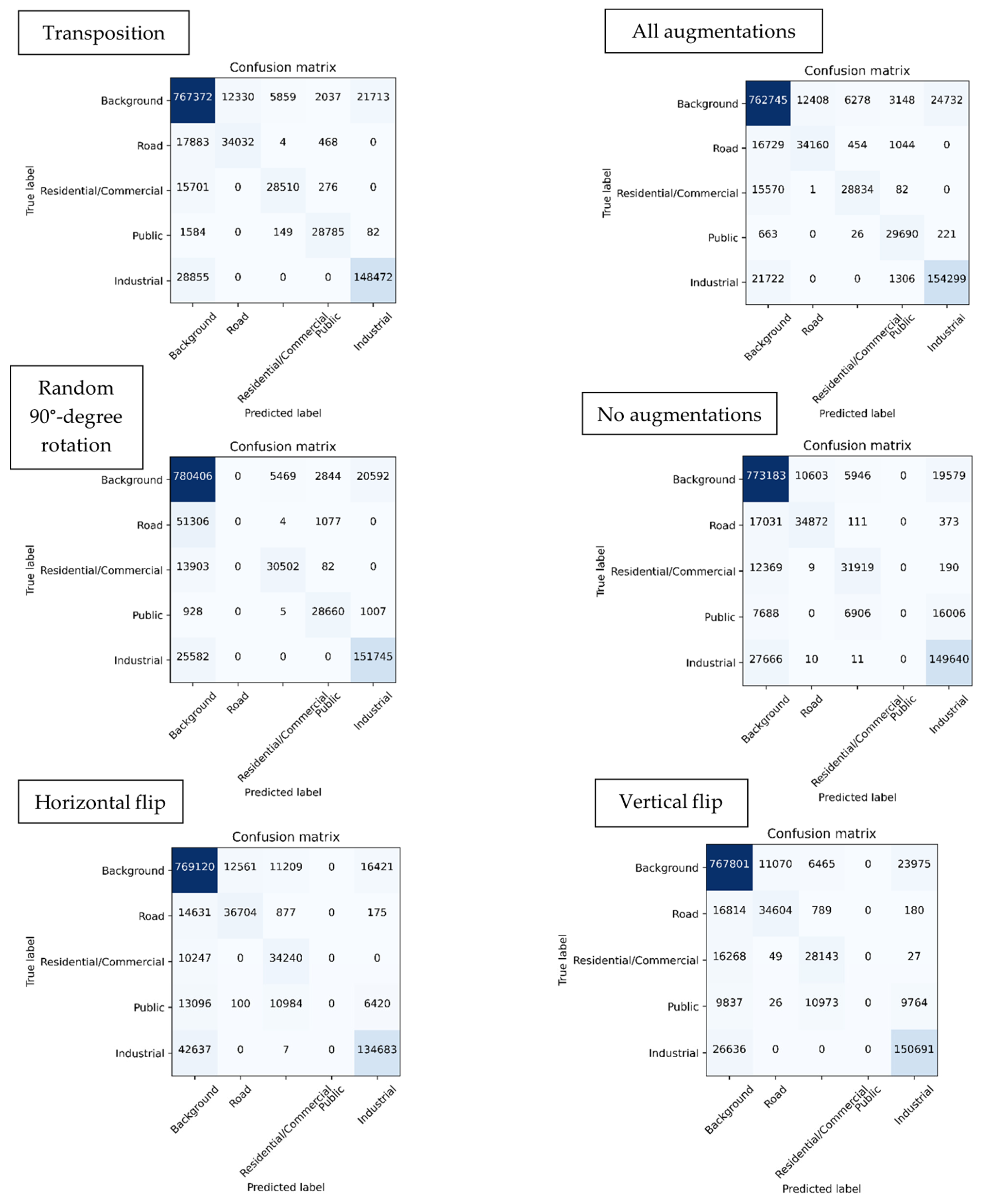

3.1. Quantitative Metrics

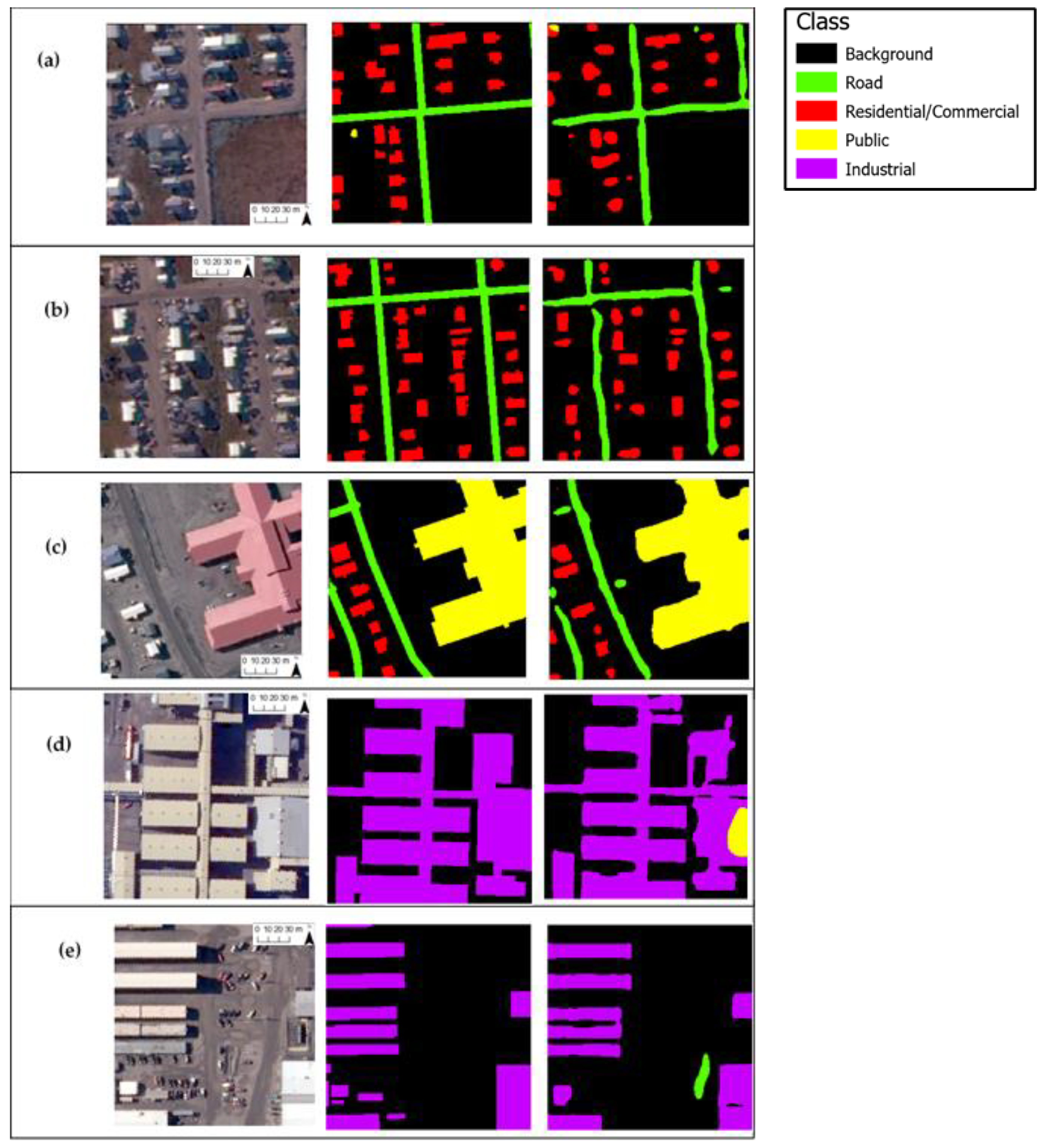

3.2. Visual Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Brown, J.; Ferrians, O.J., Jr.; Heginbottom, J.A.; Melnikov, E.S. Circum-Arctic Map of Permafrost and Ground-Ice Conditions; National Snow and Ice Data Center: Boulder, CO, USA, 1997; Available online: https://nsidc.org/data/ggd318 (accessed on 10 February 2022).

- Biskaborn, B.K.; Smith, S.L.; Noetzli, J.; Matthes, H.; Vieira, G.; Streletskiy, D.A.; Schoeneich, P.; Romanovsky, V.E.; Lewkowicz, A.G.; Abramov, A.; et al. Permafrost is warming at a global scale. Nat. Commun. 2019, 10, 264.

- Comiso, J.C.; Hall, D.K. Climate trends in the Arctic as observed from space. WIREs Clim. Chang. 2014, 5, 389–409.

- Kokelj, S.V.; Jorgenson, M.T. Advances in Thermokarst Research. Permafr. Periglac. Process. 2013, 24, 108–119.

- Hjort, J.; Karjalainen, O.; Aalto, J.; Westermann, S.; Romanovsky, V.E.; Nelson, F.E.; Etzelmüller, B.; Luoto, M. Degrading permafrost puts Arctic infrastructure at risk by mid-century. Nat. Commun. 2018, 9, 1–9.

- Nelson, F.E.; Anisimov, O.A.; Shiklomanov, N.I. Subsidence risk from thawing permafrost. Nature 2001, 410, 889–890.

- Melvin, A.M.; Larsen, P.; Boehlert, B.; Neumann, J.E.; Chinowsky, P.; Espinet, X.; Martinich, J.; Baumann, M.S.; Rennels, L.; Bothner, A.; et al. Climate change damages to Alaska public infrastructure and the economics of proactive adaptation. Proc. Natl. Acad. Sci. USA 2016, 114, E122–E131.

- Streletskiy, D.A.; Suter, L.J.; Shiklomanov, N.I.; Porfiriev, B.N.; Eliseev, D.O. Assessment of climate change impacts on buildings, structures and infrastructure in the Russian regions on permafrost. Environ. Res. Lett. 2019, 14, 025003.

- Suter, L.; Streletskiy, D.; Shiklomanov, N. Assessment of the cost of climate change impacts on critical infrastructure in the circumpolar Arctic. Polar Geogr. 2019, 42, 267–286.

- Ramage, J.; Jungsberg, L.; Wang, S.; Westermann, S.; Lantuit, H.; Heleniak, T. Population living on permafrost in the Arctic. Popul. Environ. 2021, 43, 22–38.

- Barros, V.R.; Field, C.B.; Dokken, D.J.; Mastrandrea, M.D.; Mach, K.J.; Bilir, T.E.; Chatterjee, M.; Ebi, K.L.; Estrada, Y.O.; Genova, R.C.; et al. Climate Change 2014: Impacts, Adaptation, and Vulnerability. Part B: Regional Aspects. Contribution of Working Group II to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2014; Available online: https://www.ipcc.ch/site/assets/uploads/2018/02/WGIIAR5-PartB_FINAL.pdf (accessed on 10 February 2022).

- Larsen, P.; Goldsmith, S.; Smith, O.; Wilson, M.; Strzepek, K.; Chinowsky, P.; Saylor, B. Estimating future costs for Alaska public infrastructure at risk from climate change. Glob. Environ. Chang. 2008, 18, 442–457.

- Gautier, D.L.; Bird, K.J.; Charpentier, R.R.; Grantz, A.; Houseknecht, D.W.; Klett, T.R.; Moore, T.E.; Pitman, J.K.; Schenk, C.J.; Schuenemeyer, J.H.; et al. Assessment of Undiscovered Oil and Gas in the Arctic. Science 2009, 324, 1175–1179.

- Larsen, J.N.; Fondahl, G. Arctic Human Development Report—Regional Processes and Global Linkages; Nordic Council of Ministers: Copenhagen, Denmark, 2015.

- Anisimov, O.A.; Vaughan, D.G. Polar Regions (Arctic and Antarctic). Climate Change 2007: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK, 2007; pp. 653–685. Available online: https://www.ipcc.ch/site/assets/uploads/2018/02/ar4-wg2-chapter15-1.pdf (accessed on 10 February 2022).

- Raynolds, M.K.; Walker, D.A.; Ambrosius, K.J.; Brown, J.; Everett, K.R.; Kanevskiy, M.; Kofinas, G.P.; Romanovsky, V.E.; Shur, Y.; Webber, P.J. Cumulative geoecological effects of 62 years of infrastructure and climate change in ice-rich permafrost landscapes, Prudhoe Bay Oilfield, Alaska. Glob. Chang. Biol. 2014, 20, 1211–1224.

- Kanevskiy, M.; Shur, Y.; Walker, D.; Jorgenson, T.; Raynolds, M.K.; Peirce, J.L.; Jones, B.M.; Buchhorn, M.; Matyshak, G.; Bergstedt, H.; et al. The shifting mosaic of ice-wedge degradation and stabilization in response to infrastructure and climate change, Prudhoe Bay Oilfield, Alaska. Arct. Sci. 2022, 8, 498–530.

- Walker, D.A.; Raynolds, M.K.; Kanevskiy, M.Z.; Shur, Y.S.; Romanovsky, V.E.; Jones, B.M.; Buchhorn, M.; Jorgenson, M.T.; Šibík, J.; Breen, A.L.; et al. Cumulative impacts of a gravel road and climate change in an ice-wedge polygon landscape, Prudhoe Bay, AK. Arct. Sci. 2022.

- Arctic Monitoring and Assessment Programme (AMAP). Snow, Water, Ice and Permafrost in the Arctic (SWIPA), Oslo, Norway. 2017. Available online: https://www.amap.no/documents/doc/snow-water-ice-and-permafrost-in-the-arctic-swipa-2017/1610 (accessed on 11 February 2022).

- Brown de Colstoun, E.C.; Huang, C.; Wang, P.; Tilton, J.C.; Tan, B.; Phillips, J.; Niemczura, S.; Ling, P.-Y.; Wolfe, R.E. Global Man-Made Impervious Surface (GMIS) Dataset from Landsat; NASA Socioeconomic Data and Applications Center (SEDAC): Palisades, NY, USA, 2017; Available online: https://sedac.ciesin.columbia.edu/data/set/ulandsat-gmis-v1/data-download (accessed on 11 February 2022).

- Wang, P.; Huang, C.; Brown de Colstoun, E.; Tilton, J.; Tan, B. Global Human Built-Up and Settlement Extent (HBASE) Dataset from Landsat; NASA Socioeconomic Data and Applications Center (SEDAC): Palisades, NY, USA, 2017; Available online: https://sedac.ciesin.columbia.edu/data/set/ulandsat-hbase-v1/data-download (accessed on 11 February 2022).

- Bartsch, A.; Pointner, G.; Ingeman-Nielsen, T.; Lu, W. Towards Circumpolar Mapping of Arctic Settlements and Infrastructure Based on Sentinel-1 and Sentinel-2. Remote Sens. 2020, 12, 2368.

- Kumpula, T.; Forbes, B.C.; Stammler, F.; Meschtyb, N. Dynamics of a Coupled System: Multi-Resolution Remote Sensing in Assessing Social-Ecological Responses during 25 Years of Gas Field Development in Arctic Russia. Remote Sens. 2012, 4, 1046–1068.

- Kumpula, T.; Forbes, B.; Stammler, F. Combining data from satellite images and reindeer herders in arctic petroleum development: The case of Yamal, West Siberia. Nord. Geogr. Publ. 2006, 35, 17–30. Available online: https://www.geobotany.uaf.edu/library/pubs/KumpulaT2006_nordGP_25_17.pdf (accessed on 12 February 2022).

- Kumpula, T.; Forbes, B.; Stammler, F. Remote Sensing and Local Knowledge of Hydrocarbon Exploitation: The Case of Bovanenkovo, Yamal Peninsula, West Siberia, Russia. ARCTIC 2010, 63, 165–178.

- Kumpula, T.; Pajunen, A.; Kaarlejärvi, E.; Forbes, B.C.; Stammler, F. Land use and land cover change in Arctic Russia: Ecological and social implications of industrial development. Glob. Environ. Chang. 2011, 21, 550–562.

- Gadal, S.; Ouerghemmi, W. Multi-Level Morphometric Characterization of Built-up Areas and Change Detection in Siberian Sub-Arctic Urban Area: Yakutsk. ISPRS Int. J. Geo-Inf. 2019, 8, 129.

- Ourng, C.; Vaguet, Y.; Derkacheva, A. Spatio-temporal urban growth pattern in the arctic: A case study in surgut, Russia. In Proceedings of the 2019 Joint Urban Remote Sensing Event (JURSE), Vannes, France, 22–24 May 2019; pp. 1–4.

- Ardelean, F.; Onaca, A.; Chețan, M.-A.; Dornik, A.; Georgievski, G.; Hagemann, S.; Timofte, F.; Berzescu, O. Assessment of Spatio-Temporal Landscape Changes from VHR Images in Three Different Permafrost Areas in the Western Russian Arctic. Remote Sens. 2020, 12, 3999.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a New Paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. arXiv 2018, arXiv:1703.06870.

- Tiede, D.; Schwendemann, G.; Alobaidi, A.; Wendt, L.; Lang, S. Mask R-CNN-based building extraction from VHR satellite data in operational humanitarian action: An example related to COVID-19 response in Khartoum, Sudan. Trans. GIS 2021, 25, 1213–1227.

- Wang, Y.; Li, S.; Teng, F.; Lin, Y.; Wang, M.; Cai, H. Improved Mask R-CNN for Rural Building Roof Type Recognition from UAV High-Resolution Images: A Case Study in Hunan Province, China. Remote Sens. 2022, 14, 265.

- Li, W.; He, C.; Fang, J.; Zheng, J.; Fu, H.; Yu, L. Semantic Segmentation-Based Building Footprint Extraction Using Very High-Resolution Satellite Images and Multi-Source GIS Data. Remote Sens. 2019, 11, 403.

- Yang, M.; Yuan, Y.; Liu, G. SDUNet: Road extraction via spatial enhanced and densely connected UNet. Pattern Recognit. 2022, 126, 108549.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wong, S.C.; Gatt, A.; Stamatescu, V.; McDonnell, M.D. Understanding data augmentation for classification: When to warp? In Proceedings of the International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 30 November–2 December 2016; pp. 1–6.

- Udawalpola, M.; Hasan, A.; Liljedahl, A.K.; Soliman, A.; Witharana, C. Operational-Scale GeoAI for Pan-Arctic Permafrost Feature Detection from High-Resolution Satellite Imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2021, XLIV-M-3-2, 175–180.

- Udawalpola, M.R.; Hasan, A.; Liljedahl, A.; Soliman, A.; Terstriep, J.; Witharana, C. An Optimal GeoAI Workflow for Pan-Arctic Permafrost Feature Detection from High-Resolution Satellite Imagery. Photogramm. Eng. Remote Sens. 2022, 88, 181–188.

| Reference | Study Area | Data | Spatial Resolution (in Order of Listed Data, “Field Survey” Omitted) | Method | Feature(s) of Interest |
|---|---|---|---|---|---|
| Kumpula et al., 2006 [25] | Bovanenkovo gas field, Yamal Peninsula (West Siberia) | Field survey, QuickBird-2 (panchromatic, multispectral), ASTER VNIR, Landsat (TM, MSS) | 0.61 m, 2.5 m, 15 m, 30 m, 80 m | Manual digitization | Quarries, power lines, roads, winter roads, drill towers, barracks |
| Kumpula et al., 2010 [26] | Bovanenkovo gas field, Yamal Peninsula (West Siberia) | Field survey, QuickBird-2 (pan, multi), ASTER VNIR, SPOT (pan, multi), Landsat (ETM7, TM, MSS) | 0.63 m, 2.4 m, 15 m, 10 m, 20 m, 30 m, 30 m, 80 m | Manual digitization | Roads, impervious cover, barracks, winter roads, settlements, quarries |
| Kumpula et al., 2011 [27] | Bovanenkovo gas field and Toravei oil field, Yamal Peninsula (West Siberia) | Field survey, QuickBird-2 (pan, multi), ASTER VNIR, SPOT (multi), Landsat (ETM7, TM, MSS) | 0.63 m, 2.4 m, 15 m, 10 m, 20 m, 30 m, 30 m | Manual digitization | Buildings, roads, sand quarries, pipelines |
| Kumpula et al., 2012 [23] | Bovanenkovo gas field, Yamal Peninsula (West Siberia) | Field survey, QuickBird-2 (pan, multi), GeoEye, ASTER VNIR, SPOT (multi), Landsat (ETM7, TM, MSS) | 0.63 m, 2.4 m, 1.65 m, 15 m, 20 m, 30 m, 30 m, 70 m | Manual digitization | Pipelines, powerlines, drilling towers, roads, impervious cover, barracks, settlements, quarries |
| Raynolds et al., 2014 [16] | Prudhoe Bay Oilfield, Alaska | Aerial photography (B&W, color, color infrared) | 1 ft resolution for two images. Map scale was then used to describe the rest of the imagery. Scales are as follows: 1:3000, 1:6000, 1:12,000, 1:18,000, 1:24,000, 1:60,000, 1:68,000, 1:120,000 | Manual digitization | Roads, gravel pads, excavations, pipelines, powerlines, fences, canals, gravel and construction debris |
| Gadal and Ouerghemmi, 2019 [28] | Yakutsk, Russia | SPOT-6 (pan, multi), Sentinel-2 (multi) | 1.5 m, 6 m, 10 m | Semi-automated (object-based image analysis) | Houses, other structures |
| Ourng et al., 2019 [29] | Surgut, Russia | Sentinel-1 (SAR), Sentinel-2 (multi), Landsat (TM, MSS) | 10 m, 10 m, 30 m, 60 m | Automated (machine learning) | Built-up area |
| Bartsch et al., 2020 [22] | Pan-Arctic, within 100 km of the Arctic coast | Sentinel-1 (SAR) and Sentinel-2 (multi) | 10 m, 10 m | Automated (machine learning and deep learning) | Buildings, roads, other human-impacted areas |
| Ardelean et al., 2020 [24] | Bovanenkovo gas field, Yamal Peninsula (West Siberia) | QuickBird-2 (pan, multi), GeoEye-1 (pan, multi) | 0.6 m, 2.4 m, 0.4 m, 1.8 m | Manual digitization | Buildings, roads |

| Study Area | Sensor | Acquisition Date | Spatial Resolution (m) |
|---|---|---|---|
| Utqiagvik | WV-02 | 8 September 2014 | 0.72 × 0.87 |
| Utqiagvik | QB-02 | 1 August 2002 | 0.67 × 0.71 |
| Prudhoe Bay | WV-02 | 7 September 2014 | 0.50 × 0.50 |
| Prudhoe Bay | WV-02 | 7 September 2014 | 0.50 × 0.50 |
| Prudhoe Bay | QB-02 | 21 August 2009 | 0.62 × 0.58 |
| Prudhoe Bay | QB-02 | 21 August 2009 | 0.62 × 0.60 |

| Study Area | Residential/Commercial | Public | Industrial | Road |
|---|---|---|---|---|
| Utqiagvik | 1243 | 88 | n/a | 223 |
| Prudhoe Bay | n/a | n/a | 102 | 30 |

| Split | Background | Residential/Commercial | Public | Industrial | Road |
|---|---|---|---|---|---|
| Training | 6,528,038 | 352,686 | 155,131 | 525,418 | 237,511 |
| Validation | 883,507 | 71,180 | 33,795 | 54,917 | 70,713 |
| Testing | 809,311 | 52,387 | 30,600 | 177,327 | 44,487 |
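The counts above lend themselves to a quick arithmetic check. The following is a minimal sketch that assumes the values are per-class pixel counts (the table does not label its units) and that tiles match the 256 × 256 pixel input size listed in the hyperparameter table. The names `PIXELS`, `TILE_PIXELS`, `tile_count`, and `class_shares` are illustrative and do not come from the paper.

```python
# Per-class counts copied from the table above (assumed to be pixel counts).
CLASSES = ["Background", "Residential/Commercial", "Public", "Industrial", "Road"]
PIXELS = {
    "Training": [6_528_038, 352_686, 155_131, 525_418, 237_511],
    "Validation": [883_507, 71_180, 33_795, 54_917, 70_713],
    "Testing": [809_311, 52_387, 30_600, 177_327, 44_487],
}

# Assumption: one tile is a 256 x 256 input, as in the hyperparameter table.
TILE_PIXELS = 256 * 256


def tile_count(counts):
    """Return (whole tiles, leftover pixels) for one split."""
    return divmod(sum(counts), TILE_PIXELS)


def class_shares(counts):
    """Return each class's fraction of the split's total pixel count."""
    total = sum(counts)
    return {name: n / total for name, n in zip(CLASSES, counts)}


if __name__ == "__main__":
    for split, counts in PIXELS.items():
        tiles, leftover = tile_count(counts)
        shares = class_shares(counts)
        summary = ", ".join(f"{k}: {v:.1%}" for k, v in shares.items())
        print(f"{split}: {tiles} tiles, {leftover} leftover pixels | {summary}")
```

Under these assumptions each split sums to a whole number of 256 × 256 tiles, and the background class accounts for more than 70% of every split.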

| Hyperparameter | Value/Type |
|---|---|
| Input size | 256 × 256 pixels |
| Batch size | 8 |
| Epochs | 60 |
| Loss function | Dice Loss |
| Optimizer | Adam |
| Learning rate | 0.001 |
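The table names Dice loss, but its exact formulation is not given here. As a hedged illustration, one common soft Dice formulation averaged over classes can be sketched in plain Python. The function name `dice_loss`, the smoothing term `eps`, and the unweighted per-class averaging are assumptions made for this sketch, not the authors' implementation; a real training pipeline would compute the same quantity on framework tensors.

```python
def dice_loss(probs, onehot, eps=1e-6):
    """Soft Dice loss averaged over classes (illustrative formulation).

    probs:  list of per-class lists of predicted probabilities, one per pixel.
    onehot: list of per-class lists of 0/1 ground-truth labels, one per pixel.
    eps:    small smoothing term (assumed value) that avoids division by zero
            when a class is absent from both prediction and ground truth.
    """
    scores = []
    for p_cls, g_cls in zip(probs, onehot):
        intersection = sum(p * g for p, g in zip(p_cls, g_cls))
        denominator = sum(p_cls) + sum(g_cls)
        scores.append((2.0 * intersection + eps) / (denominator + eps))
    # Loss is one minus the mean per-class Dice coefficient.
    return 1.0 - sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy example: 2 classes, 4 pixels.
    truth = [[1, 1, 0, 0], [0, 0, 1, 1]]
    good = [[0.9, 0.8, 0.1, 0.2], [0.1, 0.2, 0.9, 0.8]]
    bad = [[0.1, 0.2, 0.9, 0.8], [0.9, 0.8, 0.1, 0.2]]
    print("loss for a good prediction:", dice_loss(good, truth))
    print("loss for a poor prediction:", dice_loss(bad, truth))
```

Because the Dice coefficient is a ratio of overlap to total class area, the loss approaches 0 for a perfect prediction and 1 when prediction and ground truth do not overlap at all.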

| Augmentation Method | Class | Precision | Recall | F1-Score | Average F1-Score |
|---|---|---|---|---|---|
| Transposition | Background | 0.92 | 0.95 | 0.94 | 0.83 |
| Transposition | Road | 0.73 | 0.65 | 0.69 | |
| Transposition | Residential/Commercial | 0.83 | 0.64 | 0.72 | |
| Transposition | Public | 0.91 | 0.94 | 0.93 | |
| Transposition | Industrial | 0.87 | 0.84 | 0.85 | |
| All | Background | 0.93 | 0.94 | 0.94 | 0.82 |
| All | Road | 0.73 | 0.65 | 0.69 | |
| All | Residential/Commercial | 0.81 | 0.65 | 0.72 | |
| All | Public | 0.84 | 0.97 | 0.90 | |
| All | Industrial | 0.86 | 0.87 | 0.87 | |
| Random 90° rotation | Background | 0.89 | 0.96 | 0.93 | 0.69 |
| Random 90° rotation | Road | 0.00 | 0.00 | 0.00 | |
| Random 90° rotation | Residential/Commercial | 0.85 | 0.69 | 0.76 | |
| Random 90° rotation | Public | 0.88 | 0.94 | 0.91 | |
| Random 90° rotation | Industrial | 0.88 | 0.86 | 0.87 | |
| None | Background | 0.92 | 0.96 | 0.94 | 0.64 |
| None | Road | 0.77 | 0.67 | 0.71 | |
| None | Residential/Commercial | 0.71 | 0.72 | 0.71 | |
| None | Public | 0.00 | 0.00 | 0.00 | |
| None | Industrial | 0.81 | 0.84 | 0.82 | |
| Horizontal flip | Background | 0.91 | 0.95 | 0.93 | 0.63 |
| Horizontal flip | Road | 0.74 | 0.70 | 0.72 | |
| Horizontal flip | Residential/Commercial | 0.60 | 0.77 | 0.67 | |
| Horizontal flip | Public | 0.00 | 0.00 | 0.00 | |
| Horizontal flip | Industrial | 0.85 | 0.76 | 0.80 | |
| Vertical flip | Background | 0.93 | 0.93 | 0.93 | 0.62 |
| Vertical flip | Road | 0.72 | 0.69 | 0.70 | |
| Vertical flip | Residential/Commercial | 0.51 | 0.72 | 0.60 | |
| Vertical flip | Public | 0.00 | 0.00 | 0.00 | |
| Vertical flip | Industrial | 0.82 | 0.88 | 0.85 | |
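The F1-score is the harmonic mean of precision and recall. The sketch below recomputes it for the Transposition rows of the table above and takes an unweighted mean across the five classes. Treating the "Average F1-Score" column as this unweighted (macro) mean is an assumption, although the reported values are consistent with it. The names `f1_score`, `TRANSPOSITION`, and `macro_f1` are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        # Covers rows reported as 0.00 / 0.00, where a class was never detected.
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the Transposition rows of the table.
TRANSPOSITION = {
    "Background": (0.92, 0.95),
    "Road": (0.73, 0.65),
    "Residential/Commercial": (0.83, 0.64),
    "Public": (0.91, 0.94),
    "Industrial": (0.87, 0.84),
}


def macro_f1(rows):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    scores = [f1_score(p, r) for p, r in rows.values()]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    for name, (p, r) in TRANSPOSITION.items():
        print(f"{name}: F1 = {f1_score(p, r):.3f}")
    print(f"Macro-averaged F1 = {macro_f1(TRANSPOSITION):.3f}")
```

Because the tabulated precision and recall are already rounded to two decimals, values recomputed this way can differ from the reported F1-scores by about 0.01.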

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Manos, E.; Witharana, C.; Udawalpola, M.R.; Hasan, A.; Liljedahl, A.K. Convolutional Neural Networks for Automated Built Infrastructure Detection in the Arctic Using Sub-Meter Spatial Resolution Satellite Imagery. Remote Sens. 2022, 14, 2719. https://doi.org/10.3390/rs14112719
