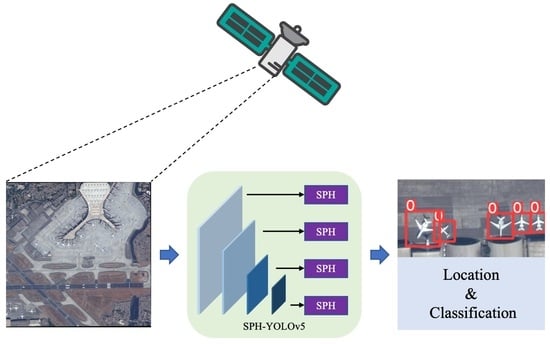

Swin-Transformer-Enabled YOLOv5 with Attention Mechanism for Small Object Detection on Satellite Images

Abstract

1. Introduction

- New feature fusion layers and a prediction head are added from the shallow layer of YOLOv5 for the first time to detect small objects, because the shallow layer preserves feature information to the greatest extent.

- The original convolutional prediction heads in YOLOv5 are replaced with Swin Transformer Prediction Heads (SPHs) for the first time to reduce computational complexity.

- Normalization-based Attention Modules (NAMs), which apply a sparsity penalty to the attention weights, are integrated into YOLOv5 to improve performance.

- Our proposed SPH-YOLOv5 achieves a mean Average Precision (mAP) of 0.716 on the DOTA dataset, which contains complex objects, and 0.980 on the NWPU-VHR10 dataset, which contains relatively simple objects. These are the highest accuracies among the compared models.
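The complexity argument behind the SPH contribution can be illustrated with the cost estimates given in the Swin Transformer paper, which compare global multi-head self-attention (MSA) with window-based self-attention (W-MSA) on an h × w feature map with C channels and window size M. The sketch below is only a rough illustration: the feature-map size, channel count, and window size are placeholder values (the Swin-T stage-1 configuration), not the settings of SPH-YOLOv5, and the function names are hypothetical.

```python
def msa_flops(h: int, w: int, c: int) -> int:
    """Approximate cost of global self-attention: 4*h*w*C^2 + 2*(h*w)^2*C."""
    tokens = h * w
    return 4 * tokens * c * c + 2 * tokens * tokens * c


def wmsa_flops(h: int, w: int, c: int, m: int) -> int:
    """Approximate cost of window-based self-attention: 4*h*w*C^2 + 2*M^2*h*w*C."""
    tokens = h * w
    return 4 * tokens * c * c + 2 * m * m * tokens * c


if __name__ == "__main__":
    # Placeholder values (assumption): 56 x 56 tokens, 96 channels, 7 x 7 windows.
    h, w, c, m = 56, 56, 96, 7
    global_cost = msa_flops(h, w, c)
    window_cost = wmsa_flops(h, w, c, m)
    print(f"MSA:   {global_cost:,}")
    print(f"W-MSA: {window_cost:,}")
    print(f"ratio: {global_cost / window_cost:.1f}x")
```

The attention term of MSA grows quadratically with the number of tokens h·w, whereas the corresponding W-MSA term grows linearly for a fixed M, so the gap widens on the large, high-resolution feature maps that small-object detection relies on.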

2. Related Work

2.1. Object Detection

2.2. Data Augmentation

2.3. The Attention Mechanism

3. Theoretical Model

3.1. Review of YOLOv5

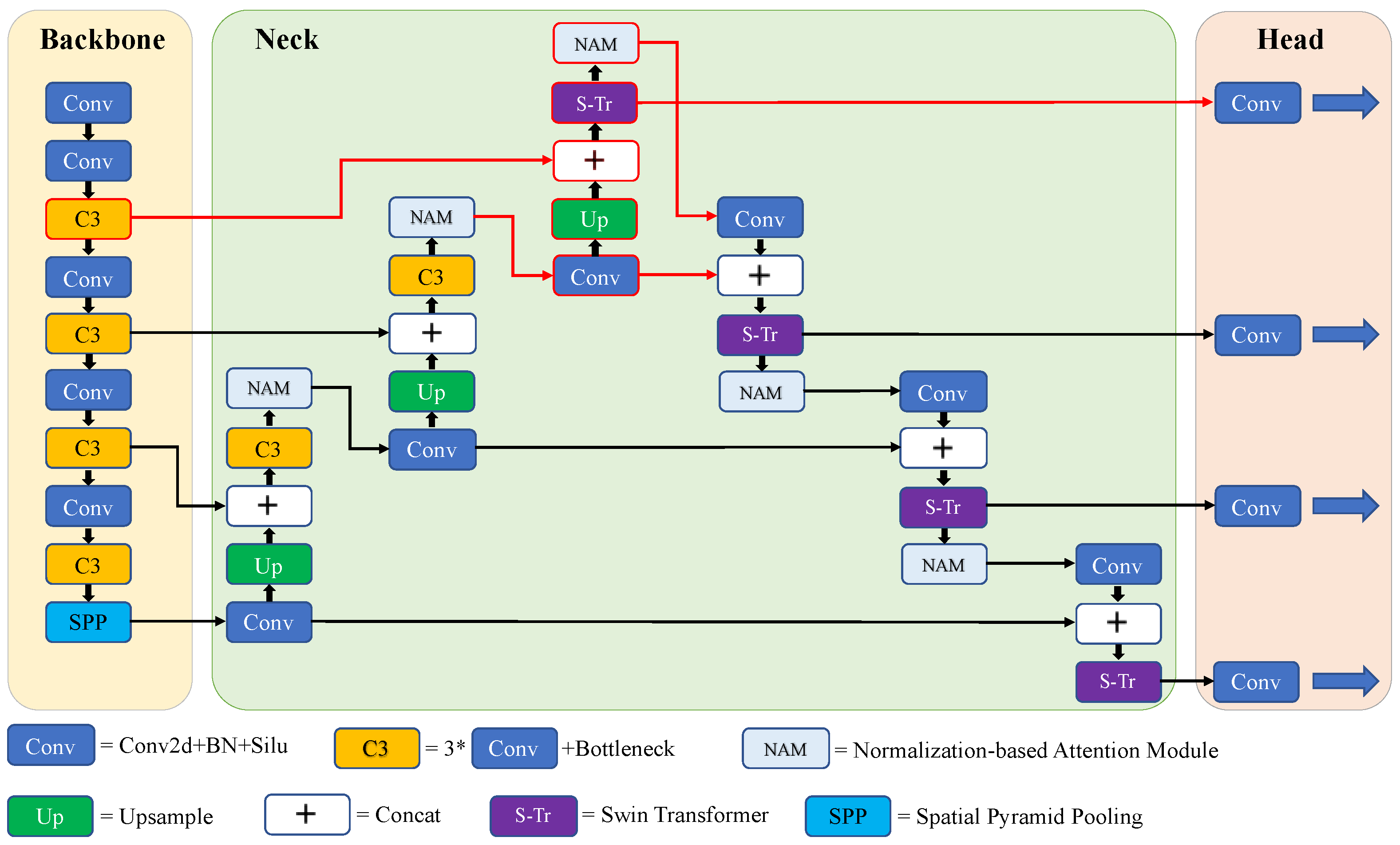

3.2. SPH-YOLOv5

3.2.1. Proposed Prediction Head for Small Objects

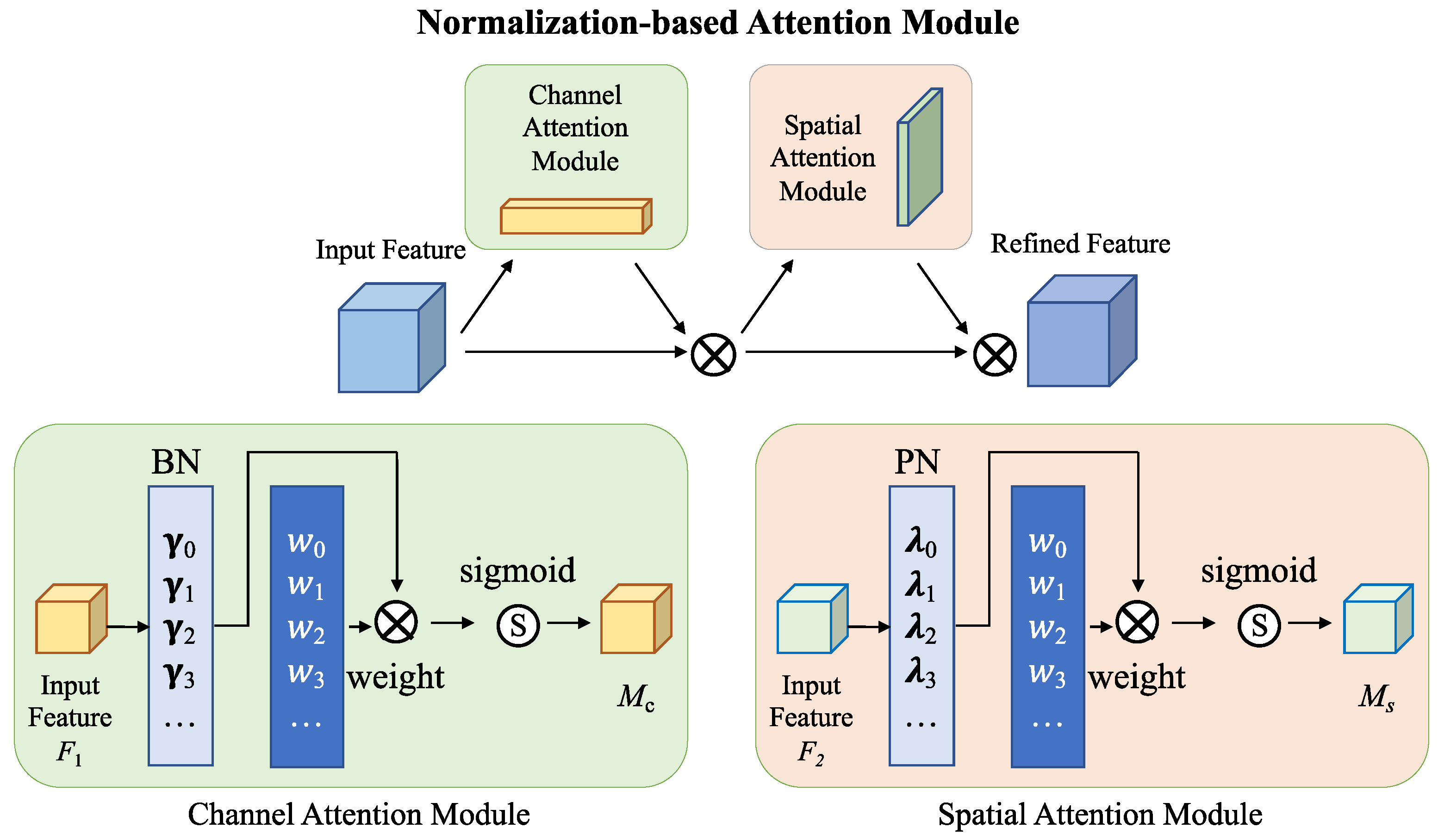

3.2.2. Normalization-Based Attention Module (NAM)

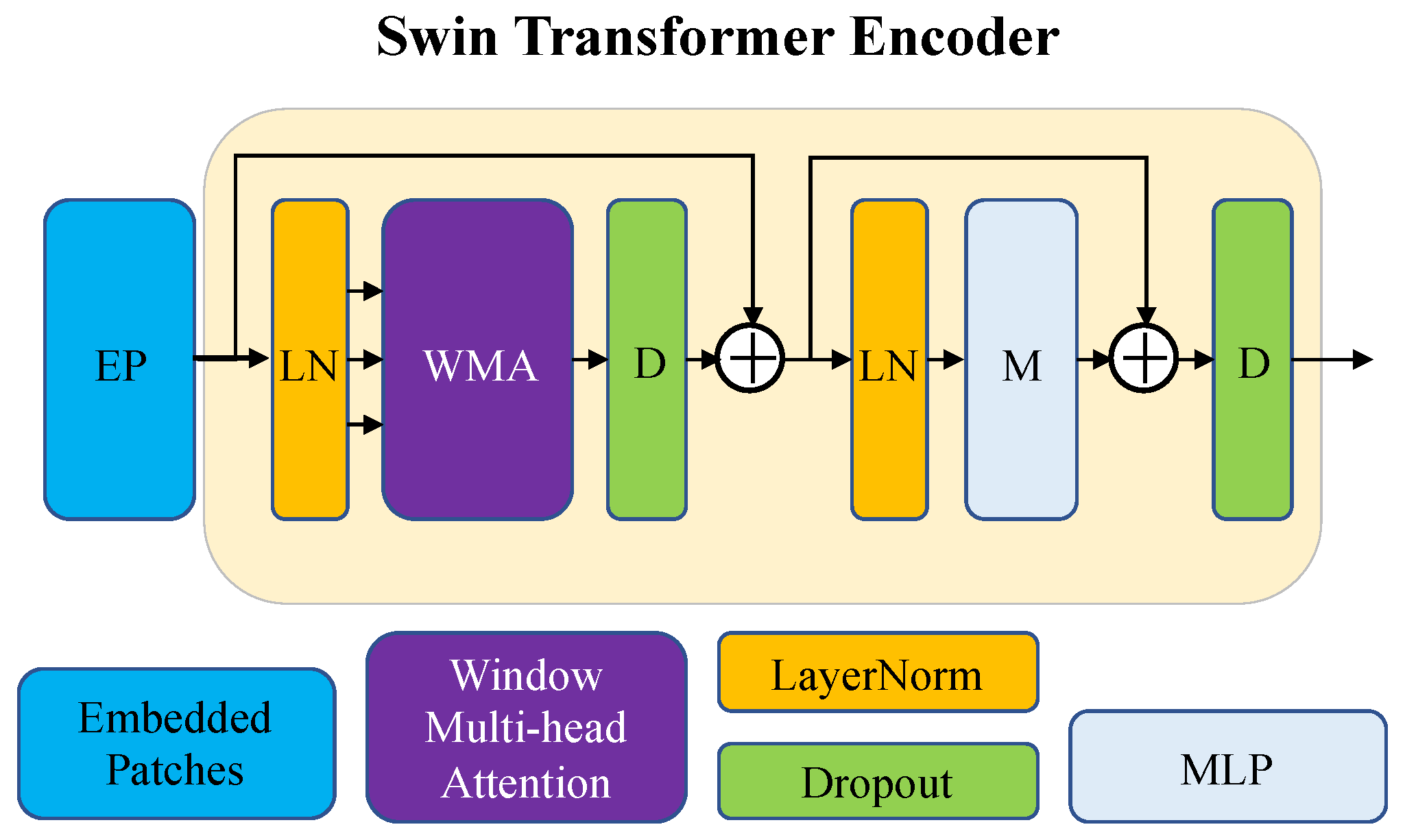

3.2.3. Swin Transformer Encoder Block

3.2.4. Comparison with TPH-YOLOv5

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Implementation Details

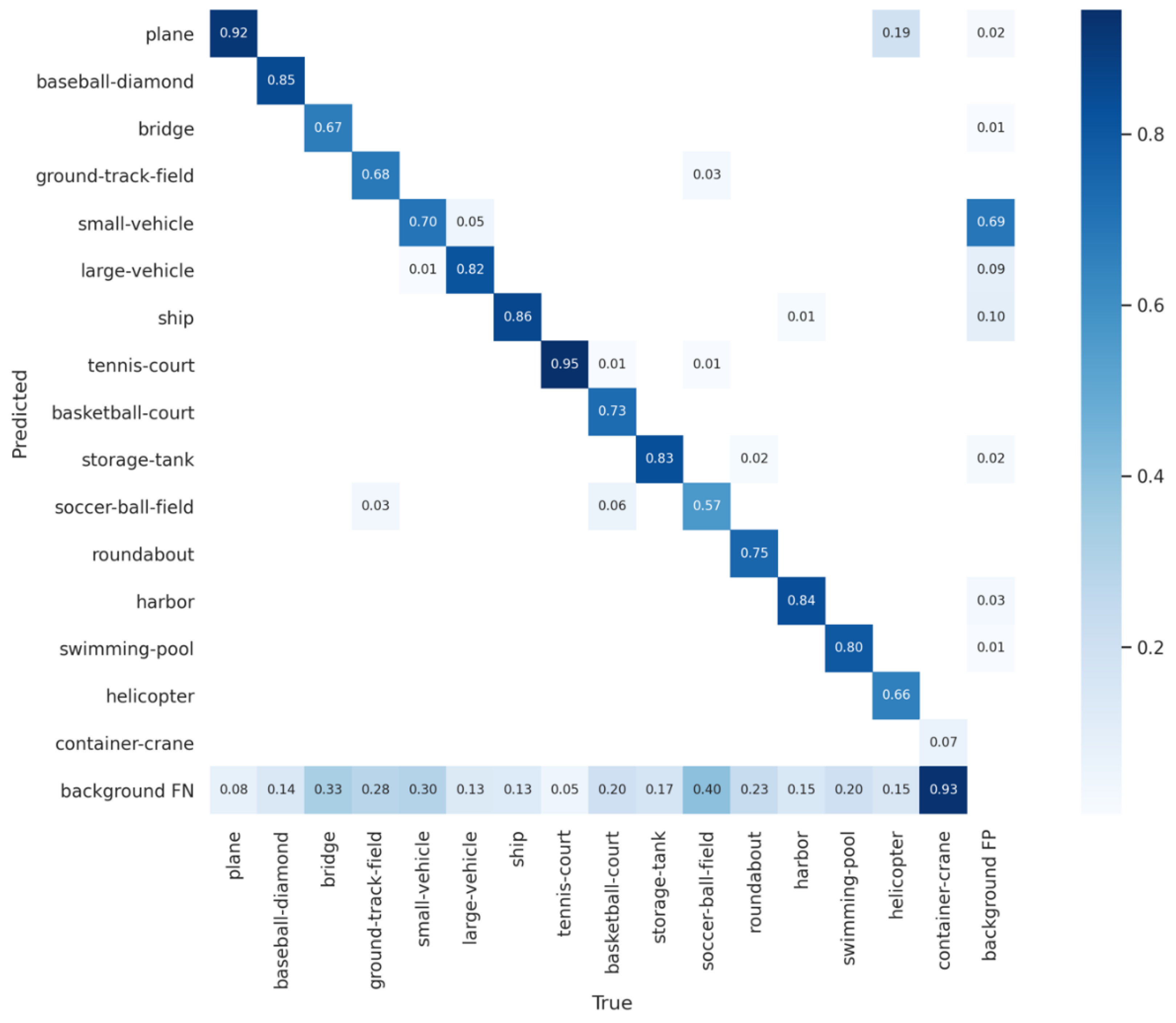

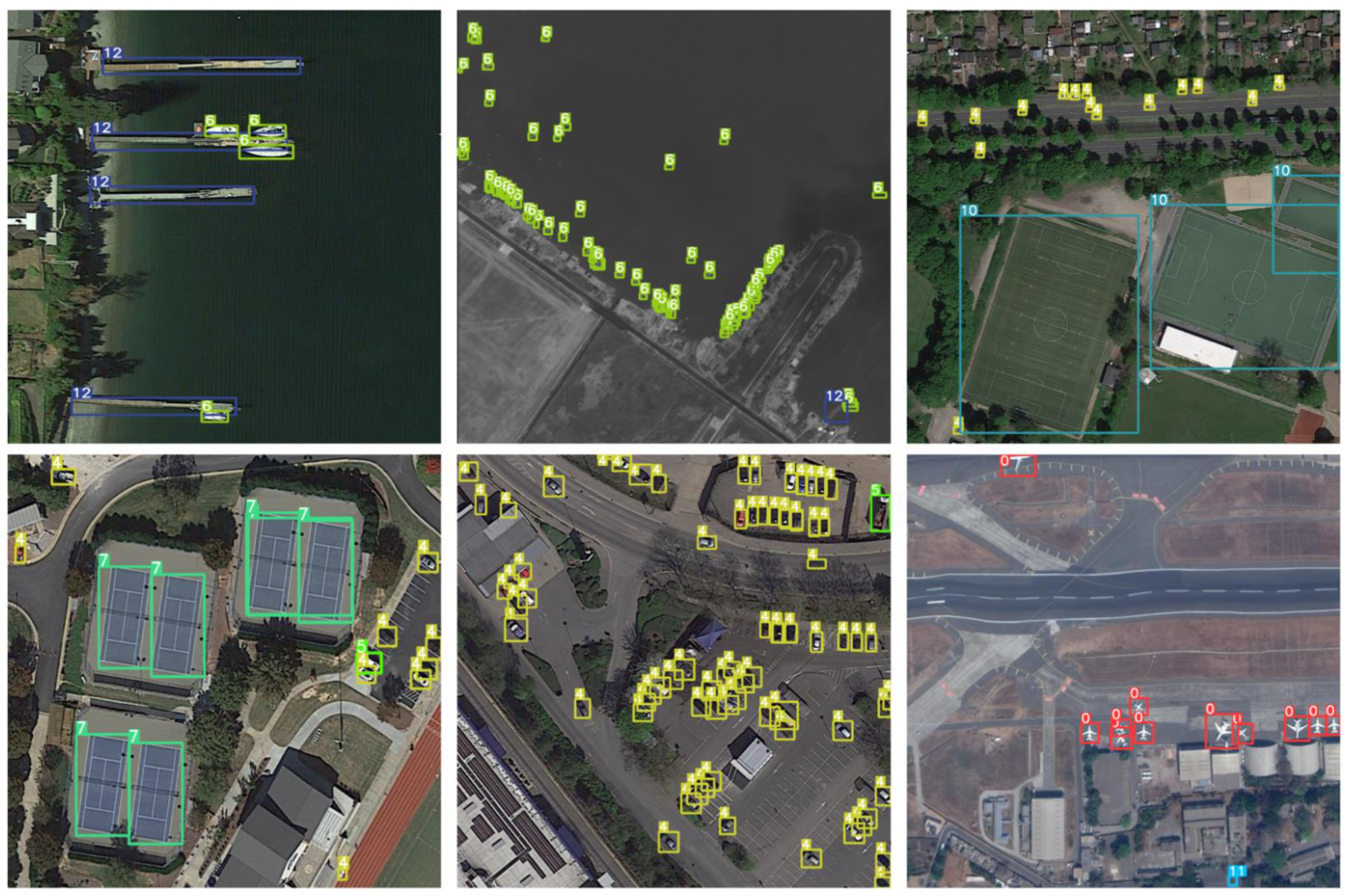

4.3. Experimental Results

4.4. Ablation Experiments

4.5. Hyperparameter Exploration

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | 10–50 Pixels | 50–300 Pixels | >300 Pixels |
|---|---|---|---|
| NWPU-VHR10 | 15% | 83% | 2% |
| DOTA | 79% | 20% | 1% |
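A size distribution like the one in the table above can be tallied from bounding-box annotations with a few lines of code. The following is a minimal sketch, not the authors' procedure: it assumes that object size is the longer side of the horizontal bounding box in pixels, that the bin edges fall at 10, 50, and 300 pixels as in the column headers, and that boxes arrive as `(width, height)` pairs. The function names are hypothetical.

```python
from collections import Counter


def size_bin(width: float, height: float) -> str:
    """Assign a box to one of the pixel-size ranges used in the table."""
    size = max(width, height)  # assumption: size = longer side of the box
    if size < 10:
        return "<10"  # below the smallest range listed in the table
    if size < 50:
        return "10-50"
    if size <= 300:
        return "50-300"
    return ">300"


def size_distribution(boxes):
    """Return the fraction of boxes that falls into each size range."""
    counts = Counter(size_bin(w, h) for w, h in boxes)
    total = sum(counts.values())
    return {name: counts[name] / total for name in counts}


if __name__ == "__main__":
    # Toy (width, height) pairs, purely illustrative.
    toy_boxes = [(20, 20), (30, 10), (100, 60), (350, 90)]
    print(size_distribution(toy_boxes))
```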

| Method | P | R | mAP |
|---|---|---|---|
| Faster R-CNN | 0.925 | 0.917 | 0.936 |
| RetinaNet | 0.912 | 0.912 | 0.928 |
| SSD | 0.903 | 0.908 | 0.906 |
| YOLOv3 | 0.913 | 0.919 | 0.927 |
| Scaled-YOLOv4 | 0.957 | 0.921 | 0.940 |
| YOLOv5 | 0.961 | 0.955 | 0.975 |
| TPH-YOLOv5 | 0.963 | 0.956 | 0.978 |
| SPH-YOLOv5 | 0.965 | 0.967 | 0.980 |

| Method | P | R | mAP |
|---|---|---|---|
| Faster R-CNN | 0.710 | 0.594 | 0.631 |
| RetinaNet | 0.714 | 0.585 | 0.622 |
| SSD | 0.696 | 0.522 | 0.561 |
| YOLOv3 | 0.715 | 0.546 | 0.587 |
| Scaled-YOLOv4 | 0.728 | 0.583 | 0.632 |
| YOLOv5 | 0.760 | 0.601 | 0.645 |
| TPH-YOLOv5 | 0.785 | 0.643 | 0.683 |
| SPH-YOLOv5 | 0.806 | 0.683 | 0.716 |

| Method | Inference Time (per Image) |
|---|---|
| YOLOv5 | 10.5 ms |
| TPH-YOLOv5 | 32.5 ms |
| SPH-YOLOv5 | 19.5 ms |
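The per-image times above are easier to compare when expressed as throughput and as a ratio to the YOLOv5 baseline. The sketch below does only that arithmetic on the three reported values. It assumes single-image, back-to-back inference (no batching or I/O overhead), and the helper names are hypothetical.

```python
# Per-image inference times in milliseconds, copied from the table above.
inference_ms = {"YOLOv5": 10.5, "TPH-YOLOv5": 32.5, "SPH-YOLOv5": 19.5}


def to_fps(ms_per_image: float) -> float:
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / ms_per_image


def relative_time(method: str, baseline: str = "YOLOv5") -> float:
    """Latency of `method` as a multiple of the baseline latency."""
    return inference_ms[method] / inference_ms[baseline]


if __name__ == "__main__":
    for name, ms in inference_ms.items():
        print(f"{name}: {to_fps(ms):.1f} images/s, "
              f"{relative_time(name):.2f}x the YOLOv5 time")
```

On these figures, SPH-YOLOv5 needs 60% of the per-image time of TPH-YOLOv5 and a little under twice the time of plain YOLOv5.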

| Methods | P | R | mAP |
|---|---|---|---|
| YOLOv5 | 0.760 | 0.601 | 0.645 |
| YOLOv5 + P2 | 0.818 | 0.625 | 0.675 |
| YOLOv5 + P2 + Transformer | 0.804 | 0.643 | 0.683 |
| YOLOv5 + P2 + Swin Transformer | 0.805 | 0.661 | 0.690 |
| YOLOv5 + P2 + CBAM | 0.812 | 0.667 | 0.689 |
| SPH-YOLOv5 | 0.806 | 0.683 | 0.716 |
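One way to read the ablation table is to express each variant's mAP as a gain over the plain YOLOv5 baseline. The sketch below performs that subtraction on the reported values. Note that the Transformer, Swin Transformer, and CBAM rows are alternatives built on top of the P2 head rather than cumulative steps, so the gains should not be summed. The function name is hypothetical.

```python
# (variant, mAP) pairs copied from the ablation table above.
ablation_map = [
    ("YOLOv5", 0.645),
    ("YOLOv5 + P2", 0.675),
    ("YOLOv5 + P2 + Transformer", 0.683),
    ("YOLOv5 + P2 + Swin Transformer", 0.690),
    ("YOLOv5 + P2 + CBAM", 0.689),
    ("SPH-YOLOv5", 0.716),
]


def gains_over_baseline(rows):
    """Return each variant's mAP gain relative to the first (baseline) row."""
    baseline = rows[0][1]
    return {name: round(score - baseline, 3) for name, score in rows[1:]}


if __name__ == "__main__":
    for name, gain in gains_over_baseline(ablation_map).items():
        print(f"{name}: +{gain:.3f} mAP")
```

Read this way, the P2 head alone accounts for 0.030 of the total 0.071 mAP gain of the full model.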

| Type | P | R | mAP |
|---|---|---|---|
| Only using spatial attention | 0.802 | 0.664 | 0.709 |
| Only using channel attention | 0.801 | 0.663 | 0.706 |
| CBAM | 0.805 | 0.672 | 0.714 |
| NAM | 0.806 | 0.683 | 0.716 |

| Methods | Image Size | Batch Size | Optimizer | mAP |
|---|---|---|---|---|
| SPH-YOLOv5 | 1280 | 16 | SGD | 0.716 |
| SPH-YOLOv5-2 | 1024 | 16 | SGD | 0.711 |
| SPH-YOLOv5-3 | 640 | 16 | SGD | 0.705 |
| SPH-YOLOv5-4 | 1280 | 16 | Adam | 0.697 |
| SPH-YOLOv5-5 | 1280 | 8 | SGD | 0.703 |
| SPH-YOLOv5-6 | 1280 | 32 | SGD | 0.694 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; Lang, X.; Li, Z.; Wang, B. Swin-Transformer-Enabled YOLOv5 with Attention Mechanism for Small Object Detection on Satellite Images. Remote Sens. 2022, 14, 2861. https://doi.org/10.3390/rs14122861