Registration of Multisensor Images through a Conditional Generative Adversarial Network and a Correlation-Type Similarity Measure

Abstract

1. Introduction

1.1. Previous Work

1.1.1. Geometric Transformations

1.1.2. Similarity Measures

- (a) Area-based methods operate on the entire image area, usually relying on similarity and information-theoretic measures [1,16]. On the one hand, area-based methods are computationally heavier than feature-based strategies (see point (b)) because they must compute a functional over the whole image or over generally large image regions. On the other hand, the accuracy achievable by such techniques is generally higher than that achieved by feature-based algorithms [1].

- (b) Feature-based methods operate on spatial features extracted from the input and reference images rather than on the whole image area. They are generally faster but often less accurate than area-based methods, and the accuracy of the registration result depends on the accuracy of the feature extraction method used. Different strategies exist for extracting informative features. In particular, feature-point registration algorithms [1] extract a set of distinctive and highly informative individual points from both images and then find the geometric transformation that matches them. Feature points go by different names, including control points, tie points, and landmarks. Well-known approaches in this area are those based on scale-invariant feature transforms (SIFT) [17], speeded-up robust features (SURF) [18], maximally stable extremal regions (MSER) [19], and Harris point detectors [20]. Other features of interest may be curvilinear and can be extracted using edge detection algorithms [1], generalized Hough transforms [21], or stochastic geometry (e.g., marked point processes, MPPs) [22].

- (c) Hybrid methods aim to combine the accuracy of area-based methods with the limited computational burden of feature-based methods. An example is provided by [23], where image registration is initialized using a SIFT-based strategy, and the resulting parameters are then refined via an area-based solution. Similarly, the methods in [24,25] use an MPP model to extract, from planetary images, ellipsoidal features that represent craters. The result of this feature-based registration step is then further refined using an area-based strategy that makes use of the highly accurate mutual information similarity measure. Other kinds of hybrid registration methods are reported in [26], where global intensity measures are integrated with geometric configurational constraints, and in [27], where SIFT and mutual information are combined in a coarse-to-fine strategy.
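As an illustration of the area-based measures mentioned in points (a) and (c), the following minimal sketch estimates mutual information from a joint histogram and compares its value for an aligned and a shifted image pair. It is not the implementation used in the cited works: the function name `mutual_information`, the number of bins, and the synthetic test images are assumptions made only for this example.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """Histogram-based estimate of the mutual information (in nats) of two images."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()                 # joint probability table
    p_a = p_ab.sum(axis=1, keepdims=True)      # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)      # marginal of b, shape (1, bins)
    nz = p_ab > 0                              # skip empty cells to avoid log(0)
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a @ p_b)[nz])))


# Synthetic stand-ins (assumption): a random "reference" and an "input" whose
# gray levels are a nonlinear function of it, mimicking a different sensor.
rng = np.random.default_rng(0)
reference = rng.random((128, 128))
aligned = 1.0 - reference ** 2
shifted = np.roll(aligned, shift=5, axis=1)    # simulated 5-pixel misregistration

mi_aligned = mutual_information(reference, aligned)
mi_shifted = mutual_information(reference, shifted)
print(mi_aligned > mi_shifted)
```

An area-based method would evaluate such a measure over many candidate transformations and keep the one that maximizes it, which is why the cost grows with the size of the image region being compared.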

1.1.3. Optimization Strategies

1.1.4. Multisensor Image Registration

2. Materials and Methods

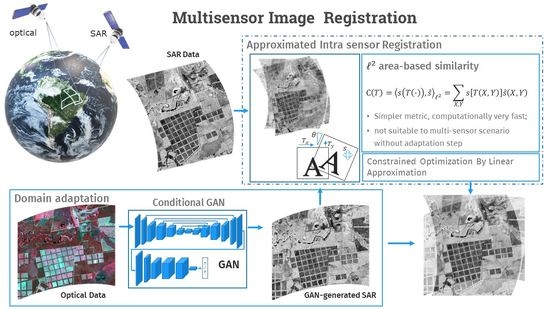

2.1. Assumptions and Overall Architecture of the Proposed Method

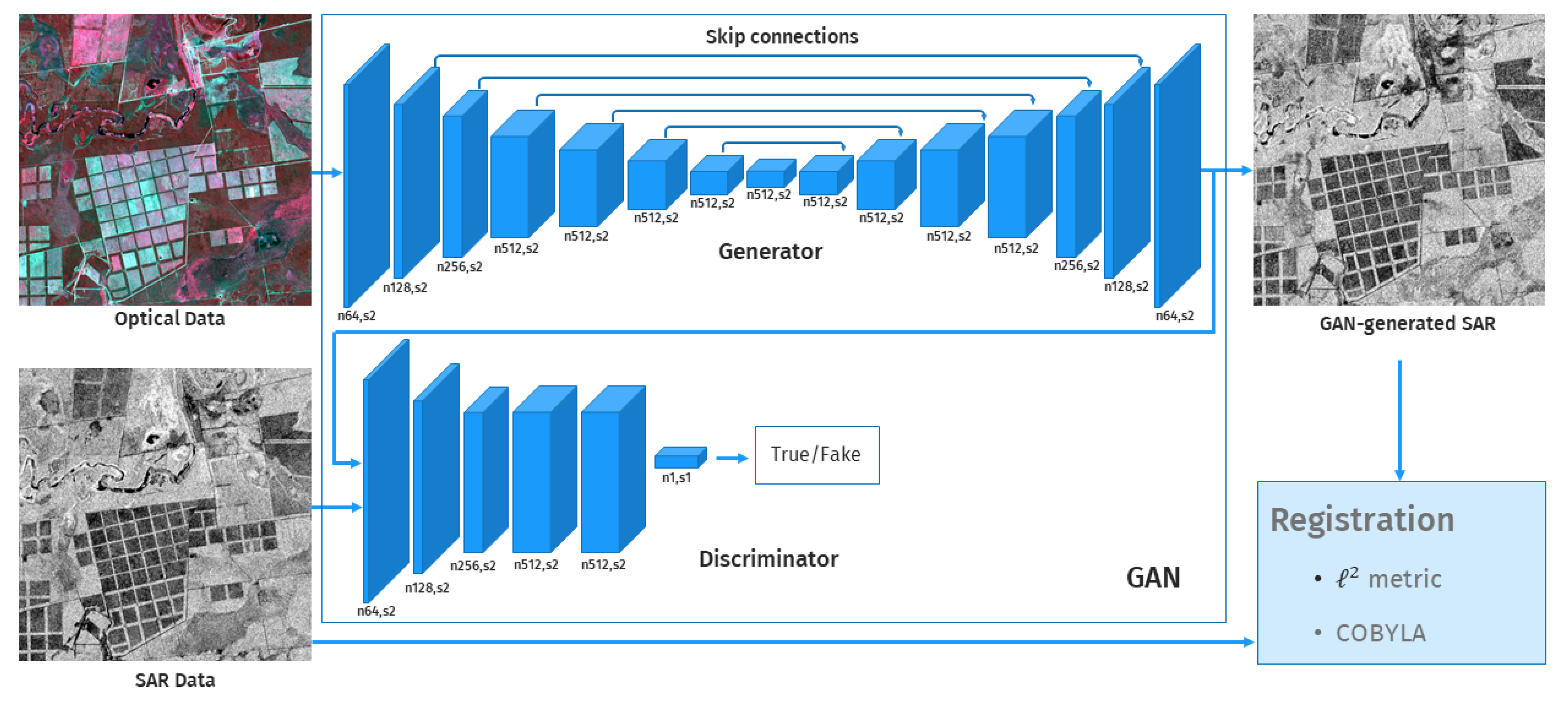

2.1.1. Conditional GAN Stage

2.1.2. Transformation Stage

2.1.3. Matching Strategy

2.2. Data Sets for Experiments

- (1)

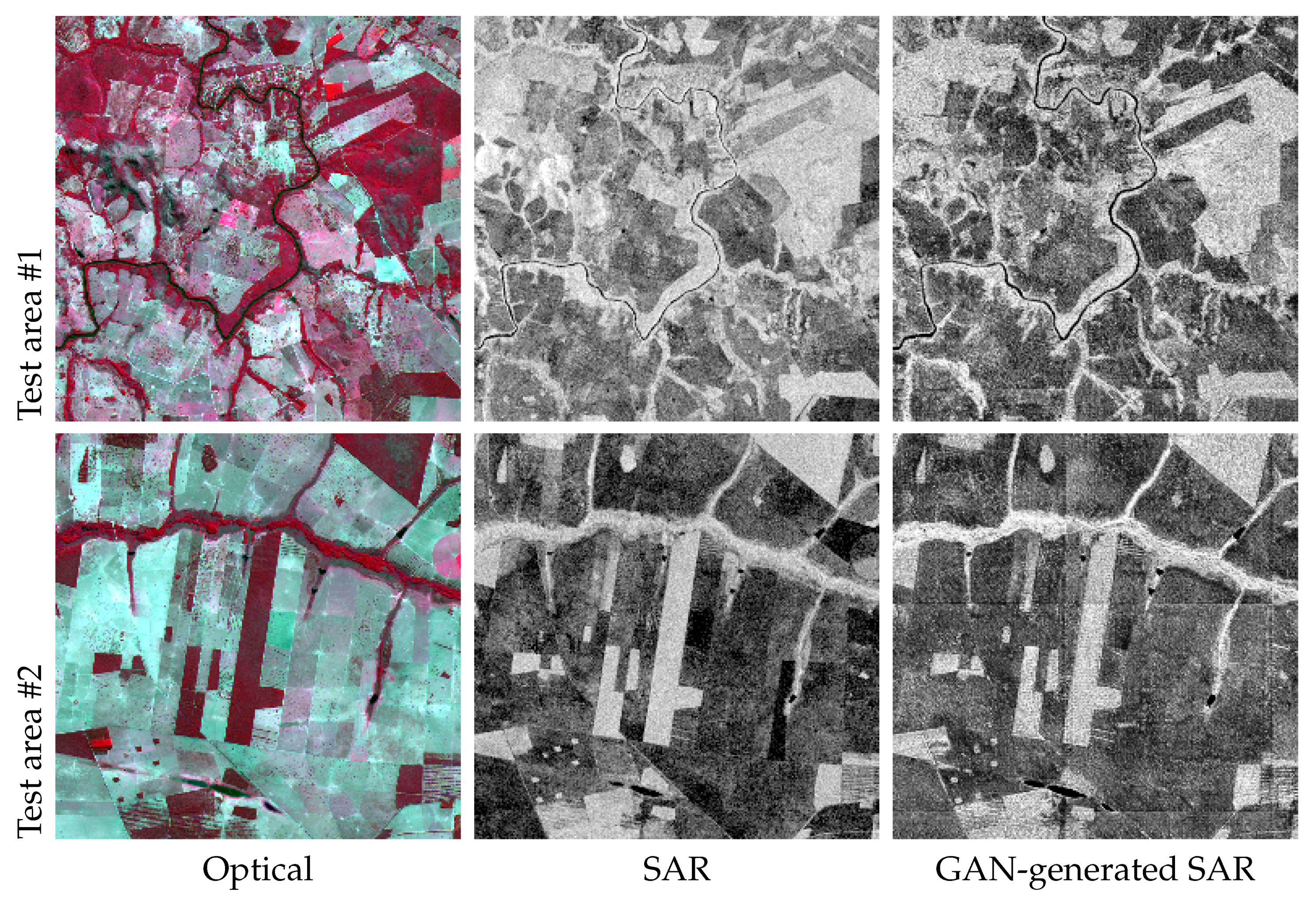

- Paraguay: The dataset is composed of Sentinel-1 (S1) SAR and Sentinel-2 (S2) optical data acquired in 2018 over Amazonia. The study area is north of Pozo Colorado, Paraguay, west of the namesake river, and is mainly composed of grass, crops, forests, and waterways. S2 provides multispectral data with 13 bands in the visible, near-infrared (NIR), and short wave infrared. The spatial resolution includes 10, 20, and 60 m depending on the bands, with 10 m available for the blue, green, red, and NIR channels. The spatial resolution of S1 is 5 m in stripmap mode.

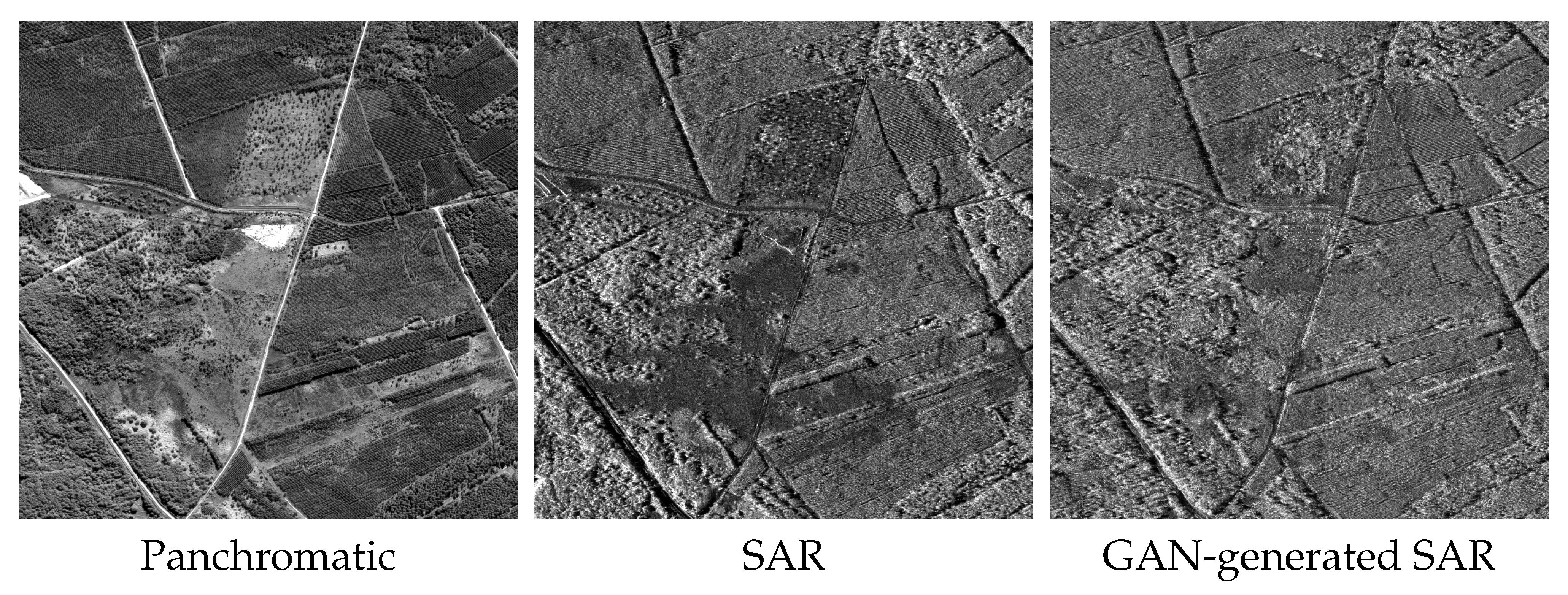

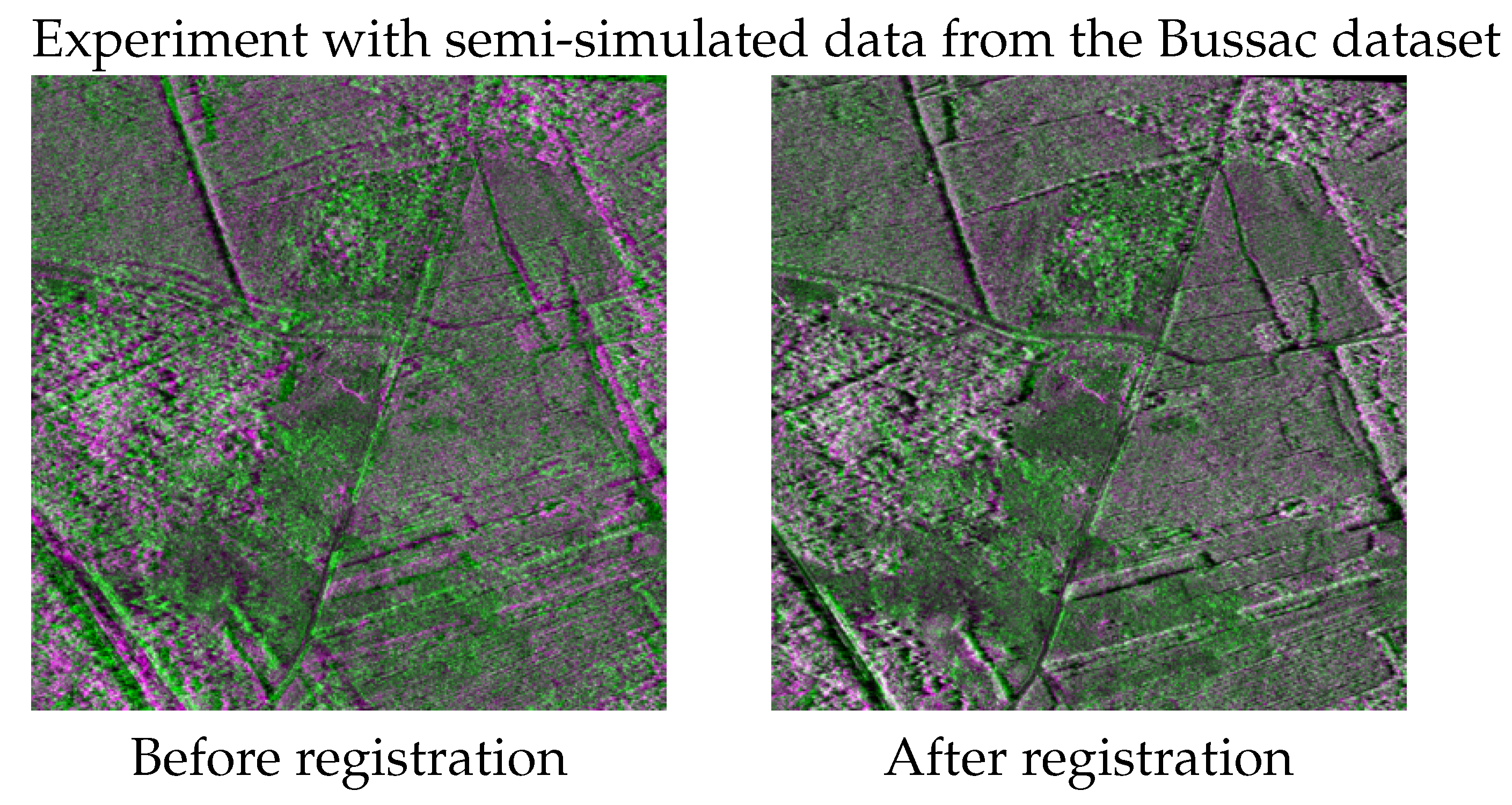

- (2) Bussac: The second dataset consists of a Pléiades panchromatic image and a COSMO-SkyMed SAR image acquired in Spotlight mode on an ascending orbit over a countryside area near Bussac-Forêt, France. The study zone is composed of woods, fields, roads, and a few buildings. The radar image was acquired in the right-looking direction and has a pixel spacing of 0.5 m, while its spatial resolution is approximately 1 m. The resolution of the panchromatic image is 0.5 m. The optical image has been projected into the radar geometry using the ALOS digital elevation model for the area of Bussac-Forêt.

- (3)

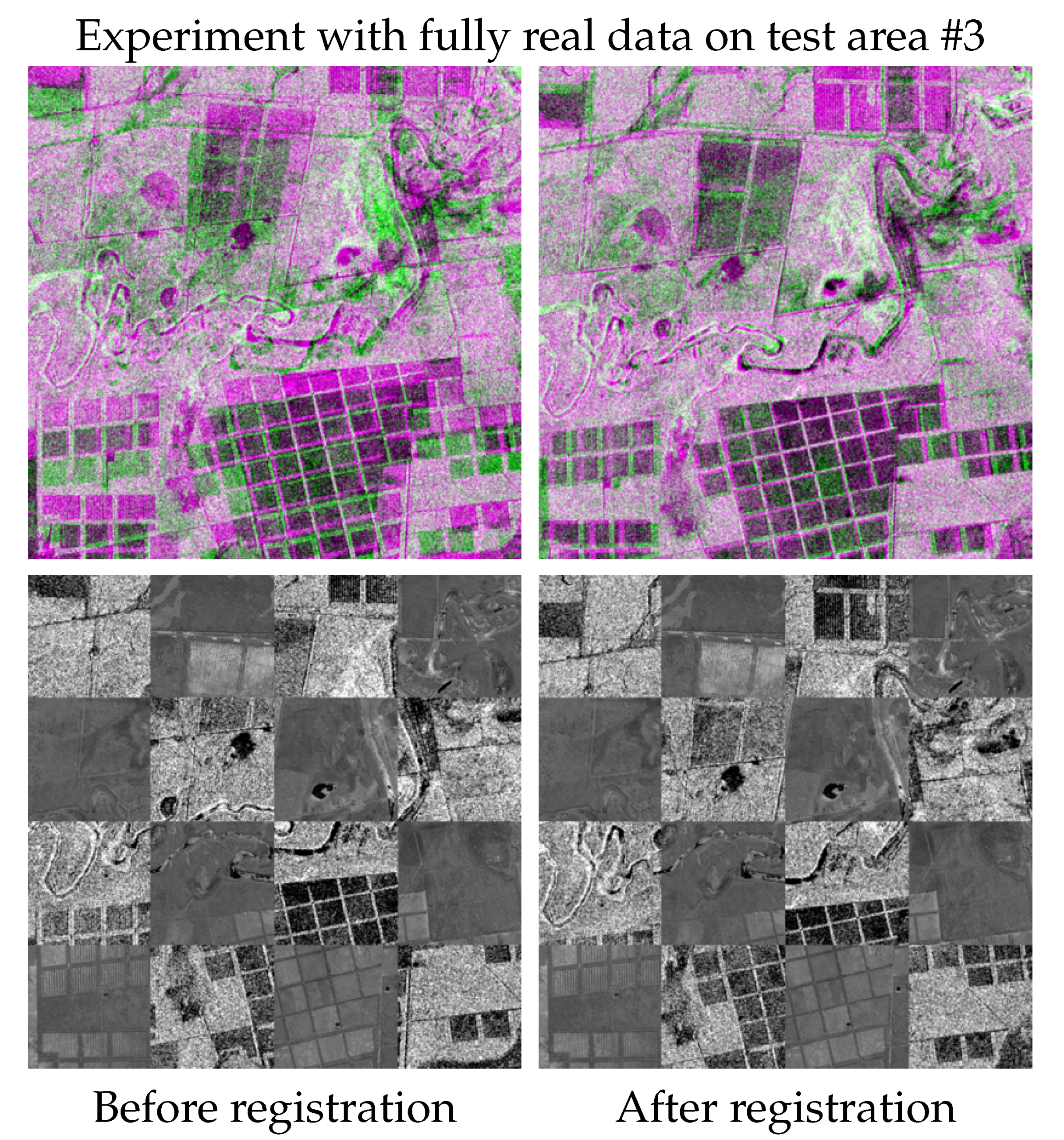

- Brazil: The third dataset is composed again of S1 and S2 data, acquired in 2018 over the Amazon. The area is over the city of Aquidauana, in the namesake region in Brazil. The landscape is composed of the city of Acquidauana, crops, forests, and some mountainous reliefs. The data composition is the same as in the Paraguay dataset. The proposed method, trained with the S1 and S2 data of the Paraguay dataset, was applied to this further dataset for testing purposes in order to investigate the robustness of an already trained model to variations in the distribution of the input data, provided they were acquired by the same sensors.

3. Results

3.1. Preprocessing and Setup

- (1) Paraguay: The red, green, and NIR optical channels with 10 m spatial resolution were considered for the experiments. The input SAR data were obtained by applying the multitemporal despeckling method in [69] to a time series of seven S1 acquisitions. According to the assumptions of the proposed approach, the optical and SAR images to be registered are supposed to share the same pixel lattice. The Paraguay dataset, however, corresponds to optical-SAR pairs with different spatial resolutions: S1 stripmap imagery and the S2 visible and NIR channels have 5 and 10 m resolutions, respectively. Therefore, as a preprocessing step, the S1 input was downsampled onto the pixel lattice of the S2 image prior to the application of the proposed method. The S2 and the despeckled SAR images were manually registered to be used for training the cGAN and testing the proposed method. The training set was composed of 187 patches ( pixels each) drawn from the eastern part of the scene. The number of training epochs was set to 250.

- (2) Bussac: The final SAR image was obtained by averaging the results of two different despeckling techniques applied to the COSMO-SkyMed image: a Wiener filter applied with a homomorphic filtering strategy (logarithmic scale) and the method in [70], which applies non-local filtering by means of wavelet shrinkage. No resampling was necessary in the case of the Bussac dataset because the spatial resolution of the Pléiades panchromatic image is equal to the pixel spacing of the COSMO-SkyMed Spotlight image (0.5 m). The entire COSMO-SkyMed image was approximately pixels, and around 75% of the scene was used to train the cGAN. The Pléiades panchromatic image was manually warped to the SAR grid, so that paired patches were available for training. Even after this manual step, the images could still exhibit residual subpixel error. The training set was made of 101 patches ( pixels each) drawn from the whole scene except for the southwest corner, which was used for testing the accuracy of the registration result. In this case, the default architecture of pix2pix, which is designed to operate with 3-channel imagery, was modified to map from the single-channel panchromatic domain to the single-channel SAR domain. The number of training epochs was experimentally fixed at 200. The amount of training data was, in fact, rather limited, so it was important to minimize the risk of overfitting.

- (3) Brazil: In this case, the data are of the same kind as those in the Paraguay dataset, and the same preprocessing strategy was adopted. Here, the S2 and the despeckled SAR images were manually registered only for testing purposes, since the cGAN trained on the Paraguay dataset was applied without any fine-tuning or retraining.
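The resampling and patch-extraction steps described for the Sentinel data can be sketched as follows. This is only an illustration under stated assumptions: the text does not specify the resampling kernel, so 2×2 block averaging is assumed here for the 5 m to 10 m conversion, and the patch size and stride are hypothetical placeholders rather than the values used in the experiments.

```python
import numpy as np


def downsample_by_block_mean(img, factor=2):
    """Average non-overlapping factor x factor blocks (assumed resampling kernel)."""
    h, w = img.shape
    h, w = h - h % factor, w - w % factor      # crop so both sides divide evenly
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def extract_patches(img, size, stride):
    """Cut square training patches on a regular grid (size and stride are placeholders)."""
    patches = []
    for r in range(0, img.shape[0] - size + 1, stride):
        for c in range(0, img.shape[1] - size + 1, stride):
            patches.append(img[r:r + size, c:c + size])
    return np.stack(patches)


# Toy 8 x 8 array standing in for a despeckled 5 m SAR image (assumption).
sar_5m = np.arange(64, dtype=float).reshape(8, 8)
sar_10m = downsample_by_block_mean(sar_5m, factor=2)    # now on a 10 m lattice
patches = extract_patches(sar_10m, size=2, stride=2)
print(sar_10m.shape, patches.shape)
```

Block averaging is chosen in this sketch because it also acts as a mild low-pass filter before decimation; any other resampling scheme that places both images on the same pixel lattice would satisfy the stated assumption of the method equally well.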

3.1.1. Hyperparameter Tuning

3.1.2. Competing Methods

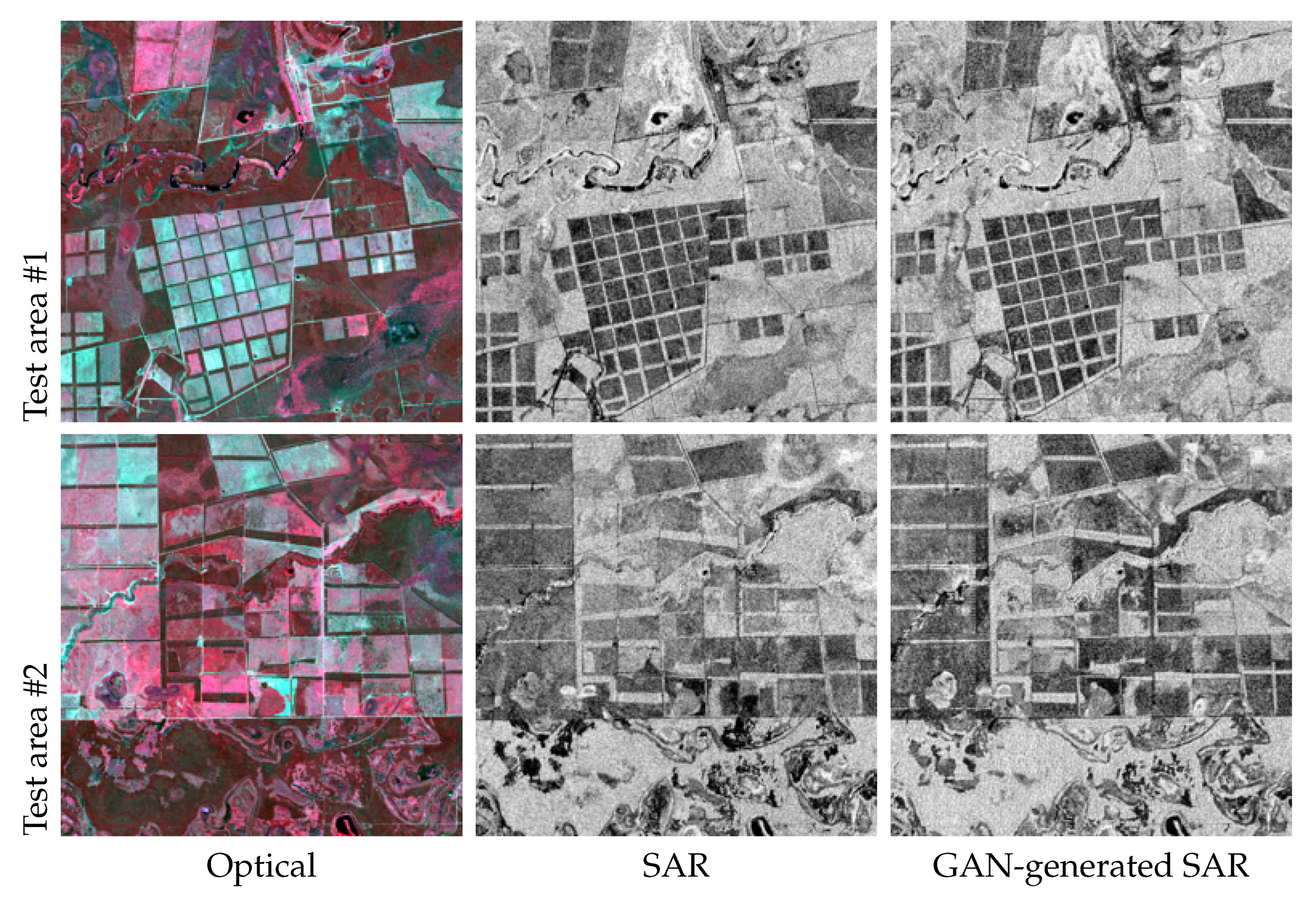

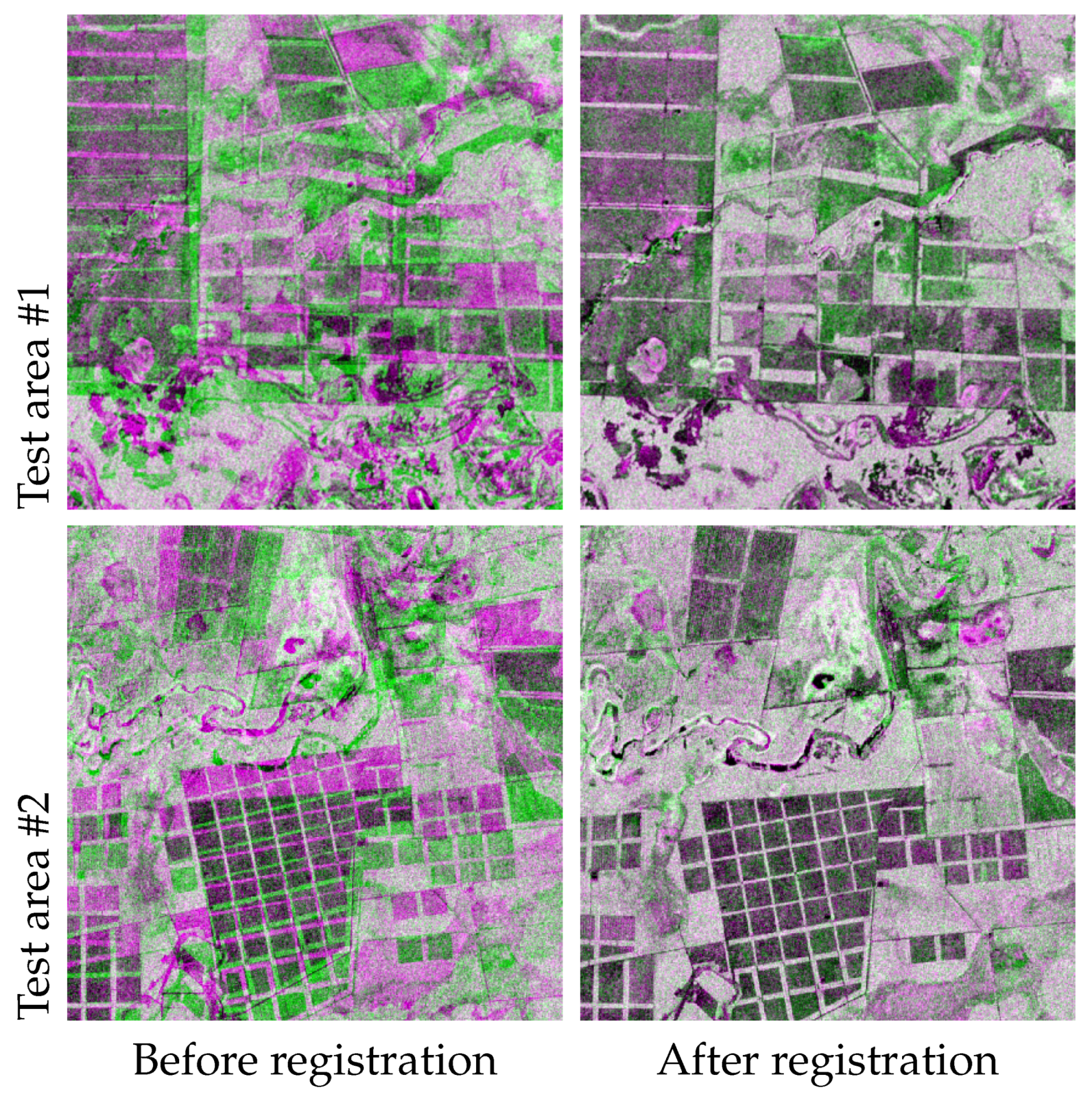

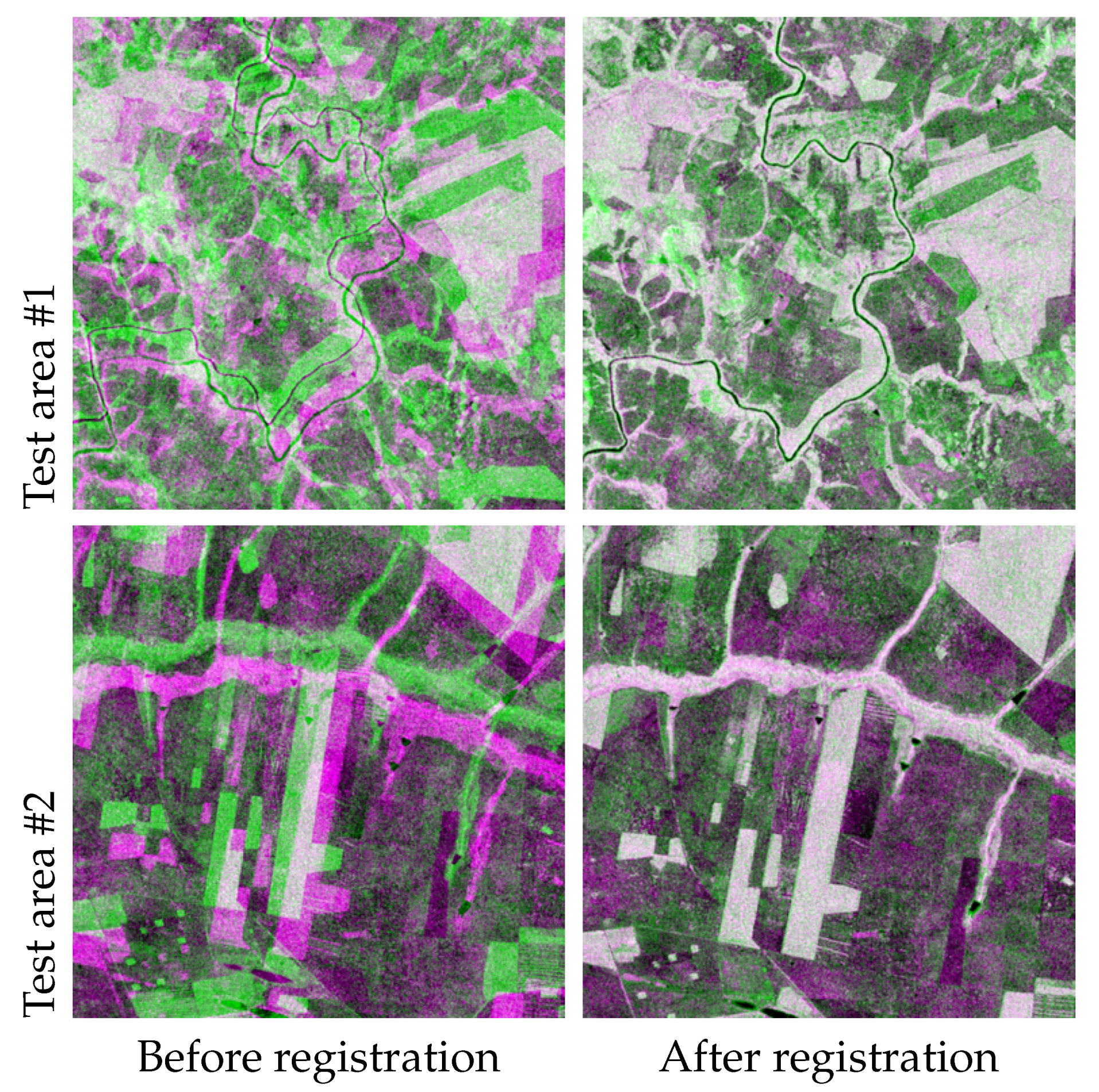

3.2. Experimental Results

3.2.1. Results from the Paraguay Dataset

3.2.2. Results on the Bussac Dataset

3.2.3. Results on the Brazil Dataset

4. Discussion

4.1. Paraguay Results

4.2. Bussac Results

4.3. Brazil Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Le Moigne, J.; Netanyahu, N.S.; Eastman, R.D. (Eds.) Image Registration for Remote Sensing; Cambridge University Press: Cambridge, UK, 2011.

- Brown, L.G.; Gottesfeld, L. A survey of image registration techniques. ACM Comput. Surv. 1992, 24, 325–376.

- Goshtasby, A.A. Image Registration: Principles, Tools and Methods; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Merkle, N.; Auer, S.; Müller, R.; Reinartz, P. Exploring the Potential of Conditional Adversarial Networks for Optical and SAR Image Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1811–1820.

- Fuentes Reyes, M.; Auer, S.; Merkle, N.; Henry, C.; Schmitt, M. SAR-to-optical image translation based on conditional generative adversarial networks—Optimization, opportunities and limits. Remote Sens. 2019, 11, 2067.

- Toriya, H.; Dewan, A.; Kitahara, I. SAR2OPT: Image alignment between multi-modal images using generative adversarial networks. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 923–926.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2017.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-To-Image Translation With Conditional Adversarial Networks. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017.

- Huang, X.; Wen, L.; Ding, J. SAR and optical image registration method based on improved CycleGAN. In Proceedings of the 2019 6th Asia-Pacific Conference on Synthetic Aperture Radar (APSAR), Xiamen, China, 26–29 November 2019; pp. 1–6.

- Powell, M.J.D. A Direct Search Optimization Method That Models the Objective and Constraint Functions by Linear Interpolation. In Advances in Optimization and Numerical Analysis; Gomez, S., Hennart, J.P., Eds.; Springer: Dordrecht, The Netherlands, 1994; pp. 51–67.

- Maggiolo, L.; Solarna, D.; Moser, G.; Serpico, S.B. Automatic area-based registration of optical and SAR images through generative adversarial networks and a correlation-type metric. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020.

- Pinel-Puysségur, B.; Maggiolo, L.; Roux, M.; Gasnier, N.; Solarna, D.; Moser, G.; Serpico, S.B.; Tupin, F. Experimental Comparison of Registration Methods for Multisensor SAR-Optical Data. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 3022–3025.

- Bennett, M.K. Affine and Projective Geometry; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Ash, R.B. Information Theory; Courier Corporation: Chelmsford, MA, USA, 1990.

- Zagorchev, L.; Goshtasby, A. A comparative study of transformation functions for nonrigid image registration. IEEE Trans. Image Process. 2006, 15, 529–538.

- Zitova, B.; Flusser, J. Image registration methods: A survey. Image Vision Comput. 2003, 21, 977–1000.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the International Conference on Computer Vision, ICCV ’99, Kerkyra, Greece, 20–27 September 1999; IEEE Computer Society: Washington, DC, USA, 1999; Volume 2, pp. 1150–1157.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Donoser, M.; Bischof, H. Efficient Maximally Stable Extremal Region (MSER) Tracking. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 1, pp. 553–560.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

- Duda, R.O.; Hart, P.E. Use of the Hough transformation to detect lines and curves in pictures. Commun. ACM 1972, 15, 11–15.

- Descombes, X.; Minlos, R.; Zhizhina, E. Object Extraction Using a Stochastic Birth-and-Death Dynamics in Continuum. J. Math. Imaging Vis. 2009, 33, 347–359.

- Huo, C.; Chen, K.; Zhou, Z.; Lu, H. Hybrid approach for remote sensing image registration. In Proceedings of the MIPPR 2007: Remote Sensing and GIS Data Processing and Applications; and Innovative Multispectral Technology and Applications. International Society for Optics and Photonics, Wuhan, China, 15–17 November 2007; Volume 6790, p. 679006.

- Solarna, D.; Gotelli, A.; Le Moigne, J.; Moser, G.; Serpico, S.B. Crater Detection and Registration of Planetary Images Through Marked Point Processes, Multiscale Decomposition, and Region-Based Analysis. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6039–6058.

- Solarna, D.; Moser, G.; Le Moigne, J.; Serpico, S.B. Planetary crater detection and registration using marked point processes, multiple birth and death algorithms, and region-based analysis. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2337–2340.

- Huang, X.; Sun, Y.; Metaxas, D.; Sauer, F.; Xu, C. Hybrid image registration based on configural matching of scale-invariant salient region features. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 167.

- Gong, M.; Zhao, S.; Jiao, L.; Tian, D.; Wang, S. A novel coarse-to-fine scheme for automatic image registration based on SIFT and mutual information. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4328–4338.

- Maes, F.; Collignon, A.; Vandermeulen, D.; Marchal, G.; Suetens, P. Multimodality image registration by maximization of mutual information. IEEE Trans. Med. Imaging 1997, 16, 187–198.

- Thevenaz, P.; Unser, M. Optimization of mutual information for multiresolution image registration. IEEE Trans. Image Process. 2000, 9, 2083–2099.

- Loeckx, D.; Slagmolen, P.; Maes, F.; Vandermeulen, D.; Suetens, P. Nonrigid Image Registration Using Conditional Mutual Information. IEEE Trans. Med. Imaging 2010, 29, 19–29.

- Parzen, E. On Estimation of a Probability Density Function and Mode. Ann. Math. Stat. 1962, 33, 1065–1076.

- Gao, W.; Oh, S.; Viswanath, P. Demystifying fixed k-nearest neighbor information estimators. IEEE Trans. Inf. Theory 2018, 64, 5629–5661.

- Hristov, D.H.; Fallone, B.G. A grey-level image alignment algorithm for registration of portal images and digitally reconstructed radiographs. Med. Phys. 1996, 23, 75–84.

- Sarvaiya, J.; Patnaik, S.; Bombaywala, S. Image Registration by Template Matching Using Normalized Cross-Correlation. In Proceedings of the 2009 International Conference on Advances in Computing, Control, and Telecommunication Technologies, Bangalore, India, 28–29 December 2009; pp. 819–822.

- Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: Cambridge, MA, USA, 1996.

- van Laarhoven, P.J.M.; Aarts, E.H.L. Simulated annealing. In Simulated Annealing: Theory and Applications; Springer: Dordrecht, The Netherlands, 1987; pp. 7–15.

- Boyd, S.; Boyd, S.P.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Le Moigne, J. Parallel registration of multisensor remotely sensed imagery using wavelet coefficients. In Proceedings of the Wavelet Applications. International Society for Optics and Photonics, Orlando, FL, USA, 4–8 April 1994; Volume 2242, pp. 432–443.

- Li, H.; Manjunath, B.; Mitra, S.K. A contour-based approach to multisensor image registration. IEEE Trans. Image Process. 1995, 4, 320–334.

- Li, H.; Manjunath, B.; Mitra, S.K. Optical-to-SAR image registration using the active contour model. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; pp. 568–572.

- Chen, H.M.; Arora, M.K.; Varshney, P.K. Mutual information-based image registration for remote sensing data. Int. J. Remote Sens. 2003, 24, 3701–3706.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Fan, B.; Huo, C.; Pan, C.; Kong, Q. Registration of optical and SAR satellite images by exploring the spatial relationship of the improved SIFT. IEEE Geosci. Remote Sens. Lett. 2012, 10, 657–661.

- Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-like algorithm for SAR images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 453–466.

- Ma, W.; Wen, Z.; Wu, Y.; Jiao, L.; Gong, M.; Zheng, Y.; Liu, L. Remote sensing image registration with modified SIFT and enhanced feature matching. IEEE Geosci. Remote Sens. Lett. 2016, 14, 3–7.

- Xiang, Y.; Wang, F.; You, H. OS-SIFT: A robust SIFT-like algorithm for high-resolution optical-to-SAR image registration in suburban areas. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3078–3090.

- Woo, J.; Stone, M.; Prince, J.L. Multimodal registration via mutual information incorporating geometric and spatial context. IEEE Trans. Image Process. 2014, 24, 757–769.

- Ye, Y.; Shan, J.; Bruzzone, L.; Shen, L. Robust registration of multimodal remote sensing images based on structural similarity. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2941–2958.

- Byun, Y.; Choi, J.; Han, Y. An area-based image fusion scheme for the integration of SAR and optical satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2212–2220.

- Wu, Y.; Ma, W.; Miao, Q.; Wang, S. Multimodal continuous ant colony optimization for multisensor remote sensing image registration with local search. Swarm Evol. Comput. 2019, 47, 89–95.

- Gadermayr, M.; Heckmann, L.; Li, K.; Bähr, F.; Müller, M.; Truhn, D.; Merhof, D.; Gess, B. Image-to-Image Translation for Simplified MRI Muscle Segmentation. Front. Radiol. 2021, 1, 3.

- Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 649–666.

- Beaulieu, M.; Foucher, S.; Haberman, D.; Stewart, C. Deep image-to-image transfer applied to resolution enhancement of sentinel-2 images. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2611–2614.

- Jing, Y.; Yang, Y.; Feng, Z.; Ye, J.; Yu, Y.; Song, M. Neural Style Transfer: A Review. IEEE Trans. Vis. Comput. Graph. 2020, 26, 3365–3385.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. A Neural Algorithm of Artistic Style. arXiv 2015, arXiv:1508.06576.

- Makhzani, A.; Shlens, J.; Jaitly, N.; Goodfellow, I.; Frey, B. Adversarial Autoencoders. arXiv 2015, arXiv:1511.05644.

- Zhu, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Generative adversarial networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5046–5063.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

- Liu, Q.; Zhou, H.; Xu, Q.; Liu, X.; Wang, Y. PSGAN: A generative adversarial network for remote sensing image pan-sharpening. IEEE Trans. Geosci. Remote Sens. 2020, 59, 10227–10242.

- Gao, J.; Yuan, Q.; Li, J.; Zhang, H.; Su, X. Cloud removal with fusion of high resolution optical and SAR images using generative adversarial networks. Remote Sens. 2020, 12, 191.

- Niu, X.; Gong, M.; Zhan, T.; Yang, Y. A conditional adversarial network for change detection in heterogeneous images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 45–49.

- Hughes, L.H.; Marcos, D.; Lobry, S.; Tuia, D.; Schmitt, M. A deep learning framework for matching of SAR and optical imagery. ISPRS J. Photogramm. Remote Sens. 2020, 169, 166–179.

- Rozsypálek, Z.; Broughton, G.; Linder, P.; Rouček, T.; Blaha, J.; Mentzl, L.; Kusumam, K.; Krajník, T. Contrastive Learning for Image Registration in Visual Teach and Repeat Navigation. Sensors 2022, 22, 2975.

- Lopez-Martin, M.; Sanchez-Esguevillas, A.; Arribas, J.I.; Carro, B. Supervised contrastive learning over prototype-label embeddings for network intrusion detection. Inf. Fusion 2022, 79, 200–228.

- Hughes, L.H.; Merkle, N.; Bürgmann, T.; Auer, S.; Schmitt, M. Deep learning for SAR-optical image matching. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 4877–4880.

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

- Gonzalez, R.C.; Woods, R.E.; Masters, B.R. Digital Image Processing; Pearson Education: Noida, India, 2009.

- Solarna, D.; Maggiolo, L.; Moser, G.; Serpico, S.B. A Tiling-based Strategy for Large-Scale Multisensor Image Registration. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022.

- Zhao, W.; Denis, L.; Deledalle, C.; Maitre, H.; Nicolas, J.M.; Tupin, F. Ratio-based multi-temporal SAR images denoising. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3552–3565.

- Parrilli, S.; Poderico, M.; Angelino, C.V.; Verdoliva, L. A Nonlocal SAR Image Denoising Algorithm Based on LLMMSE Wavelet Shrinkage. IEEE Trans. Geosci. Remote Sens. 2012, 50, 606–616.

- Powell, M.J.D. An efficient method for finding the minimum of a function of several variables without calculating derivatives. Comput. J. 1964, 7, 155–162.

- Brent, R.P. An algorithm with guaranteed convergence for finding a zero of a function. Comput. J. 1971, 14, 422–425.

- Nesterov, Y. Lectures on Convex Optimization; Springer: Berlin/Heidelberg, Germany, 2018; Volume 137.

- Zavorin, I.; Le Moigne, J. Use of multiresolution wavelet feature pyramids for automatic registration of multisensor imagery. IEEE Trans. Image Process. 2005, 14, 770–782.

- Luppino, L.T.; Kampffmeyer, M.; Bianchi, F.M.; Moser, G.; Serpico, S.B.; Jenssen, R.; Anfinsen, S.N. Deep image translation with an affinity-based change prior for unsupervised multimodal change detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–22.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

| Synthetic Transformation | Initial | Mutual Information: Powell | Mutual Information: Powell with Barrier Functions | Mutual Information: COBYLA | Proposed |
|---|---|---|---|---|---|
| 1 | 111.02 | 1.24/743 | 0.95/1.05 | 2.34/0.9 | 0.34/0.35 |
| 2 | 96.38 | 1.06/739 | 0.92/0.9 | 2.29/3.82 | 0.24/0.22 |
| 3 | 88.92 | 61.7/0.93 | 61.8/0.98 | 18.4/13.5 | 0.22/0.42 |
| 4 | 34.37 | 35.5/1.36 | 39.4/1.07 | 1.01/4.14 | 0.22/0.27 |
| avg. | 82.67 | 24.9/371 | 25.7/1 | 6.01/5.59 | 0.25/0.31 |

| Synthetic Transformation | Initial | Mutual Information: Powell | Mutual Information: Powell with Barrier Functions | Mutual Information: COBYLA | Proposed |
|---|---|---|---|---|---|
| 1 | 111.02 | 52.08 | 18.7 | 21.55 | 0.87 |
| 2 | 96.38 | 32.52 | 38.1 | 20.78 | 0.81 |
| 3 | 88.92 | 14.55 | 18.93 | 26.35 | 0.85 |
| 4 | 34.37 | 71.75 | 53.83 | 17.87 | 0.89 |
| avg. | 82.67 | 42.72 | 32.48 | 21.63 | 0.85 |

| Synthetic Transformation | Initial | Mutual Information: Powell | Mutual Information: Powell with Barrier Functions | Mutual Information: COBYLA | Proposed |
|---|---|---|---|---|---|
| 1 | 111.02 | 863/88.68 | 1.10/90 | 2.57/6.93 | 1.08/0.89 |
| 2 | 96.38 | 1.21/0.97 | 38.1/38.1 | 4.54/1.31 | 1.07/0.95 |
| 3 | 88.92 | 0.83/1.25 | 0.63/1.34 | 11.3/16.6 | 1.09/1.07 |
| 4 | 34.37 | 0.42/1.4 | 1.45/1.2 | 3.97/0.84 | 1.08/0.91 |
| avg. | 82.67 | 14.2/22.9 | 10.3/23.3 | 5.61/6.43 | 1.08/0.96 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maggiolo, L.; Solarna, D.; Moser, G.; Serpico, S.B. Registration of Multisensor Images through a Conditional Generative Adversarial Network and a Correlation-Type Similarity Measure. Remote Sens. 2022, 14, 2811. https://doi.org/10.3390/rs14122811
