Building Plane Segmentation Based on Point Clouds

Abstract

1. Introduction

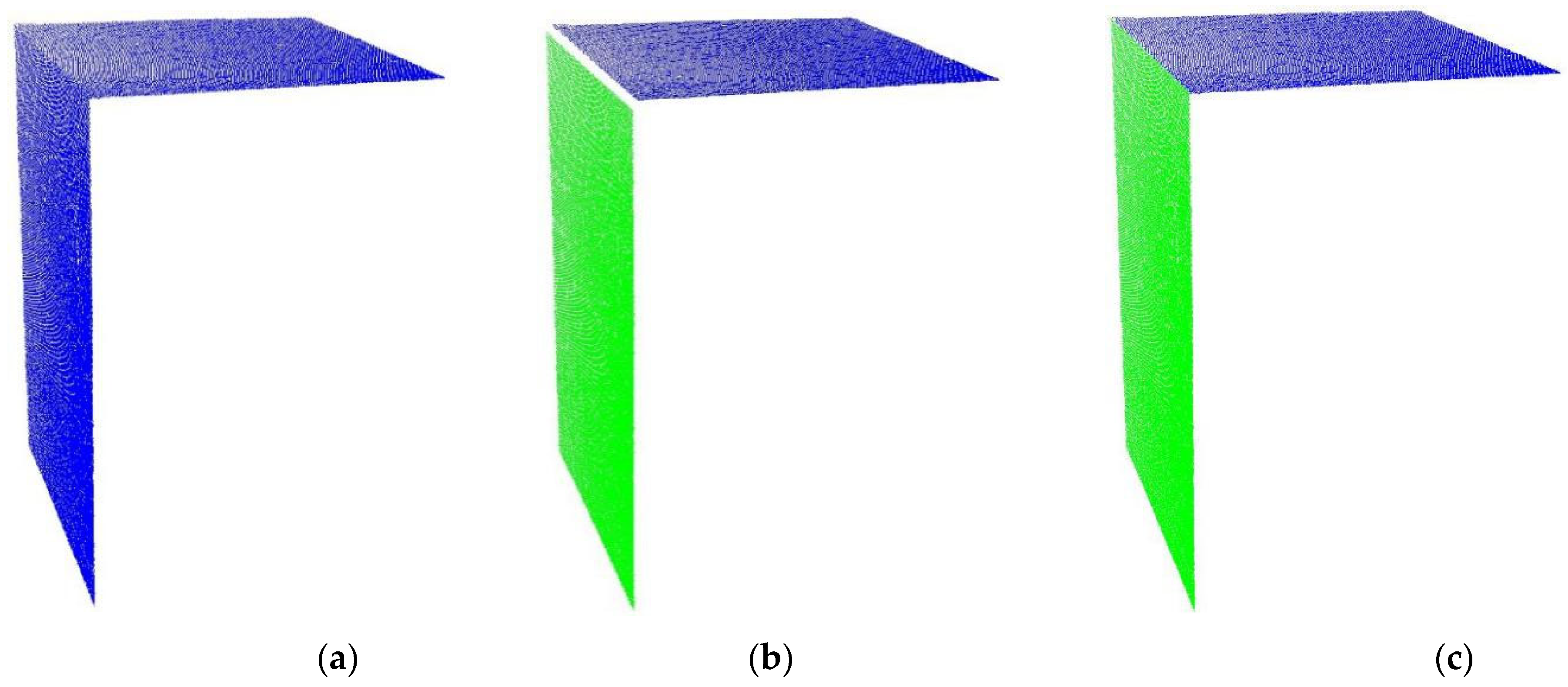

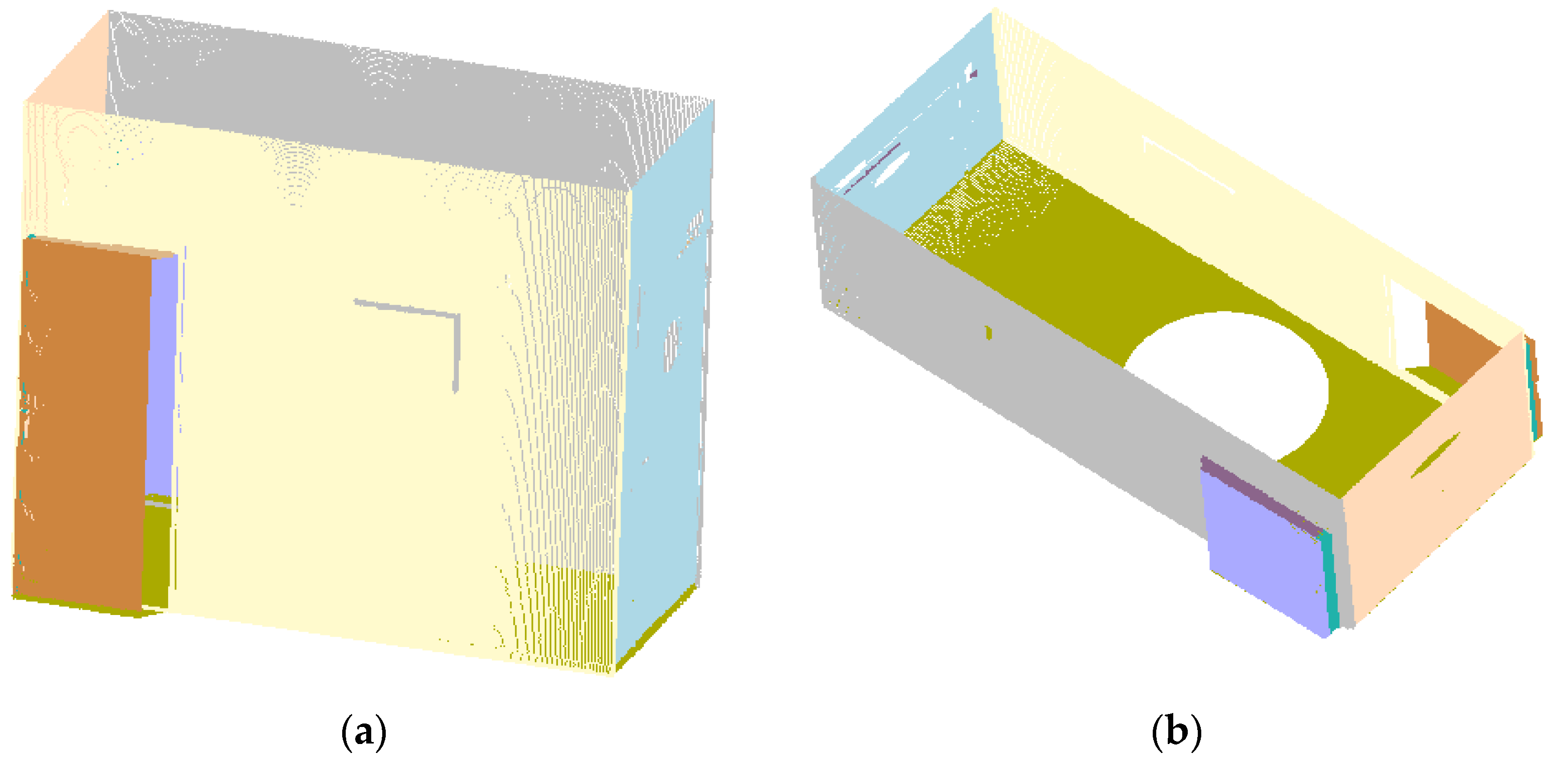

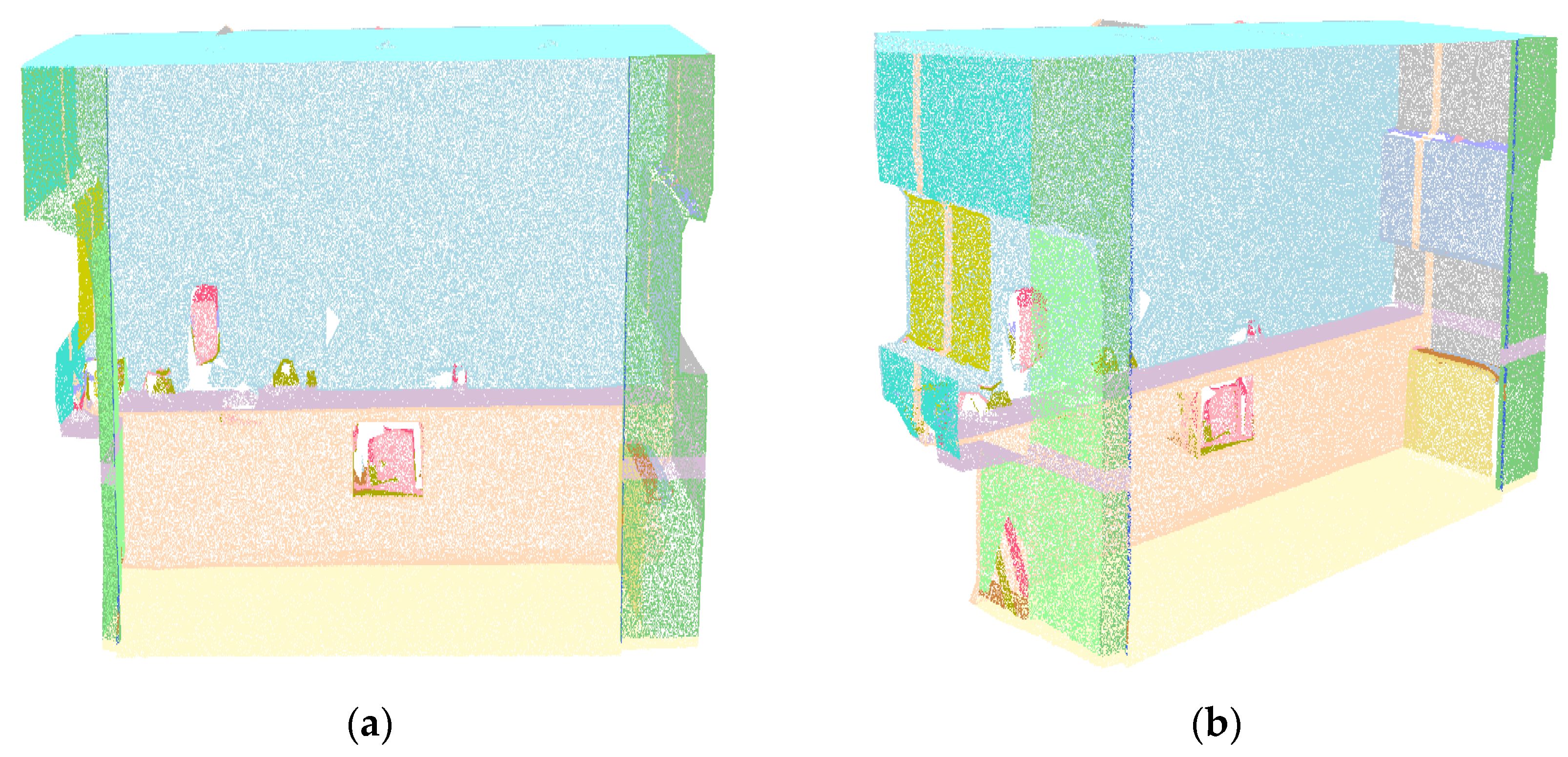

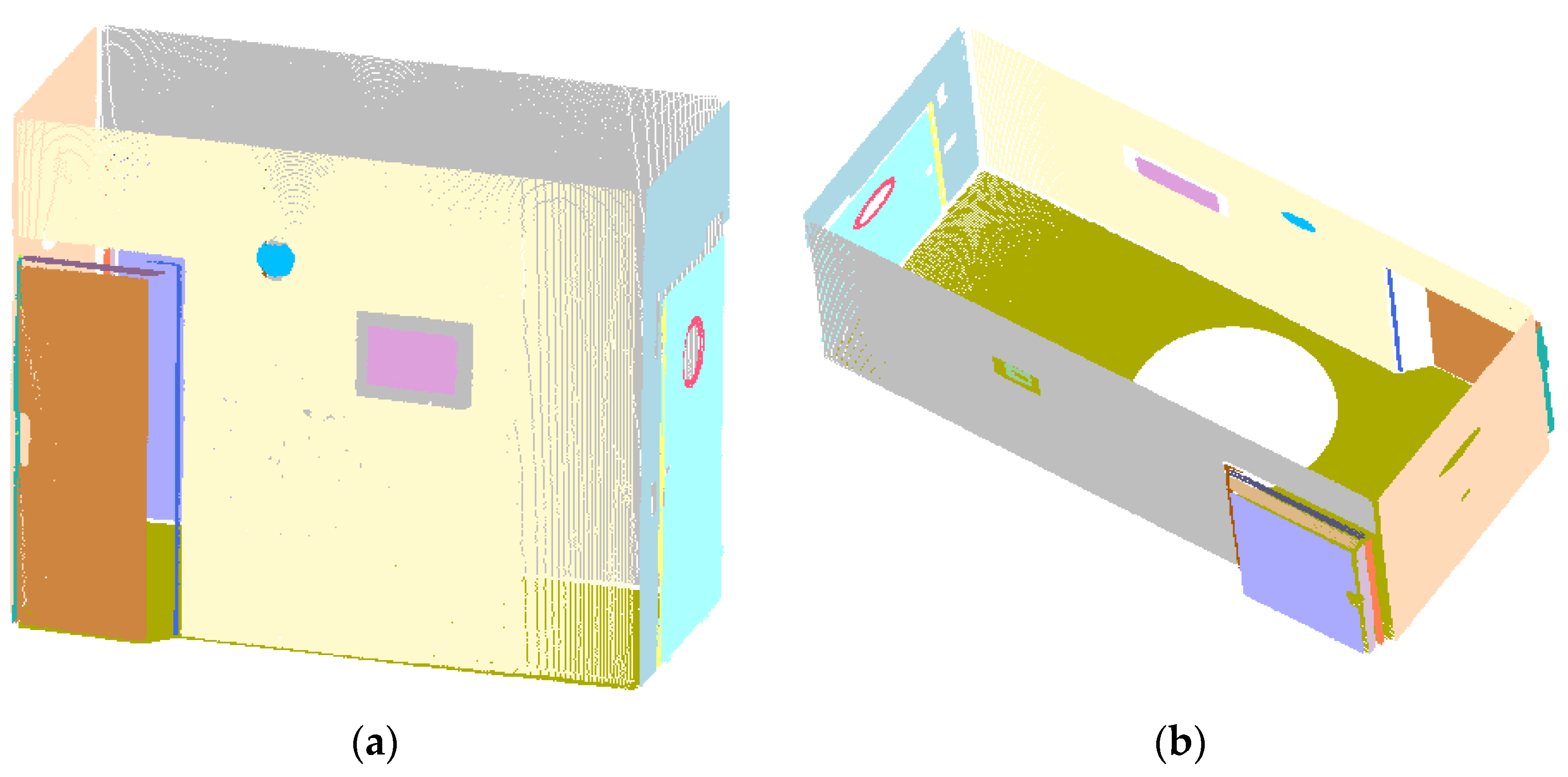

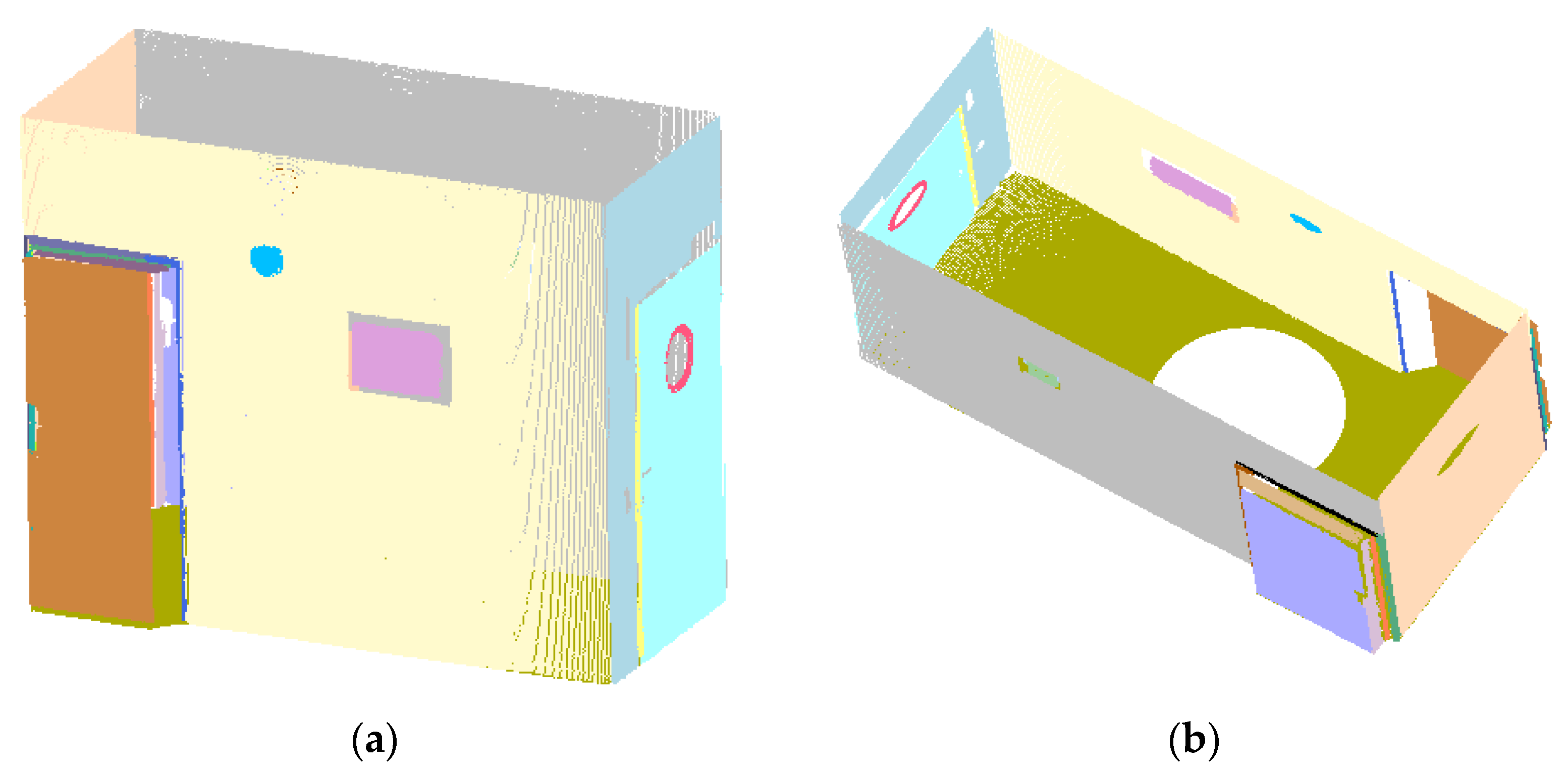

2. Methods

2.1. Coarse Extraction of Plane Points

- Calculate the curvature of every point and add the point with the minimum curvature to the seed point sequence.

- Calculate the normal vectors of the current seed point and its adjacent points. If the angle between an adjacent point’s normal vector and the current seed point’s normal vector is within the set angle threshold, and the adjacent point’s curvature is less than the set curvature threshold, add that adjacent point to the seed point sequence.

- Delete the current seed point from the sequence; the newly added adjacent points serve as new seed points, and region growing continues from them.

- Repeat this process until the seed point sequence is empty.
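
The coarse extraction steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: curvature is taken as the PCA surface variation λ_min / (λ_0 + λ_1 + λ_2), neighbours are brute-force k-nearest neighbours, and k = 8, the 10° angle threshold and the 0.05 curvature threshold are illustrative placeholders rather than the paper's parameters. All function names are hypothetical, and a small Jacobi eigensolver keeps the sketch free of third-party dependencies. A neighbour joins the current plane only if it passes both the normal-angle and the curvature test; starting a new plane from the lowest-curvature unassigned point once the seed sequence empties is also an assumption, since the text describes a single growth.

```python
import math
from collections import deque


def sym_eig3(a, sweeps=30):
    """Eigen-decompose a symmetric 3x3 matrix with cyclic Jacobi rotations.
    Returns (eigenvalues, eigenvectors); eigenvectors[i] pairs with eigenvalues[i]."""
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(3)] for i in range(3)]
    for _ in range(sweeps):
        for p, q in ((0, 1), (0, 2), (1, 2)):
            if abs(a[p][q]) < 1e-15:
                continue
            theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
            t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
            c = 1.0 / math.hypot(t, 1.0)
            s = t * c
            for m in (a, v):  # column rotation: M <- M P
                for k in range(3):
                    mp, mq = m[k][p], m[k][q]
                    m[k][p], m[k][q] = c * mp - s * mq, s * mp + c * mq
            for k in range(3):  # row rotation: A <- P^T A
                ap, aq = a[p][k], a[q][k]
                a[p][k], a[q][k] = c * ap - s * aq, s * ap + c * aq
    return [a[i][i] for i in range(3)], [[v[k][i] for k in range(3)] for i in range(3)]


def normal_and_curvature(pts):
    """Local PCA: normal = eigenvector of the smallest covariance eigenvalue,
    curvature = surface variation (assumed definition)."""
    n = len(pts)
    c = [sum(p[i] for p in pts) / n for i in range(3)]
    cov = [[sum((p[i] - c[i]) * (p[j] - c[j]) for p in pts) / n
            for j in range(3)] for i in range(3)]
    vals, vecs = sym_eig3(cov)
    k = min(range(3), key=lambda i: vals[i])
    total = sum(vals)
    return vecs[k], (max(vals[k], 0.0) / total if total > 0 else 0.0)


def knn(points, k):
    """Brute-force k nearest neighbours (O(n^2); adequate for a small demo)."""
    idx = range(len(points))
    return [sorted((j for j in idx if j != i),
                   key=lambda j: math.dist(points[i], points[j]))[:k] for i in idx]


def region_growing(points, k=8, angle_deg=10.0, curv_max=0.05):
    """Hypothetical curvature-seeded region growing; thresholds are illustrative."""
    nbrs = knn(points, k)
    feats = [normal_and_curvature([points[i]] + [points[j] for j in nbrs[i]])
             for i in range(len(points))]
    normals = [f[0] for f in feats]
    curv = [f[1] for f in feats]
    cos_min = math.cos(math.radians(angle_deg))
    labels = [-1] * len(points)  # -1 = not assigned to any plane
    region = 0
    # Seeds are taken in order of increasing curvature (flattest point first).
    for start in sorted(range(len(points)), key=lambda i: curv[i]):
        if curv[start] >= curv_max:
            break  # every remaining point is too curved to seed a plane
        if labels[start] != -1:
            continue
        labels[start] = region
        seeds = deque([start])
        while seeds:
            s = seeds.popleft()  # delete the current seed from the sequence
            for j in nbrs[s]:
                if labels[j] != -1:
                    continue
                # abs() ignores the arbitrary sign of PCA normals
                cos_angle = abs(sum(x * y for x, y in zip(normals[s], normals[j])))
                if cos_angle >= cos_min and curv[j] < curv_max:
                    labels[j] = region
                    seeds.append(j)  # the neighbour becomes a new seed
        region += 1
    return labels


# Usage: a horizontal floor patch and a vertical wall patch, far apart.
floor = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
wall = [(10.0, float(y), float(z)) for y in range(5) for z in range(1, 6)]
labels = region_growing(floor + wall)
print(labels)
```

Processing candidates in ascending curvature is what makes "add the minimum curvature point" hold for every new region, and testing the absolute cosine avoids rejecting coplanar neighbours whose PCA normals happen to point in opposite directions.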

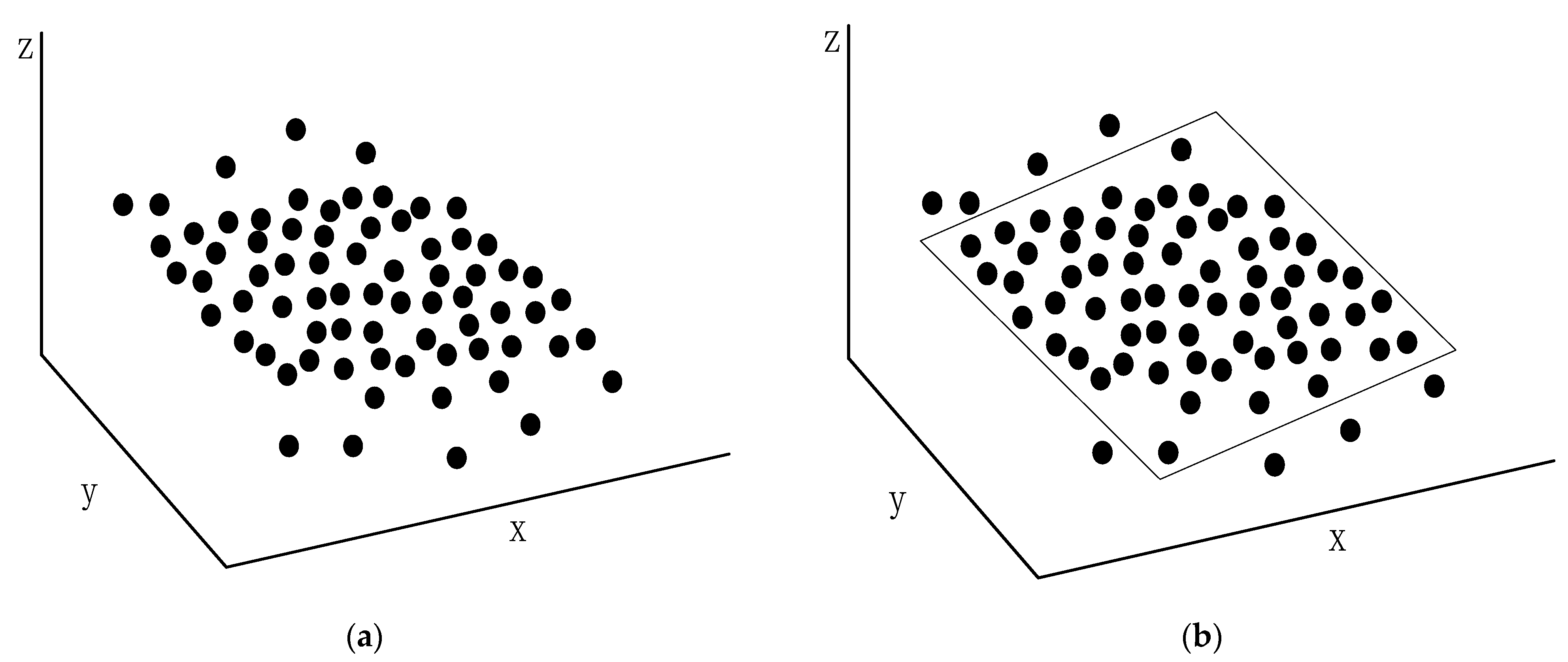

2.2. Optimal Extraction of Plane Points

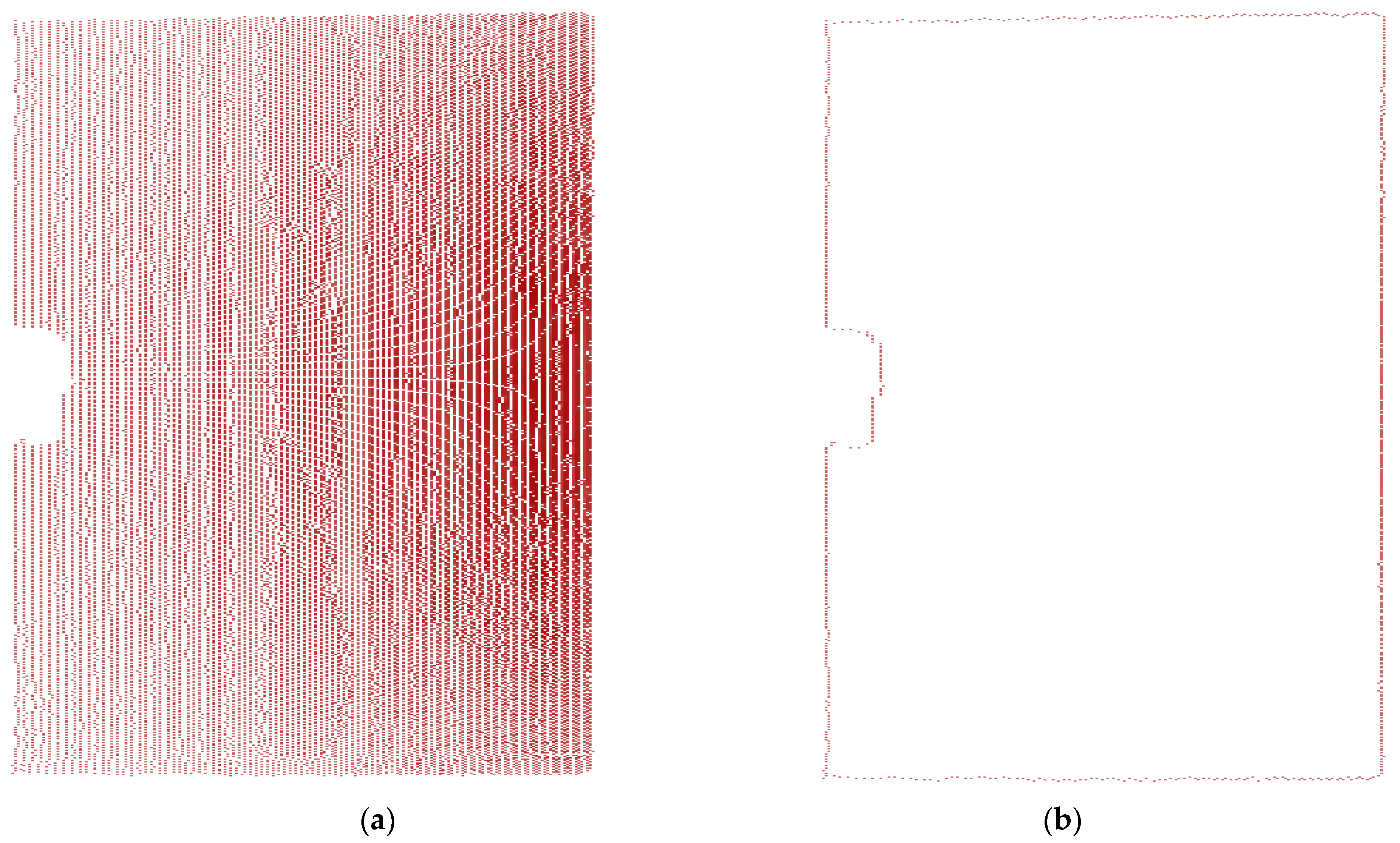

2.2.1. Boundary Points

- Calculate the normal vector of the local surface formed by a point and its neighboring points; the plane through the point perpendicular to this normal is the point’s micro-tangent plane.

- Project the point and its neighboring points onto the micro-tangent plane.

- On the micro-tangent plane, form the vectors from the point to each of its projected neighbors and calculate the angle between each pair of adjacent vectors.

- If the maximum angle between adjacent vectors is greater than the set threshold, the point is regarded as a boundary point; otherwise, it is considered an interior point (Figure 2).
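
The boundary test above can be sketched in Python as follows. This is a minimal illustration under assumptions: the normal is passed in rather than estimated (local PCA is a common choice), the tangent-plane basis built from a helper axis is a detail of this sketch, and the function name `is_boundary_point` and the 90° gap threshold are hypothetical, not the paper's settings. Sorting the projected directions by polar angle makes "adjacent vectors" well defined, and the wrap-around gap between the last and first direction must be included; otherwise an empty sector straddling the ±180° cut of `atan2` would be missed.

```python
import math


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _unit(a):
    length = math.sqrt(_dot(a, a))
    return tuple(x / length for x in a)


def is_boundary_point(p, neighbors, normal, max_gap_deg=90.0):
    """Angle-gap boundary test on the micro-tangent plane of point p.

    `normal` is assumed to be precomputed; `max_gap_deg` is illustrative.
    """
    n = _unit(normal)
    # Orthonormal basis (u, w) spanning the micro-tangent plane through p.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _unit(_cross(n, helper))
    w = _cross(n, u)
    angles = []
    for q in neighbors:
        d = tuple(qi - pi for qi, pi in zip(q, p))
        # Projecting d onto the plane drops its normal component; its
        # in-plane coordinates are its components along u and w.
        x, y = _dot(d, u), _dot(d, w)
        if x or y:  # skip neighbours that project onto p itself
            angles.append(math.atan2(y, x))
    if len(angles) < 2:
        return True  # too few directions to enclose p
    angles.sort()
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(2.0 * math.pi - (angles[-1] - angles[0]))  # wrap-around gap
    return max(gaps) > math.radians(max_gap_deg)


# Usage: eight neighbours all around p versus neighbours on one side only.
ring = [(math.cos(a), math.sin(a), 0.0) for a in (i * math.pi / 4 for i in range(8))]
half = [q for q in ring if q[0] > -1e-9]
print(is_boundary_point((0, 0, 0), ring, (0, 0, 1)))
print(is_boundary_point((0, 0, 0), half, (0, 0, 1)))
```

With the full ring every gap is 45°, so the point is interior; with only the half ring a 180° gap opens on the empty side, so the point is classified as a boundary point.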

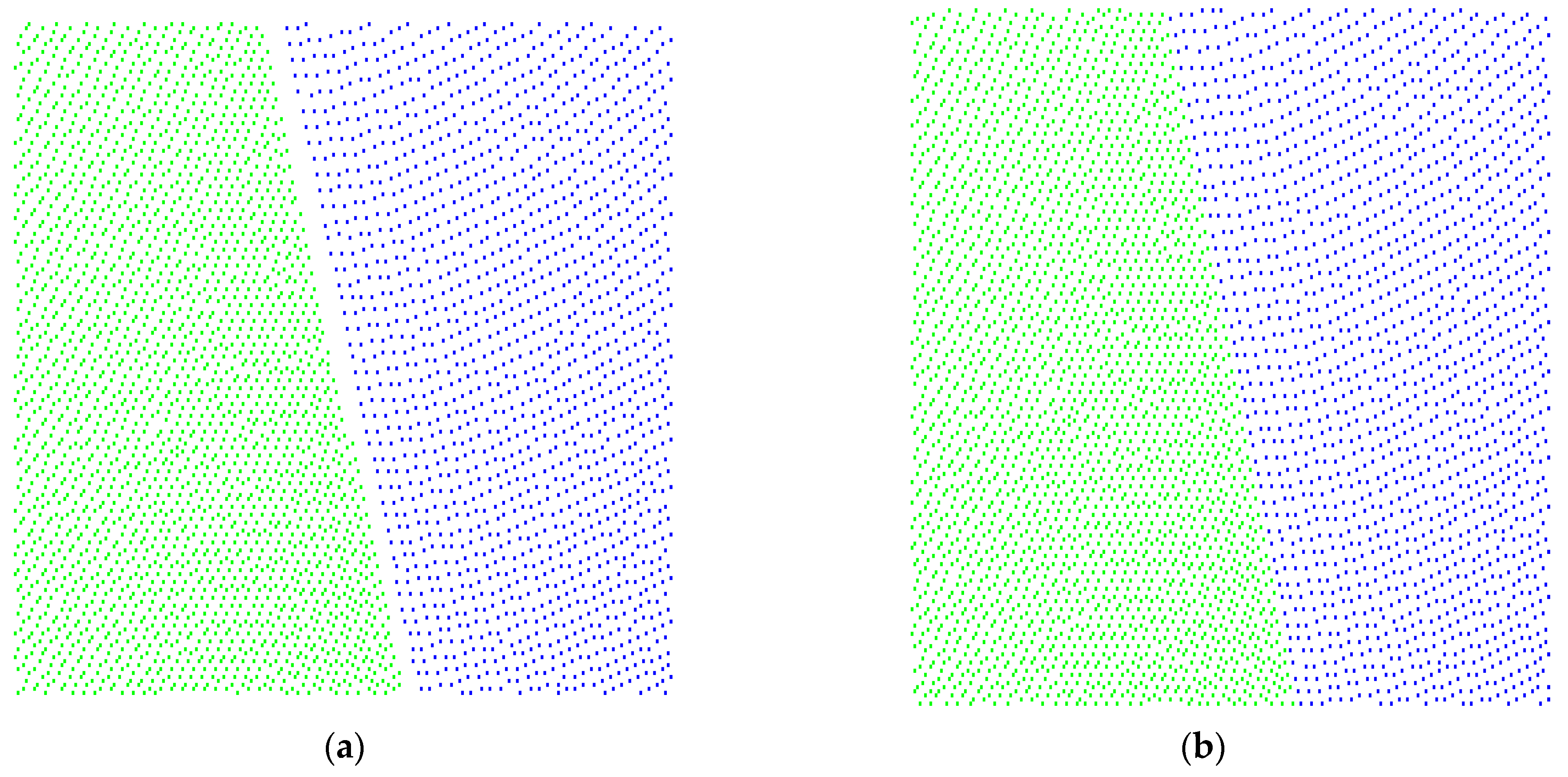

2.2.2. Coplanar Points Extraction

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Plane | Accuracy | 0.015 m | 0.020 m | 0.025 m | 0.030 m | 0.035 m |
|---|---|---|---|---|---|---|
| Plane 1 | Correct | 84.05% | 84.62% | 85.10% | 85.49% | 85.85% |
| | Error | 0.34% | 0.65% | 0.98% | 1.23% | 1.49% |
| Plane 2 | Correct | 95.79% | 96.03% | 96.13% | 96.17% | 96.20% |
| | Error | 0.09% | 0.30% | 0.54% | 0.73% | 1.05% |
| Plane 3 | Correct | 96.31% | 96.20% | 96.27% | 96.46% | 96.56% |
| | Error | 0.17% | 0.20% | 0.34% | 0.40% | 0.44% |
| Plane 4 | Correct | 98.98% | 99.55% | 99.93% | 99.81% | 99.61% |
| | Error | 1.89% | 2.18% | 2.30% | 2.50% | 2.99% |
| Plane 5 | Correct | 98.68% | 98.89% | 99.05% | 99.14% | 99.17% |
| | Error | 0.57% | 0.94% | 1.27% | 1.75% | 2.46% |
| Plane 6 | Correct | 96.92% | 97.72% | 97.70% | 98.34% | 98.55% |
| | Error | 0.00% | 0.09% | 0.41% | 0.90% | 1.52% |
| Plane 7 | Correct | 96.61% | 97.20% | 97.60% | 98.57% | 98.70% |
| | Error | 0.00% | 0.06% | 0.33% | 1.19% | 1.38% |
| Plane 8 | Correct | 97.37% | 98.23% | 98.48% | 98.42% | 98.08% |
| | Error | 0.88% | 1.50% | 1.85% | 2.36% | 2.73% |

| Plane | Accuracy | 0.015 m | 0.020 m | 0.025 m | 0.030 m | 0.035 m |
|---|---|---|---|---|---|---|
| Plane 01 | Correct | 96.32% | 96.83% | 97.32% | 97.80% | 98.18% |
| | Error | 0.21% | 0.32% | 0.45% | 0.74% | 1.27% |
| Plane 02 | Correct | 95.95% | 96.69% | 97.35% | 97.85% | 98.05% |
| | Error | 0.09% | 0.21% | 0.43% | 0.61% | 0.83% |
| Plane 03 | Correct | 89.52% | 92.33% | 94.48% | 95.43% | 95.49% |
| | Error | 0.87% | 1.48% | 2.09% | 2.33% | 2.09% |
| Plane 04 | Correct | 90.96% | 92.21% | 93.37% | 94.07% | 93.35% |
| | Error | 0.08% | 0.18% | 0.37% | 0.57% | 0.84% |
| Plane 05 | Correct | 88.19% | 89.54% | 90.85% | 91.70% | 92.11% |
| | Error | 0.28% | 0.60% | 1.10% | 1.96% | 3.09% |
| Plane 06 | Correct | 94.01% | 96.03% | 97.66% | 98.20% | 97.28% |
| | Error | 0.04% | 0.22% | 0.69% | 0.91% | 0.98% |
| Plane 07 | Correct | 94.16% | 95.71% | 97.04% | 98.35% | 99.34% |
| | Error | 0.89% | 1.24% | 1.81% | 2.44% | 3.65% |
| Plane 08 | Correct | 97.24% | 97.69% | 98.14% | 98.55% | 99.03% |
| | Error | 0.45% | 0.60% | 0.80% | 1.04% | 1.48% |
| Plane 09 | Correct | 66.62% | 68.02% | 69.02% | 69.25% | 68.44% |
| | Error | 0.33% | 0.59% | 0.85% | 0.92% | 1.06% |

| | Cottage precision | Cottage recall | Cottage F1 score | Pantry precision | Pantry recall | Pantry F1 score |
|---|---|---|---|---|---|---|
| 0.015 m | 95.59% | 99.38% | 97.39% | 90.33% | 99.60% | 94.49% |
| 0.020 m | 96.06% | 99.07% | 97.40% | 91.67% | 99.33% | 95.11% |
| 0.025 m | 96.28% | 98.98% | 97.56% | 92.80% | 98.98% | 95.56% |
| 0.030 m | 96.55% | 98.60% | 97.51% | 93.47% | 98.65% | 95.75% |
| 0.035 m | 96.59% | 98.23% | 97.35% | 93.47% | 98.23% | 95.53% |

| Plane | Accuracy | RANSAC | Region Growing | RANSAC-RG | The Proposed Method |
|---|---|---|---|---|---|
| Plane 1 | Correct | 100.00% | 82.10% | 85.10% | 85.10% |
| | Error | 9.63% | 0.07% | 0.96% | 0.98% |
| Plane 2 | Correct | 100.00% | 94.99% | 96.07% | 96.13% |
| | Error | 6.22% | 0.00% | 0.52% | 0.54% |
| Plane 3 | Correct | 94.83% | 94.39% | 96.25% | 96.27% |
| | Error | 0.84% | 0.00% | 0.29% | 0.34% |
| Plane 4 | Correct | 97.40% | 97.73% | 97.39% | 99.93% |
| | Error | 1.19% | 1.01% | 1.01% | 2.30% |
| Plane 5 | Correct | 98.28% | 95.48% | 98.81% | 99.05% |
| | Error | 64.17% | 0.06% | 1.06% | 1.27% |
| Plane 6 | Correct | 99.73% | 95.16% | 98.14% | 97.70% |
| | Error | 5.00% | 0.00% | 0.37% | 0.41% |
| Plane 7 | Correct | 99.01% | 94.87% | 97.12% | 97.60% |
| | Error | 4.90% | 0.00% | 0.31% | 0.33% |
| Plane 8 | Correct | 89.75% | 92.69% | 96.48% | 98.48% |
| | Error | 164.97% | 0.00% | 1.70% | 1.85% |

| Plane | Accuracy | RANSAC | Region Growing | RANSAC-RG | The Proposed Method |
|---|---|---|---|---|---|
| Plane 01 | Correct | 96.38% | 95.43% | 97.10% | 97.80% |
| | Error | 1.76% | 0.10% | 0.70% | 0.74% |
| Plane 02 | Correct | 99.91% | 94.58% | 97.98% | 97.85% |
| | Error | 6.21% | 0.02% | 0.87% | 0.61% |
| Plane 03 | Correct | 97.89% | 83.99% | 81.06% | 95.43% |
| | Error | 252.84% | 0.36% | 2.58% | 2.33% |
| Plane 04 | Correct | 80.18% | 88.60% | 90.74% | 94.07% |
| | Error | 28.76% | 0.00% | 0.08% | 0.57% |
| Plane 05 | Correct | 100.00% | 85.54% | 92.09% | 91.70% |
| | Error | 30.61% | 0.02% | 2.21% | 1.96% |
| Plane 06 | Correct | 84.78% | 90.61% | 95.23% | 98.20% |
| | Error | 93.32% | 0.00% | 0.82% | 0.91% |
| Plane 07 | Correct | 94.76% | 91.63% | 98.36% | 98.35% |
| | Error | 76.37% | 0.39% | 2.65% | 2.44% |
| Plane 08 | Correct | 95.23% | 96.47% | 98.41% | 98.55% |
| | Error | 2.02% | 0.22% | 0.84% | 1.04% |
| Plane 09 | Correct | 84.95% | 63.85% | 66.53% | 69.25% |
| | Error | 17.48% | 0.12% | 0.30% | 0.92% |

| | RANSAC precision | RANSAC recall | RANSAC F1 score | Region Growing precision | Region Growing recall | Region Growing F1 score | RANSAC-RG precision | RANSAC-RG recall | RANSAC-RG F1 score | The Proposed Method precision | The Proposed Method recall | The Proposed Method F1 score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cottage | 97.38% | 83.69% | 88.43% | 93.43% | 99.85% | 96.47% | 95.67% | 99.20% | 97.64% | 96.28% | 98.98% | 97.56% |
| Pantry | 92.68% | 72.69% | 79.17% | 87.86% | 99.85% | 93.18% | 90.83% | 98.68% | 94.27% | 93.47% | 98.65% | 95.75% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, Z.; Gao, Z.; Zhou, G.; Li, S.; Song, L.; Lu, X.; Kang, N. Building Plane Segmentation Based on Point Clouds. Remote Sens. 2022, 14, 95. https://doi.org/10.3390/rs14010095

Su Z, Gao Z, Zhou G, Li S, Song L, Lu X, Kang N. Building Plane Segmentation Based on Point Clouds. Remote Sensing. 2022; 14(1):95. https://doi.org/10.3390/rs14010095

Chicago/Turabian Style: Su, Zhonghua, Zhenji Gao, Guiyun Zhou, Shihua Li, Lihui Song, Xukun Lu, and Ning Kang. 2022. "Building Plane Segmentation Based on Point Clouds" Remote Sensing 14, no. 1: 95. https://doi.org/10.3390/rs14010095

APA Style: Su, Z., Gao, Z., Zhou, G., Li, S., Song, L., Lu, X., & Kang, N. (2022). Building Plane Segmentation Based on Point Clouds. Remote Sensing, 14(1), 95. https://doi.org/10.3390/rs14010095