A Robust and Fast Collision-Avoidance Approach for Micro Aerial Vehicles Using a Depth Sensor

Abstract

1. Introduction

- (1) The presented collision-avoidance method relies only on a depth sensor and does not need any information about the geometry of the obstacles.

- (2) By using the novel Euclidean distance field mapping and collision-detection approach, the computing time of the collision-avoidance maneuver is reduced to between 10.4 ms and 40.4 ms (25.4 ± 15 ms), which is lower than the computing time of the state-of-the-art algorithms.

- (3) In the simulation experiments, the proposed collision-avoidance strategy flies the robot at 2.4 m/s in challenging dynamic environments with an 82% success rate over 100 repeated tests. The algorithm also achieves a 95% success rate over 100 repeated tests in a challenging static environment at a flight speed of 1.96 m/s.

2. Problem Statement

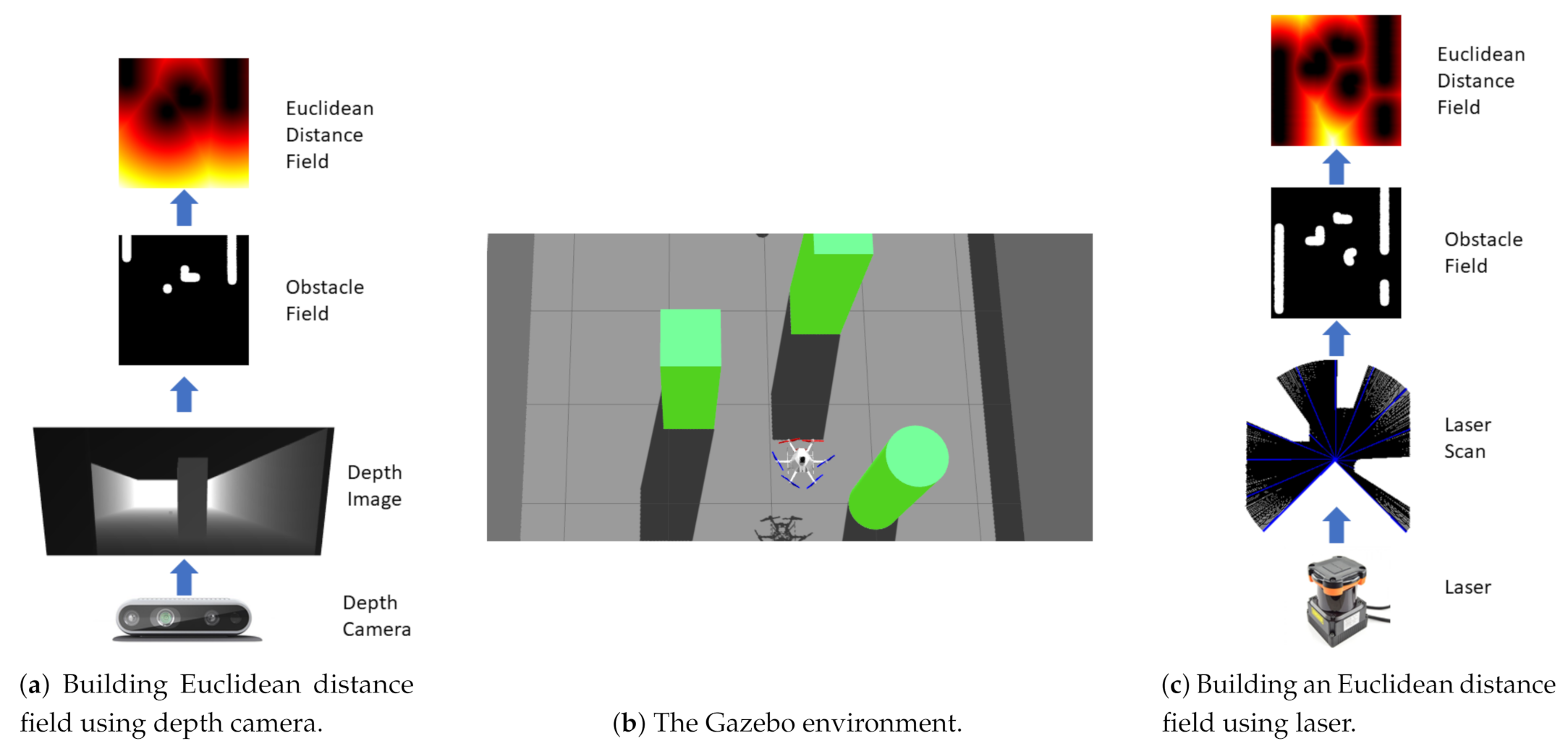

3. Depth-Based Euclidean Distance Field Construction

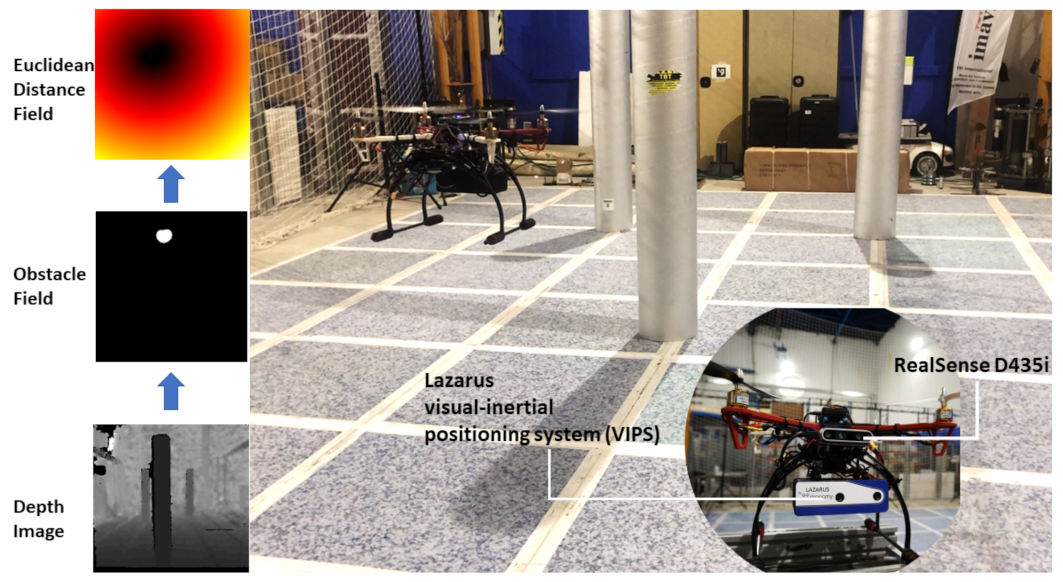

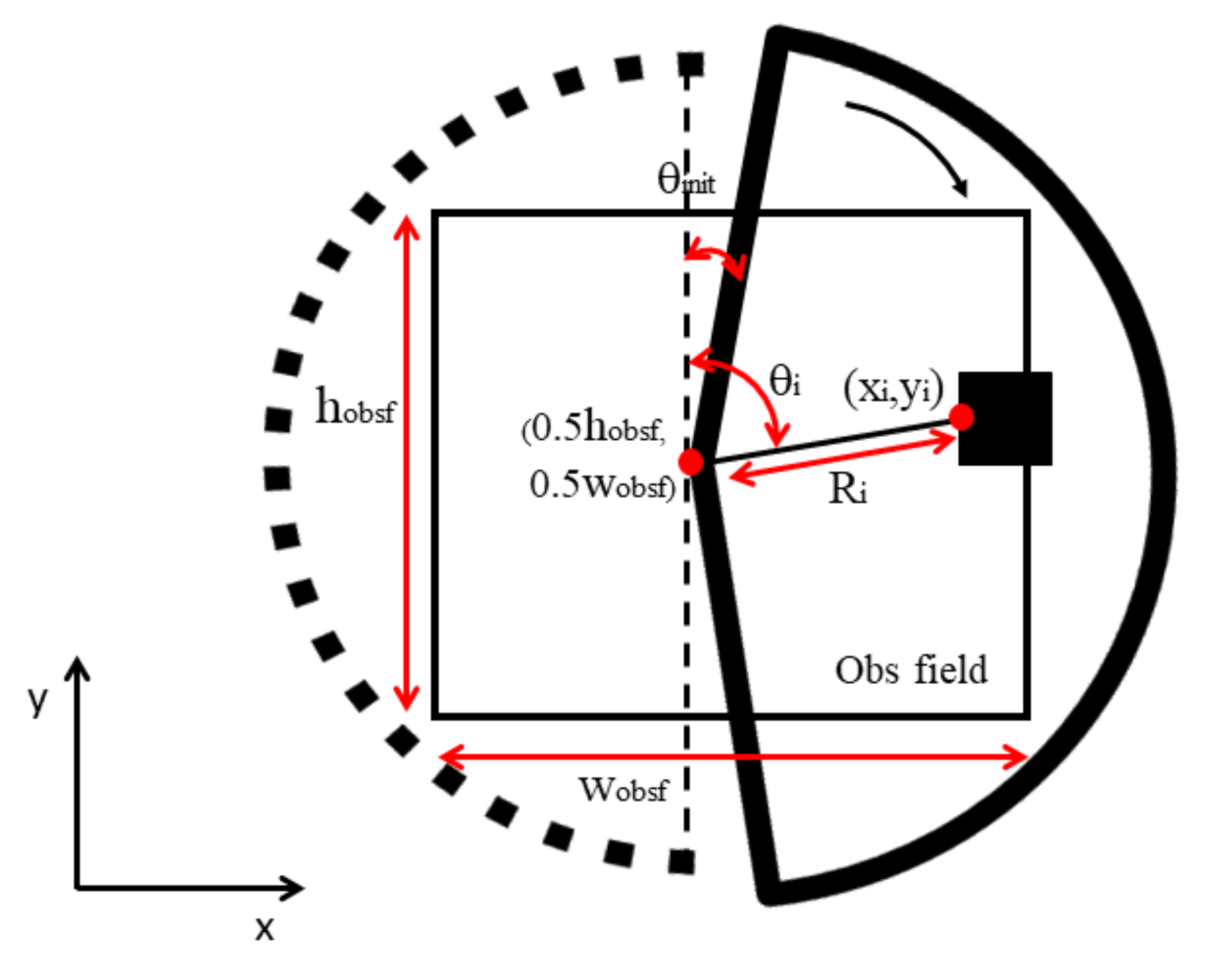

3.1. Building the Obstacle Field Using the Depth Sensor

3.2. Transforming the Obstacle Field to the Euclidean Distance Field

4. Collision-Avoidance Using Euclidean Distance Fields and Rapidly-Exploring Random Tree

4.1. Euclidean Distance Field-Based Collision Detection

4.2. Collision-Avoidance Planning and Replanning

5. Algorithm Complexity

6. Experiments and Results

6.1. Experimental Setup

6.2. Simulation Experimental Results

6.3. Real Flight Experimental Results

7. Discussions

7.1. Robustness of the Proposed Collision-Avoidance Algorithm

7.2. Computing Efficiency of the Proposed Collision-Avoidance Algorithm

7.3. Flight Speeds in Real Flights vs. Flight Speeds in Simulations

7.4. Fail Cases in Simulation Experiments

8. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Sampedro, C.; Bavle, H.; Rodriguez-Ramos, A.; Puente, P.d.; Campoy, P. Laser-Based Reactive Navigation for Multirotor Aerial Robots using Deep Reinforcement Learning. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1024–1031.

2. Lu, L.; Sampedro, C.; Rodriguez-Vazquez, J.; Campoy, P. Laser-based Collision Avoidance and Reactive Navigation using RRT* and Signed Distance Field for Multirotor UAVs. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 1209–1217.

3. Penin, B.; Giordano, P.R.; Chaumette, F. Vision-Based Reactive Planning for Aggressive Target Tracking While Avoiding Collisions and Occlusions. IEEE Robot. Autom. Lett. 2018, 3, 3725–3732.

4. Zingg, S.; Scaramuzza, D.; Weiss, S.; Siegwart, R. MAV navigation through indoor corridors using optical flow. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 3361–3368.

5. Cho, G.; Kim, J.; Oh, H. Vision-Based Obstacle Avoidance Strategies for MAVs Using Optical Flows in 3-D Textured Environments. Sensors 2019, 19, 2523.

6. Chiang, H.; Malone, N.; Lesser, K.; Oishi, M.; Tapia, L. Path-guided artificial potential fields with stochastic reachable sets for motion planning in highly dynamic environments. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2347–2354.

7. Cetin, O.; Yilmaz, G. Real-time Autonomous UAV Formation Flight with Collision and Obstacle Avoidance in Unknown Environment. J. Intell. Robot. Syst. 2016, 84, 415–433.

8. Lin, Z.; Castano, L.; Mortimer, E.; Xu, H. Fast 3D Collision Avoidance Algorithm for Fixed Wing UAS. J. Intell. Robot. Syst. 2020, 97, 577–604.

9. Fulgenzi, C.; Spalanzani, A.; Laugier, C. Dynamic Obstacle Avoidance in uncertain environment combining PVOs and Occupancy Grid. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; pp. 1610–1616.

10. Van den Berg, J.; Snape, J.; Guy, S.J.; Manocha, D. Reciprocal collision avoidance with acceleration-velocity obstacles. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3475–3482.

11. Ma, J.; Lu, H.; Xiao, J.; Zeng, Z.; Zheng, Z. Multi-robot Target Encirclement Control with Collision Avoidance via Deep Reinforcement Learning. J. Intell. Robot. Syst. 2020, 99, 371–386.

12. Lopez, B.T.; How, J.P. Aggressive collision avoidance with limited field-of-view sensing. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1358–1365.

13. Liu, S.; Watterson, M.; Tang, S.; Kumar, V. High speed navigation for quadrotors with limited onboard sensing. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1484–1491.

14. Burri, M.; Oleynikova, H.; Achtelik, M.W.; Siegwart, R. Real-time visual-inertial mapping, re-localization and planning onboard MAVs in unknown environments. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 1872–1878.

15. Chen, J.; Liu, T.; Shen, S. Online generation of collision-free trajectories for quadrotor flight in unknown cluttered environments. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1476–1483.

16. Tordesillas, J.; Lopez, B.T.; How, J.P. FASTER: Fast and Safe Trajectory Planner for Flights in Unknown Environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1934–1940.

17. Chen, H.; Lu, P. Computationally Efficient Obstacle Avoidance Trajectory Planner for UAVs Based on Heuristic Angular Search Method. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 5693–5699.

18. Ryll, M.; Ware, J.; Carter, J.; Roy, N. Efficient Trajectory Planning for High Speed Flight in Unknown Environments. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 732–738.

19. Fu, C.; Ye, J.; Xu, J.; He, Y.; Lin, F. Disruptor-Aware Interval-Based Response Inconsistency for Correlation Filters in Real-Time Aerial Tracking. IEEE Trans. Geosci. Remote Sens. 2020.

20. Bejiga, M.B.; Zeggada, A.; Nouffidj, A.; Melgani, F. A Convolutional Neural Network Approach for Assisting Avalanche Search and Rescue Operations with UAV Imagery. Remote Sens. 2017, 9, 100.

21. Nikolic, J.; Burri, M.; Rehder, J.; Leutenegger, S.; Huerzeler, C.; Siegwart, R. A UAV system for inspection of industrial facilities. In Proceedings of the 2013 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2013; pp. 1–8.

22. Felzenszwalb, P.F.; Huttenlocher, D.P. Distance Transforms of Sampled Functions; Technical Report TR2004-1963; Cornell University: Ithaca, NY, USA, 2004.

23. Richter, C.; Bry, A.; Roy, N. Polynomial trajectory planning for aggressive quadrotor flight in dense indoor environments. In Robotics Research; Springer Tracts in Advanced Robotics; Inaba, M., Corke, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 114.

24. Kamel, M.; Stastny, T.; Alexis, K.; Siegwart, R. Model Predictive Control for Trajectory Tracking of Unmanned Aerial Vehicles Using Robot Operating System. In Robot Operating System (ROS); Studies in Computational Intelligence; Koubaa, A., Ed.; Springer: Cham, Switzerland, 2017; Volume 707.

25. Quigley, M.; Conley, K.; Gerkey, B.P.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the Open-Source Software Workshop of the 2009 International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 1476–1483.

26. Furrer, F.; Burri, M.; Achtelik, M.; Siegwart, R. RotorS-A Modular Gazebo MAV Simulator Framework. In Robot Operating System (ROS); Studies in Computational Intelligence; Koubaa, A., Ed.; Springer: Cham, Switzerland, 2016; Volume 625.

27. Sekhavat, S.; Svestka, P.; Laumond, J.P.; Overmars, M. Multilevel path planning for nonholonomic robots using semi-holonomic subsystems. Int. J. Robot. Res. 1998, 17, 840–857.

28. Gu, S.; Lillicrap, T.; Sutskever, I.; Levine, S. Continuous deep Q-learning with model-based acceleration. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 20–22 June 2016.

| Acronym | Definition |
|---|---|
| MAV | Micro Aerial Vehicle |
| EDF | Euclidean Distance Field |
| OFM | Only Forward Maneuver |
| IMU | Inertial Measurement Unit |
| EDT | Euclidean Distance Transform |
| RRT | Rapidly-Exploring Random Tree |
| ROS | Robot Operating System |
| DDPG | Deep Deterministic Policy Gradient |
| NAF | Normalized Advantage Function |

| Algorithm | PL (m) | TG (s) | AV (m/s) | MV (m/s) | SR (%) |
|---|---|---|---|---|---|
| OFM_HIGH | 10.20 ± 1.85 | 16.03 ± 5.81 | 0.62 ± 0.02 | 2.44 ± 0.19 | 82 |
| OFM_LOW | 10.44 ± 2.22 | 17.53 ± 3.50 | 0.59 ± 0.01 | 1.72 ± 0.21 | 85 |
| DDPG [1] | 6.88 ± 0.81 | 16.3 ± 3.49 | 0.42 ± 0.007 | 1.06 ± 0.20 | 81 |
| NAF [28] | 7.57 ± 0.77 | 15.75 ± 2.88 | 0.48 ± 0.05 | 1.07 ± 0.06 | 87 |

| Algorithm | PL (m) | TG (s) | AV (m/s) | MV (m/s) | SR (%) |
|---|---|---|---|---|---|
| OFM | 4.8731 ± 1.1098 | 18.53 ± 11.78 | 0.28 ± 0.02 | 1.96 ± 0.05 | 95 |

| Authors | Comp. Time (ms) | Authors | Comp. Time (ms) |
|---|---|---|---|
| Liu et al. [13] | >160 | Burri et al. [14] | >40 |
| Chen et al. [15] | >34 | Tordesillas et al. [16] | >25 |
| Chen et al. [17] | ≥18 | Ryll et al. [18] | ≈30 |
| This paper | 25.4 ± 15 (>10.4) | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, L.; Carrio, A.; Sampedro, C.; Campoy, P. A Robust and Fast Collision-Avoidance Approach for Micro Aerial Vehicles Using a Depth Sensor. Remote Sens. 2021, 13, 1796. https://doi.org/10.3390/rs13091796