Optimized Deep Learning Model as a Basis for Fast UAV Mapping of Weed Species in Winter Wheat Crops

Abstract

1. Introduction

2. Materials and Methods

2.1. The UAV Image Data Set and Plant Annotation

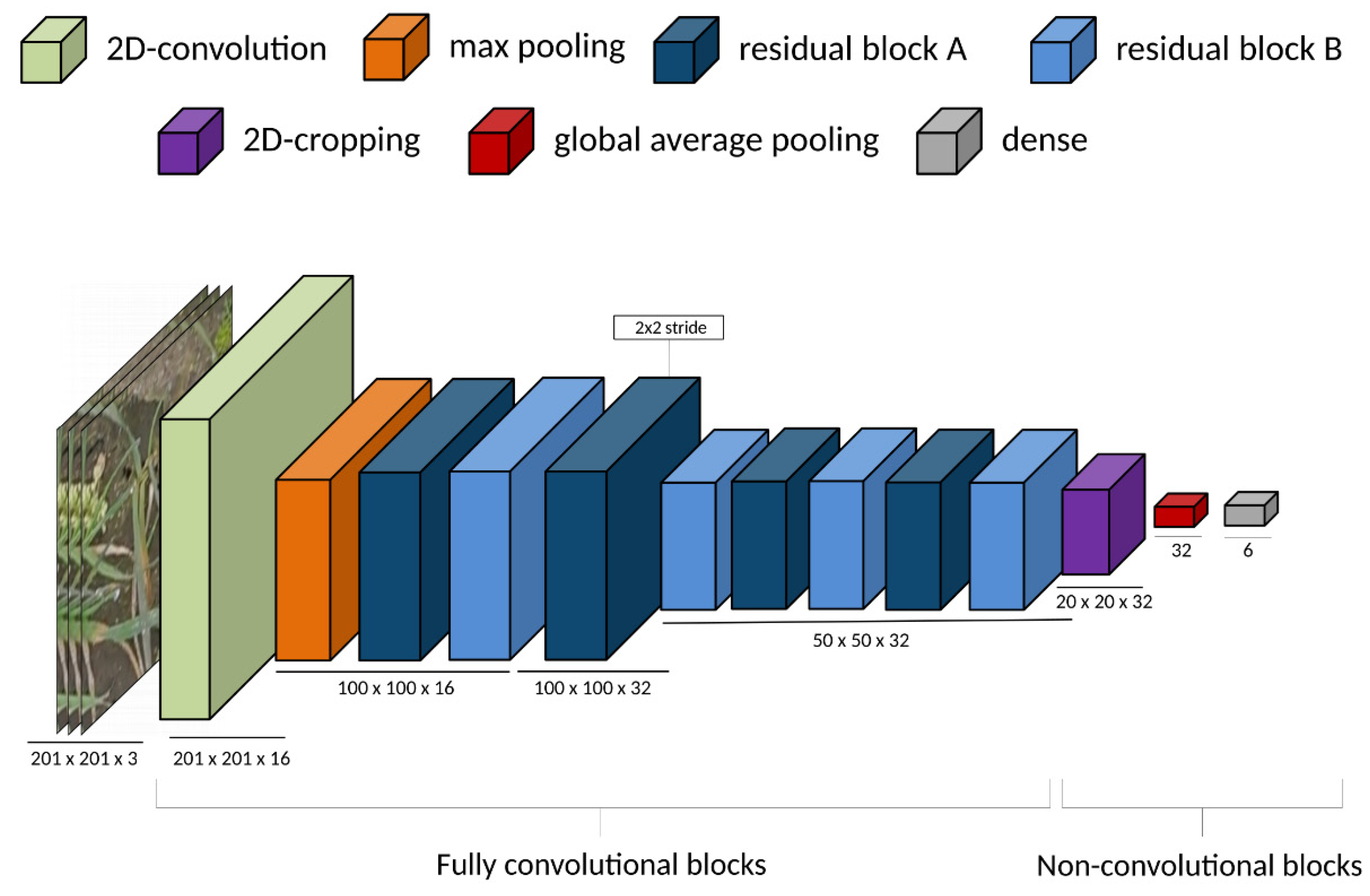

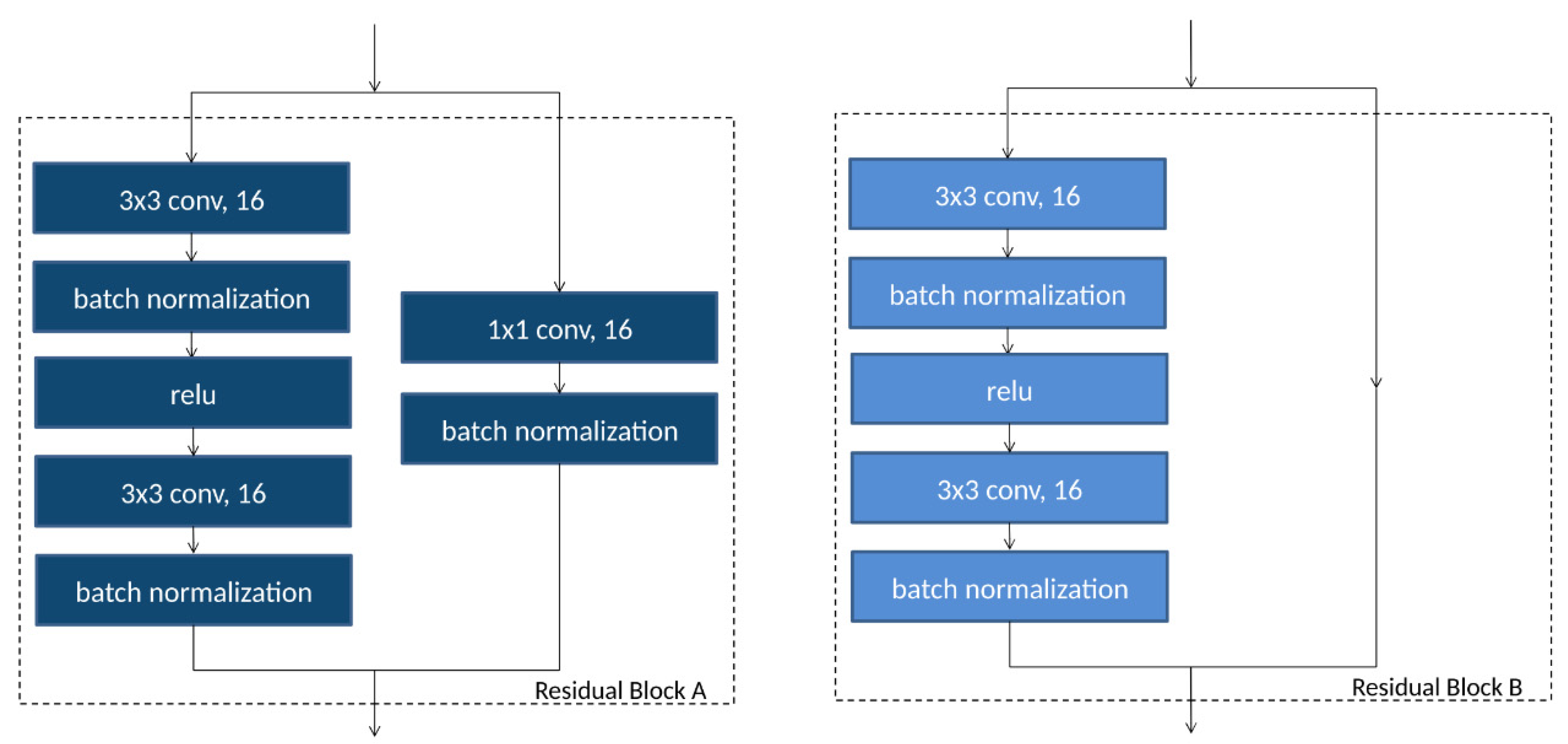

2.2. The Image Classifier Base Architecture

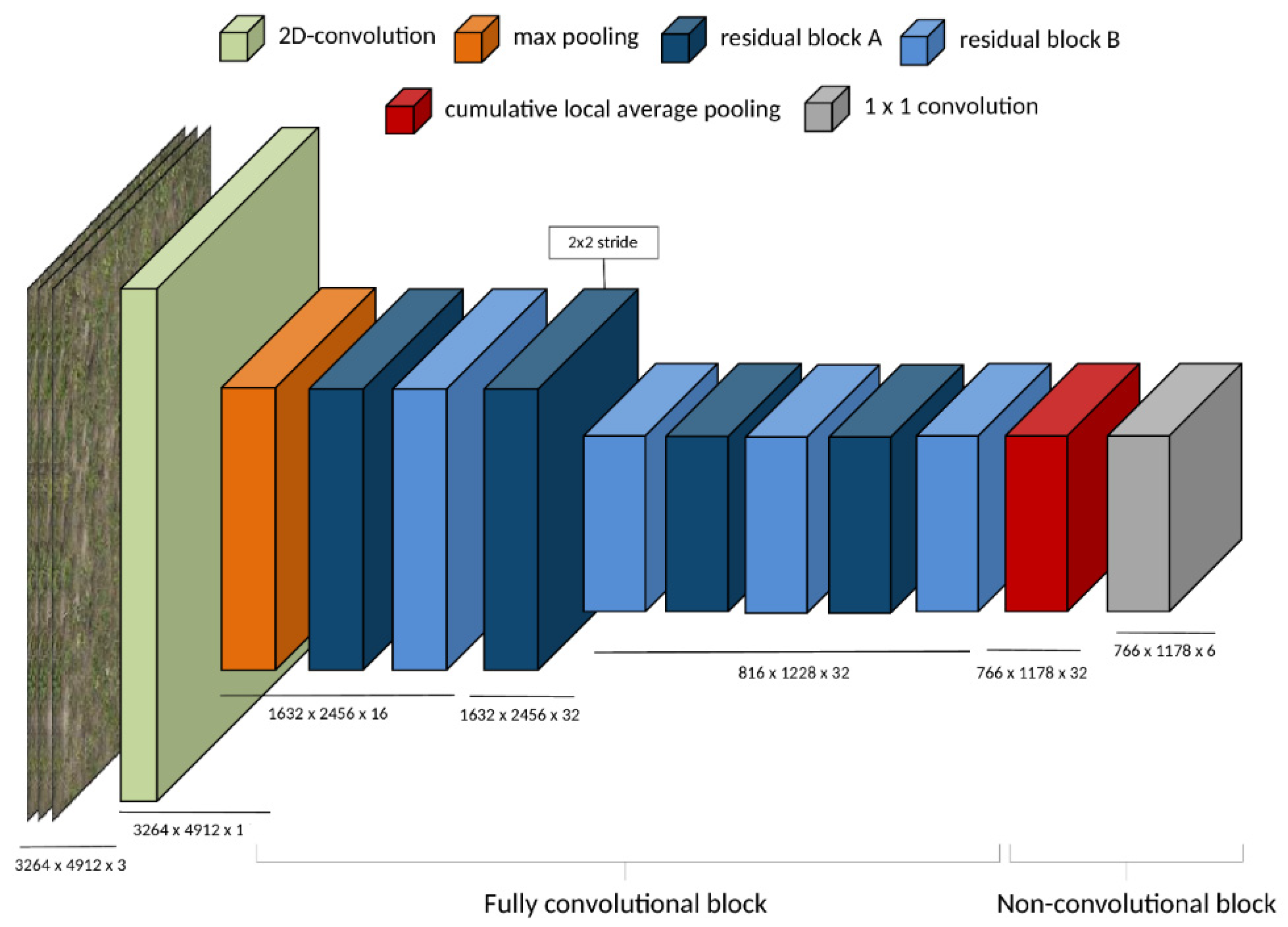

2.3. Optimizing Computational Performance for Creating Weed Maps

2.4. Testing the Accuracy of the Image Classifier and Its Prediction Performance (Model Training and Testing)

3. Results

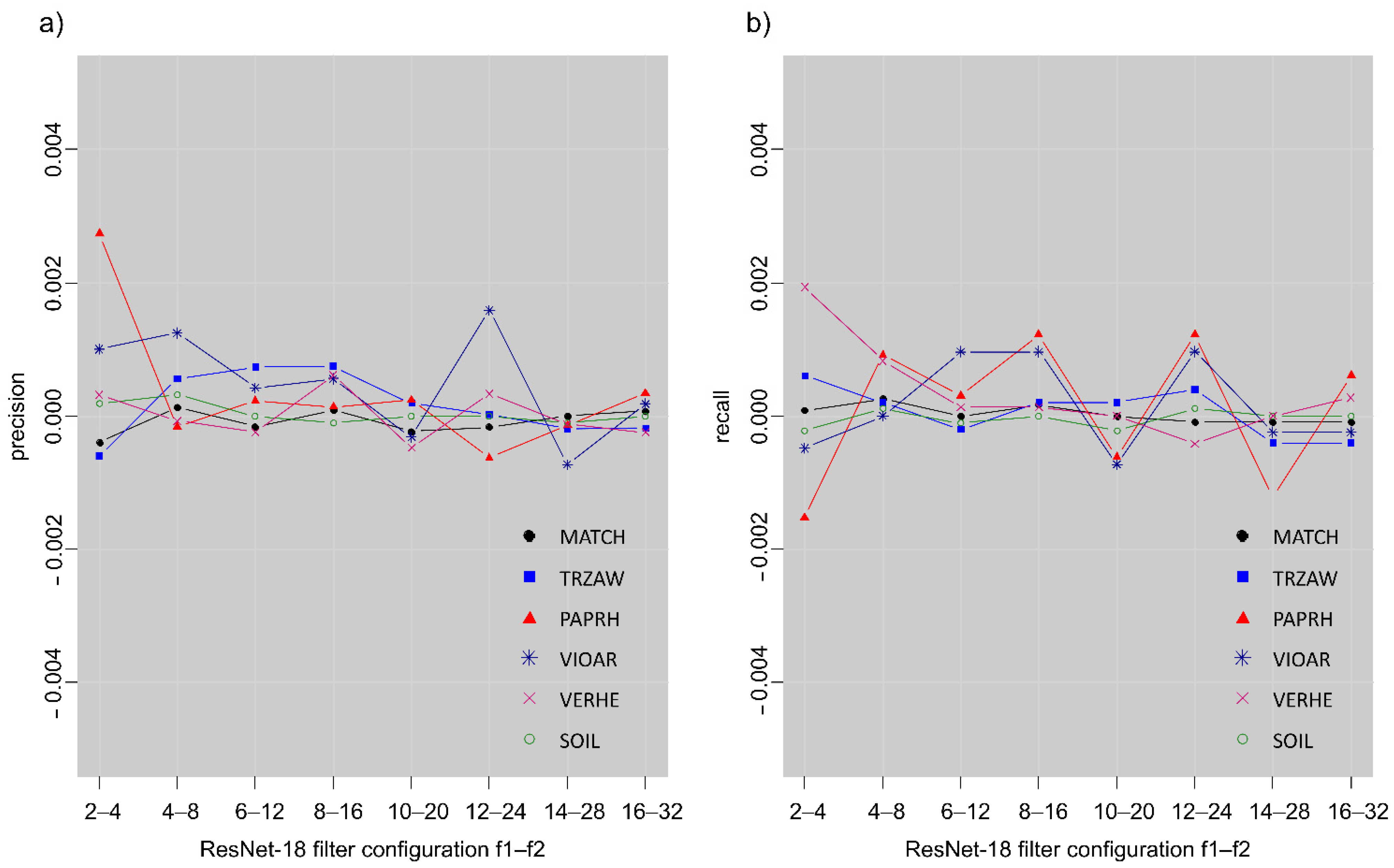

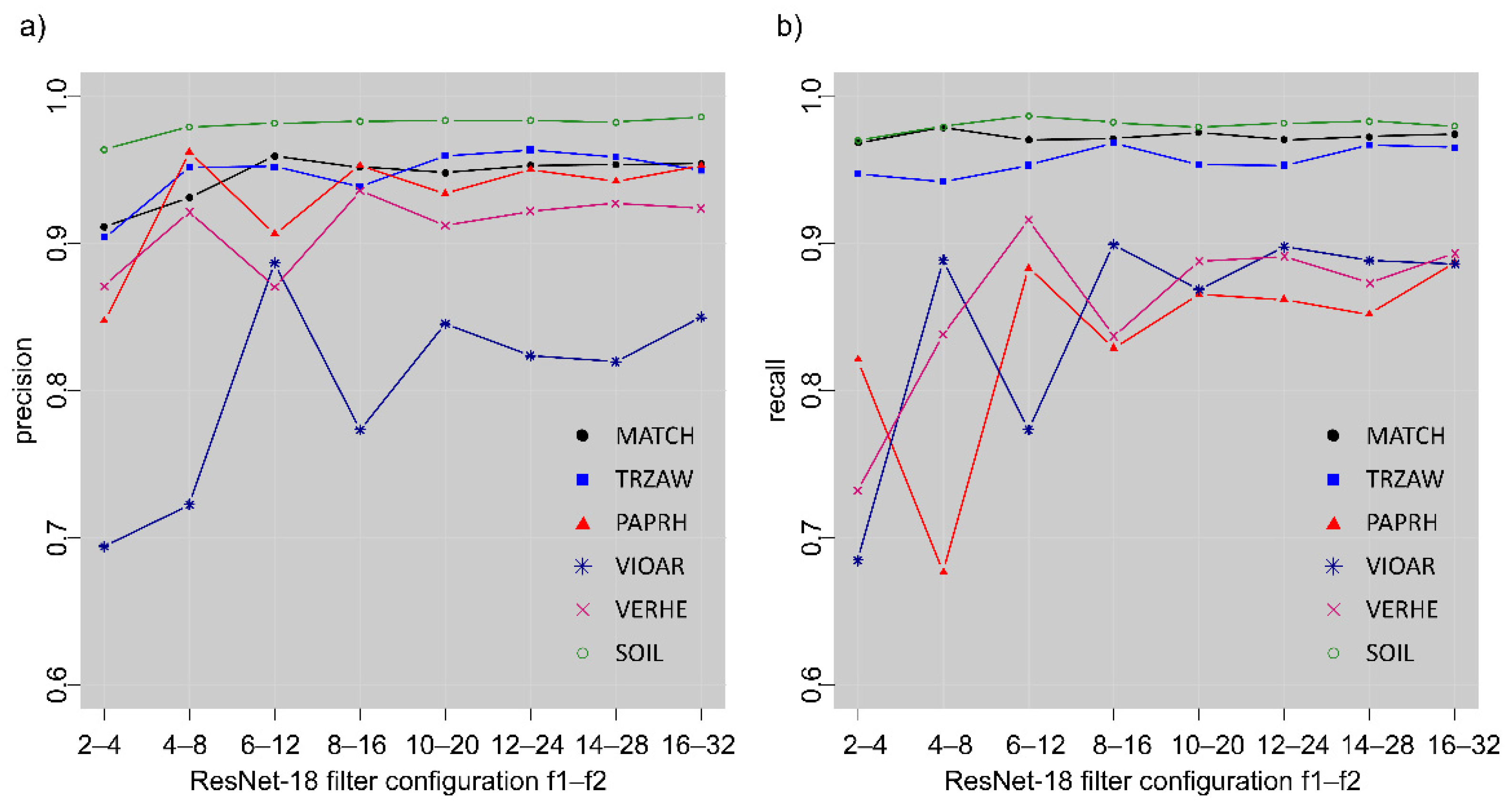

3.1. Overall Performance of the ResNet-18 Image-Level Classifier Regarding 32-Bit and 16-Bit Precision

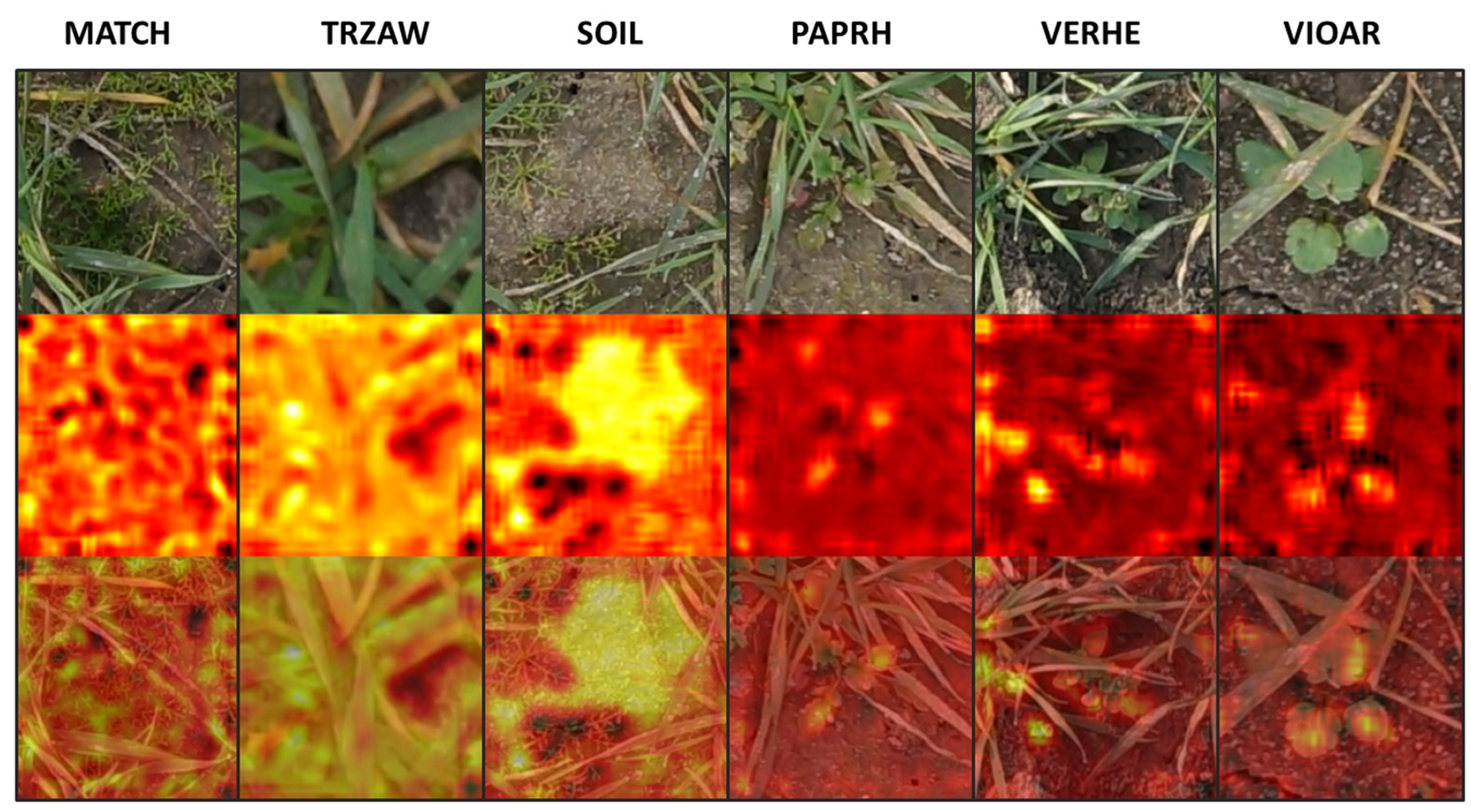

3.2. Class-Specific Prediction Quality Assessment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Filter 1 | Filter 2 | Optimizer | Learning Rate |
|---|---|---|---|
| 2 | 4 | Adam | 0.01 |
| 4 | 8 | Adam | 0.01 |
| 6 | 12 | Adam | 0.01 |
| 8 | 16 | Adam | 0.01 |
| 10 | 20 | Adam | 0.01 |
| 12 | 24 | Adam | 0.01 |
| 14 | 28 | Adam | 0.01 |
| 16 | 32 | Adam | 0.01 |
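
The eight configurations in the table above follow a simple pattern: Filter 1 runs from 2 to 16 in steps of 2, Filter 2 is always twice Filter 1, and the optimizer (Adam) and learning rate (0.01) are held fixed. The minimal Python sketch below regenerates that grid. It additionally assumes, as a hypothetical reading that this excerpt does not confirm, that Filter 1 and Filter 2 are the channel widths of two consecutive convolutional stages; under that assumption it counts the weights of a single 3×3 convolution between the two stages. The names `Config`, `build_grid`, and `conv3x3_params` are illustrative only and are not taken from the study's code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Config:
    """One row of the hyperparameter table."""
    filter1: int
    filter2: int
    optimizer: str = "Adam"
    learning_rate: float = 0.01


def build_grid() -> List[Config]:
    # Filter 1 = 2, 4, ..., 16; Filter 2 is always twice Filter 1.
    return [Config(filter1=f, filter2=2 * f) for f in range(2, 17, 2)]


def conv3x3_params(c_in: int, c_out: int, bias: bool = True) -> int:
    # Hypothetical cost model (assumption): weights of one 3x3 convolution
    # mapping c_in input channels to c_out output channels, plus optional biases.
    return 3 * 3 * c_in * c_out + (c_out if bias else 0)


if __name__ == "__main__":
    for cfg in build_grid():
        n = conv3x3_params(cfg.filter1, cfg.filter2)
        print(f"{cfg.filter1:>2} -> {cfg.filter2:>2}: {n:>5} parameters "
              f"({cfg.optimizer}, lr={cfg.learning_rate})")
```

Under this assumed cost model, moving from the narrowest (2 → 4) to the widest (16 → 32) configuration multiplies the layer's parameter count by roughly 64 (76 vs. 4640), because both the input and output widths grow eightfold, while the 32-bit score in the following table rises only from 0.883 to 0.944.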

| Filter 1 | Filter 2 | 32-Bit | 16-Bit | Difference (32-Bit − 16-Bit) |
|---|---|---|---|---|
| 2 | 4 | 0.883 | 0.883 | −0.000222 |
| 4 | 8 | 0.916 | 0.916 | −0.000345 |
| 6 | 12 | 0.935 | 0.935 | −0.000098 |
| 8 | 16 | 0.930 | 0.931 | −0.000295 |
| 10 | 20 | 0.938 | 0.938 | 0.000148 |
| 12 | 24 | 0.941 | 0.941 | −0.000172 |
| 14 | 28 | 0.939 | 0.938 | 0.000197 |
| 16 | 32 | 0.944 | 0.944 | 0.000000 |
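
All differences between 32-bit and 16-bit precision in the table above are below 0.001. One way to see why is to emulate half-precision rounding directly. The Python sketch below uses the standard library's `struct` format codes `"f"` (IEEE 754 binary32) and `"e"` (binary16) to round values to each precision. It is an illustration only, not the conversion pipeline used in the study, and the class scores in it are hypothetical. For values in the normal range, rounding to binary16 changes each value by at most a relative 2^-11, so the predicted class (the argmax over class scores) can only change when two scores are nearly tied.

```python
import struct
from typing import Callable, Sequence

# Maximum relative rounding error of binary16 (round-to-nearest, normal range).
HALF_UNIT_ROUNDOFF = 2.0 ** -11


def to_float32(x: float) -> float:
    """Round a Python float to IEEE 754 single precision and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_float16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def argmax(scores: Sequence[float]) -> int:
    # max() returns the first maximal item, so exact ties resolve to the lower index.
    return max(range(len(scores)), key=lambda i: scores[i])


def predict(scores: Sequence[float], cast: Callable[[float], float]) -> int:
    """Round every score with `cast`, then pick the highest-scoring class."""
    return argmax([cast(s) for s in scores])


if __name__ == "__main__":
    clear = [2.31, 0.51, -1.07, 0.99]  # hypothetical scores with a clear winner
    near_tie = [1.0002, 1.0003]        # hypothetical scores that are almost tied
    for name, scores in [("clear", clear), ("near tie", near_tie)]:
        print(name, predict(scores, to_float32), predict(scores, to_float16))
```

With a clear winner both precisions agree, whereas the near tie collapses to two identical half-precision values (both round to 1.0) and the prediction flips. Such flips occur only for rare, borderline samples, which is consistent with accuracy differences on the order of 10^-4.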

| True / Predicted | MATCH | TRZAW | SOIL | PAPRH | VERHE | VIOAR | Recall | CV (%) |
|---|---|---|---|---|---|---|---|---|
| MATCH | 2307 | 13 | 17 | 5 | 8 | 15 | 0.98 | 0.68 |
| TRZAW | 21 | 951 | 2 | 4 | 11 | 7 | 0.95 | 1.31 |
| SOIL | 29 | 1 | 1798 | 0 | 1 | 7 | 0.98 | 0.67 |
| PAPRH | 18 | 4 | 3 | 562 | 35 | 23 | 0.87 | 4.40 |
| VERHE | 8 | 11 | 2 | 5 | 739 | 66 | 0.89 | 5.19 |
| VIOAR | 47 | 10 | 3 | 14 | 85 | 1293 | 0.89 | 5.01 |
| Precision | 0.95 | 0.96 | 0.99 | 0.95 | 0.84 | 0.92 | Overall accuracy | 0.94 |
| CV (%) | 1.11 | 1.05 | 0.45 | 4.06 | 5.66 | 3.22 | | |
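
The recall, precision, and overall accuracy in the confusion matrix follow directly from its counts. Rows are the true classes, so recall is the diagonal count divided by the row total, precision is the diagonal count divided by the column total, and overall accuracy is the trace divided by the grand total (7650 of 8125 samples, or 0.94). The short Python sketch below recomputes these values from the table's counts; the function names are illustrative. The CV (%) values are not recomputed, since a coefficient of variation cannot be derived from a single matrix of counts.

```python
from typing import Dict, List

CLASSES = ["MATCH", "TRZAW", "SOIL", "PAPRH", "VERHE", "VIOAR"]

# Rows = true class, columns = predicted class (counts copied from the table above).
CONFUSION = [
    [2307, 13, 17, 5, 8, 15],
    [21, 951, 2, 4, 11, 7],
    [29, 1, 1798, 0, 1, 7],
    [18, 4, 3, 562, 35, 23],
    [8, 11, 2, 5, 739, 66],
    [47, 10, 3, 14, 85, 1293],
]


def per_class_metrics(matrix: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    n = len(matrix)
    metrics: Dict[str, Dict[str, float]] = {}
    for i, name in enumerate(labels):
        tp = matrix[i][i]
        row_total = sum(matrix[i])                       # samples whose true class is i
        col_total = sum(matrix[r][i] for r in range(n))  # samples predicted as class i
        metrics[name] = {"recall": tp / row_total, "precision": tp / col_total}
    return metrics


def overall_accuracy(matrix: List[List[int]]) -> float:
    correct = sum(matrix[i][i] for i in range(len(matrix)))  # trace
    total = sum(sum(row) for row in matrix)                  # all samples
    return correct / total


if __name__ == "__main__":
    for name, m in per_class_metrics(CONFUSION, CLASSES).items():
        print(f"{name}: recall={m['recall']:.2f} precision={m['precision']:.2f}")
    print(f"overall accuracy={overall_accuracy(CONFUSION):.2f}")
```

Rounded to two decimals, the output matches the Recall column, the Precision row, and the overall accuracy of 0.94 reported in the table.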

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


de Camargo, T.; Schirrmann, M.; Landwehr, N.; Dammer, K.-H.; Pflanz, M. Optimized Deep Learning Model as a Basis for Fast UAV Mapping of Weed Species in Winter Wheat Crops. Remote Sens. 2021, 13, 1704. https://doi.org/10.3390/rs13091704
