City-Scale Mapping of Urban Façade Color Using Street-View Imagery

Abstract

1. Introduction

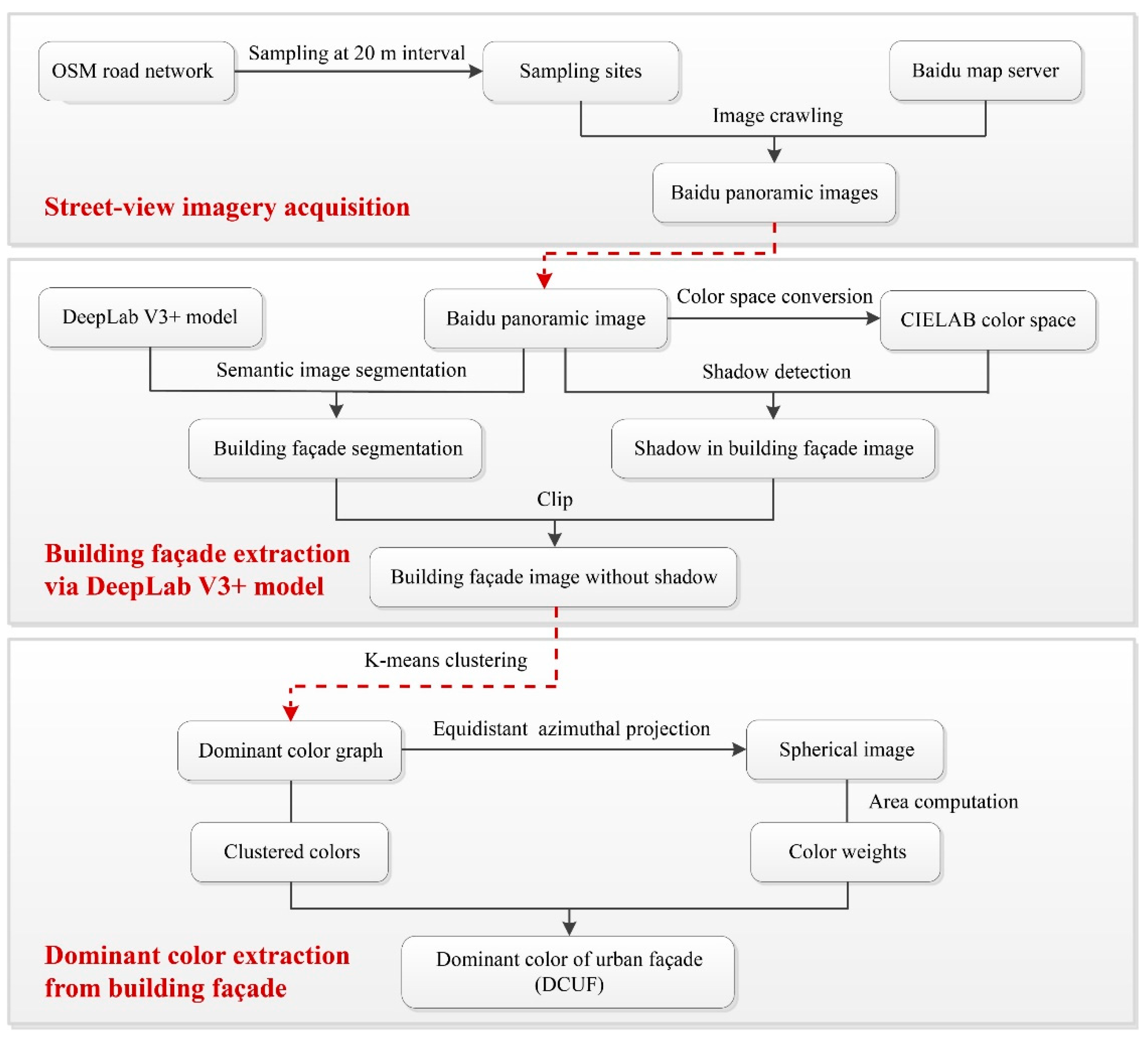

2. Materials and Methods

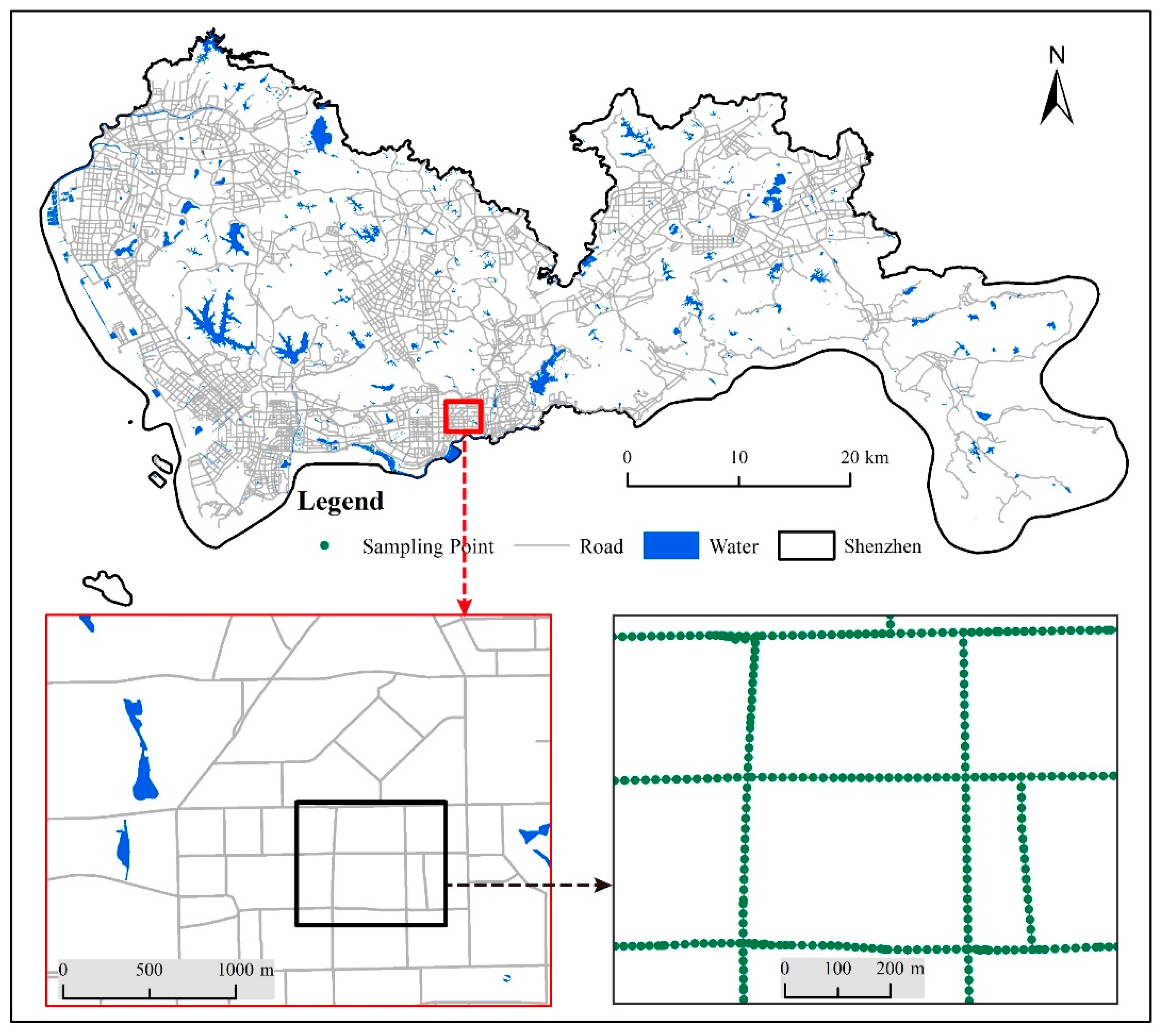

2.1. Study Area

2.2. Dominant Color of the Urban Façade (DCUF)

- As the CBCC-240 uses the Munsell color system to represent colors, the Munsell codes of its 240 standard colors are converted to the HSV color space to obtain their corresponding HSV values.

- The cluster colors obtained by K-means clustering of the urban façade images are matched to the 240 standard colors in the CBCC-240 by selecting the standard color with the smallest Euclidean distance (d) between HSV values. The Euclidean distance between the HSV value of a K-means cluster color and the HSV value of each of the 240 standard colors in the CBCC-240 is calculated using Equation (2) [35]:
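The matching step in the second item can be sketched in Python. Equation (2) itself is not reproduced in this text, so the sketch assumes, as an illustration, the cone form of the HSV Euclidean distance: each color is mapped to Cartesian coordinates (V·S·cos H, V·S·sin H, V) before the distance is taken. The six-entry palette reuses Munsell/HSV pairs from the example DCUF table in this document as a stand-in for the full CBCC-240, and the function names `hsv_to_cone`, `hsv_distance` and `match_standard_color` are hypothetical.

```python
import math
from typing import Dict, Tuple

# (H in degrees 0-360, S in [0, 1], V in [0, 1]), as in the table's HSV tuples
HSV = Tuple[float, float, float]

# Stand-in palette: a few Munsell/HSV pairs taken from the example DCUF table.
# The real CBCC-240 has 240 entries converted from Munsell notation.
PALETTE: Dict[str, HSV] = {
    "6.9 YR 6.5/2": (21, 0.22, 0.69),
    "0.6 YR 5/4.8": (11, 0.43, 0.62),
    "9.4 RP 9/1": (7, 0.03, 0.93),
    "7.5 GY 9/1": (30, 0.01, 0.94),
    "2.5 RP 5.5/1": (333, 0.07, 0.51),
    "10 B 7.5/1": (202, 0.06, 0.74),
}


def hsv_to_cone(color: HSV) -> Tuple[float, float, float]:
    """Map HSV onto Cartesian HSV-cone coordinates (assumed form of Equation (2))."""
    h, s, v = color
    angle = math.radians(h)
    return (v * s * math.cos(angle), v * s * math.sin(angle), v)


def hsv_distance(a: HSV, b: HSV) -> float:
    """Euclidean distance d between two HSV colors in cone coordinates."""
    return math.dist(hsv_to_cone(a), hsv_to_cone(b))


def match_standard_color(cluster: HSV, palette: Dict[str, HSV]) -> Tuple[str, float]:
    """Return the Munsell code of the closest standard color and its distance d."""
    best = min(palette, key=lambda code: hsv_distance(cluster, palette[code]))
    return best, hsv_distance(cluster, palette[best])


if __name__ == "__main__":
    # Hypothetical K-means cluster centers for one façade image.
    clusters = [(22, 0.20, 0.70), (0, 0.02, 0.93)]
    for c in clusters:
        code, d = match_standard_color(c, PALETTE)
        print(c, "->", code, round(d, 4))
```

Because hue is an angle, a plain Euclidean distance on raw (H, S, V) values would treat 359° and 1° as far apart and let the hue term dominate S and V. The cone mapping avoids both problems, and nearly unsaturated colors collapse toward the V axis, where hue no longer affects the distance.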

2.3. Baidu Street View (BSV) Panorama Acquisition

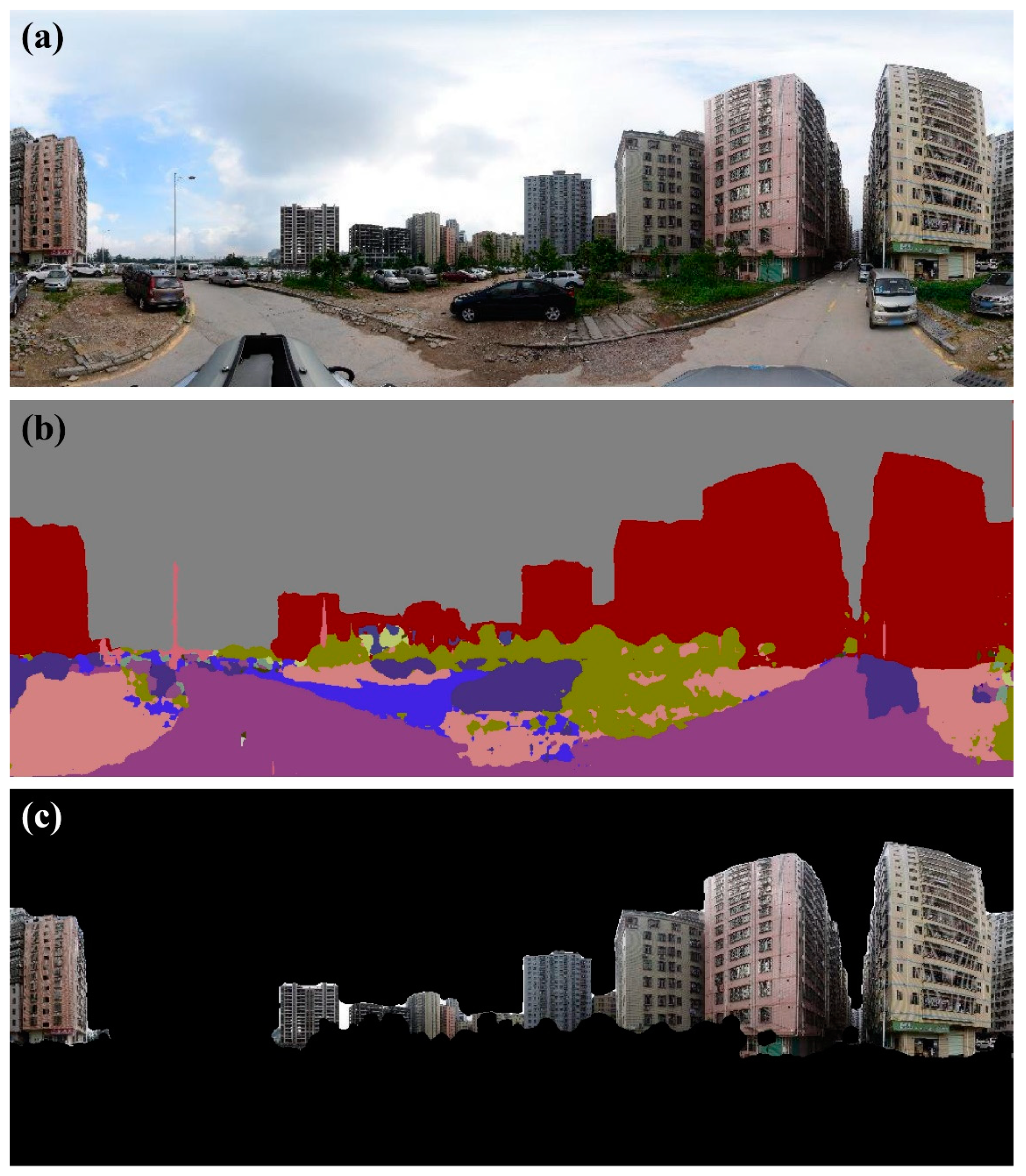

2.4. Extraction of the Urban Façade from BSV

2.4.1. Deep Learning-Based Extraction of the Urban Façade

2.4.2. Shadow Detection of the Urban Façade Using CIELAB Color Space

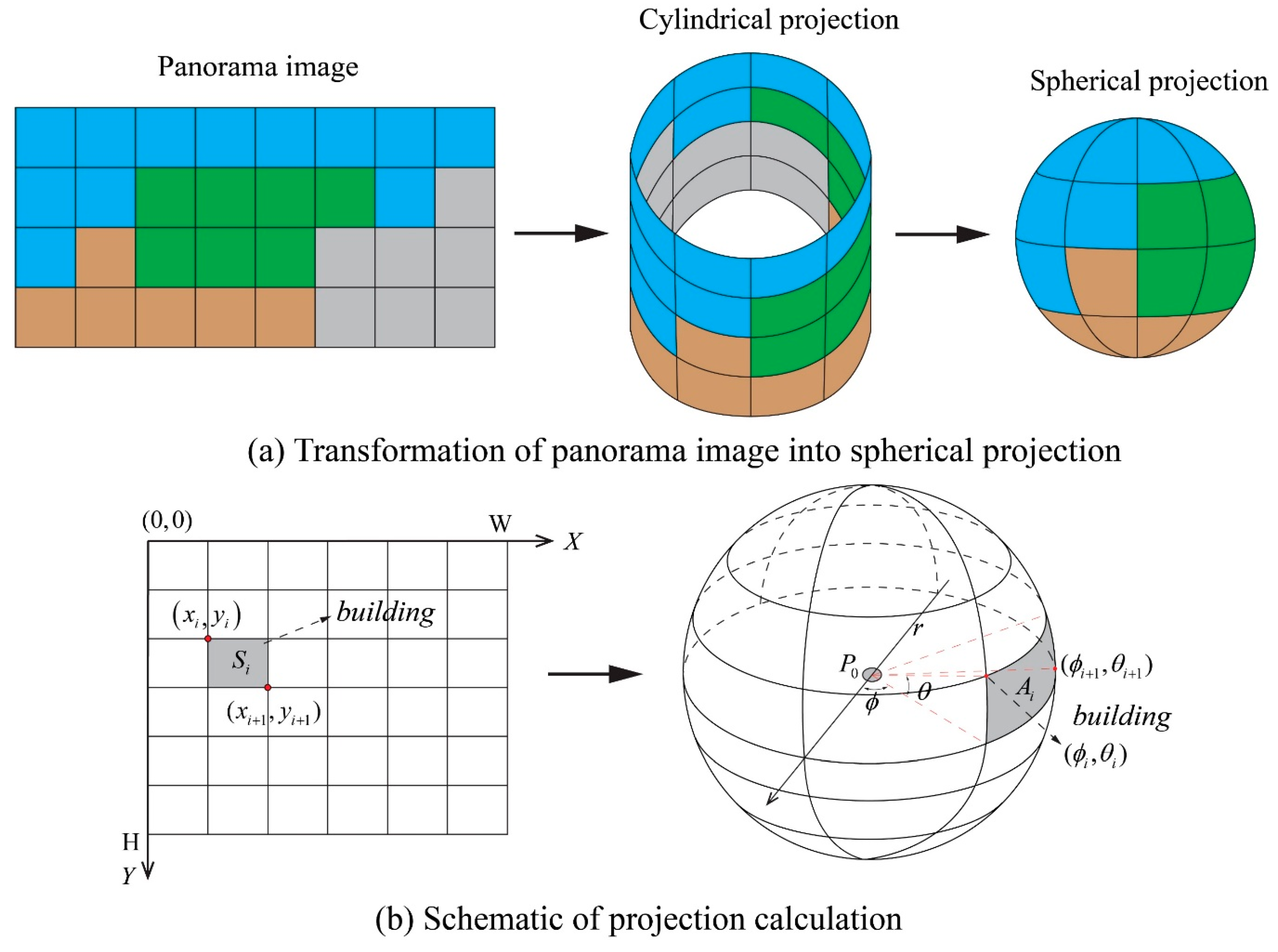

2.5. Determination of the Weight of the Dominant Color in the DCUF

3. Results

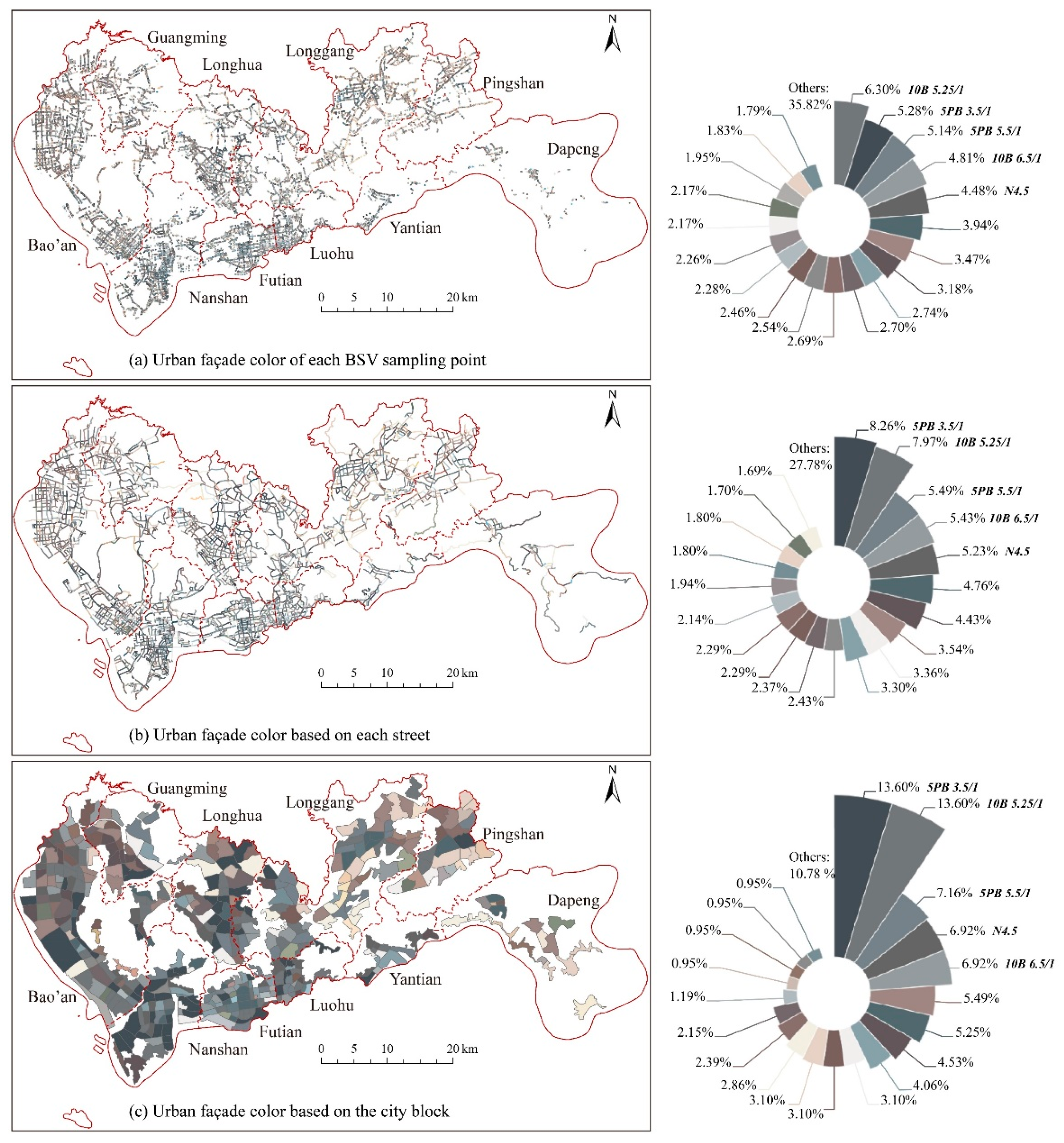

3.1. Mapping of Urban Façade Color Based on Street and City Block

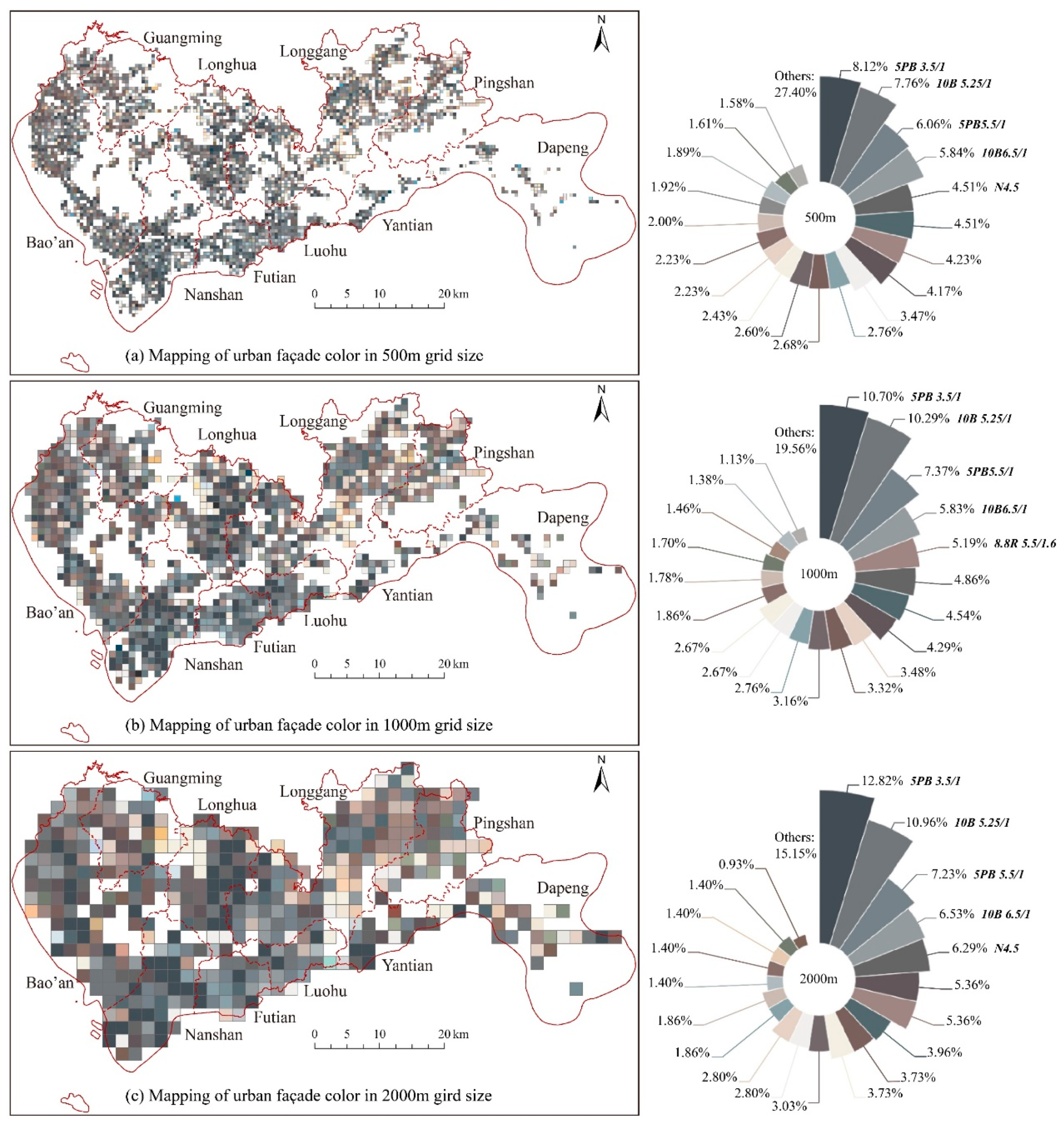

3.2. Mapping of the Urban Façade Color Based on a Regular Grid

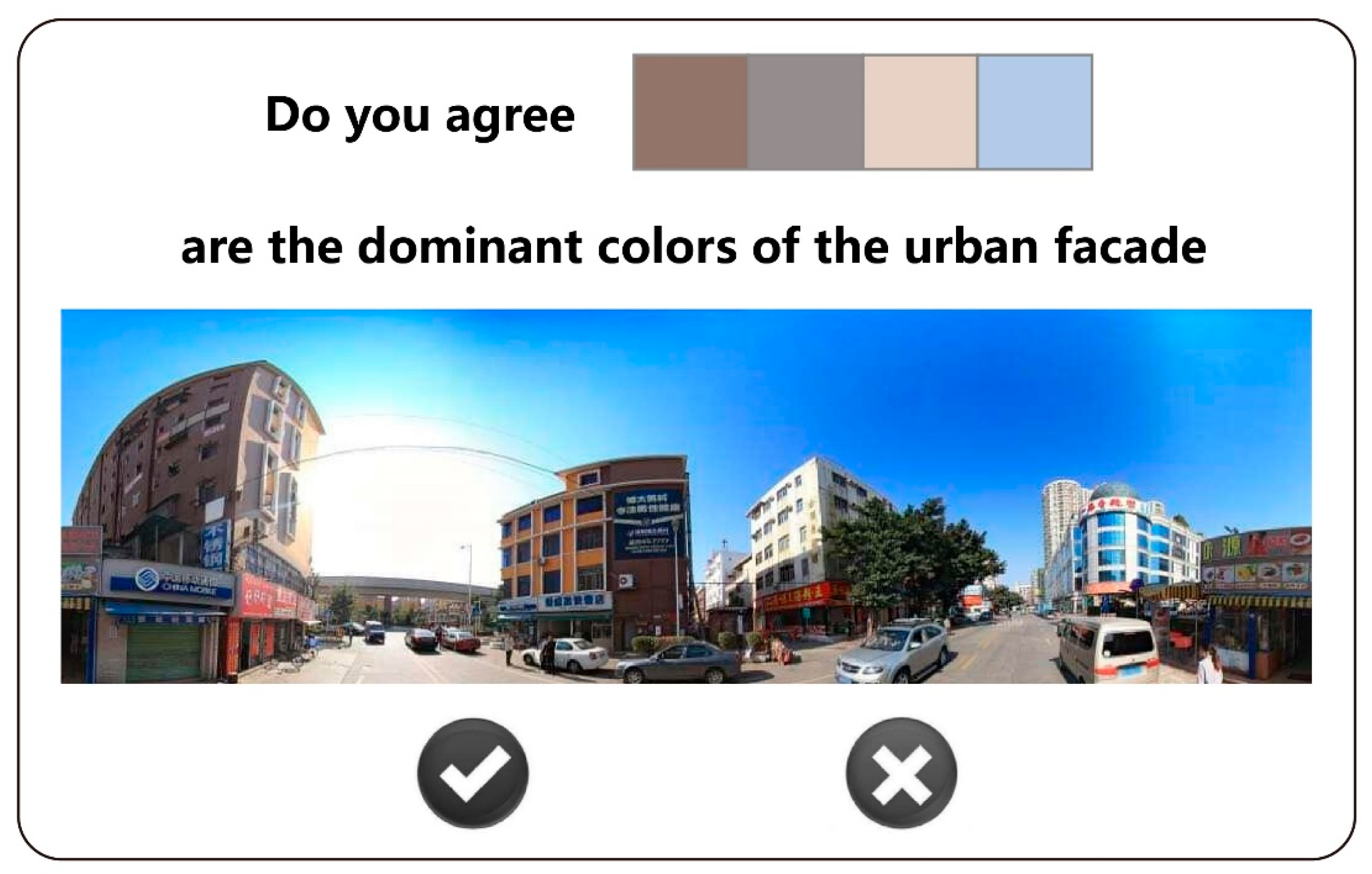

3.3. Result Verification

4. Discussion

4.1. Measuring the Urban Façade Color at the City Scale

4.2. Contributions for Precise Urban Planning and Design

4.3. Combining Urban Science with Urban Design: A Data-Informed, Algorithm-Driven Perspective

4.4. Limitations and Future Steps

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ye, Y.; Zhang, Z.; Zhang, X.; Zeng, W. Human-scale Quality on Streets: A Large-scale and Efficient Analytical Approach Based on Street View Images and New Urban Analytical Tools. Urban Plan. Int. 2019, 34, 18–27.

- Gou, A.P.; Wang, J.B. A comparison between wishes and status: Gray is not the preference for residents while the city shows neutral colors. Color Res. Appl. 2017, 42, 650–663.

- Markevych, I.; Schoierer, J.; Hartig, T.; Chudnovsky, A.; Hystad, P.; Dzhambov, A.M.; De Vries, S.; Triguero-Mas, M.; Brauer, M.; Nieuwenhuijsen, M.J. Exploring pathways linking greenspace to health: Theoretical and methodological guidance. Environ. Res. 2017, 158, 301–317.

- Labib, S.M.; Lindley, S.; Huck, J.J. Spatial dimensions of the influence of urban green-blue spaces on human health: A systematic review. Environ. Res. 2020, 180, 108869.

- Gou, A.P.; Wang, J.B. Research on the location characters of urban color plan in China. Color Res. Appl. 2008, 33, 68–76.

- Tosca, T.F. Environmental colour design for the third millennium: An evolutionary standpoint. Color Res. Appl. 2002, 27, 441–454.

- De Mattiello, M.L.F.; Rabuini, E. Colours in La Boca: Patrimonial Identity in the Urban Landscape. Color Res. Appl. 2011, 36, 222–228.

- Linton, H.; Linton, R. Color in Architecture: Design Methods for Buildings, Interiors, and Urban Spaces; McGraw-Hill: New York, NY, USA, 1999.

- Caivano, J.L. Research on color in architecture and environmental design: Brief history, current developments, and possible future. Color Res. Appl. 2006, 31, 350–363.

- Gou, A. Method of urban color plan based on spatial configuration. Color Res. Appl. 2013, 38, 65–72.

- Xu, Z.; Zheng, X. Roadmap of moving urban colour toward cultural sustainability in China. Color Res. Appl. 2021, 46, 222–239.

- Gouaich, Y.; Mebrouki, A.; Ghariri, R.; Mebarki, A.; Belabbas, A.; Manav, B.; Serra, J.; Llopis, J.; Torres, A. A novel method for assessing the chromatic integration of architecture in the Ksourian landscape of M’zab Valley, Algeria. Color Res. Appl. 2018, 43, 760–778.

- Garcia-Codoner, A.; Verdu, J.L.; Barchino, A.T.; Guillen, R.V.; Lluch, J.S. Colour as a Structural Variable of Historical Urban Form. Color Res. Appl. 2009, 34, 253–265.

- Mohammadgholipour, M.; Kirchner, E.; Salleh, E. Quantifying the color palettes of Naghsh-e Jahan Square in Isfahan. Color Res. Appl. 2020, 45, 911–925.

- Staresinic, M.; Simoncic, B.; Bracko, S. Using a Digital Camera to Identify Colors in Urban Environments. J. Imaging Sci. Technol. 2011, 55, 060201.

- Nguyen, L.; Embrechts, J.J.; Teller, J. Colour in the city: A chromatic typology for the quantitative analysis of spatial coherence. Landsc. Res. 2020, 45, 44–62.

- Li, M.; Xu, J.G.; Zhang, X. Spatial-Sensitivity Analysis for Urban Color Planning: Study of Luoyang City, China. J. Urban Plan. Dev. 2017, 143, 05016014.

- Ibrahim, M.R.; Haworth, J.; Cheng, T. Understanding cities with machine eyes: A review of deep computer vision in urban analytics. Cities 2020, 96, 102481.

- Li, X.; Ratti, C. Mapping the spatio-temporal distribution of solar radiation within street canyons of Boston using Google Street View panoramas and building height model. Landsc. Urban Plan. 2019, 191, 103387.

- Lu, Y. Using Google Street View to investigate the association between street greenery and physical activity. Landsc. Urban Plan. 2019, 191, 103435.

- Chen, L.; Lu, Y.; Sheng, Q.; Ye, Y.; Wang, R.; Liu, Y. Estimating pedestrian volume using Street View images: A large-scale validation test. Comput. Environ. Urban Syst. 2020, 81, 101481.

- Ye, Y.; Richards, D.; Lu, Y.; Song, X.; Zhuang, Y.; Zeng, W.; Zhong, T. Measuring daily accessed street greenery: A human-scale approach for informing better urban planning practices. Landsc. Urban Plan. 2018, 191, 103434.

- Cao, R.; Zhu, J.; Tu, W.; Li, Q.; Cao, J.; Liu, B.; Zhang, Q.; Qiu, G. Integrating Aerial and Street View Images for Urban Land Use Classification. Remote Sens. 2018, 10, 1553.

- Krylov, V.A.; Kenny, E.; Dahyot, R. Automatic Discovery and Geotagging of Objects from Street View Imagery. Remote Sens. 2018, 10, 661.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Liang, J.; Gong, J.; Sun, J.; Zhou, J.; Li, W.; Li, Y.; Liu, J.; Shen, S. Automatic sky view factor estimation from street view photographs—A big data approach. Remote Sens. 2017, 9, 411.

- Ye, Y.; Li, D.; Liu, X. How block density and typology affect urban vitality: An exploratory analysis in Shenzhen, China. Urban Geogr. 2018, 39, 631–652.

- Abdel-Mottaleb, M.; Krishnamachari, S. Multimedia descriptions based on MPEG-7: Extraction and applications. IEEE Trans. Multimed. 2004, 6, 459–468.

- Li, L.; Zhang, X.Q.; Hu, W.M.; Li, W.Q.; Zhu, P.F. Soccer Video Shot Classification Based on Color Characterization Using Dominant Sets Clustering. In Advances in Multimedia Information Processing – PCM 2009; Muneesawang, P., Wu, F., Kumazawa, I., Roeksabutr, A., Liao, M., Tang, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5879, pp. 923–929.

- Mustaffa, M.R.; Ahmad, F.; Rahmat, R.; Mahmod, R. Dominant Colour Descriptor with Spatial Information for Content-Based Image Retrieval. In Proceedings of the International Symposium of Information Technology 2008, Kuala Lumpur, Malaysia, 26–29 August 2008; Volume 1–4, pp. 1612–1620.

- Celebi, M.E. Improving the performance of k-means for color quantization. Image Vis. Comput. 2011, 29, 260–271.

- Feng, Z.L.; Yuan, W.L.; Fu, C.L.; Lei, J.; Song, M.L. Finding intrinsic color themes in images with human visual perception. Neurocomputing 2018, 273, 395–402.

- Kim, D.W.; Lee, K.H.; Lee, D. A novel initialization scheme for the fuzzy c-means algorithm for color clustering. Pattern Recognit. Lett. 2004, 25, 227–237.

- Maddalena, L.; Petrosino, A. The SOBS algorithm: What are the limits? In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 21–26.

- Ding, B.; Long, C.; Zhang, L.; Xiao, C. ARGAN: Attentive Recurrent Generative Adversarial Network for Shadow Detection and Removal. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10212–10221.

- Yarlagadda, S.K.; Zhu, F. A Reflectance Based Method For Shadow Detection and Removal. In 2018 IEEE Southwest Symposium on Image Analysis and Interpretation; IEEE: Piscataway Township, NJ, USA, 2018; pp. 9–12.

- Zhu, T.; Yin, X. Image Shadow Detection and Removal in Autonomous Vehicle Based on Support Vector Machine. Sens. Mater. 2020, 32, 1969–1979.

- Suny, A.H.; Mithila, N.H. A Shadow Detection and Removal from a Single Image Using LAB Color Space. Int. J. Comput. Sci. Issues 2013, 10, 270.

- Barbierato, E.; Bernetti, I.; Capecchi, I.; Saragosa, C. Integrating remote sensing and street view images to quantify urban forest ecosystem services. Remote Sens. 2020, 12, 329.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Volume 11211, pp. 801–808.

- Gil, J.Y.; Kimmel, R. Efficient dilation, erosion, opening, and closing algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1606–1617.

- Murali, S.; Govindan, V.K. Shadow Detection and Removal from a Single Image Using LAB Color Space. Cybern. Inf. Technol. 2013, 13, 95–103.

- Chavel, I. Riemannian Geometry: A Modern Introduction; Cambridge University Press: Cambridge, UK, 2006; Volume 98.

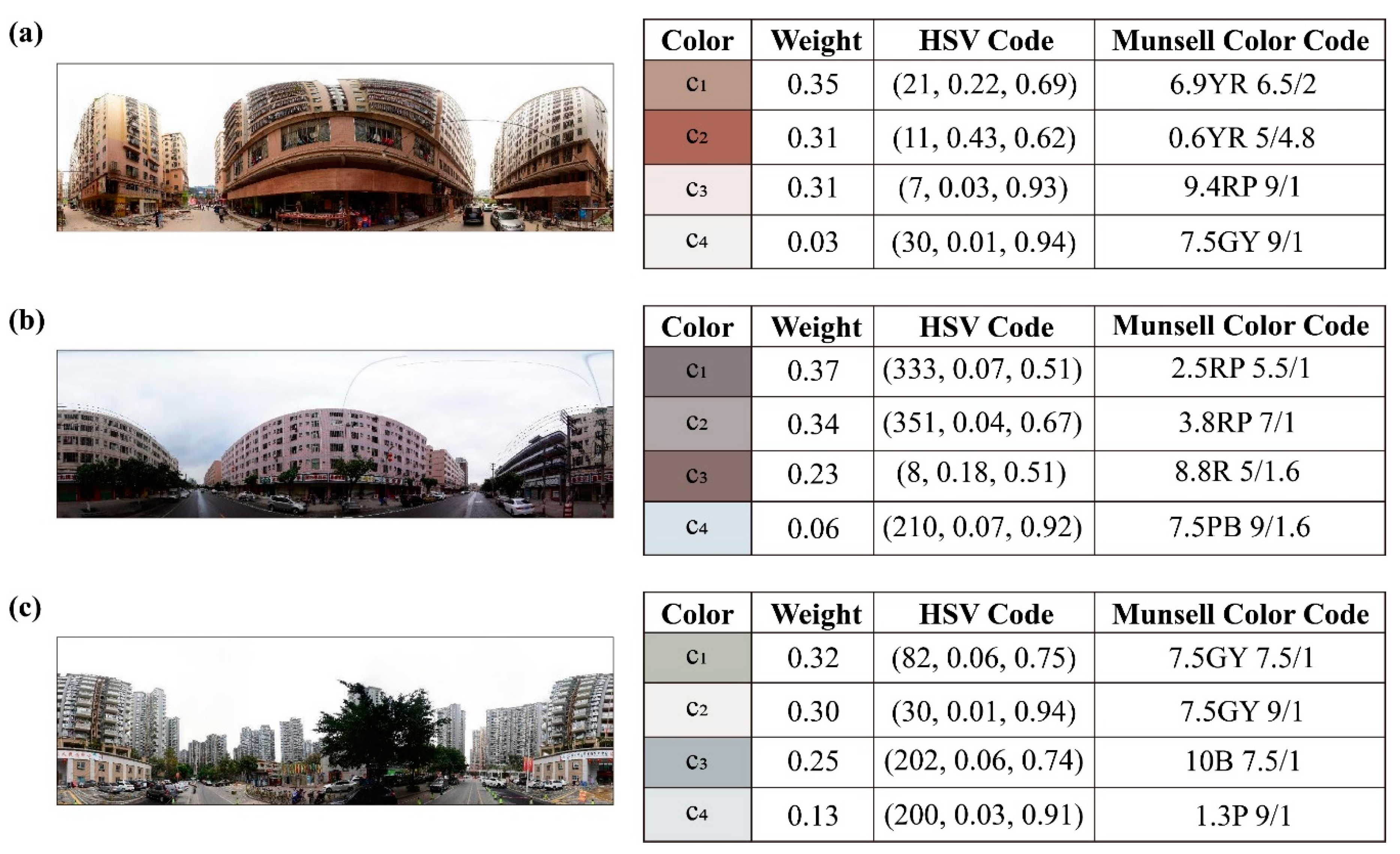

| ID | Location (longitude, latitude) | Dominant colors: (weight, (H°, S, V), Munsell notation) |
|---|---|---|
| 1 | (113.9195, 22.5128) | (0.35, (21, 0.22, 0.69), “6.9 YR 6.5/2”), (0.31, (11, 0.43, 0.62), “0.6 YR 5/4.8”), (0.31, (7, 0.03, 0.93), “9.4 RP 9/1”), (0.03, (30, 0.01, 0.94), “7.5 GY 9/1”) |
| 2 | (113.9012, 22.8101) | (0.37, (333, 0.07, 0.51), “2.5 RP 5.5/1”), (0.34, (351, 0.04, 0.67), “3.8 RP 7/1”), (0.23, (8, 0.18, 0.51), “8.8 R 5/1.6”), (0.06, (210, 0.07, 0.92), “7.5 PB 9/1.6”) |
| 3 | (113.8489, 22.5709) | (0.32, (82, 0.06, 0.75), “7.5 GY 7.5/1”), (0.30, (30, 0.01, 0.94), “7.5 GY 9/1”), (0.25, (202, 0.06, 0.74), “10 B 7.5/1”), (0.13, (200, 0.03, 0.91), “1.3 P 9/1”) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhong, T.; Ye, C.; Wang, Z.; Tang, G.; Zhang, W.; Ye, Y. City-Scale Mapping of Urban Façade Color Using Street-View Imagery. Remote Sens. 2021, 13, 1591. https://doi.org/10.3390/rs13081591
