High-Resolution Aerial Detection of Marine Plastic Litter by Hyperspectral Sensing

Abstract

1. Introduction

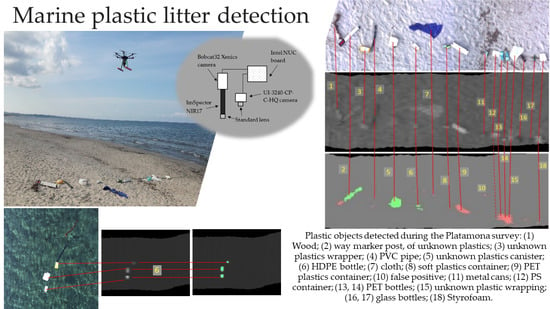

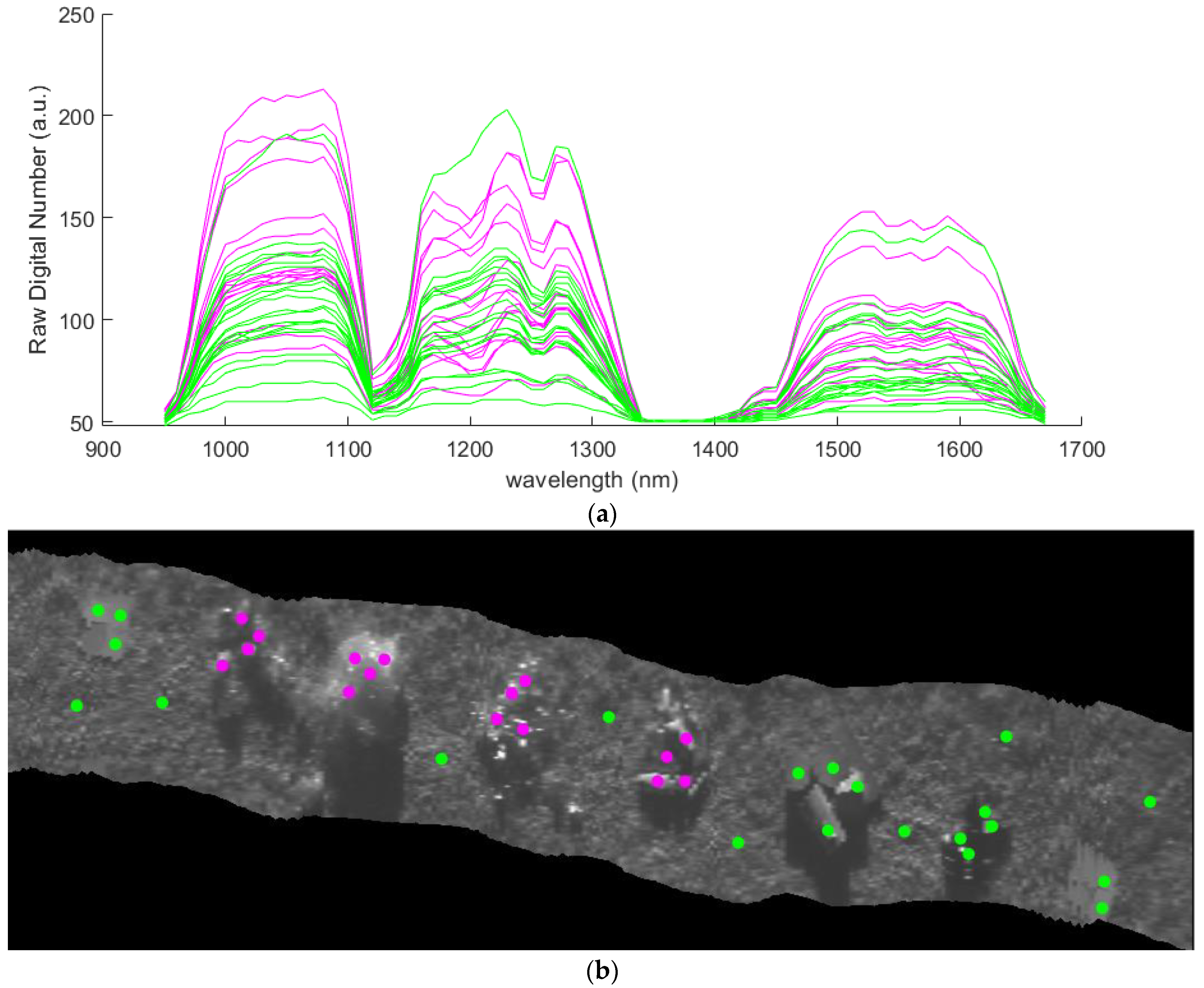

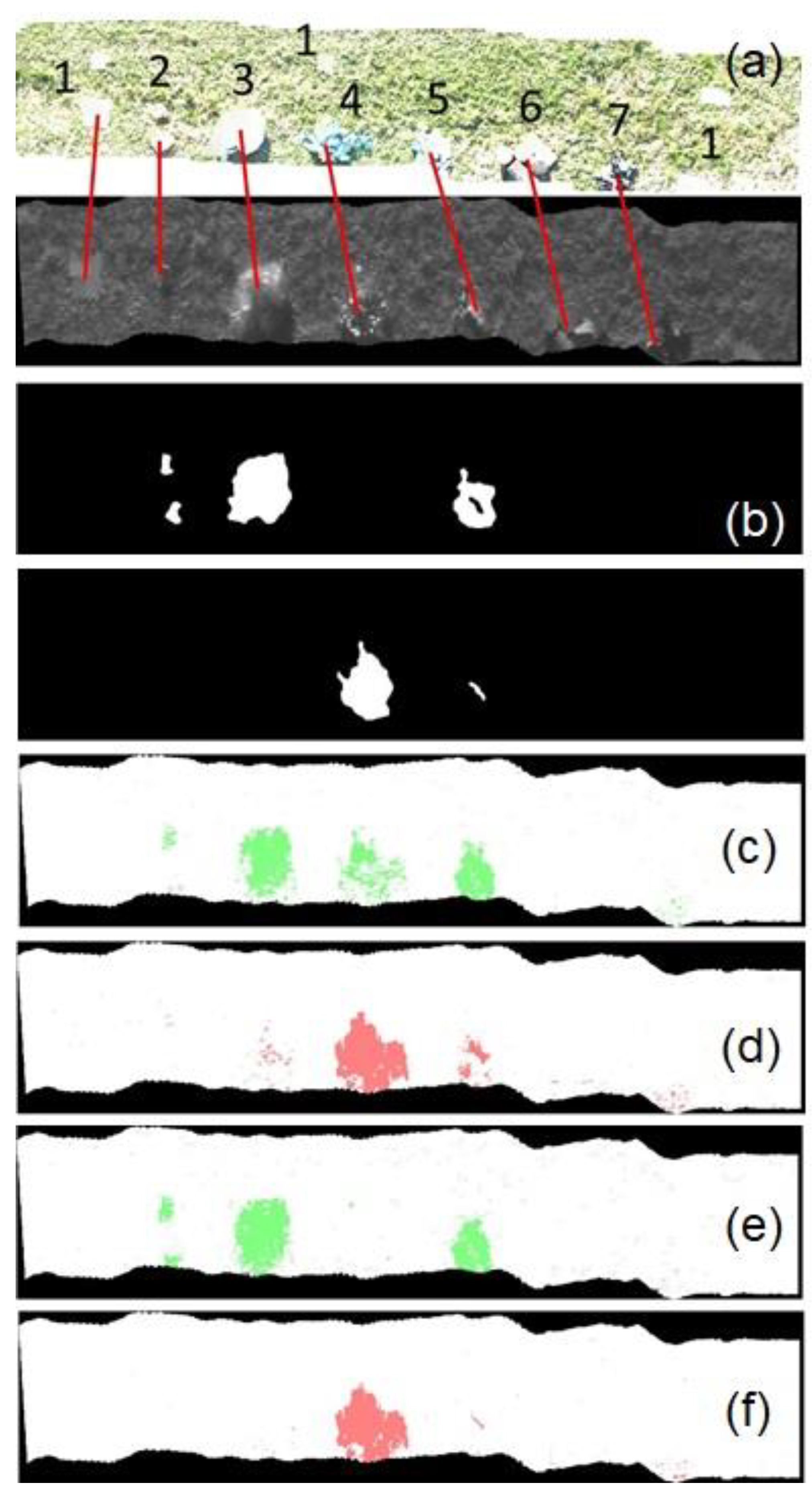

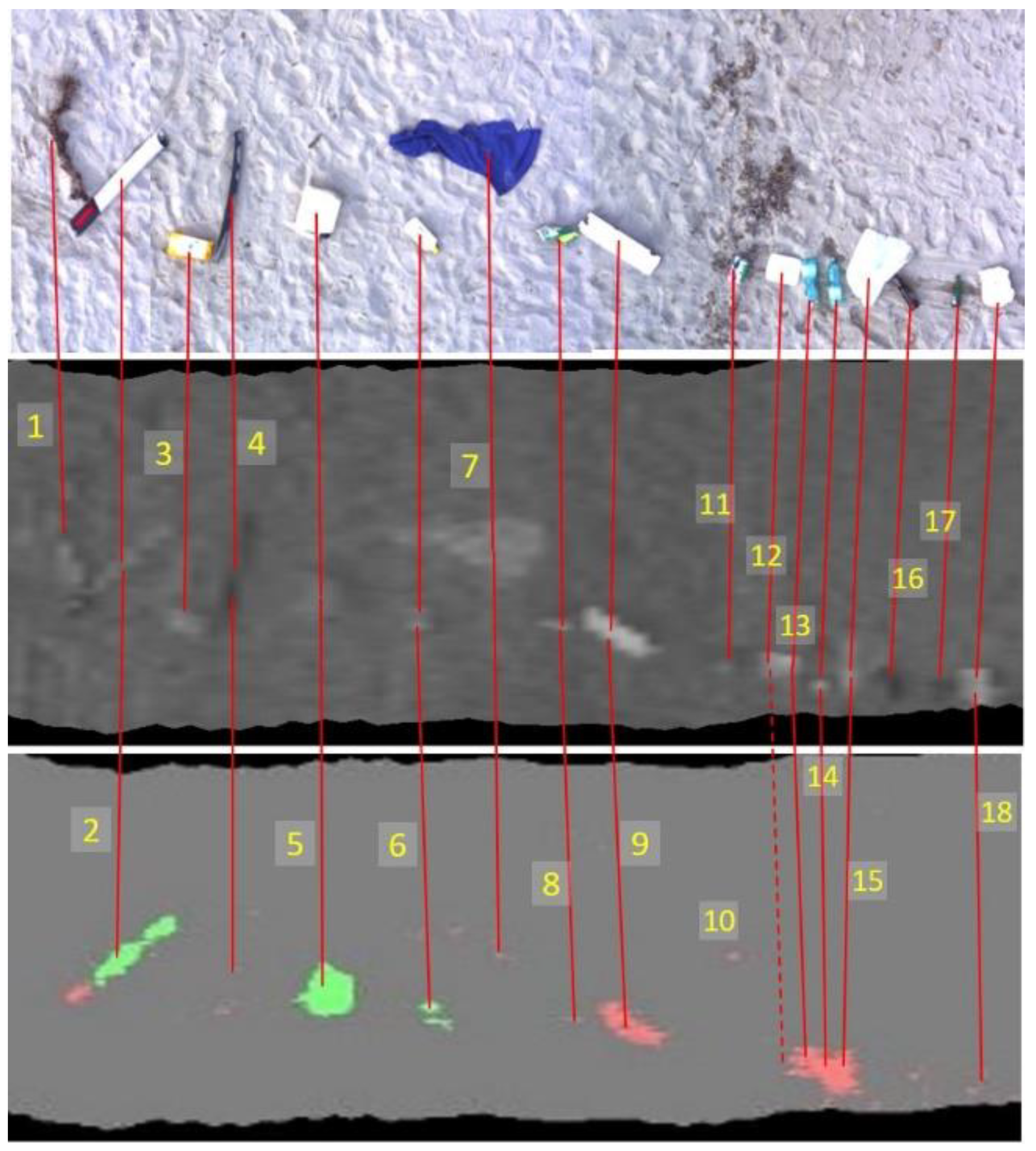

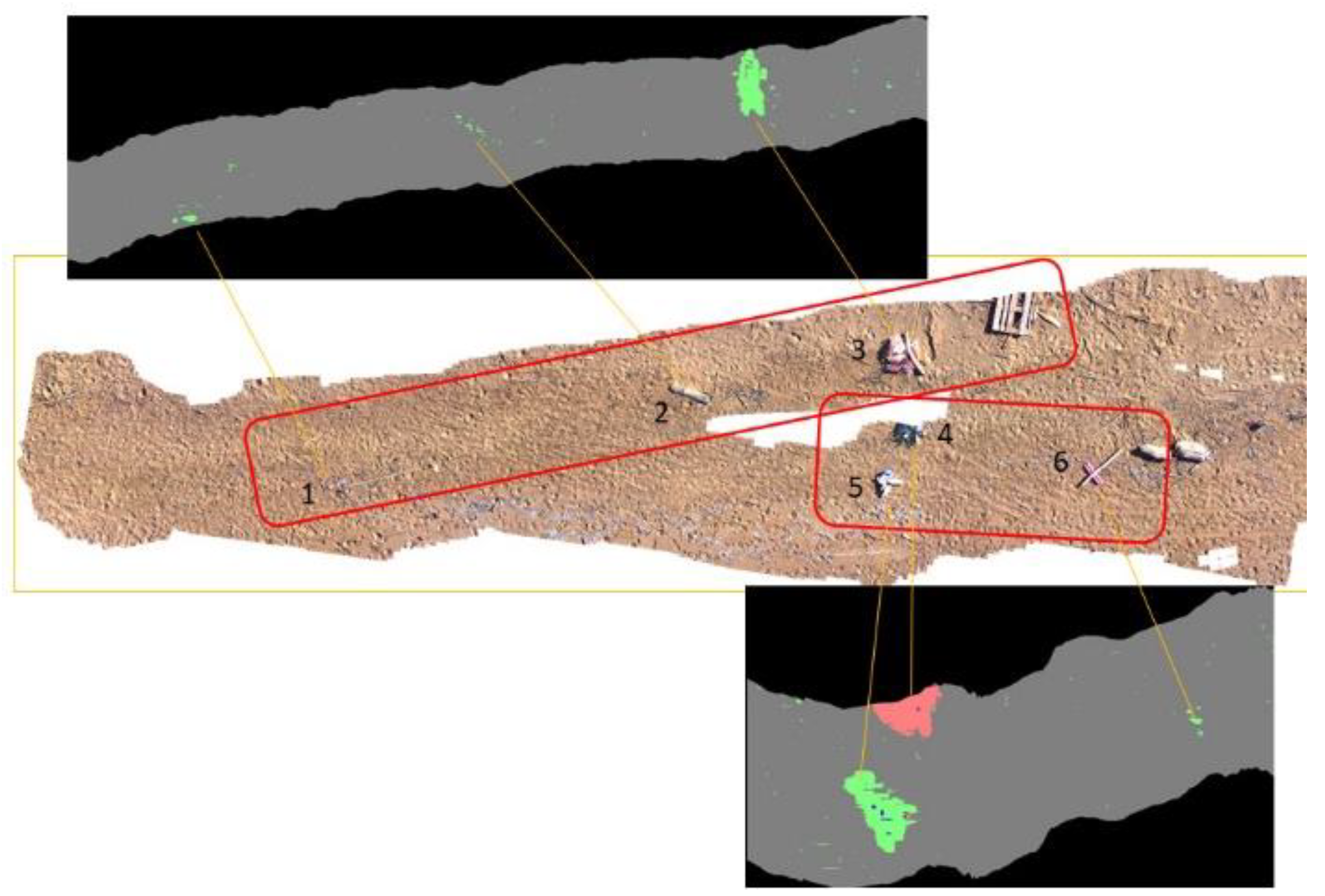

2. Materials and Methods

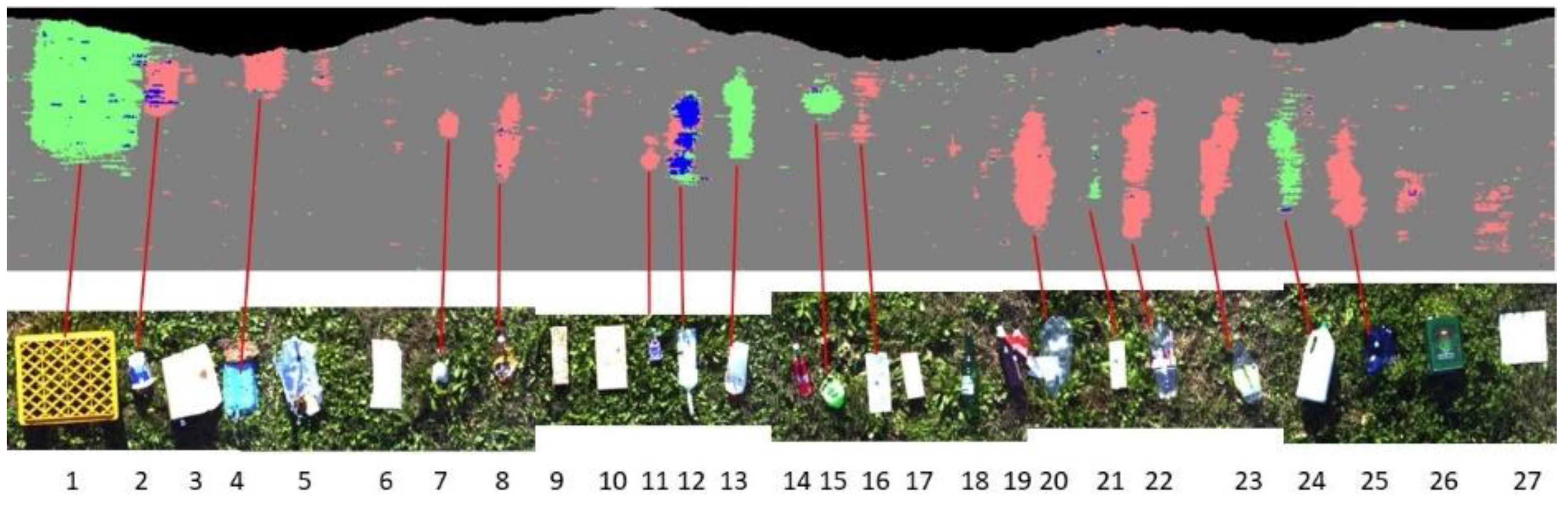

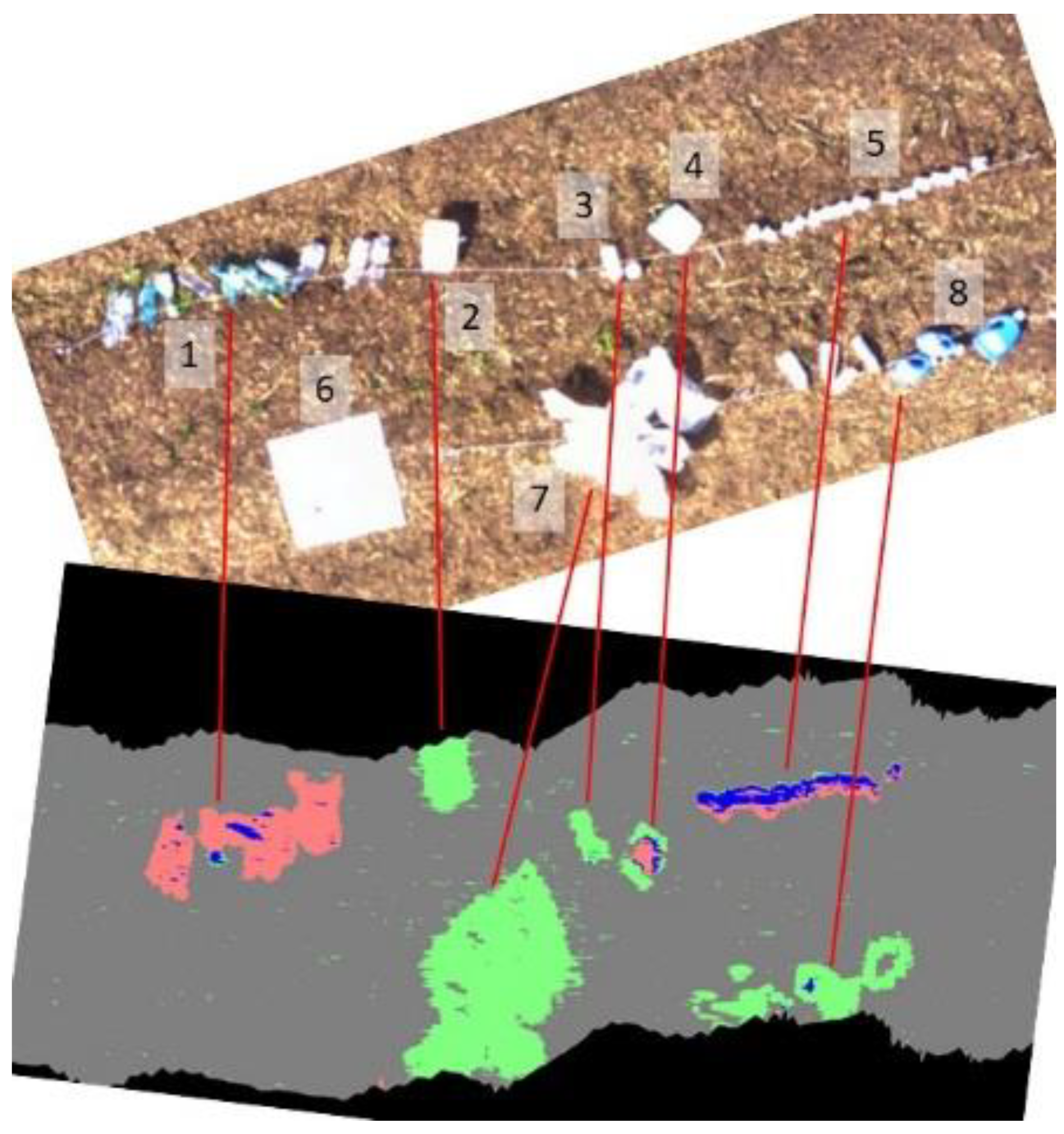

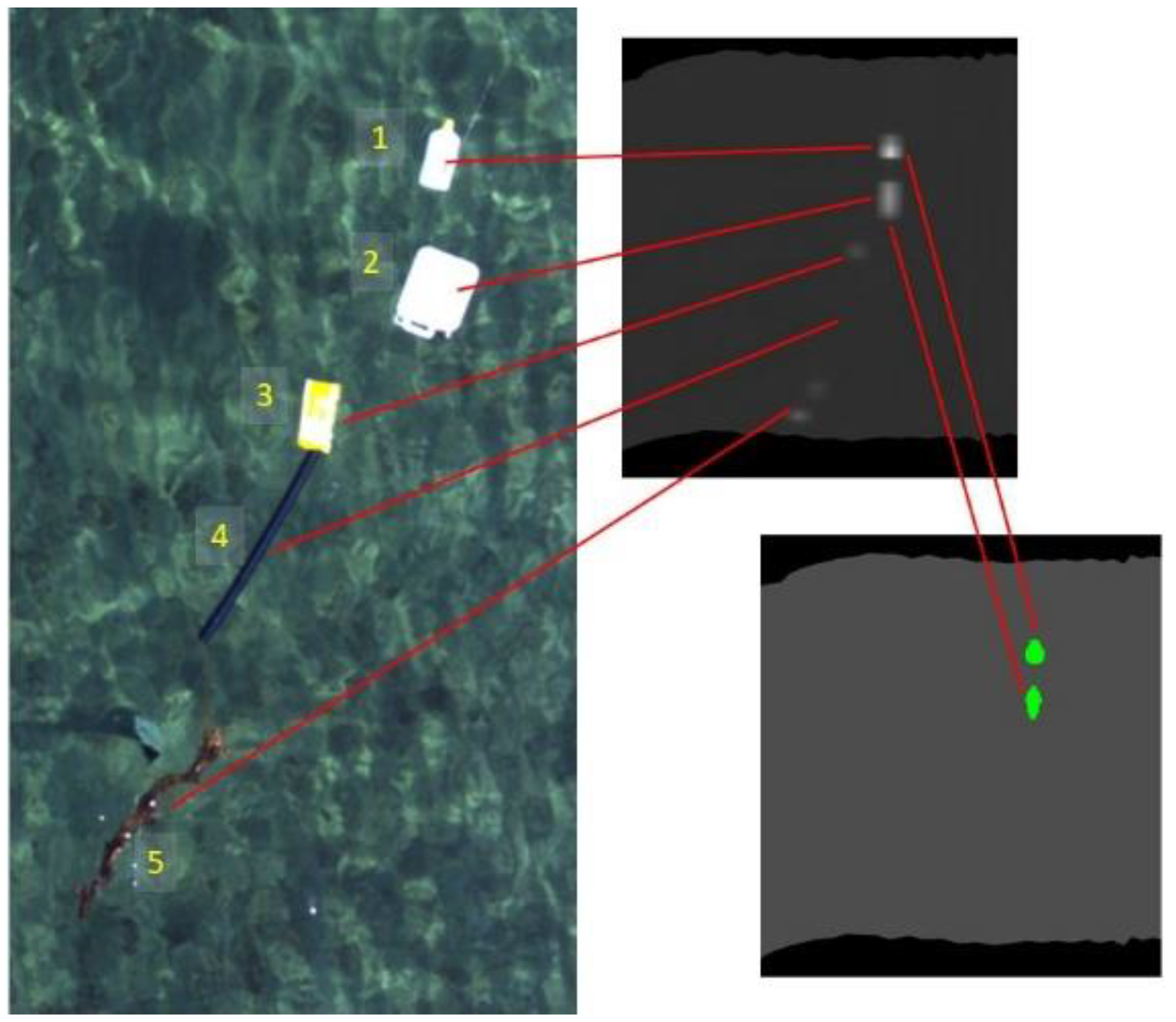

2.1. The Inspected Sites

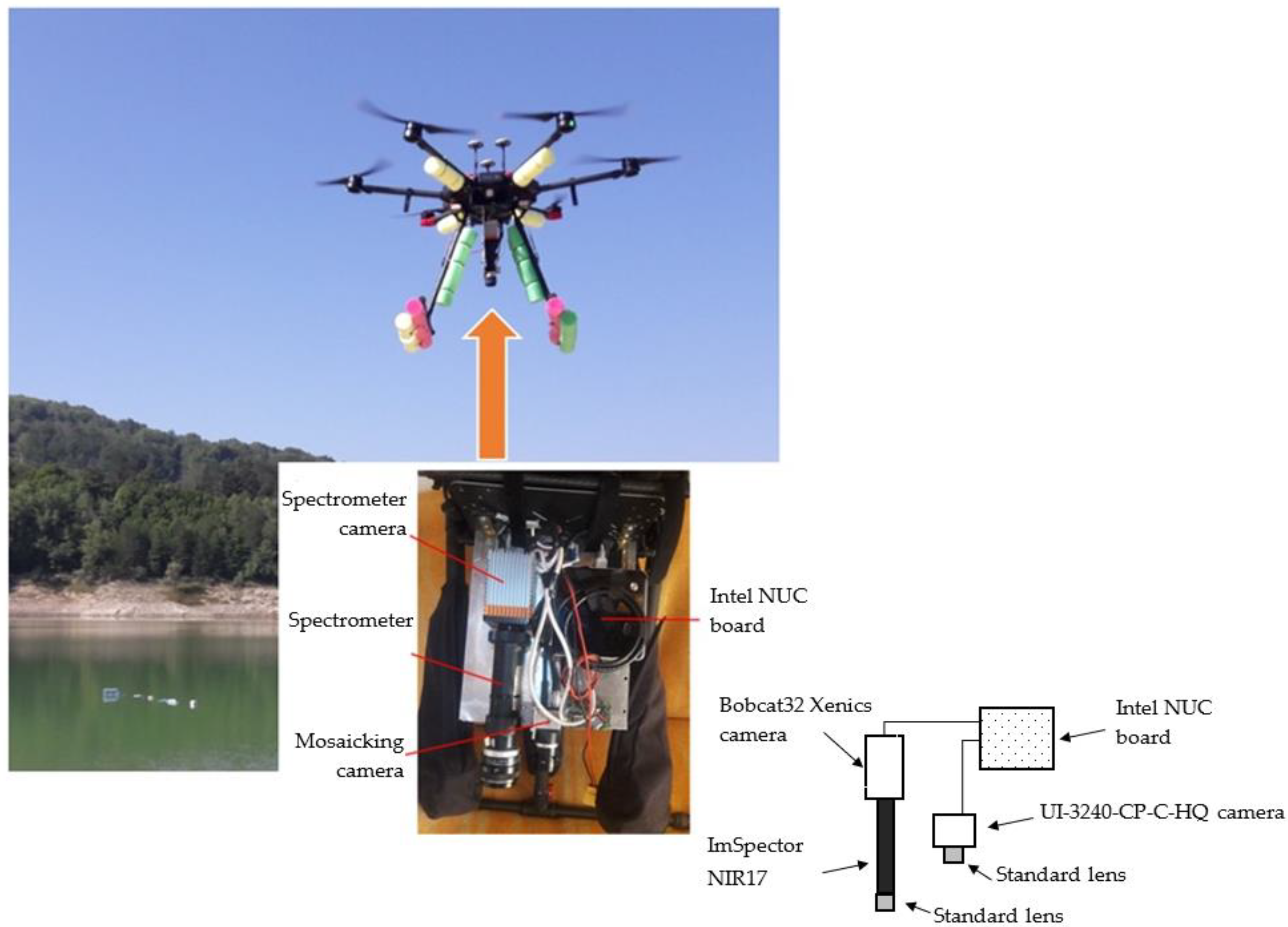

2.2. The Hyperspectral Imaging System, the UAV, and Onboard Instrumentation

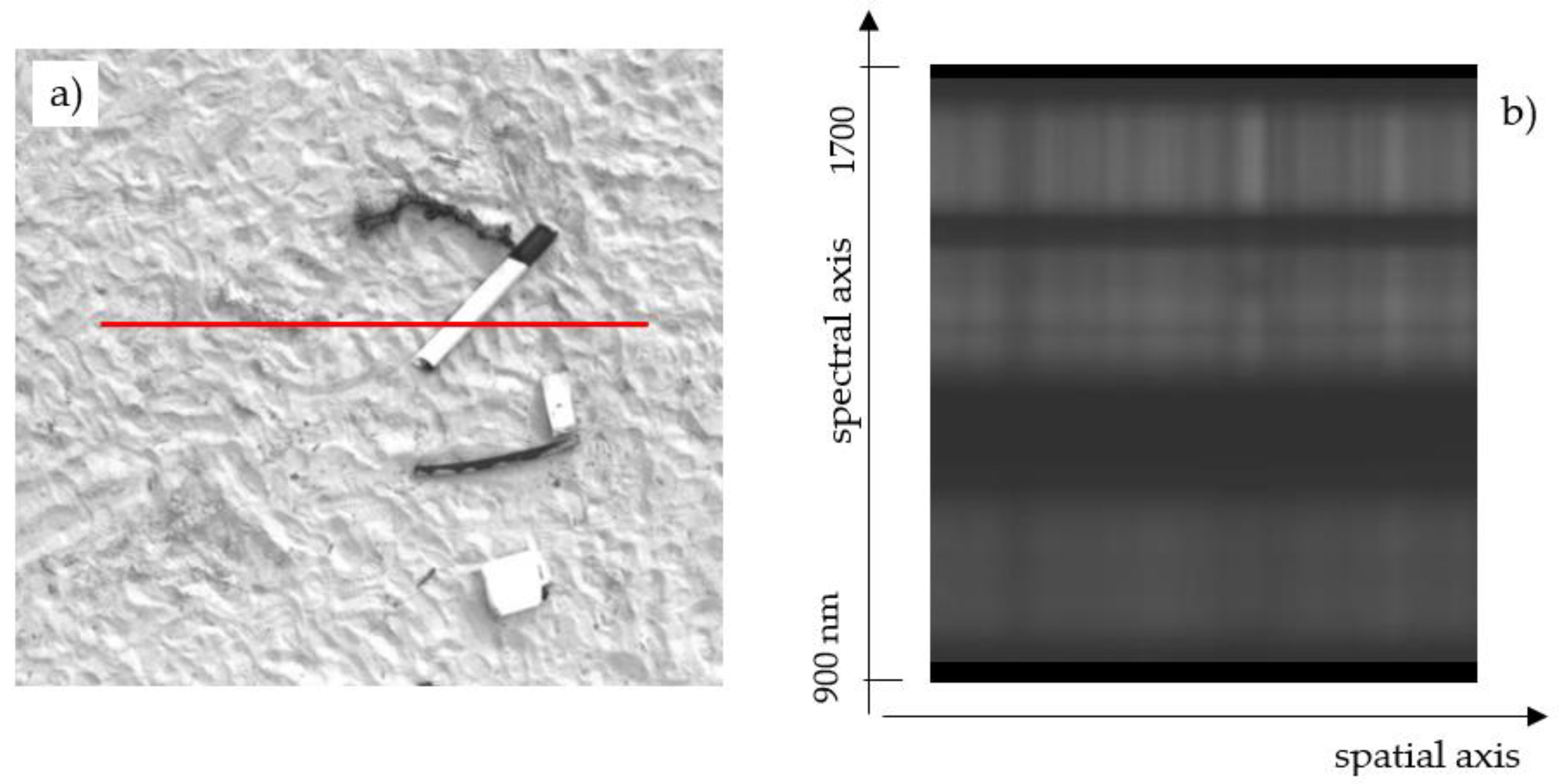

2.3. Hyperspectral Image Processing

- (i) Transformation of the visible images taken over the scene into a single image through a mosaicking procedure that accounts for translations, rotations, and scale changes between each pair of consecutive images.

- (ii) Use of the mosaicking results to correctly place each line image acquired by the spectrometer within the investigated area.

- (iii) Construction of the hyperspectral cube (images of the scene at the different wavelengths).
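Steps (ii) and (iii) can be illustrated with a minimal sketch. It assumes that the mosaicking step has already assigned each spectrometer line image to a row of the scene; the function names `build_cube` and `band_image`, the nested-list data layout, and the `row_indices` input are hypothetical choices made for illustration and are not taken from the processing chain described here.

```python
def build_cube(line_images, row_indices, n_rows):
    """Stack spectrometer line images into a cube indexed as cube[row][col][band].

    line_images: list of line images; each is a list of per-pixel spectra,
                 and each spectrum is a list of band values.
    row_indices: for each line image, the scene row it was assigned to
                 (hypothetical output of the mosaicking step).
    Rows that received no line image are left as None.
    """
    cube = [None] * n_rows
    for line, row in zip(line_images, row_indices):
        if not 0 <= row < n_rows:
            raise ValueError(f"row {row} lies outside the scene")
        # Copy the spectra so the cube does not alias the input data.
        cube[row] = [list(spectrum) for spectrum in line]
    return cube


def band_image(cube, band):
    """Extract the 2-D image of the scene at a single wavelength band."""
    return [
        None if line is None else [spectrum[band] for spectrum in line]
        for line in cube
    ]
```

Calling `band_image(cube, b)` for each band index `b` yields the image of the scene at each wavelength, which is the sense in which the cube is described in step (iii). Rows left as `None` mark parts of the scene that no line image covered.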

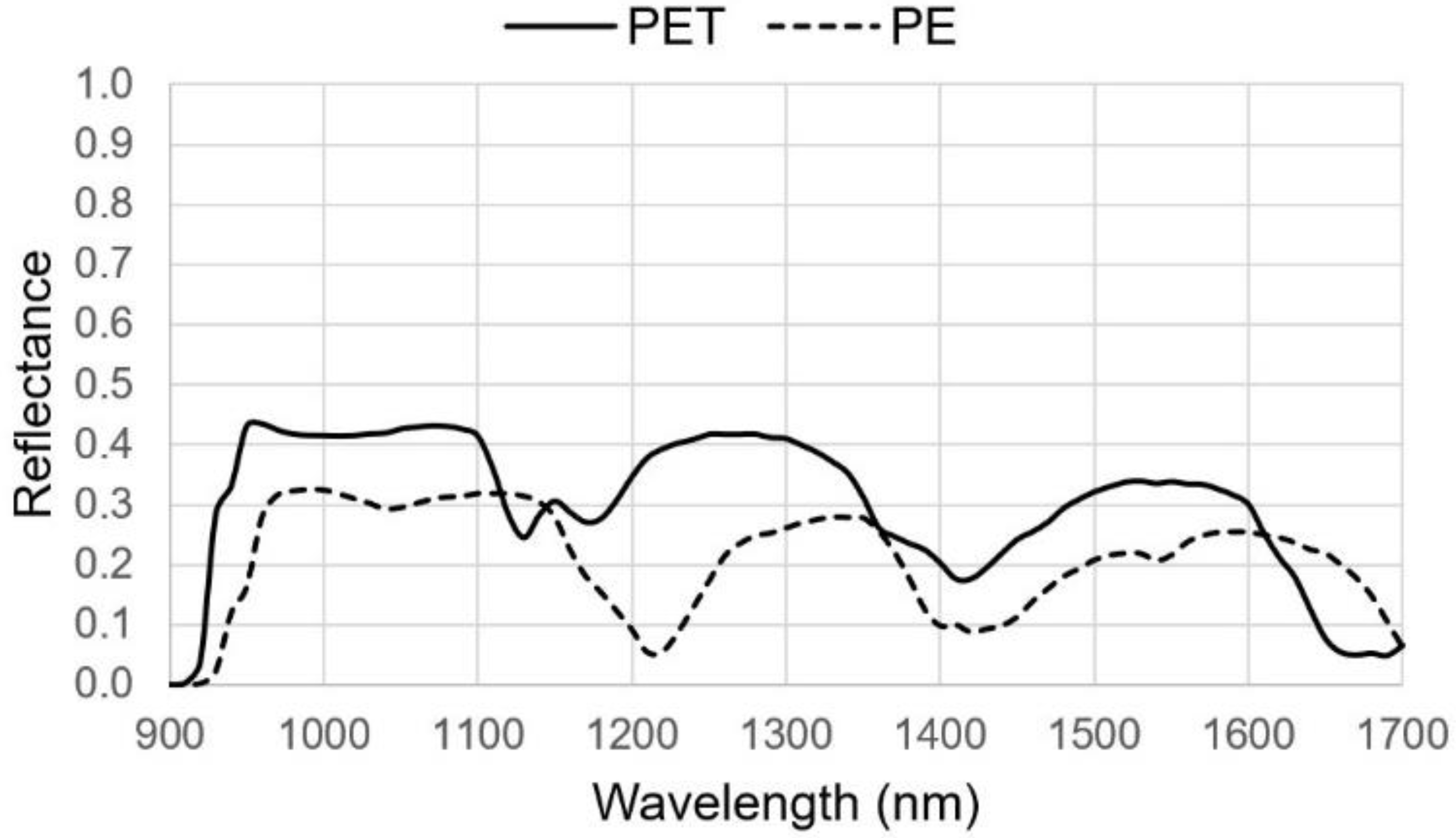

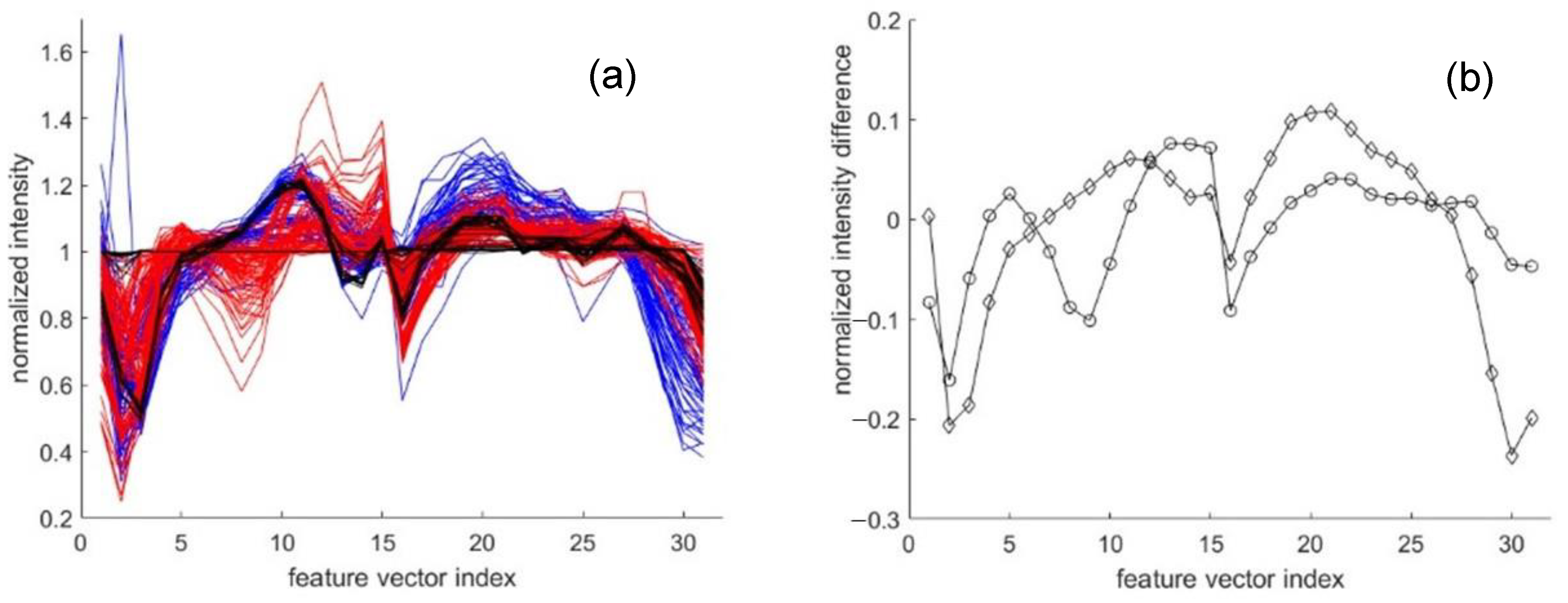

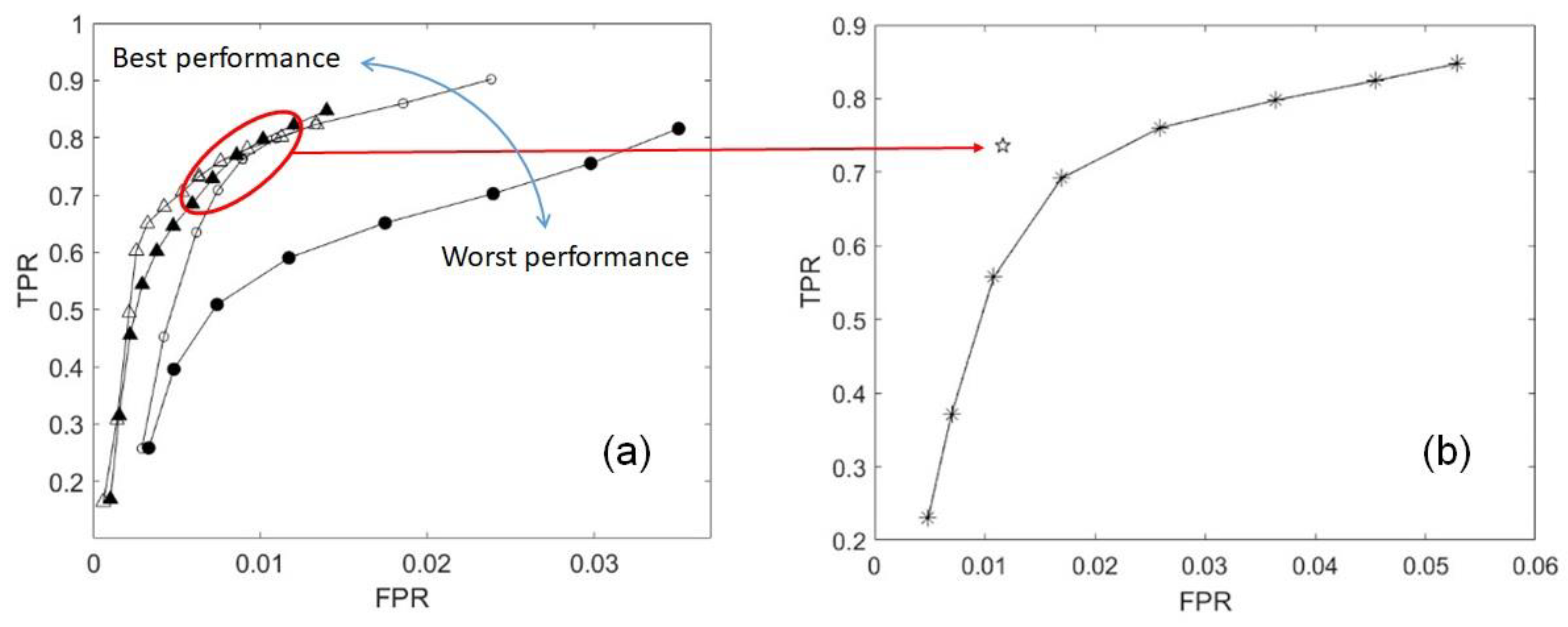

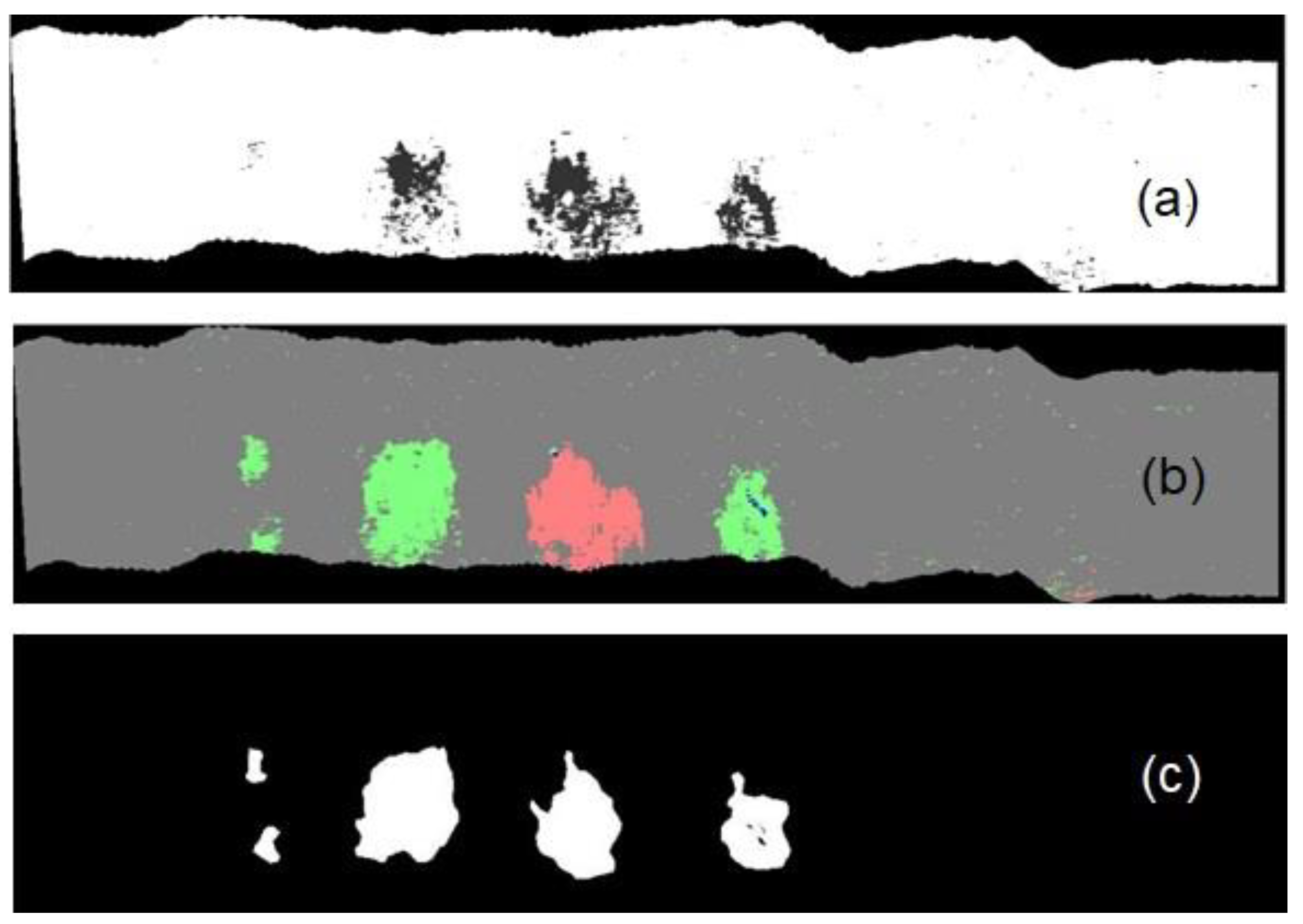

2.4. The Plastics Detection Algorithm

- Set 1 contains all samples of the original set, labeled as plastics (positive class) or non-plastics (negative class). This set reflects the final purpose of the detection.

- Set 2 contains all polyethylene (PE) samples in the positive class and all non-plastic samples in the negative class.

- Set 3 is built in the same way as Set 2, with polyethylene terephthalate (PET) samples in the positive class.

- Set 4 contains all PE samples in the positive class, and all non-plastic samples together with all PET samples in the negative class.

- Set 5 is built in the same way as Set 4, but with PET samples in the positive class and PE samples in the negative class.
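The five labeled sets can be derived from a single pool of samples, as the following sketch shows. It assumes that every sample carries one of three material tags (`"PE"`, `"PET"`, or `"non-plastic"`) and that PE and PET are the only plastics in the pool; the tag strings, the `(spectrum, material)` tuple layout, and the function name `build_sets` are hypothetical.

```python
NON_PLASTIC = {"non-plastic"}
PLASTICS = {"PE", "PET"}  # assumption: the pool holds only these two polymers


def build_sets(samples):
    """Build the five binary-labeled sets from (spectrum, material) pairs.

    Returns a dict mapping the set number to a list of (spectrum, label)
    pairs, with label 1 for the positive class and 0 for the negative class.
    Samples belonging to neither class of a given set are left out of it.
    """
    def labeled(positive, negative):
        out = []
        for spectrum, material in samples:
            if material in positive:
                out.append((spectrum, 1))
            elif material in negative:
                out.append((spectrum, 0))
        return out

    return {
        1: labeled(PLASTICS, NON_PLASTIC),           # plastics vs. non-plastics
        2: labeled({"PE"}, NON_PLASTIC),             # PE vs. non-plastics
        3: labeled({"PET"}, NON_PLASTIC),            # PET vs. non-plastics
        4: labeled({"PE"}, NON_PLASTIC | {"PET"}),   # PE vs. everything else
        5: labeled({"PET"}, NON_PLASTIC | {"PE"}),   # PET vs. everything else
    }
```

Sets 2 and 3 drop the other polymer entirely, whereas Sets 4 and 5 move it into the negative class, so a classifier trained on the latter must also separate PE from PET.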

3. Results and Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Rank Order | PE Wavelength [nm] | PET Wavelength [nm] |
|---|---|---|
| 1 | 1150 | 1620 |
| 2 | 1560 | 1550 |
| 3 | 1210 | 1180 |
| 4 | 1270 | 1570 |
| 5 | 1570 | 1510 |
| 6 | 1180 | 1270 |
| 7 | 1550 | 1560 |
| 8 | 1220 | 1220 |
| 9 | 1200 | 1540 |
| 10 | 1250 | 1240 |
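As an illustration of how the ranked wavelengths in the table might be used, the sketch below maps the top-ranked wavelengths onto the nearest band indices of a sensor and lists the wavelengths that appear in both columns. The wavelength values are copied from the table; the band grid (900 to 1700 nm in 10 nm steps) and the function names are hypothetical and do not describe the instrument used in this work.

```python
# Ranked wavelengths in nm, copied from the table (rank 1 first).
PE_RANKED = [1150, 1560, 1210, 1270, 1570, 1180, 1550, 1220, 1200, 1250]
PET_RANKED = [1620, 1550, 1180, 1570, 1510, 1270, 1560, 1220, 1540, 1240]

# Hypothetical sensor band centers in nm: 900, 910, ..., 1700.
BAND_CENTERS = list(range(900, 1701, 10))


def nearest_band(wavelength_nm, band_centers_nm):
    """Index of the band whose center is closest to the given wavelength."""
    return min(
        range(len(band_centers_nm)),
        key=lambda i: abs(band_centers_nm[i] - wavelength_nm),
    )


def top_k_bands(ranked_nm, band_centers_nm, k):
    """Band indices for the k highest-ranked wavelengths, in rank order."""
    return [nearest_band(w, band_centers_nm) for w in ranked_nm[:k]]


def shared_wavelengths(ranked_a, ranked_b):
    """Wavelengths present in both rankings, in the order of the first."""
    in_b = set(ranked_b)
    return [w for w in ranked_a if w in in_b]
```

With these lists, `shared_wavelengths(PE_RANKED, PET_RANKED)` returns six wavelengths (1560, 1270, 1570, 1180, 1550, and 1220 nm), so six of the ten top-ranked wavelengths are common to the two polymers.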


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Balsi, M.; Moroni, M.; Chiarabini, V.; Tanda, G. High-Resolution Aerial Detection of Marine Plastic Litter by Hyperspectral Sensing. Remote Sens. 2021, 13, 1557. https://doi.org/10.3390/rs13081557
