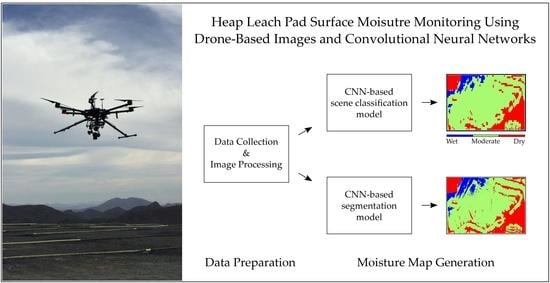

Heap Leach Pad Surface Moisture Monitoring Using Drone-Based Aerial Images and Convolutional Neural Networks: A Case Study at the El Gallo Mine, Mexico

Abstract

1. Introduction

2. Materials and Methods

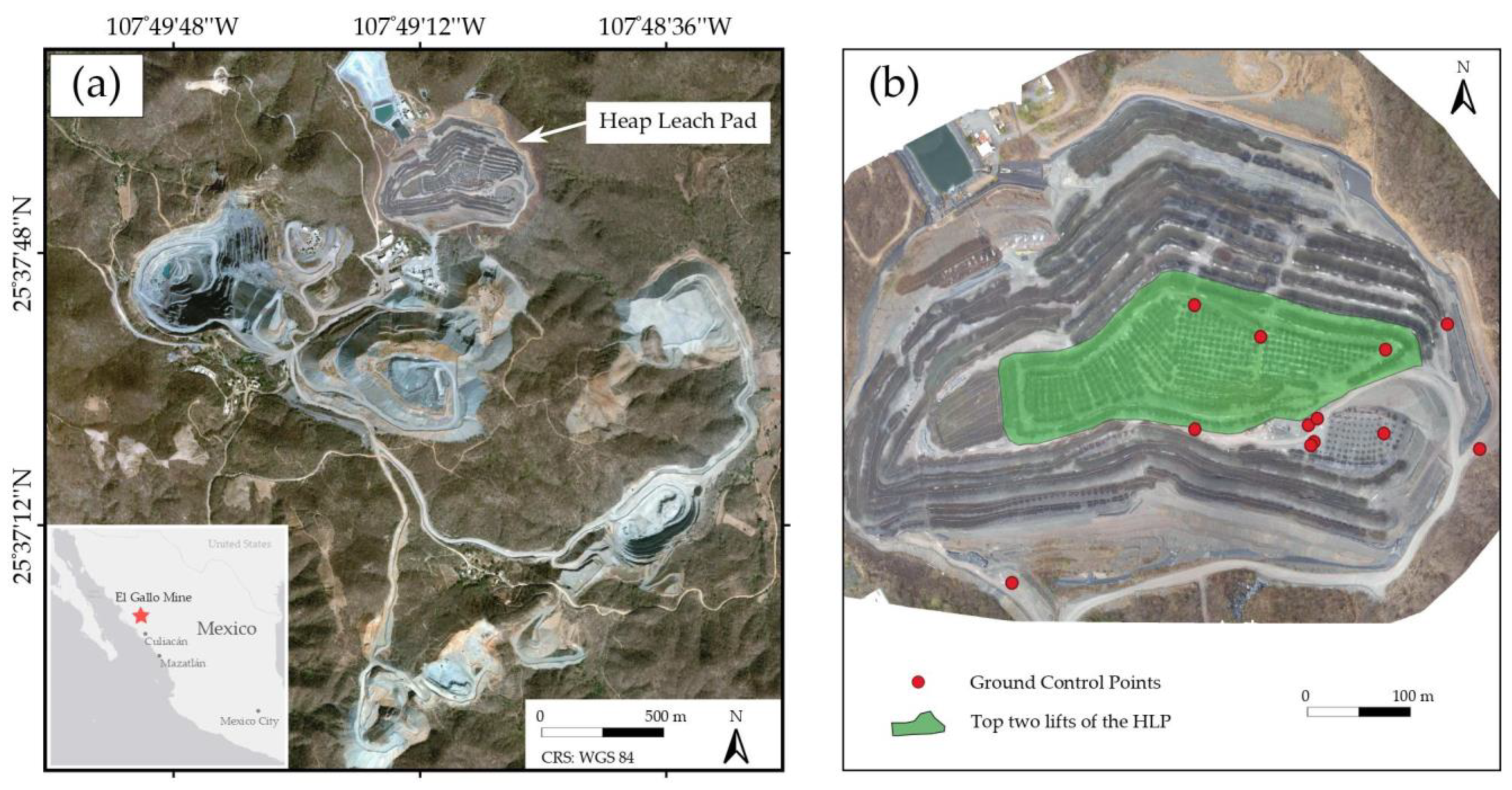

2.1. Study Site

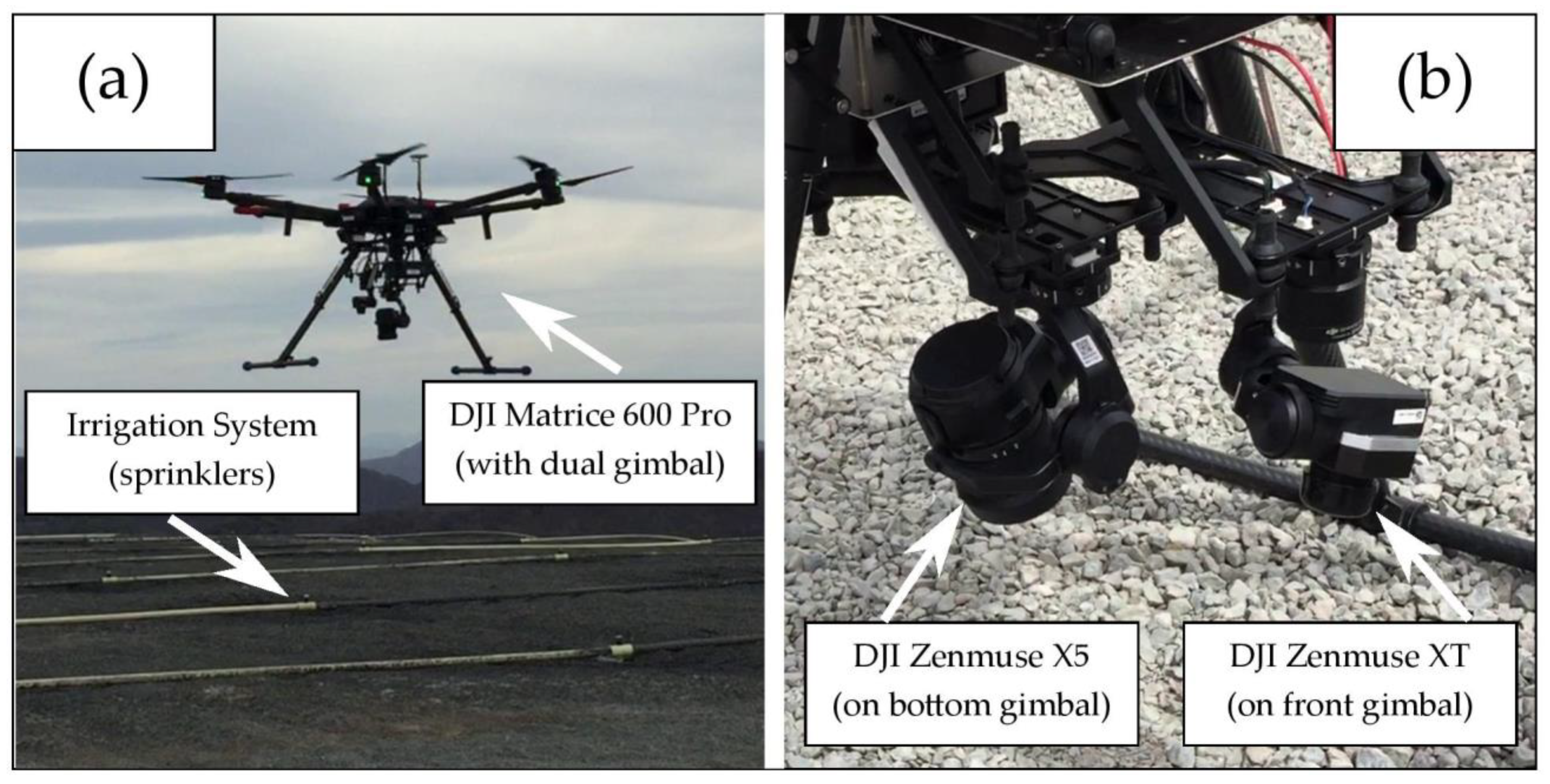

2.2. UAV Platform and Sensors

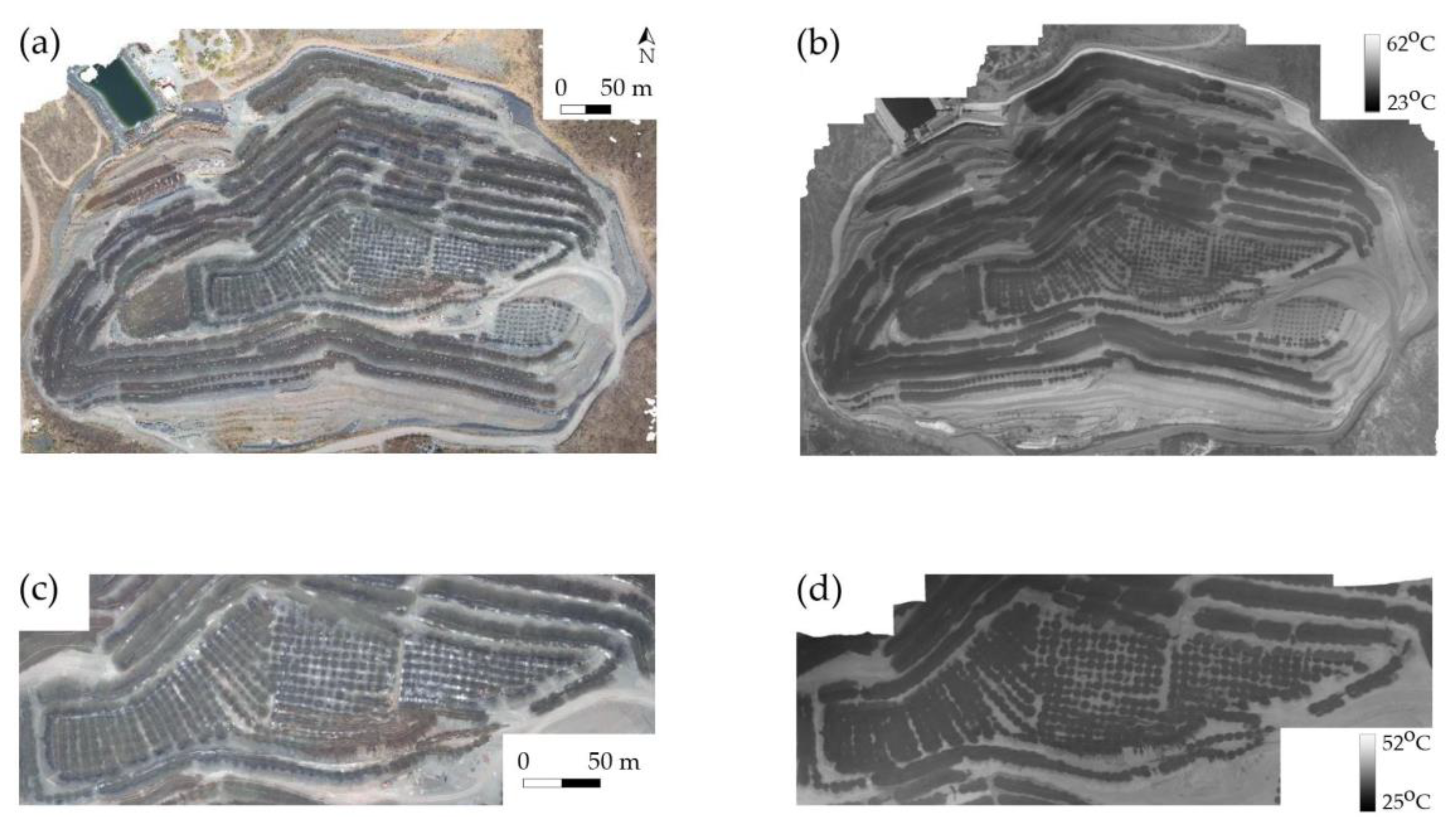

2.3. Data Acquisition and UAV Flight Plans

2.4. Image Processing

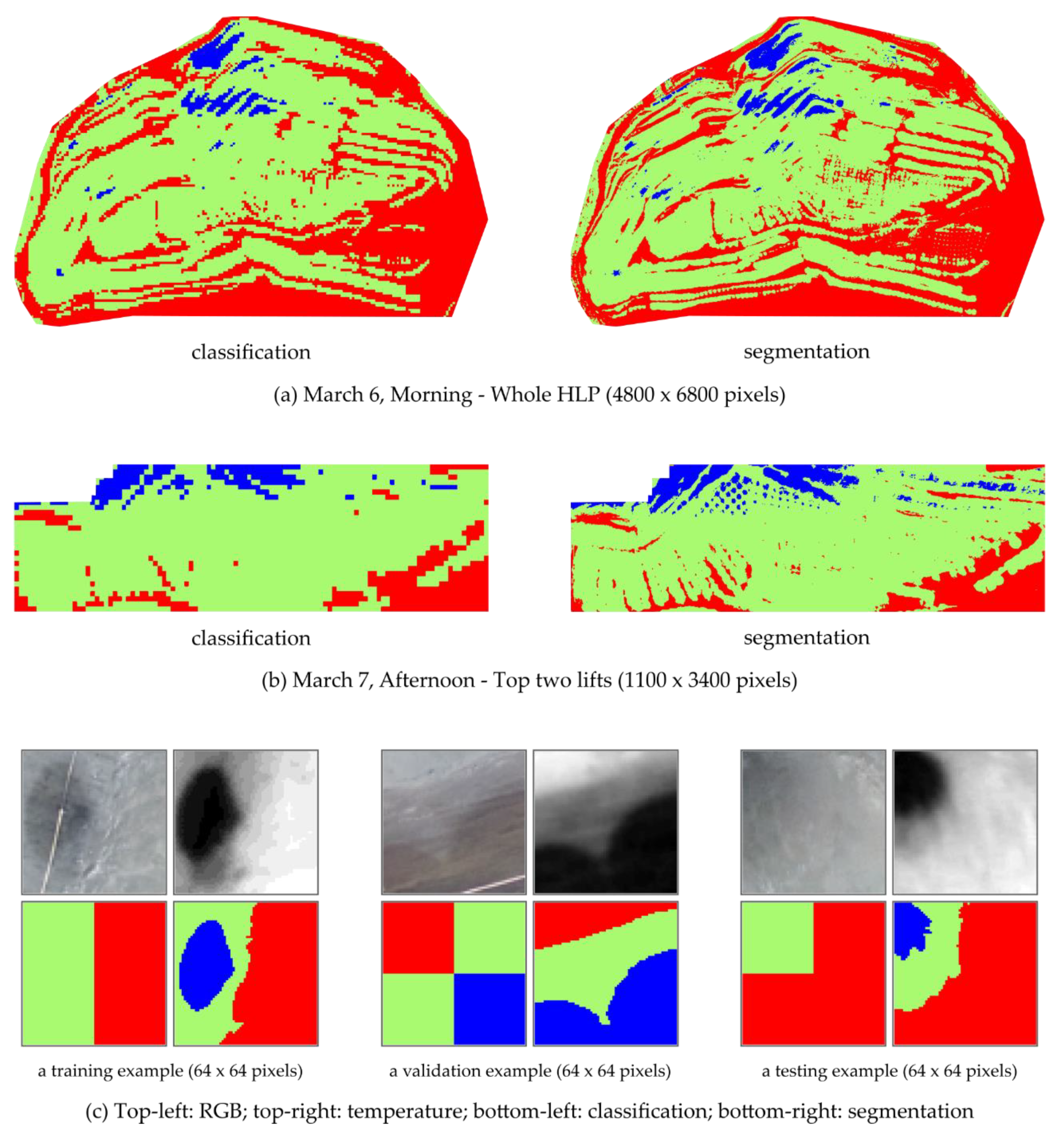

2.5. Dataset Creation and Partition for Moisture Map Generation Using CNN

2.5.1. Creation of Scene Classification Dataset

2.5.2. Creation of Semantic Segmentation Dataset

2.6. Scene Classification CNNs

2.6.1. Network Architectures of Classification CNNs

2.6.2. Training Setup of Classification CNNs

2.7. Semantic Segmentation CNN

2.7.1. Network Architectures of Segmentation CNN

2.7.2. Training Setup of Segmentation CNN

2.8. Moisture Map Generation

3. Results

3.1. Evaluation of Classification CNN

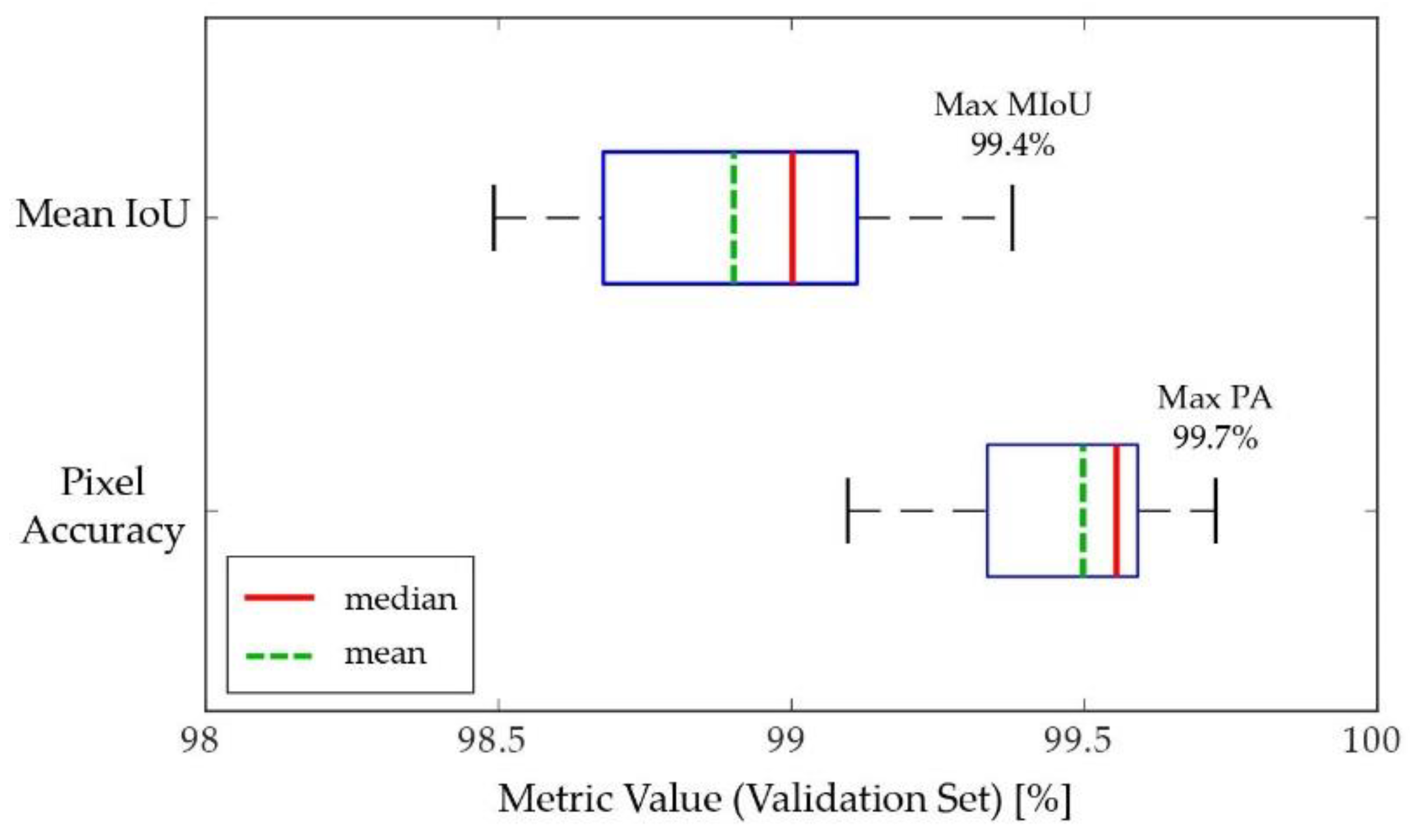

3.2. Evaluation of Segmentation CNN

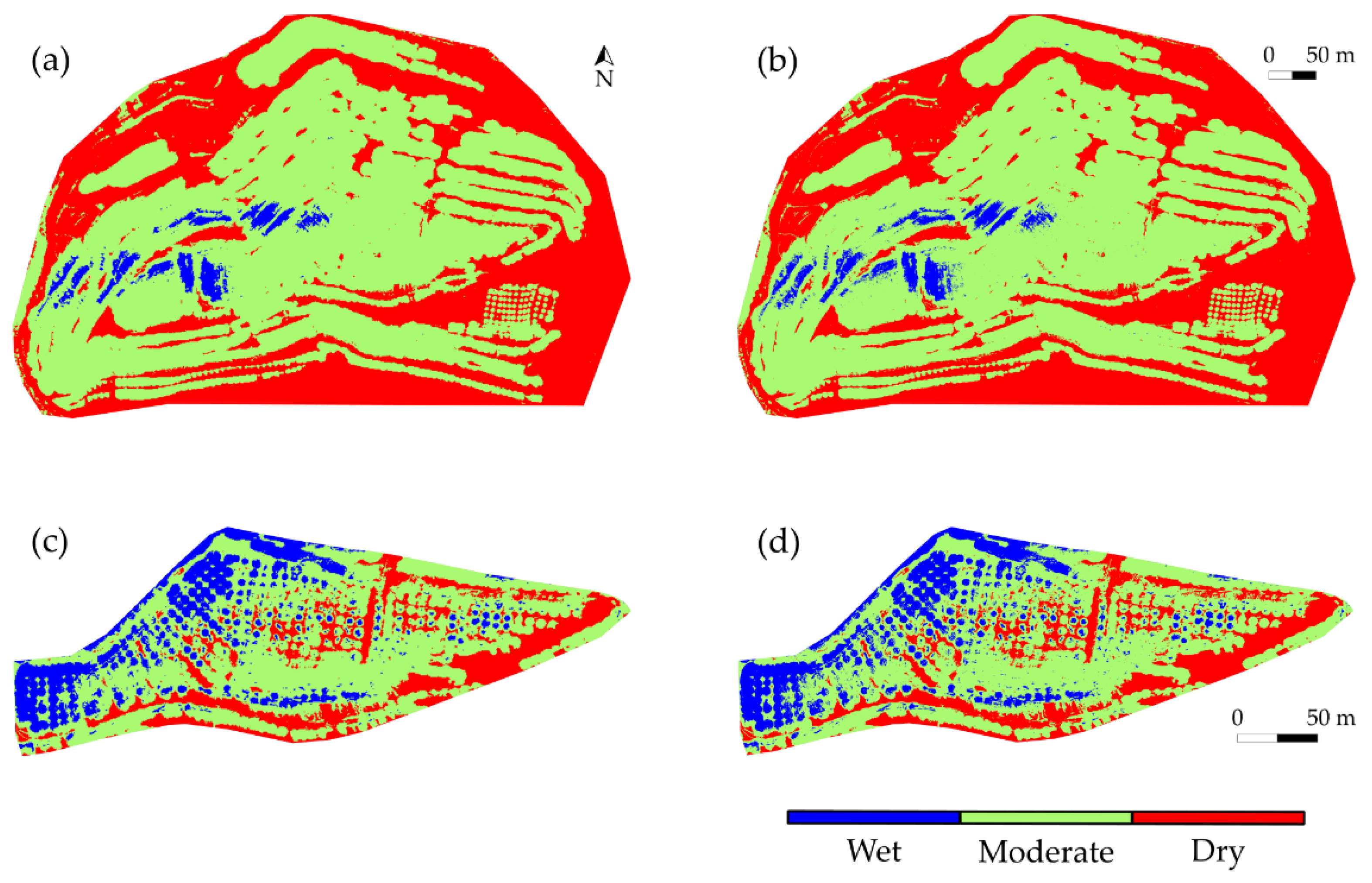

3.3. Generated HLP Surface Moisture Maps

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Flight Parameters | Flight Mission 1 | Flight Mission 2 |
|---|---|---|
| Region of interest | Top two lifts of the HLP | Whole HLP |
| Flight altitude | 90 m | 120 m |
| Flight duration | 7 min | 24 min |
| Area surveyed | 4 ha | 22 ha |
| Ground sampling distance (RGB) | 2.3 cm/pixel | 3.0 cm/pixel |
| Ground sampling distance (thermal) | 12 cm/pixel | 15 cm/pixel |

| | 6 March 2019, Morning | 6 March 2019, Afternoon | 7 March 2019, Morning | 7 March 2019, Afternoon | 8 March 2019, Morning | 8 March 2019, Afternoon |
|---|---|---|---|---|---|---|
| Whole HLP * | T: 620 | T: 618 | T: 618 | T: 621 | T: 618 | T: 619 |
| | C: 273 | C: 281 | C: 270 | C: 289 | C: 290 | C: 275 |
| Top two lifts * | T: 178 | T: 170 | T: 174 | T: 169 | T: 170 | T: 173 |
| | C: 58 | C: 74 | C: 74 | C: 81 | C: 73 | C: 76 |

| Moisture Classes | Whole Dataset (125,252 Images) | Training Set (84,528 Images) | Validation Set (20,008 Images) | Test Set (20,716 Images) |
|---|---|---|---|---|
| “Dry” | 37,251 | 24,851 | 5696 | 6704 |
| “Moderate” | 68,115 | 44,250 | 10,679 | 13,186 |
| “Wet” | 19,886 | 15,427 | 3633 | 826 |
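
The class balance implied by the counts above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the dictionary layout and the `class_shares` helper are hypothetical names, and the numbers are copied from the table.

```python
# Image counts per moisture class, copied from the table above.
counts = {
    "training": {"Dry": 24851, "Moderate": 44250, "Wet": 15427},
    "validation": {"Dry": 5696, "Moderate": 10679, "Wet": 3633},
    "test": {"Dry": 6704, "Moderate": 13186, "Wet": 826},
}


def class_shares(split_counts):
    """Return each class's share of one split, in percent."""
    total = sum(split_counts.values())
    return {name: 100.0 * n / total for name, n in split_counts.items()}


# Hypothetical helper output: one dictionary of percentages per split.
shares = {split: class_shares(c) for split, c in counts.items()}

for split, split_shares in shares.items():
    rounded = {name: round(value, 1) for name, value in split_shares.items()}
    print(split, rounded)
```

Run on these counts, the "Wet" class makes up a much smaller share of the test set than of the training set, which is worth keeping in mind when reading per-class test results.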

| Moisture Classes | Whole Dataset (128.3 M Pixels) | Training Set (86.6 M Pixels) | Validation Set (20.5 M Pixels) | Test Set (21.2 M Pixels) |
|---|---|---|---|---|
| “Dry” | 31.3% | 30.8% | 30.1% | 34.1% |
| “Moderate” | 51.3% | 49.4% | 50.3% | 60.2% |
| “Wet” | 17.4% | 19.8% | 19.6% | 5.7% |

| Layer Name | Layer Output Dimension (Height × Width × Channel) | Operation | Stride |
|---|---|---|---|
| Input | 32 × 32 × 4 | - | - |
| Conv1 | 30 × 30 × 96 | Conv 3 × 3, 96 | 1 |
| Conv2 | 28 × 28 × 256 | Conv 3 × 3, 256 | 1 |
| MaxPool1 | 14 × 14 × 256 | Max pool 2 × 2 | 2 |
| Conv3 | 14 × 14 × 384 | Conv 3 × 3, 384, padding 1 | 1 |
| Conv4 | 14 × 14 × 384 | Conv 3 × 3, 384, padding 1 | 1 |
| Conv5 | 14 × 14 × 256 | Conv 3 × 3, 256, padding 1 | 1 |
| MaxPool2 | 7 × 7 × 256 | Max pool 2 × 2 | 2 |
| FC1 | 1 × 1 × 1024 | Flatten, 1024-way FC | - |
| FC2 | 1 × 1 × 1024 | 1024-way FC | - |
| FC3 (Output) | 1 × 1 × 3 | Three-way FC, softmax | - |
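
The output dimensions listed above follow from the standard output-size rule for convolution and pooling layers, out = floor((in + 2 × padding − kernel) / stride) + 1. The sketch below traces that rule through the table; it assumes Conv1 and Conv2 use no padding (which is what 32 → 30 → 28 implies), and the `conv_out` helper and `layers` list are illustrative names rather than code from the study.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels); None keeps the channel count.
# Padding 0 for Conv1/Conv2 is an assumption inferred from the table's sizes.
layers = [
    ("Conv1", 3, 1, 0, 96),
    ("Conv2", 3, 1, 0, 256),
    ("MaxPool1", 2, 2, 0, None),
    ("Conv3", 3, 1, 1, 384),
    ("Conv4", 3, 1, 1, 384),
    ("Conv5", 3, 1, 1, 256),
    ("MaxPool2", 2, 2, 0, None),
]

size, channels = 32, 4  # input tile: 32 x 32 pixels, 4 channels
trace = []
for name, kernel, stride, padding, out_channels in layers:
    size = conv_out(size, kernel, stride, padding)
    if out_channels is not None:
        channels = out_channels
    trace.append((name, size, size, channels))
    print(f"{name}: {size} x {size} x {channels}")

# Number of values fed into FC1 after flattening the last feature map.
flattened = size * size * channels
print("Flattened length:", flattened)
```

Under these assumptions the trace reproduces every row of the table up to MaxPool2, and the flattened vector entering FC1 has 7 × 7 × 256 elements.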

| Layer/Block Name | Layer/Block Output Dimension | Operation | Stride |
|---|---|---|---|
| Input | 32 × 32 × 4 | - | - |
| Conv1 | 16 × 16 × 64 | Conv 7 × 7, 64 | 2 |
| MaxPool1 | 8 × 8 × 64 | Max pool 3 × 3 | 2 |
| Block1_x | 8 × 8 × 256 | | 1 |
| Block2_x | 4 × 4 × 512 | | 1 |
| Block3_x | 2 × 2 × 1024 | | 1 |
| Block4_x | 1 × 1 × 2048 | | 1 |
| FC (Output) | 1 × 1 × 3 | Global avg. pool, three-way FC, softmax | - |

| Layer/Block Name | Layer/Block Output Dimension | Operation | Stride for 3 × 3 Convolution |
|---|---|---|---|
| Input | 32 × 32 × 4 | - | - |
| Conv1 | 16 × 16 × 32 | Conv 3 × 3, 32 | 2 |
| Block0 | 16 × 16 × 16 | | 1 |
| Block1 | 8 × 8 × 24 | | 2 |
| Block2 | 8 × 8 × 24 | | 1 |
| Block3 | 4 × 4 × 32 | | 2 |
| Block4 | 4 × 4 × 32 | | 1 |
| Block5 | 4 × 4 × 32 | | 1 |
| Conv2 | 4 × 4 × 1280 | Conv 1 × 1, 1280 | 1 |
| FC (Output) | 1 × 1 × 3 | Global avg. pool, three-way FC, softmax | - |
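
The spatial sizes in this table are consistent with each stride-2 stage halving the feature map. The sketch below takes the number listed for each block as the stride of its 3 × 3 convolution and assumes "same"-style padding, so that the output size is ceil(input / stride); both the padding mode and the `same_out` helper are assumptions made for illustration.

```python
import math


def same_out(size, stride):
    """Output size under 'same'-style padding (assumed): ceil(size / stride)."""
    return math.ceil(size / stride)


# (name, stride) pairs read from the table above.
stages = [
    ("Conv1", 2),
    ("Block0", 1),
    ("Block1", 2),
    ("Block2", 1),
    ("Block3", 2),
    ("Block4", 1),
    ("Block5", 1),
    ("Conv2", 1),
]

size = 32  # input tiles are 32 x 32 pixels
sizes = {}
for name, stride in stages:
    size = same_out(size, stride)
    sizes[name] = size
    print(f"{name}: {size} x {size}")
```

With three stride-2 stages the 32 × 32 input is reduced by a factor of 8, which matches the 4 × 4 feature map that enters global average pooling.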

| Block/Layer Name | Operation | Output Dimension (Height × Width × Channel) |
|---|---|---|
| Input | - | 64 × 64 × 4 |
| Encoder1 | | 64 × 64 × 64 |
| MaxPool1 | Max pool 2 × 2, stride 2 | 32 × 32 × 64 |
| Encoder2 | | 32 × 32 × 128 |
| MaxPool2 | Max pool 2 × 2, stride 2 | 16 × 16 × 128 |
| Encoder3 | | 16 × 16 × 256 |
| MaxPool3 | Max pool 2 × 2, stride 2 | 8 × 8 × 256 |
| Encoder4 | | 8 × 8 × 512 |
| UpConv1 | Up-Conv 3 × 3, 256 | 16 × 16 × 256 |
| Concatenation1 | Concatenate output of Encoder3 | 16 × 16 × 512 |
| Decoder1 | | 16 × 16 × 256 |
| UpConv2 | Up-Conv 3 × 3, 128 | 32 × 32 × 128 |
| Concatenation2 | Concatenate output of Encoder2 | 32 × 32 × 256 |
| Decoder2 | | 32 × 32 × 128 |
| UpConv3 | Up-Conv 3 × 3, 64 | 64 × 64 × 64 |
| Concatenation3 | Concatenate output of Encoder1 | 64 × 64 × 128 |
| Decoder3 | | 64 × 64 × 64 |
| Conv1 | Conv 1 × 1, 3, softmax | 64 × 64 × 3 |
| Output | Pixel-wise Argmax | 64 × 64 × 1 |
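
The encoder-decoder bookkeeping in this table can be reproduced with a short shape trace: each max-pooling step halves the spatial size, each encoder level doubles the channel count, each up-convolution reverses both, and each concatenation adds the channels of the matching encoder output. The function below is a minimal sketch of that bookkeeping only; it builds no network, and its name and parameters are hypothetical.

```python
def encoder_decoder_trace(size=64, base_channels=64, depth=4):
    """Trace (name, spatial size, channels) through a U-Net-style layout."""
    rows = []
    skip_channels = []
    channels = base_channels

    # Encoder path: pooling halves the size, the next encoder doubles channels.
    for level in range(1, depth + 1):
        rows.append((f"Encoder{level}", size, channels))
        if level < depth:
            skip_channels.append(channels)
            size //= 2
            rows.append((f"MaxPool{level}", size, channels))
            channels *= 2

    # Decoder path: up-convolution doubles the size and halves channels,
    # concatenation adds the channels saved from the matching encoder level.
    for step in range(1, depth):
        skip = skip_channels.pop()
        channels //= 2
        size *= 2
        rows.append((f"UpConv{step}", size, channels))
        rows.append((f"Concatenation{step}", size, channels + skip))
        rows.append((f"Decoder{step}", size, channels))
    return rows


rows = encoder_decoder_trace()
for name, size, channels in rows:
    print(f"{name}: {size} x {size} x {channels}")
```

With the default arguments, the trace matches the table from Encoder1 through Decoder3; the final 1 × 1 convolution and argmax then reduce the 64 channels to three class scores and one label per pixel.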

| Tested Models | KS Test: Statistically Similar (5% Significance) | KS Test: p-Value | WRS Test: Statistically Similar (5% Significance) | WRS Test: p-Value |
|---|---|---|---|---|
| AlexNet vs. ResNet50 | No | 0.0025 | No | 0.0493 |
| AlexNet vs. MobileNetV2 | No | 0.0025 | No | 0.0006 |
| ResNet50 vs. MobileNetV2 | Yes | 0.5941 | Yes | 0.7789 |
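
The "statistically similar" column follows from comparing each p-value with the 5% significance level: a p-value below 0.05 rejects the hypothesis that the two models' results come from the same distribution. The sketch below applies that decision rule to the reported p-values; it does not rerun the tests, and the dictionary layout and function name are illustrative.

```python
ALPHA = 0.05  # 5% significance level, as stated in the table header

# p-values copied from the table above.
p_values = {
    ("AlexNet", "ResNet50"): {"KS": 0.0025, "WRS": 0.0493},
    ("AlexNet", "MobileNetV2"): {"KS": 0.0025, "WRS": 0.0006},
    ("ResNet50", "MobileNetV2"): {"KS": 0.5941, "WRS": 0.7789},
}


def statistically_similar(p_value, alpha=ALPHA):
    """True when the test fails to reject the null hypothesis at level alpha."""
    return p_value >= alpha


decisions = {
    pair: {test: statistically_similar(p) for test, p in tests.items()}
    for pair, tests in p_values.items()
}

for pair, result in decisions.items():
    print(" vs. ".join(pair), result)
```

The AlexNet vs. ResNet50 WRS result (p = 0.0493) sits just under the threshold, so that particular decision is sensitive to the chosen significance level.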

| Model | Predicted Class | True Class: Dry (%) | True Class: Moderate (%) | True Class: Wet (%) |
|---|---|---|---|---|
| Modified AlexNet (Overall Accuracy: 98.4) | Dry | 98.8 | 0.5 | 0 |
| | Moderate | 1.2 | 99.1 | 15.4 |
| | Wet | 0 | 0.4 | 84.6 |
| ResNet50 (Overall Accuracy: 99.2) | Dry | 99.3 | 0.2 | 0 |
| | Moderate | 0.7 | 99.3 | 3.4 |
| | Wet | 0 | 0.5 | 96.6 |
| Modified MobileNetV2 (Overall Accuracy: 99.1) | Dry | 99.5 | 0.4 | 0 |
| | Moderate | 0.5 | 99.0 | 2.9 |
| | Wet | 0 | 0.6 | 97.1 |
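
Each true-class column sums to 100, so the diagonal entries can be read as per-class recall. Assuming these matrices were computed on the scene classification test set (6704 "Dry", 13,186 "Moderate", and 826 "Wet" images), the overall accuracy is the count-weighted mean of the diagonal. The sketch below checks that reading against the reported accuracies; the variable and function names are hypothetical.

```python
# Test-set image counts per class (assumed to be the evaluation set).
test_counts = {"Dry": 6704, "Moderate": 13186, "Wet": 826}

# Diagonal of each confusion matrix above, in percent.
recall = {
    "Modified AlexNet": {"Dry": 98.8, "Moderate": 99.1, "Wet": 84.6},
    "ResNet50": {"Dry": 99.3, "Moderate": 99.3, "Wet": 96.6},
    "Modified MobileNetV2": {"Dry": 99.5, "Moderate": 99.0, "Wet": 97.1},
}


def overall_accuracy(per_class_recall, counts):
    """Count-weighted mean of per-class recall, in percent."""
    total = sum(counts.values())
    correct = sum(per_class_recall[name] / 100.0 * n for name, n in counts.items())
    return 100.0 * correct / total


accuracy = {model: overall_accuracy(r, test_counts) for model, r in recall.items()}

for model, value in accuracy.items():
    print(f"{model}: {value:.1f}")
```

Because the "Wet" class is only a small fraction of the test set, the lower "Wet" recall of the modified AlexNet moves its overall accuracy by well under one percentage point, which is why the per-class columns are more informative than the single accuracy figure.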

| Model | Predicted Label | True Label: Dry | True Label: Moderate | True Label: Wet |
|---|---|---|---|---|
| Modified U-Net (PA: 99.7, MIoU: 98.1) | Dry | 100 | 0.1 | 0.0 |
| | Moderate | 0.0 | 99.9 | 4.6 |
| | Wet | 0.0 | 0.0 | 95.4 |
| | F1 score | 99.9 | 99.7 | 97.4 |
| | IoU | 99.9 | 99.5 | 95.0 |
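
The summary metrics in this table are linked by two simple relations: the mean IoU is the unweighted average of the per-class IoU values, and for a single class the F1 score and IoU satisfy F1 = 2 × IoU / (1 + IoU). The sketch below applies both relations to the rounded values in the table, so small differences in the last digit are expected; the names are illustrative.

```python
# Per-class IoU in percent, copied from the table above.
iou = {"Dry": 99.9, "Moderate": 99.5, "Wet": 95.0}


def f1_from_iou(iou_percent):
    """Per-class F1 (Dice) score implied by an IoU (Jaccard) value, in percent."""
    j = iou_percent / 100.0
    return 100.0 * 2.0 * j / (1.0 + j)


# Mean IoU is the unweighted average over the three classes.
mean_iou = sum(iou.values()) / len(iou)
f1 = {name: f1_from_iou(value) for name, value in iou.items()}

print(f"Mean IoU: {mean_iou:.1f}")
for name, value in f1.items():
    print(f"F1 ({name}): {value:.2f}")
```

Since the F1 score is never lower than the IoU for the same class, the gap between the two is largest for the "Wet" class, where both metrics are lowest.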

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, M.; Esmaeili, K. Heap Leach Pad Surface Moisture Monitoring Using Drone-Based Aerial Images and Convolutional Neural Networks: A Case Study at the El Gallo Mine, Mexico. Remote Sens. 2021, 13, 1420. https://doi.org/10.3390/rs13081420
