1. Introduction

Ship detection plays a crucial role in maritime transportation, maritime surveillance applications such as fishery monitoring, and the maintenance of maritime rights. Synthetic aperture radar (SAR), an active remote sensing technique, is well suited to ship detection because it is sensitive to hard targets. Furthermore, SAR operates day and night and in all weather conditions. In recent years, many SAR satellites, such as Radarsat-1/2, TerraSAR-X, Sentinel-1, COSMO-SkyMed, and GF-3, have provided a wide variety of SAR images with different resolutions, modes, and polarizations for maritime applications, thereby enabling ship detection.

According to previous research, ship detection usually involves land-ocean segmentation, preprocessing, prescreening, and discrimination. The constant false alarm rate (CFAR) detector [1,2,3,4] is the traditional method typically used in ship detection. However, CFAR methods depend on the statistical distribution of sea clutter, which is difficult to estimate accurately because of sea waves and ocean currents. In addition, the sizes of the protection (guard) and background windows influence the detection effectiveness. Land-ocean segmentation is also unavoidable, which makes these methods less robust for SAR imagery. Finally, these traditional ship-detection methods require extensive calculations to estimate the parameters of the statistical distribution, so they are not sufficiently flexible, and their detection speed does not meet practical needs.
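To make the role of the protection and background windows concrete, the following is a minimal one-dimensional cell-averaging CFAR sketch. It is an illustration only, not the detector used in the cited works: it assumes exponentially distributed clutter power, and the function name `ca_cfar_1d` and its default window sizes and false alarm probability are hypothetical.

```python
def ca_cfar_1d(power, guard=2, background=8, pfa=1e-3):
    """Return indices flagged as targets by a 1-D cell-averaging CFAR.

    Assumption: clutter power is exponentially distributed, so the
    threshold multiplier has the closed form used below.
    """
    n_ref = 2 * background  # reference cells on both sides of the test cell
    alpha = n_ref * (pfa ** (-1.0 / n_ref) - 1.0)
    half = guard + background
    detections = []
    # Cells too close to the edges are skipped because their windows are incomplete.
    for i in range(half, len(power) - half):
        left = power[i - half : i - guard]           # background cells before the guard cells
        right = power[i + guard + 1 : i + half + 1]  # background cells after the guard cells
        clutter_level = (sum(left) + sum(right)) / n_ref
        if power[i] > alpha * clutter_level:
            detections.append(i)
    return detections


# Flat clutter with one strong scatterer at index 20.
profile = [1.0] * 40
profile[20] = 100.0
hits = ca_cfar_1d(profile)
```

The sketch shows why the window sizes matter: a bright target that falls inside a neighbouring cell's background window raises the local clutter estimate and can mask weaker targets nearby.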

At present, with the development of big data and deep learning technologies, convolutional neural networks (CNNs) are widely used in mapping ice-wedge polygons (IWP) [5,6], identifying damaged buildings [7], classifying sea ice cover and land type [8,9,10], and so on. These CNN models provide automatic extraction frameworks for large-scale, high-spatial-resolution remote sensing applications. However, they require a one-to-one correspondence between the input data and the ground truth annotations. In some research fields, ground truth data are not easy to obtain because annotation requires expert knowledge and is time-consuming.

In addition, a growing number of researchers are studying CNN-based object detection. Two-stage methods, such as the region-based convolutional network (R-CNN) [11], Fast R-CNN [12], and Faster R-CNN [13], and single-stage methods, such as SSD [14], YOLO V1/V2/V3/V4 [14,15,16,17,18], and RetinaNet [19], have exhibited impressive results on object detection benchmarks based on the PASCAL VOC [20] and MS COCO [21] datasets. However, natural images differ from SAR images, which are produced through a coherent imaging process that leads to foreshortening, layover, and shadowing. Apart from the imaging mechanism, SAR images contain various non-ship targets, such as ghosts, islands, artificial objects, and harbors, that display backscattering mechanisms similar to those of ships and therefore lead to a high rate of false alarms. Therefore, to apply deep learning algorithms to SAR data, researchers have constructed the SAR Ship Detection Dataset [22], the SAR-Ship-Dataset [23], OpenSAR [24], and a high-resolution SAR image dataset [25], which contain Sentinel-1, Radarsat-2, TerraSAR-X, COSMO-SkyMed, and GaoFen-3 images. These datasets vary in polarization (HH, HV, VH, and VV), resolution (0.5, 1, 3, 5, 8, and 10 m), incidence angle, imaging mode, and background.

Compared with the PASCAL VOC and MS COCO datasets, the SAR datasets are small. Therefore, fine-tuning or transfer learning is widely used when training object detectors for ship detection in SAR images. These CNN methods have been used for target detection in SAR images, including ship detection [26] and land target detection [27], and have performed better than the traditional methods.

The deficiency of these methods is that the average precision is low because the models fail to consider the SAR imaging mechanisms [22]. Moreover, the pretraining time and detection speed of classical object detectors usually do not meet the requirements of real-time ship detection, maritime rescue, and emergency military decision-making. In recent years, many researchers have paid attention to ship detection using CNN detectors. A grid CNN was proposed and shown to improve the accuracy and speed of ship detection [28]. Receptive pyramid network extraction strategies and attention mechanisms have been shown to improve the accuracy of ship detection [29]. These methods have relatively deep convolutional layers and hundreds of millions of parameters, and they involve a long training time. In addition, for a data-driven CNN model, obtaining the ground truth bounding boxes of the targets in each input image is difficult and time-consuming. Therefore, these methods do not meet the requirements of fast processing, real-time response, and large-scale detection.

To achieve low complexity and high reliability with a CNN, some researchers have begun to split the images into small patches in the pre-screening stage and then use a relatively lightweight CNN model to classify the patches. Thereafter, the classification results are mapped onto the original images. Two-stage frameworks that combine pre-screening with a relatively simple CNN architecture have been proposed [30,31], but they use a simple constant false alarm rate detector in the pre-screening stage. As mentioned, the CFAR detector requires a large number of calculations to estimate the parameters of the statistical distribution and ignores small targets. A network with six convolutional layers, three max-pooling layers, and two fully connected layers was proposed for ship classification based on GF-3 SAR images [26]. In these methods, CFAR and Otsu thresholding are typically used to obtain candidate targets in the pre-screening stage, and then a simple CNN model is used to reduce false alarms and recognize the ships. Unfortunately, time consumption increases when sea-land segmentation and a CFAR detector are applied in the pre-screening stage. Although the Otsu method speeds up the pre-screening stage, its threshold may not work effectively and may cause an excessive number of false alarms. After SAR image preprocessing, the CNN model can perform ship detection on all patches, but the detection accuracy for small ships needs to be improved. In addition, although scholars have analyzed and discussed CNN-based ship detection, the feature visualization and analysis of ship detection in both VH and VV polarization, which is important for understanding how the CNN detects ships, has rarely been discussed. Thus, in this letter, we are mainly concerned with ship detection accuracy and with feature visualization and analysis using VH and VV polarization.
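The patch-based strategy described above (split the image, classify each patch, map the labels back) can be sketched as follows. This is a hypothetical illustration: the function names `tile_origins` and `map_back`, the 64-pixel patch size, and the non-overlapping stride are assumptions, and the patch labels would come from whatever classifier is used.

```python
def tile_origins(height, width, patch=64, stride=64):
    """Yield the (row, col) top-left corner of every full patch in the image."""
    for r in range(0, height - patch + 1, stride):
        for c in range(0, width - patch + 1, stride):
            yield r, c


def map_back(origins, labels, patch=64):
    """Turn patch-level ship labels into boxes (row0, col0, row1, col1) in image coordinates."""
    return [
        (r, c, r + patch, c + patch)
        for (r, c), is_ship in zip(origins, labels)
        if is_ship
    ]


# A 128 x 192 image gives a 2 x 3 grid of patches; two are labeled as ships.
origins = list(tile_origins(128, 192))
labels = [False, True, False, False, False, True]  # hypothetical classifier output
boxes = map_back(origins, labels)
```

Because the classifier only sees one patch at a time, the cost per patch stays small, but the localization accuracy is bounded by the patch size and stride.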

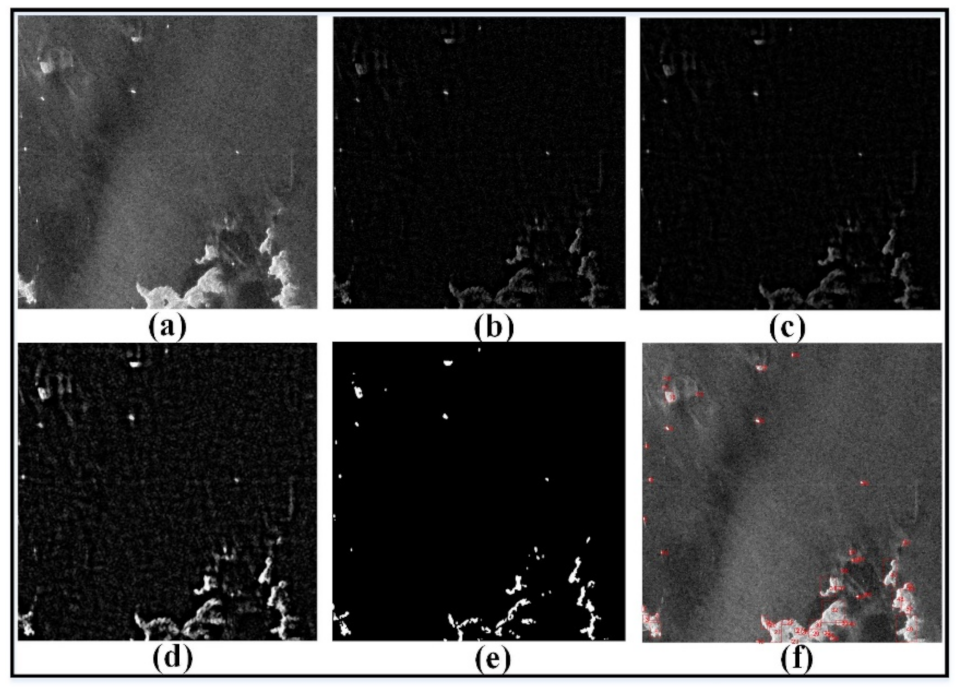

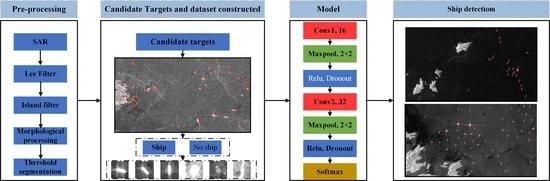

Considering these difficulties, we propose a two-stage ship-detection method. In the first stage, Lee and island filters are used to reduce noise and false alarms. Then, an exponential inverse cumulative distribution function (EICDF) [32,33] is applied to quickly estimate the segmentation threshold and obtain candidate detection results with relatively few false alarms. In the second stage, all candidates are fed into a lightweight CNN to accurately recognize the ships. Finally, the feature visualization and analysis of ship detection are carried out with gradient-weighted class activation mapping (Grad-CAM). The main contributions of this work are as follows:

A two-stage ship detection method for SAR images is proposed. To quickly obtain candidate detection results, this study presents a fast threshold segmentation for candidate detection, which is shown to reduce false alarms, obtain candidate ships at different scales, and save time in offshore areas.

Most detectors have deep architectures and millions of parameters, which results in complex feature extraction and lengthy pretraining. In this study, a simple, lightweight CNN architecture that is fast and effective is proposed to detect ships.

Grad-CAM is introduced to explain and visualize the CNN model and to analyze which pixels receive the greatest attention when a ship or a false alarm is predicted.

The rest of this paper is organized as follows. Section 2 presents the details of the dataset, data pre-processing, and the proposed method. Section 3 reports the experimental results. Section 4 and Section 5 present the discussion and conclusions, respectively.

3. Experimental Results and Analysis

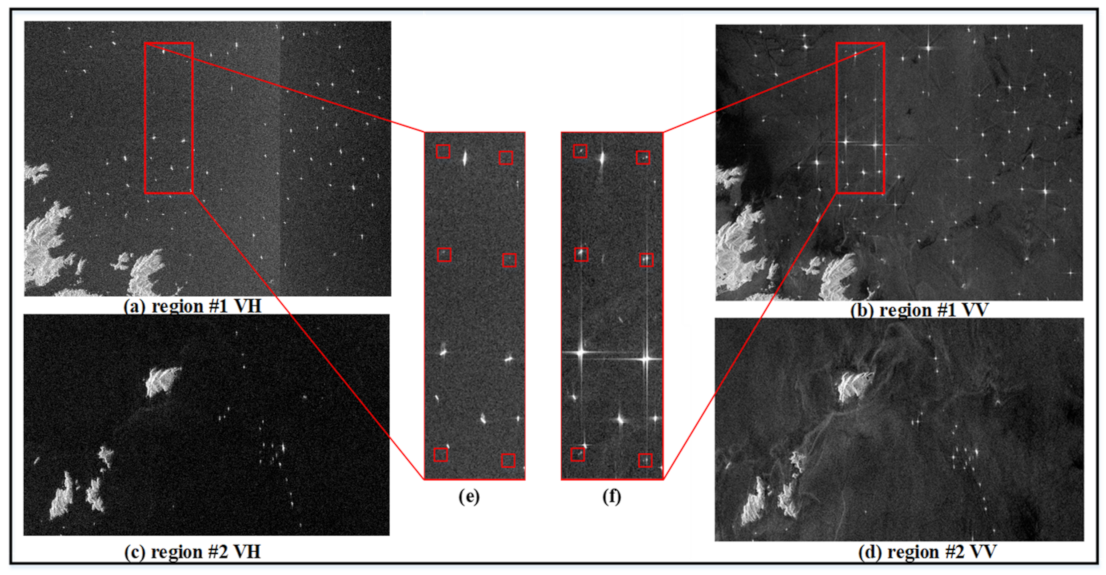

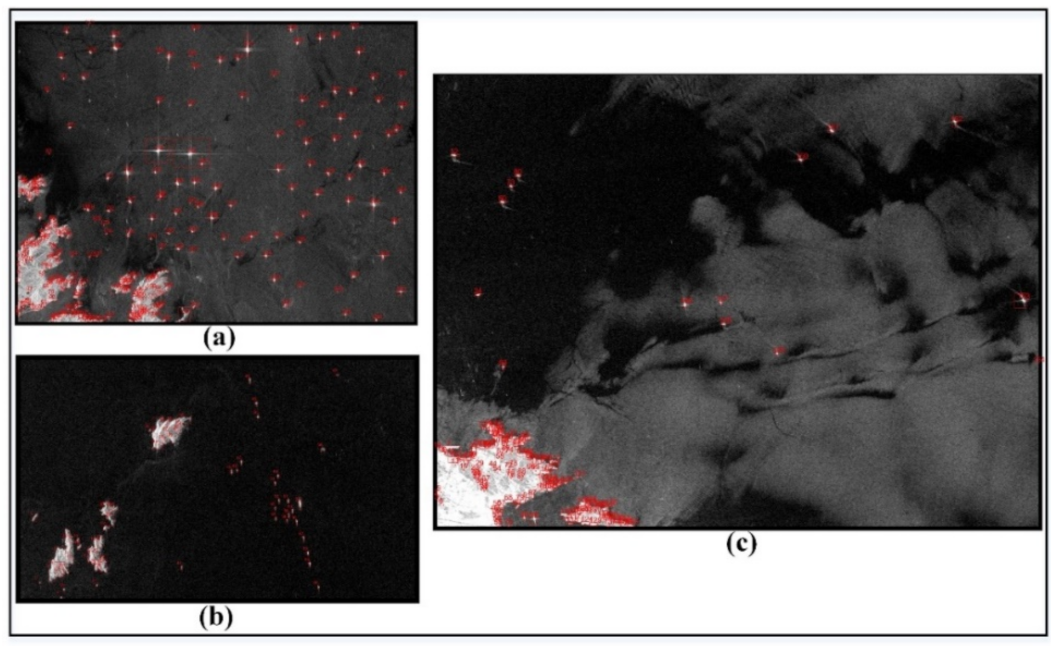

For the comprehensive evaluation of the ship detection result using the proposed method, two sub-images of the No. 1 SAR image acquired on 23 June 2020 located in the East China Sea area were clipped, as shown in

Figure 6a–d. The sizes of the two sub-images were 3791 × 2847 and 2589 × 1565. Besides, a sub-image of 4339 × 3258 was also clipped from the No.4 SAR image acquired on 13 February 2021, located in the Huanghai Sea area, as shown in

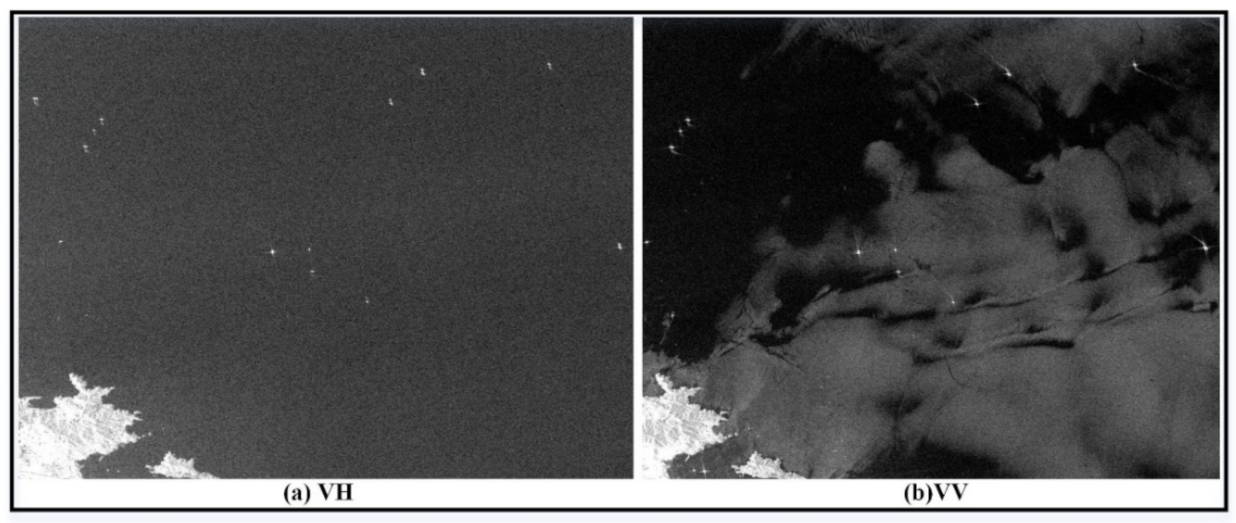

Figure 7a,b.

Figure 6 shows that both regions contain land and islands in addition to sea areas. Azimuth ambiguities are often caused by the sampling of the Doppler spectrum at finite intervals of the pulse repetition frequency (PRF) due to the two-channel acquisition mode [37]. Thus, in the SAR images, a small number of “ghosts” appear around ships moving at high speed, and they are not negligible in ship detection. Figure 6e,f shows the azimuth ambiguities caused by ships moving at high speed. In general, the scattering intensity of co-polarization (see Figure 6b,d) is higher than that of cross-polarization (see Figure 6a,b). Thus, the same targets may present different scattering intensities in VH and VV polarization. Target signatures are weaker in VH polarization than in VV polarization, especially for small targets. In previous studies, co-polarization data were also selected for ship detection. However, VH polarization is less influenced by azimuth ambiguities. Thus, in PolSAR images, azimuth ambiguity was usually suppressed using the two cross-polarization channels [37]. However, the performance of ship detection with VH and VV polarization has rarely been discussed in previous studies. Thus, considering the characteristics of dual-polarization SAR in marine imaging, we used both VH and VV polarization to detect ships with the CNN method.

Figure 8 shows the candidate results of ship detection based on the method described in Section 2.3. In the sub-images with complex backgrounds, all ships are detected as candidates, together with false alarms caused by land, islands, and azimuth ambiguity. There are 122 true ships and 244 false alarm targets in the sub-images of the No. 1 SAR image, and 17 true ships and 137 false alarm targets in the sub-image of the No. 4 SAR image. The ground truth, which is used to evaluate the performance of the proposed method in the next section, was obtained through expert interpretation of the SAR images and Google Earth. Specifically, false alarms were identified by comparing the SAR image with the high-resolution optical image on Google Earth, and ships were then identified based on their scattering characteristics and context in the SAR image.

3.1. Training Details

In this section, the hardware and software platform used in our experiments is introduced. We performed the experiments on the Ubuntu 14.04 operating system with an NVIDIA TITAN Xp GPU with 11.9 GB of memory. Following the hyperparameter settings in the literature [23,41,42], the learning rate, batch size, momentum, max epoch, and weight decay were set to 0.01, 32, 0.9, 1000, and 0.0005, respectively. Considering the SAR characteristics, we did not use data augmentation in our experiments [43]. The hyperparameters of the learning algorithm are listed in Table 5.

For comparison with our method, we also used the machine-learning methods k-nearest neighbors (KNN), support vector machine (SVM), and random forest (RF), as well as the classic CNN LeNet-5, which are commonly used and perform well in classification tasks. In this letter, KNN, SVM, and RF were implemented with Scikit-learn in Python on the Ubuntu 14.04 operating system, using their default parameters. The hyperparameters of LeNet-5 were set to the same values as those of M-LeNet. To ensure consistent input data, the data were normalized to the range from 0 to 1, with the mean and variance values set to 0.5.
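One plausible reading of the normalization step is sketched below: each slice is first scaled to the range from 0 to 1, and the value 0.5 is then used both as the offset and as the scale, which maps the data to the range from -1 to 1. This interpretation, the function name `normalize_patch`, and the per-slice min-max scaling are assumptions made for illustration; the text does not specify these details.

```python
def normalize_patch(patch, mean=0.5, scale=0.5):
    """Scale a 2-D patch to [0, 1], then shift by `mean` and divide by `scale`."""
    flat = [v for row in patch for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a constant patch would otherwise divide by zero
    return [[((v - lo) / span - mean) / scale for v in row] for row in patch]


# Hypothetical 2 x 2 slice of backscatter values.
normalized = normalize_patch([[0, 5], [10, 10]])
```

Applying the same transform to every method's input keeps the comparison between the classical classifiers and the CNNs on equal footing.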

The training and validation samples are listed in Table 6. For all methods, the training and validation samples come from the SAR data acquired on 11 July 2020 (No. 2) and 23 July 2020 (No. 3), and they are split at a ratio of 8:2 in the training stage. In the testing stage, the test samples come from the SAR data acquired on 23 June 2020 (No. 1) and 13 February 2021 (No. 4).
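A minimal sketch of an 8:2 training and validation split is given below. The random shuffling, the fixed seed, and the function name `split_train_val` are assumptions for illustration; the text does not state how the samples were assigned to the two sets.

```python
import random


def split_train_val(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples reproducibly and split them into training and validation sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# 100 hypothetical slice identifiers split 80/20.
train_ids, val_ids = split_train_val(range(100))
```

Keeping the test scenes (No. 1 and No. 4) entirely separate from the scenes used for this split is what allows the test results to indicate transferability.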

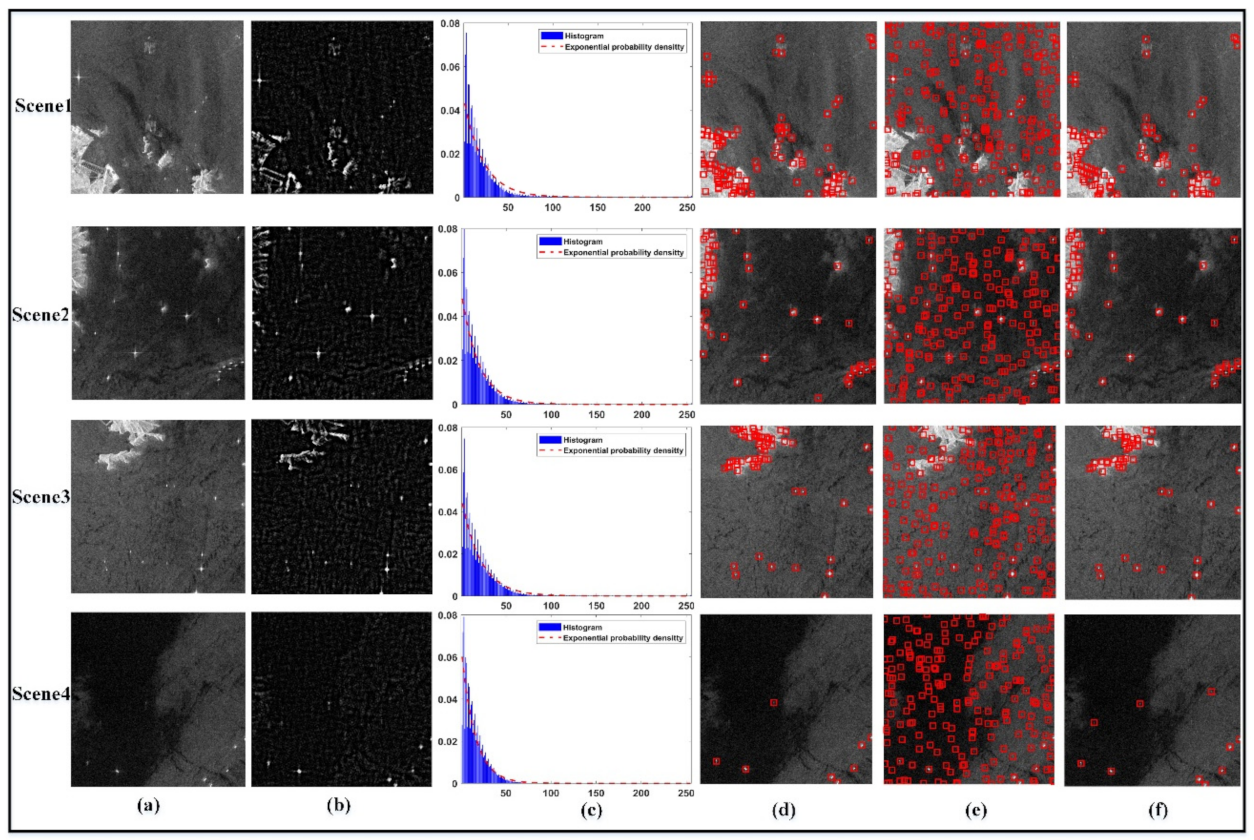

3.2. VH Polarization Results

In this section, we first conducted the experiments on VH polarization with KNN, SVM, RF, LeNet-5, and our method. Figure 8 shows the candidate results, which include ships and false alarms. Apart from the true ships, many false alarm targets are detected in the land and island areas. As mentioned above, a lightweight CNN method is proposed to detect ships more accurately. Meanwhile, the KNN, SVM, RF, and classic LeNet-5 methods were used to demonstrate the effectiveness of our method. Figure 9 shows the results of the different methods, through which the ships could be detected and the false alarms were further reduced. The KNN method produces more false alarms and detects fewer true ships than the other methods. The performance of different machine learning methods has been discussed in [30,44]. Noi and Kappas [44] confirmed that when the number of training samples increases from 1267 pixels to 2619 pixels (each class has 135 polygons) in land cover classification experiments, the accuracy of SVM and RF is significantly better than that of KNN. Wang et al. [30] also demonstrated that KNN performs worse than RF and SVM in ship detection. Thus, it is reasonable that RF and SVM perform better than KNN here. Among the CNN methods, M-LeNet performs better than LeNet-5.

To quantitatively evaluate the performance of KNN, SVM, RF, LeNet-5, and M-LeNet, we used the following evaluation indicators: accuracy, precision, recall, and F1 score. Among these indicators, the F1 score is the harmonic mean of precision and recall and is usually more useful than accuracy. The equations are as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where true positive (TP) means that the actual class is the ship and the predicted class is the ship, true negative (TN) means that the actual class is a false alarm and the predicted class is a false alarm, false positive (FP) means that the actual class is a false alarm and the predicted class is the ship, and false negative (FN) means that the actual class is the ship but the predicted class is a false alarm. In the CNN, the input data are the slices, and the output is the probability of ship and false alarm. Hence, the evaluation is based on the numbers of ship and false alarm slices. The accuracy, precision, recall, and F1 score were then computed from the ground truth and the predicted labels of the ship and false alarm slices.
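The four indicators can be computed directly from the slice counts, as in the sketch below. The function name `detection_metrics` and the counts in the example are hypothetical; only the totals of 122 ship slices and 244 false alarm slices are taken from the text, and the split into correct and incorrect predictions is invented for illustration.

```python
def detection_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp: ships predicted as ships          tn: false alarms predicted as false alarms
    fp: false alarms predicted as ships   fn: ships predicted as false alarms
    """
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical outcome: 2 of 122 ships missed, 4 of 244 false alarms kept as ships.
scores = detection_metrics(tp=120, tn=240, fp=4, fn=2)
```

Because false alarm slices outnumber ship slices two to one here, accuracy alone can look high even when ships are missed, which is why the F1 score is reported alongside it.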

In addition, the number of missed ships and the number of false alarms were also calculated. In the sub-images, 122 true ships were obtained through expert knowledge interpretation using the SAR scattering mechanism.

Table 7 presents the detailed evaluation indicators. Among the machine learning methods, RF provides the best evaluation indicators compared with KNN and SVM. Among the CNN methods, M-LeNet presents the best evaluation indicators. The fewest missed ships (one) occur with the RF method, and the most missed ships (five) occur with the KNN method. The fewest false alarms (zero) occur with M-LeNet, and the largest number of false alarms is eleven. The false alarms mainly occur in the land areas in the lower-left corner of the image, which contain structures similar to ships against a low surrounding background. Furthermore, ghosts caused by azimuth ambiguity are also incorrectly detected as ships. Compared with VV polarization, VH polarization has lower backscattering, especially for small targets. Thus, small ships of this type fail to be detected by the CNN methods, as indicated by the blue rectangles in Figure 9. The CNN methods generally exhibit better performance than the machine learning methods. Although several ships are missed, the overall performance of M-LeNet is better than that of RF. The reason is that the RF classifier, which is based on a statistical model, is sensitive to the image pixels, whereas the convolution and pooling operations in the CNN cause small targets to lose detailed texture information and rich semantic information. Thus, RF performs better than M-LeNet in small target detection, and M-LeNet performs better than RF in false alarm rejection. Although M-LeNet detects fewer ships correctly than RF, it produces fewer false detections than RF and LeNet-5. The comprehensive evaluation indicators of M-LeNet, such as the F1 score, accuracy, and recall, are better than those of RF. M-LeNet showed the best performance, with an F1 score of 0.99 and an accuracy of 99.40%.

In addition, to demonstrate the transferability of our CNN model, a SAR image of the Huanghai Sea area was used to test the performance of ship detection. To quickly evaluate the accuracy, a sub-image with a size of 4339 × 3258 was clipped. In this sub-image, 17 true ships were identified through expert knowledge interpretation and Google Earth. Although the number of ships is smaller than in the sub-images of No. 1, the VH and VV polarization images show a different sea background. Figure 10 shows the detection results, and Table 8 presents the detailed evaluation indicators. LeNet-5 performs better than KNN, SVM, and RF, with an F1 score of 0.90 and an accuracy of 98.05%. M-LeNet shows the best performance among these methods, with an F1 score of 0.97 and an accuracy of 99.35%.

3.3. VV Polarization Results

Figure 11 shows the detection results of VV polarization. Similar to VH polarization, the false alarms were reduced while the ships were retained. In sub-image 1, the false alarms mainly appeared in Figure 11a,b,d. In sub-image 2, the false alarms mainly existed in Figure 11f,g. Figure 11 shows that RF performs best among the machine learning methods and M-LeNet performs best among the deep learning methods. To quantitatively compare the performance of the different methods, we calculated the accuracy, precision, recall, and F1 score. Table 9 presents the results of the evaluation indicators. The fewest missed ships (zero) occur with the RF and SVM methods, and the most missed ships (ten) occur with the LeNet-5 method. The fewest false alarms (three) occur with M-LeNet, and the most false alarms (twenty-one) occur with the KNN method. RF and SVM could detect all the true ships, but, compared with VH polarization, a few false alarms were retained. Similar to its performance in VH polarization, KNN had more missed ships and false alarms. LeNet-5 performed worst, with the most missed ships. Although M-LeNet missed more ships than RF and SVM, its comprehensive evaluation indicators were the best, with an F1 score of 0.98 and an accuracy of 98.2%. The characteristics of false alarms caused by azimuth ambiguity are similar to those of true ships, so distinguishing these false alarms is difficult. Although the M-LeNet method could reduce false alarms caused by azimuth ambiguity more effectively than the other methods, some false alarms remained. In [31], 680 ships and 170 ghosts were selected for training; the experiments on Sentinel-1 images showed encouraging results, but further improvement is needed. In our experiment, the proportion of ghosts was under 0.2%, which indicates a great imbalance between ship and ghost training samples. Thus, the prediction performance for ghosts is poor.

Figure 12 shows the detection results, and Table 10 presents the evaluation indicators of VV polarization in the No. 4 sub-image of the Huanghai Sea area. Unlike the VH polarization image in Figure 10, the VV polarization image shows an inhomogeneous pattern in the SAR scene due to other marine phenomena that may exist in the images, e.g., moderate-to-high wind, upwelling, and eddies [45,46]. Among these methods, RF shows the best performance, with an F1 score of 0.97 and an accuracy of 99.35%. Although M-LeNet achieves a lower F1 score of 0.92 and an accuracy of 98.05%, it detects all ships in the inhomogeneous scene.

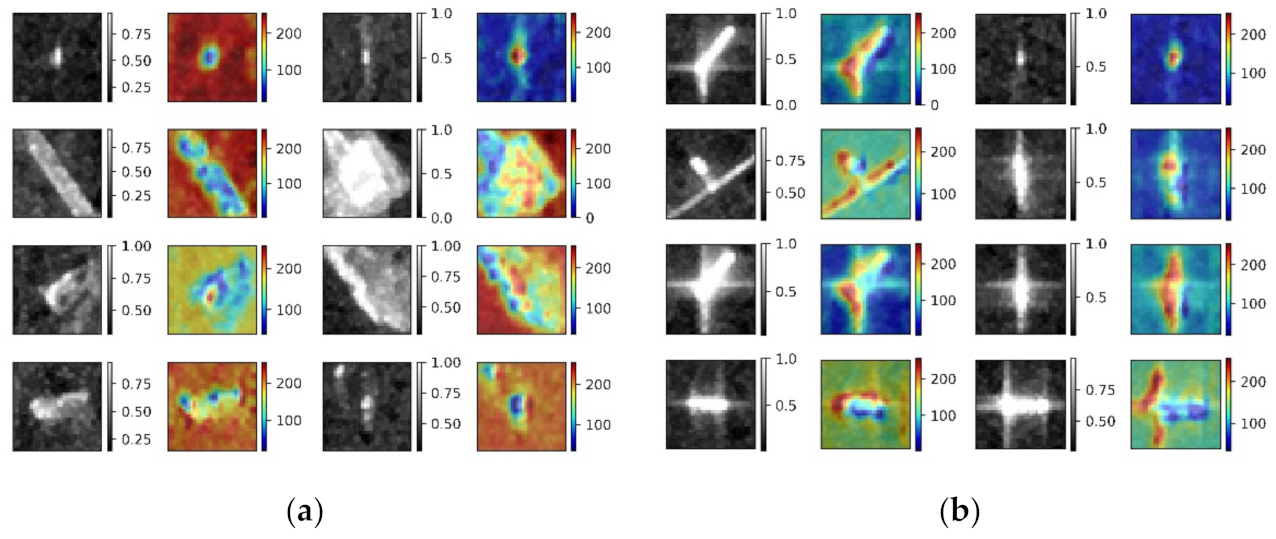

3.4. CNN Feature Visualization Analysis

Deep neural networks have enabled unprecedented breakthroughs in classification, semantic segmentation, and object detection tasks. Although these CNNs achieve superior performance, interpreting and visualizing them is difficult because they cannot be decomposed into intuitive and understandable components [47]. Class activation mapping (CAM) was proposed to identify discriminative regions for a restricted class of image classification models and to gain a better understanding of a model. However, CAM requires that any fully connected layers of the model be removed and replaced with global average pooling (GAP) to obtain the localization of a class [48]. Thus, altering the model architecture is unavoidable, retraining is needed, and the applicable scenarios are restricted. Grad-CAM improved on CAM by using the gradient information flowing into the last convolutional layer of the CNN to understand the importance of each neuron for a classification decision [49]. Similar to CAM, Grad-CAM uses the feature maps produced by the last convolutional layer of a CNN. In CAM, these feature maps are weighted using weights taken from the last fully connected layer of the network. In Grad-CAM, the neuron importance weight $\alpha_k^c$ (Equation (5)) is obtained by global average pooling of the gradients over the height dimension (indexed by $i$) and the width dimension (indexed by $j$). Therefore, Grad-CAM obtains the class-discriminative localization map $L^c_{\text{Grad-CAM}}$ without requiring a particular model architecture, because gradients can be calculated through any kind of neural network layer. $L^c_{\text{Grad-CAM}}$ is a weighted combination of the forward activation maps followed by a ReLU, which yields the final class-discriminative saliency map, as shown in Equation (6).

$$\alpha_k^c = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^c}{\partial A_{ij}^k} \tag{5}$$

$$L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\left( \sum_{k} \alpha_k^c A^k \right) \tag{6}$$

where the weight $\alpha_k^c$ represents the importance of feature map $k$ for a target class $c$, $A^k$ represents feature map $k$ of a convolutional layer, $Z$ is the number of pixels in the feature map, $L^c_{\text{Grad-CAM}} \in \mathbb{R}^{u \times v}$ is the localization map of height $v$ and width $u$ for any class $c$, and $y^c$ is the score for class $c$, whose gradient is computed with respect to the feature map $A^k$ of a convolutional layer, i.e., $\partial y^c / \partial A^k$. Detailed information can be found in [49].
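The two Grad-CAM steps, averaging the gradients of each feature map to obtain its weight (Equation (5)) and applying a ReLU to the weighted sum of the feature maps (Equation (6)), can be sketched in plain Python as follows. This is a toy illustration under stated assumptions: the function name `grad_cam` is hypothetical, the feature maps and gradients are given as nested lists, and in practice the gradients would come from backpropagation in a deep learning framework.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from K feature maps and their gradients.

    activations, gradients: lists of K maps, each a list of rows with the same shape.
    """
    n_rows = len(activations[0])
    n_cols = len(activations[0][0])
    z = n_rows * n_cols
    # Equation (5): global average pooling of the gradients of each feature map.
    weights = [sum(sum(row) for row in grad_k) / z for grad_k in gradients]
    # Equation (6): weighted combination of the feature maps, followed by ReLU.
    heatmap = [[0.0] * n_cols for _ in range(n_rows)]
    for w, act_k in zip(weights, activations):
        for i in range(n_rows):
            for j in range(n_cols):
                heatmap[i][j] += w * act_k[i][j]
    return [[max(0.0, v) for v in row] for row in heatmap]


# Two hypothetical 2 x 2 feature maps; the second one has negative gradients.
feature_maps = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]
heat = grad_cam(feature_maps, grads)
```

The ReLU keeps only the locations that push the class score up, which is why the heatmap highlights evidence for the predicted class rather than evidence against it.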

The output of Grad-CAM is a “class-discriminative localization map,” i.e., a heatmap in which the hot regions correspond to a particular class. Figure 13 and Figure 14 show the Grad-CAM heatmaps for “false alarm” and “ship” in VH and VV polarization, respectively. The heatmap highlights the image region that receives the greatest attention from the CNN when it correctly predicts that an image belongs to a particular class. Figure 13a and Figure 14a show the regions of greatest attention when the CNN predicts that images belong to the false alarm class. These image slices cover the same areas in VH and VV polarization, which contain buildings near the coastline, small islands, reefs, and azimuth ambiguities. The heatmaps of false alarms show that the surrounding background contributes to false alarm recognition. The azimuth ambiguity presents different characteristics in VH and VV polarization; a similar phenomenon has been discussed in Section 3. The azimuth ambiguity can be observed in the first row in both VH and VV polarization. The scattering intensity of the azimuth ambiguity is more obvious in VV polarization than in VH polarization. Furthermore, the false alarms in VV polarization show two different attention patterns: in one, the heatmap focuses on the surrounding background, and in the other, it focuses on the azimuth ambiguity itself, which is why false alarms caused by azimuth ambiguity are predicted less reliably in this polarization.

Figure 13b and Figure 14b show the regions of greatest attention when the CNN predicts that images belong to the ship class. Ships of different scales with high scattering intensity contribute more to ship recognition than the surrounding sea surface, which differs from the false alarm case in VH and VV polarization.

4. Discussion

The performance of the CNN methods in ship detection demonstrates their great potential under different conditions, such as incidence angles, wind speeds, sea states, and ocean dynamic parameters, that mainly influence the backscattering coefficients of the ocean surface and the ship [23,50,51]. In addition, the scattering characteristics of ghosts caused by azimuth ambiguity when a ship is moving at high speed are similar to those of ships, which makes it difficult to distinguish between ships and ghosts in a single-polarization image. The CNN method also shows great potential for this problem. In this study, the lightweight CNN does not completely suppress the ghosts in VV polarization because of the lack of adequate training samples. Fortunately, VH polarization is less affected by ghosts, and thus the lightweight CNN shows its best results in VH polarization. In future work, more ghost training samples will be added.

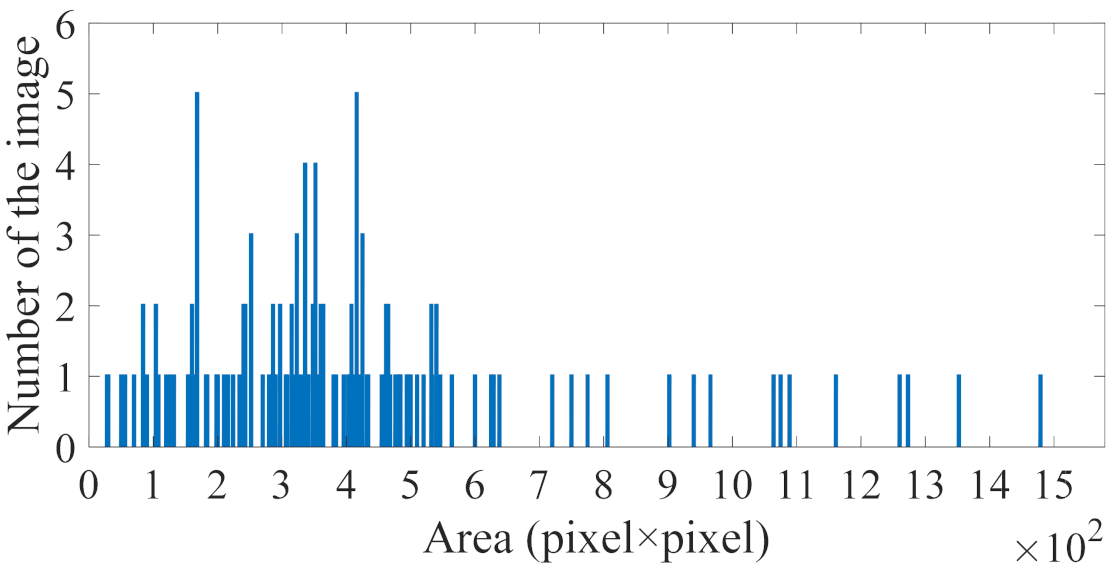

In object detectors, small targets are defined as those smaller than 32 × 32 pixels in natural images [21]. However, SAR images differ from natural images, and ships are usually much smaller than 32 × 32 pixels, especially ships operating offshore. Figure 15 shows the sizes of the ships in the test SAR images. Almost all ships have an area of less than 32 × 32, and most ships have an area of less than 24 × 25. The SVM and RF methods, which are based on statistical characteristics, show good performance with the fewest missed ships, especially for small ships in VV polarization; however, some false alarms cannot be avoided. The PFN module and feature fusion strategies are often used to improve the detection accuracy and reduce the false alarms for small targets [26,42,52]. Furthermore, these modules are usually integrated into the VGG16 and ResNet-50 networks [42,53], so the resulting CNN models are complex and have many parameters to train. The PFN module and feature fusion strategies are effective for small targets in object detectors but may be less effective for targets much smaller than 32 × 32. Thus, in this study, we provide a dataset and a two-stage method for ship detection in SAR images, in which even extremely small ships can be recognized as candidates in the first stage. In the second stage, candidates of different scales in the test SAR images can be accurately detected by considering contextual background information. M-LeNet demonstrates the best and most stable ship detection performance: it reduces false alarms and missed ships and obtains higher precision in VH and VV polarization than the other methods in different ocean areas and scenarios.

In previous studies, ship detection using Sentinel-1 SAR images was carried out by Wang et al. [54]. Faster R-CNN reached an accuracy of 98.07% and an F1 score of 0.90, but the number of false alarms was relatively large [54]. The accuracy reached 90.05% with YOLOv2 [55]. With the GF-3 dataset, the test precision and F1 score were 91.3% and 0.92, respectively, for detecting multiscale ships and small ships [42]. In [29], an attention module was used to improve the performance of ship detection, and the recall, precision, and F1 score reached 0.96, 96.4%, and 0.96, respectively. Although the performance of ship detection improved, the model complexity increased. To reduce model complexity, a simple CNN was used to detect ships, and the accuracy of ship detection was 97.2% on spaceborne images [30]. In our experiments, a lightweight CNN is proposed to improve the accuracy and F1 score. Its best result reaches an accuracy of 99.4% and an F1 score of 0.99 on Sentinel-1 images.

Figure 15 shows that most ships have an area of less than 24 × 25 pixels. The test accuracy and F1 score also demonstrate that the proposed method can detect small ships. In summary, the proposed method detects ships effectively in comparison with the detectors above. However, previous studies rarely analyzed and visualized the features to gain a better understanding of the model. To understand and visualize the model, Grad-CAM was used, and the results demonstrate that it helps explain how the lightweight CNN model predicts ships and false alarms. Hence, the Grad-CAM visualization and analysis could help detect ships with weakly supervised methods in future work.

From the above discussion, the proposed lightweight CNN shows good performance in different ocean areas and scenarios. Unlike the detectors in [29,54,55], it does not require a one-to-one correspondence between the input data and ground truth bounding boxes; the samples only need to be labeled as ship or no-ship. In addition, the proposed CNN model is simplified to a shallow convolutional neural network and is more efficient than Faster R-CNN, SSD, YOLO, etc. However, in contrast to those detectors, the proposed CNN model is not end-to-end. To obtain the detection result, data preprocessing must first be applied to the SAR images, and then the lightweight CNN is used to accurately detect the ships. Although the proposed method achieves an accuracy of 99.4% and an F1 score of 0.99, how to simplify the data preprocessing and integrate it into the CNN model to achieve end-to-end training is worth considering in future work. In addition, the ocean surface is modulated and complicated by ocean dynamic processes such as wind, waves, upwelling, and eddies, as well as by the sea state. The limited training data cannot cover all sea state conditions. Zhang et al. [5] likewise reported that their CNN model was not fully explored because of comparably limited training data. Hence, to give the CNN model more generalization capability, more data should be added in future work.