Assimilation of LAI Derived from UAV Multispectral Data into the SAFY Model to Estimate Maize Yield

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site and Experimental Design

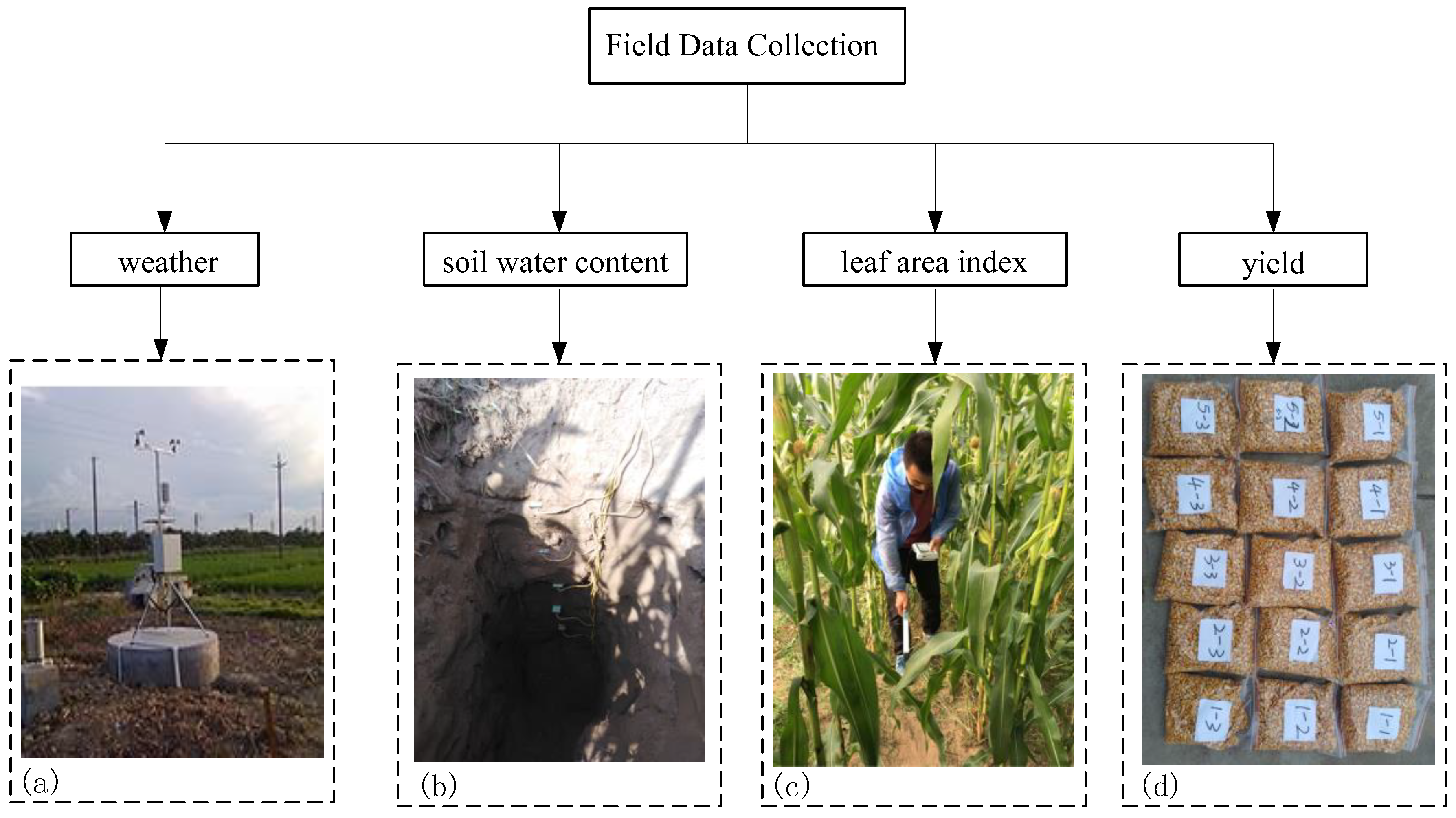

2.2. Field Data Collection

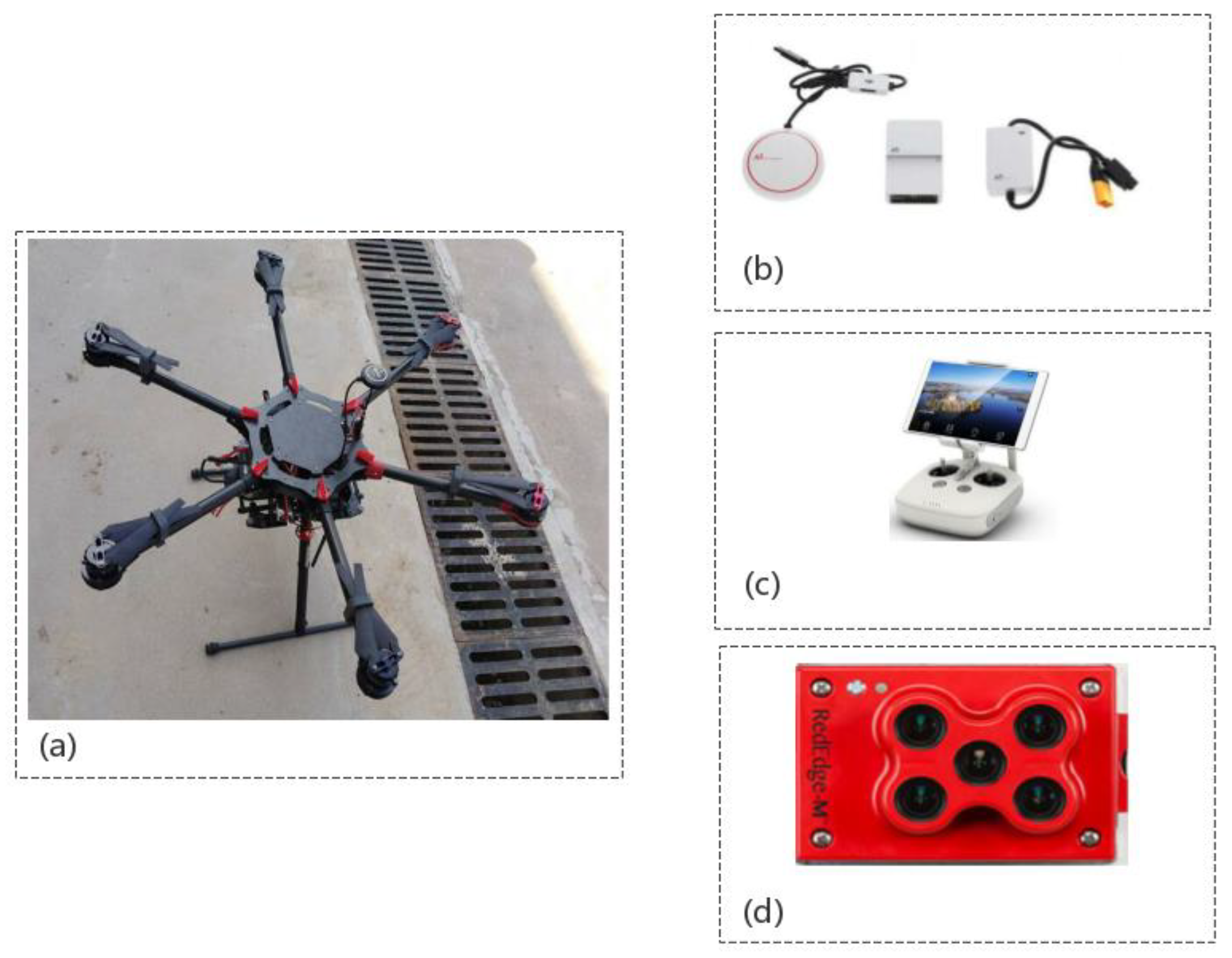

2.3. UAV Camera System for Multispectral Imagery and Data Collection

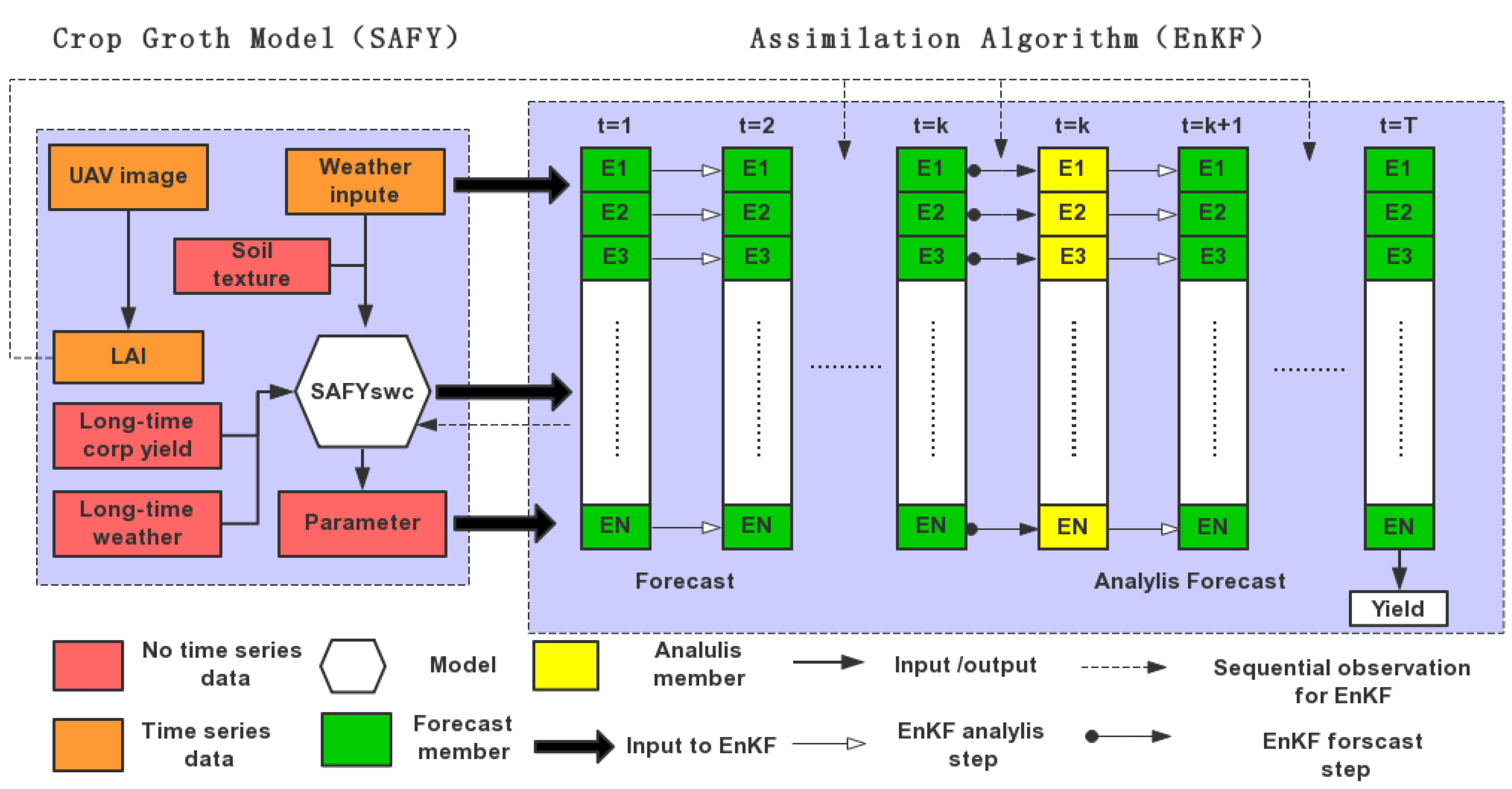

2.4. Crop Growth Model

2.5. Data Assimilation and Technical Processes

2.6. Vegetation Index Calculation and Model Evaluation

3. Results

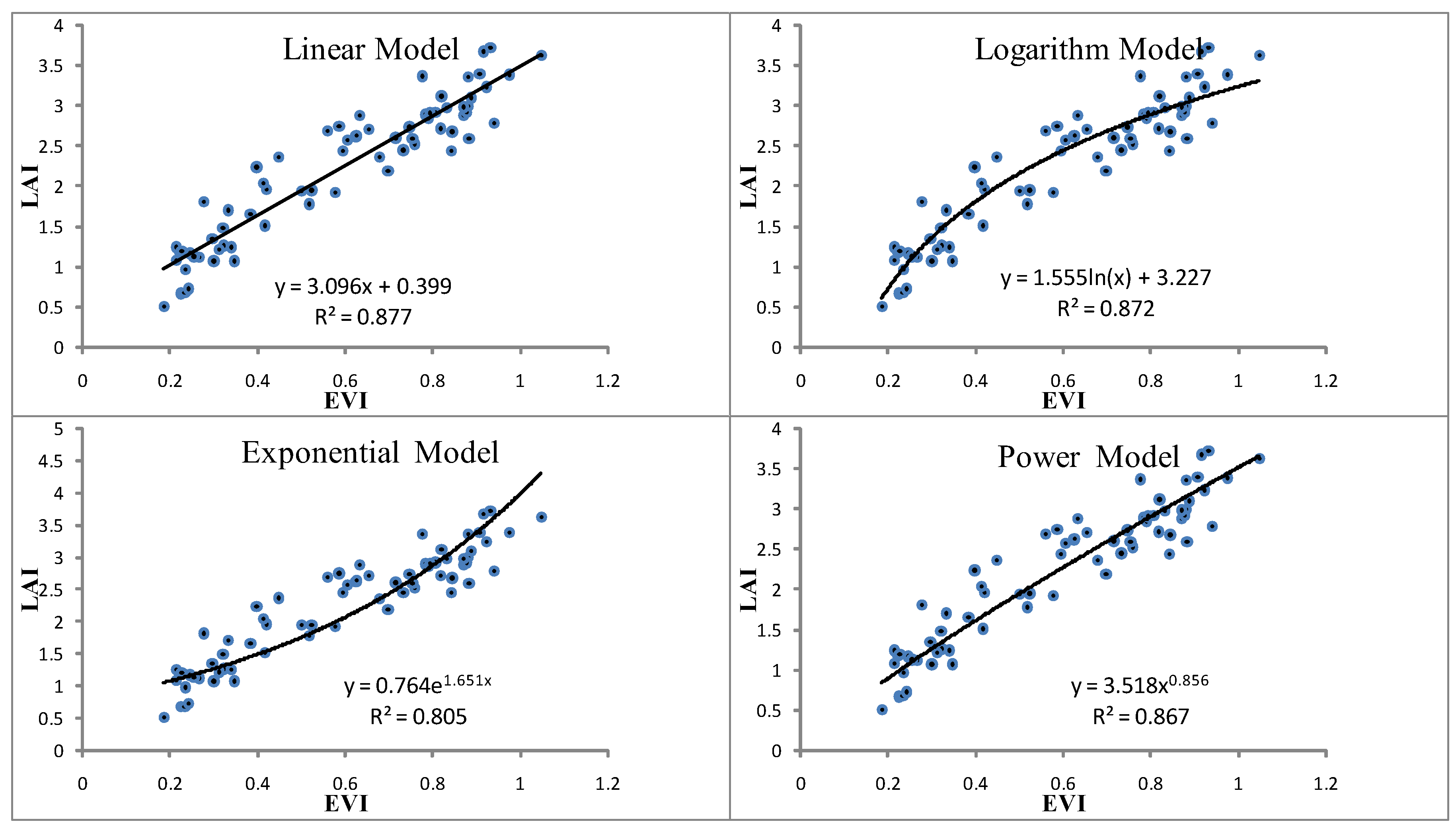

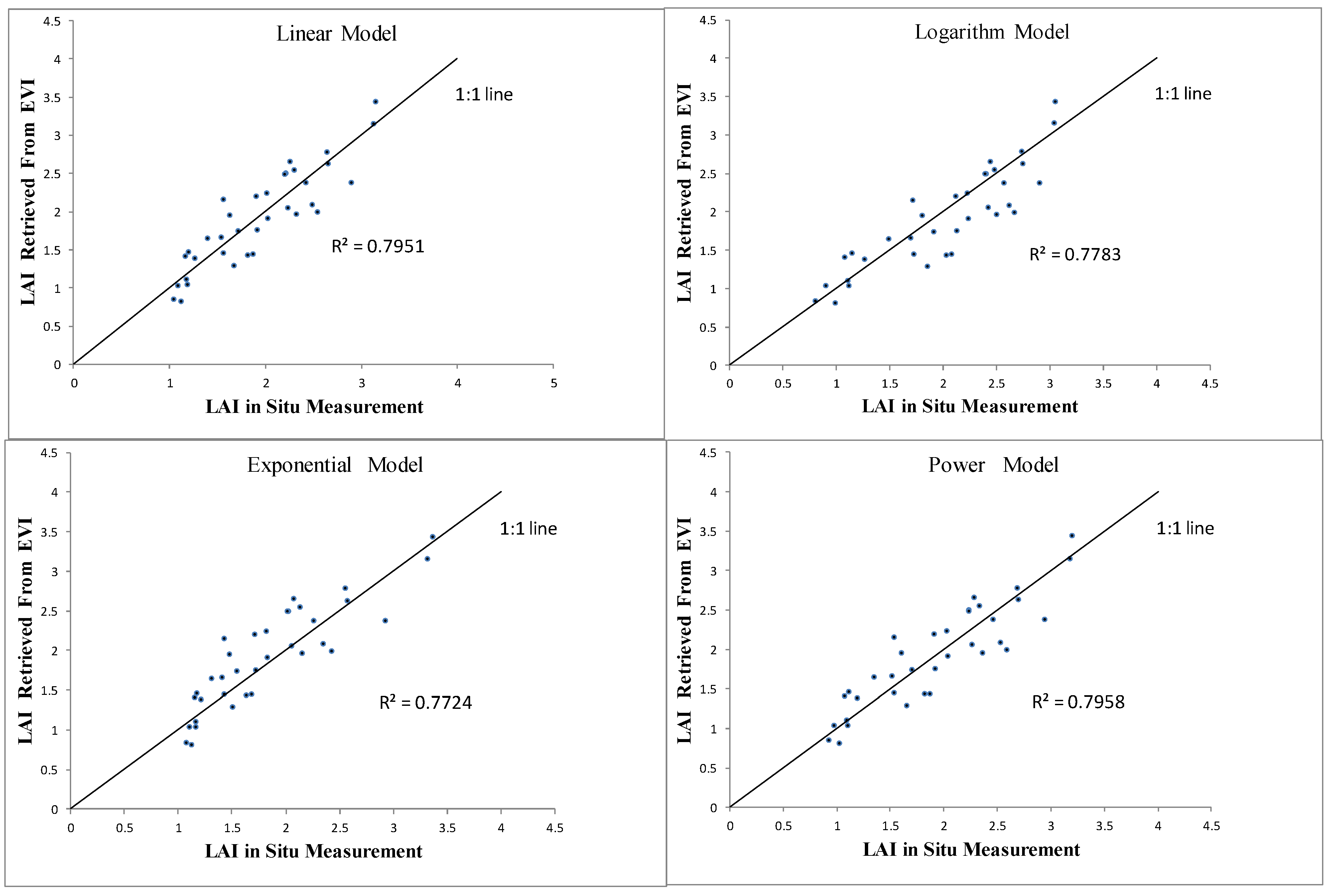

3.1. LAI Estimation Using Vegetation Indices

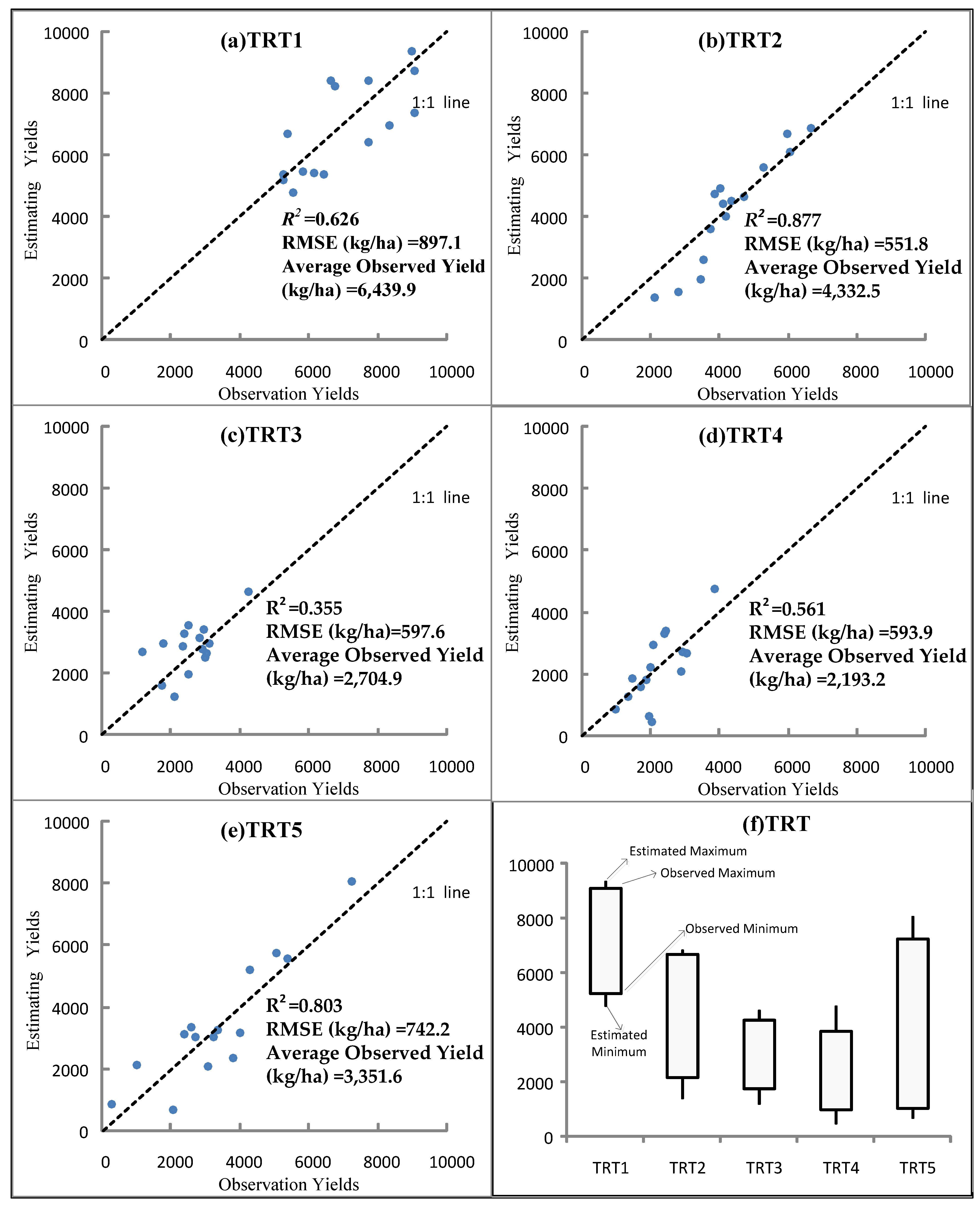

3.2. Comparison of Observed Yield and Estimated Yield

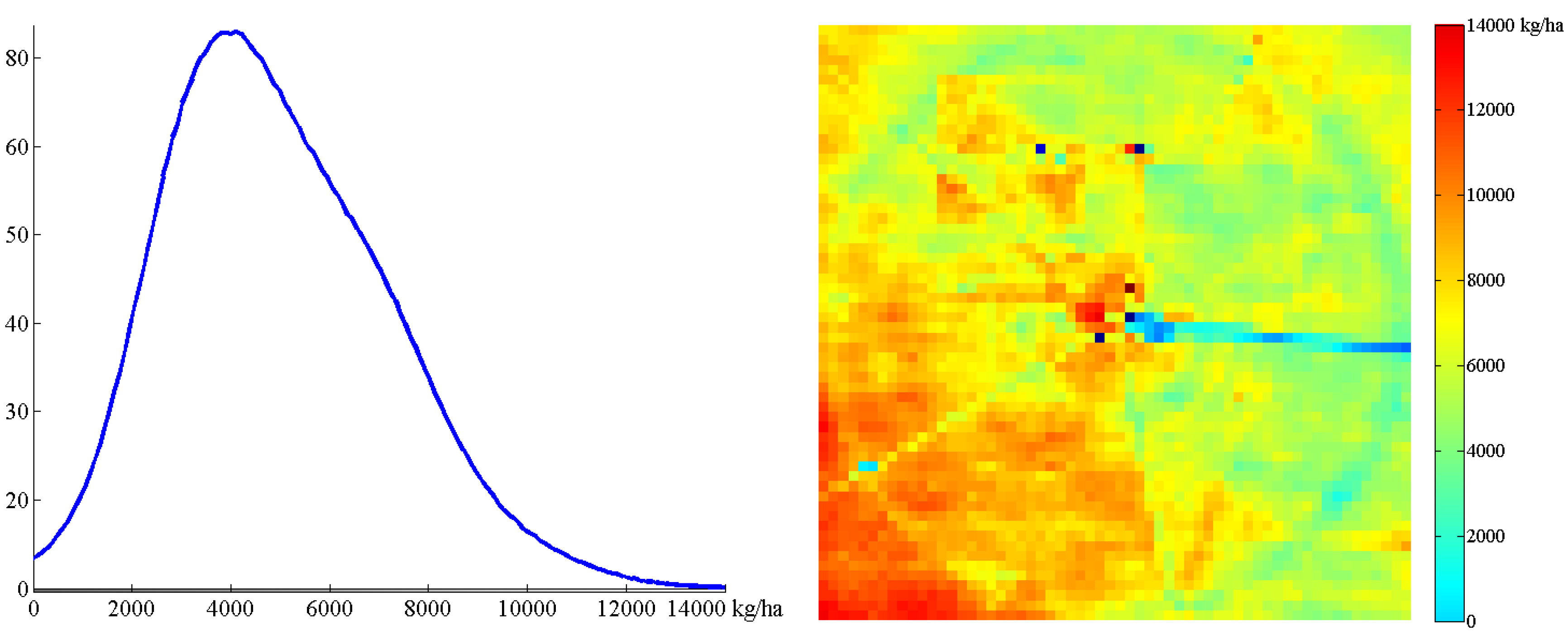

3.3. Yield Mapping

4. Discussion

4.1. Accuracy of LAI Inversion and Its Influence on Yield Estimation Accuracy

4.2. Uncertainties in the Estimated Crop Yield

4.3. Yield Estimation and Data Assimilation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


Applied water depth (mm) by growth stage; percentages are relative to TRT1.

| Treatment | Late Vegetative (07.04–07.27) | Reproductive (07.28–08.18) | Maturation (08.19–09.04) | Total |
|---|---|---|---|---|
| TRT1 | 98.2 (100%) | 86.3 (100%) | 71.7 (100%) | 256.2 (100%) |
| TRT2 | 98.2 (100%) | 63.9 (74%) | 71.7 (100%) | 233.8 (91%) |
| TRT3 | 98.2 (100%) | 46.6 (54%) | 71.7 (100%) | 216.5 (85%) |
| TRT4 | 72.6 (74%) | 63.9 (74%) | 71.7 (100%) | 208.2 (81%) |
| TRT5 | 72.6 (74%) | 86.3 (100%) | 71.7 (100%) | 230.6 (90%) |
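The percentages in the irrigation table follow directly from the stage depths. The short Python sketch below sums each treatment and expresses every stage and the seasonal total relative to TRT1; the constant and function names are illustrative only and do not come from the study.

```python
# Applied water depth (mm) per stage: late vegetative, reproductive, maturation.
# Values copied from the irrigation table.
DEPTHS_MM = {
    "TRT1": (98.2, 86.3, 71.7),
    "TRT2": (98.2, 63.9, 71.7),
    "TRT3": (98.2, 46.6, 71.7),
    "TRT4": (72.6, 63.9, 71.7),
    "TRT5": (72.6, 86.3, 71.7),
}


def percent_of_reference(depths, reference="TRT1"):
    """Return {treatment: (total_mm, per-stage % of reference, total % of reference)}."""
    ref = depths[reference]
    ref_total = sum(ref)
    out = {}
    for trt, values in depths.items():
        total = sum(values)
        # Per-stage depth as a whole-number percentage of the reference stage depth
        stage_pct = tuple(round(100 * v / r) for v, r in zip(values, ref))
        out[trt] = (round(total, 1), stage_pct, round(100 * total / ref_total))
    return out


if __name__ == "__main__":
    for trt, row in percent_of_reference(DEPTHS_MM).items():
        print(trt, row)
```

Run on the table values, this reproduces the listed figures; for example, TRT3 totals 216.5 mm, or 85% of TRT1.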

| Band No. | Name | Center Wavelength | Bandwidth | Panel Reflectance |
|---|---|---|---|---|
| 1 | Blue | 475 nm | 20 nm | 0.57 |
| 2 | Green | 560 nm | 20 nm | 0.57 |
| 3 | Red | 668 nm | 10 nm | 0.56 |
| 4 | NIR | 840 nm | 40 nm | 0.51 |
| 5 | RedEdge | 717 nm | 10 nm | 0.55 |
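The per-band panel reflectance is what a one-point (single-panel) reflectance calibration needs. A minimal sketch follows. It assumes the pixel values have already been converted to radiance (exposure, gain and vignetting corrected) and that illumination did not change between the panel capture and the flight. This section does not describe the study's actual calibration workflow, and the function names are hypothetical.

```python
# Panel reflectance per band, copied from the band table.
PANEL_REFLECTANCE = {"Blue": 0.57, "Green": 0.57, "Red": 0.56, "NIR": 0.51, "RedEdge": 0.55}


def calibration_factor(panel_radiance_mean, panel_reflectance):
    """Radiance-to-reflectance factor from the mean radiance over the panel region.

    Assumes a linear sensor response through the origin (one-point calibration).
    """
    if panel_radiance_mean <= 0:
        raise ValueError("panel radiance must be positive")
    return panel_reflectance / panel_radiance_mean


def to_reflectance(radiance_values, factor):
    """Scale radiance values of one band to surface reflectance."""
    return [v * factor for v in radiance_values]


if __name__ == "__main__":
    # Hypothetical panel radiance of 0.2 in the red band
    f = calibration_factor(0.2, PANEL_REFLECTANCE["Red"])
    print(to_reflectance([0.05, 0.1], f))
```

A two-point empirical line (dark and bright targets) would relax the through-origin assumption, but it needs a second reference target that the table does not list.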

| Type | Parameter | Description | Notation | Unit | Initial Value or Range |
|---|---|---|---|---|---|
| Fixed | Climatic efficiency | Ratio of incoming photosynthetically active radiation to global radiation | ε | - | 0.48 |
| Fixed | Light-interception coefficient | Coefficient in Beer’s law | K | - | 0.5 |
| Fixed | Optimal temperature for crop growth | The optimal temperature for crop functions | Topt | °C | 30 |
| Fixed | Minimum temperature for crop growth | The minimum temperature below which crop growth stops | Tmin | °C | 10 |
| Fixed | Maximum temperature for crop growth | The maximum temperature above which crop growth stops | Tmax | °C | 47 |
| Fixed | Specific leaf area | The ratio of leaf area to dry leaf mass | SLA | m²/g | 0.024 |
| Fixed | Initial aboveground biomass | The aboveground biomass at emergence | DAM0 | g/m² | 3.7 |
| Fixed | Root growth rate | Increase of root depth over time | Rgrt | cm·°C⁻¹·day⁻¹ | 0.22 |
| Fixed | Root length/weight ratio | The ratio of root length to root dry weight | Rrt | cm/g | 0.98 |
| Fixed | Bare soil albedo | Albedo of bare soil | SALB | - | 0.16 |
| Free | Day of Emergence | The day of the year when the dry biomass of the crop is 2.5 g/m² | D0 | day | 120–160 |
| Free | Leaf Partitioning Coefficient 1 | Initial fraction of daily accumulated dry biomass partitioned to leaves at emergence | PLa | - | 0.1–0.4 |
| Free | Leaf Partitioning Coefficient 2 | Together with PLa, determines the cumulated GDD at which LAI peaks | PLb | - | 0.001–0.01 |
| Free | Leaf Senescence Coefficient 1 | Cumulated GDD at which leaf senescence starts (LAI decreases) | SenA | °C·day | 500–1200 |
| Free | Leaf Senescence Coefficient 2 | Determines the rate of leaf senescence | SenB | °C·day | 2000–15,000 |
| Free | Effective Light Use Efficiency | The ratio between produced dry biomass and APAR | ELUE | g/MJ | 2.5–5 |
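As a reading aid for the parameter table, the sketch below advances a SAFY-style model by one day. It follows the commonly cited SAFY structure: a Monteith light-use-efficiency biomass increment, an exponential partition-to-leaf function and GDD-driven senescence. Several details are assumptions, because this section does not state the equations: the quadratic temperature function (β = 2), the use of Tmin as the GDD base temperature, the exact partitioning form, and the free-parameter values in `SafyParams`, which are hypothetical picks inside the calibration ranges.

```python
import math
from dataclasses import dataclass


@dataclass
class SafyParams:
    # Fixed parameters: values taken from the parameter table
    eps_c: float = 0.48    # climatic efficiency (PAR / global radiation)
    k: float = 0.5         # light-interception coefficient (Beer's law)
    t_opt: float = 30.0    # optimal temperature (deg C)
    t_min: float = 10.0    # minimum temperature (deg C); also GDD base (assumption)
    t_max: float = 47.0    # maximum temperature (deg C)
    sla: float = 0.024     # specific leaf area (m2/g)
    # Free parameters: hypothetical values inside the calibration ranges
    pla: float = 0.2       # leaf partitioning coefficient 1
    plb: float = 0.003     # leaf partitioning coefficient 2
    sen_a: float = 800.0   # GDD at which senescence starts (deg C day)
    sen_b: float = 5000.0  # senescence rate parameter (deg C day)
    elue: float = 3.5      # effective light use efficiency (g/MJ)


def temperature_factor(ta: float, p: SafyParams, beta: float = 2.0) -> float:
    """Assumed polynomial stress: 1 at Topt, falling to 0 at Tmin and Tmax."""
    if ta <= p.t_min or ta >= p.t_max:
        return 0.0
    if ta <= p.t_opt:
        return 1.0 - ((p.t_opt - ta) / (p.t_opt - p.t_min)) ** beta
    return 1.0 - ((ta - p.t_opt) / (p.t_max - p.t_opt)) ** beta


def safy_step(dam, glai, smt, ta, rg, p):
    """Advance (dry biomass g/m2, green LAI, cumulated GDD) by one day.

    ta: daily mean air temperature (deg C); rg: global radiation (MJ/m2/day).
    """
    smt += max(ta - p.t_min, 0.0)                        # cumulated GDD since emergence
    apar = p.eps_c * rg * (1.0 - math.exp(-p.k * glai))  # absorbed PAR via Beer's law
    d_dam = p.elue * apar * temperature_factor(ta, p)    # daily dry-biomass gain
    pl = max(1.0 - p.pla * math.exp(p.plb * smt), 0.0)   # fraction of gain sent to leaves
    growth = d_dam * pl * p.sla                          # new green leaf area
    senescence = glai * (smt - p.sen_a) / p.sen_b if smt > p.sen_a else 0.0
    glai = max(glai + growth - senescence, 0.0)
    return dam + d_dam, glai, smt


if __name__ == "__main__":
    params = SafyParams()
    # Hypothetical start: DAM0 = 3.7 g/m2, all of it treated as leaf to seed GLAI
    state = (3.7, 3.7 * params.sla, 0.0)
    for ta, rg in [(25.0, 20.0), (27.0, 22.0), (24.0, 18.0)]:
        state = safy_step(*state, ta, rg, params)
    print(state)
```

Under this assumed partition function, leaf growth stops once PL reaches zero, at SMT = ln(1/PLa)/PLb (about 536 °C·day for the hypothetical PLa = 0.2 and PLb = 0.003). This is consistent with the table's note that PLa and PLb jointly set the GDD at which LAI peaks.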

| Vegetation Indices | Equation |
|---|---|
| Normalized difference vegetation index | NDVI = (NIR − R)/(NIR + R) |
| Optimized soil-adjusted vegetation index | OSAVI = (NIR − R)/(NIR + R + X) (X = 0.16) |
| Green normalized difference vegetation index | GNDVI = (NIR − G)/(NIR + G) |
| Enhanced vegetation index without a blue band | EVI2 = 2.5 (NIR − R)/(NIR + 2.4R + 1) |
| Modified soil-adjusted vegetation index 2 | MSAVI2 = (2NIR + 1 − √((2NIR + 1)² − 8(NIR − R)))/2 |
| Enhanced vegetation index | EVI = 2.5 (NIR − R)/(NIR + 6.0R − 7.5B + 1) |
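The index equations translate directly into code. Below is a minimal Python sketch for a single pixel of surface reflectance (0–1 scale). It uses the OSAVI soil term X = 0.16 and writes MSAVI2 in its standard closed form, which is an assumption because the source table's MSAVI2 equation did not survive extraction. The function name is hypothetical. For whole images, the same expressions could be vectorized with NumPy by replacing `math.sqrt` with `numpy.sqrt`.

```python
import math


def vegetation_indices(blue, green, red, nir, x=0.16):
    """Compute the six indices from band reflectances of one pixel."""
    return {
        "NDVI": (nir - red) / (nir + red),
        "OSAVI": (nir - red) / (nir + red + x),
        "GNDVI": (nir - green) / (nir + green),
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1.0),
        # The radicand equals (2*NIR - 1)**2 + 8*R, so it is never negative
        "MSAVI2": (2.0 * nir + 1.0
                   - math.sqrt((2.0 * nir + 1.0) ** 2 - 8.0 * (nir - red))) / 2.0,
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
    }


if __name__ == "__main__":
    # Hypothetical reflectances for a dense green canopy pixel
    for name, value in vegetation_indices(blue=0.05, green=0.1, red=0.1, nir=0.5).items():
        print(f"{name}: {value:.3f}")
```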

| Vegetation Index | Optimal Model R² | Optimal Model RMSE | Validation Model R² | Validation Model RMSE |
|---|---|---|---|---|
| NDVI | 0.823 | 0.646 | 0.784 | 0.659 |
| OSAVI | 0.799 | 0.669 | 0.772 | 0.682 |
| GNDVI | 0.792 | 0.697 | 0.743 | 0.718 |
| EVI2 | 0.724 | 0.774 | 0.690 | 0.791 |
| MSAVI2 | 0.741 | 0.728 | 0.734 | 0.742 |
| EVI | 0.877 | 0.609 | 0.795 | 0.621 |
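The table scores each index model with R² and RMSE. A minimal sketch of both metrics follows. It assumes R² is the coefficient of determination (1 − SS_res/SS_tot); some studies instead report the squared Pearson correlation, and this section does not say which was used. The function names are illustrative.

```python
import math


def _check(observed, predicted):
    if not observed or len(observed) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")


def rmse(observed, predicted):
    """Root-mean-square error, in the units of the observations."""
    _check(observed, predicted)
    sq = sum((p - o) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(sq / len(observed))


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    _check(observed, predicted)
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical observed vs. predicted LAI values
    obs, pred = [1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8]
    print(r_squared(obs, pred), rmse(obs, pred))
```

Unlike the squared correlation, this definition penalizes systematic bias, so it can fall below zero for a model that predicts worse than the observed mean.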

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Peng, X.; Han, W.; Ao, J.; Wang, Y. Assimilation of LAI Derived from UAV Multispectral Data into the SAFY Model to Estimate Maize Yield. Remote Sens. 2021, 13, 1094. https://doi.org/10.3390/rs13061094
