1. Introduction

A hyperspectral image (HSI) has high spectral and spatial resolution, which provides rich information about ground objects [1]. Hyperspectral sensors mounted on different space platforms, i.e., imaging spectrometers, capture images in the ultraviolet, visible, near-infrared, and mid-infrared regions of the electromagnetic spectrum, where tens to hundreds of contiguous, finely subdivided spectral bands simultaneously image the target area. The exploitation of HSI signatures is a powerful tool for observing the surface of the Earth. Therefore, a wide range of processing techniques has been developed to explore HSI data [2,3,4], and HSI classification is one of the most popular of them. HSI classification is widely applied in many fields, such as agriculture [5], forestry and environmental management [6], water and maritime resource management [7], and military and defense applications [8].

Compared to traditional HSI classification algorithms, convolutional neural networks (CNNs) can not only explore spectral information but also combine spatial information with spectral information [9]. Moreover, because parameter sharing mitigates the curse of dimensionality [10] and the local receptive field learns spatial information [11], CNNs show good performance in HSI band selection [12,13], feature extraction, and classification [14]. Many CNN models are used as HSI classifiers, including the spectral CNN [15,16], the spatial CNN [17,18], and the spectral–spatial CNN [19,20]. With the advance of remote sensing technology, better classifiers are being developed to further improve the classification performance and the complexity of CNNs. On the one hand, some new types of CNNs have been introduced for HSI classification, such as the dense CNN using shortcut connections between layers [21] and the deep residual network with skip connections [22]. On the other hand, some other techniques have been combined with CNNs, such as the attention mechanism [23], sparse representation [24], multi-scale fusion [25], and discriminant embedding [26].

Although the aforementioned CNN models have achieved remarkable progress in HSI classification tasks in recent years, two major problems should be considered when applying them to HSI classification. First, designing successful state-of-the-art CNN architectures requires substantial professional knowledge from human experts and consumes a significant amount of time in repeated debugging. For example, ResNet [27] and DesNet [21] were carefully handcrafted using manual domain knowledge. Second, a CNN must always be fine-tuned when it is applied to a different data source, and this manual optimization may take days or even weeks; it also imposes a heavy computational burden that is not affordable for most researchers and developers [28].

To overcome the problems of handcrafting CNN models, neural architecture search (NAS) [29] is an important method for automatically designing neural architectures. Since Zoph et al. [30] successfully discovered a neural network architecture that achieves performance comparable to handcrafted CNNs, there has been growing interest in using NAS to design robust and well-performing neural architectures from a predefined search space. Many different search strategies can be used to design a proper CNN architecture, including evolutionary algorithms (EAs), reinforcement learning (RL), and gradient-based methods. Recently, Chen et al. [31] proposed the automatic design of CNNs for HSI classification based on the gradient descent method, and the automatically designed CNNs achieved better performance than some state-of-the-art deep learning methods. However, a method based on gradient descent easily converges to a local optimum, and it cannot be used when there is a vanishing or exploding gradient problem. EAs, in contrast, are population-based, which affords them a very good global search ability. The NAS problem requires such a global search technique, and discovering neural architectures with an EA search strategy has long been a topic of interest. Junior et al. [32] found good chain CNN architectures on nine datasets with a particle swarm optimization (PSO) search strategy, but this approach can only search simple chain CNNs. Real et al. [33] proposed AmoebaNet-A and, using the CIFAR-10 dataset, found cell-based neural architectures comparable to some state-of-the-art ImageNet models, but the search consumed a significant amount of time because it did not use weight-sharing. An alternative architecture optimization method is neuroevolution, which is inspired by the evolution of natural brains. Stanley et al. [34] proposed a classic neuroevolutionary approach, NeuroEvolution of Augmenting Topologies (NEAT), which can modify weights and add nodes and connections in neural networks. HyperNEAT [35,36] is an improvement of NEAT that uses an indirect encoding method named compositional pattern-producing networks (CPPNs) [37], which generates the patterns of weights in neural networks and thus allows evolution toward bigger networks.

To automatically search for CNN architectures for HSI classification, we propose an NAS method named PSO-Net, which is based on PSO but consumes a significant amount of time to search for the optimal architecture. To accelerate optimal architecture generation, we further propose CPSO-Net, which adds weight-sharing parameter optimization to PSO-Net. Both are cell-based CNN architecture search methods driven by PSO and, unlike the gradient descent method, are capable of searching globally. The main contributions of this paper are summarized as follows:

- (1) Two methods based on PSO are explored to automatically design the CNN architecture for HSI classification.

- (2) A novel encoding strategy is devised that can be used to encode architectures into arrays with the information of the connections and basic operation types between the nodes in computation cells.

- (3) To improve the search efficiency, CPSO-Net maintains continuous SuperNet sharing parameters for all particles and optimizes by collecting the gradients of all individuals in the population.

- (4) PSO-Net and CPSO-Net are tested on four biased and unbiased hyperspectral datasets with limited training samples, showing comparable performance to the state-of-the-art CNN classification methods.

The remainder of this paper is structured as follows. Related work on neural architecture search and PSO is presented in Section 2. Section 3 introduces the proposed PSO-Net and CPSO-Net in detail. Section 4 summarizes and analyzes a series of experiments performed for HSI classification. Section 5 provides the main conclusions and perspectives of this work.

3. Proposed Method

In this section, we first propose a cell-based CNN architecture search method based on particle swarm optimization, named PSO-Net. To make the search stage more efficient and to reduce the time consumed, we then develop another novel continuous PSO approach for searching neural architectures, namely CPSO-Net.

Both proposed methods are built on the same PSO architecture search, which comprises five procedures for finding the optimal CNN architecture: construction of the search space, initialization of the architectures, weight optimization of the neural network constructed by the architectures, fitness evaluation of the individual particles, and particle update. CPSO-Net is an improvement on PSO-Net, and the two methods differ in how the parameters of the architectures are optimized. PSO-Net optimizes the weights of every particle individually, whereas CPSO-Net shares weights by letting each particle inherit them from the SuperNet.

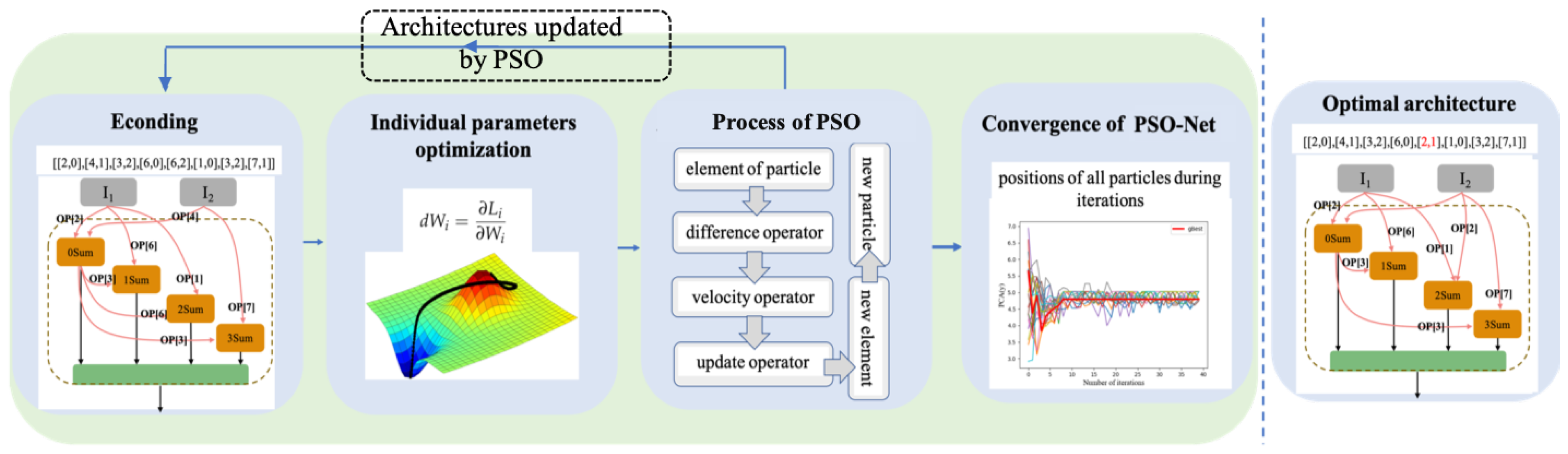

Figure 1 shows the framework of the stages of PSO-Net. In this framework, network architectures are first encoded into arrays. Then, the gradient descent method is used separately on each architecture to obtain its weight parameters, and PSO is used as the architecture search method to optimize the architectures according to the fitness of all particles. Finally, the optimal architecture is returned once the maximum number of iterations is reached.

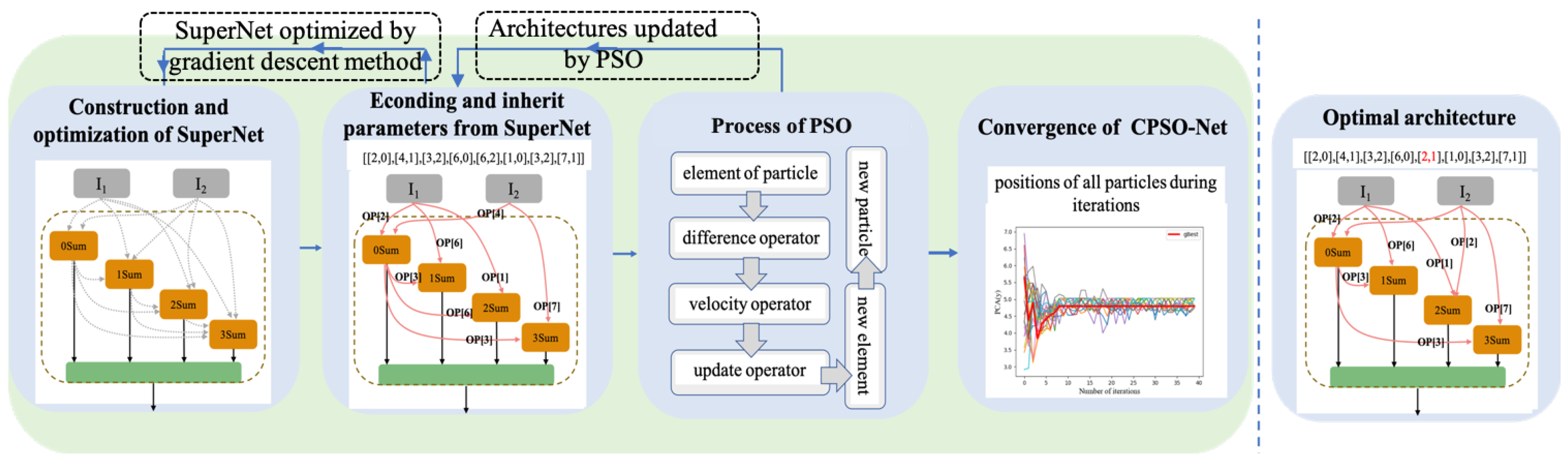

Figure 2 shows the framework of the stages of CPSO-Net, which is built on PSO-Net. The main difference in Figure 2 is the procedure for the parameter optimization of architectures. To accelerate the optimal architecture generation, a SuperNet is maintained to share the parameters among all particles and is optimized by collecting the gradients of all individuals in the population. Rather than training all the individuals as in PSO-Net, the SuperNet of CPSO-Net is optimized only once per iteration, which dramatically reduces the computational cost compared with learning each network separately.

All the operations of PSO-Net and CPSO-Net are detailed in the following subsections.

3.1. Construction of the Search Space

The search space contains two parts, namely all candidate basic operations and the connection nodes. On the one hand, there are three main basic operation types: convolution operations, pooling operations, and nonlinear operations. All candidate basic operations are placed into an operations list to prepare for the encoding strategy that follows. On the other hand, a cell-based search space is used to design CNN architectures in the proposed algorithm. Each neural network architecture is built by stacking k computation cells of two types, namely normal cells and one reduction cell. Each cell consists of an ordered sequence of two input nodes, n intermediate nodes, and one output node. The inputs of the two input nodes are the outputs of the previous two cells, and the output of the cell is the concatenation of the n intermediate nodes, each of which is calculated as the sum of two computations. Each computation applies one randomly chosen operation from the basic operations list to one randomly chosen preceding node. Each computation is defined as an element, which is composed of the basic operation and the computed node. Therefore, there are 2n elements in each cell with n intermediate nodes.
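The cell structure described above can be illustrated with a small sketch. It is not the authors' implementation: the operations in `OPS` are hypothetical scalar stand-ins for the real convolution, pooling, and skip layers, and the helper names `random_cell` and `run_cell` are introduced only for illustration. The sketch shows how 2n elements, each an (operation, preceding node) pair, define n intermediate nodes whose values form the cell output.

```python
import random

# Hypothetical scalar stand-ins for the candidate operations; a real cell
# would apply convolution, pooling, or skip layers to feature maps.
OPS = {
    "skip": lambda x: x,
    "double": lambda x: 2.0 * x,
    "negate": lambda x: -x,
}


def random_cell(n, rng):
    """Draw 2n elements; elements 2j and 2j+1 build intermediate node 2 + j."""
    names = sorted(OPS)
    cell = []
    for j in range(2 * n):
        node = 2 + j // 2              # index of the intermediate node being built
        op = rng.choice(names)         # one randomly chosen basic operation
        src = rng.randrange(node)      # one randomly chosen preceding node
        cell.append((op, src))
    return cell


def run_cell(cell, in0, in1):
    """Evaluate a cell: each intermediate node is the sum of two computations."""
    nodes = [in0, in1]                 # nodes 0 and 1 are the two input nodes
    for j in range(0, len(cell), 2):
        (op_a, src_a), (op_b, src_b) = cell[j], cell[j + 1]
        nodes.append(OPS[op_a](nodes[src_a]) + OPS[op_b](nodes[src_b]))
    return nodes[2:]                   # the cell output collects all intermediate nodes


example = [("skip", 0), ("double", 1), ("negate", 2), ("skip", 0)]
print(run_cell(example, 1.0, 2.0))     # two intermediate nodes
```

With feature maps instead of scalars, the returned list would be concatenated along the channel dimension to form the cell output.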

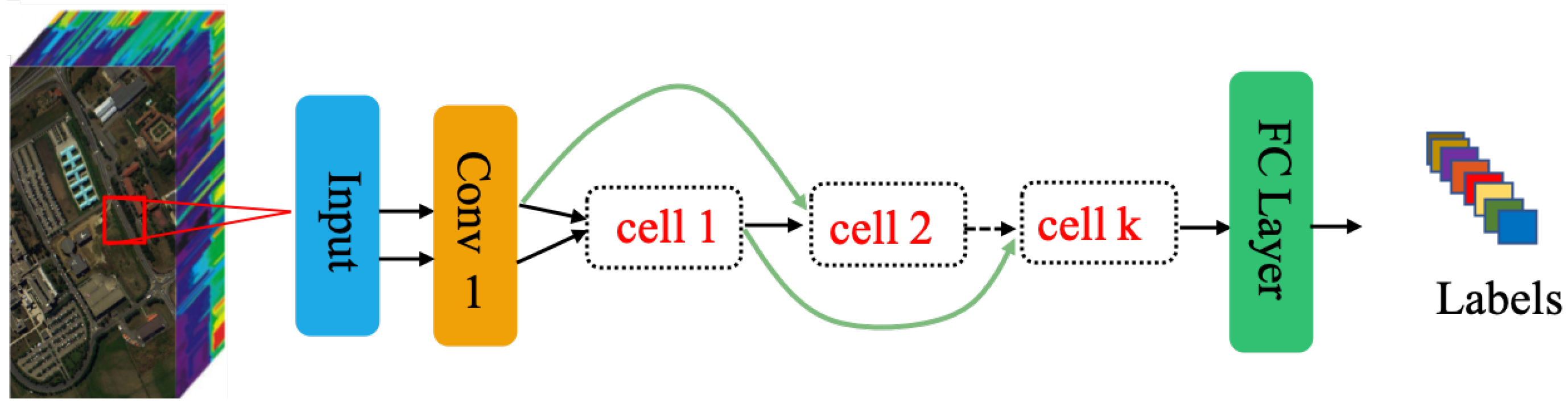

Figure 3 shows the general architecture built by stacking the computation cells automatically designed by PSO-Net and CPSO-Net.

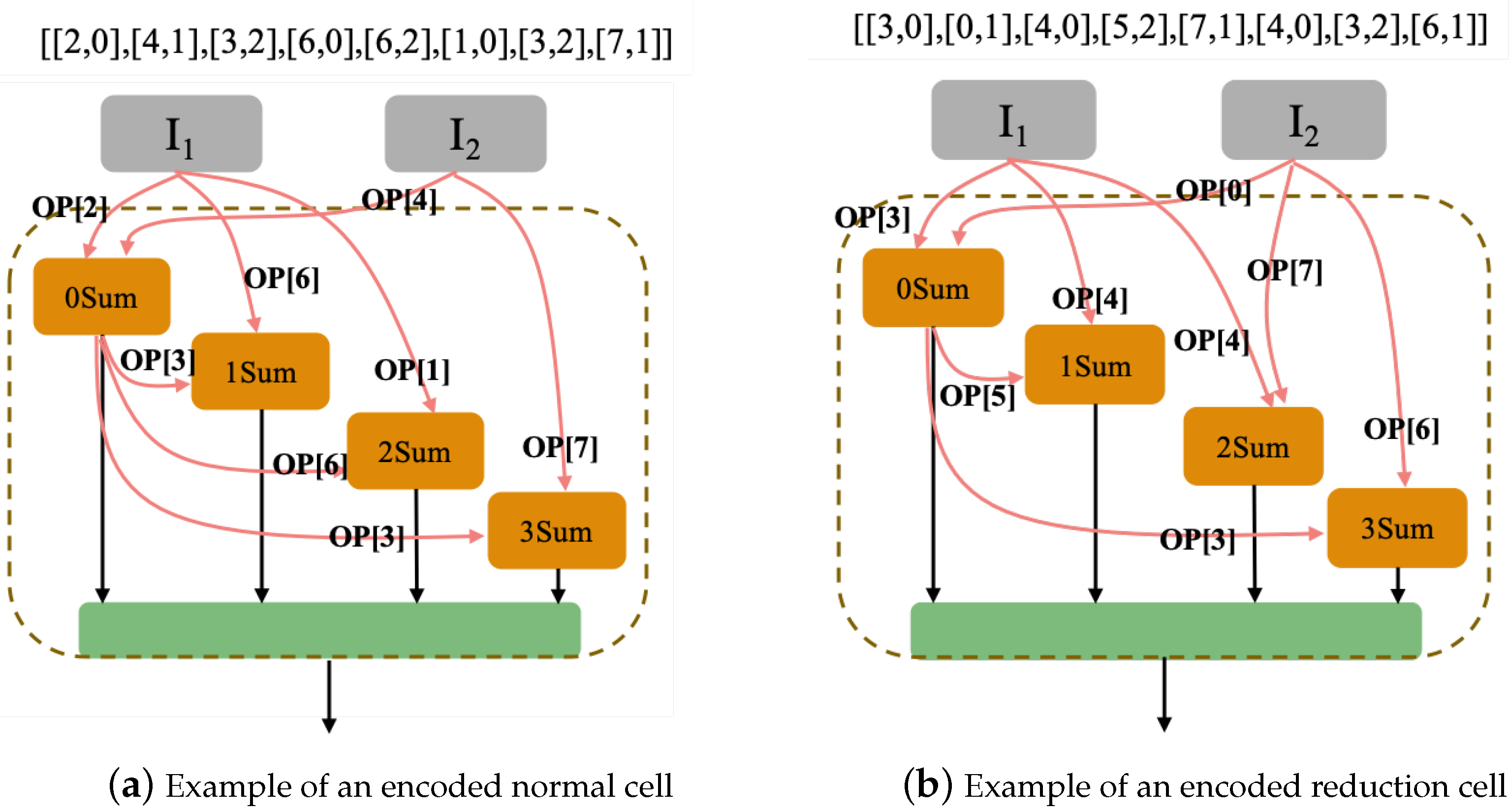

3.2. Initialization of the Swarm

Particle encoding is the core of the initialization of architectures. A novel encoding strategy was devised to encode architectures into arrays that hold all of the basic operations and connections between the nodes in computation cells. Within a cell, the first and second input nodes are numbered 0 and 1, and the n intermediate nodes are numbered successively after the two input nodes. First, an array with a shape of (2, 2n, 2) is built, which represents an architecture. The first dimension of the array represents the two types of computation cells, namely normal cells and reduction cells. The second dimension represents the 2n elements (defined in Section 3.1) in each cell with n intermediate nodes. The third dimension is composed of the index of the operation in the basic operations list and the index of the computed node. Next, the P particles of the swarm are initialized with this encoding strategy.

Figure 4 shows an example of an encoded architecture, including an encoded normal cell and a reduction cell with four intermediate nodes.
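A minimal sketch of this encoding is given below, using nested Python lists in place of an array. The operation names follow the abbreviations listed in Section 4.3, but their order in `OPS` (and hence each index) is an assumption, as are the helper names `random_particle`, `init_swarm`, and `decode`.

```python
import random

# Candidate operations as abbreviated in Section 4.3; the ordering is assumed.
OPS = ["none", "max_3x3", "avg_3x3", "skip",
       "sep_3x3", "sep_5x5", "dil_3x3", "dil_5x5"]


def random_particle(n, rng):
    """Return nested lists of shape (2, 2n, 2): [cell type][element][op index, node index]."""
    particle = []
    for _cell_type in range(2):              # 0: normal cell, 1: reduction cell
        cell = []
        for j in range(2 * n):
            target = 2 + j // 2              # intermediate node built by element j
            op_index = rng.randrange(len(OPS))
            node_index = rng.randrange(target)   # inputs are nodes 0 and 1
            cell.append([op_index, node_index])
        particle.append(cell)
    return particle


def init_swarm(num_particles, n, seed=0):
    """Initialize the swarm with randomly encoded architectures."""
    rng = random.Random(seed)
    return [random_particle(n, rng) for _ in range(num_particles)]


def decode(particle):
    """Turn index pairs back into readable (operation name, node index) pairs."""
    return [[(OPS[op], node) for op, node in cell] for cell in particle]


swarm = init_swarm(num_particles=3, n=4)
print(decode(swarm[0])[0])                   # elements of the normal cell
```

Restricting the node index of element j to values below 2 + j // 2 keeps every connection pointing to an earlier node, so each decoded cell is a valid directed acyclic graph.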

3.3. The Weight Optimization of the Neural Network Constructed by Architectures

Once the connections and basic operation types between the nodes in the computation cells are known, the weights of the neural network constructed from the architecture must be optimized.

3.3.1. Individual Parameter Optimization of PSO-Net

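The parameter optimization of PSO-Net involves training individual particles by the gradient descent method. Each network $\mathcal{N}_i$ we searched can be represented by the particle $P_i$ and a set of full-precision parameters $W_i$, where $i = 1, \dots, P$ and $P$ is the population size. The training dataset $X$ is denoted as the input data, and $\mathcal{N}_i(X)$ is the prediction of the $i$-th network $\mathcal{N}_i$. The loss can be expressed as $L_i = \mathcal{H}(\mathcal{N}_i(X), Y)$, where $\mathcal{H}$ is the criterion and $Y$ is the target. The parameter $W_i$ of $\mathcal{N}_i$ can be optimized by:

$$W_i^{*} = \arg\min_{W_i} \mathcal{H}\left(\mathcal{N}_i(X), Y\right)$$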

3.3.2. Weight-Sharing Parameter Optimization of CPSO-Net

To further accelerate optimal network generation, we built a SuperNet that includes all candidate operations and shares the parameters W among all particles. Each particle is regarded as a subnet and inherits its weight parameters from the fixed SuperNet, which can be optimized by the gradient descent method. Therefore, there is no need to train each particle from scratch, and the search time can be greatly reduced. After the parameters have converged, the architectures are alternately optimized by the PSO algorithm.

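Sampling from the SuperNet $\mathcal{N}$, each network $\mathcal{N}_i$ is a part of $\mathcal{N}$, and the parameters $W_i$ of $\mathcal{N}_i$ can be inherited from $W$. Therefore, $W_i$ can be represented as $W_i = W \odot P_i$, where $\odot$ is the mask operation that retains the parameters of the corresponding nodes of particle $P_i$. Therefore, the gradient of $W_i$ can also be calculated as:

$$dW_i = \frac{\partial L_i}{\partial W_i} = \frac{\partial L_i}{\partial W} \odot P_i$$

where $L_i$ is the loss of the $i$-th network. The SuperNet shares $W$ among all the different architectures, so the gradient of parameter $W$ should be calculated by collecting the gradients of all individuals in the population of size $P$:

$$dW = \frac{1}{P} \sum_{i=1}^{P} dW_i = \frac{1}{P} \sum_{i=1}^{P} \frac{\partial L_i}{\partial W} \odot P_i$$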

Inspired by the mini-batch samples idea of stochastic gradient descent for updating parameters, we used mini-batch architectures to accumulate the gradients of all individuals for updating the shared weight W.
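The idea of collecting masked gradients for the shared weights can be sketched on a toy problem. The quadratic loss, the binary masks, and the function names below are assumptions made only for illustration; in the actual method the gradients would come from backpropagation through each sampled subnet.

```python
def masked_gradient(shared_w, mask, target):
    """Gradient of the toy loss 0.5 * sum_k mask_k * (w_k - target_k)^2
    with respect to the shared weights; masked-out weights get zero gradient."""
    return [m * (w - t) for w, m, t in zip(shared_w, mask, target)]


def supernet_step(shared_w, masks, target, lr):
    """One update of the shared weights from the mean gradient of all particles."""
    total = [0.0] * len(shared_w)
    for mask in masks:                       # one mask per particle (subnet)
        grad = masked_gradient(shared_w, mask, target)
        total = [a + g for a, g in zip(total, grad)]
    mean = [g / len(masks) for g in total]   # average over the population
    return [w - lr * g for w, g in zip(shared_w, mean)]


# Two hypothetical particles: the first uses weight 0, the second uses weights 0 and 1.
weights = [1.0, 1.0, 1.0]
masks = [[1, 0, 0], [1, 1, 0]]
print(supernet_step(weights, masks, target=[0.0, 0.0, 0.0], lr=1.0))
```

Weights that no particle selects (the third weight here) receive no gradient and stay unchanged, while weights used by several particles are updated with their averaged gradient.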

3.4. Fitness Evaluation

Fitness evaluation estimates the performance of an architecture and is performed using the HSI classification accuracy of the optimized neural network on the validation set. Each particle is evaluated as a solution by the fitness evaluation function. The best solution a particle has found in the past is its personal best, $P_{best}$, and the current best solution in the whole swarm is the global best, $G_{best}$. From the fitness values of PSO-Net and CPSO-Net, we can find $P_{best}$ and $G_{best}$.
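The bookkeeping of each particle's best solution so far and of the best solution in the swarm can be sketched as follows. This assumes that fitness is a validation accuracy to be maximized; the function name `update_bests` and the example values are hypothetical.

```python
def update_bests(swarm, fitness, pbest, pbest_fit, gbest, gbest_fit):
    """Update each particle's best-so-far in place and return the swarm-wide best."""
    for i, (particle, fit) in enumerate(zip(swarm, fitness)):
        if fit > pbest_fit[i]:               # particle i improved on its own record
            pbest[i], pbest_fit[i] = particle, fit
        if fit > gbest_fit:                  # particle i is the best seen in the swarm
            gbest, gbest_fit = particle, fit
    return gbest, gbest_fit


# Hypothetical validation accuracies for three particles.
swarm = ["arch_a", "arch_b", "arch_c"]
pbest, pbest_fit = ["arch_x", "arch_y", "arch_z"], [0.50, 0.90, 0.10]
gbest, gbest_fit = update_bests(swarm, [0.60, 0.80, 0.95],
                                pbest, pbest_fit, "arch_y", 0.90)
print(pbest, gbest, gbest_fit)
```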

3.5. Particle Update

The difference and the velocity operator are devised based on PSO to update the particles. The particle P is updated element by element until all elements of the particle have been updated, so the particle update problem is transformed into the element update problem. Before being able to update an element, the difference between two elements with the same index in two particles needs to be measured, and the velocity of the element needs to be computed.

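The difference between two elements is calculated by comparing them, written here for an element $P_1$ and the element $P_2$ with the same index in another particle. If both elements are the same, the difference is “None”, which means no difference. If the two elements are different, the result of $P_1 - P_2$ is $P_1$.

The velocity of any given element of a particle is based on two differences, $(G_{best} - P)$ and $(P_{best} - P)$, where $G_{best}$ is the global best of the swarm, $P_{best}$ is the personal best of the particle, and the elements of $G_{best}$, $P_{best}$, and $P$ have the same index in a particle. Each element velocity is chosen from the two differences based on the decision factor $C_g$ and a number $r$ obtained at random from [0,1). If $r < C_g$, the algorithm selects from the difference $(G_{best} - P)$. Otherwise, the algorithm selects from the difference $(P_{best} - P)$.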

Each element is updated according to its element velocity. If its element velocity is “None”, the element remains the same, otherwise it should be displaced by its velocity. Particles are modified element by element according to the element velocity.

Figure 5 shows the update of an element of a particle.

In essence, a particle update is converted into a sequence of element updates within the particle. We assumed that the elements of the personal best $P_{best}$ and the global best $G_{best}$ are what allow $P_{best}$ and $G_{best}$ to achieve good fitness. Therefore, we compared $P$ to $P_{best}$ and $G_{best}$ element by element, and determined whether each element of $P$ needs to be replaced by the corresponding element of $P_{best}$ or $G_{best}$.

Figure 4 shows the update process of particles.
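The element-wise update can be sketched as below. The sketch makes two assumptions that should be checked against the original formulation: the difference of two unequal elements is taken to be the element of the better particle, and the global best is chosen when the random number falls below the decision factor. Particles are flattened to lists of (operation index, node index) tuples, and all function names are hypothetical.

```python
import random


def difference(best_elem, elem):
    """None when the elements match, otherwise the element of the best particle."""
    return None if best_elem == elem else best_elem


def element_velocity(elem, pbest_elem, gbest_elem, decision_factor, rng):
    """Pick the velocity from one of the two differences (assumed selection rule)."""
    r = rng.random()                         # uniform in [0, 1)
    if r < decision_factor:
        return difference(gbest_elem, elem)  # move toward the global best
    return difference(pbest_elem, elem)      # move toward the personal best


def update_particle(particle, pbest, gbest, decision_factor, rng):
    """Update a particle element by element according to each element velocity."""
    updated = []
    for elem, pb, gb in zip(particle, pbest, gbest):
        velocity = element_velocity(elem, pb, gb, decision_factor, rng)
        updated.append(elem if velocity is None else velocity)
    return updated


rng = random.Random(0)
particle = [(4, 0), (3, 1), (1, 2), (6, 0)]
pbest = [(4, 0), (5, 1), (1, 2), (2, 1)]
gbest = [(7, 1), (3, 1), (1, 2), (6, 2)]
print(update_particle(particle, pbest, gbest, decision_factor=0.5, rng=rng))
```

Elements on which the particle, its personal best, and the global best already agree (the third element here) can never change, which is how the swarm gradually settles on a common architecture.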

4. Experimental Results and Analysis

In this section, we explain the experimental settings of the proposed methods and the comparative algorithms, followed by the empirical results, to confirm the effectiveness of the proposed methods for automatically designing CNNs for HSI classification on four HSI datasets with biased and unbiased data. The experiments were run on an NVIDIA GTX1080 Ti GPU and an E5-2620 CPU with 32 GB of memory.

4.1. Datasets

In this section, we used four standard hyperspectral datasets to evaluate the methods we proposed. As shown in Table 1, the four datasets were Salinas Valley in California in the USA (Salinas), a mixed vegetation site over the Indian Pines test area in northwestern Indiana in the USA (Indian Pines), an urban site over the University of Pavia in Italy (Pavia), and the Kennedy Space Center (KSC) in Florida in the USA. The detailed training samples of each class are shown in Table 1.

The first dataset, Salinas, was collected by the 224-band Airborne Visible Infrared Imaging Spectrometer (AVIRIS) over Salinas Valley, California, with a high spatial resolution (3.7 m per pixel). The available dataset consists of 204 bands with 512 × 217 pixels after discarding the 20 water absorption bands. The ground reference map covers 16 land cover classes.

The second dataset, Indian Pines, was captured by the 220-band AVIRIS sensor over the Indian Pines test area. The available dataset consists of 200 bands with 145 × 145 pixels after removing the water absorption bands. The ground reference map covers 16 classes of interest.

The third dataset, University of Pavia, was collected by the 115-band ROSIS-3 sensor over the University of Pavia, with a high spatial resolution (1.3 m per pixel) that helps to avoid mixed pixels. The available dataset consists of 103 bands with 610 × 340 pixel vectors after removing the noisy bands. The ground reference map covers nine classes of interest.

The fourth dataset, KSC, was captured by the airborne AVIRIS sensor over KSC, Florida, at an altitude of approximately 20 km with a spatial resolution of 18 m. The available dataset consists of 176 bands with 512 × 614 pixels after removing the water absorption and noisy bands. The ground reference map covers 13 classes of interest.

4.2. Comparative Experiment

To evaluate the performance of the two proposed methods, several CNN classification methods based on spatial–spectral information, including handcrafted CNN models and an automatically designed CNN model, were employed for a comprehensive comparison with PSO-Net and CPSO-Net. We chose several handcrafted CNN models with reported results on hyperspectral datasets using spectral–spatial information. The CNN [51] is a simple CNN designed to tackle the problem of HSI spectral–spatial classification. The spectral–spatial residual network (SSRN) [22] and ResNet [27] are residual-based methods, which can achieve good classification performance. DesNet [21], which uses shortcut connections between layers, was also used for comparison. Moreover, an automatically designed CNN method, namely Auto-CNN, was included; it explores the automatic design of CNNs for HSI classification by gradient descent and achieved good performance. We compared the two proposed methods with the handcrafted and automatically designed CNN models in terms of classification accuracy and computational complexity. To further illustrate the search effectiveness of PSO-Net and CPSO-Net, we also compared them with their peer, the automatically designed method Auto-CNN, in terms of time complexity.

4.3. Experimental Settings

In this section, we explain the detailed parameter settings for our experiments. For the automatically designed algorithms, including Auto-CNN, PSO-Net, and CPSO-Net, the search space should be predefined. We set the number of computation cells k to 3 and the number of intermediate nodes n to 4, and the basic operation list includes the following candidate basic operations: None; 3 × 3 max pooling, abbreviated as max_3 × 3; 3 × 3 average pooling, abbreviated as avg_3 × 3; skip connection, abbreviated as skip; 3 × 3 separable convolution, abbreviated as sep_3 × 3; 5 × 5 separable convolution, abbreviated as sep_5 × 5; 3 × 3 dilated convolution, abbreviated as dil_3 × 3; 5 × 5 dilated convolution, abbreviated as dil_5 × 5. For HSI classification, the general architecture of automatically designed CNNs includes three parts. First, the hyperspectral data are passed through one 1 × 1 bottleneck convolution layer, which sets the number of HSI bands to 10 for the subsequent computation cells. Then, two normal cells (with padding and a stride of 1) and one reduction cell (with padding and a stride of 2) are stacked to complete the CNN. Finally, the final labels are obtained from the fully connected layer.

For all the experiments in this work, we split the labeled samples of the HSI datasets into three subsets by sampling without replacement, namely the training, validation, and test sets. To ensure that all classes are included in the training, validation, and test sets, we drew samples from every class in proportion to the share of that class in the whole dataset, and within each class the samples were selected at random with equal probability. Based on this rule, we chose 200 samples covering all classes as the training set and 600 samples as the validation set for each dataset. To demonstrate the stability of the two proposed methods, we conducted the experiments five times with random splits of the datasets. Details of the training samples of each class of the four datasets are shown in Table 1. All the remaining labeled samples served as the test set to evaluate the capability of the network. The training set was used to train the network, and the validation set was used to evaluate the performance of the architectures found and to search for the optimal architecture for all the automatic design algorithms.
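The proportional sampling rule can be sketched as follows. The rounding scheme (floor, at least one sample per class, then a one-by-one adjustment) is an assumption, since the text does not specify how fractional class counts are resolved; the function names are hypothetical.

```python
import random
from collections import defaultdict


def proportional_counts(class_sizes, total):
    """Allocate `total` samples across classes by class share, at least one per class."""
    if total < len(class_sizes):
        raise ValueError("need at least one sample per class")
    n_all = sum(class_sizes.values())
    counts = {c: max(1, int(total * s / n_all)) for c, s in class_sizes.items()}
    # Adjust one sample at a time, largest classes first, until the sum matches.
    order = sorted(class_sizes, key=class_sizes.get, reverse=True)
    i = 0
    while sum(counts.values()) != total:
        c = order[i % len(order)]
        step = 1 if sum(counts.values()) < total else -1
        if counts[c] + step >= 1:
            counts[c] += step
        i += 1
    return counts


def split_indices(labels, n_train, n_val, seed=0):
    """Split sample indices into disjoint train/validation/test lists."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    sizes = {c: len(idxs) for c, idxs in by_class.items()}
    train_n = proportional_counts(sizes, n_train)
    val_n = proportional_counts(sizes, n_val)
    train, val, test = [], [], []
    for c, idxs in by_class.items():
        rng.shuffle(idxs)                    # equal probability within the class
        a, b = train_n[c], train_n[c] + val_n[c]
        train += idxs[:a]
        val += idxs[a:b]
        test += idxs[b:]                     # all remaining samples form the test set
    return train, val, test


labels = [0] * 700 + [1] * 200 + [2] * 100   # hypothetical class distribution
train, val, test = split_indices(labels, n_train=20, n_val=60)
print(len(train), len(val), len(test))
```

Calling `split_indices` with five different seeds would give the five random splits used to assess stability.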

For biased datasets, we chose the 7 × 7 × B neighborhood of a pixel as input, where B is the number of HSI bands. For unbiased datasets, samples with 32 × 32 slides were not enough to divide into the training and validation datasets, so we chose 7 × 7 neighborhoods of a pixel, and none of the samples overlapped. For all the CNN models designed by hand, i.e., 3-D CNN, SSRN, ResNet, and DesNet, we set the number of training epochs to 100. The learning rate for the weights of all these models was 0.025. For 3-D Auto-CNN, we set the number of training epochs in both the architecture search and the test process to 100. The learning rates for the weights in the search and test processes were 0.025 and 0.05, respectively. For PSO-Net, we set the swarm size to 100, the number of iterations to 40, the decision factor to 0.5, the number of training epochs in both the architecture search and the test process to 100, and the learning rate for the weights in the architecture search and test process to 0.025. For CPSO-Net, only the number of training epochs in the architecture search differed from the above: inspired by the mini-batch architecture idea of stochastic gradient descent for updating parameters, a single epoch was enough to achieve good performance. For the test process, the experimental settings for the different methods can be found in Table 2.

4.4. Results Analysis

In this section, we analyze the classification results and compare our algorithm with other methods in terms of classification accuracy, parameters, and time complexity on biased hyperspectral datasets to illustrate the effectiveness of our algorithms. We also demonstrate the optimal architectures searched by our methods, and analyze the convergence to illustrate the feasibility of the methods we proposed. Finally, we conduct experiments on unbiased hyperspectral datasets to further show our algorithms’ advantages.

4.4.1. Classification Accuracy

Table 3, Table 4, Table 5 and Table 6 show the detailed classification results on the test datasets of the two methods we proposed and the comparative algorithms. As shown in Table 3, Table 4, Table 5 and Table 6, PSO-Net and CPSO-Net achieved a large improvement in classification accuracy over the handcrafted methods, including CNN, SSRN, ResNet, and DesNet. Therefore, we mainly compared our methods with Auto-CNN, which is also an automatically designed method. For the University of Pavia dataset, CPSO-Net improved the OA, AA, and Kappa of Auto-CNN by 1.34%, 0.64%, and 0.0185, respectively, and PSO-Net exhibited the best OA, AA, and Kappa, with improvements of 1.78%, 2.31%, and 0.0221 over Auto-CNN, respectively. For the Salinas dataset, PSO-Net and CPSO-Net performed almost the same, and CPSO-Net exhibited the best OA, AA, and Kappa, with improvements of 1.22%, 1.95%, and 0.0221 over Auto-CNN, respectively. For the Indian Pines dataset, both PSO-Net and CPSO-Net achieved higher classification results than the other algorithms. CPSO-Net exhibited the best AA and Kappa, with improvements of 1.88% and 0.0171 over Auto-CNN, while PSO-Net exhibited the best OA, with an improvement of 1.37% over Auto-CNN. For the KSC dataset, similar results to the other datasets were obtained. Overall, CPSO-Net dramatically accelerated the optimal network generation while achieving performance comparable to PSO-Net. For the Salinas, Indian Pines, and KSC datasets, CPSO-Net achieved even better classification performance than PSO-Net, with some improvement in terms of AA, OA, and Kappa. The accuracies of individual classes may differ significantly. For example, CPSO-Net achieved a particularly large improvement of 12.98% over Auto-CNN for the first class on Salinas. In addition, Table 7 shows the detailed average rankings of the classification accuracy on the four datasets obtained with the Friedman test [52] based on a minimized performance measure; the Friedman test is a statistical test for the homogeneity of multiple samples. From Table 7, we can see that the proposed approaches obtain almost the same ranking and rank higher than the other comparative algorithms in terms of OA, AA, and Kappa.
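For readers unfamiliar with Friedman average rankings, the following sketch shows how they can be computed from a table of per-dataset scores. The scores used here are made-up placeholders, not values from Table 7; tied scores share the mean of their ranks, and the statistic is the standard chi-square form without a tie correction.

```python
def rank_row(row, higher_is_better=True):
    """Rank the methods on one dataset (1 = best); ties share the mean rank."""
    order = sorted(range(len(row)), key=lambda m: row[m], reverse=higher_is_better)
    ranks = [0.0] * len(row)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and row[order[end + 1]] == row[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1         # mean of 1-based positions pos+1 .. end+1
        for q in range(pos, end + 1):
            ranks[order[q]] = shared
        pos = end + 1
    return ranks


def average_ranks(scores, higher_is_better=True):
    """scores[d][m] is the score of method m on dataset d; returns the mean rank per method."""
    rows = [rank_row(r, higher_is_better) for r in scores]
    return [sum(r[m] for r in rows) / len(rows) for m in range(len(rows[0]))]


def friedman_statistic(scores, higher_is_better=True):
    """Friedman chi-square statistic computed from the average ranks."""
    n, k = len(scores), len(scores[0])
    mean_ranks = average_ranks(scores, higher_is_better)
    return 12.0 * n / (k * (k + 1)) * (
        sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4.0)


# Placeholder accuracies: 2 datasets (rows) x 3 methods (columns).
scores = [[0.9, 0.8, 0.7],
          [0.6, 0.8, 0.7]]
print(average_ranks(scores), friedman_statistic(scores))
```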

To sum up, two conclusions can be drawn from the classification accuracy analysis above. On the one hand, the PSO-Net and CPSO-Net algorithms made significant improvements over the state-of-the-art CNN models designed by hand, including CNN, SSRN, ResNet, and DesNet. Additionally, the proposed approaches are able to find better architectures than Auto-CNN, which is optimized by gradient descent. On the other hand, the two proposed methods achieved almost the same classification performance, while CPSO-Net dramatically reduced the computation time.
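The three accuracy measures used in this comparison, overall accuracy (OA), average accuracy (AA), and the Kappa coefficient, can all be derived from a confusion matrix. The sketch below uses their standard definitions; the example matrix is made up and is not taken from the paper's tables.

```python
def accuracy_metrics(conf):
    """conf[i][j] is the number of class-i test samples predicted as class j."""
    k = len(conf)
    total = sum(sum(row) for row in conf)
    row_sums = [sum(conf[i]) for i in range(k)]
    col_sums = [sum(conf[i][j] for i in range(k)) for j in range(k)]
    correct = sum(conf[i][i] for i in range(k))

    oa = correct / total                                    # overall accuracy
    aa = sum(conf[i][i] / row_sums[i] for i in range(k)) / k   # mean per-class accuracy
    # Agreement expected by chance, from the row and column marginals.
    pe = sum(row_sums[i] * col_sums[i] for i in range(k)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Made-up two-class confusion matrix.
oa, aa, kappa = accuracy_metrics([[8, 2],
                                  [1, 9]])
print(round(oa, 4), round(aa, 4), round(kappa, 4))
```

OA weights every test sample equally, AA weights every class equally, and Kappa discounts the agreement expected by chance, which is why the three can rank methods differently on imbalanced datasets.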

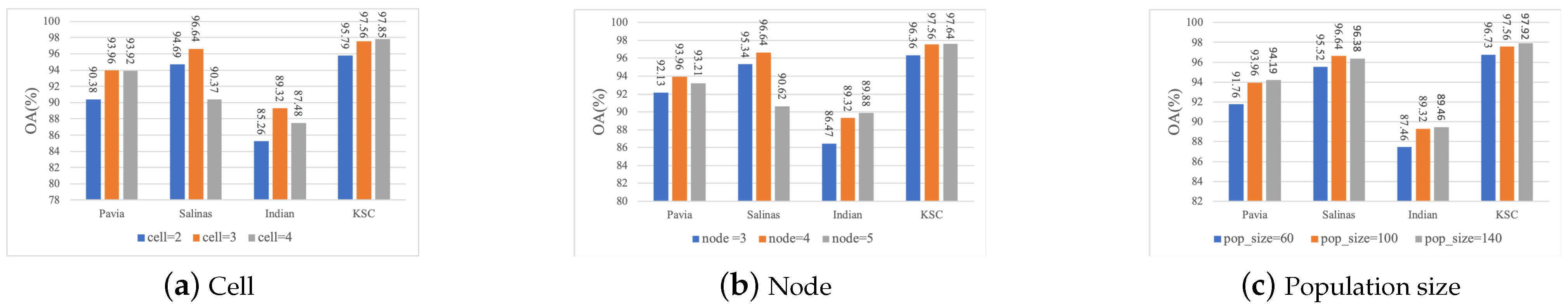

In addition, taking CPSO-Net as an example, we performed a hyperparameter sensitivity analysis, additionally testing two and four cells, three and five intermediate nodes, and population sizes of 60 and 140. From Figure 6a,b, it can be seen that a smaller number of cells or intermediate nodes led to a lower OA, but a larger number of cells or intermediate nodes did not necessarily lead to better performance. The main reason is that models with simple architectures may not be able to extract deep features, while complex models may overfit with limited training samples. From Figure 6c, we can see that a small population may cause the architectures to fall into a local optimum, whereas a large population does not greatly improve the classification performance despite requiring much more time.

4.4.2. Complexity Analysis

To analyze the complexity of the proposed methods, the number of trainable parameters of both the human-designed and the automatically designed CNNs is shown in the bottom row of Table 3, Table 4, Table 5 and Table 6, and the time consumed compared to the peer automatically designed method is shown in Figure 7. From Table 3, Table 4, Table 5 and Table 6, it can be seen that the models searched by PSO-Net and CPSO-Net achieved better results than the state-of-the-art CNNs designed by human experts while having significantly fewer parameters, which means less time and computational complexity to train the CNN models. There are two main reasons for these results. First, a CNN model with a high number of trainable parameters may overfit with limited training samples. Second, automatically designed methods can search for CNNs that are better suited to specific HSI datasets. For the automatically designed methods Auto-CNN, PSO-Net, and CPSO-Net, even though they had the same search space, CPSO-Net and PSO-Net performed better than Auto-CNN. However, there was no consistent pattern in the number of parameters of the models searched by the three automatically designed methods.

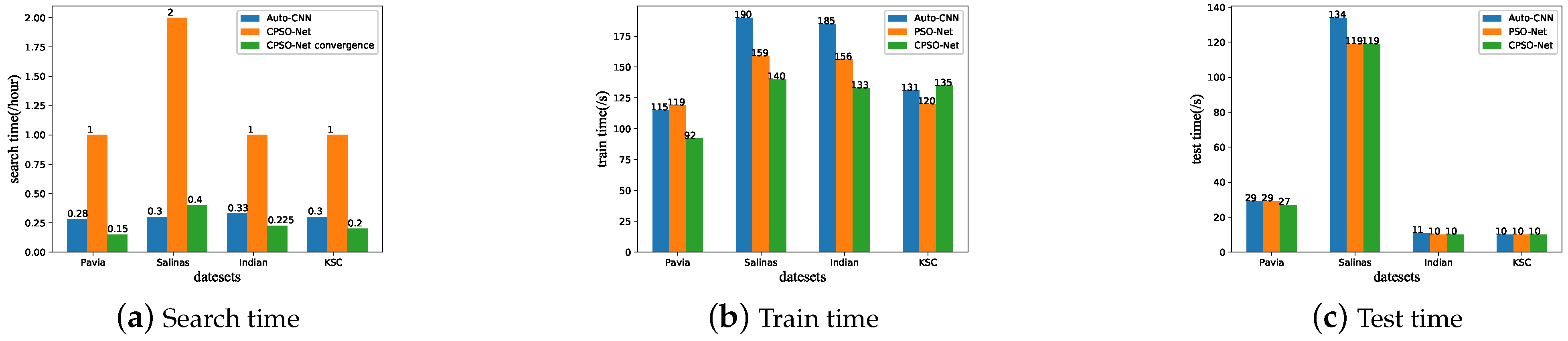

To further compare the search effectiveness of these automatically designed methods, Figure 7 shows the time consumed in the different stages of the peer automatically designed CNN methods on the four biased HSI datasets. PSO-Net consumed approximately 15 h to converge, which is far longer than the other two automatically designed methods. Therefore, Figure 7a only shows the search time of Auto-CNN, the total search time of CPSO-Net, and the time CPSO-Net needed to converge. From Table 3, Table 4, Table 5 and Table 6 and Figure 7a, we can see that CPSO-Net and PSO-Net both achieved a large improvement in performance over Auto-CNN, and that CPSO-Net dramatically reduced the search time compared to PSO-Net by sharing the weight parameters of the SuperNet. Running all iterations of CPSO-Net took more time than Auto-CNN, but CPSO-Net converged at six, eight, nine, and eight iterations (the detailed convergence analysis can be seen in Section 4.4.3) on Pavia, Salinas, Indian Pines, and KSC, respectively. CPSO-Net therefore consumed only approximately 15 min to converge, which is close to, and in some cases less than, the time of Auto-CNN. From Figure 7b, we can see that the training time of the CNNs searched by CPSO-Net was less than that of PSO-Net and Auto-CNN on the Pavia, Salinas, and Indian Pines datasets. From Figure 7c, the three automatically designed methods, i.e., Auto-CNN, PSO-Net, and CPSO-Net, consumed almost the same time to test all the data with the CNNs searched.

To sum up, from Figure 7, we can see that PSO-Net consumed a significant amount of time to converge, while CPSO-Net dramatically accelerated the search. The time CPSO-Net consumed to converge was almost the same as, and in some cases less than, that of Auto-CNN.

4.4.3. Convergence Analysis

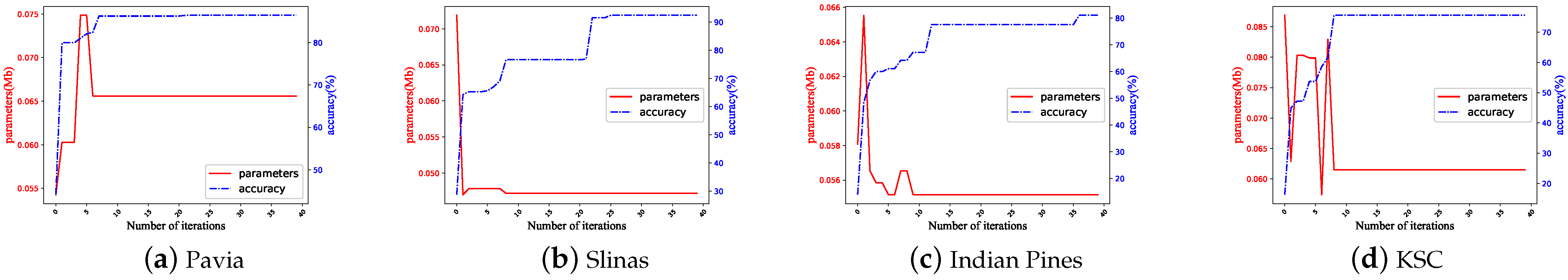

To dramatically accelerate optimal network generation, CPSO-Net extends PSO-Net by sharing the weights of the SuperNet among all particles, and it achieved performance comparable to PSO-Net. Therefore, we mainly analyze the convergence of CPSO-Net in this section. The convergence of architectures differs from that of other kinds of solutions, and it is not enough to consider only the fitness of the particles. The fitness was determined by the HSI classification accuracy of the optimized neural network, which depends on both the architecture and the weight parameters. However, the number of parameters of an architecture and the position of its particle are related only to the architecture. Therefore, the architecture convergence analysis of CPSO-Net took the number of parameters of the architectures and the positions of all the particles as important criteria.

The number of parameters of an architecture is closely related to the operations it contains. When the operations in an architecture change, the number of parameters changes accordingly, so an architecture is considered to have converged when its number of parameters remains the same over the generations.

Figure 8 shows the accuracy and number of parameters of the global best particle $G_{best}$ during the iterations on the four datasets by CPSO-Net. From the number of parameters of $G_{best}$, we can see that the architectures converged at six, eight, nine, and eight iterations on the Pavia, Salinas, Indian Pines, and KSC datasets, respectively. The accuracy of $G_{best}$ on the validation dataset still increased after the convergence of the architectures. The main reason is that the parameters that the architectures inherit from the SuperNet continued to be optimized, since the SuperNet was trained until the maximum number of iterations was reached.

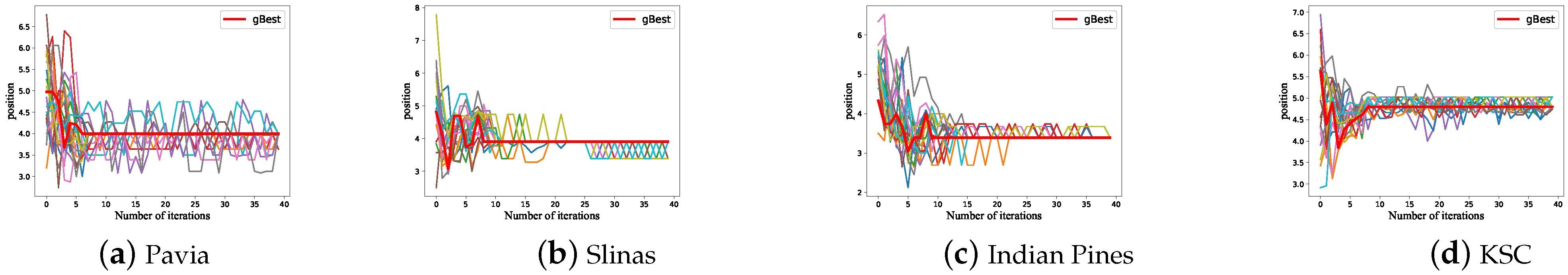

We further visualized the positions of 20 randomly selected particles and of the global best particle during the iterations of CPSO-Net on the four datasets in Figure 9. The position of each particle was projected from the array encoding its architecture into one-dimensional space using principal component analysis (PCA). As we can see, the particles were spread globally at initialization, which favors global exploration, and gradually moved toward the best particle position found thus far as the iterations proceeded. The position of the global best particle converged at the same iteration as its number of parameters, as shown in Figure 8.
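The one-dimensional projection described above can be sketched as follows. This is a minimal illustration that assumes each particle position is a fixed-length numeric array. The function name `project_to_1d`, the array sizes, and the random encodings are hypothetical, and the PCA is computed through a singular value decomposition rather than with whichever tool was used for Figure 9.

```python
import numpy as np


def project_to_1d(positions):
    """Project particle encodings of shape (n_particles, n_dims) onto the first principal component."""
    X = np.asarray(positions, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[0]


# Hypothetical swarm: 20 particles, each encoded as 12 integer operation indices.
rng = np.random.default_rng(0)
positions = rng.integers(0, 5, size=(20, 12))
coords = project_to_1d(positions)
print(coords.shape)  # prints (20,)
```

To compare positions across iterations on a single axis, one option is to fit the projection once on the positions from all iterations stacked together. The sign of the resulting coordinate is arbitrary.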

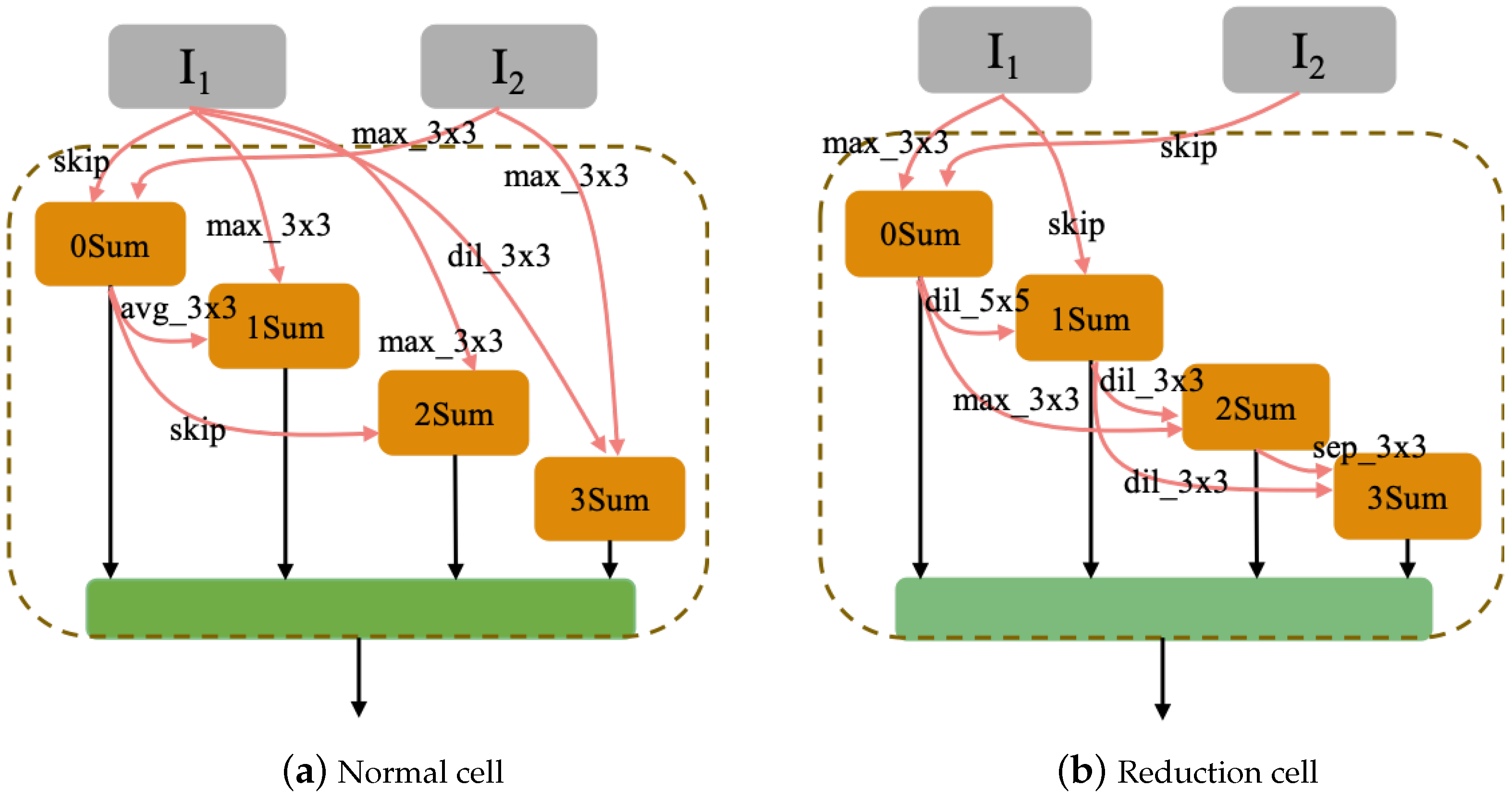

4.4.4. Optimal Architecture

Finding the optimal architecture is the main purpose of the proposed methods, and the optimal architecture is obtained at the end of the search. After searching, the optimal architecture can be used to classify all the data. Herein, we took PSO-Net on the biased University of Pavia dataset and CPSO-Net on the biased Salinas dataset as examples. The optimal CNN architectures found by the proposed PSO-Net and CPSO-Net are shown in

Figure 10 and

Figure 11. Compared to handcrafted CNNs, PSO-Net and CPSO-Net designed the optimal architectures in a predefined search space without requiring the professional knowledge of human experts or significant time spent on repeated debugging. The search space was constructed by incorporating typical properties of successful hand-designed architectures. Therefore, the optimal architectures found include some typical properties of handcrafted CNNs. For example, inspired by ResNet, the optimal architectures in

Figure 10 and

Figure 11 introduced skip connections.

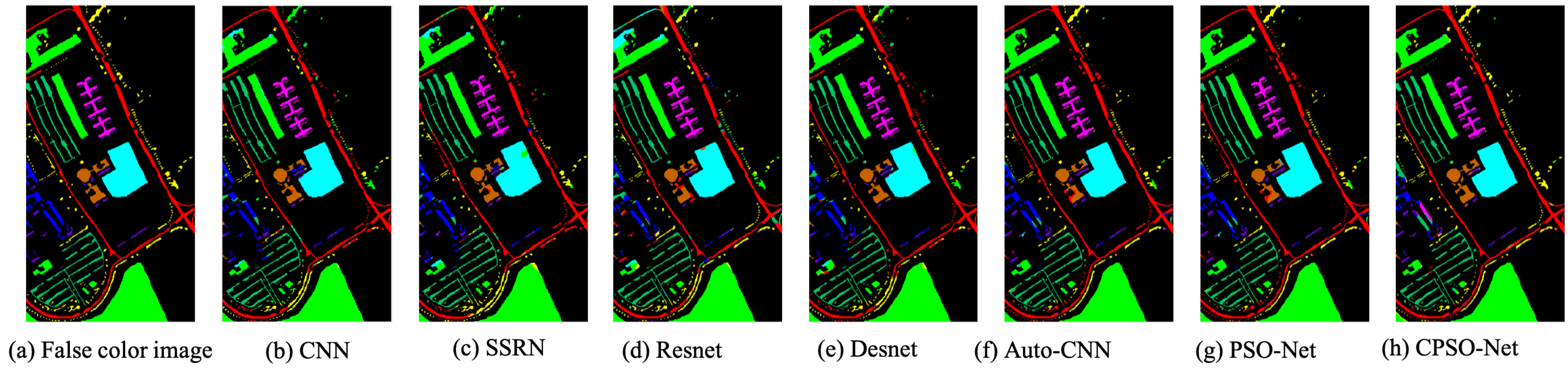

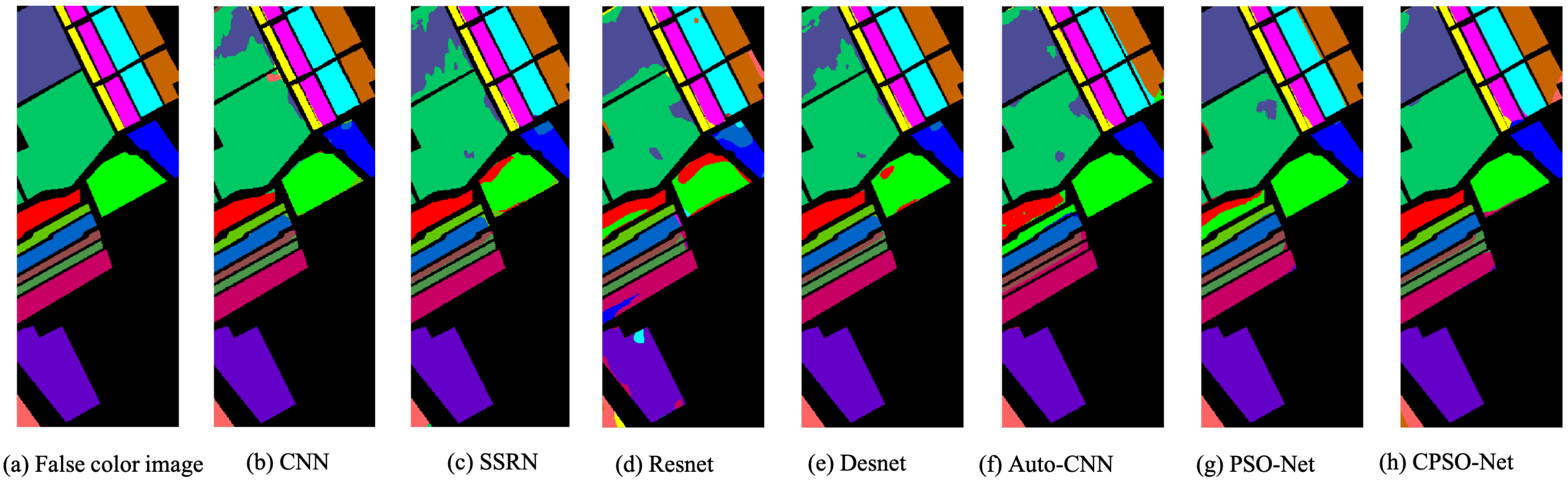

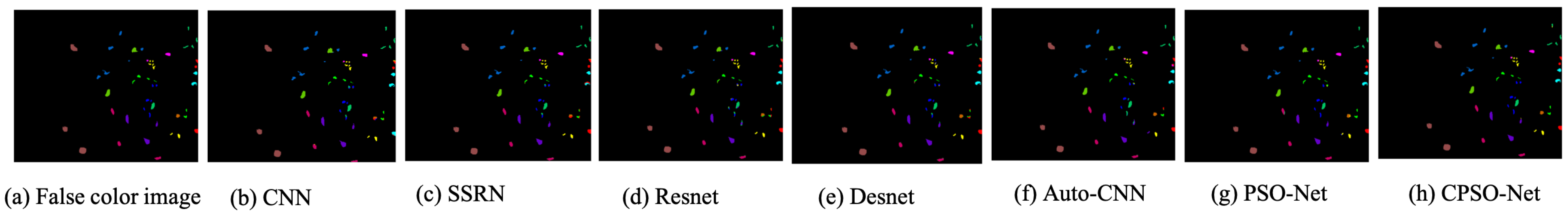

4.4.5. Classification Maps

To clearly represent the classification results, we plotted the whole-image classification maps of all the models. The classification maps obtained by the different models on the four biased datasets are shown in

Figure 12,

Figure 13,

Figure 14 and

Figure 15. We assessed the classification results visually by the amount of noisy scatter in the maps. From the results, we can see that CPSO-Net produced less scatter in the class with the largest area than the other models, which indicates that CPSO-Net classified this class more accurately. The maps also show how the choice of classification method affected the results. The automatically designed CNNs had less noise than the handcrafted CNN models, which demonstrates the feasibility of designing CNN models automatically. Moreover, the CNNs found by the proposed methods had less scatter than those found by the automatic design method Auto-CNN for HSI classification, which further demonstrates the effectiveness of PSO-Net and CPSO-Net.

4.5. Unbiased HSI Classification

Biased datasets may suffer from pixel overlap and training-test information leakage, which lead to over-optimistic results for spectral-spatial methods. Therefore, to further demonstrate the effectiveness of PSO-Net and CPSO-Net, we conducted additional experiments on unbiased hyperspectral datasets and compared the two methods with CNN, SSRN, ResNet, DesNet, and Auto-CNN on the four HSI datasets of Pavia, Salinas, Indian Pines, and KSC. Several methods split training and test datasets without pixel overlap or training-test information leakage, such as patch-based [

53] and set-to-sets distance based [

54] methods.

For the unbiased datasets, there were not enough 32 × 32 samples to divide into training and test datasets, so we used the 7 × 7 neighborhood of each pixel according to the patch-based [

53] method, and none of the samples overlapped. All other experimental settings were the same as those for the aforementioned biased datasets.
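To make the idea of non-overlapping samples concrete, the following is a minimal sketch and not the exact procedure of the cited patch-based method. It assumes the image is tiled into disjoint 7 × 7 windows, that each window is labeled by its center pixel, and that label 0 marks unlabeled background. The function names, the 50% training fraction, and the array sizes are hypothetical.

```python
import numpy as np


def non_overlapping_patches(cube, labels, size=7):
    """Tile an HSI cube of shape (H, W, B) into disjoint size x size patches labeled by their center pixel."""
    h, w, bands = cube.shape
    half = size // 2
    patches, targets = [], []
    # Stepping by `size` guarantees that no two patches share a pixel.
    for top in range(0, h - size + 1, size):
        for left in range(0, w - size + 1, size):
            label = labels[top + half, left + half]
            if label == 0:  # assumption: 0 marks unlabeled background
                continue
            patches.append(cube[top:top + size, left:left + size, :])
            targets.append(label)
    if not patches:
        return np.empty((0, size, size, bands)), np.array([], dtype=labels.dtype)
    return np.stack(patches), np.array(targets)


def split_patches(patches, targets, train_fraction=0.5, seed=0):
    """Randomly assign whole patches to the training or the test set."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(patches))
    n_train = int(round(train_fraction * len(patches)))
    train, test = order[:n_train], order[n_train:]
    return (patches[train], targets[train]), (patches[test], targets[test])


# Hypothetical 21 x 28 image with 10 bands in which every pixel is labeled.
cube = np.random.default_rng(1).random((21, 28, 10))
labels = np.ones((21, 28), dtype=int)
patches, targets = non_overlapping_patches(cube, labels)
(train_x, train_y), (test_x, test_y) = split_patches(patches, targets)
print(patches.shape, len(train_x), len(test_x))  # prints (12, 7, 7, 10) 6 6
```

Because whole patches are assigned to one side of the split and the patches are disjoint, no pixel appears in both the training and the test set.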

From

Table 8,

Table 9,

Table 10 and

Table 11, we can see that PSO-Net and CPSO-Net performed much better than the other models, and the improvements were significant. In particular, CPSO-Net performed better and consumed much less time than PSO-Net. However, the accuracies of all the spatial-based HSI classification methods on the unbiased datasets were lower than those on the biased datasets.

5. Conclusions

In this paper, we proposed two novel evolutionary search methods, PSO-Net and CPSO-Net, which use particle swarm optimization (PSO) as the search strategy to find the optimal architecture. For PSO-Net, the gradient descent method was used to obtain the weight parameters of each architecture separately, and PSO was used to optimize the architectures and search for the optimal architecture until the maximum number of iterations was reached. To accelerate optimal network generation, CPSO-Net improved the way PSO-Net optimizes the parameters of the architectures. For CPSO-Net, we maintained a SuperNet with all candidate operations and shared the weight parameters among all individuals. There was no need to train each particle from beginning to end, so the search time was greatly reduced. Our results showed that PSO-Net and CPSO-Net can achieve better classification accuracies than the state-of-the-art algorithms for the automatic design of CNNs for HSI classification, and CPSO-Net can obtain almost the same results as PSO-Net in much less time.

In future work, we will consider multiple complementary objectives, such as the number of parameters and the classification accuracy, to obtain the Pareto-optimal front. To further demonstrate the effectiveness of the proposed methods, we will also test them on some common image classification datasets.