Estimating Canopy Density Parameters Time-Series for Winter Wheat Using UAS Mounted LiDAR

Abstract

1. Introduction

2. Materials and Methods

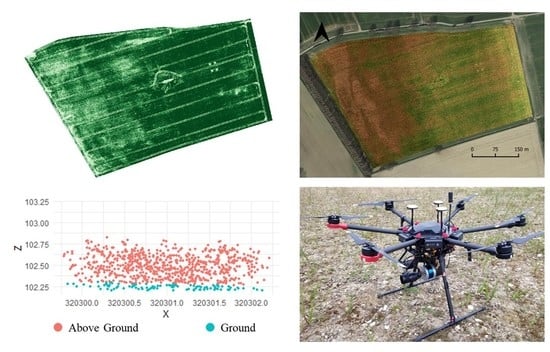

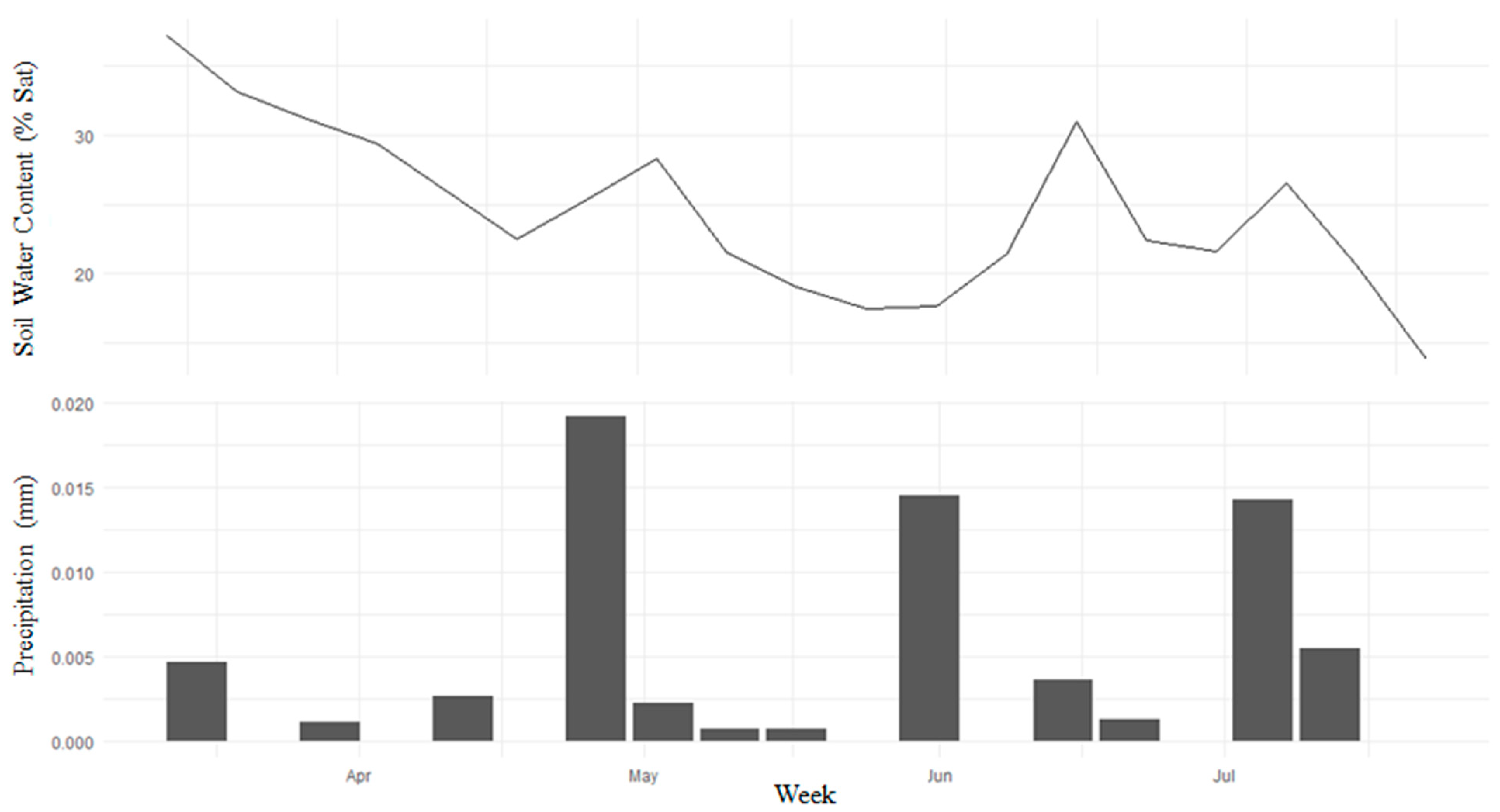

2.1. Study Area

2.2. Data Acquisition

2.2.1. UAS and Sensors

2.2.2. Data Acquisition and Initial Processing

2.2.3. Ground Sampling

2.3. LAI, GAI, BAI Estimation

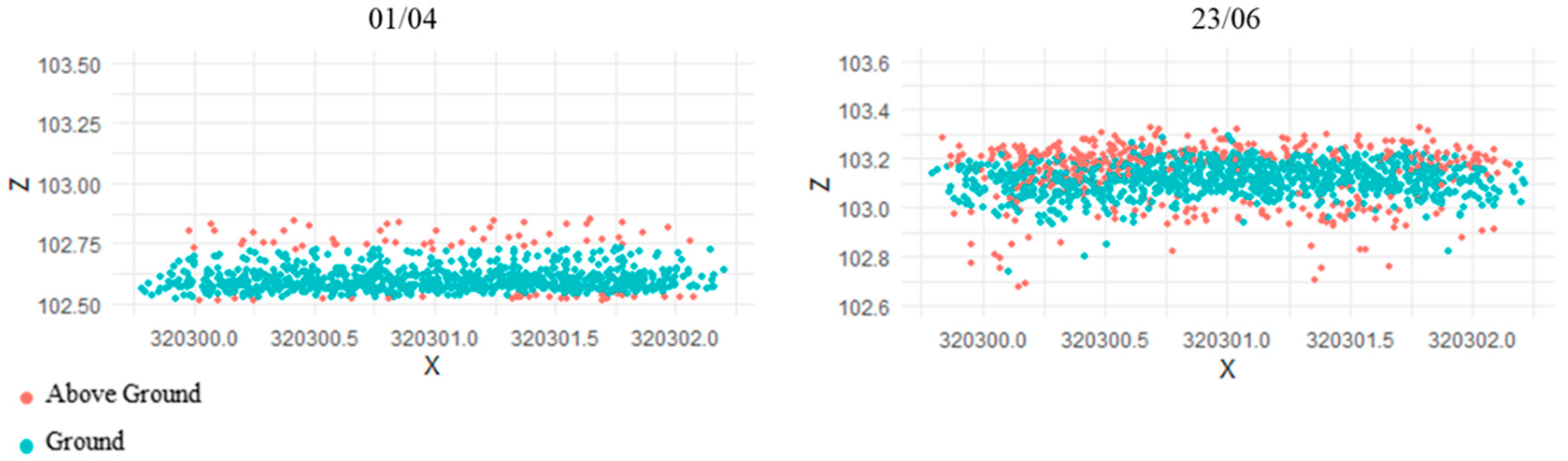

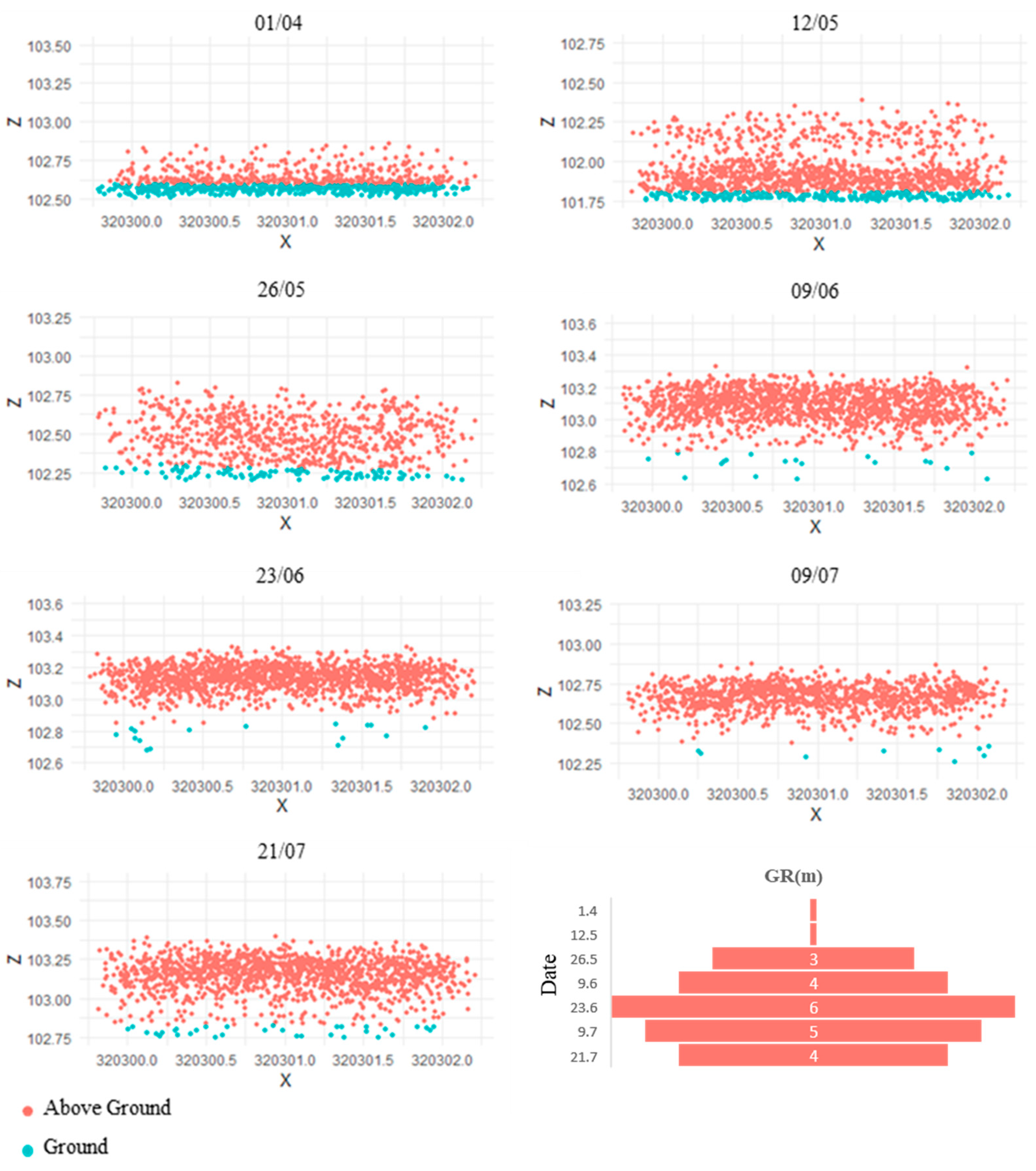

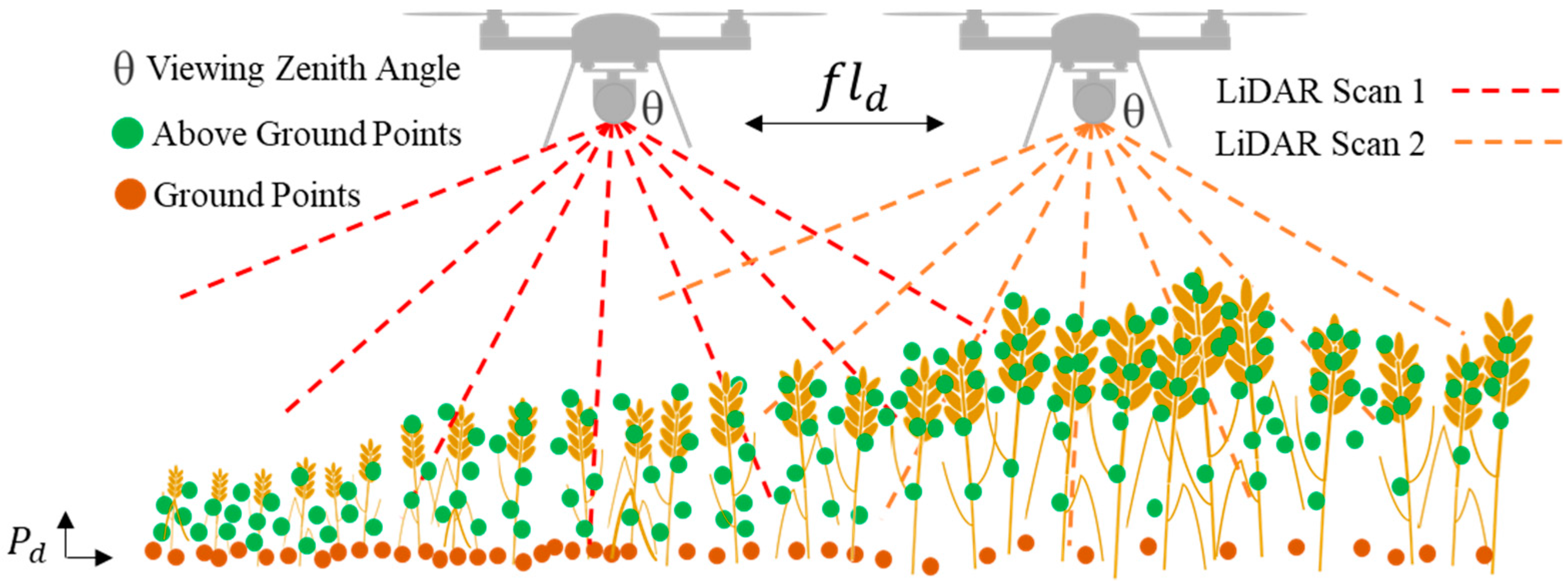

2.3.1. Deriving PAILiDAR

2.3.2. Deriving GAImultispectral

3. Results

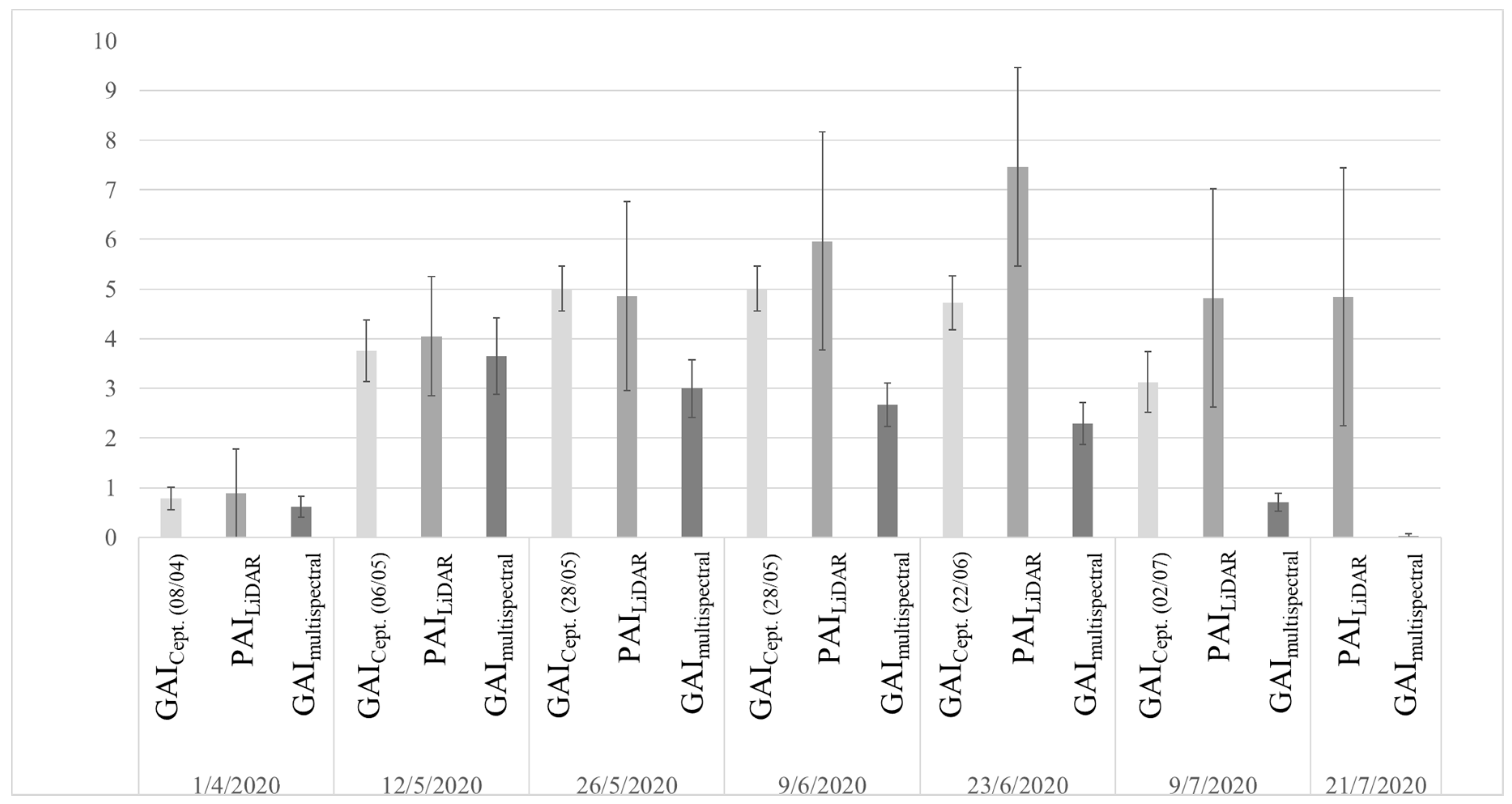

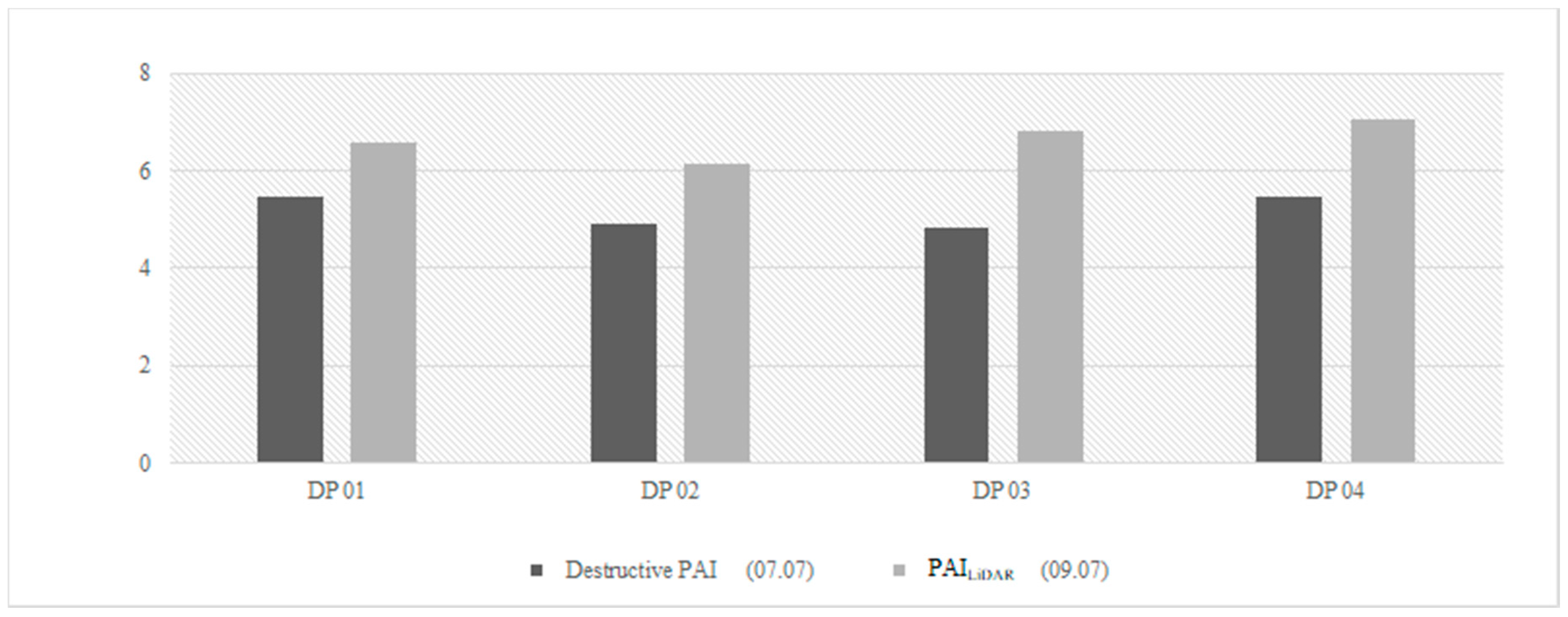

3.1. Comparison against Ground Measurements

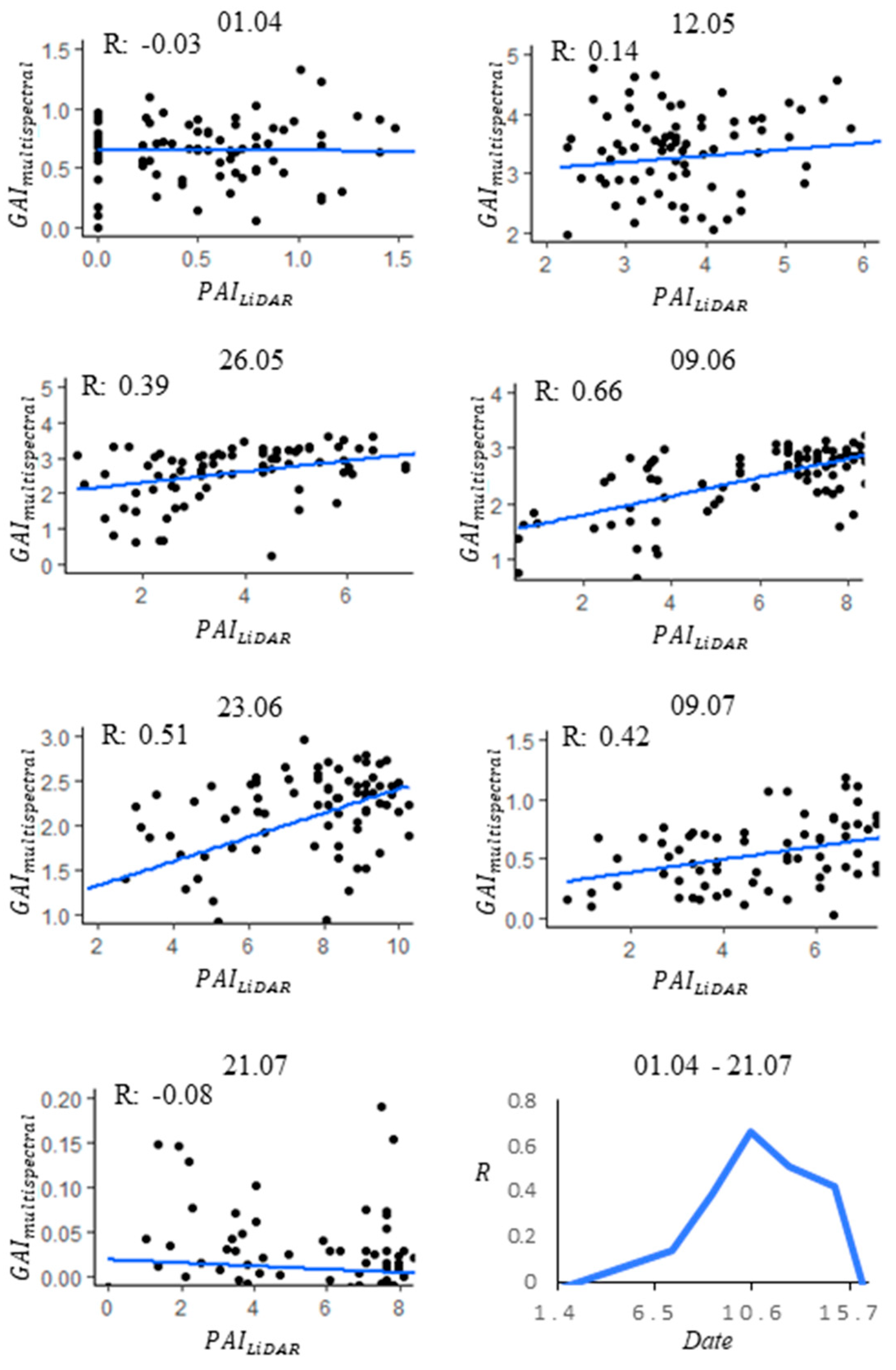

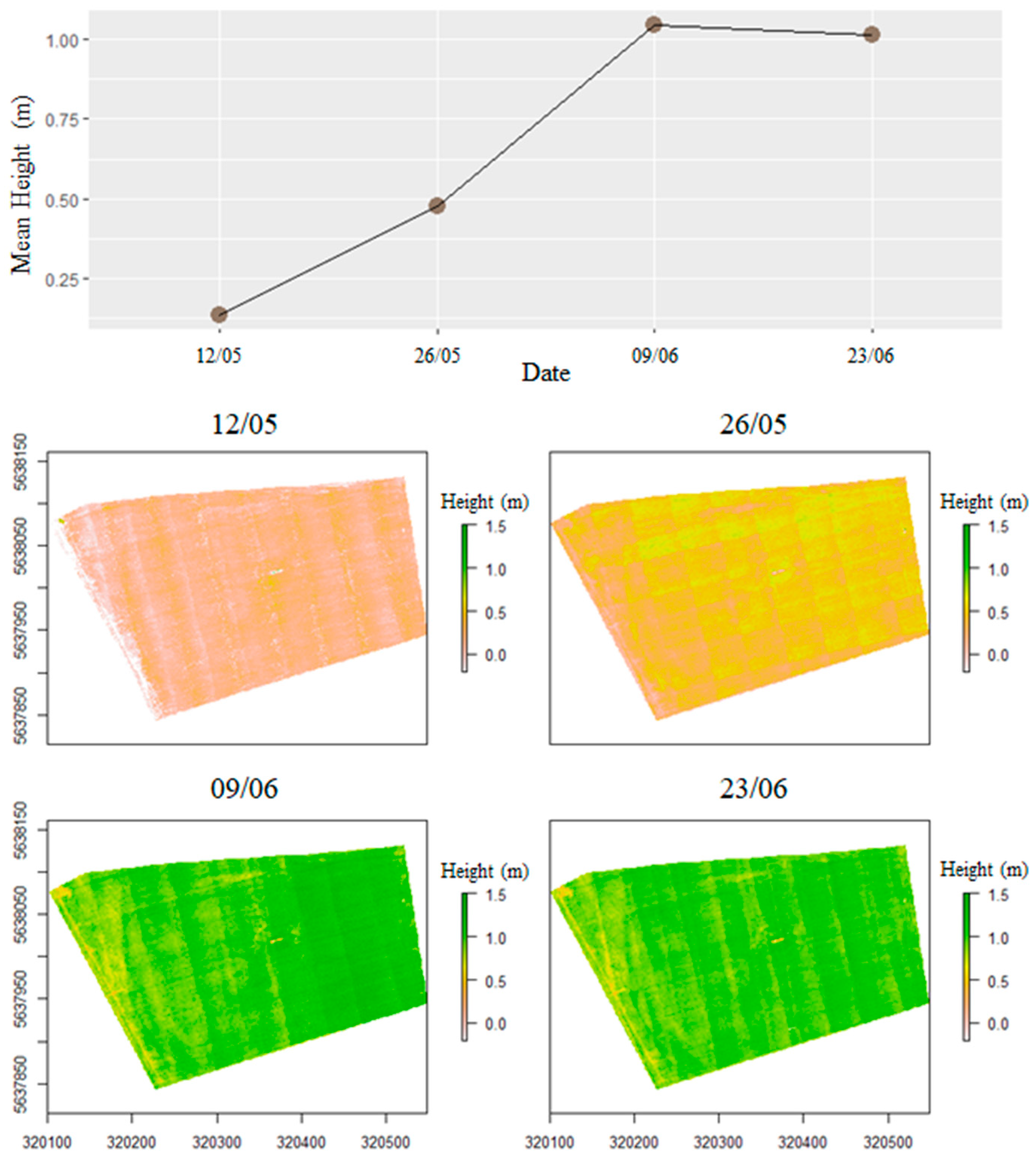

3.2. Spatial Variation in Values

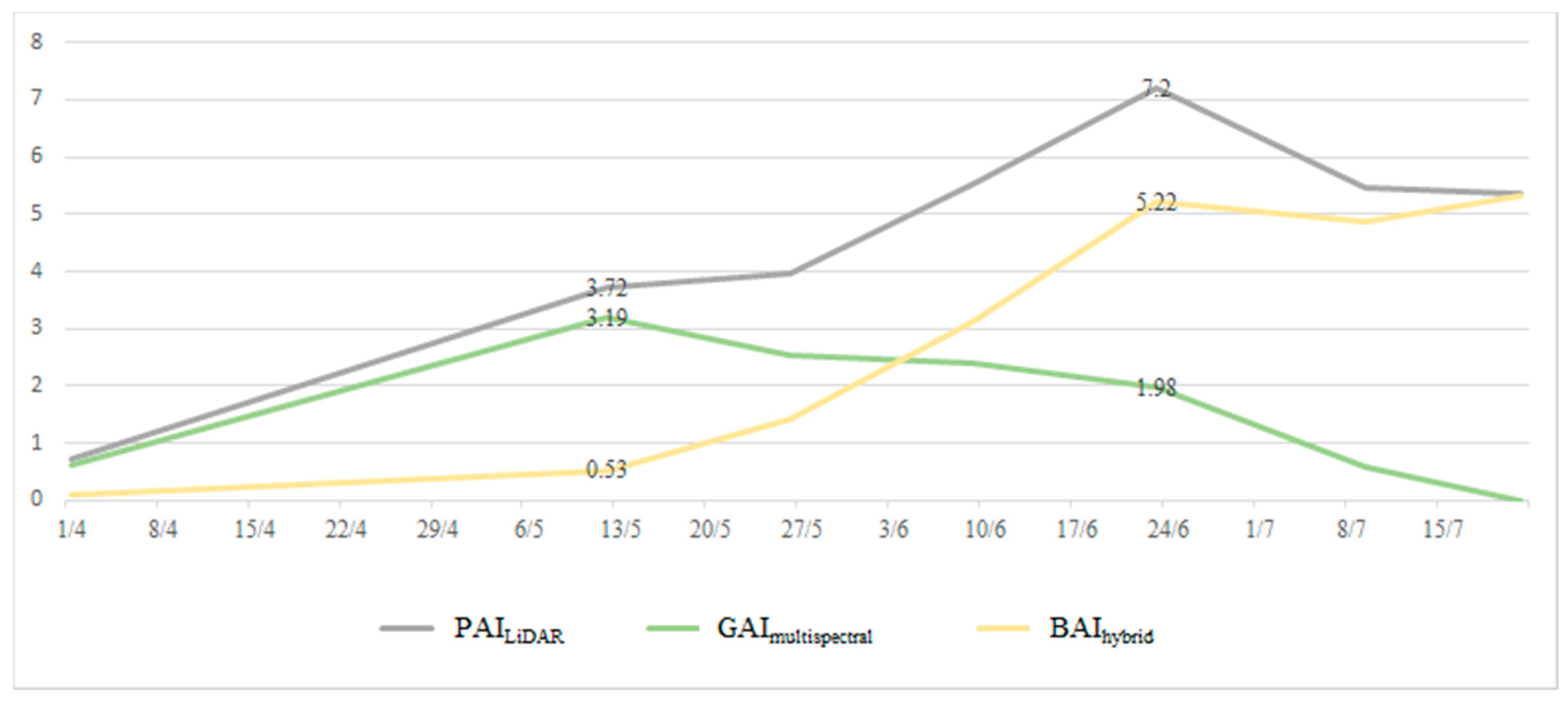

3.3. PAI, GAI, and BAI

4. Discussion and Future Directions for Improvement

4.1. Time-Series Trend

4.2. PAILiDAR Spatial Variation

4.3. Impacts of the Extinction Coefficient k(θ)

4.4. Future Insights

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Date (day/month) | GAImultispectral k(θ) | PAILiDAR k(θ) |
|---|---|---|
| 1/4 | 0.47 | 0.37 |
| 12/5 | 0.34 | 0.37 |
| 26/5 | 0.22 | 0.35 |
| 9/6 | 0.19 | 0.40 |
| 23/6 | 0.17 | 0.40 |
| 9/7 | 0.09 | 0.45 |
| 21/7 | 0.09 | 0.45 |
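The k(θ) values in the table enter a Beer-Lambert-type relation between canopy gap fraction and area index. The Python sketch below is a minimal illustration, not the authors' processing chain. It makes two assumptions:

- It uses the common inversion AI = -ln(P)/k(θ), where P is the gap fraction.
- It approximates the LiDAR gap fraction as the share of returns classified as ground.

The function names `lidar_gap_fraction` and `beer_lambert_area_index` are hypothetical, and so are the return counts in the usage block. Only the k(θ) values come from the table.

```python
import math

# Extinction coefficients k(theta) transcribed from the table above.
# Keys are dates (day/month); values are (GAImultispectral k, PAILiDAR k).
K_THETA = {
    "1/4": (0.47, 0.37),
    "12/5": (0.34, 0.37),
    "26/5": (0.22, 0.35),
    "9/6": (0.19, 0.40),
    "23/6": (0.17, 0.40),
    "9/7": (0.09, 0.45),
    "21/7": (0.09, 0.45),
}


def lidar_gap_fraction(ground_returns: int, total_returns: int) -> float:
    """Hypothetical gap-fraction proxy: share of LiDAR returns classified as ground."""
    if total_returns <= 0:
        raise ValueError("total_returns must be positive")
    if not 0 <= ground_returns <= total_returns:
        raise ValueError("ground_returns must lie between 0 and total_returns")
    return ground_returns / total_returns


def beer_lambert_area_index(gap_fraction: float, k: float) -> float:
    """Assumed Beer-Lambert inversion: P = exp(-k * AI)  =>  AI = -ln(P) / k."""
    if not 0.0 < gap_fraction <= 1.0:
        raise ValueError("gap_fraction must lie in (0, 1]")
    if k <= 0.0:
        raise ValueError("k must be positive")
    return -math.log(gap_fraction) / k


if __name__ == "__main__":
    # Illustrative return counts for a single grid cell, not data from the study.
    p = lidar_gap_fraction(ground_returns=250, total_returns=1000)
    for date, (_, k_lidar) in K_THETA.items():
        print(f"{date}: PAI ~ {beer_lambert_area_index(p, k_lidar):.2f}")
```

The inversion divides by k(θ). The same gap fraction therefore yields a proportionally larger area index when k(θ) is smaller, which is why the choice of extinction coefficient matters for the time series.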

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bates, J.S.; Montzka, C.; Schmidt, M.; Jonard, F. Estimating Canopy Density Parameters Time-Series for Winter Wheat Using UAS Mounted LiDAR. Remote Sens. 2021, 13, 710. https://doi.org/10.3390/rs13040710
