Automatic High-Accuracy Sea Ice Mapping in the Arctic Using MODIS Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

2.1.1. MODIS Sensors and Datasets

2.1.2. Land Mask

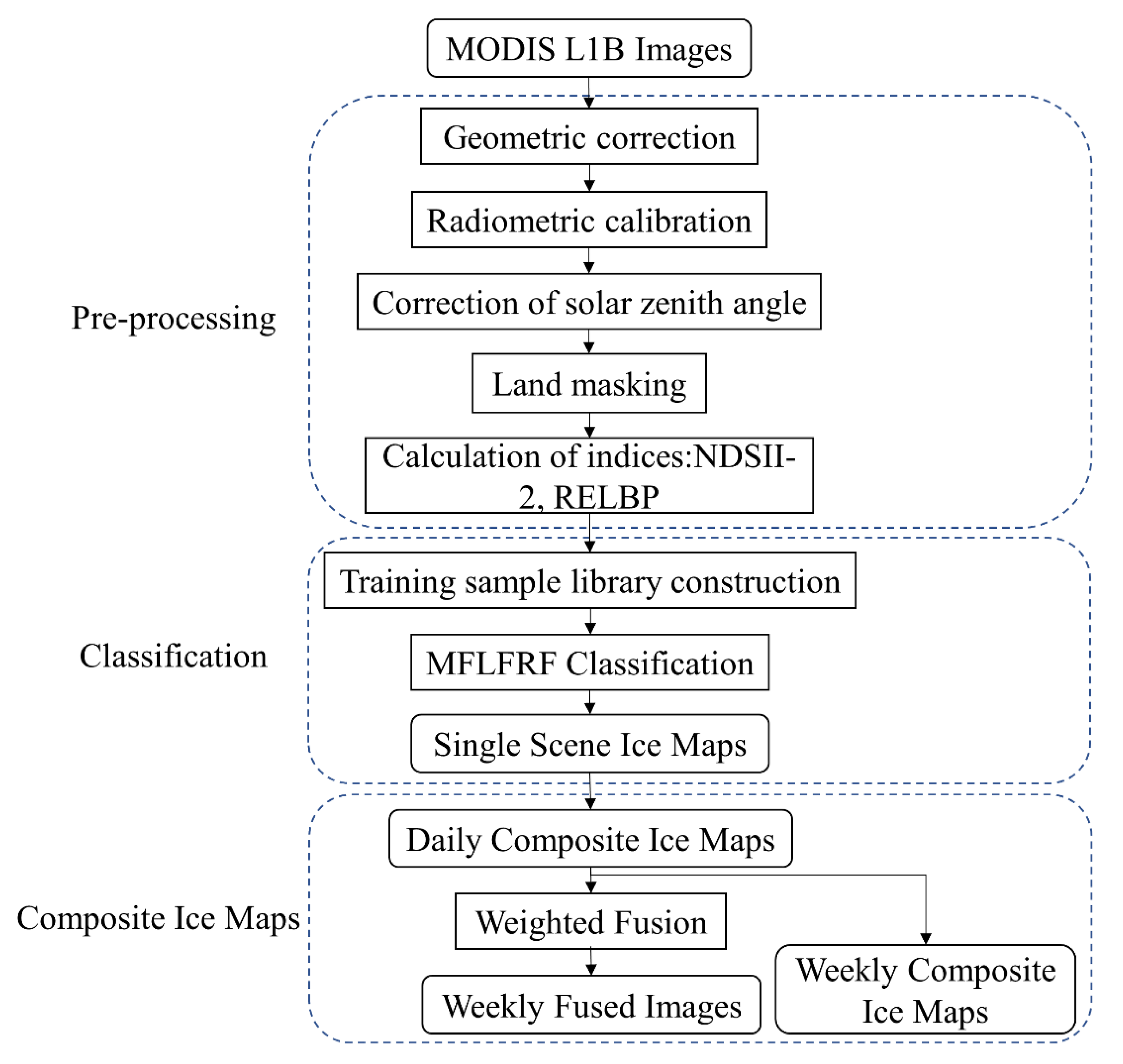

2.2. Method

2.2.1. Pre-Processing

- Radiometric calibration:

- Solar zenith angle correction:

- Feature attribute selection:
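The radiometric calibration and solar zenith angle correction steps named above are commonly carried out on MODIS L1B reflective bands as a linear rescaling followed by division by the cosine of the solar zenith angle. The following is a minimal sketch under that assumption, not necessarily the exact procedure used in this study. The function names are hypothetical, and `reflectance_scale` and `reflectance_offset` stand for the per-band calibration coefficients stored with the L1B data.

```python
import math


def calibrate_reflectance(scaled_integer, reflectance_scale, reflectance_offset):
    """Convert an L1B scaled integer to reflectance (not yet corrected for sun angle)."""
    return reflectance_scale * (scaled_integer - reflectance_offset)


def correct_solar_zenith(reflectance, solar_zenith_deg):
    """Divide by cos(solar zenith angle) to normalise for illumination geometry."""
    if solar_zenith_deg >= 90.0:
        # Sun at or below the horizon: the correction is undefined.
        raise ValueError("solar zenith angle must be below 90 degrees")
    return reflectance / math.cos(math.radians(solar_zenith_deg))


# Illustrative values only (not taken from the study).
raw = calibrate_reflectance(1000, 5e-5, 0.0)
corrected = correct_solar_zenith(raw, 60.0)
```

At high latitudes the solar zenith angle is often large, so this division has a strong effect on the corrected values; that is one reason to guard against angles at or above 90 degrees.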

2.2.2. Classification Using the MFLFRF Algorithm

- Construction of training sample library:

- Classification Method Details:

2.2.3. Composite Ice Presence Maps

1. For each pixel, count the number of times N that it is assigned a non-cloud category across all single-scene classification results from the Terra or Aqua satellites in a day.

2. Extract ice and water over the entire Arctic region by comparing N with a class-specific threshold:
   - Ice extraction: T1 is the threshold on the number of ice occurrences for each pixel per day and was set to 5 in this study. If N is greater than T1, the pixel is assigned the category corresponding to the mode of its non-cloud sequence; if N is less than T1, the pixel is judged to be cloud.
   - Water extraction: T2 is the threshold on the number of water occurrences for each pixel per day and was set to 2 in this study. If N is greater than T2, the pixel is assigned the category corresponding to the mode of its non-cloud sequence; if N is less than T2, the pixel is judged to be cloud.

3. Synthesize the results extracted in step 2 to obtain the daily synthetic ice map.

4. Repeat steps 1 to 3 for seven consecutive days and synthesize the resulting daily maps into the final ice map for the week.

5. Use the daily synthetic ice maps of all seven days to correct the classification results of the MFLFRF algorithm. Based on the corrected classification results, fuse the pre-processed images by assigning weights to obtain the weekly fused image.

The specific processing flow is shown in Figure 2.

- Daily and weekly composite ice presence maps:

- Weekly fused images:
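The daily compositing rule in Section 2.2.3 (steps 1 to 3) can be sketched per pixel as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the label strings and function names are hypothetical, the ice threshold T1 is applied when the mode of the non-cloud sequence is ice and the water threshold T2 when it is water, ties in the mode are resolved in favour of the label seen first, and a pixel with N exactly equal to the threshold is treated as cloud because the text only specifies the "greater than" and "less than" cases.

```python
from collections import Counter

# Hypothetical label names used only for this sketch.
CLOUD, WATER, ICE = "cloud", "water", "ice"


def composite_pixel(labels, t1=5, t2=2):
    """Combine one pixel's single-scene labels for a day into a daily label."""
    non_cloud = [lab for lab in labels if lab != CLOUD]
    n = len(non_cloud)  # N: number of non-cloud occurrences
    if n == 0:
        return CLOUD
    # Mode of the non-cloud sequence (first-seen label wins a tie).
    mode = Counter(non_cloud).most_common(1)[0][0]
    threshold = t1 if mode == ICE else t2
    return mode if n > threshold else CLOUD


def daily_composite(scenes, t1=5, t2=2):
    """Apply composite_pixel to every cell of equally sized 2-D label grids."""
    rows, cols = len(scenes[0]), len(scenes[0][0])
    return [
        [composite_pixel([s[r][c] for s in scenes], t1, t2) for c in range(cols)]
        for r in range(rows)
    ]


# Six hypothetical scenes over a 1 x 3 pixel grid.
scenes = [
    [[ICE, WATER, ICE]],
    [[ICE, WATER, ICE]],
    [[ICE, WATER, ICE]],
    [[ICE, CLOUD, ICE]],
    [[ICE, CLOUD, CLOUD]],
    [[ICE, CLOUD, CLOUD]],
]
daily_map = daily_composite(scenes)
```

In this toy input the first pixel has six ice observations (N = 6 > T1), the second has three water observations (N = 3 > T2), and the third has only four ice observations (N = 4, below T1), so the third pixel stays cloud in the daily map. On full MODIS grids the same rule would normally be vectorised over arrays rather than looped per pixel.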

3. Results

3.1. Results of the Ice Map Products

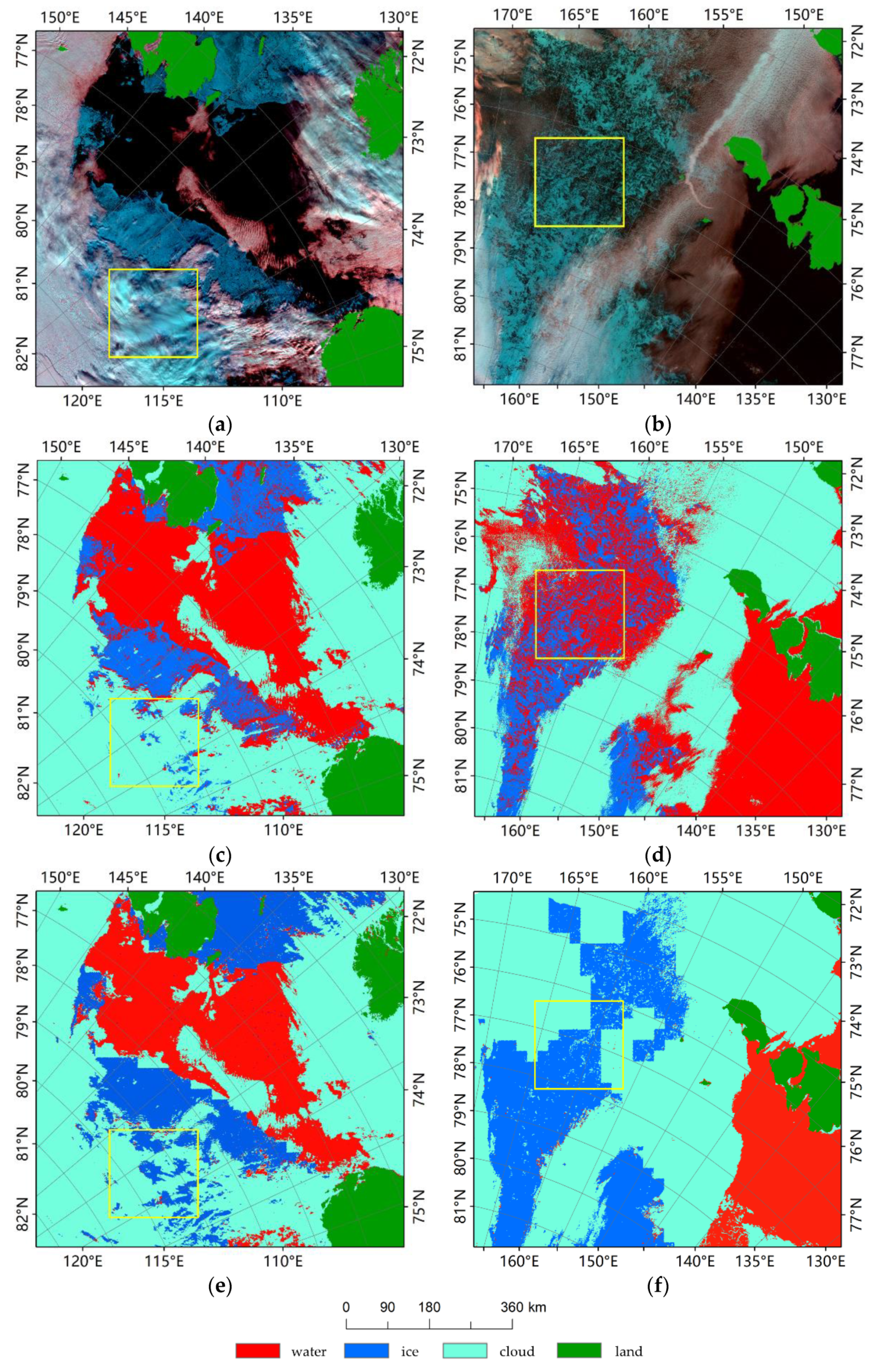

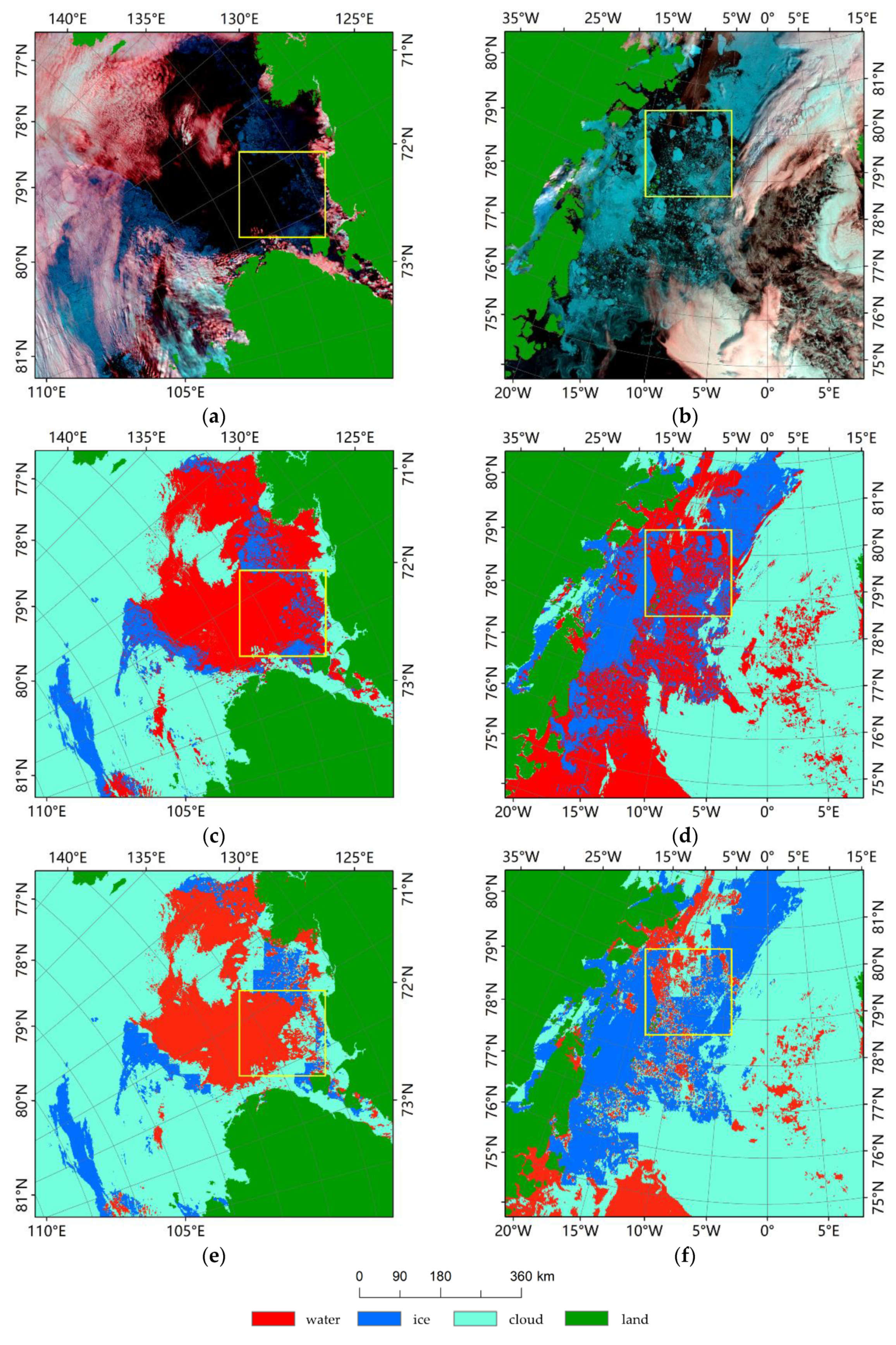

3.1.1. The Single-Scene Ice Presence Maps

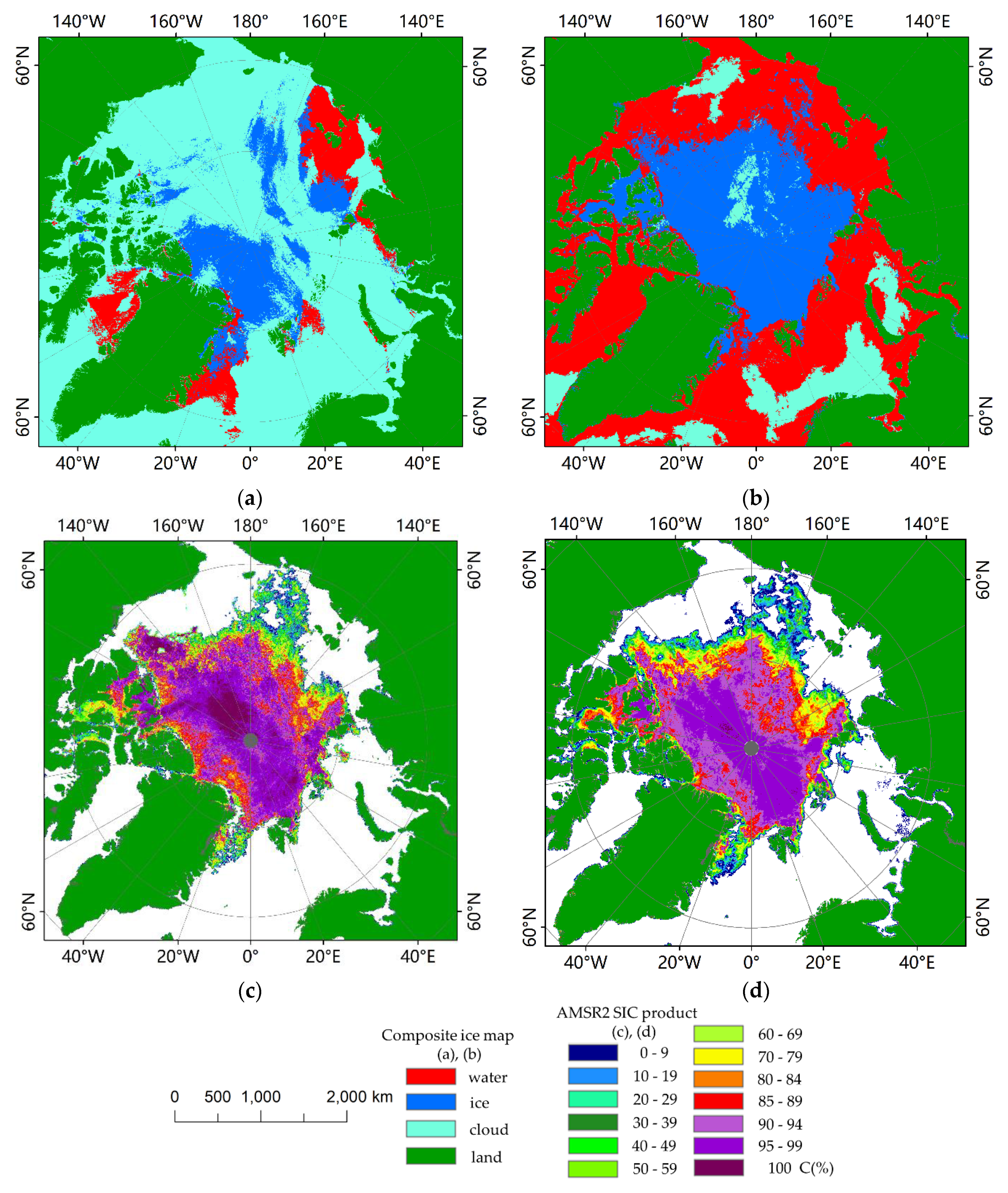

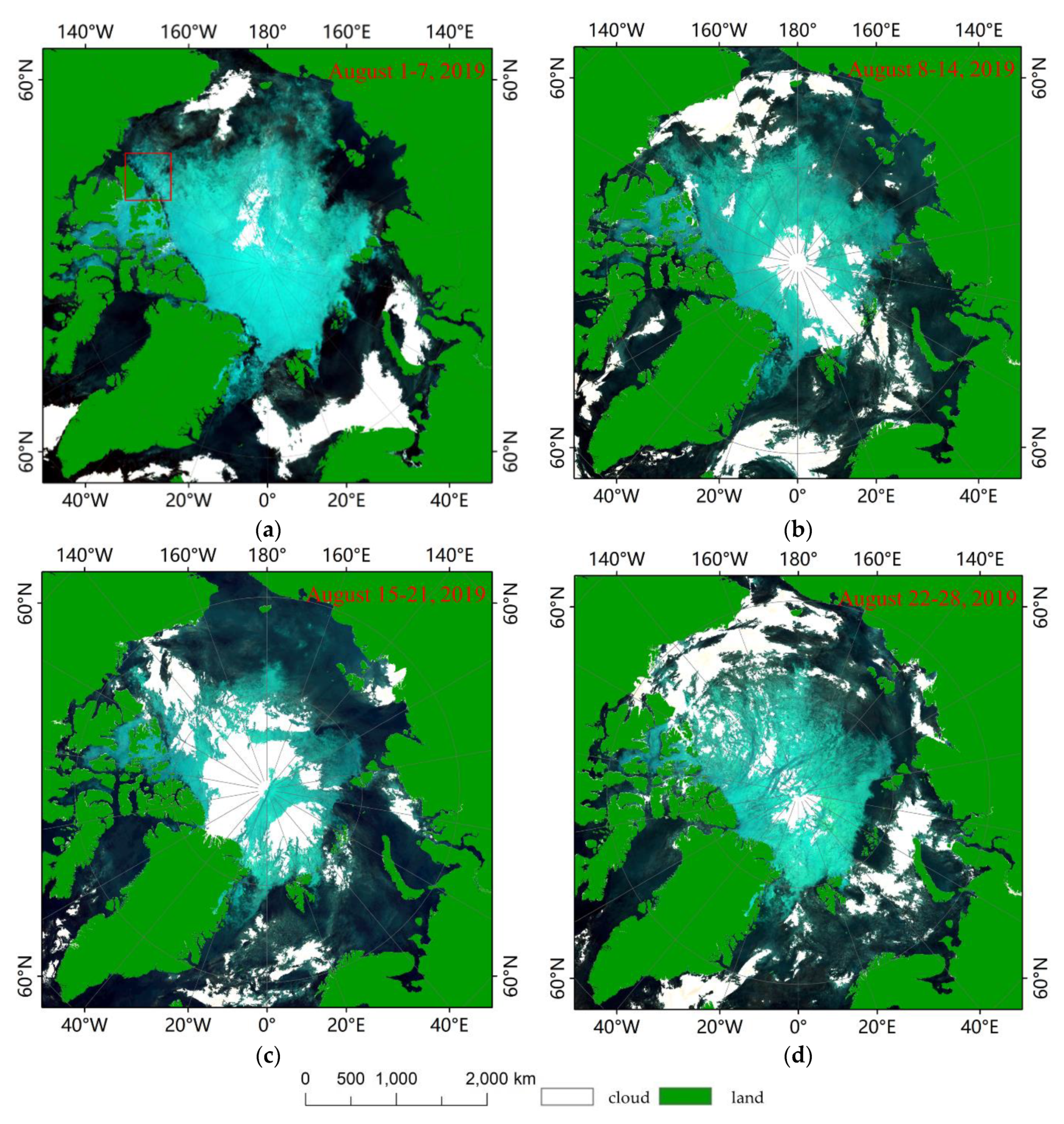

3.1.2. The Daily and Weekly Composite Ice Presence Maps

3.1.3. The Weekly Fused Optical Images

3.2. Accuracy of the Ice Map Products

3.2.1. Accuracy of the Single-Scene Ice Presence Maps

3.2.2. Accuracy of Daily and Weekly Composite Ice Presence Maps

3.2.3. Accuracy of Weekly Fused Optical Images

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Band Number | Spatial Resolution (m) | Bandwidth (μm) | Part of Spectrum |
|---|---|---|---|
| 1 | 250 | 0.620–0.670 | VIR (red) |
| 2 | 250 | 0.841–0.876 | NIR |
| 3 | 500 | 0.459–0.479 | VIR (blue) |
| 4 | 500 | 0.545–0.565 | VIR (green) |
| 5 | 500 | 1.230–1.250 | NIR |
| 6 | 500 | 1.628–1.652 | SWIR |
| 7 | 500 | 2.105–2.155 | SWIR |

| Sample Category | Ice | Water | Cloud 1 | Cloud 2 | Cloud 3 |
|---|---|---|---|---|---|
| Total Pixels | 14,162 | 13,944 | 10,057 | 11,292 | 10,343 |

| MFLFRF Map (rows) / Ground Truth (columns) | Water | Ice | Cloud | Total | Commission Error |
|---|---|---|---|---|---|
| Water | 489 | 7 | 2 | 498 | 1.81% |
| Ice | 0 | 392 | 6 | 398 | 1.51% |
| Cloud | 0 | 39 | 3065 | 3104 | 1.27% |
| Total | 489 | 438 | 3073 | 4000 | N/A |
| Omission Error | 0.00% | 10.50% | 0.26% | N/A | |
| Overall Accuracy | 98.65% | | | | |
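The accuracy measures reported in these tables follow the usual confusion-matrix definitions: commission error is the share of a map class (row) that disagrees with the reference, omission error is the share of a reference class (column) that the map missed, and overall accuracy is the diagonal sum divided by the total. A minimal sketch, assuming rows are map classes and columns are reference classes; the function name is hypothetical, and the counts are those of the MFLFRF versus ground-truth table above.

```python
def accuracy_report(matrix, classes):
    """Return (overall accuracy, commission errors, omission errors).

    matrix[i][j] is the number of pixels mapped as classes[i] whose
    reference label is classes[j].
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(classes)))
    commission, omission = {}, {}
    for i, name in enumerate(classes):
        row_total = sum(matrix[i])                 # pixels mapped as this class
        col_total = sum(row[i] for row in matrix)  # reference pixels of this class
        commission[name] = 1 - matrix[i][i] / row_total
        omission[name] = 1 - matrix[i][i] / col_total
    return correct / total, commission, omission


classes = ["water", "ice", "cloud"]
mflfrf_vs_truth = [
    [489, 7, 2],      # mapped as water
    [0, 392, 6],      # mapped as ice
    [0, 39, 3065],    # mapped as cloud
]
overall, commission, omission = accuracy_report(mflfrf_vs_truth, classes)
print(f"{overall:.2%}")  # prints 98.65%
```

The sketch does not guard against empty rows or columns; a class with no mapped or no reference pixels would need separate handling.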

| MOD29 Map (rows) / Ground Truth (columns) | Water | Ice | Cloud | Total | Commission Error |
|---|---|---|---|---|---|
| Water | 441 | 9 | 16 | 466 | 5.36% |
| Ice | 21 | 378 | 79 | 478 | 20.92% |
| Cloud | 27 | 51 | 2978 | 3056 | 2.55% |
| Total | 489 | 438 | 3073 | 4000 | N/A |
| Omission Error | 10.88% | 13.70% | 3.09% | N/A | |
| Overall Accuracy | 94.93% | | | | |

| MFLFRF Map (rows) / AMSR2 Sea Ice Maps (columns) | Water | Ice | Total | Commission Error |
|---|---|---|---|---|
| Water | 894 | 41 | 935 | 4.39% |
| Ice | 3 | 2062 | 2065 | 0.15% |
| Total | 897 | 2103 | 3000 | N/A |
| Omission Error | 0.33% | 1.95% | N/A | |
| Overall Accuracy | 98.53% | | | |

| MFLFRF Map (rows) / AMSR2 Sea Ice Maps (columns) | Water | Ice | Total | Commission Error |
|---|---|---|---|---|
| Water | 239 | 9 | 248 | 3.63% |
| Ice | 2 | 550 | 552 | 0.36% |
| Total | 241 | 559 | 800 | N/A |
| Omission Error | 0.83% | 1.64% | N/A | |
| Overall Accuracy | 98.60% | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jiang, L.; Ma, Y.; Chen, F.; Liu, J.; Yao, W.; Shang, E. Automatic High-Accuracy Sea Ice Mapping in the Arctic Using MODIS Data. Remote Sens. 2021, 13, 550. https://doi.org/10.3390/rs13040550
