Estimating Leaf Nitrogen Content in Corn Based on Information Fusion of Multiple-Sensor Imagery from UAV

Abstract

1. Introduction

2. Materials and Methods

2.1. UAV System

2.2. Data Collection and Acquisition

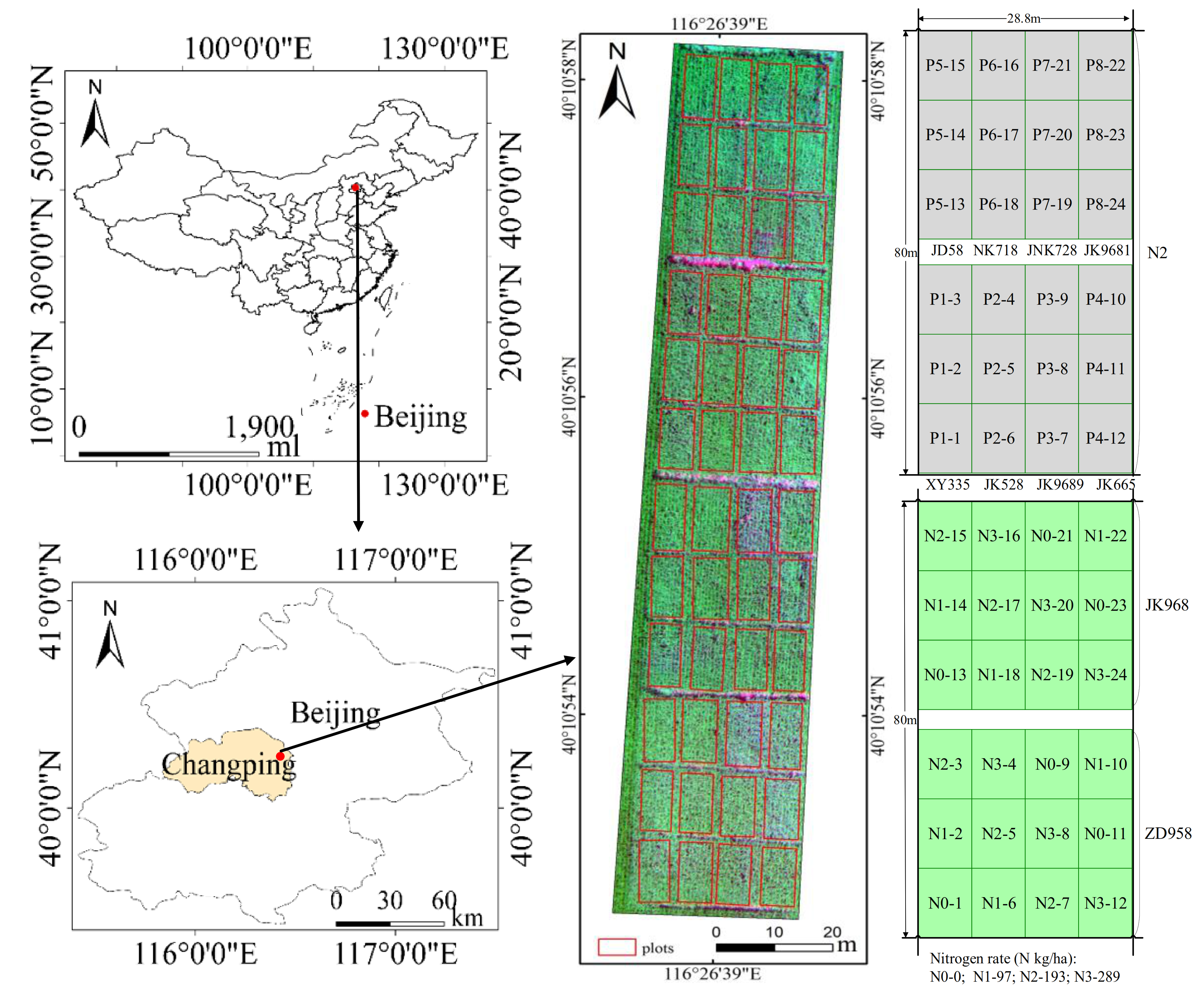

2.2.1. Study Site and Experimental Design

2.2.2. Determination of Leaf Nitrogen Concentration

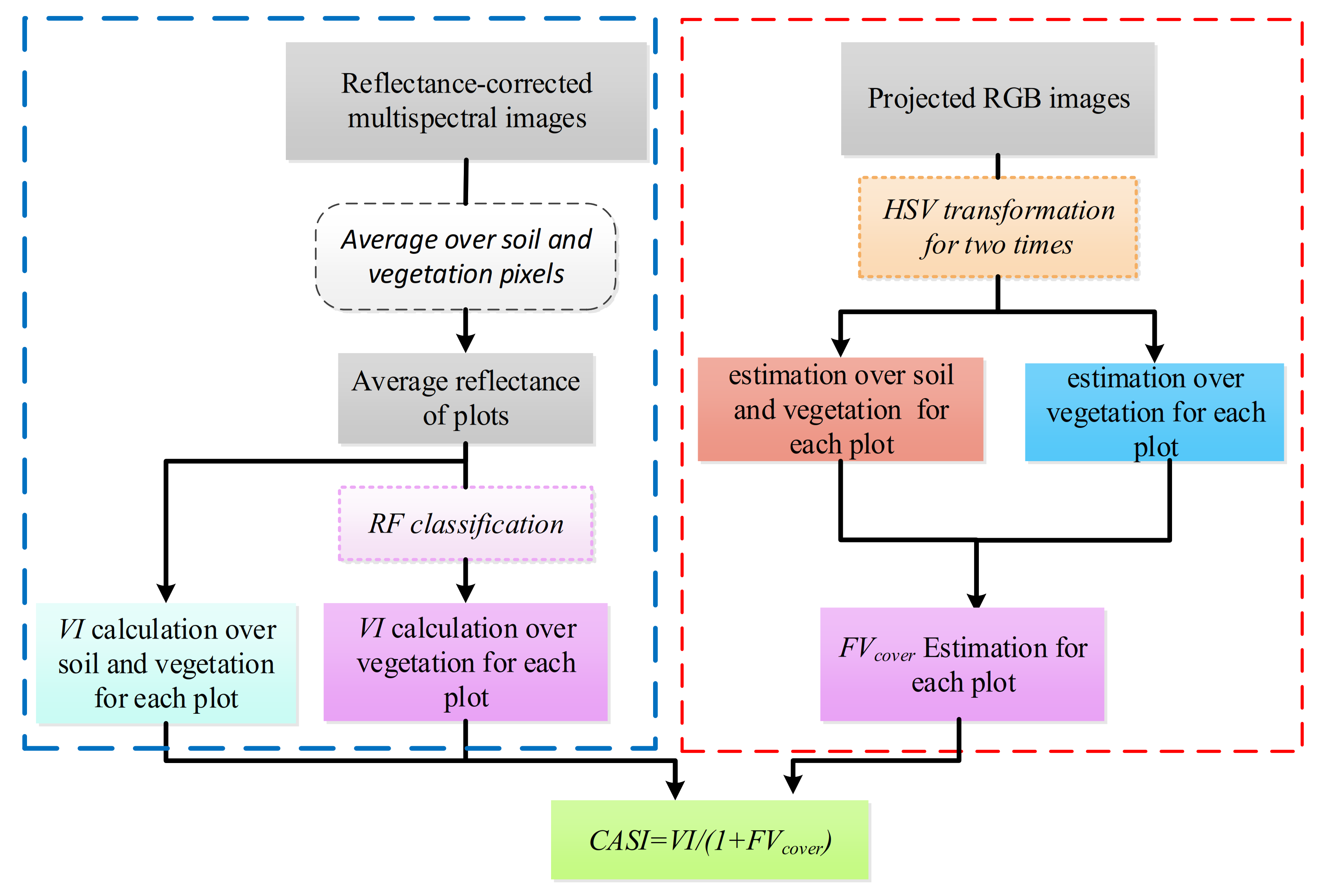

2.3. Methods and Principles

2.3.1. Image Acquisition

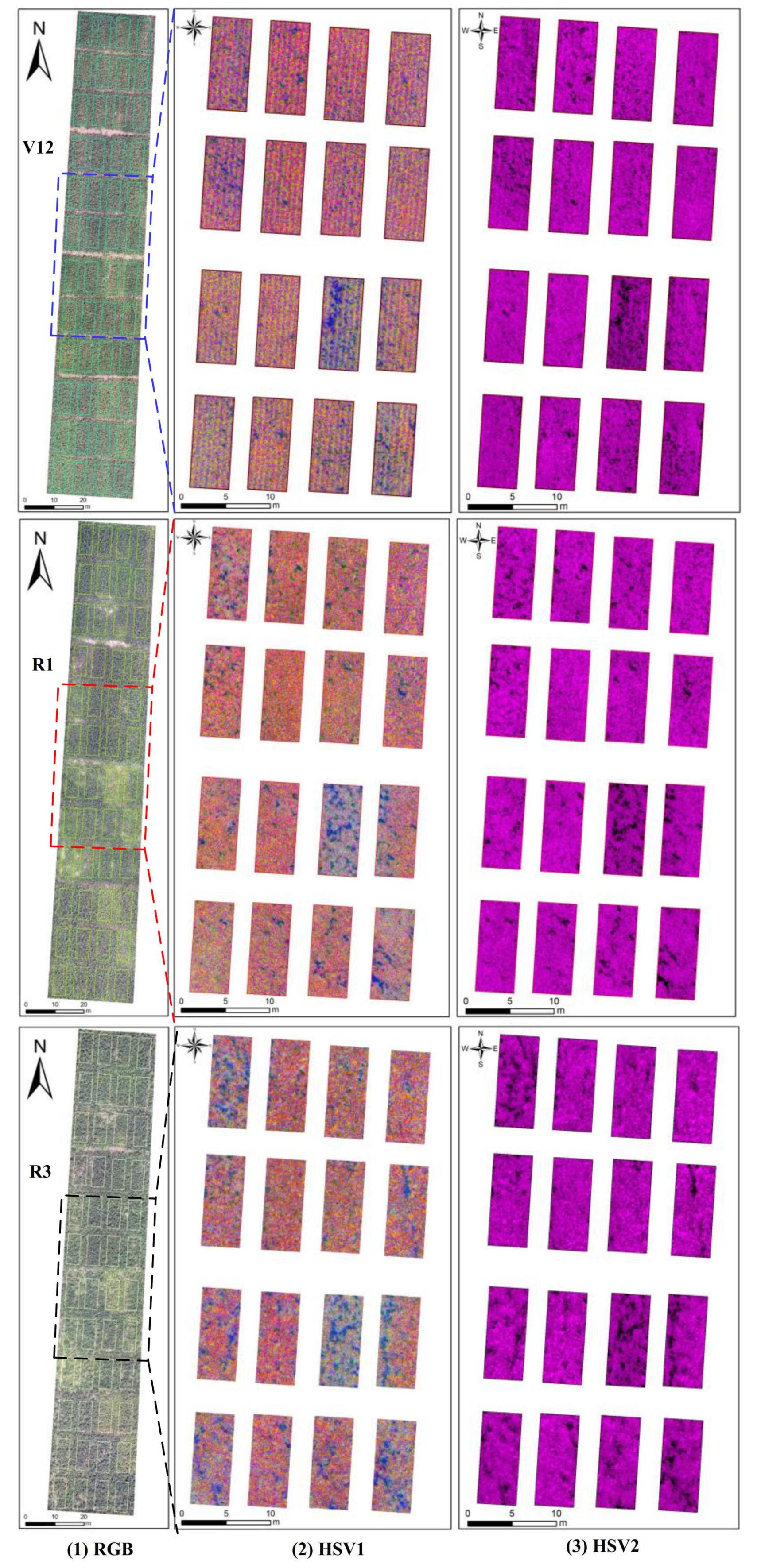

2.3.2. Image Processing

2.3.3. Vegetation Coverage

2.3.4. Spectral Variables

2.3.5. Random Frog Algorithm

2.3.6. Partial Least Squares Regression

2.3.7. Data Evaluation

3. Results

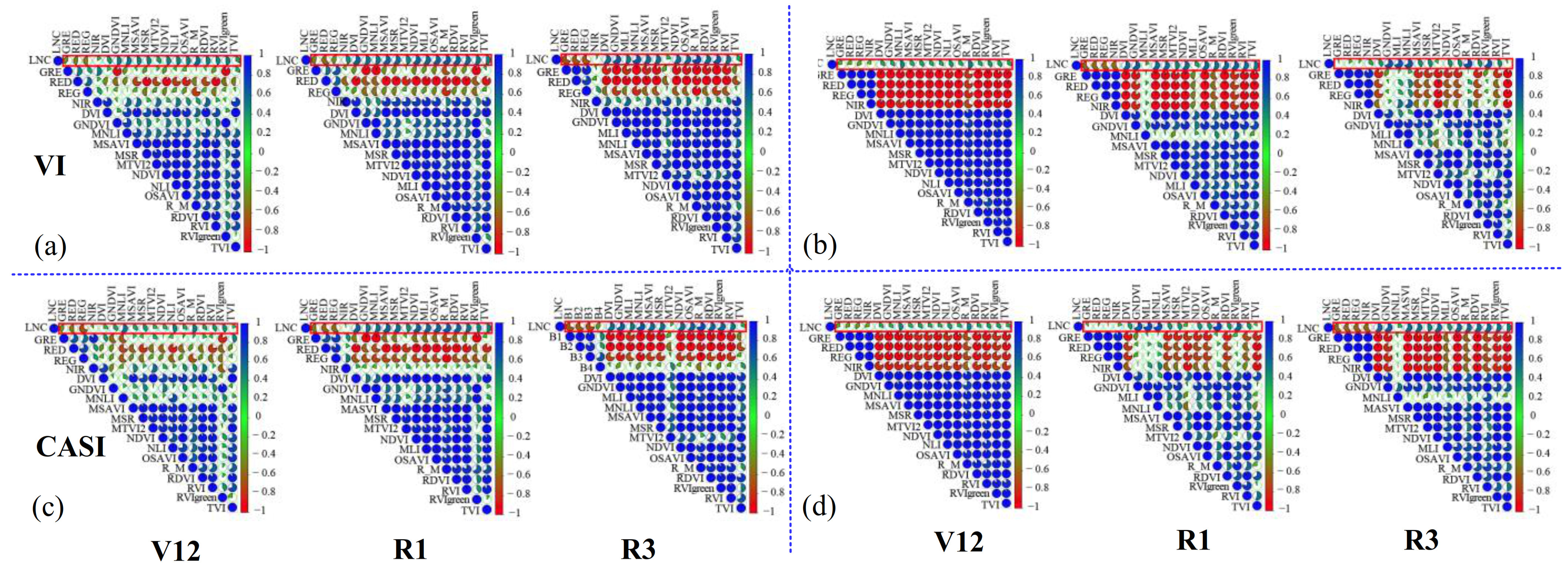

3.1. Relationship between VIs, CASIs, and LNC

3.2. Selection of Vegetation Features by RFA

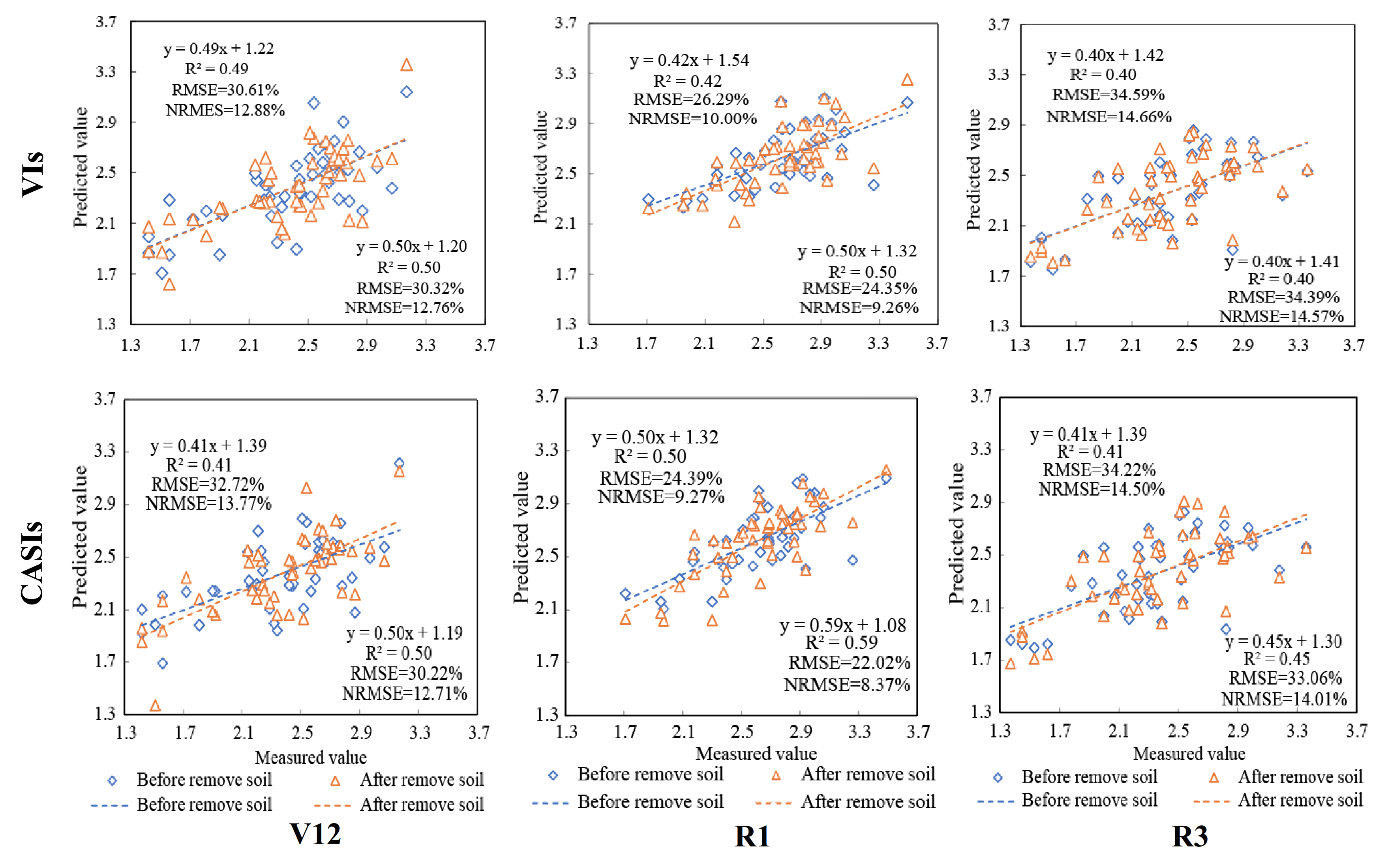

3.3. Estimation of LNC in Corn Using CASIs and VIs

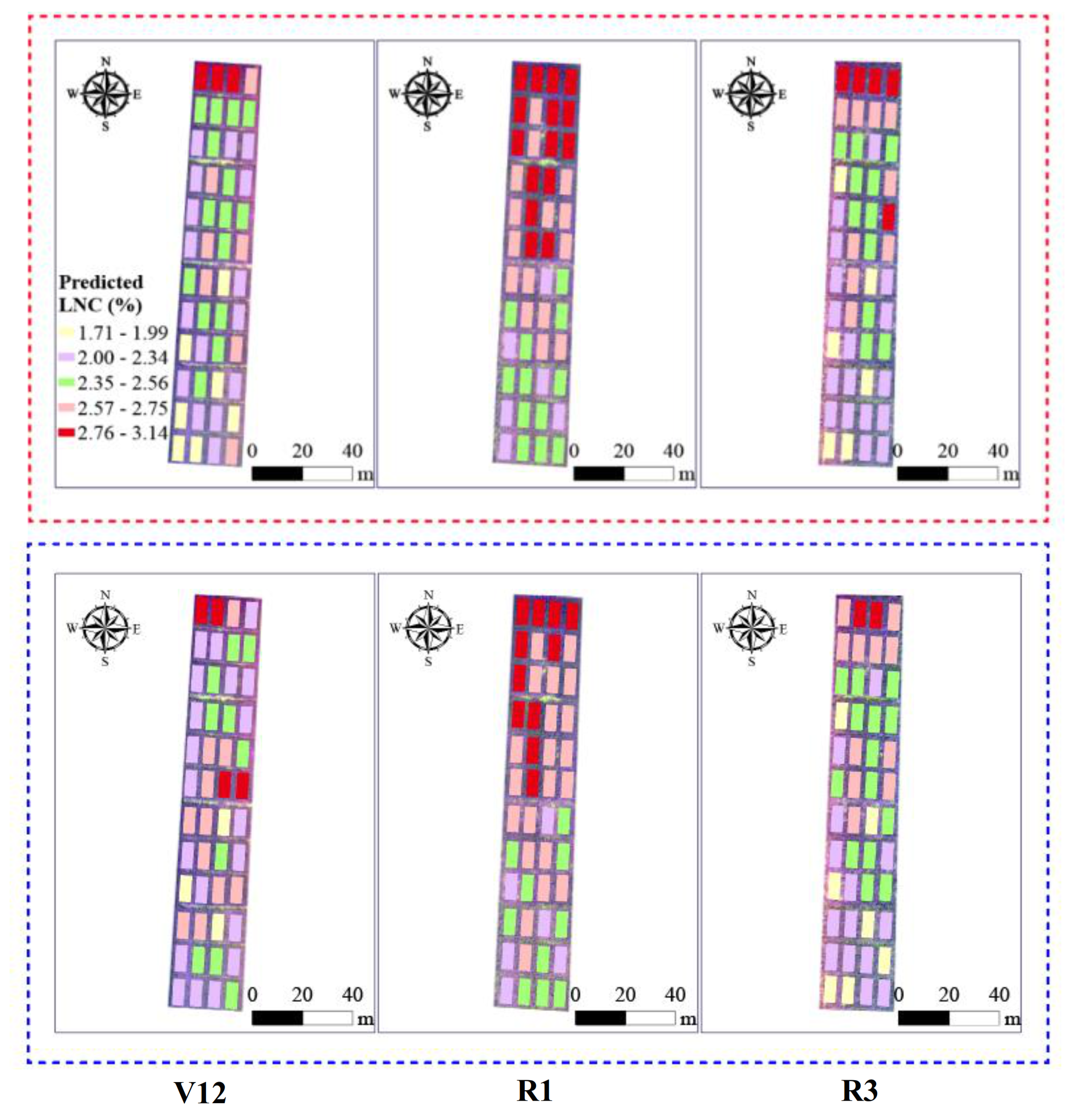

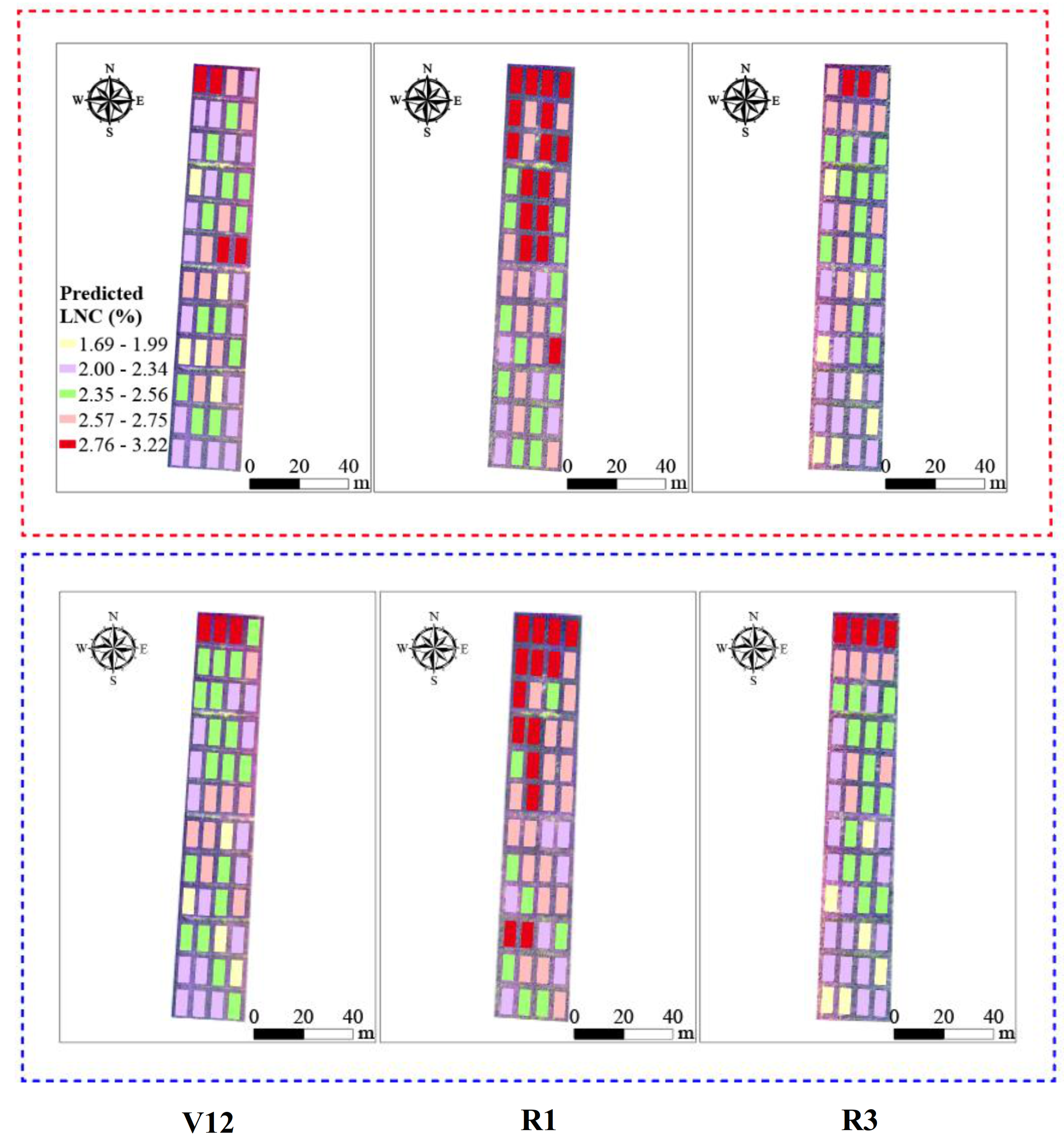

3.4. Mapping LNC Based on UAV Images at Plot Scale

4. Discussion

4.1. Partial Soil or Shadow Removal on UAV Images

4.2. Vegetation Features for LNC Evaluation

4.3. Advantages and Disadvantages of UAV Data Applications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fitzgerald, G.; Rodriguez, D.; O’Leary, G. Measuring and predicting canopy nitrogen nutrition in wheat using a spectral index—The canopy chlorophyll content index (CCCI). Field Crop. Res. 2010, 116, 318–324.

- Xue, L.; Luo, W.; Cao, W.; Tian, Y. Research progress on the water and nitrogen detection using spectral reflectance. Int. J. Remote Sens. 2003, 7, 73–80.

- Tremblay, N. Determining nitrogen requirements from crops characteristics. Benefits and challenges. In Recent Research Development Agronomy and Horticulture, 1st ed.; Pandalai, S.G., Ed.; Research Signpost: Kerala, India, 2004; pp. 157–182.

- Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371.

- Ballesteros, R.; Ortega, J.F.; Hernandez, D.; Moreno, M.A. Applications of georeferenced high-resolution images obtained with unmanned aerial vehicles. Part I: Description of image acquisition and processing. Precis. Agric. 2014, 15, 579–592.

- Peña, J.M.; Torres-Sánchez, J.; de Castro, A.I.; Kelly, M.; López-Granados, F. Weed mapping in early-season maize fields using object-based analysis of Unmanned Aerial Vehicle (UAV) images. PLoS ONE 2013, 8, e77151.

- Swain, K.C.; Thomson, S.J.; Jayasuriya, H.P.W. Adoption of an unmanned helicopter for low-altitude remote sensing to estimate yield and total biomass of a rice crop. Trans. ASABE 2010, 53, 21–27.

- Shamshiri, R.R.; Hameed, I.A.; Balasundram, S.K.; Ahmad, D.; Weltzien, C.; Yamin, M. Fundamental Research on Unmanned Aerial Vehicles to Support Precision Agriculture in Oil Palm Plantations. In Agricultural Robots-Fundamentals and Applications, 1st ed.; Zhou, J., Ed.; IntechOpen: Nanjing, China, 2019; pp. 91–116.

- Swain, K.C.; Jayasuriya, H.P.W.; Salokhe, V.M. Suitability of low-altitude remote sensing images for estimating nitrogen treatment variations in rice cropping for precision agriculture adoption. J. Appl. Remote Sens. 2007, 1, 6656–6659.

- Osco, L.P.; Junior, J.M.; Ramos, A.P.M.; Furuya, D.E.G.; Santana, D.C.; Teodoro, L.P.R.; Gonçalves, W.N.; Baio, F.H.R.; Pistori, H.; Junior, C.A.D.S.; et al. Leaf nitrogen concentration and plant height prediction for maize using UAV-based multispectral imagery and machine learning techniques. Remote Sens. 2020, 12, 3237.

- Lee, H.; Wang, J.; Leblon, B. Using linear regression, Random Forests, and Support Vector Machine with unmanned aerial vehicle multispectral images to predict canopy nitrogen weight in corn. Remote Sens. 2020, 12, 2071.

- Liu, H.; Zhu, H.; Wang, P. Quantitative modelling for leaf nitrogen content of winter wheat using UAV-based hyperspectral data. Int. J. Remote Sens. 2017, 38, 2117–2134.

- Lu, J.; Cheng, D.; Geng, C.; Zhang, Z.; Xiang, Y.; Hu, T. Combining plant height, canopy coverage and vegetation index from UAV-based RGB images to estimate leaf nitrogen concentration of summer maize. Biosyst. Eng. 2021, 202, 42–54.

- Kefauver, S.C.; Vicente, R.; Vergara-Díaz, O.; Fernandez-Gallego, J.A.; Kerfal, S.; Lopez, A. Comparative UAV and field phenotyping to assess yield and nitrogen use efficiency in hybrid and conventional barley. Front. Plant Sci. 2017, 8, 1733.

- Huete, A.; Jackson, R.D.; Post, D.F. Spectral response of a plant canopy with different soil backgrounds. Remote Sens. Environ. 1985, 17, 37–53.

- Rondeaux, G.; Steven, M.D.; Baret, F. Optimization of soil adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Major, D.; Frederic, B.; Guyot, G. A ratio vegetation index adjusted for soil brightness. Int. J. Remote Sens. 1990, 11, 727–740.

- Liu, S.; Yang, G.; Jing, H.; Feng, H.; Li, H.; Chen, P.; Yang, W. Retrieval of winter wheat nitrogen content based on UAV digital image. Trans. CSAE 2019, 35, 75–85, (In Chinese with English Abstract).

- Yao, X.; Ren, H.; Cao, Z.; Tian, Y.; Cao, W.; Zhu, Y.; Cheng, T. Detecting leaf nitrogen content in wheat with canopy hyperspectrum under different soil backgrounds. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 114–124.

- Ritchie, S.W.; Hanway, J.J. How a Corn Plant Develops; Special Report No. 48; Iowa State University of Science and Technology, Cooperative Extension Service: Ames, IA, USA, 1986; pp. 1–21.

- Tsai, C.M.; Yeh, Z.M. Contrast enhancement by automatic and parameter-free piecewise linear transformation for color images. IEEE Trans. Consum. Electron. 2008, 54, 213–219.

- Guo, P.; Wu, F.; Dai, J.; Wang, H.; Xu, L.; Zhang, G. Comparison of farmland crop classification methods based on visible light images of unmanned aerial vehicles. Trans. CSAE 2017, 33, 112–119, (In Chinese with English Abstract).

- Vimal, C.; Sathish, B. Random Forest Classifier Based ECG Arrhythmia Classification. Int. J. Healthc. Inf. Syst. Inform. 2010, 5, 1–10.

- Torres, M.; Qiu, G.P. Automatic habitat classification using image analysis and random forest. Ecol. Inform. 2014, 23, 126–136.

- Akar, Ö.; Güngör, O. Classification of multispectral images using Random Forest algorithm. J. Geod. Geoinf. 2012, 1, 105–112.

- Li, C.; Wang, J.; Liu, L.; Wang, R. Automated digital image analyses for estimating percent ground cover of winter wheat based on object features. J. Zhejiang Univ. Agric. Life Sci. 2004, 30, 650–656, (In Chinese with English Abstract).

- Jia, J.; Hu, Y.; Liu, L. Using Digital Photography to Measure Vegetation Coverage in Qinghai-Tibet Plateau. J. Geoinf. Sci. 2010, 12, 880–885, (In Chinese with English Abstract).

- Zhang, J.; Pu, R.; Yuan, L. Integrating remotely sensed and meteorological observations to forecast wheat powdery mildew at a regional scale. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4328–4339.

- Fan, L.; Zhao, J.; Xu, X.; Liang, D.; Yang, G.; Feng, H.; Yang, H.; Yulong, W.; Chen, G.; Wei, P. Hyperspectral-based estimation of leaf nitrogen content in corn using optimal selection of multiple spectral variables. Sensors 2019, 19, 2898.

- Xu, X.; Zhao, C.; Wang, J.; Zhang, J.; Song, X. Using optimal combination method and in situ hyperspectral measurements to estimate leaf nitrogen concentration in barley. Precis. Agric. 2014, 15, 227–240.

- Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- Gitelson, A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362.

- Qi, J.G.; Chehbouni, A.; Huete, A.; Kerr, Y.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third ERTS Symposium, Washington, DC, USA, 10–14 December 1973; Volume 1, pp. 48–62.

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and Fpar: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347.

- Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

- Roujean, J.L.; Bréon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Jordan, C.F. Derivation of Leaf Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

- Xue, L.H.; Cao, W.X.; Luo, W.H.; Dai, T.B.; Zhu, Y. Monitoring leaf nitrogen status in rice with canopy spectral reflectance. Agron. J. 2004, 96, 135–142.

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

- Li, H.; Xu, Q.; Liang, Y. Random Frog: An efficient reversible jump Markov Chain Monte Carlo-like approach for variable selection with applications to gene selection and disease classification. Anal. Chim. Acta 2012, 740, 20–26.

- Hu, M.H.; Zhai, G.T.; Zhao, Y.; Wang, Z.D. Uses of selection strategies in both spectral and sample spaces for classifying hard and soft blueberry using near infrared data. Sci. Rep. 2018, 8, 6671.

- Zhang, C.; Kong, W.; Liu, F.; He, Y. Measurement of aspartic acid in oilseed rape leaves under herbicide stress using near infrared spectroscopy and chemometrics. Heliyon 2016, 2, e00064.

- Wold, S.; Ruhe, A.; Wold, H.; Dunn, W.J., III. The collinearity problem in linear regression. The partial least squares (PLS) approach to generalized inverses. SIAM J. Sci. Stat. Comput. 1984, 5, 735–743.

- Li, F.; Mistele, B.; Hu, Y.; Chen, X.; Schmidhalter, U. Reflectance estimation of canopy nitrogen content in winter wheat using optimised hyperspectral spectral indices and partial least squares regression. Eur. J. Agron. 2014, 52, 198–209.

- Hunt, E.R., Jr.; Doraiswamy, P.C.; McMurtrey, J.E.; Daughtry, C.S.T.; Perry, E.M.; Akhmedov, B. A visible band index for remote sensing leaf chlorophyll content at the canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 103–112.

- Cho, M.A.; Skidmore, A.K. A new technique for extracting the red edge position from hyperspectral data: The linear extrapolation method. Remote Sens. Environ. 2006, 101, 181–193.

- Rasmussen, J.; Ntakos, G.; Nielsen, J.; Svensgaard, J.; Poulsen, R.N.; Christensen, S. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 2016, 74, 75–92.

- Farou, B.; Rouabhia, H.E.; Seridi, H.; Akdag, H. Novel approach for detection and removal of moving cast shadows based on RGB, HSV and YUV color spaces. Comput. Inform. 2017, 36, 837–856.

- Surkutlawar, S.; Kulkarni, R.K. Shadow suppression using RGB and HSV color space in moving object detection. Int. J. Adv. Comput. Sci. Appl. 2013, 4, 164.

- Zhang, Y.; Hartemink, A.E. A method for automated soil horizon delineation using digital images. Geoderma 2019, 343, 97–115.

- Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crop. Res. 2014, 157, 111–123.

- Osco, L.P.; Ramos, A.P.M.; Pinheiro, M.M.F.; Moriya, É.A.S.; Imai, N.N.; Estrabis, N.; Ianczyk, F.; de’Araújo, F.F.; Liesenberg, V.; de Castro Jorge, L.A.; et al. A machine learning approach to predict nutrient content in valencia-orange leaf hyperspectral measurements. Remote Sens. 2020, 12, 906.

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A comparative assessment of different modeling algorithms for estimating leaf nitrogen content in winter wheat using multispectral images from an unmanned aerial vehicle. Remote Sens. 2018, 10, 2026.

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143.

- Fu, Y.; Yang, G.; Li, Z.; Li, H.; Li, Z.; Xu, X.; Song, X.; Zhang, Y.; Duan, D.; Zhao, C.; et al. Progress of hyperspectral data processing and modelling for cereal crop nitrogen monitoring. Comput. Electron. Agric. 2020, 172, 105321.

- Sun, L.; Cheng, L. Anti-soil background capacity with vegetation biochemical component spectral model. Acta Ecol. Sin. 2011, 31, 1641–1652.

- Gilabert, M.; González-Piqueras, J.; García-Haro, F.; Meliá, J. A generalized soil-adjusted vegetation index. Remote Sens. Environ. 2002, 82, 303–310.

- Chen, P.; Haboudane, D.; Tremblay, N.; Wang, J.; Vigneault, P.; Li, B. New spectral indicator assessing the efficiency of crop nitrogen treatment in corn and wheat. Remote Sens. Environ. 2010, 114, 1987–1997.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

| Sensor Name | Sensor Type | Spectral Band (nm) | Resolution (pixels) | FOV (H° × V°) | Shutter Type | Weight (g) |
|---|---|---|---|---|---|---|
| Parrot Sequoia | MS | 550, 660, 735, 790 * | 1280 × 960 | 62.2 × 48.7 | Global | 107 |
| Cyber-shot DSC-QX100 | RGB | N/A | 5472 × 3648 | 82.0 × 64.5 | Global | 179 |

| Growth Stage | Sample Number | Max | Min | Mean | SD | CV |
|---|---|---|---|---|---|---|
| V12 | 48 | 3.17 | 1.42 | 2.38 | 0.43 | 0.18 |
| R1 | 48 | 3.49 | 1.17 | 2.63 | 0.35 | 0.13 |
| R3 | 48 | 3.36 | 1.37 | 2.36 | 0.45 | 0.19 |
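
The CV column in the table above appears consistent with the usual definition of the coefficient of variation, SD divided by the mean. The following is a minimal sketch that rechecks this from the tabulated values; the definition is an assumption (the source does not state it here), and the names `lnc_stats` and `coefficient_of_variation` are illustrative only.

```python
# Summary statistics copied from the LNC table above: (mean, SD, reported CV).
lnc_stats = {
    "V12": (2.38, 0.43, 0.18),
    "R1": (2.63, 0.35, 0.13),
    "R3": (2.36, 0.45, 0.19),
}


def coefficient_of_variation(mean, sd):
    """CV as standard deviation divided by mean (assumed definition)."""
    return sd / mean


for stage, (mean, sd, reported_cv) in lnc_stats.items():
    cv = coefficient_of_variation(mean, sd)
    # Print both values side by side so any rounding difference is visible.
    print(f"{stage}: computed CV = {cv:.3f}, reported CV = {reported_cv:.2f}")
```

Under this assumption the computed ratios round to the reported values for all three growth stages.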

| VIs | Name | Formula | Reference |
|---|---|---|---|
| DVI | Difference Vegetation Index | | [31] |
| GNDVI | Green NDVI | | [32] |
| MNLI | Modified Non-linear Vegetation Index | | [33] |
| MSAVI | Modified SAVI | | [34] |
| MSR | Modified Simple Ratio | | [35] |
| MTVI2 | Modified Triangular Vegetation Index 2 | | [36] |
| NDVI | Normalized Difference Vegetation Index | | [37] |
| NLI | Non-linear Vegetation Index | | [38] |
| OSAVI | Optimization of Soil-Adjusted Vegetation Index | | [16] |
| R_M | Red Model | | [39] |
| RDVI | Renormalized Difference Vegetation Index | | [40] |
| RVI | Ratio Vegetation Index | | [41] |
| RVIgreen | Green Ratio Vegetation Index | | [42] |
| TVI | Triangular Vegetation Index | | [43] |
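
The formulas for the indices listed above are not preserved in this copy of the table. As a rough illustration only, the sketch below computes four of them (DVI, RVI, NDVI, GNDVI) using their widely used textbook definitions, which may differ in detail from the exact forms used in the study. The reflectance values are hypothetical, and the band names follow the GRE/RED/NIR labels used elsewhere in this article.

```python
def dvi(nir, red):
    """Difference Vegetation Index: NIR minus red reflectance."""
    return nir - red


def rvi(nir, red):
    """Ratio Vegetation Index: NIR divided by red reflectance."""
    return nir / red


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def gndvi(nir, green):
    """Green NDVI: NDVI with the green band in place of the red band."""
    return (nir - green) / (nir + green)


# Hypothetical per-plot mean reflectances (unitless, 0 to 1), not from the study.
bands = {"GRE": 0.08, "RED": 0.05, "NIR": 0.45}

indices = {
    "DVI": dvi(bands["NIR"], bands["RED"]),
    "RVI": rvi(bands["NIR"], bands["RED"]),
    "NDVI": ndvi(bands["NIR"], bands["RED"]),
    "GNDVI": gndvi(bands["NIR"], bands["GRE"]),
}

for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In practice these functions would be applied per pixel or per plot to the calibrated multispectral reflectance; scalar inputs are used here only to keep the example self-contained.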

| Indices | V12 (VIs) | V12 (CASIs) | R1 (VIs) | R1 (CASIs) | R3 (VIs) | R3 (CASIs) | V12, soil noise removed (VIs) | V12, soil noise removed (CASIs) | R1, soil noise removed (VIs) | R1, soil noise removed (CASIs) | R3, soil noise removed (VIs) | R3, soil noise removed (CASIs) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GRE | −0.377 ** | −0.416 ** | −0.690 ** | −0.695 ** | −0.454 ** | −0.525 ** | −0.262 | −0.297 | −0.036 | −0.12 | −0.607 ** | −0.606 ** |
| RED | −0.449 ** | −0.510 ** | −0.667 ** | −0.662 ** | −0.614 ** | −0.628 ** | −0.26 | −0.295 | 0.158 | 0.072 | −0.586 ** | −0.590 ** |
| REG | −0.494 ** | −0.686 ** | −0.672 ** | −0.728 ** | −0.436 ** | −0.594 ** | −0.304 | −0.343 | 0.107 | −0.017 | −0.610 ** | −0.610 ** |
| NIR | 0.152 | −0.119 | 0.064 | −0.394 ** | 0.450 ** | −0.032 | −0.211 | −0.276 | 0.317 | 0.186 | −0.580 ** | −0.583 ** |
| DVI | 0.393 ** | 0.26 | 0.592 ** | 0.598 ** | 0.611 ** | 0.613 ** | 0.317 | 0.293 | 0.391 ** | 0.379 ** | 0.589 ** | 0.589 ** |
| GNDVI | 0.502 ** | 0.343 | 0.656 ** | 0.658 ** | 0.541 ** | 0.503 ** | 0.314 | 0.294 | 0.664 ** | 0.669 ** | 0.622 ** | 0.625 ** |
| MNLI | 0.540 ** | 0.577 ** | 0.677 ** | 0.676 ** | 0.527 ** | 0.550 ** | 0.462 ** | 0.460 ** | 0.677 ** | 0.705 ** | 0.175 | 0.237 |
| MSAVI | 0.466 ** | 0.33 | 0.592 ** | 0.676 ** | 0.616 ** | 0.619 ** | 0.319 | 0.296 | 0.406 ** | 0.395 ** | 0.592 ** | 0.594 ** |
| MSR | 0.462 ** | 0.387 ** | 0.569 ** | 0.571 ** | 0.589 ** | 0.588 ** | 0.34 | 0.321 | 0.424 ** | 0.417 ** | 0.578 ** | 0.579 ** |
| MTVI2 | 0.351 | 0.242 | 0.22 | 0.131 | 0.473 ** | 0.450 ** | 0.309 | 0.284 | −0.149 | −0.233 | 0.488 ** | 0.473 ** |
| NDVI | 0.480 ** | 0.281 | 0.593 ** | 0.597 ** | 0.616 ** | 0.618 ** | 0.302 | 0.278 | 0.416 ** | 0.406 ** | 0.594 ** | 0.597 ** |
| NLI | 0.474 ** | 0.465 ** | 0.527 ** | 0.525 ** | 0.586 ** | 0.597 ** | 0.382 ** | 0.365 | 0.692 ** | 0.717 ** | 0.333 | 0.408 ** |
| OSAVI | 0.483 ** | 0.293 | 0.596 ** | 0.600 ** | 0.616 ** | 0.619 ** | 0.307 | 0.283 | 0.412 ** | 0.401 ** | 0.592 ** | 0.594 ** |
| R_M | 0.584 ** | 0.509 ** | 0.707 ** | 0.709 ** | 0.641 ** | 0.655 ** | 0.506 ** | 0.494 ** | 0.712 ** | 0.721 ** | 0.545 ** | 0.571 ** |
| RDVI | 0.477 ** | 0.307 | 0.595 ** | 0.601 ** | 0.614 ** | 0.616 ** | 0.311 | 0.287 | 0.406 ** | 0.395 ** | 0.592 ** | 0.593 ** |
| RVI | 0.445 ** | 0.375 ** | 0.548 ** | 0.540 ** | 0.566 ** | 0.546 ** | 0.366 | 0.335 | 0.643 ** | 0.613 ** | 0.605 ** | 0.589 ** |
| RVIgreen | 0.481 ** | 0.429 ** | 0.641 ** | 0.638 ** | 0.541 ** | 0.475 ** | 0.356 | 0.324 | 0.426 ** | 0.387 ** | 0.563 ** | 0.548 ** |
| TVI | 0.323 | 0.192 | 0.333 | 0.285 | 0.516 ** | 0.495 ** | 0.306 | 0.28 | −0.066 | −0.145 | 0.521 ** | 0.509 ** |

| Types | Conditions | Stages | Features |
|---|---|---|---|
| VIs | Not removing soil | V12 | REG, NLI, DVI, MNLI, R_M |
| VIs | Not removing soil | R1 | NLI, NIR, NDVI, RDVI, OSAVI |
| VIs | Not removing soil | R3 | GNDVI, NIR, GRE, DVI, MNLI |
| VIs | Soil removal | V12 | REG, NLI, DVI, MNLI, R_M |
| VIs | Soil removal | R1 | NLI, NIR, NDVI, RDVI, OSAVI |
| VIs | Soil removal | R3 | GNDVI, NIR, GRE, DVI, MNLI |
| CASIs | Not removing soil | V12 | REG, NIR, GRE, DVI, MNLI |
| CASIs | Not removing soil | R1 | REG, NIR, NDVI, RDVI, OSAVI |
| CASIs | Not removing soil | R3 | REG, NIR, GRE, DVI, MNLI |
| CASIs | Soil removal | V12 | REG, NIR, GRE, DVI, MNLI |
| CASIs | Soil removal | R1 | REG, NIR, NDVI, RDVI, OSAVI |
| CASIs | Soil removal | R3 | REG, NIR, GRE, DVI, MNLI |

| Conditions | Stages | CASI R² | CASI RMSE | CASI NRMSE | VI R² | VI RMSE | VI NRMSE |
|---|---|---|---|---|---|---|---|
| No removal of soil | V12 | 0.41 | 32.72% | 13.77% | 0.49 | 30.61% | 12.88% |
| No removal of soil | R1 | 0.50 | 24.39% | 9.27% | 0.42 | 26.29% | 10.00% |
| No removal of soil | R3 | 0.41 | 34.22% | 14.50% | 0.40 | 34.59% | 14.66% |
| Soil removal | V12 | 0.50 | 30.22% | 12.71% | 0.50 | 30.32% | 12.76% |
| Soil removal | R1 | 0.59 | 22.02% | 8.37% | 0.50 | 24.35% | 9.26% |
| Soil removal | R3 | 0.45 | 33.06% | 14.01% | 0.40 | 34.39% | 14.57% |
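
The accuracy metrics in the table above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: R² is taken as the coefficient of determination, NRMSE as RMSE divided by the mean measured LNC, and the tabulated RMSE percentages are read as values in LNC units (for example, 32.72% as 0.3272). The sample `measured` and `predicted` lists are hypothetical.

```python
import math


def rmse(measured, predicted):
    """Root mean square error between measured and predicted values."""
    n = len(measured)
    return math.sqrt(sum((m - p) ** 2 for m, p in zip(measured, predicted)) / n)


def r_squared(measured, predicted):
    """Coefficient of determination (assumed definition of R2)."""
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def nrmse(rmse_value, mean_measured):
    """RMSE normalized by the mean of the measured values (assumed definition)."""
    return rmse_value / mean_measured


# Hypothetical LNC values, only to exercise the functions.
measured = [2.0, 2.5, 3.0]
predicted = [2.1, 2.4, 3.2]
print(f"R2 = {r_squared(measured, predicted):.2f}")
print(f"RMSE = {rmse(measured, predicted):.3f}")

# Consistency check against the CASI results without soil removal,
# using the stage means from the LNC statistics table: (RMSE, mean, reported NRMSE %).
reported = {
    "V12": (0.3272, 2.38, 13.77),
    "R1": (0.2439, 2.63, 9.27),
    "R3": (0.3422, 2.36, 14.50),
}
for stage, (rmse_value, mean_lnc, reported_nrmse) in reported.items():
    computed = 100.0 * nrmse(rmse_value, mean_lnc)
    print(f"{stage}: computed NRMSE = {computed:.2f}%, reported = {reported_nrmse:.2f}%")
```

Under these assumptions the recomputed NRMSE values agree with the tabulated ones to within a few hundredths of a percentage point, which is compatible with rounding of the stage means.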

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, X.; Fan, L.; Li, Z.; Meng, Y.; Feng, H.; Yang, H.; Xu, B. Estimating Leaf Nitrogen Content in Corn Based on Information Fusion of Multiple-Sensor Imagery from UAV. Remote Sens. 2021, 13, 340. https://doi.org/10.3390/rs13030340
