Abstract

In recent years, unmanned aerial vehicles (UAVs) have emerged as a popular and cost-effective technology for capturing remote sensing (RS) images with high spatial and temporal resolution for a wide range of precision agriculture applications. Such images can help reduce costs and environmental impacts by providing detailed agricultural information to optimize field practices. Furthermore, deep learning (DL) has been successfully applied as an intelligent tool in agricultural applications such as weed detection and crop pest and disease detection. However, most DL-based methods place high demands on computation, memory and network resources. Cloud computing can increase processing efficiency with high scalability and low cost, but it results in high latency and puts great pressure on network bandwidth. The emergence of edge intelligence, although still at an early stage, provides a promising solution for running artificial intelligence (AI) applications on intelligent edge devices at the edge of the network, close to the data sources. These devices (e.g., UAVs and Internet of Things gateways) have built-in processors that enable onboard analytics or AI. Therefore, this paper presents the first comprehensive survey of the latest developments in precision agriculture with UAV RS and edge intelligence.
The major insights are as follows: (a) in terms of UAV systems, small or light fixed-wing and industrial rotary-wing UAVs are widely used in precision agriculture; (b) sensors on UAVs can provide multi-source datasets, but only a few public UAV datasets exist for intelligent precision agriculture, mainly from RGB sensors and a few from multispectral and hyperspectral sensors; (c) DL-based UAV RS methods can be categorized into classification, object detection and segmentation tasks, and convolutional neural networks and recurrent neural networks are the most commonly used network architectures; (d) cloud computing is a common solution for UAV RS data processing, while edge computing brings computing close to the data sources; (e) edge intelligence is the convergence of artificial intelligence and edge computing, in which model compression, especially parameter pruning and quantization, is currently the most important and widely used technique, and typical edge resources include central processing units, graphics processing units and field programmable gate arrays.

1. Introduction

Agriculture is the foundation of society and national economies, and one of the most important industries in China. Acquiring timely and reliable agricultural information, such as crop growth and yields, is crucial for establishing policies and plans for food security, poverty reduction and sustainable development. In recent years, precision agriculture (PA) has developed rapidly. According to the International Society of Precision Agriculture (ISPA), PA is a management strategy that gathers, processes and analyzes temporal, spatial and individual data and combines them with other information to support management decisions according to estimated variability, for improved resource use efficiency, productivity, quality, profitability and sustainability of agricultural production [1,2]. It can help reduce costs and environmental impacts by providing farmers with detailed spatial information to optimize field practices [3,4].

The traditional way of obtaining the prerequisite knowledge for PA relies on labor-intensive and subjective field investigation, which consumes considerable time and financial resources. Since remote sensing (RS) allows information to be gathered frequently, without physical contact and at low cost [5], it has been widely used as a powerful tool for rapid, accurate and dynamic agricultural applications [6,7]. RS data are mainly collected by three kinds of platforms: spaceborne, airborne and ground-based [5]. Spaceborne platforms, i.e., satellites, provide large-scale spatial coverage but can suffer from fixed and long revisit periods and cloud occlusion, which limits their use for fine-scale PA [8,9]. Additionally, relatively low spatial and temporal resolution and high equipment costs are critical bottlenecks [10]. Ground-based remote sensors (onboard vehicles, ships, or fixed or movable elevated platforms) are suitable for small-scale monitoring. In comparison, airborne platforms can collect data with high spatial resolution and offer flexible flight configurations, such as observation angles and flight routes [7]. An unmanned aerial vehicle (UAV) is a powered aerial vehicle without a human operator on board, which can fly autonomously or be controlled remotely while carrying various payloads [11]. Owing to their flexible data acquisition and high spatial resolution [12], UAVs are evolving quickly and provide a powerful technical approach for rapid and nondestructive PA applications, for example, crop state mapping [13,14], crop yield prediction [15,16], disease detection [17,18] and weed management [19,20].

Compared with traditional mechanism-based methods, machine learning (ML) methods have long been applied in a variety of agricultural applications to discover patterns and correlations, owing to their ability to handle linear and non-linear problems with large numbers of inputs [7,21]. For example, Su et al. [22] proposed a support vector machine-based crop model for large-scale simulation of rice production in China, and Everingham et al. [23] used a random forest model to predict sugarcane yield with simulated and observed variables as inputs. An ML pipeline typically consists of a feature extraction step followed by a classification or regression module for prediction, and its performance relies heavily on handcrafted feature extraction techniques [24,25]. In recent years, with the growth of computing and storage capability, deep learning (DL) has improved the state of the art in a variety of tasks, including computer vision, natural language processing and speech recognition. DL models are composed of multiple “deep” layers that learn representations of data at multiple levels of abstraction and discover intricate structure in large datasets by using the backpropagation algorithm [26]. In the RS community, DL has also emerged as an intelligent and robust tool [30] for typical PA applications with UAV data, such as weed detection [27], crop and plant counting [28], and land cover and crop type classification [29].
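The two-stage pipeline described above (handcrafted features, then a separate prediction module) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the per-band mean and standard deviation are hypothetical example features, the nearest-centroid rule is a deliberately simple stand-in for the support vector machine and random forest models cited above, and the patches are synthetic.

```python
from math import dist
from statistics import mean, pstdev


def handcrafted_features(patch):
    """Hypothetical handcrafted features: per-band mean and population std.

    `patch` is a list of rows; each pixel is a tuple of band values.
    """
    pixels = [px for row in patch for px in row]
    features = []
    for band in range(len(pixels[0])):
        values = [px[band] for px in pixels]
        features.extend([mean(values), pstdev(values)])
    return features


def fit_centroids(samples):
    """Average the feature vectors of each class: {label: centroid}."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {label: [mean(col) for col in zip(*vectors)]
            for label, vectors in grouped.items()}


def predict(centroids, features):
    """Assign the label of the nearest centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Toy two-band (red, near-infrared) reflectance patches; values are synthetic.
crop = [[(0.05, 0.50), (0.06, 0.55)], [(0.04, 0.52), (0.05, 0.48)]]
soil = [[(0.20, 0.25), (0.22, 0.27)], [(0.21, 0.24), (0.19, 0.26)]]
model = fit_centroids([(handcrafted_features(crop), "crop"),
                       (handcrafted_features(soil), "soil")])

query = [[(0.06, 0.50), (0.05, 0.53)], [(0.05, 0.49), (0.04, 0.51)]]
print(predict(model, handcrafted_features(query)))  # crop
```

The classifier only ever sees the hand-designed feature vector, so if those features miss the discriminative information, no choice of classifier recovers it; this is the limitation DL addresses by learning the features from data.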

DL has achieved high accuracy in PA. For instance, the DL model in [27] provides much better weed detection results in a bean field than ML methods, with a performance gain of more than 20%, and many other DL-based PA applications have shown similarly promising superiority. However, the success of DL comes at the cost of high computational, memory and network requirements at both the training and inference stages [31]. For example, VGG-16, an early classic convolutional neural network (CNN) for image classification, contains around 140 million parameters, consumes over 500 MB of memory and requires around 15 billion floating-point operations (FLOPs) [32]. It is therefore challenging to deploy deep neural network models onboard mobile airborne and spaceborne platforms with limited computation, storage, power and bandwidth resources [33]. A common way to meet the computational requirements of DL is cloud computing, where data are moved from data sources located at the network edge, such as smartphones and Internet of Things (IoT) sensors, to the cloud [31]. However, the cloud-computing mode can put great pressure on network bandwidth and cause significant latency when massive data are moved across the wide area network (WAN) [34]. Privacy leakage is also a major concern [35]. The emergence of edge computing addresses these issues.
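The parameter and memory figures quoted for VGG-16 can be checked with simple arithmetic. The sketch below assumes the standard ImageNet configuration (224 × 224 RGB input, thirteen 3 × 3 convolutional layers, three fully connected layers, 1000 output classes) and counts weights plus biases; the function and constant names are illustrative.

```python
# Assumed VGG-16 layout: integers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
FC_LAYERS = [4096, 4096, 1000]  # two hidden FC layers and a 1000-class output


def vgg16_param_count(in_channels=3, input_size=224):
    """Count trainable weights and biases of the assumed VGG-16 layout."""
    params = 0
    channels, size = in_channels, input_size
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2  # pooling halves the spatial size and has no parameters
        else:
            params += 3 * 3 * channels * layer + layer  # conv weights + biases
            channels = layer
    features = channels * size * size  # flattened 512 x 7 x 7 feature map
    for width in FC_LAYERS:
        params += features * width + width  # dense weights + biases
        features = width
    return params


n = vgg16_param_count()
print(n)                                  # 138357544
print(f"{n * 4 / 1e6:.1f} MB as float32")  # 553.4 MB as float32
```

Storing roughly 138 million 32-bit values takes about 553 MB, consistent with the “over 500 MB” figure; this counts weights only, and activations add further memory during inference. Nearly 90% of the parameters sit in the three fully connected layers.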

According to the Edge Computing Consortium (ECC), edge computing is a distributed open platform at the network edge, close to the things or data sources, that integrates the capabilities of networks, computing, storage and applications [36]. In this computing paradigm, data do not need to be sent to the cloud or other remote centralized or distributed systems for processing. The combination of edge computing and artificial intelligence (AI) yields edge intelligence, the next stage of edge computing, which aims to use AI technology to empower the edge. The edge is a relative concept that refers to any computing, storage and network resources along the path from the data source to the cloud computing center; the resources on this path can be regarded as a continuous system. Currently, there is no internationally accepted formal definition of edge intelligence. Most organizations refer to edge intelligence as the paradigm of running AI algorithms locally on an end device, with data created on that device [34]. It enables AI algorithms to be deployed on intelligent edge devices close to the data sources that have built-in processors for onboard analytics or AI (e.g., UAVs, sensors and IoT gateways) [34,37]. However, a growing number of researchers consider that edge intelligence should not be restricted to running AI models on edge devices or servers. A broader definition divides edge intelligence into AI for edge (intelligence-enabled edge computing) and AI on edge. The former uses AI technologies to provide optimal solutions to key problems in edge computing, while the latter focuses on carrying out the entire process of building AI models, i.e., model training and inference, on the edge [38]. Zhou et al. further define six levels of edge intelligence to fully exploit the available data and resources across end devices, edge nodes and cloud datacenters, thus optimizing the performance of training and inference of an AI model [34].

Many reviews already cover agriculture with UAVs [2,8,9,39,40,41] and with DL [24,42,43,44]. However, the research and practice of edge intelligence are still at an early stage, and to the best of our knowledge, no review has yet covered advances combining edge intelligence and UAV RS in PA. Therefore, in this paper we attempt to provide an in-depth and comprehensive survey of the latest developments in PA with UAV RS and edge intelligence. The main contributions of this paper are as follows:

1. The most relevant DL techniques and their latest implementations in PA are reviewed in detail. In particular, this paper compiles a comprehensive list of publicly available UAV-based RS datasets for intelligent agriculture to facilitate the validation of DL-based methods in the community.

2. The cloud computing and edge computing paradigms for UAV RS in PA are discussed.

3. The relevant edge intelligence techniques for UAV RS in PA are thoroughly reviewed and analyzed, for the first time to the best of our knowledge. In particular, this paper compiles intelligent edge devices for UAVs and reviews the latest developments in edge inference with model compression in detail.

The remainder of this paper is structured as follows. Section 2 presents the application of UAV RS technology in PA, including the fundamentals of UAV systems, RS sensors and typical PA applications. Section 3 reviews the DL methods and publicly available datasets used in PA. Section 4 analyzes edge intelligence for UAV RS in PA in depth, including the cloud and edge computing paradigms, the basic concepts and major components of edge intelligence, network model design and edge resources. Future directions are given in Section 5, and conclusions are drawn in Section 6.

2. UAV Remote Sensing in Precision Agriculture

2.1. UAV Systems and Sensors for Precision Agriculture

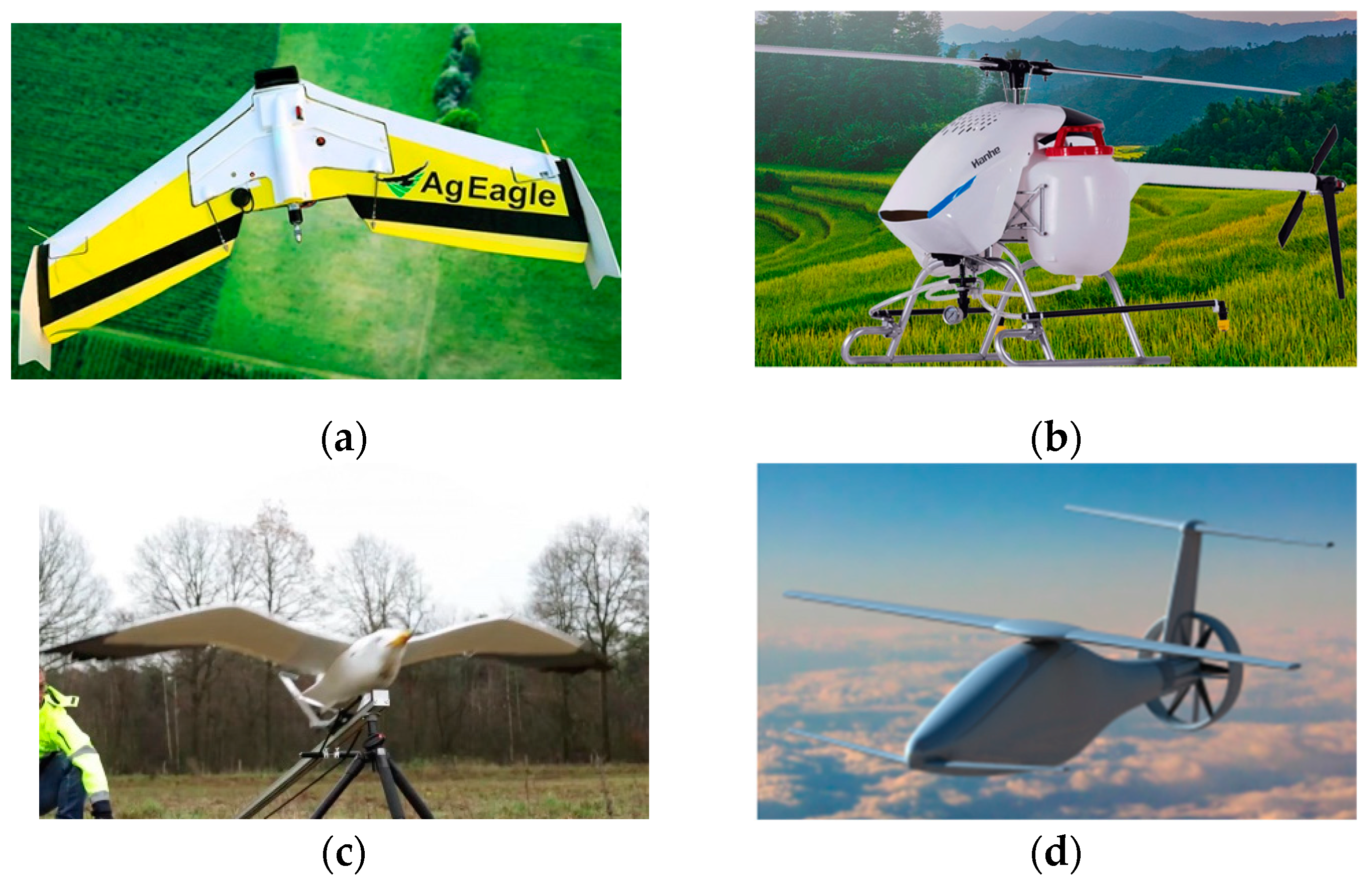

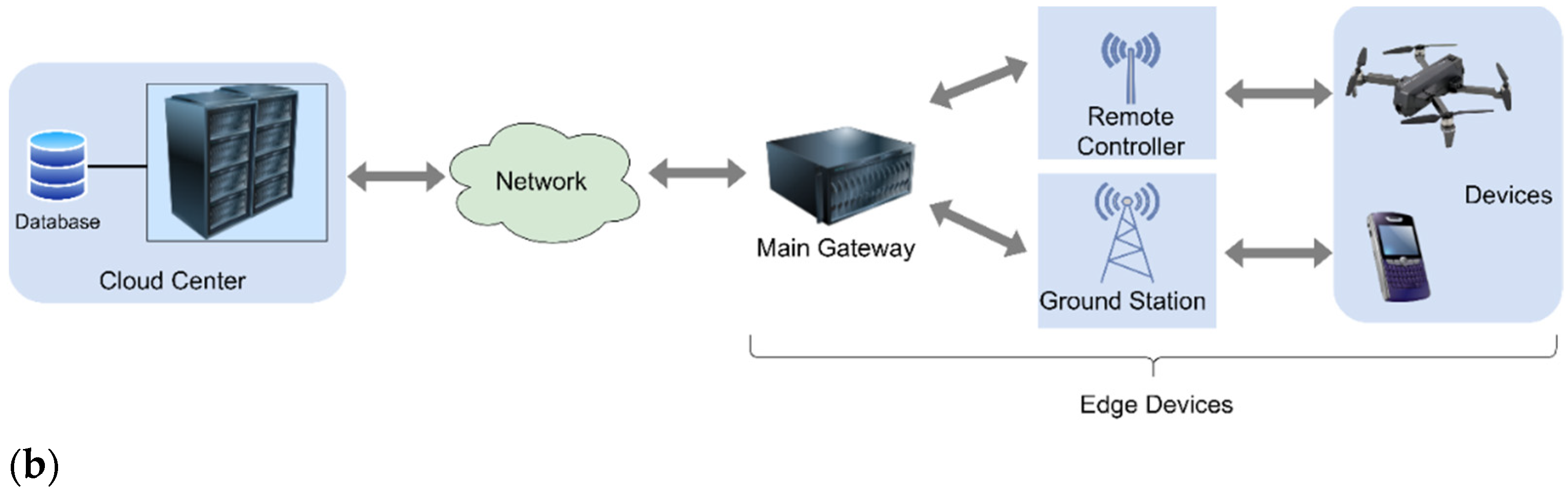

UAV systems differ in size, weight, payload, power, endurance, purpose and other aspects, and many taxonomies exist. According to the Civil Aviation Administration of China, UAVs serve mainly military and civilian purposes, and agriculture belongs to the latter. In terms of operational risk, which mainly considers the size and weight of a UAV and its ability to carry payloads during missions, civilian UAVs can be divided into mini, light, small, medium and large UAVs [45]. Their major characteristics are listed in Table 1. In addition, according to their aerodynamic features, UAVs are usually classified into fixed-wing, rotary-wing, flapping-wing and hybrid UAVs, as shown in Table 2 [9,46,47]. For fixed-wing UAVs, the main wing surface that generates lift is fixed relative to the fuselage, and a power device generates the forward thrust. Rotary-wing UAVs have power devices and rotor blades that rotate relative to the fuselage to generate lift during flight; they mainly include unmanned helicopters and multi-rotor UAVs (e.g., tricopters, quadcopters, hexacopters and octocopters), which can take off, land and hover vertically. Flapping-wing UAVs obtain lift and propulsion by flapping their wings up and down like birds and insects, and are suitable for small, light and mini UAVs. Hybrid UAVs combine basic layout types, mainly including tilt-rotor UAVs and rotor/fixed-wing UAVs. Figure 1 shows examples of typical UAVs.

Table 1.

Categories and characteristics of UAVs according to operational risks [45].

Table 2.

Categories and characteristics of UAVs according to aerodynamic features [9,46,47].

Figure 1.

Examples of typical UAVs: (a) A fixed-wing UAV “AgEagle RX60” from AgEagle (https://ageagle.com/agriculture/# (accessed on 17 October 2021)); (b) An unmanned helicopter “Shuixing No.1” from Hanhe (http://www.hanhe-aviation.com/products2.html (accessed on 17 October 2021)); (c) A flapping-wing UAV from the Drone Bird Company “AVES Series” (https://www.thedronebird.com/aves/ (accessed on 17 October 2021)); (d) A hybrid UAV “Linglong” from Northwestern Polytechnical University (https://wurenji.nwpu.edu.cn/cpyf/cpjj1/xzjyfj_ll_.htm (accessed on 17 October 2021)).

In the agricultural RS applications considered in this paper, UAVs generally weigh less than 116 kg, most belong to the “small” (≤15 kg) or “light” (≤7 kg) categories, and they fly lower than 1 km, i.e., at low altitude (100 to 1000 m) or ultra-low altitude (1 to 100 m) [9,45]. Flapping-wing and hybrid UAVs are rarely used; fixed-wing UAVs and industrial rotary-wing UAVs are currently the mainstream. In particular, since multi-rotor UAVs are more cost-effective than the other types and generally more stable than unmanned helicopters during flight, they are the most widely used in PA [8].
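Flight altitude matters because, for a given camera, the ground sampling distance (GSD), i.e., the ground footprint of one pixel, grows linearly with height. The sketch below uses the standard pinhole relation for a nadir-looking camera over flat terrain; the camera parameters are hypothetical and not taken from any cited system.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Nadir GSD of a pinhole camera over flat ground, in centimetres per pixel.

    GSD [m/px] = sensor width [mm] * altitude [m] / (focal length [mm] * image width [px])
    """
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


# Hypothetical RGB camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
for altitude in (30, 100, 1000):
    gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, altitude)
    print(f"{altitude:>5} m -> {gsd:.2f} cm/pixel")
```

With these assumed parameters, GSD is below 1 cm per pixel at 30 m but over 27 cm per pixel at 1000 m, which is why tasks that need leaf-level detail are usually flown at ultra-low altitude.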

UAVs can also be equipped with a variety of payloads for different purposes. To capture agricultural information, UAVs used in PA generally carry remote sensors such as RGB imaging sensors, multispectral and hyperspectral imaging sensors, thermal infrared sensors, light detection and ranging (LiDAR), and synthetic aperture radar (SAR) [9,48,49]. Their major characteristics and applications in PA are summarized in Table 3.

Table 3.

The major characteristics and applications of sensors mounted on UAVs in PA.

2.2. Application of UAV Remote Sensing in Precision Agriculture

The major objectives of PA are to increase crop yields, improve product quality, use agrochemical products efficiently, save energy and protect the physical environment against pollution [47]. Owing to their cost-effective, high-resolution imagery [67], UAVs are now commonly used in PA, mainly for monitoring [12,68,69,70] and spraying [71,72,73]. In monitoring, different sensors onboard UAVs capture RS data that are used to identify specific spatial features and time-variant information on crop characteristics; in spraying, UAV systems apply accurate amounts of pesticides and fertilizers to mitigate diseases and pests and to increase crop yields and product quality [47]. RS provides an effective tool for UAV-based PA monitoring, and the most common applications are as follows.

- Weed detection and mapping: As weeds are responsible for most agricultural yield losses, herbicides are important for crop growth, but their unreasonable use causes a series of environmental problems. To achieve precise weed management [19,74], UAV RS can help to accurately locate weed areas and analyze weed types and density, so that herbicides can be applied in precise quantities at specific locations or improved, targeted mechanical soil tillage can be used [27]. Weed detection and mapping aims to find and map the locations of weeds in UAV RS images, and is generally based on differences between weeds and crops in spatial distribution [27,75], shape [76], spectral signatures [53,77,78,79], or their combinations [80]. Accordingly, the most important UAV payloads are RGB sensors [27,76,77,80], multispectral sensors [53,78] and hyperspectral sensors [79].

- Crop pest and disease detection: Field crops are subject to attack by various pests and diseases at all stages from sowing to harvest, which affects crop yield and quality and has become one of the main constraints on agricultural production in China. As the main part of pest and disease management, early detection of pests and diseases from UAV RS images allows efficient application of pesticides and an early response that limits production costs and environmental impact. Crop pest and disease detection aims to locate possible pest- or disease-infected areas on leaves in UAV RS images, mainly on the basis of their spectral differences [81]. To capture more details of pests and diseases on leaves, UAVs usually fly at low altitude to acquire observations with high spatial or spectral resolution [82,83,84]. Commonly mounted sensors are RGB sensors [83,85,86,87], multispectral sensors [88], infrared sensors [89] and hyperspectral sensors [82,84].

- Crop growth monitoring: RS can be used to monitor both group-level and individual characteristics of crop growth, e.g., seedling condition, growth status and changes. Crop growth monitoring is fundamental for regulating crop growth, diagnosing crop nutrient deficiencies, and analyzing and predicting crop yield, and it can provide a basis for decision-making in agricultural policy formulation and food trade. It aims to build a multitemporal crop model that allows different phenological stages to be compared [90], and UAVs provide a good platform for obtaining crop information [91]. Crop growth is generally quantified by several indices, such as leaf area index (LAI), leaf dry weight and leaf nitrogen accumulation, which usually require multiple spectral bands. As this is a relatively comprehensive task, the sensors onboard UAVs are usually multispectral or hyperspectral [91,92], or a combination of RGB and infrared [93] or LiDAR [63] sensors.

- Crop yield estimation: Accurate yield estimates are essential for predicting the volume of stock needed and organizing harvesting operations. RS information can be used, alone or in combination with other information, as input variables or parameters that directly or indirectly reflect the factors influencing crop growth and yield formation. Yield estimation aims to estimate crop yield non-destructively by observing the morphological characteristics of crops [16]. Similar to crop growth monitoring, crop yield estimation relies on multiple spectral bands for richer information. Therefore, UAVs are usually equipped with multimodal sensors, for example, hyperspectral or multispectral [15,16,94,95,96,97] and thermal infrared [95] sensors, combined with RGB [15,16,94,95,96,97] or SAR [66] sensors.

- Crop type classification: Crop type maps are essential inputs for agricultural tasks such as crop yield estimation, and accurate crop type identification is important for subsidy control, biodiversity monitoring, soil protection and sustainable fertilizer application. Previous studies have explored discriminating crop types from RS images in complex and heterogeneous environments. Crop type classification aims to map different crop types based on the information captured by RS data, and is similar to land cover/land use classification [98]. Depending on the task, it can be performed at different spatial scales: SAR sensors are used for larger-scale classification [64,99,100], while at smaller scales RGB images from UAVs can be used [101], optionally fused with SAR data [102].
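Several of the tasks above exploit spectral differences between vegetation and background. A common first step is a vegetation index: the Excess Green index (ExG) for RGB imagery and the Normalized Difference Vegetation Index (NDVI) for multispectral imagery with a near-infrared band. The sketch below is an illustrative, swapped-in technique rather than the method of any cited study; the 0.1 threshold and the pixel values are assumptions (in practice the threshold is often chosen automatically, for example with Otsu's method).

```python
def excess_green(r, g, b):
    """Excess Green on chromatic coordinates: 2g - r - b = (2G - R - B) / (R + G + B)."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no chromatic information
    return (2 * g - r - b) / total


def ndvi(nir, red):
    """Normalized Difference Vegetation Index, in [-1, 1] for non-negative inputs."""
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


def vegetation_mask(rgb_image, threshold=0.1):
    """True where ExG exceeds a fixed threshold (0.1 is an illustrative assumption)."""
    return [[excess_green(*px) > threshold for px in row] for row in rgb_image]


# Hypothetical 2x2 RGB patch: left column green plants, right column bare soil.
patch = [[(60, 140, 50), (120, 100, 90)],
         [(55, 150, 45), (130, 110, 95)]]
print(vegetation_mask(patch))               # [[True, False], [True, False]]
print(round(ndvi(nir=0.50, red=0.08), 3))   # 0.724
```

ExG separates vegetation from soil, not weeds from crops; distinguishing those typically needs the spatial distribution and shape cues listed above, or learned features.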

3. Deep Learning in Precision Agriculture with UAV Remote Sensing

3.1. Deep Learning Methods in Precision Agriculture

DL is a subset of artificial neural network (ANN) methods in machine learning. Like conventional ANNs, DL models consist of connected layers of neurons with activation functions, but they have more hidden layers and deeper, more complex combinations, which allow them to learn better representations than a common ANN. The concept of DL was proposed in 2006 by Hinton et al. [103], who solved key issues in training deep ANNs. With advances in the computational capacity of hardware and the availability of large amounts of labeled samples, large-scale training and inference of DL became possible and efficient, allowing DL to outperform traditional ML methods in a variety of applications. In the last decade, DL methods have gained increasing attention and have become the de facto mainstream of ML. For fundamental details of DL models, such as activation functions, loss functions, optimizers and basic structures, see [26].

Depending on the type of data processed and the network architecture, different types of DL models have been designed; representative ones include CNNs, recurrent neural networks (RNNs) and generative adversarial networks (GANs). These three types of architectures are the most widely used in agricultural applications, especially with UAV RS. CNNs are designed to deal with grid-like data such as images, and are therefore well suited to supervised image processing and computer vision applications. A CNN is usually composed of three kinds of hierarchical layers: convolutional layers, pooling layers and fully connected layers. Typical CNN architectures include AlexNet [104], GoogLeNet [105] and ResNet [106]. RNNs, as supervised models, have been applied to time-series data by modeling time-related features. One typical RNN architecture is long short-term memory (LSTM), whose basic unit can remember information over arbitrary time intervals. Another architecture that has become popular and successful in recent years is the GAN [107]. A GAN consists of two sub-networks: a generative network and a discriminative network. Its main idea comes from the zero-sum game, in which the generative network tries to generate samples that are as realistic as possible and the discriminative network tries to distinguish generated samples from real ones. In the field of RS, GANs have also been applied to image-to-image translation [108,109] and sample augmentation [110,111].
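The convolution, pooling and fully connected structure of a CNN can be illustrated with a deliberately tiny forward pass in plain Python. The Sobel-style kernels and the hand-set fully connected weights are assumptions for illustration; in real CNNs such as AlexNet or ResNet these values are learned by backpropagation, there are many channels per layer, and frameworks implement the layers far more efficiently.

```python
def conv2d(image, kernel, bias=0.0):
    """'Valid' 2-D cross-correlation of a single-channel image, as in CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw)) + bias
             for j in range(out_w)] for i in range(out_h)]


def relu(fmap):
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling (an odd trailing row or column is dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def fully_connected(features, weights, biases):
    """Dense layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]


def tiny_cnn(image, kernels, fc_weights, fc_biases):
    """conv -> ReLU -> max-pool for each kernel, then flatten and score classes."""
    features = []
    for kernel in kernels:
        pooled = max_pool2x2(relu(conv2d(image, kernel)))
        features.extend(x for row in pooled for x in row)
    return fully_connected(features, fc_weights, fc_biases)


# Image with a vertical edge: dark left half, bright right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
sobel_x = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
sobel_y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges

# Each kernel yields a 4x4 map, pooled to 2x2, giving 8 features in total.
fc_weights = [[0.25] * 4 + [0.0] * 4,   # score for "vertical structure"
              [0.0] * 4 + [0.25] * 4]   # score for "horizontal structure"
scores = tiny_cnn(image, [sobel_x, sobel_y], fc_weights, [0.0, 0.0])
print(scores)  # [4.0, 0.0]
```

The vertical-edge kernel fires along the edge while the horizontal one stays silent, so the first class scores highest; pooling keeps the response while shrinking each map fourfold, which is what lets deeper layers see larger regions at lower cost.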

In UAV RS scenarios, most applications use images captured by cameras as inputs, i.e., they are computer vision tasks. Accordingly, UAV RS tasks in PA that use DL methods (mainly CNNs) can be divided into three principal computer vision tasks: classification, detection and segmentation [112].

- Classification tries to predict the presence/absence of at least one object of a particular object class in the image, and DL algorithms are required to provide a real-valued confidence of the object’s presence. Classification methods are mainly used to recognize crop diseases [86,113,114], weed type [27,115,116], or crop type [117,118].

- Detection tasks try to predict the bounding box of each object of a particular class in the image with an associated confidence, i.e., answer the question “where are the instances in the image, if any?” The extracted object information is therefore more precise than in classification. Typical applications include finding crops with pests [119] or other diseases [120], locating weeds in images [121,122], and counting crops for yield estimation [123,124,125] or disaster evaluation [126].

- Segmentation predicts the object class label (semantic segmentation) or instance label (instance segmentation) of each pixel in the test image, and can be viewed as a more precise, per-pixel classification. It can not only locate objects but also delineate their pixels at a finer-grained level, so segmentation methods are usually used to accurately locate features of interest in images. Semantic segmentation can help locate crop leaf diseases [127,128], generate weed maps [76,78,129], or assess crop growth [130,131] and yields [132], while instance segmentation can detect individual crop and weed plants [133,134] or conduct crop seed phenotyping [135] at a finer level.
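Detection and segmentation outputs are usually compared with ground truth through intersection over union (IoU). The following minimal sketch, assuming boxes are given as (x_min, y_min, x_max, y_max) and label maps as nested lists, shows box IoU for detection and per-class mask IoU for semantic segmentation; it illustrates the metric rather than the evaluation protocol of any particular cited study.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mask_iou(pred, truth, label):
    """Per-class IoU of two label maps (lists of rows) for semantic segmentation."""
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += (p == label and t == label)
            union += (p == label or t == label)
    return inter / union if union else 0.0


print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap 1, union 7
pred = [[1, 1, 0], [0, 1, 0]]   # hypothetical predicted weed (1) / background (0) map
truth = [[1, 0, 0], [0, 1, 1]]
print(mask_iou(pred, truth, label=1))  # 0.5
```

A common convention, used for example in the PASCAL VOC benchmark, counts a detection as correct when its IoU with a ground-truth box exceeds 0.5.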

Overall, the three principal kinds of computer vision techniques have played a crucial role in UAV-based RS for PA and support various typical applications as mentioned in Section 2.2, mainly including crop pest and disease detection, weed detection and mapping, crop growth monitoring and yield estimation, crop type classification etc. Table 4 shows a compilation of typical examples in PA using DL methods.

Table 4.

DL-based methods for typical UAV RS applications in PA.

3.2. Dataset for Intelligent Precision Agriculture

The sensors integrated with a UAV depend on its purpose, size, weight, power consumption and other factors. A number of reviews discuss sensors on UAVs in the PA field, but to the best of our knowledge, only Zhang et al. [158] have listed datasets of dense agricultural scenes, without indicating whether they are publicly available. Hence, in this paper we compile publicly available labeled UAV datasets for PA applications in Table 5, with descriptions of the platform, data type, major applications and links, to facilitate the development, testing and comparison of relevant DL methods. We also summarize available labeled datasets for related tasks from sensors onboard satellites, which may be used to obtain pre-trained models.

Table 5.

A compilation of publicly available datasets with labels for PA applications.

4. Edge Intelligence for UAV RS in Precision Agriculture

4.1. Cloud and Edge Computing for UAVs

4.1.1. Cloud Computing Paradigm for UAVs

Cloud computing is a computing paradigm that provides end-users with infrastructure, platforms, software and other on-demand shared services by integrating large-scale, scalable computing, storage, data, applications and other distributed computing resources over an Internet connection [172,173]. The main characteristic of cloud computing is a change in the way resources are used: end-users normally run applications on their end devices, while the core services and processing are performed on cloud servers. End-users do not need to master the corresponding technology or the skills needed for device maintenance, and can focus only on the required services. This improves service quality while reducing operation and maintenance costs. The key services offered by cloud computing include infrastructure as a service (IaaS), platform as a service (PaaS) and software as a service (SaaS) [174]. The cloud-computing paradigm provides the following major advantages:

- The number of servers in the cloud is huge, providing users with powerful computing and storage resources for massive UAV RS data processing.

- Through virtualization, cloud computing allows users to obtain services at any location from various terminals, such as a laptop or a phone.

- As a distributed computing architecture, cloud computing inevitably faces issues such as single-point failures, but fault-tolerant mechanisms such as replication strategies ensure high reliability for various processing and analysis services.

- Benefiting from the scalability of cloud computing, cloud centers can dynamically allocate or release resources according to the needs of specific users, and can meet the dynamically growing requirements of applications and users.

Several studies have constructed cloud-based systems for UAV RS. Jeong et al. [175] proposed a UAV control system that performs real-time image processing in a cloud system and controls a UAV according to the computed results; the UAV contains only the minimal essential control functions and shares data with the cloud server via WiFi. In [176], a cloud-based environment for generating yield estimation maps of apple orchards from UAV images is presented. The DL model for apple detection is trained and verified offline in the Google Colab cloud service, with the aid of GIS tools. Similarly, Ampatzidis et al. [143] developed Agroview, a cloud- and AI-based application that accurately and rapidly processes, analyzes and visualizes data collected from UAVs. Agroview uses Amazon Web Services (AWS), which provides highly reliable and scalable infrastructure for deploying cloud-based applications, with a main application control machine serving as the user interface, one instance for central processing unit (CPU)-intensive tasks such as data stitching, and one instance for graphics processing unit (GPU)-intensive tasks such as the tree detection algorithm.

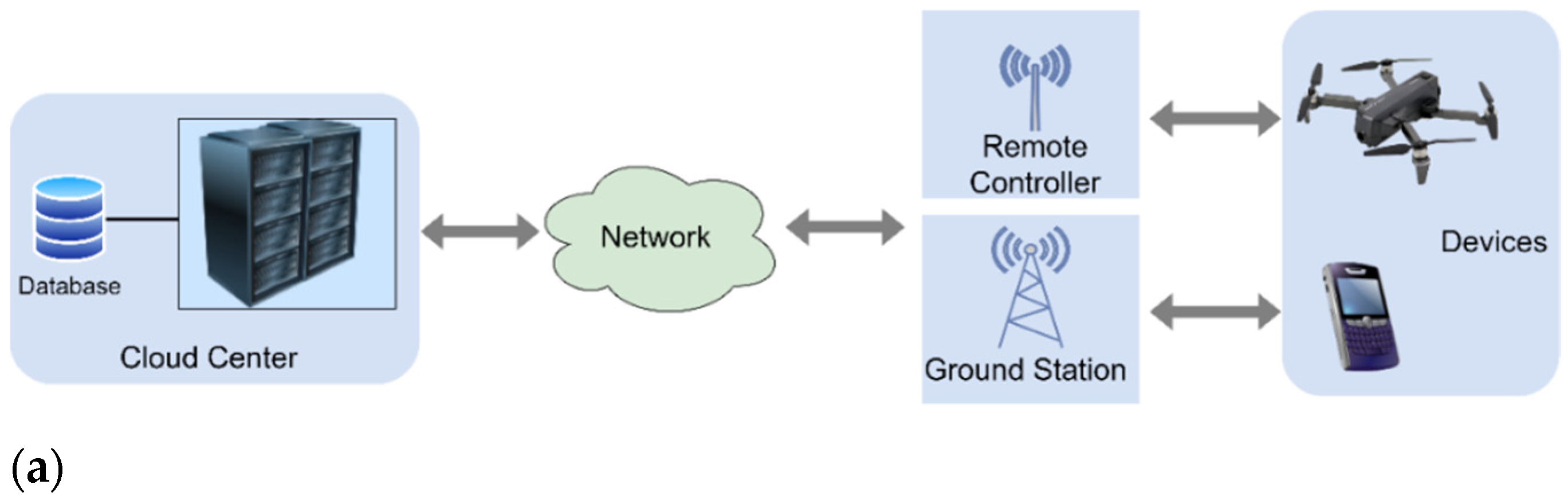

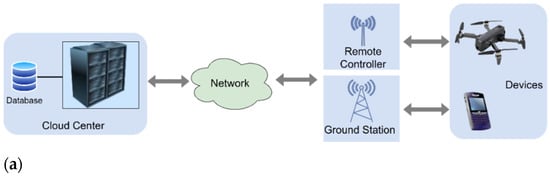

Current cloud-based applications generally follow the pipeline shown in Figure 2a. This cloud-centric pattern suffers from the following disadvantages [177]. (a) With the growing quantity of data generated at the edge, the speed of data transport through the network is becoming the bottleneck of the cloud-computing paradigm. (b) The number of sensors at the edge of the network is increasing dramatically and the data they produce will be enormous, making conventional cloud computing insufficiently efficient to handle all these data. (c) In the cloud-computing paradigm, end devices at the edge usually act as data consumers. Their change from data consumers to data producers and consumers requires more functions to be placed at the edge.

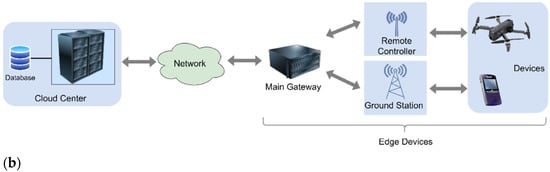

Figure 2.

Cloud computing paradigm and edge computing paradigm for UAV RS. (a) Cloud computing paradigm; (b) Edge computing paradigm.

4.1.2. Edge Computing Paradigm for UAVs

Edge computing addresses these disadvantages by bringing computing and storage resources to the edge of the network, close to mobile devices or sensors [177]. In the edge computing paradigm, the edge can perform computation offloading, data storage, caching and processing, as well as distribute requests and deliver services from the cloud to end-users. In recent years, edge computing has attracted tremendous attention for its low latency, mobility, proximity to end-users and location awareness compared with the cloud computing paradigm [137,172,178], as shown in Figure 2b. Compared with cloud computing, edge computing has the following characteristics:

- With the rapid development of IoT, devices around the world generate massive data, of which only a small portion is critical; most data are temporary and do not require long-term storage. Processing large amounts of temporary data at the edge of the network reduces the pressure on network bandwidth and data centers.

- Although cloud computing can compensate for the limited computing, storage and power resources of mobile devices, network transmission speed is limited by communication technology, and complex network environments suffer from issues such as unstable links and routing. These factors can cause high latency, excessive jitter and slow data transmission, reducing the responsiveness of cloud services. Edge computing provides services near users, which can enhance responsiveness.

- Edge computing provides infrastructures for the storage and use of critical data and improves data security.

For UAV-based RS applications in PA, edge computing is ideal for online tasks that require the above features. There are two ways to avoid transferring massive data to the cloud: the first is to implement a real-time onboard processing platform on the UAV and deliver only the key information to the network; the second is to deploy a local ground station for UAV data processing.
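The benefit of delivering only key information can be made concrete with simple arithmetic. The sketch below assumes, purely for illustration, a flight of 500 frames of 12 MB each, a 20 Mbit/s uplink and about 2 kB of onboard detection results per frame; none of these figures come from the surveyed studies, and the calculation ignores protocol overhead and link instability.

```python
# Back-of-the-envelope upload times; every input below is a hypothetical assumption.

def transfer_time_s(payload_bytes: float, link_mbps: float) -> float:
    """Seconds needed to send `payload_bytes` over a link of `link_mbps` Mbit/s."""
    return payload_bytes * 8 / (link_mbps * 1e6)


frames = 500                # assumed number of images per flight
raw_frame_bytes = 12e6      # assumed size of one raw RGB frame (12 MB)
result_frame_bytes = 2e3    # assumed size of onboard results per frame (2 kB)
link_mbps = 20.0            # assumed uplink bandwidth

raw_time = transfer_time_s(frames * raw_frame_bytes, link_mbps)
key_info_time = transfer_time_s(frames * result_frame_bytes, link_mbps)

print(f"raw imagery to the cloud: {raw_time:.0f} s")       # 2400 s
print(f"key information only:     {key_info_time:.1f} s")  # 0.4 s
```

Under these assumptions, onboard processing shrinks the upload from 40 minutes to well under a second; in practice this saving has to be weighed against the onboard computation cost.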

As computing and storage resources are quite limited, the computing workflow and algorithms must be optimized. For example, Li et al. [120] developed an airborne edge computing system for detecting pine wilt disease in coniferous forests. Images captured by an onboard camera are passed directly to the edge computing module, in which a lightweight YOLO model is implemented because of the limited processing and storage resources. Similarly, Deng et al. [137] used a lightweight segmentation model for real-time weed mapping on an NVIDIA Jetson TX board. Camargo et al. [115] optimized a ResNet model and changed its precision from 32-bit to 16-bit to reduce computation on an NVIDIA Jetson AGX Xavier embedded system. Other researchers [179,180] have also exploited the acceleration of traditional image processing algorithms for various RS data types on UAV platforms.

4.2. Edge Intelligence: Convergence of AI and Edge Computing

Artificial intelligence methods are computationally and storage intensive. Although they achieve excellent performance in most applications, they place high computing and storage demands on resources, which makes them challenging to deploy in real applications. The emergence of edge computing addresses these key problems for AI applications on edge devices. The combination of edge computing and artificial intelligence yields edge intelligence [38]. There is currently no formal, internationally accepted definition of edge intelligence. It is commonly regarded as the paradigm of running AI algorithms locally on an intelligent edge device [34]. Researchers have also proposed a broader definition that includes both AI for edge and AI on edge: the former solves problems in edge computing with AI, while the latter corresponds to the common definition [34] and is the focus of this paper. In edge intelligence applications, edge devices can reduce the amount of data transferred to the central cloud and greatly save bandwidth resources. Meanwhile, running DL models on edge devices can lower computing consumption, improve performance and avoid possible privacy risks.

In the scope of this review, the combination of intelligent UAV RS and edge computing enables more effective PA applications. To obtain better performance, DL models tend to be designed deeper and more complex, which inevitably increases latency. Limited by processing and storage resources, UAVs can hardly run these complex DL models directly, and much work remains before such models can be deployed on resource-limited UAV edge platforms for efficient PA applications. According to existing work, the major components of edge intelligence include: (a) edge caching, a distributed data system near end users that collects and stores the data produced by edge devices and the surrounding environment, as well as data received from the Internet; (b) edge training, a distributed learning procedure that learns optimal models from the training set cached at the edge; (c) edge inference, which performs inference on test instances on edge devices and servers using a trained model or algorithm; and (d) edge offloading, a distributed computing paradigm that provides computing services for edge caching, edge training and edge inference [181]. For UAV RS in PA, existing studies mainly focus on edge training and edge inference, especially inference onboard UAVs. On the other hand, algorithms and computing resources are the key elements of an edge intelligence system and its industrial ecosystem. We therefore discuss the relevant developments from the perspectives of model design and edge resources for edge intelligence in PA with UAV RS in Section 4.3 and Section 4.4, respectively.

4.3. Lightweight Network Model Design

To obtain higher classification, detection or segmentation accuracy, deep CNN models are designed with deeper, wider and more complex architectures, which inevitably makes them computation- and storage-intensive. Edge devices, especially UAVs, have limited computing, storage, power and bandwidth resources that can hardly meet the requirements of such intelligent applications.

Research has shown that deep neural networks are structurally redundant. Exploiting this property, compressing deep neural networks can greatly ease the burden of inference and accelerate computation to accommodate deployment on UAV platforms. In recent years, researchers have made great efforts to compress and accelerate deep neural network models from the aspects of algorithm optimization, hardware implementation and co-design [182]. The work by Han et al. [183] is widely considered the first to systematically carry out deep model compression. It prunes network connections to keep only the more important ones, quantizes model parameters to reduce the model size and improve efficiency, and further compresses the model through Huffman coding. Model compression thus reduces computing, memory and power consumption, which makes models easier to deploy on UAV systems.
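The final stage of the pipeline in [183], Huffman coding, exploits the fact that quantized weights are not uniformly distributed. The minimal sketch below assumes the weights have already been pruned and quantized to four shared values, so each weight is stored as a 2-bit cluster index; the index distribution is hypothetical and chosen only to make the effect visible.

```python
import heapq
from collections import Counter


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} of a Huffman code built from symbol frequencies."""
    freq = Counter(symbols)
    if len(freq) == 1:  # a single distinct symbol still needs one bit
        return {next(iter(freq)): 1}
    # heap items: (subtree frequency, unique tie-breaker, {symbol: depth in subtree})
    heap = [(f, i, {s: 0}) for i, (s, f) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        # merging two subtrees pushes every symbol in them one level deeper
        merged = {s: d + 1 for s, d in {**left, **right}.items()}
        heapq.heappush(heap, (f1 + f2, next_id, merged))
        next_id += 1
    return heap[0][2]


# Hypothetical 2-bit cluster indices of 100 quantized weights (skewed distribution).
indices = [0] * 70 + [1] * 20 + [2] * 6 + [3] * 4

fixed_bits = 2 * len(indices)               # fixed-width 2-bit indices
lengths = huffman_code_lengths(indices)
huffman_bits = sum(lengths[s] for s in indices)
print(fixed_bits, huffman_bits)             # 200 140
```

The saving comes purely from the skew: frequent indices get short codes, so the gain of this stage compounds with those of pruning and quantization.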

The mainstream deep model compression methods can be divided into the following categories: (1) lightweight convolution design, (2) parameter pruning, (3) low-rank factorization, (4) parameter quantization, and (5) knowledge distillation. Each compresses the model from a different aspect, and they are often used in combination. Table 6 shows a compilation of lightweight inference applications onboard UAVs for PA. As shown in Table 6, research on edge inference of UAV RS in PA is still at an early stage, with only a few attempts to apply general model quantization and pruning methods. Hence, the following subsections describe each category and its development in detail, also considering domains beyond agriculture for edge inference onboard UAVs.

Table 6.

A compilation of lightweight inference applications onboard UAVs for PA.

4.3.1. Lightweight Convolution Design

Lightweight convolution design refers to the compact design of convolutional filters. Convolutional filters perform translation-invariant feature extraction and account for the majority of CNN operations, which is why lightweight convolution design has been a hot research direction in DL.

It replaces the original heavy convolutional filters with compact ones. Specifically, it transforms a large convolutional filter into several smaller ones and concatenates their results to achieve an equivalent convolution result, as smaller filters are much faster to compute. Typical designs are SqueezeNet [187], MobileNet [188] and ShuffleNet [189]. SqueezeNet designs a fire module composed of a squeeze layer with 1×1 filters that reduces the number of input channels, and an expand layer with a mix of 1×1 and 3×3 filters. MobileNet adopts depthwise separable convolutions to reduce the number of parameters and computations, in which depthwise convolutions are used for feature extraction and pointwise convolutions build features via linear combinations of the input channels. ShuffleNet uses group convolution and channel shuffle to reduce parameters while obtaining results similar to those of the original convolutions.
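The savings of the depthwise separable convolution used by MobileNet can be checked by counting parameters. The NumPy sketch below, a naive loop implementation with "valid" padding and stride 1 written for clarity rather than speed, also shows the two stages: a per-channel k×k depthwise filter followed by a 1×1 pointwise convolution that linearly combines channels. The channel counts are illustrative assumptions and biases are ignored.

```python
import numpy as np


def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # one k x k x c_in filter per output channel (biases ignored)
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    # depthwise: one k x k filter per input channel; pointwise: c_in x c_out 1x1 conv
    return k * k * c_in + c_in * c_out


def depthwise_separable_conv(x, dw, pw):
    """x: (H, W, C_in), dw: (k, k, C_in), pw: (C_in, C_out); valid padding, stride 1."""
    k = dw.shape[0]
    h, w, c_in = x.shape
    out_h, out_w = h - k + 1, w - k + 1
    depth = np.zeros((out_h, out_w, c_in))
    for i in range(out_h):
        for j in range(out_w):
            # depthwise step: filter each channel separately, no sum across channels
            depth[i, j] = np.sum(x[i:i + k, j:j + k, :] * dw, axis=(0, 1))
    # pointwise step: 1x1 convolution = per-pixel linear combination of channels
    return depth @ pw


k, c_in, c_out = 3, 32, 64
print(standard_conv_params(k, c_in, c_out))   # 18432
print(separable_conv_params(k, c_in, c_out))  # 2336

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, c_in))
dw = rng.standard_normal((k, k, c_in))
pw = rng.standard_normal((c_in, c_out))
y = depthwise_separable_conv(x, dw, pw)
print(y.shape)  # (6, 6, 64)
```

The ratio 2336/18432 equals 1/C_out + 1/k², about 0.13 here, and the same ratio applies to multiply-accumulate operations, so this substitution makes a 3×3 layer nearly 8× cheaper.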

In [190], a DL fire recognition algorithm is proposed for embedded intelligent forest fire monitoring using UAVs. It uses the lightweight MobileNet V3 to reduce the complexity of the conventional YOLOv4 network architecture, reducing the number of model parameters by 63.91%, from 63.94 million to 23.08 million. Egli et al. [184] design a computationally lightweight CNN with a sequential design of four consecutive convolution/pooling layers for tree species classification from high-resolution RGB images acquired by automated UAV observations; it outperforms several other architectures on the available dataset. Similarly, to achieve real-time performance on UAVs, Hua et al. [191] design a lightweight E-Mobile Net as the feature extraction backbone for real-time tracking.

4.3.2. Parameter Pruning

In deep models, not all parameters contribute to discriminative performance, so many of them can be removed from the network with little effect on the accuracy of the trained model. Based on this principle, parameter pruning removes redundant, non-informative parameters from convolutional and fully connected layers to reduce computational operations and memory consumption.

Pruning can be performed at different granularities. Unimportant weight connections can be pruned with a certain threshold [192]. Similarly, individual redundant neurons can be pruned together with their input and output connections [193]. Furthermore, filters composed of neurons can be removed according to their importance, as indicated by their L1 or L2 norm [194]. At the coarsest granularity, the least informative layers can also be pruned, as shown in [185]. Connection-level and neuron-level pruning introduce unstructured sparse connections into the network, which can limit the actual gain in computational efficiency. In contrast, filter-level and layer-level pruning do not interfere with the normal forward computation and can therefore both compress the model and accelerate inference. It is worth noting that pruning is usually accompanied by fine-tuning of the model.
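The difference between fine-grained and coarse-grained pruning can be seen on a single convolutional weight tensor. The NumPy sketch below contrasts magnitude-threshold connection pruning with filter pruning by L1 norm; the threshold of 0.5, the 50% keep ratio and the random weights are illustrative assumptions, and the fine-tuning that normally follows is omitted.

```python
import numpy as np


def magnitude_prune(weights, threshold):
    """Connection-level pruning: zero every weight whose magnitude is below `threshold`.

    The tensor keeps its shape, so the result is an unstructured sparse tensor.
    """
    mask = np.abs(weights) >= threshold
    return weights * mask, mask


def l1_filter_prune(conv_weights, keep_ratio):
    """Filter-level pruning: keep the output filters with the largest L1 norms.

    conv_weights has shape (C_out, C_in, k, k); the result is a smaller dense tensor.
    """
    n_out = conv_weights.shape[0]
    n_keep = max(1, int(round(n_out * keep_ratio)))
    l1 = np.abs(conv_weights).reshape(n_out, -1).sum(axis=1)
    keep = np.sort(np.argsort(l1)[-n_keep:])  # strongest filters, in original order
    return conv_weights[keep], keep


rng = np.random.default_rng(0)
w = rng.standard_normal((16, 8, 3, 3))

sparse_w, mask = magnitude_prune(w, threshold=0.5)
print(sparse_w.shape, f"{1 - mask.mean():.0%} of weights zeroed")

compact_w, kept = l1_filter_prune(w, keep_ratio=0.5)
print(compact_w.shape)  # (8, 8, 3, 3)
```

The sparse tensor keeps its original shape and only runs faster with sparse kernels, whereas the filter-pruned tensor is simply a smaller dense layer; in a real network, the next layer's input channels indexed by `kept` would have to be removed as well.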

Wang et al. [190] eliminated redundant channels through channel-level sparsity-induced regularization, reducing the number of model parameters by over 95% and the inference time by over 75% while maintaining comparable accuracy, which makes the model suitable for real-time fire monitoring on UAV platforms. The work in [185] adopts two pruning strategies: one-shot pruning, which reaches the desired compression ratio in a single step, and iterative pruning, which gradually removes connections until the targeted compression ratio is reached. After the pruning iterations, the model is retrained to readapt its parameters and recover from the accuracy drop. Aiming at secure edge computing for agricultural object detection, Fan et al. [195] combine layer pruning and filter pruning to obtain a smaller structure and maximize real-time performance.

4.3.3. Low-rank Factorization

Low-rank factorization decomposes a large weight matrix or tensor into several smaller matrices or tensors. It can be applied to both convolutional and fully connected layers: factorizing convolutional filters speeds up inference, while factorizing the denser fully connected layers removes redundancy and reduces storage requirements.
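For a fully connected layer, truncated singular value decomposition is one common way to obtain such a factorization: an m×n weight matrix is replaced by two rank-r factors, cutting the parameter count from mn to r(m+n). The NumPy sketch below assumes a hypothetical 256×512 layer that is close to rank 16 by construction; trained layers are only approximately low-rank, and in practice the rank is chosen by trading accuracy against compression, usually followed by fine-tuning.

```python
import numpy as np


def low_rank_fc(weight, rank):
    """Truncated-SVD factorization of an (out, in) weight matrix: W ~= A @ B.

    A has shape (out, rank) and B has shape (rank, in), so the layer y = W x
    becomes two smaller layers y = A (B x).
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # fold the singular values into the first factor
    b = vt[:rank, :]
    return a, b


rng = np.random.default_rng(0)
# Hypothetical 256 x 512 layer: an exact rank-16 matrix plus small noise.
w = rng.standard_normal((256, 16)) @ rng.standard_normal((16, 512))
w += 0.01 * rng.standard_normal(w.shape)

a, b = low_rank_fc(w, rank=16)
rel_err = np.linalg.norm(w - a @ b) / np.linalg.norm(w)
print(w.size, a.size + b.size)  # 131072 12288
print(f"relative error: {rel_err:.4f}")
```

Here the factorized layer stores about 9% of the original parameters with a small reconstruction error, because the assumed matrix really is close to low-rank.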

Lebedev et al. [196] explore low-rank factorization of deep networks through tensor decomposition and discriminative fine-tuning. Based on CP decomposition, they decompose the original convolutional layer into a sequence of four layers with smaller filters, thus reducing computation. Similarly, well-known factorization methods such as Tucker decomposition [197] and singular value decomposition [198,199] are widely applied with low-rank constraints during model training to reduce the number of parameters and speed up the network.

To meet the severe constraints of typical embedded systems in grape leaf disease detection, a low-rank CNN architecture, LR-Net, based on tensor decomposition of both convolutional and fully connected layers, is developed in [200]; it obtains an obvious performance gain compared with other lightweight network architectures.

4.3.4. Parameter Quantization

The purpose of parameter quantization is to reduce the size of the trained model for storage and transmission. Weights in deep models are generally stored as 32-bit floating-point numbers; reducing the number of bits per weight reduces both the computational cost and the model size.

In recent years, low-bit quantization has become popular for deep model compression and acceleration. There are two types of quantization: parameter sharing for a trained model, and low-bit representation for model training. Parameter sharing designs a function that maps different weight parameters to the same value. In [201], a new network architecture, HashedNets, is designed, in which connection weights are randomly grouped into hash buckets by a hash function, and all connections in the same bucket share a single weight value. The work in [202] develops an approximation that quantizes gradients to 8 bits for parallelism on GPU clusters. Further, [203] proposes incremental network quantization, which quantizes parameters to 5 bits without loss of accuracy. Binary neural networks [137], although challenging, are also a research focus.
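The parameter-sharing idea behind HashedNets [201] can be sketched in a few lines: a small vector of stored weights is expanded into a large "virtual" weight matrix by hashing each position (i, j) to a bucket. The integer hash below is a hypothetical stand-in chosen for brevity, not the hash function used in [201], and training, where the gradients of all entries in a bucket are summed into the shared weight, is omitted.

```python
import numpy as np


def hashed_weight_matrix(shared, shape, seed=0):
    """Expand `shared` (1-D array of stored weights) into a virtual `shape` matrix.

    Entry (i, j) reads shared[h(i, j)], so only len(shared) values are stored.
    """
    rows, cols = shape
    i, j = np.meshgrid(np.arange(rows, dtype=np.int64),
                       np.arange(cols, dtype=np.int64), indexing="ij")
    # Hypothetical cheap position hash (parentheses matter: % binds tighter than ^)
    h = ((i * 73856093) ^ (j * 19349663) ^ (seed * 83492791)) % len(shared)
    return shared[h]


rng = np.random.default_rng(0)
shared = rng.standard_normal(64)  # 64 stored parameters
w_virtual = hashed_weight_matrix(shared, (128, 256))
print(w_virtual.shape, w_virtual.size // shared.size)  # (128, 256) 512
```

The layer behaves like a 128×256 matrix but stores only 64 values, a 512× reduction; the hash is recomputed on the fly, so no index table needs to be stored.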

To develop a lightweight network architecture for weed mapping onboard UAVs, the authors of [137] performed optimization and precision calibration for inference, reducing the precision from 32-bit to 16-bit. Similarly, Camargo et al. [115] converted their ResNet-18 model from 32-bit to 16-bit and reported a decline in speed performance on an NVIDIA Jetson AGX Xavier. To execute deep models efficiently on embedded platforms, Blekos et al. [186] quantize a trained U-Net model to 8-bit integers with acceptable losses.
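Casting weights from 32-bit to 16-bit floats halves their storage, while 8-bit integers quarter it. The NumPy sketch below shows a simple per-tensor affine (asymmetric) post-training quantization to int8; it is a generic illustration under our own assumptions, not the calibration procedure of [137], [115] or [186], and production toolchains typically add per-channel scales and calibration data.

```python
import numpy as np


def quantize_int8(w):
    """Per-tensor affine quantization: w ~= (q - zero_point) * scale, q in int8."""
    w_min, w_max = float(w.min()), float(w.max())
    scale = (w_max - w_min) / 255.0 if w_max > w_min else 1.0
    zero_point = int(round(-128 - w_min / scale))  # maps w_min to -128
    q = np.clip(np.round(w / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 64)).astype(np.float32)  # hypothetical weight tensor

w_fp16 = w.astype(np.float16)
q, scale, zp = quantize_int8(w)
max_err = np.abs(dequantize_int8(q, scale, zp) - w).max()

print(w.nbytes, w_fp16.nbytes, q.nbytes)  # 16384 8192 4096
print(f"max int8 reconstruction error: {max_err:.4f} (scale = {scale:.4f})")
```

The rounding error is bounded by about half a quantization step; whether that loss is acceptable has to be checked on the task metric, as done in the cited studies.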

4.3.5. Knowledge Distillation

The main objective of knowledge distillation is to train a student network under the guidance of a teacher network while maintaining the teacher's generalization capability [204]. The student network is lighter, i.e., it has a smaller model size and requires less computation, but achieves the same or comparable performance as the larger network.

Great efforts have been made to improve the supervision of the student network with different kinds of transferred knowledge. Romero et al. [205] proposed FitNets, which teaches the student network to imitate hints from both the intermediate layers and the output layer of the teacher network. Instead of hard labels, the work in [206] uses soft labels from the teacher network as the transferred representation. Kim et al. [207] proposed a paraphrasing-based knowledge transfer method, which uses convolution operations to paraphrase the teacher model's knowledge and translate it to a student model. On the teacher side, student networks can also learn from multiple teachers [208].
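The soft-label objective can be written compactly. The NumPy sketch below follows the widely used temperature-scaled formulation: a KL divergence between the softened teacher and student distributions, scaled by T², mixed with ordinary cross-entropy on hard labels. The temperature T = 4, the weight α = 0.7 and the random logits are illustrative assumptions, and methods such as FitNets add further terms on intermediate layers.

```python
import numpy as np


def log_softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # shift for numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits, teacher_logits, labels, temperature=4.0, alpha=0.7):
    """alpha * T^2 * KL(p_teacher^T || p_student^T) + (1 - alpha) * CE(labels, p_student)."""
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1).mean()
    # hard-label cross-entropy at temperature 1
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


rng = np.random.default_rng(0)
teacher = rng.standard_normal((8, 3)) * 3  # hypothetical logits: 8 samples, 3 classes
student = rng.standard_normal((8, 3))
labels = teacher.argmax(axis=1)

print(distillation_loss(student, teacher, labels))
```

The T² factor keeps the gradient magnitude of the soft term roughly independent of the temperature, so α can be tuned without re-balancing for each T.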

Knowledge distillation is a promising direction for UAV-based deep model inference. In [209], a YOLO + MobileNet model acts as the teacher network and the pruned model serves as the student network, and a knowledge distillation algorithm is used to improve the detection accuracy of the pruned model. Qiu et al. [210] distill knowledge into a lighter network through soft labels from a trained MobileNet teacher network. Similar applications of knowledge distillation for model compression can be found in [211,212].

4.4. Edge Resources for UAV RS

The key idea of edge computing is that computing should be closer to the data sources and users. It avoids massive data transfer to the cloud and processes data near the places where things and people produce or consume it, thus reducing latency, pressure on network bandwidth and the demand for computing and storage resources in the cloud. The edge is a concept defined relative to the network core: it refers to any computing, storage and network resource along the path from the data source to the cloud computing center. The resources on this path can be regarded as a continuous system. Generally, the resources at the edge mainly include user terminals such as mobile phones and personal computers, infrastructure such as WiFi access points, cellular network base stations and routers, and embedded devices such as cameras and set-top boxes. These numerous, mutually independent resources around users are called edge nodes. Within edge intelligence, this paper focuses on AI on edge, i.e., running AI models on intelligent edge devices. Such devices have built-in processors with onboard analytics or AI capabilities and mainly include sensors, UAVs, autonomous cars, etc. Rather than uploading data to the cloud for processing and storage, intelligent edge devices can process certain amounts of data directly, reducing latency, bandwidth requirements, cost, privacy threats, etc.

For edge computing for UAV RS in PA, applications can be deployed either on UAV intelligent edge devices with embedded computing platforms or on edge servers; this paper mainly discusses the former. To accelerate the processing of complex DL models, current UAV solutions mainly include a few types of onboard hardware accelerators.

Popular examples are listed below, divided into general-purpose CPU-based solutions, GPU solutions and field programmable gate array (FPGA) solutions. A very small number of studies also use microcontroller units (MCUs) [213] and vision processing units (VPUs) [214] for UAV image recognition and monitoring.

- General-purpose CPU-based solutions: Multi-core CPUs are latency-oriented architectures, which have more computational power per core but fewer cores, and are more suitable for task-level parallelism [215,216]. Among general-purpose software-programmable platforms, the Raspberry Pi has been widely adopted as a ready-to-use solution for a variety of UAV applications owing to its light weight, small size and low power consumption.

- GPU solutions: GPUs are designed as throughput-oriented architectures. Their cores are less powerful than those of CPUs, but they have hundreds or thousands of cores and significantly larger memory bandwidth, which makes GPUs suitable for data-level parallelism [215]. In recent years, embedded GPUs, especially NVIDIA's Jetson boards, which stand out among those of other manufacturers, have been widely used to provide more flexible solutions than FPGAs.

- FPGA solutions: The advent of FPGA-based embedded platforms allows the high-level management capabilities of processors to be combined with the flexible operations of programmable hardware [217]. With the advantages of (a) relatively smaller size and weight compared with clusters, multi-core and many-core processors, (b) significantly lower power consumption compared with GPUs, and (c) the ability to be reprogrammed during flight, unlike application-specific integrated circuits (ASICs), FPGA-based platforms such as the Xilinx Zynq-7000 family provide many solutions for real-time processing onboard UAVs [218].

Table 7 compiles edge computing platforms onboard UAVs for typical RS applications, listing the platform vendor, model, configuration and application.

Table 7.

A compilation of computing platforms onboard UAVs for typical RS applications.

5. Future Directions

Despite the great progress of DL and UAV RS techniques in the PA field, the research and practice of edge intelligence, especially in PA, are still at an early stage. In addition to the common challenges in PA, UAV RS and edge intelligence, we list here a few specific issues that need to be addressed within the scope of this paper.

- Lightweight intelligent model design in PA for edge inference. As mentioned in Section 4, most DL-based models for UAV RS data processing and analytics in PA are highly resource-intensive, and hardware with powerful computing capability is needed to support the training and inference of these large AI models. Currently, only a few studies apply common parameter pruning and quantization methods in PA with UAV RS. Model size and efficiency can be further improved by considering the characteristics of the data and algorithms and by exploiting other sophisticated model compression techniques, such as knowledge distillation and combinations of multiple compression methods [183]. In addition, instead of using existing AI models, neural architecture search (NAS) [243] can be used to derive models tailored to the hardware resource constraints of the underlying edge devices, optimizing performance metrics such as latency and energy efficiency [34].

- Transfer learning and incremental learning for intelligent PA models on UAV edge devices. The performance of many DL models relies heavily on the quantity and quality of datasets, but collecting a large amount of labeled data is difficult or expensive. Edge devices can therefore exploit transfer learning to learn a competitive model, in which a model pre-trained on a large-scale dataset is fine-tuned with domain-specific data [244,245]. In addition, edge devices such as UAVs may collect data during flight whose distribution differs from the original training data, or even data belonging to unknown classes. The model on the edge device can then be updated by incremental learning to improve prediction performance [246].

- Collaboration of RS cloud centers, ground control stations and UAV edge devices. To bridge the gap between the limited computing and storage capabilities of edge devices and the high resource requirements of DL training, collaborative computing across the end devices, the edge and the cloud is a possible solution, and it has become a trend in edge intelligence architectures and application scenarios. A good cloud-edge-end collaboration architecture should take into account heterogeneous devices, asynchronous communication and diverse computing and storage resources, thus achieving collaborative model training and inference [247]. In the conventional mode, model training is performed in the cloud and the trained model is deployed on edge devices. This mode is simple but cannot fully utilize the available resources. For edge intelligence for UAV RS in PA, decentralized edge devices and data centers can cooperate to train or improve a model by using federated learning [248].
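As a minimal sketch of how such cooperation could aggregate models, the snippet below implements the widely used federated averaging (FedAvg) rule: each participant trains locally, and only model parameters, weighted by local sample counts, are combined. We do not claim that [248] uses exactly this rule; the participants, sample counts and tiny two-tensor model are hypothetical, and the local training rounds are omitted.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """Weighted average of per-client parameter lists (FedAvg aggregation step).

    client_weights: one list of NumPy arrays per client, with matching shapes.
    client_sizes: number of local training samples held by each client.
    """
    total = float(sum(client_sizes))
    n_tensors = len(client_weights[0])
    return [
        sum(weights[k] * (n / total) for weights, n in zip(client_weights, client_sizes))
        for k in range(n_tensors)
    ]


# Hypothetical participants: two UAVs and one ground station, each holding
# a tiny model made of a weight matrix and a bias vector.
uav_a = [np.full((2, 2), 1.0), np.zeros(2)]
uav_b = [np.full((2, 2), 3.0), np.ones(2)]
ground_station = [np.full((2, 2), 5.0), np.full(2, 2.0)]

global_model = federated_average([uav_a, uav_b, ground_station], [100, 100, 200])
print(global_model[0])  # every entry is 3.5
print(global_model[1])  # every entry is 1.25
```

Only parameters leave each device, so raw UAV imagery stays local; in a full system this aggregation step would alternate with rounds of local training on each participant.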

6. Conclusions

This paper gives a systematic and comprehensive overview of the latest development of PA promoted by UAV RS and edge intelligence techniques. We first introduce the application of UAV RS in PA, including the fundamentals of various types of UAV systems and sensors and typical applications, to give a preliminary picture. The latest developments of DL methods and public datasets in PA with UAV RS are then presented. Subsequently, we give a thorough analysis of the development of edge intelligence in PA with UAV RS, including the cloud computing and edge computing paradigms, the basic concepts and major components (i.e., edge caching, edge training, edge inference and edge offloading) of edge intelligence, and the developments from the perspectives of network model design and edge resources. Finally, we present several issues that need to be further addressed.

Through this survey, we provide preliminary insights into how PA benefits from UAV RS together with edge intelligence. In recent years, small and light, fixed-wing or industrial rotor-wing UAV systems have been widely adopted in PA. Owing to their ease of use, high flexibility and high resolution, and because they are less affected by clouds when flying at low altitudes, UAV RS has become a powerful means of monitoring agricultural conditions. In addition, the integration of DL techniques in PA with UAV RS has achieved higher accuracy than traditional analysis methods. These PA applications have been formulated as computer vision tasks, including classification, object detection and segmentation, and CNNs and RNNs are the most widely adopted network architectures. There are also a few publicly available UAV datasets for intelligent PA, mainly from RGB sensors and very few from multispectral and hyperspectral sensors. These datasets can facilitate the validation and comparison of DL methods. However, deep models generally impose high computing, memory and network requirements; hence cloud computing is a common solution that increases efficiency with high scalability and low cost, but it suffers from high latency and places pressure on the network bandwidth. The emergence of edge computing brings computing to the edge of the network, close to the data sources. The convergence of AI and edge computing further yields edge intelligence, providing a promising solution for efficient intelligent UAV RS applications. In terms of hardware, typical computing solutions include CPUs, GPUs and FPGAs. From the algorithmic perspective, lightweight model design based on model compression techniques, especially model pruning and quantization, is one of the most significant and widely used techniques. PA supported by advanced UAV RS and edge intelligence techniques offers the capability to increase productivity and efficiency while reducing costs.

The research and practice of edge intelligence, especially in PA with UAV RS, are still at an early stage. In the future, in addition to the general challenges of PA, UAV RS and edge intelligence, issues within the scope of this paper need to be addressed. These directions include designing and implementing lightweight models for PA with UAV RS on edge devices, realizing transfer learning and incremental learning for intelligent PA models on UAV edge devices, and achieving efficient collaboration among RS cloud centers, ground control stations and UAV edge devices.

Author Contributions

Conceptualization, J.L., J.X. and J.Y.; literature investigation and analysis, J.L., Y.J. and J.X.; writing—original draft preparation, J.L., J.X., R.L. and J.Y.; writing—review and editing, J.L., J.Y. and L.W.; visualization, J.L., Y.J. and J.X.; supervision, J.L. and L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grant No. 41901376 and No. 42172333, and in part by the Fundamental Research Funds for the Central Universities, China University of Geosciences (Wuhan).

Data Availability Statement

Data sharing is not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- ISPA. Precision Ag Definition. Available online: https://www.ispag.org/about/definition (accessed on 17 October 2021).

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491. [Google Scholar] [CrossRef]

- Schimmelpfennig, D. Farm profits and adoption of precision agriculture; U.S. Department of Agriculture, Economic Research Service: Washington, DA, USA, 2016.

- Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164. [Google Scholar] [CrossRef]

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 2015. [Google Scholar]

- Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371. [Google Scholar] [CrossRef]

- Eskandari, R.; Mahdianpari, M.; Mohammadimanesh, F.; Salehi, B.; Brisco, B.; Homayouni, S. Meta-Analysis of Unmanned Aerial Vehicle (UAV) Imagery for Agro-Environmental Monitoring Using Machine Learning and Statistical Models. Remote Sens. 2020, 12, 3511. [Google Scholar] [CrossRef]

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349. [Google Scholar] [CrossRef] [Green Version]

- Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221. [Google Scholar] [CrossRef]

- Jang, G.; Kim, J.; Yu, J.-K.; Kim, H.-J.; Kim, Y.; Kim, D.-W.; Kim, K.-H.; Lee, C.W.; Chung, Y.S. Review: Cost-Effective Unmanned Aerial Vehicle (UAV) Platform for Field Plant Breeding Application. Remote Sens. 2020, 12, 998. [Google Scholar] [CrossRef] [Green Version]

- US Department of Defense. Unmanned Aerial Vehicle. Available online: https://www.thefreedictionary.com/Unmanned+Aerial+Vehicle (accessed on 19 October 2021).

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136. [Google Scholar] [CrossRef]

- Christiansen, M.P.; Laursen, M.S.; Jørgensen, R.N.; Skovsen, S.; Gislum, R. Designing and Testing a UAV Mapping System for Agricultural Field Surveying. Sensors 2017, 17, 2703. [Google Scholar] [CrossRef] [Green Version]

- Popescu, D.; Stoican, F.; Stamatescu, G.; Ichim, L.; Dragana, C. Advanced UAV–WSN System for Intelligent Monitoring in Precision Agriculture. Sensors 2020, 20, 817. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Zhou, X.; Zheng, H.; Xu, X.; He, J.; Ge, X.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255. [Google Scholar] [CrossRef]

- Yang, Q.; Shi, L.; Han, J.; Zha, Y.; Zhu, P. Deep convolutional neural networks for rice grain yield estimation at the ripening stage using UAV-based remotely sensed images. Field Crops Res. 2019, 235, 142–153. [Google Scholar] [CrossRef]

- Su, J.; Liu, C.; Coombes, M.; Hu, X.; Wang, C.; Xu, X.; Li, Q.; Guo, L.; Chen, W.-H. Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput. Electron. Agric. 2018, 155, 157–166. [Google Scholar] [CrossRef]

- Guo, A.; Huang, W.; Dong, Y.; Ye, H.; Ma, H.; Liu, B.; Wu, W.; Ren, Y.; Ruan, C.; Geng, Y. Wheat Yellow Rust Detection Using UAV-Based Hyperspectral Technology. Remote Sens. 2021, 13, 123. [Google Scholar] [CrossRef]

- Bajwa, A.; Mahajan, G.; Chauhan, B. Nonconventional Weed Management Strategies for Modern Agriculture. Weed Sci. 2015, 63, 723–747. [Google Scholar] [CrossRef]

- Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV Low-Altitude Remote Sensing for Precision Weed Management. Weed Technol. 2018, 32, 2–6. [Google Scholar] [CrossRef]

- Van Klompenburg, T.; Kassahun, A.; Catal, C. Crop yield prediction using machine learning: A systematic literature review. Comput. Electron. Agric. 2020, 177, 105709. [Google Scholar] [CrossRef]

- Su, Y.-X.; Xu, H.; Yan, L.-J. Support vector machine-based open crop model (SBOCM): Case of rice production in China. Saudi J. Biol. Sci. 2017, 24, 537–547. [Google Scholar] [CrossRef]

- Everingham, Y.; Sexton, J.; Skocaj, D.; Inman-Bamber, G. Accurate prediction of sugarcane yield using a random forest algorithm. Agron. Sustain. Dev. 2016, 36, 27. [Google Scholar] [CrossRef] [Green Version]

- Chandra, A.L.; Desai, S.V.; Guo, W.; Balasubramanian, V.N. Computer vision with deep learning for plant phenotyping in agriculture: A survey. arXiv Prepr. 2020, arXiv:2006.11391. [Google Scholar]

- Zhou, L.; Zhang, C.; Liu, F.; Qiu, Z.; He, Y. Application of Deep Learning in Food: A Review. Compr. Rev. Food Sci. Food Saf. 2019, 18, 1793–1811. [Google Scholar] [CrossRef] [Green Version]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote Sens. 2018, 10, 1690.

- Kitano, B.T.; Mendes, C.C.T.; Geus, A.R.; Oliveira, H.C.; Souza, J.R. Corn plant counting using deep learning and UAV images. IEEE Geosci. Remote Sens. Lett. 2019, 1–5.

- Nowakowski, A.; Mrziglod, J.; Spiller, D.; Bonifacio, R.; Ferrari, I.; Mathieu, P.P.; Garcia-Herranz, M.; Kim, D.-H. Crop type mapping by using transfer learning. Int. J. Appl. Earth Obs. Geoinf. 2021, 98, 102313.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Chen, J.; Ran, X. Deep Learning with Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Liu, J.; Liu, R.; Ren, K.; Li, X.; Xiang, J.; Qiu, S. High-Performance Object Detection for Optical Remote Sensing Images with Lightweight Convolutional Neural Networks. In Proceedings of the 2020 IEEE 22nd International Conference on High Performance Computing and Communications; IEEE 18th International Conference on Smart City; IEEE 6th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Yanuca Island, Cuvu, Fiji, 14–16 December 2020; pp. 585–592.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Pu, Q.; Ananthanarayanan, G.; Bodik, P.; Kandula, S.; Akella, A.; Bahl, P.; Stoica, I. Low latency geo-distributed data analytics. ACM SIGCOMM Comp. Com. Rev. 2015, 45, 421–434.

- Sittón-Candanedo, I.; Alonso, R.S.; Rodríguez-González, S.; Coria, J.A.G.; De La Prieta, F. Edge Computing Architectures in Industry 4.0: A General Survey and Comparison. International Workshop on Soft Computing Models in Industrial and Environmental Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 121–131.

- Plastiras, G.; Terzi, M.; Kyrkou, C.; Theocharides, T. Edge intelligence: Challenges and opportunities of near-sensor machine learning applications. In Proceedings of the 2018 IEEE 29th International Conference on Application-Specific Systems, Architectures and Processors (ASAP), Milano, Italy, 10–12 July 2018; pp. 1–7.

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469.

- Boursianis, A.D.; Papadopoulou, M.S.; Diamantoulakis, P.; Liopa-Tsakalidi, A.; Barouchas, P.; Salahas, G.; Karagiannidis, G.; Wan, S.; Goudos, S.K. Internet of Things (IoT) and Agricultural Unmanned Aerial Vehicles (UAVs) in smart farming: A comprehensive review. Internet Things 2020, 100187, in press.

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.

- Mogili, U.R.; Deepak, B.B.V.L. Review on Application of Drone Systems in Precision Agriculture. Procedia Comput. Sci. 2018, 133, 502–509.

- Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Santos, L.; Santos, F.N.; Oliveira, P.M.; Shinde, P. Deep Learning Applications in Agriculture: A Short Review. Iberian Robotics Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 139–151.

- Civil Aviation Administration of China. Interim Regulations on Flight Management of Unmanned Aerial Vehicles. 2018; Volume 2021. Available online: http://www.caac.gov.cn/HDJL/YJZJ/201801/t20180126_48853.html (accessed on 17 October 2021).

- Park, M.; Lee, S.; Lee, S. Dynamic topology reconstruction protocol for UAV swarm networking. Symmetry 2020, 12, 1111.

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A compilation of UAV applications for precision agriculture. Comput. Netw. 2020, 172, 107148.

- Hayat, S.; Yanmaz, E.; Muzaffar, R. Survey on Unmanned Aerial Vehicle Networks for Civil Applications: A Communications Viewpoint. IEEE Commun. Surv. Tutor. 2016, 18, 2624–2661.

- Xie, C.; Yang, C. A review on plant high-throughput phenotyping traits using UAV-based sensors. Comput. Electron. Agric. 2020, 178, 105731.

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204.

- Tsouros, D.C.; Triantafyllou, A.; Bibi, S.; Sarigiannidis, P.G. Data acquisition and analysis methods in UAV-based applications for Precision Agriculture. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 377–384.

- Tahir, M.N.; Lan, Y.; Zhang, Y.; Wang, Y.; Nawaz, F.; Shah, M.A.A.; Gulzar, A.; Qureshi, W.S.; Naqvi, S.M.; Naqvi, S.Z.A. Real time estimation of leaf area index and groundnut yield using multispectral UAV. Int. J. Precis. Agric. Aviat. 2020, 3.

- Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multi-spectral UAV data. Int. J. Remote Sens. 2018, 39, 5432–5452.

- Wang, H.; Mortensen, A.K.; Mao, P.; Boelt, B.; Gislum, R. Estimating the nitrogen nutrition index in grass seed crops using a UAV-mounted multispectral camera. Int. J. Remote Sens. 2019, 40, 2467–2482.

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J., Jr. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85.

- Ge, X.; Wang, J.; Ding, J.; Cao, X.; Zhang, Z.; Liu, J.; Li, X. Combining UAV-based hyperspectral imagery and machine learning algorithms for soil moisture content monitoring. PeerJ 2019, 7, e6926.

- Zhao, X.; Yang, G.; Liu, J.; Zhang, X.; Xu, B.; Wang, Y.; Zhao, C.; Gai, J. Estimation of soybean breeding yield based on optimization of spatial scale of UAV hyperspectral image. Trans. Chin. Soc. Agric. Eng. 2017, 33, 110–116.

- Prakash, A. Thermal remote sensing: Concepts, issues and applications. Int. Arch. Photogramm. Remote Sens. 2000, 33, 239–243.

- Weng, Q. Thermal infrared remote sensing for urban climate and environmental studies: Methods, applications, and trends. ISPRS J. Photogramm. Remote Sens. 2009, 64, 335–344.

- Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

- Dong, P.; Chen, Q. LiDAR Remote Sensing and Applications; CRC Press: Boca Raton, FL, USA, 2017.

- Zhou, L.; Gu, X.; Cheng, S.; Yang, G.; Shu, M.; Sun, Q. Analysis of plant height changes of lodged maize using UAV-LiDAR data. Agriculture 2020, 10, 146.

- Shendryk, Y.; Sofonia, J.; Garrard, R.; Rist, Y.; Skocaj, D.; Thorburn, P. Fine-scale prediction of biomass and leaf nitrogen content in sugarcane using UAV LiDAR and multispectral imaging. Int. J. Appl. Earth Obs. Geoinf. 2020, 92, 102177.

- Ndikumana, E.; Minh, D.H.T.; Baghdadi, N.; Courault, D.; Hossard, L. Deep Recurrent Neural Network for Agricultural Classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217.

- Lyalin, K.S.; Biryuk, A.A.; Sheremet, A.Y.; Tsvetkov, V.K.; Prikhodko, D.V. UAV synthetic aperture radar system for control of vegetation and soil moisture. In Proceedings of the 2018 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus), St. Petersburg and Moscow, Russia, 29 January–1 February 2018; pp. 1673–1675.

- Liu, C.-A.; Chen, Z.-X.; Shao, Y.; Chen, J.-S.; Hasi, T.; Pan, H.-Z. Research advances of SAR remote sensing for agriculture applications: A review. J. Integr. Agric. 2019, 18, 506–525.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Allred, B.; Eash, N.; Freeland, R.; Martinez, L.; Wishart, D. Effective and efficient agricultural drainage pipe mapping with UAS thermal infrared imagery: A case study. Agric. Water Manag. 2018, 197, 132–137.

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

- Xue, J.; Su, B. Significant Remote Sensing Vegetation Indices: A Review of Developments and Applications. J. Sensors 2017, 2017, 1–17.

- Dai, B.; He, Y.; Gu, F.; Yang, L.; Han, J.; Xu, W. A vision-based autonomous aerial spray system for precision agriculture. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 507–513.

- Faiçal, B.S.; Freitas, H.; Gomes, P.H.; Mano, L.; Pessin, G.; de Carvalho, A.; Krishnamachari, B.; Ueyama, J. An adaptive approach for UAV-based pesticide spraying in dynamic environments. Comput. Electron. Agric. 2017, 138, 210–223.

- Faiçal, B.S.; Pessin, G.; Filho, G.P.R.; Carvalho, A.C.P.L.F.; Gomes, P.H.; Ueyama, J. Fine-Tuning of UAV Control Rules for Spraying Pesticides on Crop Fields: An Approach for Dynamic Environments. Int. J. Artif. Intell. Tools 2016, 25, 1660003.

- Esposito, M.; Crimaldi, M.; Cirillo, V.; Sarghini, F.; Maggio, A. Drone and sensor technology for sustainable weed management: A review. Chem. Biol. Technol. Agric. 2021, 8, 18.

- Bah, M.D.; Dericquebourg, E.; Hafiane, A.; Canals, R. Deep Learning based Classification System for Identifying Weeds using High-Resolution UAV Imagery. Science and Information Conference; Springer: Berlin/Heidelberg, Germany, 2018; pp. 176–187.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 1–12.

- Sa, I.; Popović, M.; Khanna, R.; Chen, Z.; Lottes, P.; Liebisch, F.; Nieto, J.; Stachniss, C.; Walter, A.; Siegwart, R. WeedMap: A Large-Scale Semantic Weed Mapping Framework Using Aerial Multispectral Imaging and Deep Neural Network for Precision Farming. Remote Sens. 2018, 10, 1423.

- Scherrer, B.; Sheppard, J.; Jha, P.; Shaw, J.A. Hyperspectral imaging and neural networks to classify herbicide-resistant weeds. J. Appl. Remote Sens. 2019, 13, 044516.

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

- Hasan, R.I.; Yusuf, S.M.; Alzubaidi, L. Review of the State of the Art of Deep Learning for Plant Diseases: A Broad Analysis and Discussion. Plants 2020, 9, 1302.

- Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-Based Remote Sensing Technique to Detect Citrus Canker Disease Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2019, 11, 1373.

- Tetila, E.C.; Machado, B.B.; Astolfi, G.; Belete, N.A.D.S.; Amorim, W.P.; Roel, A.R.; Pistori, H. Detection and classification of soybean pests using deep learning with UAV images. Comput. Electron. Agric. 2020, 179, 105836.

- Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554.

- Hu, G.; Yin, C.; Wan, M.; Zhang, Y.; Fang, Y. Recognition of diseased Pinus trees in UAV images using deep learning and AdaBoost classifier. Biosyst. Eng. 2020, 194, 138–151.

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.D.S.; Alvarez, M.; Amorim, W.P.; Belete, N.A.D.S.; Da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 903–907.