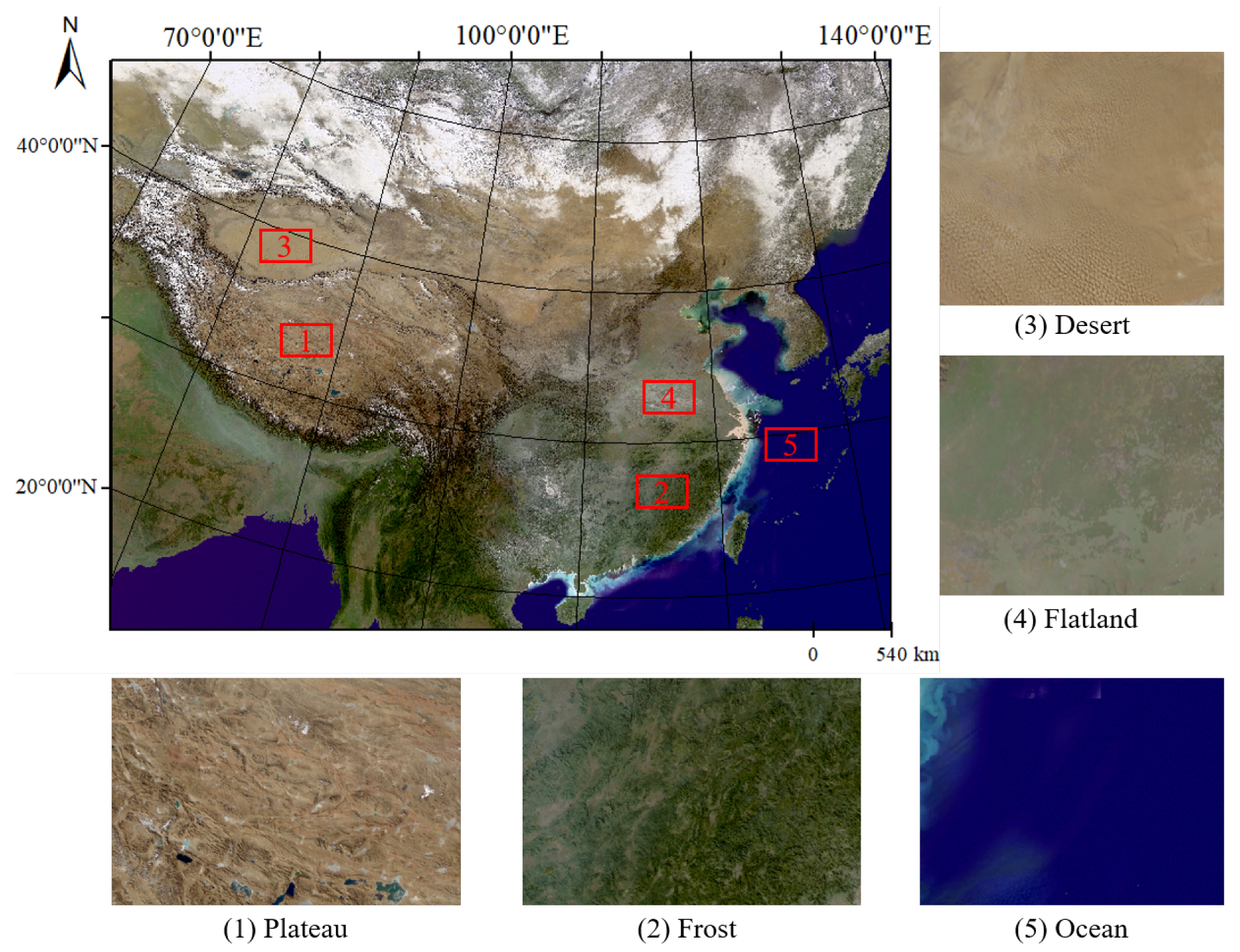

Figure 1.

Location of the study area and its true-color (R, G, B) composite of 250 m FY-3D data. The region contains many kinds of land cover, including oceans, deserts, forests, plateaus, and others.

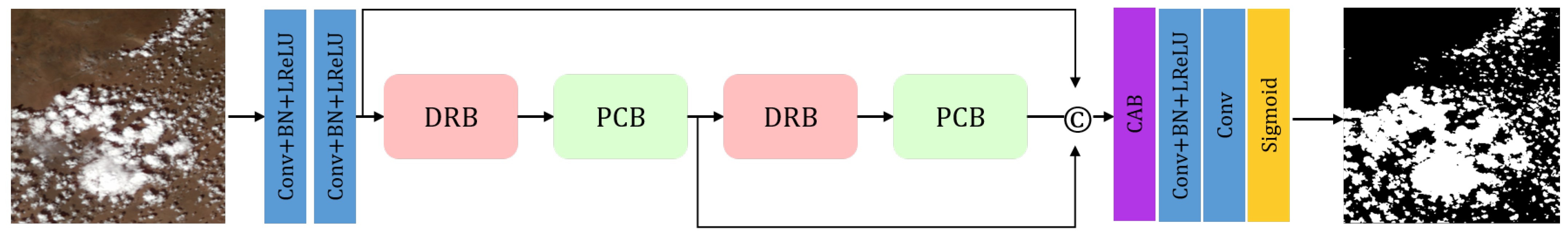

Figure 2.

The architecture of the proposed Pyramid Contextual Network (PCNet) for cloud detection, which contains Dilated Residual Blocks (DRB), Pyramid Contextual Blocks (PCB), and a Channel Attention Block (CAB). The DRB combines dilated convolutions and residual connections to acquire a wider receptive field. The PCB captures global contextual information. The CAB reweights the feature channels so that the most informative ones are used to produce the cloud mask. The network takes a cloud image as input and outputs the cloud mask.

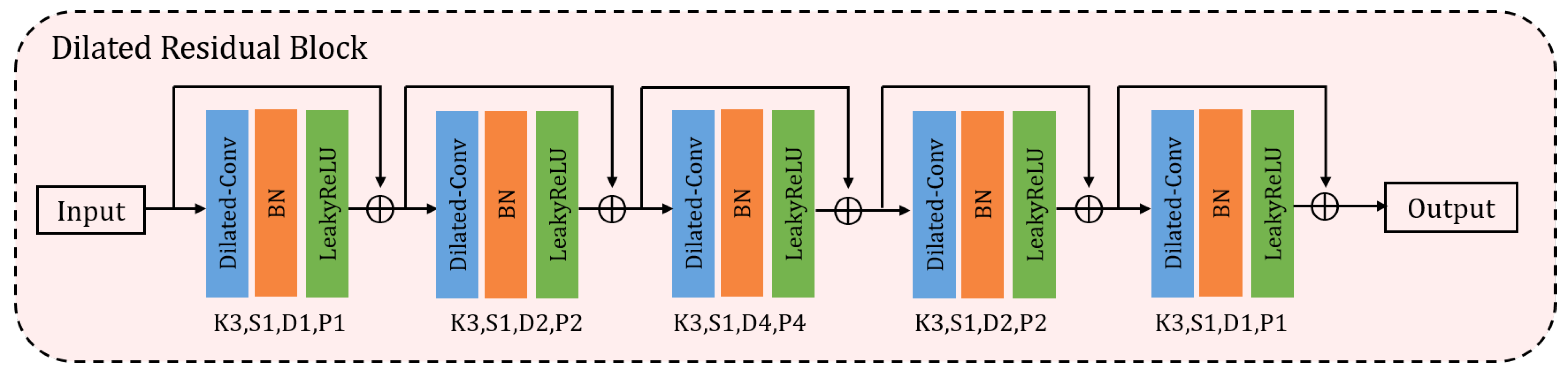

Figure 3.

Architecture of the Dilated Residual Block (DRB). The input feature passes through five Dilated Conv-BN-LeakyReLU groups. To preserve the information in the input feature, residual connections are added so that information from the early layers is not lost. K, S, D and P denote kernel size, stride, dilated rate and padding size, respectively.

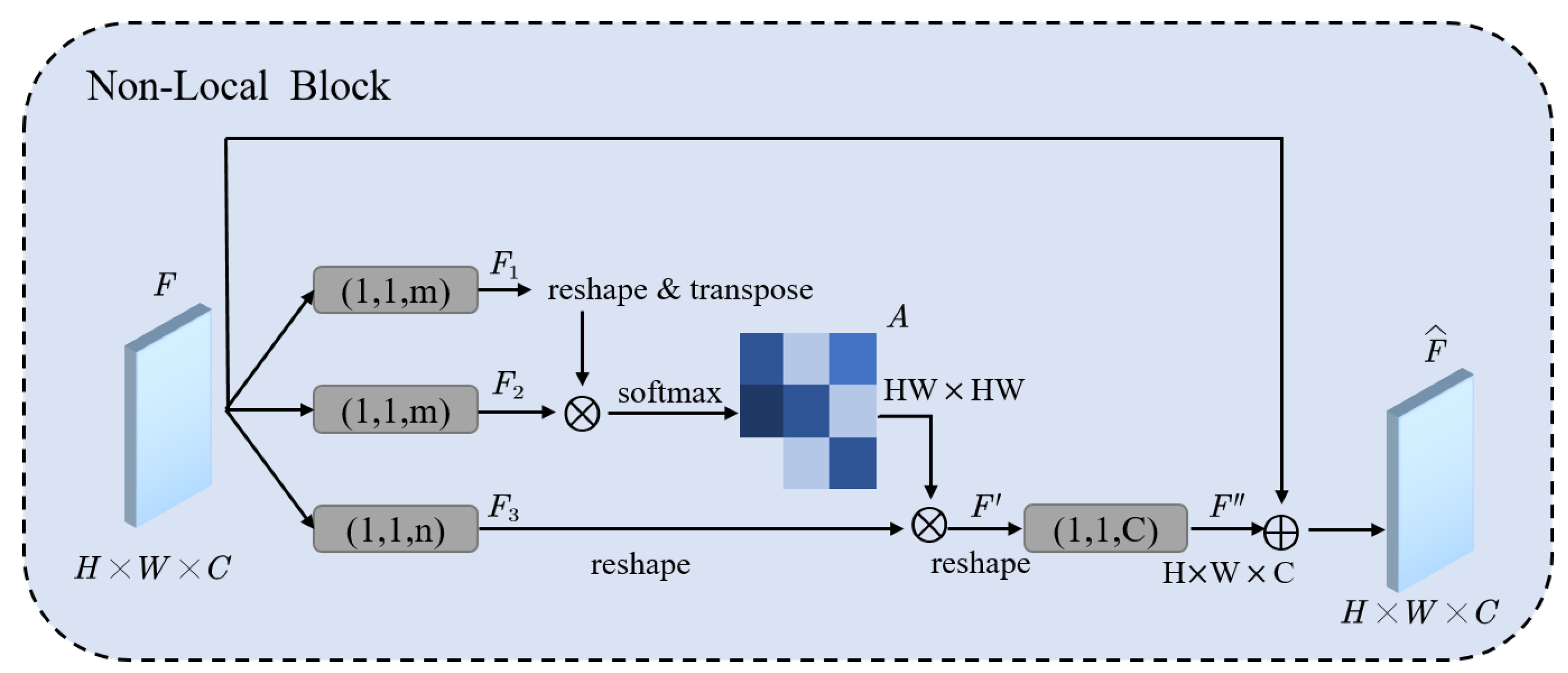

Figure 4.

The architecture of the non-local block [45]. “⨂” denotes matrix multiplication, and “⨁” denotes element-wise sum. The SoftMax operation is performed on each row. A is the attention map, which captures long-range dependencies. The gray boxes denote convolutions; (k, s, f) denote kernel size k, stride s and number of filters f.
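
To make the data flow of the non-local block concrete, here is a minimal NumPy sketch of an embedded-Gaussian non-local block acting on a single (C, H, W) feature map. Each 1 × 1 convolution is treated as a channel projection (a matrix multiply), SoftMax is applied row-wise as the caption states, and the input is added back through the element-wise sum. The weight names (`w_theta`, `w_phi`, `w_g`, `w_z`), the reduced channel count C', and the omission of batch normalization are assumptions for illustration, not details taken from [45] or from this paper.

```python
import numpy as np

def softmax_rows(s):
    """Row-wise SoftMax: each row of the result sums to 1."""
    s = s - s.max(axis=1, keepdims=True)          # subtract row max for numerical stability
    e = np.exp(s)
    return e / e.sum(axis=1, keepdims=True)

def non_local_block(x, w_theta, w_phi, w_g, w_z):
    """Embedded-Gaussian non-local block (illustrative sketch).

    x: (C, H, W); w_theta, w_phi, w_g: (C', C); w_z: (C, C').
    A 1x1 convolution is a per-pixel channel projection, i.e. a matmul.
    """
    c, h, w = x.shape
    xf = x.reshape(c, h * w)                      # (C, N), one column per pixel
    theta, phi, g = w_theta @ xf, w_phi @ xf, w_g @ xf
    a = softmax_rows(theta.T @ phi)               # attention map A: (N, N)
    y = g @ a.T                                   # each pixel aggregates all N pixels
    z = w_z @ y + xf                              # project back to C channels, element-wise sum
    return z.reshape(c, h, w), a

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6, 5))
w_theta, w_phi, w_g = (rng.standard_normal((2, 4)) for _ in range(3))
w_z = rng.standard_normal((4, 2))
z, a = non_local_block(x, w_theta, w_phi, w_g, w_z)
print(z.shape, a.shape)   # (4, 6, 5) (30, 30)
```

The attention map is N × N with N = H·W, so a hypothetical 256 × 256 feature map would already give 65,536² ≈ 4.3 × 10⁹ entries; this quadratic cost is the usual motivation for cheaper multi-scale variants.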

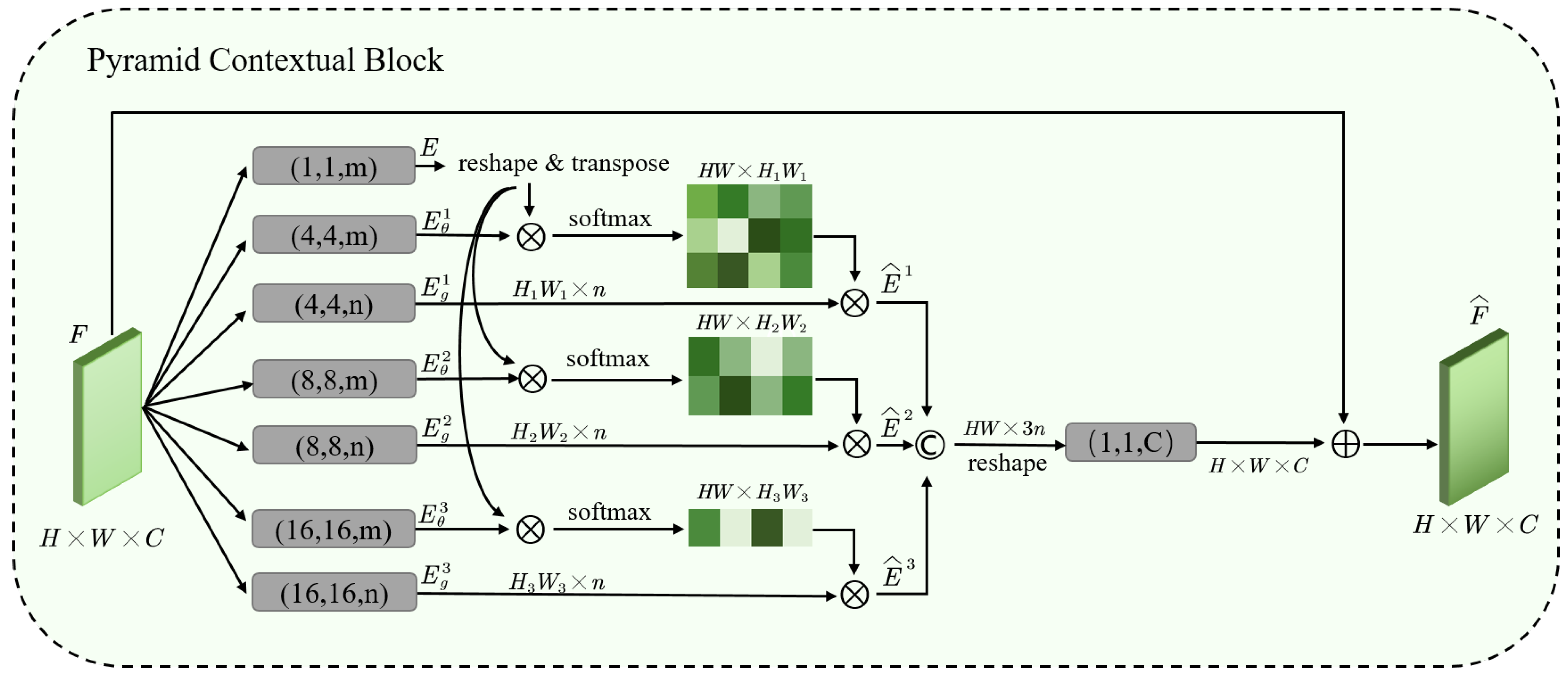

Figure 5.

The architecture of our Pyramid Contextual Block. “⨂” denotes matrix multiplication, and “⨁” denotes element-wise sum. The SoftMax operation is performed on each row. Compared with the non-local block, we use multi-scale features and convolution kernels of different sizes to reduce the number of parameters while still obtaining long-range correlations across the whole image. , and are the enhanced features generated by the multi-scale attention maps. is the output feature of the PCB. The gray boxes denote convolutional layers; (k, s, f) denote kernel size k, stride s and number of filters f.
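
The caption does not spell out how the multi-scale attention maps are formed, so the NumPy sketch below is only one hypothetical reading: queries come from every pixel, while keys and values come from a downsampled copy of the features at each stride in (4, 8, 16) (the setting marked as ours in Table 6), and the per-scale enhanced features are summed with the input. Average pooling stands in for the strided convolution, and the summation fusion is an assumption; neither is confirmed by the text. What the sketch does show reliably is the size of the attention maps, which shrinks from N × N to N × N/s² at stride s.

```python
import numpy as np

def softmax_rows(s):
    s = s - s.max(axis=1, keepdims=True)             # stabilize before exponentiating
    e = np.exp(s)
    return e / e.sum(axis=1, keepdims=True)

def avg_pool(x, s):
    """Non-overlapping s x s average pooling; H and W must be divisible by s.
    Stand-in (assumption) for a strided convolution with kernel size = stride = s."""
    c, h, w = x.shape
    return x.reshape(c, h // s, s, w // s, s).mean(axis=(2, 4))

def pyramid_context(x, params, strides=(4, 8, 16)):
    """Hypothetical multi-scale non-local aggregation (not the authors' code).

    params: one (w_theta, w_phi, w_g, w_z) tuple per stride, shaped like the
    non-local block weights: (C', C), (C', C), (C', C), (C, C').
    """
    c, h, w = x.shape
    xf = x.reshape(c, h * w)
    out = xf.copy()                                  # residual path
    maps = []
    for s, (w_theta, w_phi, w_g, w_z) in zip(strides, params):
        kv = avg_pool(x, s).reshape(c, -1)           # pooled keys/values: (C, N / s^2)
        a = softmax_rows((w_theta @ xf).T @ (w_phi @ kv))   # (N, N / s^2)
        out = out + w_z @ ((w_g @ kv) @ a.T)         # add this scale's enhanced feature
        maps.append(a)
    return out.reshape(c, h, w), maps

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 32, 32))
params = [(rng.standard_normal((2, 4)), rng.standard_normal((2, 4)),
           rng.standard_normal((2, 4)), rng.standard_normal((4, 2))) for _ in range(3)]
y, maps = pyramid_context(x, params)
print(y.shape, [m.shape for m in maps])
# (4, 32, 32) [(1024, 64), (1024, 16), (1024, 4)]
```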

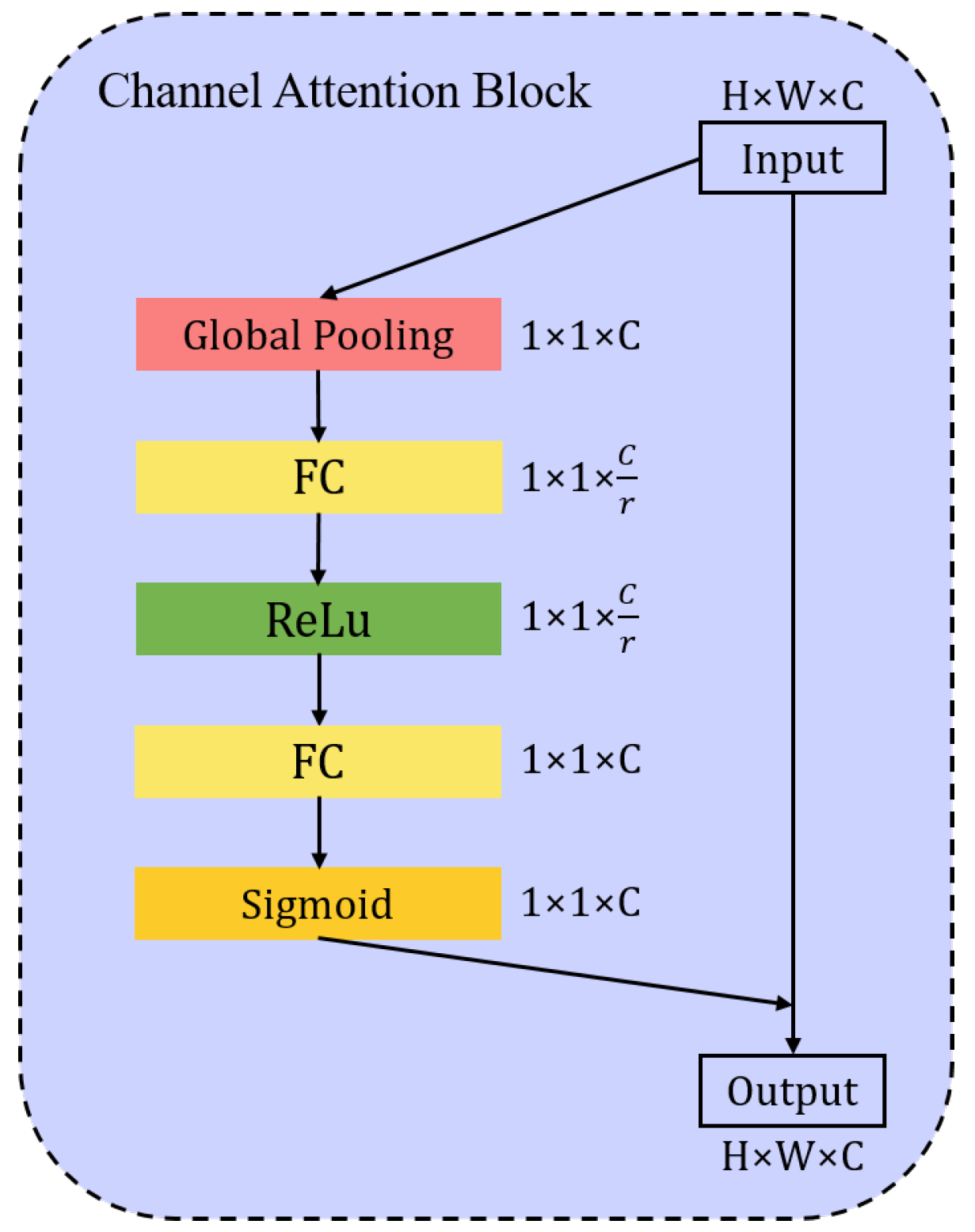

Figure 6.

The architecture of the Channel Attention Block. Squeeze and resampling operations turn the feature map into a channel descriptor of . The descriptor is then activated by a self-gating mechanism based on channel dependencies, and the feature maps are reweighted to generate an output that can be fed directly into subsequent layers.
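
A squeeze-and-excitation style gate is one common way to realize the squeeze, self-gating and reweighting steps of the Channel Attention Block. The NumPy sketch below assumes global average pooling as the squeeze, a two-layer fully connected bottleneck with ReLU and sigmoid, and a reduction ratio of 4; the caption also mentions a resampling operation, which this sketch does not model.

```python
import numpy as np

def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style channel attention on x: (C, H, W).

    w1: (C // r, C) reduction layer; w2: (C, C // r) expansion layer.
    """
    z = x.mean(axis=(1, 2))                      # squeeze: global average pool -> (C,)
    hidden = np.maximum(w1 @ z, 0.0)             # FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))     # FC + sigmoid self-gating, weights in (0, 1)
    return x * s[:, None, None], s               # rescale every channel by its weight

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8, 8))
w1 = rng.standard_normal((4, 16))                # reduction ratio r = 4 (assumption)
w2 = rng.standard_normal((16, 4))
y, s = channel_attention(x, w1, w2)
```

The output keeps the input shape, which is why it can be passed straight to the following layers.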

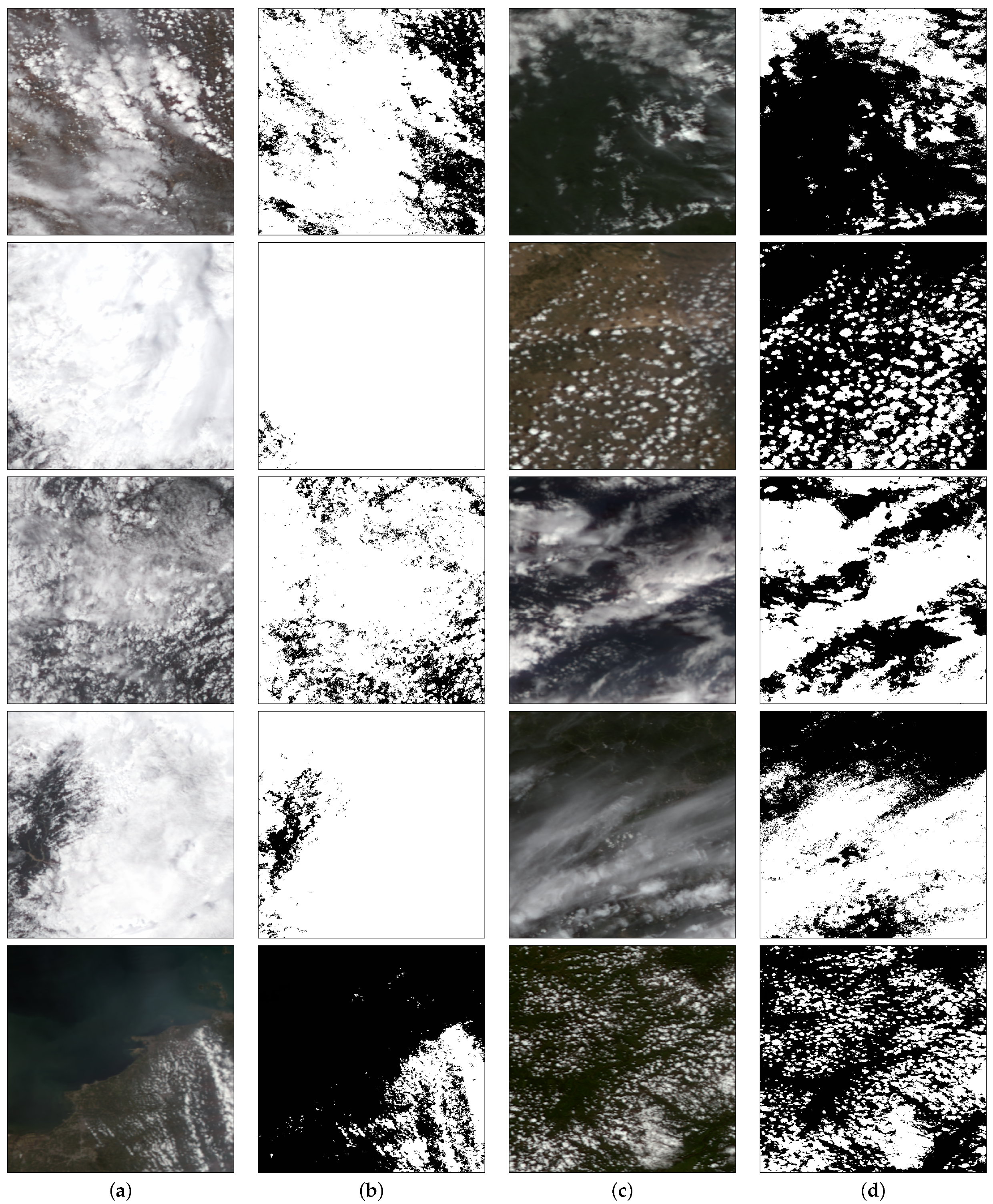

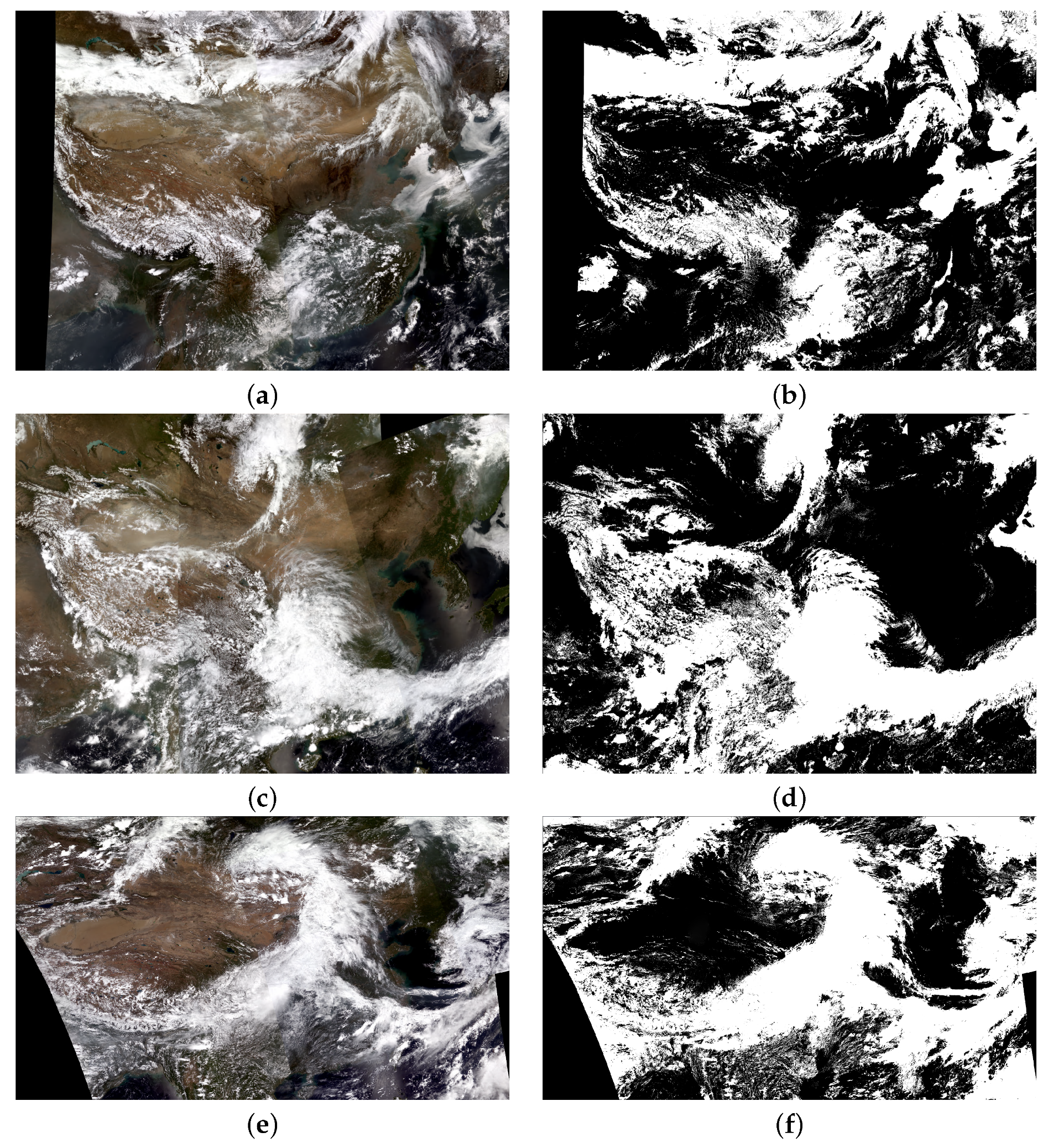

Figure 7.

Samples from our dataset, which covers different land covers, including oceans, deserts, forests, grasslands, and others. (a,c) Cloud images; (b,d) masks.

Figure 8.

Loss and F1 Score of PCNet on the training and validation sets. (a) Training and validation loss versus epoch. (b) Training and validation F1 Score versus epoch.

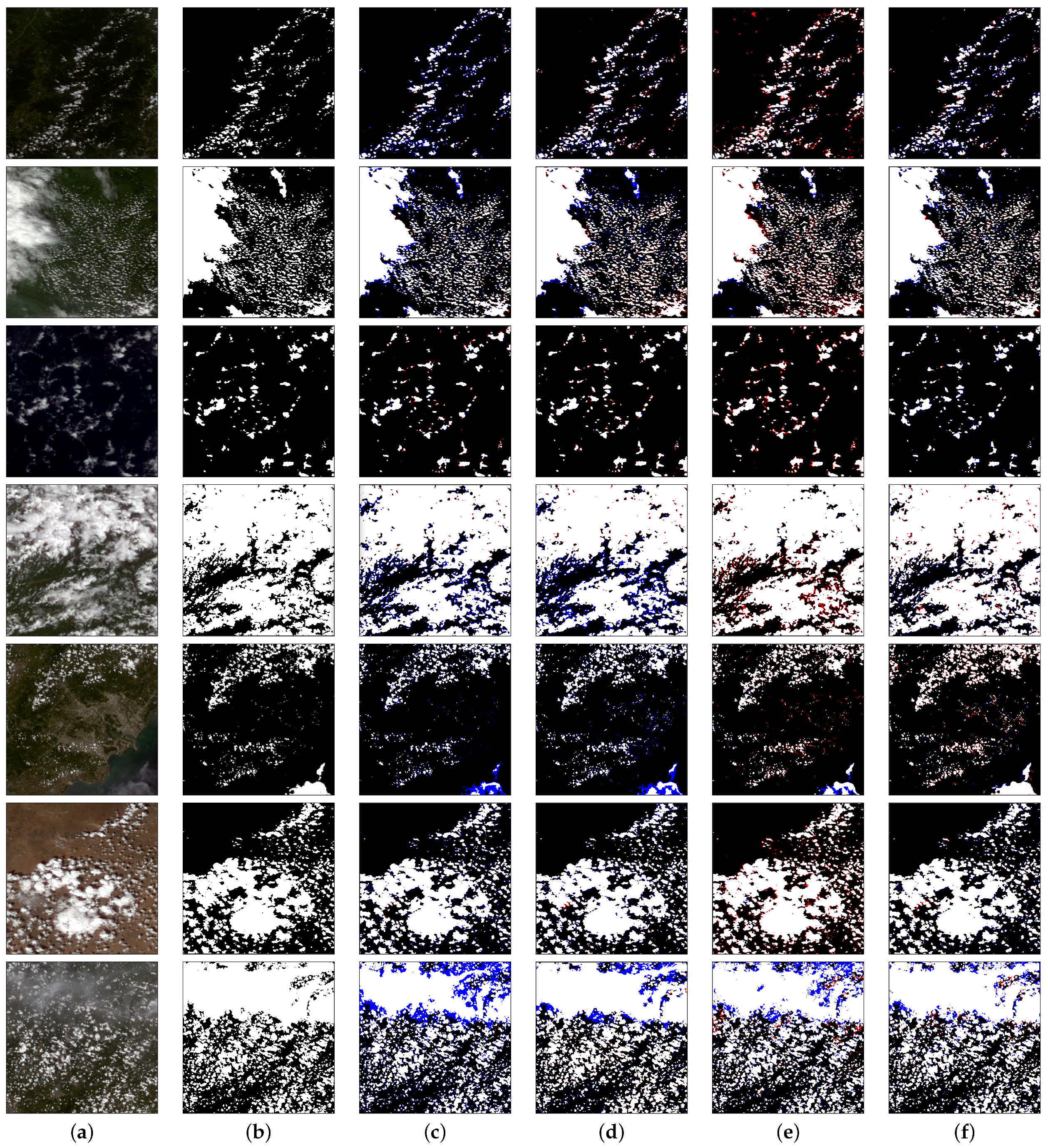

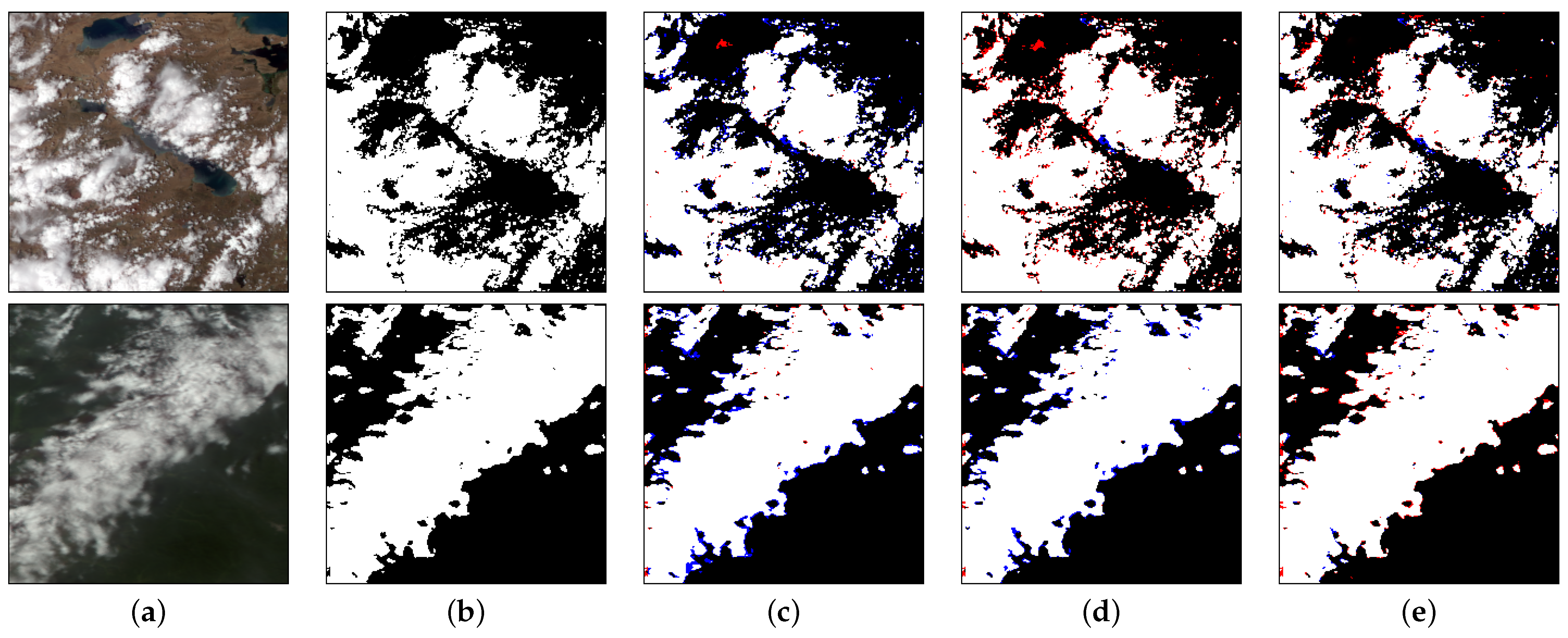

Figure 9.

Visualization of cloud-detection results. The first column (a) is the true-color FY-3D remote-sensing imagery; the second column (b) shows the corresponding ground truth; the third column (c) shows the results generated by UNet++; the fourth column (d) shows the results generated by UNet3+; the fifth column (e) shows the prediction results of DeepLabV3+; the sixth column (f) shows the prediction results of our method. White, red, blue and black denote TP, FP, FN and TN, respectively. (a) Input; (b) Ground Truth; (c) UNet++ [50]; (d) UNet3+ [51]; (e) DeepLabV3+ [53]; (f) Ours.
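
The white/red/blue/black color coding used in these comparison figures can be reproduced from a binary prediction and its ground-truth mask. A minimal NumPy sketch, assuming both masks use 1 for cloud and 0 for clear sky; the function name `error_map` and the exact RGB values are illustrative choices.

```python
import numpy as np

# RGB color per outcome, following the captions: white TP, red FP, blue FN, black TN
COLORS = {
    "TP": (255, 255, 255),
    "FP": (255, 0, 0),
    "FN": (0, 0, 255),
    "TN": (0, 0, 0),
}

def error_map(pred, gt):
    """Color-code a binary cloud prediction against the ground truth."""
    pred = np.asarray(pred).astype(bool)
    gt = np.asarray(gt).astype(bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)   # TN pixels stay black
    rgb[pred & gt] = COLORS["TP"]                        # cloud detected correctly
    rgb[pred & ~gt] = COLORS["FP"]                       # clear sky flagged as cloud
    rgb[~pred & gt] = COLORS["FN"]                       # cloud missed
    return rgb

rgb = error_map([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```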

Figure 10.

Cloud-detection results over China. (a,c,e) True-color FY-3D MERSI 250 m remote-sensing images. (b,d,f) Cloud masks generated by our method. (a) FY-3D MERSI 250 m true-color image of China, 1 April 2018. (b) Result generated by our method. (c) FY-3D MERSI 250 m true-color image of China, 1 June 2019. (d) Result generated by our method. (e) FY-3D MERSI 250 m true-color image of China, 3 July 2020. (f) Result generated by our method.

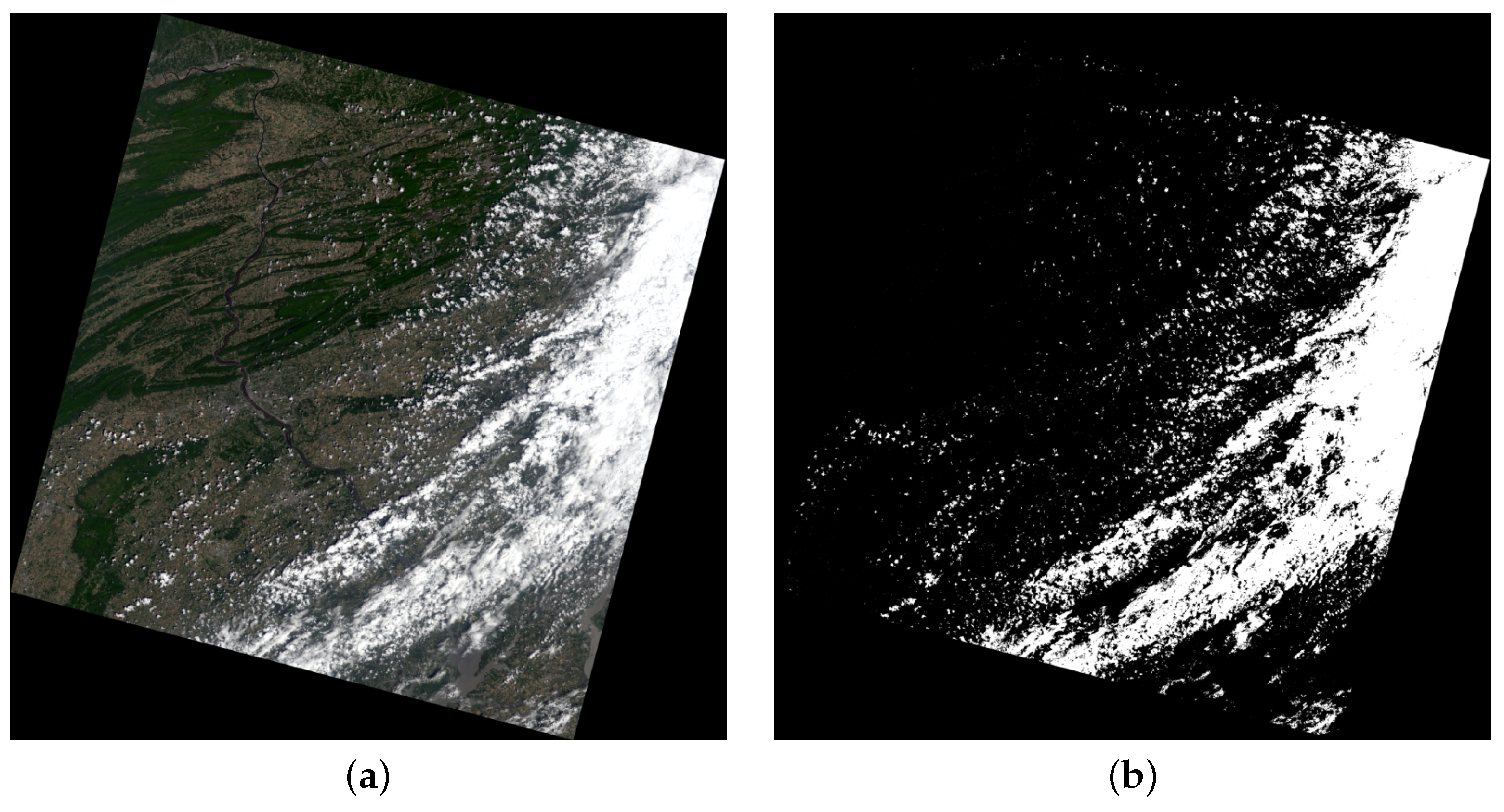

Figure 11.

The visualization of results for LC08_L1TP_015032_20210614_20210622_01_T1. (a) True-color imagery of Landsat 8. (b) The cloud mask generated by our method.

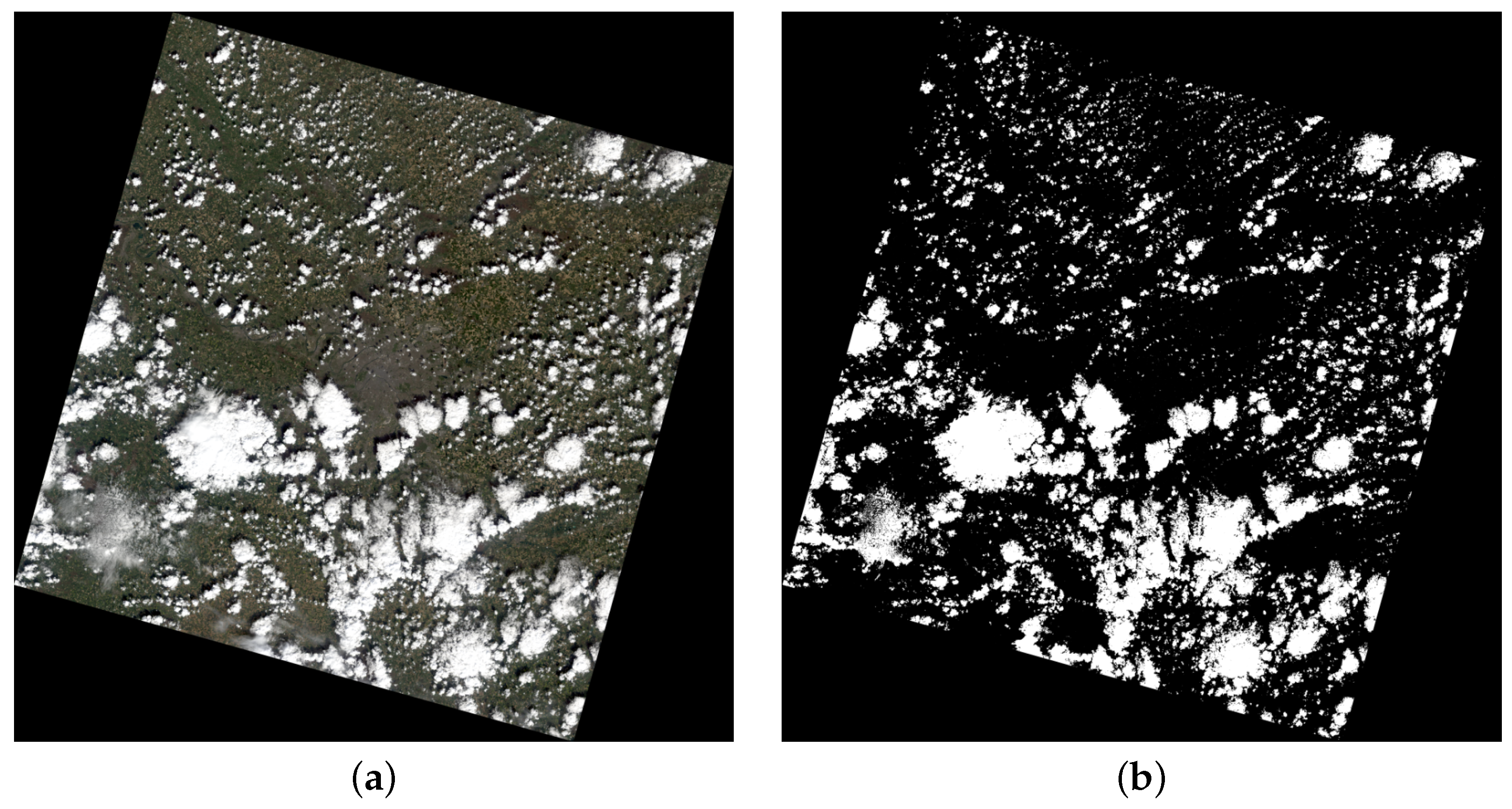

Figure 12.

The visualization of results for LC08_L1TP_199026_20210420_20210430_01_T1. (a) True-color imagery of Landsat 8. (b) The cloud mask generated by our method.

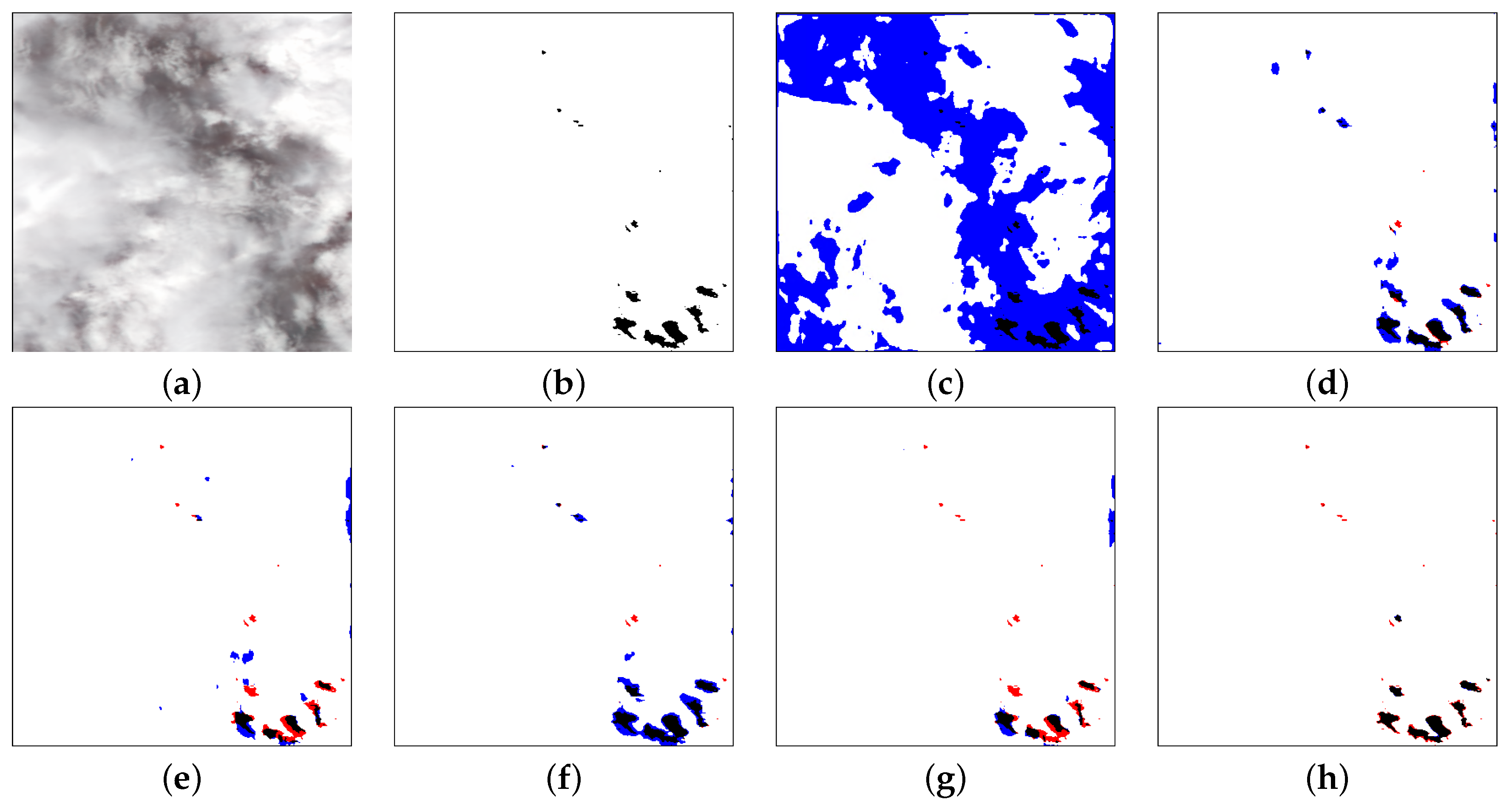

Figure 13.

Comparison of cloud-detection results. From left to right: (a) input; (b) ground truth; (c) dilated rate = ; (d) dilated rate = ; (e) dilated rate = ; (f) dilated rate = ; (g) dilated rate = ; (h) dilated rate = . White, red, blue and black denote TP, FP, FN and TN, respectively.

Figure 14.

Comparison of cloud-detection results. From left to right: (a) input; (b) ground truth; (c) dilated rate = ; (d) dilated rate = ; (e) dilated rate = ; (f) dilated rate = ; (g) dilated rate = ; (h) dilated rate = . White, red, blue and black denote TP, FP, FN and TN, respectively.

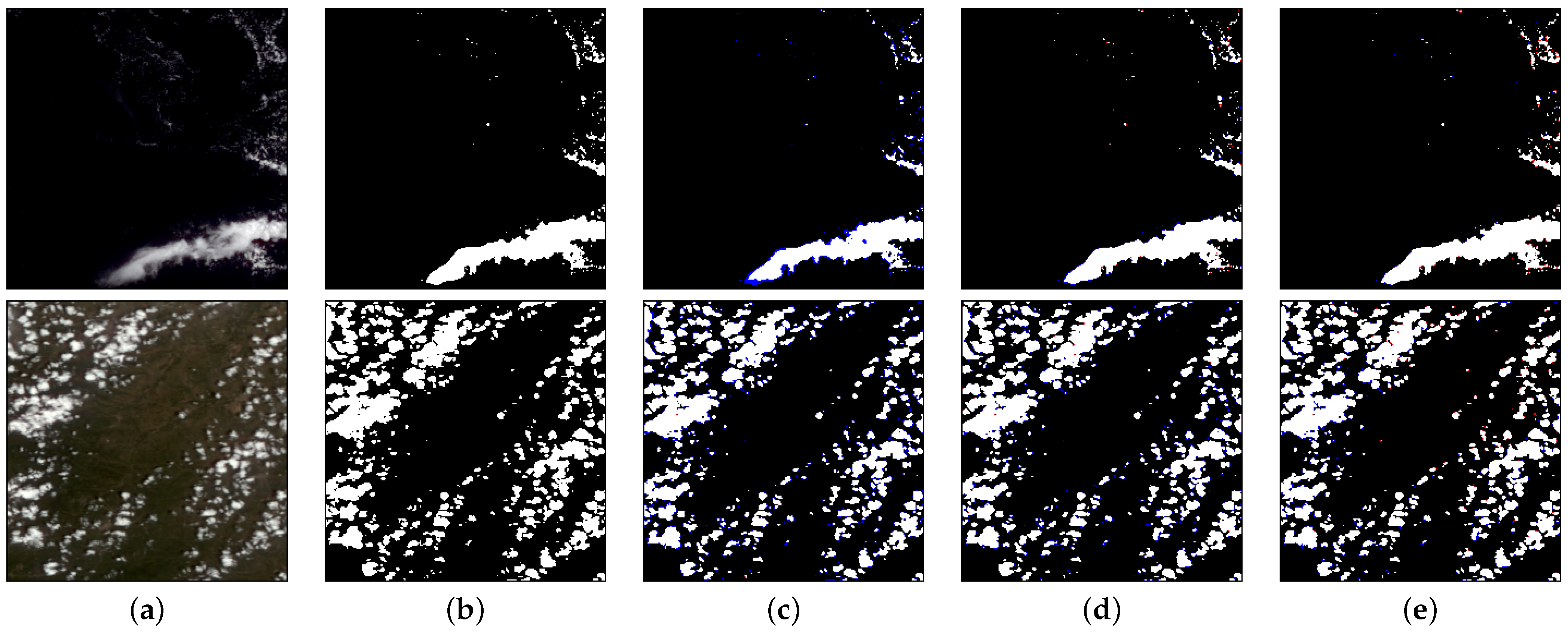

Figure 15.

Comparison of cloud-detection results. From left to right: (a) input; (b) ground truth; (c) strides = ; (d) strides = ; (e) strides = . White, red, blue and black denote TP, FP, FN and TN, respectively.

Figure 16.

Comparison of cloud-detection results. From left to right: (a) input; (b) ground truth; (c) PCB replaced with DRB; (d) PCB replaced with a Non-Local Block; (e) our method. White, red, blue and black denote TP, FP, FN and TN, respectively.

Table 1.

Image distribution of cloud coverage in training and test datasets.

| Cloud Coverage | Number in Training Set | Ratio in Training Set | Number in Test Set | Ratio in Test Set |
|---|---|---|---|---|
| | 1139 | 20.46% | 319 | 22.87% |
| | 1251 | 22.47% | 279 | 20.05% |
| | 1484 | 26.66% | 383 | 27.55% |
| | 1693 | 30.41% | 411 | 29.53% |

Table 2.

The structure of Dilated Residual Block (input size = ).

| Layer | Kernel | Stride | Dilated Rate | Padding | Output |
|---|---|---|---|---|---|
| Dilated Convolution | 3 | 1 | 1 | 1 | |
| BN + LeakyReLU | | | | | |
| Residual | | | | | |
| Dilated Convolution | 3 | 1 | 2 | 2 | |
| BN + LeakyReLU | | | | | |
| Residual | | | | | |
| Dilated Convolution | 3 | 1 | 4 | 4 | |
| BN + LeakyReLU | | | | | |
| Residual | | | | | |
| Dilated Convolution | 3 | 1 | 2 | 2 | |
| BN + LeakyReLU | | | | | |
| Residual | | | | | |
| Dilated Convolution | 3 | 1 | 1 | 1 | |
| BN + LeakyReLU | | | | | |
| Residual | | | | | |
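
The configuration in Table 2 can be checked with a small NumPy sketch of the DRB forward pass: five 3 × 3 dilated convolutions with stride 1 and padding equal to the dilated rate (1, 2, 4, 2, 1), each followed by BN, LeakyReLU and a residual addition. Because padding equals the dilated rate, every layer keeps the spatial size, and the stacked receptive field is 1 + 2·(1 + 2 + 4 + 2 + 1) = 21 pixels. The naive convolution loop, the affine-free single-sample BN, and the LeakyReLU slope of 0.01 are simplifying assumptions, not the authors' implementation.

```python
import numpy as np

def dilated_conv3x3(x, w, dilation):
    """'Same' 3x3 dilated convolution, stride 1, padding = dilation.

    x: (C_in, H, W); w: (C_out, C_in, 3, 3); returns (C_out, H, W).
    """
    _, h, wd = x.shape
    pad = dilation                                # padding = dilation keeps H, W for k = 3
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Input window seen by kernel tap (i, j), offset by multiples of the dilation
            patch = xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out

def batch_norm(x, eps=1e-5):
    """Simplified BN: per-channel statistics of one sample, no learned scale/shift."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def leaky_relu(x, slope=0.01):                    # slope is an assumption
    return np.where(x > 0, x, slope * x)

def drb_forward(x, weights, dilations=(1, 2, 4, 2, 1)):
    """Dilated Conv-BN-LeakyReLU groups, each with a residual connection (Table 2)."""
    for w, d in zip(weights, dilations):
        x = x + leaky_relu(batch_norm(dilated_conv3x3(x, w, d)))
    return x

def receptive_field(dilations, k=3):
    """Receptive field of stacked stride-1 convolutions with kernel size k."""
    return 1 + sum((k - 1) * d for d in dilations)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 32, 32))
weights = [0.1 * rng.standard_normal((8, 8, 3, 3)) for _ in range(5)]
y = drb_forward(x, weights)
print(y.shape)                                    # (8, 32, 32)
print(receptive_field((1, 2, 4, 2, 1)))           # 21
```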

Table 3.

Quantitative comparison of cloud detection with Precision, Recall, F1 Score and Accuracy. The best results are marked in bold font.

| Method | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| U-Net [49] | 0.965 | 0.849 | 0.903 | 0.850 |
| UNet++ [50] | **0.973** | 0.845 | 0.905 | 0.846 |
| UNet3+ [51] | 0.969 | 0.917 | 0.942 | 0.901 |
| PSPNet [52] | 0.951 | 0.860 | 0.903 | 0.855 |
| DeepLabv3+ [53] | 0.963 | 0.881 | 0.919 | 0.862 |
| Our method | 0.971 | **0.932** | **0.951** | **0.917** |
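
The four metrics in Table 3 follow the usual pixel-level definitions from TP, FP, FN and TN counts. The NumPy sketch below assumes counts pooled over all pixels; whether the reported numbers are pooled or averaged per image is not stated here. As a consistency check, `f1_from_pr(0.971, 0.932)` rounds to 0.951, matching the row for our method.

```python
import numpy as np

def confusion_counts(pred, gt):
    """Pixel-level TP, FP, FN, TN for binary masks (1 = cloud)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    tn = int(np.sum(~pred & ~gt))
    return tp, fp, fn, tn

def f1_from_pr(precision, recall):
    """F1 Score: harmonic mean of precision and recall."""
    s = precision + recall
    return 2 * precision * recall / s if s else 0.0

def cloud_metrics(pred, gt):
    """Return (Precision, Recall, F1 Score, Accuracy)."""
    tp, fp, fn, tn = confusion_counts(pred, gt)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1_from_pr(precision, recall), accuracy

print(cloud_metrics([[1, 1, 0, 0]], [[1, 0, 1, 0]]))   # (0.5, 0.5, 0.5, 0.5)
print(round(f1_from_pr(0.971, 0.932), 3))               # 0.951
```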

Table 4.

The effect of the sigmoid classifier's threshold on cloud detection.

| Threshold | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| 0.2 | 0.617 | 0.998 | 0.762 | 0.835 |
| 0.3 | 0.821 | 0.986 | 0.896 | 0.887 |
| 0.4 | 0.932 | 0.950 | 0.941 | 0.899 |
| 0.5 | 0.971 | 0.932 | 0.951 | 0.917 |
| 0.6 | 0.986 | 0.909 | 0.946 | 0.914 |
| 0.7 | 0.991 | 0.850 | 0.915 | 0.882 |
| 0.8 | 0.997 | 0.589 | 0.741 | 0.827 |
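
Table 4 varies the cut-off applied to the sigmoid output. The NumPy sketch below shows the mechanism on four hypothetical pixels (the logits and labels are made up for illustration): raising the threshold keeps fewer pixels as cloud, which tends to increase precision and decrease recall, the same trade-off the table reports.

```python
import numpy as np

def sigmoid(logits):
    return 1.0 / (1.0 + np.exp(-logits))

def precision_recall_f1(pred, gt):
    """Pixel-level precision, recall and F1 for boolean masks."""
    tp = np.sum(pred & gt)
    fp = np.sum(pred & ~gt)
    fn = np.sum(~pred & gt)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return float(p), float(r), float(f1)

def sweep(logits, gt, thresholds=(0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8)):
    """Binarize sigmoid probabilities at each threshold and score the result."""
    probs = sigmoid(np.asarray(logits, dtype=float))
    gt = np.asarray(gt, dtype=bool)
    return {t: precision_recall_f1(probs >= t, gt) for t in thresholds}

# Hypothetical pixels: probabilities ~0.88, 0.62, 0.38, 0.12; labels cloud/clear/cloud/clear
res = sweep([2.0, 0.5, -0.5, -2.0], [1, 0, 1, 0])
for t, (p, r, f1) in res.items():
    print(f"t={t}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```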

Table 5.

Effect of the number of blocks and the dilated rates on cloud detection.

| Number of Blocks | Dilated Rate | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|---|
| 4 | | 0.904 | 0.827 | 0.864 | 0.845 |
| 4 | | 0.924 | 0.885 | 0.904 | 0.861 |
| 4 | | 0.911 | 0.863 | 0.886 | 0.855 |
| 5 | | 0.953 | 0.864 | 0.906 | 0.881 |
| 5 | | 0.969 | 0.915 | 0.941 | 0.901 |
| 5 | | 0.961 | 0.925 | 0.942 | 0.903 |
| 5 | | 0.955 | 0.911 | 0.932 | 0.894 |
| 5 | | 0.947 | 0.915 | 0.930 | 0.893 |
| 5 | Our method | 0.971 | 0.932 | 0.951 | 0.917 |
| 6 | | 0.873 | 0.801 | 0.835 | 0.822 |
| 6 | | 0.884 | 0.833 | 0.858 | 0.837 |
| 6 | | 0.902 | 0.876 | 0.889 | 0.856 |

Table 6.

The effectiveness of different kernel sizes and strides in PCB for cloud detection.

| Strides | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| (2, 4, 8) | 0.973 | 0.905 | 0.938 | 0.898 |
| (8, 16, 32) | 0.964 | 0.874 | 0.917 | 0.859 |
| (4, 8, 16) (Our method) | 0.971 | 0.932 | 0.951 | 0.917 |

Table 7.

The effectiveness of PCB for cloud detection. Params and MACs are calculated for an input size of .

| Method | Precision | Recall | F1 Score | Accuracy | Params (K) | MACs (G) |
|---|---|---|---|---|---|---|
| Only DRB | 0.913 | 0.788 | 0.846 | 0.784 | 885.057 | 58.014 |
| NLB | 0.969 | 0.883 | 0.924 | 0.866 | 4533.377 | 34.966 |
| PCB | 0.971 | 0.932 | 0.951 | 0.917 | 4666.497 | 37.630 |

Table 8.

The effectiveness of CAB for cloud detection.

| Method | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| w/o CAB | 0.965 | 0.927 | 0.945 | 0.905 |
| CAB | 0.971 | 0.932 | 0.951 | 0.917 |