Abstract

Heritage buildings are often lost without being adequately documented. Significant research has gone into automated building modelling from point clouds, challenged by irregularities in building design and the presence of occlusion-causing clutter and non-Manhattan World features. Previous work has been largely focused on the extraction and representation of walls, floors, and ceilings from either interior or exterior single storey scans. Significantly less effort has been concentrated on the automated extraction of smaller features such as windows and doors from complete (interior and exterior) scans. In addition, the majority of the work done on automated building reconstruction pertains to the new-build and construction industries, rather than for heritage buildings. This work presents a novel multi-level storey separation technique as well as a novel door and window detection strategy within an end-to-end modelling software for the automated creation of 2D floor plans and 3D building models from complete terrestrial laser scans of heritage buildings. The methods are demonstrated on three heritage sites of varying size and complexity, achieving overall accuracies of 94.74% for multi-level storey separation and 92.75% for the building model creation. Additionally, the automated door and window detection methodology achieved absolute mean dimensional errors of 6.3 cm.

1. Introduction

Indoor modelling and 3D building reconstruction are important parts of various industries, including heritage management, construction, smart city modelling, and navigation. In digital heritage, the advent of laser scanning has dramatically increased the ability to create accurate 3D models for the purpose of archiving, public outreach, and creating heritage building information models (HBIM). However, there is still room for improvement when it comes to the automated creation of these building models. The representation of doors and windows adds to the completeness of a 3D building model or 2D floor plan created from a point cloud. In addition, the presence of multi-level floors and ceilings cannot be dealt with by existing storey separation algorithms. The goal of this modelling project is the generation of a complete building model including floors, ceilings, walls, doors, and windows, along with novel detection methodologies for multi-level storey separation and door and window detection.

Standard practice for the creation of 2D floor plans or 3D building models from point clouds involves the manual tracing of distinguishable features in a CAD environment [1]. The point cloud is manipulated, and features such as walls, floors, ceilings, doors, and windows are manually delineated by tracing along the outlines of the features. These extracted features are then combined to create a building information model (BIM). This practice introduces plentiful opportunities for human error and subjectivity. Subjective modelling occurs when a modeller’s possession or lack of knowledge affects the outcome of the modelling process. This can influence the correctness and accuracy of the manually extracted models. In addition, this manual modelling strategy is tedious and time-consuming to perform.

There are gaps in the current work on building modelling when it comes to the automated separation of multi-level storeys from multi-storey buildings. The vast majority of existing building reconstruction algorithms are designed to work on a single storey, as this largely simplifies the modelling process. For multi-storey buildings, the individual storeys must be separated before the modeling can begin. This has been successfully accomplished through the use of vertical histograms for identifying the planar slabs representing floors and ceilings within a multi-storey building. However, the presence of multi-level storeys cannot be dealt with using current storey separation techniques. This work presents a new method for the automated separation of multi-level building storeys.

Doors and windows are important when it comes to the creation of 2D floor plans or 3D building models because they can help in applications such as navigation, emergency simulations, and defining the contextual relationships between buildings and their inhabitants. This last application is important when it comes to the modelling of heritage buildings, how they have changed through time, and understanding how they were once used. In some cases, doors and windows are manually added into a model after either a manual or an automatic extraction of the walls has been completed [2,3]. The automated methods that currently exist for the extraction of doors and windows often depend on additional image-based datasets to accompany the point clouds. Existing methods are also highly regularized and expect to detect doors and windows of predefined dimensions. This work presents a new, non-regularized method for the automated extraction of doors and windows from point clouds using only geometric information.

This paper begins with a description of previous work on both storey separation and door and window extraction from point clouds in Section 2. The methodologies for the novel multi-level storey separation and door and window extraction are presented in Section 3. The heritage datasets are described in Section 4, and the results of the developed methodologies are presented in Section 5. Finally, conclusions regarding the novel methodologies developed in this work are drawn in Section 6.

2. Related Work

From an automated modelling perspective, significant work has been done on the creation of both 2D floor plans and 3D building models from point clouds. The majority of previous work has focused on the implementation of data-driven methods for the extraction of recognizable features from point clouds [4]. An array of statistical methods such as the Hough Transform [5,6], RANSAC [1], PCA [7], and region growing [8] have been used to perform point cloud segmentation for building reconstruction. More commonly, a combination of multiple statistical methods is employed to perform point cloud segmentation [9,10]. Machine learning methods have also been employed to segment and classify building features from a point cloud [11], proving more successful than data-driven methods in complex environments with high levels of occlusion, but at the cost of high computational complexity [12,13].

2.1. Storey Separation

Storey separation involves breaking a large, multi-storey building down into individual storeys. The vast majority of building reconstruction algorithms have been designed to work on one storey at a time, so when a complex dataset with more than one storey needs to be modelled, the individual storeys are split up and each storey is processed by the algorithm individually.

In previous work, separating multiple storeys from a single building has assumed that the floor or ceiling can be represented by a single horizontal plane for an entire storey. It has also been assumed in previous work that the floor and ceiling of each storey are perpendicular to the local gravity vector. The most popular method for storey separation has focused on identifying the peaks and nadir sections in a vertical histogram [14,15,16]. These methods assume that the locations of the histogram peaks represent the floors and ceilings of a building’s storeys, and that the nadir sections represent the space between storeys. However, none of these existing methods can perform automatic storey separation on multi-level storeys.

In complex buildings, it is possible to have multiple floor or ceiling heights within the same storey. These design decisions add to the complexity of the storey-separation process. In some cases where storeys vary in height or where boundaries vary from storey to storey, the floor and ceiling of adjacent storeys can overlap. Therefore, it cannot be assumed that all the points belonging to a floor or ceiling slab will fall within the same vertical histogram bin. This calls for a more localized approach, individually reconstructing each floor or ceiling slab in an iterative process and checking for coverage achieved by the slab in relation to the dimensions of the building. Using the boundaries of the building will help in correctly assigning the points to their respective floor or ceiling slab.

2.2. Door and Window Extraction

The problem of door and window detection has not been explored in as much depth as other elements of indoor 3D building modelling and has only been examined on a dataset-specific basis. As noted by Babacan et al. [17], door detection has only arisen in very recent studies. The detection of doors, extremely common indoor building elements, is useful for understanding the environmental structure in order to perform efficient navigation or to plan appropriate evacuation routes. In previous work, door and window detection for indoor scenes captured as point clouds has followed two main strategies: based on holes or based on edges [18]. The first strategy is focused on finding holes within extracted planar walls, either using point density analysis or ray tracing. The second is based on finding edges within, most commonly, an image-based dataset and then connecting adjacent edges using assumptions about the expected size and shape of existing window and door features. However, holes in the dataset caused by occlusions need to be distinguished from holes in the dataset caused by open doors or windows. This is a prevalent challenge in the current state of door and window extraction, especially in as-built indoor modelling such as heritage modelling, where the presence of clutter and occlusions is much higher than that in new-build or construction modelling.

The starting point for most window and door extraction techniques is the identification of holes or gaps within an extracted planar feature, as seen in [8,19]. The developed wall line tracing algorithm in [8] uses PCA, and the wall opening algorithm uses region growing to extend the detected features. In [19], the edges of these holes are detected using a least squares fit to a line, and then segmented and classified into four sets of top, bottom, left, and right to represent the outlines of door and window features. In [20], a voxel-based visibility analysis using ray-tracing is performed to recognize openings in planar features. In [21], a mobile laser scanning (MLS) point cloud is captured from an exterior perspective, and scanline analysis is used to detect holes within the planes that could be door or window candidates. These openings are found by recognizing patterns of repeating gaps in the scanlines and then searching for collinear segments representing the edges of a door or window feature.

Furthermore, the diagonal representations of an open door in the final point cloud have been used in previous work, including [17], to help identify doors. The method presented in [17] investigates the connectivity of the extracted line segments and uses the anchor position of an open door to help identify one edge of a doorway. Most similar to the method developed in this work is the computation of wall volumes presented in [19]. In this method, wall volumes are found by determining a wall’s closest neighbour; however, wall volumes are not employed when looking for window and door candidates.

Previous work that has focused on the extraction of windows and/or doors from laser scan data has used a variety of assumptions. In some cases, such as [15], assumptions are made about the expected dimensions of doors. Similar to [19], they assume that a door is lower than the height of the wall in which it is contained, whereas an opening extends the entire height of the wall and reaches the ceiling. Assumptions in [20] include Manhattan-World geometry, access to a priori architectural information concerning the building, and that the doors and windows are a consistent size throughout the building. In [22], ground plan contours are extracted using cell decomposition guided by a translational sweep algorithm. Rules are defined by human knowledge about building features, and doors are detected by assuming that they will produce gaps within a planar surface at a height of one meter. A regularized approach is common, as the linear nature of door and window features can often be depended on. This is prevalent in the work of [8]. Another common method of door detection is presented in [23], where knowledge of the scanner’s position within an indoor environment is required. This method proved successful in determining windows and doors from a single room; however, it was untested on larger, more complex datasets with multiple rooms or hallways.

Many strategies employed for door and window extraction have made use of point cloud data, images and/or color data. In [24], differences in visual brightness, infrared opaqueness, and point density were used to detect windows from an interior perspective using both LiDAR and images. This involves the use of a trained classifier to identify window or glass regions based on the refractivity of their returns. Presented in [25] is a data-driven method for geometric reconstruction of indoor structural elements using the point cloud, and a model-driven method for the recognition of closed doors in image data based on the generalized 2D Hough Transform to detect linear edges. The model-based approach to door detection proves relatively robust to outliers and occlusion. Similarly, in [26], the coplanarity of doors and walls is solved by using a colorized approach. This method uses both the color information along with geometry to detect doors as openings in voxelized planar walls. Of course, this assumes that the door is of a different color to the surrounding wall plane.

2.3. Summary

As shown in this section, there are gaps in the current state-of-the-art for storey separation and door and window detection. For storey segmentation, the improvement comes from the development of an algorithm that can deal with multi-level storeys and is not solely dependent on the location of the full bins in the vertical histogram. The door and window extraction method developed in this work allows for the purely automatic extraction of doors and windows from wall-defined search spaces. This comes from having complete indoor/outdoor scans of the building, as is the convention in heritage modelling.

3. Methodology

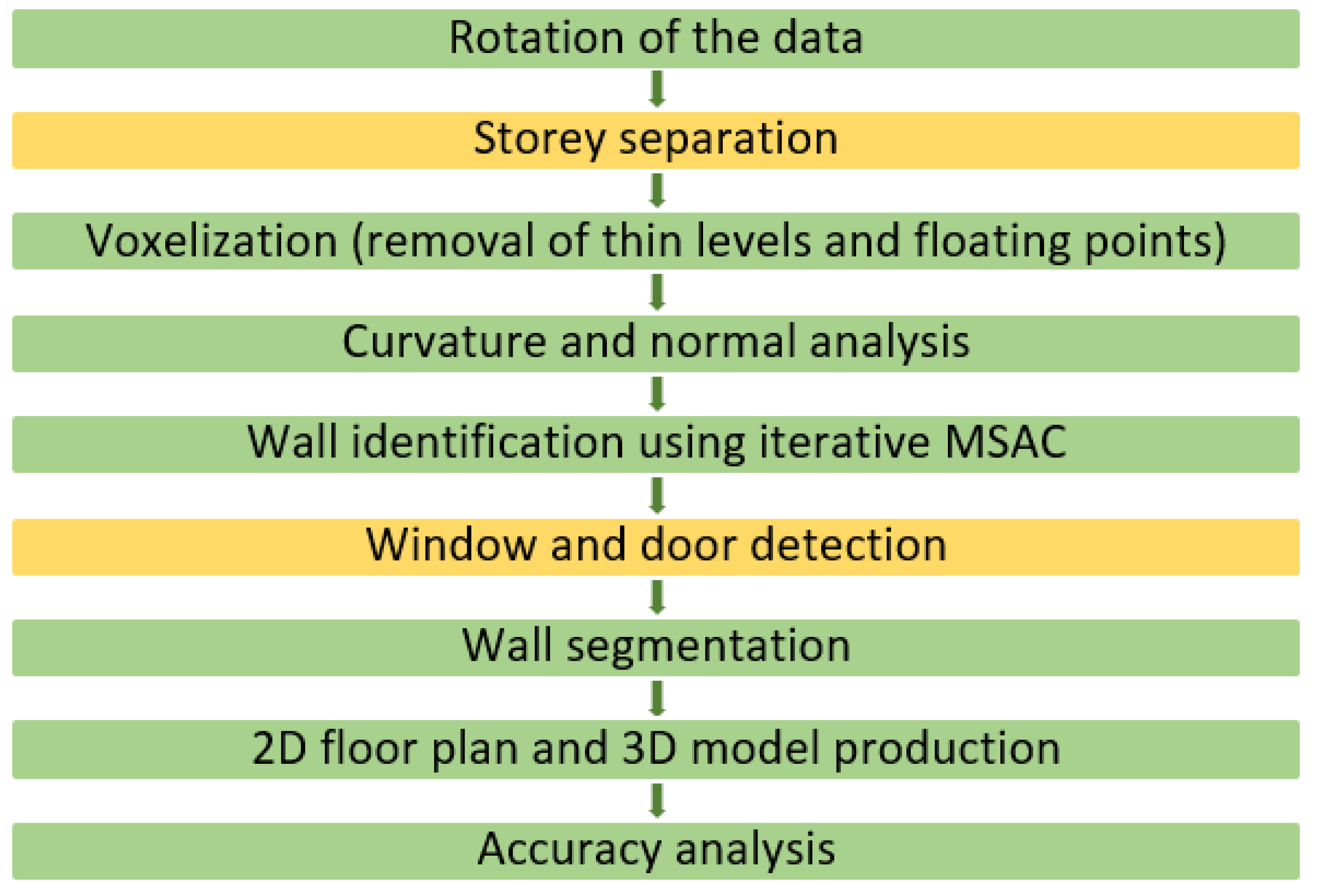

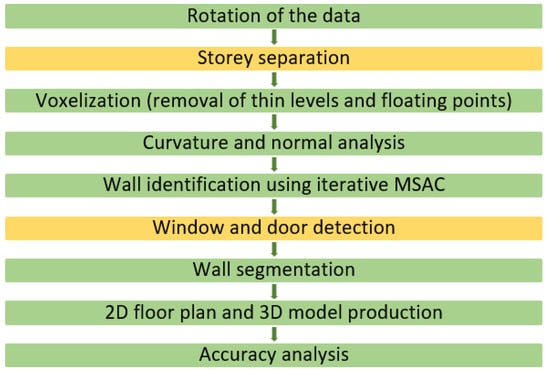

The novel strategies developed in this work for storey segmentation and door and window detection are contained within the complete end-to-end modelling strategy for automatically creating 2D floor plans and 3D building models from point clouds. The complete methodology is presented in Figure 1.

Figure 1.

Flowchart of methodology, novel methodologies in yellow.

The datasets used to test the developed methods were downsampled to 5 cm. This was done to make the processing time tractable in an environment such as MATLAB, while preserving the level of detail required to accurately determine the location of the floors and ceilings. The impact of the downsampling on the results will be presented in Section 5.

In order to extract the door and window features, planar features must be detected from the point cloud and used to create the search space. This starts by rotating the point cloud to align with the cardinal X and Y directions. It is assumed that the Z direction is parallel to the local gravity vector, which is ensured by leveling the laser scanner at each scan location. A 2D PCA-based rotation is used to rotate the data around the Z axis to align with the cardinal X and Y directions. In this work, it is assumed that the detected building features are planar and align with the cardinal directions of the dataset.
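The 2D PCA-based alignment can be illustrated with a minimal Python sketch. It is not the MATLAB implementation used in this work: the function name `pca_align_xy`, the tuple-based point representation, and the choice to rotate about the centroid are all assumptions made for illustration.

```python
import math


def pca_align_xy(points):
    """Rotate (x, y, z) points about the Z axis so that the first principal
    horizontal direction lies along X. Returns (rotated_points, angle_rad)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the 2x2 covariance matrix of the horizontal coordinates.
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Orientation of the first principal axis, measured from the X axis.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    c, s = math.cos(theta), math.sin(theta)
    rotated = []
    for x, y, z in points:
        dx, dy = x - mx, y - my
        # Rotate by -theta about the centroid (assumed pivot); Z is untouched.
        rotated.append((mx + c * dx + s * dy, my - s * dx + c * dy, z))
    return rotated, theta
```

Because only X and Y are rotated, the levelled Z axis is left untouched, which matches the assumption that Z is parallel to the local gravity vector.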

3.1. Storey Separation

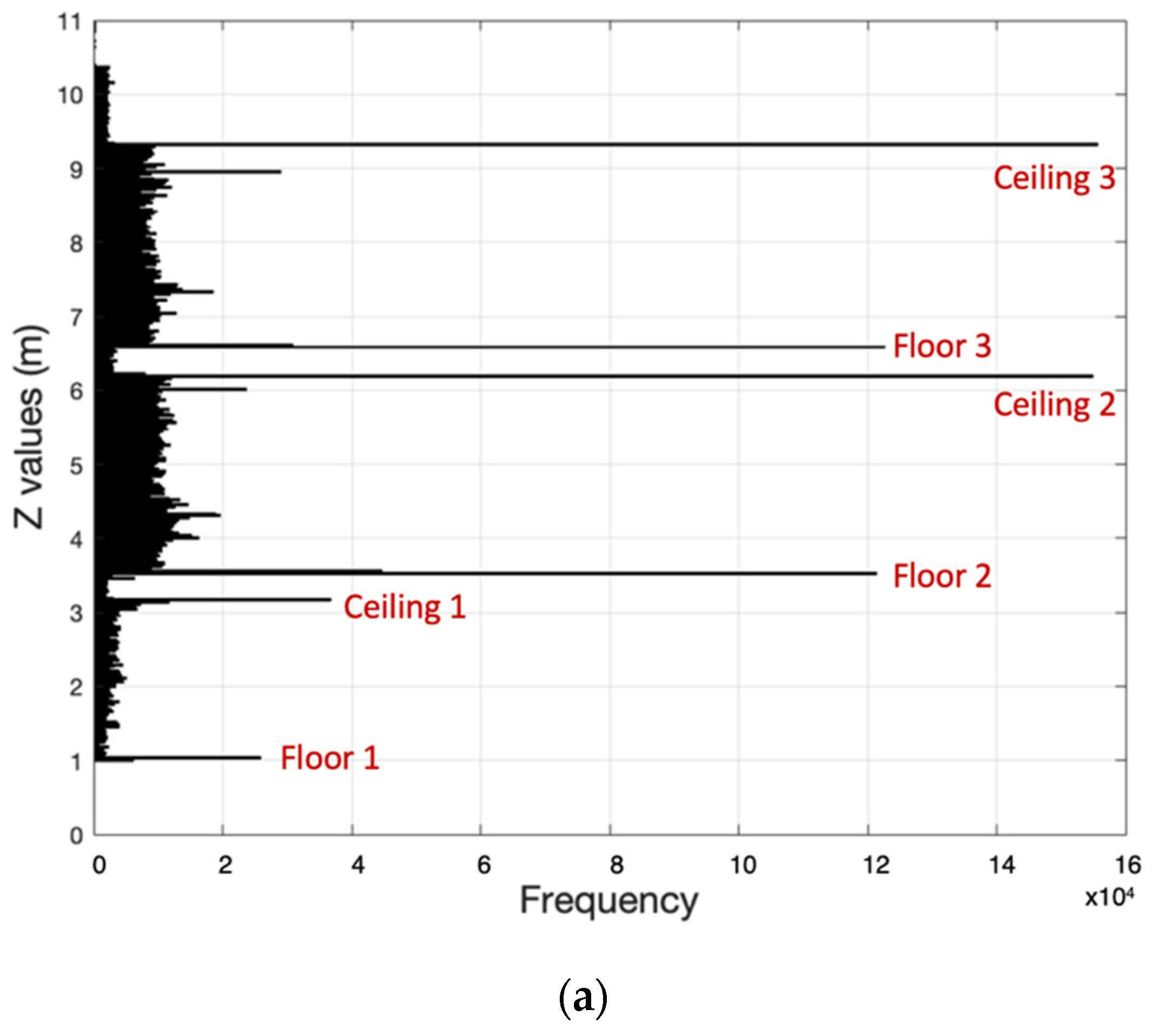

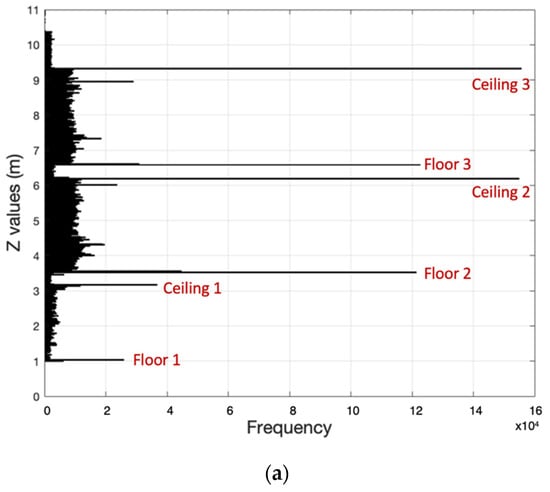

The storey separation algorithm developed in this work employs a vertical histogram to identify horizontal planar surfaces. A vertical histogram illustrates the distribution of points by representing the horizontal structures as bins with large counts, while vertical structures such as walls are depicted as bins with low counts. In addition, objects such as furniture can create local maxima in the vertical histogram. Two examples of vertical histograms of buildings with single level and multi-level storeys are presented in Figure 2.

Figure 2.

Vertical histogram examples: (a) Building with single-level storeys; (b) Building with multi-level storeys.

Figure 2 shows the difference in vertical histograms between a building with three single-level storeys (Figure 2a) compared to a building with four multi-level storeys (Figure 2b). It is evident from Figure 2 that the heights of full bins extracted from a vertical histogram are not enough to identify multi-level floor and ceiling slabs.

In this method, the histogram bins with the most points are extracted from the vertical histogram. A bin size of 3 cm is used for the desired level of detail to be realized. This value was determined through manual experimentation. Smaller bin sizes tended to make the problem intractable by subdividing the planes unnecessarily, while larger bin sizes grouped overlapping ceiling and floor planes together. In addition, non-level floors found in heritage structures exacerbated the problem. In this work, full bins are defined as those whose point counts lie more than three scaled median absolute deviations (MAD) away from the median bin count. The MAD is a more robust measure of dispersion than the standard deviation when detecting outliers [27] and can be calculated as follows:

$$\mathrm{MAD} = \operatorname{median}\left(\left|A_i - \operatorname{median}(A)\right|\right), \quad i = 1, 2, \ldots, N \tag{1}$$

where $A_i$ is the number of points in bin $i$, $N$ is the total number of bins, and $A$ is an N × 1 vector containing the number of total points in each bin of the vertical histogram.
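The full-bin test described above can be sketched in Python as follows. The 3 cm bin size and the three-MAD threshold come from the text; the scale factor of 1.4826 (the usual constant that makes the MAD consistent with the standard deviation for normally distributed data), the restriction to bins above the median, and the function names are assumptions.

```python
import statistics


def vertical_histogram(z_values, bin_size=0.03):
    """Count points per height bin (bin size in metres)."""
    z_min = min(z_values)
    n_bins = int((max(z_values) - z_min) / bin_size) + 1
    counts = [0] * n_bins
    for z in z_values:
        counts[int((z - z_min) / bin_size)] += 1
    return counts


def full_bins(counts, n_mads=3.0, scale=1.4826):
    """Indices of bins whose count exceeds the median by more than
    n_mads scaled MADs (scale factor is an assumed convention)."""
    med = statistics.median(counts)
    mad = scale * statistics.median(abs(c - med) for c in counts)
    return [i for i, c in enumerate(counts) if c > med + n_mads * mad]
```

For example, `full_bins(vertical_histogram(z))` would return the indices of candidate floor, ceiling, or tabletop layers for a list `z` of point heights.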

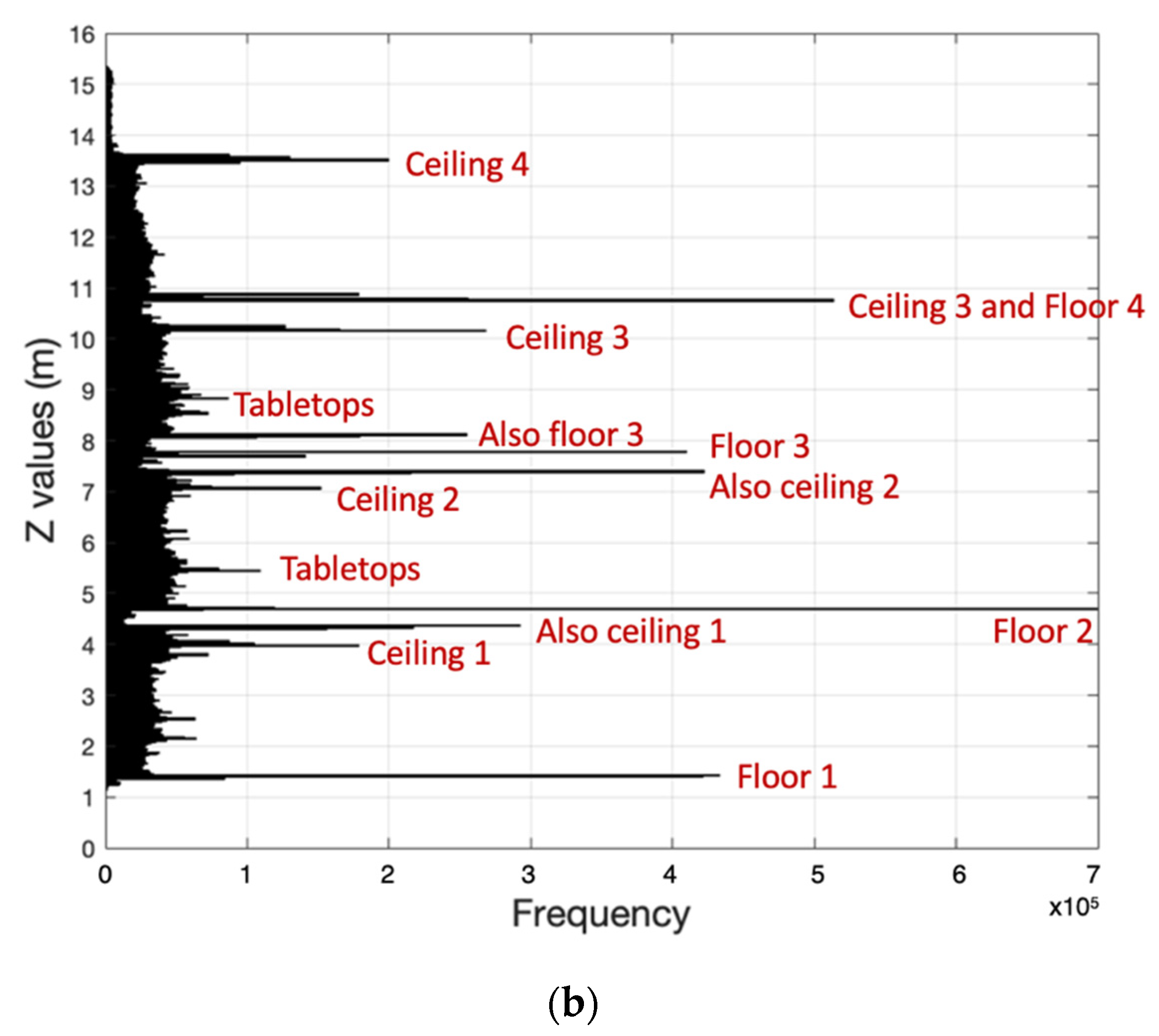

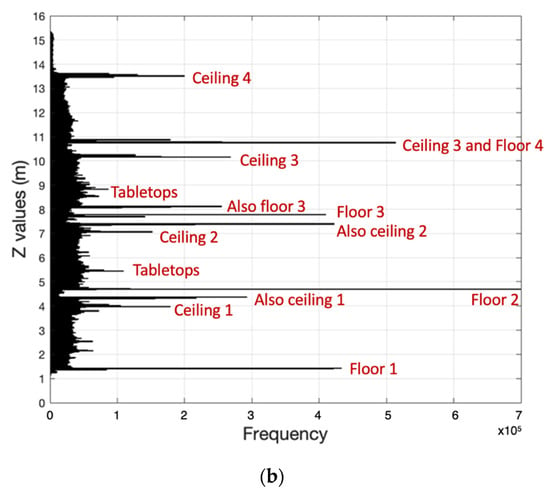

These full bins are transformed into binary images, presented in Figure 3, and a connected components procedure is performed. Each full bin “slice” of the vertical histogram is converted into a binary image. This is done by voxelizing the matrix into a 10 cm × 10 cm grid. If a grid square is occupied, it is assigned a value of one, and if a grid square is unoccupied, it is assigned a value of zero. In a binary image, connected components are groups of pixels whose edges or corners are touching. Connected components are used to determine if the extracted plane has many connected points, most likely representing a floor or ceiling slab. This was done to ensure that furniture planes were not included in the floor and ceiling segments, as it is possible that planar returns from desks or tabletops can create full histogram bins at neither a floor nor a ceiling level.
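A minimal sketch of the binary image and connected components step is given below. The 10 cm cell size and the edge-or-corner (8-connected) neighbourhood follow the text; representing the binary image as a set of occupied `(row, col)` cells and the function names are illustrative assumptions.

```python
from collections import deque


def occupancy_grid(points_xy, cell=0.10):
    """Set of occupied (row, col) cells for a list of (x, y) points."""
    return {(int(y // cell), int(x // cell)) for x, y in points_xy}


def connected_components(occupied):
    """8-connected components of the occupied cells, largest first."""
    seen, components = set(), []
    for start in occupied:
        if start in seen:
            continue
        seen.add(start)
        queue, component = deque([start]), []
        while queue:
            r, c = queue.popleft()
            component.append((r, c))
            # Visit all cells sharing an edge or a corner with (r, c).
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nb = (r + dr, c + dc)
                    if nb in occupied and nb not in seen:
                        seen.add(nb)
                        queue.append(nb)
        components.append(component)
    return sorted(components, key=len, reverse=True)
```

A full bin whose largest component covers only a small area, such as a tabletop, could then be rejected, while a large connected region is kept as a floor or ceiling candidate. The size threshold used for that decision is not stated in the text and is left to the caller here.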

Figure 3.

Planar slab examples from the second storey of Old Sun Main Building. The ceiling slab contains four full bins, tabletop returns represent a full bin, and the floor slab contains two full bins. Black represents the portions of the floor and ceiling that were successfully reconstructed, while white represents the gaps in the floor and ceiling slabs.

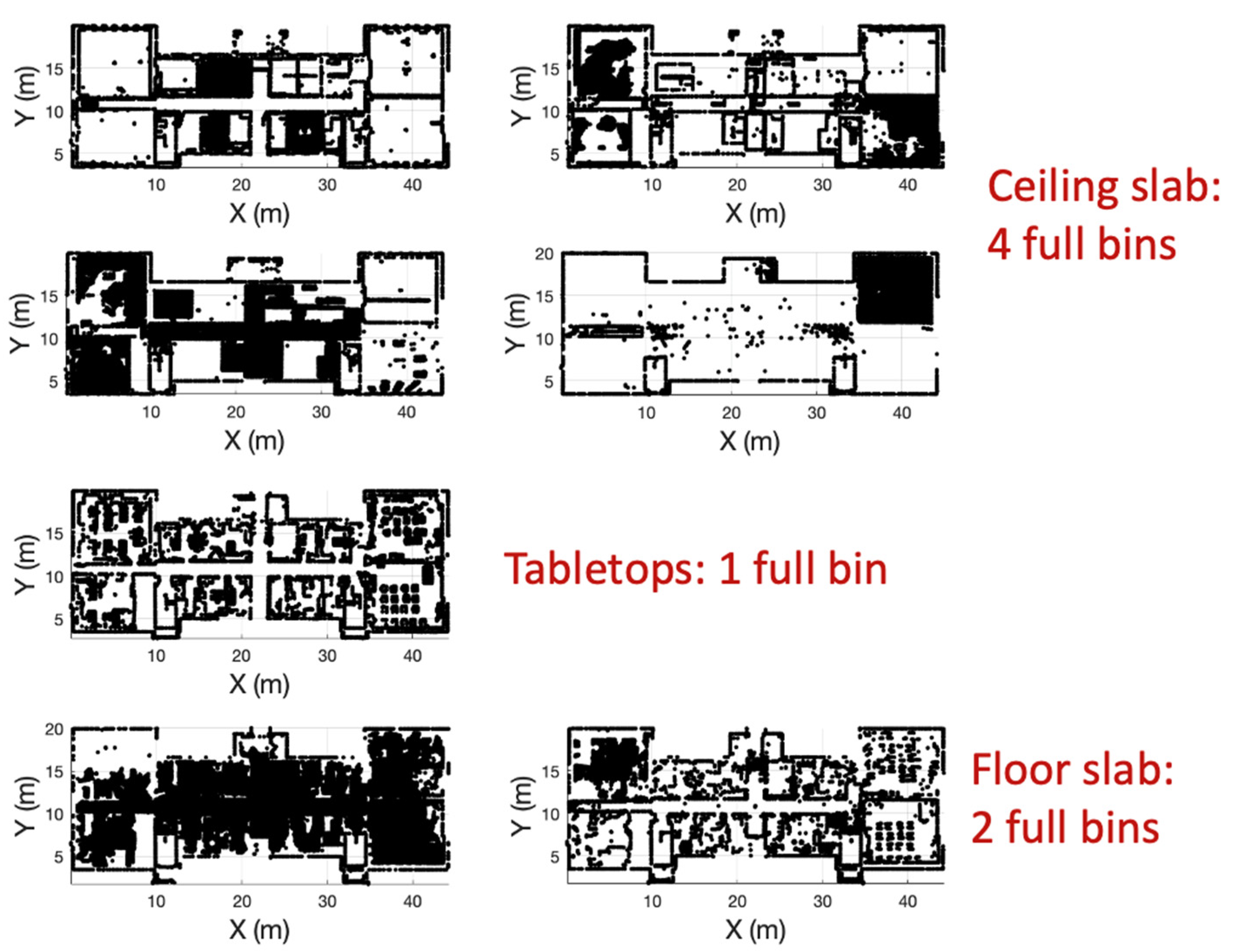

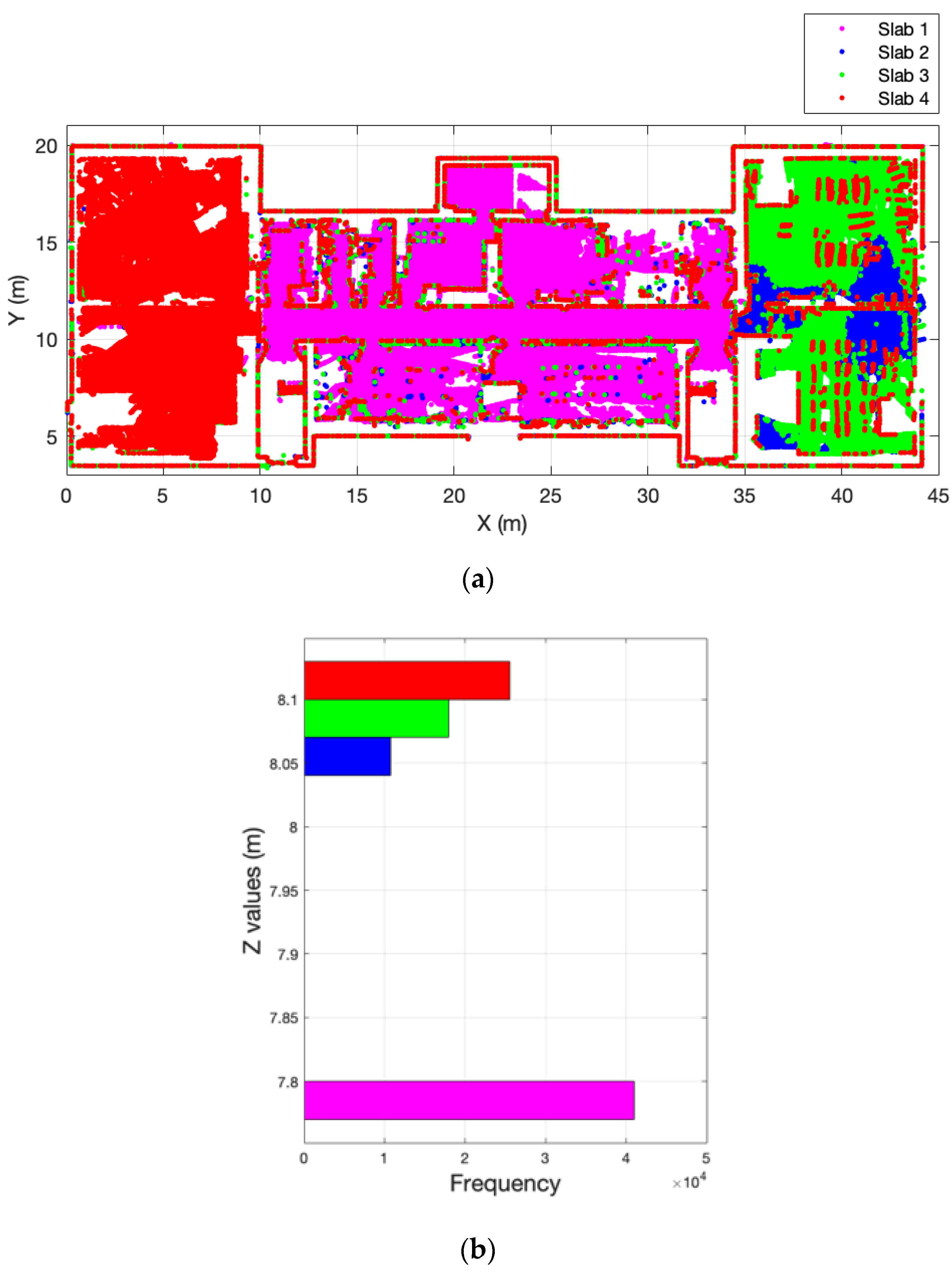

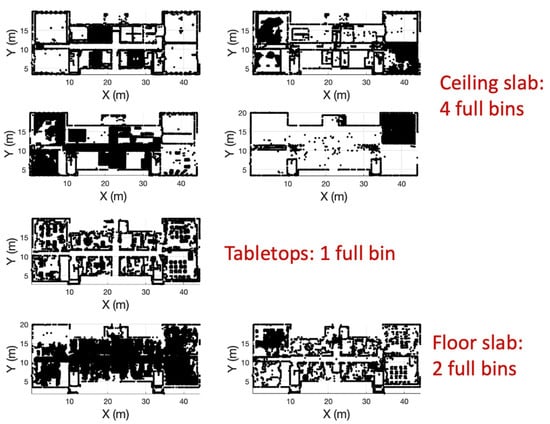

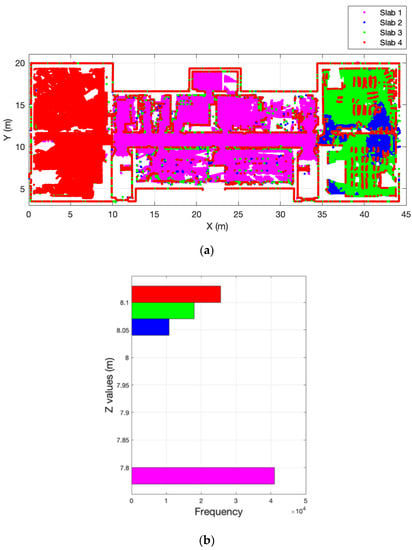

The points in each full bin are distributed into a 10 cm × 10 cm grid space. This grid space was chosen based on the average wall thickness exhibited by the point cloud. The size of the grid was consistent throughout the three datasets tested. An iterative procedure is then performed to compute the number of grid cells that overlap between neighbouring full bins. This assumes that a floor or ceiling plane, even if it is not all at the same level, will possess coverage (occupied grid cells) for the majority of the building dimensions. The overlap percentage is the number of repeating occupied grid cells between two neighbouring horizontal planes. In this step, planes are combined until an overlap percentage above a predetermined threshold is achieved. At this point, a new floor or ceiling slab is initiated and the process continues until it has assigned all the detected planes into either a floor or ceiling section. The iterative slab construction is illustrated in Figure 4, showing the four combined planes that make up a single floor slab for the third storey of the Old Sun Main Building.
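One possible reading of this iterative slab construction is sketched below. The text does not give the overlap formula or the threshold value, so normalizing by the smaller plane and the 50% default are assumptions, as are the function names. Each plane is a set of occupied grid cells, and planes are supplied in height order.

```python
def overlap_percentage(cells_a, cells_b):
    """Shared occupied cells as a percentage of the smaller plane
    (the normalization is an assumption)."""
    smaller = min(len(cells_a), len(cells_b))
    return 100.0 * len(cells_a & cells_b) / smaller if smaller else 0.0


def build_slabs(planes, threshold=50.0):
    """Merge height-ordered planes into slabs; start a new slab when the
    next plane overlaps the current slab by more than the threshold."""
    slabs, current = [], set()
    for cells in planes:
        if current and overlap_percentage(current, cells) > threshold:
            # High overlap: the plane covers the same footprint again,
            # so it is treated as the start of the next slab.
            slabs.append(current)
            current = set()
        current = current | cells
    if current:
        slabs.append(current)
    return slabs
```

Under this reading, planes that cover complementary parts of the footprint (low overlap) are combined into one multi-level slab, whereas a plane that re-covers the same footprint signals the next floor or ceiling.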

Figure 4.

Old Sun Main Building Storey 3: (a) Floor slab constructed from four planar slabs (magenta, blue, green, and red) extracted from the vertical histogram; (b) The vertical histogram of the floor slab.

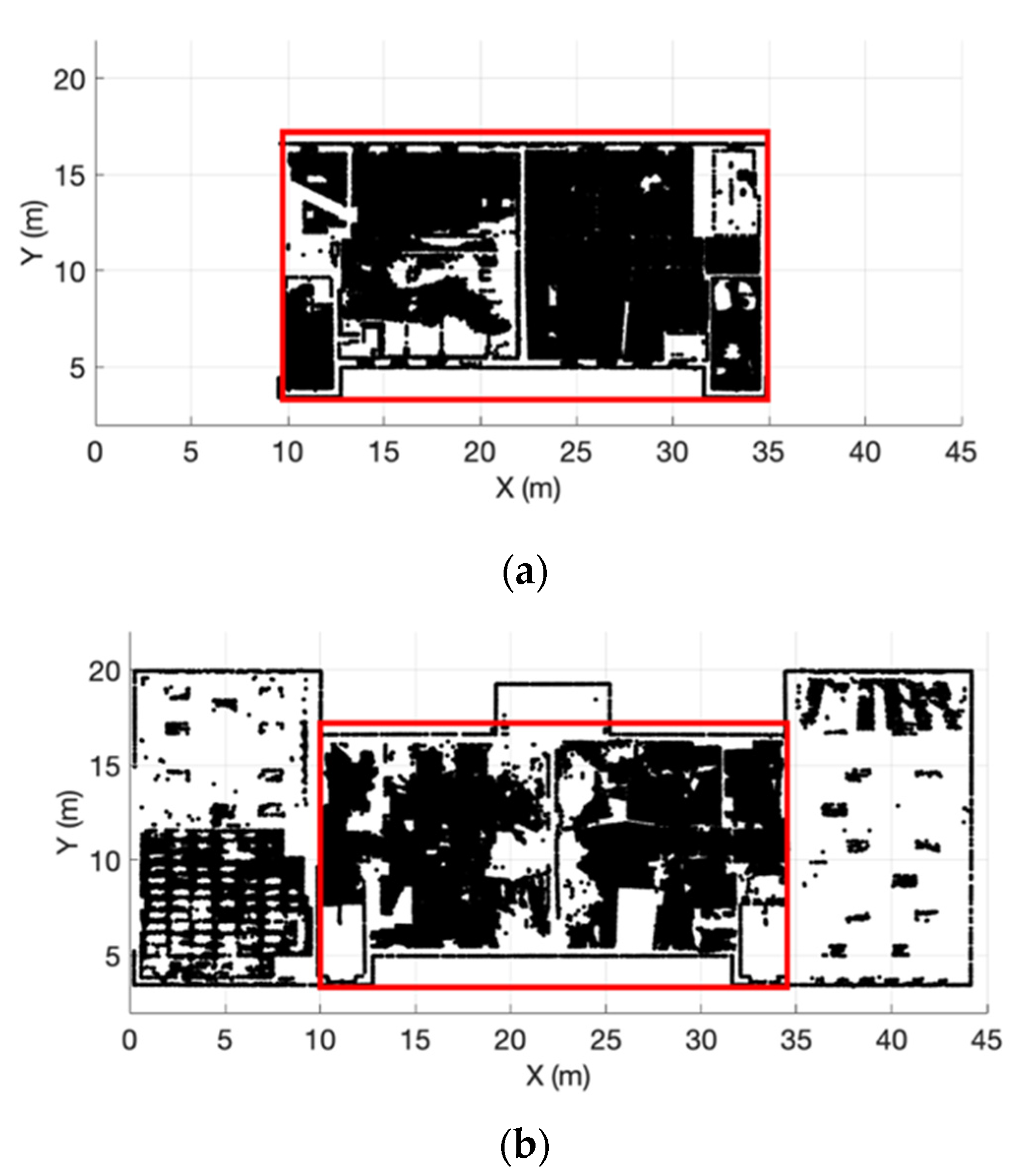

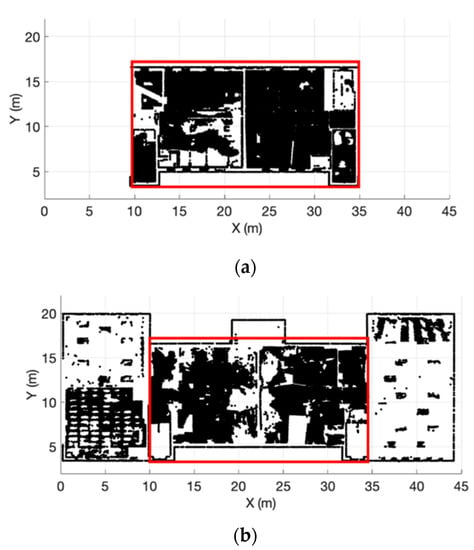

The four sections shown in Figure 4 are merged to form the complete floor slab. Holes are filled in using the mean height of the surrounding points. Finally, the floor and ceiling slabs are matched with their respective ceiling or floor based on the dimensions of their boundaries. Here, the assumption is made that the boundary of the floor and ceiling on any storey should be the same. If the boundary sizes are different, as seen in Figure 5a,b, the smaller of the two boundaries is taken and matched onto the respective floor or ceiling slab. The points existing outside of the red boundary seen in Figure 5a are assumed to belong to the neighbouring storey and are added to the ceiling slab of the lower storey. In this way, the new storey separation method can deal with storeys where the floor or ceiling slab overlaps with the upper or lower floor or ceiling slab of another storey.

Figure 5.

Boundary matching: (a) Extracted ceiling slab in black with calculated boundary in red; (b) Matched boundary on floor slab in red outline. Points in (b) outside of the red outline will be removed from the floor slab.

Once the floor and ceiling planes have been correctly identified, the point cloud is split up into single-storey point clouds by finding the points occurring between these extracted floor and ceiling slabs. To do this, an alpha shape boundary is computed using the floor and ceiling slabs for each storey, and the entire dataset is searched for points that are located within the detected floor and ceiling slabs. Once these individual storeys have been created, the algorithm can continue the point cloud modelling process.

3.2. Data Cleaning and Feature Isolation

The next step is to remove clutter such as furniture and false returns from the single-storey point clouds. To achieve this, the point cloud is voxelized with a voxel size of 10 cm, again based on the average thickness of the walls observed in the point clouds. Voxels representing vertical features such as walls should contain more points, whereas voxels representing horizontal features such as ceilings and floors should contain fewer points, thus representing a ‘thin layer’. These thin layers, voxels with fewer than 10 points, are removed as they do not represent building features. The voxel space representation of the data was further used for removing floating points, that is, voxels that were occupied but were surrounded by empty voxels.
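The two voxel-based cleaning rules can be sketched as follows. The 10 cm voxel size and the 10-point threshold are taken from the text; using the 26-voxel neighbourhood for the floating test, applying it after the thin-layer removal, and the function names are assumptions.

```python
import math
from collections import Counter
from itertools import product


def voxel_key(point, voxel=0.10):
    """Integer voxel index of an (x, y, z) point."""
    return tuple(math.floor(c / voxel) for c in point)


def remove_clutter(points, voxel=0.10, min_points=10):
    """Drop points in thin-layer voxels and in floating voxels."""
    counts = Counter(voxel_key(p, voxel) for p in points)
    # Thin layers: voxels holding fewer than min_points points.
    dense = {k for k, n in counts.items() if n >= min_points}
    offsets = [o for o in product((-1, 0, 1), repeat=3) if o != (0, 0, 0)]

    def has_neighbour(k):
        return any((k[0] + dx, k[1] + dy, k[2] + dz) in dense
                   for dx, dy, dz in offsets)

    # Floating voxels: occupied but with no occupied neighbouring voxel.
    kept = {k for k in dense if has_neighbour(k)}
    return [p for p in points if voxel_key(p, voxel) in kept]
```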

Curvature and normal direction are used to further isolate the building features. Curvature measures the rate of change of a surface normal and can be computed from the eigenvalues, extracted from the covariance matrix, as shown in Equation (2) [9]:

$$\text{Curvature} = \frac{\lambda_3}{\lambda_1 + \lambda_2 + \lambda_3} \tag{2}$$

where $\lambda_1 \geq \lambda_2 \geq \lambda_3$. Eigenvalues indicate the variance in the principal directions.

In addition, the direction of the normal, given by the eigenvector associated with the smallest eigenvalue, is used to establish the orientation of the neighbourhood of points within the dataset. In this case, the curvature and normal direction are computed for a neighbourhood of points in order to evaluate the planarity and orientation of the neighbourhood of the seed point. A neighbourhood size of 100 points was experimentally determined to give the best estimates for curvature and normal direction. If the curvature value is low, the point likely belongs to a planar surface. In addition, if the direction of the normal vector of the neighbourhood of points is perpendicular to the local gravity vector, then the seed point likely belongs to a vertical planar segment, such as a wall.
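The two tests can be combined as in the sketch below, which takes the three covariance eigenvalues and the normal of a neighbourhood as inputs (they can be obtained from any eigendecomposition routine). Curvature is computed with the common surface-variation form, the smallest eigenvalue divided by the sum of all three, which is assumed to match Equation (2). The thresholds `max_curvature` and `max_tilt_deg` are hypothetical values, not taken from this work.

```python
import math


def surface_curvature(eigenvalues):
    """Smallest eigenvalue divided by the sum of the three eigenvalues."""
    lam = sorted(eigenvalues)
    total = sum(lam)
    return lam[0] / total if total > 0 else 0.0


def is_wall_candidate(eigenvalues, normal, max_curvature=0.02, max_tilt_deg=10.0):
    """True if the neighbourhood is planar and its normal is close to
    horizontal, i.e. perpendicular to gravity (Z assumed vertical)."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    # Angle between the normal and the horizontal plane, in degrees.
    tilt = math.degrees(math.asin(min(1.0, abs(nz) / length)))
    return surface_curvature(eigenvalues) <= max_curvature and tilt <= max_tilt_deg
```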

3.3. Wall Detection

With the building features isolated, the wall detection procedure can begin. The use of the M-estimator Sample Consensus (MSAC) in this work allows for the detection of vertical planar structures. MSAC is a modification of the better-known RANSAC, which was first introduced by Fischler and Bolles in 1981 [28] as a parameter estimation method for a given model with a large number of outliers. In the original formulation of RANSAC, each consensus set (CS) was ranked by its cardinality (the total number of inliers), and the CS with the largest number of inliers was deemed the best. However, the dependence on cardinality can be improved upon through the use of M-estimators [29], which leads to the RANSAC offshoot used in this work: M-estimator Sample Consensus (MSAC).

The instantiation of MSAC used in this work was developed by Marco Zuliani [30] in MATLAB and modified by the first author of this paper. For the purposes of this work, only vertical wall segments are desired, so the MSAC algorithm was modified to only accept consensus sets that form a vertical wall based on the direction of its normal vector. The MSAC method used to perform the wall detection allows for a noise standard deviation, σ, to be defined by the user. In this case, σ was defined to be 0.01 m, which tended to give wall estimates with thicknesses between 0.05–0.15 m, corresponding to the observed wall thickness and previously defined voxel size.
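The difference between the two ranking criteria can be illustrated with a short Python sketch. RANSAC counts inliers, whereas MSAC scores each inlier by its squared residual and each outlier by a constant penalty, so tighter fits are preferred among sets of equal cardinality. The threshold of 1.96σ used in the example is an assumption and is not necessarily how the toolbox in [30] derives its threshold from σ.

```python
def ransac_score(residuals, threshold):
    """Cardinality of the consensus set (higher is better)."""
    return sum(1 for r in residuals if abs(r) <= threshold)


def msac_cost(residuals, threshold):
    """Truncated quadratic cost (lower is better): squared residual for
    inliers, threshold squared for outliers."""
    t2 = threshold * threshold
    return sum(min(r * r, t2) for r in residuals)


sigma = 0.01                 # noise standard deviation in metres (from the text)
threshold = 1.96 * sigma     # assumed inlier threshold

# Point-to-plane distances (m) for two hypothetical candidate planes.
tight_fit = [0.001, 0.002, 0.001, 0.05]
loose_fit = [0.015, 0.018, 0.017, 0.05]
```

Both candidates have three inliers, so RANSAC cannot separate them, while `msac_cost` is lower for `tight_fit`.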

3.4. Door and Window Extraction

The methodology to detect window and door features utilizes the search space defined by two walls and finds the points located between them. The search space is defined between neighbouring planar segments detected from the iterative MSAC procedure. The nearest neighbouring wall has a normal that points in the same direction as the seed wall. Each planar segment in the series of potential nearest neighbour candidates is first tested to see whether it is parallel to the seed segment. Once the parallel planar segments have been identified, the one nearest the seed segment is chosen as the nearest neighbour. This process continues until all segments have either been assigned a nearest neighbour segment or are determined to have no neighbours.
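A minimal sketch of the nearest-neighbour search is given below. It assumes that each vertical wall is summarized in plan view as a unit normal `(nx, ny)` and an offset `d` such that `nx * x + ny * y = d`. The angular tolerance, the maximum gap, the function name, and the treatment of opposite-facing normals as parallel are hypothetical choices.

```python
import math


def nearest_parallel_wall(seed, candidates, angle_tol_deg=5.0, max_gap=0.5):
    """Return (index, gap) of the closest parallel candidate wall,
    or None if the seed wall has no neighbour within max_gap metres."""
    seed_normal, seed_offset = seed
    cos_tol = math.cos(math.radians(angle_tol_deg))
    best = None
    for index, (normal, offset) in enumerate(candidates):
        dot = seed_normal[0] * normal[0] + seed_normal[1] * normal[1]
        if abs(dot) < cos_tol:
            continue  # not parallel to the seed wall
        # Express the candidate offset in the seed's normal orientation.
        aligned_offset = offset if dot > 0 else -offset
        gap = abs(aligned_offset - seed_offset)
        if gap <= max_gap and (best is None or gap < best[1]):
            best = (index, gap)
    return best
```

The returned gap corresponds to the thickness of the search space between the two sides of a wall.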

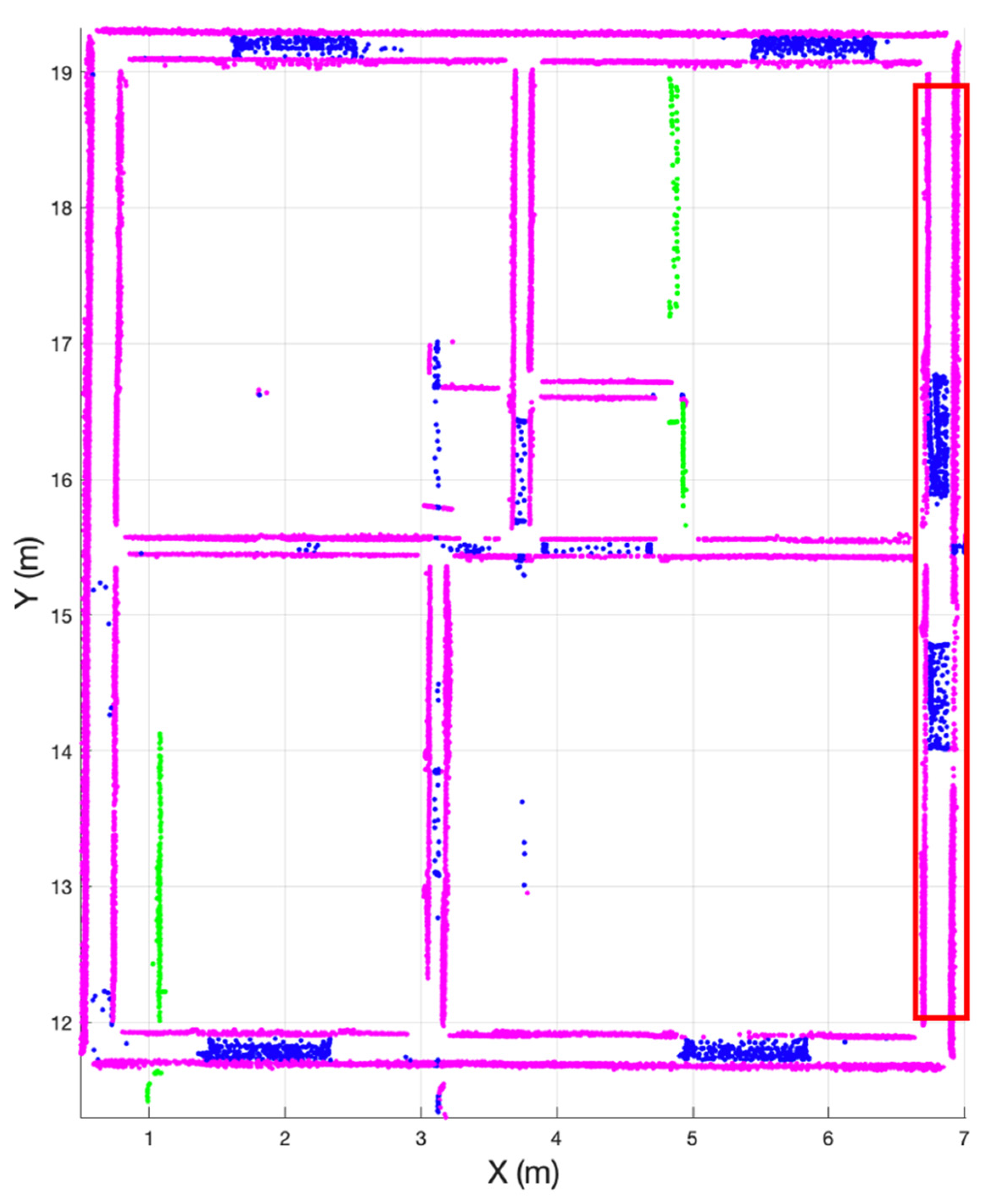

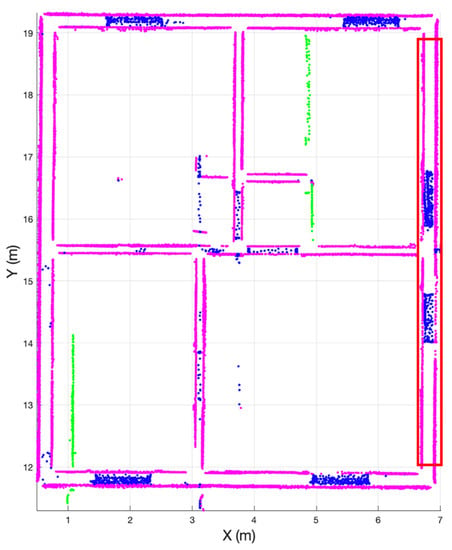

Once the walls have been detected and assigned a nearest neighbour(s), they can be used as bounding boxes in which to look for window and door features. If it is assumed that both sides of an interior or an exterior wall are captured, and if a door or window feature is located within that wall, the points belonging to the door or window will lie within the space defined by either side of the captured wall. This concept can be visualized using Figure 6, where the neighbouring walls and the identified points between those walls are presented.

Figure 6.

Signal House door and window candidates in their search space; walls in magenta, door and window candidates in blue, and walls with no neighbours in green. Red box outlines the door and window candidate points displayed in Figure 7.

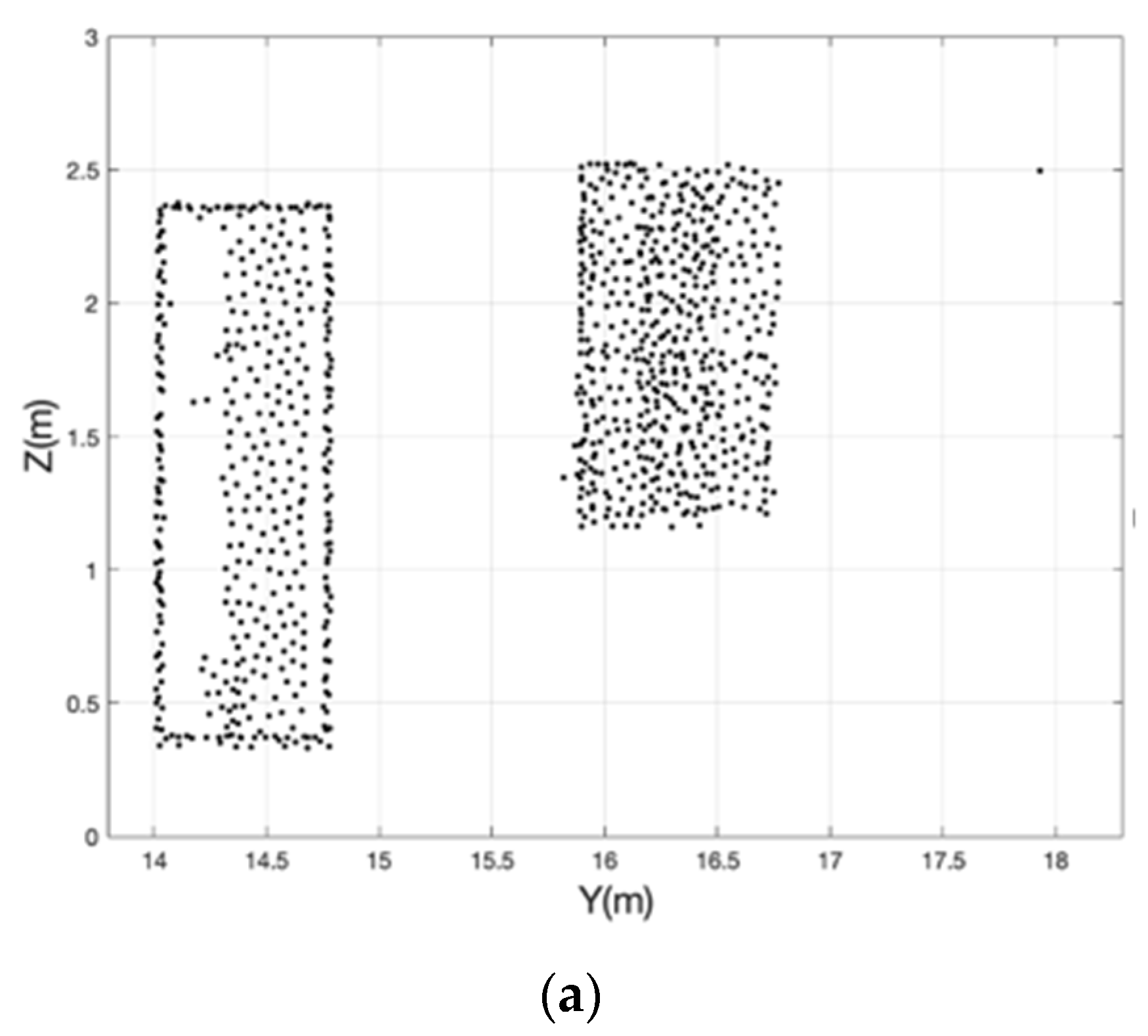

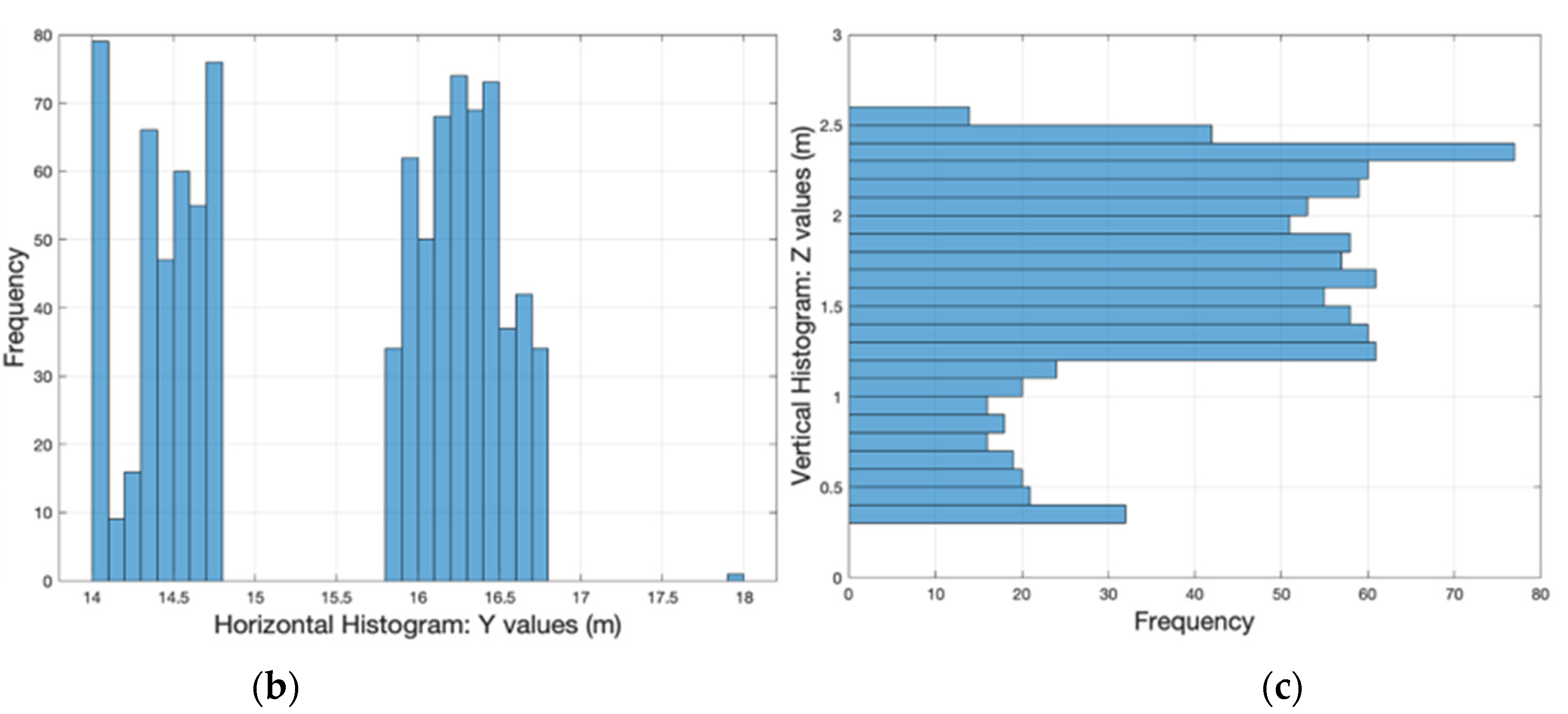

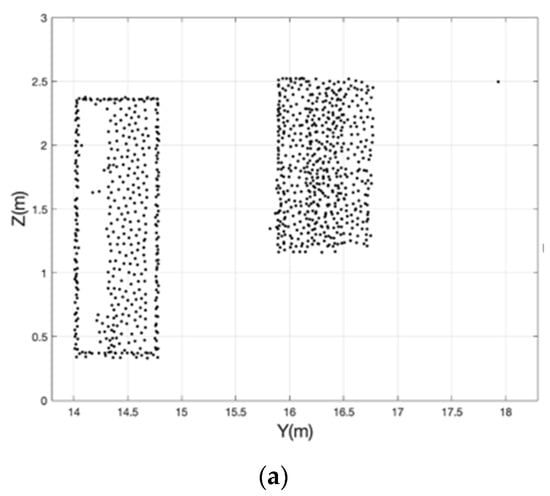

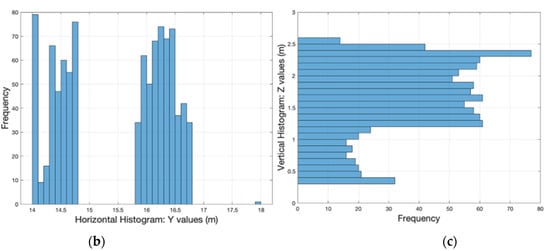

The door and window candidates are split into clusters based on a histogram of point density in both the horizontal and vertical directions. Using a manually determined bin size of 10 cm, consistent with the voxel size used earlier, histograms were computed for each series of points occurring between two neighbouring wall segments. Points are clustered if there are empty bins in the histogram occurring between full bins. In this way, the doors and windows are identified and false candidates are removed. An illustration of these vertical and horizontal density histograms is shown in Figure 7.
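The empty-bin clustering can be sketched for a single direction as follows; it would be applied once to the horizontal coordinates and once to the vertical coordinates of the candidate points. The 10 cm bin size comes from the text, while the function name and the list-based output are assumptions.

```python
def split_on_empty_bins(coords, bin_size=0.10):
    """Group 1D coordinates into clusters separated by at least one
    empty histogram bin."""
    if not coords:
        return []
    origin = min(coords)
    bins = {}
    for c in coords:
        bins.setdefault(int((c - origin) / bin_size), []).append(c)
    clusters, current, previous = [], [], None
    for index in sorted(bins):
        # A jump of more than one bin index means an empty bin lies between.
        if previous is not None and index - previous > 1:
            clusters.append(current)
            current = []
        current.extend(bins[index])
        previous = index
    clusters.append(current)
    return clusters
```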

Figure 7.

Door and window candidates: (a) extracted points representing two window features, (b) horizontal density histogram, and (c) vertical density histogram.

For windows, the minimum dimension is 0.38 m with a minimum area of 0.35 m². For doors, the minimum width is 0.80 m and the minimum height is 1.98 m. These dimensional constraints were obtained from the common door and window sizes defined by the National Building Code of Canada [31]. Although new buildings must follow the National Building Code of Canada in their construction, it must be remembered that many heritage buildings were constructed before the use of building codes was commonplace. This adds to the challenge of modelling heritage building features because anomalies can exist where door or window sizes fall outside of those defined by a modern building code. However, for the three buildings modelled in this project, the vast majority of the door and window features fell within these guidelines.
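The dimensional constraints can be written as a simple filter, sketched below. The numeric limits are those stated above; testing for a door before a window, and the function name, are assumptions, since the text does not state how a door-sized window would be distinguished from a door.

```python
def classify_opening(width, height):
    """Label a candidate opening (dimensions in metres) as 'door',
    'window', or None if it violates the size constraints."""
    if width >= 0.80 and height >= 1.98:
        return "door"  # assumed precedence: door is tested first
    if min(width, height) >= 0.38 and width * height >= 0.35:
        return "window"
    return None
```

Candidates that return `None` would be discarded as false detections.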

Finally, the detected walls, doors, and windows are represented by their extremities for translation to the CAD model. For 2D shapes, the minimum and maximum values of a feature’s X and Y coordinates are stored. For 3D shapes, the minimum and maximum values of a feature’s X, Y, and Z coordinates are stored. This allows for the representation of simple planar features in the 2D or 3D CAD model.

3.5. Quality Assessment

The success of the proposed methods was quantified using precision, recall, and accuracy measures. The ground truth model construction followed the conventional building model extraction techniques of tracing over features and recording their dimensions. The comparison between the truth model and the calculated model can illustrate the successes and challenges of the developed methods. Previous work, including [32,33,34], used a truth model to evaluate the success of their building modelling methodologies. In the work presented here, the quality of the detected windows and doors will also be evaluated in detail by comparing the calculated dimensions of the doors and windows to the true dimensions of the doors and windows, following examples of precision and recall analysis from [17,24,25,26].

This truth model was compared to the calculated model, and the precision, recall, and accuracy values were computed as follows:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{4}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

where $TP$ are true positives (points contained in both the calculated and the truth model), $FP$ are false positives (points contained in the calculated model but not in the truth model), $FN$ are false negatives (points contained in the truth model but not in the calculated model), and $TN$ are true negatives (points contained in neither the calculated model nor the truth model). If the points within the features modelled by the algorithms are “calculated”, and the points within the features produced by the truth model are “true”, then precision represents the fraction of calculated wall points that are true, while recall represents the fraction of true wall points that were calculated. Accuracy represents the total number of correctly classified points over the total number of points in the point cloud. Values close to 1, or close to 100%, for precision, recall, and accuracy are desirable.
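These point-based measures can be computed with a few set operations, as in the sketch below. Representing the calculated model, the truth model, and the full point cloud as sets of point identifiers, and the function name, are assumptions made for illustration.

```python
def quality_metrics(calculated, truth, all_points):
    """Return (precision, recall, accuracy) from sets of point IDs."""
    tp = len(calculated & truth)               # in both models
    fp = len(calculated - truth)               # calculated only
    fn = len(truth - calculated)               # truth only
    tn = len(all_points - calculated - truth)  # in neither model
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(all_points) if all_points else 0.0
    return precision, recall, accuracy
```

For a ten-point cloud with truth points {0, 1, 2, 3} and calculated points {2, 3, 4}, this gives a precision of 2/3, a recall of 0.5, and an accuracy of 0.7.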

4. Dataset Description

Point cloud datasets from three Canadian heritage sites were used to test the developed algorithms. This included the Royal Canadian Corps of Signals Transmitter Station (Signal House) on Qikiqtaruk/Herschel Island, Territorial Park, Yukon; the Jobber’s House in Fish Creek Provincial Park, Alberta; and the Old Sun Community College, formerly the Old Sun Residential School located on Treaty 7 land and belonging to the Siksika Nation, one of the three nations of the Blackfoot Confederacy. The scans were collected with a combination of the Z+F IMAGER 5010X, Z+F IMAGER 5016 and the Leica BLK360, and registered using targeted and cloud-based registration in Z+F Laser Control.

4.1. Signal House, Qikiqtaruk/Herschel Island, Territorial Park

The dataset used for development of the algorithm is the Signal House. Located in the Canadian Arctic, Qikiqtaruk has been in use by Inuvialuit and Euro-North American groups for over 800 years. The building was erected by the Royal Canadian Corps of Signals in 1930 and used as a transmitter station connecting parts of the Yukon and the Northwest Territories to southern Canada. Over time, the building has undergone renovations to accommodate North West Mounted Police as well as Territorial Park staff and scientists. The building was scanned in the summers of 2018 and 2019 as part of a larger scanning project to digitally preserve many of the historic buildings on the island that are subject to the detrimental effects of climate change, the melting of permafrost, and rising sea levels. The terrestrial laser scan of the Signal House took approximately four hours, with a total of 20 scans. An image of the Signal House is included in Figure 8.

Figure 8.

Signal House, Herschel Island—Qikiqtaruk.

This dataset was chosen as it exemplifies the type of wood frame structure commonly found in heritage buildings across western Canada. The approximate dimensions of the Signal House are 6.5 m × 8 m × 3 m. It is a simple building, with one storey and four main rooms. The building has one exterior door, four interior doors, and five windows. Although the layout of the building is simple, the rooms contained a large amount of furniture. This helped to illustrate the effectiveness of the door and window extraction method when subjected to clutter and occlusions.

4.2. Jobber’s House, Fish Creek Provincial Park

The second dataset used to illustrate the suitability of the developed algorithm to model heritage buildings is the Jobber’s House. Located in Fish Creek Provincial Park just south of Calgary, Alberta, the house was part of a larger ranch homesteaded in 1902. The name “Jobber” refers to the head herdsman who was responsible for repairs around the ranch. The building was scanned in May 2019. The terrestrial laser scan of the Jobber’s House took approximately eight hours, with a total of 40 scans. An image of the building captured during the scanning is included in Figure 9.

Figure 9.

Jobber’s House, Fish Creek Provincial Park.

The building contains two storeys and was therefore a useful test of the storey separation algorithm developed in this project. The Jobber’s House has windows on all sides, a front and back door, and a covered front porch. The wood construction and lack of a foundation make the building particularly susceptible to deterioration and gradual shifting over time. The approximate dimensions of the Jobber’s House are 7.5 m × 8 m × 7 m. The dataset has intermediate complexity when compared to Old Sun and the Signal House. In addition, when the scan was captured, the Jobber’s House had little to no furniture in many of the rooms, and therefore was able to illustrate the success of the algorithms in a building with minor clutter and occlusions when compared to the major levels of clutter present at both the Signal House and Old Sun.

4.3. Old Sun Residential School

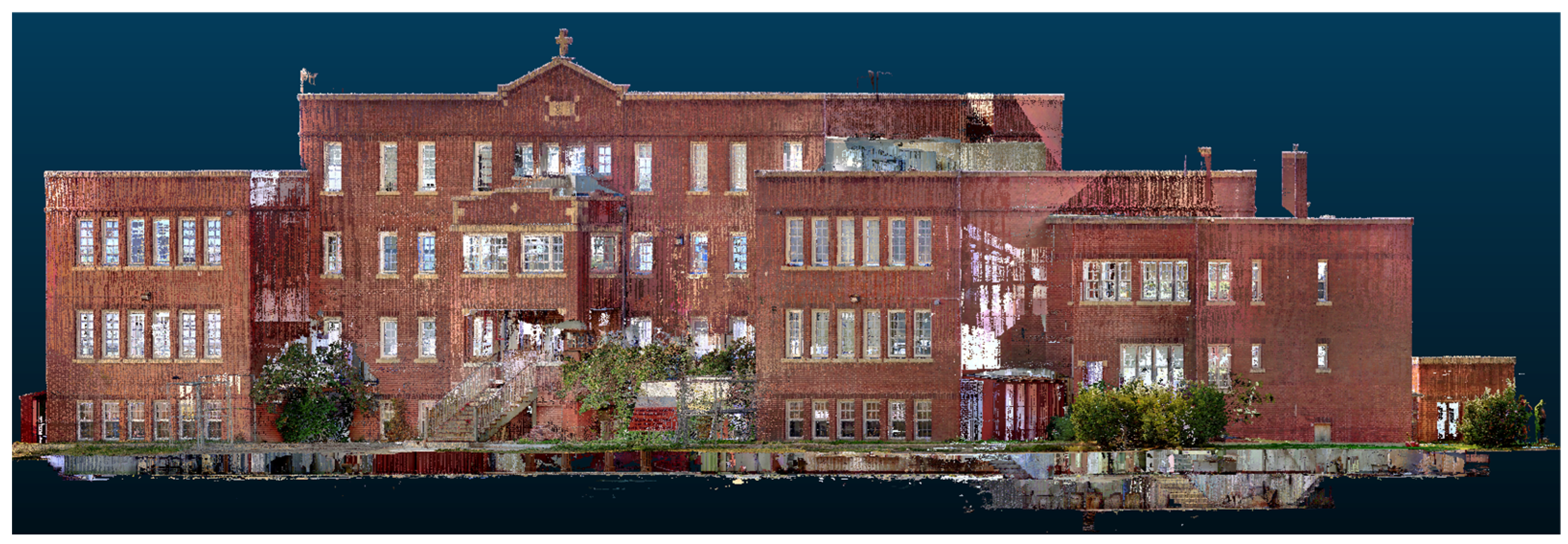

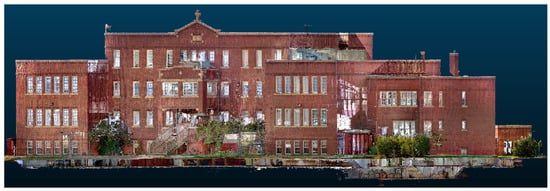

The Old Sun Community College, formerly the Old Sun Residential School, was operated by the Anglican Church as an Indian Residential School from 1929 to 1971. The building has three floors and a large basement, totalling approximately 1200 square meters. This point cloud dataset was collected in August 2020. The terrestrial laser scan of Old Sun took 9 days, with a total of 300 scans. The captured point cloud is presented in Figure 10.

Figure 10.

Old Sun Residential School: captured point cloud.

The building was split into three sections to simplify the modelling and the presentation of results. All sections of Old Sun have storeys with multi-level floors and/or ceilings. The main building has a long central hallway flanked by two classrooms on either end. The classrooms are two steps up (approximately 30 cm) from the hallway, and the storey therefore has multiple floor and ceiling levels. The approximate dimensions are 45 m × 20 m for the Old Sun main building, with four storeys; 22 m × 30 m for the Old Sun rear building, with two storeys; and 9.5 m × 12 m for the Old Sun annex, with three storeys. The height of the various sections of the building ranges from 3 m to 14 m. These sections are illustrated in Figure 11.

Figure 11.

Old Sun sections: main building outlined in yellow, rear building in red, annex in green.

5. Results and Discussion

The storey separation algorithm and the door and window detection algorithm were tested individually and as part of the end-to-end modelling solution. The results of these tests are presented in the following sections.

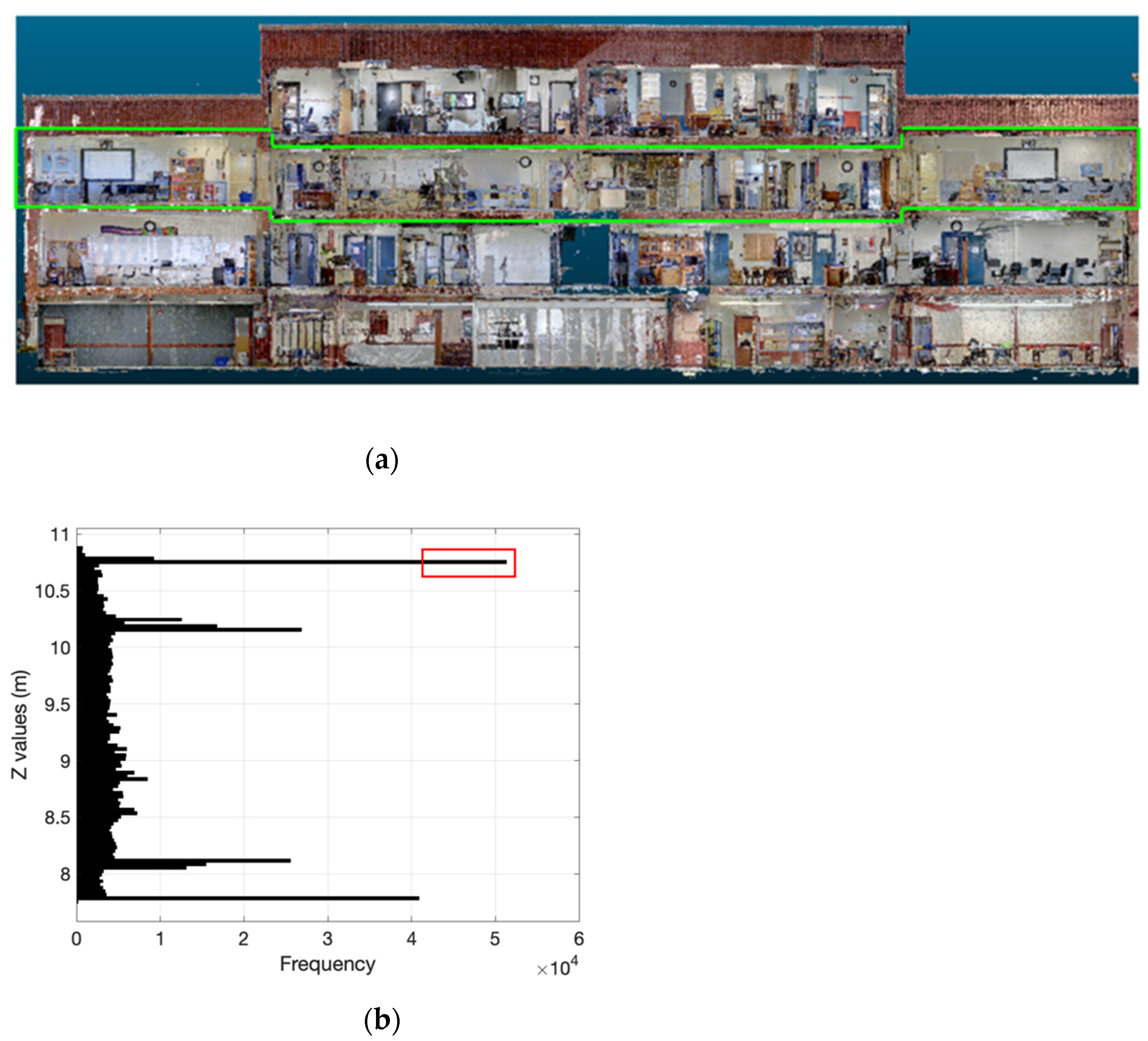

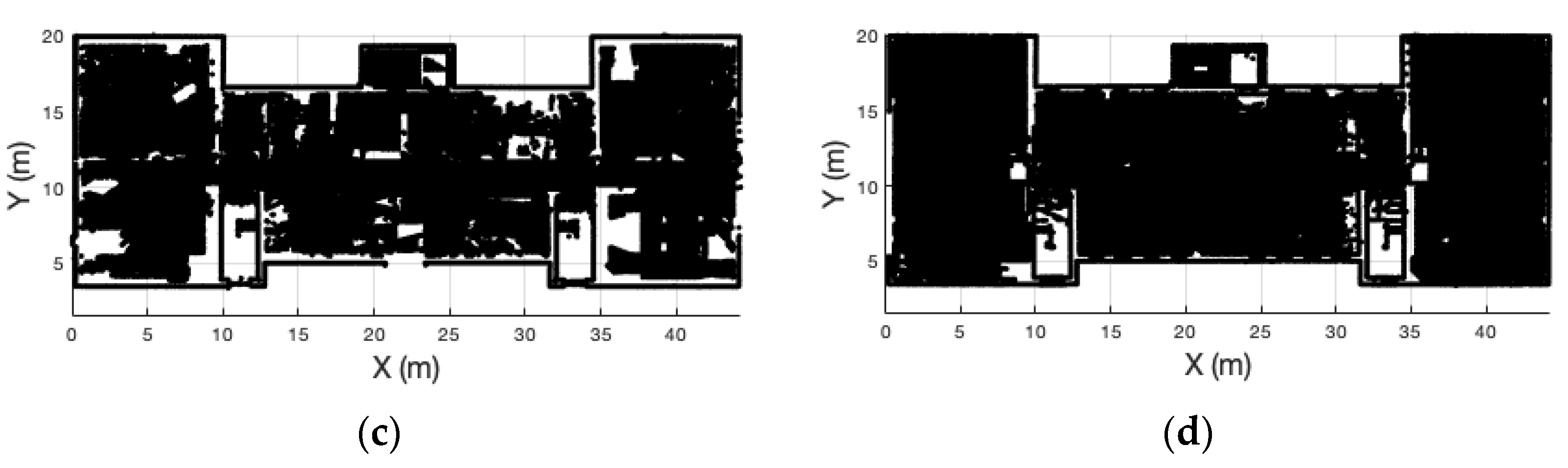

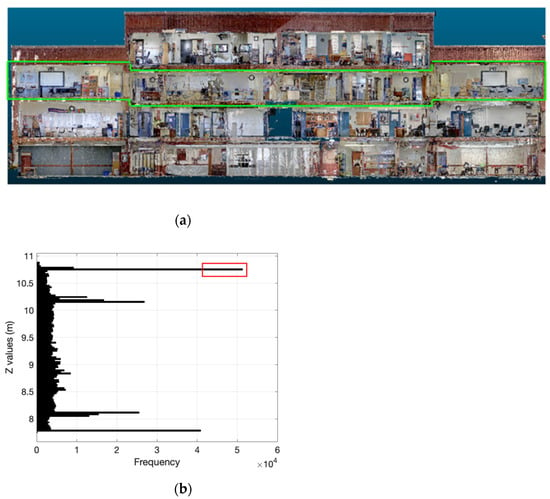

5.1. Storey Separation

A cross section of the main building and the annex of the Old Sun School is presented in Figure 12a, illustrating the multiple heights of the floor and ceiling of the third storey of the main building, as well as the overlap in heights of the third storey and the fourth storey of the main building. The significant level of clutter present in the building is also visible in Figure 12a. The complete vertical histogram for the Old Sun Main Building is presented in Figure 12b. The vertical histogram for the third storey of the Old Sun Main Building showing multiple floor and ceiling heights is presented in Figure 12b.

Figure 12.

(a) Cross section of Old Sun Main Building with Storey 3 outlined in green; (b) Vertical histogram for Old Sun Main Building Storey 3 where multi-level floor and ceiling slabs are present, overlapping floor and ceiling segment outlined in red.
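The idea behind a vertical histogram such as the one in Figure 12b can be sketched as follows: point heights are binned, and well-populated bins are treated as candidate floor or ceiling levels. This is an illustrative sketch only. The function names, the 10 cm bin size, the 50% peak threshold, and the synthetic heights (a floor, a second floor level 30 cm higher, a ceiling, and sparse clutter) are assumptions, not the parameters or data used in this work.

```python
import numpy as np

def vertical_histogram(z, bin_size=0.10, origin=0.0):
    """Count points per height bin; returns (counts, lower bin edges).

    Assumes every height is at or above `origin`.
    """
    idx = np.floor((np.asarray(z, dtype=float) - origin) / bin_size).astype(int)
    counts = np.bincount(idx)
    lower_edges = origin + bin_size * np.arange(counts.size)
    return counts, lower_edges

def slab_candidates(counts, lower_edges, min_fraction=0.5):
    """Lower edges of bins holding at least `min_fraction` of the fullest bin."""
    keep = counts >= min_fraction * counts.max()
    return lower_edges[keep]

# Synthetic heights in metres (hypothetical, for illustration only)
z = np.concatenate([
    np.linspace(0.03, 0.07, 4000),   # lower floor level
    np.linspace(0.33, 0.37, 4000),   # floor level two steps (about 30 cm) higher
    np.linspace(3.03, 3.07, 4000),   # ceiling
    np.linspace(0.10, 2.90, 2000),   # sparse clutter between floor and ceiling
])
counts, lower_edges = vertical_histogram(z)
levels = slab_candidates(counts, lower_edges)
print(levels)  # lower edges of the three well-populated bins
```

In this synthetic case the two floor levels fall into separate bins, which is the kind of multi-peak signature that a multi-level storey produces.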

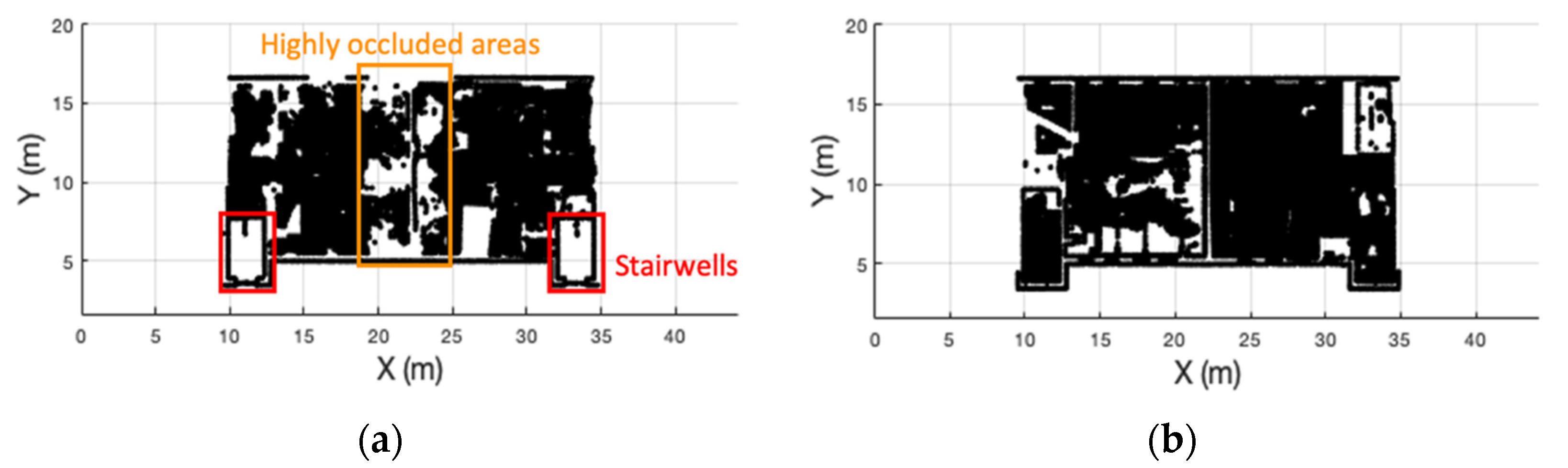

Some sections of the third storey ceiling and the fourth storey floor lie at the same height, shown by the extended full bin at the top of Figure 12b. It is necessary to use the boundary extraction method to match the outline of the ceiling and floor to ensure that every floor point has a corresponding ceiling point located above it. If it does not, then the point likely belongs to the lower storey, in this case the storey 3 ceiling. To illustrate the results for the storey separation procedure of the Old Sun Main Building, the reconstructed floor and ceiling slabs for storey 3 and storey 4 are presented in Figure 13.
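The floor-to-ceiling correspondence test described above can be illustrated with a simple stand-in. The sketch below does not reproduce the boundary extraction method; it swaps in a coarse 2D occupancy grid, which is an assumption made purely for illustration, as are the function name, the 25 cm cell size, and the example coordinates.

```python
import numpy as np

def has_ceiling_above(floor_xy, ceiling_xy, cell=0.25):
    """Boolean mask: True where a floor point's XY grid cell also holds ceiling points."""
    floor_cells = np.floor(np.asarray(floor_xy, dtype=float) / cell).astype(int)
    ceiling_cells = np.floor(np.asarray(ceiling_xy, dtype=float) / cell).astype(int)
    occupied = {tuple(c) for c in ceiling_cells}
    return np.array([tuple(c) in occupied for c in floor_cells])

# Hypothetical ceiling covering a 2 m x 2 m area (one point per grid cell)
axis = np.arange(0.125, 2.0, 0.25)
gx, gy = np.meshgrid(axis, axis)
ceiling_xy = np.column_stack([gx.ravel(), gy.ravel()])

# Candidate floor points: two under the ceiling, one outside its footprint
floor_xy = np.array([[0.5, 0.5], [1.5, 1.5], [3.5, 0.5]])
mask = has_ceiling_above(floor_xy, ceiling_xy)
print(mask)
```

Candidate floor points for which the mask is False have no ceiling of the same storey above them and would be reassigned to the lower storey under the rule stated in the text.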

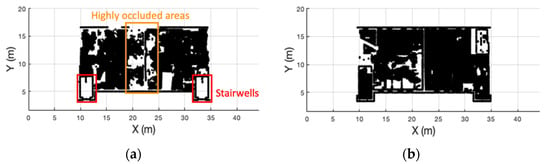

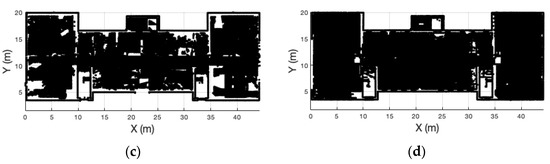

Figure 13.

Results for storey separation of Old Sun; (a) Fourth storey floor; (b) Fourth storey ceiling; (c) Third storey floor; (d) Third storey ceiling.

In Figure 13, the coverage for the multi-level floor and ceiling slabs of storey 3 and storey 4 shows almost all sections correctly reconstructed. The two stairwells located at the bottom of Figure 13a–c are empty because there was no single floor or ceiling plane to reconstruct. In addition, it can be seen from Figure 13 that the coverage achieved on the ceiling slabs is better than the coverage achieved on the floor slabs. This is because furniture sits on the floor and obstructs the scanner from collecting points, especially in cluttered buildings.

Additional results for the storey separation methodology are presented in Table 1. For the storey separation method, three sets of floor and ceiling slabs were extracted from the Jobber’s House, the Old Sun main building, and the Old Sun rear building. These datasets were chosen because the second storey of the Jobber’s House represented a tilted floor and ceiling, while both the third storey of the Old Sun main building and the second storey of the Old Sun rear building represented multi-level floor and ceiling slabs.

Table 1.

Storey separation results.

The algorithm was designed to deal with multi-level floor and ceiling slabs, as seen in the third storey of the Old Sun main building and the second storey of the Old Sun rear building. Table 1 shows successful results for both Old Sun storeys, with accuracy values of 93.65% and 96.30%. Low values for recall were achieved for the second storey of the Jobber’s House due to the presence of a tilted floor and ceiling plane. The floor and ceiling of the second storey of the Jobber’s House were tilted by approximately 1.5°. This caused inaccuracies in the developed floor and ceiling extraction method. Tilted storeys are a possible scenario, especially in heritage buildings; however, the algorithm was not designed to deal with tilted floors and ceilings, hence the poor results for recall.
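To give a sense of scale for the 1.5° tilt, the short calculation below estimates the height difference it produces across the Jobber’s House footprint (7.5 m × 8 m, from Section 4.2). It assumes, purely for illustration, that the tilt is uniform and runs along the full length of one side of the building; the function name is hypothetical.

```python
import math

def height_spread(run_m, tilt_deg):
    """Vertical rise of a planar slab over a horizontal run, for a given tilt."""
    return run_m * math.tan(math.radians(tilt_deg))

# Footprint sides of the Jobber's House (Section 4.2); tilt value from the text
for run in (7.5, 8.0):
    print(f"{run} m run: {100 * height_spread(run, 1.5):.1f} cm height difference")
```

Under this assumption the slab heights vary by roughly 20 cm across the building, so points of a single floor or ceiling are spread over a range of heights rather than concentrated at one level, which is consistent with the reduced recall reported for this storey.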

5.2. Door and Window Extraction

Four datasets were chosen for analyzing the performance of door and window extraction as well as floor plan and building model creation. These four datasets possess varying characteristics such as size, complexity, level of clutter, and construction method. The number of doors and windows contained within the truth model compared to the number of doors and windows in the calculated model for each of the four datasets is presented in Table 2.

Table 2.

Door and window extraction results.

The performance of the door and window extraction algorithm was better on datasets with lower complexity, such as the Signal House and the Jobber’s House. In addition, the algorithm performed well when detecting the windows of the Old Sun Annex, a promising result given the thickness of the exterior brick walls. The door and window detection algorithm struggled on the third storey of the Old Sun Main Building. The algorithm did not successfully detect the doors along the long narrow hallway because planar surfaces affixed to the walls, such as notice boards in the hallway and smart screens in the classrooms, prevented consistent wall planes from being found.

The door and window extraction method was further tested on the doors and windows from the Signal House dataset. The calculated doors and windows were compared to the true dimensions of the doors and windows measured from the point cloud. The differences between the widths and heights of the doors and windows in the calculated model and the truth model are presented in Table 3.

Table 3.

Door and window dimensions for the Signal House.

Sub-decimeter results were achieved for the automated extraction of doors and windows. The extracted door dimensions are slightly more accurate than the window dimensions due to the reduced presence of glass-caused outliers. As seen from the values in Table 3, the dimensional estimates were commonly larger than the true value, indicating that the histogram-based clustering method tends to over-estimate the size of a window or door feature. This comes from the 10 cm size of the histogram bin that was used to cluster the points.
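The link between bin size and over-estimation can be illustrated with a small sketch. It assumes that a feature's extent is read from the outer edges of the occupied 10 cm bins; this is a simplification for illustration and not necessarily how the clustering in this work derives dimensions. The function name and the point coordinates are hypothetical.

```python
import numpy as np

def binned_extent(coords, bin_size=0.10):
    """Extent spanned by the outer edges of all occupied histogram bins."""
    idx = np.floor(np.asarray(coords, dtype=float) / bin_size).astype(int)
    lower = idx.min() * bin_size
    upper = (idx.max() + 1) * bin_size
    return lower, upper

# Hypothetical feature points spanning a true width of 0.93 m (0.04 m to 0.97 m)
coords = np.linspace(0.04, 0.97, 200)
lower, upper = binned_extent(coords)
true_width = coords.max() - coords.min()
binned_width = upper - lower
print(f"true width: {true_width:.2f} m, binned width: {binned_width:.2f} m")
```

Under this assumption the binned width can never be smaller than the true width and exceeds it by less than two bin widths, so a 10 cm bin is compatible with sub-decimeter errors that are biased towards larger dimensions.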

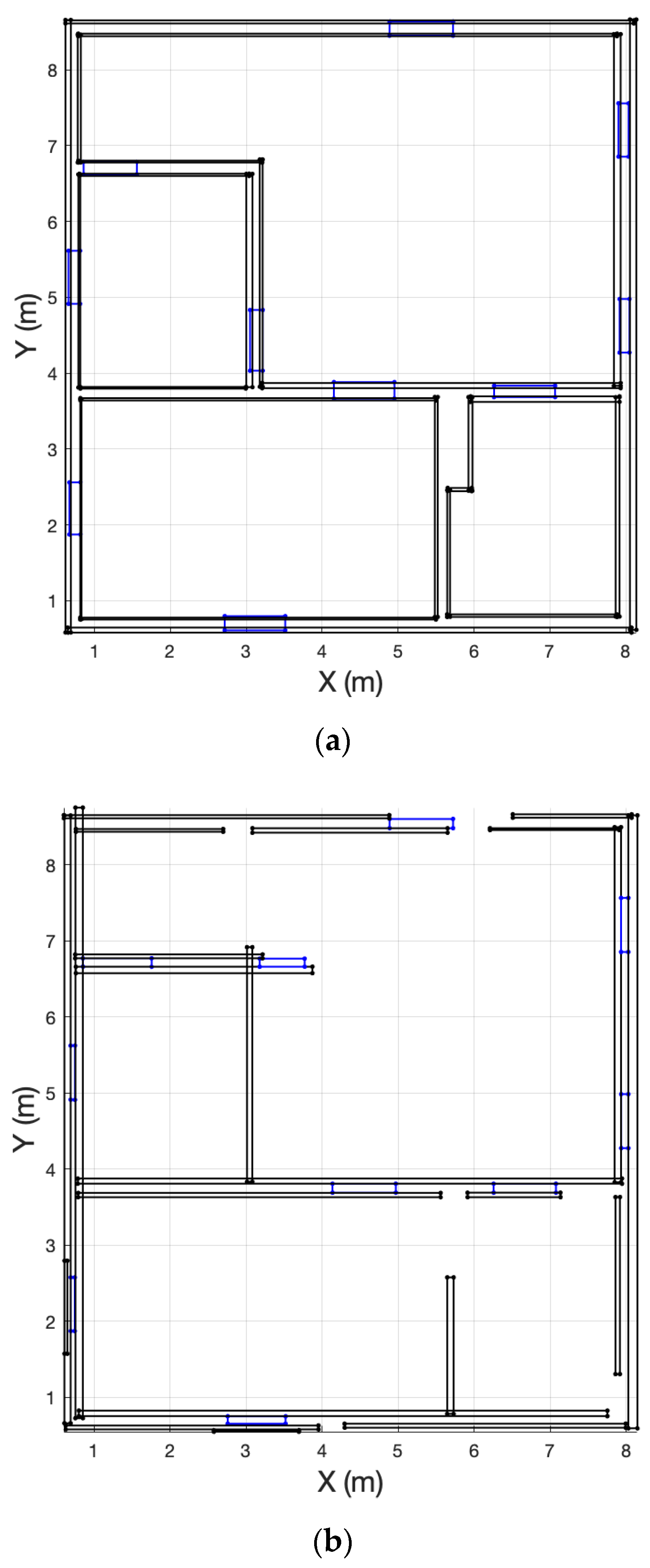

5.3. 2D Floor Plan and 3D Building Model Creation

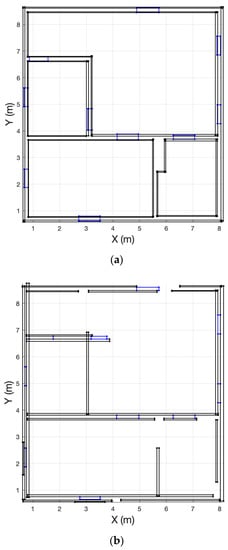

The 2D floor plans and 3D building models were calculated following the methodology described by Figure 1. The calculated model was then compared to a truth model to quantify the accuracy of the result. The 2D floor plan truth model and calculated model are presented for the first storey of the Jobber’s House in Figure 14a and Figure 14b, respectively.

Figure 14.

Jobber’s House Storey 1: (a) Truth model; (b) Calculated model. Walls in black, windows and doors in blue.

As seen in Figure 14b, the majority of the walls, windows, and doors have been successfully extracted by the automated method. Furniture located along the left central wall and the wall separating the kitchen from the rear room at the bottom of Figure 14b caused the algorithm to miss some wall segments. However, all the doors and windows were successfully extracted, with only one false detection, likely caused by the presence of clutter.

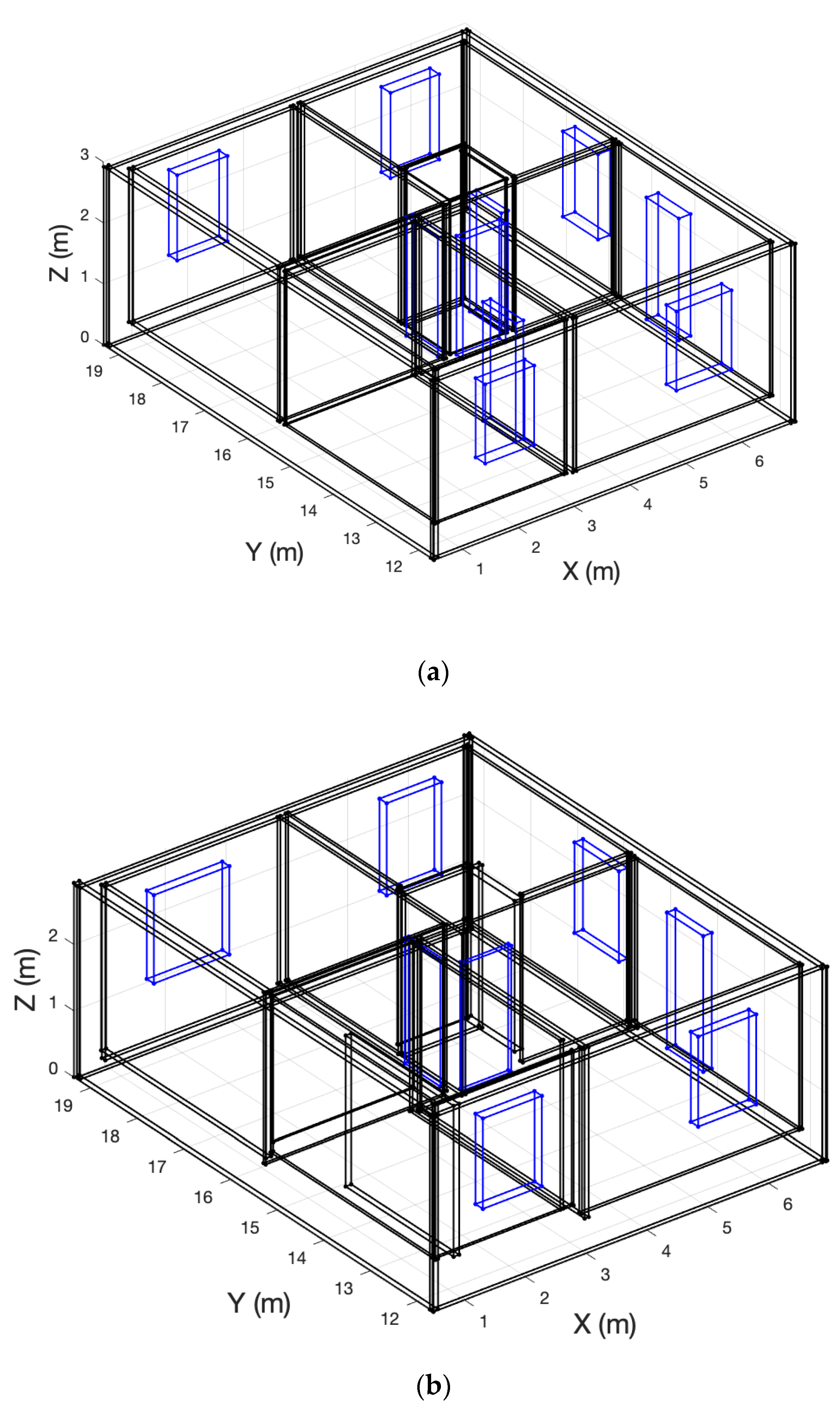

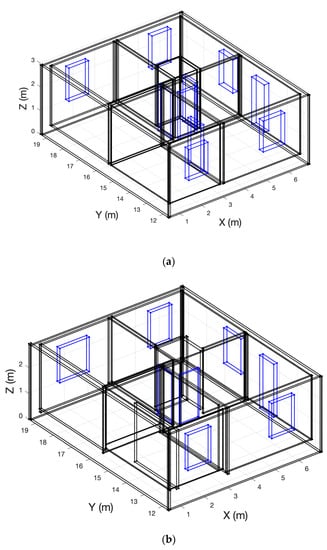

The algorithm creates 2D floor plans and 3D building models simultaneously. The building model created for the Signal House is presented in Figure 15.

Figure 15.

Signal House: (a) Truth model; (b) Calculated model. Walls in black, windows and doors in blue.

As seen in Figure 15, the vast majority of the walls, as well as the doors and windows, of the Signal House have been correctly detected by the algorithm. All the windows have been detected, and four of five doors have been detected, with no false detections. The fifth door was not detected because it was located within a very small wall segment that the algorithm was not able to reconstruct.

The 2D floor plans and 3D building models were produced for all storeys of all buildings; however, only some were compared against a truth model to analyze the accuracy of the solution for building storeys with varying size, complexity, presence of clutter, and wall thicknesses. The results for the tested models are presented in Table 4.

Table 4.

2D floor plan and 3D model creation results.

The datasets increase in size and complexity throughout Table 4. Due to its small size and low complexity, it is expected that the Signal House will have the best results for precision, recall, and accuracy. The quality measures for the Jobber’s House show a slight decrease relative to those of the Signal House, but are all still greater than 92%. Similar results were achieved for the Old Sun Annex. The dimensions of the Old Sun Annex are only slightly bigger than the Jobber’s House, and the levels of complexity are comparable. The main difference between these two datasets is the presence of thick exterior walls from Old Sun’s brick construction. These two datasets were compared to see if the large difference in thickness between interior walls and exterior walls would have an impact on the results of the modelling. As seen in Table 4, the results of storey 1 of the Jobber’s House and storey 3 of the Old Sun Annex are extremely similar. This is a good indicator that the algorithm can deal with varied wall thickness throughout a building. Finally, storey 3 of the Old Sun Main Building shows a dramatic increase in size, complexity, and level of clutter when compared to the other three datasets. As expected, the results have shown a decrease in quality for precision, recall, and accuracy.

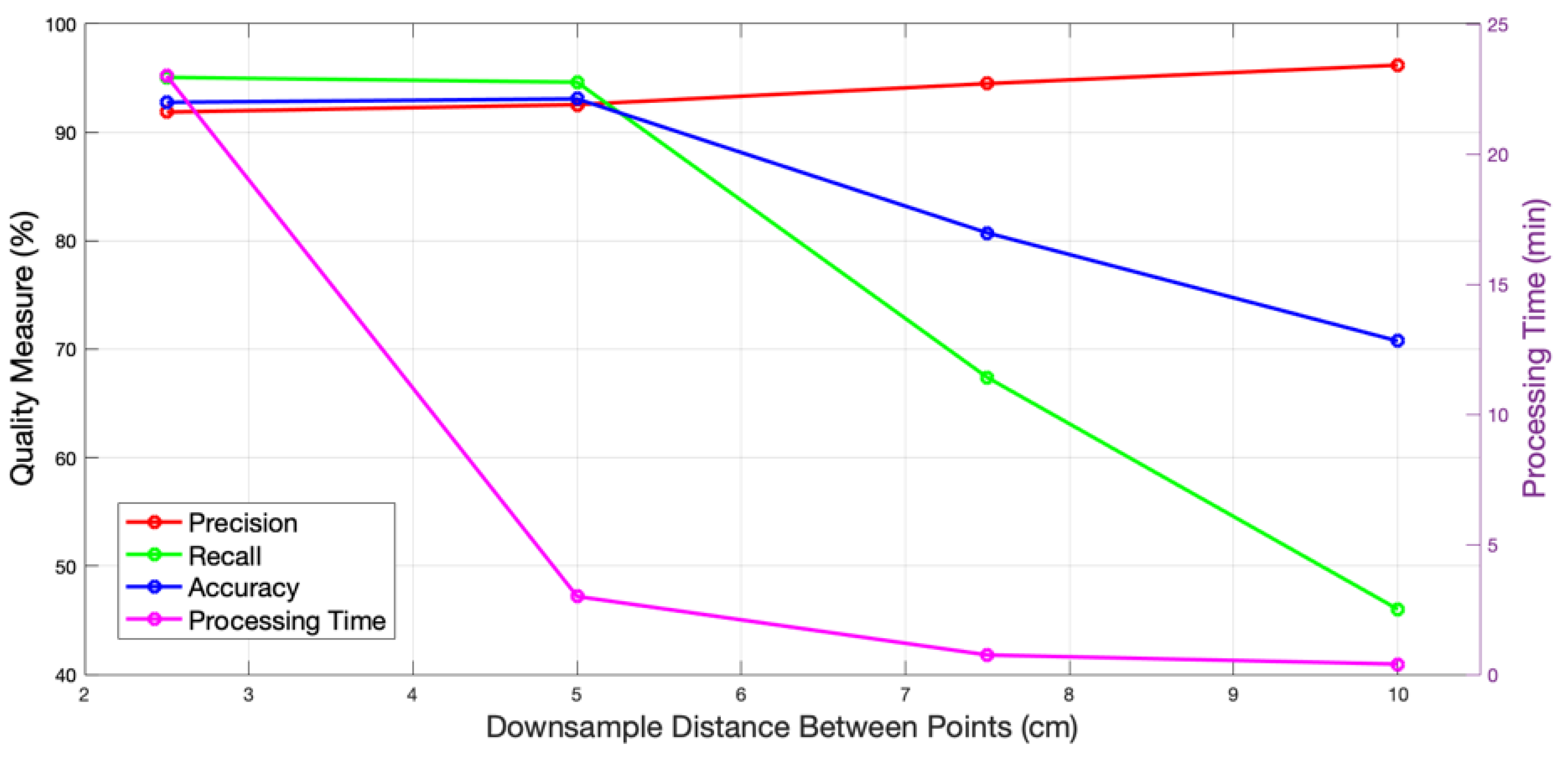

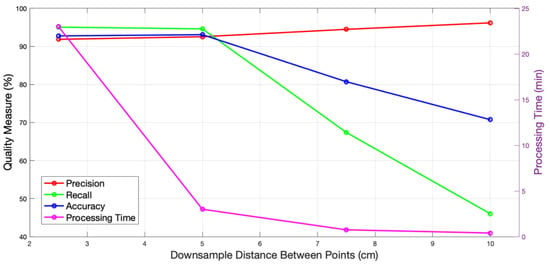

5.4. Down Sampling

Point clouds often contain millions of points, and a dataset must be down sampled to reduce the computational load before various building modelling algorithms can be run. The down sampling process should not compromise the accuracy of the building modelling process. Testing was therefore performed to quantify any effects of down sampling by varying the minimum distance between points and comparing the respective quality measures. A value of 2.5 cm was chosen as the first down sampling distance as this was the minimum value required to reduce the point cloud enough to run through the programming environment. The down sampling distance was then increased in increments equal to this smallest distance (5 cm, 7.5 cm, and 10 cm). The results of the building model creation were used to determine the quality measures for each down sampling distance. The effects of different down sample distances on quality measures of precision, recall, and accuracy are presented in Figure 16.

Figure 16.

Results for various down sample distances (2.5 cm, 5 cm, 7.5 cm, and 10 cm) in the Signal House dataset.

It is clear from Figure 16 that the down sample distance of 5 cm was the best choice in terms of maximizing quality measures and minimizing processing time. The quality measures, including recall and accuracy, began to decline when the distance between points was larger than 5 cm. In addition, the processing time dropped off steeply from 2.5 cm to 5 cm, and then continued to decrease marginally.
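The effect of the down sampling distance on point count can be sketched with a voxel-grid filter. Note that this is a swapped-in technique used only for illustration: a voxel grid keeps one point per cube of the given side length and does not strictly enforce a minimum distance between neighbouring points, so it is not the down sampling procedure used in this work. The function name and the synthetic point cloud are assumptions.

```python
import numpy as np

def voxel_downsample(points, spacing):
    """Keep one point per cubic voxel of side `spacing` (the first one encountered)."""
    points = np.asarray(points, dtype=float)
    voxels = np.floor(points / spacing).astype(np.int64)
    _, first = np.unique(voxels, axis=0, return_index=True)
    return points[np.sort(first)]

# Synthetic cloud filling a 1 m cube (hypothetical, for illustration only)
rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(20000, 3))

# Distances tested in the text: multiples of the smallest distance, 2.5 cm
spacings = [0.025 * k for k in (1, 2, 3, 4)]
sizes = [len(voxel_downsample(cloud, s)) for s in spacings]
for s, n in zip(spacings, sizes):
    print(f"{100 * s:.1f} cm spacing: {n} points kept")
```

The number of retained points falls quickly as the spacing grows, which mirrors the trade-off discussed above between processing time and the quality measures.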

6. Conclusions

The methods developed in this work aim to automatically reconstruct basic building features. The development of a novel strategy for the automated separation of multi-level storeys and the automated extraction of doors and windows will remove steps from the modelling process that would typically be performed manually. The automation of these steps of the building modelling process will eliminate much of the time, cost, and errors associated with manual modelling. The final models created in this work can be directly imported into a CAD environment such as Autodesk for further editing and manipulation depending on the desired application.

The datasets tested in this work were collected from heritage buildings located in western Canada; however, the algorithm will work on non-heritage buildings. In addition, the method has been proven successful with varying sizes, varying complexity, the presence of clutter, and different construction types. The algorithms developed in this work will reduce the amount of manual modelling that has to be performed in order to transform point clouds into floor plans and building models.

Future work includes the automated detection of tilted storeys, a possible building characteristic in heritage buildings, as well as the quantification of clutter levels within a building. Dealing with the presence of clutter, most often in the form of furniture, will improve the quality of the extracted results.

Author Contributions

Conceptualization, K.P., D.D.L. and P.D.; methodology, K.P. and D.D.L.; software, K.P.; validation, K.P.; formal analysis, K.P.; investigation, K.P.; resources, D.D.L. and P.D.; data curation, K.P. and P.D.; writing—original draft preparation, K.P.; writing—review and editing, K.P., D.D.L. and P.D.; visualization, K.P.; supervision, D.D.L. and P.D.; project administration, P.D.; funding acquisition, K.P., D.D.L. and P.D. All authors have read and agreed to the published version of the manuscript.

Funding

Support for this work was provided by the Alberta Graduate Excellence Scholarship (AGES), the Natural Sciences and Engineering Research Council (NSERC) of Canada Graduate Scholarship—Master’s (CGS-M) Program and NSERC (RGPIN/03775-2018).

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

Data supporting the reported results can be found on https://alberta.preserve.ucalgary.ca/ (accessed on 25 August 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).