Landslide Detection from Open Satellite Imagery Using Distant Domain Transfer Learning

Abstract

1. Introduction

2. Materials

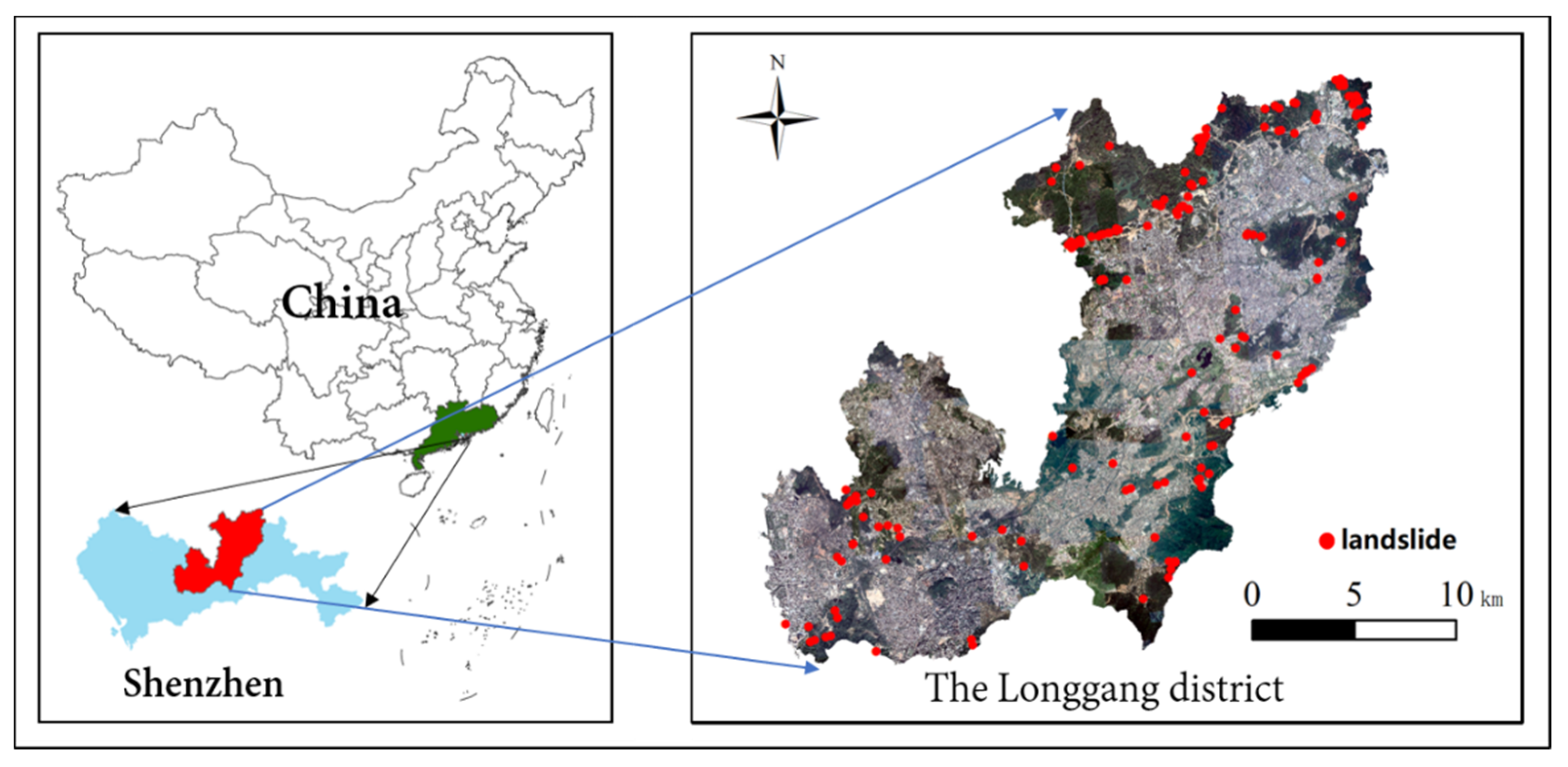

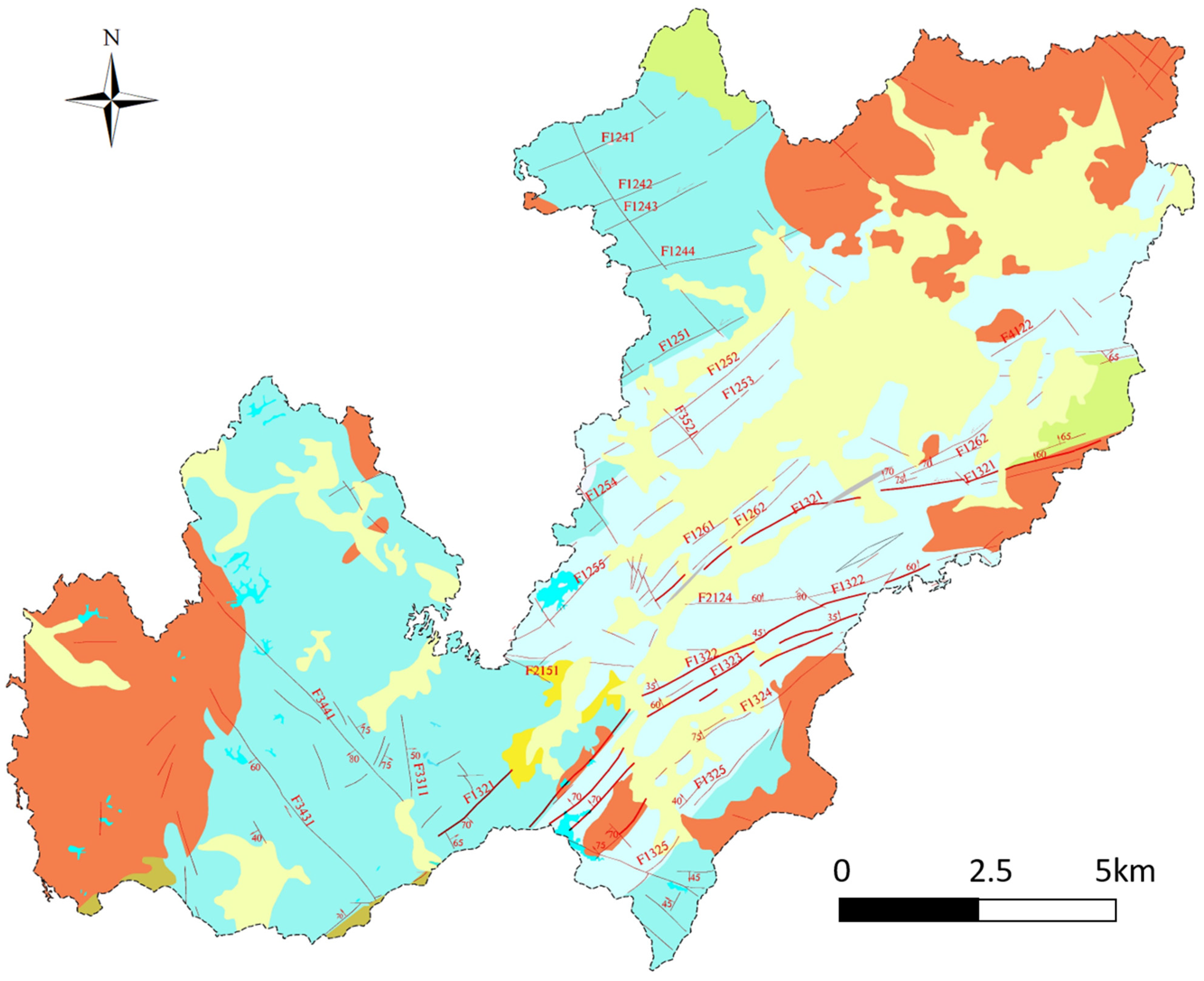

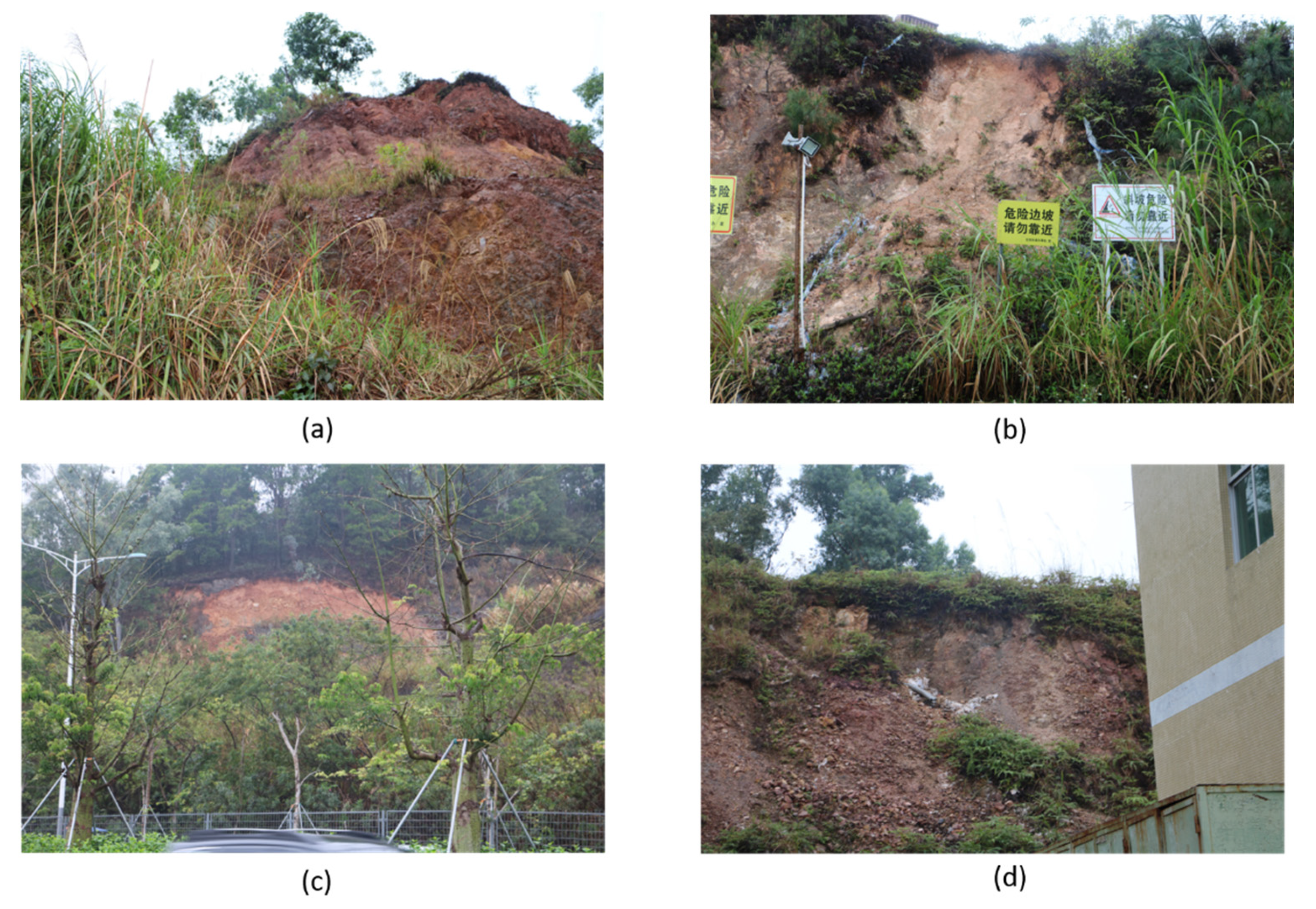

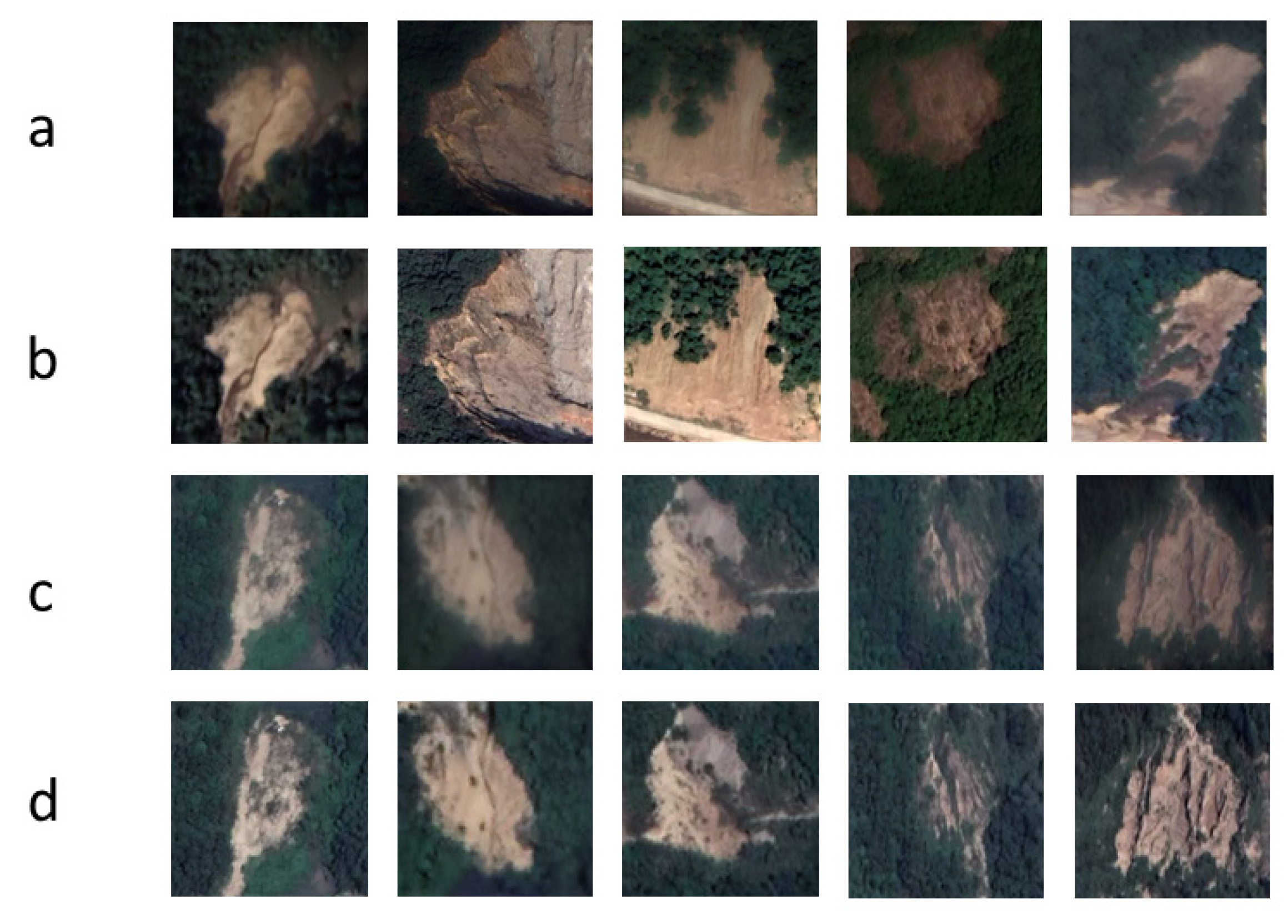

2.1. Study Area and Dataset

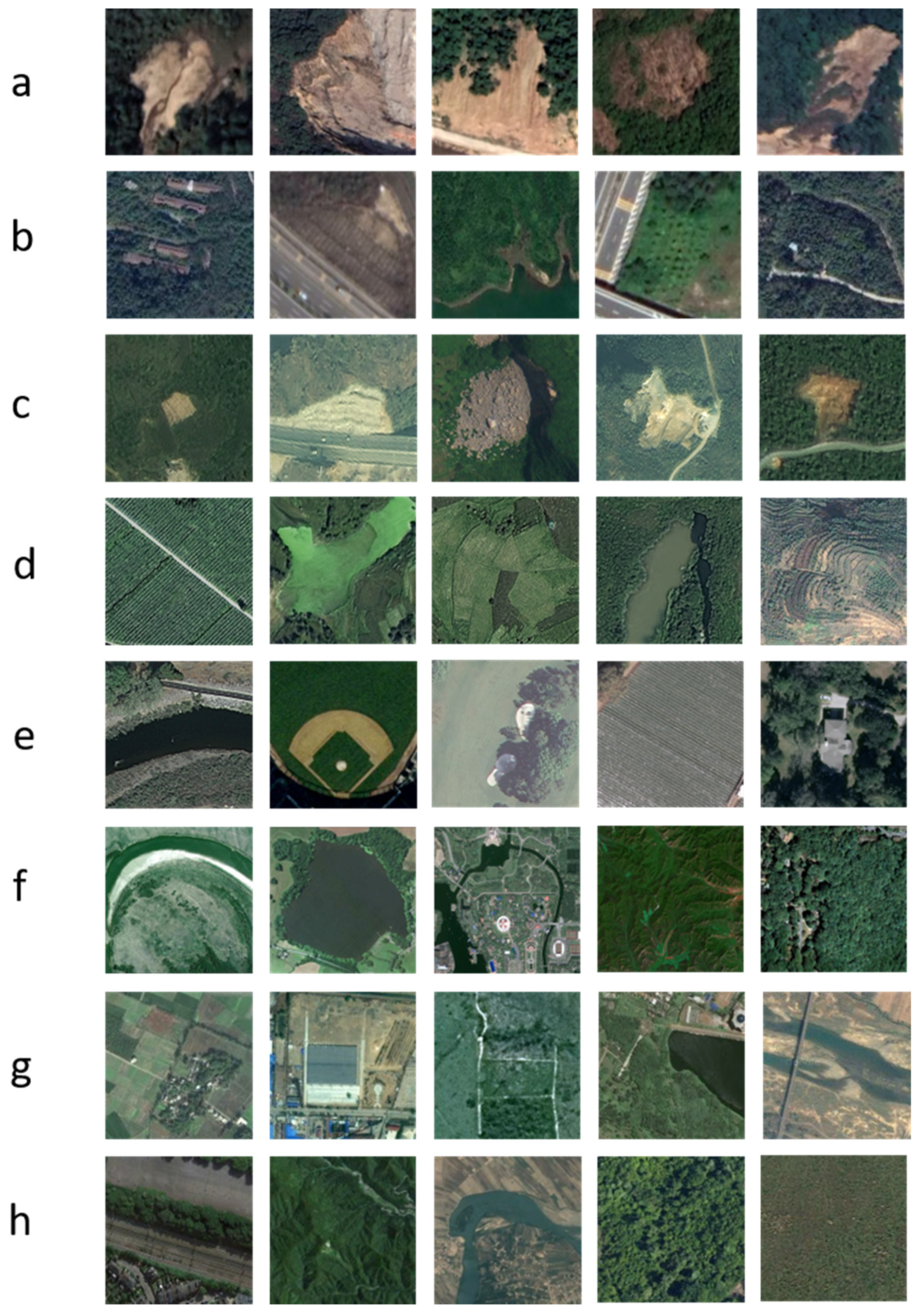

2.2. The Source Domain Datasets

3. Methods

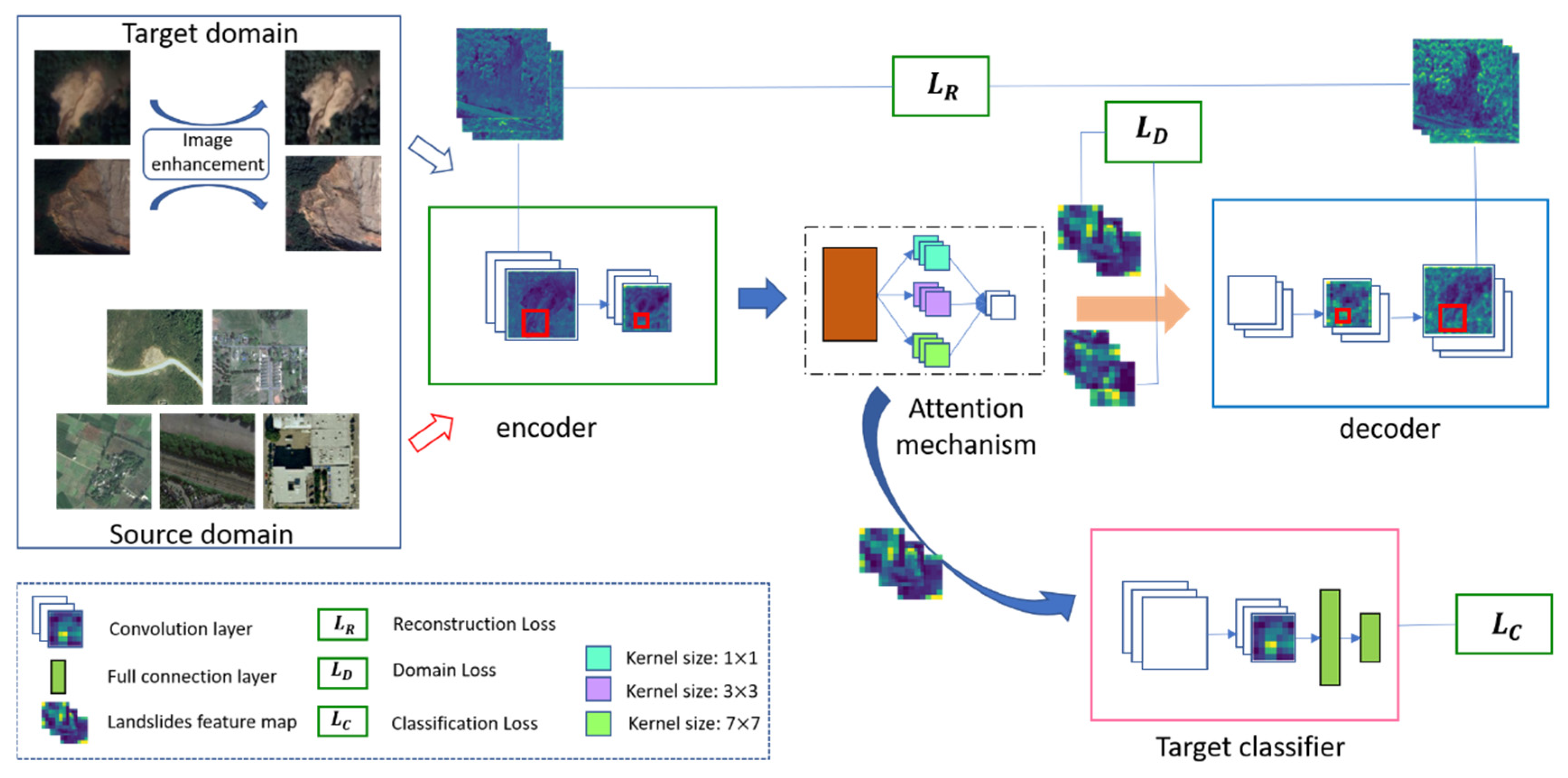

3.1. Image Enhancement

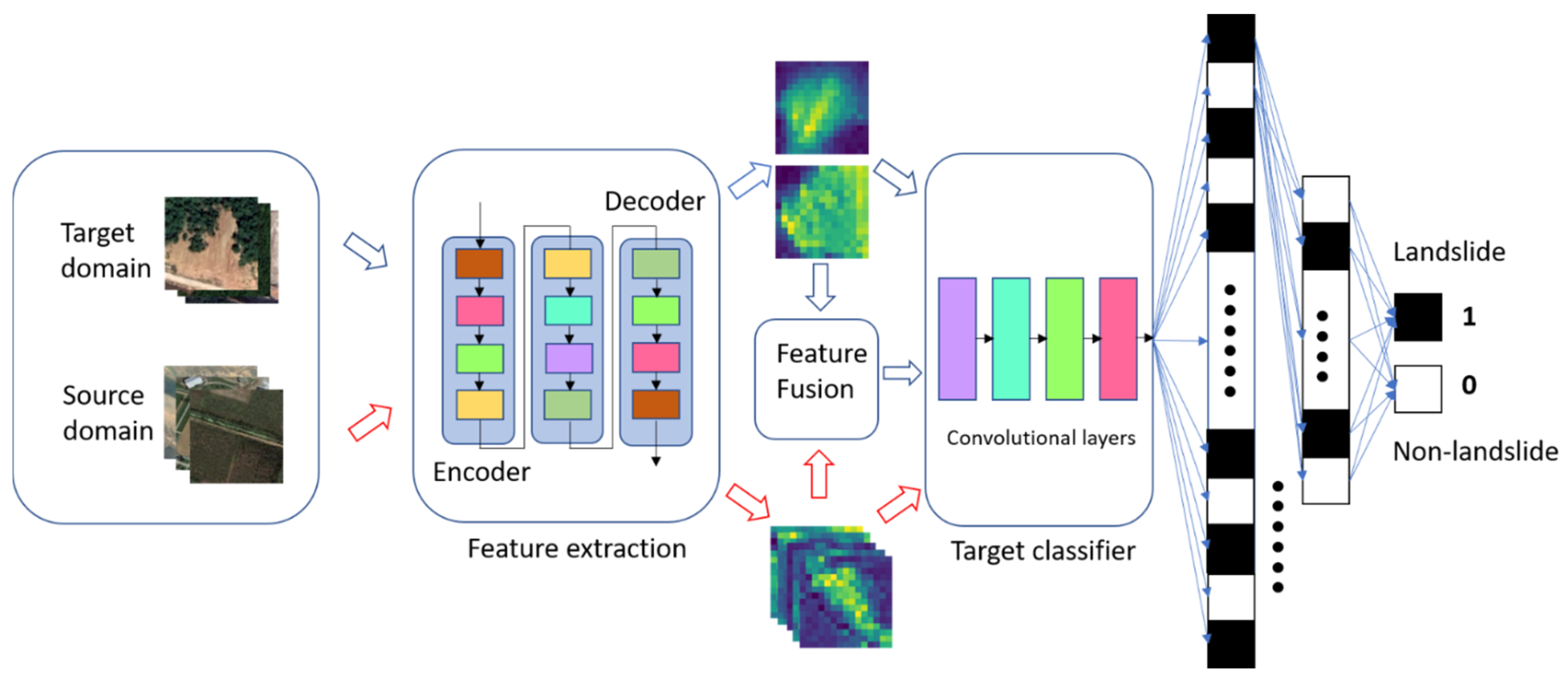

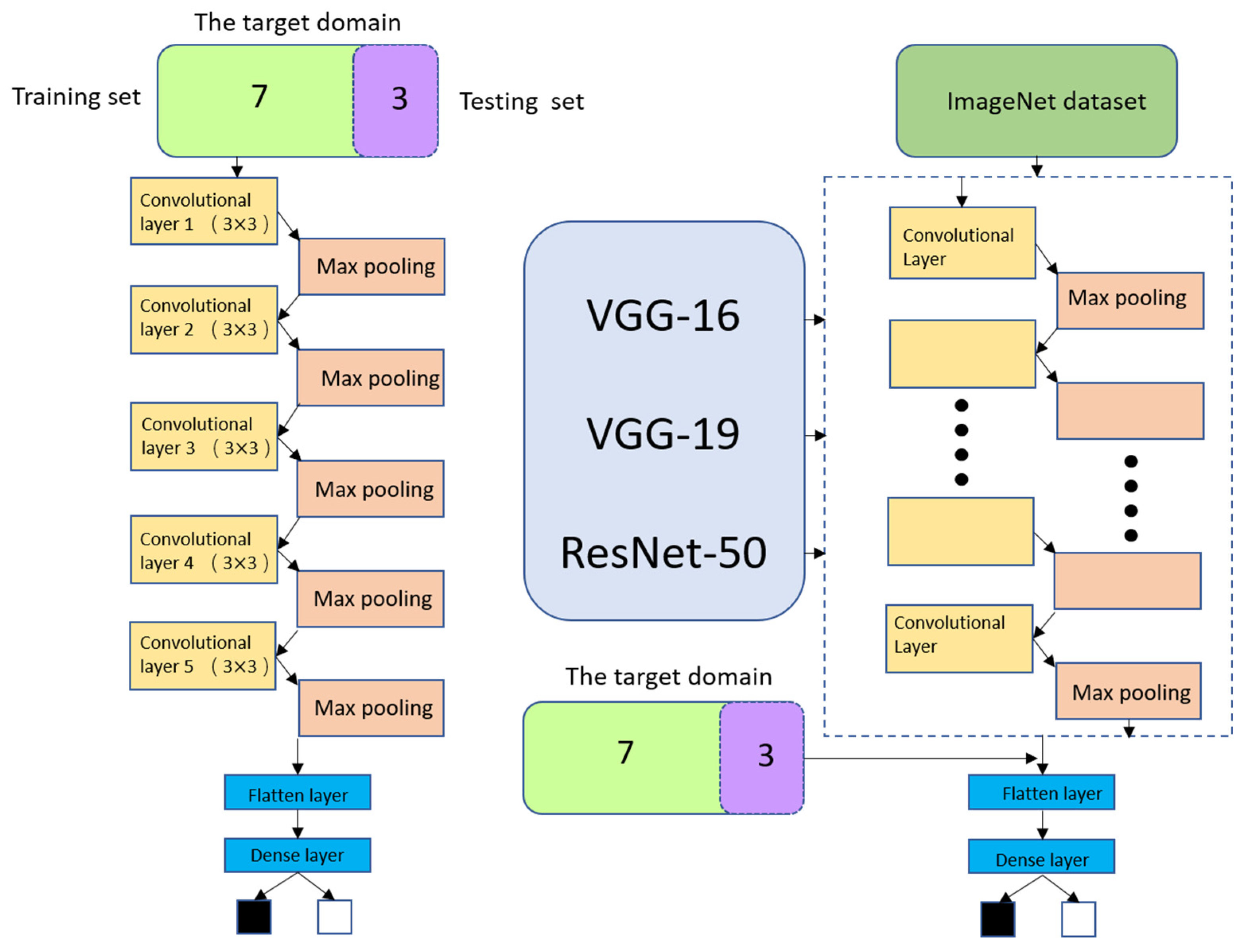

3.2. Distant Domain Transfer Learning

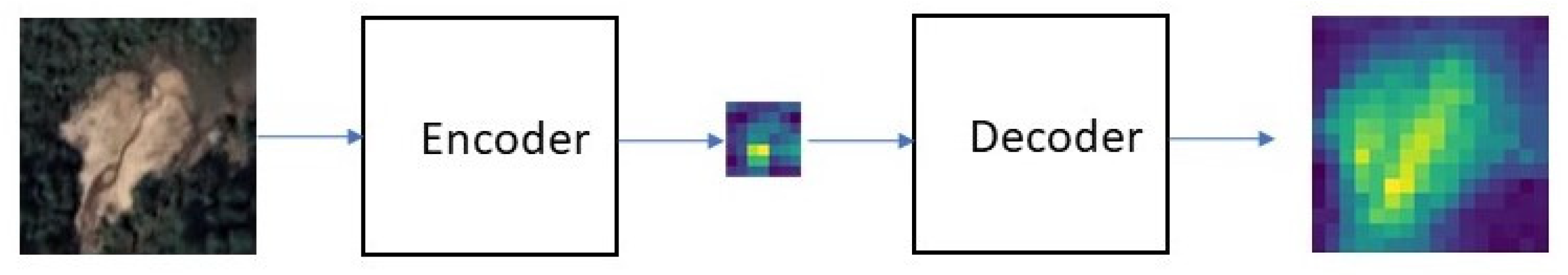

3.2.1. Autoencoder

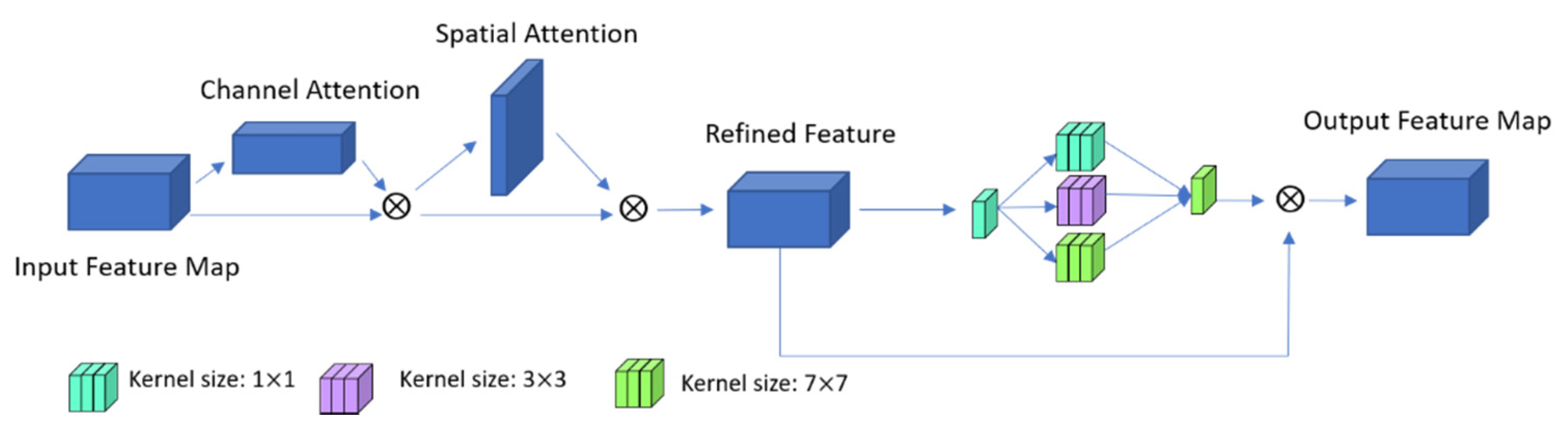

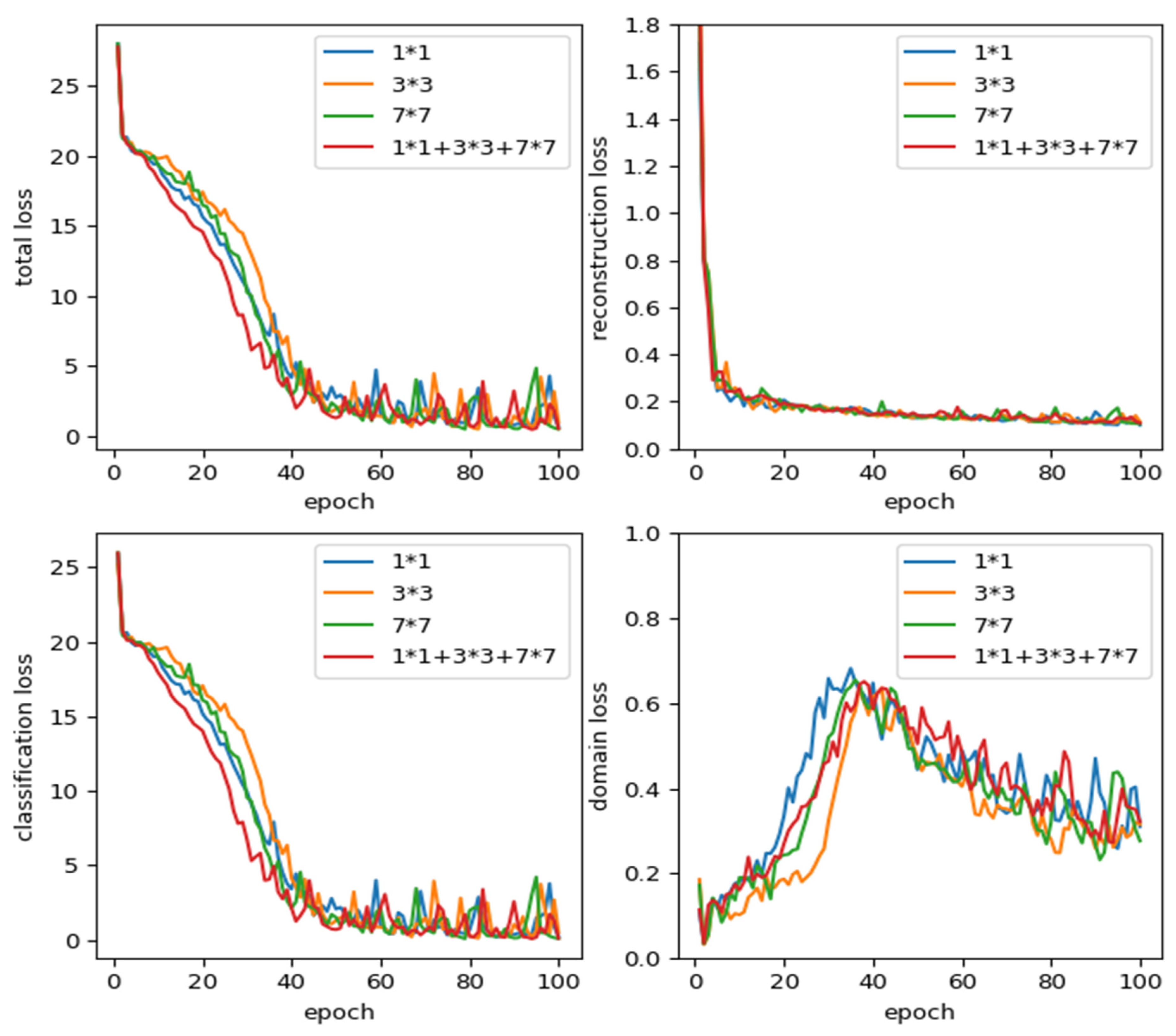

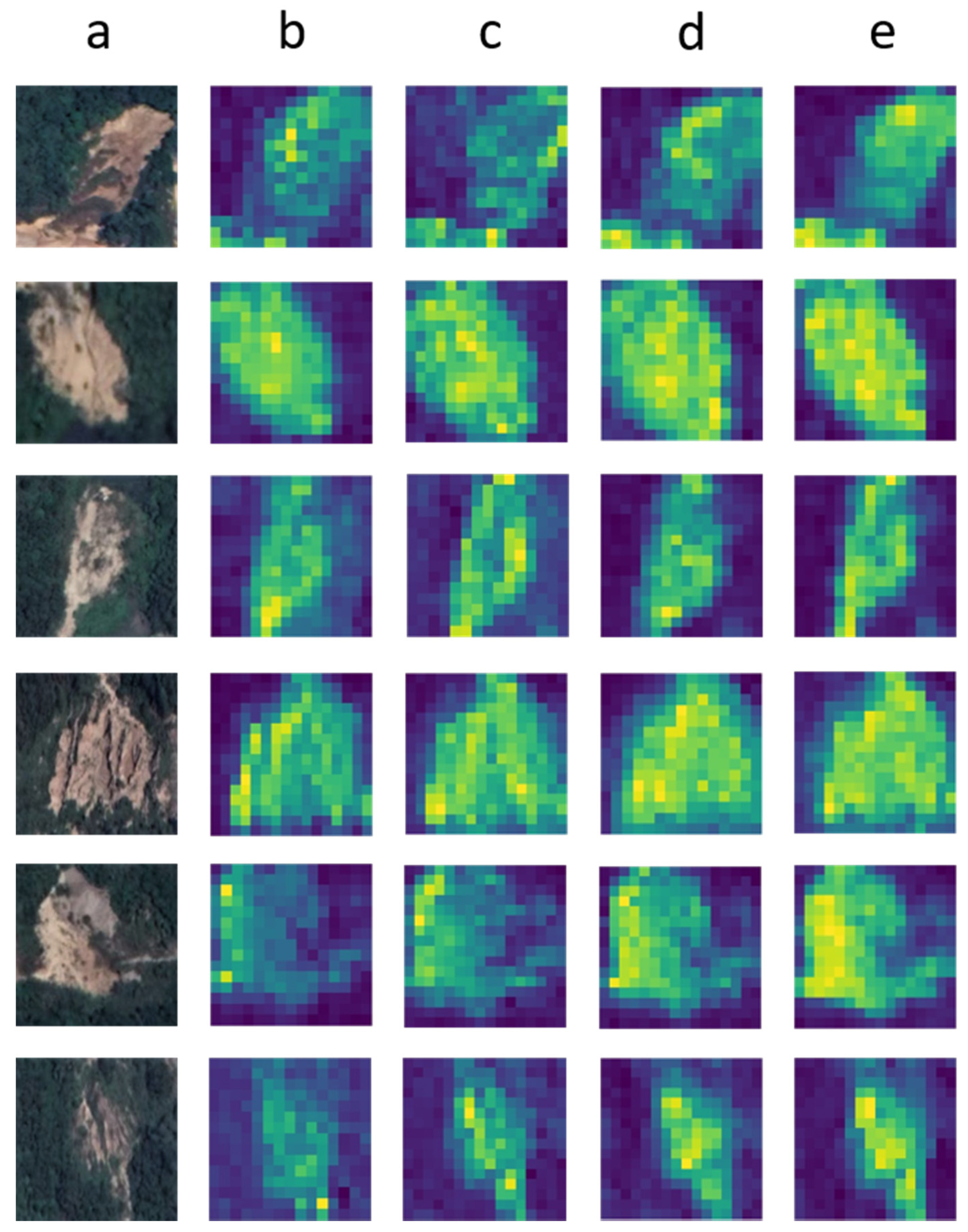

3.2.2. Attention Mechanism

3.2.3. The Loss of the Model

1. Reconstruction Loss

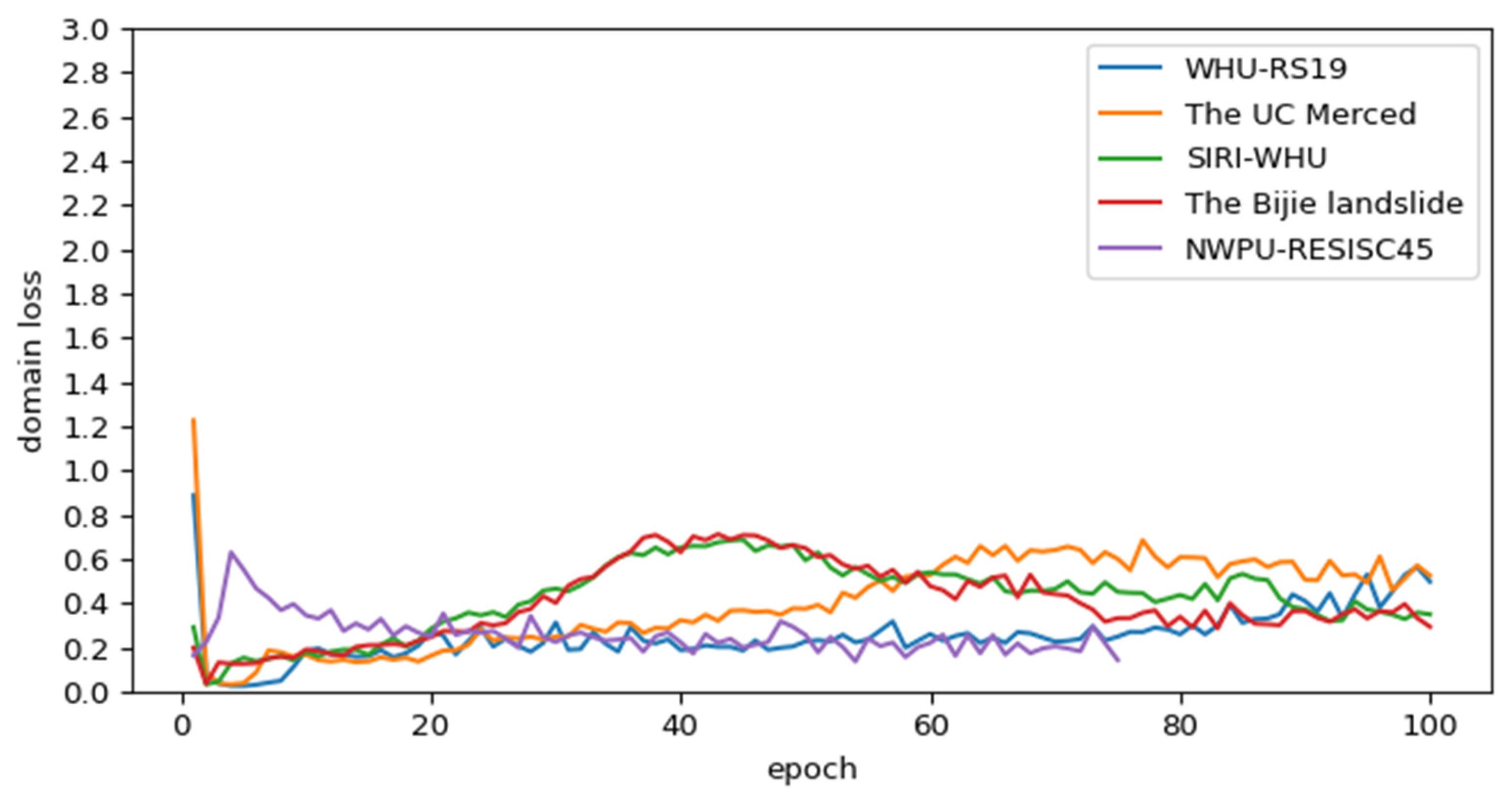

2. Domain Loss

3. Classification Loss

4. The Total Loss of the DDTL
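The three components listed above can be combined into one training objective. The following is only a minimal sketch under stated assumptions, not the authors' formulation: it assumes a mean-squared-error reconstruction loss, a linear maximum mean discrepancy as the domain loss, a cross-entropy classification loss, and a weighted sum for the total. The function names and the weights `w_rec`, `w_dom`, and `w_cls` are hypothetical.

```python
import numpy as np

def reconstruction_loss(x, x_hat):
    """Assumed form: mean squared error between inputs and autoencoder outputs."""
    return float(np.mean((x - x_hat) ** 2))

def domain_loss(f_src, f_tgt):
    """Assumed form: linear MMD, the squared distance between mean feature vectors."""
    diff = f_src.mean(axis=0) - f_tgt.mean(axis=0)
    return float(np.sum(diff ** 2))

def classification_loss(probs, labels, eps=1e-12):
    """Assumed form: cross-entropy over predicted class probabilities.

    probs has shape (n_samples, n_classes); labels holds integer class ids.
    """
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked + eps)))

def total_loss(l_rec, l_dom, l_cls, w_rec=1.0, w_dom=1.0, w_cls=1.0):
    """Hypothetical weighted sum of the three component losses."""
    return w_rec * l_rec + w_dom * l_dom + w_cls * l_cls
```

With equal weights the total is simply the sum of the three terms. In practice the weights would be tuned so that no single term dominates training.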

4. Results

4.1. The Result of Image Enhancement

4.2. The Comparison of Different Models

4.2.1. Landslide Detection by Pretrained Model

4.2.2. Landslide Detection by the CNN Model

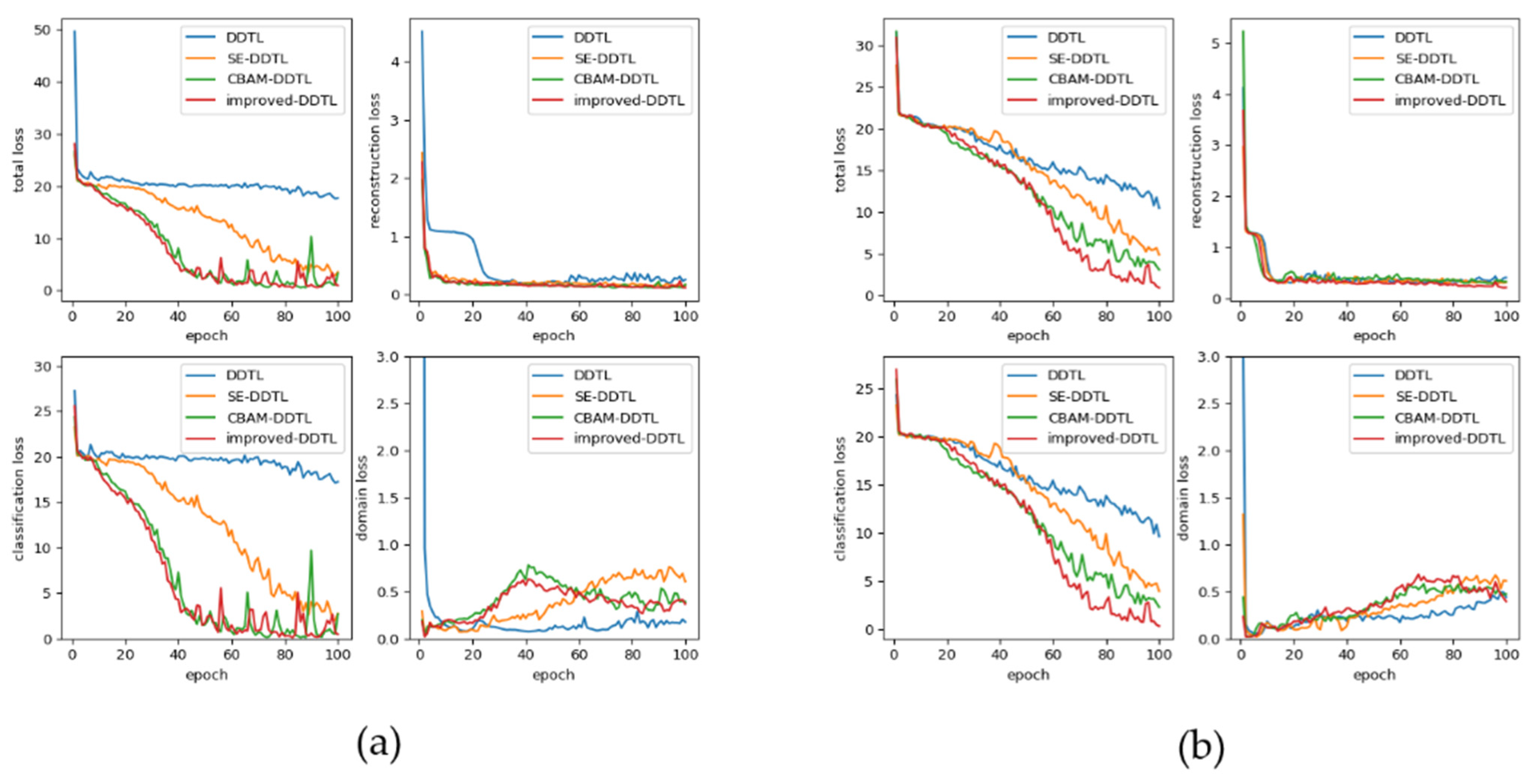

4.3. The Comparison of Different Attention Mechanisms

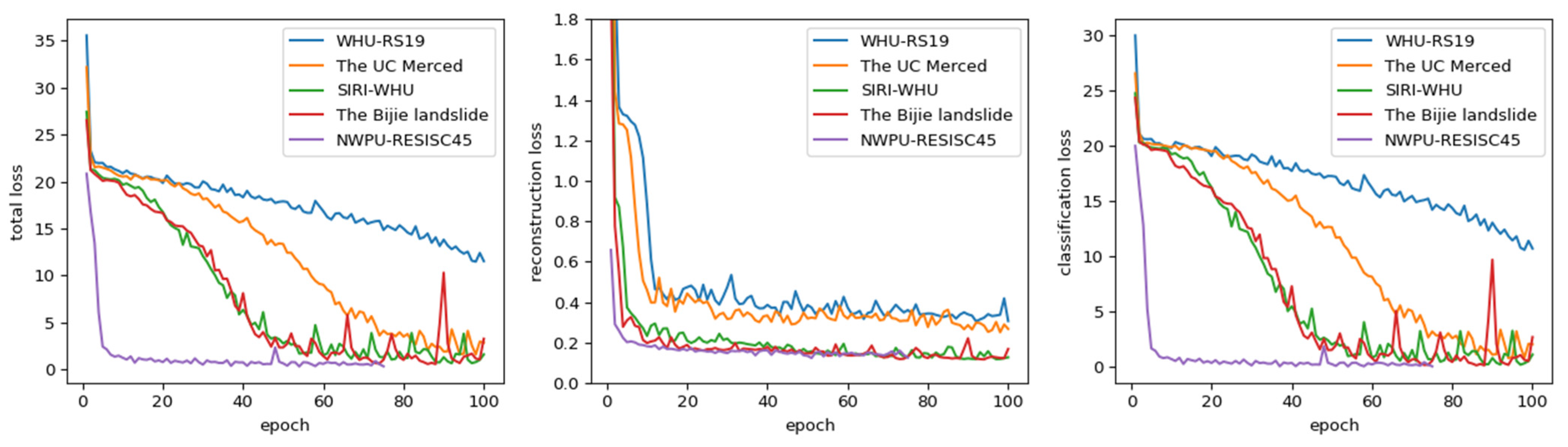

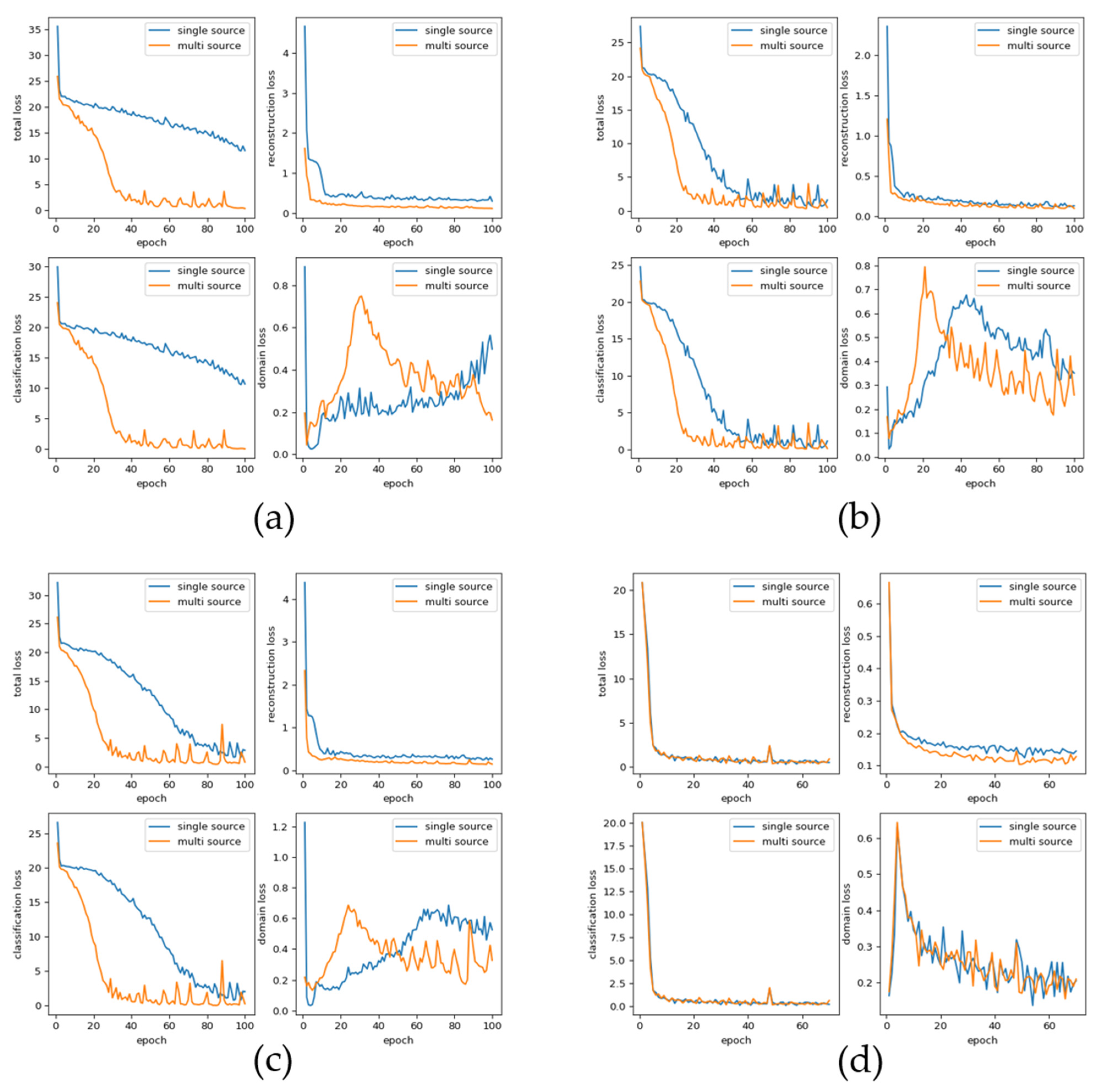

4.4. The Comparison of Different Source Domains

4.5. Effectiveness Verification of Landslide Detection

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Images | Total Images |
|---|---|---|
| Bijie landslide dataset | 770 landslide samples and 2003 negative samples | 2773 |
| UC Merced land use dataset | 21 classes × 100 images | 2100 |
| Google image dataset of SIRI-WHU | 12 classes × 200 images | 2400 |
| WHU-RS19 | 19 classes × ~50 images | 1005 |
| NWPU-RESISC45 | 45 classes × 700 images | 31,500 |

| Model | Accuracy (Enhanced Images) | Precision (Enhanced Images) | Loss (Enhanced Images) | Accuracy (Original Images) | Precision (Original Images) | Loss (Original Images) |
|---|---|---|---|---|---|---|
| CNN | 86.16 | 0.8974 | 0.9144 | 83.94 | 0.8915 | 0.5518 |
| VGG-16 | 87.09 | 0.8674 | 0.8674 | 85.71 | 0.8504 | 0.3503 |
| VGG-19 | 88.24 | 0.9085 | 0.2144 | 86.26 | 0.8835 | 0.2572 |
| ResNet-50 | 89.86 | 0.9130 | 0.8299 | 88.38 | 0.9085 | 1.0596 |
| DDTL | 88.01 | 0.9174 | 0.7342 | 87.94 | 0.9114 | 0.8465 |
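The effect of image enhancement can be read off the table above by subtracting the original-image accuracy from the enhanced-image accuracy for each model. The short sketch below does that arithmetic. It assumes the accuracy columns are percentages, and the names `accuracy` and `enhancement_gain` are illustrative only.

```python
# Accuracy values copied from the table above as (enhanced, original).
# Assumption: both columns are percentages.
accuracy = {
    "CNN": (86.16, 83.94),
    "VGG-16": (87.09, 85.71),
    "VGG-19": (88.24, 86.26),
    "ResNet-50": (89.86, 88.38),
    "DDTL": (88.01, 87.94),
}

def enhancement_gain(table):
    """Return enhanced-minus-original accuracy per model, in percentage points."""
    return {model: round(enh - orig, 2) for model, (enh, orig) in table.items()}

gains = enhancement_gain(accuracy)
for model, gain in gains.items():
    print(f"{model}: {gain:+.2f} points")
```

Every model in the table gains accuracy on the enhanced images. The gain ranges from 0.07 points for DDTL to 2.22 points for the CNN.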

| Target domain | RGB | DEM | RGB + DEM |
|---|---|---|---|
| Source domain | RGB | DEM | RGB + DEM |
| Total loss | 19.636 | 27.84 | 17.65 |

| Dataset | DDTL | SE-DDTL | CBAM-DDTL | Improved DDTL |
|---|---|---|---|---|
| Bijie landslide dataset | 79.69 | 93.36 | 95.89 | 96.03 |
| UC Merced land use dataset | 87.33 | 94.57 | 95.78 | 96.78 |

| Auxiliary Source Domain | WHU-RS19 | SIRI-WHU | UC Merced Land Use | NWPU-RESISC45 |
|---|---|---|---|---|
| Primary Source Domain | The Bijie landslide dataset | | | |
| Single source | 87.96 | 93.63 | 92.38 | 96.01 |
| Multisource | 94.03 | 96.25 | 94.46 | 96.53 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qin, S.; Guo, X.; Sun, J.; Qiao, S.; Zhang, L.; Yao, J.; Cheng, Q.; Zhang, Y. Landslide Detection from Open Satellite Imagery Using Distant Domain Transfer Learning. Remote Sens. 2021, 13, 3383. https://doi.org/10.3390/rs13173383
