Modeling of Environmental Impacts on Aerial Hyperspectral Images for Corn Plant Phenotyping

Abstract

1. Introduction

- Collect time-series hyperspectral images of two corn genotypes under three nitrogen treatments every 2.5 min from growth stage V4 to R1, along with synchronized environmental data.

- Using time-series decomposition and an artificial neural network (ANN), build a model that predicts the environment-induced variation in each measured phenotyping feature, such as the normalized difference vegetation index (NDVI) and relative water content (RWC).

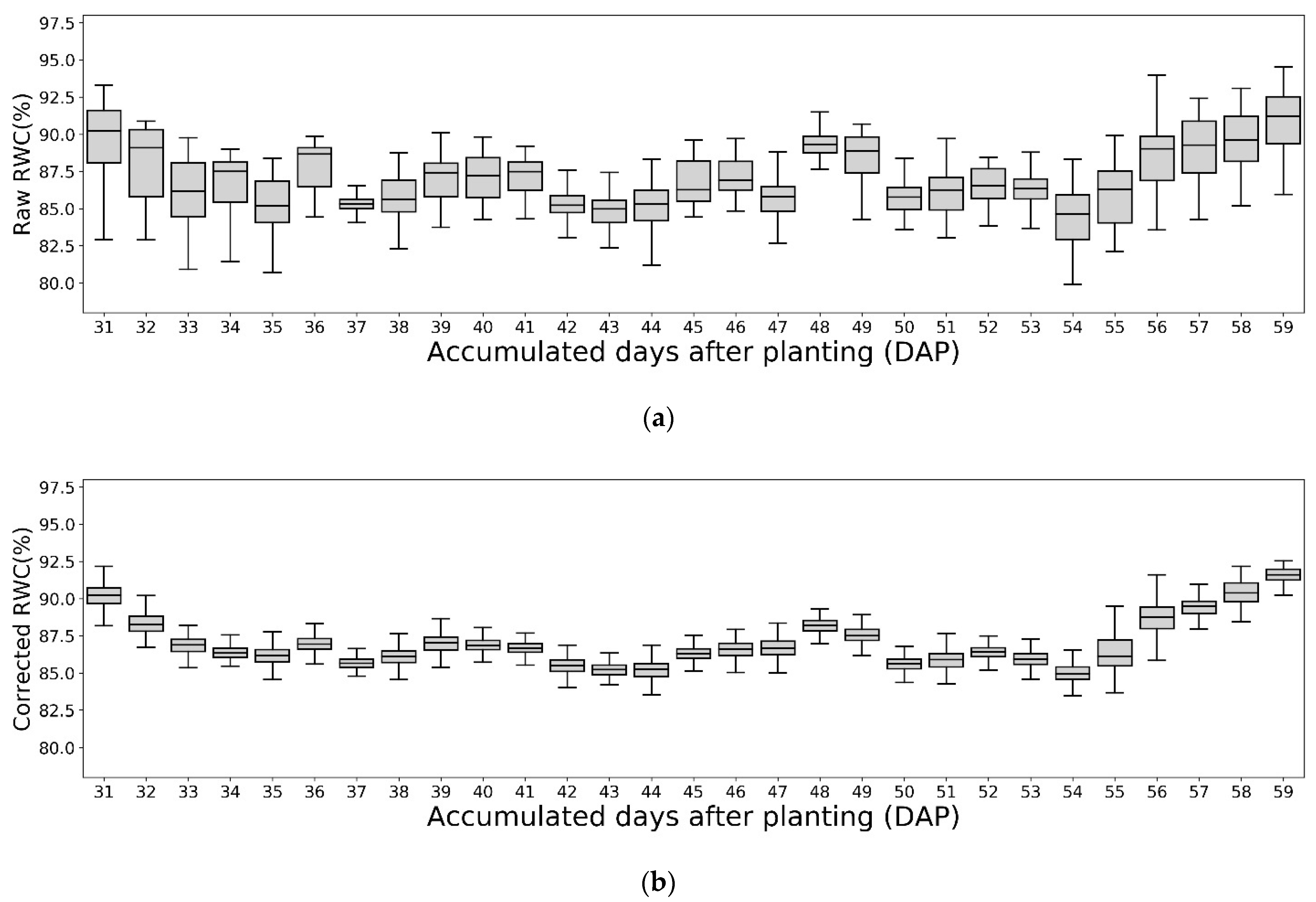

- Evaluate the trained ANN models and their effectiveness in removing environmental noise by comparing the variance of each phenotyping feature (e.g., NDVI and RWC) before and after model correction.
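A minimal sketch of the correction and variance comparison in the last objective, assuming the ANN's prediction of the environment-induced component is already available as a list (how it is produced is not shown). The function name `variance_reduction` and the toy values are hypothetical, not taken from the study.

```python
from statistics import pvariance


def variance_reduction(raw, predicted_env):
    """Subtract a predicted environment-induced component from a raw
    phenotyping feature series; return the corrected series and the
    population variance before and after the subtraction."""
    if len(raw) != len(predicted_env):
        raise ValueError("series must have the same length")
    corrected = [r - e for r, e in zip(raw, predicted_env)]
    return corrected, pvariance(raw), pvariance(corrected)


# Toy NDVI-like series (hypothetical): a constant plant signal of 0.80
# plus a diurnal swing that the model is assumed to predict exactly.
env = [0.00, 0.02, 0.03, 0.02, 0.00, -0.02]
raw = [0.80 + e for e in env]
corrected, before, after = variance_reduction(raw, env)
```

A smaller variance after subtraction indicates that the predicted component captured part of the environmental noise; with real data the reduction would be partial rather than complete as in this toy case.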

2. Materials and Methods

2.1. Experiment Design and Data Collection

2.2. Time Series Decomposition for Environment-Induced Variation
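One way to separate a slow growth trend from diurnal, environment-induced variation is sketched below. It uses a classical additive decomposition (a centered moving average over one daily cycle) rather than a LOESS-based method such as STL, and the period of 9 samples per day and the synthetic series are hypothetical.

```python
import numpy as np


def moving_average_decompose(series, period):
    """Classical additive decomposition (not STL): a centered moving
    average over one period estimates the slow growth trend, and the
    remainder (series - trend) is treated as environment-induced variation.
    The first and last period // 2 samples are dropped (no full window)."""
    x = np.asarray(series, dtype=float)
    if period % 2 == 0:
        raise ValueError("use an odd period so the window is centered")
    kernel = np.ones(period) / period
    trend = np.convolve(x, kernel, mode="valid")  # length n - period + 1
    half = period // 2
    variation = x[half:len(x) - half] - trend
    return trend, variation


# Synthetic example: 5 "days" of 9 samples each, linear growth plus a
# diurnal sine cycle whose period matches the averaging window.
t = np.arange(45)
growth = 0.5 + 0.01 * t
diurnal = 0.02 * np.sin(2 * np.pi * t / 9)
trend, variation = moving_average_decompose(growth + diurnal, period=9)
```

With the 2.5 min sampling described above, one day would span many more samples, and gaps between daytime acquisitions would need handling before a fixed period applies.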

2.3. Environmental Data Transformation and Selection

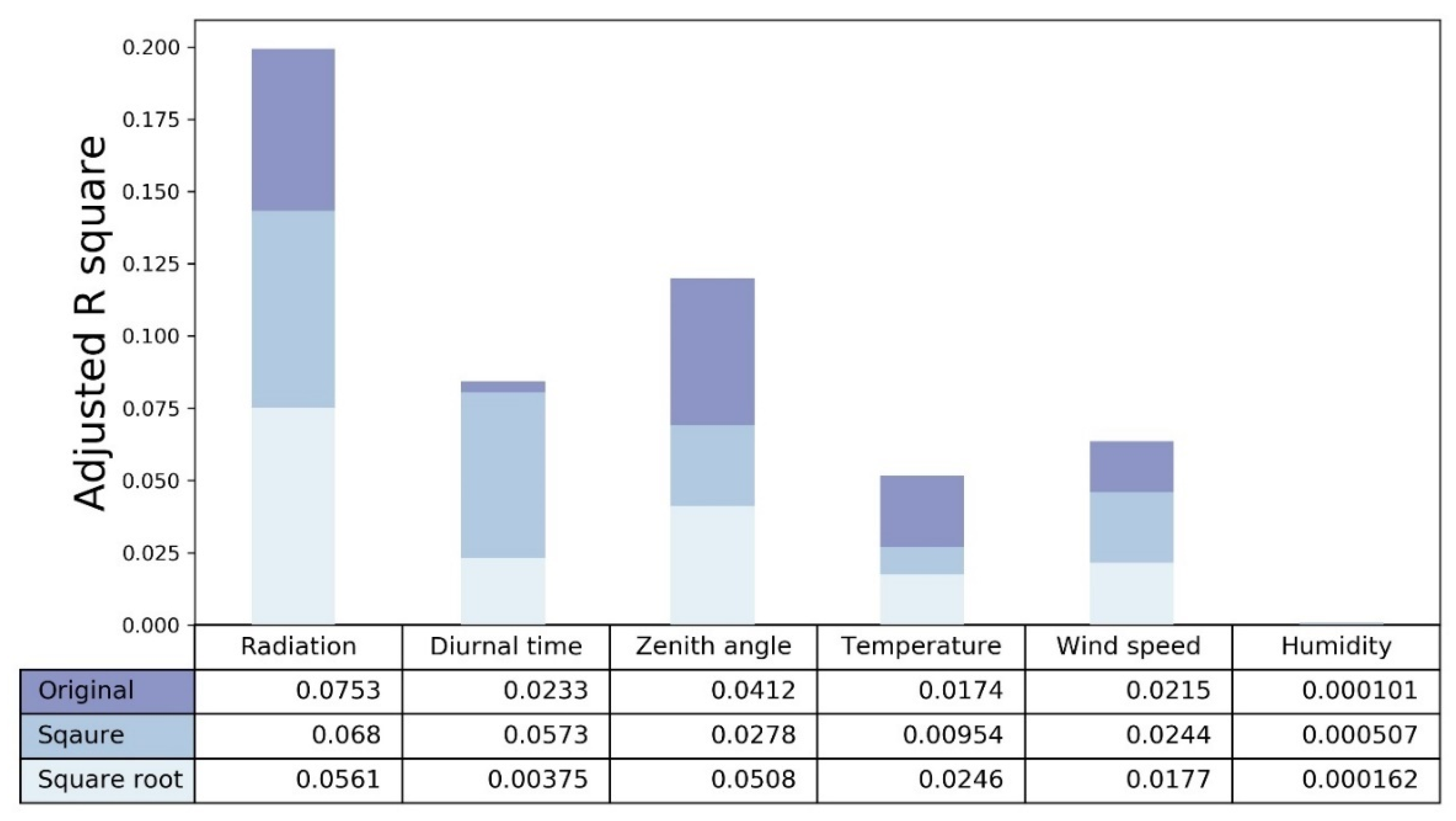

2.4. Data Quality Check

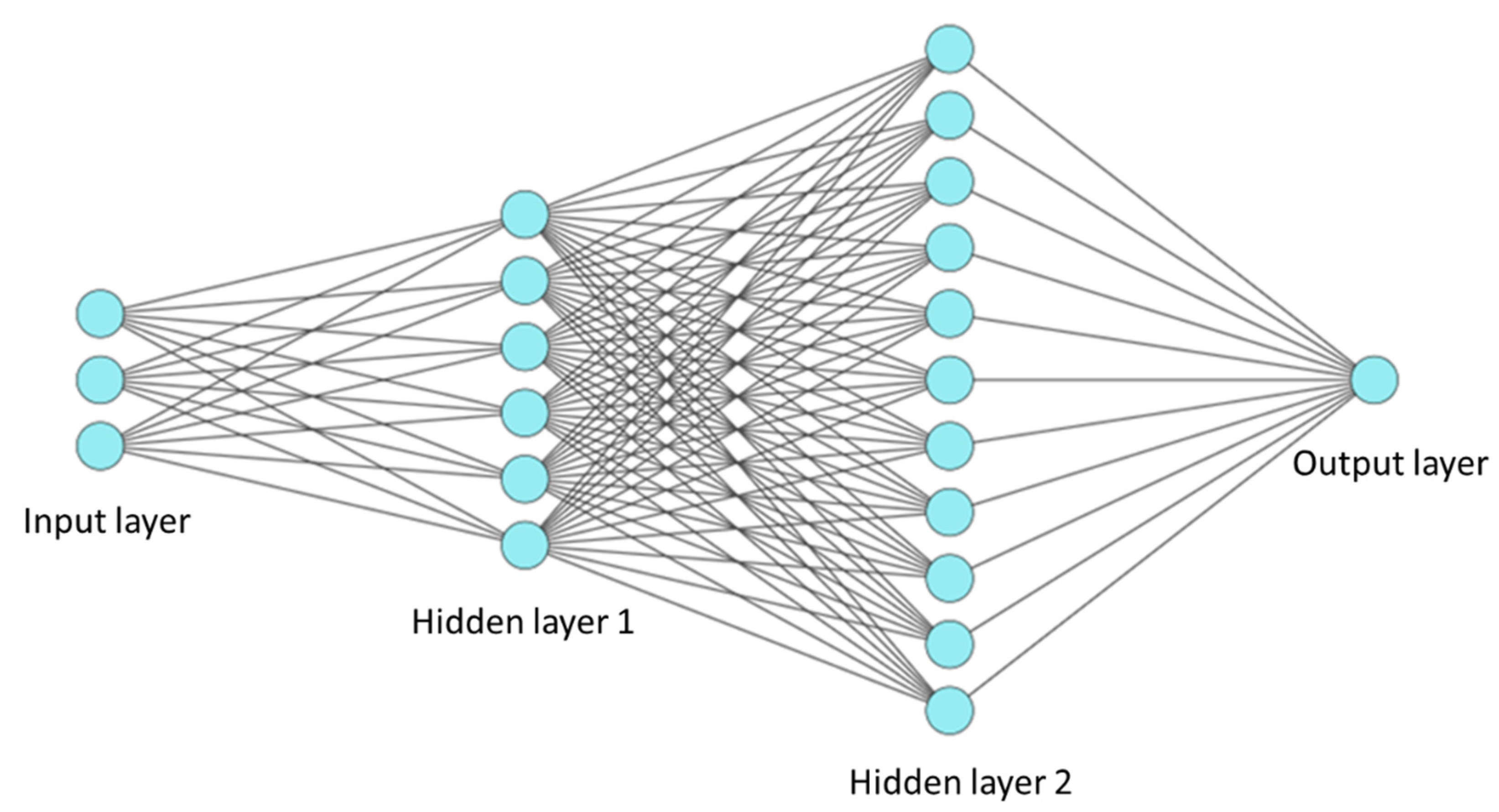

2.5. Artificial Neural Network (ANN) Model

2.5.1. Architecture
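A hedged sketch of a feed-forward regressor of the kind this section names: one hidden layer with ReLU activation, mapping normalized environmental inputs to one predicted variation value per sample. The input count of six (matching the environmental variables in the data tables), the hidden width of 16, and the He-style initialization are assumptions, not the study's stated design.

```python
import numpy as np


def init_mlp(n_inputs, n_hidden, seed=0):
    """He-style normal initialization for a one-hidden-layer regressor
    (layer sizes are hypothetical)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / n_inputs), size=(n_inputs, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, np.sqrt(2.0 / n_hidden), size=(n_hidden, 1)),
        "b2": np.zeros(1),
    }


def forward(params, X):
    """Map inputs of shape (n_samples, n_inputs) to one predicted
    environment-induced variation value per sample."""
    hidden = np.maximum(0.0, X @ params["W1"] + params["b1"])  # ReLU
    return (hidden @ params["W2"] + params["b2"]).ravel()


params = init_mlp(n_inputs=6, n_hidden=16)
X = np.random.default_rng(1).uniform(0.0, 1.0, size=(4, 6))
y_hat = forward(params, X)
```

Only the forward pass is shown; fitting the weights would require a loss and a gradient-based optimizer.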

2.5.2. Training and Optimization

2.6. Performance Evaluation

2.6.1. Evaluation Metrics
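Two common regression metrics, the coefficient of determination (R²) and the root-mean-square error (RMSE), are sketched below; that the study reports these particular metrics is an assumption here rather than something stated in this text.

```python
import math


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root-mean-square error, in the units of the feature (e.g. NDVI)."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Toy values: one prediction is off by 1.0.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
r2 = r_squared(y_true, y_pred)   # 1 - 1/5 = 0.8
error = rmse(y_true, y_pred)     # sqrt(1/4) = 0.5
```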

2.6.2. Multi-Model Comparison Analysis across Genotypes and Nitrogen Treatments

2.6.3. Phenotyping Features for Testing the Model and Workflow

2.7. Software and Computation

3. Results

3.1. Time Series Decomposition Result

3.2. Environmental Feature Selection and Range

3.3. Performance of the ANN Models

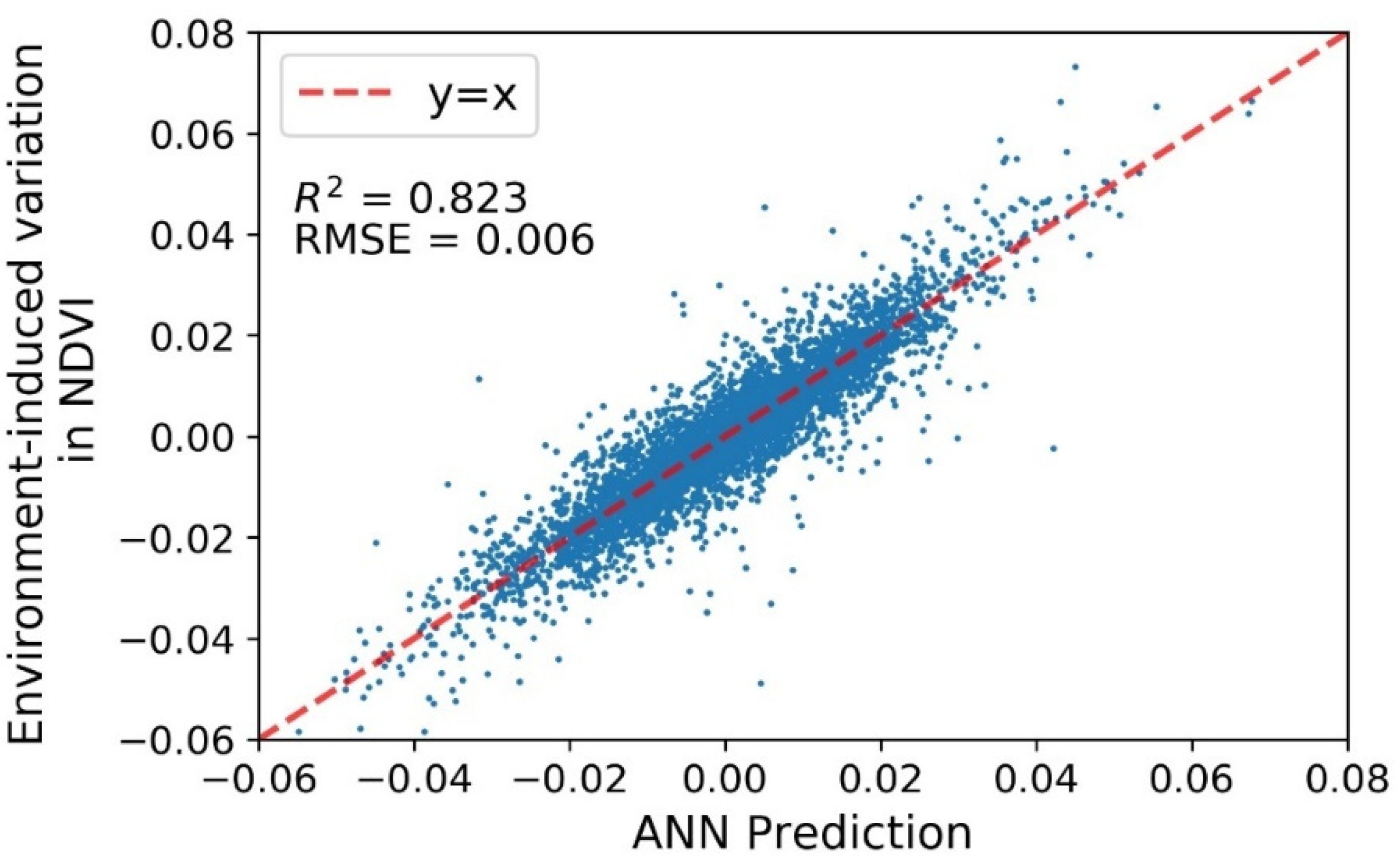

3.3.1. Overall Performance

3.3.2. Multi-Model Comparison Analysis across Genotypes and Nitrogen Treatments

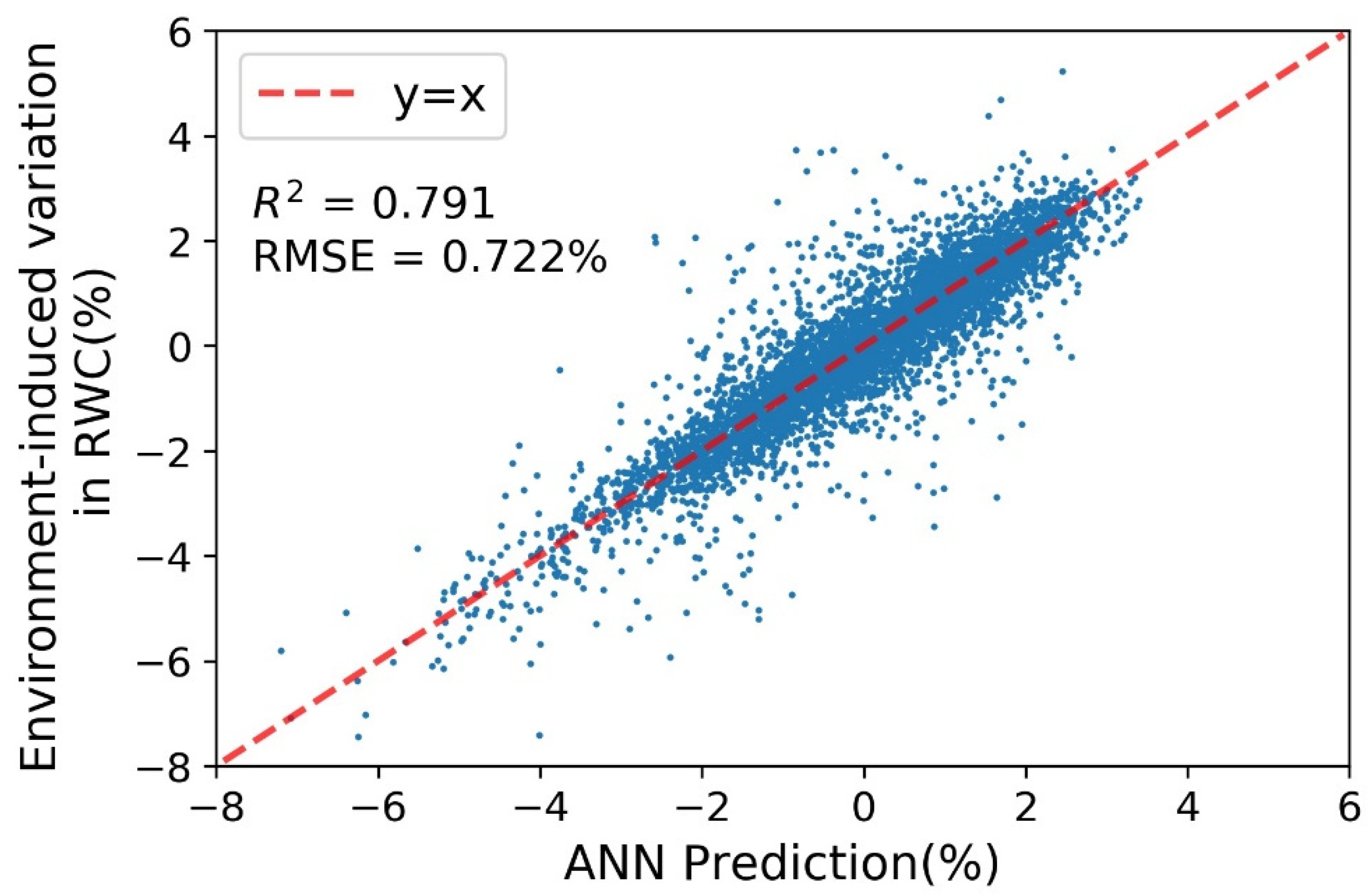

3.4. Modeling of Environmentally Induced Variation in Predicted RWC

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Plant Group | Genotype | Nitrogen Treatment | Abbreviation |
|---|---|---|---|
| 1 | B73 × Mo17 (Genotype 1) | High | G1H |
| 2 | B73 × Mo17 (Genotype 1) | Medium | G1M |
| 3 | B73 × Mo17 (Genotype 1) | Low | G1L |
| 4 | P1105AM (Genotype 2) | High | G2H |
| 5 | P1105AM (Genotype 2) | Medium | G2M |
| 6 | P1105AM (Genotype 2) | Low | G2L |
| 1–6 combined | All combined | All combined | All |
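The abbreviations follow a full 2 × 3 crossing of genotype and nitrogen level. A small sketch that rebuilds the table's group numbering from the two factors (the dictionary and function names are illustrative):

```python
from itertools import product

# Genotype and nitrogen-treatment labels as listed in the table above.
GENOTYPES = {"G1": "B73 × Mo17", "G2": "P1105AM"}
N_LEVELS = {"H": "High", "M": "Medium", "L": "Low"}


def plant_groups():
    """Enumerate the genotype-by-nitrogen design in table order:
    abbreviation -> (group number, genotype, nitrogen treatment)."""
    groups = {}
    for number, (g, n) in enumerate(product(GENOTYPES, N_LEVELS), start=1):
        groups[g + n] = (number, GENOTYPES[g], N_LEVELS[n])
    return groups
```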

| Data Collection | Sampling Days | Number of Samples | Variables |
|---|---|---|---|
| Hyperspectral images | 31 | 8631 | VNIR spectra: 376–1044 nm at 1.22 nm intervals |
| Environmental data | 31 | 8631 | Air temperature (°C); sun radiation (W/m²); wind speed (m/s); solar zenith angle (°); humidity (%); diurnal time (min) |
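As an example of deriving a phenotyping feature from these spectra, the sketch below computes NDVI = (NIR − Red) / (NIR + Red) from single bands. The wavelength grid is rebuilt from the table's range and interval, and the band centers of 670 nm (red) and 800 nm (NIR) are assumptions, not the study's stated choice.

```python
import numpy as np

# Hypothetical wavelength grid from the table: 376 nm upward in 1.22 nm
# steps, stopping at or below 1044 nm (actual band centers may differ).
WAVELENGTHS = np.arange(376.0, 1044.0 + 1e-9, 1.22)


def band_index(wavelengths, target_nm):
    """Index of the band whose center is closest to target_nm."""
    return int(np.argmin(np.abs(wavelengths - target_nm)))


def ndvi(spectrum, wavelengths=WAVELENGTHS, red_nm=670.0, nir_nm=800.0):
    """NDVI from one red and one NIR band (assumed band choice)."""
    spectrum = np.asarray(spectrum, dtype=float)
    red = spectrum[band_index(wavelengths, red_nm)]
    nir = spectrum[band_index(wavelengths, nir_nm)]
    return (nir - red) / (nir + red)


# Synthetic vegetation-like spectrum: low red, high NIR reflectance.
spectrum = np.where(WAVELENGTHS < 700, 0.05, 0.45)
value = ndvi(spectrum)  # (0.45 - 0.05) / (0.45 + 0.05) = 0.8
```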

| Dataset | Samples before Quality Check | Samples after Quality Check |
|---|---|---|
| G1H | 8631 | 5070 |
| G1M | 8631 | 5092 |
| G1L | 8631 | 5083 |
| G2H | 8631 | 5108 |
| G2M | 8631 | 5084 |
| G2L | 8631 | 5093 |
| All | 51,789 | 30,530 |
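The proportion of samples kept by the quality check can be computed directly from the per-dataset counts in the table; roughly 59% of each dataset's samples remain.

```python
# Per-dataset sample counts copied from the quality-check table.
before = {"G1H": 8631, "G1M": 8631, "G1L": 8631,
          "G2H": 8631, "G2M": 8631, "G2L": 8631}
after = {"G1H": 5070, "G1M": 5092, "G1L": 5083,
         "G2H": 5108, "G2M": 5084, "G2L": 5093}


def retention(before, after):
    """Fraction of samples retained by the quality check, per dataset."""
    return {name: after[name] / before[name] for name in before}


rates = retention(before, after)
total_after = sum(after.values())
```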

| Environmental Variable | Min | Max |
|---|---|---|
| Sun radiation (W/m²) | 85.76 | 954.23 |
| Diurnal time (min) | 600 (10:00 a.m.) | 1050 (5:30 p.m.) |
| Solar zenith angle (°) | 35.2 | 78.26 |
| Air temperature (°C) | 11.79 | 33.27 |
| Wind speed (m/s) | 0 | 8.3 |
| Humidity (%) | 26.52 | 97.06 |
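If the environmental inputs are rescaled to [0, 1] before training, the observed ranges above provide the bounds. Min-max scaling is an assumption here, since the transformation actually used is not given in this text; the key names are illustrative. The helper also converts diurnal time in minutes after midnight to clock time.

```python
# Observed (min, max) ranges from the table above; key names are illustrative.
ENV_RANGES = {
    "sun_radiation_w_m2": (85.76, 954.23),
    "diurnal_time_min": (600.0, 1050.0),
    "solar_zenith_deg": (35.2, 78.26),
    "air_temperature_c": (11.79, 33.27),
    "wind_speed_m_s": (0.0, 8.3),
    "humidity_pct": (26.52, 97.06),
}


def min_max_scale(name, value):
    """Rescale a value to [0, 1] using the observed range of that variable;
    values outside the observed range fall outside [0, 1]."""
    lo, hi = ENV_RANGES[name]
    return (value - lo) / (hi - lo)


def minutes_to_clock(minutes):
    """Minutes after midnight -> 'HH:MM' (24-hour clock)."""
    h, m = divmod(int(minutes), 60)
    return f"{h:02d}:{m:02d}"
```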

| Group | N | Mean | StDev | SE Mean | T-Value | p-Value |
|---|---|---|---|---|---|---|
| Raw NDVI | 31 | 0.000230 | 0.000174 | 0.000031 | 5.78 | <0.01 |
| Corrected NDVI | 31 | 0.0000472 | 0.0000248 | 0.0000045 | | |
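The SE Mean column is consistent with StDev / √N for N = 31, as the check below shows. The table does not state which t-test produced the T-value, so it is not recomputed here.

```python
import math


def standard_error(stdev, n):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return stdev / math.sqrt(n)


se_raw = standard_error(0.000174, 31)         # about 3.1e-5
se_corrected = standard_error(0.0000248, 31)  # about 4.5e-6
```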

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, D.; Rehman, T.U.; Zhang, L.; Maki, H.; Tuinstra, M.R.; Jin, J. Modeling of Environmental Impacts on Aerial Hyperspectral Images for Corn Plant Phenotyping. Remote Sens. 2021, 13, 2520. https://doi.org/10.3390/rs13132520
