1. Introduction

Earth observation satellites (EOSs) are platforms equipped with optical instruments that photograph specific areas at the request of users [1]. EOSs are now extensively employed in scientific research, mainly in environment and disaster surveillance [2], ocean monitoring [3], agricultural harvesting [4], etc. However, with the increase in multi-user and multi-satellite space application scenarios [5,6], it is becoming more difficult to meet various observation requirements under limited satellite resources. Therefore, an effective EOS scheduling algorithm plays an important role in providing high-quality space-based information services: it not only guides the corresponding EOSs on which actions to perform, but also controls when the observations start [5]. The main purpose is to maximize the observation profit within the limited observation time windows and other resources (for example, the available energy and the remaining data storage) [7,8].

The EOS scheduling problem (EOSSP) is well known as a complex non-deterministic polynomial (NP)-hard, multi-objective combinatorial optimization problem [9,10]. Driven by increasing demands for scheduling EOSs effectively and efficiently, the study of the EOSSP has gained growing attention. Wang et al. [7] summarized that the EOSSP can be divided into time-continuous and time-discrete problems. In time-continuous models [11,12,13], a continuous decision variable represents the observation start time within each visible time window (VTW), and a further decision variable indicates whether each task is scheduled or not. In time-discrete models [14,15,16], on the other hand, each time window generates multiple candidate observation tasks for the same target. In this way, each candidate task has a fixed observation time, and binary decision variables represent whether a task is executed in a specific time slice.
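The difference between the two model families can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `ContinuousDecision` and `discrete_candidates`, and the fixed slicing `step`, are hypothetical and do not come from the cited works.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ContinuousDecision:
    """Time-continuous view: one decision per VTW (hypothetical structure)."""
    scheduled: bool     # whether the task is scheduled at all
    start_time: float   # any real-valued start time inside the VTW


def discrete_candidates(vtw_start: float, vtw_end: float,
                        duration: float, step: float) -> List[Tuple[float, float]]:
    """Time-discrete view: enumerate fixed (start, end) candidates in one VTW.

    Each candidate would get its own binary decision variable.
    """
    candidates = []
    t = vtw_start
    # Keep only candidates whose observation ends inside the VTW.
    while t + duration <= vtw_end:
        candidates.append((t, t + duration))
        t += step
    return candidates
```

For a VTW of [0, 10] with a duration of 4 and a step of 2, the discrete view yields four candidates, whereas the continuous view keeps a single start-time variable that may take any value in [0, 6].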

General solving algorithms are usually classified into exact, heuristic, metaheuristic [17,18] and machine learning methods [7]. Exact methods, such as branch and bound (BB) [19] and mixed integer linear programming (MILP) [15], follow deterministic solving steps and can find optimal solutions for small-scale problems, but it is nearly impossible to build a tractable deterministic model for a larger-scale problem. Heuristic methods can speed up the process by finding a satisfactory solution, but they depend on a specific heuristic policy, and the policy is not always feasible. Jang et al. [20] proposed a heuristic solution approach to solve the image collection planning problem of the KOMPSAT-2 satellite. Liu et al. [21] combined a neural network method with a heuristic search algorithm, and the result was superior to the existing heuristic search algorithm in terms of the overall profit. Alternatively, without relying on a specific heuristic policy, metaheuristic methods can provide a sufficiently high-quality and general solution to an optimization problem. For example, Kim et al. [22] proposed an optimization algorithm based on a genetic algorithm (GA) for synthetic aperture radar (SAR) imaging satellite constellation scheduling. Niu et al. [23] presented a multi-objective genetic algorithm to solve the problem of satellite areal task scheduling during disaster emergency responses. Long et al. [24] proposed a two-phase hybrid algorithm combining the GA and simulated annealing (SA) for the EOSSP, which is superior to the GA or SA algorithm alone. Although metaheuristic algorithms can achieve better results and have been widely adopted for the EOSSP, they easily fall into local optima [25] because of their dependence on a specific mathematical model. This motivates us to turn to deep reinforcement learning (DRL), which is known as a model-free approach and can autonomously build a general task scheduling strategy through training [26]. It has promising potential for application in combinatorial optimization problems [27,28,29].

As a significant research domain in machine learning, DRL has achieved success in game playing and robot control. Recently, DRL has also gained growing attention in optimization domains. Bello et al. [27] presented a DRL framework using neural networks and a policy gradient algorithm for solving problems modeled as the traveling salesman problem (TSP). Also for the TSP, Khalil et al. [28] embedded a graph in DRL networks, which learnt effective policies. Furthermore, Nazari et al. [29] gave an end-to-end framework with a parameterized stochastic policy for solving the vehicle routing problem (VRP), which is an extension of the TSP. Peng et al. [30] presented a dynamic attention model with a dynamic encoder–decoder architecture and obtained good generalization performance in the VRP. Besides the TSP and VRP, another type of optimization problem, resource allocation [25], has also been solved by RL. Khadilkar et al. [31] adopted RL to schedule time resources for a railway system and found that the Q-learning algorithm is superior to heuristic approaches in effectiveness. Ye et al. [32] proposed a decentralized resource allocation mechanism for vehicle-to-vehicle communications based on DRL and improved the communication resource allocation.

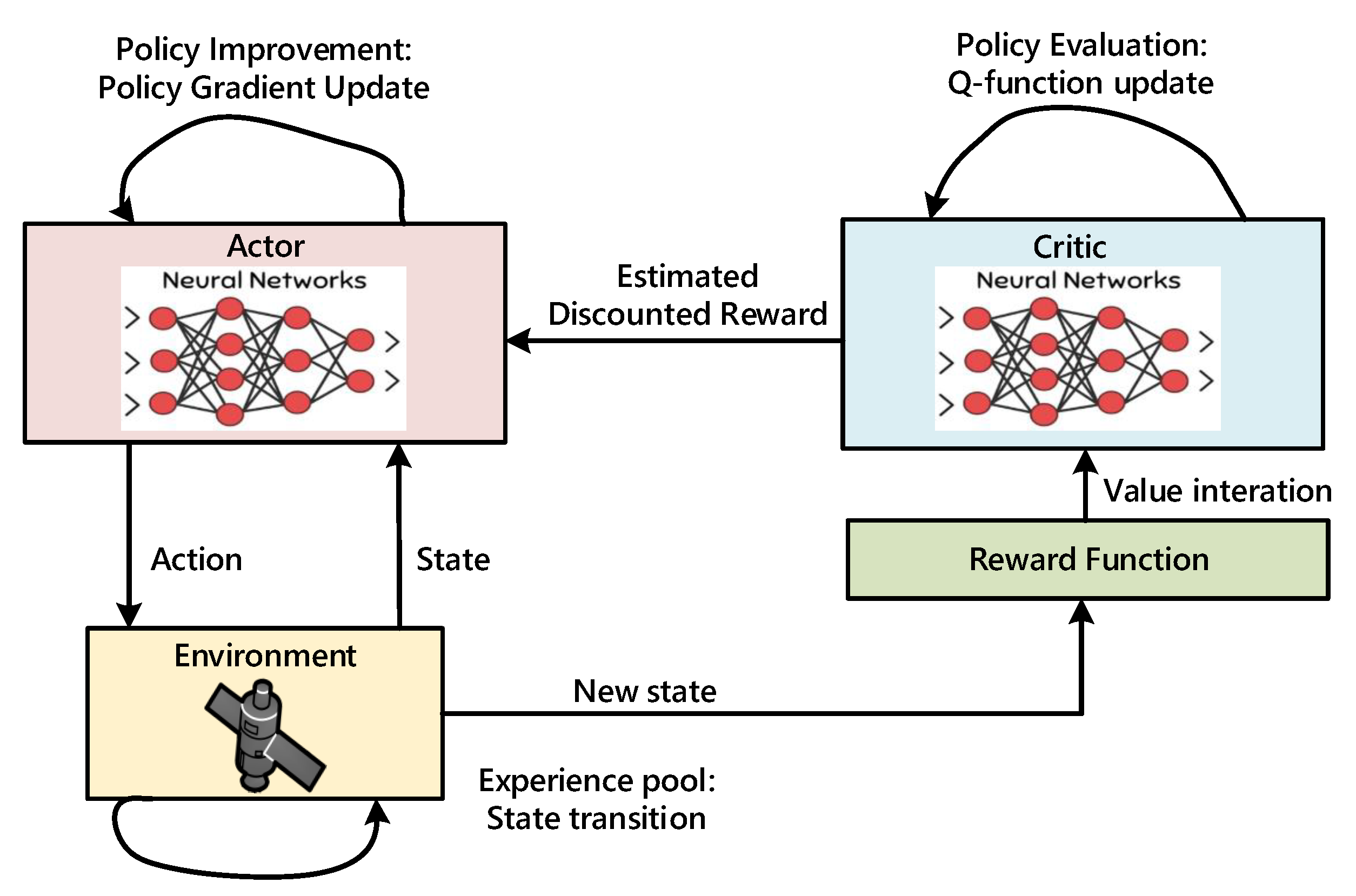

Inspired by the above applications of DRL, solving the EOSSP with DRL has become a feasible approach. Hadj-Salah et al. [33] adopted the advantage actor-critic (A2C) algorithm to handle the EOSSP in order to reduce the time to completion of large-area requests. Wang et al. [34] proposed a real-time online scheduling method for imaging satellites by introducing the asynchronous advantage actor-critic (A3C) algorithm into satellite scheduling. Zhao et al. [35] developed a two-phase neural combinatorial optimization RL method to address the EOSSP with consideration of the transition time constraint and image quality criteria. Lam et al. [36] proposed a training system based on RL which is fast enough to generate decisions in near real time.

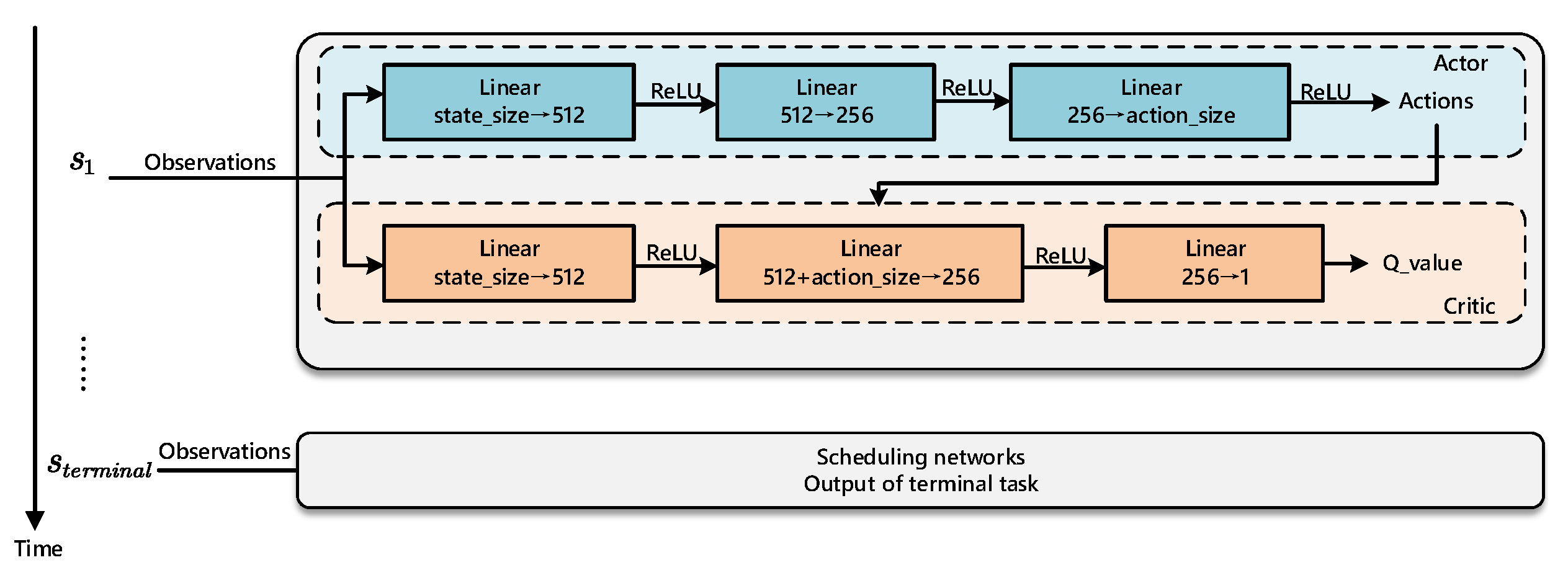

In the present paper, the EOSSP is formulated as a time-continuous model with multiple constraints and addressed by adopting the deep deterministic policy gradient (DDPG) algorithm, and comparisons with traditional metaheuristic methods are conducted as the task scale increases. The major highlights are summarized as follows:

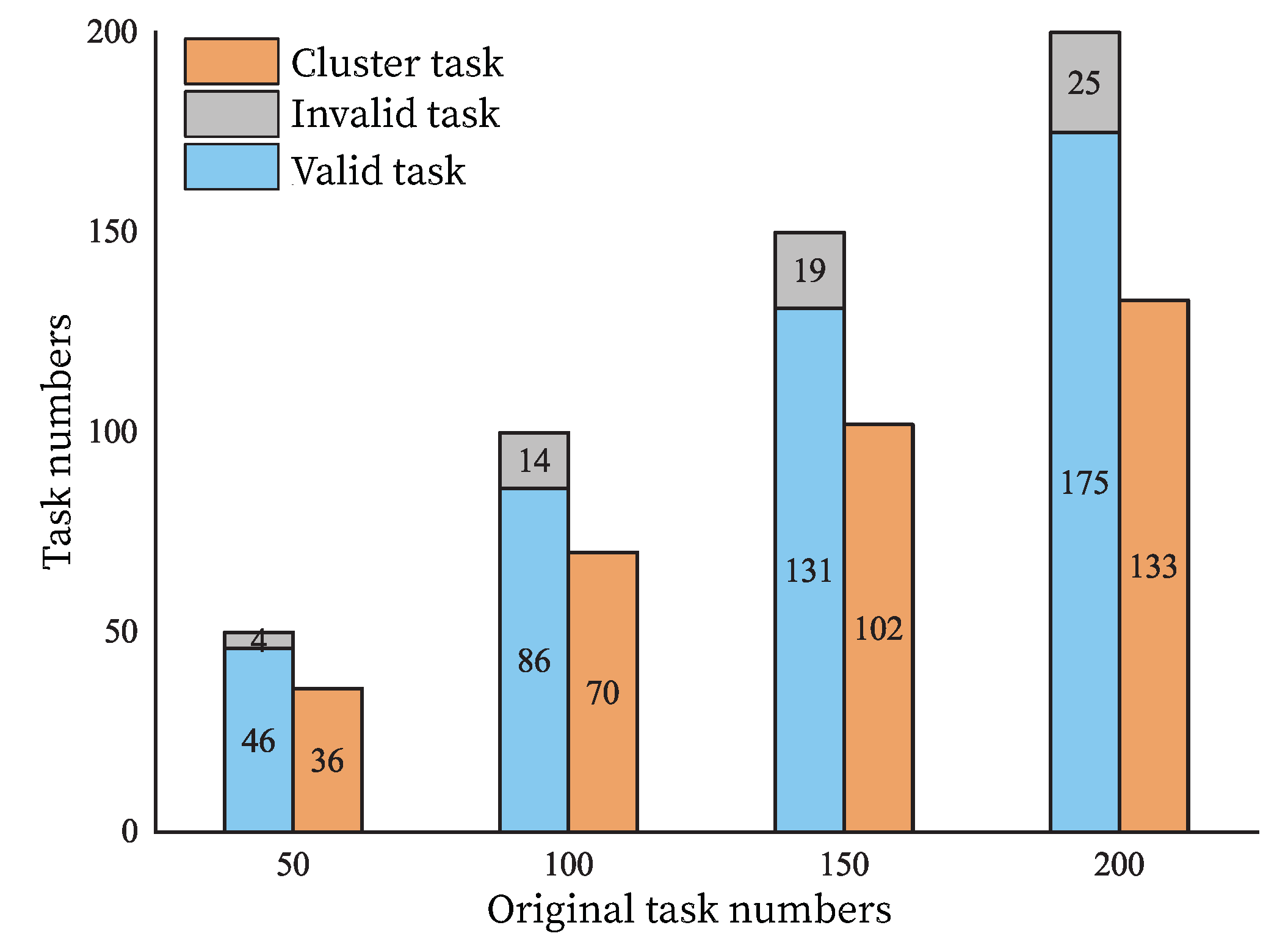

To further enhance task scheduling efficiency, an improved graph-based minimum clique partition algorithm is introduced as a task clustering preprocessing step to reduce the task scale and improve the performance of the scheduling algorithm.

In previous studies, the EOSSP was treated as a time-discrete model when solved by RL algorithms. In contrast, this paper establishes a time-continuous model for the EOSSP, in which the DDPG algorithm makes accurate observation time decisions for each task.

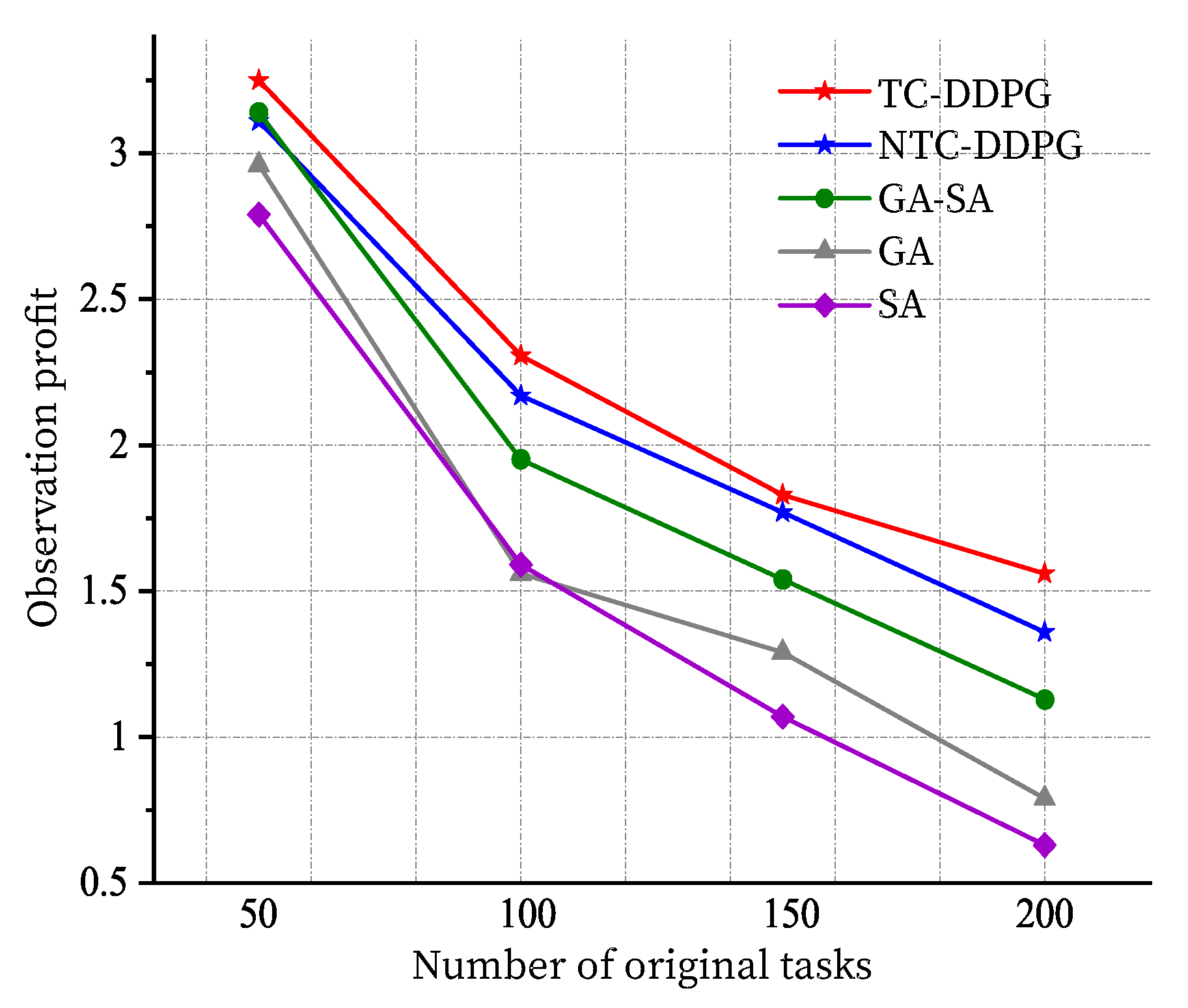

Considering practical engineering constraints, comparison experiments were conducted between the RL method and several metaheuristic methods, namely the GA, SA and GA–SA hybrid algorithm, to validate the feasibility of the DDPG algorithm.

2. Problem Description

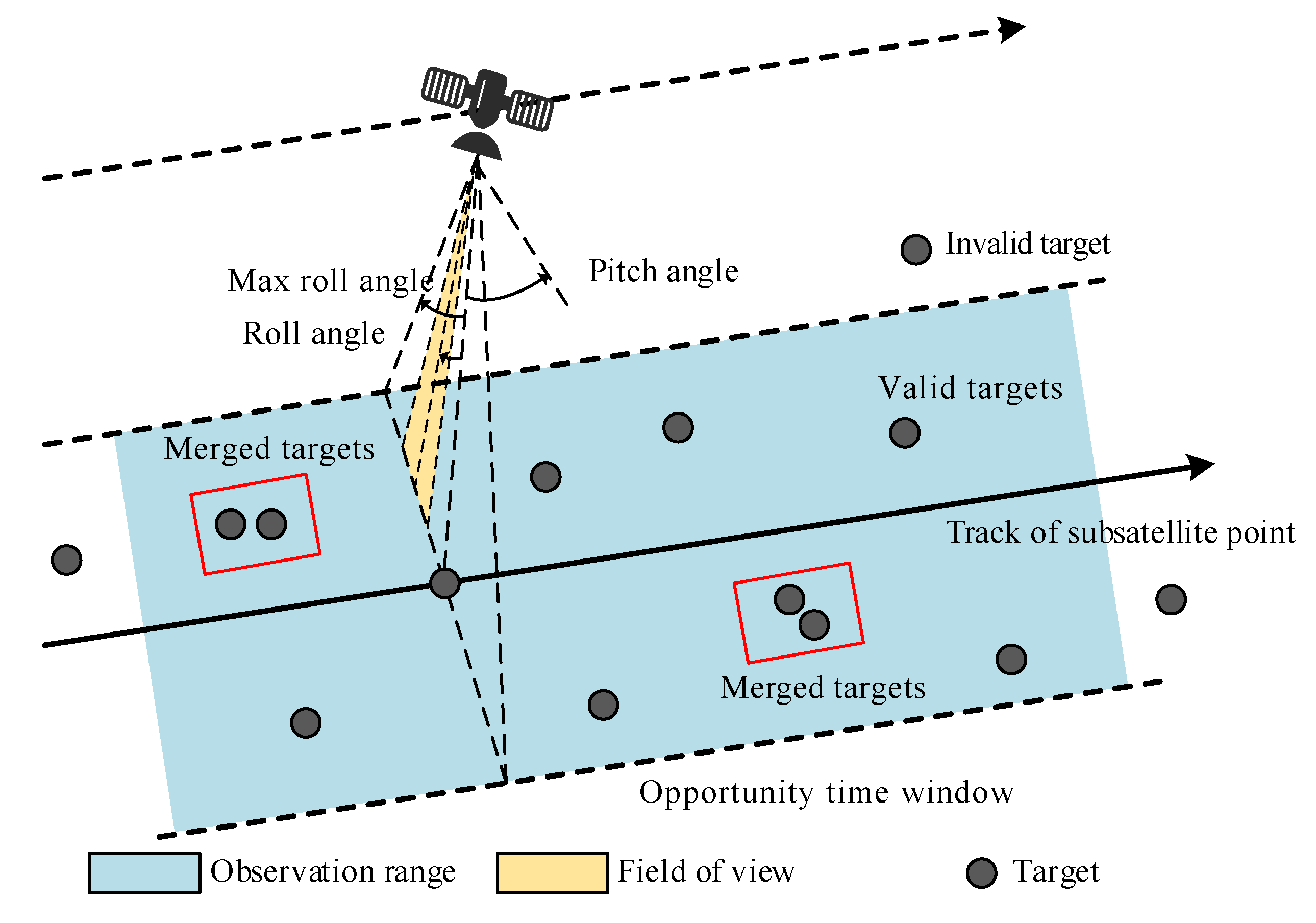

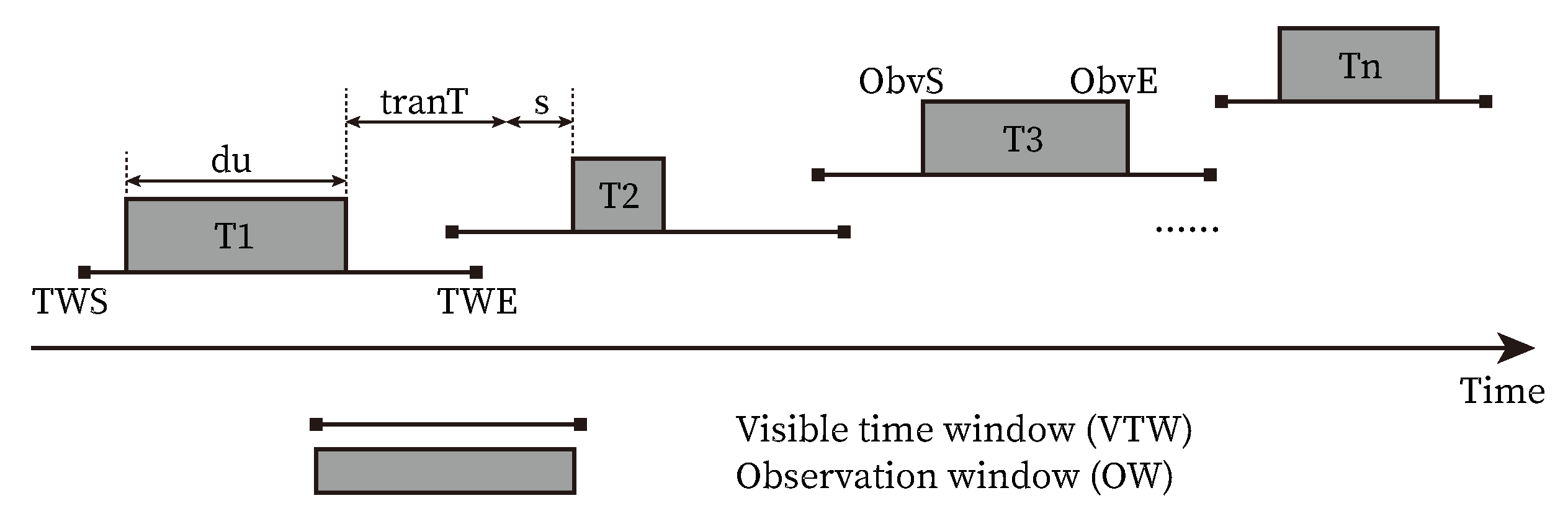

As shown in Figure 1, an EOS can maneuver about three axes (roll, pitch and yaw) for transitions between any two sequential observation tasks. Usually, the mobility of the roll angle represents the slewing maneuverability of the EOS, while maneuvering the pitch angle enables targets to be observed in advance or with a delay. Observation targets are accessible within a specific VTW, which is determined by the maximum off-nadir angle. The observation window (OW) defines the start and end times for observing a target within the VTW. Accordingly, the task scheduling algorithm directs an EOS to conduct certain operations for the transition between two sequential observation tasks, such as slew maneuvering and payload switching. In addition, observation tasks are restricted to a specific time interval, and the observations must be carried out continuously and completely within the VTW [37].

Note that targets outside the observation range are invisible and are regarded as invalid, as shown in Figure 1. An EOS can observe multiple targets simultaneously, and the observation task of the merged targets is defined as a clustered task in this study. Task clustering is a preprocessing step for EOS task scheduling and has gained growing attention because it enables an EOS to finish more tasks with relatively few optical sensor activations and satellite maneuvers. To explain the EOSSP clearly, a summary of the most important notations in this paper is given in Table 1.

2.1. Graph Clustering Model

Compared with task scheduling without clustering, task clustering can save considerable energy, especially with frequent observations. In addition, task clustering enables some previously conflicting tasks to be executed at the same time. Multiple tasks can be merged into a clustering task only if they can be finished with the same slewing angle and OW [38], which constrains the task clustering process.

(1) Time window-related constraint

The longest observation duration allowed for a continuous observation is limited by the characteristics of the sensor. Therefore, the VTW must satisfy a corresponding duration constraint. When a clustering task is formed by merging several original tasks, the time window of the clustered task should allow the satellite to finish all the component tasks in a common temporal interval.

(2) Slewing angle-related constraint

Tasks merged into one clustered task must be completable with the same slewing angle. Given the slewing angle required to observe each task and the feasible slewing angle range, Equation (4) gives the corresponding condition. For the clustered task, the slewing angle can be calculated as a mean value of the slewing angles of its component tasks.
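The two clustering conditions can be sketched as a small feasibility check. This is a minimal sketch under explicit assumptions, not the paper's formulation: the `Task` fields, the reading of the time condition as "the interval covering all component VTWs must not exceed the sensor's longest continuous observation duration", the reading of the angle condition as "the spread of slewing angles must fit within a given range", and the use of the arithmetic mean for the clustered angle are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Task:
    name: str
    vtw_start: float   # start of the visible time window (s)
    vtw_end: float     # end of the visible time window (s)
    slew_angle: float  # slewing angle needed to observe the task (deg)


def cluster_window(tasks: List[Task]) -> Tuple[float, float]:
    """Interval covering every component task (assumed clustered window)."""
    return min(t.vtw_start for t in tasks), max(t.vtw_end for t in tasks)


def can_cluster(tasks: List[Task], max_duration: float, angle_range: float) -> bool:
    """Check the assumed time-window and slewing-angle conditions."""
    start, end = cluster_window(tasks)
    if end - start > max_duration:      # time window-related condition
        return False
    angles = [t.slew_angle for t in tasks]
    return max(angles) - min(angles) <= angle_range  # angle-related condition


def cluster_angle(tasks: List[Task]) -> float:
    """Slewing angle of the clustered task, taken here as the arithmetic mean."""
    return sum(t.slew_angle for t in tasks) / len(tasks)
```

With these definitions, two tasks whose windows span 35 s and whose angles differ by 2 degrees can be merged when `max_duration=40` and `angle_range=3`, and the merged task is observed at the mean of the two angles.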

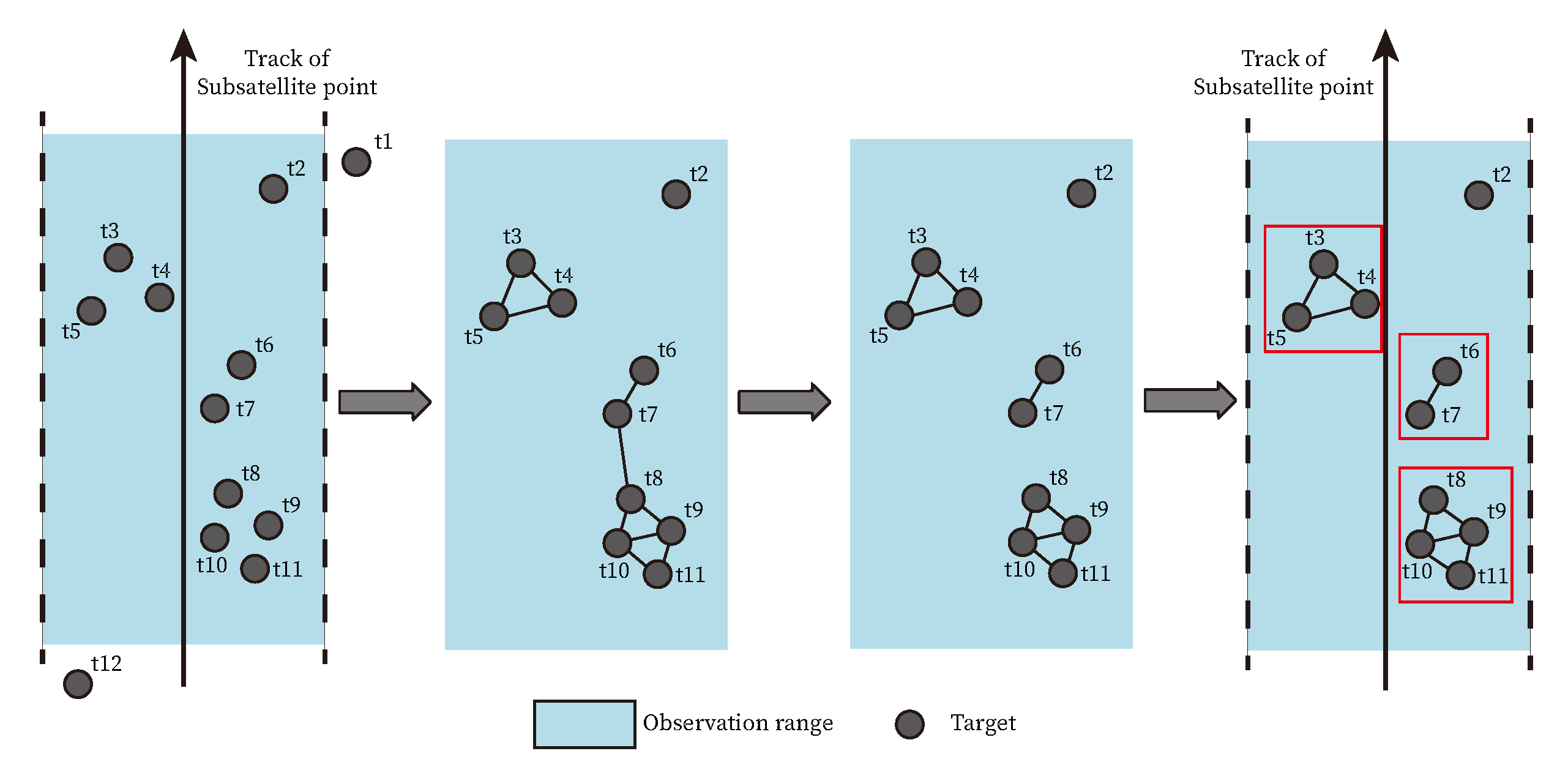

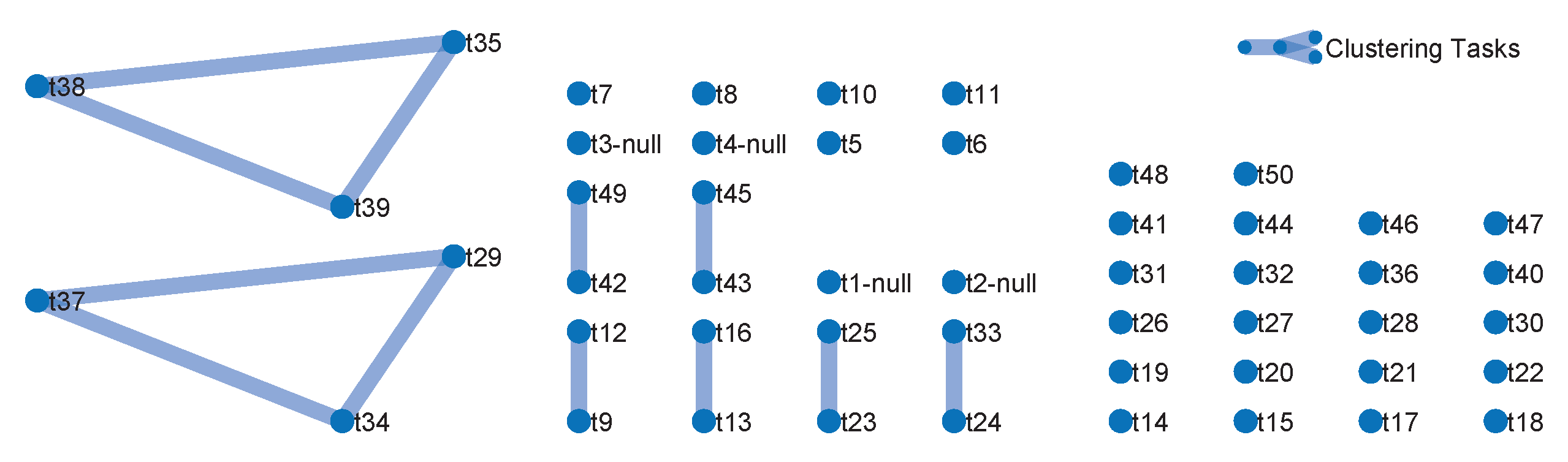

According to the constraints mentioned above, the tasks that can be merged need to be screened out, and graph theory is used to build the clustering model. First, we define an undirected graph G = (V, E), where V is the set of vertexes and represents all valid observation tasks in the orbit, and E is the set of edges and denotes the links between pairs of tasks. In the graph clustering model, any two original observation tasks joined by an edge satisfy the constraint conditions and can be regarded as a clustering task. Extending this to multiple vertexes, several original tasks can be merged into one clustering task if there is an edge between every pair of them. Such mutually connected vertexes form a clique; as shown in Figure 2, each clique is regarded as a clustering task.

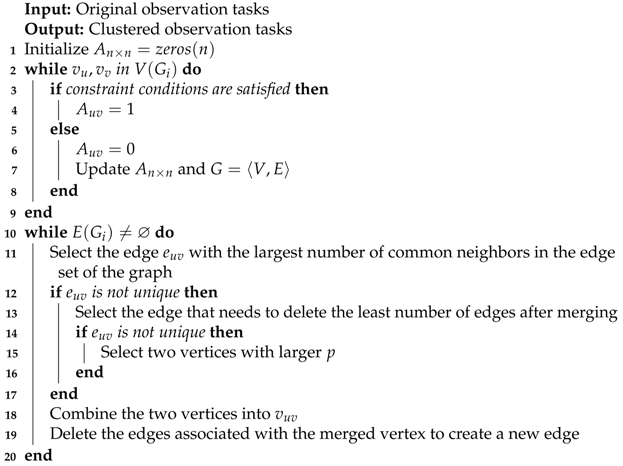

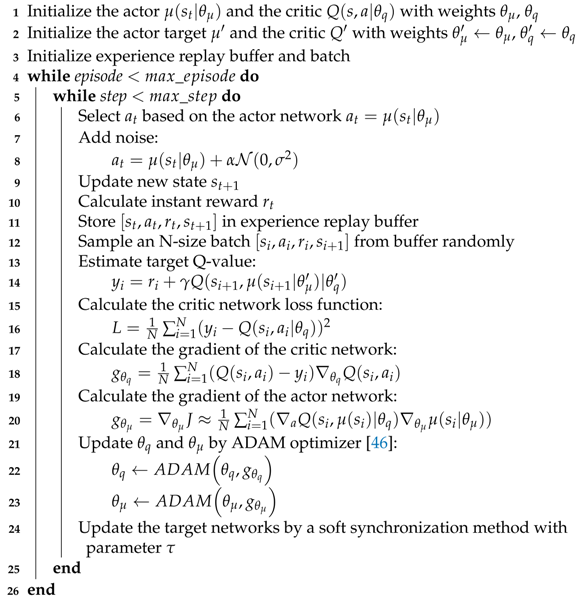

In this paper, an adjacency matrix is adopted to better illustrate the utility of the graph clustering model. The original tasks are described by the set of vertexes of the graph, so the graph clustering model can be represented by an adjacency matrix. If two tasks meet the clustering constraint conditions, they are linked by an edge and the corresponding element of the matrix is 1; otherwise, it is 0. The resulting adjacency matrix of 0s and 1s forms the graph clustering model for the orbit.
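The adjacency-matrix representation and the idea of partitioning the graph into cliques can be sketched as follows. Note that this is a plain greedy clique partition used as a stand-in; it is not the improved minimum clique partition algorithm of this paper and does not guarantee a minimum number of cliques. The function names and the `compatible` predicate are hypothetical.

```python
from typing import Callable, List


def build_adjacency(n: int, compatible: Callable[[int, int], bool]) -> List[List[int]]:
    """Build a symmetric 0/1 adjacency matrix from a pairwise clustering test."""
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if compatible(i, j):
                adj[i][j] = adj[j][i] = 1
    return adj


def greedy_clique_partition(adj: List[List[int]]) -> List[List[int]]:
    """Partition vertexes into cliques greedily (each clique = one clustering task)."""
    n = len(adj)
    assigned = set()
    cliques = []
    for v in range(n):
        if v in assigned:
            continue
        clique = [v]
        for u in range(n):
            if u in assigned or u in clique:
                continue
            # u joins only if it is linked to every vertex already in the clique.
            if all(adj[u][w] for w in clique):
                clique.append(u)
        assigned.update(clique)
        cliques.append(clique)
    return cliques
```

For example, with five tasks where tasks 0, 1 and 2 are pairwise compatible and tasks 3 and 4 are compatible with each other, the sketch returns two cliques, so five original tasks are reduced to two clustering tasks.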

2.2. Task Scheduling Problem

2.2.1. Scheduling Model

In this paper, a time-continuous resource allocation model for the EOSSP is established. Continuous decision variables are introduced to represent the observation start time within each VTW and decision variables are defined to check whether tasks are scheduled or not.

Figure 3 illustrates sequential task execution in one orbit. It marks the start and end times of the VTW of an observation task, the observation duration d of a task, the slewing angle maneuver time, the preparation time s, and the observation start and end times.

2.2.2. Constraint Conditions

In this paper, the VTW is seen as the allocated resource, and the OWs for tasks are continuous decision variables that decide when to start the observation. The solution of the EOSSP model aims to schedule an observation sequence and maximize the observation profit, subject to corresponding constraints. In practical engineering scenarios, the following constraints are usually taken into account [24]:

(1) VTW constraint

The VTW constraint ensures that the observation tasks can be executed within the VTW of EOSs in the observation process.

For each of the N tasks, the constraint relates the observation duration of the task, its observation start time in the orbit, its observation end time, and the start and end times of the VTW for the task.
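One way to write the VTW constraint is sketched below. The symbols are illustrative assumptions rather than the paper's notation: $ws_i$ and $we_i$ denote the start and end times of the VTW of task $i$, $ts_i$ and $te_i$ its observation start and end times, and $d_i$ its observation duration.

```latex
\begin{aligned}
te_i &= ts_i + d_i, \\
ws_i &\le ts_i, \qquad te_i \le we_i, \qquad i = 1, \dots, N .
\end{aligned}
```

Under this reading, the start time $ts_i$ is the continuous decision variable, and it may take any value in $[ws_i,\ we_i - d_i]$.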

(2) Conflict constraint for task execution

The conflict constraint for task execution means that there is no crossover between any two tasks as the optical sensor cannot perform two observation tasks at the same time:

Here, a decision variable denotes whether execution transfers from one task to another; when it is set, the latter task is executed after the former.

(3) Task conversion time constraint

Between any two sequential tasks, enough preparation time is required, mainly comprising the slewing maneuvering time and the sensor shutdown–restart setup time [38]. The constraint therefore involves the preparation time for restarting the sensor and the slewing maneuver time from one task to the next. The slewing maneuver time is calculated from the observation slewing angles of the two tasks and the angular velocity of the satellite slewing maneuver.
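The task conversion time check can be sketched as below. Two assumptions are made for illustration: the slewing maneuver time is taken as the absolute angle difference divided by the angular velocity, and the required gap is taken as the sum of the slewing time and the sensor setup time. The function and parameter names are hypothetical.

```python
def slew_time(angle_i: float, angle_j: float, angular_velocity: float) -> float:
    """Time to slew between two observation angles (deg and deg/s assumed)."""
    return abs(angle_j - angle_i) / angular_velocity


def transition_ok(end_i: float, start_j: float,
                  angle_i: float, angle_j: float,
                  angular_velocity: float, setup_time: float) -> bool:
    """Check that the gap between two sequential tasks covers slewing plus setup."""
    required = slew_time(angle_i, angle_j, angular_velocity) + setup_time
    return start_j - end_i >= required
```

For instance, slewing from 10 degrees to -20 degrees at 2 degrees per second takes 15 s; with a 5 s setup time, the next observation can start no earlier than 20 s after the previous one ends.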

(4) Optical sensor boot time constraint

According to the power constraint of the optical payload, the observation time of a task cannot exceed the maximum operating time of the optical sensor.

(5) Storage size constraint

The observations are limited by the total storage size of the satellite: the storage consumed, which is determined by the storage consumption per unit observation time in one orbit, cannot exceed M, the total data storage capacity of the satellite in one orbit.

(6) Power consumption constraint

In each orbit, the energy consumed is limited by the maximum capacity; this paper mainly considers the energy consumed by the sensor operation and the slewing maneuver. The constraint involves the energy consumption per unit time of the observation operation, the energy consumption per unit time of the slewing maneuver from one task to the next, and E, the total energy available for observation activities in one orbit.
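The three resource-related constraints (sensor operating time, storage and energy) can be checked together for a candidate schedule. This is a sketch under assumptions: the schedule is a list of `(duration, slew_angle)` pairs in execution order, storage and observation energy scale linearly with observation time, and slewing energy scales linearly with the slewing time between consecutive tasks. All names are hypothetical.

```python
from typing import List, Tuple


def resources_ok(schedule: List[Tuple[float, float]],
                 max_on_time: float,
                 storage_rate: float, storage_capacity: float,
                 obs_power: float, slew_power: float, energy_capacity: float,
                 angular_velocity: float) -> bool:
    """Check sensor on-time, storage and energy limits for one orbit."""
    durations = [d for d, _ in schedule]
    angles = [a for _, a in schedule]

    # (4) Each observation must fit within the sensor's maximum operating time.
    if any(d > max_on_time for d in durations):
        return False

    # (5) Storage used grows with total observation time.
    total_obs = sum(durations)
    if storage_rate * total_obs > storage_capacity:
        return False

    # (6) Energy = observation energy + slewing energy between consecutive tasks.
    total_slew = sum(abs(b - a) / angular_velocity
                     for a, b in zip(angles, angles[1:]))
    energy = obs_power * total_obs + slew_power * total_slew
    return energy <= energy_capacity
```

A schedule that violates any single limit is rejected, which mirrors how the constraints jointly restrict the feasible observation sequences.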

2.2.3. Optimization Objectives

Models of observation satellite scheduling are usually built as multi-objective optimization problems, and a scheduling algorithm aims to generate a compromise solution between objectives. Tangpattanakul et al. [39] implemented an indicator-based multi-objective local search method for the EOSSP, whose objectives were to maximize the total profit and simultaneously to ensure the fairness of resource allocation among multiple users. Sometimes, energy balance and fuel consumption are designed as optimization objectives [40,41].

In this paper, the total observation profit is maximized by scheduling more tasks and tasks with higher priority. Hence, the objective function f is designed to maximize the total profit, defined as the sum of the priorities associated with the selected tasks.

This optimization objective function is subject to the constraint model mentioned above.
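A sketch of how such an objective might be written is given below. The symbols are assumptions introduced for illustration: $x_i \in \{0, 1\}$ indicates whether task $i$ is scheduled and $p_i$ is the priority of task $i$; only f and N appear in the text above.

```latex
\max f = \sum_{i=1}^{N} x_i \, p_i , \qquad x_i \in \{0, 1\} .
```

As a small worked instance, with priorities $p = (3, 5, 2)$ and a schedule that selects only the first two tasks, $x = (1, 1, 0)$, the profit is $f = 3 + 5 = 8$.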

5. Conclusions

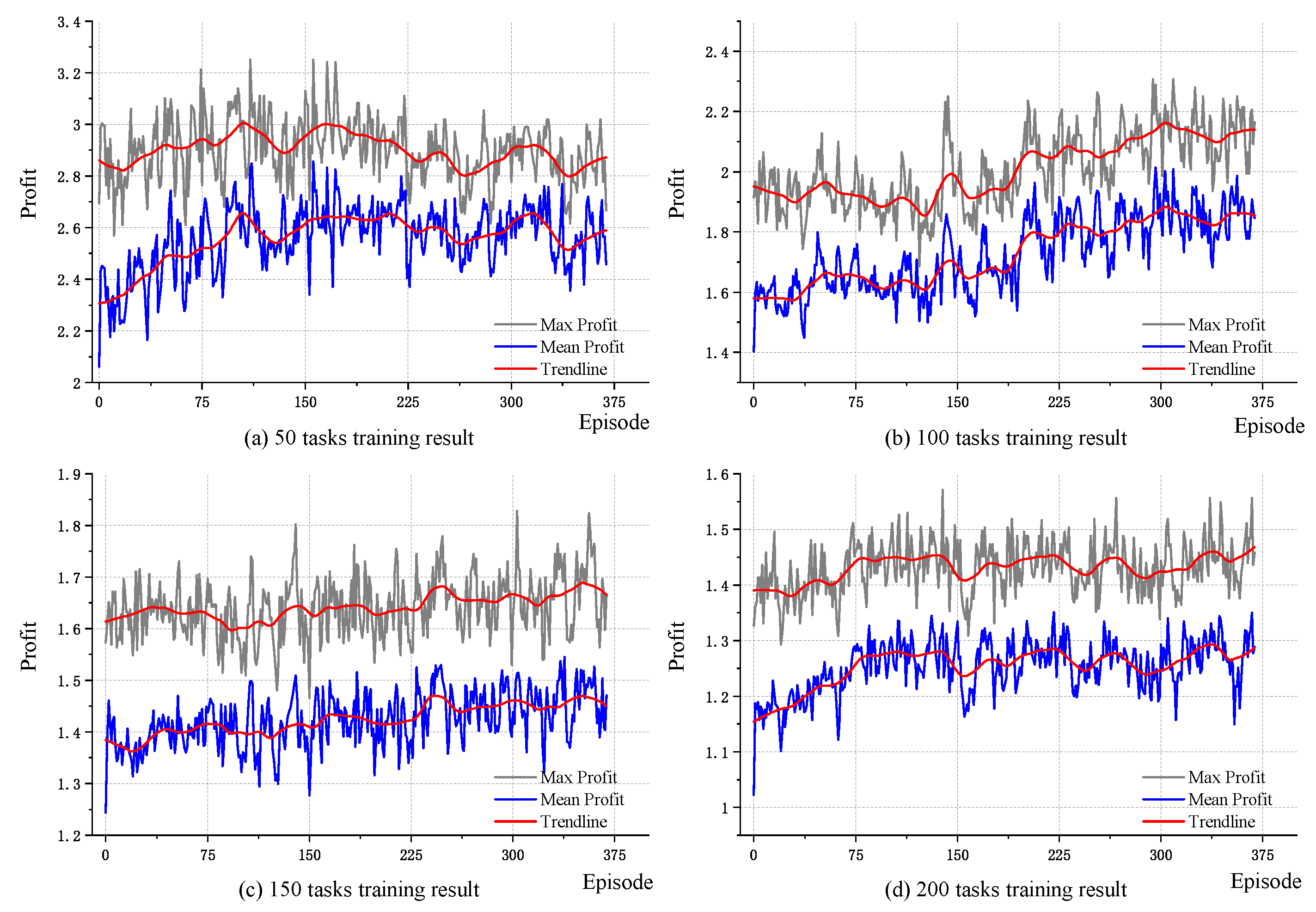

The task scheduling policy of observation satellites plays a crucial role in providing high-quality space-based information services. In previous studies, many algorithms based on traditional optimization methods such as the GA and SA have been successfully applied to the EOSSP. However, these methods depend upon a mathematical model, and as the task scale increases, they may fall into local optima. In this paper, the EOSSP is considered as a time-continuous model with multiple constraints and, inspired by the progress of DRL and its model-free characteristics, a DRL-based algorithm is proposed to solve the EOSSP. In addition, to decrease the complexity of the solution, an improved graph-based minimum clique partition algorithm is proposed for the task clustering preprocess; this is a relatively new attempt in handling EOSSP optimization. The simulation results show that the DDPG algorithm combined with the task clustering process is practicable and achieves the expected performance. Moreover, this solution has a higher optimization performance than traditional metaheuristic algorithms (the GA, SA and GA–SA hybrid algorithm). In terms of scheduling profits, the experimental results indicate that the DDPG is feasible and efficient for the EOSSP even in a relatively large-scale situation.

Note that, in the present work, satellite constellation task scheduling problems were not addressed. In a future study, we will attempt to adopt multi-agent DRL methods to study the multiple satellite EOSSP.